Abstract

This article employs Gaussian process regression (GPR), minimax probability machine regression (MPMR) and extreme learning machine (ELM) for prediction of uplift capacity (–) of suction caisson. This study uses GPR, MPMR and ELM as regression techniques. L/d (L is the embedded length of the caisson and d is the diameter of caisson), undrained shear strength of soil at the depth of the caisson tip (Su), D/L (D is the depth of the load application point from the soil surface), inclined angle (θ) and load rate parameter (Tk) have been adopted as inputs of GPR, MPMR and ELM models. The output of GPR, MPMR and ELM is P. The results of GPR, MPMR and ELM have been compared with the artificial neural network (ANN) model. The developed models have also been used to determine the effect of each input on P. This study shows that the developed GPR, MPMR and ELM are robust models for prediction of P of suction caisson.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The uplift capacity (P) of suction caissons is an important parameter for designing offshore structures. So, the determination of P of suction caisson is an important task in ocean engineering. The value of P depends on different parameters such as passive suction under caisson-sealed cap, self-weight of caisson, frictional resistance along the soil–caisson interface, submerged weight of soil plug inside the caisson and uplift soil (reverse end bearing) bearing pressure (Albert et al. 1987; Rauch 2004). Researchers use different methods for determination of P of suction caisson (Goodman et al. 1961; Hogervorst 1980; Tjelta et al. 1986; Larsen 1989; Steensen-Bach 1992; Dyvik et al. 1993; Clukey and Morrison 1993; Whittle and Kavvadas 1994; Clukey et al. 1995a, b; Cauble 1996; Datta and Kumar 1996; Singh et al. 1996; Rao et al. 1997a, b; El-Gharbawy and Olson 2000; Zdravkovic et al. 2001; Cao et al. 2001, 2002a, b; Luke 2002; Cho et al. 2002). Clukey et al. (1995) determined the response of suction in normally consolidated clays for cyclic TLP loading conditions. Cauble (1996) conducted experiment for determination of behavior of suction caisson in clay. Cao et al. (2001) discussed results of centrifuge test of suction caisson in clay. Luke (2002) described experimental results of suction caisson for determination of axial pullout. El-Gharbawy and Olson (2000) used finite element method (FEM) for verifying the experimental results. The available methods have their own limitations (Rahman et al. 2001). Artificial neural network (ANN) has been successfully used for prediction of P of suction caissons (Rahman et al. 2001). However, ANN has several drawbacks such as low convergence speed, low generalization capability, “black-box approach” and overtraining problem (Park and Rilett 1999; Kecman 2001).

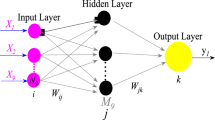

This article examines the capability of Gaussian process regression (GPR), minimax probability machine regression (MPMR) and extreme learning machine (ELM) for determination of P of suction caisson. This study adopts the database collected from the work of Rahman et al. (2001). The database contains information about L/d (L is the embedded length of the caisson and d is the diameter of caisson), undrained shear strength of soil at the depth of the caisson tip (Su), D/L (D is the depth of the load application point from the soil surface), inclined angle (θ), load rate parameter (Tk) and P. Table 1 shows the statistical parameters of the dataset. GPR is developed based on Bayesian concept. The parameters of GPR are assumed random variable. Researchers have successfully applied GPR for solving different problems in engineering (Kongkaew and Pichitlamken 2012; Chen et al. 2013, 2014; Cheng et al. 2015; Alborzpour et al. 2016). MPMR is a probabilistic model. It is constructed by Lanckriet et al. (2002a, b). The different applications of MPMR are available in the researches (Zhou et al. 2013; Shen et al. 2013; Yang and Ju 2014; Huang et al. 2015; Yang and Sun 2016). ELM is the modified version of single-hidden-layer feed-forward neural network (SLFN) (Huang et al. 2006, 2011). It gives excellent generalization performance (Huang et al. 2006). Many applications of ELM are available in the researches (Lu and Sho 2012; Xie et al. 2013; Bazi et al. 2014; Singh et al. 2015; Zhang et al. 2016). The developed GPR, MPMR and ELM have been compared with the ANN model.

1.1 Details of GPR

Let us consider the following datasets (D).

where x is called input, y is called output and N is the number of datasets.

This article uses L/d, D/L, su and θ as input variables. The output of GPR is P.

So, \(x = \left[ {{L \mathord{\left/ {\vphantom {L {d,{D \mathord{\left/ {\vphantom {D {L,s_{u} ,\theta }}} \right. \kern-0pt} {L,s_{u} ,\theta }}}}} \right. \kern-0pt} {d,{D \mathord{\left/ {\vphantom {D {L,s_{u} ,\theta }}} \right. \kern-0pt} {L,s_{u} ,\theta }}}}} \right]\) and \(y = \left[ P \right]\).

GPR uses the following equation for prediction of y.

where f is latent real-valued function and ε is the observation error.

For a new input (xN+1), the expression of yN+1 is given as follows:

where KN+1 is covariance matrix and its expression is given as follows:

where k (xN+1) is vector of covariances between training inputs and the test input and k1 (xN+1) denotes autocovariance of the test input.

The distribution of yN+1 is Gaussian (Williams 1998), and its mean \(\left( {\mu \left( {x_{N + 1} } \right)} \right)\) and variance \(\left( {\sigma^{2} \left( {x_{N + 1} } \right)} \right)\) are given as follows:

For developing the above GPR, the datasets have been divided into the following two groups:Training dataset: This is used to construct the GPR model. This study adopts 51 datasets out of 62 as training dataset (see Table 2).

Testing dataset: This is used to verify the developed GPR. The remaining 11 datasets have been used as testing dataset (see Table 3).

The datasets are scaled between 0 and 1. Radial basis function (\(\exp \left[ {\frac{{ - \left( {x_{i} - x} \right)\left( {x_{i} - x} \right)^{T} }}{{2\sigma^{2} }}} \right]\), where σ is the width of radial basis function) has been used as a covariance function. The program of GPR has been constructed by using MATLAB.

1.2 Details of MPMR

In MPMR, the relation between input (x) and output (y) is given as follows:

where N is the number of datasets, K(xi,x) is kernel function and βi and b are output from the MPMR.

This article uses L/d, D/L, Su and θ as input variables. The output of MPMR is P.

So, \(x = \left[ {{L \mathord{\left/ {\vphantom {L {d,{D \mathord{\left/ {\vphantom {D {L,s_{u} ,\theta }}} \right. \kern-0pt} {L,s_{u} ,\theta }}}}} \right. \kern-0pt} {d,{D \mathord{\left/ {\vphantom {D {L,s_{u} ,\theta }}} \right. \kern-0pt} {L,s_{u} ,\theta }}}}} \right]\) and \(y = \left[ P \right]\).

The following two classes are created from the available training datasets.

The classification boundary between the two classes is defined as regression surface.

MPMR uses the same training datasets, testing datasets and normalization technique as used by the GPR model. Radial basis function has been used as a kernel function. MPMR has been constructed by using MATLAB.

1.3 Details of ELM

Let us consider the following N training samples.

where x is called input and y is called output.

This article uses L/d, D/L, su and θ as input variables. The output of MPMR is P.

So, \(x = \left[ {{L \mathord{\left/ {\vphantom {L {d,{D \mathord{\left/ {\vphantom {D {L,s_{u} ,\theta }}} \right. \kern-0pt} {L,s_{u} ,\theta }}}}} \right. \kern-0pt} {d,{D \mathord{\left/ {\vphantom {D {L,s_{u} ,\theta }}} \right. \kern-0pt} {L,s_{u} ,\theta }}}}} \right]\) and \(y = \left[ P \right]\).

Single-hidden-layer feed-forward network (SLFN) uses the following equation for prediction of y.

where L is the number of hidden nodes, βi is weight and G (ai,xj,bi) is activation function.

Equation (11) is written in the following form.

where \(H = \left[ {\begin{array}{*{20}c} {G\left( {a_{1} ,x_{1} ,b_{1} } \right)} & \cdots & {G\left( {a_{L} ,x_{1} ,b_{L} } \right)} \\ \vdots & \cdots & \vdots \\ {G\left( {a_{1} ,x_{N} ,b_{1} } \right)} & \cdots & {G\left( {a_{L} ,x_{N} ,b_{L} } \right)} \\ \end{array} } \right]_{N \times L}\), \(\beta = \left[ {\begin{array}{*{20}c} {\beta_{1}^{\text{T}} } \\ \vdots \\ {\beta_{L}^{\text{T}} } \\ \end{array} } \right]_{L \times m}\) and \(T = \left[ {\begin{array}{*{20}c} {t_{i}^{\text{T}} } \\ \vdots \\ {t_{N}^{\text{T}} } \\ \end{array} } \right]\).

The value of β is determined from the following expression:

where H−1 is the Moore–Penrose generalized inverse (Serre 2002).

ELM uses the same training datasets, testing datasets and normalization technique as used by the GPR and MPMR models. The program of ELM has been constructed by using MATLAB.

2 Results and Discussion

The performance of GPR depends on the proper choice of ε and σ. The design values of ε and σ have been determined by a trial-and-error approach. The developed GPR gives best performance at ε = 0.003 and σ = 0.5. Figure 1 shows the plot between actual and predicted P for training and testing datasets. The performance of GPR has been assessed in terms of coefficient of correlation (R) value. For a good model, the value of R should be close to one. As shown in Fig. 1, the value of R is close to one for training as well as testing datasets.

The success of MPMR depends on the proper choice of t and σ. The trial-and-error approach has been used to determine the design values of t and σ. The developed MPMR gives best performance at t = 0.007 and σ = 0.8. Figure 2 depicts the performance of training and testing datasets. Fig. 2 shows that the value of R is close to one for training as well as testing datasets. Therefore, the developed MPMR shows its ability for prediction of P.

For developing ELM, radial basis function has been adopted as activation function. There are ten hidden nodes in the ELM. The performance of ELM is depicted in Fig. 3. As shown in Fig. 3, the value of R is close to one. So, the developed ELM predicts P reasonable well.

The developed GPR, MPMR and ELM have been compared with the ANN and FEM models. Comparison has been made for testing datasets (Rahman et al. 2001). Figure 4 illustrates the bar chart of R values of the different models. The developed models are also assessed in terms of root mean square error (RMSE) (Kisi et al. 2013), mean absolute percentage error (MAPE), root mean square error-to-observation’s standard deviation ratio (RSR) (Moriasi et al. 2007), normalized mean bias error (NMBE) (Srinivasulu and Jain 2006), weighted mean absolute percentage error (WMAPE), Nash–Sutcliffe coefficient (NS) (Nash and Sutcliffe 1970), variance account factor (VAF), maximum determination coefficient value (R2), performance index (PI) and adjusted determination coefficient (adj. R2) (Ceryan 2014; Chandwani et al. 2015). Table 4 shows the value of the above parameters of the developed models. The value of NS should be close to one for a perfect model. For a good model, the value of VAF should be close to 100. The performance of GPR, MPMR, ELM and ANN models is almost same. The developed MPMR has control over future prediction. However, the developed GPR, ELM and ANN have no control over future prediction. In GPR, it is assumed that the datasets should follow Gaussian distribution. The developed MPMR, ELM and ANN do not assume any data distribution. The developed GPR, ELM and MPMR use two tuning parameters. However, the ANN model uses many tuning parameters. The major limitation of the developed model is that design parameter is determined by the trial-and-error approach. This study adopts sensitivity (S) analysis to determine the effect of each input on P. The concept has been taken from the work of Liong et al (2000). Figure 5 shows that Tk has maximum impact on P.

3 Conclusion

This article examines the capability of GPR, ELM and MPMR for prediction of P of suction caisson. The detailed methodologies of GPR, ELM and MPMR are described in this article. The developed GPR, ELM and MPMR give excellent performance. The performance of GPR, ELM and MPMR is comparable with the ANN model. User can employ the developed models as quick tools for prediction of P. The developed ELM is fast compared with the other developed models. It can be concluded that the developed GPR, ELM and MPMR are excellent models for prediction of P of suction caisson.

References

Albert LF, Holtz RD, Magris E (1987) The super pile system: a feasible alternate foundation for TLP in deep water. In: Proceedings of the 19th annual offshore technology conference Texas U.S.A., pp 307–314

Alborzpour JP, Tew DP, Habershon S (2016) Efficient and accurate evaluation of potential energy matrix elements for quantum dynamics using Gaussian process regression. J Chem Phys 145(17):1–11

Bazi Y, Alajlan N, Melgani F, AlHichri H, Malek S, Yager RR (2014) Differential evolution extreme learning machine for the classification of hyperspectral images. IEEE Geosci Remote Sens Lett 11(6):1066–1070

Cao J, Phillips R. Popescu R (2001) Physical and numerical modeling on suction caissons in clay. In: Proceedings of the 18th Canadian congress of applied mechanics. Memorial University of New Foundland, St. John’s, Canada, pp 217–218

Cao J, Phillips R, Popescu R, Al-Khafaji Z, Audibert JME(2002) Penetration resistance of suction caissons in clay. In: Proceedings of the 12th international of shore and polar engineering conference Kitakyushu, pp 800–806

Cauble DF. (1996) An experimental investigation of the behavior of a model suction caisson in a cohesive soil. PhD Dissertation, Massachusetts Institute of Technology

Ceryan N (2014) Application of support vector machines and relevance vector machines in predicting uniaxial compressive strength of volcanic rocks. J Afr Earth Sc 100:634–644

Chandwani V, Agrawal V, Nagar R (2015) Modeling slump of ready mix concrete using genetic algorithms assisted training of artificial neural networks. Expert Syst Appl 42(2):885–893

Chen J, Chan LLT, Cheng YC (2013) Gaussian process regression based optimal design of combustion systems using flame images. Appl Energy 111:153–160

Chen J, Yu J, Zhang Y (2014) Multivariate video analysis and Gaussian process regression model based soft sensor for online estimation and prediction of nickel pellet size distributions. Comput Chem Eng 64:13–23

Cheng KW, Chen YT, Fang WH (2015) Gaussian process regression-based video anomaly detection and localization with hierarchical feature representation. IEEE Trans Image Process 24(12):5288–5301

Cho Y, Lee TH, Park JB, Kwag DJ, Chung ES, Bang S(2002) Field tests on suction pile installation in sand. In: Proceedings of the 21st international conference on offshore mechanics and arctic engineering, Oslo, Norway, pp 765–771

Clukey EC, Morrison MJA (1993) A centrifuge and analytical study to evaluate suction caissons for TLP applications in the Gulf of Mexico. In: ASCE proceeding conference on foundations, geotechnical special Publications, Texas, U.S.A., October 24–28, pp 141–156

Clukey EC, Morrison MJ, Gariner J, Corte JF (1995) The response of suction caisson in normally consolidated clays to cyclic TLP loading conditions. In: Proceedings of the 27th annual offshore technology conference, Texas, U.S.A., pp 909–918

Datta M, Kumar P (1996) Suction beneath cylindrical anchors in soft clay. In: Proceedings of the sixth international offshore and polar engineering conference, California, U.S.A., pp 544–548

Dyvik R, Anderson KH, Hansen SB, Christophersen HP (1993) Field tests on anchors in clay. I: description. J Geotech Eng 119(10):1515–1531

El Gharbawy, SL, Olson RE (2000) Modeling of suction caisson foundations. In: Proceedings of the 10th international offshore and polar engineering conference, Seattle, USA

Goodman LJ, Lee CN, Walker FJ (1961) The feasibility of vacuum anchorage in soil. Geotechnique 1(4):356–359

Hogervorst JR (1980) Field trails with large diameter suction piles. In: Proceedings of the 12th annual offshore technology conference, Texas, U.S.A., OTC 3817, pp 217–224

Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

Huang GB, Wang DH, Lan Y (2011) Extreme learning machines: a survey. Int J Mach Learn Cybernet 2:107–122

Huang K, Zhang R, Yin XC (2015) Learning imbalanced classifiers locally and globally with one-side probability machine. Neural Process Lett 41(3):311–323

Kecman V (2001) Learning and soft computing: support vector machines, neural networks, and fuzzy logic models. MIT press, Cambridge

Kisi A, Shiri J, Tombul M (2013) Modeling rainfall-runoff process using soft computing techniques. Comput Geosci 51:108–117

Kongkaew W, Pichitlamken J (2012) A Gaussian process regression model for the traveling salesman problem. J Comput Sci 8(10):1749–1758

Lanckriet GRG, El Ghaoui L, Bhattacharyya C, Jordan MI (2002a) Minimax probability machine. In: Dietterich TG, Becker S, Ghahramani Z (eds) Advances in neural information processing systems, vol 14. MIT Press, Cambridge, MA

Lanckriet GRG, Ghaoui LE, Bhattacharyya C, Jordan MI (2002b) A robust minimax approach to classification. Journal of Machine Learning Research. 3:555–582

Larsen P(1989) Suction anchors as an anchoring system for floating offshore constructions. In: Proceedings of the 21st annual offshore technology conference Houston, Texas, U.S.A., pp 535–540

Liong SY, Lim WH, Paudyal GN (2000) River stage forecasting in Bangladesh: neural network approach. J Comput Civil Eng 14(1):1–8

Lu CJ, Shao YE (2012) Forecasting computer products sales by integrating ensemble empirical mode decomposition and extreme learning machine. Mathematical Problems in Engineering

Luke AM (2002) Axial capacity of suction caissons in normally consolidated kaolinite. M.Sc. Thesis, The University of Texas at Austin

Moriasi DN, Arnold JG, Van Liew MW, Bingner RL, Harmel RD, Veith TL (2007) Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Trans ASABE. 50(3):885–900

Nash JE, Sutcliffe JV (1970) River flow forecasting through conceptual models Part I—A discussion of principles. J Hydrol 10(3):282–290

Park D, Rilett LR (1999) Forecasting freeway link travel times with a multi-layer feed forward neural network. Comput Aided Civil Infra Struct Eng 14:358–367

Rahman MS, Wang J, Deng W, Carter JP (2001) A neural network model for the uplift capacity of suction caissons. Comput Geotech 28:269–287

Rao SN, Ravi R, Ganapathy C(1997) Pull out behavior of model suction anchors in soft marine clays. In: Proceedings of the seventh international offshore and polar engineering conference, Honolulu, U.S.A., pp 740–744

Rao SN, Ravi R, Ganapathy C (1997) Behavior of suction anchors in marine clays under TLP loading. In: Proceedings of the 16th international conference on offshore mechanics and arctic engineering, Yokohama: Japan, pp 151–155

Rauch AF. (2004) Personal communication on reverse end bearing capacity

Serre D (2002) T matrices: theory and applications. Springer, Berlin

Shen C, Wang P, Paisitkriangkrai S, Van Den Hengel A (2013) Training effective node classifiers for cascade classification. Int J Comput Vision 103(3):326–347

Singh B, Datta M, Gulhati SK (1996) Pullout behavior of superpile anchors in soft clay under static loading. Mar Georesour Geotechnol 14:217–236

Singh R, Kumar H, Singla RK (2015) An intrusion detection system using network traffic profiling and online sequential extreme learning machine. Expert Syst Appl 42(22):8609–8624

Srinivasulu S, Jain A (2006) A comparative analysis of training methods for artificial neural network rainfall-runoff models. Appl Soft Comput 6:295–306

Steensen Bach, JO. (1992) Recent model tests with suction piles in clay and sand. In: Proceedings of the 24th annual offshore technology conference, Texas, U.S.A., pp 323–330

Tjelta TI, Guttormsen TR, Hermstad J (1986) Large-scale penetration test at a deepwater site. In: Proceedings of the 18th annual offshore technology conference, Texas, U.S.A., pp 201–212

Whittle AJ, Kavvadas MJ (1994) Formulation of MIT-E3 constitutive model for over consolidated clays. Journal of Geotechnical Engineering. 120(1):173–198

Williams CKI (1998) Prediction with Gaussian processes: from linear regression and beyond. In: Jordan MI (ed) Learning and inference in graphical models. Kluwer Academic Press, Dordrecht, pp 599–621

Xie P, Chen XL, Su YP, Liang ZH, Li XL (2013) Feature extraction and recognition of motor imagery EEG based on EMD-multiscale entropy and extreme learning machine. Chin J Biomed Eng 32(6):641–648

Yang L, Ju R (2014) A DC programming approach for feature selection in the minimax probability machine. Int J Comput Intell Syst 7(1):12–24

Yang L, Sun Q (2016) Comparison of chemometric approaches for near-infrared spectroscopic data. Anal Methods 8(8):1914–1923

Zdravkovic L, Potts DM, Jardine RJ (2001) A parametric study of the pull-out capacity of bucket foundations in soft clay. Geotechnique 51(1):55–67

Zhang L, Li J, Lu H (2016) Saliency detection via extreme learning machine. Neurocomputing 218:103–112

Zhou Z, Wang Z, Sun X (2013) Face recognition based on optimal kernel minimax probability machine. J Theor Applied Inf Technol 48(3):1645–1651

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Samui, P., Kim, D., Jagan, J. et al. Determination of Uplift Capacity of Suction Caisson Using Gaussian Process Regression, Minimax Probability Machine Regression and Extreme Learning Machine. Iran J Sci Technol Trans Civ Eng 43 (Suppl 1), 651–657 (2019). https://doi.org/10.1007/s40996-018-0155-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40996-018-0155-7