Abstract

Dempster–Shafer evidence theory is widely adopted in a variety of fields of information fusion. Nevertheless, it is still an open issue about how to avoid the counter-intuitive results to combine the conflicting evidences. In order to overcome this problem, an improved conflicting evidence combination approach based on similarity measure and belief function entropy is proposed. First, the credibility degree of the evidences and their corresponding globe credibility degree are calculated on account of the modified cosine similarity measure of the basic probability assignment. Next, according to the globe credibility degree of the evidences, the primitive evidences are divided into two categories, namely, the reliable evidences and the unreliable evidences. In addition, for strengthening the positive effect of the reliable evidences and alleviating the negative impact of the unreliable evidences, a reward function and a penalty function are designed, respectively, to measure the information volume of the different types of the evidences by taking advantage of the Deng entropy function. Then, the weight value that obtained from the first step is modified by making use of the measured information volume. Finally, the modified weights of the evidences are applied for adjusting the body of the evidences before using the Dempster’s combination rule. A numerical example is provided to illustrate that the proposed method is reasonable and efficient in dealing with the conflicting evidences with better convergence. The results show that the proposed method is not only efficient, but also reliable. It outperforms other related methods which can recognise the target more accurate by 98.92%.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the applications of wireless sensor networks, the data collected from the sensors are often imprecise and uncertain. It is still an open issue about how to model and cope with the uncertainty information. To overcome this problem, many useful math tools are proposed, such as the rough sets theory [1, 2], fuzzy sets theory [3,4,5,6,7,8], evidence theory [9, 10], D numbers theory [11, 12], and Z numbers [13,14,15]. In addition, the approaches with hybrid intelligent algorithms are used for decision-making [16,17,18,19,20], supplier selection [21], sensor networks [22] classification recognition [23], risk analysis [24], uncertain information processing [25, 26], optimisation problem [27,28,29], product development [30, 31], and influence diagram [32].

Dempster–Shafer evidence theory, as an efficient uncertainty reasoning tool, was firstly proposed by Dempster [9] and then had been developed by Shafer [10]. On account of the flexibility and effectiveness in modelling the uncertainty and imprecision without relying on prior information, Dempster–Shafer evidence theory is widely used in many fields of information fusion [33,34,35,36]. In spite of having a lot of advantages, Dempster–Shafer evidence theory may generate counter-intuitive results, when it combines very conflicting evidences. To solve this problem, many approaches were proposed and they are classified into two types of methodologies [37,38,39]. One of the methodologies intends to modify the Dempster’s combination rule, and the other one of the methodologies attempts to pretreat the bodies of the evidences before using the Dempster’s combination rule. The main research works focusing on the first methodology include Yager’s method [40], Dubois and Prade’s method [41], and Smets’s method [42]. In fact, the good properties, like commutativity and associativity, are often broken due to modifying the combination rule. Additionally, if the counter-intuitive results are caused by the sensor failure, such a modification is regarded as inconsequence. Therefore, researchers tend to pretreat the bodies of the evidences for the sake of resolving the problem of combining the conflicting evidences.

As to the second methodology, it is very difficult to determine the weight vector. At present, the main research works focusing on the second methodology include Murphy’s method [43], Deng et al.’s method [44], Zhang et al.’s method [45], and Yuan et al.’s method [46]. Murphy [43] proposed to modify and average the bodies of the evidences first, and then combining the averaged evidences. Deng et al.’s weighted average approach [44] overcame the weakness of Murphy’s method to some extent by regarding the distance of evidences. Zhang et al. [45] made an improvement based on Deng et al.’s method and introduced the concept of vector space to handle the conflicting evidences. However, the effect of evidence itself on the weight is ignored. Later on, in order to express the effect of evidence itself to further improve the performance of the fusion result, Yuan et al. [46] introduced the belief entropy [47, 48].

Nevertheless to say, most of the above methods employed Jousselme distance as one of the critical factors to determine the weight vector for modifying the bodies of the evidences, whereas the similarity measure of the evidences [49] based on the modified cosine similarity of the evidence that regards the three factors, i.e. angle, distance, and vector norm, is much more precisely for indicating the clarity of the evidence comparing with the Jousselme distance. It can thus be seen that there is still some room to obtain more appropriate weight for each evidence to achieve more accurate combination results in sensor fusion.

Therefore, in this paper, an improved combination method is proposed to solve the problem of conflicting evidence combination based on the similarity measure of the evidences [49] and belief function entropy [47]. The contribution of this paper is represented below. Based on the modified cosine similarity measure of the basic probability assignment, both of the credibility degree of the evidences and their corresponding globe credibility degree are first calculated. Next, according to the globe credibility degree of the evidences, the primitive evidences are divided into two categories, namely, the reliable evidences and the unreliable evidences. In addition, in order to measure the information volume of the different categories of the evidences, a reward function and a penalty function are designed, respectively, for not only enhancing the positive effect of the reliable evidences, but also alleviating the negative impact of the unreliable evidences on account of the Deng entropy function. Then, the weight value obtained from the first step is modified by making use of the measured information volume. Finally, the modified weight of the evidences is obtained and applied in adjusting the body of the evidences before using the Dempster’s combination rule with \((t - 1)\) times, when there are t number of evidences. The experimental results illustrate that the proposed method is reasonable and efficient in coping with the conflicting evidences with fast convergence.

The rest of this paper is organised as follows. Section 2 briefly introduces the preliminaries of this paper. After that, Sect. 3 proposes the improved method that is based on the similarity measure and belief function entropy. Section 4 illustrates a numerical example to show the effectiveness of the proposed method. Finally, Sect. 5 gives a conclusion.

2 Preliminaries

2.1 Dempster–Shafer Evidence Theory

Dempster–Shafer evident theory [9, 50] is applied to deal with uncertain information, belonging to the category of artificial intelligence. Because of the flexibility and effectiveness in modelling both of the uncertainty and imprecision without prior information, Dempster–Shafer evident theory requires weaker conditions than the Bayesian theory of probability. When the probability is confirmed, Dempster–Shafer evident theory could convert into Bayesian theory, so it is considered as an extension of the Bayesian theory. Dempster–Shafer evident theory has the advantage that it can directly express the “uncertainty” by allocating the probability into the subsets of the set which consists of multiple objects, rather than to an individual object. Furthermore, it is capable of combining the bodies of the evidences to derive a new evidence. The basic concepts are introduced as below.

Definition 1

(Frame of discernment) Let U be a set of mutually exclusive and collectively exhaustive, indicated by

The set U is called frame of discernment. The power set of U is indicated by \(2^{U}\), where

and \(\emptyset\) is an empty set. If \(A \in 2^{U}\), A is called a proposition.

Definition 2

(Mass function) For a frame of discernment U, a mass function is a mapping m from \(2^{U}\) to [0, 1], formally defined by

which satisfies the following condition:

In the Dempster–Shafer evident theory, a mass function can be also called as a basic probability assignment (BPA). If m(A) is greater than 0, A will be called as a focal element, and the union of all of the focal elements is called as the core of the mass function.

Definition 3

(Belief function) For a proposition \(A \subseteq U\), the belief function \(Bel: 2^{U} \rightarrow [0, 1]\) is defined as

The plausibility function \(Pl: 2^{U} \rightarrow [0, 1]\) is defined as

where \(\bar{A} = U - A\).

Apparently, Pl(A) is equal or greater than Bel(A), where the function Bel is the lower limit function of proposition A and the function Pl is the upper limit function of proposition A.

Definition 4

(Dempster’s rule of combination) Let two BPAs \(m_1\) and \(m_2\) on the frame of discernment U and assuming that these BPAs are independent, Dempster’s rule of combination, denoted by \(m = m_1 \oplus m_2\), which is called as the orthogonal sum, is defined as below:

with

where B and C are also the elements of \(2^{U}\), and K is a constant that presents the conflict between two BPAs.

Notice that the Dempster’s combination rule is only practicable for the two BPAs with the condition \(K < 1\).

2.2 Modified Cosine Similarity Measure of BPAs

The similarity between two bodies of the evidences is used to determine whether two evidences are conflict or not. The high similarity means that there is little conflict between the two bodies of the evidences, while the low similarity means that there is high conflict between the two bodies of the evidences. Cosine similarity [51, 52] measures the similarity between two vectors of an inner product space, namely, measuring the cosine of the angle between two vectors based on the direction, but ignoring the impact of the distance of two vectors. Zhang et al. [52] presented an integrated similarity measurement on the basis of distance and angle. Although the integrated similarity measurement considers the strengths of the distance and direction of two vectors, deficiencies still exist. In order to measure the similarity between two vectors more precisely, the modified cosine similarity measure [49] is proposed based on three important factors, i.e. angle, distance, and vector norm.

Definition 5

(Modified cosine similarity of vectors) Let \(B = [b_1, b_2, \ldots , b_n]\) and \(C = [c_1, c_2, \ldots , c_n]\) be two vectors of \(R^n\). The modified cosine similarity between vectors B and C is defined as

where \(\alpha\) is a constant whose value is greater than 1, d is the Euclidean distance between vectors B and C, \(\alpha ^{-d}\) is the distance-based similarity measurement, \(min\left(\frac{|B|}{|C|}, \frac{|C|}{|B|}\right)\) is the minimum of \(\frac{|B|}{|C|}\) and \(\frac{|C|}{|B|}\), and \(si_{cos}(B, C)\) is the cosine similarity. The larger the \(\alpha\) is, the greater the distance impact on vector similarity will be.

Definition 6

(New similarity of BPAs based on the modified cosine similarity) Under the frame of discernment \({\varTheta }= \{v_1, v_2, \ldots , v_N\}\), let \(m_1\) and \(m_2\) be the BPAs of two evidence sources, respectively. The two vectors are expressed as

Then, on the basis of the modified cosine similarity, the belief function vector similarity, denoted as \(SI(Bel_1, Bel_2)\) and the plausibility function vector similarity, denoted as \(SI(Pl_1, Pl_2)\) can be calculated. The new similarity of BPAs is defined as

with

where \(\lambda\) is the total uncertainty of BPAs, which is defined as

Because \(Pl_i(v_j) \ge Bel_i(v_j)\) and \(Bel \ge 0\), if \(Pl_i(v_j) = Bel_i(v_j)\), then \(\lambda = 0\); otherwise, if \(Bel_i(v_j) = 0\), then \(\lambda = 1\). The larger the uncertainty \(\lambda\) is, the higher the influence on the similarity of BPA will be.

2.3 Belief Entropy

A novel belief entropy which is called as the Deng entropy is first proposed by Deng [47]. As the generalisation of the Shannon entropy [53, 54], the Deng entropy is an efficient math tool to measure the uncertain information. It can be used in evidence theory, in which the uncertain information is expressed by the BPA. In such a situation that the uncertainty is expressed by probability distribution, the uncertain degree that is measured by the Deng entropy will be the same as the uncertain degree that is measured by the Shannon entropy. The basic concepts are introduced below.

Let \(A_i\) be a hypothesis of the belief function m, \(|A_i|\) is the cardinality of set \(A_i\). Deng entropy \(E_d\) of set \(A_i\) is defined as follows:

When the belief value is only allocated to the single element, Deng entropy degenerates to Shannon entropy, i.e.

The greater the cardinality of hypotheses is, the greater the Deng entropy of evidence is, so that the evidence contains more information. When an evidence has a big Deng entropy, it is supposed to be better supported by other evidences, which indicates that this evidence plays an important role in the final combination.

The following examples show the effectiveness of the Deng entropy.

Example 1

Supposing there exists a mass function m(A) = 1, its corresponding Shannon entropy, denoted as H and Deng entropy, denoted as \(E_d\), can be obtained as below:

Example 2

Supposing there exists the mass function \(m(A) = m(B) = m(C) = m(D) = 1/4\) in a frame of discernment \(X = \{A, B, C, D\}\), its corresponding Shannon entropy, denoted as H and Deng entropy, denoted as \(E_d\), can be obtained as below:

Example 3

Supposing there exists the mass function \(m(A,B,C,D) = 1\) in a frame of discernment \(X = \{A, B, C, D\}\), its corresponding Deng entropy, denoted as \(E_d\), can be obtained as below:

From Examples 1 and 2, we can notice that when the belief is only allocated to the single elements, the Deng entropy and Shannon entropy are the same. Example 3 shows that the Deng entropy can efficiently measure the uncertainty when the belief is assigned to the multiple elements.

3 The Proposed Method

Formal problem statement Assuming that there are t number of evidences with the bodies of the evidences \(m_i\) \((i = 1, \ldots , t\)), the pretreatment of the bodies of the evidences can be defined as

where WAE(m) represents the weighted average evidence BPA of the primitive t evidences, and \(w_i\) represents the corresponding weight degree of \(m_i\). Then, the final fusing result can be calculated by taking advantage of the Dempster’s rule to combine the weighted average evidence WAE(m) for \(t - 1\) times. From Eq. (16), we recognise that it is critical to find an appropriate weight \(w_i\) for each primitive evidence \(m_i\), because it directly impacts the performance of the final fusing result.

Jousselme distance [55] is widely applied in reflecting the evidence’s clarity, in which the higher the uncertainty of the evidence is, the lower the reliability of the evidence is, while the lower the uncertainty of the evidence is, the higher the reliability of the evidence is. Nevertheless to say, Jousselme distance is not good enough to precisely describe the characteristics of conflict in some certain cases [49]. Comparing with the Jousselme distance, the modified cosine similarity of the evidence [49] that regards the three factors, i.e. angle, distance, and vector norm, is much more precisely for further indicating the clarity of the evidence. Additionally, ambiguity measure is widely applied in uncertainty measure, whereas because of lacking of the information in the Pignistic probability conversion process, a novel belief entropy called as Deng entropy can better measure the uncertainty of the evidence comparing with the ambiguity measure. The below examples depict the Deng entropy’s effectiveness.

Example 4

Supposing there exists the mass function \(m(A) = m(B) = m(C) = 1/3\) in a frame of discernment \(X = \{A, B, C\}\), its corresponding ambiguity measure, denoted as AM and Deng entropy, denoted as \(E_d\), can be obtained as below:

Example 5

Supposing there exists the mass function \(m(A) = 0.05\), \(m(B) = 0.05\), \(m(C) = 0.05\), \(m(ABC) = 0.85\) in a frame of discernment \(X = \{A, B, C\}\), its corresponding ambiguity measure, denoted as AM and Deng entropy, denoted as \(E_d\), can be obtained as below:

Examples 4 and 5 illustrate the effectiveness of the Deng entropy which can better measure the uncertainty of the evidence comparing with the ambiguity measure. Specifically, m in Example 4 is supposed to be more certainty than m in Example 5. However, the value of AM in Examples 4 and 5 is identical. Conversely, the Deng entropy \(E_d = 3.2338\) in Example 4 is more bigger than the Deng entropy \(E_d = 1.5850\) in Example 2 where this result is consistent with the intuition.

Furthermore, due to the environment or the sensor failure problems, the collected evidences are supposed to be divided into two categories, namely, the reliable evidences and the unreliable evidences. Hence, how to identify them and adjust the weights of the reliable evidences and the unreliable evidences, respectively, to further strengthen the positive impact of the reliable evidences and mitigate the negative impact of the unreliable evidences play a very important role in the performance of the final fusing results.

Ultimately, we leverage the modified cosine similarity of the evidence to judge the reliability of the evidences. When the similarity measure between the target evidence and other alternative evidence is great, which means that the target evidence is supported by this evidence, so that the target evidence is supposed to be regarded as a reliable evidence. Otherwise, when the similarity measure between the target evidence and other alternative evidence is small, which means that the target evidence is not supported by this evidence, so that the target evidence is supposed to be regarded as an unreliable evidence. In order to enhance the positive effects of the reliable evidences, the greater weight should be allocated to the reliable evidences. On the other hand, in order to weaken the negative effects of the unreliable evidences, the smaller weight should be allocated to the unreliable evidences. On this basis, we design a reward function and a penalty function to further adjust the weights of the evidences in terms of different types of the evidences.

Definition 7

(Reward function) For a reliable evidence \(m_i\) \((i = 1, \ldots , t\)) with Deng entropy \(E_d\), the reward function is formally defined by

Property 1

The reward function is a monotone increasing function.

Proof

According to the property of the \(exp[\cdot ]\) function, the reward function is a monotone increasing function. Therefore, for the reliable evidences, the greater entropy the evidence has, the better support the evidence has from other evidences and the greater weight can be assigned to the reliable evidence. \(\square\)

Definition 8

(Penalty function) For an unreliable evidence \(m_i\) \((i = 1, \ldots , t\)) with Deng entropy \(E_d\), the penalty function is formally defined by

Property 2

The penalty function is a monotone increasing function.

Proof

Supposing there are two bodies of the evidences \(m_p\) and \(m_q\) with Deng entropy \(E_d(m_p)\) and \(E_d(m_q)\) where \(E_d(m_q) > E_d(m_p)\) \((1 \le p < q \le t\)), then

Because \(E_d(m_q) > E_d(m_p)\), according to the property of the \(exp[\cdot ]\) function,

Hence, \(InV_q - InV_p > 0\), and \(InV_q > InV_p\).

In short, it is a monotone increasing function. Therefore, for the unreliable evidences, the smaller entropy the evidence has, the less support the evidence has from other evidences and the smaller weight can be assigned to the unreliable evidence. \(\square\)

In summary, an improved combination method is proposed in terms of the similarity measure of the evidences and belief function entropy. The flow chart of the proposed method is shown in Fig. 1. And the concrete procedures are listed as below.

- Step 1 :

-

The similarity measure \(SI_{BPA}(ij)\) (\(i,j = 1, 2, \ldots , t\)) between the bodies of the evidences \(m_i\) and \(m_j\) can be obtained by Eqs. (9)–(13). Then, a similarity measure matrix (SMM) can be constructed as follows:

$$\begin{aligned} {\small SMM = \begin{bmatrix} SI_{BPA}(11)&\cdots&SI_{BPA}(1i)&\cdots&SI_{BPA}(1t) \\ \vdots&\vdots&\vdots&\vdots&\vdots \\ SI_{BPA}(i1)&\cdots&SI_{BPA}(ii)&\cdots&SI_{BPA}(it) \\ \vdots&\vdots&\vdots&\vdots&\vdots \\ SI_{BPA}(t1)&\cdots&SI_{BPA}(ti)&\cdots&SI_{BPA}(tt) \\ \end{bmatrix}.\quad } \end{aligned}$$(19) - Step 2 :

-

The support degree of the body of the evidence \(m_i\) is defined as follows:

$$\begin{aligned} Sup(m_i) = \sum _{j=1, j \ne i}^{t} SI_{BPA}(ij), \quad i,j = 1, 2, \ldots , t. \end{aligned}$$(20) - Step 3 :

-

The credibility degree \(Crd_i\) of the body of the evidence \(m_i\) is defined as follows:

$$\begin{aligned} Crd_i = \frac{Sup(m_i)}{\sum _{i=1}^{t} Sup(m_i)}, \quad i = 1, 2, \ldots , t. \end{aligned}$$(21) - Step 4 :

-

The global credibility degree, denoted as \(Crd_{global}\) can be obtained as follows:

$$\begin{aligned} Crd_{global} = \frac{\sum _{i=1}^{t} Crd_i}{t}, \quad i = 1, 2, \ldots , t. \end{aligned}$$(22) - Step 5 :

-

The primitive evidences \(m_i\) \((i = 1, 2, \ldots , t)\) are classified as the reliable evidences and the unreliable evidences:

$$\begin{aligned} m_i = \left\{ \begin{array}{ll} {\text {reliable evidence}},&{}{Crd_i \ge Crd_{global},} \\ {\text {unreliable evidence}},&{}{Crd_i < Crd_{global}.} \end{array} \right. \end{aligned}$$(23) - Step 6 :

-

The Deng entropy \(E_d\) of each body of evidence can be calculated based on Eq. (14).

- Step 7 :

-

On account of the reward function, i.e. Eq. (17), and penalty function, i.e. Eq. (18), the information volume \(InV_i\) \((i = 1, 2, \ldots , t)\) is used to measure the uncertain information for the reliable evidences and the unreliable evidences, respectively.

$$\begin{aligned} InV_i = \left\{ \begin{array}{ll} {exp[E_d(m_i)],}&{}{\text {if} } m_i\;{\text {belongs\;to\;the\;reliable\; evidence}} \\ {exp\left[ -\left( E^{max}_d(m_i)-E_d(m_i)\right) \right] ,}&{}{ \text {if}}\;m_i\;{\text {belongs\;to\;the\;unreliable\;evidence}}\\. \end{array} \right. \end{aligned}$$(24) - Step 8 :

-

Based on both of the credibility degree \(Crd_i\) and the information volume \(InV_i\) of the evidence, the modified weight \(Mod\_Crd_i\) of the body of the evidence is defined as follows:

$$\begin{aligned} Mod\_Crd_i = \frac{Crd_i \times InV_i}{\sum _{i=1}^{t} (Crd_i \times InV_i)}, \quad i = 1, 2, \ldots , t. \end{aligned}$$(25) - Step 9 :

-

On the basis of the modified weight of the body of the evidence \(Mod\_Crd_i\), the weighted average evidence WAE(m) can be obtained as follows:

$$\begin{aligned} WAE(m) = \sum \limits _{i=1}^{t} (Mod\_Crd_i \times m_i), \quad i = 1, 2, \ldots , t. \end{aligned}$$(26) - Step 10 :

-

The weighted average evidence WAE(m) is combined through Dempster’s combination rule, i.e. Eq. (7) by \(t - 1\) times, if there are t number of evidences. Then, the final combination result of multiple evidences can be obtained.

4 Application

In this section, for the sake of demonstrating the effectiveness of the proposal, a numerical example is illustrated.

Example 6

Supposing there are three objects A, B, and C in a multiple sensor-based target recognition system. The frame of discernment is provided as \({\varTheta }= \{A, B, C\}\) that is complete. Suppose that there are five different types of sensors to detect the objects, and five BPAs are collected by the system which are shown in Table 1.

4.1 Evidence Combination Based on the Proposed Method

First, according to Eqs. (19) and (20), the support degree of each body of evidence is calculated as shown in Table 2.

Secondly, by leveraging Eq. (21), the credibility degree of each body of evidence is obtained as shown in Table 3.

Thirdly, by using Eq. (22), the global credibility degree of the evidences can be obtained as shown in Table 3.

Fourthly, by adopting Eq. (23), the primitive evidences are divided into the reliable evidences and the unreliable evidences as shown in Table 4.

Fifthly, based on Eq. (14), the Deng entropy of each body of evidence can be calculated as shown in Table 5.

Sixthly, by applying Eq. (24), the uncertain information for the reliable evidences and the unreliable evidences are measured, respectively, as shown in Table 6.

Seventhly, on the basis of Eq. (25), the modified weight of each body of evidence is generated as shown in Table 7.

Eighthly, according to Eq. (26), the weighted average evidence is computed as shown in Table 8.

Ninthly, by leveraging Eq. (7), the final combination result of multiple evidences can be obtained in Table 9 through combining the weighted average evidence with four times.

4.2 Discussion

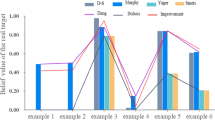

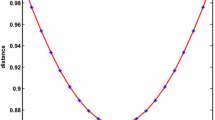

Apparently, we can see that \(m_2\) is highly conflict with other evidences in Example 6. The fusing results by making use of different combination approaches are presented in Table 9. The comparisons of the BPA of the objectives based on different combination rules are shown in Figs. 2, 3, 4, and 5.

For the Dempster’s combination rule [9], it generates counter-intuitive results B and C, when the number of evidences increases from 2 to 5, although the other evidences support the target A.

When there are three evidences, Murphy [43] and Deng et al. [44]’s methods cannot make decisions, because the belief degree assigned to the object A by these two methods is less than 50%. Although Jiang et al. [49], Yuan et al. [46], and the proposed method recognise that the target is A, the belief degree assigned to the target A by the proposed method achieves up to 87.51%, while the belief degree assigned to the target A by Jiang et al. [49] and Yuan et al. [46]’s methods is 57.61 and 82.74%, respectively. It is obvious that the proposed method is not only efficient but also reliable, even if there are only three evidences which include the highly conflicting evidence \(m_2\).

As the number of evidences increases to 5, the accuracy of recognition by the proposed method is improved to 98.92%, which is more greater than Murphy [43], Deng et al. [44], Jiang et al. [49], and Yuan et al. [46]’s methods. Consequently, the proposed method can deal with the conflicting evidences effectually and present reasonable results with better convergence.

In short, the proposed method outperforms other approaches. This is because the proposed method first takes advantage of the modified cosine similarity measure of basic probability assignment. Moreover, the proposed method considers different categories of the evidences and makes use of the Deng entropy function to measure the information volume in terms of the evidence types, where a reward function and a penalty function are designed. After implementing these procedures, the weight of reliable evidences is increased, while the weight of unreliable evidences is decreased, so that the positive effects and negative impacts of the evidences can be enhanced and alleviated, respectively, on the final fusing results than other approaches.

5 Conclusion

In this paper, a new combination approach of the conflicting evidences based on similarity measure of the evidences and belief function entropy was proposed by regarding the similarity among the evidences and the effect of the evidence itself on the weight. The proposed method is a kind of pretreatment of the bodies of the evidences that is effective and feasible to cope with the conflicting evidence combination problem under sensor environment. A numerical example was illustrated to show the efficiency of the proposal with fast convergence.

In the future research work, more factors that may impact the weight of each evidence will be analysed and taken into account to generate more appropriately averaged evidence in the decision-making process.

References

Walczak, B., Massart, D.: Rough sets theory. Chemom. Intell. Lab. Syst. 47(1), 1–16 (1999)

Greco, S., Matarazzo, B., Slowinski, R.: Rough sets theory for multicriteria decision analysis. Eur. J. Oper. Res. 129(1), 1–47 (2001)

Zadeh, L.A.: Fuzzy sets. Inf. Control 8(3), 338–353 (1965)

Uslan, V., Seker, H.: Quantitative prediction of peptide binding affinity by using hybrid fuzzy support vector regression. Appl. Soft Comput. 43, 210–221 (2016)

Liu, H.-C., You, J.-X., You, X.-Y., Shan, M.-M.: A novel approach for failure mode and effects analysis using combination weighting and fuzzy VIKOR method. Appl. Soft Comput. 28, 579–588 (2015)

Mardani, A., Jusoh, A., Zavadskas, E.K.: Fuzzy multiple criteria decision-making techniques and applications-two decades review from 1994 to 2014. Expert Syst. Appl. 42(8), 4126–4148 (2015)

Zhang, R., Ashuri, B., Deng, Y.: A novel method for forecasting time series based on fuzzy logic and visibility graph. Adv. Data Anal. Classif. (2017). https://doi.org/10.1007/s11634-017-0300-3

Chan, K.Y., Engelke, U.: Varying spread fuzzy regression for affective quality estimation. IEEE Trans. Fuzzy Syst. 25(3), 594–613 (2017)

Dempster, A.P.: Upper and lower probabilities induced by a multivalued mapping. Ann. Math. Stat. 38(2), 325–339 (1967)

Shafer, G., et al.: A Mathematical Theory of Evidence, vol. 1. Princeton University Press, Princeton (1976)

Zhou, X., Deng, X., Deng, Y., Mahadevan, S.: Dependence assessment in human reliability analysis based on D numbers and AHP. Nucl. Eng. Des. 313, 243–252 (2017)

Mo, H., Deng, Y.: A new aggregating operator for linguistic information based on D numbers. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 24(06), 831–846 (2016)

Zadeh, L.A.: A note on Z-numbers. Inf. Sci. 181(14), 2923–2932 (2011)

Mohamad, D., Shaharani, S.A., Kamis, N.H.: A Z-number-based decision making procedure with ranking fuzzy numbers method. In: AIP Conference Proceedings, vol. 1635, pp. 160–166. AIP (2014)

Bakar, A.S.A., Gegov, A.: Multi-layer decision methodology for ranking Z-numbers. Int. J. Comput. Intell. Syst. 8(2), 395–406 (2015)

Deng, Y.: Generalized evidence theory. Appl. Intell. 43(3), 530–543 (2015)

Rikhtegar, N., Mansouri, N., Ahadi Oroumieh, A., Yazdani-Chamzini, A., Kazimieras Zavadskas, E., Kildienė, S.: Environmental impact assessment based on group decision-making methods in mining projects. Econ. Res. Ekonomska Istraživanja 27(1), 378–392 (2014)

Chan, K.Y., Kwong, C., Law, M.: A fuzzy ordinary regression method for modeling customer preference in tea maker design. Neurocomputing 142, 147–154 (2014)

Modrak, V., Bednar, S., Semanco, P.: Decision-making approach to selecting optimal platform of service variants. In: Mathematical Problems in Engineering, vol. 2016 (2016)

Fei, L., Wang, H., Chen, L., Deng, Y.: A new vector valued similarity measure for intuitionistic fuzzy sets based on OWA operators. Iran. J. Fuzzy Syst. 15(5), 31–49 (2018)

Liu, T., Deng, Y., Chan, F.: Evidential supplier selection based on DEMATEL and game theory. Int. J. Fuzzy Syst. (2017). https://doi.org/10.1007/s40815-017-0400-4

Liu, J., Lian, F., Mallick, M.: Distributed compressed sensing based joint detection and tracking for multistatic radar system. Inf. Sci. 369, 100–118 (2016)

Liu, Z.-G., Pan, Q., Dezert, J., Martin, A.: Adaptive imputation of missing values for incomplete pattern classification. Pattern Recognit. 52, 85–95 (2016)

Jiang, W., Xie, C., Zhuang, M., Tang, Y.: Failure mode and effects analysis based on a novel fuzzy evidential method. Appl. Soft Comput. 57, 672–683 (2017)

Deng, X., Jiang, W., Zhang, J.: Zero-sum matrix game with payoffs of Dempster–Shafer belief structures and its applications on sensors. Sensors 17(4), 922 (2017)

Yang, Y., Han, D.: A new distance-based total uncertainty measure in the theory of belief functions. Knowl. Based Syst. 94, 114–123 (2016)

Zhang, X., Adamatzky, A., Yang, X.-S., Yang, H., Mahadevan, S., Deng, Y.: A physarum-inspired approach to supply chain network design. Sci. China Inf. Sci. 59(5), 052203 (2016)

Dong, Y., Wang, J., Chen, F., Hu, Y., Deng, Y.: Location of facility based on simulated annealing and “ZKW” algorithms. In: Mathematical Problems in Engineering, vol. 2017

Hu, Y., Du, F., Zhang, H.L.: Investigation of unsteady aerodynamics effects in cycloidal rotor using RANS solver. Aeronaut. J. 120(1228), 956–970 (2016)

Chan, K.Y., Ling, S.H.: A forward selection based fuzzy regression for new product development that correlates engineering characteristics with consumer preferences. J. Intell. Fuzzy Syst. 30(3), 1869–1880 (2016)

Chan, K.Y., Lam, H.K., Dillon, T.S., Ling, S.H.: A stepwise-based fuzzy regression procedure for developing customer preference models in new product development. IEEE Trans. Fuzzy Syst. 23(5), 1728–1745 (2015)

Zheng, H., Deng, Y., Hu, Y.: Fuzzy evidential influence diagram and its evaluation algorithm. Knowl. Based Syst. 131, 28–45 (2017)

Ma, J., Liu, W., Miller, P., Zhou, H.: An evidential fusion approach for gender profiling. Inf. Sci. 333, 10–20 (2016)

Zheng, X., Deng, Y.: Dependence assessment in human reliability analysis based on evidence credibility decay model and IOWA operator. Ann. Nucl. Energy 112, 673–684 (2018)

Zheng, H., Deng, Y.: Evaluation method based on fuzzy relations between Dempster–Shafer belief structure. Int. J. Intell. Syst. (2017). https://doi.org/10.1002/int.21956

Kang, B., Chhipi-Shrestha, G., Deng, Y., Mori, J., Hewage, K., Sadiq, R.: Development of a predictive model for Clostridium difficile infection incidence in hospitals using Gaussian mixture model and Dempster–Shafer theroy. In: Stochastic Environmental Research and Risk Assessment (2017). https://doi.org/10.1007/s00477-017-1459-z

Lefevre, E., Colot, O., Vannoorenberghe, P.: Belief function combination and conflict management. Inf. Fus. 3(2), 149–162 (2002)

Han, D., Deng, Y., Han, C.-Z., Hou, Z.: Weighted evidence combination based on distance of evidence and uncertainty measure. J. Infrared Millim. Waves 30(5), 396–400 (2011)

Jiang, W., Wang, S., Liu, X., Zheng, H., Wei, B.: Evidence conflict measure based on OWA operator in open world. PloS ONE 12(5), e0177828 (2017)

Yager, R.R.: On the Dempster–Shafer framework and new combination rules. Inf. Sci. 41(2), 93–137 (1987)

Dubois, D., Prade, H.: Representation and combination of uncertainty with belief functions and possibility measures. Comput. Intell. 4(3), 244–264 (1988)

Smets, P.: The combination of evidence in the transferable belief model. IEEE Trans. Pattern Anal. Mach. Intell. 12(5), 447–458 (1990)

Murphy, C.K.: Combining belief functions when evidence conflicts. Decis. Support Syst. 29(1), 1–9 (2000)

Deng, Y., Shi, W., Zhu, Z., Liu, Q.: Combining belief functions based on distance of evidence. Decis. Support Syst. 38(3), 489–493 (2004)

Zhang, Z., Liu, T., Chen, D., Zhang, W.: Novel algorithm for identifying and fusing conflicting data in wireless sensor networks. Sensors 14(6), 9562–9581 (2014)

Yuan, K., Xiao, F., Fei, L., Kang, B., Deng, Y.: Conflict management based on belief function entropy in sensor fusion. SpringerPlus 5(1), 638 (2016)

Deng, Y.: Deng entropy. Chaos Solitons Fractals 91, 549–553 (2016)

Zhang, Q., Li, M., Deng, Y.: Measure the structure similarity of nodes in complex networks based on relative entropy. Physica A Stat. Mech. Appl. 491, 749–763 (2017)

Jiang, W., Wei, B., Qin, X., Zhan, J., Tang, Y.: Sensor data fusion based on a new conflict measure. In: Mathematical Problems in Engineering, vol. 2016 (2016)

Shafer, G.: A mathematical theory of evidence. Technometrics 20(1), 242 (1978)

Jousselme, A.-L., Maupin, P.: Distances in evidence theory: comprehensive survey and generalizations. Int. J. Approx. Reason. 53(2), 118–145 (2012)

Zhang, J., Korfhage, R.R.: A distance and angle similarity measure method. J. Assoc. Inf. Sci. Technol. 50(9), 772 (1999)

Shannon, C.E.: A mathematical theory of communication. ACM SIGMOBILE Mobile Comput. Commun. Rev. 5(1), 3–55 (2001)

Yager, R.R.: Entropy and specificity in a mathematical theory of evidence. Int. J. Gen. Sys. 9(4), 249–260 (1983)

Jousselme, A.-L., Grenier, D., Bossé, É.: A new distance between two bodies of evidence. Inf. Fus. 2(2), 91–101 (2001)

Acknowledgements

This research is supported by the Fundamental Research Funds for the Central Universities (Grant No. SWU115008), the National Natural Science Foundation of China (Nos. 61672435, 61702427, 61702426), and the 1000-Plan of Chongqing by Southwest University (No. SWU116007).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

All the authors declare that they have no conflict of interest.

Rights and permissions

About this article

Cite this article

Xiao, F. An Improved Method for Combining Conflicting Evidences Based on the Similarity Measure and Belief Function Entropy. Int. J. Fuzzy Syst. 20, 1256–1266 (2018). https://doi.org/10.1007/s40815-017-0436-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40815-017-0436-5