Abstract

In this paper, we investigate the estimation problems of unknown parameters of the Kumaraswamy distribution under type I progressive hybrid censoring. This censoring scheme is a combination of progressive type I and hybrid censoring schemes. We derive the maximum likelihood estimates of parameters using an expectation-maximization algorithm. Bayes estimates are obtained under different loss functions using the Lindley method and importance sampling procedure. The highest posterior density intervals of unknown parameters are constructed as well. We also obtain prediction estimates and prediction intervals for censored observations. A Monte Carlo simulation study is performed to compare proposed methods and one real data set is analyzed for illustrative purposes.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In many practical studies of interest including survival analysis, clinical trials, industrial and mechanical applications, often reliability and life testing experiments are performed and based on observed data, different procedures can be used to obtain various inferences upon relevant unknown quantities such as failure probabilities, quantiles, reliability characteristics and so on. In general, efficiency of different inferences rely upon observed data. There are many situations including life testing experiments where observed data are censored in nature. In the literature, different censoring methodologies have been proposed to appropriately analyze various physical phenomena. Type I and type II censoring schemes are the two most commonly used procedures in this regard. Consider a situation where n items are put on a life testing experiment. Then, in type I censoring, the experiment continues up to a pre-specified time duration T and no observation is recorded after this time point. Similarly, in type II censoring, it continues until a pre-fixed number of failures m (\(\le n\)) has been observed. The drawback of type I censoring is that one may not collect enough failure observations before the end of experiment, and the drawback of type II censoring is that the experimental time may be very long. Epstein [18] initially discussed the concept type I hybrid censoring as a mixture of these two basic censoring schemes. In this case, the experiment is terminated at a random time \(T_0\) given by \(\min \{X_m, T\}\), where T is a pre-specified time and \(X_m\) is the m-th failure time. Childs et al. [10] proposed a life test, called type II hybrid censoring, that stops when a pre-specified m number of failure times is observed or the time T has reached, whichever happens later. That is, the termination time of the experiment is \(T_0=\max \{X_m, T\}\). According to the above construction, the number of failure observations is random. In particular, it is possible to have less than m failure observations in type I hybrid censoring, while in type II hybrid censoring, we will have at least m failure observations. If an experimenter desires to remove live units at points other than the final termination point of a life test, the above censoring schemes will not be of use to the experimenter. The above censoring schemes do not allow for units to be removed from the test at points other than the final termination point. As indicated by Balakrishnan and Aggarwala [4], this allowance will be desirable when a compromise between reduced time of experimentation and the observation of at least some extreme lifetimes is sought, or when some of the surviving units in the experiment that are removed early on can be used for some other tests. As in the case of accidental breakage of experimental units or loss of contact with individuals under study, the loss of test units at points other than the termination point may also be unavoidable. These reasons lead us into the area of progressive censoring. Given the censoring scheme \((R_1,R_2,\ldots ,R_m)\) and n units put simultaneously on a life test, the operation of progressive censoring is to remove some surviving units from the test before the termination time of experiment. Kundu and Joarder [9] and Childs et al. [25] combined the concepts of type I hybrid censoring and progressive censoring to develop the type I progressive hybrid censoring scheme. The type I progressive hybrid censoring scheme can briefly be described as follows.

Suppose n test units are put on a life test and the progressive censoring scheme \((R_1,R_2,\ldots ,R_m)\) are fixed before the start of experiment. The time point T is also fixed beforehand. At the time of the first failure \(X_{1:m:n}\), \(R_1\) surviving units are removed randomly from the test. At the time of the second failure \(X_{2:m:n}\), \(R_2\) units are removed from the \((n-R_1 -2)\) surviving units, and so on, and the test continues till its termination point \(T^{*}=min\{T, X_{m:m:n}\}\). If the m-th failure occurs before T, that is \(X_{m:m:n}<T\), then the observed failures are given by \(X_{1:m:n},X_{2:m:n},\ldots ,X_{m:m:n}\) and the test stops at time \(X_{m:m:n}\) by removing remaining \(R_m=n-m-\sum _{i=1}^{m-1}R_i\) units from the test. On the other hand, if \(X_{m:m:n}>T\), then we observe the sample \(X_{1:m:n},X_{2:m:n},\ldots ,X_{j:m:n}\), (\(j < m\)), and the test stops at time T by removing remaining \(R^*_j=n-j-\sum _{i=1}^{j}R_{i}\) units from the test. In this topic, much statistical inference work has been done by several authors including, for example, [22, 28, 35]. A recent account on type I progressive hybrid censoring can be found in the monograph by Balakrishnan and Cramer [5], or in the review article by Balakrishnan and Kundu [7]. Among others, we also refer to [20, 29, 36] for some more useful inferential results on this scheme.

Kumaraswamy [24] proposed a more general probability density function for double bounded random processes, which is known as Kumaraswamy distribution. Although the Kumaraswamy distribution was introduced in 1980, this distribution seems to have attracted attention comparatively recently. The probability density function (PDF) and cumulative distribution function (CDF) of Kumaraswamy distribution are given by, respectively,

and

where \(\alpha >0\) and \(\beta >0\) are shape parameters. The range of this distribution is the same as that of the beta distribution. Both of the distributions share many structural properties depending upon their parameter values. Interestingly, the CDF of Kumaraswamy distribution has a nice analytical expression. This makes it more useful in practice than the beta distribution whose CDF is not easily tractable. Eldin et al. [15] indicated that the Kumaraswamy distribution is applicable to many natural phenomena whose outcomes have lower and upper bounds, such as the heights of individuals, scores obtained on a test, atmospheric temperatures, hydrological data, etc. They also pointed out that the Kumaraswamy distribution could be appropriate in situations where scientists use probability distributions which have infinite lower and/or upper bounds to fit data, when in reality the bounds are finite. In recent past few years, the Kumaraswamy distribution and its extension have gained some attention among researchers and interesting results have been obtained, see for instance, [2, 11, 12, 21, 31, 32, 38, 40]. One may also refer to [8, 16, 17, 34, 41] for some general interesting inference results.

Recently, the Kumaraswamy distribution was applied to the area of reliability analysis. It seems that the applications of the Kumaraswamy distribution will be criticized because its range is between 0 and 1. We provide two reasons for the necessity of the Kumaraswamy distribution as follows. The first reason is that, in practice, the lifetime cannot be actually infinite and there is a large enough point on the probability tail at the time the products are dropped or replaced, and hence it may be appropriate to use a bound distribution to analyze these lifetime data (see, e.g., [1, 42]). The second reason is that there are many random variables and random processes appeared from practical applications whose element values are bounded both at the lower and upper ends. (see, e.g., [19, 37]). Under these two reasons, the data from associated ares can be normalized and are fitted by a bound distribution with range (0, 1). Therefore, Kumaraswamy distribution could be used as a potential model in reliability and lifetime studies as well as other application fields (see, e.g., [33]). In the last five years, some researchers applied the Kumaraswamy distribution and its extension to the reliability analysis. For example, [14] dealt with the Bayesian and non-Bayesian estimation of multicomponent stress-strength reliability. Sultana et al. [39] considered estimation of unknown parameters \(\alpha \) and \(\beta \) under hybrid censoring and obtained point and interval estimates by using maximum likelihood method and Bayesian approach. Kizilaslan and Nadar [27] discussed the uniformly minimum variance unbiased and exact Bayes estimates of reliability in a multicomponent stress-strength model based on a bivariate Kumaraswamy distribution. In this paper, we investigate the problems of estimation and prediction for the Kumaraswamy distribution based on type I progressive hybrid censoring.

The rest of this paper is organized as follows: In Sect. 2, we compute the maximum likelihood estimators (MLEs) of unknown parameter \(\alpha \) and \(\beta \) of the Kumaraswamy distribution based on type I progressively hybrid censored samples. We also derive the Bayes estimators under three different loss functions. In Sect. 3, we obtain the prediction estimates and prediction intervals of censored observations in Bayesian framework. A Monte Carlo simulation study is performed in Sect. 4 to compare the performance of proposed estimator. A real data set is analyzed in Sect. 5 for illustrative purposes. Finally, some conclusions are made in Sect. 6.

2 Parameter Estimations

In this section, we are going to derive the MLEs of the parameters from a type I progressively hybrid censored Kumaraswamy samples. We will also obtain the Bayes estimators under different loss function and for the parameters of Kumaraswamy distribution.

2.1 Maximum Likelihood Estimation

Suppose that n units from a Kumaraswamy distribution are placed on a test with type I progressive hybrid censoring. The observed data under considered censoring scheme may be one of the following two cases:

Then, the likelihood function with type I progressive hybrid censoring is given by

For the simplicity of notation, we will use \(x_i\) instead of \(x_{i:m:n}\). The likelihood function of \(\alpha \) and \(\beta \) can be written as

where d and \(U(\alpha ,\,\beta )\) are, respectively, given by

and

The log-likelihood function may then be written as

Taking derivatives with respect to \(\alpha \) and \(\beta \) of Eq. (3) and equating them to zero, we obtain the likelihood equations for \(\alpha \) and \(\beta \) to be

and

where

and

Note that \(u_2(\alpha )\) does not depend on \(\beta \). Thus, Eq. (5) yields the MLE of \(\beta \) to be

Substituting Eq. (6) into Eq. (4), the MLE of \(\alpha \) can be obtained by solving the nonlinear equation

Because Eq. (7) cannot be solved in an explicit form, a numerical method such as Newton–Raphson iteration must be employed to obtain the MLE of \(\alpha \). The Newton–Raphson algorithm requires the second derivatives of the log-likelihood function. Since the computations of the derivatives may be very complicated, [23] used the fixed point approach to solve the MLE. They also proved that the graphical method proposed by [6] reduces in fact to the fixed point solution. The fixed point approach is easy to implement and does not require the derivation of a given function. Its convergence speed is faster than the Newton–Raphson method.

2.1.1 EM Algorithm

It is impossible to obtain the MLEs \(\hat{\alpha }\) and \(\hat{\beta }\) of parameters \(\alpha \) and \(\beta \) in closed forms because the likelihood equations are nonlinear in nature. One can employ some numerical methods mentioned above to solve these equations for MLEs. Instead an expectation-maximization (EM) algorithm can be implemented for this purpose. This algorithm which was introduced by [13]. Recall that under type I progressive hybrid censoring, two different situations may arise. For the Case I, we assume that \(\varvec{X}=(X_{(1)},X_{(2)},\ldots ,X_{(m)})\) denote the observed data and \(\varvec{Y}=(\varvec{Y}_1,\varvec{Y}_2,\ldots ,\varvec{Y}_m)\) denote the progressively censored data, where \(\varvec{Y}_g\) denotes a \(1\times R_g\) vector such that \(\varvec{Y}_g=(Y_{g1}, Y_{g2},\ldots ,Y_{gR_g})\), \(g=1,2,\ldots ,m\). Here the complete data set can be written as \(\varvec{Z}=(\varvec{X},\varvec{Y})\). Likewise for the Case II, we assume that \(\varvec{X}=(X_{(1)},X_{(2)},\ldots ,X_{(j)})\) are observed data, \(\varvec{Y}=(\varvec{Y}_1,\varvec{Y}_2,\ldots ,\varvec{Y}_j)\) are the progressively censored data, where \(\varvec{Y}_s\) is a \(1\times R_s\) vector such that \(\varvec{Y}_s=(Y_{s1},Y_{s2},\ldots ,Y_{sR})\), \(s=1,2,\ldots ,d\), and \(\varvec{Y}'=(Y_1',Y_2',\ldots ,Y_{R_j^*}')\) are the censored data when the experiment stops. The complete data set for this case can be written as \(\varvec{Z}=(\varvec{X},\varvec{Y},\varvec{Y}')\). Following [3], we write the likelihood function as

where

and

We apply E-step of the algorithm on M and N and then observe that

and

The expectations involved in the above two expressions are computed as

and

Thus, we have

and

In M-step, we maximize the above functions with respect to parameters \(\alpha \) and \(\beta \). In Case I, given the j-th stage estimate of \(\alpha \), the updated \((j+1)\)-th estimate can be computed from the following equation:

Subsequently, the estimate of parameter \(\beta \) can be obtained as

Proceeding similarly for the Case II, we obtain the updated estimate of \(\alpha \) by solving the equation

The updated estimate of \(\beta \) can then be obtained as

This iterative process can be repeated until the desired accuracy is achieved.

2.2 Bayesian Estimation

In this section, we derive the Bayes estimators of unknown parameters \(\alpha \) and \(\beta \) of Kumaraswamy distribution based on type I progressive hybrid censoring. These estimators are obtained under symmetric and asymetric loss function, such as squared error, linex and entropy loss functions. The most useful loss function for obtaining Bayes estimators is the squared error loss which is defined as \(L_S(\mu , \hat{\mu })=(\hat{\mu }-\mu )^2\), where \(\mu \) is estimated by \(\hat{\mu }\). We know that the corresponding bayes estimator \(\hat{\mu }_S\) of \(\mu \) is the posterior mean of \(\mu \). However, the linex loss is defined as \(L_l(\mu , \hat{\mu })=e^{h\,(\hat{\mu }-\mu )}-h\,(\hat{\mu }-\mu )-1\), \(h\ne 0,\) where h is the shape parameter of the loss function. For further details one can see [43]. The corresponding Bayes estimator of \(\mu \) under this loss function is obtain as \(\hat{\mu }_L=-\frac{1}{h}\log {\{E_{\mu }(e^{-h\,\mu }|\varvec{x})\}}\). Whereas, the entropy loss function is defined as \(L_e(\mu ,\hat{\mu })=(\frac{\hat{\mu }}{\mu })^w-w\,\log {(\frac{\hat{\mu }}{\mu })}-1\), \(w\ne 0\). For this loss function, the Bayes estimator is obtained as \(\hat{\mu }_E=E_{\mu }(\mu ^{-w}|\varvec{x})^{\frac{1}{w}}\). To make Bayesian inference, we need to assume the prior distributions of unknown parameters.

Here we assume that \(\alpha \) has a prior gamma distribution with hyper-parameters a and b, \(\beta \) has a prior gamma distribution with hyper-parameters p and q, and \(\alpha \) and \(\beta \) are independent. Thus, the joint prior is of the form

where a, b, p, and q are positive real numbers. We mention that when both the model parameters \(\alpha \) and \(\beta \) are unknown then there does not exist a natural conjugate bivariate prior distribution for unknown parameters. In such situations gamma prior distributions may be considered which are highly flexible as well. We refer to [26] for further discussion on this topic. It follows that the joint posterior distribution of \(\alpha \) and \(\beta \) is given by

where

Since the posterior distribution in Eq. (8) is intractable and hence, the posterior means cannot be obtained in closed forms. This indicates that we can apply the method proposed by [30] or importance sampling algorithm to obtain the desired estimators of \(\alpha \) and \(\beta \), and this is discussed in the next subsection.

2.2.1 Lindley Method

Here, we use Lindley method to obtain bayes estimators of the unknown parameter. It is seen that all the Bayes estimators \(\hat{\mu }_S\), \(\hat{\mu }_L\), and \(\hat{\mu }_E\) are posterior expectation of some parametric function of unknown parameters. Therefore, the Bayes estimator of \(g(\alpha ,\beta )\) under some loss function with respect to the distribution \(\pi (\alpha ,\beta |\varvec{x})\), is given by

where \(l(\alpha ,\beta \mid \varvec{x})\) is the log-likelihood function of \((\alpha ,\beta )\) and \(\rho (\alpha ,\beta )=\log {\pi (\alpha ,\beta )}\). Using the Lindley method, the approximation of \({\hat{g}}(\alpha ,\beta )\) can be written as

Here \(\sigma _{i,j}\) is the (i, j)-th elements of matrix \(\bigl [-\frac{\partial ^2 l}{\partial \alpha \partial \beta }\bigr ]^{-1}\), \(i,j=1,2\) and \(g_{\alpha \alpha }\) is the second order partial derivative of \(g(\alpha ,\beta )\) with respect to \(\alpha \) and similar interpretations hold for other expressions as well. All quantities are evaluated at the MLEs \((\hat{\alpha },\hat{\beta })\) and involved expressions are given below as

where

and

In the above computations, we consider \(g(\alpha ,\beta )=\alpha \) and \(g(\alpha ,\beta )=\beta \) to obtain the desired Lindley approximations of the Bayes estimates of \(\alpha \) and \(\beta \), respectively. For the squared error loss function \(L_s\), we get \(g(\alpha ,\beta )=\alpha \), \(g_{\alpha }=1\), \(g_{\alpha \alpha }=g_{\alpha \beta }=g_{\beta \alpha }=g_{\beta \beta }=g_{\beta }=0\). Similarly, when \(g(\alpha ,\beta )=\beta \), \(g_{\beta }=1\), \(g_{\beta \beta }=g_{\alpha \beta }=g_{\beta \alpha }=g_{\alpha \alpha }=g_{\alpha }=0\). However, for the linex loss function \(L_l\), in this case we have

\(g(\alpha ,\beta )=e^{-h\,\alpha }\), \(g_{\alpha }=-h\,e^{-h\,\alpha }\), \(g_{\alpha \alpha }=h^2\,e^{-h\,\alpha }\), \(g_{\beta \beta }=g_{\alpha \beta }=g_{\beta \alpha }=g_{\beta }=0\). Similarly, for \(g(\alpha ,\beta )=\beta \) we can also calculate the required trems in Eq. (9). Finally, for the entropy loss function \(L_e\), in this case we notice that \(g(\alpha ,\beta )=\alpha ^{-w}\), \(g_{\alpha }=-w\alpha ^{-(w+1)}\), \(g_{\alpha \alpha }=w(w+1)\alpha ^{-(w+2)}\) and \(g_{\beta \beta }=g_{\alpha \beta }=g_{\beta \alpha }=g_{\beta }=0\). Similarly, for \(g(\alpha ,\beta )=\beta \) we can also calculate the required trems in Eq. (9).

2.2.2 Importance Sampling

The Lindley method cannot be used to construct the Bayes intervals of the unknown parameters. In this section, we provide the importance sampling method for computing the Bayes estimates of parameters and also construct the highest posterior density (HPD) intervals of parameters. Let G(a, b) be the density function of a gamma distribution with parameter a and b. We can rewrite the joint posterior distribution of \(\alpha \) and \(\beta \) as

where

and \(h(\alpha ,\beta )=e^{-\sum _{i=1}^{d}\log (1-x_i^\alpha )}\bigl [q-V(\alpha ,\beta )\bigr ]^{-d-p}\). The following steps are used to obtain the Bayes estimators of \(g(\alpha ,\beta )\).

-

Step 1. Generate \(\beta _1 \sim G_{\beta }(\cdot ,\cdot )\).

-

Step 2. Generate \(\alpha _1 \sim G_{\alpha \mid \beta }(\cdot ,\cdot )\).

-

Step 3. Repeat the above two steps s times and generate samples \((\alpha _1,\beta _1)\), \((\alpha _2,\beta _2),\ldots \), \((\alpha _s,\beta _s)\).

-

Step 4. Now, the Bayes estimate of \(g(\alpha ,\beta )\) under \(L_s\), \(L_l\), \(L_e\) loss functions are, respectively, given by

$$\begin{aligned} {\tilde{g}}_{BS}(\alpha ,\beta ) &= \frac{\sum _{i=1}^{s}g(\alpha _i,\beta _i)h(\alpha _i,\beta _i)}{\sum _{i=1}^{s}h(\alpha _i,\beta _i)},\\ {\tilde{g}}_{BL}(\alpha ,\beta ) &= -\frac{1}{h}\log {\left( \frac{\sum _{i=1}^{s}e^{-h\,g(\alpha _i,\beta _i)}h(\alpha _i,\beta _i)}{\sum _{i=1}^{s}h(\alpha _i,\beta _i)}\right) }, \end{aligned}$$and

$$\begin{aligned} {\tilde{g}}_{BE}(\alpha ,\beta )=\left( \frac{\sum \nolimits _{i=1}^{s}g(\alpha _i,\beta _i)^{-w}\,h(\alpha _i,\beta _i)}{\sum \nolimits _{i=1}^{s}h(\alpha _i,\beta _i)}\right) ^{-\frac{1}{w}}. \end{aligned}$$

The Bayes estimates of \(\alpha \) and \(\beta \) can be obtained by considering \(g(\alpha ,\beta )=\alpha \) and \(g(\alpha ,\beta )=\beta \) in the above computation, respectively.

3 Prediction of Censored Observations

Predicting the censored observations on the basis of the known information is an important issue in statistics. Bayesian approach is useful in predicting the censored observations by using the predictive distribution. Here we obtain the prediction estimates and prediction intervals of censored observations based on the information that observed data come from a type I progressively hybrid censored sample. Let \(\varvec{x}=(x_1,x_2,\ldots ,x_d)\) denote a type I progressively hybrid censored sample with the censoring scheme \((R_1,R_2,\ldots ,R_d)\). Further, let \(\varvec{y}_i=(y_{i1},y_{i2},\ldots ,y_{iR_i})\) be the lifetimes of units which are censored at the i-th stage. We wish to obtain the prediction estimate of y (\(=y_{ik}\), \(k=1,2,\ldots ,R_i\), \(i=1,2,\ldots ,d\)) and also construct the prediction interval. The conditional distribution of y given type I progressively hybrid censored data is obtained as

for \(k=1,2,\ldots ,R_i\) and \(i=1,2,\ldots ,d\). By forming the product of Eqs. (3) and (8), and integrating out over the set \(\{(\alpha ,\beta );\; 0<\alpha<\infty ,0<\beta <\infty \}\), the predictive distribution is obtained as

Under squared loss function, the Bayes predictor of y is the mean of predictive distribution. That is,

where

One can use the importance sampling to obtain \({\hat{y}}\). Let \(\{(\alpha _l,\beta _l), l=1,2,\ldots ,N\}\) denote the samples generated from the posterior distribution \(\pi (\alpha ,\beta |\varvec{x})\) as described in Sect. 2.2.2. Then, we have

We mention that the prediction of censored observation that occurs after T can be taken care similarly.

Next, we obtain the prediction intervals of censored observations. The survival function given type I progressively hybrid censored data can be obtained as

Then, the posterior predictive survival function is

Finally, the two-sided \(100(1-\tau )\%\) equi-tailed prediction interval (L, U) of censored observation y can be computed by solving the following nonlinear equations:

4 Simulation Study

In this section, we perform a Monte Carlo simulation study to compare the performance of different estimators of unknown parameters of the Kumaraswamy distribution. We also assess the behavior of predictors of censored observations under the considered censoring scheme. The performance of different estimators is compared in terms of corresponding average estimates and mean square error (MSE) values. For this purpose, we generate type I progressively hybrid censored samples using various sampling schemes by considering different combinations of (n, m) and assuming that T is either 0.53 or 0.79. We used the R statistical software for all computations. The MLEs of \(\alpha \) and \(\beta \) are computed by using the EM algorithm. Bayes estimates of parameters are computed with respect to a gamma prior distribution under some symmetric and asymmetric loss functions. These estimates are computed by using Lindley method and importance sampling techniques. Both MLEs and Bayes estimates of parameters are obtained for arbitrarily taken unknown parameters \(\alpha =1.5\) and \(\beta =2.5\). Accordingly hyperparameters in gamma prior are assigned as \(a=3\), \(b=2\), \(p=5\), and \(q=2\). The removal patterns of progressive censoring schemes are listed in Table 1. In Table 2, we tabulate all the average estimates of \(\alpha \) and \(\beta \) along with MSEs. In this table, LI and IS represent the Bayes estimates obtained by using Lindley method and important sampling, respectively, under squared error loss function. The values in the parentheses denote the MSEs. Bayes estimates and MSES, corresponding to linex loss and entropy loss functions with loss parameters h, w are taken the values \(-0.25, 0.5\), are represented in Tables 3 and 4. Small values of h and w provide reasonably good estimates. Also, as increase in effective sample size then the corresponding MSEs tend to decrease. From all these tables, we can observe that Bayes estimates of parameters \(\alpha \) and \(\beta \) shows better performance than the corresponding MLEs. It can be further observed that the MLEs of unknown parameters compete well with the respective Lindley estimates. The performance of importance sampling estimates is quite good for almost all the tabulated schemes and for both the parameters. We tend to get better estimation results with an increase in effective sample size. Since Lindley estimates are computationally less intensive and their performance is also good, we recommend its use in making further inference upon unknown parameters of the Kumaraswamy distribution under type I progressive hybrid censoring.

5 Real Data Analysis

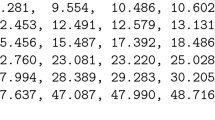

In this section, we analyze a real data set which describes the monthly water capacity from the Shasta reservoir in California, USA. The data are recorded for the month of February from 1991 to 2010 (see for details on the website http://cdec.water.ca.gov/reservoir_map.html). The observed data are:

We first fit the Kumaraswamy distribution to this data set. For comparison purposes, three more distributions such as generalized exponential, Poisson-exponential, and Burr XII distributions are also fitted. We judge the goodness of fit using various criteria, for example, negative log-likelihood criterion (NLC), Akaike information criterion (AIC), corrected AIC (AICc), and Bayesian information criterion (BIC). Smaller values of these criteria indicate that a model better fits the data. From the values reported in Table 5, we conclude that the Kumaraswamy distribution fits the data set good compared to the other models. Thus, the considered model can be used to make inference from the given data set. We consider different censoring schemes \(S_1=(10,0^{*9})\) and \(S_2=(2,0^{*4},5,3,0^{*3})\) by taking \((n,m)=(20,10)\) (here \((1^{*5}, 0)\), for example, means that the censoring scheme employed is (1, 1, 1, 1, 1, 0)). The generated data under these schemes are listed in Table 6. The MLEs and Bayes estimates under all the considered loss functions, of both the unknown parameters are presented in Table 7. In this table, the Bayes estimates are obtained with respect to a noninformative prior distribution where hyper-parameters are assigned as zero value. In general, the Bayes estimates are smaller than the MLEs. In Tables 8 and 9, we present prediction estimates and prediction intervals of observations censored before and after T at different stages i and \(R_{j}^{*}\) of the experiment.

6 Conclusions

In this paper, we consider the estimation and prediction problems for Kumaraswamy distribution when data come from a type I progressive hybrid censoring. The MLEs and Bayes estimates are derived. We use the EM algorithm to obtain the MLEs, and also use the Lindley method and importance sampling approach to obtain the Bayes estimates under various loss functions. The simulation results show that the proposed methods perform well. A numerical example are also analyzed using the proposed methods of estimation and prediction.

References

Aban IB, Meerschaert MM, Panorska AK (2006) Parameter estimation for the truncated pareto distribution. J Am Stat Assoc 101:270–277

Ahmed SO, Reyad HM (2016) Bayesian and E-Bayesian estimation for the Kumaraswamy distribution based on type-II censoring. Int J Adv Math Sci 4:10–17

Alma OG, Belaghi RA (2016) On the estimation of the extreme value and normal distribution parameters based on progressive type-II hybrid censored data. J Stat Comput Simul 86:569–596

Balakrishnan N, Aggarwala R (2000) Progressive censoring - theory, methods, and applications. Birkhäuser, Boston

Balakrishnan N, Cramer E (2014) The art of progressive censoring: applications to reliability and quality. Birkhäuser, Boston

Balakrishnan N, Kateri M (2008) On the maximum likelihood estimation of parameters of Weibull distribution based on complete and censored data. Stat Prob Lett 78:2971–2975

Balakrishnan N, Kundu D (2013) Hybrid censoring: models, inferential results and applications. Comput Stat Data Anal 57:166–209

Chen M-H, Shao Q-M (1999) Monte Carlo estimation of Bayesian credible and HPD intervals. J Comput Gr Stat 8:69–92

Childs A, Chandrasekar B, Balakrishnan N (2008) Exact likelihood inference for an exponential parameter under progressive hybrid censoring. In: Vonta F, Nikulin M, Limnios N, Huber-Carol C (eds) Statistical models and methods for biomedical and technical systems. Birkhäuser, Boston, pp 319–330

Childs A, Chandrasekar B, Balakrishnan N, Kundu D (2003) Exact likelihood inference based on type-I and type-II hybrid censored samples from the exponential distribution. Ann Inst Stat Math 55:319–330

Cordeiro GM, Nadarajah S, Ortega EMM (2012) The Kumaraswamy Gumbel distribution. Stat Methods Appl 21:139–168

Cordeiro GM, Ortega EMM, Silva GO (2014) The Kumaraswamy modified Weibull distribution: theory and applications. J Stat Comput Simul 84:1387–1411

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B 39:1–38

Dey S, Mazucheli J, Anis MZ (2017) Estimation of reliability of multicomponent stress-strength for a Kumaraswamy distribution. Commun Stat Theory Methods 46:1560–1572

Eldin MM, Khalil N, Amein M (2014) Estimation of parameters of the Kumaraswamy distribution based on general progressive type II censoring. Am J Theor Appl Stat 3:217–222

Elgarhy M, Haq MAu, ul Ain Q (2018) Exponentiated generalized Kumaraswamy distribution with applications. Ann Data Sci 5:273–292

El-Sherpieny EA, Elsehetry MM (2019) Type II Kumaraswamy half logistic family of distributions with applications to exponential model. Ann Data Sci 6:1–20

Epstein B (1954) Truncated life tests in the exponential case. Ann Math Stat 25:555–564

Ganji A, Ponnambalam K, Khalili D, Karamouz M (2006) Grain yield reliability analysis with crop water demand uncertainty. Stoch Environ Res Risk Assess 20:259–277

Hemmati F, Khorram E (2013) Statistical analysis of the log-normal distribution under type-II progressive hybrid censoring schemes. Commun Stat Simul Comput 42:52–75

Jones MC (2009) Kumaraswamys distribution: a beta-type distribution with some tractability advantages. Stat Methodol 6:70–81

Kayal T, Tripathi YM, Rastogi MK, Asgharzadeh A (2017) Inference for Burr XII distribution under type I progressive hybrid censoring. Commun Stat Simul Comput 46:7447–7465

Kernane T, Raizah ZA (2009) Fixed point iteration for estimating the parameters of extreme value distributions. Commun Stat Simul Comput 38:2161–2170

Kumaraswamy P (1980) A generalized probability density function for double-bounded random processes. J Hydrol 46:79–88

Kundu D, Joarder A (2006) Analysis of type-II progressively hybrid censored data. Comput Stat Data Anal 50:2509–2528

Kundu D, Pradhan B (2009) Bayesian inference and life testing plans for generalized exponential distribution. Sci China Ser A Math 52:1373–1388

Kizilaslan F, Nadar M (2018) Estimation of reliability in a multicomponent stress-strength model based on a bivariate Kumaraswamy distribution. Stat Pap 59:307–340

Lin C-T, Chou C-C, Huang Y-L (2012) Inference for the Weibull distribution with progressive hybrid censoring. Comput Stat Data Anal 56:451–467

Lin C, Huang Y (2012) On progressive hybrid censored exponential distribution. J Stat Comput Simul 82:689–709

Lindley DV (1980) Approximate Bayesian method. Trabajos de Estadistica 31:223–237

Mitnik PA (2013) New properties of the Kumaraswamy distribution. Commun Stat Theory Methods 42:741–755

Nadar M, Papadopoulos A, Kizilaslan F (2013) Statistical analysis for Kumaraswamy’s distribution based on record data. Stat Pap 54:355–369

Nadarajah S (2008) On the distribution of Kumaraswamy. J Hydrol 348:568–569

Olson D, Shi D (2007) Introduction to business data mining. McGraw-Hill, Irwin

Park S, Balakrishnan N, Kim SW (2011) Fisher information in progressive hybrid censoring schemes. Statistics 45:623–631

Tomer SK, Panwar MS (2015) Estimation procedures for Maxwell distribution under type-I progressive hybrid censoring scheme. J Stat Comput Simul 85:339–356

Seifi A, Ponnambalam K, Vlach J (2000) Maximization of manufacturing yield of systems with arbitrary distributions of component values. Ann Oper Res 99:373–383

Sindhu TN, Feroze N, Aslam M (2013) Bayesian analysis of the Kumaraswamy distribution under failure censoring sampling scheme. Int J Adv Sci Technol 51:39–58

Sultana F, Tripathi YM, Rastogi MK, Wu S-J (2018) Parameter estimation for the Kumaraswamy distribution based on hybrid censoring. Am J Math Manag Sci 37:243–261

Wang BX, Wang XK, Yu K (2017) Inference on the Kumaraswamy distribution. Commun Stat Theory Methods 46:2079–2090

Shi Y (2014) Big data: history, current status, and challenges going forward. Bridge US Natl Acad Eng 44:6–11

Zhang T, Xie M (2011) On the upper truncated Weibull distribution and its reliability implications. Reliab Eng Syst Saf 96:194–200

Zellner A (1986) Bayesian estimation and prediction using asymmetric loss functions. J Am Stat Assoc 81:446–451

Acknowledgements

Authors thank the reviewer and Editor for their useful comments to improve our manuscript.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest with respect to the research, authorship and/or publication of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Sultana, F., Tripathi, Y.M., Wu, SJ. et al. Inference for Kumaraswamy Distribution Based on Type I Progressive Hybrid Censoring. Ann. Data. Sci. 9, 1283–1307 (2022). https://doi.org/10.1007/s40745-020-00283-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40745-020-00283-z