Abstract

This article deals with the constant-stress partially accelerated life test using type I and type II censored data in the presence of competing failure causes. The occurrence time of each failure cause is assumed to follow a Weibull distribution. The maximum likelihood technique is employed to estimate the population parameters of the distribution. The performance of the estimators is evaluated and investigated using a simulation algorithm.

1 Introduction

In life testing and reliability experiments, time-to-failure data obtained under normal operating conditions are used to analyze a product's failure time distribution and its associated parameters. Continuous improvement in manufacturing design makes it difficult to obtain lifetime information for highly reliable products and materials when they are tested under normal conditions, because such life testing becomes very expensive and time consuming. To obtain failures quickly, a sample of these materials is tested at more severe operating conditions than normal ones. These conditions are referred to as stresses, which may take the form of temperature, voltage, force, humidity, pressure, vibration, etc. This type of testing is called accelerated life testing (ALT), where products are run at higher than usual stress conditions to induce early failures in a short time. The life data collected from such accelerated tests are then analyzed and extrapolated to estimate the life characteristics under normal operating conditions by using a proper life-stress relationship. There are situations where a life-stress relationship is not known and cannot be assumed, i.e., the data obtained from ALT cannot be extrapolated to normal conditions. In such situations, partially accelerated life testing (PALT) is used. In PALT, test units are run at both normal and accelerated conditions.

1.1 Constant Stress ALT

The stresses can be applied in various ways, namely constant-stress, step-stress, and progressive-stress (see Nelson [16]). Under step-stress PALT, a test item is first run at the normal use condition and, if it does not fail within a specified time, it is then run at the accelerated use condition until failure occurs or the observation is censored. A progressive-stress ALT, on the other hand, lets the stress level increase linearly and continuously on any surviving test units. The constant-stress PALT (CS-PALT) is the most common type, where each test unit is subjected to only one chosen stress level until its failure or the termination of the test, whichever occurs first.

There is a considerable body of literature on designing CS-PALT; see, for example, Bai and Chung [3], Bai et al. [4], Abdel-Ghani [1], Hassan [9], Abdel-Hamid [2], Ismail [11], Ismail et al. [12], Wang and Cheng [21], Kamal et al. [13], Srivastava and Mittal [19, 20], Hassan et al. [10], and Mahmoud et al. [15].

1.2 Competing Risks Schemes

In reliability analysis, the failure of items may be attributable to more than one cause at the same time. These “causes” compete for the failure of the experimental unit. This problem is known as the competing risks model in the statistical literature. In competing risks data analysis, the data consist of a failure time and the associated cause of failure. The causes of failure may be assumed to be independent or dependent. In this paper, we assume the latent failure time model, as suggested by Cox [5], where the failure times are independently distributed. For several examples where the failure is due to more than one cause, see Crowder [6]. Consider a lifetime experiment with \( n \in N \) identical units, whose lifetimes are described by independent and identically distributed (i.i.d.) random variables \( X_{1} , \ldots , X_{n} \). Without loss of generality, assume that there are only two causes of failure. The observed failure time of the ith unit is the random variable \( T_{i} = \hbox{min} \left\{ {X_{1i} , X_{2i} } \right\} \) for \( i = 1, \ldots , n \), where \( X_{1i} , X_{2i} \) denote the latent failure times of the ith unit under the first and second cause of failure, respectively. We assume that the latent failure times \( X_{1i} \) and \( X_{2i} \) are independent and that the pairs \( \left( {X_{1i} , X_{2i} } \right) \) are i.i.d. By independence, the survival function of the random variable \( T \) is

$$ \bar{F}_{T} \left( t \right) = P\left( {X_{1i} > t, X_{2i} > t} \right) = \bar{F}_{1} \left( t \right)\bar{F}_{2} \left( t \right), $$

where \( \bar{F}_{k} \left( . \right) = 1 - F_{k} \left( . \right) \) is the survival function of the latent failure time under cause \( k = 1, 2 \). On using the relation \( f\left( x \right) = - \frac{\partial }{\partial x}\bar{F}\left( x \right) \), we get the density of \( T \),

$$ f_{T} \left( t \right) = f_{1} \left( t \right)\bar{F}_{2} \left( t \right) + f_{2} \left( t \right)\bar{F}_{1} \left( t \right), $$

whose two terms are the sub-densities of failure due to the first and the second cause, respectively.
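Under the latent failure time model with independent causes, the survival function of \( T = \hbox{min} \left\{ {X_{1} , X_{2} } \right\} \) is the product of the two cause-specific survival functions. The following minimal Python sketch checks this numerically for Weibull latent times. The parameter values, the function names and the Monte Carlo sample size are illustrative assumptions and do not come from the paper.

```python
import math
import random

def weibull_survival(t, theta, lam):
    """Survival function exp(-lam * t**theta) of a Weibull(theta, lam) lifetime."""
    return math.exp(-lam * t ** theta)

def draw_weibull(rng, theta, lam):
    """Draw one lifetime by inverting F(t) = 1 - exp(-lam * t**theta)."""
    return (-math.log(1.0 - rng.random()) / lam) ** (1.0 / theta)

def empirical_survival_of_min(t, params1, params2, n=20000, seed=1):
    """Monte Carlo estimate of P(min(X1, X2) > t) for independent latent times."""
    rng = random.Random(seed)
    survivors = 0
    for _ in range(n):
        x1 = draw_weibull(rng, *params1)
        x2 = draw_weibull(rng, *params2)
        if min(x1, x2) > t:
            survivors += 1
    return survivors / n

params1 = (1.5, 0.8)  # hypothetical (theta_1, lambda_1)
params2 = (0.9, 0.5)  # hypothetical (theta_2, lambda_2)
t = 0.7

# Product of the two cause-specific survival functions.
product = weibull_survival(t, *params1) * weibull_survival(t, *params2)
# Simulated survival probability of the observed lifetime T.
estimate = empirical_survival_of_min(t, params1, params2)
print(round(product, 4), round(estimate, 4))
```

The two printed numbers should agree up to Monte Carlo error, which is of order \( 1/\sqrt n \) for the chosen sample size.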

Recently, some authors have investigated the competing failure models in ALT, see for example, Shi et al. [17], Han and Kundu [8], Haghighi and Bae [7], Zhang et al. [22], Shi et al. [18] and Lone et al. [14].

The Weibull distribution is a very popular model and has been used extensively over the past decades for modeling data in reliability, engineering and biological studies. In this paper, we consider the estimation problem for the CS-PALT competing failure model from the Weibull distribution under type I censoring (TIC) and type II censoring (TIIC). The rest of this paper is organized as follows. In Sect. 2, a CS-PALT competing failure model from the Weibull distribution is described under the TIC and TIIC schemes, and some basic assumptions are given. In Sect. 3, we obtain the maximum likelihood (ML) estimators of the acceleration factor and unknown parameters for the CS-PALT competing model under TIC. Section 4 gives the ML estimators of the acceleration factor and unknown parameters for the CS-PALT competing model under TIIC. The simulation results of all proposed methods for different sample sizes and different censoring schemes are presented in Sect. 5.

2 Model Description and Assumptions

This section presents the main assumptions for the product life test in the CS-PALT competing failure model. It also explains the test procedures in CS-PALT based on the TIC and TIIC schemes when the lifetimes of competing failures are assumed to follow the Weibull distribution.

2.1 Model Description

The test procedure in CS-PALT is considered as follows:

A total of \( n \) items are divided into two groups:

Group 1 consists of \( n_{1} = n\left( {1 - \pi } \right) \) items allocated to normal conditions, where \( \left( {1 - \pi } \right) \) is the sample proportion.

Group 2 consists of the remaining \( n_{2} = n\pi \) items, which are subjected to accelerated conditions.

Each item in Group 1 and Group 2 is run at a constant level of stress until the test terminates, that is, when the censoring time \( \tau \) is reached in the case of TIC or when the rth failure occurs in the case of TIIC.

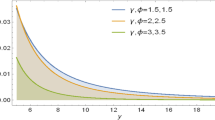

The lifetimes \( T_{i} ,\quad i = 1, 2, \ldots , n\left( {1 - \pi } \right) \), of items allocated to normal conditions follow a Weibull distribution with shape parameter \( \theta \) and scale parameter \( \lambda \), with probability density function (pdf) and cumulative distribution function (cdf) as follows:

$$ f\left( {t_{i} } \right) = \theta \lambda t_{i}^{\theta - 1} e^{{ - \lambda t_{i}^{\theta } }} ;\quad t_{i} , \theta , \lambda > 0, \tag{1} $$

and

$$ F\left( {t_{i} } \right) = 1 - e^{{ - \lambda t_{i}^{\theta } }} , \tag{2} $$

where the observed ordered failure times are \( t_{\left( 1 \right)} < \cdots < t_{{\left( {n_{u} } \right)}} < \tau \) under TIC and \( n_{u} \) is the number of failed items at normal conditions, while the observed ordered failure times are \( t_{\left( 1 \right)} < t_{\left( 2 \right)} < t_{\left( 3 \right)} < \cdots < t_{\left( r \right)} \) under TIIC.

The lifetimes \( X_{j} ,\quad j = 1, 2, \ldots , n\pi \), of items allocated to accelerated conditions follow a Weibull distribution with shape parameter \( \theta \), scale parameter \( \lambda \) and acceleration factor \( \beta \), with pdf and cdf as follows:

$$ f\left( {x_{j} } \right) = \theta \lambda \beta \left( {\beta x_{j} } \right)^{\theta - 1} e^{{ - \lambda \left( {\beta x_{j} } \right)^{\theta } }} ;\quad x_{j} , \theta , \lambda > 0 , \beta > 1, \tag{3} $$

and

$$ F\left( {x_{j} } \right) = 1 - e^{{ - \lambda \left( {\beta x_{j} } \right)^{\theta } }} , \tag{4} $$

where the observed ordered failure times are \( x_{\left( 1 \right)} < \cdots < x_{{\left( {n_{a} } \right)}} < \tau \) under TIC and \( n_{a} \) is the number of failed items at accelerated conditions, while the observed ordered failure times are \( x_{\left( 1 \right)} < x_{\left( 2 \right)} < x_{\left( 3 \right)} < \cdots < x_{\left( r \right)} \) under TIIC.
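The pdf and cdf in Eqs. (1) to (4) can be written as two short functions in which the normal condition is the special case \( \beta = 1 \). The sketch below is illustrative only: the function names and the numerical values are assumptions. It checks two properties that follow directly from the formulas, namely that the accelerated cdf at \( x \) equals the normal cdf at \( \beta x \), and that the pdf is the derivative of the cdf.

```python
import math

def weibull_pdf(t, theta, lam, beta=1.0):
    """Density of Eq. (3); beta = 1 gives the normal-condition density of Eq. (1)."""
    s = beta * t
    return theta * lam * beta * s ** (theta - 1.0) * math.exp(-lam * s ** theta)

def weibull_cdf(t, theta, lam, beta=1.0):
    """Cdf of Eq. (4); beta = 1 gives the normal-condition cdf of Eq. (2)."""
    s = beta * t
    return 1.0 - math.exp(-lam * s ** theta)

theta, lam, beta = 1.5, 0.8, 2.0  # hypothetical parameter values
x = 0.6

# Acceleration rescales time: F_accelerated(x) = F_normal(beta * x).
cdf_accelerated = weibull_cdf(x, theta, lam, beta)
cdf_normal_rescaled = weibull_cdf(beta * x, theta, lam)

# Central-difference derivative of the cdf, to compare with the pdf.
h = 1e-6
slope = (weibull_cdf(x + h, theta, lam, beta) - weibull_cdf(x - h, theta, lam, beta)) / (2.0 * h)
density = weibull_pdf(x, theta, lam, beta)
print(cdf_accelerated, cdf_normal_rescaled, slope, density)
```

Because \( \beta > 1 \), a unit under accelerated conditions ages as if time ran \( \beta \) times faster, which is why failures arrive earlier in Group 2.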

2.2 Basic Assumption

- The lifetimes \( T_{i} ,\quad i = 1, 2, \ldots , n\left( {1 - \pi } \right) \) of items allocated to normal conditions are i.i.d. random variables.

- The lifetimes \( X_{j} ,\quad j = 1, 2, \ldots , n\pi \) of items allocated to accelerated conditions are i.i.d. random variables.

- The lifetimes \( T_{i} \) and \( X_{j} \) are mutually independent.

3 ML Estimators Under TIC Competing Risks Data

Suppose that the observed values of the total lifetime T of size \( n\left( {1 - \pi } \right) \) at normal condition are \( t_{\left( 1 \right)} , t_{\left( 2 \right)} , \ldots , t_{{\left( {n\left( {1 - \pi } \right)} \right)}} \), and the observed values of the total lifetime X of size \( n\pi \) at accelerated condition are \( x_{\left( 1 \right)} , x_{\left( 2 \right)} , \ldots , x_{{\left( {n\pi } \right)}} \). Let \( \delta_{ui} \) and \( \delta_{ai} \) denote the failure indicators such that

and

The likelihood function for TIC competing risks data when the cause of failure is known at normal conditions is given by

where \( t_{i} = t_{\left( i \right)} \) and \( \bar{\pi } = 1 - \pi \). Substituting (1), (2), (3) and (4) into the likelihood function (5) gives:

Also, the likelihood function for TIC competing risks data when the cause of failure is known at accelerated conditions is given by

Since the lifetimes \( t_{1} , \ldots , t_{{n_{u} }} \) and \( x_{1} , \ldots , x_{{n_{a} }} \) are mutually independent, the total likelihood function for TIC competing risks data when the cause of failure is known at normal and accelerated conditions \( \left( {t_{1} ; \quad \delta_{u1} \ldots , t_{{n\bar{\pi }}} ; \quad \delta_{{un\bar{\pi }}} , x_{1} ;\quad \delta_{a1} \ldots , x_{n\pi } ;\quad \delta_{an\pi } } \right) \) is given by:

where, \( \bar{\delta }_{ui} = 1 - \delta_{ui} \) and \( \bar{\delta }_{aj} = 1 - \delta_{aj} \). The ML estimators \( \hat{\theta }_{1} , \hat{\theta }_{2} , \hat{\lambda }_{1} ,\hat{\lambda }_{2} , \hat{\beta }_{1} \) and \( \hat{\beta }_{2} \) of the parameters and acceleration factors \( \theta_{1} , \theta_{2} , \lambda_{1} , \lambda_{2} , \beta_{1} \) and \( \beta_{2} \) are the values which maximize the likelihood function. The logarithm of the likelihood function \( l_{1} = \ln L_{1i} \) is given by:

The first derivatives of the logarithm of the likelihood function (6) with respect to \( \theta_{k} , \lambda_{k} \) and \( \beta_{k} \) are given by:

and

where \( n_{ku} = \sum\nolimits_{i = 1}^{{n\bar{\pi }}} {\delta_{kui} } \), \( n_{ka} = \sum\nolimits_{j = 1}^{n\pi } {\delta_{kaj} } \), \( n_{k0} = n_{ku} + n_{ka} \) and \( k = 1,2 \).

Setting Eqs. (7), (8) and (9) equal to zero yields three nonlinear equations. This system cannot be solved analytically, so iterative numerical techniques are applied to obtain the ML estimators.
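One way to carry out such an iterative solution is sketched below in Python. It is an illustration under stated assumptions, not the authors' algorithm. It assumes the standard competing risks likelihood for independent latent Weibull times under type I censoring, in which a unit failing from cause \( k \) contributes the density of cause \( k \) and every other unit contributes the survival function of cause \( k \). Under that assumption, for a fixed \( \theta_{k} \) the score equations give \( \lambda_{k} = n_{ku} /\sum t_{i}^{{\theta_{k} }} \) and \( \lambda_{k} \beta_{k}^{{\theta_{k} }} = n_{ka} /\sum x_{j}^{{\theta_{k} }} \), so only a one-dimensional search over \( \theta_{k} \) remains. The golden-section search, the function names, the true parameter values and the sample sizes are all hypothetical choices, and the constraint \( \beta_{k} > 1 \) is not enforced.

```python
import math
import random

def draw_weibull(rng, theta, rate):
    """Draw from F(t) = 1 - exp(-rate * t**theta) by inversion."""
    return (-math.log(1.0 - rng.random()) / rate) ** (1.0 / theta)

def simulate_group(rng, size, causes, tau):
    """Return (time, cause) pairs; cause 0 marks a unit censored at tau.

    causes holds one (theta, rate) pair per failure cause.
    """
    data = []
    for _ in range(size):
        latent = [draw_weibull(rng, th, rate) for th, rate in causes]
        t = min(latent)
        data.append((t, latent.index(t) + 1) if t <= tau else (tau, 0))
    return data

def profile_fit(normal, accel, k, lo=0.05, hi=20.0, iters=80):
    """Estimate (theta_k, lambda_k, beta_k) for cause k by profile likelihood."""
    n_ku = sum(1 for _, c in normal if c == k)
    n_ka = sum(1 for _, c in accel if c == k)
    sum_log = sum(math.log(t) for t, c in normal + accel if c == k)

    def power_sum(data, theta):
        # Every unit contributes t**theta, whether it failed or was censored.
        return sum(t ** theta for t, _ in data)

    def profile(theta):
        # Log-likelihood with lambda_k and beta_k replaced by their maximizers.
        return ((n_ku + n_ka) * math.log(theta) + (theta - 1.0) * sum_log
                - n_ku * math.log(power_sum(normal, theta))
                - n_ka * math.log(power_sum(accel, theta)))

    # Golden-section search for the maximum of the concave profile.
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    for _ in range(iters):
        c, d = b - g * (b - a), a + g * (b - a)
        if profile(c) < profile(d):
            a = c
        else:
            b = d
    theta = (a + b) / 2.0
    lam = n_ku / power_sum(normal, theta)
    mu = n_ka / power_sum(accel, theta)  # mu = lambda_k * beta_k**theta_k
    beta = (mu / lam) ** (1.0 / theta)
    return theta, lam, beta

# Hypothetical true values (theta_k, lambda_k, beta_k) for the two causes.
true = {1: (1.5, 0.8, 2.0), 2: (1.0, 0.5, 1.5)}
rng = random.Random(7)
n, pi, tau = 5000, 0.4, 1.5
n2 = round(n * pi)
n1 = n - n2
normal = simulate_group(rng, n1, [(th, lam) for th, lam, _ in true.values()], tau)
accel = simulate_group(rng, n2, [(th, lam * be ** th) for th, lam, be in true.values()], tau)
fits = {k: profile_fit(normal, accel, k) for k in (1, 2)}
for k in (1, 2):
    print(k, [round(v, 3) for v in fits[k]])
```

The profile in \( \theta_{k} \) is concave under this assumed likelihood, so a bracketing search is sufficient. A Newton-Raphson iteration on the three score equations would be an alternative.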

Additionally, the asymptotic variance-covariance matrix of the ML estimators of \( \theta_{k} , \lambda_{k} \) and \( \beta_{k} \) can be approximated by numerically inverting the asymptotic Fisher information matrix F, which is composed of the negative second and mixed derivatives of the natural logarithm of the likelihood function evaluated at the ML estimates. The elements of the Fisher information are given by

For interval estimation of the parameters, consider the \( 3 \times 3 \) observed information matrix \( I\left( \varPhi \right) = \left\{ {I_{u, v} } \right\} \) for \( \left( {u, v} \right) = \left( {\theta , \lambda ,\beta } \right) \). Under the regularity conditions, the known asymptotic properties of the ML method ensure that \( \sqrt n \left( {\hat{\varPhi } - \varPhi } \right)\mathop \to \limits^{d} N_{3} \left( {0, I^{ - 1} \left( \varPhi \right)} \right) \) as \( n \to \infty \), where \( \mathop \to \limits^{d} \) denotes convergence in distribution to a trivariate normal law with mean \( 0 = \left( {0, 0, 0} \right)^{T} \) and \( 3 \times 3 \) covariance matrix \( I^{ - 1} \left( \varPhi \right) \). Then the \( 100\left( {1 - \upsilon } \right)\% \) confidence intervals for \( \theta , \lambda \) and \( \beta \) are given, respectively, as follows

$$ \hat{\theta } \pm Z_{\upsilon /2} \sqrt {var\left( {\hat{\theta }} \right)} ,\quad \hat{\lambda } \pm Z_{\upsilon /2} \sqrt {var\left( {\hat{\lambda }} \right)} ,\quad \hat{\beta } \pm Z_{\upsilon /2} \sqrt {var\left( {\hat{\beta }} \right)} , \tag{10} $$

where \( Z_{\upsilon /2} \) is the \( \left[ {100\left( {1 - \upsilon /2} \right)} \right] \)th standard normal percentile and the \( var\left( . \right) \)’s denote the diagonal elements of \( I^{ - 1} \left( \varPhi \right) \) corresponding to the model parameters.
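The normal-approximation interval described above can be computed in a few lines once an estimate and its asymptotic variance are available. The Python sketch below is a minimal illustration. The function name and the numerical inputs are hypothetical, and the variance is assumed to have been read off the diagonal of the inverted information matrix.

```python
import math
from statistics import NormalDist

def wald_interval(estimate, variance, level=0.95):
    """Normal-approximation confidence interval: estimate +/- z * sqrt(variance)."""
    upsilon = 1.0 - level
    z = NormalDist().inv_cdf(1.0 - upsilon / 2.0)  # standard normal percentile
    half_width = z * math.sqrt(variance)
    return estimate - half_width, estimate + half_width

# Hypothetical estimate of theta and its asymptotic variance.
lower, upper = wald_interval(1.5, 0.04)
print(round(lower, 3), round(upper, 3))
```

For a shape or scale parameter whose lower limit falls below zero, the interval is usually truncated at zero or built on the log scale, which is a design choice outside what the text specifies.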

4 ML Estimators Under TIIC Competing Risks Data

Suppose that the observed values of the total lifetime T of size \( n\left( {1 - \pi } \right) \) at normal condition are \( t_{\left( 1 \right)} , t_{\left( 2 \right)} , \ldots , t_{\left( r \right)} \), and the observed values of the total lifetime X of size \( n\pi \) at accelerated condition are \( x_{\left( 1 \right)} , x_{\left( 2 \right)} , \ldots , x_{\left( r \right)} \). Let \( \delta_{ui} \) and \( \delta_{ai} \) denote the failure indicators such that

and

The likelihood functions for TIIC competing risks data when the cause of failure is known at normal conditions \( \left( {t_{i} , \delta_{ui} } \right) \) and at accelerated conditions \( \left( {x_{j} , \delta_{aj} } \right) \) are, respectively, given by:

and,

Then, the total likelihood function for TIIC competing risks data when the cause of failure is known at normal and accelerated conditions \( \big( t_{1} ; \quad \delta_{u1} \ldots , t_{{n\bar{\pi }}} ; \quad \delta_{{un\bar{\pi }}} , x_{1} ;\quad \delta_{a1} \ldots , x_{n\pi } ; \quad \delta_{an\pi } \big) \) is:

The ML estimators \( \hat{\theta }_{1} , \hat{\theta }_{2} , \hat{\lambda }_{1} ,\hat{\lambda }_{2} , \hat{\beta }_{1} \) and \( \hat{\beta }_{2} \) of the parameters and acceleration factors \( \theta_{1} , \theta_{2} , \lambda_{1} , \lambda_{2} , \beta_{1} \) and \( \beta_{2} \) are the values which maximize the likelihood function. The logarithm of the likelihood function \( l_{2} = \ln L_{2i} \) is given by:

The first derivatives of the logarithm of the likelihood function (11) with respect to \( \theta_{k} , \lambda_{k} \) and \( \beta_{k} \), \( k = 1, 2 \), are given by:

and

Setting Eqs. (12), (13) and (14) equal to zero yields three nonlinear equations. As mentioned in the previous section, this system cannot be solved analytically, so iterative numerical techniques are applied to obtain the ML estimators.

The asymptotic variance covariance matrix of \( \theta_{k} , \lambda_{k} \) and \( \beta_{k} \) is obtained by inverting the Fisher information matrix, so the elements of the Fisher information are obtained as follows

Similarly, the approximate confidence intervals of \( \theta_{k} , \lambda_{k} \) and \( \beta_{k} \) under TIIC competing risks data are obtained by using Eq. (10).

5 Simulation Study

In this section, a simulation study is carried out to evaluate the performance of the estimates. The estimates of the acceleration factor \( \left( {\beta_{1} , \beta_{2} } \right) \) and population parameters \( \left( {\theta_{1} , \theta_{2} , \lambda_{1} , \lambda_{2} } \right) \) are evaluated in terms of their mean squared errors (MSEs) and biases. The numerical procedure is designed as below:

A random sample of size \( n_{1} = n\left( {1 - \pi } \right), \) where \( \pi = 0.4 \) is the proportion of items allocated to accelerated conditions and \( n \) is the total sample size, is generated under normal conditions. That is, we generate samples from \( W_{1} \sim Weibull\left( {n_{1} , \theta_{1} ,\lambda_{1} } \right) \) and \( W_{2} \sim Weibull\left( {n_{1} , \theta_{2} , \lambda_{2} } \right) \). From these two samples we form the new sample \( t_{1} = \left( {t_{\left( 1 \right)} , t_{\left( 2 \right)} , t_{\left( 3 \right)} , \ldots , t_{{\left( {n_{1} } \right)}} } \right) \), where \( T = min\left( {W_{1} , W_{2} } \right) \).

A random sample of size \( n_{2} = n\pi \) is generated under accelerated conditions. That is, we generate samples from \( W_{1} \sim Weibull\left( {n_{2} , \theta_{1} , \beta_{1} , \lambda_{1} } \right) \) and \( W_{2} \sim Weibull\left( {n_{2} , \theta_{2} , \beta_{2} , \lambda_{2} } \right) \). From these two samples we form the new sample \( x_{2} = \left( {x_{\left( 1 \right)} , x_{\left( 2 \right)} , x_{\left( 3 \right)} , \ldots , x_{{\left( {n_{2} } \right)}} } \right) \), where \( X = min\left( {W_{1} , W_{2} } \right) \).

In TIC, let \( \tau = 1.5 \), while in TIIC, let r = 10, for sample sizes 50, 75 and 100.

For some choices of the unknown parameters and acceleration factors, the above process is repeated 1000 times.

The average values of the biases and MSEs are computed.
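The data-generation steps above can be sketched in Python as follows. This is an illustrative outline, not the authors' simulation code. The parameter values are hypothetical, since the text does not list the values used. The accelerated scale \( \lambda_{k} \beta_{k}^{{\theta_{k} }} \) is obtained by expanding Eq. (4), and the helper names are assumptions. The censoring helpers return only the observed failures, so the remaining units are understood to be censored at \( \tau \) (TIC) or at the rth failure time (TIIC).

```python
import math
import random

def draw_weibull(rng, theta, rate):
    """Draw from F(t) = 1 - exp(-rate * t**theta) by inversion."""
    return (-math.log(1.0 - rng.random()) / rate) ** (1.0 / theta)

def competing_sample(rng, size, causes):
    """Ordered (time, cause) pairs with time = min over the latent cause times."""
    sample = []
    for _ in range(size):
        latent = [draw_weibull(rng, th, rate) for th, rate in causes]
        t = min(latent)
        sample.append((t, latent.index(t) + 1))
    return sorted(sample)

def type1_censor(sample, tau):
    """Failures observed up to the fixed censoring time tau."""
    return [(t, c) for t, c in sample if t <= tau]

def type2_censor(sample, r):
    """The first r ordered failures."""
    return sample[:r]

def bias_and_mse(estimates, true_value):
    """Average bias and mean squared error over the replications."""
    m = len(estimates)
    bias = sum(estimates) / m - true_value
    mse = sum((e - true_value) ** 2 for e in estimates) / m
    return bias, mse

rng = random.Random(2020)
n, pi, tau, r = 50, 0.4, 1.5, 10
n1 = round(n * (1 - pi))  # items at normal conditions
n2 = n - n1               # items at accelerated conditions

# Hypothetical (theta_k, lambda_k, beta_k) for causes k = 1, 2.
params = [(1.5, 0.8, 2.0), (1.0, 0.5, 1.5)]
normal = competing_sample(rng, n1, [(th, lam) for th, lam, _ in params])
accel = competing_sample(rng, n2, [(th, lam * be ** th) for th, lam, be in params])

normal_tic, accel_tic = type1_censor(normal, tau), type1_censor(accel, tau)
normal_tiic, accel_tiic = type2_censor(normal, r), type2_censor(accel, r)
print(len(normal_tic), len(accel_tic), len(normal_tiic), len(accel_tiic))
```

In a full study, each replication would pass the censored samples to an ML routine, collect the estimates, and summarise them with `bias_and_mse` after 1000 repetitions.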

Numerical outcomes are listed in Tables 1 and 2. The following observations can be made:

The MSEs and biases decrease as n increases under TIC and TIIC data (see Tables 1, 2).

For fixed values of \( \left( {\lambda_{1} , \theta_{2} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \), as the value of \( \theta_{1} \) increases, the MSEs and biases of the estimates of \( \left( {\theta_{1} , \lambda_{1} , \theta_{2} , \lambda_{2} } \right) \) increase, whereas those of the estimates of \( \beta_{1} \) and \( \beta_{2} \) decrease under TIC data (see Table 1).

For fixed value of \( \left( {\theta_{2} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \), as the value of \( \theta_{1} \) decreases and \( \lambda_{1} \) increases, the MSEs and biases of estimates for \( (\lambda_{1} , \beta_{1} , \beta_{2} ) \) are increasing but the MSEs and biases for estimates of \( \left( {\theta_{1} , \theta_{2} , \lambda_{2} } \right) \) are decreasing under TIC data (see Table 1).

For fixed value of \( \left( {\theta_{1} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) and as the value of \( (\lambda_{1} , \theta_{2} ) \) is decreasing, the MSEs and biases for estimates of \( \left( {\lambda_{2} , \beta_{1} , \beta_{2} } \right) \) are increasing but the MSEs and biases for estimates of \( \left( {\theta_{1} , \theta_{2} , \lambda_{1} } \right) \) are decreasing under TIC data (see Table 1).

For fixed values of \( \left( {\theta_{1} , \lambda_{1} , \beta_{1} , \beta_{2} } \right) \), as the value of \( \lambda_{2} \) decreases and \( \theta_{2} \) increases, the MSEs and biases of the estimates of \( \left( {\lambda_{2} , \theta_{2} } \right) \) increase, but those of the estimates of \( \left( {\theta_{1} , \lambda_{1} , \beta_{1} , \beta_{2} } \right) \) decrease under TIC data (see Table 1).

For fixed values of \( \left( {\theta_{1} , \lambda_{1} , \theta_{2} , \beta_{2} } \right) \), as the values of \( \left( {\lambda_{2} , \beta_{1} } \right) \) increase, the MSEs and biases of the estimates of \( \left( {\theta_{1} , \lambda_{1} , \theta_{2} , \beta_{1} } \right) \) increase, whereas those of the estimates of \( \lambda_{2} \) and \( \beta_{2} \) decrease under TIC data (see Table 1).

For fixed value of \( \left( {\theta_{1} , \lambda_{1} , \theta_{2} , \lambda_{2} } \right) \) and as the value of \( \left( {\beta_{1} , \beta_{2} } \right) \) decreases, the MSEs and biases of estimates of \( \left( {\theta_{2} , \lambda_{1} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) are increasing but the MSEs and biases of estimates for \( \theta_{1} \) are increasing under TIC data (see Table 1).

When the values of \( \left( {\lambda_{1} , \theta_{2} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) are fixed and the value of \( \theta_{1} \) increases, the MSEs and biases of the estimates of \( \left( {\theta_{1} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) increase, while those of the estimates of \( (\lambda_{1} , \theta_{2} ) \) decrease under TIIC data (see Table 2).

For fixed value of \( \left( {\theta_{2} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \), as the value of \( \theta_{1} \) decreases, and the value of \( \lambda_{1} \) increases, the MSEs and biases for estimates of \( \left( {\lambda_{1} , \beta_{1} , \beta_{2} } \right) \) are increasing but the MSEs and biases for estimates of \( \left( {\theta_{1} , \theta_{2} , \lambda_{2} } \right) \) are decreasing under TIIC data (see Table 2).

Under TIIC, when the values of \( \left( {\theta_{1} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) are fixed and the values of \( \left( {\theta_{2} , \lambda_{1} } \right) \) decrease, the MSEs and biases of the estimates of \( \left( {\theta_{1} , \theta_{2} , \lambda_{1} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) increase (see Table 2).

Under TIIC, when the values of \( \left( {\theta_{1} , \lambda_{1} , \beta_{1} , \beta_{2} } \right) \) are fixed, the value of \( \lambda_{2} \) decreases and the value of \( \theta_{2} \) increases, the MSEs and biases of the estimates of \( \left( {\theta_{1} , \theta_{2} , \lambda_{1} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) decrease (see Table 2).

For fixed value of \( \left( {\theta_{1} , \lambda_{1} , \theta_{2} , \beta_{2} } \right) \) and as the value of \( \left( {\lambda_{2} , \beta_{1} } \right) \) increases, the MSEs and biases for estimates of \( \left( {\theta_{1} , \theta_{2} , \lambda_{2} , \beta_{1} , \beta_{2} } \right) \) are increasing but the MSEs and biases for estimates of \( \left( {\lambda_{1} } \right) \) are decreasing under TIIC data (see Table 2).

When the value of \( \left( {\theta_{1} , \theta_{2} , \lambda_{1} , \lambda_{2} } \right) \) is fixed and the value of \( \left( {\beta_{1} , \beta_{2} } \right) \) decreases, the MSEs and biases for estimates of \( \left( {\theta_{1} , \theta_{2} , \beta_{2} } \right) \) are increasing while the MSEs and biases for estimates of \( \left( {\lambda_{1} , \lambda_{2} , \beta_{1} } \right) \) are decreasing based on TIIC (see Table 2).

Change history

18 April 2020

In the original publication of the article, the ORCID ID of the co-author Amal S. Hassan was published incorrectly.

References

1. Abdel-Ghani MM (1998) Investigations of some lifetime models under partially accelerated life tests. PhD thesis, Department of Statistics, Faculty of Economics and Political Science, Cairo University, Egypt

2. Abdel-Hamid AH (2009) Constant stress partially accelerated life tests for Burr type XII distribution with progressive type II censoring. Comput Stat Data Anal 53(7):2511–2523

3. Bai DS, Chung SW (1992) Optimal design of partially accelerated life tests for the exponential distribution under type I censoring. IEEE Trans Reliab 41:400–406

4. Bai DS, Chung SW, Chun YR (1993) Optimal design of partially accelerated life tests for the lognormal distribution under type I censoring. Reliab Eng Syst Saf 40(1):85–92

5. Cox DR (1959) The analysis of exponentially distributed life-times with two types of failure. J R Stat Soc Ser B 21:411–421

6. Crowder MJ (2001) Classical competing risks model. Chapman & Hall/CRC, New York

7. Haghighi F, Bae SJ (2015) Reliability estimation from linear degradation and failure time data with competing risks under a step-stress accelerated degradation test. IEEE Trans Reliab 64(3):960–971

8. Han D, Kundu D (2015) Inference for a step-stress model with competing risks for failure from the generalized exponential distribution under type-I censoring. IEEE Trans Reliab 64(1):31–43

9. Hassan AS (2007) Estimation of the generalized exponential distribution parameters under constant stress partially accelerated life testing using type I censoring. Egypt Stat J Inst Stat Stud Res Cairo Univ 51(2):48–62

10. Hassan AS, Assar MS, Zaky AN (2015) Constant-stress partially accelerated life tests for inverted Weibull distribution with multiple censored data. Int J Adv Stat Probab 3(1):72–82

11. Ismail AA (2009) Planning constant stress partially accelerated life tests with type II censoring: the case of Weibull failure distribution. Int J Stat Econ 3:39–46

12. Ismail AA, Abdel-Ghaly AA, El-Khodary EH (2011) Optimum constant stress life test plans for Pareto distribution under type I censoring. J Stat Comput Simul 81(12):1835–1845

13. Kamal M, Zarrin S, Islam AU (2013) Constant stress partially accelerated life test design for inverted Weibull distribution with type I censoring. Algorithms Res 2(2):43–49

14. Lone SA, Rahman R, Islam AU (2017) Step stress partially accelerated life testing plan for competing risks using adaptive type I progressive hybrid censoring. Pak J Stat 33(4):237–248

15. Mahmoud MAW, Sagheer RM, Nagaty H (2017) Inference for constant-stress partially accelerated life test model with progressive type-II censoring scheme. J Stat Appl Probab 6(2):373–383

16. Nelson W (1990) Accelerated life testing: statistical models, data analysis and test plans. Wiley, New York

17. Shi X, Liu F, Shi Y (2016) Bayesian inference for step-stress partially accelerated competing failure model under Type II progressive censoring. Math Probl Eng. https://doi.org/10.1155/2016/2097581

18. Shi YM, Jin L, Wei C, Yue HB (2013) Constant-stress accelerated life test with competing risks under progressive type-II hybrid censoring. Adv Mater Res 712–715:2080–2083

19. Srivastava PW, Mittal N (2013) Failure censored optimum constant stress partially accelerated life tests for the truncated logistic life distribution. Int J Bus Manag 3(2):41–66

20. Srivastava PW, Mittal N (2013) Optimum constant stress partially accelerated life tests for the truncated logistic life distribution under time constraint. Int J Oper Res Nepal 2:33–47

21. Wang FK, Cheng YF (2012) Estimating the Burr XII parameters in constant stress partially accelerated life tests under multiple censored data. Commun Stat Simul Comput 41(9):1711–1727

22. Zhang C, Shi Y, Wu M (2016) Statistical inference for competing risks model in step stress partially accelerated life tests with progressively type I hybrid censored Weibull life data. J Comput Appl Math 297:65–74

About this article

Cite this article

Hassan, A.S., Nassr, S.G., Pramanik, S. et al. Estimation in Constant Stress Partially Accelerated Life Tests for Weibull Distribution Based on Censored Competing Risks Data. Ann. Data. Sci. 7, 45–62 (2020). https://doi.org/10.1007/s40745-019-00226-3

DOI: https://doi.org/10.1007/s40745-019-00226-3