Abstract

In the corner milling process, processing energy consumption is a very important objective, since the energy efficiency of CNC machine is barely above 14.8%. Meanwhile, the excessive processing temperature will increase the thermal deformation of the product and leads to quality decline. Improper process parameters will lead to unnecessary high temperature and energy consumption. By optimizing the process parameters, the appropriate temperature and Specific Energy Consumption can be obtained. This study investigated into modeling Specific Energy Consumption and temperature in corner milling process using variable-fidelity metamodels. The adopted variable-fidelity metamodels are constructed by Hierarchical Kriging, in which 48 sets of low-fidelity data obtained from the AdvantEdge software simulation are used to reflect the trends of the metamodels, and 16 sets of high-precision data obtained from physical experiments are used to calibrate the trends. The experimental cost is reduced and the prediction accuracy is increased by making full use of both sets of data. An improved K-means Multi-objective Particle Swarm Optimization algorithm was adopted and applied on the multi-objective corner milling parameters optimization problem to find satisfactory specific energy consumption and temperature. The obtained Pareto solutions can provide guidance for selecting process parameters according to different requirements, such as reducing energy consumption or temperature.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the explosion of population in the world, the foreseeable energy shortage is realistic and severe. Global warming and energy crisis are big titles. It’s estimated that manufacturing sector consumes almost 90% of energy use in modern industry. Metal removal process is one of the main ways of manufacturing [1], and computer numerical control (CNC) machine is an energy consumption giant in the manufacturing industry [2], whose energy efficiency is barely above 14.8% [3]. It's projected that around 6–40% of energy saving can be achieved through parameter optimization in CNC machining [4]. Many methods have been developed for such a usage, for example, Misaka et al. [5] integrated co-kriging method, a modification of kriging, with measurement data of CNC machine to predict roughness of final product. Jang et al. [6] takes the process parameters as a part of the cutting energy model, establishes a specific energy consumption model using an artificial neural network, and implements levenberg–Marquardt back propagation algorithm. Finally, particle swarm optimization (PSO) is used for global optimization to determine the process parameters by minimizing the energy consumption. Zhou et al. [7] combined Grey Relational Analysis and RBF network to predict Grey Relational Correlation (GRC), with an average error of 5%. In these process parameters optimization problems, the prediction models of optimization objectives are first constructed, and then the optimization algorithm is used to obtain the optimal solution.

In this sense, heuristic algorithms, such as Genetic algorithm [8], Particle Swarm Optimization (PSO) [9] and Teaching Learning Based Optimization [10], can be applied for the optimization problem. The optimization process can be recapitulated as a negotiation between energy intake and workpiece quality outcome, which are often in conflict with each other. The negotiation centers around parameter inputs. In recent years, many meta-heuristic algorithms are developed and applied on engineering problems. To name a few, Dragonfly Algorithm [11], Imperialist Competitive Algorithm [12] and Ant Colony Optimization [13] All received wide attention and modifications [14, 15]. The design of optimizer is focused on computational efficiency, acquisition of global optimum solution, and solution diversity [16]. The most ideal optimizer meets all the requirements at a high standard, however the No Free Lunch theorem suggests that there is no such an algorithm in reality [17]. In practice, one has to sacrifice a certain advantage to improve another. In general, every algorithm has their own favorable problem, where the algorithm is better performed than others. This theoretical base motivates researchers to discover and modify algorithms.

In light of the rising global temperature, carbon emission is a major consideration due to current derogating climate problem [18]. At the same time, CNC tool is one of the main tools in machining, so it is very important to build a model to analyze its energy consumption [19, 20]. Many related studies take energy consumption as an important target of optimization as well [21]. Chen et al. [22] considered both electrical and embodied material energy as optimization objectives, and established a model to solve the problem. Li et al. [23] considered Specific Energy Consumption (SEC) and cost as objectives, and then utilized regression and Adaptive Multi-objective Particle Swarm Optimization to model and solve the problem. Besides energy intake and carbon emission concerns, extending Tool Life (TL) is also an optimizable concern. Zhang et al. [24] and Rajemi et al. [25] took TL and their energy metric into optimization model and applied optimizers for a solution.

The temperature of workpiece and tool during machining process is an aspect of concern. It is an important indicator for green manufacturing, so lowering the temperature as much as possible is an effective way to improve energy efficiency. Most energy waste occurs in machining process appears in the form heat [26]. Machining accuracy is also an important indicator for green precision manufacturing [27], but the temperature is too high will cause the tool and the workpiece to have the deformation thus to affect the machining accuracy. What’s more, high temperature in machining process can influence tool wear, shape tolerance and residual stress of chemical part of the workpiece [28] and can damage surface integrity of workpiece [29]. In the research of predicting milling temperature, one of the early methods is to establish the numerical model based on the finite difference method. Shear energy, tool friction and thermal balance are considered in the model, and the temperature field distribution of the machining system can be predicted [30]. Chenwei et al. proposed an improved analytical method to predict cutting temperature, with relative difference between 0.49% and 9.00%. Besides analytical methods, Finite Element Method (FEM) is also available. Davoudinejad et al. [31] proposed the use of Lagrangian explicit finite element formula for thermal–mechanical coupling transient analysis to achieve the purpose of building a 3D finite element model. Muaz and Choudhury [32] adopted a commercial software for prediction of temperature in machining process. In this paper, the general software Abaqus/Explicit is used for temperature-displacement coupling analysis to obtain the surface temperature of the processed parts and compare it with the experimental measured temperature. The results show that the temperature prediction error is only about 6.1%.

The above studies either use physical experiment data or use simulation data to construct prediction models. The former causes high cost, while the latter is not accurate enough. In this paper, a variable-fidelity metamodel, which is called Hierarchical Kriging (HK) is adopted. In the Hierarchical Kriging, the precision physical experiment data and simulation data are collected together to finally construct a model, which can balance the modeling cost and the model accuracy. The relationship between the process parameters and the two optimization objectives (the temperature and the SEC) can be modeled by Hierarchical Kriging. Afterwards, a self-modified MOPSO based on K-means clustering was proposed to solve the multi-objective milling parameter optimization problem. Eventually, the Pareto front was found. And the optimal parameters should be picked from the Pareto front according to the different requirements. The overall research process is shown in the Fig. 1.

The following paper is organized as follow: Sect. 2 is the modeling of milling process and devoted to description of HK model; Sect. 3 will be focused around K-means MOPSO and its validation; Sect. 4 discusses the modeling and optimization on actual data obtained from physical experiment and simulation; Sect. 5 draws conclusion on this study.

2 Formulation of the Optimization Problem

In order to obtain the multi-objective solution set, the Pareto front, the gray box model has to be formulized in input-out mathematical manner. The method proposed in this paper to establish input–output relationship of the process is HK. After construction of HK model, K-means MOPSO will be applied to solve multi-objective problem in this scenario.

2.1 Corner Milling Schematic

Corner milling uses a corner rounding milling cutter to remove volumes from workpiece. The cutter spins in high spindle speed, which traveling through the cutting path, removing the volume positioned on the path. The schematic of corner milling process is depicted in Fig. 2. Where a is the main view, b and c are the top view and aL schematic diagram.

Figure 3 is a three-dimensional diagram of milling. As shown in Figs. 2 and 3, the circular tool has been in contact with the workpiece before it comes into contact with the workpiece at the center of the tool. It is assumed that the workpiece begins to contact at the edge of the tool at time T1, and the center point of the tool is aligned with the bottom at time T2.

As Fig. 2 shows, the workpiece’s width is B, and its length is L, with a height of H. The volume separated by dash-line is the volume to be removed, whose width is ae, and has a height of ap. In terms of cutting parameters, ae and ap are also refereed as cutting width and cutting depth. Diameter of milling cutter is D. The removal volume is not totally separated until the cutter finishes its travel from beginning to end. After one travel, the Material Removed Volume (MRV) is calculated as Eq. (1).

where ap, ae and L are cutting depth, cutting width and length of MRV, which are three edges of a cuboid.

For each travel, the length traveled by cutter is not equal to L, The distance traveled by cutter tool is described as Eq. (2).

where aL is called approach distance, which is the distance between center and peripheral when the cutter touches workpiece. As is shown in Fig. 2c. The equation for aL is shown as Eq. (3).

where D is the cutter diameter, ad is cutting width for the process.

2.2 Model of the Corner Milling Process

2.2.1 Decision Variables

As shown in Fig. 2, cutting depth ap and cutting width ae are two parameters of inputs. There are two more cutting parameters: feed rate fz (mm/tooth), and spindle speed n (r/min). The above mentioned four variables are all for our corner milling process inputs.

2.2.2 Objective Functions

Considering the energy saving purpose and quality preserving, our objective functions are decided on SEC and temperature. SEC represents the energy consumption in the milling process, while the milling temperature is closely related to the machining accuracy and the quality of the finished product.

The energy consumption for a machine center during milling process is versatile. To simply put, the energy intake can be roughly divided into: idle power, cutting power, and auxiliary power. Auxiliary power is static, which usually cannot be optimized by changing input parameters. Parameter changes will mostly impact cutting power, and idle power is inevitable. Hence, our energy consumption includes idle power and cutting power during cutting process, and regardless of auxiliary power. Assume that E is the energy consumed in machining process, the definition of E can be described Eq. (4).

where Pbasic is the power when spindle system starts and feeding is yet to begin, Pcut is the cutting power, and \(\eta\) is the mechanical efficiency from the milling center. Pcut is calculated via cutting force measured. Tcut is the time spent for one travel. Given the circumferential force Fc (N), and cutting velocity Vc (m/min), Pcut can be calculated Eq. (5).

where cutting velocity is calculated formula (6).

where D is diameter of cutter, and n is spindle speed. After acquisition of Vc, Tcut can be calculated using Eq. (7) below:

where aL is approach distance, L is length of workpiece, n is spindle speed, fz is feed rate, and z is insert number. With the above given, SEC can be formulated as Eq. (8).

where Ecut is the energy spent on cutting, Eidle is the idle energy consume, and MRV is computed according to Eq. (1).

Milling temperature has an important effect on the workpiece, the tool and the milling process. Too high temperature will cause the workpiece and the cutter size to have the expansion, or will affect the surface residual stress and the crystal phase characteristic, will have the serious influence to the processing quality. At the same time, most of the tool energy waste in milling is reflected in the form of cutting heat. Therefore, the effective control and reduction of temperature is a better method to improve machining quality and energy utilization.

The modeling of temperature is more empirical, unlike SEC, which is analytical. In prediction of temperature, the Hierarchical Kriging (HK) model was employed, due to the complex nature of heat transfer and temperature distribution of workpiece. And the HK method will be introduced in Sect. 2.4. Simply put, the expression of temperature in each process can be written as Eq. (9).

where TEMP is short for temperature, and the inputs for HK model are milling parameters.

2.3 Constraints

All machining centers are subjected to spindle speed n and feed rata fz limits, and the size of workpiece and cutter will simultaneously determine maximum cutting depth ap and cutting width ae for a process. In this paper, the operation is only subjected to range limits of input parameters, since the machine center is powerful enough to undertake any parameter combination, and the temperature will not be too extreme. In short, the limits for our optimization problem can be expressed as formula (10).

Henceforth, with the completion of modeling machining gray box, optimization algorithm will be introduced and discussed.

2.4 Hierarchical Kriging

2.4.1 Basic Theory of Hierarchical Kriging

Hierarchical Kriging is an extension of ordinary Kriging, wherein low-fidelity model is constructed to guide the high-fidelity model for high precision [33]. Hierarchical Kriging model is a kind of variable-fidelity metamodel. In order to obtain Hierarchical Kriging model, a low-fidelity approximation model, and then use it for later prediction. The main idea is to use low-fidelity sample points to establish the first-level kriging model to predict the trend of the model, and then use high-fidelity sample points to interpolate the first-level kriging model for the second time. That is, the accuracy of the first-level kriging model make improvements to get the final prediction model. Since the obtained prediction model is established hierarchically using kriging interpolation, it is named Hierarchical Kriging. In the process of establishing a Hierarchical Kriging approximation model, a low-fidelity kriging model needs to be established first, and then a low-fidelity kriging model is used to build a high-fidelity kriging model. A brief introduction is given here [34].

For an m-dimensional problem, assume that the low-fidelity function is \({\text{Y}}_{\text{lf}}\): Rm → R, the high-fidelity function Y: Rm → R prediction, and the high-fidelity function Y sample points:

Corresponding response:

where n is the number of sample points calculated with high accuracy. (S, YS) represents the high-fidelity sample point data set in the vector space. Similarly, assuming that the low-fidelity function samples nlf sample points Slf, the corresponding response is Ylf.

Low-fidelity model can be expressed Eq. (13).

In which \(\beta_{0,lf}\) is a constant to be determined, and \(Z_{lf} (x)\) is a stable stationary gaussian process. Assume that sample set is \((S_{lf} ,y_{s,lf} )\), after applying Kriging interpolation, the low-fidelity model prediction result of any untried position x can be expressed Eq. (14).

In Eq. (12), \(\beta_{0,lf} = (E^{T} R_{lf}^{ - 1} E)^{ - 1} E^{T} R_{lf}^{ - 1} (y_{s,lf} )\). Where \(R_{lf}^{{}}\) is a matrix of \(n_{lf} \times n_{lf}\) dimensions, and \(R_{lf}^{{}}\) signifies correlation of sample positions. \(n_{lf}\) is the number of low-fidelity points.\(E\) is column vector with \(n_{lf}\) dimensions, and its elements are all 1. \(r_{lf}^{{}}\) is a vector that represents the correlation of untried positions and tried positions [35].

In contrast with ordinary Kriging, Hierarchical Kriging is written Eq. (15).

As shown in Eq. (15), low-fidelity model \(\hat{y}_{lf}\) is multiplied with a undetermined constant \(\beta_{0}\) as a global tendency (or mean) to guide stationary gaussian process \(Z(x)\). Stationary gaussian process \(Z(x)\) has means of zero and covariance as the Eq. (16).

where \(\sigma^{2}\) is variance of \(Z(x)\); \(R(x,x^{\prime})\) is the spatial correlation function, which is dependent on Euclidian distance of \(x\) and \({x}^{^{\prime}}\).

When high-fidelity model can be obtained using a linear combination of high-fidelity data \(y_{s}\), the untried position x can be predicted via Hierarchical Kriging as in Eq. (17).

In the Equation above, \(\beta_{0} = (F^{T} R^{ - 1} F)^{ - 1} F^{T} R^{ - 1} y_{s}\), who is a proportionality coefficient that shows how correlated high-fidelity/low-fidelity model is;\(F\) is a column vector with \(n_{hf}\) dimensions whose elements are all 1. \(n_{hf}\) is the number of high-fidelity samples;\(R\) is the correlation matrix between high-fidelity samples. \(V_{HK}\) only relates to tried positions, and can be calculated during the process of Kriging interpolation. Once \(V_{HK}\) is acquired, predicting the untried point \(x\)’s response \(\hat{y}(x)\) requires recalculation of \(r^{T}\) and \(\hat{y}_{lf}\). Notice that \(\hat{y}_{lf} (x)\) is the low-fidelity model computed using the equation mentioned above [36].

2.4.2 Comparison Between Different Prediction Model

In the field parameter optimization, a variety of modeling techniques are popular among scholars, such as Artificial Neural Networks (ANN) [37], RSM [38] and polynomial fitting. Despite success of those methodologies above, few models can handle variable-fidelity datasets. In ANN, RSM and polynomial fitting, data from all sources were treated equally, contributed to same to the model regardless of their accuracy and fidelity. What’s more, ANN requires relatively large volume of dataset to guarantee performance. In order to lower cost from experiments and utilize low-fidelity dataset from simulation, hierarchical scheme should be adopted. HK model is a form of variable-fidelity model. Unlike ANN, HK requires fewer training samples.

Different from RSM and polynomial fitting, HK model make use of the low-fidelity samples to construct a general trend surface, and then construct high-fidelity model based on the trend surface using high-fidelity sample data. This schematic enables incorporation of low-fidelity samples, meanwhile eliminating inaccuracy from low-fidelity samples, and allowing high-fidelity samples to play more important role. HK has demonstrated its applicability in optimization problems. Yang et al. [39] employed HK for the parametric optimization of deep penetration laser wielding; Yuepeng et al. [40] utilize HK for optimization of roter blade design in helicopters regarding roter noise. In both studies above, simulation software was employed so that cost of experiment can be reduced.

Four data from physical experiment were selected in order to prove the superiority of HK. And the details of the physical experiment will be described in Sect. 4. The results are showed in Tables 1 and 2. Despite the fact that some experimental points are better than HK modeling results, the average error of HK is significantly lower than ANN and RSM.

3 Proposed K-means MOPSO

In order to solve the multi-objective problem, the K-means MOPSO was designed for the solution. MOPSO was chosen for our application and modification due to its simplicity and low degree of complexity. Genetic Algorithm involves Evaluation Selection, Crossover and Mutation in an epoch, whereas PSO only includes Evaluation and Velocity Update in a row, in terms of single-objective problems. When the problem requires multi-objective solution, the computational complexity will rapidly rise. Our K-means MOPSO incorporates K-means clustering for multi-objective problems. K-means clustering is a simple and effective clustering algorithm [41]. The use of K-means clustering is aimed at avoidance of crowdedness.

3.1 Workflow of K-means MOPSO

K-means clustering is organized into classic PSO in order to eliminate crowded solution. The workflow of K-means goes like MOPSO, and its flowchart is shown in Fig. 4.

When there are clusters in the final solution, the solution cannot represent the Pareto front well. MOPSO cannot avoid crowdedness in the solution, because MOPSO guides the swarm towards non-dominant points. This strategy makes the swarm gathered at some elite points. To avoid the swarm grouped at some non-dominant points, instead of guiding the swarm towards the elites, our strategy is to guide the swarm towards the centers generated from K-means clustering. Such strategy allows some freedom for the individuals, so that the individuals may not cluster around one position. In addition to K-means clustering, non-dominant archive and outer archive are introduced into our optimizer. Non-dominant archive stores non-dominant solutions in current generation, and outer archive stores non-dominant solutions obtained in the whole process. After the construction of K-means MOPSO, the algorithm was further tested, and the results were in the next section.

3.2 Evaluation Results of K-means MOPSO

To validate our modified K-means MOPSO, several benchmarks were applied to the optimizer, and their test results are recorded and shown. The standard that measures the quality of Pareto front, Generational Distance and Maximum Spread [42] are introduced first, then the standard for our K-means MOPSO on the benchmarks are shown.

3.2.1 Definition of Generational Distance

Generational Distance marks the distance between true Pareto front and the obtained Pareto front [43]. GD is the measure of how close the optimizer’s solution is to the true Pareto front. As a independent measurement, its effectiveness is irrelevant to accuracy of our optimizer. GD is defined in the following Eq. (18).

where m is the number of solutions obtained;\(d_{i}\) is the Euclidian distance between obtained solution front and the true Pareto front.

3.2.2 Definition of Maximum Spread

Maximum Spread represents the coverage of the obtained solution front. It calculates the coverage according to the volume of hypercube made up from extreme values. MS is computed as Eq. (19).

where k is the dimension of the objective. \(f_{i}^{\max }\) and \(f_{i}^{\min }\) are respectively the maximum and minimum for the solution set in the ith dimension. \(F_{i}^{\max }\) and \(F_{i}^{\min }\) are the maximum and minimum for the true Pareto front set in terms of ith dimension.

3.2.3 Evaluation Result of K-means MOPSO

In order to acquire evaluation results of our K-means MOPSO, four benchmark functions, ZDT1, ZDT2, ZDT4 and ZDT6 were tested on our optimizer. The definitions of the benchmarks are described in Table 3.

The abovementioned four test benchmarks were solved by K-means MOPSO respectively to demonstrate the applicability of our algorithm. Hyperparameter setup for K-means MOPSO is listed on Table 4. \(\omega\), C1 and C2 were determined according to ref. [44]. The comparison of ground-truth Pareto front and our solution is shown in Fig. 5. Judging from Fig. 5, it’s evident that K-means MOPSO can very effectively obtain solution close to the true Pareto front. Additionally, K-means MOPSO achieved favorable results regarding GD and MS. The input of benchmarks is two-dimensional data. The results are listed in Table 5.

4 Case Study

In this paper, experiments were conducted on a machine center, and Advantage simulation software. Physical experiments can provide high-fidelity data with high cost, while the simulation can provide low-fidelity data with low cost. After obtaining the experiments, data obtained were processed for Hierarchical-Kriging modeling, which was then used for K-means MOPSO for solution of our multi-objective problem.

4.1 Physical Experiment Setup and Design

The machined parts are set as valve parts and made of H62 brass with wide range of uses and high mechanical properties. The quality of the milling valve will directly affect the tightness of the valve, so the process is also the key to the quality of the valve [45]. In order to produce better quality H62 parts, researching into H62 brass machining input and outcome becomes necessary. The chemical composition of the experimental material brass H62 is shown in Table 6.

The physical experiment was carried out in the Center of Mikron UCP800 Duro machine, and the cutter was processed with a diameter of 8 mm tungsten steel end milling cutter. The workpiece is H62 brass metal block with the size of 120 mm × 70 mm × 30 mm. In order to facilitate fixation and milling, holes are drilled at the bottom as shown in the Fig. 6. In order to describe the outcomes, Kistler9123C1011 three-component dynamometer was employed to measure force in three X, Y and Z directions during the whole milling process. T650SC thermal camera was deployed to record the milling procedure for the temperature information. Specifications of all the items involved are listed in Table 7. The photo of experimental setup for physical experiments is shown in Fig. 7.

Design of the experimental trial points is abided by Orthogonal Design of Experiments (ODE) [46]. ODE is the design when many levels of inputs are properly arranged to combinations. ODE is capable of ensure practicability, meanwhile eliminate number of experiments sharply. The sample points are evenly distributed in variable space, which makes the experiment more representative and generic [47].

As previously mentioned, the decision variable is constituted of four elements: spindle speed n, feed rate fz, cutting depth ap, and cutting width ae. Based on the facilities in the laboratory, our elements are divided into 4 levels. According the 4 levels and 4 elements, totally 16 experiments were contrived. The design was in consideration of machining center and cutter tool, which is shown in Table 8.

4.2 Acquisition and Analysis of High/Low Fidelity Data

To model the outcomes of the physical machining process, dynamometer Kistler9123C1011 and thermal camera T650SC were deployed to measure the force and temperature.

The three-way dynamometer is installed on the spindle and rotates with the tool. In order to facilitate the calculation of power, it is necessary to convert the measured three-way force along the tool rotation into the three-way force fixed on the coordinate axis based on the workpiece. Axis Z of the rotating coordinate axis and fixed coordinate axis recombine and are perpendicular to the machining plane, so coordinate transformation is not required.

The transformation of coordinate system is shown in Fig. 8. Assume the force in that rotating coordinate in X direction is Fx1, and similarly Fy1 for Y direction. Assume that forces in accordance with feed direction and vertical to cutter-workpiece intersection direction are Fx2 and Fy2.

With Θ calculated as the above, Fx2 and Fy2 can be calculated as:

After the abovementioned process, forces in X and Y direction are obtained, with force in Z direction, the static coordination, where Fc can be obtained as:

From the result gained above, according to Eq. (5), cutting power Pc can be obtained, thus linking the raw dynamometer data to modeling. Until here, the power acquisition is completed. The next will discuss how the temperature is measured.

During the temperature measurement experiment, the milling process was recorded with the T650SC infrared measuring instrument and researchIR software was connected to the computer for data storage. In order to ensure the accuracy of the results, the temperature range was set between 20 and 500 degrees, and the thermal emissivity was set at 0.05. The frames filmed were processed using Flir tool + software to obtain temperature data. Select the path area cut by the tool in the video, as shown in the Fig. 9, the curve of the maximum temperature change with time in this area at each moment can be derived, and finally the peak temperature of the tool we need can be obtained.

4.3 Processing and Calculation of Physical Experiments Data and Simulations Data

With the measurement and computation above, after conducting the orthogonal experiments, the results of forces, power and temperatures are acquired and listed in Table 9.

Another part of our experiment is conducted on Third Wave AdvantEdge software using computers to reduce cost. AE is a simulation software based on material properties, whose simulation result is consistent with reality. Performing experiments on AE first can lay the theoretical base guiding production. Many unrepeatable processes can be replicated by simulation software easily, and some uncontrollable nature of the parts can be avoided [48]. Some material parameters and cutting parameters are set in combination with physical experiment during the pretreatment. After calculation, the data such as milling force and temperature can be extracted by opening the post-processing interface, as shown in the Fig. 10.

Basically, the data from physical experiments and from AE is the same, hence they are processed according to the same procedure. The only difference is that physical experiment is more accurate and credulous. Since simulation is inexpensive, another 48 experiments were designed according to ODE principal, to supplement the physical experiment. Another 16 experiments, whose design is exactly the same as physical experiment, were conducted to examine the precision of AE.

The 16 comparison results are listed in Table 10. Judging from Table 10, the simulation can appropriately reflect physical experiment results, with average 8% error in terms of power, and 7% error in terms of temperature.

As can be seen from Tables 9 and 10, temperature and SEC are in most cases mutually contradictory. Lower cutting temperatures generally result in lower thermal deformation of tools and tool wear during the cutting process. And Low level cutting parameters usually result in low power. However, according to Eq. (8), it can be seen that a lower MRV may lead to a larger SEC. In this case, the temperature and energy consumption are optimized to obtain the corresponding cutting parameters.

After the experimental and simulation data were obtained, ANOVA of MATLAB was used to confirm whether the cutting parameters had significant effects on the temperature and power results. The general expressions of one-way ANOVA are shown in the Table 11 below.

After analysis, there were significant differences between n, f, ad, ap and P in the experimental data, while there were no significant differences between them and TEMP. The results were shown in the Tables 12 and 13. In the simulation data, there are significant differences between n, f, ad, ap, but no significant differences between them and TEMP. The results are shown in the Tables 14 and 15.

After confirming that AdvantEdge is reliable and accurate, the supplement 48 experiments were performed to obtain low-fidelity data. The cutting parameters and the results for the 48 simulation experiments are shown in Table 16.

4.4 Multi-objective Optimization of CNC Corner Milling Parameters Based on HK and K-means MOPSO

The results from both physical experiments and simulations were fed into HK to construct variable fidelity model for the prediction of machining outcomes. The code was implemented in Matlab 2018b, with the help of DACE toolkit [14]. The hyperparameters for HK power agent model are showed in Table 17. And the hyperparameters for HK temperature agent model are showed in Table 18.

initial value for theta \(\theta = \left[ {\theta_{1} ,\theta_{2} ,...,\theta_{n} } \right]\), where n is the dimension of decision space; upper and lower boundary for \(\theta\); regression model regpoly; and correlation function corr. For HK model, low-fidelity model and high-fidelity all take in a set of hyperparameters, except high-fidelity model’s regression model is the corresponding low-fidelity Kriging model. Using the setup, HK model was constructed and validated on 4 additional simulation experiments. The 4 simulations were referred as validation experiments, and the results were shown in Table 19.

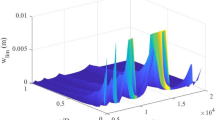

It’s evident from Table 19, that HK model successfully predicted power and temperature for the milling process, with average error of power at 8%, and average error of temperature at 6%. With confidence in the proposed HK model, the K-means MOPSO was incorporated with HK to yield multi-objective solution for the optimization problem. Hyperparameter setup for K-means in case study is in accordance with that of ZDT benchmark test, which is listed in Table 4. The only difference is that the population size and archive size here are all 400. The result of the pareto solution is shown in Fig. 11.

Some selected Pareto solutions were listed in Table 20. The results were selected from the original pareto set by every 40 solutions. Judging from the selected Pareto solutions, it’s evident that temperature and SEC are conflicting each other, with one increasing and the other shrinking and vise versa. While the goal is to maintain a low level of both metrics, in practice it’s only possible to sacrifice for lowering the other. What’s more, the parameter inputs to Pareto solutions shows that maintaining a low spindle speed, together with other parameters in low number, achieves low temperature, but less energy-efficient. Keeping the spindle speed at a high level, meanwhile increasing feed, cutting width and cutting depth, will ensure high energy-efficiency, however the temperature of workpiece can be relatively high.

5 Conclusions

To the best of the authors’ knowledge, there exists little research on the optimization of H62 machining process. And in the milling, research of temperature as the target is less. The study of temperature change can better control the processing quality and additional energy dissipation, while the SEC as the target can both reflect the machining quality and efficiency.

In this study, process parameters are optimized to obtain appropriate temperature and SEC in corner milling. In order to balance modeling accuracy and cost, high/low fidelity data of SEC and temperature during corner milling H62 brass processing were obtained by using physical experiment and AE simulation. HK model was constructed based on the two variable-fidelity data sets, and the K-means MOPSO was examined and applied on the multi-objective optimization problem. Finally, the solution was obtained, and verified using AdvantEdge. The following conclusions can be drawn from the study:

-

(1)

The accuracy difference between simulation and physical experiment is analyzed. AdvantEdge simulation software can properly output results with average error in prediction of temperature and power at 8% and 7% respectively;

-

(2)

A high-precision, high-efficiency, low-cost model is constructed. Hierarchical Kriging model can accurately predict power and temperature fast but with low cost, and showed overall error of 8% and 6% for power and temperature;

-

(3)

An efficient and high-precision model solving method is adopted. The adopted K-means MOPSO is effective by showing favorable results of GD and MS in solving benchmark ZDT1, ZDT2, ZDT 4 and ZDT6. The improved K-means MOPSO reduces the complexity of the algorithm itself, making it more efficient in obtaining the optimal cutting parameters. And the result recommended high-level of spindle speed for low SEC, and low spindle speed for low temperature.

-

(4)

This study has reference and guiding significance for corner milling of H62 brass parts. Influence factors of processing parameters on processing results is analyzed. The temperature and energy consumption can be well controlled by this model, and the appropriate cutting parameters can be selected to optimize the processing quality and energy consumption.

References

Wang, B., Liu, Z., Song, Q., Wan, Yi., & Ren, X. (2020). An approach for reducing cutting energy consumption. International Journal of Precision Engineering and Manufacturing-Green Technology, 7(5–8), 35–51.

Shi, K. N., Ren, J. X., Wang, S. B., Liu, N., Liu, Z. M., Zhang, D. H., & Lu, W. F. (2019). An improved cutting power-based model for evaluating total energy. Journal of Cleaner Production, 231, 1330–1341. https://doi.org/10.1016/j.jclepro.2019.05.323

Salahi, N., & Jafari, M. A. (2016). Energy-performance as a driver for optimal production planning. Applied Energy, 174, 88–100. https://doi.org/10.1016/j.apenergy.2016.04.085

Newman, S. T., Nassehi, A., Imani-Asrai, R., & Dhokia, V. (2012). Energy efficient process planning for CNC machining. CIRP Journal of Manufacturing Science and Technology, 5(2), 127–136. https://doi.org/10.1016/j.cirpj.2012.03.007

Misaka, T., Herwan, J., Ryabov, O., Kano, S., Sawada, H., Kasashima, N., & Furukawa, Y. (2020). Prediction of surface roughness in CNC turning by model-assisted response surface method. Precision Engineering, 62, 196–203. https://doi.org/10.1016/j.precisioneng.2019.12.004

Jang, D., Jung, J., & Seok, J. (2016). Modeling and parameter optimization for cutting energy reduction in MQL milling process. International Journal of Precision Engineering and Manufacturing-Green Technology, 3(1), 5–12. https://doi.org/10.1007/s40684-016-0001-y

Zhou, J., Ren, J., & Yao, C. (2017). Multi-objective optimization of multi-axis ball-end milling Inconel 718 via grey relational analysis coupled with RBF neural network and PSO algorithm. Measurement, 102, 271–285. https://doi.org/10.1016/j.measurement.2017.01.057

Sahu, N. K., & Andhare, A. B. (2017). Modelling and multiobjective optimization for productivity improvement in high speed milling of Ti–6Al–4V using RSM and GA. Journal of the Brazilian Society of Mechanical Sciences and Engineering, 39(12), 5069–5085. https://doi.org/10.1007/s40430-017-0804-y

Malghan, R. L., Rao, K. M. C., Shettigar, A. K., Rao, S. S., & D Souza, R. J. (2017). Application of particle swarm optimization and response surface methodology for machining parameters optimization of aluminium matrix composites in milling operation. Journal of the Brazilian Society of Mechanical Sciences and Engineering, 39(9), 3541–3553. https://doi.org/10.1007/s40430-016-0675-7

Rao, K. V. (2019). A novel approach for minimization of tool vibration and surface roughness in orthogonal turn milling of silicon bronze alloy. Silicon, 11(2), 691–701. https://doi.org/10.1007/s12633-018-9953-6

Mirjalili, S. (2016). Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Computing and Applications, 27(4), 1053–1073.

Teimouri, R., & Baseri, H. (2015). Forward and backward predictions of the friction stir welding parameters using fuzzy-artificial bee colony-imperialist competitive algorithm systems. Journal of Intelligent Manufacturing, 26(2), 307–319. https://doi.org/10.1007/s10845-013-0784-4

Abdullah, H., Ramli, R., & Wahab, D. A. (2017). Tool path length optimisation of contour parallel milling based on modified ant colony optimisation. The International Journal of Advanced Manufacturing Technology, 92(1–4), 1263–1276. https://doi.org/10.1007/s00170-017-0193-5

Acı, Ç. İ, & Gülcan, H. (2019). A modified dragonfly optimization algorithm for single-and multiobjective problems using brownian motion. Computational Intelligence and Neuroscience, 2019, 1.

Yang, Y. (2018). Machining parameters optimization of multi-pass face milling using a chaotic imperialist competitive algorithm with an efficient constraint-handling mechanism. Computer Modeling in Engineering & Sciences, 116(3), 365–389.

Khalilpourazari, S., & Khalilpourazary, S. (2018). Optimization of production time in the multi-pass milling process via a Robust Grey Wolf Optimizer. Neural Computing and Applications, 29(12), 1321–1336. https://doi.org/10.1007/s00521-016-2644-6

Gómez, D., & Rojas, A. (2016). An empirical overview of the no free lunch theorem and its effect on real-world machine learning classification. Neural Computation, 28(1), 216–228. https://doi.org/10.1162/NECO_a_00793

Li, C., Tang, Y., Cui, L., & Li, P. (2015). A quantitative approach to analyze carbon emissions of CNC-based machining systems. Journal of Intelligent Manufacturing, 26(5), 911–922.

Han, F., Li, Li., Cai, W., Li, C., Deng, X., & Sutherland, J. W. (2020). Parameters optimization considering the trade-off between cutting power and MRR based on linear decreasing particle swarm algorithm in milling. Journal of Cleaner Production. https://doi.org/10.1016/j.jclepro.2020.121388

Luoke, Hu., Cai, W., Shu, L., Kangkang, Xu., Zheng, H., & Jia, S. (2020). Energy optimisation for end face turning with variable material removal rate considering the spindle speed changes. International Journal of Precision Engineering and Manufacturing-Green Technology, 8, 1–14.

Luan, X., Zhang, S., & Li, G. (2018). Modified power prediction model based on infinitesimal. International Journal of Precision Engineering and Manufacturing-Green Technology, 5(1), 71–80. https://doi.org/10.1007/s40684-018-0008-7

Chen, X., Li, C., Jin, Y., & Li, L. (2018). Optimization of cutting parameters with a sustainable consideration of electrical energy and embodied energy of materials. The International Journal of Advanced Manufacturing Technology, 96(1–4), 775–788.

Li, C., Chen, X., Tang, Y., & Li, L. (2017). Selection of optimum parameters in multi-pass face milling for maximum energy efficiency and minimum production cost. Journal of Cleaner Production, 140, 1805–1818.

Zhang, H., Deng, Z., Fu, Y., Lv, L., & Yan, C. (2017). A process parameters optimization method of multi-pass dry milling for high efficiency, low energy and low carbon emissions. Journal of cleaner production, 148, 174–184.

Rajemi, M. F., Mativenga, P. T., & Aramcharoen, A. (2010). Sustainable machining: selection of optimum turning conditions based on minimum energy considerations. Journal of Cleaner Production, 18(10–11), 1059–1065.

Chenwei, S., Zhang, X., Bin, S., & Zhang, D. (2019). An improved analytical model of cutting temperature in orthogonal cutting of Ti6Al4V. Chinese Journal of Aeronautics, 32(3), 759–769.

Zhang, Y., Zhang, Z., Zhang, G., & Li, W. (2020). Reduction of energy consumption and thermal deformation in wedm by magnetic field assisted technology. International Journal of Precision Engineering and Manufacturing-Green Technology, 2(7), 391–404.

Karaguzel, U., & Budak, E. (2018). Investigating effects of milling conditions on cutting temperatures through analytical and experimental methods. Journal of Materials Processing Technology, 262, 532–540.

Liu C, He Y, Wang Y, Li Y, Wang S, Wang L, Wang Y. (2020). Effects of process parameters on cutting temperature in dry machining of ball screw. ISA transactions, 101, 493–502.

Lazoglu, I., & Altintas, Y. (2002). Prediction of tool and chip temperature in continuous and interrupted machining. International Journal of Machine Tools and Manufacture, 42(9), 1011–1022. https://doi.org/10.1016/S0890-6955(02)00039-1

Davoudinejad, A., Tosello, G., Parenti, P., & Annoni, M. (2017). 3D finite element simulation of micro end-milling by considering the effect of tool run-out. Micromachines, 8(6), 187. https://doi.org/10.3390/mi8060187

Muaz, M., & Choudhury, S. K. (2020). A realistic 3D finite element model for simulating multiple rotations of modified milling inserts using coupled temperature-displacement analysis. International journal of advanced manufacturing technology, 107(1–2), 343–354. https://doi.org/10.1007/s00170-020-05085-4

Hu, J., Zhou, Q., Jiang, P., Shao, X., & Xie, T. (2018). An adaptive sampling method for variable-fidelity surrogate models using improved hierarchical kriging. Engineering Optimization, 50(1), 145–163.

Han, Z. H., & Görtz, S. (2012). Hierarchical kriging model for variable-fidelity surrogate modeling. Aiaa Journal, 50(9), 1885–1896.

Chao, S., Yang, X., & Song, W. (2016). Efficient aerodynamic optimization method using hierarchical Kriging model combined with gradient. Journal of Aviation, 037(007), 2144–2155.

Huang M, Yang X, Peng X. (2017). Efficient variable-fidelity multi-point aerodynamic shape optimization based on hierarchical kriging. 55th AIAA Aerospace Sciences Meeting.

Xu, L., Huang, C., Li, C., Wang, J., Liu, H., & Wang, X. (2020). A novel intelligent reasoning system to estimate energy consumption and optimize cutting parameters toward sustainable machining. Journal of Cleaner Production, 261, 121160.

Li, C., Xiao, Q., Tang, Y., & Li, L. (2016). A method integrating Taguchi, RSM and MOPSO to CNC machining parameters optimization for energy saving. Journal of Cleaner Production, 135, 263–275.

Yang, Y., Gao, Z., & Cao, L. (2018). Identifying optimal process parameters in deep penetration laser welding by adopting Hierarchical-Kriging model. Infrared Physics and Technology, 92, 443–453.

Yuepeng, B. U., Wenping, S., Zhonghua, H., & ZHANG Y, ZHANG L. . (2020). Aerodynamic/aeroacoustic variable-fidelity optimization of helicopter rotor based on hierarchical kriging model. Chinese Journal of Aeronautics, 33(2), 476–492.

Mousa, A. A., El-Shorbagy, M. A., & Farag, M. A. (2017). K-means-clustering based evolutionary algorithm for multi-objective resource allocation problems. Applied Mathematics and Information Sciences, 11(6), 1681–1692. https://doi.org/10.18576/amis/110615

Zhan, Z. H., Zhang, J., Li, Y., & Chung, S. H. (2009). Adaptive particle swarm optimization. IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics, 39(6), 1362–1381.

Wang, H., Jin, Y., & Yao, X. (2017). Diversity assessment in many-objective optimization. Cybernetics IEEE Transactions on, 47(6), 1510–1522.

Xinlei, J. (2006). PSO-based Multi-objective Optimization Algorithm Research and Its Applications. Hangzhou: Zhejiang University.

Bo, L. H., & Ma, J. L. (2019). A brief discussion of standardization practice of aeronautical valve production. China Standardization, 14, 290–293. https://doi.org/10.13535/j.cnki.11-4406/n.2015.21.024

Ruijiang, L., Yewang, Z., Chongwei, W., & Jian, T. (2010). Study on the design and analysis methods of orthogonal experiment. Experimental Technology and Management, 9, 52–55.

Linlin, Z., Liping, Z., & Xiaoying, L. (2013). Experimental study on aluminum alloy high-speed milling based on orthogonal test design. Journal of Chengdu Aeronautic Polytechnic, 4, 16.

He, M., & K. L. . (2014). Optimization of structural parameters of end mill in high speed milling 6061 aluminum alloy based on AdvantEdge. Tool Engineering, 48(10), 29r–32r.

Acknowledgements

This research is supported by the National Natural Science Foundation of China under grant no. 51705182

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Yang, Y., Wang, Y., Liao, Q. et al. CNC Corner Milling Parameters Optimization Based on Variable-Fidelity Metamodel and Improved MOPSO Regarding Energy Consumption. Int. J. of Precis. Eng. and Manuf.-Green Tech. 9, 977–995 (2022). https://doi.org/10.1007/s40684-021-00338-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40684-021-00338-3