Abstract

The goal of Direct Instruction (DI) is to teach content as effectively and efficiently as possible. To do this, instructional designers must identify generative relations or strategies that allow the learner to respond correctly to untaught situations. The purpose of content analysis is to identify generative relations in the domain to be taught and arrange the content in such a way that it supports maximally generative instruction. This article explains the role of content analysis in developing DI programs and provides examples and nonexamples of content analysis in five content domains: spelling, basic arithmetic facts, earth science, basic language, and narrative language. It includes a brief sketch of a general method of conducting a content analysis. It concludes that content analysis is the foundation upon which generative instruction is built and that instructional designers could produce more effective, efficient, and powerful programs by attending explicitly and carefully to content analysis.

The goal of Direct Instruction (DI) is to teach content as effectively and efficiently as possible. All students benefit from learning more content in less instructional time. For example, if students learn to read quickly in the early grades, their reading skills give them access to vastly more learning opportunities in subsequent grades and throughout their lives. But if they learn to read slowly or late, they cannot begin applying their reading skills to building other repertoires as soon; slower skill development in one area limits their rate of learning in numerous other areas. For students whose skills lag behind typical expectations, including students with disabilities, efficiency of instruction becomes extremely important: to catch up to typically developing peers, they must learn more in less time. For students with more significant disabilities, efficiency of instruction is even more important, because inefficient instruction places a more severe limit on their skill development and independence.

To teach effectively and efficiently, DI authors attend to many aspects of instruction that are not typically identified, systematically analyzed, and optimized in education. To the naïve observer, the most salient features of DI are those involved in interactions between teacher and student: signaling, group unison responding, scripting, and similar features (see Rolf & Slocum, this issue). Important as those are, they can overshadow the more subtle features of content analysis and instructional design that are responsible for much of DI’s effectiveness and efficiency.

One of the foundational principles of DI is that effective and efficient teaching requires teaching for generativity (Engelmann, 1969; see also Stokes & Baer, 1977). Teaching is generative to the degree that it enables the learner to respond appropriately to untrained situations. For example, if reading instruction enables students to read untaught words, it is generative. If one reading program enables students to read more untaught words with less instruction than another program, the first program is more generative than the second. In any relatively large and complex content domain, there are simply too many combinations of relevant stimuli, responses, and contexts to teach them all individually. Instruction must be generative in order to teach complex content domains effectively. Even relatively simple domains such as color identification require generative instruction: learners must respond correctly to untaught examples of yellow objects. Further, in domains that might be taught in numerous ways, generative instruction will be more efficient, enabling learners to do more with less extensive instruction.

The importance of teaching for generativity has long been recognized by behavior analysts (e.g., Stokes & Baer, 1977). However, what has been less widely recognized is that our ability to teach for generativity depends on how we analyze the content domain. The content domain is the broad topic that is to be taught. Examples include basic language, social skills, reading comprehension, mathematics, and computer programming. Content analysis is the discovery of generalizable relations within the content domain. These generalizable relations then become the organizing structure of instruction.

To give a simple example, in the content domain of beginning reading, one content analysis could treat the meaning of words as the main organizing structure. This would lead the instructional designer to develop sets of words for instruction based on meaning and immediate usefulness (e.g., one’s own name and names of family members, body parts, emergency signs, food). Another content analysis could organize words based on their shared phonic elements (e.g., words with specific letter-sounds, words with the consonant-vowel-consonant pattern). Assuming that all other aspects of instruction were well designed, these two content analyses would lead to very different learner repertoires. In the first case, students would learn a small set of words and would likely make limited generalizations. In the second case, students would acquire skills that allow them to read a wide variety of texts and would make broad generalizations to untaught words. Only the content analysis based on phonic elements that are shared across words would likely produce a truly useful reading repertoire. This example illustrates the implications of content analysis for student learning. Content analysis is often much subtler than the previous example implies; in general, it is invisible unless one specifically examines an instructional program to identify evidence of the content analysis. Although subtle in appearance, content analysis is massive in function; it determines the learner repertoires that can be produced by instruction.

In DI, the purpose of content analysis is to (1) identify generative relations (relations that can produce effective behavior in untaught situations) and (2) arrange the content in such a way that it supports maximally generative instruction. We can operationalize the purpose of content analysis as maximizing the ratio of repertoire generated to instructional time. Given an effective instructional strategy that is delivered well, instructional time is the primary variable that limits learning. Thus, the generativity of instruction can be measured in terms of time-efficiency. By including instructional time as the denominator, this ratio recognizes that we must attend to the time it takes to teach each generative relation and compare this time with the time that would be required to teach the content in other ways. In some cases, a generative relation may exist, but teaching this relation may be less time-efficient than teaching all the examples separately. A logical content analysis must therefore be followed by an empirical analysis of whether teaching the identified generative relations actually increases instructional efficiency.
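The ratio described above can be written out explicitly. The symbols are our own shorthand, not notation from the DI literature: R is the repertoire produced (for instance, the number of taught and untaught items the learner performs correctly after instruction), T is the instructional time required, the subscript gen denotes teaching through a generative relation, and the subscript sep denotes teaching each example separately. T_gen must include the time spent teaching the relation itself as well as its applications, and both sides must be measured with learners; the logical analysis only supplies a candidate for the left-hand side.

```latex
G = \frac{R}{T},
\qquad
\text{teach the generative relation only if}\quad
\frac{R_{\mathrm{gen}}}{T_{\mathrm{gen}}} > \frac{R_{\mathrm{sep}}}{T_{\mathrm{sep}}}
```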

We are using the term “generative” to include all behavioral relations that can produce effective behavior in untaught instances. Generativity encompasses all sorts of generalization relations, including simple unidimensional stimulus generalization (such as identifying red objects) as well as the complex relations involved in natural concepts (e.g., identifying shoes, dogs, or laptop computers; Herrnstein et al., 1976), and response generalization. Minimal stimulus–response units and the patterns by which they are combined to respond to novel situations as described by Skinner (1957) are important relations that produce generative responding (e.g., learning to say the sounds of letters then recombining these letter-sound relations to read words). Skinner (1957) also described manipulative autoclitic frames that enable the learner to engage in appropriate complex behavior in response to novel demands. For example, we can learn from definitions in the form of “____ means ____.” Derived relational responding (e.g., Hayes et al., 2004) and rule-governance (e.g., Skinner, 1957) can produce nearly infinite generativity. For example, given an appropriate history, hearing “when you get to the traffic light, turn right” can endow the traffic light with a discriminative-like function for turning right. Many other behavioral relations are also involved.

Content analysis is not primarily concerned with distinguishing among the various behavioral relations that can produce generative behavior—the goal is to find generative relations in the content and arrange instruction to take best advantage of these relations. However, it can be useful to be aware of the different behavioral relations that can produce generativity so that instances of these relations are more likely to be recognized in the content. Content analysis is not about demonstrating that given behavioral relations can be applied; it is about teaching the domain as efficiently as possible using all relevant generative relations.

In the literature on DI, content analysis has also been called “sameness analysis,” emphasizing the fact that it is based on identifying elements of responding that are the same across a universe of items (Carnine, 1991). For example, the instructional designer might recognize that thunderstorms, shore winds, ocean currents, and movement of magma in the Earth’s mantle all share the sameness of being driven by convection. The instructional designer can use that sameness to develop an efficient instructional program. Each of the behavioral relations mentioned above capitalizes on some element of sameness across exemplars. In this literature, the term “big idea” has also been used to refer to instances of any of these behavioral relations that support generativity (Carnine et al., 1994; Coyne et al., 2011; Watkins & Slocum, 2004). In the previous example, we might say that convection is a big idea that unites many specific instances in Earth science.

In the spirit of DI, we recognize that the concept of content analysis can be best taught through careful exemplification. We now turn to a series of juxtaposed examples and nonexamples. Some of these examples will be familiar to most readers and some much less so. These examples, a small sample of the content analyses in DI, were selected to illustrate the concept of content analysis. We hope to build two big ideas across these examples. The first is to clarify the concept of content analysis and exemplify its importance. The second is to point out that although some powerful content analyses are well known, others are the product of deep expertise in the content area.

Content Analysis in Spelling

Many students struggle with spelling despite recent evidence indicating that spelling is regularly taught in schools (Graham et al., 2008). Graham (1999) found that teachers often employ one of four strategies when determining which words to include in spelling lessons: (1) allowing students to select their own words, (2) selecting commonly misspelled words, (3) selecting commonly encountered words, and (4) selecting words that represent different but related patterns. In a more recent study, Post and Carreker (2002) found that teachers typically build spelling word lists based on themes, such as holidays, types of animals, or instructional units from other domains (e.g., science, social studies). Thus, the tacit content analysis that underlies much spelling instruction is that there are no generative relations to be learned, so one might as well organize the content based on student motivation, related meanings, or frequency of errors. None of these approaches represents generative relations; this is a nonexample of DI content analysis. There are two powerful strategies, or big ideas, that can produce highly generative spelling repertoires: the phonic strategy (National Institute of Child Health & Human Development, 2000) and the morphographic strategy (Dixon, 1991).

In the phonic strategy, the student hears a word and responds separately to each phoneme (sound unit) by writing the most common corresponding grapheme (letter or set of letters). This strategy is built upon the sameness of phoneme–grapheme correspondence that applies across many words. This strategy can be extended through phonic rules such as “when a word ends with the sound ā, it is usually spelled ‘ay.’” Although phonic strategies do not produce appropriate responses to all English words, they do result in correct spelling of a great many words (National Institute of Child Health & Human Development, 2000). In addition, many words that cannot be spelled correctly with the phonic strategy alone can be supported by this strategy: in many words, the phonic strategy yields the correct spelling of some of the sounds and reduces the number of possibilities for the others. For example, in the word “gleam,” the sounds /g/, /l/, and /m/ can be derived phonetically. The ē sound is commonly spelled “ee,” “ea,” or “e” followed by a final silent “e” (as in “theme”). So, application of the phonic strategy would allow derivation of three of the four sounds and would reduce the remaining sound to a choice among three options, which is far better than memorizing the spelling of the entire word. This illustrates the important fact that teaching generalizations can be powerful even when they are not sufficient by themselves. The generalizations of phonic spelling can greatly reduce the memorization load required for learning irregular spelling words, even when some word-specific memorization is necessary.
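The narrowing effect in the “gleam” example can be made concrete with a minimal Python sketch. It assumes a deliberately tiny, hypothetical phoneme-to-grapheme table (`GRAPHEMES`, covering only the sounds in “gleam”) and writes the “e plus final e” option as `e_e`. The function spells each phoneme separately and lists every candidate spelling; because every phoneme except ē has a single option in the table, the learner is left choosing among three candidates rather than memorizing the whole word.

```python
from itertools import product

# Hypothetical, deliberately tiny phoneme-to-grapheme table.
# "e_e" marks a split spelling: "e" in place, plus a final silent "e".
GRAPHEMES = {
    "g": ["g"],
    "l": ["l"],
    "ē": ["ee", "ea", "e_e"],
    "m": ["m"],
}

def candidate_spellings(phonemes):
    """Spell each phoneme separately and return every candidate spelling."""
    results = []
    for choice in product(*(GRAPHEMES[p] for p in phonemes)):
        word, final = "", ""
        for grapheme in choice:
            if "_" in grapheme:            # split spelling such as e_e
                head, tail = grapheme.split("_")
                word += head
                final += tail              # the tail goes at the end of the word
            else:
                word += grapheme
        results.append(word + final)
    return results

print(candidate_spellings(["g", "l", "ē", "m"]))  # ['gleem', 'gleam', 'gleme']
```

Only word-specific practice decides among the three candidates; the table does the rest of the work.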

The morphographic strategy (Dixon, 1991; Dixon & Engelmann, 2001; Simonsen & Dixon, 2004) is less well known but highly generative. It applies to words that are made up of multiple morphographs. A morphograph is the smallest unit of written language that has meaning: a prefix, base, or suffix. In English, morphographs have highly consistent spelling, and a limited number of rules for combining morphographs can produce the correct spelling of many words (Dixon & Engelmann, 2001). Figure 1 shows an example of a set of seven morphographs and the 28 complex words that can be spelled correctly based on these morphographs and the associated combining rules.

Figure 1. Generativity of Morphographic Analysis of Spelling. Note. Adapted from Watkins & Slocum (2004, p. 30).

Many multimorphographic words can be formed by simply spelling one morphograph after the other. For example, “recovered” is formed by combining the prefix “re,” the base word “cover,” and the suffix “ed.” For other multimorphographic words, the last letter of a morphograph must be dropped, doubled, or changed; in these cases, students must apply a set of rules to combine morphographs. For example, students must learn to apply the rule “When a word ends in ‘e’ and the next morphograph begins with a vowel, drop the ‘e’,” as in skate + ing = skating.
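The combining step can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the DI programs: it assumes each morphograph is tagged as a prefix, base, or suffix, implements only the drop-e rule quoted above (the rules for doubling or changing a final letter are omitted), treats only a, e, i, o, and u as vowels, and exempts a bare prefix such as “re” so that words like “react” are not trimmed. That exemption is our own assumption.

```python
from dataclasses import dataclass

VOWELS = set("aeiou")  # simplifying assumption: "y" is not treated as a vowel

@dataclass(frozen=True)
class Morphograph:
    text: str
    kind: str  # "prefix", "base", or "suffix"

def combine(parts):
    """Join morphographs in order, applying only the drop-e rule."""
    word = ""
    prev_kind = None
    for part in parts:
        # Drop-e rule: the word so far ends in "e" and the next morphograph
        # begins with a vowel. Assumption: a bare prefix such as "re" is exempt.
        if word.endswith("e") and prev_kind != "prefix" and part.text[0] in VOWELS:
            word = word[:-1]
        word += part.text
        prev_kind = part.kind
    return word

re_ = Morphograph("re", "prefix")
cover = Morphograph("cover", "base")
skate = Morphograph("skate", "base")
ed = Morphograph("ed", "suffix")
ing = Morphograph("ing", "suffix")

print(combine([re_, cover, ed]))  # recovered
print(combine([skate, ing]))      # skating
```

The same few morphographs and one rule also yield "skated," "recover," and "covering," which is the kind of multiplication Figure 1 depicts.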

Another example of morphographic generativity involves spelling the /er/ sound at the end of words that name people by their profession or activity (e.g., farmer, professor, runner, supervisor). Students can learn the rule “If there is an -ion (pronounced ‘shun’) form of the word, the ending is spelled ‘or’.” Examples include supervisor (supervision), instructor (instruction), and educator (education); nonexamples include farmer (there is no “farmtion”) and runner (there is no “runtion”). The DI spelling program Spelling Through Morphographs (Dixon & Engelmann, 2001) teaches 750 morphographs and 14 rules from which students can derive the correct spelling of over 12,000 words. A secondary source of generativity in Spelling Through Morphographs is that students also learn the meanings of the morphographs, and applying this knowledge allows them to derive the meanings of many unfamiliar vocabulary terms.
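The -ion test for the /er/ ending is itself a small decision procedure, sketched below in Python under stated assumptions. `ION_WORDS` is a hypothetical word list standing in for the learner's knowledge of -ion forms, and the drop-e rule is applied before checking for the -ion form (so “educate” is checked as “education”). The doubling rule is not modeled, so a word such as “run” would come out wrong; the sketch covers only stems that need no doubling.

```python
def drop_final_e(stem):
    """Drop-e rule before a vowel-initial suffix such as -ion, -or, or -er."""
    return stem[:-1] if stem.endswith("e") else stem

def agent_noun(stem, ion_words):
    """Spell the /er/ ending 'or' if an -ion form of the word exists, else 'er'."""
    base = drop_final_e(stem)
    return base + ("or" if base + "ion" in ion_words else "er")

# Hypothetical word list standing in for the learner's knowledge of -ion forms.
ION_WORDS = {"supervision", "instruction", "education", "profession"}

for stem in ["supervise", "instruct", "educate", "profess", "farm", "teach"]:
    print(stem, "->", agent_noun(stem, ION_WORDS))
```

One rule plus knowledge the learner already has (which -ion words exist) settles the spelling of every agent noun of this kind.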

The fact that morphographs tend to retain their spelling across many English words is the underlying sameness that supports the morphographic strategy. Each morphographic rule involves additional samenesses. For example, the rule “When a word ends in ‘e’ and the next morphograph begins with a vowel, drop the ‘e’” is based on the sameness that the spelling of many English words follows this pattern. These rules are big ideas.

The DI spelling series Spelling Mastery (Dixon & Engelmann, 2006) combines phonic and morphographic strategies with strategic memorization of irregular words to produce far more generative repertoires than can be found in mainstream spelling instruction. In earlier lessons, students are taught to rely more heavily on phonic strategies, and in later lessons they are taught to use the morphographic strategies described above. Another important facet of content analysis that is well exemplified by spelling is the importance of recognizing irregularities: items for which responding based on a generality will reliably produce incorrect responses. Throughout the Spelling Mastery program, specific words that do not conform to the rules are taught individually as irregular words.

Content Analysis in Basic Arithmetic Facts

There are 100 basic addition facts with addends from 0 to 9, and 100 corresponding subtraction facts. The common assumption is that all 200 facts must be memorized by rote, that is, as paired associates. This naïve acceptance of a traditional approach to basic arithmetic is a nonexample of content analysis. With a more powerful content analysis, the volume of memorization required to learn these facts can be greatly reduced (Johnson, 2008; Stein et al., 2018). The most important strategy in teaching arithmetic facts is the use of number families. A number family is a triplet of numbers that can be manipulated to derive a set of related facts (Poncy et al., 2010; Stein et al., 2018). For example, in addition and subtraction, 2, 3, and 5 is a number family; it can produce four basic facts: 2 + 3 = 5, 3 + 2 = 5, 5 – 2 = 3, 5 – 3 = 2. Rather than teaching students to memorize four individual facts, we can teach them one number family and the relations necessary to produce four facts from it. In addition to the number families, we can teach four simple rules for adding and subtracting zero and one (e.g., “When you add or subtract zero, you end up with the same number,” and “When you add one, you end up with the next number.”). Learning these four rules allows students to derive the 72 addition/subtraction facts involving zero and one. Learning 36 families allows them to derive the remaining 128 addition/subtraction facts. Thus, four rules and 36 families yield 200 facts. This content analysis is based on the sameness, or big idea, of the relations between addition and subtraction facts. Figure 2 illustrates the generativity of this content analysis for addition/subtraction facts. The analysis also applies to multiplication and division facts.
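The counts above (200 facts, 72 covered by the zero/one rules, 128 covered by 36 families) can be checked by enumeration. This short Python sketch assumes the conventional definition that each subtraction fact is the inverse of an addition fact with addends from 0 to 9, and it counts a fact as “involving zero or one” when either addend of its number family is 0 or 1; that reading of the count is our assumption.

```python
from itertools import product

DIGITS = range(10)

def all_facts():
    """Return (fact text, addend pair) for the 100 addition and 100 subtraction facts."""
    facts = []
    for a, b in product(DIGITS, DIGITS):
        facts.append((f"{a} + {b} = {a + b}", (a, b)))
        # Each subtraction fact is the inverse of an addition fact: (a + b) - b = a.
        facts.append((f"{a + b} - {b} = {a}", (a, b)))
    return facts

facts = all_facts()
# Facts whose number family contains 0 or 1 are covered by the zero/one rules.
rule_facts = [text for text, (a, b) in facts if a <= 1 or b <= 1]
family_facts = [(text, pair) for text, pair in facts if min(pair) >= 2]
# A number family is identified by its two addends (order ignored) and their sum.
families = {(min(a, b), max(a, b), a + b) for _, (a, b) in family_facts}

print(len(facts), len(rule_facts), len(family_facts), len(families))  # 200 72 128 36
```

Of the 36 families, the 28 with two different addends each yield four facts and the 8 doubles (such as 4, 4, 8) yield two, which accounts for the 128.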

Content Analysis in Earth Science

Earth science is often taught as a series of unrelated topics, such as geology, weather, and oceanography (Haas, 1991; Nolet et al., 1993). Treating these topics as unrelated is an example of poor content analysis. A careful content analysis in this domain reveals that convection is a big idea that can be applied to understanding many seemingly disparate topics. The key component relations of convection are: (1) when fluids (i.e., liquids and gases) are heated, they expand and become less dense; (2) when fluids become less dense, they rise; (3) when heated fluids rise, they may lose contact with their heat source and cool; (4) when fluids cool, they contract and become denser; and (5) when fluids become denser, they descend. This understanding of convection can be applied to numerous superficially diverse phenomena: water in a heated pot, air in a room, on-shore and off-shore winds, wind circulation in thunderclouds and the production of hail, patterns of winds at various latitudes (e.g., polar winds, westerlies, trade winds), ocean currents, and plate tectonics (see Figure 3). This content analysis not only generates specific understanding of the mechanisms driving various meteorological, oceanographic, and geological phenomena but also supports understanding of otherwise unseen similarities across these systems (Harniss et al., 2004). In addition, learning about convection in earth science is likely to help students apply their understanding of convection in other contexts, such as cooking, further increasing the efficiency of instruction. This content analysis is based on the understanding that convection is a sameness, or big idea, that explains numerous specific earth science topics.
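One way to picture the generativity of this analysis is to state the five component relations once, as templates, and instantiate them for different phenomena. The Python sketch below does exactly that; the pairings of phenomenon, fluid, and heat source in `PHENOMENA` are illustrative assumptions, and the sketch says nothing about the horizontal flows that complete each circulation.

```python
# The five component relations of convection, stated once as templates.
CONVECTION = [
    "{fluid} near {source} is heated, expands, and becomes less dense",
    "the less dense {fluid} rises",
    "the rising {fluid} loses contact with {source} and cools",
    "the cooled {fluid} contracts and becomes denser",
    "the denser {fluid} descends",
]

def explain(fluid, source):
    """Instantiate the same five relations for a particular phenomenon."""
    return [step.format(fluid=fluid, source=source) for step in CONVECTION]

# Illustrative pairings (our own wording, not taken from a DI program).
PHENOMENA = {
    "water in a heated pot": ("water", "the burner"),
    "air in a room": ("air", "a warm radiator"),
}

for name, (fluid, source) in PHENOMENA.items():
    print(name)
    for line in explain(fluid, source):
        print("  -", line)
```

Adding a phenomenon means adding one pairing, not a new explanation, which mirrors how a single big idea serves many topics.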

Content Analysis in Basic Language

A significant accomplishment in early language development is learning to derive new responses based on verbal relations (Hayes et al., 2001; Skinner, 1957). A key early example of this repertoire is learning new names based on the word “is” and contextual features. For example, a caregiver could hold a kitchen implement and tell a child, “This is a cheese grater.” Children who have the ability to learn from this kind of instruction can learn many more names than those who have not gained this skill. Of course, “is” is not the only relation of this type. Relational frame theory (RFT) has identified numerous relations that allow derivation of new behavior (Hayes et al., 2001).

DI program designers also recognized the generative power of these basic verbal relations and built an early language skills curriculum around them (Engelmann & Osborn, 1976, 1999), a prime example of content analysis of the domain of basic language. If a child can be taught to respond to relations among stimuli that are signaled by verbal statements, this teaching has nearly infinite potential generativity. Once the relation comes under contextual control of verbal statements, a nearly infinite variety of such verbal statements can produce precisely tuned novel behavior without specific instruction (or with greatly reduced instruction).

A DI content analysis of the basic language domain includes teaching the types of relations identified in the experimental literature, including coordination, opposition, distinction, comparison, hierarchical, temporal, spatial, conditional, and deictic relations (Kelso, 2007). The DI program Language for Learning (Engelmann & Osborn, 1999; originally published in 1976 as DISTAR Language) teaches all of these types of relations, introducing them systematically and gradually across the program. Figure 4 shows the sequence of introduction of these types of relations in Language for Learning. For example, the deictic relations of I/you and now/then are taught early in the program and systematically applied to a wide variety of contexts throughout the program. The I/you discrimination is featured in exercises that juxtapose items in which the teacher asks, “Say the whole thing about what you are doing” and students respond, “I am touching the floor,” with items in which the teacher asks, “Say the whole thing about what I am doing” and students respond, “You are touching your ear.” (Of course, specific actions vary across exercises.) Later in the program, the temporal aspect of deictic relations is taught in exercises juxtaposing items that ask, “What will we do?,” “What are we doing?,” and “What were we doing before?” The content analysis is based on the samenesses, or big ideas, of coordination, distinction, temporal relations, and so on.
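The I/you relation is a perspective shift: the same event is described with swapped pronouns depending on who is speaking. The toy Python function below makes that transformation explicit. It is an illustration of the relation, not a model of how Language for Learning teaches it, and it assumes simple present-progressive sentences using only I, you, my, and your (object pronouns such as “me” are not handled).

```python
def shift_perspective(sentence):
    """Restate a sentence from the listener's point of view (I <-> you)."""
    words = sentence.rstrip(".").split()
    out = []
    for i, word in enumerate(words):
        w = word.lower()
        if w == "i":
            out.append("you")
        elif w == "you":
            out.append("I")
        elif w == "am":
            out.append("are")          # "I am" becomes "you are"
        elif w == "are" and i > 0 and words[i - 1].lower() == "you":
            out.append("am")           # "you are" becomes "I am"
        elif w == "my":
            out.append("your")
        elif w == "your":
            out.append("my")
        else:
            out.append(word)
    text = " ".join(out)
    return text[0].upper() + text[1:] + "."

print(shift_perspective("I am touching my ear."))       # You are touching your ear.
print(shift_perspective("You are touching the floor."))  # I am touching the floor.
```

Because the transformation ignores the action itself, it applies unchanged to any action the teacher performs, which is the generative point of teaching the relation rather than individual sentences.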

Content Analysis in Narrative Language

Narrative language is the domain of telling and understanding stories. It includes both nonfictional and fictional narratives and crosses the modalities of hearing, reading, and watching (e.g., movies/videos; Carnine et al., 2017; Hughes et al., 1997). The story grammar structure (Stein & Glenn, 1979) is a powerful content analysis in this domain. Story grammar describes the structural components that occur in narratives, much as sentence grammar describes the structural elements of a sentence. The most basic and frequently occurring story grammar elements are (1) setting, (2) character, (3) plot (which includes an initiating event, attempt, and consequence), and (4) resolution (Stein & Glenn, 1979). These story grammar elements are key to the content analysis of both creating and understanding narrative language. If students can respond discriminatively to these elements (e.g., tact them, describe the specifics of a particular element in a particular story), their ability to answer both immediate and delayed (recall) questions about novel stories is enhanced (Kim et al., 2018; Spencer et al., 2013; Spencer et al., 2015; Spencer et al., 2018). Thus, after learning the story grammar structure, students can benefit from every subsequent narrative that they hear, read, or view. Because story grammar can increase comprehension of any novel narrative, its cumulative effect grows with the number of narratives a learner encounters. Given that a student will read many thousands of narrative texts, the generativity of improved comprehension is very high indeed.

Students can be taught to construct narratives using the story grammar structure (see Spencer, this issue). They can learn the skill of including explicit settings, characters, plot elements, and resolutions in novel narratives that they generate (Favot et al., 2018). It is important to note that the generated narratives are not limited to fiction (Spencer & Slocum, 2010; Petersen et al., 2014; Weddle et al., 2016). This skill contributes to producing comprehensible accounts of actual events, an ability that has great social importance. Because story grammar skills can improve the effectiveness of any narrative that the learner generates, the generativity of teaching narrative construction with story grammar elements is a function of the number of narratives that the child generates across their lifetime. The big idea of this content analysis is the sameness across narratives: they all include settings, characters, plot, and resolution.
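Because every narrative shares the same slots, story grammar can be represented as a fixed data structure, and one set of checks or prompts then applies to any story. The Python sketch below assumes our own field names (plot is split into its three parts as listed above) and hypothetical prompt wording; it reports which elements a student's retell leaves out and returns a prompt for each.

```python
from dataclasses import dataclass, fields
from typing import Optional

@dataclass
class StoryGrammar:
    """One slot per story grammar element; plot is split into its three parts."""
    setting: Optional[str] = None
    character: Optional[str] = None
    initiating_event: Optional[str] = None
    attempt: Optional[str] = None
    consequence: Optional[str] = None
    resolution: Optional[str] = None

# Hypothetical prompts; the same set applies to any narrative.
PROMPTS = {
    "setting": "Where and when does the story happen?",
    "character": "Who is the story about?",
    "initiating_event": "What problem started things?",
    "attempt": "What did the character do about it?",
    "consequence": "What happened as a result?",
    "resolution": "How did it end?",
}

def missing_elements(story):
    """Names of elements the narrative leaves out (empty or None)."""
    return [f.name for f in fields(story) if not getattr(story, f.name)]

def prompts_for_missing(story):
    """Prompt the narrator for each missing element, in story order."""
    return [PROMPTS[name] for name in missing_elements(story)]

retell = StoryGrammar(
    setting="at the park after school",
    character="Maya",
    initiating_event="her kite got stuck in a tree",
    attempt="she asked her brother to help",
)
print(missing_elements(retell))  # ['consequence', 'resolution']
```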

Conclusion

The examples above illustrate the kinds of content analyses that drive the design of DI programs. Numerous other content analyses, including some with high levels of generativity (e.g., teaching students to decode words, fraction concepts and operations, logical writing structures), provide the foundation for DI programs. The examples given above are limited to academic instruction because published DI programs are concentrated in this area. Content analysis is equally relevant to other content typically taught to clients and students, such as social skills and functional skills, as well as content typically taught to professionals, such as instructional and behavior management repertoires.

Even these few examples include many different behavioral relations. As we mentioned earlier, the point of content analysis is not to demonstrate a particular type of relation or to favor one type of relation over others; the point is to discover the relations that enable optimal generativity of instruction. Instruction based on these content analyses may involve any behavioral relation and often involves a complex mix of them.

The most important messages about content analysis for behavior analysts are: (1) that it exists; (2) that it is the foundation for all subsequent instructional design and delivery; and (3) that it determines the upper limit of the generativity and efficiency possible in an instructional program. All of the critical subsequent steps of instructional design (e.g., selection and sequencing of examples, clear wording, designing teacher–student interactions, data-based decision making) are based on a content analysis. If the content analysis is poor, then generativity of the instruction will be minimal no matter how well the other factors are implemented. For example, if the content analysis of a reading program is based on words as individual units (i.e., sight words), the subsequent phases of instructional design may produce a program that helps students memorize individual words, but it will not be able to produce the broad generalization of a program with a phonic-based content analysis. If the content analysis is of high quality and the other factors are well implemented, then the instruction will be highly generative. A high-quality content analysis is necessary but not sufficient to produce generative instruction. The problem is that the content analysis is all too often tacit rather than explicit; it is too often built on an unexamined assumption that there is no important order in the content domain, or on a naïve understanding of the subject matter inherited from the culture at large. At other times, content analysis is based on existing instructional programs or textbooks without critical analysis from the point of view of generativity. Sometimes content analyses are derived from skill assessments, without identifying generative relations that could be taught to produce the repertoires sampled by the assessment. None of these approaches is likely to identify the most generative relations and optimize the generativity of instruction.

Content analysis is subtle; it is not superficially obvious in instructional programs. As a result, we are often unaware of the vast differences in content analyses across programs and of the importance of content analysis in designing programs. One extreme example is that many teachers and therapists are not aware of the implications of rote instruction in sight words (i.e., a content analysis that assumes there are no important generalizations in decoding words) compared with teaching phonics (i.e., a content analysis based on highly generative relations).

Ways to Conduct a Content Analysis

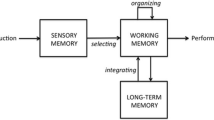

Behavior analysts can incorporate higher-quality content analysis in their instruction and thereby increase instructional efficiency and generativity. The process of content analysis involves an iterative cycle of logical and empirical analyses (see Figure 5). The logical analysis identifies potentially teachable relations that could produce broad learner repertoires; the empirical analysis tests whether these repertoires are actually produced by the instruction. Although we can describe broad strategies for the logical content analysis, there is no simple procedure that will reliably produce optimal results across all domains. Therefore, instructional designers must keep the function of content analysis front and center: to identify teachable relations (i.e., samenesses) across items or topics in the content domain that will support maximum generalization and instructional efficiency.
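The iterative cycle can be sketched as a loop that alternates a logical step (proposing a candidate analysis) with an empirical step (trying it with learners), keeping a candidate only when it is clearly more time-efficient than the best so far. This is a minimal Python sketch under our own assumptions: `propose` and `run_trial` are hypothetical callbacks standing in for the designer's judgment and for real classroom trials, the `min_gain` threshold is arbitrary, and the numbers in `PLACEHOLDER` are invented for illustration only and are not data.

```python
from dataclasses import dataclass

@dataclass
class Trial:
    analysis: str        # label for a candidate content analysis
    items_correct: int   # taught and untaught items performed correctly afterward
    minutes: float       # instructional time the trial required

    @property
    def efficiency(self):
        """Repertoire generated per minute of instruction."""
        return self.items_correct / self.minutes

def content_analysis_cycle(propose, run_trial, min_gain=0.05, max_rounds=10):
    """Alternate logical analysis (propose) and empirical analysis (run_trial)."""
    best = None
    for _ in range(max_rounds):
        analysis = propose(best)          # logical step may use the best result so far
        if analysis is None:              # designer has no further candidates
            break
        trial = run_trial(analysis)       # empirical step with learners
        # Keep a new analysis only if it is clearly more efficient.
        if best is None or trial.efficiency > best.efficiency * (1 + min_gain):
            best = trial
    return best

# Invented placeholder numbers for illustration only; these are not data.
PLACEHOLDER = {"whole words": (40, 60.0), "phonic elements": (300, 90.0)}
queue = list(PLACEHOLDER)
best = content_analysis_cycle(
    propose=lambda _best: queue.pop(0) if queue else None,
    run_trial=lambda analysis: Trial(analysis, *PLACEHOLDER[analysis]),
)
print(best.analysis)  # phonic elements
```

In practice the `propose` step is where the designer's expertise lives; passing it the best trial so far is meant to show that empirical results should inform the next round of logical analysis.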

The first step in content analysis is to engage with the content domain and generate possible analyses. This may involve some combination of three complementary tactics: collaborating with content experts, reading research in the content domain, and independently generating the analysis. Many instructional designers appear to be unaware that experts in content areas have often already developed remarkable content analyses, although they may describe them using unfamiliar technical terms. Each of the examples above is well known to experts in its respective content domain. Behavior analysts who are interested in developing generative instruction would do well to collaborate with experts who have deep knowledge of their content domains. In most cases, these experts will not be behavior analysts and will speak a very different language. However, in some cases, such as derived relational responding, powerful analyses of content domains have been developed by behavior analysts. The key is for the behavior analyst to focus on the relations that the experts describe rather than on the terms with which they describe them.

Examples of this collaboration between behavior analysts and content experts include the morphographic spelling content analysis and the story grammar analysis for understanding and generating narrative language. In the spelling analysis, a linguist familiar with morphographic structures in English (Dixon) collaborated with a DI developer (Engelmann) to produce Spelling Mastery (Dixon & Engelmann, 2006). In the narrative language analysis, a behavior analyst (Spencer) collaborated with a speech-language pathologist with a background in linguistics (Petersen) to create an effective instructional program, Story Champs (Spencer et al., 2015; Spencer, this issue).

A second tactic—closely related to the first—is to read research on the content domain. For example, extensive research on reading has identified powerful relations that can produce highly generative repertoires. These include the alphabetic principle (the generalized bidirectional relation between letters and sounds), phonological manipulation skills (e.g., rhyming, saying the first phoneme in a word, separately articulating the phonemes in a word), and sounding out (seeing letters, saying each phoneme, then saying the word; cf. Carnine et al., 2017; National Institute of Child Health & Human Development, 2000). A behavior analyst interested in designing beginning reading instruction could begin their content analysis by reading literature such as this.

A third tactic for obtaining a logical analysis of the content domain is to create it independently of experts in the domain and/or existing literature. The designer begins by explicitly identifying and describing the repertoire to be targeted. Next, they identify samenesses (i.e., shared features or big ideas) among the elements of the target repertoire. These samenesses might be described in behavioral terms as any of the behavioral relations that have been named and exemplified previously in this article. They might also be described in the language of instructional design using terms such as concepts, rules, strategies, and big ideas. In this task, all of these terms (the more technical and the less technical) function as prompts to help the designer identify potentially teachable relations.

There is no algorithm for identifying generative relations within a content area because each content area offers different possibilities and challenges; the examples provided above illustrate only a small fraction of that variety. Important samenesses may not be obvious from a superficial examination of the content area; for many domains, deeper content knowledge is necessary to identify these relations. Examples include the morphographic analysis of spelling and the story grammar analysis of narratives described above. Thus, the analyst must study the domain extensively and resist the temptation to assume that a superficial understanding of a content domain is all there is to know. Still, when combined with extensive study of the content domain, the behavior analyst's understanding of the various generative behavioral relations can serve as a powerful prompt.

Whether the instructional designer is collaborating with content experts, reading relevant research, studying the domain directly, or some combination of these tactics, the designer should generate numerous possible content analyses. These candidates should then be compared against the criterion of maximizing generativity, that is, producing the most learning from the smallest amount of teaching. This cycle of generating and evaluating possible analyses continues until the instructional designer is satisfied that a sufficiently generative logical content analysis has been identified.
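
The comparison against the generativity criterion can be made concrete for a toy spelling domain. The Python sketch below is an illustration under stated assumptions, not the authors' procedure: a candidate analysis is reduced to the set of spelling units it would teach, a target word counts as covered if it can be spelled by concatenating taught units, and generativity is approximated as words covered per unit taught. The word list, the `CandidateAnalysis` class, and the `can_build` and `generativity` helpers are all hypothetical. The sketch ignores the spelling changes that occur when some morphographs combine (e.g., hope + ing → hoping) and treats logical coverage as if it were learning, which only the empirical analysis can confirm.

```python
from dataclasses import dataclass


@dataclass
class CandidateAnalysis:
    """Hypothetical candidate content analysis: the units it would teach directly."""
    name: str
    units: frozenset[str]


def can_build(word: str, units: frozenset[str]) -> bool:
    """True if `word` can be spelled by concatenating taught units (word-break check)."""
    reachable = [True] + [False] * len(word)  # reachable[i]: word[:i] is buildable
    for end in range(1, len(word) + 1):
        reachable[end] = any(
            reachable[start] and word[start:end] in units
            for start in range(end)
        )
    return reachable[len(word)]


def generativity(analysis: CandidateAnalysis, targets: list[str]) -> float:
    """Target words covered per unit taught (higher means more generative)."""
    covered = sum(can_build(w, analysis.units) for w in targets)
    return covered / len(analysis.units)


# Hypothetical target repertoire, chosen only for illustration.
TARGET_WORDS = [
    "play", "plays", "played", "playing", "replay", "replayed",
    "work", "works", "worked", "working", "rework", "reworked",
]

candidates = [
    # Teach every word as its own unit.
    CandidateAnalysis("word by word", frozenset(TARGET_WORDS)),
    # Teach shared parts (bases, prefix, suffixes) once each.
    CandidateAnalysis("morphographic", frozenset({"re", "play", "work", "s", "ed", "ing"})),
]

for c in sorted(candidates, key=lambda c: generativity(c, TARGET_WORDS), reverse=True):
    print(f"{c.name}: {generativity(c, TARGET_WORDS):.1f} words per unit taught")
```

Running it ranks the morphographic candidate first (2.0 words per unit taught) and the word-by-word candidate second (1.0). In this toy, the gap widens as more base words share the same affixes, because each new base adds one unit to the morphographic candidate but several words to the target list.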

The logical analysis of the content domain (generating possible analyses and logically comparing them) is only part of the process of content analysis. The other component is empirical analysis: the designer must determine whether the relations can be taught to the relevant students efficiently enough that the logically identified relations actually produce generative outcomes. Whether teaching students a potentially generative relation is actually more time efficient than teaching simpler relations is an empirical question; we cannot assume that logical generativity will result in empirical generativity. The results of empirical studies may point to a need for additional logical analysis of the content domain.
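
The decision at the end of each empirical cycle can be sketched in the same way. In this Python sketch, which is an assumption-laden illustration rather than a method described in the article, efficiency is measured as correct responses on taught plus untaught probe items per minute of instruction. The `FieldTestResult` class, the `next_step` function, and all numbers are hypothetical; the numbers are invented for illustration and are not results from any study. A real evaluation would rely on an appropriate experimental design rather than a single comparison of two summaries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldTestResult:
    """Hypothetical summary of one instructional condition in a field test."""
    condition: str
    taught_correct: int    # taught items the students responded to correctly
    untaught_correct: int  # untaught probe items responded to correctly
    minutes: float         # total instructional time for the condition

    def learning_per_minute(self) -> float:
        """Correct items (taught plus untaught) per minute of instruction."""
        return (self.taught_correct + self.untaught_correct) / self.minutes


def next_step(generative: FieldTestResult, simpler: FieldTestResult) -> str:
    """Keep the analysis only if it out-produces the simpler condition per minute."""
    if generative.learning_per_minute() > simpler.learning_per_minute():
        return "keep analysis; refine instruction in further field tests"
    return "return to logical analysis"


# Invented numbers for illustration only; not data from any study.
relation = FieldTestResult("teach the generative relation",
                           taught_correct=10, untaught_correct=20, minutes=60.0)
items = FieldTestResult("teach items one at a time",
                        taught_correct=18, untaught_correct=2, minutes=50.0)
print(next_step(relation, items))
```

With these invented numbers the generative condition yields 0.5 correct items per minute versus 0.4, so the sketch keeps the analysis. If the same condition had needed 90 minutes (about 0.33 per minute), it would send the designer back to the logical analysis, which is why logical generativity alone does not settle the question.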

Content analysis is a critical element of instructional development (it places an upper limit on generativity), but it is only one component. To realize the potential that may be inherent in a generative content analysis, an elaborate process of instructional design must be carried out, and effective structures and processes for interacting with students must be developed. The concepts entailed by the content analysis must be analyzed in terms of variable and critical features (Johnson & Bulla, this issue), the juxtaposition of examples and nonexamples must be developed (Twyman, this issue), formats for communicating with learners must be written, systems for responding to student performance must be developed (Rolf & Slocum, this issue), and, of course, the whole instructional package must be empirically refined through field-test cycles, and the final program must be experimentally evaluated. Combining these activities with a generative content analysis, as DI does, is key to producing instruction that is effective and efficient for students with and without disabilities.

Notes

We use the term “generative” as Stokes and Baer (1977) used the term “generalization.” We prefer the former to avoid confusion with the narrower terms “stimulus generalization” and “response generalization.”

References

Carnine, D. (1991). Curricular interventions for teaching higher order thinking to all students: Introduction to the special series. Journal of Learning Disabilities, 24(5), 261–269. https://doi.org/10.1177/00222194910240050.

Carnine, D. W., Jones, E. D., & Dixon, R. C. (1994). Mathematics: Educational tools for diverse learners. School Psychology Review, 23(3), 406–427. https://doi.org/10.1080/02796015.1994.12085721.

Carnine, D. W., Silbert, J., Kame’enui, E. J., Slocum, T. A., & Travers, P. (2017). Direct Instruction reading (6th ed.). Pearson.

Coyne, M., Kame’enui, E. J., & Carnine, D. (Eds.). (2011). Effective teaching strategies that accommodate diverse learners. Pearson Prentice Hall.

Dixon, R. C. (1991). The application of sameness analysis to spelling. Journal of Learning Disabilities, 24(5), 285–291. https://doi.org/10.1177/002221949102400505.

Dixon, R. C., & Engelmann, S. (2001). Spelling through morphographs. SRA/McGraw-Hill.

Dixon, R. C., & Engelmann, S. (2006). Spelling mastery. McGraw-Hill.

Engelmann, S. (1969). Preventing failure in the primary grades. Simon & Schuster.

Engelmann, S., & Osborn, J. (1976). DISTAR language I and II. SRA.

Engelmann, S., & Osborn, J. (1999). Language for learning. SRA/McGraw-Hill.

Favot, K., Carter, M., & Stephenson, J. (2018). The effects of an oral narrative intervention on the fictional narrative retells of children with ASD and severe language impairment: A pilot study. Journal of Developmental & Physical Disabilities, 30(5), 615–637. https://doi.org/10.1007/s10882-018-9608-y.

Graham, S. (1999). Handwriting and spelling instruction for students with learning disabilities: A review. Learning Disability Quarterly, 22(2), 78–98. https://doi.org/10.2307/1511268.

Graham, S., Morphy, P., Harris, K. R., Fink-Chorzempa, B., Saddler, B., Moran, S., & Mason, S. (2008). Teaching spelling in the primary grades: A national survey of instructional practices and adaptations. American Educational Research Journal, 45(3), 796–825. https://doi.org/10.3102/0002831208319722.

Haas, M. E. (1991). An analysis of the social science and history concepts in elementary social studies textbooks, grades 1–4. Theory and Research in Social Education, 19, 211–220. https://doi.org/10.1080/00933104.1991.10505637.

Harniss, M., Hollenbeck, K., & Dickson, S. (2004). Content areas. In N. Marchand-Martella, T. Slocum, & R. Martella (Eds.), Introduction to Direct Instruction (pp. 246–279). Pearson.

Hayes, S. C., Barnes-Holmes, D., & Roche, B. (2001). Relational frame theory: A post-Skinnerian approach to language and cognition. Kluwer Academic/Plenum.

Herrnstein, R. J., Loveland, D. H., & Cable, C. (1976). Natural concepts in pigeons. Journal of Experimental Psychology: Animal Behavior Processes, 2(4), 285–302. https://doi.org/10.1037/0097-7403.2.4.285.

Hughes, D. L., McGillivray, L., & Schmidek, M. (1997). Guide to narrative language: Procedures for assessment. Thinking Publications.

National Institute of Child Health & Human Development. (2000). Report of the National Reading Panel: Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction (NIH Publication No. 00-4769). U.S. Government Printing Office.

Johnson, K. R. (2008). Morningside mathematics fluency: Math facts (Vols. 1–6) [Curriculum program]. Morningside Press.

Johnson, K., & Bulla, A. J. (this issue). Creating the components for teaching concepts. Behavior Analysis in Practice.

Kelso, G. L. (2007). Language for learning: A relational frame theory perspective. Paper presented at a symposium, Language for Learning, Children with Autism, and Relational Frame Theory (C. Watkins, chair), conducted at the Association of Behavior Analysis Convention, San Diego, CA.

Kim, S. Y., Rispoli, M., Lory, C., Gregori, E., & Brodhead, M. T. (2018). The effects of a shared reading intervention on narrative story comprehension and task engagement of students with autism spectrum disorder. Journal of Autism & Developmental Disorders, 48(10), 3608–3622. https://doi.org/10.1007/s10803-018-3633-7.

Nolet, V. W., Tindal, G., & Blake, G. (1993). Focus on assessment and learning in content classes (Training Module No. 4). University of Oregon, Research, Consultation, and Teaching Program.

Petersen, D. B., Brown, C. L., Ukrainetz, T. A., Wise, C., Spencer, T. D., & Zebre, J. (2014). Systematic individualized narrative language intervention on the personal narratives of children with autism. Language, Speech, & Hearing Services in Schools, 45, 67–86. https://doi.org/10.1044/2013_LSHSS-12-0099.

Poncy, B. C., McCallum, E., & Schmitt, A. J. (2010). A comparison of behavioral and constructivist interventions for increasing math-fact fluency in a second-grade classroom. Psychology in the Schools, 47(9), 917–930. https://doi.org/10.1002/pits.20514.

Post, Y. V., & Carreker, S. (2002). Orthographic similarity and phonological transparency in spelling. Reading & Writing: An Interdisciplinary Journal, 15, 317–340. https://doi.org/10.1023/A:1015213005350.

Rolf, K. R., & Slocum, T. A. (this issue). Features of Direct Instruction: Interactive lessons. Behavior Analysis in Practice.

Simonsen, F. L., & Dixon, R. (2004). Spelling. In N. Marchand-Martella, T. Slocum, & R. Martella (Eds.), Introduction to Direct Instruction (pp. 178–205). Pearson.

Skinner, B. F. (1957). Verbal behavior. Appleton-Century-Crofts.

Spencer, T. D. (this issue). Ten instructional design efforts to help behavior analysts take up the torch of Direct Instruction. Behavior Analysis in Practice.

Spencer, T. D., Kajian, M., Petersen, D. B., & Bilyk, N. (2013). Effects of an individualized narrative intervention on children’s storytelling and comprehension skills. Journal of Early Intervention, 35(3), 243–269. https://doi.org/10.1177/1053815114540002.

Spencer, T. D., Petersen, D. B., Slocum, T. A., & Allen, M. M. (2015). Large group narrative intervention in Head Start classrooms: Implications for response to intervention. Journal of Early Childhood Research, 13(2), 196–217. https://doi.org/10.1177/1476718X13515419.

Spencer, T. D., & Slocum, T. A. (2010). The effect of a narrative intervention on story retelling and personal story generation skills of preschoolers with risk factors and narrative language delays. Journal of Early Intervention, 32(3), 178–199. https://doi.org/10.1177/1053815110379124.

Spencer, T. D., Weddle, S. A., Petersen, D. B., & Adams, J. A. (2018). Multi-tiered narrative intervention for preschoolers: A Head Start implementation study. NHSA Dialog, 20(1), 1–28.

Stein, N. L., & Glenn, C. G. (1979). An analysis of story comprehension in elementary school children. In R. Freedle (Ed.), Multidisciplinary approaches to discourse processing (pp. 53–120). Ablex. https://doi.org/10.1353/lan.1980.0030.

Stein, M., Kinder, D., Rolf, K., Silbert, J., & Carnine, D. W. (2018). Direct Instruction mathematics (5th ed.). Pearson.

Stokes, T. F., & Baer, D. M. (1977). An implicit technology of generalization. Journal of Applied Behavior Analysis, 10(2), 349–367. https://doi.org/10.1901/jaba.1977.10-349.

Twyman, J. S. (this issue). You have the big idea, concept, and some examples… now what? Behavior Analysis in Practice.

Watkins, C. L., & Slocum, T. A. (2004). The components of Direct Instruction. In N. Marchand-Martella, T. Slocum, & R. Martella (Eds.), Introduction to Direct Instruction (pp. 28–65). Pearson.

Weddle, S. A., Spencer, T. D., Kajian, M., & Petersen, D. B. (2016). An examination of a multitiered system of language support for culturally and linguistically diverse preschoolers: Implications for early and accurate identification. School Psychology Review, 45(1), 109–132. https://doi.org/10.17105/SPR45-1.109-132.

Funding

Production of this manuscript was supported in part by a grant from the Department of Education, H325D170080.

Ethics declarations

Conflicts of Interest/Competing Interests

Dr. Slocum is a co-author of the textbook Direct Instruction Reading. Ms. Rolf is a co-author of the textbook Designing Effective Mathematics Instruction: A Direct Instruction Approach.

Data Availability

Not applicable

Code availability

Not applicable

About this article

Cite this article

Slocum, T.A., Rolf, K.R. Features of Direct Instruction: Content Analysis. Behav Analysis Practice 14, 775–784 (2021). https://doi.org/10.1007/s40617-021-00617-0

DOI: https://doi.org/10.1007/s40617-021-00617-0