Abstract

In this paper, we study the direct/indirect stability of locally coupled wave equations with local Kelvin-Voigt dampings/damping, where we assume that the supports of the dampings and the coupling coefficients are disjoint. First, we prove the well-posedness, strong stability, and polynomial stability for some one dimensional coupled systems. Moreover, under some geometric control conditions, we prove the well-posedness and strong stability in the multi-dimensional case.

1 Introduction

The direct and indirect stability of locally coupled wave equations with local damping has attracted much interest in recent years. The study of coupled systems is also motivated by several physical considerations, such as Timoshenko and Bresse systems (see for instance Wehbe and Ghader 2021; Bassam et al. 2015; Akil et al. 2020, 2021; Akil and Badawi 2022; Abdallah et al. 2018; Fatori et al. 2014; Fatori and Monteiro 2012). The exponential or polynomial stability of the wave equation with local Kelvin-Voigt damping is considered in Liu and Rao (2006), Tebou (2016), and Burq and Sun (2022), for instance. On the other hand, the direct and indirect stability of locally coupled wave equations with local viscous dampings is analyzed in Alabau-Boussouira and Léautaud (2013), Kassem et al. (2019), and Gerbi et al. (2021). In this paper, we are interested in locally coupled wave equations with local Kelvin-Voigt dampings. Before stating our main contributions, let us mention similar results for such systems. Hayek et al. (2020) studied the stabilization of a multi-dimensional system of weakly coupled wave equations with one or two local Kelvin-Voigt dampings and non-smooth coefficients at the interface. They established different stability results. Wehbe et al. (2021) studied the stability of an elastic/viscoelastic transmission problem of locally coupled waves with non-smooth coefficients, by considering:

where \(a, b_0, L >0\), \(c_0 \ne 0\), and \(0<\alpha _1<\alpha _2<\alpha _3<\alpha _4<L\). They established a polynomial energy decay rate of type \(t^{-1}\). In the same year, Akil et al. (2021) studied the stability of singular local interaction elastic/viscoelastic coupled wave equations with time delay, by considering:

where \(a, \kappa _1, L>0\), \(\kappa _2, c_0 \ne 0\), and \(0<\alpha<\beta<\gamma <L\). They proved that the energy of their system decays polynomially as \(t^{-1}\). Akil et al. (2021) also studied the stability of coupled wave models with local memory in a past history framework via non-smooth coefficients on the interface, by considering:

where \(a, b_0, L >0\), \(c_0 \ne 0\), \(0<\alpha<\beta<\gamma <L\), and \(g:[0,\infty ) \longmapsto (0,\infty )\) is the convolution kernel function. They established an exponential energy decay rate if the two waves have the same speed of propagation. In the case of different speeds of propagation, they proved that the energy of their system decays polynomially with rate \(t^{-1}\). Akil et al. (2022) studied the stability of a multi-dimensional elastic/viscoelastic transmission problem with Kelvin-Voigt damping and non-smooth coefficient at the interface; they established some polynomial stability results under some geometric control condition. In the previous literature, the authors deal with locally coupled wave equations with local damping under the assumption that the damping and coupling regions intersect. The aim of this paper is to study the direct/indirect stability of locally coupled wave equations with Kelvin-Voigt dampings/damping localized via non-smooth coefficients/coefficient, assuming that the supports of the dampings and coupling coefficients are disjoint. In the first part of this paper, we consider the following one dimensional coupled system:

with fully Dirichlet boundary conditions,

and the following initial conditions

In this part, for all \(b_0, d_0 >0\) and \(c_0 \ne 0\), we treat the following three cases:

Case 1 (See Figure 1):

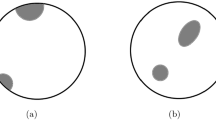

Case 2 (See Figure 2):

Case 3 (See Figure 3):

While in the second part, we consider the following multi-dimensional coupled system:

with full Dirichlet boundary condition

and the following initial condition

where \(\Omega \subset \mathbb R^N\), \(N\ge 2\) is an open and bounded set with boundary \(\Gamma \) of class \(C^2\). Here, \(b,c\in L^{\infty }(\Omega )\) are such that \(b:\Omega \rightarrow \mathbb R_+\) is the viscoelastic damping coefficient, \(c:\Omega \rightarrow \mathbb R\) is the coupling function and

and

In the first part of this paper, we study the direct and indirect stability of system (1.1)-(1.4) by considering the three cases (C1), (C2), and (C3). In Sect. 2.1, we prove the well-posedness of our system by using a semigroup approach. In Sect. 2.2, by using the general criteria of Arendt-Batty, we prove the strong stability of our system in the absence of the compactness of the resolvent. Finally, in Sect. 2.3, by using a frequency domain approach combined with a specific multiplier method, we prove that the energy of our system decays polynomially with rate \(t^{-4}\) or \(t^{-1}\).

In the second part of this paper, we study the indirect stability of System (1.5)-(1.8). In Sect. 3.1, we prove the well-posedness of our system by using a semigroup approach. Finally, in Sect. 3.2, under some geometric control condition, we prove the strong stability of this system.

2 Direct and indirect stability in the one dimensional case

In this section, we study the well-posedness, strong stability, and polynomial stability of system (1.1)-(1.4).

2.1 Well-posedness

In this section, we establish the well-posedness of system (1.1)-(1.4) using a semigroup approach. The energy of system (1.1)-(1.4) is given by

Let \(\left( u,u_{t},y,y_{t}\right) \) be a regular solution of (1.1)-(1.4). Multiplying (1.1) and (1.2) by \(\overline{u_t}\) and \(\overline{y_t}\), respectively, then using the boundary conditions in (1.3), we get

Thus, if (C1) or (C2) or (C3) holds, we get \(E^\prime (t)\le 0\). Therefore, system (1.1)-(1.4) is dissipative in the sense that its energy is non-increasing with respect to time t. Let us define the energy space \({\mathcal {H}}\) by

The energy space \({\mathcal {H}}\) is equipped with the following inner product:

for all \(U=\left( u,v,y,z\right) ^\top \) and \(U_1=\left( u_1,v_1,y_1,z_1\right) ^\top \) in \({\mathcal {H}}\). We define the unbounded linear operator \({\mathcal {A}}: D\left( {\mathcal {A}}\right) \subset {\mathcal {H}}\longrightarrow {\mathcal {H}}\) by

and

Now, if \(U=(u,u_t,y,y_t)^\top \) is the state of system (1.1)-(1.4), then it is transformed into the following first-order evolution equation:

where \(U_0=(u_0,u_1,y_0,y_1)^\top \in \mathcal H\).
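For orientation, the computation behind \(E^\prime (t)\le 0\) can be sketched as follows. The displays (1.1)-(1.2) are not reproduced here, so this sketch assumes the form suggested by the multipliers used in Sect. 2.3 (which involve \(au_x+bv_x\) and a coupling through \(c\)), with \(b,d\ge 0\); it should be read as an illustration under that assumption rather than as the exact statement of the system:

\[ u_{tt}-\left( au_x+b(x)u_{tx}\right) _x+c(x)y_t=0,\qquad y_{tt}-\left( y_x+d(x)y_{tx}\right) _x-c(x)u_t=0\quad \text {in}\ (0,L)\times (0,\infty ). \]

Multiplying by \(\overline{u_t}\) and \(\overline{y_t}\), integrating by parts over \((0,L)\) with the Dirichlet conditions, and taking the real part, the coupling terms cancel because \(\Re \left( c\,y_t\overline{u_t}-c\,u_t\overline{y_t}\right) =0\), which leaves

\[ \frac{d}{dt}\,\frac{1}{2}\int _0^L\left( |u_t|^2+a|u_x|^2+|y_t|^2+|y_x|^2\right) dx=-\int _0^L b\,|u_{tx}|^2dx-\int _0^L d\,|y_{tx}|^2dx\le 0. \]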

Proposition 2.1

If (C1), (C2), or (C3) holds, then the unbounded linear operator \(\mathcal A\) is m-dissipative in the Hilbert space \(\mathcal H\).

Proof

For all \(U=(u,v,y,z)^{\top }\in D({\mathcal {A}})\), we have

which implies that \({\mathcal {A}}\) is dissipative. Now, similar to Proposition 2.1 in Wehbe et al. (2021), we can prove that there exists a unique solution \(U=(u,v,y,z)^{\top }\in D({\mathcal {A}})\) of

Then \(0\in \rho ({\mathcal {A}})\) and \({\mathcal {A}}\) is an isomorphism. Since \(\rho ({\mathcal {A}})\) is open in \({\mathbb {C}}\) (see Theorem 6.7 (Chapter III) in Kato 1995), we easily get \(R(\lambda I -{\mathcal {A}}) = {{\mathcal {H}}}\) for a sufficiently small \(\lambda >0 \). This, together with the dissipativeness of \({\mathcal {A}}\), implies that \(D\left( {\mathcal {A}}\right) \) is dense in \({{\mathcal {H}}}\) and that \({\mathcal {A}}\) is m-dissipative in \({{\mathcal {H}}}\) (see Theorems 4.5, 4.6 in Pazy 1983). \(\square \)
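The dissipativity step can be illustrated numerically. The following is a minimal sketch, not the authors' method: it assumes the system has the form \(u_{tt}-(au_x+bu_{tx})_x+cy_t=0\), \(y_{tt}-(y_x+dy_{tx})_x-cu_t=0\), discretizes it with a staggered finite-difference grid, restricts to real-valued states, and checks that the discrete analogue of \(\left\langle {\mathcal {A}}U,U\right\rangle _{{\mathcal {H}}}\) equals minus the discrete Kelvin-Voigt dissipation. All function names, the interval positions, and the coefficient values are hypothetical choices for a (C1)-like layout.

```python
import random

def make_coeffs(n, L=1.0):
    """Piecewise-constant coefficients for a (C1)-like layout (hypothetical values)."""
    h = L / (n + 1)
    b1, b2, c1, c2, d1, d2 = 0.1, 0.2, 0.4, 0.6, 0.8, 0.9  # illustrative positions
    b0, c0, d0, a = 1.0, 2.0, 1.5, 1.0                     # illustrative sizes
    mid = [(j + 0.5) * h for j in range(n + 1)]             # edge midpoints
    node = [(j + 1) * h for j in range(n)]                  # interior nodes
    b = [b0 if b1 < x < b2 else 0.0 for x in mid]           # damping of u
    d = [d0 if d1 < x < d2 else 0.0 for x in mid]           # damping of y
    c = [c0 if c1 < x < c2 else 0.0 for x in node]          # coupling
    return h, a, b, c, d

def grad(w, h):
    """Forward differences on edges, with zero Dirichlet values at both ends."""
    ext = [0.0] + list(w) + [0.0]
    return [(ext[j + 1] - ext[j]) / h for j in range(len(ext) - 1)]

def div(q, h):
    """Differences of edge fluxes, evaluated at the interior nodes."""
    return [(q[j + 1] - q[j]) / h for j in range(len(q) - 1)]

def apply_A(U, h, a, b, c, d):
    """Discrete analogue of A(u, v, y, z) for the assumed system."""
    u, v, y, z = U
    ux, vx, yx, zx = grad(u, h), grad(v, h), grad(y, h), grad(z, h)
    flux_u = [a * p + bj * q for p, q, bj in zip(ux, vx, b)]
    flux_y = [p + dj * q for p, q, dj in zip(yx, zx, d)]
    A2 = [f - cj * zj for f, cj, zj in zip(div(flux_u, h), c, z)]
    A4 = [f + cj * vj for f, cj, vj in zip(div(flux_y, h), c, v)]
    return (list(v), A2, list(z), A4)

def inner(U, W, h, a):
    """Discrete energy inner product (real-valued states only)."""
    u, v, y, z = U
    u1, v1, y1, z1 = W
    s = sum(a * p * q for p, q in zip(grad(u, h), grad(u1, h)))
    s += sum(p * q for p, q in zip(v, v1))
    s += sum(p * q for p, q in zip(grad(y, h), grad(y1, h)))
    s += sum(p * q for p, q in zip(z, z1))
    return h * s

def dissipation(U, h, b, d):
    """Discrete version of int b |v_x|^2 + int d |z_x|^2."""
    _, v, _, z = U
    return h * (sum(bj * p * p for bj, p in zip(b, grad(v, h)))
                + sum(dj * p * p for dj, p in zip(d, grad(z, h))))

def check(n=50, trials=5, seed=0):
    """Largest relative mismatch between <AU, U> and -dissipation over random states."""
    rng = random.Random(seed)
    h, a, b, c, d = make_coeffs(n)
    worst = 0.0
    for _ in range(trials):
        U = tuple([rng.uniform(-1, 1) for _ in range(n)] for _ in range(4))
        lhs = inner(apply_A(U, h, a, b, c, d), U, h, a)
        rhs = -dissipation(U, h, b, d)
        worst = max(worst, abs(lhs - rhs) / (1.0 + abs(rhs)))
    return worst
```

Calling `check()` returns the worst relative mismatch over a few random states; it should be at rounding level, because discrete summation by parts reproduces the continuous integration by parts exactly and the coupling terms cancel pointwise.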

According to the Lumer–Phillips theorem (see Pazy 1983), the operator \(\mathcal A\) generates a \(C_{0}\)-semigroup of contractions \(e^{t\mathcal A}\) in \(\mathcal H\), which gives the well-posedness of (2.1). Then, we have the following result:

Theorem 2.2

For all \(U_0 \in \mathcal H\), system (2.1) admits a unique weak solution

Moreover, if \(U_0 \in D(\mathcal A)\), then the system (2.1) admits a unique strong solution

2.2 Strong stability

In this section, we will prove the strong stability of system (1.1)-(1.4). We define the following conditions:

or

The main result of this part is the following theorem:

Theorem 2.3

Assume that (SSC1) or (C2) or (SSC3) holds. Then, the \(C_0\)-semigroup of contractions \(\left( e^{t{\mathcal {A}}}\right) _{t\ge 0}\) is strongly stable in \({\mathcal {H}}\); i.e. for all \(U_0\in {\mathcal {H}}\), the solution of (2.1) satisfies

According to Theorem A.2, to prove Theorem 2.3, we need to prove that the operator \(\mathcal A\) has no pure imaginary eigenvalues and that \(\sigma (\mathcal A)\cap i\mathbb R\) is countable. The proof is divided into the following lemmas:

Lemma 2.4

Assume that (SSC1) or (C2) or (SSC3) holds. Then, for all \({\lambda }\in {\mathbb {R}}\), \(i{\lambda }I-{\mathcal {A}}\) is injective, i.e.

Proof

From Proposition 2.1, we have \(0\in \rho ({\mathcal {A}})\). We still need to show the result for \({\lambda }\in \mathbb R^{*}\). For this aim, suppose that there exists a real number \({\lambda }\ne 0\) and \(U=\left( u,v,y,z\right) ^\top \in D(\mathcal A)\) such that

Equivalently, we have

Next, a straightforward computation gives

Inserting (2.2) and (2.4) in (2.3) and (2.5), we get

with the boundary conditions

\(\bullet \) Case 1: Assume that (SSC1) holds. From (2.2), (2.4), and (2.6), we deduce that

Using (2.7), (2.8), and (2.10), we obtain

Differentiating the above equations with respect to x and using (2.10), we get

Using the unique continuation theorem, we get

Using (2.13) and the fact that \(u(0)=y(L)=0\), we get

Now, our aim is to prove that \(u=y=0 \ \text {in} \ (c_1,c_2)\). For this aim, using (2.14) and the fact that \(u, y\in C^1([0,L])\), we obtain the following boundary conditions:

Multiplying (2.7) by \(-2(x-c_2){\overline{u}}_x\), integrating over \((c_1,c_2)\) and taking the real part, we get

using integration by parts and (2.15), we get

Multiplying (2.8) by \(-2(x-c_1){\overline{y}}_x\), integrating over \((c_1,c_2)\), taking the real part, and using the same argument as above, we get

Adding (2.17) and (2.18), we get

Using Young’s inequality in (2.19), we get

consequently, we get

Thus, from the above inequality and (SSC1), we get

Next, we need to prove that \(u=0\) in \((c_2,L)\) and \(y=0\) in \((0,c_1)\). For this aim, from (2.22) and the fact that \(u,y \in C^1([0,L])\), we obtain

It follows from (2.7), (2.8) and (2.23) that

The Holmgren uniqueness theorem yields

Therefore, from (2.2), (2.4), (2.14), (2.22) and (2.25), we deduce that

\(\bullet \) Case 2: Assume that (C2) holds. From (2.2), (2.4) and (2.6), we deduce that

Using (2.7), (2.8) and (2.26), we obtain

Differentiating the above equations with respect to x and using (2.26), we get

Using the unique continuation theorem, we get

From (2.29) and the fact that \(u(0)=y(0)=0\), we get

Using the fact that \(u,y\in C^1([0,L])\) and (2.30), we get

Now, using the definition of c(x) in (2.7)-(2.8), (2.26) and (2.31), we get

Again, using the fact that \(u,y\in C^1([0,L])\), we get

Now, using the same argument as in Case 1, we obtain

consequently, we deduce that

\(\bullet \) Case 3: Assume that (SSC3) holds. Using the same argument as in Cases 1 and 2, we obtain

Step 1. The aim of this step is to prove that

For this aim, multiplying (2.7) by \({\overline{y}}\) and (2.8) by \({\overline{u}}\), then integrating by parts over (0, L) and using (2.6), we get

Adding (2.35) and (2.36), taking the imaginary part, we get (2.34).

Step 2. Multiplying (2.7) by \(-2(x-c_2){\overline{u}}_x\), integrating over \((c_1,c_2)\) and taking the real part, we get

using integration by parts in (2.37) and (2.33), we get

Using Young’s inequality in (2.38), we obtain

Inserting (2.34) in (2.39), we get

According to (SSC3) and (2.34), we get

Step 3. Using the fact that \(u\in H^2(c_1,c_2)\subset C^1([c_1,c_2])\), we get

Now, from (2.7), (2.8) and the definition of c, we get

From the above systems and the Holmgren uniqueness theorem, we get

Consequently, using (2.33), (2.41) and (2.43), we get \(U=0\). The proof is thus completed. \(\square \)

Lemma 2.5

Assume that (SSC1) or (C2) or (SSC3) holds. Then, for all \(\lambda \in {\mathbb {R}}\), we have

Proof

See Lemma 2.5 in Wehbe et al. (2021) (see also Akil et al. 2021). \(\square \)

Proof of Theorem 2.3

From Lemma 2.4, we obtain that the operator \({\mathcal {A}}\) has no pure imaginary eigenvalues (i.e. \(\sigma _p(\mathcal A)\cap i\mathbb R=\emptyset \)). Moreover, from Lemma 2.5 and with the help of the closed graph theorem of Banach, we deduce that \(\sigma (\mathcal A)\cap i\mathbb R=\emptyset \). Therefore, according to Theorem A.2, we get that the \(C_0\)-semigroup \((e^{t\mathcal A})_{t\ge 0}\) is strongly stable. The proof is thus complete. \(\square \)

2.3 Polynomial stability

In this section, we study the polynomial stability of system (1.1)-(1.4). Our main results in this part are the following theorems:

Theorem 2.6

Assume that (SSC1) holds. Then, for all \(U_0 \in D(\mathcal A)\), there exists a constant \(C>0\) independent of \(U_0\) such that

Theorem 2.7

Assume that (SSC3) holds. Then, for all \(U_0 \in D(\mathcal A)\), there exists a constant \(C>0\) independent of \(U_0\) such that

According to Theorem A.3, the polynomial energy decays (2.44) and (2.45) hold if the following conditions

and

are satisfied. Condition (\(H_1\)) is already proved in Sect. 2.2, so we still need to prove (\(H_2\)). We prove it by a contradiction argument. To this aim, suppose that (\(H_2\)) is false; then there exists

with

such that

For simplicity, we drop the index n. Equivalently, from (2.47), we have

2.3.1 Proof of Theorem 2.6

In this section, we will prove Theorem 2.6 by checking the condition (\(H_2\)). For this aim, we will find a contradiction with (2.46) by showing \(\Vert U\Vert _{{\mathcal {H}}}=o(1)\). For clarity, we divide the proof into several Lemmas. By taking the inner product of (2.47) with U in \({\mathcal {H}}\), we remark that

Thus, from the definitions of b and d, we get

Using (2.48), (2.50), (2.52), and the fact that \(f_1,f_3\rightarrow 0\) in \(H_0^1(0,L)\), we get

Lemma 2.8

The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimations

Proof

We give the proof of the first estimation in (2.54), the second one can be done in a similar way. For this aim, we fix \(g\in C^1\left( [b_1,b_2]\right) \) such that

The proof is divided into several steps as follows:

Step 1. The goal of this step is to prove that

From (2.48), we deduce that

Multiplying (2.56) by \(2g{\overline{v}}\) and integrating over \((b_1,b_2)\), then taking the real part, we get

Using integration by parts in the left-hand side of the above equation, we get

Consequently, we get

Using Young’s inequality, we obtain

From the above inequalities, (2.57) becomes

Inserting (2.53) in (2.58) and the fact that \(f_1 \rightarrow 0\) in \(H^1_0(0,L)\), we get (2.55).

Step 2. The aim of this step is to prove that

Multiplying (2.49) by \(-2g\left( \overline{au_x+bv_x}\right) \), integrating by parts over \((b_1,b_2)\) and taking the real part, we get

consequently, we get

By Young’s inequality, (2.52), and (2.53), we have

Inserting (2.61) in (2.60), then using (2.52), (2.53) and the fact that \(f_2 \rightarrow 0\) in \(L^2(0,L)\), we get (2.59).

Step 3. The aim of this step is to prove the first estimation in (2.54). For this aim, multiplying (2.49) by \(-i{\lambda }^{-1}{\overline{v}}\), integrating over \((b_1,b_2)\) and taking the real part, we get

Using (2.52), (2.53), the fact that v is uniformly bounded in \(L^2(0,L)\) and \(f_2\rightarrow 0\) in \(L^2(0,L)\), and Young’s inequalities, we get

Inserting (2.55) and (2.59) in (2.63), we get

which implies that

Using the fact that \({\lambda }\rightarrow \infty \), we can take \({\lambda }> 4m_{g'}^2\). Then, we obtain the first estimation in (2.54). Similarly, we can obtain the second estimation in (2.54). The proof has been completed. \(\square \)

Lemma 2.9

The solution \(U\in D(\mathcal A)\) of system (2.48)-(2.51) satisfies the following estimations

Proof

First, let \(h\in C^1([0,c_1])\) such that \(h(0)=h(c_1)=0\). Multiplying (2.49) by \(2a^{-1}h\overline{(au_x+bv_x)}\), integrating over \((0,c_1)\), using integration by parts and taking the real part, then using (2.52), the fact that \(u_x\) is uniformly bounded in \(L^2(0,L)\) and \(f_2 \rightarrow 0\) in \(L^2(0,L)\), we get

From (2.48), we have

Inserting (2.67) in (2.66), using integration by parts, then using (2.52), (2.54), and the fact that \(f_1 \rightarrow 0 \) in \(H^1_0 (0,L)\) and v is uniformly bounded in \(L^2 (0,L)\), we get

Now, we consider the following cut-off functions \(p_1,p_2\in C^1([0,b_2])\), such that

Finally, take \(h(x)=xp_1(x)+(x-c_1)p_2(x)\) in (2.68) and using (2.52), (2.53), (2.54), we get the first estimation in (2.65). By using the same argument, we can obtain the second estimation in (2.65). The proof is thus completed. \(\square \)

Lemma 2.10

The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimations

Proof

First, from (2.48) and (2.49), we deduce that

Multiplying (2.70) by \(2(x-b_2){\bar{u}}_x\), integrating over \((b_2,c_1)\) and taking the real part, then using the fact that \(u_x\) is uniformly bounded in \(L^2(0,L)\) and \(f_2 \rightarrow 0\) in \(L^2(0,L)\), we get

Remark that from (2.65) and (2.48), we get

Using integration by parts in (2.71), then using the above estimations, and the fact that \(f_1\rightarrow 0\) in \(H_0^1(0,L)\) and \({\lambda }u\) is uniformly bounded in \(L^2(0,L)\), we get

consequently, by using Young’s inequality, we get

Then, we get

Finally, from the above estimation and the fact that \(f_1 \rightarrow 0\) in \(H^1_0 (0,L)\), we get the first two estimations in (2.69). By using the same argument, we can obtain the last two estimations in (2.69). The proof has been completed. \(\square \)

Lemma 2.11

The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimation

Proof

Inserting (2.48) and (2.50) in (2.49) and (2.51), we get

Multiplying (2.75) by \(2(x-c_2)\overline{u_x}\) and (2.76) by \(2(x-c_1)\overline{y_x}\), integrating over \((c_1,c_2)\) and taking the real part, then using the fact that \(\Vert F\Vert _\mathcal H=o(1)\) and \(\Vert U\Vert _\mathcal H=1\), we obtain

and

Using integration by parts, (2.69), and the fact that \(f_1, f_3 \rightarrow 0\) in \(H^1_0(0,L)\), \(\Vert u\Vert _{L^2(0,L)}=O({\lambda }^{-1})\), and \(\Vert y\Vert _{L^2(0,L)}=O({\lambda }^{-1})\), we deduce that

Inserting (2.79) in (2.77) and (2.78), then using integration by parts and (2.69), we get

Adding (2.80) and (2.81), we get

Applying Young’s inequalities, we get

Finally, using (SSC1), we get the desired result. The proof has been completed. \(\square \)

Lemma 2.12

The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimations

Proof

Using the same argument of Lemma 2.9, we obtain (2.83). \(\square \)

Proof of Theorem 2.6. Using (2.53), Lemmas 2.8, 2.9, 2.11, 2.12, we get \(\Vert U\Vert _{{\mathcal {H}}}=o(1)\), which contradicts (2.46). Consequently, condition (\(H_2\)) holds. This implies the energy decay estimation (2.44).
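The link between the frequency-domain estimate and the decay rate can be summarized as follows. This is a sketch which assumes that Theorem A.3 is the standard characterization of Borichev and Tomilov for bounded \(C_0\)-semigroups on a Hilbert space with \(i{\mathbb {R}}\subset \rho ({\mathcal {A}})\), and that (\(H_2\)) is a resolvent bound with a growth exponent denoted here by \(\ell >0\) (a symbol introduced only for this illustration):

\[ \limsup _{|\lambda |\rightarrow \infty }\ |\lambda |^{-\ell }\left\| (i\lambda I-{\mathcal {A}})^{-1}\right\| _{{\mathcal {L}}({\mathcal {H}})}<\infty \quad \Longrightarrow \quad E(t)\le \frac{C}{t^{2/\ell }}\Vert U_0\Vert ^2_{D({\mathcal {A}})},\quad t>0. \]

Under this reading, the rate \(t^{-4}\) of Theorem 2.6 would correspond to \(\ell =\frac{1}{2}\), and the rate \(t^{-1}\) of Theorem 2.7 to \(\ell =2\).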

2.3.2 Proof of Theorem 2.7

In this section, we will prove Theorem 2.7 by checking the condition (\(H_2\)), that is by finding a contradiction with (2.46) by showing \(\Vert U\Vert _{{\mathcal {H}}}=o(1)\). For clarity, we divide the proof into several Lemmas. By taking the inner product of (2.47) with U in \({\mathcal {H}}\), we remark that

Then,

Using (2.48) and (2.84), and the fact that \(f_1 \rightarrow 0\) in \(H^1_0(0,L)\), we get

Lemma 2.13

Let \(0<\varepsilon <\frac{b_2-b_1}{2}\); the solution \(U\in D({\mathcal {A}})\) of the system (2.48)−(2.51) satisfies the following estimation

Proof

First, we fix a cut-off function \(\theta _1\in C^{1}([0,c_1])\) such that

Multiplying (2.49) by \({\lambda }^{-1}\theta _1 {\overline{v}}\), integrating over \((0,c_1)\), using integration by parts, and the fact that \(f_2 \rightarrow 0\) in \(L^2(0,L)\) and v is uniformly bounded in \(L^2(0,L)\), we get

Using (2.84), (2.85), the fact that \(\Vert U\Vert _{{\mathcal {H}}}=1\), and the definition of \(\theta _1\), we get

Inserting the above estimation in (2.88), we get the desired result (2.86). The proof has been completed. \(\square \)

Lemma 2.14

The solution \(U\in D({\mathcal {A}})\) of the system (2.48)−(2.51) satisfies the following estimation:

Proof

Let \(h\in C^1([0,c_1])\) such that \(h(0)=h(c_1)=0\). Multiplying (2.49) by \(2h\overline{(u_x+bv_x)}\), integrating over \((0,c_1)\) and taking the real part, then using integration by parts, (2.84), the fact that \(u_x\) is uniformly bounded in \(L^2(0,L)\), and the fact that \(f_2 \rightarrow 0\) in \(L^2(0,L)\), we get

Using (2.84) and the fact that v is uniformly bounded in \(L^2(0,L)\), we get

From (2.48), we have

Inserting (2.92) in (2.91), using integration by parts, the facts that v is uniformly bounded in \(L^2(0,L)\), and \(f_1 \rightarrow 0\) in \(H^1_0(0,L)\), we get

Inserting (2.93) in (2.90), we obtain

Now, we fix the following cut-off functions:

Taking \(h(x)=x\theta _2(x)+(x-c_1)\theta _3(x)\) in (2.94), then using (2.84) and (2.85), we get

Finally, from (2.85), (2.86) and (2.95), we get the desired result (2.89). The proof has been completed. \(\square \)

Lemma 2.15

The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimations

Proof

First, using the same argument of Lemma 2.10, we claim (2.96). Inserting (2.48), (2.50) in (2.49) and (2.51), we get

Multiplying (2.98) and (2.99) by \({\lambda }{\overline{y}}\) and \({\lambda }{\overline{u}}\), respectively, integrating over (0, L), then using integration by parts, (2.84), the fact that \(\Vert U\Vert _\mathcal H=1\) and \(\Vert F\Vert _\mathcal H=o(1)\), we get

Adding (2.100), (2.101), then taking the imaginary parts, we get the desired result (2.97). The proof is thus completed. \(\square \)

Lemma 2.16

The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimations:

Proof

First, multiplying (2.98) by \(2(x-c_2){\bar{u}}_x\), integrating over \((c_1,c_2)\) and taking the real part, using the fact that \(\Vert U\Vert _\mathcal H=1\) and \(\Vert F\Vert _\mathcal H=o(1)\), we get

Using integration by parts in (2.103) with the help of (2.96), we get

Applying Young’s inequality in (2.104), we get

Using (2.97) in (2.105), we get

Finally, from the above estimation, (SSC3) and (2.97), we get the desired result (2.102). The proof has been completed. \(\square \)

Lemma 2.17

Let \(0<\delta <\frac{c_2-c_1}{2}\). The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimations:

Proof

First, we fix a cut-off function \(\theta _4\in C^1([0,L])\) such that

Multiplying (2.99) by \(\theta _4{\bar{y}}\), integrating over (0, L), then using integration by parts, \(\Vert F\Vert _{{\mathcal {H}}}=o(1)\) and \(\Vert U\Vert _{{\mathcal {H}}}=1\), we get

Using (2.102), the definition of \(\theta _4\), and the fact that \({\lambda }u\) is uniformly bounded in \(L^2(0,L)\), we get

Finally, inserting (2.110) in (2.109), we get the desired result (2.107). The proof has been completed. \(\square \)

Lemma 2.18

The solution \(U\in D(\mathcal A)\) of system (2.48)−(2.51) satisfies the following estimations:

Proof

Let \(q\in C^1([0,L])\) such that \(q(0)=q(L)=0\). Multiplying (2.99) by \(2q{\bar{y}}_x\) integrating over (0, L), using (2.102), and the fact that \(y_x\) is uniformly bounded in \(L^2(0,L)\) and \(\Vert F\Vert _{{\mathcal {H}}}=o(1)\), we get

Now, take \(q(x)=x\theta _5(x)+(x-L)\theta _6(x)\) in (2.112), such that

Then, we obtain the first four estimations in (2.111). Now, multiplying (2.98) by \(2q\left( \overline{u_x+bv_x}\right) \) integrating over (0, L), then using the fact that \(u_x\) is uniformly bounded in \(L^2(0,L)\), (2.84), and \(\Vert F\Vert _{{\mathcal {H}}}=o(1)\), we get

By taking \(q(x)=(x-L)\theta _7(x)\), such that

we get the last two estimations in (2.111). The proof has been completed. \(\square \)

Proof of Theorem 2.7. Using (2.85), Lemmas 2.14, 2.16, 2.17 and 2.18, we get \(\Vert U\Vert _{{\mathcal {H}}}=o(1)\), which contradicts (2.46). Consequently, condition (\(H_2\)) holds. This implies the energy decay estimation (2.45).

3 Indirect stability in the multi-dimensional case

In this section, we study the well-posedness and the strong stability of system (1.5)-(1.8).

3.1 Well-posedness

In this section, we establish the well-posedness of (1.5)-(1.8) by using a semigroup approach. The energy of system (1.5)-(1.8) is given by

Let \((u,u_t,y,y_t)\) be a regular solution of (1.5)-(1.8). Multiplying (1.5) and (1.6) by \(\overline{u_t}\) and \(\overline{y_t}\), respectively, then using the boundary conditions (1.7), we get

using the definition of b, we get \(E'(t)\le 0\). Thus, system (1.5)-(1.8) is dissipative in the sense that its energy is non-increasing with respect to time t. Let us define the energy space \({\mathcal {H}}\) by

The energy space \({\mathcal {H}}\) is equipped with the inner product defined by

for all \(U=(u,v,y,z)^\top \) and \(U_1=(u_1,v_1,y_1,z_1)^\top \) in \({\mathcal {H}}\). We define the unbounded linear operator \({\mathcal {A}}_d:D\left( {\mathcal {A}}_d\right) \subset {\mathcal {H}}\longrightarrow {\mathcal {H}}\) by

and

If \(U=(u,u_t,y,y_t)\) is a regular solution of system (1.5)-(1.8), then we rewrite this system as the following first-order evolution equation:

where \(U_0=(u_0,u_1,y_0,y_1)^{\top }\in \mathcal H\). For all \(U=(u,v,y,z)^{\top }\in D({\mathcal {A}}_d )\), we have

which implies that \({\mathcal {A}}_d\) is dissipative. Now, similar to Proposition 2.1 in Akil et al. (2022), we can prove that there exists a unique solution \(U=(u,v,y,z)^{\top }\in D({\mathcal {A}}_d)\) of

Then \(0\in \rho ({\mathcal {A}}_d)\) and \({\mathcal {A}}_d\) is an isomorphism. Since \(\rho ({\mathcal {A}}_d)\) is open in \({\mathbb {C}}\) (see Theorem 6.7 (Chapter III) in Kato 1995), we easily get \(R(\lambda I -{\mathcal {A}}_d) = {{\mathcal {H}}}\) for a sufficiently small \(\lambda >0 \). This, together with the dissipativeness of \({\mathcal {A}}_d\), implies that \(D\left( {\mathcal {A}}_d\right) \) is dense in \({{\mathcal {H}}}\) and that \({\mathcal {A}}_d\) is m-dissipative in \({{\mathcal {H}}}\) (see Theorems 4.5, 4.6 in Pazy 1983). According to the Lumer–Phillips theorem (see Pazy 1983), the operator \(\mathcal A_d\) generates a \(C_{0}\)-semigroup of contractions \(e^{t\mathcal A_d}\) in \(\mathcal H\), which gives the well-posedness of (3.3). Then, we have the following result:

Theorem 3.1

For all \(U_0 \in \mathcal H\), system (3.3) admits a unique weak solution

Moreover, if \(U_0 \in D(\mathcal A_d)\), then the system (3.3) admits a unique strong solution

3.2 Strong stability

In this section, we will prove the strong stability of system (1.5)-(1.8). First, we fix the following notations:

Let \(x_0\in {\mathbb {R}}^{d}\) and \(m(x)=x-x_0\) and suppose that (see Figure 4)

The main result of this section is the following theorem:

Theorem 3.2

Assume that (GC) holds and

where \(C_{p,\omega _c}\) is the Poincaré constant on \(\omega _c\). Then, the \(C_0\)-semigroup of contractions \(\left( e^{t{\mathcal {A}}_d}\right) _{t\ge 0}\) is strongly stable in \({\mathcal {H}}\); i.e. for all \(U_0\in {\mathcal {H}}\), the solution of (3.3) satisfies

Proof

First, let us prove that

Since \(0\in \rho ({\mathcal {A}}_d)\), we still need to show the result for \(\lambda \in {\mathbb {R}}^{*}\). Suppose that there exists a real number \(\lambda \ne 0\) and \(U=(u,v,y,z)^\top \in D({\mathcal {A}}_d)\), such that

Equivalently, we have

Next, a straightforward computation gives

consequently, we deduce that

Inserting (3.5) in (3.6), then using the definition of c, we get

From (3.9) we get \(\Delta u=0\) in \(\omega _b\) and from (3.10) and the fact that \({\lambda }\ne 0\), we get

Now, inserting (3.5) in (3.6), then using (3.9), (3.11) and the definition of c, we get

Using Holmgren uniqueness theorem, we get

It follows that

Now, our aim is to show that \(u=y=0\) in \(\omega _c\). For this aim, inserting (3.5) and (3.7) in (3.6) and (3.8), then using (3.9), we get the following system:

Let us prove (3.4) by the following three steps:

Step 1. The aim of this step is to show that

For this aim, multiplying (3.15) and (3.16) by \({\bar{y}}\) and \({\bar{u}}\), respectively, integrating over \(\Omega \) and using Green’s formula, we get

Adding (3.21) and (3.22), then taking the imaginary part, we get (3.20).

Step 2. The aim of this step is to prove the following identity:

For this aim, multiplying (3.15) by \(2(m\cdot \nabla {\bar{u}})\), integrating over \(\omega _c\) and taking the real part, we get

Now, using the fact that \(u=0\) on \(\partial \omega _c\), we get

Using Green’s formula, we obtain

Using (3.17) and (3.19), we get

Inserting (3.27) in (3.26), we get

Inserting (3.25) and (3.28) in (3.24), we get (3.23).
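The multiplier computation in Step 2 rests on standard Rellich-type identities, recalled here as a sketch for sufficiently regular \(u\) and \(\omega _c\). The notation \(\nu \) for the unit outward normal to \(\partial \omega _c\) and \(d\Gamma \) for the surface measure is an assumption of this illustration, and the identities are not claimed to coincide term by term with (3.23):

\[ 2\Re \int _{\omega _c}\Delta u\,(m\cdot \nabla {\bar{u}})\,dx=(d-2)\int _{\omega _c}|\nabla u|^2dx-\int _{\partial \omega _c}(m\cdot \nu )|\nabla u|^2d\Gamma +2\Re \int _{\partial \omega _c}\partial _\nu u\,(m\cdot \nabla {\bar{u}})\,d\Gamma , \]

\[ 2\Re \int _{\omega _c}u\,(m\cdot \nabla {\bar{u}})\,dx=-d\int _{\omega _c}|u|^2dx+\int _{\partial \omega _c}(m\cdot \nu )|u|^2d\Gamma . \]

When \(u=0\) on \(\partial \omega _c\), the boundary integral in the second identity vanishes and \(\nabla u=(\partial _\nu u)\nu \) on \(\partial \omega _c\), so the two boundary terms in the first identity combine into \(\int _{\partial \omega _c}(m\cdot \nu )|\partial _\nu u|^2d\Gamma \).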

Step 3. In this step, we prove (3.4). Multiplying (3.15) by \((d-1){\overline{u}}\), integrating over \(\omega _c\) and using (3.17), we get

Adding (3.23) and (3.29), we get

Using (GC), we get

Using Young’s inequality and (3.20), we get

and

Inserting (3.32) in (3.30), we get

Using (SSC) and (3.20) in the above estimation, we get

In order to complete this proof, we need to show that \(y=0\) in \({\widetilde{\Omega }}\). For this aim, using the definition of the function c in \({\widetilde{\Omega }}\) and using the fact that \(y=0\) in \(\omega _c\), we get

Now, using Holmgren uniqueness theorem, we obtain \(y=0\) in \({\widetilde{\Omega }}\) and consequently (3.4) holds true. Moreover, similar to Lemma 2.5 in Akil et al. (2022), we can prove \(R(i{\lambda }I-\mathcal A_d)=\mathcal H, \ \forall {\lambda }\in \mathbb R\). Finally, by using the closed graph theorem of Banach and Theorem A.2, we conclude the proof of this Theorem. \(\square \)

Let us notice that, under the sole assumptions (GC) and (SSC), the polynomial stability of System (1.5)-(1.8) is an open problem.

4 Conclusion and open problems

4.1 Conclusion

Concerning the first part of this paper: Ghader et al. (2020, 2021) considered an elastic-viscoelastic wave equation with one local Kelvin-Voigt damping and with an internal or boundary time delay. They obtained an optimal polynomial energy decay rate of type \(t^{-4}\). Akil et al. (2021) considered singular locally coupled elastic-viscoelastic wave equations with one singular local Kelvin-Voigt damping such that the region of damping and the region of coupling intersect; a polynomial energy decay rate of type \(t^{-1}\) is established. The case when the regions of damping and coupling are disjoint remained an open problem, and this is the case considered in this paper. In the first part of this paper, we consider the case of direct stability of one-dimensional coupled wave equations; i.e., the two wave equations are damped. We note that the position of the coupling region plays a very important role. We proved the following two cases:

- If we divide the bar into 7 pieces: the first piece is the elastic part without coupling, the second piece is a viscoelastic part without coupling, the third piece is the elastic part without coupling, the fourth piece is a viscoelastic part without coupling, the fifth piece is the elastic part without coupling, the sixth piece is the elastic part with coupling, and the last piece is the elastic part without coupling (see (C2) and Figure 2). In this case, our system is always asymptotically stable and a polynomial energy decay rate of type \(t^{-4}\) has been obtained.

-

If we divide the bar into 7 pieces; the first piece is the elastic part without coupling, the second piece is a viscoelastic part without coupling, the third piece is the elastic part without coupling, the fourth piece is the elastic part with coupling, the fifth piece is the elastic part without coupling, the sixth piece is a viscoelastic part without coupling, and the last piece is the elastic part without coupling (see (C1) and Figure 1). Our system is strongly stable if the coupling coefficient satisfies

In this case, a polynomial energy decay rate of type \(t^{-4}\) has been proved. Concerning the second part of this paper,We consider a locally coupled wave equations with one locally Kelvin–Voigt damping such that the damping region and the coupling region are disjoint (see (C3) and Figure 3). When the two wave equations propagate at the same speed \((a=1)\) and the coupling coefficient satisfies the following condition:

In this case, our system is always strongly stable and a polynomial energy decay rate of type \(t^{-1}\) has been obtained.

Concerning the third part of this paper: in Akil et al. (2022), the authors considered multidimensional locally coupled wave equations with local Kelvin-Voigt damping. If the coupling and damping regions intersect, they proved, without any geometric condition and without any condition on the coefficients, that the system is strongly stable. Moreover, under the geometric control condition (GCC), they proved a polynomial energy decay rate of type \(t^{-1}\). In the third part of this paper, we consider the same system under the condition that the coupling and damping regions are disjoint. When the two wave equations propagate at the same speed \((a=1)\), the part of the boundary of the coupling region satisfies a multiplier geometric condition (see (GC)), and the coupling coefficient satisfies condition (4.3), we prove that our system is strongly stable.

4.2 Open problems

In this part, we present some open problems:

- (OP1): The optimality of the polynomial decay rate of the system (1.1)-(1.4) remains an open problem.

- (OP2): For the first part of this paper: can we get stability results if the coupling coefficient does not satisfy (4.1)?

- (OP3): For the second part of this paper: can we get stability results if the coupling coefficient does not satisfy (4.2) or if the two wave equations propagate at different speeds (i.e. \(a\ne 1\))?

- (OP4): For the third part of this paper: can we get stability results if the coupling region does not satisfy any geometric condition, if the coupling coefficient does not satisfy (4.3), or if the two wave equations propagate at different speeds (i.e. \(a\ne 1\))?

References

Abdallah F, Ghader M, Wehbe A (2018) Stability results of a distributed problem involving Bresse system with history and/or Cattaneo law under fully Dirichlet or mixed boundary conditions. Math Methods Appl Sci 41(5):1876–1907

Akil M, Chitour Y, Ghader M, Wehbe A (2020) Stability and exact controllability of a Timoshenko system with only one fractional damping on the boundary. Asymptot Anal 119(3–4):221–280

Akil M, Badawi H, Nicaise S, Wehbe A (2021) On the stability of Bresse system with one discontinuous local internal Kelvin-Voigt damping on the axial force. Zeitschrift für angewandte Mathematik und Physik 72(3):126

Akil M, Badawi H, Nicaise S, Wehbe A (2021) Stability results of coupled wave models with locally memory in a past history framework via nonsmooth coefficients on the interface. Math Methods Appl Sci 44(8):6950–6981

Akil M, Badawi H, Wehbe A (2021) Stability results of a singular local interaction elastic/viscoelastic coupled wave equations with time delay. Commun Pure & Appl Anal 20(9):2991–3028

Akil M, Badawi H (2022) The influence of the physical coefficients of a Bresse system with one singular local viscous damping in the longitudinal displacement on its stabilization. Evol Equ & Control Theo

Akil M, Issa I, Wehbe A (2022) A n-dimensional elastic/viscoelastic transmission problem with Kelvin-Voigt damping and non smooth coefficient at the interface. SeMA J

Alabau-Boussouira F, Léautaud M (2013) Indirect controllability of locally coupled wave-type systems and applications. J Math Pures Appl (9) 99(5):544–576

Arendt W, Batty CJK (1988) Tauberian theorems and stability of one-parameter semigroups. Trans Amer Math Soc 306(2):837–852

Bassam M, Mercier D, Nicaise S, Wehbe A (2015) Polynomial stability of the Timoshenko system by one boundary damping. J Math Anal Appl 425(2):1177–1203

Batty CJK, Duyckaerts T (2008) Non-uniform stability for bounded semi-groups on Banach spaces. J Evol Equ 8(4):765–780

Borichev A, Tomilov Y (2010) Optimal polynomial decay of functions and operator semigroups. Math Ann 347(2):455–478

Burq N, Sun C (2022) Decay rates for Kelvin-Voigt damped wave equations II: The geometric control condition. Proc Amer Math Soc 150(3):1021–1039

Fatori LH, Monteiro RN (2012) The optimal decay rate for a weak dissipative Bresse system. Appl Math Lett 25(3):600–604

Fatori LH, Monteiro RN, Sare HDF (2014) The Timoshenko system with history and Cattaneo law. Appl Math Comput 228:128–140

Gerbi S, Kassem C, Mortada A, Wehbe A (2021) Exact controllability and stabilization of locally coupled wave equations: theoretical results. Z Anal Anwend 40(1):67–96

Ghader M, Nasser R, Wehbe A (2020) Optimal polynomial stability of a string with locally distributed Kelvin-Voigt damping and nonsmooth coefficient at the interface. Math Methods Appl Sci 44(2):2096–2110

Ghader M, Nasser R, Wehbe A (2021) Stability results for an elastic-viscoelastic wave equation with localized Kelvin-Voigt damping and with an internal or boundary time delay. Asymptot Anal 125(1–2):1–57

Hayek A, Nicaise S, Salloum Z, Wehbe A (2020) A transmission problem of a system of weakly coupled wave equations with Kelvin-Voigt dampings and non-smooth coefficient at the interface. SeMA J 77(3):305–338

Kassem C, Mortada A, Toufayli L, Wehbe A (2019) Local indirect stabilization of N-d system of two coupled wave equations under geometric conditions. C R Math Acad Sci Paris 357(6):494–512

Kato T (1995) Perturbation Theory for Linear Operators. Springer, Berlin Heidelberg

Liu Z, Rao B (2005) Characterization of polynomial decay rate for the solution of linear evolution equation. Z Angew Math Phys 56(4):630–644

Liu K, Rao B (2006) Exponential stability for the wave equations with local Kelvin-Voigt damping. Z Angew Math Phys 57(3):419–432

Pazy A (1983) Semigroups of linear operators and applications to partial differential equations, volume 44 of Applied Mathematical Sciences. Springer-Verlag, New York

Tebou L (2016) Stabilization of some elastodynamic systems with localized Kelvin-Voigt damping. Discrete Contin Dyn Syst 36(12):7117–7136

Wehbe A, Issa I, Akil M (2021) Stability results of an elastic/viscoelastic transmission problem of locally coupled waves with non smooth coefficients. Acta Appl Math 171(1):23

Wehbe A, Ghader M (2021) A transmission problem for the Timoshenko system with one local Kelvin-Voigt damping and non-smooth coefficient at the interface. Comput Appl Math 40(8):1–46

Additional information

Communicated by Carlos Conca.

Appendix A. Some notions and stability theorems

In order to make this paper more self-contained, we recall in this short appendix some notions and stability results used in this work.

Definition A.1

Assume that A is the generator of a \(C_0\)-semigroup of contractions \(\left( e^{tA}\right) _{t\ge 0}\) on a Hilbert space H. The \(C_0\)-semigroup \(\left( e^{tA}\right) _{t\ge 0}\) is said to be

- (1) Strongly stable if

$$\begin{aligned} \lim _{t\rightarrow +\infty } \Vert e^{tA}x_0\Vert _H=0,\quad \forall \, x_0\in H. \end{aligned}$$

- (2) Exponentially (or uniformly) stable if there exist two positive constants M and \(\varepsilon \) such that

$$\begin{aligned} \Vert e^{tA}x_0\Vert _{H}\le Me^{-\varepsilon t}\Vert x_0\Vert _{H},\quad \forall \, t>0,\ \forall \, x_0\in H. \end{aligned}$$

- (3) Polynomially stable if there exist two positive constants C and \(\alpha \) such that

$$\begin{aligned} \Vert e^{tA}x_0\Vert _{H}\le Ct^{-\alpha }\Vert x_0\Vert _{D(A)},\quad \forall \, t>0,\ \forall \, x_0\in D(A). \end{aligned}$$

\(\square \)
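The difference between these notions can be illustrated on a toy model that is not one of the systems studied in this paper. The sketch below assumes a diagonal operator \(Ae_n=(-1/n^2+in)e_n\) on \(\ell ^2\), truncated to finitely many modes; the function names `decay_norm` and `uniform_bound` and the initial datum \(x_0=\sum _n n^{-2}e_n\in D(A)\) are hypothetical choices made only for illustration. For this model an elementary computation gives \(\Vert e^{tA}x_0\Vert \le (2et)^{-1/2}\Vert (n\,x_{0,n})_n\Vert _{\ell ^2}\), a polynomial bound for data in \(D(A)\), while the damping rates \(1/n^2\) tend to zero, so no uniform exponential rate is available in the untruncated model.

```python
import math


def decay_norm(t, n_max=2000):
    """Norm of e^{tA} x0 for the assumed diagonal toy model
    A e_n = (-1/n^2 + i n) e_n, truncated to n_max modes,
    with the illustrative initial datum x0 = sum_n n^{-2} e_n."""
    total = 0.0
    for n in range(1, n_max + 1):
        coeff = 1.0 / n**2             # n-th coordinate of x0
        damping = math.exp(-t / n**2)  # modulus of exp(t * lambda_n)
        total += (coeff * damping) ** 2
    return math.sqrt(total)


def uniform_bound(t):
    """Upper bound (2 e t)^{-1/2} * ||(n * x0_n)||, using that
    sup_{s>0} exp(-t/s^2)/s = (2 e t)^{-1/2} and sum_n n^{-2} = pi^2/6."""
    weighted_norm = math.sqrt(math.pi**2 / 6.0)
    return weighted_norm / math.sqrt(2.0 * math.e * t)


if __name__ == "__main__":
    for t in (1.0, 10.0, 100.0, 1000.0):
        print(t, decay_norm(t), uniform_bound(t))
```

The bound is uniform over all data in \(D(A)\) (with the weighted norm on the right-hand side), so a particular initial datum may decay faster than the bound suggests.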

To show the strong stability of the \(C_0\)-semigroup \(\left( e^{tA}\right) _{t\ge 0}\) we rely on the following result due to Arendt and Batty (1988):

Theorem A.2

Assume that A is the generator of a \(C_0\)-semigroup of contractions \(\left( e^{tA}\right) _{t\ge 0}\) on a Hilbert space H. If A has no pure imaginary eigenvalues and \(\sigma \left( A\right) \cap i{\mathbb {R}}\) is countable, where \(\sigma \left( A\right) \) denotes the spectrum of A, then the \(C_0\)-semigroup \(\left( e^{tA}\right) _{t\ge 0}\) is strongly stable. \(\square \)

Concerning the characterization of the polynomial stability of a \(C_0\)-semigroup of contractions \(\left( e^{tA}\right) _{t\ge 0}\), we rely on the following result due to Borichev and Tomilov (2010) (see also Batty and Duyckaerts 2008 and Liu and Rao 2005):

Theorem A.3

Assume that A is the generator of a strongly continuous semigroup of contractions \(\left( e^{tA}\right) _{t\ge 0}\) on \({\mathcal {H}}\). If \( i{\mathbb {R}}\subset \rho (A)\), then for a fixed \(\ell >0\) the following conditions are equivalent:

\(\square \)
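For orientation, the two equivalent conditions in the result of Borichev and Tomilov (2010) are commonly written in the following form. This is a sketch of the standard formulation; the symbols \(U_0\) and C are notation assumed here:

$$\begin{aligned} \limsup _{\lambda \in {\mathbb {R}},\ |\lambda |\rightarrow \infty }\frac{1}{|\lambda |^{\ell }}\left\| (i\lambda I-A)^{-1}\right\| _{{\mathcal {L}}({\mathcal {H}})}<\infty , \end{aligned}$$

$$\begin{aligned} \Vert e^{tA}U_0\Vert _{{\mathcal {H}}}^{2}\le \frac{C}{t^{2/\ell }}\Vert U_0\Vert _{D(A)}^{2},\quad \forall \, t>0,\ \forall \, U_0\in D(A),\ \text {for some } C>0. \end{aligned}$$

If the energy is taken to be half of the squared norm of the solution, the second condition gives an energy decay rate of type \(t^{-2/\ell }\). Under this reading, a rate of type \(t^{-4}\) corresponds to \(\ell =\frac{1}{2}\), and a rate of type \(t^{-1}\) corresponds to \(\ell =2\).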

Cite this article

Akil, M., Badawi, H. & Nicaise, S. Stability results of locally coupled wave equations with local Kelvin-Voigt damping: Cases when the supports of damping and coupling coefficients are disjoint. Comp. Appl. Math. 41, 240 (2022). https://doi.org/10.1007/s40314-022-01956-6

DOI: https://doi.org/10.1007/s40314-022-01956-6