Abstract

The spectral norm of an even-order tensor is defined and investigated. An equivalence between the spectral norm of tensors and matrices is given. Using derived representations of some tensor expressions involving the Moore–Penrose inverse, we investigate the perturbation theory for the Moore–Penrose inverse of tensor via Einstein product. The classical results derived by Stewart (SIAM Rev 19:634–662, 1977) and Wedin (BIT 13:217–232, 1973) for the matrix case are extended to even-order tensors. An implementation in the Matlab programming language is developed and used in deriving appropriate numerical examples.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

For a positive integer N, let \({\mathbf {I}}_1,\ldots ,{\mathbf {I}}_N\) be positive integers. An order N tensor \({\mathcal {A}}=( {\mathcal {A}}_{ {i_{1}i_{2}\ldots i_{{N}}} })_{1\le i_{j}\le {\mathbf {I}}_{j}}\), \((j = 1,\ldots , {N})\) is a multidimensional array with \({\mathfrak {I}}={\mathbf {I}}_{1}{\mathbf {I}}_{2}\cdots {\mathbf {I}}_{N}\) entries, where \({\mathbf {I}}_1,\ldots ,{\mathbf {I}}_N\) are positive integers. Let \({\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{N}}\) (resp. \({\mathbb {R}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{N}}\)) be the set of the order N tensors of dimension \({\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{N}\) over complex numbers \({\mathbb {C}}\) (resp. real numbers \({\mathbb {R}}\)).

The conjugate transpose of a tensor \({\mathcal {A}} = ({\mathcal {A}}_{ i_{1}\ldots i_{M}j_{1} \ldots j_{N}}) \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{M} \times {\mathbf {J}}_{1} \times \cdots \times {\mathbf {J}}_{N}}\) is denoted by \({\mathcal {A}}^{*}\) and elementwise defined as \(({\mathcal {A}}^{*})_{ j_{1} \ldots j_{N} i_{1} \ldots i_M}=(\overline{{\mathcal {A}}})_{ i_{1} \ldots i_{M} j_{1} \ldots j_N} \in {\mathbb {C}}^{{\mathbf {J}}_{1} \times \cdots \times {\mathbf {J}}_{N} \times {\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{M}}\), where the overline means the conjugate operator. When the tensors are defined over \({\mathbb {R}}\), the tensor \({\mathcal {A}}^{\mathrm T}\) satisfying \(({\mathcal {A}}^{\mathrm T})_{ j_{1} \ldots j_{N} i_{1} \ldots i_M}=({\mathcal {A}})_{ i_{1}\ldots i_{M} j_{1} \ldots j_N} \in {\mathbb {C}}^{{\mathbf {J}}_{1} \times \cdots \times {\mathbf {J}}_{N} \times {\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{M}}\) is called the transpose of \({\mathcal {A}}\).

The Einstein product of tensors is defined in Einstein (2007) by the operation \(*_{N}\) via

where \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}\times {\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\), \({\mathcal {B}} \in {\mathbb {C}}^{{\mathbf {K}}_{1}\times \cdots \times {\mathbf {K}}_{{N}}\times {\mathbf {J}}_{1}\times \cdots \times {\mathbf {J}}_{{M}}}\) and \({\mathcal {A}}*_{N}{\mathcal {B}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}} \times {\mathbf {J}}_{1} \times \cdots \times {\mathbf {J}}_{{M}}}\). The associative law of this tensor product holds. In the above formula, when \({\mathcal {B}} \in {\mathbb {C}}^{{\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\), then

where \({\mathcal {A}}*_{N}{\mathcal {B}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\). When \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\) and \({\mathcal {B}}\) is a vector \({\mathbf {b}} = (b_{i})\in {\mathbb {C}}^{{I}_{{N}}}\), the product is defined by operation \(\times _{{N}}\) via

where \({\mathcal {A}}\times _{{N}}{\mathcal {B}} \in {{\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {I}_{{N}-1}}}\).

Definition 1.1

(Sun et al. 2016) Let \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}} \times {\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\). The tensor \({\mathcal {X}} \in {\mathbb {C}}^{{\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}} \times {\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\) which satisfies

is called the Moore–Penrose inverse of \({\mathcal {A}}\), abbreviated by M-P inverse, denoted by \({\mathcal {A}}^\dagger \). If the equation (i) of the above equations \((1^T)\)–\((4^T)\) holds, then \({\mathcal {X}}\) is called an (i)-inverse of \({\mathcal {A}}\), denoted by \({\mathcal {A}}^{(i)}\).

For a tensor \({\mathcal {A}} \in {\mathbb {C}}^{{I}_{1} \times \cdots \times {I}_{{N}} \times {I}_{1} \times \cdots \times {I}_{{N}}}\), if there exists a tensor \({\mathcal {X}}\), such that \({\mathcal {A}}*_{{N}}{\mathcal {X}} = {\mathcal {X}}*_{{N}}{\mathcal {A}} = {\mathcal {I}}\), then \({\mathcal {X}}\) is called the inverse of \({\mathcal {A}}\), denoted by \({\mathcal {A}}^{-1}\). Clearly, if \({\mathcal {A}}\) is invertible, then \({\mathcal {A}}^\dagger = {\mathcal {A}}^{-1}\).

The Moore–Penrose inverse of matrices and linear operators plays an important role in theoretical study and numerical analysis in many areas, such as the singular matrix problems, ill-posed problems, optimization problems, total least-squares problem (Xie et al. 2019; Zheng et al. 2017), and statistical problems (Ben-Israel and Greville 2003; Cvetkovic-Illic and Wei 2017; Wang et al. 2018; Wei 2014). As a continuation of these results, operations with tensors have become increasingly prevalent in recent years (Che and Wei 2019; Che et al. 2019; Ding and Wei 2016; Harrison and Joseph 2016; Medellin et al. 2016; Qi and Luo 2017; Wei and Ding 2016). Brazell et al. (2013) introduced the notion of ordinary tensor inverse. Sun et al. (2014, 2016) prove the existence and uniqueness of the Moore–Penrose inverse and \(\{i,j,k\}\)-inverses of even-order tensors with the Einstein product. Panigrahy and Mishra (2018) and Sun et al. (2014, 2016) defined the Moore–Penrose inverse and \(\{i\}\)-inverses \((i = 1, 2, 3, 4)\) of even-order tensors with the Einstein product. In addition, the general solutions of some multilinear systems were given in terms of defined generalized inverses. A few further characterizations of different generalized inverses of tensors in conjunction with the new method to compute the Moore–Penrose inverse of tensors were considered in Behera and Mishra (2017). The weighted Moore–Penrose inverse in tensor spaces was introduced in Ji and Wei (2017). In addition, a characterization of the least-squares solutions to a multilinear system as well as the relationship between the weighted minimum-norm least-squares solution of a multilinear system and the weighted Moore–Penrose inverse of its coefficient tensor were considered in Ji and Wei (2017). Sun et al. (2018) defined \(\{i\}\)-inverses for \(i = 1, 2, 5\) and the group inverse of tensors, assuming a general tensor product. Panigrahy et al. (2018) proved some additional properties of the Moore–Penrose inverse of tensors via the Einstein product and also derived a few necessary and sufficient conditions for the reverse-order law for the Moore–Penrose inverse of tensors. Several new sufficient conditions which ensure the reverse-order law of the weighted Moore–Penrose inverse for even-order square tensors were presented in Panigrahy and Mishra (2019). Recently, Ji and Wei (2018) investigated the Drazin inverse of even-order tensors with Einstein product. Liang and Zheng (2018) defined an iterative algorithm for solving Sylvester tensor equation based on the Einstein product.

Using another definition of the tensor product, some basic properties for order 2 left (right) inverse and product of tensors were given in Bu et al. (2014). The generalized inverse of tensors was established in Jin et al. (2017) using tensor equations and the t-product of tensors. The definition of generalized tensor function via the tensor singular value decomposition based on the t-product was introduced in Miao et al. (2019). In addition, the least-squares solutions of tensor equations as well as an algorithm for generating the Moore–Penrose inverse of a tensor were proposed in Jin et al. (2017), Shi et al. (2013).

On the other hand, the additive and multiplicative perturbation models have been investigated frequently during the past decades. For more details, the reader is referred to the references (Cai et al. 2011; Liu et al. 2008; Meng and Zheng 2010; Stewart 1977; Wedin 1973; Wei and Ling 2010; Wei 1999). The classical results derived by Stewart (1977) and Wedin (1973) have been improved in Li et al. (2013), Xu et al. (2010b). The acute perturbation of the group inverse was investigated in Wei (2017). Some results related to the perturbation of the oblique projectors which include the weighted pseudoinverse were presented in Xu et al. (2008, 2010a). Some optimal perturbation bounds of the weighted Moore–Penrose inverse under the weighted unitary invariant norms, the weighted Q-norms and the weighted F-norms were obtained in Xu et al. (2011). A sharp estimation for the perturbation bounds of weighted Moore–Penrose inverse was considered in Ma (2018). Meyer (1980) presented a perturbation formula with application to Markov chains. The authors extended the formula to the Drazin inverse \(A^{\mathrm D}\). Two finite-time convergent Zhang neural network models for time-varying complex matrix Drazin inverse have been presented in Qiao et al. (2018). An explicit formula for perturbations of an outer inverse under certain conditions was given in Zhang and Wei (2008). The perturbation analysis for the nearest \(\{1\}\), \(\{1, i\}\), and \(\{1, 2, i\}\)-inverses with respect to the multiplicative perturbation model was considered in Meng et al. (2017).

In addition, the perturbation theory for the tensor eigenvalue and singular value problems of tensors has been investigated recently. The perturbation bounds of the tensor eigenvalue and singular value problems of even-order tensors were considered in Che et al. (2016). The explicit estimation of the backward error for the largest eigenvalue of an irreducible nonnegative tensor was given in Li and Ng (2014).

Our intention in the present paper is to extend the results concerning the perturbation of the Moore–Penrose inverse from the complex matrix space to more general results in the tensor space. According to this goal, our intention is to extend the classical results derived in Stewart (1977) and Wedin (1973) for the matrix case to even-order tensors.

The null spaces and the ranges of tensors were introduced in Ji and Wei (2017).

Definition 1.2

(Ji and Wei 2017) For \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}} \times {\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\), the range \({\mathcal {R}}({\mathcal {A}})\) and the null space \({\mathcal {N}}({\mathcal {A}})\) of \({\mathcal {A}}\) are defined by

where \({\mathcal {O}}\) is an appropriate zero tensor.

Definition 1.3

(Orthogonal Projection) The orthogonal projection onto a subspace \({\mathcal {R}}({\mathcal {A}})\) is denoted by \({P}_{{\mathcal {A}}}\) and defined as

Clearly, \({P}_{{\mathcal {A}}}\) is the Hermitian and idempotent, and \({\mathcal {R}}({P}_{{\mathcal {A}}}) = {\mathcal {R}}({\mathcal {A}})\). Similarly

is the projection onto \({\mathcal {R}}({\mathcal {A}}^*)\).

Definition 1.4

(Complement of projection) The projection onto \({\mathcal {R}}({\mathcal {A}})^{\bot }\) will be denoted by

Likewise

will denote the projection onto \({\mathcal {R}}({\mathcal {A}}^*)^{\bot }\).

Main contributions of the manuscript can be summarized as follows.

- (1)

The spectral norm of a tensor is defined and investigated.

- (2)

Useful representations of \({{\mathcal {A}}}*_N{\mathcal A}^\dagger \) and \({{\mathcal {I}}}-{{\mathcal {A}}}*_N{\mathcal A}^\dagger \) are derived.

- (3)

The perturbation theory for the Moore–Penrose inverse of even-order tensors via Einstein product is considered using derived representations of some tensor expressions involving the Moore–Penrose inverse. Therefore, derived results represent the first contribution to the perturbation of the Moore–Penrose inverse of tensors.

The rest of this paper is organized as follows. The spectral tensor norm is defined and investigated in Sect. 2. Useful representations of \({{\mathcal {A}}}*_N{{\mathcal {A}}}^\dagger \) and \({{\mathcal {I}}}-{{\mathcal {A}}}*_N{{\mathcal {A}}}^\dagger \) are derived in Sect. 3. Section 4 generalizes some results from the matrix theory to the perturbation theory for the Moore–Penrose inverse of even-order tensor via Einstein product. Numerical examples are presented in Sect. 5.

2 Spectral norm of tensors

To simplify presentation, we use the additional notation

where \({\mathbf {I}}_1,\ldots ,{\mathbf {I}}_N\) are positive integers. Then, the tensor \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{M}} \times {\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\) is denoted shortly by \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}(M) \times {\mathbf {K}}(N)}\). The identity tensor \({\mathcal {I}}\) of the order \({\mathbf {I}}(N)\times {\mathbf {I}}(N)\) tensor is defined as in Brazell et al. (2013) by

where

denotes the Kronecker delta operator.

The Frobenius inner product of two tensors \({\mathcal {A}}, {\mathcal {B}} \in {\mathbb {C}}^{{\mathbf {I}}({N})}\) is defined as

If \(({\mathcal {A}}, {\mathcal {B}}) = 0\), then \({\mathcal {A}}\) is orthogonal to \({\mathcal {B}}\). The Frobenius norm of \({\mathcal {A}}\) is defined by \(\Vert {\mathcal {A}}\Vert _F=\sqrt{({\mathcal {A}}, {\mathcal {A}})}.\)

A complex (real) tensor of order m dimension n is defined by \({\mathcal {A}}=({\mathcal {A}}_{ i_1 \ldots i_m})\), \({\mathcal {A}}_{ i_1\ldots i_m}\in {\mathbb {C}} ({\mathbb {R}})\), where \(i_j=1,\ldots ,n\) for each \(j= 1, \ldots ,m\). If \({\mathbf {x}}=(x_1,\ldots ,x_n)^{\mathrm T}\) is an n-dimensional vector, then \({\mathbf {x}}^m=x \otimes x \otimes \cdots \otimes x\) is considered as an mth order n-dimensional rank-one tensor with entries \({ (x^m)_{i_{1}, \ldots , i_{m}}= x_{i_1}\ldots x_{i_m}}\), where “\(\otimes \)” is the Kronecker product of vectors. Then

is the tensor product of \({\mathcal {A}}\) and \({\mathbf {x}}^m\). A tensor–vector multiplication of a tensor \({\mathcal {A}}=(a_{i_1,\ldots ,i_m})\in {\mathbb {C}}^{n\times \cdots \times n}\) of order m dimension n and an n-dimensional vector \(x=(x_1, x_2,\ldots ,x_n)^{\mathrm T}\) is an n-dimensional vector \({\mathcal {A}}x^{m-1}\), whose ith component is equal to

The eigenvalue of a tensor was introduced in Qi (2005). A complex number \(\lambda \) is called an eigenvalue of A and \({\mathbf {x}}\) is an eigenvector of A associated with \(\lambda \) if the equation

is satisfied.

Recently, Liang et al. (2019) proposed a new definition of the eigenvalue of an even-order square tensor. Let \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}} \times {\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\) be given. If a nonzero tensor \({\mathcal {X}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\) and a complex number \(\lambda \) satisfy

then \(\lambda \) is an eigenvalue of the tensor \( {\mathcal {A}}\) and \( {\mathcal {X}}\) the eigentensor with respect to \(\lambda \).

In addition, we found the following definition of the tensor spectral norm from Li (2016).

Definition 2.1

(Li 2016) For a given tensor \({\mathcal {T}}\in {\mathbb {R}}^{{\mathbf {I}}_{1}\times {\mathbf {I}}_2\times \cdots \times {\mathbf {I}}_N}\), the spectral norm of \({\mathcal {T}}\), denoted by \(\Vert {\mathcal {T}}\Vert _{\sigma }\), is defined as

where \(\Vert {\mathbf {x}}\Vert _F\) denotes the Frobenius norm of the vector \({\mathbf {x}}\) and \({\mathbf {x}}_1\otimes \cdots \otimes {\mathbf {x}}_N\) means the outer product of vectors: \(({\mathbf {x}}_1\otimes \cdots \otimes {{\mathbf {x}}_N)}_{i_1,\ldots ,i_N}=({\mathbf {x}}_1)_{i_1}\cdots ({\mathbf {x}}_N)_{i_N}\).

Essentially, \(\Vert {\mathcal {T}}\Vert _{\sigma }\) is the maximal value of the Frobenius inner product between \({\mathcal {T}}\) and the rank-one tensor \({\mathbf {x}}_1\otimes \cdots \otimes {\mathbf {x}}_N\) whose Frobenius norm is one.

Let the eigenvalues of a complex even-order square tensor are defined as in (2.1). By \(\lambda _{\min }({\mathcal {K}})\) and \(\lambda _{\max }({\mathcal {K}})\), we denote the smallest and largest eigenvalue of a tensor \({\mathcal {K}}\), respectively. Similarly, \({\mu _{1}({\mathcal {K}})}\) stands for the largest singular value of a tensor \({\mathcal {K}}\).

Lemma 2.1

Let \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\). Then, the spectral norm of \({\mathcal {A}}\) can be defined as

where \(\lambda _{\max }\left( {\mathcal {A}}^{*}*_{{N}}{\mathcal {A}}\right) \) denotes the largest eigenvalue of \({\mathcal {A}}^{*}*_{{N}}{\mathcal {A}}\) and \(\mu _{1}({\mathcal {A}})\) is the largest singular value of \({\mathcal {A}}\).

Proof

It is necessary to verify that the definition (2.2) satisfies properties of a norm function.

(1) Clearly, \(\Vert {\mathcal {A}}\Vert _{2} \ge 0 \), and \(\Vert {\mathcal {A}}\Vert _{2} = 0\) if and only if \({\mathcal {A}}=0\),

(2) The second property of \(\Vert {\mathcal {A}}\Vert _{2}\) can be verified using

(3) Since

immediately from the definition of the spectral norm it follows that

Therefore, (2.2) is a valid definition of the matrix norm. \(\square \)

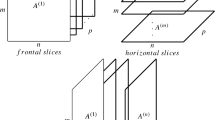

Our intention is to determine the spectral norm of a tensor explicitly using the approach based on the matricization or unfolding. Matricization is the transformation that transforms a tensor into a matrix. Let \({\mathbf {I}}_1,\ldots ,{\mathbf {I}}_M,{\mathbf {K}}_1,\ldots ,{\mathbf {K}}_N\) be positive integers. Assume that \({\mathfrak {I}}, {\mathfrak {K}}\) are positive integers defined by

Denote by \(\mathrm {Mat}({\mathcal {A}})\) the matrix obtained after the matricization

which transforms a tensor \({\mathcal {A}}\in {\mathbb {C}}^{{\mathbf {I}}({M}) \times {\mathbf {K}}({N})}\) into the matrix \(A\in {\mathbb {C}}^{{\mathfrak {I}}\times {\mathfrak {K}}}\). An arbitrary tensor \({\mathcal {A}}\) can be unfolded into an appropriate matrix A in different ways.

It is known that the spectral norm of the tensor is bounded by the spectral norm of the matricized tensor, i.e., \(\Vert \mathrm {Mat}({\mathcal {A}})\Vert _{\sigma }\ge \Vert {\mathcal {A}}\Vert _{\sigma }\) (see, for example, Li 2016).

One approach in the matricization, denoted by \(\psi :{\mathbb {C}}^{{\mathbf {I}}({M}) \times {\mathbf {K}}({N})}\mapsto {\mathbb {C}}^{{\mathfrak {I}}\times {\mathfrak {K}}}\) was proposed in Liang and Zheng (2018, Definition 2.4) (see also Brazell et al. 2013). The matricization \(\psi \) is defined by

where \({i}=(i_1,\ldots ,i_M)^{\mathrm T}\) and

To define an effective procedure for the tensor matricization, we use the reshaping operation denoted as rsh, which was introduced in Panigrahy et al. (2018). Later, we define the spectral norm of a tensor by means of the spectral norm of the reshaped tensor. This operation can be implemented by means of the standard Matlab function reshape.

Definition 2.2

(Panigrahy et al. 2018) Let \({\mathbf {I}}_1,\ldots {\mathbf {I}}_M,{\mathbf {K}}_1,\ldots {\mathbf {K}}_N\) be given integers. Assume that \({\mathfrak {I}}, {\mathfrak {K}}\) are the integers defined (2.3). The reshaping operation

transforms a tensor \({\mathcal {A}}\in {\mathbb {C}}^{{\mathbf {I}}({M}) \times {\mathbf {K}}({N})}\) into the matrix \(A\in \mathbb C^{{\mathfrak {I}}\times {\mathfrak {K}}}\) using the Matlab function reshape as follows:

The inverse reshaping is the mapping defined by

The following result from Panigrahy et al. (2018) will be useful.

Lemma 2.2

(Panigrahy et al. 2018) Let \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}\times {\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\) and \({\mathcal {B}} \in {\mathbb {C}}^{{\mathbf {K}}_{1}\times \cdots \times {\mathbf {K}}_{{N}}\times {\mathbf {L}}_{1}\times \cdots \times {\mathbf {L}}_{{N}}}\) be given tensors, integers \({\mathfrak {I}},{\mathfrak {K}}\) are computed as in (2.3) and \({\mathfrak {L}}={\mathbf {L}}_{1}{\mathbf {L}}_{2}\cdots {\mathbf {L}}_{N}\). Then

where \(A=\mathrm {rsh}\left( {\mathcal {A}}\right) \in \mathbb C^{{\mathfrak {I}}\times {\mathfrak {K}}},B=\mathrm {rsh}\left( {\mathcal {B}}\right) \in {\mathbb {C}}^{{\mathfrak {K}}\times {\mathfrak {L}}}\).

Applying the inverse reshaping operator \(\mathrm {rsh}^{-1}()\) on both sides in (2.4), it can be concluded that \(\mathrm {rsh}^{-1}(AB) = \mathrm {rsh}^{-1}(A)*_{N} \mathrm {rsh}^{-1}(B) = {\mathcal {A}}*_{N}{\mathcal {B}}\).

Now, our intention is to approximate the tensor norm \(\Vert {\mathcal {A}}\Vert _2\) by an effective computational procedure. For this purpose, we propose Algorithm 1 for computing \(\mathrm {rsh}^{-1}(A)\) in terms of the Singular Value Decomposition (SVD) of A. Since eigenvalues in Liang et al. (2019) are defined for even-order square tensors, our further investigation will be restricted to even-order tensors.

In Lemma 2.3, we show that the tensor \({\mathcal {A}}\) in Algorithm 1 is defined well.

Lemma 2.3

The tensor \({\mathcal {A}}\) in Algorithm 1 is defined well.

Proof

Under the assumptions of Algorithm 1, an application of Lemma 2.2 gives

which confirms \({\mathcal {A}}=\mathrm {rsh}^{-1}(A)\). \(\square \)

As a consequence of Algorithm 1, Lemma 2.4 shows that the spectral norm is invariant with respect to the function \(\mathrm {rsh}\).

Lemma 2.4

Let \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}\times {\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\) be a given tensor and integers \({\mathfrak {I}},{\mathfrak {K}}\) are computed as in (2.3). Then

where \(A\in {\mathbb {C}}^{{\mathfrak {I}}\times {\mathfrak {K}}}\).

Proof

According to Algorithm 1, the tensor \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}\times {\mathbf {K}}_{1} \times \cdots \times {\mathbf {K}}_{{N}}}\) possesses the same singular values as the matrix \(A\in {\mathbb {C}}^{{\mathfrak {I}}\times {\mathfrak {K}}}\). \(\square \)

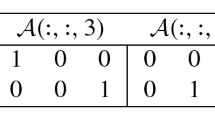

Example 2.1

Let \({\mathcal {A}} = rand(2,2,2,2)\) is defined by

then

Simple verification shows that \(\Vert {\mathcal {A}}\Vert _2=\Vert A\Vert _2=2.6201.\)

Various definitions of the tensor rank can be found in the relevant literature. For more details see Brazell et al. (2013), and Comon et al. (2009). An alternative definition of the tensor rank was introduced in Panigrahy et al. (2018).

Definition 2.3

(Panigrahy et al. 2018) Let \({\mathcal {A}}\in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\) and \(A=reshape \left( {\mathcal {A}},{\mathfrak {I}}, {\mathfrak {K}}\right) =\mathrm {rsh}({\mathcal {A}})\in \mathbb C^{{\mathfrak {I}}\times {\mathfrak {K}}}\) are defined as in Algorithm 1. Then, the tensor rank of \({\mathcal {A}}\) is denoted by \(\mathrm {rshrank}({\mathcal {A}})\) and defined by \(\mathrm {rshrank}({{\mathcal {A}}})=\mathrm {rank}({A})\).

3 Preliminary results

For \(a \in {\mathbb {C}}\), let \(a^\dagger = a^{-1}\), if \(a \ne 0\) and \(a^\dagger = 0\), if \(a = 0\). Following this notation, the tensor \({\mathcal {D}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}} \times {\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\) is called diagonal if all its entries are zero except \({\mathcal {D}}_{i_{1} \ldots i_{{N}}i_{1} \ldots i_{{N}}}\), that is

where \({\mathcal {D}}_{i_{1} \ldots i_{{N}}i_{1}\ldots i_{{N}}}\) is a complex number. Particularly, a diagonal tensor becomes a unit tensor in this case \({\mathcal {D}}_{ i_{1}\cdots i_{{N}}j_{1}\ldots j_{{N}}}= \delta _{i_1j_1}\cdots \delta _{i_Nj_N}\), where

is the Kronecker delta, then \({\mathcal {D}}\) is a unit tensor, denoted by \({\mathcal {I}}\). It follows from Definition 1.1 that the Moore–Penrose inverse \({\mathcal {D}}^\dagger \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}} \times {\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\) of the diagonal tensor defined in (3.1) is equal to

It is easy to see that if \({\mathcal {D}}\) is a diagonal tensor, then \({\mathcal {D}}*_{{N}}{\mathcal {D}}^\dagger \) and \({\mathcal {D}}^\dagger *_{{N}}{\mathcal {D}}\) are diagonal tensors, whose diagonal entries are 1 or 0.

The tensor \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}} \times {\mathbf {I}}_{1} \times \cdots \times {\mathbf {I}}_{{N}}}\) is orthogonal if \({\mathcal {A}}*_{{N}}{\mathcal {A}}^{*} = {\mathcal {A}}^{*}*_{{N}}{\mathcal {A}} = {\mathcal {I}}\).

The computation of the Moore–Penrose inverse of a tensor was proposed in Brazell et al. (2013), Sun et al. (2016). This method is restated in Lemma 3.1.

Lemma 3.1

(Brazell et al. 2013; Sun et al. 2016) For a tensor \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\), the singular value decomposition (SVD) of \({\mathcal {A}}\) has the form:

where \({\mathcal {U}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {I}}({N})}\) and \({\mathcal {V}} \in {\mathbb {C}}^{{\mathbf {K}}({N}) \times {\mathbf {K}}({N})}\) are orthogonal tensors, \({\mathcal {D}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\) is a diagonal tensor satisfying

wherein \(\mu _{i_{1} \ldots i_{{N}}}\) are the singular values of \({\mathcal {A}}\). Then

where

and

An effective algorithm for computing the Moore–Penrose inverse of a tensor in the form (3.3) was presented in Algorithm 1 from Huang et al. (2018). To compute the Moore–Penrose inverse by means of (3.3), it is necessary to compute the transpose of a tensor. For this purpose, we developed the following Algorithm 2.

Example 3.1

This example is aimed to verification of Algorithm 2. Consider \({\mathcal {A}}=\mathrm {rand}(2,2,2,2)\) equal to

Then, \(A = \mathrm {rsh}\left( {\mathcal {A}},4,4\right) \) is equal to

Furthermore, the results of Algorithm 2 is equal to \({\mathcal {A}}=\mathrm {reshape}(A^{\mathrm T},2,2,2,2)\), which gives

On the other hand, a direct calculation gives

Therefore, the result of direct calculation coincides with (3.4).

Lemma 3.2

Let \({\mathcal {A}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\) be a given tensor, let \(\mu _{i_{1},\cdots ,i_{{N}}}\) be the singular values of \({\mathcal {A}}\) and \(\nu _{i_{1},\cdots ,i_{{L}}}\) be the nonzero singular values of \({\mathcal {A}}\). Then

where \(\nu _{\min }({\mathcal {A}})\) denotes the smallest nonzero singular value of \({\mathcal {A}}\).

Proof

The identity \(\Vert {\mathcal {A}}\Vert _{2} = \mu _{1}({\mathcal {A}})\) follows from Lemma 2.1. Since \(\nu _{i_{1},\ldots ,i_{{L}}}\) are nonzero singular values of \({\mathcal {A}}\) defined in (3.2), it follows from (3.3) that \(\left( \nu _{i_{1},\cdots ,i_{{L}}}\right) ^{-1} > 0\) are the nonzero singular values of \({\mathcal {A}}^\dagger \). Accordingly, \(\Vert {\mathcal {A}}^\dagger \Vert _{2} =\mu _{1}(A^\dagger )= \frac{1}{\nu _{\min }({\mathcal {A}})}\). \(\square \)

A useful representation for \({{\mathcal {A}}}*_N{{\mathcal {A}}}^\dagger \) is derived in Lemma 3.3.

Lemma 3.3

Let \({\mathcal {A}}\in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\) be an arbitrary tensor and the positive integers \({\mathfrak {I}}, {\mathfrak {K}}\) are defined in (2.3). Then

Proof

We follow Algorithm 1 from Huang et al. (2018) to define \({\mathcal {A}}^\dagger \) and \({\mathcal {B}}^\dagger \). According to Step 1, it is necessary to reshape \({\mathcal {A}}\in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\) into a matrix \(A\in {\mathbb {C}}^{{\mathfrak {I}}\times {\mathfrak {K}}}\), where \({\mathfrak {I}}, {\mathfrak {K}}\) are defined in (2.3). This transformation is denoted by \(\mathrm {rsh}({\mathcal {A}})=A\). Step 2 assumes the Singular Value Decomposition (SVD) of A of the form \([U_A,D_A,V_A]=SVD(A)\), which implies \(A=U_A D_A V_A^*\), where \(U_A\in {\mathbb {C}}^{{\mathfrak {I}}\times {\mathfrak {I}}}\) and \(V_A\in {\mathbb {C}}^{{\mathfrak {K}}\times {\mathfrak {K}}}\) are unitary and the matrix \(D_A\in {\mathbb {C}}^{{\mathfrak {I}}\times {\mathfrak {K}}}\) is of the diagonal form:

where

is diagonal with singular values of A on the main diagonal and

are appropriate zero blocks. According to Step 3, we perform the reshaping operations:

Then, compute

and

According to Step 4 of Algorithm 1 from Huang et al. (2018)

Now, the tensor \({{\mathcal {A}}}\) possesses the representation:

Later, one can verify

where \(I_{{\mathfrak {I}}_R\times {\mathfrak {I}}_R}\) is the identity \({\mathfrak {I}}_R\times {\mathfrak {I}}_R\) matrix. Consequently, \({{\mathcal {A}}}*_N{{\mathcal {A}}}^\dagger \) possesses the representation (3.5). \(\square \)

The result of Proposition 3.1 will be useful.

Proposition 3.1

(Meng and Zheng 2010) Let \(W\in {\mathbb {C}}^{n\times n}\) be a unitary matrix with the block form:

Then, \(\Vert W_{12}\Vert = \Vert W_{21}\Vert \) for any unitarily invariant norm.

4 Main results

For the sake of convenience, we assume that the following condition holds

Lemma 4.1

If the Condition (4.1) is satisfied, then

Proof

According to the Lemma 3.2, we get

so

Then

which completes the proof. \(\square \)

Next, we give the decomposition of \({\mathcal {B}}^\dagger - {\mathcal {A}}^\dagger \).

Theorem 4.1

Let \({\mathcal {A}}, {\mathcal {E}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\) and \({\mathcal {B}} = {\mathcal {A}} + {\mathcal {E}}\). Then

Proof

After some verifications, one can obtain

According to the properties \((3^T)\) and \((1^T)\) from Definition 1.1, it follows that

where \({\mathcal {O}}\in {\mathbb {C}}^{{\mathbf {K}}({N}) \times {\mathbf {I}}({N})}\) is an appropriate zero tensor. Consequently

Analogously, we arrive to

which further implies

The conclusion can be obtained. \(\square \)

Lemma 4.2

If \({\mathcal {O}} \ne {\mathcal {P}} \in {\mathbb {C}}^{{\mathbf {K}}({N}) \times {\mathbf {K}}({N})}\), and \({\mathcal {P}}^{2}={\mathcal {P}}={\mathcal {P}}^{*}\), then

Proof

Since

it follows that

Therefore, \(\Vert {\mathcal {P}}\Vert _{2}=1\) in the case \({\mathcal {P}} \ne {\mathcal {O}}\). \(\square \)

Theorem 4.2

Let \({\mathcal {A}}, {\mathcal {E}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}, {\mathcal {B}} = {\mathcal {A}} + {\mathcal {E}}{.}\) If the Condition (4.1) is satisfied, then

Proof

Since \(({\mathcal {I}}-{\mathcal {A}}*_{{N}}{\mathcal {A}}^\dagger )^{2}=({\mathcal {I}}-{\mathcal {A}}*_{{N}}{\mathcal {A}}^\dagger )=({\mathcal {I}}-{\mathcal {A}}*_{{N}}{\mathcal {A}}^\dagger )^{*}\) and \(({\mathcal {I}}-{\mathcal {B}}^\dagger *_{{N}}{\mathcal {B}})^{2}=({\mathcal {I}}-{\mathcal {B}}^\dagger *_{{N}}{\mathcal {B}})=({\mathcal {I}}-{\mathcal {B}}^\dagger *_{{N}}{\mathcal {B}})^{*}\), by Lemma 4.2

and from Theorem 4.1

An application of Lemma 4.1 initiates

Furthermore, the inequality (4.3) can be verified taking into account \(\bigtriangleup = \Vert {\mathcal {A}}^\dagger \Vert _{2}\Vert {\mathcal {E}}\Vert _{2}\). \(\square \)

Theorem 4.3

If \({\mathcal {A}}, {\mathcal {E}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}, {\mathcal {B}} = {\mathcal {A}} + {\mathcal {E}}\), and \(\mathrm {rshrank}({{\mathcal {A}}})=\mathrm {rshrank}({{\mathcal {B}}})\), then

where \({\mathcal {I}}\) is the identity \({\mathbf {I}}(N)\times {\mathbf {I}}(N)\) tensor.

Proof

According to Lemma 3.3, it follows that

Furthermore, using Lemma 2.2 and

it follows that

Similarly, in view of \(\mathrm {rshrank}({{\mathcal {B}}})=\mathrm {rshrank}({{\mathcal {A}}})\), it follows \(\mathrm {rank}({B})=\mathrm {rank}({A})\), where \(\mathrm {rsh}({\mathcal {A}})=A\) and \(\mathrm {rsh}({\mathcal {B}})=B\). The SVD of \({\mathcal {B}}\) is given by \([U_B,D_B,V_B]=SVD(B)\). Now, consider the reshaping operations

where

is diagonal with singular values of B on the main diagonal. This causes

and further

Now, observe the tensor products \({{\mathcal {U}}}_A^**_N {\mathcal U}_B\) and \({{\mathcal {U}}}_B^**_N {{\mathcal {U}}}_A\). They are also unitary and equal to

where

In addition, it can be verified that \(\Vert {\cdot }\Vert _2\) is a unitary invariant tensor norm (Govaerts and Pryce 1989), which implies in conjunction with Lemma 2.2:

An application of Lemma 2.4 further implies

Finally, using the result from Govaerts and Pryce (1989), it follows that

On the other hand, in dual case, it follows that

The proof can be completed by verifying \( \mu _{1}\left( {\mathcal {W}}_{12}\right) =\mu _{1}\left( {\mathcal {W}}_{21}^*\right) . \) Indeed, according to Proposition 3.1, it follows that \(\Vert W_{12}\Vert _2=\Vert W_{21}\Vert _2\). The proof can be completed using the result \(\Vert W_{21}\Vert _2=\Vert W_{21}^*\Vert _2\) from Govaerts and Pryce (1989). \(\square \)

Corollary 4.1

If \({\mathcal {A}}, {\mathcal {E}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}\), \({\mathcal {B}} = {\mathcal {A}} + {\mathcal {E}}\), and \(\mathrm {rshrank}({{\mathcal {A}}}) = \mathrm {rshrank}({{\mathcal {B}}})\), let

then

Proof

Clearly, \({\mathcal {G}}={\mathcal {B}}^\dagger *_{{N}}{\mathcal {B}}*_{{N}}{\mathcal {B}}^\dagger *_{{N}}({\mathcal {I}}-{\mathcal {A}}*_{{N}}{\mathcal {A}}^\dagger )\). Then

since

Therefore, \(\Vert {\mathcal {I}}-{\mathcal {B}}*_{{N}}{\mathcal {B}}^\dagger \Vert _{2}=1\), applying Theorem 4.3, one can obtain

Thus, the statement can be obtained. \(\square \)

Theorem 4.4

Let \({\mathcal {A}}, {\mathcal {E}} \in {\mathbb {C}}^{{\mathbf {I}}({N}) \times {\mathbf {K}}({N})}, {\mathcal {B}} = {\mathcal {A}} + {\mathcal {E}}\) and \({\mathfrak {I}},{\mathfrak {K}}\) are defined as in (2.3). If the Condition (4.1) is satisfied, then

where \(\triangle = \Vert {\mathcal {A}}^\dagger \Vert _{2}\Vert {\mathcal {E}}\Vert _{2}\) and the parameter k is defined as follows:

- (1)

if \(\mathrm {rshrank}({{\mathcal {A}}}) < \min ({\mathfrak {I}}, {\mathfrak {K}})\), then \(k = \frac{1+\sqrt{5}}{2}\);

- (2)

if \(\mathrm {rshrank}({{\mathcal {A}}}) = \min ({\mathfrak {I}}, {\mathfrak {K}})\), then \(k = \sqrt{2}\);

- (3)

if \(\mathrm {rshrank}({{\mathcal {A}}}) = {\mathfrak {I}} ={\mathfrak {K}}\), then \(k = 1\).

Proof

Let

By the Lemma 4.1, we can get

where \(\triangle = \Vert {\mathcal {A}}^\dagger \Vert _{2}\Vert {\mathcal {E}}\Vert _{2} = \Vert ({\mathcal {A}}^\dagger )^{*}\Vert _{2}\Vert {\mathcal {E}}^{*}\Vert _{2}\). Let

then

(1) Let \({\mathcal {X}} \in {\mathbb {C}}^{{I}_{1} \times \cdots \times {I}_{{N}} \times {K}_{1} \times \cdots \times {K}_{{N}}}, \Vert {\mathcal {X}}\Vert _{2} = 1\), and \({\mathcal {X}} = {\mathcal {X}}_{1}+{\mathcal {X}}_{2}\), where

Clearly, \({\mathcal {X}}_{1}\) and \({\mathcal {X}}_{2}\) are orthogonal; hence,

Therefore, there must be an angle \(\varphi \) which makes

Then

where \({\mathcal {Y}}_{1}={\mathcal {F}}*_{{N}}{\mathcal {X}}_{1},\ {\mathcal {Y}}_{2}={\mathcal {G}}*_{{N}}{\mathcal {X}}_{2},\ {\mathcal {Y}}_{3}={\mathcal {H}}*_{{N}}{\mathcal {X}}_{1} \).

Since

It is easy to verify that \({\mathcal {Y}}_{3}\) is orthogonal to \({\mathcal {Y}}_{1}\) and \({\mathcal {Y}}_{2}\); therefore

Therefore

(2) If \(\mathrm {rshrank}({{\mathcal {A}}})= \mathrm {rshrank}({{\mathcal {B}}}) = {\mathfrak {K}} < {\mathfrak {I}}\), owing to

then \(({\mathcal {I}}-{\mathcal {B}}^\dagger *_{{N}}{\mathcal {B}}) = {\mathcal {O}}\); therefore, \({\mathcal {H}}={\mathcal {O}}, {\mathcal {Y}}_{3}={\mathcal {O}}\). If rank\({(A)} =\) rank\({{(B)}} = {\mathfrak {I}} < {\mathfrak {K}}\), owing to

then \(({\mathcal {I}}-{\mathcal {A}}*_{{N}}{\mathcal {A}}^\dagger )={\mathcal {O}}\), so \({\mathcal {G}}={\mathcal {O}}, {\mathcal {Y}}_{2}={\mathcal {O}}\). When one of \({\mathcal {Y}}_{2}\) or \({\mathcal {Y}}_{3}\) is the zero tensor

Hence

(3) In the case \(\mathrm {rshrank}({{\mathcal {A}}})= \mathrm {rshrank}({{\mathcal {B}}}) = {\mathfrak {I}} = {\mathfrak {K}}\), according to (2), we know \({\mathcal {G}}={\mathcal {H}}={\mathcal {O}}\), so the conclusion is established. \(\square \)

Next, we introduce the condition number of the Moore–Penrose inverse for tensor \({\mathcal {A}}\):

Theorem 4.5

If the Condition (4.1) is satisfied, then

Proof

The statement can be verified using Theorem 4.4 and the definition of \({\mathbb {K}}_{2}({\mathcal {A}})\). \(\square \)

Theorem 4.5 shows that the perturbation \({\mathcal {E}}\) of \({\mathcal {A}}\) has little influence on \({\mathcal {A}}^\dagger \) when the condition number \({\mathbb {K}}_{2}({\mathcal {A}})\) is small, and when the condition number \({\mathbb {K}}_{2}({\mathcal {A}})\) is large, the influence of \({\mathcal {E}}\) on the disturbance to \({\mathcal {A}}^\dagger \) may be larger.

5 Examples

Example 5.1

This example is aimed to the verification of the inequality (4.2). Let the tensor \({\mathcal {A}} = 10^3*\mathrm {rand}(2,2,2,2)\) be defined by

and let \({\mathcal {E}} = 10^{-1}*\mathrm {rand}(2,2,2,2)\) be defined by

Then, \({\mathcal {B}} = {\mathcal {A}}+{\mathcal {E}}\) is defined by

It holds, \(\mathrm {rshrank}({{\mathcal {A}}})=\mathrm {rshrank}({{\mathcal {B}}})=4\). In addition, an application of Huang et al. (2018, Algorithm 1) gives the Moore–Penrose inverse of \({\mathcal {A}}\):

and the following Moore–Penrose inverse of \({\mathcal {B}}\):

In view of (2.5), it is easy to check that the tensor norms are equal to \(\Vert {\mathcal {A}}^\dagger \Vert _{2} =0.026095036211067\), \(\Vert {\mathcal {E}}\Vert _{2}=0.235145716909881\) and \(\bigtriangleup = \Vert {\mathcal {A}}^\dagger \Vert _{2}\Vert {\mathcal {E}}\Vert _{2}=0.006136135997641 < 1\). Therefore, Condition (4.1) is satisfied. Then, \(\Vert {\mathcal {B}}^\dagger \Vert _{2} =0.026093083833995\) and \(\frac{\Vert {\mathcal {A}}^\dagger \Vert _{2}}{1 - \bigtriangleup }= 0.026256147502919.\) Hence, the inequality (4.2) in Lemma 4.1 is verified.

Example 5.2

The tensors in Example 5.1 are invertible. Example 5.2 is aimed to the verification of the inequality (4.2) in singular tensor case. To this end, let \({\mathcal {A}}, {\mathcal {E}}\in {\mathbb {R}}^{(2\times 2)\times (2\times 2)}\) with

and

Then, the tensor \({\mathcal {B}} = {\mathcal {A}}+{\mathcal {E}}\) is defined by

It holds, \(\mathrm {rshrank}({{\mathcal {A}}})=\mathrm {rshrank}({{\mathcal {B}}})=2\). In addition, an application of Huan et al. (2018, Algorithm 1) gives the following Moore–Penrose inverse of \({\mathcal {A}}\):

Similarly

Following (2.5), it is easy to check \(\Vert {\mathcal {A}}^\dagger \Vert _{2} =0.005446932213520\), \(\Vert {\mathcal {E}}\Vert _{2}=0.149158220173799\) and \(\bigtriangleup = \Vert {\mathcal {A}}^\dagger \Vert _{2}\Vert {\mathcal {E}}\Vert _{2}=8.124547143759799\cdot 10^{-4} < 1\). Therefore, Condition (4.1) is satisfied. Then, \(\Vert {\mathcal {B}}^\dagger \Vert _{2} = 0.005446449437497\) and \(\frac{\Vert {\mathcal {A}}^\dagger \Vert _{2}}{1 - \bigtriangleup }= 0.005451361197625,\) which confirms the inequality (4.2) in Lemma 4.1.

Example 5.3

This example is a continuation of Example 5.1 to verify the inequality (4.3) proved in Theorem 4.2. Therefore, for the tensors \({\mathcal {A}}\) and \({\mathcal {E}}\) defined in Example 5.1, we have

and

Hence, inequality (4.3) of Theorem 4.2 is valid.

Example 5.4

This example is a continuation of Example 5.2 to verify the inequality (4.3) proved in Theorem 4.2. Therefore, for the tensors \({\mathcal {A}}\) and \({\mathcal {E}}\) defined in Example 5.2, we have

and

Hence, inequality (4.3) of Theorem 4.2 is confirmed.

Example 5.5

We shall use again the settings of Example 5.2 to verify the validity of equality (4.4).

After appropriate calculations, one can verify

In addition

Hence

Example 5.6

This example is a continuation of Example 5.2 with the aim to verify the validity of inequality (4.5). It is possible to compute

In addition, \(\Vert {\mathcal {E}}\Vert _{2}\Vert {\mathcal {A}}^\dagger \Vert _{2} \Vert {\mathcal {B}}^\dagger \Vert _{2}=4.424993522104842\cdot 10^{-6}.\) Therefore, the inequality (4.5) is verified.

Example 5.7

In this example, we verify cases (1)–(3) of Theorem 4.4.

Case (1) The tensors \({\mathcal {A}}, {\mathcal {E}}\) and \({\mathcal {B}}\) are reused from Example 5.2. It can be verified that \(\mathrm {rshrank}({{\mathcal {A}}})=2 < 4=\min ({\mathfrak {I}}, {\mathfrak {K}})\). From example 5.4, we have

and since \(\bigtriangleup = \Vert {\mathcal {A}}^\dagger \Vert _{2}\Vert {\mathcal {E}}\Vert _{2}=8.124547143759799\cdot 10^{-4}\) (see Example 5.2), it follows

Hence, inequality (4.6) of Theorem 4.4 is valid.

Case (2) We consider the tensors \({\mathcal {A}}, {\mathcal {E}}\) and \({\mathcal {B}}\) from Example 5.1. It is clear that \(\mathrm {rshrank}({{\mathcal {A}}})= 4=\min ({\mathfrak {I}}, {\mathfrak {K}})\). From Example 5.3, we have

and since \(\bigtriangleup = \Vert {\mathcal {A}}^\dagger \Vert _{2}\Vert {\mathcal {E}}\Vert _{2}= 0.006136135997641\) (see Example 5.1), we have

Hence, inequality (4.6) of Theorem 4.4 is valid.

Case (3) We consider the tensors \({\mathcal {A}}, {\mathcal {E}}\) and \({\mathcal {B}}\) from Example 5.1. Then, \(\mathrm {rshrank}({{\mathcal {A}}})= 4={\mathfrak {I}}={\mathfrak {K}}\). As in the previous case [Case (2)], we have

and

6 Concluding remarks

The aim of this paper is to generalize some results about the perturbation theory of the matrix pseudoinverse to tensors. For this purpose, we derive several useful representations and introduce some notions. The spectral norm of even-order tensors is defined by a computationally effective definition and investigated. In addition, useful representations of \({{\mathcal {A}}}*_N{{\mathcal {A}}}^\dagger \) and \({{\mathcal {I}}}-{{\mathcal {A}}}*_N{{\mathcal {A}}}^\dagger \) are derived. As a result, we explore the perturbation bounds for Moore–Penrose inverse of tensor via Einstein product. Unlike to so far exploited approaches which were developed only in the tensor or in the matrix case, our approach assumes an exact transition from one to another space. In this way, it is possible to extend many of known results from the matrix case into the multiarray case. The results derived in current research extend the classical results in the matrix case, derived by Stewart (1977) and Wedin (1973). It is shown that the influence of the perturbation in the tensors depends on exactly defined condition number. Illustrative numerical examples also confirm derived theoretical results.

Recently, Ji and Wei (2017, 2018) investigated the weighted Moore–Penrose inverses and the Drazin inverse of even-order tensors with Einstein product. It is natural to investigate possible extensions of derived results to the perturbation bounds for the weighted Moore–Penrose inverses and the Drazin inverse of tensors via Einstein product.

References

Behera R, Mishra D (2017) Further results on generalized inverses of tensors via the Einstein product. Linear Multilinear Algebra 65:1662–1682

Ben-Israel A, Greville TNE (2003) Generalized inverses: theory and applications, 2nd edn. Springer, New York

Brazell M, Li N, Navasca C, Tamon C (2013) Solving multilinear systems via tensor inversion. SIAM J Matrix Anal Appl 34:542–570

Bu C, Zhang X, Zhou J, Wang W, Wei Y (2014) The inverse, rank and product of tensors. Linear Algebra Appl 446:269–280

Cai LX, Xu WW, Li W (2011) Additive and multiplicative perturbation bounds for the Moore–Penrose inverse. Linear Algebra Appl 434:480–489

Che M, Wei Y (2019) Randomized algorithms for the approximations of Tucker and the tensor train decompositions. Adv Comput Math 45:395–428

Che M, Qi L, Wei Y (2016) Perturbation bounds of tensor eigenvalue and singular value problems with even order. Linear Multilinear Algebra 64:622–652

Che M, Qi L, Wei Y (2019) Stochastic \(R_0\) tensors to stochastic tensor complementarity problems. Optim Lett 13:261–279

Comon P, ten Berge JMF, De Lathauwer L, Castaing J (2009) Generic and typical ranks of multi-way arrays. Linear Algebra Appl 430:2997–3007

Cvetkovic-Illic DS, Wei Y (2017) Algebraic properties of generalized inverses. Springer, Singapore

Ding W, Wei Y (2016) Solving multi-linear systems with \({{\cal{M}}}\)-tensors. J Sci Comput 68:689–715

Djordjević DS, Rakočević V (2008) Lectures on generalized inverses. Faculty of Sciences and Mathematics, University of Niš, Niš

Einstein A (2007) The foundation of the general theory of relativity. In: Kox A, Klein M, Schulmann R (eds) The collected papers of Albert Einstein, vol 6. Princeton University Press, Princeton, pp 146–200

Govaerts W, Pryce JD (1989) A Singular value inequality for block matrices. Linear Algebra Appl 125:141–148

Harrison AP, Joseph D (2016) Numeric tensor framework: exploiting and extending Einstein notation. J Comput Sci 16:128–139

Huang S, Zhao G, Chen M (2018) Tensor extreme learning design via generalized Moore–Penrose inverse and triangular type-2 fuzzy sets. Neural Comput Appl. https://doi.org/10.1007/s00521-018-3385-5

Ji J, Wei Y (2017) Weighted Moore–Penrose inverses and the fundamental theorem of even-order tensors with Einstein product. Front Math China 12:1317–1337

Ji J, Wei Y (2018) The Drazin inverse of an even-order tensor and its application to singular tensor equations. Comput Math Appl 75:3402–3413

Jin H, Bai M, Benítez J, Liu X (2017) The generalized inverses of tensors and an application to linear models. Comput Math Appl 74:385–397

Li Z (2016) Bounds on the spectral norm and the nuclear norm of a tensor based on tensor partitions. SIAM J Matrix Anal Appl 37:1440–1452

Li W, Ng MK (2014) The perturbation bound for the spectral radius of a nonnegative tensor. Adv Numer Anal 2014, Article ID 109525. https://doi.org/10.1155/2014/109525

Li Z, Xu Q, Wei Y (2013) A note on stable perturbations of Moore–Penrose inverses. Numer Linear Algebra Appl 20:18–26

Liang M-L, Zheng B (2018) Gradient-based iterative algorithms for solving Sylvester tensor equations and the associated tensor nearness problems. arXiv:1811.10378v1

Liang M-L, Zheng B, Zhao R-J (2019) Tensor inversion and its application to the tensor equations with Einstein product. Linear Multilinear Algebra 67:843–870

Liu X, Wang W, Wei Y (2008) Continuity properties of the \(\{\rm 1\}\)-inverse and perturbation bounds for the Drazin inverse. Linear Algebra Appl 429:1026–1037

Ma H (2018) Acute perturbation bounds of weighted Moore–Penrose inverse. Int J Comput Math 95:710–720

Medellin D, Ravi VR, Torres-Verdin C (2016) Multidimensional NMR inversion without Kronecker products: multilinear inversion. J Magn Reson 269:24–35

Meng L, Zheng B (2010) The optimal perturbation bounds of the Moore–Penrose inverse under the Frobenius norm. Linear Algebra Appl 432:956–963

Meng L, Zheng B, Ma P (2017) Perturbation bounds of generalized inverses. Appl Math Comput 298:88–100

Meyer C (1980) The condition number of a finite Markov chain and perturbation bounds for the limiting probabilities. SIAM J Algebr Discrete Methods 1:273–283

Miao Y, Qi L, Wei Y (2019) Generalized tensor function via the tensor singular value decomposition based on the T-product. arXiv:1901.0425v2

Panigrahy K, Mishra D (2018) An extension of the Moore–Penrose inverse of a tensor via the Einstein product. arXiv:1806.03655v1

Panigrahy K, Mishra D (2019) Reverse order law for weighted Moore–Penrose inverses of tensors. arXiv:1901.01527v1

Panigrahy K, Behera R, Mishra D (2018) Reverse order law for the Moore–Penrose inverses of tensors. Linear Multilinear Algebra. https://doi.org/10.1080/03081087.2018.1502252

Qi L (2005) Eigenvalues of a real supersymmetric tensor. J Symb Comput 40:1302–1324

Qi L, Luo Z (2017) Tensor analysis: spectral theory and special tensors. SIAM, Philadelphia

Qiao S, Wang X, Wei Y (2018) Two finite-time convergent Zhang neural network models for time-varying complex matrix Drazin inverse. Linear Algebra Appl 542:101–117

Shi X, Wei Y, Ling S (2013) Backward error and perturbation bounds for high order Sylvester tensor equation. Linear Multilinear Algebra 61:1436–1446

Stanimirović PS, Ćirić M, Katsikis VN, Li C, Ma H (2018) Outer and \((b,c)\) inverses of tensors. Linear Multilinear Algebra. https://doi.org/10.1080/03081087.2018.1521783

Stewart G (1977) On the perturbation of pseudoinverses, projections and linear least squares problems. SIAM Rev 19:634–662

Sun L, Zheng B, Bu C, Wei Y (2014) Some results on the generalized inverse of tensors and idempotent tensors. arXiv:1412.7430v1

Sun L, Zheng B, Bu C, Wei Y (2016) Moore–Penrose inverse of tensors via Einstein product. Linear Multilinear Algebra 64:686–698

Sun L, Zheng B, Bu C, Wei Y (2018) Generalized inverses of tensors via a general product of tensors. Front Math China 13:893–911

Wang G, Wei Y, Qiao S (2018) Generalized inverses: theory and computations, 2nd edn, Developments in Mathematics, vol 53. Springer, Singapore; Science Press, Beijing

Wedin P (1973) Perturbation theory for pseudo-inverses. BIT 13:217–232

Wei Y (1999) On the perturbation of the group inverse and oblique projection. Appl Math Comput 98:29–42

Wei Y (2014) Generalized inverses of matrices, chapter 27. In: Hogben L (ed) Handbook of linear algebra, 2nd edn. Chapman and Hall/CRC, Boca Raton

Wei Y (2017) Acute perturbation of the group inverse. Linear Algebra Appl 534:135–157

Wei Y, Ding W (2016) Theory and computation of tensors. Academic Press, London

Wei M, Ling S (2010) On the perturbation bounds of g-inverse and oblique projections. Linear Algebra Appl 433:1778–1792

Xie P, Xiang H, Wei Y (2019) Randomized algorithms for total least squares problems. Numer Linear Algebra Appl 26(1):e2219 16 pp

Xu Z, Sun J, Gu C (2008) Perturbation for a pair of oblique projectors \(AA_{M, N}^\dagger \) and \(AA_{M, N}^\dagger \). Appl Math Comput 203:432–446

Xu Z, Gu C, Feng B (2010a) Weighted acute perturbation for two matrices. Arab J Sci Eng 35:129–143

Xu Q, Wei Y, Gu Y (2010b) Sharp norm-estimations for Moore–Penrose inverses of stable perturbations of Hilbert \(C^*\)-module operators. SIAM J Numer Anal 47:4735–4758

Xu W, Cai L, Li W (2011) The optimal perturbation bounds for the weighted Moore–Penrose inverse. Electron J Linear Algebra 22:521–538

Zhang N, Wei Y (2008) A note on the perturbation of an outer inverse. Calcolo 45:263–273

Zheng B, Meng L, Wei Y (2017) Condition numbers of the multidimensional total least squares problem. SIAM J Matrix Anal Appl 38:924–948

Acknowledgements

The authors would like to thank the editor and two referees for their detailed comments. This research is supported by the bilateral project between China and Serbia “The theory of tensors, operator matrices and applications (No. 4-5)”. H. Ma would like to thank Prof. Dragana S. Cvetković Ilić for her kind invitation and great hospitality; thank Prof. D.S. Djordjević and Prof. V. Rakoćević for their nice monograph (Djordjević and Rakočević 2008). Partial work is completed during her visiting at University of Nis̆ in 2017.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Jinyun Yuan.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Haifeng Ma is supported by the bilateral project between China and Poland (No. 37-18). Predrag S. Stanimirović gratefully acknowledges support from the Research Project 174013 of the Serbian Ministry of Science.

Rights and permissions

About this article

Cite this article

Ma, H., Li, N., Stanimirović, P.S. et al. Perturbation theory for Moore–Penrose inverse of tensor via Einstein product. Comp. Appl. Math. 38, 111 (2019). https://doi.org/10.1007/s40314-019-0893-6

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40314-019-0893-6