Abstract

In this paper, we will present a variational PDE-based image inpainting model in which we have used the square of the \(L^2\) norm of Hessian of the image u as regularization term. The Euler–Lagrange equation will lead us to a fourth-order linear PDE. For time discretization, we have used convexity splitting and the resulting semi-discrete scheme is solved in Fourier domain. Stability analysis for the semi-discrete scheme is carried out. We will demonstrate some numerical results and compare with \(\text {TV}-L^2\) and \(\text {TV}-H^{-1}\) model.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Image inpainting is a process of filling in the missing part of an image from the information of its surroundings. Mathematically inpainting of an image is an extrapolation of the image. Image inpainting is an important area of research in the field of image processing. Inpainting has lot of applications in real life such as in restoration of ancient frescoes and in reduction of artifacts in MRI, CT, and PET images.

There are several methods for inpainting such as exemplar-based (Criminisi et al. 2003), stochastic methods (Li 2011), texture synthesis (Efros and Leung 1999), wavelet based (Dobrosotskaya and Bertozzi 2008) and PDE-based methods (Chan and Shen 2001a, b; Bertozzi et al. 2007a). We will focus on PDE-based methods. PDE-based methods influenced the researcher because it has a strong mathematical background and most of the physical phenomenon can be represented by PDEs. It helps us to understand the underlying physics and can come up with new fruitful model and numerical scheme.

In PDE-based methods the PDE can be obtained by minimizing an energy functional or we can propose a PDE directly for inpainting. If the PDE is obtained by minimizing an energy functional then the method is called a variational method.

Let u be the restored (inpainted) image from the given image f defined on a domain \(\varOmega \subset \mathbb {R}^2\) with \(D\subset \varOmega \) as missing part (to be inpainted) then the energy E(u) used for minimizing in the variational inpainting method has the form:

where \(B_1\) and \(B_2\) are two Banach spaces with \(B_2 \subseteq B_1\), \(f\in B_1\) and \(\lambda \) defined as

with \(\lambda _0 \gg 1\).

The term R(u) is called regularization term, \(\Vert f-u\Vert ^2_{B_1}\) is called fidelity term and \(\lambda \) is called fidelity parameter which forces the inpainting image to remain closer to the original image outside the domain D. Regularization term R(u) plays an important role in image inpainting. Several models have been proposed by choosing different R(u).

The first variational method for inpainting is introduced in Chan and Shen (2001a). They have chosen \(R(u)=\int _{\varOmega }|\nabla u| \mathrm{d}x\mathrm{d}y\), total variation (TV) of u, and \(B_1=L^2\) and \(B_2=\text {BV}(\varOmega )\), space of bounded variation. This inpainting model (Chan and Shen 2001a) is known as \(\text {TV}-L^2\) inpainting model. The Euler–Lagrange equation for \(\text {TV}-L^2\) model will give a second-order equation.

The problem with the second-order models is that they are not able to fill the large gaps and unable to connect the level lines in the missing domain. So the authors Chan and Shen (2001b) proposed a third-order model based on TV which is called curvature-driven diffusion (CDD) model. This work is motivated by the work of Perona and Malik (1990) introduced for image denoising.

Later on motivated by the segmentation work of Nitzberg et al. (1993) and Chan et al. (2002) a fourth-order model called Euler Elastica (EE) model was proposed. This work is also related to the earlier work of Masnou and Morel (1998) but the new approach is a functionalized model and in the current model have consider elastica energy in terms of u, whereas in Masnou and Morel (1998) elastica curve is chosen for the level lines of the image. In EE model R(u) is chosen as \(\int _{\varOmega } (a + b\kappa ^2)|\nabla u| \mathrm{d}x\mathrm{d}y\), where a and b are constants and \(\kappa =\nabla \cdot \frac{\nabla u}{|\nabla u|}\) is curvature.

The first work for PDE-based inpainting was proposed by Bertalmio et al. (2000). They imitate the technique of museum artists and propose a third-order nonlinear PDE for inpainting. In the later work (Bertalmio et al. 2001), authors found that the third-order PDE proposed in Bertalmio et al. (2000) is connected to two-dimensional Navier–Stokes (NS) equation. They found that the problem proposed in Bertalmio et al. (2000) is related to the inviscid Euler equations from incompressible flow where the image intensity acts like the stream function in the fluid problem. Esedoglu and Shen (2002) have proposed an inpainting model based on the Mumford and Shah (1989) image segmentation model and they have shown that this model is not suitable for inpainting. So in the same paper (Esedoglu and Shen 2002), they improve the model using the idea of Euler Elastica (Chan et al. 2002) model. They penalize square of the curvature along an edge contour and proposed a model called Mumford–Shah–Euler image inpainting model. This model results in a fourth-order nonlinear parabolic PDE containing a small parameter.

Later on, Bertozzi et al. (2007a) have proposed a new inpainting model by modifying the Cahn–Hilliard equation generally used in material science for phase separation phenomena. In the later work (Bertozzi et al. 2007b), authors have done some analysis of the Cahn–Hilliard-based inpainting model. Since the Cahn–Hilliard model (Bertozzi et al. 2007a) contains a bimodal potential the model is effective for binary image inpainting only. Burger et al. (2009) have generalized the model of Bertozzi et al. for inpainting of gray-scale images. The model of Burger et al. (2009) is also known as \(\text {TV}-H^{-1}\) model. Recently, many authors have tried to generalize the binary inpainting model of Bertozzi et al. in Cherfils et al. (2017), Vijayakrishna (2015) and Theljani et al. (2017). In Schönlieb and Bertozzi (2011), authors have used the binary inpainting model bitwise to gray-scale image for gray-scale image inpainting.

As we mentioned earlier that because of the bimodal potential, the Cahn–Hilliard model is applicable for binary image only so now the question is can we avoid the potential term and still get a model which is applicable for both binary and gray images. In this paper, we have shown that indeed we can get rid of the potential term and the proposed model is motivated by the denoising work (Kašpar and Zitová 2003). The generalized model (Burger et al. 2009) is nonlinear in nature which is difficult to solve and time consuming. So in this current work we will propose a linear model which is faster and effective for gray-scale image inpainting.

The paper is organized as follows: in Sect. 2 we have discussed some related existing models and followed it by our proposed model. Section 3 talks about the numerical scheme for our proposed model. In Sect. 4, we have presented the existence and uniqueness theorem, and an overview of the proof and at the end we have presented the stability analysis of the numerical method. In Sect. 5, we have presented the numerical results of our model and also compared our results with models such as \(\text {TV}-L^2\) and \(\text {TV}-H^{-1}\). Finally, in Sect. 6 we draw some conclusion about our model and calculated results.

2 Proposed model and some existing model

2.1 \(\text {TV}-L^2\) model

\(\text {TV}-L^2\) inpainting model is a variational type of inpainting model. In this case, regularization term \(R(u)=\int _{\varOmega }|\nabla u|\mathrm{d}x\mathrm{d}y\) and fidelity term is taken in \(L^2\) norm. The minimization energy is:

Corresponding Euler–Lagrange equation will be

Then the steepest descent equation for E is

2.2 Cahn–Hilliard inpainting model

This model is based on the Cahn–Hilliard equation (6) which arises in material science to simulate the physical phenomena called phase separation for a heated mixture of alloys. It simulates the evolutionary process of a mixed binary alloy separating into separate metal ingredients over a particular time interval. It is basically divided into two stages that occurs right after the sudden cooling of the mixture. The Cahn–Hilliard equation has the following form:

where \(W(u)=u^2(1-u)^2\) is bimodal (double-well) potential.

Bertozzi et al. (2007a) have modified this CH equation (6) by adding the fidelity term \(\lambda (f-u)\) and used it for binary image inpainting. So the modified Cahn–Hilliard equation used for inpainting is as follows:

This equation is sum of gradient of two energies. The first part is gradient of the energy \(E_1\) in \(H^{-1}\) norm with

and the fidelity part is gradient of \(E_2\) in \(L^2\) norm where

They have used convexity splitting to both the energies \(E_1\) and \(E_2\), which is proposed by Eyre (1998) for solving this equation.

2.3 \(\text {TV}-H^{-1}\) model

As the double-well potential W(u) is present in Eq. (7), CH inpainting model is applicable for binary inpainting only. The model proposed in Burger et al. (2009) is a generalization of CH model for gray-value image. With an approximation to the TV functional \(\text {TV} (u)\) in terms of \(\epsilon \) and a bimodal potential W(u), they have defined a sequence of functionals \(J^{\epsilon }(u, v)\) involving an entirely different fidelity term in \(H^{-1}\) norm to that of the usual \(L^2\) fidelity. For a given f and \(v\in L^2(\varOmega )\), define \(J^{\epsilon }(u, v)\) as follows:

which was shown to \(\varGamma \)-converge, as \(\epsilon \rightarrow 0\) in the topology of \(L^1(\varOmega )\), to J(u, v), given by,

It was practically accomplished for the generalized inpainting using sub-gradients (\(\partial \)) of the TV functional \(\text {TV}(u)\) within the flow, which leads to structure inpainting with smooth curvature of level sets. The inpainted image u of \(f\in L^2(\varOmega )\) shall evolve via

where \(\text {TV}(u)\) can be defined using the distributional derivative of u, i.e. Du, as

For the sake of computations a regularized form for the sub-gradient \(p=\nabla \cdot \frac{\nabla u}{|\nabla u|}\) with a parameter \(\delta \) given by \(\nabla \cdot \frac{\nabla u}{\sqrt{|\nabla u|^2+\delta ^2}}\) was considered. For more information on the nature of \(p\in \partial \text {TV}(u)\) and various parameters involved in the model, refer Burger et al. (2009) which also includes a proof on the existence of a minimizer to \(J^{\epsilon }(u,v)\) using fixed point theory approach.

2.4 Proposed model

The model which we are going to propose is of variational type. We choose the regularization term R(u) as \(\int _{\varOmega }(u_{xx}^2+2u_{xy}^2+u_{yy}^2)\mathrm{d}x\mathrm{d}y\), which is used for denoising in Kašpar and Zitová (2003) and \(B_1=B_2=L^2(\varOmega )\). Then the minimization energy (1) is reduced to:

The corresponding Euler–Lagrange equation is:

where \(\varDelta ^2\) is biharmonic operator and \(\lambda \) is same as in (2).

But biharmonic operator is smoothing the images so we want a parameter \(\epsilon \) to control the smoothing. So we consider the following:

If we evolve with respect to time t then the equation will reduces to:

Note that our model is a linear model and does not contain any potential term. We impose the boundary condition:

where \(\nu \) is the outward normal vector to the boundary of \(\varOmega \).

Remark

In Papafitsoros et al. (2013), an attempt has been made without much success to deal with binary inpainting problem using (17) for \(\lambda =2\). No discussion on either numerical scheme or on methodology is provided in Papafitsoros et al. (2013).

3 Convexity splitting and numerical scheme

The numerical solution has been obtained using the finite difference approach similar to Bertozzi et al. (2007a). The proposed PDE model is first discretized in time according to the convexity splitting of the related functional, proposed by Eyre (1998) and then the discrete Fourier transform has been applied on the entire expression to get an explicit discrete formula for the solution in Fourier domain. Finally, the discrete inverse Fourier transform has been taken on the solution obtained in Fourier domain to get the required solution in special domain.

Convexity splitting methods are used widely in optimization. It usually used to solve gradient system and also applicable to the evolution equations which do not follow a variational principle. Consider the problem of the type:

In convexity splitting, we split the energy E as

where \(E_c\) and \(E_e\) are strictly convex, i.e. \(-E_e\) is concave. In the resulting scheme, convex part is considered implicitly and concave part is taken explicitly.

For the numerical approximation of u(t), the convexity splitting of E being used with the time-step \(\varDelta t\) as follows:

Here \(U_{k+1}\) and \(U_k\) denote the approximations to u(t) at \(t=t_{k+1}(=(k+1)\varDelta t)\) and \(t=t_k(=k \varDelta t)\), respectively. Like Cahn–Hilliard equation (7), Eq. (17) can be derived as the gradient flow of the energies \(F_1\) in \(H^{-1}\) and \(F_2\) in \(L^2\) norm, where

The convexity splitting idea for our model is as follows. Science \(F_1\) is convex so we will split \(F_2\) only. Write \(F_2=F_{21}-F_{22}\) with

Hence, the resulting time-stepping scheme for the above-stated choices of convexity splittings for \(F_1\) and \(F_2\) is

where \(\nabla _{H^{-1}}\) is gradient in \(H^{-1}\) inner product and \(\nabla _{L^2}\) is gradient w.r.t the \(L^2\) inner product. Finally, we arrive at a super-positioned gradient flow for the energy that evolves from two different inner products from the Hilbert spaces \(H^{-1}\) and \(L^2\) as

where \( C_2\) are positive and should be large enough to make \(F_{21}\) and \(F_{22}\) as convex functionals.

For the numerical evaluations of our proposed model, we used an unconditionally stable numerical scheme convexity splitting (21) for the time variable and the Fourier spectral (Gillette 2006) method for the space variable.

Applying the two-dimensional (2D) Discrete Fourier Transform (DFT) on (21) to get an explicit formula for \({\hat{u}}_{k+1}\) in frequency domain, given by

with \(M_{l,m}\) for \(l=0, 1, 2, \dots , N-1\) and \(m=0, 1, 2, \dots , M-1\) are given by Gillette (2006),

where \(N\times M\) is the size of the image, and \({\varDelta x}\) and \({\varDelta y}\) are the step size in x direction and y direction, respectively.

At every step, we compute the 2D-inverse DFT of \({\hat{u}}^{n+1}\) and extract its real part to get \(u^{n+1}\). Here we have used the relation \(\widehat{\varDelta u}(l,m)=M_{l,m} {\hat{u}}(l,m)\) whose derivation is given in the thesis of Gillette (2006).

4 Analysis of proposed model

In this section, we will prove the existence and uniqueness of the solution of both the stationary equation (16) and the parabolic equation (17). The existence of the solution of the Eq. (16) follows from the Lax–Milgram lemma and for Eq. (17), we will follow the Faedo–Galerkin approach. Before going into the proof let us define the space \(V:=\{v\in H^2(\varOmega ) : \frac{\partial v}{\partial \nu }=0 \quad \text {on} \quad \partial \varOmega \}\) and define the energy norm on V as \(\Vert v \Vert _V=(\epsilon \Vert \varDelta v\Vert ^2 +\lambda _0 \Vert v\Vert ^2)^{\frac{1}{2}}\).

Lemma 1

Temam (1997) For any \(\lambda >0,\) \(\Vert \cdot \Vert _V\) is a norm in V which is equivalent to \(H^2\) norm.

4.1 Existence of steady-state equation

Here we will prove the existence and uniqueness of the steady-state equation (16). Eq. (16) can be written as

and impose the boundary condition

Now multiplying (23) by \(v\in V\) and integrating over \(\varOmega \) we get

Applying integration by parts and using the two times in the first term and using the boundary condition we get the weak form of the Eq. (23) as

Theorem 1

There exist a unique solution of Eq. (25) in the space V which continuously depend on initial data.

Proof

Eq. (25) can be written as

where the bilinear form \(a(u,v)=\int _{\varOmega }\varDelta u \varDelta v+\int _{\varOmega } \lambda u v\) and the liner form \(L(v)=\int _{\varOmega }\lambda fv\). Clearly the bilinear form a is coercive. We will prove the boundedness of the bilinear form a in \(\Vert \cdot \Vert _V\) norm.

Hence a is bounded and boundedness of f is obvious. Hence by Lax–Milgram lemma the theorem follows.

4.2 Existence and uniqueness of Eq. (17)

Multiplying (17) by \(v \in V\) and integrating over \(\varOmega \), and using the Green’s formula and the boundary condition (18) we get the weak form as

with \(u(x,y,0)=f(x,y)\).

Theorem 2

Let \(f\in L^2(\varOmega )\), and every \(T>0,\) there exists a unique solution u of the initial boundary value problems (17) and (18) which belongs to \(C([0,T];L^2)\cap L^2([0,T];V)\).

Proof

The existence proof follows a similar argument as in Bertozzi et al. (2007b). First we establish an \(L^2\) estimate involving the fidelity term. Putting \(v=u\) in (26) we get

Now

Using this estimate in (27) we get

which implies

Applying Gronwall’s lemma we will get a priori bound on u in \(L^2\) norm on any interval [0, T). If \(\lambda \) is sufficiently large then we will get a uniform in time bound of \(u(\cdot , t)\) in the \(L^2\) norm.

The existence result follows by invoking Galerkin method and passing to the limit.

Uniqueness Let u and v be two different solutions of the problem (17). We have

On subtracting one from the other and taking \(z:=u-v\) it will be

Taking inner product with z on both sides.

or

Notice that \(\frac{\mathrm{d}}{\mathrm{d}t}\Vert z\Vert ^2 \le 0 \) for \(z(0)=0\) and \(\frac{\mathrm{d}}{\mathrm{d}t}\Vert z\Vert ^2 \le 0\) implies \(\Vert z(t)\Vert =0 \ \forall \ t \ge 0 \). Hence \( z(\mathbf{x},t)=0\) for almost every \( \mathbf{x} \in \varOmega \) and \(\forall \ t \ge 0 \). For every \(t\ge 0,u(\mathbf{x},t)=v(\mathbf{x},t),\ \text {a.e.}\ \mathbf{x}\in \varOmega \) where \(\mathbf{x} = (x,y)\).

4.3 Unconditional stability of the scheme

Theorem 3

Let u be the exact solution of (17) and at time \(k\varDelta t\) the exact solution denoted by \(u_k=u(k\varDelta t)\) for a time step \(\varDelta t > 0\) and \(k\in \mathbb {N}.\) Let \(U_k\) be the solution of (21) at k-th iteration with constant \(C_2>\lambda _0\). Then the following statements holds :

-

(a)

Assuming \(\Vert u_{tt}\Vert \) and \(\Vert \varDelta ^2 u_t\Vert _2\) are bounded, the scheme is consistent with the continuous equation and it is of order 1 in time.

-

(b)

For fixed T, the solution \(U_k\) is bounded on [0, T], for all \(\varDelta t>0\). More over for \(k\varDelta t\le T,\) we have

$$\begin{aligned} \Vert U_k\Vert ^2+\varDelta t K_1\Vert \varDelta U_k\Vert ^2 \le e^{K T}(\Vert U_0\Vert ^2 +\varDelta t K_1\Vert \varDelta U_0\Vert ^2+ \varDelta t TC(\varOmega ,D,\lambda _0,f)), \end{aligned}$$(33)for every \(\varDelta t>0\), and \(K_1\) and K are some constants and constant C depending on \(\varOmega ,D,\lambda _0,f\).

-

(c)

The discretization error \(e_k=u_k-U_k\). For smooth solution \(u_k\) and \(U_k,\) the error \(e_k\) converges to 0 as \(\varDelta t\rightarrow 0\). For fixed \(T>0\) and \(k\varDelta t\le T,\) we have \(\Vert e_k\Vert ^2+\varDelta t M_1\Vert \varDelta e_k\Vert ^2 \le \frac{T}{M_2}e^{M_3T}(\varDelta t)^2\) for suitable positive constants \(M_1,M_2,M_3\).

Proof

(a) Let \(\tau _k\) be the local truncation error. Then \(\tau _k\) can be obtained by subtracting Eq. (17) from (21).

Using the Taylor series theorem and the assumption on \(\Vert u_{tt}\Vert \), \(\Vert \varDelta ^2 u_t\Vert _2\) and \(\Vert u_t\Vert _2\) we will get the global truncation error \(\tau \) as

Putting value of \(u_t\) from Eq. (17) in (34) we get

\(\square \)

Proof

(b) Let us consider the discrete model

Multiply Eq. (36) with \(U_{k+1}\) and integrate over \(\varOmega \) we get

Using Young’s inequality we obtain

Now we use the estimate

Using the estimate (38) in Eq. (37) rearranging the terms we get

Since \(C_2>\lambda _0>1\), the coefficients in (39) are positive. Multiply both side of above inequality with \(2\varDelta t\) and putting \(M_1=1+\varDelta t(C_2-1)\) and \(M_2=1+\varDelta t(C_2+2\lambda _0^2)\) we get

Dividing by \(M_1\) we have

the last inequality we get by multiplying the 2nd term of the bracket by \(\frac{M_2}{M_1}\) which is bigger than 1.

By induction it follows that

Hence for \(k\varDelta t\le T\) we obtain

which gives the required result. \(\square \)

Proof

(c) Subtracting Eq. (21) from (35) and rearranging the terms we get that the discretization error \(e_k=u_k-U_k\) satisfies

Multiply by \(e_{k+1}\) and integrating over \(\varOmega \) we get

Applying Young’s inequality leads to

After simplifying we get

Multiply both side \(2\varDelta t\) and putting \(M_1=1+\varDelta t(C_2-1)\) and \(M_2=1+\varDelta t(C_2+\lambda _0^2)\) we get

Dividing by \(M_1\) and adjusting the coefficient we get

By induction on k we obtain

where the information \(e_0=0\) and \(1\le \frac{M_2}{M_1}=1+K_1\varDelta t\) have been used. Hence, using bound on truncation error and \(k\varDelta t\le T\) we obtain

5 Numerical results

Here, we will present numerical results of our model on some standard test images typically used for inpainting. We will compare our results with the results of \(\text {TV}-L^2\) model (5) and \(\text {TV}-H^{-1}\) inpainting model. To compare the quality of the result we have calculated PSNR, SNR and SSIM of resulting images. Now we will introduce the above-mentioned measures.

-

(a)

Peak signal to noise ratio (PSNR) is the ratio of maximum possible value of the original image and the mean squared error between the original and the resulting image. The formula for PSNR is as follows:

$$\begin{aligned} \text {PSNR}= 10 \log _{10}\frac{\sum _{i=1}^{\mathrm{row}}\sum _{j=1}^{\mathrm{col}} {255}^2}{\sum _{i=1}^{\mathrm{row}}\sum _{j=1}^{\mathrm{col}}[I(i,j)-B(i,j)]^2}, \end{aligned}$$where I is the original image and B is the recovered image. Higher PSNR indicates the better the processed image. \(\text {SNR}=\frac{\sigma _I^2}{\sigma _B^2}\) where \(\sigma ^2\) is variance.

-

(b)

Signal to noise ratio (SNR) is defined as the ratio of the variance of the original image and recovered image. Higher SNR implies the better inpainting.

-

(c)

Structural Similarity Index (SSIM) is defined to measure the similarity between two images (Wang and Bovik 2002). SSIM is defined as follows:

$$\begin{aligned} \text {SSIM}(I,B)=\frac{(2\mu _I\mu _B+c_1)(2\sigma _{IB}+c_2)}{(\mu _I^2+\mu _B^2+c_1)(\sigma _I^2+\sigma _B^2+c_2)} \end{aligned}$$where \(\mu ,\sigma \) represents mean and variance of the corresponding image and \(c_1=(0.01L)^2,c_2=(0.03L)^2\), here dynamic range \(L=255\). Higher the SSIM implies the better recovery of the image.

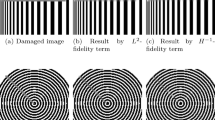

The parameters used for our model are as follows: \( \lambda _0=10^5,\ \varDelta t=1, \ \varDelta x=\varDelta y=0.25\) and the constants \(C_2=\lambda _0\). For the parameters of \(\text {TV}-H^{-1}\) and \(\text {TV}-L^2\), we have followed Sch önlieb (2009) and for these two models we have used the code available on the web (Schönlieb 2012). Our results are calculated in two stages. In the first stage, we put \(\epsilon =2\) and in the second stage we set \(\epsilon \) to 0.1. In the first stage, the process repairs the damaged portion and second stage helps to get better quality of the image obtained in the first stage. We have calculated PSNR in each 50 iterations and stopped our algorithm when difference between the two consecutive calculated PSNR is less than \(10^{-3}\). In Fig. 1, we have shown a damaged image and the recovered image by our model. For this image, the first stage lasts for 1400 iterations and second stage is run with \(\epsilon =0.01\) for 100 iterations.

In Figs. 2 and 3, we have shown inpainting result of an elephant image with two different inpainting domains. In the first row of the corresponding figure, we have shown the original and damaged image of an elephant and in the second row we have shown the inpainting results of \(\text {TV}-L^2\), \(\text {TV}-H^{-1}\) and our model. From the figure, one can see that our results are visually better (see: tree, back of elephant) than the other two. Table 1 contains the measures PSNR, SNR and SSIM for all the three methods. From the table we can see that our model gives the best results among the three in terms of PSNR, SNR and SSIM. Also our model takes less time in comparison of the other two although models.

In the first row of Fig. 4, we have presented the original kaleidoscope image and a damaged image with some missing part, and the second row contains the inpainting results of \(\text {TV}-L^2\), \(\text {TV}-H^{-1}\) and our model, respectively. From the figure, one can see that our result is visually better (see lower part), brighter than the other two results and our result is more closer to the original one. In Table 1, we have noted down the PSNR, SNR and SSIM values of all the above-mentioned models for kaleidoscope image. From the table, we can see that in terms of PSNR, SNR and SSIM our method gives the best result among the three. Also, our model consumes less time than other two.

Figs. 5, 6 and 7 demonstrate few inpainting of Lena image. We have taken three different inpainting domains with different sizes. Also, the results of our model are compared with the results of \(\text {TV}-L^2\) and \(\text {TV}-H^{-1}\) models. To compare the quality of the recovered images, we have calculated PSNR, SNR and SSIM and they are reported in Table 1. From Table 1, one can see that our model gives better result than other two models in terms of PSNR, SNR and SSIM. Also we have reported the CPU time and number of iterations. For our model, iteration indicates counts of 1st phase + counts of 2nd phase. From the Table 1, we can conclude that our model is faster than other two.

In Figs. 8, 9 and 10, we have shown similar kind of results to Barbara image. In Table 1, we have reported PSNR, SNR, SSIM and CPU time for all the three Barbara images. Here also we can see that our model beats the other two in terms of PSNR, SNR and SSIM. Our model is faster than other two.

Comparison of results of our model and modified Cahn–Hilliard (mCH) model (Bertozzi et al. 2007a) with different size of gap to the strip. Left to right: damaged image, result by our model and result by mCH model

In the first row of Fig. 11, we have shown an image with variation of gray colour having sharp edges and a damaged image with hole of different size and in the second row we have shown the inpainting results of \(\text {TV}-L^2\), \(\text {TV}-H^{-1}\) and our model, respectively. From the figure, we can see that the result of \(\text {TV}-L^2\) is not able to recover the image completely but our model and \(\text {TV}-H^{-1}\) recover the image except at the edges. From the Table 1, we can see that our model gives better result in terms of PSNR and SNR but in terms of SSIM, \(\text {TV}-H^{-1}\) slight good result than our model but our model is faster than other two for this case also. Note: the damaged Lena and Barbara image are taken from the paper Li (2011).

Comparison of results of our model and modified Cahn–Hilliard (mCH) model (Bertozzi et al. 2007a) on cross and vertical strip image. Left to right: damaged image, result by our model and result by mCH model

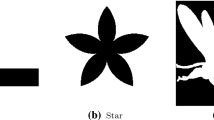

Now we will apply our model to some binary images and will compare our results with the results of modified Cahn–Hilliard (mCH) model (Bertozzi et al. 2007a). For the following results, the parameters for our model are chosen as \(\epsilon =1, \lambda _0=10^2, \mathrm{d}t=1, \varDelta x=\varDelta y=\frac{1}{8}\ \text {and}\ C_2=\lambda _0\) and for Cahn–Hilliard inpainting we have followed Gillette (2006), where the parameters are set as \( \lambda _0=5 \times 10^5, \varDelta t=1, \varDelta x=0.01= \varDelta y\ \text {and}\ C_1=300, C_2=3 \times \lambda _0\) and \(\epsilon =0.5\) in the first stage and .01 in the second stage. We have calculated PSNR in each 50 iterations and stopped our algorithm when difference between the two consecutive calculated PSNR is less than \(10^{-2}\). The final result is obtained by setting the pixels of value \(\le \) 0.5 as 0 and > 0.5 value to 1.

In Fig. 12, we have shown the inpainted result of a strip with different size of gaps and also compared our results with the results of mCH model. For first three images, the results are obtained in 100 iterations and took around 0.50 s, and the last two images it took 150 and 700 iterations, respectively, and their corresponding CPU time are 0.72 and 2.97 s for our model. Similarly, for the first four images the results are obtained in 100 iterations in 1st stage and 50 iterations in second stage, and for the last image the 1st stage runs for 1000 iterations and the second stage runs for 800 iterations. The CPU time for mCH model is around 0.90 s for the first four images and for the last image it took 9.21 s. From the figure, one can see that our model gives the better result than mCH model. Also we have reported PSNR, SNR and SSIM for all these images in Table 2.

In Fig. 13, we have shown the inpainted result of a cross-image and a vertical strip, and also compared our results with the results of mCH model. For our model 100 iterations are enough for both the images and it takes 0.51 s. For mCH model, we run the code for 200 iterations in 1st stage and the second stage runs for 100 iterations for both the images and it took 1.76 s. From the figure one can notice that our model gives the better result than mCH model.

6 Conclusion

Here we have presented a new fourth-order PDE model which do not contain any nonlinear potential terms and the same model is effective for both the binary and gray-scale images. An unconditionally stable scheme based on convexity splitting strategy has been used for solving the proposed PDE. Numerical results show the superiority of our model over \(\text {TV}-L^2\) and \(\text {TV}-H^{-1}\) model.

References

Aubert G, Kornprobst P (2006) Mathematical problems in image processing: partial differential equations and the calculus of variations, vol 147. Springer, Bwelin

Bertalmio M, Sapiro G, Caselles V, Ballester C (2000) Image inpainting. In: Proceedings of the 27th annual conference on Computer graphics and interactive techniques (SIGGRAPH ’00), New Orleans, LU, pp 417–424

Bertalmio M, Bertozzi AL, Sapiro G (2001) Navier–Stokes, fluid dynamics, and image and video inpainting. In: Proceedings of the 2001 IEEE computer society conference on computer. Vision and pattern recognition. CVPR 2001, vol 1, pp 355–362

Bertozzi AL, Esedoglu S, Gillette A (2007a) Inpainting of binary images using the Cahn–Hilliard equation. IEEE Trans Image Process 16(1):285–291

Bertozzi AL, Esedoglu S, Gillette A (2007b) Analysis of a two-scale Cahn–Hilliard model for image inpainting. Multiscale Model Simul 6(3):913–936

Burger M, He L, Schönlieb CB (2009) Cahn–Hilliard inpainting and a generalization for grayvalue images. SIAM J Imaging Sci 2(4):1129–1167

Chan TF, Shen J (2001a) Mathematical models for local non-texture inpaintings. SIAM J Appl Math 62(3):1019–1043

Chan TF, Shen J (2001b) Non-texture inpainting by curvature driven diffusions (CDD). J Vis Commun Image Rep 12(4):436–449

Chan TF, Kang SH, Shen J (2002) Euler’s elastica and curvature-based inpainting. SIAM J Appl Math 63(2):564592

Chan TF, Shen JH, Zhou HM (2006) Total variation wavelet inpainting. J Math Imaging Vis 25(1):107–125

Cherfils L, Fakih H, Miranville A (2017) A complex version of the Cahn–Hilliard equation for grayscale image inpainting. Multiscale Model Simul 15:575–605

Criminisi A, Perez P, Toyama K (2003) Object removal by exemplar-based inpainting. IEEE Int Conf Comput Vis Pattern Recognit 2:721–728

Deo SG, Lakshmikantham V, Raghavendra V (1997) Textbook of ordinary differential equations. Tata McGraw-Hill, New York

Dobrosotskaya JA, Bertozzi AL (2008) A wavelet-laplace variational technique for image deconvolution and inpainting. IEEE Trans Image Process 17(5):657–663

Efros AA, Leung TK (1999) Texture synthesis by non-parametric sampling. In: IEEE international conference on computer vision, Corfu, Greece

Esedoglu S, Shen JH (2002) Digital inpainting based on the Mumford–Shah–Euler image model. Eur J Appl Math 13(4):353–370

Evans LC (2010) Partial differential equations. Graduate studies in mathematics. American Mathematical Society, Providence

Eyre D (1998) An unconditionally stable one-step scheme for gradient systems. Unpublished

Fife PC (2000) Models for phase separation and their mathematics. Electron J Differ Equ 48:1–26

Gillette A (2006) Image Inpainting using a modified Cahn–Hilliard equation. PhD thesis, University of California, Los Angeles

Kašpar R, Zitová B (2003) Weighted thin-plate spline image denoising. Pattern Recognit 36:3027–3030

Li X (2011) Image recovery via hybrid sparse representations: a deterministic annealing approach. IEEE J Sel Top Signal Process 5(5):953–962

Masnou S, Morel J (1998) Level lines based disocclusion. In: 5th IEEE international conference on image processing, Chicago, pp 259–263

Mumford D, Shah J (1989) Optimal approximations by piecewise smooth functions and associated variational problems. Commun Pure Appl Math 42:577–685

Nitzberg N, Mumford D, Shiota T (1993) Filtering, segmentation, and depth. Lecture notes in computer science. Springer, Berlin

Papafitsoros K, Schönlieb CB, Sengul B (2013) Combined first and second order total variation inpainting using split Bregman. Image Process. Line 3:112136

Perona P, Malik J (1990) Scale-space and edge detection using anisotropic diffusion. IEEE Trans Pattern Anal Mach Intell 12(7):629–639

Rudin LI, Osher S, Fatemi E (1992) Nonlinear total variation based noise removal algorithms. Physica D 60:259–268

Sch önlieb CB (2009) Modern PDE techniques for image inpainting. PhD thesis, DAMTP, University of Cambridge

Schönlieb, (2012) Higher-order total variation inpainting. File Exchange, MATLAB Central

Schönlieb CB, Bertozzi A (2011) Unconditionally stable schemes for higher order inpainting. Commun Math Sci 9(2):413–457

Temam R (1997) Infinite dimensional dynamical systems in mechanics and physics, vol 68. Springer, Berlin

Theljani A, Belhachmi Z, Kallel M, Moakher M (2017) Multiscale fourth order model for image inpainting and low-dimensional sets recovery. Math Methods Appl Sci 40:3637–3650

Vijayakrishna R (2015) A unified model of Cahn–Hilliard greyscale inpainting and multiphase classification. PhD thesis, IIT Kanpur, India

Wang Z, Bovik AC (2002) A universal image quality index. IEEE Signal Process Lett 9(3):81–84

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Cristina Turner.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Rathish Kumar, B.V., Halim, A. A linear fourth-order PDE-based gray-scale image inpainting model. Comp. Appl. Math. 38, 6 (2019). https://doi.org/10.1007/s40314-019-0768-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40314-019-0768-x