Abstract

This article is concerned with a computational scheme for the numerical solution of two-dimensional stochastic fractional integral equations (2DSFIEs). In these equations, the fractional integral is considered in the Riemann–Liouville sense. The proposed method is based on two-dimensional hat basis functions and their fractional operational matrices. The fractional-order operational matrices of integration are applied to reduce the solution of 2DSFIEs to the solution of a system of linear equations, which can be solved using a direct or an iterative method. Some results concerning the convergence analysis of the proposed technique are discussed. In addition, we establish that the rate of convergence of this approach for solving 2DSFIEs is \(O(h^2)\). Finally, some examples are solved using the present method to illustrate its pertinent features.

1 Introduction

Fractional calculus was introduced to fill an existing gap in the description of various real-life phenomena. Since its introduction, many problems in physics, chemistry, biology, and engineering have been modeled as fractional differential equations (Dabiri and Butcher 2017), fractional partial differential equations (Moghaddam and Machado 2017), or fractional integral equations. Examples include the Bagley–Torvik equation (Youssri 2017), the Nizhnik–Novikov–Veselov equations (Osman 2017), evolution equations (Abdel-Gawad and Osman 2013), and the fractional diffusion equation (Yang et al. 2016). There are numerous methods for solving these equations, such as the homotopy perturbation method (Pandey et al. 2009), the Adomian decomposition method (Li and Wang 2009), the wavelet method (Lepik 2009), the Lucas polynomial sequence approach (Abd-Elhameed and Youssri 2017a), the orthonormal Chebyshev polynomial method (Abd-Elhameed and Youssri 2017b), and many others that are not mentioned here.

Since 1960, with increasing computational power, random factors have been inserted into deterministic integral equations, giving rise to stochastic integral equations (Mohammadi 2015) and stochastic integro-differential equations (Dareiotis and Leahy 2016; Mei et al. 2016). In many cases, analytical solutions of these equations do not exist or are very difficult to find. Thus, presenting an accurate numerical method is an essential requirement in numerical analysis. The numerical solution of stochastic integral equations has its own difficulties because of the randomness. In recent years, mathematicians have studied numerous methods to obtain the numerical solution of stochastic differential equations (Higham 2001; Tocino and Ardanuy 2002; Dehghan and Shirzadi 2015; Kamrani 2015; Gong and Rui 2015; Mao 2015; Zhou and Hu 2016) or stochastic integral equations (Mirzaee and Samadyar 2017a, b; Mirzaee et al. 2017, 2018; Mirzaee and Samadyar 2018a, b, c). The reader should be familiar with the concepts of independence, expected value, and variance, and with the fundamental definition of a stochastic process, which are necessary to read papers in this field.

As explained above, two-dimensional stochastic fractional integral equations are used to model various problems that occur in different sciences (Denisov et al. 2009). In many cases, these equations cannot be solved analytically. Therefore, an accurate and efficient numerical method is needed. In this paper, the numerical solution of 2DSFIEs via two-dimensional hat basis functions is investigated. In general, 2DSFIEs have the following form:

where \((x,y)\in D=[0,T]\times [0,T]\) and \(r=(r_1,r_2)\in (0,\infty )\times (0,\infty )\). In addition, g(x, y), \(k_1(x,y,s,t)\), and \(k_2(x,y,s,t)\) are known continuous functions and f(x, y) is the unknown function to be approximated. Moreover, \(\Gamma \) denotes the Gamma function and B(t) is a Brownian motion process that satisfies the following properties:

- \(B(t)-B(s)\) for \(t>s\) is independent of the past; that is, for \(0<u<v<s<t<T\), the increments \(B(t)-B(s)\) and \(B(v)-B(u)\) are independent.

- The increment \(B(t)-B(s)\) for \(t>s\) has a normal distribution with mean zero and variance \(t-s\).

- B(t) for \(t\ge 0\) is a continuous function of t.
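The three properties above can be illustrated with a short simulation. The following is a minimal sketch, not part of the proposed method: it samples a discrete Brownian path by summing independent normal increments, and the function names `brownian_path` and `increment_variance`, the grid size, and the seeds are all assumptions chosen for illustration.

```python
import math
import random


def brownian_path(n_steps, t_end=1.0, seed=0):
    """Sample B(0), B(dt), ..., B(t_end) on a uniform grid.

    Each increment is drawn independently from N(0, dt), which mirrors
    the independence and normal-increment properties listed above.
    """
    rng = random.Random(seed)
    dt = t_end / n_steps
    path = [0.0]  # B(0) = 0
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


def increment_variance(n_paths, s_index, t_index, n_steps=100):
    """Empirical variance of B(t) - B(s) over many independent paths."""
    samples = []
    for seed in range(n_paths):
        path = brownian_path(n_steps, seed=seed)
        samples.append(path[t_index] - path[s_index])
    mean = sum(samples) / n_paths
    return sum((x - mean) ** 2 for x in samples) / (n_paths - 1)


# With 100 steps on [0, 1], indices 20 and 70 correspond to s = 0.2 and
# t = 0.7, so the empirical variance should be close to t - s = 0.5.
estimate = increment_variance(n_paths=2000, s_index=20, t_index=70)
```

The estimate fluctuates from run to run if the seeds are changed; only its closeness to \(t-s\) is meaningful.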

In this paper, we use hat basis functions to obtain the numerical solution of 2DSFIEs. The advantages of the proposed numerical method are as follows:

- Using these functions, the equation under consideration is converted to a system of algebraic equations that can be easily solved.

- The proposed scheme is convergent and the rate of convergence is \(O(h^2)\).

- The unknown coefficients of the approximation of a function in this basis are easily calculated without any integration. Therefore, the computational cost of the proposed numerical method is low.

- Because of the simplicity of hat functions, this method is a powerful mathematical tool for solving various kinds of equations with little additional work.

Using a linear mapping, any closed interval [0, T] can be converted to closed interval [0, 1]. Therefore, we let \(T=1\) in Sects. 5 and 6.
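The display of Eq. (1) is not reproduced in this text. For orientation, a form consistent with the ingredients named in the introduction (the known functions \(g\), \(k_1\), \(k_2\), the Gamma function, the orders \(r_1\), \(r_2\), and the Brownian motion \(B\)) and with the factor \(\frac{1}{\Gamma (r_1)\Gamma (r_2)}\) that appears in assumption (iii) of Theorem 5 is sketched below. It should be read as an assumption about the missing display, not as a quotation of it.

```latex
f(x,y) = g(x,y)
  + \frac{1}{\Gamma(r_1)\Gamma(r_2)} \int_0^x\!\!\int_0^y
      (x-s)^{r_1-1}(y-t)^{r_2-1}\, k_1(x,y,s,t)\, f(s,t)\, \mathrm{d}t\, \mathrm{d}s
  + \frac{1}{\Gamma(r_1)\Gamma(r_2)} \int_0^x\!\!\int_0^y
      (x-s)^{r_1-1}(y-t)^{r_2-1}\, k_2(x,y,s,t)\, f(s,t)\, \mathrm{d}B(t)\, \mathrm{d}B(s)
```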

2 Fundamental concepts

2.1 Fractional calculus

There are many definitions of fractional integrals and fractional derivatives, for example those of Riemann–Liouville, Caputo, Weyl, Hadamard, Riesz, Grünwald–Letnikov, and Erdélyi–Kober. Among them, the Riemann–Liouville definition is usually used for fractional integrals, whereas the Caputo definition is frequently applied for fractional derivatives (Podlubny 1999; Kilbas et al. 2006).

Definition 1

The Riemann–Liouville fractional integral operator \(I^{r_1}\) of order \(r_1>0\) on \(L^1[a,b]\) is defined as follows (Asgari and Ezzati 2017):

The most important properties of the operator \(I^{r_1}\) are as follows:

1. \((I^0f)(x)=f(x)\),

2. \((I^{r_1}I^{r_2}f)(x)=(I^{r_1+r_2}f)(x)\),

3. \((I^{r_1}I^{r_2}f)(x)=(I^{r_2}I^{r_1}f)(x)\).
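These properties can be checked numerically on power functions. The sketch below assumes the standard Riemann–Liouville form \((I^{r}f)(x)=\frac{1}{\Gamma (r)}\int _0^x (x-s)^{r-1}f(s)\,\mathrm{d}s\) with lower limit \(a=0\) and uses the well-known identity \(I^{r}x^{p}=\frac{\Gamma (p+1)}{\Gamma (p+1+r)}x^{p+r}\). The function names and the midpoint quadrature are illustrative choices, not taken from the paper.

```python
import math


def rl_integral_of_power(p, r, x):
    """Closed form of the Riemann-Liouville integral of order r of s**p.

    Uses I^r x^p = Gamma(p+1) / Gamma(p+1+r) * x^(p+r),
    valid for p > -1 and r > 0 with lower limit 0.
    """
    return math.gamma(p + 1.0) / math.gamma(p + 1.0 + r) * x ** (p + r)


def rl_integral_numeric(f, r, x, n=20000):
    """Midpoint-rule approximation of (I^r f)(x).

    Restricted to r >= 1 in this sketch so that the kernel
    (x - s)^(r - 1) stays bounded on [0, x].
    """
    h = x / n
    total = 0.0
    for k in range(n):
        s = (k + 0.5) * h
        total += (x - s) ** (r - 1.0) * f(s)
    return total * h / math.gamma(r)


# Closed form versus quadrature for f(s) = s^2, r = 1.5, x = 0.8.
closed = rl_integral_of_power(2.0, 1.5, 0.8)
numeric = rl_integral_numeric(lambda s: s * s, 1.5, 0.8)

# Property 2 (semigroup): I^{r1} I^{r2} x^p equals I^{r1 + r2} x^p.
p, r1, r2, x = 2.0, 0.5, 1.5, 0.8
coeff = math.gamma(p + 1.0) / math.gamma(p + 1.0 + r2)  # I^{r2} x^p = coeff * x^(p + r2)
two_step = coeff * rl_integral_of_power(p + r2, r1, x)
one_step = rl_integral_of_power(p, r1 + r2, x)
```

Property 3 follows from the same computation with the roles of \(r_1\) and \(r_2\) exchanged.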

Definition 2

Let \(r=(r_1,r_2)\in (0,\infty )\times (0,\infty )\) and \(f(x,y)\in L^1(D)\). The left-sided mixed Riemann–Liouville fractional integral of f of order r is defined as follows (Vityuk and Golushkov 2004):
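The display that belongs to Definition 2 is not reproduced in this text. For reference, the standard form of the left-sided mixed Riemann–Liouville integral on \(D\), which is presumably what the definition intends, is given below; the operator symbol \(I^{r}\) is an assumed notation.

```latex
(I^{r} f)(x,y) = \frac{1}{\Gamma(r_1)\Gamma(r_2)}
  \int_0^x\!\!\int_0^y (x-s)^{r_1-1}(y-t)^{r_2-1}\, f(s,t)\, \mathrm{d}t\, \mathrm{d}s
```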

2.2 Hat functions and their properties

In this section, we define one-dimensional (1D) and two-dimensional (2D) hat basis functions and use them to construct a new efficient method for the numerical solution of 2DSFIEs.

2.2.1 1D-hat basis functions

1D-hat basis functions are usually defined on the interval [0, 1]. In the following definition, we present the more general case and extend the interval [0, 1] to the interval [0, T]. The interval [0, T] is divided into n subintervals of equal length h, where \(h=\frac{T}{n}\).

Definition 3

The family of the first \((n+1)\) 1D-hat basis functions on the interval [0, T] is defined as follows (Babolian et al. 2009):

For \(i=1,2,\ldots ,n-1\),

and

An arbitrary function f(x) can be approximated using 1D-hat basis functions as follows:

where

The main reason for using 1D-hat basis functions to approximate a function f(x) is that the entries of the vector F in Eq. (5) can be computed as follows:

In addition, an arbitrary function k(x, y) can be expanded using 1D-hat basis functions as follows:

where \(K=[k_{ij}]\) is the \((n+1)\times (n+1)\) coefficient matrix with entries
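The displays for Definition 3 and for the expansion coefficients are not reproduced in this text. The sketch below assumes the standard piecewise-linear hat functions, each equal to 1 at its own node \(ih\) and 0 at every other node, and nodal coefficients \(f_i=f(ih)\), which agrees with the statement that the coefficients are obtained without integration. The function names are hypothetical.

```python
import math


def hat(i, x, n, T=1.0):
    """Value of the i-th 1D hat function (i = 0..n) at x in [0, T].

    Assumes the standard piecewise-linear tent centred at the node i*h,
    equal to 1 there and 0 at every other node.
    """
    h = T / n
    return max(0.0, 1.0 - abs(x - i * h) / h)


def hat_coefficients(f, n, T=1.0):
    """Nodal samples f(i*h); no integration is needed."""
    h = T / n
    return [f(i * h) for i in range(n + 1)]


def hat_expand(coeffs, x, T=1.0):
    """Evaluate sum_i coeffs[i] * hat_i(x)."""
    n = len(coeffs) - 1
    return sum(c * hat(i, x, n, T) for i, c in enumerate(coeffs))


coeffs = hat_coefficients(math.sin, n=8)
approx_at_node = hat_expand(coeffs, 0.5)   # 0.5 = 4*h is a node, so the value is exact
approx_between = hat_expand(coeffs, 0.3)   # linear interpolation between two nodes
```

Between nodes the expansion is the piecewise-linear interpolant of f, which is where the \(O(h^2)\) behavior discussed later comes from.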

2.2.2 2D-hat basis functions

Definition 4

2D-hat basis functions are defined on the domain \([0,T]\times [0,T]\) as follows:

where \(\phi _i(x)\) and \(\phi _j(y)\) are 1D-hat basis functions.

A bivariate function f(x, y) can be expanded using 2D-hat basis functions as follows:

where

and

and \(\otimes \) denotes the Kronecker product.

The entries of vector F in Eq. (12) can be computed as follows:

In addition, every function with four variables k(x, y, s, t) can be expanded using 2D-hat basis functions as follows:

where K is the coefficient matrix of order \((n+1)^2\times (n+1)^2\).

From the elementary properties of 2D-hat basis functions, we conclude

where F is a column vector of order \((n+1)^2\) and \(\tilde{F}=\mathrm{diag}(F)\).

Moreover, for every matrix A of order \((n+1)^2\times (n+1)^2\), we get

where \(\tilde{A}\) is a column vector of order \((n+1)^2\) whose elements are the diagonal entries of the matrix A.
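The 2D expansion can be sketched in a few lines. The code below assumes that the 2D basis vector is the Kronecker product of the 1D basis vectors in x and y, and that the coefficient vector F holds the nodal values \(f(ih,jh)\) in the matching order. Both are assumptions consistent with the description above, and all function names are hypothetical.

```python
def hat(i, x, n, T=1.0):
    """Standard 1D hat function centred at the node i*h (assumed form)."""
    h = T / n
    return max(0.0, 1.0 - abs(x - i * h) / h)


def phi_vector(x, n, T=1.0):
    """Vector of the n+1 hat functions evaluated at x."""
    return [hat(i, x, n, T) for i in range(n + 1)]


def kron(u, v):
    """Kronecker product of two vectors as a flat list."""
    return [a * b for a in u for b in v]


def coefficients_2d(f, n, T=1.0):
    """Nodal values f(i*h, j*h), ordered to match kron(phi(x), phi(y))."""
    h = T / n
    return [f(i * h, j * h) for i in range(n + 1) for j in range(n + 1)]


def expand_2d(coeffs, x, y, n, T=1.0):
    """Evaluate the 2D hat expansion F^T (phi(x) kron phi(y))."""
    basis = kron(phi_vector(x, n, T), phi_vector(y, n, T))
    return sum(c * b for c, b in zip(coeffs, basis))


n = 3
F = coefficients_2d(lambda x, y: x * y, n)
value = expand_2d(F, 0.4, 0.7, n)   # xy is bilinear, so it is reproduced up to rounding
```

The function \(xy\) is used here because it is bilinear on every grid cell, so the expansion carries no interpolation error for it.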

3 Operational matrix of fractional order

In this section, we derive the fractional-order operational matrix and the fractional-order stochastic operational matrix of integration for hat basis functions.

3.1 Fractional-order operational matrix of integration

Fractional operational matrices are used when dealing with fractional differential equations and fractional integral equations.

If the following relation is satisfied, then the matrix \(P^{r_1}\) is called the fractional-order operational matrix:

Theorem 1

The fractional-order operational matrix of integration \(P^{r_1}\) for hat basis functions is a matrix of order \((n+1)\times (n+1)\), which can be computed as follows (Tripathi et al. 2013):

where

Using Eqs. (13) and (18), we get

where \(\mathbf P ^r=P^{r_1}\otimes P^{r_2}\).
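The display of Theorem 1 is not reproduced in this text. The sketch below builds a matrix with entries \(P_{ij}=(I^{r}\phi _i)(jh)\), obtained by integrating each standard hat function in closed form and evaluating at the nodes. It is offered as an assumption about what the omitted formula contains, and the names `fractional_operational_matrix` and `apply_integral` are hypothetical. The check at the end uses \(f(x)=x\), whose fractional integral \(x^{r+1}/\Gamma (r+2)\) is known.

```python
import math


def fractional_operational_matrix(r, n, T=1.0):
    """(n+1) x (n+1) matrix P with entries P[i][j] = (I^r phi_i)(j*h).

    Closed-form entries, all scaled by h^r / Gamma(r + 2):
      row 0:      j^r (r - j + 1) + (j - 1)^(r + 1)            for j >= 1
      rows 1..n:  1 on the diagonal, and at offset k = j - i >= 1
                  (k + 1)^(r + 1) - 2 k^(r + 1) + (k - 1)^(r + 1)
    The first column and everything below the diagonal are zero.
    """
    h = T / n
    scale = h ** r / math.gamma(r + 2.0)
    P = [[0.0] * (n + 1) for _ in range(n + 1)]
    for j in range(1, n + 1):
        P[0][j] = scale * (j ** r * (r - j + 1.0) + (j - 1.0) ** (r + 1.0))
    for i in range(1, n + 1):
        P[i][i] = scale
        for j in range(i + 1, n + 1):
            k = j - i
            P[i][j] = scale * ((k + 1.0) ** (r + 1.0)
                               - 2.0 * k ** (r + 1.0)
                               + (k - 1.0) ** (r + 1.0))
    return P


def apply_integral(coeffs, P):
    """Hat coefficients of I^r f, i.e. the row vector F^T P."""
    size = len(coeffs)
    return [sum(coeffs[i] * P[i][j] for i in range(size)) for j in range(size)]


n, r = 4, 0.5
h = 1.0 / n
P = fractional_operational_matrix(r, n)
F = [i * h for i in range(n + 1)]   # f(x) = x is represented exactly by hat functions
result = apply_integral(F, P)
exact = [(j * h) ** (r + 1.0) / math.gamma(r + 2.0) for j in range(n + 1)]
```

For the 2D case, the matrix \(\mathbf P ^r\) would then be the Kronecker product of two such matrices built with \(r_1\) and \(r_2\).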

3.2 Fractional-order stochastic operational matrix of integration

Theorem 2

The matrix \(P_s^{r_1}\) is called the fractional-order stochastic operational matrix if the following relation is satisfied:

where \(P_s^{r_1}\) is given by

where

Proof

Using the integration by parts formula, we have

Remark

An additional explanation of how Eq. (23) is obtained is as follows:

Therefore

Since \(B(0)=0\), the first term in Eq. (24) is zero. Multiplying Eq. (24) by \(\frac{1}{\Gamma (r_1)}\), we get Eq. (23).
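The displays of Eqs. (23) and (24) are not reproduced in this text. As a hedged illustration of the step described in the remark, the integration by parts rule for a deterministic, piecewise-differentiable integrand \(u(s)\) against Brownian motion is written out below and then specialized to \(u(s)=(x-s)^{r_1-1}\phi _i(s)\). The symbol \(u\) and the restriction \(r_1>1\), under which \(u(x)=0\), are assumptions made here for the sketch.

```latex
\int_0^x u(s)\,\mathrm{d}B(s)
  = u(x)B(x) - u(0)B(0) - \int_0^x u'(s)\,B(s)\,\mathrm{d}s ,
\qquad
u(s) = (x-s)^{r_1-1}\phi_i(s),

u'(s) = -(r_1-1)(x-s)^{r_1-2}\phi_i(s) + (x-s)^{r_1-1}\phi_i'(s),

\int_0^x (x-s)^{r_1-1}\phi_i(s)\,\mathrm{d}B(s)
  = (r_1-1)\int_0^x (x-s)^{r_1-2}\phi_i(s)\,B(s)\,\mathrm{d}s
    - \int_0^x (x-s)^{r_1-1}\phi_i'(s)\,B(s)\,\mathrm{d}s
\quad (r_1 > 1,\ B(0)=0).
```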

In addition, we can expand \(\frac{1}{\Gamma (r_1)}\int _0^x (x-s)^{r_1-1}\phi _i(s)\mathrm{d}B(s)\), using hat basis functions as follows:

where

Using the definition of the 1D-hat basis functions and Eq. (26), we get

For \(i=1,2,\ldots ,n-1,\) we get

Finally, for \(i=n\), we have

This completes the proof. \(\square \)

Using Eqs. (13) and (21), we have

where \(\mathbf P _s^r=P_s^{r_1}\otimes P_s^{r_2}\).

4 The proposed algorithm to solve 2DSFIEs

This section is devoted to finding the numerical solution of 2DSFIEs. We present a numerical method that, using the matrices provided in the previous section, transforms the original Eq. (1) into a linear system of algebraic equations. The numerical solution of Eq. (1) is obtained by solving this linear system.

We approximate the functions f(x, y), g(x, y), and \(k_i(x,y,s,t), i=1,2,\) in terms of 2D-hat basis functions as follows:

where G and \(K_i, i=1,2,\) are a known \((n+1)^2\times 1\) column vector and known \((n+1)^2\times (n+1)^2\) matrices, respectively, whereas F is the unknown \((n+1)^2\times 1\) column vector to be determined.

Substituting Eq. (28) into Eq. (1) gives

Now, using Eq. (16), we obtain

Now, using the 2D fractional-order operational matrix together with the 2D fractional-order stochastic operational matrix of integration given in Eqs. (20) and (27), we get

Let \(R_1=K_1\tilde{F}{} \mathbf P ^r\) and \(R_2=K_2\tilde{F}{} \mathbf P _s^r\) and apply Eq. (17). Thus, we have

or

Equation (31) is a system of \((n+1)^2\) linear equations in \((n+1)^2\) unknowns. We can solve this system using an appropriate iterative method such as the Jacobi method or the Gauss–Seidel method. After solving this system, the numerical solution of Eq. (1) is obtained by substituting the computed vector F into the expansion of f(x, y) in Eq. (28).
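As an illustration of the iterative option mentioned above, the following is a minimal Gauss–Seidel sketch. The function name `gauss_seidel` and the small \(3\times 3\) test matrix are hypothetical; the matrix is chosen to be strictly diagonally dominant so that the iteration converges, and it is not the system of Eq. (31).

```python
def gauss_seidel(A, b, tol=1e-12, max_iter=10000):
    """Solve A x = b by Gauss-Seidel sweeps.

    Convergence is guaranteed for strictly diagonally dominant A
    (among other cases); no such check is performed here.
    """
    n = len(b)
    x = [0.0] * n
    for _ in range(max_iter):
        largest_change = 0.0
        for i in range(n):
            # Use the most recent values of the other unknowns.
            sigma = sum(A[i][j] * x[j] for j in range(n) if j != i)
            new_value = (b[i] - sigma) / A[i][i]
            largest_change = max(largest_change, abs(new_value - x[i]))
            x[i] = new_value
        if largest_change < tol:
            break
    return x


# Hypothetical test system whose exact solution is (1, 2, 3).
A = [[4.0, 1.0, 0.0],
     [1.0, 5.0, 2.0],
     [0.0, 2.0, 6.0]]
b = [6.0, 17.0, 22.0]
solution = gauss_seidel(A, b)
```

Whether the matrix of Eq. (31) satisfies a convergence condition for this iteration depends on the kernels and on the sampled Brownian path, so a direct solver may be the safer default for small n.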

5 Error analysis

This section is devoted to deriving the rate of convergence of the suggested method for solving 2DSFIEs. We prove that the rate of convergence is \(O(h^2)\). We define

where \(D=[0,1]\times [0,1]\).

Theorem 3

Let \(g(x,y)\in C^2(D)\) and let \(g_n(x,y)\) be the expansion of g(x, y) using 2D-hat basis functions. Mirzaee and Hadadiyan established that

Proof

See Mirzaee and Hadadiyan (2016). \(\square \)
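The second-order behavior stated in Theorem 3 can be observed numerically. The sketch below assumes the standard hat functions with nodal coefficients and measures the largest sampled error for the hypothetical test function \(g(x,y)=x^2+y^2\) at two grid sizes; the function names and the sampling grid are illustrative choices.

```python
def hat(i, x, n):
    """Standard 1D hat function on [0, 1] centred at the node i/n (assumed form)."""
    h = 1.0 / n
    return max(0.0, 1.0 - abs(x - i * h) / h)


def interpolate_2d(g, n, x, y):
    """2D hat expansion of g on [0, 1]^2 using nodal values g(i*h, j*h)."""
    h = 1.0 / n
    total = 0.0
    for i in range(n + 1):
        wx = hat(i, x, n)
        if wx == 0.0:
            continue  # this basis function does not reach x
        for j in range(n + 1):
            total += g(i * h, j * h) * wx * hat(j, y, n)
    return total


def max_error(g, n, samples=64):
    """Largest |g - g_n| over a uniform (samples + 1)^2 grid of test points."""
    worst = 0.0
    for a in range(samples + 1):
        for b in range(samples + 1):
            x, y = a / samples, b / samples
            worst = max(worst, abs(g(x, y) - interpolate_2d(g, n, x, y)))
    return worst


def g(x, y):
    return x * x + y * y


e4 = max_error(g, 4)
e8 = max_error(g, 8)
ratio = e4 / e8   # halving h should divide the error by about 4
```

For this particular g the largest error sits at the cell centers and equals \(h^2/2\), so the ratio between the two grids is 4 up to rounding.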

Theorem 4

Assume that \(k(x,y,s,t)\in C^2(D\times D)\) and let \(k_n(x,y,s,t)\) be the approximation of k(x, y, s, t) using 2D-hat basis functions. Mirzaee and Hadadiyan proved that

Proof

See Mirzaee and Hadadiyan (2016). \(\square \)

Theorem 5

Suppose that f(x, y) is the exact solution of Eq. (1) and \(f_n(x,y)\) is the approximate solution of Eq. (1) obtained using the proposed algorithm. Moreover, suppose that the following assumptions are satisfied:

(i) \(\Vert f(x,y)\Vert \le \mathcal {N}, \quad (x,y)\in D\),

(ii) \(\Vert k_i(x,y,s,t)\Vert \le \mathcal {L}_i, \quad i=1,2,\quad (x,y,s,t)\in D\times D,\)

(iii) \(1-\frac{1}{\Gamma (r_1)\Gamma (r_2)} \bigl (\mathcal {L}_1+C_1h^2+\mathcal {M}^2\mathcal {L}_2+\mathcal {M}^2C_2h^2\bigr )>0,\)

where \(\mathcal {M}=\sup \{B(x);\ 0\le x\le 1\}\) and \(\mathcal {N}\), \(\mathcal {L}_1\), \(\mathcal {L}_2\), \(C_1\), and \(C_2\) are generic constants. Then, we have

Proof

Let \(g_n(x,y)\) and \(k_{in}(x,y,s,t), i=1,2,\) be the approximations of g(x, y) and \(k_i(x,y,s,t)\), respectively. Therefore, we have

From Eqs. (1) and (33), we can write

Thus

Since \(|x-s|<1\) and \(|y-t|<1\), we have

Recall that \(\mathcal {M}=\sup \{B(x);\ 0\le x\le 1\}.\) Since \(x<1\) and \(y<1\), using this definition, we get

From assumptions (i) and (ii) and Theorem 4, we conclude that

From Eqs. (36) and (37) and using Theorem 3 and assumption (iii), we get

From Eq. (38), we conclude \(\Vert f(x,y)-f_n(x,y)\Vert \simeq O(h^2).\) \(\square \)

6 Numerical examples

In this section, some numerical examples are solved using the proposed method to demonstrate its accuracy and efficiency. The values of the exact solution, approximate solution, and absolute error at some selected points are reported in tables. To clarify the accuracy and efficiency of the present method, the values of absolute error are computed as follows:

where f(x, y) and \(f_n(x,y)\) are the exact solution and approximate solution of 2DSFIEs, respectively. All computations reported in the tables were obtained by running computer programs written in MATLAB. In addition, the “pinv” command is used to solve the generated linear system of algebraic equations.

Example 1

Let us consider the following 2DSFIE:

with the exact solution \(f(x,y)=1\).

We solve this example for the two cases \(r_1=\frac{7}{2}, r_2=\frac{5}{2}\) and \(r_1=\frac{9}{2}, r_2=\frac{7}{2}\). For the case \(r_1=\frac{7}{2}, r_2=\frac{5}{2}\), we have

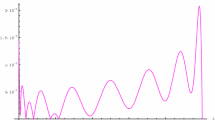

In this case, the values of the approximate solution and absolute error obtained by the present method for \(n=2,3\) are reported in Table 1. In addition, the absolute errors for \(n=2\) and \(n=3\) are plotted in Figs. 1 and 2, respectively.

For the case \(r_1=\frac{9}{2}, r_2=\frac{7}{2}\), we have

In this case, the values of the approximate solution and absolute error obtained by the present method for \(n=2,3\) are reported in Table 2. In addition, the absolute errors for \(n=2\) and \(n=3\) are plotted in Figs. 3 and 4, respectively.

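Fig. 1 Absolute error of Example 1 for \(n=2\) (\(r_1=\frac{7}{2}, r_2=\frac{5}{2}\))

Fig. 2 Absolute error of Example 1 for \(n=3\) (\(r_1=\frac{7}{2}, r_2=\frac{5}{2}\))

Fig. 3 Absolute error of Example 1 for \(n=2\) (\(r_1=\frac{9}{2}, r_2=\frac{7}{2}\))

Fig. 4 Absolute error of Example 1 for \(n=3\) (\(r_1=\frac{9}{2}, r_2=\frac{7}{2}\))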

Example 2

Let us consider the following 2DSFIE:

where

with the exact solution \(f(x,y)=xy\).

The values of the approximate solution and absolute error obtained by the present method for \(n=2,3\) are reported in Table 3. Also, the absolute errors for \(n = 2\) and \(n = 3\) are plotted in Figs. 5 and 6, respectively. Moreover, the computational times of these examples are compared in Table 4.

Fig. 5 Absolute error of Example 2 for \(n=2\)

Fig. 6 Absolute error of Example 2 for \(n=3\)

7 Conclusion

In this article, 2D-hat basis functions have been applied to provide an efficient numerical approach for solving 2DSFIEs. To this end, we first calculate the operational matrix and the stochastic operational matrix of fractional order; then, using these matrices, the solution of the problem under consideration is converted to the solution of a linear system of algebraic equations. Some results concerning the convergence and error analysis of the present method are discussed, and we establish that the rate of convergence of this approach for solving 2DSFIEs is \(O(h^2)\). Finally, some numerical examples are solved using the proposed method to confirm the applicability of this technique. The numerical results reported in the tables verify that the suggested algorithm is very accurate (Table 4).

References

Abdel-Gawad HI, Osman MS (2013) On the variational approach for analyzing the stability of solutions of evolution equations. Kyungpook Math J 53(4):661–680

Abd-Elhameed WM, Youssri YH (2017a) Generalized Lucas polynomial sequence approach for fractional differential equations. Nonlinear Dyn 89(2):1341–1355

Abd-Elhameed WM, Youssri YH (2017b) Fifth-kind orthonormal Chebyshev polynomial solutions for fractional differential equations. Comput Appl Math 1–25

Asgari M, Ezzati R (2017) Using operational matrix of two-dimensional Bernstein polynomials for solving two-dimensional integral equations of fractional order. Appl Math Comput 307:290–298

Babolian E, Mansouri Z, Hatamzadeh-Varmazyar S (2009) Numerical solution of nonlinear Volterra-Fredholm integro-differential equations via direct method using triangular functions. Comput Math Appl 58(2):239–247

Dabiri A, Butcher EA (2017) Efficient modified Chebyshev differentiation matrices for fractional differential equations. Commun Nonlinear Sci Numer Simul 50:284–310

Dareiotis K, Leahy JM (2016) Finite difference schemes for linear stochastic integro-differential equations. Stoch Proc Appl 126(10):3202–3234

Dehghan M, Shirzadi M (2015) Numerical solution of stochastic elliptic partial differential equations using the meshless method of radial basis functions. Eng Anal Bound Elem 50:291–303

Denisov SI, Hanggi P, Kantz H (2009) Parameters of the fractional Fokker–Planck equation. Europhys Lett 85(4):40007

Gong B, Rui H (2015) One order numerical scheme for forward-backward stochastic differential equations. Appl Math Comput 271:220–231

Higham DJ (2001) An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev 43(3):525–546

Kamrani M (2015) Numerical solution of stochastic fractional differential equations. Numer Algorithms 68(1):81–93

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier Science B.V, Amsterdam

Lepik Ü (2009) Solving fractional integral equations by the Haar wavelet method. Appl Math Comput 214(2):468–478

Li C, Wang Y (2009) Numerical algorithm based on Adomian decomposition for fractional differential equations. Comput Math Appl 57(10):1672–1681

Mao X (2015) The truncated Euler–Maruyama method for stochastic differential equations. J Comput Appl Math 290:370–384

Mei H, Yin G, Wu F (2016) Properties of stochastic integro-differential equations with infinite delay: regularity, ergodicity, weak sense Fokker–Planck equations. Stoch Proc Appl 126(10):3102–3123

Mirzaee F, Hadadiyan E (2016) Application of two-dimensional hat functions for solving space-time integral equations. J Appl Math Comput 51:453–486

Mirzaee F, Samadyar N (2017a) Application of operational matrices for solving system of linear Stratonovich Volterra integral equation. J Comput Appl Math 320:164–175

Mirzaee F, Samadyar N (2017b) Application of orthonormal Bernstein polynomials to construct a efficient scheme for solving fractional stochastic integro-differential equation. Optik Int J Light Electron Opt 132:262–273

Mirzaee F, Samadyar N (2018a) Numerical solution of nonlinear stochastic Itô-Volterra integral equations driven by fractional Brownian motion. Math Methods Appl Sci 41(4):1410–1423

Mirzaee F, Samadyar N (2018b) Using radial basis functions to solve two dimensional linear stochastic integral equations on non-rectangular domains. Eng Anal Bound Elem (in press)

Mirzaee F, Samadyar N (2018c) Convergence of Legendre wavelet collocation method for solving nonlinear Stratonovich Volterra integral equations. Comput Methods Differ Equ 6(1):80–97

Mirzaee F, Samadyar N, Hosseini SF (2017) A new scheme for solving nonlinear Stratonovich Volterra integral equations via Bernoullis approximation. Appl Anal 96(13):2163–2179

Mirzaee F, Samadyar N, Hosseini SF (2018) Euler polynomial solutions of nonlinear stochastic Itô-Volterra integral equations. J Comput Appl Math 330:574–585

Moghaddam BP, Machado JAT (2017) A stable three-level explicit spline finite difference scheme for a class of nonlinear time variable order fractional partial differential equations. Comput Math Appl 73(6):1262–1269

Mohammadi F (2015) A wavelet-based computational method for solving stochastic Itô-Volterra integral equations. J Comput Phys 298:254–265

Osman MS (2017) Multiwave solutions of time-fractional (2+1)-dimensional Nizhnik–Novikov–Veselov equations. Pramana J Phys 88(4):67

Pandey RK, Singh OP, Singh VK (2009) Efficient algorithms to solve singular integral equations of Abel type. Comput Math Appl 57(4):664–676

Podlubny I (1999) Fractional differential equations: an introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications. Academic Press, Cambridge

Tocino A, Ardanuy R (2002) Runge–Kutta methods for numerical solution of stochastic differential equations. J Comput Appl Math 138(2):219–241

Tripathi MP, Baranwal VK, Pandey RK, Singh OP (2013) A new numerical algorithm to solve fractional differential equations based on operational matrix of generalized hat functions. Commun Nonlinear Sci Numer Simul 18(6):1327–1340

Vityuk AN, Golushkov AV (2004) Existence of solutions of systems of partial differential equations of fractional order. Nonlinear Oscil 7(3):318–325

Yang XJ, Machado JAT, Srivastava HM (2016) A new numerical technique for solving the local fractional diffusion equation: two-dimensional extended differential transform approach. Appl Math Comput 274:143–151

Youssri YH (2017) A new operational matrix of Caputo fractional derivatives of Fermat polynomials: an application for solving the Bagley–Torvik equation. Adv Differ Equ 2017(1):73

Zhou S, Hu Y (2016) Numerical approximation for nonlinear stochastic pantograph equations with Markovian switching. Appl Math Comput 286:126–138

Acknowledgements

The authors would like to express their appreciation to the editor and referees for their valuable comments and constructive suggestions, which have improved the quality of the current paper.

Additional information

Communicated by José Tenreiro Machado.

Cite this article

Mirzaee, F., Samadyar, N. Application of hat basis functions for solving two-dimensional stochastic fractional integral equations. Comp. Appl. Math. 37, 4899–4916 (2018). https://doi.org/10.1007/s40314-018-0608-4

Keywords

- Stochastic fractional integral equations

- Fractional calculus

- Operational matrix

- Hat basis functions

- Brownian motion process

- Error analysis