Abstract

In this paper, we present some new necessary and sufficient optimality conditions in terms of Clarke subdifferentials for approximate Pareto solutions of a nonsmooth vector optimization problem which has an infinite number of constraints. As a consequence, we obtain optimality conditions for the particular cases of cone-constrained convex vector optimization problems and semidefinite vector optimization problems. Examples are given to illustrate the obtained results.



1 Introduction

This paper mainly deals with constrained vector optimization problems formulated as follows:

\[
\text{(VP)}\qquad \operatorname{Min}_{\mathbb{R}^{m}_{+}}\ \left\{ f(x) := (f_{1}(x), \ldots, f_{m}(x)) \mid x \in C \right\}, \qquad C := \{x \in {\Omega} \mid g_{t}(x) \leqq 0,\ t \in T\},
\]

where fi, i ∈ I := {1,…,m}, and gt, t ∈ T, are locally Lipschitz functions from a Banach space X to \(\mathbb {R}\), Ω is a nonempty and closed subset of X, and T is an arbitrary (possibly infinite) index set. Problems of this type are semi-infinite vector optimization problems when the space X is finite-dimensional, and infinite vector optimization problems when X is infinite-dimensional (see [2, 16]). Problems of the form (VP) arise naturally in a wide range of applications in mathematics, economics, and engineering; we refer the reader to the books [16, 35], to the papers [4, 5, 7,8,9,10, 13, 14, 18, 23], and to the references therein.

Our main concern is to study optimality conditions for approximate Pareto solutions of problem (VP). Approximate solutions are important from the computational point of view, since numerical algorithms stopped after a finite number of steps usually generate only approximate solutions. Furthermore, the solution set may be empty in the general noncompact case (see [20, 22, 26, 27, 33, 38]), whereas approximate solutions exist under very weak assumptions (see Propositions 2.1 and 2.2 in Section 2 below).

In the literature, there are many publications devoted to optimality conditions for approximate solutions of semi-infinite/infinite scalar optimization problems (see, for example, [13, 23, 24, 29,30,31, 34, 36]). In contrast to the scalar case, however, only a few works deal with optimality conditions for approximate Pareto solutions of semi-infinite/infinite vector optimization problems (see [25, 28, 37]). In [28, 37], the authors obtained necessary and sufficient optimality conditions for approximate Pareto solutions of convex semi-infinite/infinite vector optimization problems under various kinds of Farkas–Minkowski constraint qualifications. By using the Chankong–Haimes scalarization method, Kim and Son [25] established necessary optimality conditions for approximate quasi Pareto solutions of a locally Lipschitz semi-infinite vector optimization problem.

In this paper, we present necessary conditions of Karush–Kuhn–Tucker type for approximate (quasi) Pareto solutions of problem (VP) under a Slater-type constraint qualification. Sufficient optimality conditions for approximate (quasi) Pareto solutions of (VP) are also provided by introducing the concepts of (strictly) generalized convex functions, defined in terms of the Clarke subdifferential of locally Lipschitz functions. The obtained results improve the corresponding ones in [25, 28, 37]. As an application, we establish optimality conditions for cone-constrained convex vector optimization problems and semidefinite vector optimization problems. Some examples are also given to illustrate the obtained results.

In Section 2, we recall some basic definitions and preliminaries from vector optimization and variational analysis. Section 3 presents the main results. Section 4 applies the results of Section 3 to cone-constrained convex vector optimization problems and semidefinite vector optimization problems.

2 Preliminaries

2.1 Approximate Pareto Solutions

Let \(\mathbb {R}^{m}_{+} := \{y := (y_{1}, \ldots , y_{m}) | y_{i}\geqq 0,~ i\in I\}\) be the nonnegative orthant in \(\mathbb {R}^{m}\). For \(a, b\in \mathbb {R}^{m}\), by \(a\leqq b\), we mean \(b-a\in \mathbb {R}^{m}_{+}\); by a ≤ b, we mean \(b-a\in \mathbb {R}^{m}_{+}\setminus \{0\}\); and by a < b, we mean \(b-a\in \operatorname {int}\mathbb {R}^{m}_{+}\).

Definition 2.1

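(See [32]) Let \(\xi \in \mathbb {R}^{m}_{+}\) and \(\bar x\in C\). We say that

(i) \(\bar {x}\) is a weakly Pareto solution (resp. a Pareto solution) of (VP) if there is no x ∈ C such that

\[
f(x) < f(\bar x) \quad (\text{resp. } f(x) \le f(\bar x));
\]

(ii) \(\bar x\) is a ξ-weakly Pareto solution (resp. a ξ-Pareto solution) of (VP) if there is no x ∈ C such that

\[
f(x) < f(\bar x) - \xi \quad (\text{resp. } f(x) \le f(\bar x) - \xi);
\]

(iii) \(\bar x\) is a ξ-quasi-weakly Pareto solution (resp. a ξ-quasi Pareto solution) of (VP) if there is no x ∈ C such that

\[
f(x) + \|x - \bar x\|\,\xi < f(\bar x) \quad (\text{resp. } f(x) + \|x - \bar x\|\,\xi \le f(\bar x)).
\]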
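The approximate notions of Definition 2.1 can be checked mechanically once f is evaluated on finitely many feasible points. The Python sketch below is a minimal illustration under stated assumptions: X = \(\mathbb{R}\) with the absolute value as norm; the finite list `sample` stands in for C, so a True flag is only a necessary test (a point outside the sample could still violate the condition); and the four conditions are taken to be \(f(x) < f(\bar x) - \xi\), \(f(x) \le f(\bar x) - \xi\), \(f(x) + \|x - \bar x\|\xi < f(\bar x)\), and \(f(x) + \|x - \bar x\|\xi \le f(\bar x)\), with the orders of Section 2.1. The names `classify`, `lt`, `le` and the test objective are hypothetical.

```python
from typing import Callable, Dict, Iterable, Sequence

Vec = Sequence[float]


def lt(a: Vec, b: Vec) -> bool:
    """a < b: b - a lies in the interior of R^m_+."""
    return all(ai < bi for ai, bi in zip(a, b))


def le(a: Vec, b: Vec) -> bool:
    """a <= b in the sense of Section 2.1: b - a lies in R^m_+ minus {0}."""
    return all(ai <= bi for ai, bi in zip(a, b)) and any(ai < bi for ai, bi in zip(a, b))


def classify(xbar: float, sample: Iterable[float],
             f: Callable[[float], Vec], xi: Vec) -> Dict[str, bool]:
    """Test the approximate notions at xbar against a finite sample of C.

    A flag stays True only if no sampled x violates the corresponding condition.
    """
    fbar = f(xbar)
    shifted = [v - e for v, e in zip(fbar, xi)]  # f(xbar) - xi
    flags = {"xi_weak": True, "xi": True, "quasi_weak": True, "quasi": True}
    for x in sample:
        fx = f(x)
        # f(x) + ||x - xbar|| * xi, using |.| as the norm on R
        fq = [v + abs(x - xbar) * e for v, e in zip(fx, xi)]
        flags["xi_weak"] &= not lt(fx, shifted)
        flags["xi"] &= not le(fx, shifted)
        flags["quasi_weak"] &= not lt(fq, fbar)
        flags["quasi"] &= not le(fq, fbar)
    return flags


def f(x: float) -> Vec:
    """Hypothetical bi-objective test function."""
    return (x ** 2, (x - 1) ** 2)


sample = [0.0, 0.5, 1.0, 1.5, 2.0]
print(classify(2.0, sample, f, (0.5, 0.5)))    # every flag False: x = 1 dominates
print(classify(2.0, sample, f, (10.0, 10.0)))  # every flag True: the tolerance is large
```

The second call shows how a large tolerance ξ makes even a dominated point approximately optimal.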

Remark 2.1

If ξ = 0, then the concepts of a ξ-Pareto solution and a ξ-quasi-Pareto solution (resp. a ξ-weakly Pareto solution and a ξ-quasi-weakly Pareto solution) coincide with the concept of a Pareto solution (resp. a weakly Pareto solution). Hence, when dealing with approximate Pareto solutions, we consider only the case \(\xi \in \mathbb {R}^{m}_{+}\setminus \{0\}\).

Definition 2.2

Let A be a subset of \(\mathbb {R}^{m}\) and \({\bar {y}} \in \mathbb {R}^{m}\). The set \(A \cap ({\bar {y}} - \mathbb {R}^{m}_{+})\) is called a section of A at \({\bar {y}}\) and denoted by \([A]_{\bar {y}}.\) The section \([A]_{\bar {y}}\) is said to be bounded if there is \(a\in \mathbb {R}^{m}\) such that

\[
[A]_{\bar y} \subset a + \mathbb{R}^{m}_{+}.
\]

Remark 2.2

Let \(\bar y\) be an arbitrary element in f(C). It is easily seen that every ξ-Pareto solution (resp. ξ-quasi Pareto solution) of (VP) on \(C\cap f^{-1}\left ([f(C)]_{\bar y}\right )\) is also a ξ-Pareto one (resp. ξ-quasi Pareto one) of (VP) on C.

The following results give some sufficient conditions for the existence of approximate Pareto solutions of (VP).

Proposition 2.1

(Existence of approximate Pareto solutions) Assume that f(C) has a nonempty bounded section. Then for each \(\xi \in \mathbb {R}^{m}_{+}\setminus \{0\},\) the problem (VP) admits at least one ξ-Pareto solution.

Proof

The assertion follows directly from Remark 2.2 and [17, Lemma 3.1]; the details are omitted. □

Proposition 2.2

(Existence of approximate quasi Pareto solutions) If f(C) has a nonempty bounded section, then for every \(\xi \in \operatorname {int} \mathbb {R}^{m}_{+},\) the problem (VP) admits at least one ξ-quasi Pareto solution.

Proof

Let x0 ∈ C be such that the section of f(C) at f(x0) is bounded. By Proposition 2.1, there exists \(\bar x \in C\) such that \(f(\bar x)\leqq f(x^{0})\) and

Consequently,

By the continuity of f and the closedness of C, for each x ∈ C, the set

is closed. By [1, Theorem 3.1], there exists x∗∈ C such that \(f(x^{*})<f(\bar x)\) and

Consequently,

Thus, x∗ is a ξ-quasi Pareto solution of (VP). The proof is complete. □

2.2 Normals and Subdifferentials

For a Banach space X, the bracket 〈⋅,⋅〉 stands for the canonical pairing between X and its dual X∗. The closed unit ball of X is denoted by BX. The closed ball with center x and radius δ is denoted by B(x,δ). Let A be a nonempty subset of X. The topological interior, the topological closure, and the convex hull of A are denoted, respectively, by int A, cl A, and co A. The symbol A∘ stands for the polar cone of a given set A ⊂ X, i.e.,

\[
A^{\circ} := \{x^{*} \in X^{*} \mid \langle x^{*}, x\rangle \leqq 0 \ \text{for all } x \in A\}.
\]

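Let \(\varphi \colon X \to \mathbb {R}\) be a locally Lipschitz function. The Clarke generalized directional derivative of φ at \(\bar x\in X\) in the direction d ∈ X, denoted by \(\varphi ^{\circ }(\bar x; d)\), is defined by

\[
\varphi^{\circ}(\bar x; d) := \limsup_{x \to \bar x,\ t \downarrow 0} \frac{\varphi(x + t d) - \varphi(x)}{t}.
\]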
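On X = \(\mathbb{R}\), the limsup above can be approximated by the largest difference quotient over base points near \(\bar x\) and a few small step sizes. The sketch below is only an estimate (the name `clarke_dd` and the sampling parameters are our own choices), but it illustrates that \(\varphi^{\circ}\) can differ from the one-sided directional derivative: for φ(x) = −|x| at 0, the one-sided derivative in direction 1 is −1, whereas the Clarke derivative is 1.

```python
from typing import Callable, Sequence


def clarke_dd(phi: Callable[[float], float], xbar: float, d: float,
              radius: float = 1e-3, steps: int = 201,
              ts: Sequence[float] = (1e-4, 1e-5, 1e-6)) -> float:
    """Sampled estimate of the Clarke derivative phi°(xbar; d) for phi: R -> R.

    The limsup over x -> xbar, t -> 0+ is replaced by a maximum of difference
    quotients over base points x in [xbar - radius, xbar + radius] and a few t.
    """
    best = float("-inf")
    for k in range(steps):
        x = xbar - radius + 2.0 * radius * k / (steps - 1)
        for t in ts:
            best = max(best, (phi(x + t * d) - phi(x)) / t)
    return best


def neg_abs(x: float) -> float:
    return -abs(x)


# Base points x < 0 give quotients equal to 1 for -|x| in direction 1.
print(clarke_dd(neg_abs, 0.0, 1.0))  # approximately 1.0
print(clarke_dd(abs, 0.0, -1.0))     # approximately 1.0
```

For nonlinear φ the fixed sampling radius slightly overestimates \(\varphi^{\circ}\); shrinking `radius` reduces the gap.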

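The Clarke subdifferential of φ at \(\bar x\) is defined by

\[
\partial \varphi(\bar x) := \{x^{*} \in X^{*} \mid \langle x^{*}, d\rangle \leqq \varphi^{\circ}(\bar x; d) \ \text{for all } d \in X\}.
\]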
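On X = \(\mathbb{R}\), positive homogeneity of \(d \mapsto \varphi^{\circ}(\bar x; d)\) means the defining inequality only has to be checked for d = 1 and d = −1, so \(\partial \varphi(\bar x) = [-\varphi^{\circ}(\bar x; -1), \varphi^{\circ}(\bar x; 1)]\). A minimal sketch built on a sampled estimate of \(\varphi^{\circ}\) (helper names are hypothetical; for nonlinear φ the sampled interval slightly overestimates the true one and shrinks as `radius` decreases):

```python
def clarke_dd(phi, xbar, d, radius=1e-3, steps=201, ts=(1e-4, 1e-5, 1e-6)):
    """Sampled estimate of phi°(xbar; d): max of difference quotients near xbar."""
    best = float("-inf")
    for k in range(steps):
        x = xbar - radius + 2.0 * radius * k / (steps - 1)
        for t in ts:
            best = max(best, (phi(x + t * d) - phi(x)) / t)
    return best


def clarke_subdiff_1d(phi, xbar, **kw):
    """Interval [-phi°(xbar; -1), phi°(xbar; 1)], the Clarke subdifferential on R."""
    return -clarke_dd(phi, xbar, -1.0, **kw), clarke_dd(phi, xbar, 1.0, **kw)


print(clarke_subdiff_1d(abs, 0.0))              # approximately (-1.0, 1.0)
print(clarke_subdiff_1d(lambda x: x * x, 1.0))  # close to (2.0, 2.0)
```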

Let S be a nonempty closed subset of X. The Clarke tangent cone to S at \(\bar x\in S\) is defined by

\[
T(\bar x; S) := \{d \in X \mid d_{S}^{\circ}(\bar x; d) = 0\},
\]

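where dS denotes the distance function to S. The Clarke normal cone to S at \(\bar x\in S\) is defined by

\[
N(\bar x; S) := T(\bar x; S)^{\circ} = \{x^{*} \in X^{*} \mid \langle x^{*}, d\rangle \leqq 0 \ \text{for all } d \in T(\bar x; S)\}.
\]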
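For a concrete case, take S = (−∞, 0] ⊂ \(\mathbb{R}\) and \(\bar x = 0\), so that \(d_{S}(x) = \max\{x, 0\}\). The sketch below estimates \(d_{S}^{\circ}(0; d)\) by sampling difference quotients (an approximation; all helper names are hypothetical), recovers T(0; S) = (−∞, 0] on a few test directions, and then forms N(0; S) as the polar cone.

```python
def clarke_dd(phi, xbar, d, radius=1e-3, steps=201, ts=(1e-4, 1e-5, 1e-6)):
    """Sampled estimate of phi°(xbar; d): max of difference quotients near xbar."""
    best = float("-inf")
    for k in range(steps):
        x = xbar - radius + 2.0 * radius * k / (steps - 1)
        for t in ts:
            best = max(best, (phi(x + t * d) - phi(x)) / t)
    return best


def dist_S(x: float) -> float:
    """Distance from x to S = (-inf, 0]."""
    return max(x, 0.0)


def in_tangent_cone(d: float, xbar: float = 0.0, tol: float = 1e-9) -> bool:
    """d is tangent at xbar iff the Clarke derivative of d_S there vanishes."""
    return abs(clarke_dd(dist_S, xbar, d)) <= tol


directions = [-1.0, -0.5, 0.0, 0.5, 1.0]
tangent = [d for d in directions if in_tangent_cone(d)]
print(tangent)  # [-1.0, -0.5, 0.0], consistent with T(0; S) = (-inf, 0]

# N(0; S) is the polar of T(0; S): all y with y * d <= 0 for every tangent d.
normal = [y for y in directions if all(y * d <= 0 for d in tangent)]
print(normal)   # [0.0, 0.5, 1.0], consistent with N(0; S) = [0, +inf)
```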

The following lemmas will be used in the sequel.

Lemma 2.1

(See [12, p. 52]) Let φ be a locally Lipschitz function from X to \(\mathbb {R}\) and S be a nonempty subset of X. If \(\bar x\) is a local minimizer of φ on S, then

\[
0 \in \partial \varphi(\bar x) + N(\bar x; S).
\]
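In one dimension the inclusion of Lemma 2.1 reduces to interval arithmetic, since \(\partial\varphi(\bar x)\) is a compact interval and \(N(\bar x; S)\) is a closed half-line, the whole line, or {0}. The sketch below (hypothetical helper name) takes φ(x) = x on S = [0, ∞): at the minimizer \(\bar x = 0\) one has \(\partial\varphi(0) = \{1\}\) and N(0; S) = (−∞, 0], so the inclusion holds; at the interior point \(\bar x = 1\) one has N(1; S) = {0} and the inclusion fails, consistent with \(\bar x = 1\) not being a local minimizer.

```python
INF = float("inf")


def zero_in_sum(sub, cone):
    """Return True if 0 lies in [a, b] + [c, e] (endpoints may be infinite).

    The Minkowski sum of two intervals is the interval [a + c, b + e].
    """
    a, b = sub
    c, e = cone
    return a + c <= 0.0 <= b + e


# phi(x) = x on S = [0, +inf): the Clarke subdifferential is {1} at every point.
print(zero_in_sum((1.0, 1.0), (-INF, 0.0)))  # True:  xbar = 0, N(0; S) = (-inf, 0]
print(zero_in_sum((1.0, 1.0), (0.0, 0.0)))   # False: xbar = 1, N(1; S) = {0}
```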

Lemma 2.2

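(See [12, Proposition 2.3.3]) Let \(\varphi _{l}\colon X\to \mathbb {R}\), l = 1,…,p, \(p\geqq 2\), be locally Lipschitz around \(\bar x\in X\). Then, we have the following inclusion:

\[
\partial(\varphi_{1} + \cdots + \varphi_{p})(\bar x) \subset \partial \varphi_{1}(\bar x) + \cdots + \partial \varphi_{p}(\bar x).
\]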
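The inclusion in Lemma 2.2 can be strict. With \(\varphi_{1}(x) = |x|\) and \(\varphi_{2}(x) = -|x|\) on \(\mathbb{R}\), the sum is identically zero, so \(\partial(\varphi_{1} + \varphi_{2})(0) = \{0\}\), while \(\partial\varphi_{1}(0) + \partial\varphi_{2}(0) = [-1, 1] + [-1, 1] = [-2, 2]\). The sketch below confirms this with a sampled estimate of the Clarke derivative (an approximation; helper names are hypothetical).

```python
def clarke_dd(phi, xbar, d, radius=1e-3, steps=201, ts=(1e-4, 1e-5, 1e-6)):
    """Sampled estimate of phi°(xbar; d): max of difference quotients near xbar."""
    best = float("-inf")
    for k in range(steps):
        x = xbar - radius + 2.0 * radius * k / (steps - 1)
        for t in ts:
            best = max(best, (phi(x + t * d) - phi(x)) / t)
    return best


def subdiff(phi, xbar):
    """Interval [-phi°(xbar; -1), phi°(xbar; 1)], the Clarke subdifferential on R."""
    return -clarke_dd(phi, xbar, -1.0), clarke_dd(phi, xbar, 1.0)


def phi1(x):
    return abs(x)


def phi2(x):
    return -abs(x)


def phi_sum(x):
    return phi1(x) + phi2(x)  # identically zero


lo1, hi1 = subdiff(phi1, 0.0)
lo2, hi2 = subdiff(phi2, 0.0)
print(subdiff(phi_sum, 0.0))   # (-0.0, 0.0): the singleton {0}
print((lo1 + lo2, hi1 + hi2))  # approximately (-2.0, 2.0)
```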

Lemma 2.3

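(See [12, Proposition 2.3.12]) Let \(\varphi _{l}\colon X\to {\mathbb {R}}\), l = 1,…,p, be locally Lipschitz around \(\bar x\in X\). Then, the function \( \phi (\cdot ):=\max \limits \{\varphi _{l}(\cdot )|l=1, \ldots , p\}\) is also locally Lipschitz around \(\bar x\) and one has

\[
\partial \phi(\bar x) \subset \operatorname{co}\left\{ \bigcup \partial \varphi_{l}(\bar x) \mid l \in \{1, \ldots, p\},\ \varphi_{l}(\bar x) = \phi(\bar x) \right\}.
\]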
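For continuously differentiable pieces \(\varphi_{l}\) on \(\mathbb{R}\), \(\partial\varphi_{l}(\bar x) = \{\varphi_{l}'(\bar x)\}\), so the right-hand side of Lemma 2.3 is the interval spanned by the derivatives of the active pieces. The sketch below (hypothetical names) evaluates it for \(\max\{x, -x, x^{2} - 1\}\); near 0 this maximum equals |x|, whose Clarke subdifferential at 0 is [−1, 1], so the inclusion happens to be an equality there.

```python
from typing import Callable, List, Tuple

Func = Callable[[float], float]


def max_rule_hull(pieces: List[Func], derivs: List[Func], xbar: float,
                  tol: float = 1e-12) -> Tuple[float, float]:
    """Convex hull (an interval on R) of the derivatives of the pieces that
    attain the maximum at xbar, i.e. the right-hand side of Lemma 2.3."""
    values = [p(xbar) for p in pieces]
    top = max(values)
    active = [dp(xbar) for v, dp in zip(values, derivs) if top - v <= tol]
    return min(active), max(active)


pieces = [lambda x: x, lambda x: -x, lambda x: x * x - 1.0]
derivs = [lambda x: 1.0, lambda x: -1.0, lambda x: 2.0 * x]

print(max_rule_hull(pieces, derivs, 0.0))  # (-1.0, 1.0): the first two pieces are active
print(max_rule_hull(pieces, derivs, 2.0))  # (4.0, 4.0): only x**2 - 1 is active
```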

3 Optimality Conditions

Hereafter, we assume that the following hold:

(i) T is a compact topological space;

(ii) either X is separable, or T is metrizable and ∂gt(x) is upper semicontinuous (w∗) in t for each x ∈ X.

Denote by \(\mathbb {R}_{+}^{|T|}\) the set of all functions \(\mu \colon T\to \mathbb {R}_{+}\) such that μt := μ(t) = 0 for all t ∈ T except for finitely many points. The active constraint multipliers set of problem (VP) at \(\bar x\in {\Omega }\) is defined by

\[
A(\bar x) := \{\mu \in \mathbb{R}_{+}^{|T|} \mid \mu_{t} g_{t}(\bar x) = 0 \ \text{for all } t \in T\}.
\]

For each x ∈ X, put \(G(x):=\max \limits _{t\in T} g_{t}(x)\) and

\[
T(x) := \{t \in T \mid g_{t}(x) = G(x)\}.
\]
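As an illustration, the sketch below evaluates G and the set of maximizing indices on a uniform grid approximating T = [1, 2], for the constraints \(g_{t}(x) = tx\) used in Example 3.1. It assumes that T(x) denotes the indices t with \(g_{t}(x) = G(x)\); the grid, the tolerance, and all names are our own choices, so the output only approximates the continuous index set.

```python
from typing import List

T_GRID: List[float] = [1.0 + k / 100 for k in range(101)]  # discretization of T = [1, 2]


def g(t: float, x: float) -> float:
    """Constraint g_t(x) = t * x (the data of Example 3.1)."""
    return t * x


def G(x: float) -> float:
    """G(x) = max of g_t(x) over the grid."""
    return max(g(t, x) for t in T_GRID)


def active(x: float, tol: float = 1e-12) -> List[float]:
    """Grid points t with g_t(x) = G(x), up to tol."""
    top = G(x)
    return [t for t in T_GRID if top - g(t, x) <= tol]


print(G(1.0), active(1.0))    # 2.0 [2.0]
print(G(-1.0), active(-1.0))  # -1.0 [1.0]
print(len(active(0.0)))       # 101: every t is active at x = 0
```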

Fix \(\xi \in \mathbb {R}^{m}_{+}\setminus \{0\}\). The following theorem gives a necessary optimality condition of fuzzy Karush–Kuhn–Tucker type for ξ-weakly Pareto solutions of the problem (VP).

Theorem 3.1

Let \(\bar x\) be a ξ-weakly Pareto solution of the problem (VP). If the following constraint qualification condition holds:

then, for any δ > 0 small enough, there exist \(x_{\delta }\in C\cap B(\bar x, \delta )\) and \(\lambda :=(\lambda _{1}, \ldots , \lambda _{m})\in \mathbb {R}^{m}_{+}\) with \({\sum }_{i\in I}\lambda _{i}=1\) such that

where clconv(⋅) denotes the closed convex hull with the closure taken in the weak∗-topology of the dual space X∗.

Proof

For each x ∈ X, put \(\psi (x):=\max \limits _{i\in I}\{f_{i}(x)-f_{i}(\bar x)+\xi _{i}\}.\) Then, we have \(\psi (\bar x)=\max \limits _{i\in I}\{\xi _{i}\}\). Since \(\bar x\) is a ξ-weakly Pareto solution of (VP), one has

Clearly, ψ is locally Lipschitz and bounded from below on C. By (3.1), we have

By the Ekeland variational principle [15, Theorem 1.1], for any δ > 0, there exists xδ ∈ C such that \(\|x_{\delta }-\bar x\|<\delta \) and

For each x ∈ C, put

Then, xδ is a minimizer of φ on C. By Lemma 2.1, we have

By Lemma 2.2 and [21, Example 4, p. 198], one has

Thanks to Lemma 2.3, we have

We claim that there exists \(\bar {\delta }>0\) such that for all \(x\in B(\bar x, \bar {\delta })\) there is d ∈ T(x;Ω) satisfying G∘(x;d) < 0. Indeed, otherwise there exists a sequence {xk} converging to \(\bar x\) such that \(G^{\circ }(x^{k}; d)\geqq 0\) for all \(k\in \mathbb {N}\) and \(d\in T(x^{k};{\Omega })\). Hence, \(G^{\circ }(x^{k}; \bar d)\geqq 0\) for all \(k\in \mathbb {N}\). By the upper semicontinuity of G∘(⋅;⋅), we have:

contrary to condition (𝓤).

By [19, Theorem 6] and [36, Theorem 2.1], for each \(\delta \in (0, \bar \delta )\), we have

Combining this with (3.2)–(3.4), we obtain the desired assertion. □

Remark 3.1

By using approximate subdifferentials, Lee et al. [28, Theorem 8.3] derived necessary optimality conditions for ξ-weakly Pareto solutions of a convex infinite vector optimization problem. However, we are not aware of any results on optimality conditions for ξ-weakly Pareto solutions of nonconvex problems of type (VP), so Theorem 3.1 may be the first result of this kind. We also note that when T is a finite set, Theorem 3.1 is a counterpart of [11, Theorem 3.4], where the optimality condition is given in terms of the limiting subdifferential.

Theorem 3.2

Let \(\bar x\) be a ξ-quasi-weakly Pareto solution of the problem (VP). If condition (𝓤) holds at \(\bar x\) and the convex hull of \(\left \{\bigcup \partial g_{t}(\bar x)| t\in T(\bar x)\right \}\) is weak∗-closed, then there exist \(\lambda :=(\lambda _{1}, \ldots , \lambda _{m})\in \mathbb {R}^{m}_{+}\) with \({\sum }_{i\in I}\lambda _{i}=1\), and \(\mu \in A(\bar x)\) such that

Proof

For each x ∈ X, put \({\Phi } (x):=\max \limits _{i\in I}\{f_{i}(x)-f_{i}(\bar x)+\xi _{i}\|x-\bar x\|\}.\) Then, \({\Phi }(\bar x)=0\). Since \(\bar x\) is a ξ-quasi-weakly Pareto solution of (VP), we have

This means that \(\bar x\) is a minimizer of Φ on C. By Lemma 2.1, one has

By Lemma 2.3, we have

Since condition (𝓤) holds at \(\bar x\) and the convex hull of \(\left \{\bigcup \partial g_{t}(\bar x)| t\in T(\bar x)\right \}\) is weak∗-closed, we obtain

To finish the proof of the theorem, it remains to combine (3.6), (3.7), and (3.8). □

Remark 3.2

(i) When X is a finite-dimensional space and the constraint functions \(g_{t}\colon X\to \mathbb {R},\) t ∈ T, are locally Lipschitz with respect to x uniformly in t ∈ T, i.e., for each x ∈ X, there are a neighborhood U of x and a constant K > 0 such that

\[
|g_{t}(u) - g_{t}(v)| \leqq K\|u - v\| \quad \text{for all } u, v \in U \text{ and } t \in T,
\]

then the set \(\left \{\bigcup \partial g_{t}(x)| t\in T(x)\right \}\) is compact. Consequently, its convex hull is always closed (in finite dimensions, the convex hull of a compact set is compact).

(ii) Recently, by using the Chankong–Haimes scalarization scheme (see [6]), Kim and Son [25, Theorem 3.3] obtained necessary optimality conditions for ξ-quasi Pareto solutions of a locally Lipschitz semi-infinite vector optimization problem. We note here that condition (𝓤) is weaker than the following condition (𝒜i) used in [25, Theorem 3.3]:

Thus, Theorem 3.2 improves [25, Theorem 3.3]. To illustrate, we consider the following example:

Example 3.1

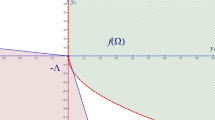

Let \(f\colon \mathbb {R}\to \mathbb {R}^{2}\) be defined by f(x) := (f1(x),f2(x)), where

and f2(x) := 0 for all \(x\in \mathbb {R}\). Assume that \({\Omega }=\mathbb {R}\), T = [1,2], and gt(x) = tx for all \(x\in \mathbb {R}\). Then, the feasible set of (VP) is \(C=(-\infty , 0]\). Let \(\bar x:=0\in C\). Clearly, for any \(\xi \in \mathbb {R}^{2}_{+}\setminus \{0\}\), \(\bar x\) is a ξ-quasi-weakly Pareto solution of (VP). It is easy to check that

Hence, for each \(d\in \mathbb {R}\), we have

Clearly, every d < 0 satisfies condition (𝓤). However, condition (𝒜i) fails for both i = 1, 2. Thus, Theorem 3.2 applies to this example, whereas [25, Theorem 3.3] does not.
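The constraint data of Example 3.1 can be checked numerically. Since the display defining condition (𝓤) is not reproduced here, the sketch below only verifies the feasible set C = (−∞, 0] and that the Clarke derivative of \(G(x) = \max_{t\in[1,2]} tx\) at \(\bar x = 0\), the quantity appearing in the proof of Theorem 3.1, is negative for d < 0 (since G(x) = max{x, 2x} is convex, it equals max{d, 2d}). It is a sampled approximation on a grid of T, and all names are hypothetical.

```python
T_GRID = [1.0 + k / 100 for k in range(101)]  # discretization of T = [1, 2]


def G(x: float) -> float:
    """G(x) = max over the grid of t * x (equals max(x, 2x))."""
    return max(t * x for t in T_GRID)


def feasible(x: float) -> bool:
    return G(x) <= 0.0


def clarke_dd(phi, xbar, d, radius=1e-3, steps=101, ts=(1e-4, 1e-5)):
    """Sampled estimate of phi°(xbar; d): max of difference quotients near xbar."""
    best = float("-inf")
    for k in range(steps):
        x = xbar - radius + 2.0 * radius * k / (steps - 1)
        for t in ts:
            best = max(best, (phi(x + t * d) - phi(x)) / t)
    return best


print([x for x in (-1.0, -0.3, 0.0, 0.1, 1.0) if feasible(x)])  # [-1.0, -0.3, 0.0]
for d in (-1.0, -0.5, 0.5):
    print(d, clarke_dd(G, 0.0, d))  # about max(d, 2d): -1.0, -0.5, 1.0
```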

The following concepts are inspired by [10].

Definition 3.1

Let f := (f1,…,fm) and gT := (gt)t∈T.

(i) We say that (f,gT) is generalized convex on Ω at \(\bar x\) if, for any x ∈Ω, \(z_{i}^{*}\in \partial f_{i}(\bar x)\), i = 1,…,m, and \(x^{*}_{t}\in \partial g_{t}(\bar x)\), t ∈ T, there exists \(\nu \in T(\bar x; {\Omega })\) satisfying

and

(ii) We say that (f,gT) is strictly generalized convex on Ω at \(\bar x\) if, for any \(x\in {\Omega }\setminus \{\bar x\}\), \(z_{i}^{*}\in \partial f_{i}(\bar x)\), i = 1,…,m, and \(x^{*}_{t}\in \partial g_{t}(\bar x)\), t ∈ T, there exists \(\nu \in T(\bar x; {\Omega })\) satisfying

and

Remark 3.3

Clearly, if Ω is convex and fi, i ∈ I, and gt, t ∈ T, are convex (resp. strictly convex), then (f,gT) is generalized convex (resp. strictly generalized convex) on Ω at any \(\bar x\in {\Omega }\) with \(\nu :=x-\bar x\) for each x ∈Ω. Furthermore, by an argument similar to that in [10, Example 3.2], one can show that the class of generalized convex functions is strictly larger than the class of convex functions.
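For convex functions the Clarke subdifferential coincides with the subdifferential of convex analysis, so every \(z^{*} \in \partial f(\bar x)\) satisfies \(f(x) - f(\bar x) \geqq \langle z^{*}, x - \bar x\rangle\); this subgradient inequality is what makes the choice \(\nu := x - \bar x\) in Remark 3.3 natural. The sketch below (hypothetical names, checked only on a finite grid) tests it for two convex functions and shows it failing for the nonconvex function −|x| with \(z^{*} = 0 \in \partial(-|\cdot|)(0)\).

```python
def subgradient_inequality_holds(f, xbar, z, xs, tol=1e-12):
    """Check f(x) - f(xbar) >= z * (x - xbar), i.e. with nu := x - xbar, on sample points."""
    return all(f(x) - f(xbar) >= z * (x - xbar) - tol for x in xs)


xs = [k / 10 for k in range(-20, 21)]  # sample points in [-2, 2]


def g0(x):
    return max(x, 2 * x)  # convex; its subdifferential at 0 is [1, 2]


def neg_abs(x):
    return -abs(x)  # not convex; 0 lies in its Clarke subdifferential at 0


print(all(subgradient_inequality_holds(abs, 0.0, z, xs) for z in (-1.0, 0.0, 1.0)))  # True
print(all(subgradient_inequality_holds(g0, 0.0, z, xs) for z in (1.0, 1.5, 2.0)))    # True
print(subgradient_inequality_holds(neg_abs, 0.0, 0.0, xs))                          # False
```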

Theorem 3.3

Let \(\bar x\in C\) and assume that there exist \(\lambda :=(\lambda _{1}, \ldots , \lambda _{m})\in \mathbb {R}^{m}_{+}\) with \({\sum }_{i\in I}\lambda _{i}=1\), and \(\mu \in A(\bar x)\) satisfying (3.5).

(i) If (f,gT) is generalized convex on Ω at \(\bar x\), then \(\bar x\) is a ξ-quasi-weakly Pareto solution of (VP).

(ii) If (f,gT) is strictly generalized convex on Ω at \(\bar x\), then \(\bar x\) is a ξ-quasi Pareto solution of (VP).

Proof

We will follow the proof scheme of [11, Theorem 3.13]. By (3.5), there exist \(z_{i}^{*}\in \partial f_{i}(\bar x)\), i = 1,…,m, \(x^{*}_{t}\in \partial g_{t}(\bar x)\), t ∈ T, \(b^{*}\in B_{X^{*}}\), and \(\omega ^{*}\in N(\bar x; {\Omega })\) such that

or, equivalently,

We first prove (i). On the contrary, if \(\bar x\) is not a ξ-quasi-weakly Pareto solution of (VP), then there is x ∈ C such that

From this and the fact that \({\sum }_{i\in I}\lambda _{i}=1\), we obtain

Since (f,gT) is generalized convex on Ω at \(\bar x\), for such x, there exists \(\nu \in T(\bar x; {\Omega })\) such that

Hence,

Combining this with the facts that \(x, \bar x\in C\), and \(\mu \in A(\bar x)\), we conclude that

contrary to (3.9).

We now prove (ii). Assume on the contrary that \(\bar x\) is not a ξ-quasi Pareto solution of (VP), i.e., there exists y ∈ C satisfying

This implies that \(y\neq \bar x\) and

Since (f,gT) is strictly generalized convex on Ω at \(\bar x\), for such y, there exists \(\vartheta \in T(\bar x; {\Omega })\) such that

An analysis similar to that in the proof of (3.10) shows that

contrary to (3.11). □

Remark 3.4

The conclusions of Theorem 3.3 remain valid if (f,gT) is generalized convex in the sense of Chuong and Kim [10, Definition 3.3].

We now present an example which demonstrates the importance of the generalized convexity of (f,gT) in Theorem 3.3. In particular, condition (3.5) alone is not sufficient to guarantee that \(\bar x\) is a ξ-quasi-weakly Pareto solution of (VP) if the generalized convexity of (f,gT) on Ω at \(\bar x\) is violated.

Example 3.2

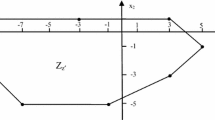

Let \(f\colon \mathbb {R}\to \mathbb {R}^{2}\) be defined by f(x) := (f1(x),f2(x)), where

for i = 1,2. Assume that \({\Omega }=\mathbb {R}\), T = [0,1], and gt(x) = x − t for all \(x\in \mathbb {R}\) and t ∈ [0,1]. Then, the feasible set of (VP) is \(C=(-\infty , 0]\). Let \(\bar x:=0\in C\). Clearly, fi, i = 1,2, and gt, t ∈ T, are locally Lipschitz around \(\bar x\). An easy computation shows that

Take any \(\xi =(\xi _{1}, \xi _{2})\in \mathbb {R}^{2}_{+}\setminus \{0\}\) with \(\xi _{i}<\frac {1}{\pi }\) for i = 1,2. Then \(\bar x\) satisfies condition (3.5) with \(\lambda _{1}=\lambda _{2}=\frac {1}{2}\) and μt = 0 for all t ∈ T. However, \(\bar x\) is not a ξ-quasi-weakly Pareto solution of (VP). Indeed, let \(\hat {x}=-\frac {1}{\pi }\in C\). Then

as required. We now show that (f,gT) is not generalized convex on Ω at \(\bar x\). Indeed, by choosing \(z_{i}^{*}=0\in \partial f_{i}(\bar x)\) for i = 1,2, we have

4 Applications

4.1 Cone-Constrained Convex Vector Optimization Problems

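In this subsection, we consider the following cone-constrained convex vector optimization problem:

\[
\text{(CCVP)}\qquad \operatorname{Min}_{\mathbb{R}^{m}_{+}}\ \{ f(x) \mid x \in {\Omega},\ g(x) \in -K \},
\]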

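where the function f and the set Ω are as in the previous sections, K is a closed convex cone in a normed space Y, and g is a continuous and K-convex mapping from X to Y. Recall that the mapping g is said to be K-convex if

\[
\lambda g(x) + (1-\lambda) g(y) - g(\lambda x + (1-\lambda) y) \in K \quad \text{for all } x, y \in X,\ \lambda \in [0, 1].
\]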

Let Y∗ be the dual space of Y and K+ be the positive polar cone of K, i.e.,

\[
K^{+} := \{s \in Y^{*} \mid \langle s, y\rangle \geqq 0 \ \text{for all } y \in K\}.
\]

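Then, K+ is weak∗-closed. Moreover, since K is closed and convex, the bipolar theorem yields

\[
g(x) \in -K \iff g_{s}(x) \leqq 0 \quad \text{for all } s \in K^{+},
\]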

where gs(x) := 〈s,g(x)〉. Hence, the problem (CCVP) is equivalent to the following vector optimization problem:

In order to apply the results of Section 3 to problem (4.1), we need a compact index set, which is not the case for the cone K+. However, since K+ is weak∗-closed, the Banach–Alaoglu theorem shows that the set \(K^{+}\cap B_{Y^{\ast }}\) is weak∗-compact, and

Hence, we can rewrite problem (4.1) as

where \({T}:= K^{+} \cap B_{Y^{\ast }}.\)
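For \(K = \mathbb{R}^{2}_{+} \subset Y = \mathbb{R}^{2}\) (so that \(K^{+} = \mathbb{R}^{2}_{+}\)), the equivalence between \(g(x) \in -K\) and \(\langle s, g(x)\rangle \leqq 0\) for all \(s \in K^{+}\cap B_{Y^{*}}\) can be tested directly. In the sketch below (our own example and hypothetical names), it suffices to sample unit vectors s of K+, because \(\langle s, y\rangle\) is positively homogeneous in s; since K+ is generated by the two coordinate unit vectors, which are among the samples, the dual test is exact here up to rounding.

```python
import math


def in_minus_K_direct(y):
    """y in -K for K = R^2_+, i.e. both components are <= 0."""
    return y[0] <= 0.0 and y[1] <= 0.0


def in_minus_K_dual(y, n=181, tol=1e-12):
    """Test <s, y> <= 0 for sampled unit vectors s in K^+ (here K^+ = R^2_+)."""
    for k in range(n):
        theta = (math.pi / 2) * k / (n - 1)
        s = (math.cos(theta), math.sin(theta))
        if s[0] * y[0] + s[1] * y[1] > tol:
            return False
    return True


points = [(-1.0, -2.0), (0.0, 0.0), (-1.0, 0.0), (-1.0, 0.5), (3.0, -1.0)]
for y in points:
    print(y, in_minus_K_direct(y), in_minus_K_dual(y))  # the two tests agree
```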

Proposition 4.1

Let \(\xi \in \mathbb {R}^{m}_{+}\setminus \{0\}\) and \(\bar x\) be a ξ-quasi-weakly Pareto solution of the problem (CCVP). If condition (𝓤) holds at \(\bar x\) and the convex hull \(\operatorname {co}\left \{\bigcup \partial g_{s}(\bar x)| s\in {I}(\bar x)\right \}\) is weak∗-closed, then there exist \(\lambda :=(\lambda _{1}, \ldots , \lambda _{m})\in \mathbb {R}^{m}_{+}\) with \({\sum }_{i\in I}\lambda _{i}=1\), and \(\zeta \in K^{+}\) such that

Proof

By Theorem 3.2, there exist \(\lambda :=(\lambda _{1}, \ldots , \lambda _{m})\in \mathbb {R}^{m}_{+}\) with \({\sum }_{i\in I}\lambda _{i}=1\), and \(\mu \in {A}(\bar x)\) such that

Note that for each s ∈ T, the function gs is continuous and convex on X. Moreover, since \(\mu \in {A}(\bar {x})\), only finitely many μs, s ∈ T, are nonzero. Hence,

where \(\zeta :={\sum }_{s \in {T}}\mu _{s} s\). Clearly, ζ ∈ K+. The proof is complete. □

Remark 4.1

Assume that Ω is convex. Since g is continuous and K-convex, we see that if fi,i ∈ I, are convex (resp. strictly convex), then (f,gT) is generalized convex (resp. strictly generalized convex) on Ω at any \(\bar x\in {\Omega }\) with \(\nu :=x-\bar x\) for each x ∈Ω. Thus, by Theorem 3.3, (4.2) is a sufficient condition for a point \(\bar {x}\in C\) to be a ξ-quasi-weakly Pareto (resp. ξ-quasi Pareto) solution of (CCVP) provided that fi,i ∈ I, are convex (resp. strictly convex).

4.2 Semidefinite Vector Optimization Problem

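Let \(f := (f_{1}, \ldots, f_{m})\colon \mathbb{R}^{n} \to \mathbb{R}^{m}\), where each fi, i ∈ I, is locally Lipschitz, let Ω ⊂ \(\mathbb{R}^{n}\) be a nonempty closed convex set, and let \(g\colon \mathbb{R}^{n} \to S^{p}\) be a continuous mapping, where \(S^{p}\) denotes the set of p × p symmetric matrices. For a p × p matrix A = (aij), the trace of A is defined by

\[
\operatorname{trace}(A) := \sum_{i=1}^{p} a_{ii}.
\]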

We suppose that \(S^{p}\) is equipped with the scalar product A ∙ B := trace(AB), where AB is the matrix product of A and B. A matrix A ∈ \(S^{p}\) is said to be negative semidefinite (resp. positive semidefinite) if \(\langle v, Av\rangle \leqq 0\) (resp. \(\langle v, Av\rangle \geqq 0\)) for all \(v\in \mathbb{R}^{p}\). We then write A ≼ 0 (resp. A ≽ 0).
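The scalar product and the semidefinite order can be illustrated on 2 × 2 matrices. The pure-Python sketch below (hypothetical names) computes A ∙ B = trace(AB) and tests A ≼ 0 via the principal minors of −A, a criterion used here for symmetric 2 × 2 matrices only. It also shows Λ ∙ A ≦ 0 for a positive semidefinite Λ and a negative semidefinite A, as expected from the self-duality of \(S^{p}_{+}\).

```python
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def trace(A: Matrix) -> float:
    return sum(A[i][i] for i in range(len(A)))


def dot(A: Matrix, B: Matrix) -> float:
    """Scalar product A . B := trace(AB)."""
    return trace(matmul(A, B))


def is_nsd_2x2(A: Matrix, tol: float = 1e-12) -> bool:
    """A <= 0 for a symmetric 2x2 A: all principal minors of -A are >= 0."""
    a, b, c = A[0][0], A[0][1], A[1][1]
    return a <= tol and c <= tol and a * c - b * b >= -tol


A = [[-1.0, 0.5], [0.5, -1.0]]
B = [[-1.0, 2.0], [2.0, -1.0]]
Lam = [[1.0, 0.0], [0.0, 2.0]]  # positive semidefinite

print(is_nsd_2x2(A), is_nsd_2x2(B))  # True False
print(dot(Lam, A))                   # -3.0
```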

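We now consider the following semidefinite vector optimization problem:

\[
\text{(SDVP)}\qquad \operatorname{Min}_{\mathbb{R}^{m}_{+}}\ \{ f(x) \mid x \in {\Omega},\ g(x) \preceq 0 \}.
\]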

Let us denote by \(S^{p}_{+}\) the set of all positive semidefinite matrices in \(S^{p}\). It is well known that \(S^{p}_{+}\) is a proper convex cone, i.e., it is closed, convex, pointed, and solid (see [3]), and that \(S^{p}_{+}\) is self-dual, i.e., \((S^{p}_{+})^{+}=S^{p}_{+}\). Hence, problem (SDVP) can be rewritten in the form of problem (CCVP) with \(K:=S^{p}_{+}\). For Λ ∈ K, the function gΛ is the function from \(\mathbb{R}^{n}\) to \(\mathbb{R}\) defined by

\[
g_{\Lambda}(x) := \Lambda \bullet g(x) = \operatorname{trace}(\Lambda g(x)).
\]

If g is affine, i.e., \(g(x):=F_{0}+{\sum }_{i=1}^{n} F_{i}x_{i}\), where F0,F1,…,Fn ∈ \(S^{p}\) are given matrices, then the subdifferential of gΛ at x is

\[
\partial g_{\Lambda}(x) = \{(\Lambda \bullet F_{1}, \ldots, \Lambda \bullet F_{n})\}.
\]
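For affine g, the function \(g_{\Lambda}(x) = \Lambda \bullet F_{0} + \sum_{i} x_{i}\, \Lambda \bullet F_{i}\) is affine, so its Clarke subdifferential is the singleton consisting of its gradient \((\Lambda \bullet F_{1}, \ldots, \Lambda \bullet F_{n})\). The sketch below (hypothetical example matrices and names) computes this gradient for p = n = 2 and checks it with unit forward differences, which are exact for an affine function.

```python
from typing import List

Matrix = List[List[float]]


def dot(A: Matrix, B: Matrix) -> float:
    """A . B := trace(AB) = sum over i, k of A[i][k] * B[k][i]."""
    n = len(A)
    return sum(A[i][k] * B[k][i] for i in range(n) for k in range(n))


def g(x: List[float], F0: Matrix, Fs: List[Matrix]) -> Matrix:
    """Affine matrix map g(x) = F0 + sum_i x_i F_i."""
    p = len(F0)
    return [[F0[r][c] + sum(xi * F[r][c] for xi, F in zip(x, Fs)) for c in range(p)]
            for r in range(p)]


def grad_g_lambda(Lam: Matrix, Fs: List[Matrix]) -> List[float]:
    """Gradient of g_Lambda(x) = Lam . g(x), namely (Lam . F_1, ..., Lam . F_n)."""
    return [dot(Lam, F) for F in Fs]


F0 = [[-1, 0], [0, -1]]
Fs = [[[1, 0], [0, -1]], [[0, 1], [1, 0]]]
Lam = [[2, 1], [1, 1]]  # positive semidefinite (trace 3, determinant 1)

print(grad_g_lambda(Lam, Fs))  # [1, 2]
x = [0.5, -1.0]
base = dot(Lam, g(x, F0, Fs))
print([dot(Lam, g([x[0] + 1, x[1]], F0, Fs)) - base,
       dot(Lam, g([x[0], x[1] + 1], F0, Fs)) - base])  # [1.0, 2.0]
```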

In that case, the functions gΛ, Λ ∈ T, are affine with gradients bounded uniformly in Λ, so by Remark 3.2(i) the second assumption of Proposition 4.1 can be removed. Thus, we obtain the following result.

Proposition 4.2

Let \(\xi \in \mathbb {R}^{m}_{+}\setminus \{0\}\). If \(\bar x\) is a ξ-quasi-weakly Pareto solution of the problem (SDVP), then there exist \(\lambda :=(\lambda _{1}, \ldots , \lambda _{m})\in \mathbb {R}^{m}_{+}\) with \({\sum }_{i\in I}\lambda _{i}=1\), and \({\Lambda } \in S^{p}_{+}\) such that

Remark 4.2

Since g is an affine mapping, by Theorem 3.3, (4.3) is a sufficient condition for a point \(\bar {x}\in C\) to be a ξ-quasi-weakly Pareto (resp. ξ-quasi Pareto) solution of (SDVP) provided that fi,i ∈ I, are convex (resp. strictly convex).

References

Araya, Y.: Ekeland’s variational principle and its equivalent theorems in vector optimization. J. Math. Anal. Appl. 346, 9–16 (2008)

Bonnans, J.F., Shapiro, A.: Perturbation Analysis of Optimization Problems. Springer (2000)

Blekherman, G., Parrilo, P.A., Thomas, R.R.: Semidefinite Optimization and Convex Algebraic Geometry. SIAM, Philadelphia (2013)

Canovas, M.J., López, M.A., Mordukhovich, B.S., Parra, J.: Variational analysis in semi-infinite and infinite programming. I. Stability of linear inequality systems of feasible solutions. SIAM J. Optim. 20, 1504–1526 (2009)

Caristi, G., Ferrara, M., Stefanescu, A.: Semi-infinite multiobjective programming with generalized invexity. Math. Rep. 12, 217–233 (2010)

Chankong, V., Haimes, Y.Y.: Multiobjective Decision Making. North-Holland Publishing Co., New York (1983)

Chuong, T.D., Huy, N.Q., Yao, J.-C.: Subdifferentials of marginal functions in semi-infinite programming. SIAM J. Optim. 20, 1462–1477 (2009)

Chuong, T.D., Huy, N.Q., Yao, J.-C.: Stability of semi-infinite vector optimization problems under functional perturbations. J. Glob. Optim. 45, 583–595 (2009)

Chuong, T.D., Huy, N.Q., Yao, J.-C.: Pseudo-lipschitz property of linear semi-infinite vector optimization problems. Eur. J. Oper. Res. 200, 639–644 (2010)

Chuong, T.D., Kim, D.S.: Nonsmooth semi-infinite multiobjective optimization problems. J. Optim. Theory Appl. 160, 748–762 (2014)

Chuong, T.D., Kim, D.S.: Approximate solutions of multiobjective optimization problems. Positivity 20, 187–207 (2016)

Clarke, F.H.: Optimization and Nonsmooth Analysis. Wiley-Interscience, New York (1983)

Dinh, N., Goberna, M.A., López, M. A., Son, T.Q.: New Farkas-type constraint qualifications in convex infinite programming. ESAIM Control Optim. Calc. Var. 13, 580–597 (2007)

Dinh, N., Mordukhovich, B.S., Nghia, T.T.A.: Qualification and optimality conditions for DC programs with infinite constraints. Acta. Math. Vietnam. 34, 123–153 (2009)

Ekeland, I.: On the variational principle. J. Math. Anal. Appl. 47, 324–353 (1974)

Goberna, M.A., López, M.A.: Linear Semi-infinite Optimization. Wiley, Chichester (1998)

Ha, T.X.D.: Variants of the Ekeland variational principle for a set-valued map involving the Clarke normal cone. J. Math. Anal. Appl. 316, 346–356 (2006)

Hettich, R., Kortanek, K.O.: Semi-infinite programming: theory, methods, and applications. SIAM Rev. 35, 380–429 (1993)

Hiriart-Urruty, J.B.: On optimality conditions in nondifferentiable programming. Math. Program. 14, 73–86 (1978)

Huy, N.Q., Kim, D.S., Tuyen, N.V.: Existence theorems in vector optimization with generalized order. J. Optim. Theory Appl. 174, 728–745 (2017)

Ioffe, A.D., Tikhomirov, V.M.: Theory of Extremal Problems. Stud. Math Appl. 6, North-Holland, Amsterdam (1979)

Jahn, J.: Existence theorems in vector optimization. J. Optim. Theory Appl. 50, 397–406 (1986)

Kanzi, N., Nobakhtian, S.: Optimality conditions for nonsmooth semi-infinite programming. Optimization 59, 717–727 (2010)

Kanzi, N.: Constraint qualifications in semi-infinite systems and their applications in nonsmooth semi-infinite programs with mixed constraints. SIAM J. Optim. 24, 559–572 (2014)

Kim, D.S., Son, T.Q.: An approach to 𝜖-duality theorems for nonconvex semi-infinite multiobjective optimization problems. Taiwanese J. Math. 22, 1261–1287 (2018)

Kim, D.S., Phạm, T.S., Tuyen, N.V.: On the existence of Pareto solutions for polynomial vector optimization problems. Math. Program. 177, 321–341 (2019)

Kim, D.S., Mordukhovich, B.S., Phạm, T.S., Tuyen, N.V.: Existence of efficient and properly efficient solutions to problems of constrained vector optimization. https://arxiv.org/abs/1805.00298

Lee, G.M., Kim, G.S., Dinh, N.: Optimality conditions for approximate solutions of convex semi-infinite vector optimization problems. In: Ansari, Q.H., Yao, J.-C. (eds.) Recent Developments in Vector Optimization, Vector Optimization, vol. 1, pp. 275–295. Springer, Berlin (2012)

Li, C., Zhao, X.P., Hu, Y.H.: Quasi-Slater and Farkas–Minkowski qualifications for semi-infinite programming with applications. SIAM J. Optim. 23, 2208–2230 (2013)

Long, X.J., Xiao, Y.B., Huang, N.J.: Optimality conditions of approximate solutions for nonsmooth semi-infinite programming problems. J. Oper. Res. Soc. China 6, 289–299 (2018)

Loridan, P.: Necessary conditions for ε-optimality. Math. Program. Study 19, 140–152 (1982)

Loridan, P.: 𝜖-solutions in vector minimization problems. J. Optim. Theory Appl. 43, 265–276 (1984)

Luc, D.T.: Theory of Vector Optimization. Springer (1989)

Mishra, S.K., Jaiswal, M., Le Thi, H.A.: Nonsmooth semi-infinite programming problem using limiting subdifferentials. J. Glob. Optim. 53, 285–296 (2012)

Reemtsen, R., Rückmann, J.J.: Semi-Infinite Programming. Nonconvex Optimization and Its Applications, vol. 25. Kluwer Academic Publishers, Boston (1998)

Son, T.Q., Strodiot, J.J., Nguyen, V.H.: ε-Optimality and ε-Lagrangian duality for a nonconvex programming problem with an infinite number of constraints. J. Optim. Theory Appl. 141, 389–409 (2009)

Shitkovskaya, T., Kim, D.S.: ε-efficient solutions in semi-infinite multiobjective optimization. RAIRO Oper. Res. 52, 1397–1410 (2018)

Tuyen, N.V.: Convergence of the relative Pareto efficient sets. Taiwanese J. Math. 20, 1149–1173 (2016)

Acknowledgments

The authors would like to thank the anonymous referee and the handling Associate Editor for their valuable remarks and detailed suggestions that allowed us to improve the original version.

Funding

The research of Ta Quang Son was supported by Vietnam National Foundation for Science and Technology Development (NAFOSTED) under grant number 101.01-2017.08. The research of Nguyen Van Tuyen was supported by the Ministry of Education and Training of Vietnam (grant number B2018-SP2-14). The research of Ching-Feng Wen was supported by the Taiwan MOST (grant number 107-2115-M-037-001) as well as the grant from Research Center for Nonlinear Analysis and Optimization, Kaohsiung Medical University, Taiwan.


Cite this article

Son, T.Q., Van Tuyen, N. & Wen, CF. Optimality Conditions for Approximate Pareto Solutions of a Nonsmooth Vector Optimization Problem with an Infinite Number of Constraints. Acta Math Vietnam 45, 435–448 (2020). https://doi.org/10.1007/s40306-019-00358-x


DOI: https://doi.org/10.1007/s40306-019-00358-x

Keywords

- Approximate Pareto solutions

- Optimality conditions

- Clarke subdifferential

- Semi-infinite vector optimization

- Infinite vector optimization