Abstract

Background

At the turn of the century, a new format of cricket [Twenty20 (T20)] was introduced that has led to more matches being played. Since then, it has been debated whether T20 cricket has increased the risk of overuse injuries.

Objectives

The primary aim of this study was to meta-analyse the cricket injury rates in the twenty-first century. The secondary aims were to explore the risk factors and mechanisms of injury by analysing correlates such as age, format, era of play, country, player type, etc., and to conduct a qualitative analysis of the published studies.

Methods

Several databases were searched using the keywords “cricket” and “injur*”, and 24 papers reporting cricket injuries met the inclusion criteria. Fifteen papers included data on exposure time and were used to calculate injury rates and perform sub-group analysis.

Results

Pooled data on 12,511 players revealed 7627 injuries, and the 1.12 million hours of cricket play from 15 studies reporting exposure time showed an injury rate of 53.16 (95% confidence interval 51.84–54.52) per 10,000 h of play. There were no statistically significant differences in injury rates based on age, format, era of play, country, player type and injury definitions. Bowling biomechanics and workload were identified as the major risk factors for bowling injuries.

Conclusions

This review shows that injury rates in junior and amateur cricket are higher than the injury rates of comparable cohorts playing other popular non-contact or quasi-contact team sports. There is not enough evidence to conclude that T20 cricket has increased injury rates.


The overall injury rate for cricket in the twenty-first century is 53.16 (95% confidence interval 51.84–54.52) per 10,000 h of play.

Injury rates in junior and amateur cricket are higher than the injury rates of comparable cohorts playing popular non-contact or quasi-contact team sports.

Bowling biomechanics and workload variations are the major risk factors for bowling injuries.

Improvements in warm-up and conditioning, muscle strength and stability, and fielding techniques may reduce the burden of injuries in cricket.

1 Introduction

When a 156 g, rock-hard cricket ball strikes the human body at a speed greater than 100 km/h, there is potential for serious injury. However, as in many quasi-contact sports, the risk of injury in cricket is not limited to contact injuries. In 1984, the Australian cricket team doctor, A.B. Corrigan, first pointed out that an increase in the number of matches was linked to an increase in the incidence of overuse injuries [1]. This trend has been progressively amplified by increased participation in the sport [2,3,4] and by the introduction of the fast-paced Twenty20 (T20) format at the turn of the millennium [5].

The first official T20 cricket match was played between English counties in 2003, followed by the first international match in 2004. This version of the game quickly gained popularity in all test-playing nations, all of which now have domestic T20 leagues. The viewership of popular T20 leagues, such as the Indian Premier League (IPL), exceeds 175 million [4, 6], and the IPL’s brand value was estimated at US $4.2 billion in 2016 [7]. Such leagues are usually scheduled in the off-season and attract international players, leading to fewer rest days between games and fluctuating workload patterns. T20 cricket is also often characterised as an ‘explosive version of the game’, referring to its dynamic, action-filled format, which is played at higher intensity per unit time and requires more power and agility than multi-day cricket [8,9,10]. The high number of T20 matches, combined with players participating in more matches over a shorter period with little rest in the off-season, may have increased players’ susceptibility to overuse injuries [5]. There is also anecdotal evidence that fast bowlers have a lower bowling workload during T20 matches, in both volume and intensity, because of fewer overs and a higher proportion of slower deliveries than in longer formats. This results in a spike in workload when they return to longer-format cricket, which may lead to injuries. These factors have led coaching staff to modify player training by including high volumes of strength and power training [11, 12].

To date, most cricket injury epidemiology studies have used the definition of injury from the consensus statement of Orchard et al. [13]: “any injury or other medical condition that either: (a) prevents a player from being fully available for selection for a major match or (b) during a major match, causes a player to be unable to bat, bowl or keep wicket when required by either the rules or the team’s captain”. The limitations of this definition have been identified previously in the literature [14, 15]. The key limitations are:

1. No account of non-time-loss injuries (e.g. if a player is injured on the last ball of a series and makes a complete recovery during the break before the next match, the injury will not be recorded).
2. No consideration of exceptional cases: an injured all-rounder may still play as a batsman despite being unable to bowl [16].
3. An imprecise description of ‘other medical conditions’, which may not be related to injuries sustained while playing or training for cricket (e.g. a player may miss a game because of headache or influenza, or even a rare event such as a lightning strike [17]).

This definition could have been improved by restricting the term to ‘musculoskeletal injuries’ rather than “any injury or other medical condition”, which would have made the link between exposure and injury clearer. In addition, some cricket injury epidemiological studies have used other variations of injury definitions [18,19,20,21,22]. Kountouris et al. [19] studied the relationship between quadratus lumborum muscle asymmetry and lumbar spine (LS) injury, but their inclusion criteria did not require the player to miss a match or be unable to bowl; an injury was recorded when a fast bowler reported lower back pain and had to be referred to a sports medicine specialist. Leary et al. [23] and Saw et al. [20] did not restrict injuries to ‘match time-loss’ injuries, but included any condition that caused a player to present to the team physiotherapist. Bayne et al. [18] further expanded the definition to count asymptomatic participants with radiological evidence of lumbar bone stress as injured.

Due to the limitations of the Orchard et al. [13] consensus definition, a new consensus statement on injury surveillance was published in 2016 [24]. It added new injury definitions to cater for a variety of conditions and reporting formats. These included “general time-loss” injuries, under which lost training time is also counted, and “medical attention” injuries, which cover all non-time-loss injuries. These additions should help broaden the scope of injury surveillance in cricket. To date, only one publication [25] has used the updated consensus statement; it reports injuries in Australian elite male cricketers, with a match time-loss injury rate of 155 per 1000 days of play and an average annual injury prevalence of 12.5% over the past decade. The variability of reporting in the cricket injury literature makes it difficult for coaches, physicians and policy makers to understand the real burden of cricket injury and to compare it with other sporting codes. This heterogeneity includes reporting injuries as raw injury numbers [26, 27], incidence [18, 19, 21, 28, 29] or prevalence [30,31,32], or as injury rates (IRs) in multiple formats, such as injuries per hours of exposure [33,34,35], per days of play [23, 36], per number of participations [37] or per number of balls bowled [22, 32].

To understand the relationship between exposure (active play time) and injuries, injuries should be reported as an injury rate (IR) per hour of exposure. This method of reporting is increasingly recognised as the standard in the sports medicine literature [38,39,40,41]. Establishing it as a consistent convention in cricket would allow mean IRs to be compared across sporting codes, for example between an hour of cricket and an hour of soccer. There is therefore a need to synthesise the existing literature and pool published data to report an IR expressed in terms of exposure hours.

To date, there have been multiple epidemiological studies and literature reviews on cricket injury; however, there have been no systematic reviews of cricket injury data since the advent of T20 cricket. As the game has evolved in the past 15 years, the aim of this paper is to document the IRs among cricketers from different age groups, skill levels and geographical locations. These meta-data will assist in policy development for injury prevention in cricket. The secondary aim of this paper is to explore the factors that influence or cause injuries, such as age, format, era of play, country, player type, etc. Unpacking this information systematically may lead to better understanding of injury mechanisms and future development of injury prevention strategies.

2 Methods

2.1 Protocol and Registration

This systematic review was registered at PROSPERO (CRD42017055176) and is reported in accordance with the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines.

2.2 Search Strategy and Inclusion/Exclusion Criteria

The databases MEDLINE, SPORTDiscus, PubMed, Web of Science, EMBASE and Google Scholar were searched from January 2000 to September 2016 for relevant studies of cricket injuries. The initial search used the keywords “cricket” AND “injur*”. All papers reporting cricket-related injuries in male, able-bodied, recreational to elite cricketers aged between 12 and 50 years were included.

Papers excluded from the review included case reports, literature reviews and conference abstracts; studies from which injury data were not extractable; and studies of biomechanics, workloads and injury mechanisms rather than injury epidemiology with no extractable player exposure data.

2.3 Selection of Studies

All articles were screened independently by two researchers (NS, TS). The publications that were not eliminated by review of the title or abstract were separately appraised for inclusion. Where there was a disagreement on inclusion, a third researcher (RS) was contacted. The references of all included publications were examined for potentially eligible papers and PRISMA guidelines were used to carry out article screening.

2.4 Data Extraction

Extracted information on participants and study characteristics included age range, level of competition, injury data, type of study and length of follow-up. The injury data included details on the injury definition, prevalence or incidence, IR, anatomical region and injury type. For studies that did not report exposure hours, qualitative data on risk factors and mechanisms of injury were extracted. Content analysis was performed by categorisation of the content into themes.

2.5 Assessment of Methodological Quality

Study quality was assessed using the Downs and Black checklist [42], which was modified to include nine of the 27 criteria that logically fitted the study design in the review (Electronic Supplementary Material Appendix S1). This was done by two researchers (NS and LS); where there was a disagreement on scoring, a third researcher (RS) was contacted. No studies were eliminated based on study quality.

2.6 Definitions

2.6.1 Injury Definition

For data extraction we used the injury definitions from the “International Consensus Statement on Injury Surveillance in Cricket: A 2016 Update”, which include match time-loss, general time-loss, medical attention, player-reported and imaging abnormality injuries [24]. This more inclusive definition allowed the inclusion criteria to capture a broad range of cricket injury epidemiological studies. For sub-group analysis, however, we categorised studies as using either the first consensus definition by Orchard et al. [13] (referred to here as ‘consensus definition 2005’) or other definitions of injury listed in Table 2. This was necessary to compare studies that used different variations of the definitions.

2.6.2 Injury Rate Definition (Exposure Hours)

IR was defined as the number of injuries per hour of athletic exposure. IR is generally expressed per 1000 or 10,000 h of athletic exposure (match time + training time, or match time only). We used IR per 10,000 h of athletic exposure because cricket injury rates are relatively low compared with those of contact sports [43]. IR was extracted from the included studies or, if it was not reported, calculated using the IR formula [40]:

$$\mathrm{IR} = \frac{\text{number of injuries}}{\text{total exposure hours}} \times 10{,}000$$

This information allowed studies to be pooled in sub-groups for calculation of pooled IR. Some studies report injury match incidence per 10,000 player hours [32], which is synonymous with IR per 10,000 exposure hours. For the purpose of this review we used IR per 10,000 exposure hours.

2.7 Statistics

All data were extracted to Microsoft Excel® 2013 (Microsoft Corp., Redmond, WA, USA), which was also used for basic calculations. SPSS® (Statistical Package for the Social Sciences) version 24.0 (SPSS Inc., Chicago, IL, USA) was used for statistical analysis. For pooled analysis, only studies with extractable information on player exposure hours or injuries were included. Injuries per player day were converted to injuries per 10,000 exposure hours using the 2002 hours-of-play conversion of Orchard et al. [44], in which a day of play in a first-class game or test match is equivalent to 6 h of play and a one-day (OD) match to 6 h 40 min (6.66 h) [44]. A T20 match was taken as 2.66 h (scaling the 6.66 h for the 100 overs of OD cricket down to 40 overs). A similar figure is obtained from the International Cricket Council (ICC) guideline of 15 overs/h, i.e. 40 overs/15 = 2.66 h. Once the format of play was established, injuries per x days were first converted to injuries per day (dividing injuries by the number of days). This value was then divided by 6.66 for OD matches, 6 for test/first-class matches or 2.66 for T20 matches to give injuries per hour.

For studies that combined OD and test matches without reporting separate IRs for each format, the average of the two conversion rates (6.33 h) was used.

As a theoretical example, if there were ten injuries in 1000 player days, then the IR per day would be 10/1000 or 0.01 injuries per day. Injuries per hour can be calculated by dividing 0.01 by 6.33, which equals 0.00158 injuries per hour or 0.00158 × 10,000 = 15.8 injuries per 10,000 h of play.
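The day-to-hour conversion above can be expressed as a short function. This is a minimal sketch, not the authors’ spreadsheet: the function name and format keys are hypothetical, and the hours per day are the values assumed in this section (6, 6.66, 2.66 and the 6.33 average for mixed OD/test data).

```python
# Hours of play per match day, as assumed in Sect. 2.7 (after Orchard et al. [44]).
HOURS_PER_DAY = {
    "test_first_class": 6.0,
    "one_day": 6.66,
    "t20": 2.66,
    "mixed_od_test": 6.33,  # average used when OD and test data are not separated
}


def injuries_per_10000_h_from_days(injuries: float, player_days: float, fmt: str) -> float:
    """Convert an injury count over player days into injuries per 10,000 h of play."""
    if player_days <= 0:
        raise ValueError("player_days must be positive")
    per_day = injuries / player_days          # e.g. 10 / 1000 = 0.01 injuries per day
    per_hour = per_day / HOURS_PER_DAY[fmt]   # divide by hours of play per day
    return per_hour * 10_000                  # rescale to 10,000 h of exposure


# Worked example from the text: 10 injuries in 1000 player days, mixed formats.
print(round(injuries_per_10000_h_from_days(10, 1000, "mixed_od_test"), 1))  # 15.8
```

Keeping the hours-per-day values in one table makes it easy to see how sensitive a converted IR is to the assumed match length for each format.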

2.7.1 Data Conversions

Some papers did not yield easily convertible statistics (either injuries/player hours or injuries/player days) and required individual conversions. Orchard et al. [31] did not report the number of injuries or exposure hours directly, but reported seasonal injury incidence, team days played and player days of exposure. We back-calculated the number of injuries from the seasonal injury incidence by team using the formula: injury incidence per 1000 player days = (number of injuries/player days of exposure) × 1000. We then converted team days played to hours of exposure by (1) multiplying team days by 6.5, the average number of players per team exposed on the field at any given time (13 players are on the field, 11 from the fielding team and two from the batting team, i.e. 6.5 per team) [13]; and (2) multiplying the result by the hours of play per day for the relevant format (Sect. 2.7). After summing the total hours of match exposure, we used the IR formula to compute injuries per 10,000 player hours. The injury data of this study overlapped by four seasons with those of Orchard et al. [32], which reported IR for the 2001–2002 to 2004–2005 seasons. Our computed IR values were similar to those of Orchard et al. [32], providing a cross-validation of our conversions.
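The back-calculation for Orchard et al. [31] chains three steps: recover injuries from incidence per 1000 player days, convert team days to player hours, then apply the IR formula. The sketch below assumes per-season inputs; the `Season` record, the function name and all numbers in the usage line are hypothetical, not values from [31].

```python
from dataclasses import dataclass

# On average 13 players are on the field (11 fielders + 2 batters), i.e. 6.5 per team.
PLAYERS_ON_FIELD_PER_TEAM = 6.5


@dataclass
class Season:
    incidence_per_1000_player_days: float  # reported seasonal injury incidence
    player_days: float                     # reported player days of exposure
    team_days: float                       # reported team days played
    hours_per_day: float                   # 6, 6.33 or 6.66 h depending on format (Sect. 2.7)


def pooled_ir_per_10000_h(seasons: list[Season]) -> float:
    total_injuries = 0.0
    total_hours = 0.0
    for s in seasons:
        # Invert incidence = injuries / player_days * 1000 to recover the injury count.
        total_injuries += s.incidence_per_1000_player_days * s.player_days / 1000
        # Team days -> on-field player days (x 6.5) -> player hours (x hours per day).
        total_hours += s.team_days * PLAYERS_ON_FIELD_PER_TEAM * s.hours_per_day
    return total_injuries / total_hours * 10_000


# Hypothetical season: 20 injuries/1000 player days over 5000 player days and 400 team days.
print(round(pooled_ir_per_10000_h([Season(20.0, 5000, 400, 6.33)]), 1))  # 60.8
```

Injuries and hours are summed across seasons before dividing, so seasons with more exposure carry proportionally more weight in the final IR.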

In another study, Mansingh et al. [34] included time-loss injuries sustained outside matches, but did not report the corresponding exposure time. As both the IR for each format and the proportion of injuries in each format were reported, we were able to extrapolate the total number of injuries and the exposure hours. Because exposure time for injuries sustained outside matches was not reported, we excluded those injuries to avoid overestimating the IR.

Finch et al. [37] provided IRs for training and matches, as well as the average length of matches and training sessions (180 and 90 min, respectively). We extrapolated total hours by multiplying match participation sessions by 3 h and training sessions by 1.5 h. With total injuries already given, IR was calculated.
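Finch et al.’s [37] conversion reduces to weighting session counts by their average durations. A minimal sketch, assuming the 3 h match and 1.5 h training durations stated above; the function name and the counts in the example are hypothetical.

```python
MATCH_HOURS = 3.0     # average match length (180 min) reported by Finch et al. [37]
TRAINING_HOURS = 1.5  # average training session length (90 min)


def ir_from_sessions(injuries: int, match_sessions: int, training_sessions: int) -> float:
    """Injuries per 10,000 h from counts of match and training participations."""
    hours = match_sessions * MATCH_HOURS + training_sessions * TRAINING_HOURS
    return injuries / hours * 10_000


# Hypothetical: 30 injuries over 2000 match and 4000 training participations (12,000 h).
print(round(ir_from_sessions(30, 2000, 4000), 1))  # 25.0
```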

Blanch et al. [45] provided the injury data pertaining to bowling injuries in fast bowlers, excluding their batting and fielding injuries. This study also provided the total number of overs bowled. We extrapolated the exposure time based on ICC regulations of 15 overs per hour of play. However, cricketing rules allow a bowler to bowl only alternate overs, so that a bowler can only bowl an approximate maximum number of 7.3 overs in an hour. As we were only considering data for fast bowlers we used a conservative estimate of seven overs per hour. To obtain the total hours of exposure, we divided the total number of overs bowled by seven to get the number of hours required to bowl the reported overs.
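The overs-based estimate for Blanch et al. [45] can be sketched the same way, assuming seven overs per bowler-hour as above; the constant and function names and the example numbers are illustrative.

```python
OVERS_PER_BOWLER_HOUR = 7  # conservative: ~15 overs/h, but a bowler bowls only alternate overs


def ir_from_overs(injuries: int, overs_bowled: float,
                  overs_per_hour: float = OVERS_PER_BOWLER_HOUR) -> float:
    """Injuries per 10,000 h of bowling, with exposure estimated from overs bowled."""
    hours = overs_bowled / overs_per_hour
    return injuries / hours * 10_000


# Hypothetical: 14 bowling injuries over 70,000 overs (10,000 h at 7 overs/h).
print(round(ir_from_overs(14, 70_000), 1))  # 14.0
```

Assuming fewer overs per hour yields more estimated exposure hours and therefore a lower IR, which is why seven overs per hour is the conservative choice.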

Frost and Chalmers [33] did not provide enough information to combine the domestic and international IRs. Consequently, the domestic IR was used for analysis as a conservative estimate.

2.7.2 Coding and Analysis

Data were categorised into several groups for analysis, which were then coded for SPSS® (Table 1). Studies were grouped by age into junior (cricketers aged < 19 years) and open (adult + junior) categories. The open category was necessary because some junior cricketers debut early at the elite level, such as in first-class or senior competitions, and their individual IRs while playing with adult cricketers could not be separated for stratification into the junior category. Studies were categorised as pre-2008, as no studies published before 2008 include T20 injury data, or post-2008, i.e. studies published in or after 2008, which include T20 injury data. In terms of format of play, we grouped the studies into three categories: (1) short format (OD/T20) [35,36,37]; (2) all formats [31,32,33,34, 44,45,46,47]; and (3) unspecified (where the format or length of matches was not mentioned) [22, 23]. To determine whether IRs differed by injury definition, we classified the studies into three groups, i.e. consensus definition 2005, combination of two definitions and combination of three definitions (see Sect. 2.6.1 and Table 2). Five studies [32,33,34,35, 46] used the consensus definition 2005, three studies [22, 23, 45] used a combination of two definitions and five studies [31, 36, 37, 44, 47] used a combination of three definitions (Table 2).

As the data were not normally distributed, we used the Kruskal–Wallis H test (sometimes called the ‘one-way ANOVA on ranks’), a rank-based non-parametric test that determines whether there are statistically significant differences between two or more groups of an independent variable on a continuous or ordinal dependent variable. As the non-parametric alternative to the one-way analysis of variance (ANOVA) and an extension of the Mann–Whitney U test, it allows more than two independent groups to be compared [48]. Trends were examined using linear regression. Where the assumption of homogeneity of variance (Levene’s test) was violated, weighted least squares (WLS) linear regression was used. WLS regression estimates coefficients without requiring normality, but its significance tests are reliable only when the sample size is large [49].
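Because every sub-group comparison in Sect. 3 rests on the Kruskal–Wallis H statistic, a dependency-free sketch may help readers check reported values. This is an illustrative reimplementation, not the SPSS® procedure used in the review: it ranks the pooled values (averaging tied ranks), applies the usual tie correction and refers H to a χ² distribution with k − 1 degrees of freedom using closed-form tail probabilities. The function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values 1..N, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df (closed forms)."""
    half = x / 2
    if df % 2 == 0:
        return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))
    tail = math.erfc(math.sqrt(half))
    for i in range(1, (df - 1) // 2 + 1):
        tail += math.exp(-half) * half ** (i - 0.5) / math.gamma(i + 0.5)
    return tail


def kruskal_wallis(*groups):
    """Kruskal-Wallis H test with tie correction; returns (H, df, p)."""
    values = [v for g in groups for v in g]
    n = len(values)
    ranks = average_ranks(values)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Divide by 1 - sum(t^3 - t) / (n^3 - n), where t is the size of each tie group.
    tie_sizes = {}
    for v in values:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in tie_sizes.values())
    if ties:
        h /= 1 - ties / (n ** 3 - n)
    df = len(groups) - 1
    return h, df, chi2_sf(h, df)


h, df, p = kruskal_wallis([1, 1, 2], [2, 3, 3])  # toy data with ties
print(round(h, 3), df)  # 3.333 1
```

In practice `scipy.stats.kruskal` performs the same test; the hand-rolled version only makes each step of the computation visible.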

3 Results

The initial search yielded 1582 studies with 556 duplicates. Two authors, NS and TS, systematically screened the remaining 1029 studies to identify 24 studies that met the inclusion criteria (Fig. 1). The pooled results included a total of 7627 injuries in 12,511 players. Nine of these studies did not report exposure hours but were used for qualitative analysis of the aetiology and mechanisms of injury. Fifteen had extractable data on exposure hours or IRs and were used to calculate the pooled IR (Table 3). These 15 studies were also assessed for quality using the modified Downs and Black checklist (Electronic Supplementary Material Appendix S1). No study scored below 50%, and the mean quality score was 80%. Pooling 1.12 million hours of cricket play from these 15 studies gave an IR of 53.16 (95% confidence interval [CI] 51.84–54.52) per 10,000 h of play. This pooled IR would rank seventh among the 15 studies from which IR data were extracted. Of these 15 studies, 13 were used for comparative sub-group analysis; two studies [30, 50] were excluded because they reported only injuries specific to a particular body region.
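A pooled IR of this kind sums injuries and exposure hours across studies before applying the IR formula. The method used for the 95% CI is not stated here; the sketch below assumes one common approach, treating the pooled injury count as Poisson and building the interval on the log scale. The function name and the two-study input are hypothetical, and simple pooling of this kind ignores between-study heterogeneity.

```python
import math


def pooled_rate_ci(studies, per=10_000, z=1.96):
    """Pooled injuries per `per` hours with an approximate 95% CI.

    `studies` is a sequence of (injuries, exposure_hours) pairs. The interval
    assumes a Poisson injury count: rate * exp(+/- z / sqrt(total injuries)).
    """
    injuries = sum(i for i, _ in studies)
    hours = sum(h for _, h in studies)
    if injuries <= 0 or hours <= 0:
        raise ValueError("need positive pooled injuries and exposure hours")
    rate = injuries / hours * per
    half_width = z / math.sqrt(injuries)
    return rate, rate * math.exp(-half_width), rate * math.exp(half_width)


rate, low, high = pooled_rate_ci([(20, 4_000), (30, 6_000)])  # hypothetical studies
print(f"{rate:.1f} ({low:.1f}-{high:.1f}) per 10,000 h")  # 50.0 (37.9-66.0) per 10,000 h
```

Because the interval width shrinks with the square root of the pooled injury count, pooling over a million hours of play produces a narrow CI even when the individual studies vary widely.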

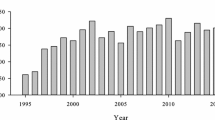

3.1 Era of Play

As international T20 cricket started in 2007, a linear regression of IRs on year of publication was performed to examine whether there was a trend. Since the assumption of homogeneity of variance (Levene’s test) was violated, we used WLS linear regression to predict IR from the year of publication. The trend was not significant [F(1,11) = 0.105; p > 0.05; R² = 0.170].
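For readers who want to see what the WLS trend analysis involves, the sketch below fits IR = a + b × year with caller-supplied weights and reports the weighted R². It is an illustration under stated assumptions: the weights used in the review are not given in this section (inverse-variance weights are a common choice), and the function name and data are hypothetical.

```python
def wls_fit(x, y, w):
    """Weighted least-squares fit of y = a + b*x; returns (a, b, weighted R^2)."""
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    b = sxy / sxx
    a = y_bar - b * x_bar
    # Weighted residual and total sums of squares for R^2.
    ss_res = sum(wi * (yi - a - b * xi) ** 2 for wi, xi, yi in zip(w, x, y))
    ss_tot = sum(wi * (yi - y_bar) ** 2 for wi, yi in zip(w, y))
    return a, b, 1 - ss_res / ss_tot


# Hypothetical publication years, IRs per 10,000 h and weights.
years = [2003, 2006, 2009, 2012, 2015]
irs = [60.0, 45.0, 70.0, 50.0, 55.0]
weights = [1.0, 2.0, 1.0, 2.0, 1.0]
a, b, r2 = wls_fit(years, irs, weights)
print(f"slope = {b:.2f} per year, R^2 = {r2:.3f}")
```

With equal weights the fit reduces to ordinary least squares; unequal weights let studies with more precise IRs pull the fitted line towards them.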

3.2 Age

There were ten studies in the adult/open category [23, 31,32,33,34, 36, 44,45,46,47] and three junior studies [22, 35, 37]. The Kruskal–Wallis H test showed no significant difference in IR between juniors (mean rank injury incidence 8.33) and the open/adult category (mean rank injury incidence 6.6) [χ²(1) = 0.257; p = 0.612] (Table 4).

3.3 Country of Play

Since most studies were conducted in Australia [22, 31, 32, 37, 45,46,47], we compared the Australian IR with that of the rest of the world [22, 23, 33,34,35,36] using the Kruskal–Wallis H test. Australia had an average IR of 113.6 per 10,000 h and other countries an average IR of 96.03 per 10,000 h; the difference was not significant [χ²(1) = 1.653; p = 0.199].

3.4 Format of Play

A Kruskal–Wallis H test across the three format categories showed no significant difference [χ²(2) = 2.198; p = 0.333]. The mean rank injury incidence was 8.67 for the short format, 5.75 for all formats (mixed) and 9.5 for unspecified formats. There were no studies specifically on IRs in test cricket.

3.5 Player Type

Five studies [22, 33, 45,46,47] reported IRs in bowlers and eight [23, 31, 32, 34,35,36,37, 44] reported IRs for all player types (inclusive of bowlers). The two groups were compared using the Kruskal–Wallis H test: bowlers had a mean injury incidence of 188.38 per 10,000 h (95% CI 18.7–358), and all players a mean injury incidence of 53.7 per 10,000 h (95% CI 20.1–87.2). The difference in mean IRs between bowlers and other player types did not reach statistical significance [χ²(1) = 1.736; p = 0.188].

3.6 Definitions of Injury

The variation in injury definitions used in different studies was not associated with a significant difference in IR [χ²(2) = 5.020; p = 0.081]. The mean rank of injury incidence (MRII) was lowest for a combination of three definitions (MRII = 4), highest for a combination of two definitions (MRII = 9.67) and intermediate for the consensus definition 2005 alone (MRII = 8.4).

3.7 Risk Factors and Mechanisms of Injury

Our analysis showed that the most frequent themes identified in the included studies as mechanisms of, or risk factors for, injury were bowling biomechanics [18, 34, 44, 45, 51], workload [18, 29, 31, 45, 47, 50, 52,53,54], lack of warm-up and conditioning [28, 34, 44], muscle strength and stability [36, 46, 51, 54], ground hardness [35, 36, 52], age [37, 52], fielding technique [26, 34, 35, 37, 44] and previous injury [28, 55, 56]. We divided these risk factors into three categories: (1) easily modifiable, e.g. lack of warm-up and conditioning, muscle strength and stability, fielding technique; (2) modifiable with difficulty, e.g. bowling biomechanics, ground hardness; and (3) non-modifiable, e.g. previous injury and age. Recommendations for injury prevention in each of these categories are elaborated in Sect. 4.

4 Discussion

This review shows that IRs in junior and amateur cricketers (129.7/10,000 h) are higher than the average IRs of a comparable cohort playing other non-contact or quasi-contact team sports such as soccer (35.4/10,000 h) [39, 40, 57], basketball (33.19/10,000 h) [58, 59] and tennis (40.2/10,000 h) [60, 61]. Orchard et al. [62] reported that the trend for increased IRs among elite cricketers may be attributed to an increased participation in T20 cricket at the international level. Although this research was conducted on elite cricketers, its findings may also be pertinent for junior and amateur cricketers. Greater player participation in T20 cricket means that batters generate higher bat speeds and run harder, bowlers exert more effort from the very first ball, and fielders sprint more often to intercept the ball. The increased physical demand results in 50–100% more sprints per hour in T20 cricket than in multi-day cricket [63], predisposing cricketers not conditioned for this format to a higher risk of injury than when playing multi-day cricket. While there was evidence to suggest that the T20 format could be a factor contributing to the increase in the IRs in recent years [62], it was interesting to note that no studies with reportable injury incidences investigated test cricket by itself. Most studies focused on a mix of formats or only the shorter version of cricket. Future studies should be conducted to determine IRs and exposure hours for the longer formats of the game.

We found no significant differences in IR by covariates such as format, country of play, player type and injury definition. These results are nonetheless of interest, because the lack of significance may be attributable to heterogeneous, non-standardised data gathering across studies, which led to large standard deviations; differing injury definitions; varied data collection methods (cross-sectional questionnaires, retrospective data analysis and prospective studies); and small sample sizes once studies were sub-grouped. For example, junior cricketers had a higher IR (129.7/10,000 h) than adults (98.2/10,000 h), although the junior IR was based on only three studies. This indicates that longitudinal injury epidemiological studies of junior cricketers are scarce and that further investigation is needed to understand injury susceptibility in this cohort.

Australia had a higher IR (113.6/10,000 h) than other countries (98.2/10,000 h), although the difference was not significant (p > 0.05). We could compare Australia only with other countries as a group because of the limited number of studies elsewhere in the world. Because the ‘other countries’ group included countries with cricket infrastructure similar to Australia’s, i.e. England, New Zealand and South Africa, it would have been useful to pool data from studies conducted in the sub-continent or developing countries, where ground conditions differ. Australia may, for instance, have a significantly different IR from India, but there were not enough studies with extractable IR data to enable this comparison. The variability in IRs across countries underlines the need for standardised injury surveillance methods, which was one of the driving forces behind the 2016 consensus statement on cricket injury [24]. If researchers and cricket administrative bodies report injury data from the sub-continent and other developing countries in future, a robust comparison could be made.

Studies of bowlers reported a higher IR (188.38/10,000 h) than studies of all cricketers (53.7/10,000 h), but the difference was not significant (p > 0.05). A probable reason is that some studies reporting IRs for all player types also included bowling injuries, and IRs could not be separated by player type. This may have widened the CIs, resulting in a non-significant difference between the groups.

Bowling biomechanics was identified as a key injury factor, probably because fast bowlers have the highest IRs of all player types and more studies have therefore investigated the mechanisms of their injuries. Two studies in our review directly linked the biomechanics of the bowling action with increased risk of LS injury in fast bowlers [64, 65]. A mixed bowling action (Fig. 2) and contralateral side flexion (Fig. 3) in the delivery stride were linked to increased risk of lumbar injury [21, 66,67,68]. The mixed bowling action is defined as “shoulder counter-rotation (i.e. the maximum amount of rotation of the rear shoulder away from the batter in the transverse plane during the delivery stride) greater than 30° or pelvis-shoulder separation angle greater than 30° on front foot contact” [67, 69]. Ranson et al. [70] suggested that a long-term (2-year) coaching intervention can significantly alter bowling technique in young fast bowlers: their intervention significantly improved side-on shoulder alignment at back foot contact (p = 0.002) and decreased shoulder counter-rotation (p = 0.001). Such interventions, if carried out at the junior level, would require comprehensive training of junior coaches.

Similarly, large contralateral side flexion was seen in fast bowlers with lower-back injury compared with their non-injured counterparts (mean difference in contralateral flexion between 4.9° and 9.6°; p < 0.05) [18]. However, Ranson et al. [71] stated that excessive lower trunk extension, rather than counter-rotation, is a risk factor for acute lumbar stress fractures. Despite these contradictory findings, we conclude that excessive lateral trunk flexion stresses different areas of the lumbar vertebrae, in particular the pars interarticularis, the part of the vertebral arch between the superior and inferior articular processes. Although the magnitude of force required to cause lumbar bone stress injury (LBSI) is unknown, skeletal immaturity and high workloads or spikes in bowling workload have been identified as risk factors [68, 72]. Because adolescent fast bowlers are likely to have a lower proportion of cortical (compact) bone and a higher proportion of trabecular (spongy) bone than their adult counterparts [73, 74], high bowling workloads may cause repetitive micro-trauma to the LS. This micro-trauma results from a combination of high ground-reaction forces and large axial rotations through the LS, which create cumulative shear stress causing LBSI and may ultimately lead to a stress fracture [21, 75]. For this reason, cricket boards around the world recommend workload cut-offs for fast bowlers [76, 77]. However, monitoring these workloads at the community level is difficult, because there is generally one coach for many players and many junior cricketers play for multiple teams.

Previous injury is also a major predictor of future injury [22, 78]; however, research on this covariate in the cricket injury epidemiology literature is limited. Orchard et al. [56] showed that the relative risk (RR) of injury, i.e. the ratio of injury incidence in the exposed group to that in the non-exposed group, increases after a lumbar stress fracture. For example, fast bowlers with a history of lumbar stress fracture had a higher risk of muscle strains in the calf (RR 4.1; 95% CI 2.4–7.1), quadriceps (RR 2.0; 95% CI 1.1–3.5) and hamstrings (RR 1.5; 95% CI 1.03–2.1). In contrast, a history of lumbar stress fracture was associated with a lower risk of knee cartilage injury (RR 0.1; 95% CI 0.0–0.4). Similarly, the odds of sustaining a tendon injury were nearly doubled after a previous injury in the same season (odds ratio 1.85; 95% CI 1.33–2.55) [55]. We found no studies in adolescent cricketers that examined previous injury as a risk factor.
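To make the distinction between the RRs and the odds ratio quoted above concrete, here is a generic sketch computing both from a 2 × 2 table, with Wald confidence intervals on the log scale. The counts are hypothetical. The cited studies may have used different estimators or exposure-based denominators, so this sketch does not reproduce their method.

```python
import math


def relative_risk(a, b, c, d, z=1.96):
    """Risk ratio with a Wald 95% CI computed on the log scale.

    2x2 layout (all cells must be > 0):
        a = exposed, injured      b = exposed, not injured
        c = unexposed, injured    d = unexposed, not injured
    """
    rr = (a / (a + b)) / (c / (c + d))
    se_log = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    return rr, rr * math.exp(-z * se_log), rr * math.exp(z * se_log)


def odds_ratio(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (log-scale) 95% CI; same cell layout."""
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return or_, or_ * math.exp(-z * se_log), or_ * math.exp(z * se_log)


# Hypothetical counts, not taken from any cited study.
rr, rr_lo, rr_hi = relative_risk(20, 80, 10, 90)
or_, or_lo, or_hi = odds_ratio(20, 80, 10, 90)
print(f"RR {rr:.2f} ({rr_lo:.2f}-{rr_hi:.2f})")
print(f"OR {or_:.2f} ({or_lo:.2f}-{or_hi:.2f})")
```

With 20% of the exposed group injured, the OR (2.25) is larger than the RR (2.0). The two measures converge only when injury is rare, which is worth bearing in mind when comparing an odds ratio with relative risks.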

IRs did not differ significantly between pre-2008 and post-2008 studies when year was treated as a binary variable, suggesting that IRs have not changed significantly over time. The WLS analysis supported this, finding no significant association between year (as a continuous variable) and injury incidence rate. The non-significant result may be partly explained by some post-T20-era studies including longitudinal data from the pre-T20 era [31, 45]. Interestingly, although the trend was not significant, IRs appeared to decrease as the injury definition became broader (see Table 2). This result is counter-intuitive, as a broader injury definition would be expected to capture more injuries and therefore a higher IR. However, the five studies with three components to their injury definition had small samples in terms of player exposure hours, which could account for this contradictory result. Larger studies may have used narrower injury definitions because these simplify data collection, whereas a broader definition may require more resources and research personnel. It will be interesting to see whether future studies adopt the new, broad consensus injury definition and collect data on exposure hours.
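The weighted least squares (WLS) idea behind the year-as-continuous analysis can be sketched as a straight-line fit in which larger studies count for more. Using exposure hours as the weights is an assumption made for illustration; the weighting actually used in this review is not stated in this section. The study-level data below are hypothetical.

```python
def weighted_least_squares(x, y, w):
    """Fit y = intercept + slope * x by minimising sum(w_i * residual_i**2).

    Returns (intercept, slope). Weights must be positive and x must not be
    constant (otherwise the slope is undefined).
    """
    if not (len(x) == len(y) == len(w)) or len(x) < 2:
        raise ValueError("x, y and w must have equal length >= 2")
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# Hypothetical study-level data: year of data collection, IR per 10,000 h,
# and exposure hours used as weights (an assumption).
years = [2001, 2004, 2008, 2011, 2014]
rates = [55.0, 48.0, 60.0, 51.0, 57.0]
hours = [120_000, 40_000, 300_000, 80_000, 15_000]
intercept, slope = weighted_least_squares(years, rates, hours)
print(f"slope = {slope:.3f} injuries per 10,000 h per year")
```

This sketch only produces the fitted slope. Testing whether the slope differs significantly from zero, as the review reports, would additionally require the slope's standard error and a t distribution.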

4.1 Limitations

Reporting cricket injuries is challenging because the game has a unique format in which only 13 of the 22 players in the two teams are on the field at any one time. Second, workloads differ markedly between players, for example between a fast bowler and a wicket-keeper [8, 63]. Finally, many modern cricketers are all-rounders, whose workload is distributed across several facets of the game. Ideally, therefore, IRs would be calculated from the workload in each player's specialised facet of cricket; however, the data reported in the existing cricket injury epidemiology literature did not allow this. It was therefore only practical to pool the cumulative workload to obtain an average IR for all players, which may not accurately depict IRs for specialised playing positions.
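Pooling the cumulative workload amounts to summing injuries and exposure hours across studies before dividing. The sketch below shows one common way to do this, with an approximate Poisson confidence interval. The study figures are hypothetical, and the normal approximation is an assumption rather than a statement of the review's exact method.

```python
import math


def pooled_injury_rate(studies, per_hours=10_000, z=1.96):
    """Pool (injuries, exposure_hours) pairs into one rate per `per_hours` h.

    The CI uses a normal approximation to the Poisson count (SE = sqrt(n)),
    which is reasonable only when the total injury count is large.
    """
    injuries = sum(n for n, _ in studies)
    hours = sum(h for _, h in studies)
    rate = injuries / hours * per_hours
    se = math.sqrt(injuries) / hours * per_hours
    return rate, rate - z * se, rate + z * se


# Hypothetical (injuries, exposure hours) for three studies.
studies = [(120, 20_000), (300, 60_000), (45, 5_000)]
rate, lo, hi = pooled_injury_rate(studies)
print(f"{rate:.2f} per 10,000 h (95% CI {lo:.2f}-{hi:.2f})")
```

Because every hour counts equally, this pooled rate is effectively weighted by study size and ignores which role (bowler, batter, wicket-keeper) generated the hours. That is exactly the limitation described above.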

The exposure hours in the study by Frost and Chalmers [33] may have been overestimated because they were extrapolated from the domestic IR and the number of injuries, which would give a conservative (i.e. lower) IR. Because each study represented approximately 7% of the pool, and including the data from Frost and Chalmers [33] raised the pooled IR by only 4%, we decided to include it. Three studies focusing on radiological evidence of injury [18, 19, 54] did not report player exposure hours and were therefore excluded from the analysis; data from these studies might have improved the power of our analysis.
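The two steps described above are back-calculating exposure from an injury count and a reference IR, then checking how much one study shifts the pooled IR. Both can be sketched as follows. The formula hours = injuries / IR × 10,000 is an assumed reading of "extrapolated from the domestic IR and the number of injuries", and all numbers are hypothetical.

```python
def extrapolate_hours(injuries, reference_rate, per_hours=10_000):
    """Back-calculate exposure hours from an injury count and an assumed IR."""
    return injuries / reference_rate * per_hours


def pooled_rate(studies, per_hours=10_000):
    """Pooled injuries per `per_hours` h from (injuries, hours) pairs."""
    return sum(n for n, _ in studies) / sum(h for _, h in studies) * per_hours


def percent_change_from_including(studies, extra, per_hours=10_000):
    """Relative change (%) in the pooled IR when study `extra` is added."""
    base = pooled_rate(studies, per_hours)
    return (pooled_rate(studies + [extra], per_hours) - base) / base * 100


# Hypothetical numbers: 60 injuries and an assumed reference IR of 60 per
# 10,000 h give 10,000 extrapolated hours, so this study's own IR is 60 by
# construction, whatever its true exposure was.
others = [(120, 20_000), (300, 60_000)]
extra = (60, extrapolate_hours(60, 60.0))
print(f"pooled IR without: {pooled_rate(others):.2f}")
print(f"pooled IR with:    {pooled_rate(others + [extra]):.2f}")
print(f"change: {percent_change_from_including(others, extra):+.1f}%")
```

A study whose hours are back-calculated this way simply contributes the reference IR to the pool. If the true exposure was lower than extrapolated, its true IR, and therefore its effect on the pooled IR, would be higher.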

5 Conclusions

Pooling data from multiple cricket injury epidemiology studies on the basis of exposure hours not only provides a better understanding of IRs in cricket but also allows comparison with IRs in other non-contact sports. A pooled analysis reduces the influence of idiosyncrasies in individual studies and provides a more precise estimate of IRs. Analysis of covariates such as age and format of play provides a more complete understanding of injuries in cricket. This review found insufficient evidence to determine whether the introduction of T20 cricket increased IRs; further studies with standardised surveillance methods are therefore needed. Junior cricketers had a higher IR (130 injuries/10,000 h) than the open age category (98 injuries/10,000 h). Although this difference was not statistically significant, it may be of clinical significance to health practitioners and sports administrative bodies. IRs in junior and amateur cricket were also higher than the IRs of comparable cohorts playing other popular non-contact or quasi-contact team sports.
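The size of the junior versus open-age difference can be expressed as an incidence rate ratio (IRR) computed directly from the two reported rates. No confidence interval is given here, because the underlying injury counts and exposure hours for each age group are not reported in this section.

```latex
\mathrm{IRR}_{\text{junior vs open age}} = \frac{130}{98} \approx 1.33,
\qquad 130 - 98 = 32 \ \text{injuries per } 10{,}000\ \text{h}
```

In other words, the junior rate is roughly one-third higher than the open-age rate.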

Efforts to mitigate easily modifiable risk factors, through improved warm-up and conditioning, muscle strength and stability, and fielding technique, may reduce the burden of injury. A substantial body of literature supports the effectiveness of injury prevention programmes in reducing overuse injuries [38, 40, 79, 80]; in adolescent team sports, their implementation reduced IRs by 40% [IR ratio 0.60 (95% CI 0.48–0.75)] [39]. Given that most well-designed injury prevention programmes [57, 79, 81, 82] integrate warm-up, conditioning, muscle strength, agility and stability components, it is plausible that such programmes could play a pivotal role in modifying injury risk factors in cricket. Future research should report IRs in junior and non-elite cricketers and track practice exposure time, which would allow the relationship between exposure and injury to be understood more thoroughly.
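The 40% figure follows from the reported IR ratio. Because a higher ratio means a smaller reduction, the confidence limits swap when the ratio is converted to a percentage reduction:

```latex
\begin{aligned}
\text{reduction} &= (1 - \mathrm{IRR}) \times 100\% = (1 - 0.60) \times 100\% = 40\% \\
\text{95\% CI} &: (1 - 0.75) \times 100\% = 25\% \ \text{to}\ (1 - 0.48) \times 100\% = 52\%
\end{aligned}
```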

References

Corrigan AB. Cricket injuries. Aust Fam Phys. 1984;13(8):558–9.

Cricket Australia. Cricket becomes the leading participation sport in Australia. 2016. http://www.cricketaustralia.com.au/media/media-releases/cricket-becomes-the-leading-participation-sport-in-australia/2016-08-23 Accessed 01 Jul 2017.

Appadurai A. Playing with modernity: the decolonization of Indian cricket. Altre Modernità. 2015;14:1–24.

Majumdar B. Commonwealth games 2010: the index of a “new” India? Soc Res. 2011;78(1):231–54.

Orchard JW, Kountouris A, Sims KJ, Orchard J, Beakley DT, Brukner PD. Change to injury profile of elite male cricketers in the T20 era. N Z J Sports Med. 2015;42(1):13–7.

Narayan PK, Rath BN, Prabheesh KP. What is the value of corporate sponsorship in sports? Emerg Mark Rev. 2016;26:20–33. https://doi.org/10.1016/j.ememar.2016.02.003.

Duff & Phelps. Playing with a straight bat: a concise report on brand values in the Indian Premier League. 2016. http://www.duffandphelps.com/assets/pdfs/publications/valuation/iplbrand2016.pdf. Accessed 01 Aug 2017.

Petersen C, Pyne D, Portus MR, Dawson B. Quantifying positional movement patterns in Twenty20 cricket. Int J Perform Anal Sport. 2009;9(2):165–70.

McNamara DJ, Gabbett TJ, Naughton G. Assessment of workload and its effects on performance and injury in elite cricket fast bowlers. Sports Med. 2017;47(3):503–15. https://doi.org/10.1007/s40279-016-0588-8.

Scanlan AT, Berkelmans DM, Vickery WM, Kean CO. A review of the internal and external physiological demands associated with batting in cricket. Int J Sports Physiol Perform. 2016;11(8):987–97.

Karppinen S. Strength training for fast bowlers: resistance to resistance training. Conference abstract presented at conference of science, medicine and coaching in cricket, Gold Coast, Qld, Australia, 2010.

Taliep MS, Prim SK, Gray J. Upper body muscle strength and batting performance in cricket batsmen. J Strength Cond Res. 2010;24(12):3484–7. https://doi.org/10.1519/JSC.0b013e3181e7261b.

Orchard JW, Newman D, Stretch R, Frost W, Mansingh A, Leipus A. Methods for injury surveillance in international cricket. Br J Sports Med. 2005. https://doi.org/10.1136/bjsm.2004.012732.

Mitchell R, Hayen A. Defining a cricket injury. J Sci Med Sport. 2005;8(3):357–8. https://doi.org/10.1016/S1440-2440(05)80047-7.

Clarsen B, Myklebust G, Bahr R. Development and validation of a new method for the registration of overuse injuries in sports injury epidemiology: the Oslo Sports Trauma Research Centre (OSTRC) overuse injury questionnaire. Br J Sports Med. 2013;47(8):495–502. https://doi.org/10.1136/bjsports-2012-091524.

Moonda F. Injured Kallis unable to bowl, but can bat. ESPN Cricinfo. 2012. http://www.espncricinfo.com/australia-v-south-africa-2012/content/story/592429.html. Accessed 2 Aug 2017.

CricInfo. Lightning strike fells Test star. ESPN Sports Media. 2003. http://www.espncricinfo.com/westindies/content/story/132767.html. Accessed 3 Aug 2017.

Bayne H, Elliott B, Campbell A, Alderson J. Lumbar load in adolescent fast bowlers: a prospective injury study. J Sci Med Sport. 2016;19(2):117–22. https://doi.org/10.1016/j.jsams.2015.02.011.

Kountouris A, Portus M, Cook J. Quadratus lumborum asymmetry and lumbar spine injury in cricket fast bowlers. J Sci Med Sport. 2012;15(5):393–7. https://doi.org/10.1016/j.jsams.2012.03.012.

Saw R, Dennis RJ, Bentley D, Farhart P. Throwing workload and injury risk in elite cricketers. Br J Sports Med. 2011;45(10):805–8. https://doi.org/10.1136/bjsm.2009.061309.

Engstrom CM, Walker DG. Pars interarticularis stress lesions in the lumbar spine of cricket fast bowlers. Med Sci Sports Exerc. 2007;39(1):28–33. https://doi.org/10.1249/01.mss.0000241642.82725.ac.

Gregory PL, Batt ME, Wallace WA. Comparing injuries of spin bowling with fast bowling in young cricketers. Clin J Sport Med. 2002;12(2):107–12. https://doi.org/10.1097/00042752-200203000-00007.

Leary T, White JA. Acute injury incidence in professional county club cricket players (1985–1995). Br J Sports Med. 2000;34(2):145–7. https://doi.org/10.1136/bjsm.34.2.145.

Orchard JW, Ranson C, Olivier B, Dhillon M, Gray J, Langley B, et al. International consensus statement on injury surveillance in cricket: a 2016 update. Br J Sports Med. 2016;50(20):1245–51. https://doi.org/10.1136/bjsports-2016-096125.

Orchard JW, Kountouris A, Sims K. Incidence and prevalence of elite male cricket injuries using updated consensus definitions. Open Access J Sports Med. 2016;7:187–94. https://doi.org/10.2147/OAJSM.S117497.

Ahearn N, Bhatia R, Griffin S. Hand and wrist injuries in professional county cricket. J Hand Surg Asian Pac. 2015;20(1):89–92.

Olivier B, Stewart AV, Olorunju SAS, McKinon W. Static and dynamic balance ability, lumbo-pelvic movement control and injury incidence in cricket pace bowlers. J Sci Med Sport. 2015;18(1):19–25. https://doi.org/10.1016/j.jsams.2013.10.245.

Stretch RA. Cricket injuries: a longitudinal study of the nature of injuries to South African cricketers. Br J Sports Med. 2003;37(3):250–3. https://doi.org/10.1136/bjsm.37.3.250.

Saw R, Dennis RJ, Bentley D, Farhart P. Throwing workload and injury risk in elite cricketers. Br J Sports Med. 2011;45(10):805–8. https://doi.org/10.1136/bjsm.2009.061309.

Dhillon MS, Garg B, Soni RK, Dhillon H, Prabhakar S. Nature and incidence of upper limb injuries in professional cricket players: a prospective observation. Sports Med Arthrosc Rehabil Ther Technol. 2012;4(1):42–5.

Orchard J, James T, Kountouris A, Blanch P, Sims K, Orchard J. Injury report 2011: Cricket Australia. Sport Health. 2011;29(4):16–29.

Orchard JW, James T, Portus MR. Injuries to elite male cricketers in Australia over a 10-year period. J Sci Med Sport. 2006;9(6):459–67. https://doi.org/10.1016/j.jsams.2006.05.001.

Frost WL, Chalmers DJ. Injury in elite New Zealand cricketers 2002–2008: descriptive epidemiology. Br J Sports Med. 2014;48(12):1002–7. https://doi.org/10.1136/bjsports-2012-091337.

Mansingh A, Harper L, Headley S, King-Mowatt J, Mansingh G. Injuries in West Indies cricket 2003–2004. Br J Sports Med. 2006;40(2):119–23. https://doi.org/10.1136/bjsm.2005.019414.

Das NS, Usman J, Choudhury D, Osman NAA. Nature and pattern of cricket injuries: the Asian Cricket Council under-19, elite cup, 2013. PLoS One. 2014;9(6):e100028.

Ranson C, Hurley R, Rugless L, Mansingh A, Cole J. International cricket injury surveillance: a report of five teams competing in the ICC Cricket World Cup 2011. Br J Sports Med. 2013;47(10):637–43. https://doi.org/10.1136/bjsports-2012-091783.

Finch CF, White P, Dennis R, Twomey D, Hayen A. Fielders and batters are injured too: a prospective cohort study of injuries in junior club cricket. J Sci Med Sport. 2010;13(5):489–95. https://doi.org/10.1016/j.jsams.2009.10.489.

Al Attar WSA, Soomro N, Sinclair PJ, Pappas E, Sanders RH. Effect of injury prevention programs that include the nordic hamstring exercise on hamstring injury rates in soccer players: a systematic review and meta-analysis. Sports Med. 2017;47(5):907–16. https://doi.org/10.1007/s40279-016-0638-2.

Soomro N, Sanders R, Hackett D, Hubka T, Ebrahimi S, Freeston J, et al. The efficacy of injury prevention programs in adolescent team sports: a meta-analysis. Am J Sports Med. 2016;44(9):2415–24.

Al Attar WSA, Soomro N, Pappas E, Sinclair PJ, Sanders RH. How effective are F-MARC injury prevention programs for soccer players? A systematic review and meta-analysis. Sports Med. 2016;46(2):205–17.

Emery C, Meeuwisse W. The effectiveness of a neuromuscular prevention strategy to reduce injuries in youth soccer: a cluster-randomised controlled trial. Br J Sports Med. 2010;44(8):555–62.

Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health. 1998;52(6):377–84.

Orchard J, Seward H. Epidemiology of injuries in the Australian Football League, seasons 1997–2000. Br J Sports Med. 2002;36(1):39–44. https://doi.org/10.1136/bjsm.36.1.39.

Orchard J, James T, Alcott E, Carter S, Farhart P. Injuries in Australian cricket at first class level 1995/1996 to 2000/2001. Br J Sports Med. 2002;36(4):270–4. https://doi.org/10.1136/bjsm.36.4.270.

Blanch P, Orchard J, Kountouris A, Sims K, Beakley D. Different tissue type categories of overuse injuries to cricket fast bowlers have different severity and incidence which varies with age. S Afr J Sports Med. 2015;27(4):108–13. https://doi.org/10.17159/2078-516x/2015/v27i4a436.

Orchard JW, James T, Portus M, Kountouris A, Dennis R. Fast bowlers in cricket demonstrate up to 3- to 4-week delay between high workloads and increased risk of injury. Am J Sports Med. 2009;37(6):1186–92. https://doi.org/10.1177/0363546509332430.

Dennis R, Farhart P, Goumas C, Orchard J. Bowling workload and the risk of injury in elite cricket fast bowlers. J Sci Med Sport. 2003;6(3):359–67. https://doi.org/10.1016/s1440-2440(03)80031-2.

Chan Y, Walmsley RP. Learning and understanding the Kruskal-Wallis one-way analysis-of-variance-by-ranks test for differences among three or more independent groups. Phys Ther. 1997;77(12):1755–61.

Williams MN, Grajales CAG, Kurkiewicz D. Assumptions of multiple regression: correcting two misconceptions. Pract Assess Res Eval. 2013;18(11):1–14.

Dennis R, Farhart P, Clements M, Ledwidge H. The relationship between fast bowling workload and injury in first-class cricketers: a pilot study. J Sci Med Sport. 2004;7(2):232–6. https://doi.org/10.1016/s1440-2440(04)80014-8.

Olivier B, Taljaard T, Burger E, Brukner P, Orchard J, Gray J, et al. Which extrinsic and intrinsic factors are associated with non-contact injuries in adult cricket fast bowlers? Sports Med. 2016;46(1):79–101.

Stretch RA. Junior cricketers are not a smaller version of adult cricketers: a 5-year investigation of injuries in elite junior cricketers. S Afr J Sports Med. 2014;26(4):123–7. https://doi.org/10.7196/sajsm.543.

Ranson C, Gregory PL. Shoulder injury in professional cricketers. Phys Ther Sport. 2008;9(1):34–9. https://doi.org/10.1016/j.ptsp.2007.08.001.

Engstrom CM, Walker DG, Kippers V, Mehnert AJH. Quadratus lumborum asymmetry and L4 Pars injury in fast bowlers: a prospective MR study. Med Sci Sports Exerc. 2007;39(6):910–7. https://doi.org/10.1249/mss.0b013e3180408e25.

Orchard JW, Blanch P, Paoloni J, Kountouris A, Sims K, Orchard JJ, et al. Cricket fast bowling workload patterns as risk factors for tendon, muscle, bone and joint injuries. Br J Sports Med. 2015;49(16):1064–8. https://doi.org/10.1136/bjsports-2014-093683.

Orchard J, Farhart P, Kountouris A, James T, Portus M. Pace bowlers in cricket with history of lumbar stress fracture have increased risk of lower limb muscle strains, particularly calf strains. Open Access J Sports Med. 2010;1:177–82. https://doi.org/10.2147/oajsm.s10623.

Steffen K, Myklebust G, Olsen OE, Holme I, Bahr R. Preventing injuries in female youth football—a cluster-randomized controlled trial. Scand J Med Sci Sports. 2008;18(5):605–14. https://doi.org/10.1111/j.1600-0838.2007.00703.x.

Messina DF, Farney WC, DeLee JC. The incidence of injury in Texas high school basketball. Am J Sports Med. 1999;27(3):294–9. https://doi.org/10.1177/03635465990270030401.

Emery CA, Rose MS, McAllister JR, Meeuwisse WH. A prevention strategy to reduce the incidence of injury in high school basketball: a cluster randomized controlled trial. Clin J Sport Med. 2007;17(1):17–24. https://doi.org/10.1097/JSM.0b013e31802e9c05.

Silva RT, Takahashi R, Berra B, Cohen M, Matsumoto M. Medical assistance at the Brazilian juniors tennis circuit—a one-year prospective study. J Sci Med Sport. 2003;6(1):14–8.

Hjelm N, Werner S, Renstrom P. Injury profile in junior tennis players: a prospective two year study. Knee Surg Sports Traumatol Arthrosc. 2010;18(6):845–50. https://doi.org/10.1007/s00167-010-1094-4.

Orchard J, James T, Kountouris A, Portus M. Changes to injury profile (and recommended cricket injury definitions) based on the increased frequency of Twenty20 cricket matches. Open Access J Sports Med. 2010;1:63–76.

Petersen CJ, Pyne D, Dawson B, Portus M, Kellett A. Movement patterns in cricket vary by both position and game format. J Sports Sci. 2010;28(1):45–52. https://doi.org/10.1080/02640410903348665.

Foster D, John D, Elliott B, Ackland T, Fitch K. Back injuries to fast bowlers in cricket: a prospective study. Br J Sports Med. 1989;23(3):150–4.

Kountouris A. Stress fractures of the pars interarticularis in cricket fast bowlers. Sports Link. 2003;1:4–5.

Ranson CA, Burnett AF, King M, Patel N, O’Sullivan PB. The relationship between bowling action classification and three-dimensional lower trunk motion in fast bowlers in cricket. J Sports Sci. 2008;26(3):267–76. https://doi.org/10.1080/02640410701501671.

Portus MR, Mason BR, Elliott BC, Pfitzner MC, Done RP. Technique factors related to ball release speed and trunk injuries in high performance cricket fast bowlers. Sports Biomech. 2004;3(2):263–84.

Johnson M, Ferreira M, Hush J. Lumbar vertebral stress injuries in fast bowlers: a review of prevalence and risk factors. Phys Ther Sport. 2012;13(1):45–52. https://doi.org/10.1016/j.ptsp.2011.01.002.

Burnett AF, Elliott BC, Marshall RN. The effect of a 12-over spell on fast bowling technique in cricket. J Sports Sci. 1995;13(4):329–41. https://doi.org/10.1080/02640419508732247.

Ranson C, King M, Burnett A, Worthington P, Shine K. The effect of coaching intervention on elite fast bowling technique over a two year period. Sports Biomech. 2009;8(4):261–74.

Ranson C, Burnett A, King M, O’Sullivan P, Cornish R, Batt M. Acute lumbar stress injury, trunk kinematics, lumbar MRI and paraspinal muscle morphology in fast bowlers in cricket. ISBS Conference 2008; 14–18 Jul 2008. Seoul: ISBS-Conference Proceedings Archive; 2008. pp. 233–236.

Ranson CA, Kerslake RW, Burnett AF, Batt ME, Abdi S. Magnetic resonance imaging of the lumbar spine in asymptomatic professional fast bowlers in cricket. J Bone Jt Surg. 2005;87B(8):1111–6. https://doi.org/10.1302/0301-620x.87b8.16405.

Cyron B, Hutton W. Variations in the amount and distribution of cortical bone across the partes interarticulares of L5: a predisposing factor in spondylolysis? Spine (Phila Pa 1976). 1979;4(2):163–7.

Micheli LJ, Wood R. Back pain in young athletes: significant differences from adults in causes and patterns. Arch Pediatr Adolesc Med. 1995;149(1):15–8.

Standaert CJ. Spondylolysis in the adolescent athlete. Clin J Sport Med. 2002;12(2):119–22.

England and Wales Cricket Board (ECB). Fast bowling match directives. 2015. pp. 329–330. http://www.ecb.co.uk/sites/default/files/3485_nfc_fastbowling_2015_p329-330_lr.pdf. Accessed 20 Sep 2017.

Cricket Australia. Well played, Cricket Australia’s playing policies and guidelines. 2012. http://mrdca.nsw.cricket.com.au/files/2955/files/Australian_Crickets_Playing_Policy_and_Guidelines.pdf. Accessed 30 Sep 2017.

Fulton J, Wright K, Kelly M, Zebrosky B, Zanis M, Drvol C, et al. Injury risk is altered by previous injury: a systematic review of the literature and presentation of causative neuromuscular factors. Int J Sports Phys Ther. 2014;9(5):583–95.

Brown JC, Verhagen E, Knol D, Van Mechelen W, Lambert MI. The effectiveness of the nationwide BokSmart rugby injury prevention program on catastrophic injury rates. Scand J Med Sci Sports. 2016;26(2):221–5. https://doi.org/10.1111/sms.12414.

Rössler R, Donath L, Verhagen E, Junge A, Schweizer T, Faude O. Exercise-based injury prevention in child and adolescent sport: a systematic review and meta-analysis. Sports Med. 2014;44(12):1733–48. https://doi.org/10.1007/s40279-014-0234-2.

Finch CF, Twomey DM, Fortington LV, Doyle TLA, Elliott BC, Akram M, et al. Preventing Australian football injuries with a targeted neuromuscular control exercise programme: comparative injury rates from a training intervention delivered in a clustered randomised controlled trial. Inj Prev. 2016;22(2):123–8. https://doi.org/10.1136/injuryprev-2015-041667.

Soomro N, Chua N, Freeston J, Ferdinands RED, Sanders R. Cluster randomised control trial for cricket injury prevention programme (CIPP): a protocol paper. Inj Prev. 2017. https://doi.org/10.1136/injuryprev-2017-042518.

Ethics declarations

Funding

Najeebullah Soomro is supported by Broken Hill UDRH, The University of Sydney. No sources of funding were used to assist in the preparation of this article.

Conflict of Interest

Najeebullah Soomro, Luke Strasiotto, Tausif Sawdagar, David Lyle, David Mills, Rene Ferdinands and Ross Sanders declare that they have no conflicts of interest relevant to the content of this review.

About this article

Cite this article

Soomro, N., Strasiotto, L., Sawdagar, T. et al. Cricket Injury Epidemiology in the Twenty-First Century: What is the Burden?. Sports Med 48, 2301–2316 (2018). https://doi.org/10.1007/s40279-018-0960-y

DOI: https://doi.org/10.1007/s40279-018-0960-y