Abstract

Background

The high prevalence of injury amongst cricket fast bowlers exposes a great need for research into the risk factors associated with injury. Both extrinsic (environment-related) and intrinsic (person-related) risk factors are likely to be implicated within the high prevalence of non-contact injury amongst fast bowlers in cricket. Identifying and defining the relative importance of these risk factors is necessary in order to optimize injury prevention efforts.

Objective

The objective of this review was to assess and summarize the scientific literature related to the extrinsic and intrinsic factors associated with non-contact injury inherent to adult cricket fast bowlers.

Method

A systematic review was performed in compliance with the PRISMA guidelines. This review considered both experimental and epidemiological study designs. Studies that included male cricket fast bowlers aged 18 years or above, from all levels of play, and that evaluated the association between extrinsic/intrinsic factors and injury in fast bowlers were considered for inclusion. The three-step search strategy aimed to find both published and unpublished studies in all languages. The databases searched included MEDLINE via PubMed, Cumulative Index to Nursing and Allied Health Literature (CINAHL), the Cochrane Controlled Trials Register in the Cochrane Library, Physiotherapy Evidence Database (PEDro), ProQuest 5000 International, ProQuest Health and Medical Complete, EBSCO MasterFile Premier, Science Direct, SPORTDiscus with Full Text and SCOPUS (prior to 28 April 2015). Initial keywords used were ‘cricket’, ‘pace’, ‘fast’, ‘bowler’, and ‘injury’. Papers that met the inclusion criteria were assessed by two independent reviewers for methodological validity prior to inclusion in the review using standardized critical appraisal instruments from the Joanna Briggs Institute Meta Analysis of Statistics Assessment and Review Instrument (JBI-MAStARI).

Results

A total of 16 studies were determined to be suitable for inclusion in this systematic review. The mean critical appraisal score of the papers included in this study was 6.88 (SD 1.15) out of a maximum of 9. The following factors were found to be associated with injury: bowling shoulder internal rotation strength deficit, compromised dynamic balance and lumbar proprioception (joint position sense), the appearance of lumbar posterior element bone stress, degeneration of the lumbar disc on magnetic resonance imaging (MRI), and previous injury. Conflicting results were found for the association of quadratus lumborum (QL) muscle asymmetry with injury. Technique-related factors associated with injury included shoulder–pelvis flexion–extension angle, shoulder counter-rotation, knee angle, and the proportion of side-flexion during bowling. Bowling workload was the only extrinsic factor associated with injury in adult cricket fast bowlers. A high bowling workload (particularly if it represented a sudden increase from a lower workload) increased the subsequent risk of sustaining an injury 1, 3 or 4 weeks later.

Conclusion

Identifying the factors associated with injury is a crucial step which should precede the development of, and research into, the effectiveness of injury prevention programs. Once identified, risk factors may be included in pre-participation screening tools and injury prevention programs, and may also be incorporated in future research projects. Overall, the current review highlights the clear lack of research on factors associated with non-contact injury, specifically in adult cricket fast bowlers.

Systematic review registration number: Joanna Briggs Institute Database of Systematic Reviews and Implementation Reports 1387 (Olivier et al., JBI Database Syst Rev Implement Rep 13(1):3–13. doi:10.11124/jbisrir-2015-1387, 2015).

Modifiable intrinsic factors, such as those related to neuromuscular control (e.g., balance and proprioception), should be included in pre-participatory screening and injury prevention programs in the clinical arena, and further investigation of these factors is advocated.

A constant moderate bowling workload reduces the risk of injury, although workloads are only partially under the control of the player. A ‘whole-of-game’ approach may be required to try to minimize the frequency of high workload spikes in fast bowlers (involving not only players and medical staff, but also coaching staff and administrators responsible for organizing match schedules and playing conditions).

1 Introduction

Cricket is generally considered to be a low-injury-risk sport [2] compared with other sports [3]. In cricket, the fast bowler strives towards adopting a bowling technique that will allow for a fast and accurate delivery to the opposing batsman. However, of all the various roles of the cricketer, the fast bowler has the highest risk of injury and is specifically at risk of lower back and lower limb (lower quarter) injury [4, 5] due to the inherent, high-load, biomechanical nature of the fast-bowling action [4–6]. The high prevalence of injury amongst fast bowlers [4, 5] exposes a great need for investigating the factors implicated in the causation of injury.

Both extrinsic and intrinsic factors work in combination to predispose a bowler to injury. Extrinsic (environment-related) factors include bowling workload (the number of balls a bowler bowls), player position (first, second or third change) and time of play (morning or afternoon). A high bowling workload [6, 7], as well as an uncommonly low bowling workload [7], has been linked with a higher risk of injury in fast bowlers. The major extrinsic factors for bowling injury identified by Orchard et al. [2] were a high number of match overs bowled in the previous week, number of days of play, and bowling second (batting first) in a match. Not only extrinsic factors, but also intrinsic factors are known to make the bowler more susceptible to injury.

Intrinsic, or person-related, factors include muscle strength, flexibility, balance, and bowling technique. Intrinsic strength-related factors, such as shoulder depression, horizontal flexion strength for the dominant limb, and quadriceps power in the non-preferred limb, are related to back injuries in fast bowlers [6]. Furthermore, increased hip internal rotation range of motion [8], reduced ankle dorsiflexion range of motion [8], and reduced hamstring flexibility [9] were associated with a higher risk of injury. Biomechanical risk factors associated with the fast-bowling action, such as trunk rotation [6], shoulder counter-rotation [10], and knee angle [6], have been investigated previously. In addition to the above kinematic risk factors, high ground reaction forces are associated with the delivery phase of the fast-bowling action [11–13] (Fig. 1). However, many of the studies mentioned above were conducted on school-going or adolescent cricketers and may not be generalizable to an adult fast bowling population.

A systematic review of the literature in order to identify factors associated with non-contact injury in cricket fast bowlers is an important first step in the development of injury prevention programs. Morton et al. [14] conducted a systematic review on risk factors and successful interventions for cricket-related low back pain in fast bowlers aged between 13.7 and 22.5 years. Young cricketers between the ages of 13 and 18 years are different from adults in terms of their physiology, which impacts on their predisposition to injury and phases of healing [15, 16]. Young fast bowlers who sustain injuries during their bowling career may have given up on the sport by the time they approach adulthood. Therefore, a population of young cricketers may differ from an adult population. Caution is thus advised when generalizing findings from this young population group to a population of adult fast bowlers, which emphasizes the need for studies investigating adult fast bowlers. Furthermore, Morton et al.’s [14] review included articles that specifically investigated factors associated with low back pain. However, due to the interconnectedness of the spine and the limbs, one may reason that kinematic variables affecting the spine will also affect the load placed on the lower limbs [17, 18] with subsequent risk of injury [19]. The interdependent mechanical interactions in a linked segment system, such as the system of motion of the low back, can be caused by movement coordination patterns in other body segments [20]. The systematic review by Morton et al. [14] included only intrinsic factors and, to date, there are no systematic reviews which examine both the intrinsic and extrinsic factors associated with injury in adult cricket fast bowlers. Therefore, the primary objective of this review is to assess and summarize the scientific literature related to the extrinsic and intrinsic factors associated with non-contact injury inherent to adult cricket fast bowlers.

2 Methods

The protocol for this systematic review was published in the Joanna Briggs Institute (JBI) Database of Systematic Reviews and Implementation Reports (Registration No. 1387; doi:10.11124/jbisrir-2015-1387) [1].

2.1 Eligibility Criteria

Studies evaluating the association between extrinsic and intrinsic factors, and non-contact injury in male cricket fast bowlers over the age of 18 years from all levels of play were considered for inclusion. Intrinsic factors included, but were not limited to, muscle strength, flexibility, balance, and technique. Extrinsic factors included, but were not limited to, bowling workload, player position, and time of play. Studies that included non-contact injury as an outcome measure were considered for inclusion. A non-contact injury was defined as an injury which is significant enough to cause an inability to fully or partially participate in training or matches and which was caused by an overuse mechanism rather than a collision-type mechanism [21]. A fast bowler (also called a pace bowler) refers to a bowler who bowls fast, medium-fast, or medium pace, and for whom the wicketkeeper will generally stand back from the stumps [22]. This review considered both experimental and epidemiological study designs, including randomized controlled trials, non-randomized controlled trials, quasi-experimental before and after, prospective and retrospective cohort, case-control, and analytical cross-sectional studies. Descriptive epidemiological study designs were also considered, including descriptive cross-sectional studies. Studies were limited to human participants. Studies in all languages were considered for inclusion.

2.2 Information Sources and Search Strategy

The search strategy aimed to find both published and unpublished studies. A three-step search strategy was utilized in this review. An initial limited search of MEDLINE and CINAHL was undertaken followed by analysis of the text words contained in the title and abstract, and of the index terms used to describe the article. A second search using all identified keywords and index terms was then undertaken across all included databases. Thirdly, the reference lists of all identified reports and articles were searched for additional studies. Studies published prior to 28 April 2015, in all languages, were considered for inclusion in this review. The databases searched included MEDLINE via PubMed, Cumulative Index to Nursing and Allied Health Literature (CINAHL), the Cochrane Controlled Trials Register in the Cochrane Library, Physiotherapy Evidence Database (PEDro), ProQuest 5000 International, ProQuest Health and Medical Complete, EBSCO MasterFile Premier, Science Direct, SPORTDiscus with Full Text, and SCOPUS. The search for unpublished studies included EBSCO MasterFile Premier. Initial keywords used were ‘cricket’, ‘pace’, ‘fast’, ‘bowler’, and ‘injury’ (Fig. 2). To avoid missing any relevant papers, as some authors may report data on fast bowlers as part of a general cricket paper, it was decided to include only two key words (‘cricket’ and ‘injury’) into our search strategy (Fig. 3). Searches were performed in ‘all fields’ and the filter function ‘humans’ was applied where possible.

2.3 Study Selection

The titles, abstracts, and full texts (where indicated) of all records were screened for inclusion. Studies which met the inclusion criteria were assessed by two independent reviewers (BO and TT) for methodological validity, prior to inclusion in the review. A standardized critical appraisal instrument, namely the Joanna Briggs Institute Meta Analysis of Statistics Assessment and Review Instrument (JBI-MAStARI) (http://www.joannabriggs.org/sumari.html), was used. Any disagreements that arose between the reviewers were resolved through discussion, or through use of a third reviewer (EB). All three reviewers had previous experience in the critical appraisal of evidence while the first reviewer (BO) attended formal training through the Joanna Briggs Institute.

2.4 Data Collection Process

Data were extracted by two reviewers (BO and TT) independently using the standardized data extraction tool from JBI-MAStARI (http://www.joannabriggs.org/sumari.html) [23]. The data extracted included specific details about the study design, participants (age/level of play/country), risk factor (exposure variable), injury definition (outcome), and study results specific to the review question and specific objectives (Tables 1, 2).

3 Results

3.1 Study Selection

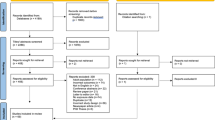

The numbers of studies screened, assessed for eligibility, and included in the review, with reasons for exclusions at each stage, are shown in a flow diagram (Fig. 4). All studies that were critically appraised were included in the review.

3.2 Study Characteristics

For each study, the critical appraisal rating, study design, participants (age/level of play/country), risk factor (exposure variable), injury definition (outcome), and study results were extracted and are shown for studies investigating the association between injury and intrinsic factors (Table 1), and injury and extrinsic factors (Table 2).

3.2.1 Age

Although one of the inclusion criteria for this systematic review was bowlers aged 18 years and above, not all studies specified the age range of their participants. Studies where the mean age of bowlers was 18 years and older were included even though the age range was not specified [10, 24–27]. In the study by Aginsky and colleagues [28], the age range was 17–36 years but the mean age was 22.4 years, which was well above the inclusion criterion of 18 years. Ranson et al.’s [29] study had an age range of 16–24 years but was included because the mean age was 19 years. In three studies [22, 30, 31], neither the mean age nor the age range of bowlers was specified. These three studies were included in the systematic review as they drew on a database of contracted first-class players in Australia and it is assumed that the majority of these contracted players were 18 years and above.

3.2.2 Level of Play

In four studies, participants played at club level [25, 32–34], while all other studies included participants who were at an elite (state/provincial and national) level of play. Club level refers to a non-elite level of play that is below provincial level.

3.2.3 Country

All six studies which investigated bowling workload were conducted in Australia [7, 22, 27, 30, 31, 35]. Four of the studies investigating intrinsic factors were conducted in South Africa [28, 32–34], four in Australia [10, 24, 26, 36], and two in England [25, 29].

3.2.4 Risk Factor (Exposure Variable)

Ten studies evaluated intrinsic neuromusculoskeletal factors, namely shoulder isokinetic strength and flexibility [28], quadratus lumborum (QL) muscle asymmetry [24, 36], static and dynamic balance, lumbo-pelvic movement control [34], lumbar spine reposition sense [33], history of lumbar stress fracture [26], previous injury [22], and bony and disc appearance on magnetic resonance imaging (MRI) [25, 29]. Technique-related intrinsic factors were investigated by three studies [10, 25, 32]. Six studies investigated bowling workload as an extrinsic factor [7, 22, 27, 30, 31, 35].

3.2.5 Injury Definition (Outcome)

All six bowling workload studies included non-contact injuries only [7, 22, 27, 30, 31, 35]. Amongst the studies which investigated intrinsic factors, most studies did not explicitly state that only non-contact injuries were included, but this was assumed because the areas of injury, namely shoulder [28], lumbar spine [24–26, 29, 36], lower quarter (lower back and lower limb) [32–34], and trunk [10], are common areas injured as a result of non-contact injuries.

In all studies investigating bowling workload, injuries severe enough to limit participation in matches [7, 22, 27, 30, 31, 35] and/or training [27] were included. Amongst the studies investigating intrinsic factors, seven studies included all levels of severity whether it influenced participation in matches or not [10, 25, 28, 32–34, 36] while three studies stated that injury should be severe enough to lead to an inability to participate in matches [26, 29] and/or training sessions [24, 29].

3.3 Risk of Bias Within and Across Studies

The detailed critical appraisal of each of the studies is shown in Table 3. All six studies investigating bowling workload as an extrinsic factor used a prospective cohort study design (JBI level 3e) [7, 22, 27, 30, 31, 35]. Of the studies investigating intrinsic factors, three studies were of a cross-sectional nature (JBI level 4b) [24, 25, 28], while the rest of the studies used a prospective cohort design (JBI level 3e) [10, 26, 29, 32–34, 36].

Critical appraisal was completed using the standardized critical appraisal instruments from the JBI-MAStARI, and the results are shown in Table 3. The mean critical appraisal score of the papers included in this study was 6.88 (SD 1.15) out of a maximum of 9. All studies obtained a critical appraisal score of at least 5/9. Cohen’s kappa was used to calculate agreement between the primary and secondary reviewers. The majority of items scored 0.80 and above, prior to discussion. After discussion between reviewers, 100 % agreement between items was attained. The three items which showed the lowest agreement prior to discussion were items 3, 5, and 7. For item 3 (‘were confounding factors identified and strategies to deal with them stated?’) it was decided to only consider obvious confounding factors which were identified by all three reviewers. With regard to item 5 (‘if comparisons are being made, was there sufficient description of groups?’), a ‘yes’ rating was given if at least one characteristic, such as age, was compared between the two groups. For item 7 (‘were the outcomes of people who withdrew described and included in the analysis?’), a ‘yes’ rating was given if the author stated that all participants who withdrew were included in the analysis, or if this was not explicitly described but it became clear from the results that the whole population was included in the analysis.
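The agreement statistic mentioned above can be sketched as follows. This is a minimal illustration of Cohen's kappa for two raters, assuming simple yes/no ratings per appraisal item; the function name `cohens_kappa` and the example ratings are hypothetical and are not data from this review.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical ratings of the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters gave the same rating.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used a single identical category throughout
    return (p_observed - p_expected) / (1.0 - p_expected)

# Hypothetical ratings of one appraisal item across 16 studies (illustration only).
reviewer_1 = ["yes"] * 10 + ["no"] * 6
reviewer_2 = ["yes"] * 9 + ["no"] * 7
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}")
```

In this made-up example the reviewers disagree on a single study, which gives a kappa of roughly 0.87, above the 0.80 level reported for most items.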

Six of the studies included in this review were population based [22, 24, 26, 27, 30, 31] and therefore randomization did not apply. Of the remaining ten studies, four included participants on a random basis [28, 32–34]. The inclusion criteria of participants were clearly described in all but one study [10]. The confounding factors were identified, strategies to deal with confounding factors were stated, and outcomes were assessed using objective criteria in all studies. Only four studies described the injured and non-injured groups in terms of frequency of bowling [29], age [7, 34, 36], type of bowler, handedness, bowling experience, and previous injuries sustained [34], and mass, height and body mass index [36]. Two studies were of a cross-sectional nature [24, 28], while all other studies followed up the incidence of injuries over a sufficient period of time (between one and ten cricket seasons). Outcomes of participants who withdrew were described and included in the analysis in seven studies [24, 25, 28, 33, 34, 36]. In all studies, outcomes were measured in a reliable way and appropriate statistical analysis was used.

3.4 Results of Individual Studies

The factors which showed a statistically significant association with injury in fast bowlers are described in the sections which follow. Shoulder internal rotation strength, QL asymmetry, dynamic balance, lumbar proprioception (joint position sense), pars interarticularis and disc appearance on MRI, previous injury, and technique-related factors were classified as intrinsic factors (Table 1), while bowling workload was the only extrinsic factor identified (Table 2).

3.4.1 Shoulder Internal Rotation Strength

Bowlers without a history of shoulder injury displayed higher concentric weight-normalized torque values for internal rotation of the bowling arm at a higher velocity (180°/s) than bowlers with a history of shoulder injury (65.20 ± 10.03 vs 45.91 ± 10.26 Nm kg−1; p < 0.009) [28].

3.4.2 Quadratus Lumborum (QL) Asymmetry

QL asymmetry was investigated by two studies and conflicting results were obtained. A higher level of QL muscle size asymmetry was found in fast bowlers with low back pain (24.9 %) than in fast bowlers without low back pain (3.0 %), and the rest of the squad (batsmen, spin bowlers, wicket keepers) with low back pain (7.3 %) or without low back pain (8.7 %) (p = 0.002) [24]. However, Kountouris et al. [36] found that bowlers who did not sustain an injury during the cricket season had significantly larger asymmetries when compared with those in the soft tissue injury and bone stress injury groups (p = 0.050). Furthermore, larger asymmetries were found in the non-injured group (20.2 %) compared with any (soft tissue and bone stress) lumbar injury (9.1 %) (p = 0.025). Amongst participants with more than 10 % asymmetry, those who did not sustain an injury (23 %) had a higher degree of asymmetry than those who sustained an injury (lumbar bone stress 17 % and soft tissue 11 %) [36].

3.4.3 Dynamic Balance

One study assessed dynamic balance by means of the Star Excursion Balance Test (SEBT). A decreased reach distance in the posterior medial reach direction while standing on the right leg was found in bowlers who sustained an injury during the cricket season under review when compared with the no lower quarter (LQ) injury group (p = 0.02, 95 % CI 9.44–11.81) [34].

3.4.4 Lumbar Proprioception (Joint Position Sense)

A single study investigated a total of nine lumbar proprioception (position sense) variables in three different positions: neutral lumbar spine (flexion–extension, left–right, average), front foot placement position of the bowling action (flexion–extension, left–right, average) and ball release position (flexion–extension, left–right, average) [33]. Injuries sustained previously were associated with one position sense variable whilst previous injuries sustained during the bowling action were associated with two position sense variables. Low back injuries sustained previously were associated with eight position sense variables, injuries sustained during the cricket season with three, injuries sustained while bowling during the cricket season with three, and low back injuries sustained during the season were associated with two position sense variables.

3.4.5 Pars Interarticularis Appearance on MRI

In two studies, bone stress, as diagnosed by means of MRI, was associated with lumbar pars interarticularis stress fractures during the cricket season under review. A statistically significant difference was found in the non-dominant side lumbar pars interarticularis appearance on MRI of the fast bowlers who had an occurrence of acute stress injury, when compared with those with no lower back injury (p = 0.001) [25]. There was a strong correlation between acute bone stress on MRI in season 1 or 2 and the later development of a stress fracture (11 of the 15 bowlers whose MRI scans showed the presence of acute bone stress suffered a partial or complete stress fracture later in the same season) (p < 0.001). In 10 of the 12 injured players (83.3 %), the stress fracture was on the opposite side to the bowling arm while the other two had bilateral fractures [29].

3.4.6 Disc Appearance on MRI

A difference was found in the lumbar intervertebral disc MRI appearance (in terms of degeneration) of the fast bowlers who had an occurrence of acute stress injury when compared with those with no lower back injury (p < 0.05) [25]. Intervertebral disc degeneration was less prevalent in those with acute stress injuries than bowlers who did not get injured.

3.4.7 Previous Injury

A history of lumbar stress fracture and any injury sustained earlier in the season under review was associated with an increased risk of future injury. In fast bowlers with a history of lumbar stress fractures, there was a greater risk of calf strain [risk ratio (RR) 4.1; 95 % CI 2.4–7.1], quadriceps strain (RR 2.0; 95 % CI 1.1–3.5), and hamstring strain (RR 1.5; 95 % CI 1.03–2.1), although the risk of knee cartilage injury was reduced (RR 0.1; 95 % CI 0.0–0.4) [26]. In a multivariate analysis, using binary logistic regression, Orchard et al. [22] found that bowlers who had sustained a previous injury in the same season were 1.85 times more likely to sustain a tendon injury within 21 days after a test match [odds ratio (OR) 1.85; 95 % CI 1.33–2.55] or a limited overs match (OR 1.67; 95 % CI 1.15–2.42).
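For readers less familiar with how a risk ratio and its confidence interval are obtained, the following is a minimal sketch using the standard log-transform (Wald) interval for a 2×2 table. The counts are hypothetical and are not taken from the cited studies [22, 26], which may have used different methods (the odds ratios in [22], for example, come from logistic regression). The function name `risk_ratio_ci` is an assumption made for illustration.

```python
import math

def risk_ratio_ci(exposed_cases, exposed_total, unexposed_cases, unexposed_total, z=1.96):
    """Risk ratio with an approximate 95 % CI (log-transform method)."""
    risk_exposed = exposed_cases / exposed_total
    risk_unexposed = unexposed_cases / unexposed_total
    rr = risk_exposed / risk_unexposed
    # Standard error of ln(RR) for two independent binomial proportions.
    se_log_rr = math.sqrt(
        1 / exposed_cases - 1 / exposed_total
        + 1 / unexposed_cases - 1 / unexposed_total
    )
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

# Hypothetical counts: 12 of 40 bowlers with a prior stress fracture injured,
# versus 9 of 120 bowlers without one (illustration only).
rr, lower, upper = risk_ratio_ci(12, 40, 9, 120)
print(f"RR {rr:.1f}; 95 % CI {lower:.1f}-{upper:.1f}")
```

An interval that excludes 1.0, as in this example, corresponds to an association that is statistically significant at the 5 % level.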

3.4.8 Technique-Related Factors

Technique-related factors included trunk flexion–extension angle, shoulder counter-rotation, knee angle, and the proportion of side-flexion used. It was found that the shoulder girdles of the injured bowlers were in a position of extension while the shoulder girdles of the non-injured bowlers in relation to the pelvis were in a position of flexion at front foot placement (p = 0.0093) [32]. Shoulder counter-rotation was higher amongst bowlers who were diagnosed with a stress fracture than the no trunk injury group (F = 4.5; p = 0.01) [10]. The knee angles of the bowlers that did not sustain an injury were in more flexion at front foot impact than those that did sustain an injury during the season (p = 0.02) [32]. Fast bowlers who presented with an acute lumbar stress injury on MRI, used a smaller proportion of their standing contralateral side-flexion range of motion during the delivery stride of the bowling action than non-lower-back-injured bowlers (p = 0.03) [25].

3.4.9 Bowling Workload

Bowling workload was analyzed in various ways using a variety of statistical methods, as described hereafter.

3.4.9.1 Days Between Bowling Sessions

Dennis et al. [7] analyzed the days between bowling sessions and found that the combined data from two seasons (2000–2001 and 2001–2002) showed that an average of <2 days (RR 2.4; 95 % CI 1.6–3.5), or ≥5 days (RR 1.8; 95 % CI 1.1–2.9) between bowling sessions increased the risk of injury. Data from the 2000–2001 season indicated that <6 days between training sessions led to a 1.8-fold risk of sustaining injury when compared with ≥6 days between training sessions (RR 1.8; 95 % CI 1.1–3.0). Similar results were found in the 2001–2002 season, where <6 days between training sessions led to twice the risk of injury, when compared with ≥6 days between training sessions (RR 2.0; 95 % CI 1.5–2.7).

3.4.9.2 Frequency of Bowling

The number of days since the previous bowling session was fewer for bowlers who sustained an injury (mean 1.9, SD 1.5) than for bowlers who did not sustain an injury (mean 3.2, SD 3.3; p < 0.01) [35]. Also, bowling in ≥5 sessions in any 7-day period increased the injury risk by 4.5 times (RR 4.5; 95 % CI 1.0–20.1) [35].

3.4.9.3 Number of Deliveries

The number of deliveries was analyzed in four different studies [7, 27, 31, 35]. Dennis et al. [7] found that bowlers who bowled <40 deliveries per session had an increased risk of injury compared with bowlers who bowled >40 deliveries per session (RR 1.2; 95 % CI 0.8–1.9) (combined data from two cricket seasons). Furthermore, it was found that if <123 (RR 1.4; 95 % CI 1.0–2.0) or >188 deliveries per week (RR 1.4; 95 % CI 0.9–1.6) were bowled, the risk was higher than bowling between 123 and 188 deliveries per week (combined data from two cricket seasons) [7]. In another study conducted by Dennis et al. [35], bowlers who sustained an injury during the season had a higher mean number of deliveries (mean 235, SD 30) than those who did not sustain an injury (mean 165, SD 23) (p < 0.01). Additionally, both those who bowled >203 deliveries per week (RR 6.0; 95 % CI 1.00–35.91), as well as bowlers who bowled more than the mean number of match deliveries per month (mean 522), were at an increased risk of injury (p < 0.01, RR not stated) [35]. Orchard et al. [31] found that bowling more than 50 match overs (300 deliveries) in a 5-day period increased the risk of sustaining an injury over the next month compared with bowling 50 overs or less (RR 1.54; 95 % CI 1.04–2.29). The higher the number of deliveries (referred to as acute external workload by the authors) bowled in the current week, the lower the likelihood of sustaining an injury in that same week (p = 0.0001) [27]. A higher average number of deliveries bowled over a 4-week period (chronic external workload) was associated with a lower likelihood of sustaining an injury in the current week (p = 0.002) and in the subsequent week (p = 0.017) [27].
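The thresholds above mix overs and deliveries, so a small helper can make the units explicit. The sketch below converts overs to deliveries (six deliveries per over, ignoring extras) and places a weekly total relative to the 123–188 deliveries-per-week reference band reported by Dennis et al. [7]. The function names and band labels are hypothetical and serve only as an illustration.

```python
DELIVERIES_PER_OVER = 6  # standard over length; wides and no-balls are ignored here

def overs_to_deliveries(overs):
    """Convert a number of completed overs to deliveries."""
    return overs * DELIVERIES_PER_OVER

def weekly_workload_band(deliveries_per_week, low=123, high=188):
    """Place a weekly delivery count relative to the 123-188 reference band [7]."""
    if deliveries_per_week < low:
        return "below reference band"
    if deliveries_per_week > high:
        return "above reference band"
    return "within reference band"

print(overs_to_deliveries(50))      # 50 match overs expressed in deliveries
print(weekly_workload_band(150))
```

The band limits are exposed as parameters because the included studies used different cut-offs (for example, >203 deliveries per week in [35]).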

3.4.9.4 Training-Stress Balance

Hulin et al. [27] took the training intensity [rating of perceived exertion (RPE) using a 10-point category ratio scale] and training duration into account when internal workload was calculated, while external workload referred to the number of deliveries. Furthermore, they calculated training-stress balance ranges (expressed as a percentage) by dividing the acute workload (mean number of deliveries in 1 week) by the chronic workload (mean number of deliveries over a 4-week period). A negative training-stress balance increased the risk of injury in subsequent weeks for internal workload (RR 2.2; 95 % CI 1.91–2.53; p = 0.009), and external workload (RR 2.1; 95 % CI 1.81–2.44; p = 0.01). An internal workload training-stress balance of >200 % increased the risk of injury to 4.5 (RR 4.5; 95 % CI 3.43–5.90; p = 0.009), compared with those with a training-stress balance between 50 and 99 %. An internal workload training-stress balance between 150 and 199 % led to an RR of 2.1 (95 % CI 1.25–3.53; p = 0.035) in comparison with a training-stress balance of between 50 and 99 %. An external workload training-stress balance of >200 % led to an RR of 3.3 (95 % CI 1.50–7.25; p = 0.033), in comparison with a training-stress balance of between 50 and 99 % [27].
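The training-stress balance described above is a simple ratio, sketched here for external workload. The sketch assumes that the chronic workload is the mean of the four most recent weekly totals, including the current week; the exact windowing used in [27] is not stated in this review. The function names, the treatment of exactly 200 % as part of the top band, and the example loads are all hypothetical.

```python
def training_stress_balance(weekly_deliveries):
    """Acute:chronic workload as a percentage.

    Acute = deliveries in the most recent week; chronic = mean weekly
    deliveries over the last four weeks (assumed to include that week).
    """
    if len(weekly_deliveries) < 4:
        raise ValueError("at least four weeks of data are required")
    acute = weekly_deliveries[-1]
    chronic = sum(weekly_deliveries[-4:]) / 4
    if chronic == 0:
        raise ValueError("chronic workload is zero; the ratio is undefined")
    return 100.0 * acute / chronic

def tsb_band(tsb_percent):
    """Map a training-stress balance to the ranges named in the text."""
    if 50 <= tsb_percent < 100:
        return "50-99 % (reference)"
    if 150 <= tsb_percent < 200:
        return "150-199 %"
    if tsb_percent >= 200:
        return "200 % or more"
    return "other"

# Hypothetical weekly delivery counts, oldest first (illustration only).
loads = [120, 130, 110, 240]
tsb = training_stress_balance(loads)
print(f"{tsb:.0f} % -> {tsb_band(tsb)}")
```

In this example a week of 240 deliveries against a 4-week mean of 150 gives a balance of 160 %, which falls in the 150–199 % range.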

3.4.9.5 Risk of Injury 1, 3 or 4 Weeks After High Workload Exposure

The mean number of deliveries 1, 3 and 4 weeks prior to sustaining an injury was analyzed by Dennis et al. [7, 35] and Orchard et al. [22, 30]. Dennis et al. [7] found that injured bowlers bowled a higher mean number of deliveries (160 deliveries) in the week prior to the injury when compared with the non-injured bowlers (142 deliveries) (p < 0.01). The mean number of deliveries per session in the 8–21 days prior to the date of injury (mean 77) was higher than the mean number of deliveries per session in the 8–21 days prior to any other observation during the whole season (mean 60) (p < 0.02) [35].

The relative odds of sustaining an injury were greatest at 21 (OR 1.77; 95 % CI 1.05–2.98) and 28 (OR 1.62; 95 % CI 1.02–2.57) days after a high bowling workload [30]. Bowling >50 overs in a match led to an injury incidence in the next 21 days of 3.37 injuries per 1000 overs bowled while bowling <50 overs led to an injury incidence of 1.90 injuries per 1000 overs bowled (CI 1.05–2.98) [30]. Bowling >30 overs in the second innings of a match increased the injury risk per over bowled in the next 28 days (RR 2.42; 95 % CI 1.38–4.26) [30]. In another study conducted by Orchard et al. [22], the risks of sustaining tissue-specific injuries, namely tendon, bone, muscle, and joint, were analyzed separately. Risk factors for tendon injury in the next 21 days were acute match overs of ≥50 (OR 3.69; 95 % CI 1.82–8.24), career overs of ≥1200 (OR 2.38; 95 % CI 1.65–3.42), overs in the previous season of ≥400 (OR 2.01; 95 % CI 1.38–2.94), previous injury in the same season (OR 1.85; 95 % CI 1.33–2.55) and limited overs match (OR 1.67; 95 % CI 1.15–2.42), while overs in the previous 3 months of ≥150 (OR 0.29; 95 % CI 0.17–0.50) and career overs of ≥3000 (OR 0.24; 95 % CI 0.11–0.52) were protective. Risk factors for bone stress in the next 28 days were ≥150 overs in the previous 3 months (OR 2.10; 95 % CI 1.48–2.99) and previous injury in the same season (OR 1.71; 95 % CI 1.25–2.34), while career List A overs (bowled in domestic 1-day and T20 matches) of ≥1200 were a protective factor (OR 0.31; 95 % CI 0.21–0.45). Factors related to muscle injury in the next 21 days were playing in a limited overs match (OR 1.34; 95 % CI 1.08–1.67) and bowling ≥400 overs in the previous season (protective) (OR 0.71; 95 % CI 0.53–0.95); risk factors for joint injury in the next 28 days were bowling ≥450 overs in the previous season (OR 1.96; 95 % CI 1.14–3.37) and bowling ≥3000 career List A overs (OR 1.84; 95 % CI 1.02–3.31) [22].
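As a quick consistency check on the incidence figures above, the ratio of the two reported rates (3.37 vs 1.90 injuries per 1000 overs) can be computed directly. This is only an arithmetic sketch; whether the authors of [30] derived their estimate in this way is an assumption, and the function name `rate_ratio` is hypothetical.

```python
def rate_ratio(rate_exposed, rate_reference):
    """Ratio of two incidence rates expressed in the same units."""
    if rate_reference <= 0:
        raise ValueError("reference rate must be positive")
    return rate_exposed / rate_reference

# Injuries per 1000 overs in the 21 days after bowling >50 vs <50 match overs [30].
ratio = rate_ratio(3.37, 1.90)
print(f"rate ratio = {ratio:.2f}")
```

The result, about 1.77, matches the point estimate reported for the 21-day window.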

3.5 Synthesis of Results

A meta-analysis could not be performed because of the wide variety of factors studied and methodologies applied. Some of the intrinsic factors, namely shoulder internal rotation strength [28], dynamic balance [34], and proprioception [33], were only investigated in one study each. The methodologies of the studies describing all other intrinsic factors were very different from one another and results could therefore not be pooled. A meta-analysis could not be performed for extrinsic factors due to the different ways in which data were reported. Overall, the studies were clinically very similar (e.g., age, population, level of play), but methodological differences (e.g., type of factors investigated) and statistical differences in the way results were analyzed (e.g., bowling workload) contributed to the decision to present results in a narrative form rather than to combine them in a meta-analysis.

4 Discussion

This is the first systematic review to report on the literature on intrinsic and extrinsic factors associated with non-contact injury in adult cricket fast bowlers.

4.1 Risk of Bias Within and Across Studies

No experimental studies, such as randomized controlled trials, were found and although the studies included in this systematic review were categorized as providing relatively lower levels of evidence [level 3e (prospective cohort) and level 4b (cross-sectional)], the methodological quality, as established by the critical appraisal process, was sufficient. The majority of studies scored a rating of 7/9 or above, while only one study scored 5/9. The prospective cohort study design is most suitable to the research question, which in this case was the identification of factors associated with injury. Bias within these studies can be limited by the inclusion of bowlers on a random basis, a detailed description of injured and non-injured groups, and accounting for bowlers who did not complete a season for reasons other than injury. Other aspects influencing methodological quality, such as clearly defining the inclusion criteria, measuring outcomes in an objective and reliable manner, identifying confounding factors, and using appropriate statistical methods, should be considered during the design of any study.

All studies either implied or explicitly stated that only non-contact injuries were investigated, or at least that the majority of injuries were of a non-contact nature. It would be useful if future studies clearly state or, better, describe the criteria used to define injury. Furthermore, the activity at the time of injury may add valuable insight as some injuries to bowlers might not be related to bowling; for example, they could occur whilst batting or fielding and therefore might confound bowling-related injury risk. Therefore, rather than looking at injuries to bowlers, we may be better focusing on injuries that occur during, or are deemed to be related to, bowling.

Many studies considered only injuries severe enough to limit participation in training or match play, while injuries leading to low severity symptoms were not considered. A severe injury may commence with minor symptoms or ‘niggles’ and may then lead to a more severe time-loss injury. It is possible that the factors associated with these less severe injuries differ from those associated with more severe injury. The potential identification of the factors contributing to lesser injury may aid our attempts to prevent injuries before they become severe enough to limit participation.

The injured and non-injured groups were not described or compared in the majority of studies. Should these groups differ significantly in terms of confounding factors (e.g., age, mass, height, body mass index), it is possible that such biases may have clouded the final results of these studies. In 13 studies, injury incidence was monitored over a number of months and, in some cases, years. The longitudinal nature of these studies elevated the rigor of such investigations since injuries were recorded in a reliable way and recall bias was excluded.

The objectivity of methods used to record injuries varied amongst studies: some studies relied on recall [24, 28], others monitored injuries throughout the season through intermittent enquiry via players, team doctors, and physiotherapists [10, 25, 29, 32–34, 36], while other studies made use of injury data captured on large databases [7, 22, 26, 27, 30, 31, 35]. Despite the advantages of highly skilled diagnoses of injury, limited resources often restrict the fastidious reporting of injuries through an assessment by a medical professional. Nevertheless, the use of accurate diagnostic data to limit bias and inaccurate reporting is a particular advantage in certain studies.

Only seven studies included the participants who dropped out of the study in the data analysis [24, 25, 28, 29, 33, 34, 36]. Not including bowlers who stopped playing cricket halfway through a season in the data analysis, whether discontinuing cricket was due to undetected injury or non-injury-related reasons, has the potential to introduce attrition bias into the results. Someone who started playing at the start of a cricket season may stop playing cricket because of an unreported and thus undetected injury. The same may happen in a case where a type of injury was sustained that was not defined as being the focus of the specific study (e.g., contact injury while batting). Also, a bowler who discontinued cricket early in the season might have suffered an injury later in the season had he continued to play.

The inclusion of an unpublished study [32] adds to the strength of this systematic review because the inclusion of grey literature tends to reduce publication bias. Also, reporting bias was restricted due to a pre-developed protocol which was approved through a peer review process (JBI 1387; doi:10.11124/jbisrir-2015-1387) [1]. The protocol was deviated from in terms of the types of injuries that were included. Initially, only literature on lower back and lower limb injuries was sought, but very few papers were found which investigated specifically injury to the lower back or lower limb. It was therefore decided to include all non-contact injuries in this systematic review.

4.2 Study Characteristics

The mean age or age range of participants was not specified in all studies. It is important to take age into consideration, as results from studies conducted on school-going bowlers cannot be generalized to adult cricketers. It is well known that the neuromuscular system undergoes a variety of changes during the human lifespan that are directly related to age. These include, but are not limited to, decreases in muscle strength, balance, proprioception, and reaction time [37]. Although the ageing process begins from the moment an individual is born, it seems logical that age-related changes in the neuromuscular system begin as soon as the neuromuscular system is fully matured. The literature depicting when in the human life cycle this maturity is reached is scarce. When taking a closer look at ageing of the neuromuscular system of sports players specifically, muscle fatigue has a direct influence on sporting performance. Muscle fatigue is defined by Bigland-Ritchie et al. [38] as a decrease in the force-generating ability of the neuromuscular system during continuous activity. Muscular fatigue is directly affected by many factors, one of which is age [39]. Muscle fatigue in boys (mean age 10 years) was lower, and their recovery rate faster, than in adult men (mean age 26 years) [40]. Furthermore, bone mass is positively correlated with age, while the relationship between bone strength and age is primarily explained by the increase in muscle force in children and adolescents [41]. Due to the demanding nature of fast bowling in cricket, fast bowlers are not immune to age-related changes and there will be neuromuscular differences in players of different ages.

Participants played cricket at club level in only four of the 16 studies included in this systematic review. Although the workload of non-elite fast bowlers may be lower than that of elite players, this population is vulnerable to the effects of injury as this group plays at a competitive level, but at the same time does not have easy access to preventative and rehabilitative medical services. All studies where bowling workload was analyzed were performed on Australian databases. Analysis done on injury data in other countries could not be included as specific fast bowling data could not be extracted. If bowling workload could be analyzed in other cricket-playing countries such as New Zealand, South Africa, England, and the West Indies, data could be pooled with the overarching aim to improve injury prevention globally.

4.3 Intrinsic Factors

Shoulder internal rotation strength of the bowling arm, QL asymmetry, dynamic balance, lumbar proprioception (joint position sense), technique-related factors, pars interarticularis and disc appearance on MRI, and previous injury were classified as intrinsic factors which have been studied in relation to injury in fast bowlers.

Modifiable intrinsic risk factors were decreased shoulder internal rotation strength of the bowling arm [28], poor dynamic balance [34], and poor lumbar proprioception [33]. Only one study was conducted for each of these factors, all of which had a low level of strength of the evidence due to the observational methodology used. The two studies which investigated dynamic balance and proprioception were of a higher level of evidence (level 3e) than the study investigating shoulder strength (level 4b). However, although all three of these studies obtained high critical appraisal scores, cause–effect conclusions cannot be drawn from the findings of these studies.

The importance of optimal balance ability is emphasized by the known relationship between poor balance and a higher incidence of injury, and by the relationship between a highly developed balance ability and a reduced incidence of injuries [42, 43]. Proprioception is a requirement of optimal balance. Both peripheral and spinal pathology may be associated with proprioceptive deficits which may lead to abnormal loading across joint surfaces [44] and tissue overload and injury [45, 46]. However, injury may be both a cause and a consequence of poor proprioception [47]. These modifiable intrinsic factors may thus be incorporated into injury prevention programs as they can potentially optimize the prevention of injury in the fast bowler. Dynamic balance and proprioception can be categorized as neuromusculoskeletal control factors. Other lower limb functional tests relying on neuromuscular control, such as the standing long jump (SLJ) test, the single-leg hop (SLH) for distance test, and the lower extremity functional test (LEFT), can be performed as injury predictors in a population of fast bowlers [48]. The functional needs of bowling, such as power, endurance, and eccentric strength, should be taken into account when studies on injury predictors are formulated [49].

One of the functional adaptations found in the fast bowler is a hypertrophied QL ipsilateral to the dominant side [24, 36]. Two studies investigated QL asymmetry but contradictory results were found. The methodological quality of these two studies also differed greatly, with the study that found a higher degree of QL asymmetry to be protective against injury being of higher quality [36]. Hides et al. [24] included a small sample size (n = 9) and tested cross-sectionally, while Kountouris et al. [36] included more bowlers (n = 23) who were part of a prospective cohort study. Hides et al. [24] asked for a history of low back pain, which can introduce recall bias, while Kountouris et al. [36] diagnosed bony and soft tissue pathology radiologically (scintigraphy, MRI, or computed tomography). A mathematical model, developed by de Visser et al. [50], proposed that QL asymmetry is not the cause of stress fractures and may even act to reduce the stress on the lumbar spine during extreme postures and muscle activation. Caution should be applied in the interpretation of mathematical models because of the number of stated and less obvious assumptions made in their creation. It is furthermore postulated that a large QL asymmetry may be an indication that the fast bowler is utilizing an extreme lateral flexion posture concurrent with a large lateral flexion moment in the direction of the non-dominant side [51], which may indicate the need for bowling technique modification.

Four different technique-related intrinsic factors were investigated (shoulder-pelvis flexion–extension angle, shoulder counter-rotation, knee angle and the proportion of side-flexion) in three different studies [10, 25, 32]. Changing the technique of the fast bowler with the aim of decreasing injury may impact on bowling performance in terms of accuracy, consistency, and ball release speed, and should therefore be considered with care. A clear trade-off is present between optimal performance and injury prevention. This is confirmed with the example where a straighter front knee is associated with increased ball release speed [10, 52, 53], but also with a higher risk of sustaining an injury [6, 9] as a more extended knee angle is associated with higher lumbar load [52]. In contrast, Worthington and colleagues [12] did not find a relationship between knee angle and peak vertical force, although the sample size of the sub-analysis was small and the risk for type II error large. It should be noted, however, that not all intrinsic and extrinsic factors act in a way that forces a trade-off between bowling performance and injury prevention. Extrinsic and intrinsic factors related to the promotion of a more ‘protective’ front knee angle which are at the same time associated with a higher ball release speed should be further investigated and encouraged amongst bowlers. Also, if ‘protective’ factors related to neuromuscular control, such as balance and proprioception, can be optimized through evidence-based training programs, these attributes may serve to prevent injury amidst the bowling action with its inherently high loads.

The appearance of the pars interarticularis on MRI [25, 29] may be seen as a non-modifiable intrinsic risk factor, or rather, as a precursor of an acute lumbar stress fracture, which may be used in pre-participatory screening programs in the early identification of those at risk of an acute lumbar stress fracture. Previous injury is another non-modifiable risk factor which is associated with an increased risk of sustaining an injury [22, 26]. It would therefore be prudent for bowlers who have sustained a stress fracture as well as any other previous injury to be carefully assessed and enter an individualized, intensive rehabilitation program.

4.4 Extrinsic Factors

The only modifiable extrinsic risk factor found to be associated with injury was bowling workload. Various sub-categories related to bowling workload were analyzed, including days between bowling sessions [7], frequency of bowling [35], number of deliveries [7, 27, 31, 35], training–stress balance [27], and risk of injury 1, 3, or 4 weeks after high workload [7, 22, 30, 35]. In addition, various statistical methods were applied including RRs [7, 27, 31, 35], ORs [22, 30], means and SDs [35], and likelihood ratios [27]. Where RRs were calculated, each of the authors used a different number of deliveries in each category. It is suggested that a consensus paper be published in order to urge researchers to analyze results on bowling workload in a consistent manner so that comparison across various populations is made possible. Overall, it seems that a moderate bowling workload is protective against injury while both too high and too low a workload increase the risk of injury [7, 27, 31, 35]. Findings from four studies [7, 22, 30, 35] indicate that the risk of injury increases between 1 and 4 weeks after the bowler has been exposed to a high bowling workload. Limiting the number of bowling sessions to fewer than five sessions in a 7-day period and allowing 2–4 days for recovery between sessions may be of benefit. Monitoring of bowling workload is therefore essential, while the influence of this extrinsic risk factor may be curbed by the optimization of modifiable intrinsic protective factors. Australia was the only country which published bowling workload studies. This practice should be encouraged in other countries as well. In general, it is evident that there is a clear lack of research on factors associated with non-contact injury in adult fast bowlers, as only six articles on extrinsic factors and ten on intrinsic factors met the inclusion criteria of this review.
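As an illustration of how the session-frequency guidance above might be applied when monitoring a bowler's log, the following is a minimal sketch under stated assumptions; it is not a validated screening tool and does not come from any of the included studies. The function name, the parameter names, and the example dates are hypothetical. It assumes that "fewer than five sessions in a 7-day period" means at most four sessions in any rolling 7-day window, and that the recovery interval is the number of calendar days between consecutive session dates.

```python
from datetime import date, timedelta


def check_sessions(session_dates, max_sessions=4, window_days=7, gap_range=(2, 4)):
    """Flag crowded 7-day windows and recovery gaps outside the 2-4 day range.

    Assumptions (not taken from the source studies): windows are rolling and
    start at each session date; a gap is the difference in calendar days
    between consecutive sessions.
    """
    days = sorted(session_dates)

    crowded = []
    for i, start in enumerate(days):
        end = start + timedelta(days=window_days)
        # Count sessions from this one onward that fall inside the window.
        count = sum(1 for d in days[i:] if d < end)
        if count > max_sessions:
            crowded.append((start, count))

    gaps = []
    for prev, nxt in zip(days, days[1:]):
        gap = (nxt - prev).days
        if not gap_range[0] <= gap <= gap_range[1]:
            gaps.append((prev, nxt, gap))

    return crowded, gaps


# Hypothetical bowling log for illustration only.
log = [date(2015, 1, d) for d in (1, 2, 3, 5, 6, 10)]
crowded, gaps = check_sessions(log)
print(crowded)    # windows containing five or more sessions
print(len(gaps))  # number of recovery intervals outside 2-4 days
```

In this hypothetical log, the window starting on 1 January contains five sessions and three recovery intervals are shorter than 2 days. Intervals longer than 4 days are also flagged, in keeping with the observation that too low a workload may increase risk as well; the thresholds are parameters so that they can be adjusted if a consensus definition emerges.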

5 Limitations and Future Research

The literature search strategy was conducted in the main databases in the field of sports medicine and sports science. However, it is possible that other eligible studies, which were not listed in these databases or not referenced in the reviewed reference lists, were not identified or included in this systematic review.

A meta-analysis was not possible due to the lack of consistency in the identified studies. It is evident that more research is needed into intrinsic neuromusculoskeletal factors such as concentric and eccentric muscle strength, muscle morphology, dynamic balance and proprioception, and their association with injury. Also, technique-related intrinsic factors, including kinematics and kinetics of the fast-bowling action, should be investigated in adult fast bowlers. Consensus is needed in the analysis of bowling workload to allow for comparison across different populations. Other extrinsic factors which may influence risk of injury should be investigated; for example, bowling in the first or in the second innings, pitch quality, and weather conditions.

Future studies should consider including ‘non-time loss’ injuries in their investigations. The definition of injury should be clearly stated, especially in terms of the nature of the injuries included (e.g., contact or non-contact injuries). The age range of participants should be specified to enable the reader to generalize findings to the appropriate population group. Furthermore, more research is needed on the population of club-level fast bowlers, as these bowlers are especially at risk of injury as they have limited access to preventative and rehabilitative medicine. Future studies aiming at defining the factors associated with injury in a prospective cohort should be of sufficient quality, should ensure inclusion of bowlers on a random basis, and should describe groups sufficiently, accounting for bowlers who did not complete a season. Other aspects influencing the methodological quality of a study, such as clearly defining the inclusion criteria, measuring outcomes in an objective and reliable manner, identifying confounding factors, and using appropriate statistical methods, should be considered during the design of any study. It would be extremely useful if the recording of bowling workload specifically in fast bowlers could be documented in all cricket-playing countries and data could be analyzed in such a way that comparisons could be made and data could be pooled.

6 Conclusions and Practical Implications

Identifying the factors associated with injury is a crucial step which should precede the development of and research into the effectiveness of injury prevention programs. Based on the results found in this study, factors such as shoulder internal rotation strength, balance, and proprioception can be included in both pre-participatory screening and injury prevention programs. The early identification of bony changes and disc degeneration through MRI can be used in the early identification of players at risk of lumbar stress fracture. Individualized rehabilitation of previous injury can be used to decrease the risk of future injury. Modification of technique-related factors should be considered with care as these may impact on bowling performance. Bowling workload should be monitored and moderate loads may be prescribed. The optimization of the modifiable intrinsic factors may aid in the prevention of injuries in the presence of the high-load biomechanical nature of the fast-bowling action and the predisposing extrinsic factors such as bowling workload.

References

Olivier B, Stewart A, Taljaard T, et al. Extrinsic and intrinsic factors associated with non-contact injury in adult pace bowlers: a systematic review protocol. JBI Database Syst Rev Implement Rep. 2015;13(1):3–13.

Orchard J, James T, Alcott E, et al. Injuries in Australian cricket at first class level 1995/1996 to 2000/2001. Br J Sports Med. 2002;36(4):270–4 (discussion 5).

Seward H, Orchard J, Hazard H, et al. Football injuries in Australia at the elite level. Med J Aust. 1993;159(5):298–301.

Frost WL, Chalmers DJ. Injury in elite New Zealand cricketers 2002–2008: descriptive epidemiology. Br J Sports Med. 2012;1–6. doi:10.1136/bjsports-2012-091337.

Orchard JW, James T, Portus MR. Injuries to elite male cricketers in Australia over a 10-year period. J Sci Med Sport. 2006;9(6):459–67. doi:10.1016/j.jsams.2006.05.001.

Foster D, John D, Elliott B, et al. Back injuries to fast bowlers in cricket: a prospective study. Br J Sports Med. 1989;23(3):150–4.

Dennis R, Farhart P, Goumas C, et al. Bowling workload and the risk of injury in elite cricket fast bowlers. J Sci Med Sport. 2003;6(3):359–67.

Dennis RJ, Finch CF, McIntosh AS, et al. Use of field-based tests to identify risk factors for injury to fast bowlers in cricket. Br J Sports Med. 2008;42(6):477–82. doi:10.1136/bjsm.2008.046698.

Elliott BC, Hardcastle PH, Burnett AE, et al. The influence of fast bowling and physical factors on radiologic features in high performance young fast bowlers. Sports Med Train Rehabil. 1992;3(2):113–30.

Portus M, Mason BR, Elliott BC, et al. Technique factors related to ball release speed and trunk injuries in high performance cricket fast bowlers. Sports Biomech. 2004;3(2):263–84. doi:10.1080/14763140408522845.

Ferdinands RE, Kersting U, Marshall RN. Three-dimensional lumbar segment kinetics of fast bowling in cricket. J Biomech. 2009;42(11):1616–21. doi:10.1016/j.jbiomech.2009.04.035.

Worthington P, King M, Ranson C. The influence of cricket fast bowlers’ front leg technique on peak ground reaction forces. J Sports Sci. 2013;31(4):434–41. doi:10.1080/02640414.2012.736628.

Hurrion PD, Dyson R, Hale T. Simultaneous measurement of back and front foot ground reaction forces during the same delivery stride of the fast-medium bowler. J Sports Sci. 2000;18(12):993–7. doi:10.1080/026404100446793.

Morton S, Barton CJ, Rice S, et al. Risk factors and successful interventions for cricket-related low back pain: a systematic review. Br J Sports Med. 2013;1–8. doi:10.1136/bjsports-2012-091782.

Gerrard DF. Overuse injury and growing bones: the young athlete at risk. Br J Sports Med. 1993;27(1):14–8.

Difiori JP. Overuse injuries in children and adolescents. Phys Sportsmed. 1999;27(1):75–89. doi:10.3810/psm.1999.01.652.

Zazulak BT, Hewett TE, Reeves NP, et al. Deficits in neuromuscular control of the trunk predict knee injury risk: a prospective biomechanical-epidemiologic study. Am J Sports Med. 2007;35(7):1123–30. doi:10.1177/0363546507301585.

Kulas AS, Hortobagyi T, Devita P. The interaction of trunk-load and trunk-position adaptations on knee anterior shear and hamstrings muscle forces during landing. J Athl Train. 2010;45(1):5–15. doi:10.4085/1062-6050-45.1.5.

Nadler SF, Malanga GA, Feinberg JH, et al. Functional performance deficits in athletes with previous lower extremity injury. Clin J Sport Med. 2002;12(2):73–8.

Putnam CA. Sequential motions of body segments in striking and throwing skills: descriptions and explanations. J Biomech. 1993;26(Suppl 1):125–35.

Orchard JW. Intrinsic and extrinsic risk factors for muscle strains in Australian football. Am J Sports Med. 2001;29(3):300–3.

Orchard JW, Blanch P, Paoloni J, et al. Cricket fast bowling workload patterns as risk factors for tendon, muscle, bone and joint injuries. Br J Sports Med. 2015;1–6. doi:10.1136/bjsports-2014-093683.

Joanna Briggs Institute. Joanna Briggs Institute reviewers’ manual. 2014th ed. Australia: The Joanna Briggs Institute, The University of Adelaide; 2014.

Hides J, Stanton W, Freke M, et al. MRI study of the size, symmetry and function of the trunk muscles among elite cricketers with and without low back pain. Br J Sports Med. 2008;42(10):809–13. doi:10.1136/bjsm.2007.044024.

Ranson C, Burnett A, King M, et al. Acute lumbar stress injury, trunk kinematics, lumbar MRI and paraspinal muscle morphology in fast bowlers in cricket. In: International society of biomechanics in sports conference, Seoul; 2008.

Orchard J, Farhart P, Kountouris A, et al. Pace bowlers in cricket with history of lumbar stress fracture have increased risk of lower limb muscle strains, particularly calf strains. Open Access J Sports Med. 2010;1:177–82. doi:10.2147/OAJSM.S10623.

Hulin BT, Gabbett TJ, Blanch P, et al. Spikes in acute workload are associated with increased injury risk in elite cricket fast bowlers. Br J Sports Med. 2014;48(8):708–12. doi:10.1136/bjsports-2013-092524.

Aginsky KD, Lategan L, Stretch RA. Shoulder injuries in provincial male fast bowlers - predisposing factors. SAJSM. 2004;16(1):25–8.

Ranson CA, Burnett AF, Kerslake RW. Injuries to the lower back in elite fast bowlers: acute stress changes on MRI predict stress fracture. J Bone Jt Surg Br. 2010;92(12):1664–8. doi:10.1302/0301-620X.92B12.24913.

Orchard JW, James T, Portus M, et al. Fast bowlers in cricket demonstrate up to 3- to 4-week delay between high workloads and increased risk of injury. Am J Sports Med. 2009;37(6):1186–92. doi:10.1177/0363546509332430.

Orchard JW, Blanch P, Paoloni J, et al. Fast bowling match workloads over 5–26 days and risk of injury in the following month. J Sci Med Sport. 2015;18(1):26–30. doi:10.1016/j.jsams.2014.09.002.

Olivier B, Stewart AV, McKinon W. Cricket pace bowlers: the role of spinal and knee kinematics in low back and lower limb injury. 2014 (unpublished).

Olivier B, Stewart AV, McKinon W. Injury and lumbar reposition sense in cricket pace bowlers in neutral and pace bowling specific body positions. Spine J. 2014;14(8):1447–53. doi:10.1016/j.spinee.2013.08.036.

Olivier B, Stewart AV, Olorunju SA, et al. Static and dynamic balance ability, lumbo-pelvic movement control and injury incidence in cricket pace bowlers. J Sci Med Sport. 2015;18:19–25.

Dennis R, Farhart P, Clements M, et al. The relationship between fast bowling workload and injury in first-class cricketers: a pilot study. J Sci Med Sport. 2004;7(2):232–6.

Kountouris A, Portus M, Cook J. Cricket fast bowlers without low-back pain have larger quadratus lumborum asymmetry than injured bowlers. Clin J Sport Med. 2013;1–5. doi:10.1097/JSM.0b013e318280ac88.

Bassey EJ. Physical capabilities, exercise and aging. Rev Clin Gerontol. 1997;7:289–97.

Bigland-Ritchie B, Johansson R, Lippold OC, et al. Contractile speed and EMG changes during fatigue of sustained maximal voluntary contractions. J Neurophysiol. 1983;50(1):313–24.

Hunter SK, Critchlow A, Shin IS, et al. Fatigability of the elbow flexor muscles for a sustained submaximal contraction is similar in men and women matched for strength. J Appl Physiol (1985). 2004;96(1):195–202. doi:10.1152/japplphysiol.00893.2003.

Armatas V, Bassa E, Patikas D, et al. Neuromuscular differences between men and prepubescent boys during a peak isometric knee extension intermittent fatigue test. Pediatr Exerc Sci. 2010;22(2):205–17.

Fricke O, Beccard R, Semler O, et al. Analyses of muscular mass and function: the impact on bone mineral density and peak muscle mass. Pediatr Nephrol. 2010;25(12):2393–400. doi:10.1007/s00467-010-1517-y.

Herrington L, Hatcher J, Hatcher A, et al. A comparison of Star Excursion Balance Test reach distances between ACL deficient patients and asymptomatic controls. Knee. 2009;16(2):149–52. doi:10.1016/j.knee.2008.10.004.

Gribble PA, Hertel J, Plisky P. Using the Star Excursion Balance Test to assess dynamic postural-control deficits and outcomes in lower extremity injury: a literature and systematic review. J Athl Train. 2012;47(3):339–57. doi:10.4085/1062-6050-47.3.08.

Forwell LA, Carnahan H. Proprioception during manual aiming in individuals with shoulder instability and controls. J Orthop Sports Phys Ther. 1996;23(2):111–9.

Cholewicki J, Silfies SP, Shah RA, et al. Delayed trunk muscle reflex responses increase the risk of low back injuries. Spine (Phila Pa 1976). 2005;30(23):2614–20.

O’Sullivan PB, Burnett A, Floyd AN, et al. Lumbar repositioning deficit in a specific low back pain population. Spine (Phila Pa 1976). 2003;28(10):1074–9. doi:10.1097/01.BRS.0000061990.56113.6F.

Parkhurst TM, Burnett CN. Injury and proprioception in the lower back. J Orthop Sports Phys Ther. 1994;19(5):282–95.

Brumitt J, Heiderscheit BC, Manske RC, et al. Lower extremity functional tests and risk of injury in Division III collegiate athletes. Int J Sports Phys Ther. 2013;8(3):216–27.

Noakes TD, Durandt JJ. Physiological requirements of cricket. J Sports Sci. 2000;18:919–29.

de Visser H, Adam CJ, Crozier S, et al. The role of quadratus lumborum asymmetry in the occurrence of lesions in the lumbar vertebrae of cricket fast bowlers. Med Eng Phys. 2007;29(8):877–85. doi:10.1016/j.medengphy.2006.09.010.

Crewe H, Campbell A, Elliott B, et al. Lumbo-pelvic biomechanics and quadratus lumborum asymmetry in cricket fast bowlers. Med Sci Sports Exerc. 2013;45(4):778–83. doi:10.1249/MSS.0b013e31827973d1.

Crewe H, Campbell A, Elliott B, et al. Lumbo-pelvic loading during fast bowling in adolescent cricketers: the influence of bowling speed and technique. J Sports Sci. 2013;31(10):1082–90. doi:10.1080/02640414.2012.762601.

Loram LC, McKinon W, Wormgoor S, et al. Determinants of ball release speed in schoolboy fast-medium bowlers in cricket. J Sports Med Phys Fit. 2005;45(4):483–90.

Orchard J, Newman D, Stretch R, et al. Methods for injury surveillance in international cricket. J Sci Med Sport. 2005;8(1):1–14.

Acknowledgments

The authors would like to acknowledge the University of the Witwatersrand’s Research Committee who granted funding for this project as part of the Friedel Sellschop Award.

Ethics declarations

Funding

The University of the Witwatersrand’s Research Committee granted funding through the Friedel Sellschop Award which was partly used to fund this review.

Conflict of interest

Benita Olivier, Tracy Taljaard, Elaine Burger, Peter Brukner, John Orchard, Janine Gray, Nadine Botha, Aimee Stewart and Warrick McKinon declare that they have no conflicts of interest relevant to the content of this review.

Ethical approval

This manuscript does not contain clinical studies or patient data and therefore ethical clearance was not applied for.

Cite this article

Olivier, B., Taljaard, T., Burger, E. et al. Which Extrinsic and Intrinsic Factors are Associated with Non-Contact Injuries in Adult Cricket Fast Bowlers?. Sports Med 46, 79–101 (2016). https://doi.org/10.1007/s40279-015-0383-y

DOI: https://doi.org/10.1007/s40279-015-0383-y