Abstract

Predicting the thermal history of a component produced via metal-based additive manufacturing is an important step of the part qualification process because the thermal history can be used to predict thermally induced residual stresses, distortion, and porosity. Thermal simulation calibration and validation are difficult due to the lack of insight into the melt pool during the build from traditional data used for calibration, such as thermocouple measurements and infrared camera images. This work presents a three-dimensional, finite element method, predictive thermal model of a Ti–6Al–4V thin wall manufactured via LENS™ that accounts for heat transfer due to conduction, convection, and radiation. Thermal melt pool data taken with a dual-wave pyrometer are used to calibrate and validate the model. Results from the calibration and validation studies indicate that process parameters (i.e., layer height and width) along with physical properties of the heat source and material can be used to inform the heat source modeling of powder-blown directed energy deposition finite element thermal models to generate datasets for modeling across multiple length scales in an integrated computational materials engineering framework.



Introduction

Additive manufacturing (AM) is a process for creating near-net shape parts on a layer-by-layer basis from a computer-aided design (CAD) model [1]. Predicting the thermal history of a component produced via metal-based AM (MBAM) is an important step of the part qualification process because the thermal history can be used to predict thermally induced residual stresses, distortion, and porosity [2,3,4]. However, the thermal history of AM processes has proven difficult to control and predict due to large thermal gradients and localized heating [5]. As in rapid solidification casting, the cooling rates present in directed energy deposition (DED) can range from \(10^{2}\) to \(10^{4}\) K/s, indicating that DED can produce a refined grain size; the distinction between the thermal behavior native to each process is that DED experiences localized and cyclical heating and cooling [6]. The thermal history of an AM part is driven by the melt pool, which is a function of the build process parameters such as laser power, scanning speed, hatch spacing, and layer height [7, 8]. Therefore, predicting the melt pool associated with a given set of process parameters can determine the temperature history, leading to predictions about microstructure, mechanical properties, and part performance [9,10,11,12].

Considerable effort has been devoted to modeling metal-based AM processes at different length scales to gain insight into various phenomena (e.g., temperature history, residual stresses, microstructure, powder dynamics, melt pool dynamics, mechanical properties) using analytical and numerical methods [13]. For microstructure prediction, cellular automata [14, 15], kinetic Monte Carlo [16, 17], and phase field [18, 19] methods have been used. Microstructure-sensitive models in the form of internal state variable theory [20], the crystal plasticity finite element method [21,22,23], or reduced order models [20, 24] can then be used to estimate mechanical properties [25]. Furthermore, these types of models can be extended to better understand the process–structure–property relationship through parameter sensitivity studies [26] and robust design frameworks [27]. However, implementing these microstructure and mechanical models within an integrated computational materials engineering (ICME) framework depends heavily on an accurate thermal history of the AM process, particularly the cooling rates and thermal cycling at features of interest [28].

A limiting factor of finite element-based MBAM thermal models is that they cannot accurately capture fluid flow in the melt pool. Often, fluid–solid interaction in the melt pool is approximated by scaling the thermal conductivity of the metal above the liquidus temperature to represent the increased heat transport from thermocapillary (Marangoni) convection [29]. Additional model tuning, for example of thermocapillary flow and process efficiency, is needed to predict the melt pool shape and morphology during a DED process [30]. Because of these limitations, other methods can be used to better understand melt pool behavior, which is important for microstructure and defect predictions. The discrete element method [31, 32] has been used to gain a better understanding of powder particle motion and interaction during the build process. Similarly, a powder-scale, multi-physics model was introduced in ALE3D [33] to study phenomena associated with melt pool dynamics such as thermocapillary flow, recoil pressure, pore defect generation, powder spatter, and denudation zones [34]. Yan et al. used computational fluid dynamics to model the melt pool and predict balling defects in powder bed fusion (PBF), with the goal of optimizing print strategies to avoid them [35].

One of the key components of the thermal modeling process is leveraging thermal data from AM builds. In situ thermal data can be used to determine physically motivated model parameters; however, thermal model calibration is difficult because traditional calibration data, such as thermocouple measurements and infrared camera images, provide little insight into the melt pool during the build. Heigel et al. used three thermocouples and a laser displacement sensor to calibrate their thermomechanical model [36]. The authors concluded that a spatially dependent forced convection model resulted in better predictions of the measured temperature and residual stress. Johnson et al. used a side-mounted infrared (IR) thermal camera along with post-build microstructural characterization to inform their model [20]. They concluded that for the first few layers of the build, the substrate acts as a large heat sink, the melt pool did not deepen significantly until the build was sufficiently far from the substrate, and the overall part temperature increases as layers are added. Using thermocouples or IR cameras can limit accuracy because thermocouples provide temperature histories at single points away from the melt pool, and side-mounted IR cameras capture thermal radiation from the side of the part, which does not necessarily include the entire melt pool. Additionally, the accuracy of IR thermal images can be limited by the vapor plume around the melt pool and by temperature-dependent emissivity [20]. The vapor plume also makes the IR camera difficult to calibrate because of radiation noise around the melt pool [37]. Dual-wavelength pyrometers that capture melt pool images have also been used for finite element thermal model calibration [38]. Capturing accurate melt pool behavior is significant because it drives the thermal history of the part [39].

In principle, the aforementioned limitations of thermocouple and single-wave IR camera data can be eliminated by using an alternative thermal data source such as a dual-wave pyrometer. Wang et al. compared their numerical model with thermal images taken with a dual-wave pyrometer and concluded that pyrometer data can be used to study the effects of varying process parameters on the thermal history of a build [40]. Unlike thermocouples and single-wave IR images, a dual-wave pyrometer mounted coaxially with the laser heat source and powder deposition can offer greater insight into the heterogeneous thermal history of the part by imaging the melt pool as the build progresses. Additionally, the pyrometer is not limited to measurements at a stationary point away from the melt pool, as a thermocouple is, and provides a full view of the melt pool [41]. The limited accuracy of single-wave IR images due to varying emissivity around the melt pool is eliminated because the pyrometer determines temperature using two wavelengths. The Stratonics dual-wave pyrometer used in this study can be reliably used over a temperature range of 1000 to 2500 °C; it can therefore capture temperature data above and below the melting temperature of most metals, which is needed for model calibration and validation [42].
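Why two wavelengths help can be illustrated with textbook two-color (ratio) pyrometry. The sketch below assumes Wien's approximation to Planck's law and a grey body (equal emissivity at both wavelengths), so the unknown emissivity cancels in the signal ratio. The wavelengths are hypothetical and are not the Stratonics instrument's actual bands, and none of the vendor's calibration or filtering is represented.

```python
import math

C1L = 1.191042972e-16  # first radiation constant for spectral radiance, 2*h*c^2 (W m^2 / sr)
C2 = 1.438776877e-2    # second radiation constant, h*c/k_B (m K)


def wien_radiance(wavelength_m, temp_k, emissivity=1.0):
    """Spectral radiance from Wien's approximation to Planck's law."""
    return emissivity * C1L * wavelength_m**-5 * math.exp(-C2 / (wavelength_m * temp_k))


def ratio_temperature(signal_1, signal_2, lambda_1, lambda_2):
    """Temperature from the ratio of two spectral signals, assuming equal emissivity.

    From ln(S1/S2) = 5 ln(l2/l1) - (C2/T)(1/l1 - 1/l2), solved for T.
    """
    log_ratio = math.log(signal_1 / signal_2)
    return C2 * (1.0 / lambda_1 - 1.0 / lambda_2) / (5.0 * math.log(lambda_2 / lambda_1) - log_ratio)


# Hypothetical wavelengths; the emissivity cancels, so both cases recover 2000 K.
LAMBDA_1, LAMBDA_2 = 0.75e-6, 0.90e-6
for eps in (0.26, 0.5):
    s1 = wien_radiance(LAMBDA_1, 2000.0, eps)
    s2 = wien_radiance(LAMBDA_2, 2000.0, eps)
    print(eps, round(ratio_temperature(s1, s2, LAMBDA_1, LAMBDA_2), 6))
```

A single-wavelength reading, by contrast, needs the emissivity explicitly, which is the source of the IR camera error discussed above.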

The role of melt pool behavior in driving the thermal history of the part, and its implications for the MBAM process–structure–property–performance relationship, is evident in data-driven methods for anomaly detection [43,44,45]. Using melt pool images taken from a build, Khanzadeh et al. successfully predicted the locations and sizes of pores within a Ti–6Al–4V DED part [44]. The implication is that this type of model can be trained on data generated by finite element-based models and then used to quickly optimize process parameters for defect mitigation in different geometries.

The focus of this work is the use of geometry-dependent heat source variables to predict the temperature history of a DED-built Ti–6Al–4V component, accounting for its geometry and processing parameters, using a dual-wave pyrometer dataset for finite element thermal model calibration in the Abaqus/Standard [46] framework. The novelty of this work is the manner in which the experimental calibration method informs the heat source calibration through geometry-dependent heat source parameters, enabling the generation of large thermal history datasets to inform multiscale modeling efforts [9]. The model is informed by thermal images of the melt pool, which reveal a connection between the melt pool temperature profile, the experimental processing parameters (e.g., deposition height and width), and the calibrated heat source parameters.

Methodology

Thermal Analysis

The thermal history of the LENS™ process is represented with a three-dimensional, transient thermal analysis with energy balance shown in Eq. 1, where ρ is density, Cp is specific heat capacity, t is time, T is temperature, Q is the applied heat source, and q is the heat flux vector. The heat flux vector is further defined as Fourier’s conduction law in Eq. 2 with \(k\) as thermal conductivity and \(\nabla T\) as the temperature gradient.
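Written in a standard form consistent with the definitions above (a reconstruction; the sign convention, with \(\mathbf{q}\) pointing in the direction of heat flow, is assumed), Eqs. 1 and 2 read:

\[
\rho C_p \frac{\partial T}{\partial t} = -\nabla \cdot \mathbf{q} + Q \qquad (1)
\]

\[
\mathbf{q} = -k \nabla T \qquad (2)
\]

Substituting Eq. 2 into Eq. 1 gives the transient conduction equation with a volumetric source, \(\rho C_p \, \partial T / \partial t = \nabla \cdot (k \nabla T) + Q\).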

The volumetric heat source, Q, is modeled as Goldak’s Ellipsoid [47], shown in Eq. 3, where P is the laser power, η is the process efficiency, σ is a fitting coefficient describing the volumetric flux profile, r is the laser beam radius, and d is the laser beam penetration depth. Goldak’s ellipsoidal heat source model was chosen in lieu of the double ellipsoidal heat source model because the Nd:YAG laser in the LENS™ machine has a circular Gaussian profile, so applying a heat flux with differing profiles for the leading and trailing flux distribution would not reflect the applied laser flux. Goldak’s ellipsoidal heat source model has a Gaussian distribution and decreases exponentially to 5% of its maximum heat flux with depth and outward radius when the fitting term is set to 3. If the fitting term were set to a value of 2, the heat flux would be 13.5% at the boundary. The fitting term can be modified to change the percentage of maximum flux observed at the heat source boundary, which can be used to represent different types of heat sources.
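The sketch below assumes one common semi-ellipsoidal form of Goldak's source that reproduces the boundary behavior described above (\(e^{-3} \approx 5\%\) of the peak at the edge for σ = 3, \(e^{-2} \approx 13.5\%\) for σ = 2). The prefactor, the coordinate convention, and the input values are assumptions for illustration, not the paper's Eq. 3 or its Table 3 values. The prefactor is chosen so that the flux integrates to ηP over the half-space below the surface, which the last function checks numerically.

```python
import math


def goldak_ellipsoid_flux(x, y, z, power, efficiency, radius, depth, sigma=3.0):
    """Volumetric heat flux (W/m^3) of an assumed semi-ellipsoidal Gaussian source.

    Coordinates are measured from the beam center on the top surface, with z <= 0
    pointing into the part. Assumed form:
        Q = 2 sigma^(3/2) eta P / (pi^(3/2) r^2 d) * exp(-sigma (x^2 + y^2) / r^2 - sigma z^2 / d^2)
    For sigma = 3 the prefactor reduces to 6 sqrt(3) eta P / (pi sqrt(pi) r^2 d).
    """
    if z > 0.0:
        return 0.0  # no volumetric flux above the deposition surface
    peak = 2.0 * sigma**1.5 * efficiency * power / (math.pi**1.5 * radius**2 * depth)
    return peak * math.exp(-sigma * (x**2 + y**2) / radius**2 - sigma * z**2 / depth**2)


def boundary_fraction(sigma):
    """Fraction of the peak flux left at the edge of the ellipsoid (x = r or |z| = d)."""
    return math.exp(-sigma)


def absorbed_power(power, efficiency, radius, depth, sigma=3.0, n=400):
    """Midpoint-rule check that the flux integrates to eta * P.

    The exponent is separable, so the 3D integral equals the peak value times
    two lateral 1D Gaussian integrals and one half-space vertical integral.
    """
    peak = goldak_ellipsoid_flux(0.0, 0.0, 0.0, power, efficiency, radius, depth, sigma)

    def gaussian_integral(scale, lower, upper):
        h = (upper - lower) / n
        return h * sum(
            math.exp(-sigma * ((lower + (i + 0.5) * h) / scale) ** 2) for i in range(n)
        )

    lateral = gaussian_integral(radius, -4.0 * radius, 4.0 * radius)
    vertical = gaussian_integral(depth, -4.0 * depth, 0.0)
    return peak * lateral**2 * vertical


# Hypothetical inputs in SI units (not the Table 3 values).
P, ETA, R, D = 300.0, 0.373, 0.5e-3, 0.3e-3
print(boundary_fraction(3.0), boundary_fraction(2.0))
print(absorbed_power(P, ETA, R, D), ETA * P)
```

Normalizing over the half-space rather than the full ellipsoid reflects the assumption that the laser energy is deposited into the material below the surface only.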

The process efficiency depends on the laser type and wavelength, surface preparation, temperature, and material [48] and can be approximated using Bramson's formula [49], shown in Eq. 4, where R is the material's electrical resistivity and λ is the laser wavelength. For the LENS™ machine used in this study, the laser wavelength is 1070 nm, and the electrical resistivity of Ti–6Al–4V is approximately 178 μΩ·cm [50]. Although Bramson's formula is temperature-dependent, the room-temperature resistivity was used because the powder is at the ambient temperature of the LENS™ chamber when it interacts with the laser and melts almost instantly.
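Bramson's formula is commonly written as \(\eta = 0.365\sqrt{R/\lambda} - 0.0667\,(R/\lambda) + 0.006\,(R/\lambda)^{3/2}\), with R in Ω·cm and λ in cm; this form is assumed here rather than copied from Eq. 4. Evaluating it with the resistivity and wavelength quoted above:

```python
import math


def bramson_absorptivity(resistivity_ohm_cm, wavelength_cm):
    """Bramson's approximation for the absorptivity of a metal at normal incidence.

    Only the ratio R / lambda enters, so any consistent length unit works.
    """
    x = resistivity_ohm_cm / wavelength_cm
    return 0.365 * math.sqrt(x) - 0.0667 * x + 0.006 * x**1.5


# Values quoted in the text: 178 micro-ohm cm and a 1070 nm (1.07e-4 cm) wavelength.
eta = bramson_absorptivity(178e-6, 1070e-7)
print(f"{eta:.3f}")  # 0.373
```

The result, about 0.373, matches the 37.3% coupling efficiency used for the heat source in the validation study.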

The thermal analysis is sensitive to process efficiency, emissivity, and convection coefficients [51,52,53,54,55]. Although conduction accounts for the majority of heat dissipation during the process, it is important to consider heat dissipation due to convection and radiation, which together can exceed 10% of the total heat loss [56]. Newton's law of cooling (Eq. 5) accounts for surface heat loss via convection, where \(T_{{{\text{surface}}}}\) is the surface temperature and \(T_{\infty }\) is the ambient temperature. Convection coefficients, h, were initially applied as spatially dependent functions determined by Heigel et al. [36]. However, because radiative cooling dominates the heat transfer process near the melting temperature, the spatially dependent functions had no noticeable effect on the melt pool; thus, constant convection coefficients were applied, as presented in Table 1.

Surface heat loss via radiation is accounted for by applying the Stefan–Boltzmann law (Eq. 6), where \(\sigma_{{{\text{sb}}}}\) is the Stefan–Boltzmann constant and ε is the material emissivity. For Ti–6Al–4V, the emissivity is set to a constant value of 0.26 [54].
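A minimal sketch comparing the two surface loss terms, written in the usual forms \(q_{\text{conv}} = h\,(T_{\text{surface}} - T_{\infty})\) and \(q_{\text{rad}} = \sigma_{\text{sb}}\,\varepsilon\,(T_{\text{surface}}^{4} - T_{\infty}^{4})\). The convection coefficient is a hypothetical 10 W/(m²·K), not a Table 1 value; the emissivity (0.26) and the 37 °C ambient temperature are those given in the text. For this assumed h, it shows why radiation, which scales with the fourth power of absolute temperature, tends to dominate near the melting temperature.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W/(m^2 K^4)


def celsius_to_kelvin(temp_c):
    return temp_c + 273.15


def convective_flux(h, t_surface_k, t_ambient_k):
    """Newton's law of cooling: heat loss per unit area (W/m^2)."""
    return h * (t_surface_k - t_ambient_k)


def radiative_flux(emissivity, t_surface_k, t_ambient_k):
    """Stefan-Boltzmann surface loss per unit area (W/m^2); temperatures must be absolute."""
    return STEFAN_BOLTZMANN * emissivity * (t_surface_k**4 - t_ambient_k**4)


EMISSIVITY = 0.26       # value used in the text for Ti-6Al-4V
H_HYPOTHETICAL = 10.0   # W/(m^2 K): illustrative only, not a Table 1 value
t_ambient = celsius_to_kelvin(37.0)
for temp_c in (500.0, 1000.0, 1660.0):
    t_s = celsius_to_kelvin(temp_c)
    q_conv = convective_flux(H_HYPOTHETICAL, t_s, t_ambient)
    q_rad = radiative_flux(EMISSIVITY, t_s, t_ambient)
    print(f"{temp_c:6.0f} C  convection {q_conv:9.0f} W/m^2  radiation {q_rad:9.0f} W/m^2")
```

Because the radiative term grows much faster with temperature than the convective term, a change in the convection coefficient has little effect on the melt pool itself.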

The temperature-dependent material properties (specific heat and thermal conductivity) used in the simulation for solid and liquid Ti–6Al–4V are taken from Mills [57] and are presented in Fig. 1. The discontinuities in each plot occur at the beta transus temperature (995 °C) and the liquidus temperature (1660 °C). Additionally, a latent heat of fusion value of 365 kJ/kg was applied between the solidus and liquidus temperatures to account for the specific heat capacity increase observed at this temperature range [58].
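One common way to apply a latent heat between the solidus and liquidus is the apparent heat capacity method, which spreads the latent heat uniformly over the mushy zone as an addition to the specific heat. Abaqus accepts latent heat directly as a material property, so this sketch illustrates the idea rather than Abaqus internals. The latent heat and the solidus and liquidus temperatures are the values given in the text; the base specific heat is a hypothetical constant, not the Mills data.

```python
LATENT_HEAT_J_PER_KG = 365e3  # latent heat of fusion used in the text
T_SOLIDUS_C = 1600.0          # solidus temperature quoted in the text
T_LIQUIDUS_C = 1660.0         # liquidus temperature quoted in the text


def apparent_heat_capacity(temp_c, base_cp):
    """Specific heat (J/(kg K)) with latent heat spread uniformly over the mushy zone."""
    cp = base_cp(temp_c)
    if T_SOLIDUS_C <= temp_c <= T_LIQUIDUS_C:
        cp += LATENT_HEAT_J_PER_KG / (T_LIQUIDUS_C - T_SOLIDUS_C)
    return cp


def placeholder_cp(temp_c):
    """Hypothetical constant specific heat; NOT the Mills data shown in Fig. 1."""
    return 700.0


# Integrating the extra heat capacity across the mushy zone recovers the latent heat.
steps = 600
dT = (T_LIQUIDUS_C - T_SOLIDUS_C) / steps
extra = sum(
    (apparent_heat_capacity(T_SOLIDUS_C + (i + 0.5) * dT, placeholder_cp) - placeholder_cp(0.0)) * dT
    for i in range(steps)
)
print(round(extra))  # 365000
```

The narrower the solidus-to-liquidus interval, the larger the spike in apparent heat capacity, which is why a fine time increment helps when the temperature sweeps through this range.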

Abaqus Implementation

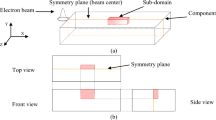

Abaqus/Standard 2017 [46] was used to simulate the LENS™ process for a 10-layer deposition with a fixed time increment of 0.5 ms. The model consisted of 80,792 elements in total, split into two parts: the substrate and the thin wall. The substrate comprises 62,552 elements, divided into three sets, with the highest mesh density in and directly under the thin wall. The three types of heat transfer elements used in the substrate are: (1) 49,280 linear hexahedral (DC3D8), (2) 12,768 quadratic hexahedral (DC3D20), and (3) 504 linear wedge (DC3D6). The thin wall contains 18,240 quadratic hexahedral heat transfer elements (DC3D20), with 3648 elements in each layer. The thin wall elements are cubes with 0.2225 mm sides. The model dimensions and mesh are shown in Fig. 2. A mesh sensitivity study was performed to confirm that the results did not change appreciably with mesh density.

To simulate the LENS™ process, two Abaqus user subroutines are used: UMDFLUX and UEPACTIVATION (UEPA). The UMDFLUX subroutine models the moving laser heat source, with the laser coordinates passed from the input file to the subroutine. The UEPA subroutine simulates material deposition by activating elements, using the active/inactive element method. At the start of the simulation, only the substrate elements are active. Once the laser begins to move, elements are activated at an ambient temperature of 37 °C. UEPA accounts for the constantly evolving free surface by applying the convection and radiation boundary conditions to newly activated elements at each time step, using the FFS and RFS keywords, respectively, in the Abaqus input file.

An element is activated at the earliest time step that satisfies two requirements. The first requirement concerns the element's centroid coordinate in the build (z) direction: the centroid must lie below the z-coordinate of the top of the current layer for the element to be considered for the second criterion. To meet the second requirement, the distance between the center of the laser and the element centroid must be less than or equal to the user-defined element activation radius built into the Abaqus Toolpath-Mesh Intersection module. A visualization of the Abaqus subroutine connectivity is shown in Fig. 3. Further details of the element activation scheme can be found in previous work [38].

Abaqus subroutine connectivity, adapted from [46]
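The two activation requirements can be sketched as a simple per-element check. This is a hypothetical illustration, not the Abaqus Toolpath-Mesh Intersection implementation: the distance is assumed to be the 3D Euclidean distance between the laser center and the element centroid, the layer top is held fixed, and all names and values (in mm) are illustrative.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def meets_activation_criteria(
    centroid: Point, laser_center: Point, layer_top_z: float, activation_radius: float
) -> bool:
    """Hypothetical check of the two activation requirements for one element."""
    # Requirement 1: the centroid must lie below the top of the current layer.
    if centroid[2] >= layer_top_z:
        return False
    # Requirement 2: the centroid must be within the activation radius of the
    # laser center (3D Euclidean distance assumed).
    return math.dist(centroid, laser_center) <= activation_radius


def first_activation_step(
    centroid: Point,
    laser_path: Sequence[Point],
    layer_top_z: float,
    activation_radius: float,
) -> Optional[int]:
    """Return the earliest time step index at which the element is activated, or None."""
    for step, laser_center in enumerate(laser_path):
        if meets_activation_criteria(centroid, laser_center, layer_top_z, activation_radius):
            return step
    return None


# Illustrative values in mm: laser moving along x on top of a layer whose top is at z = 0.2.
path = [(0.25 * k, 0.0, 0.2) for k in range(9)]
print(first_activation_step((1.0, 0.0, 0.1), path, layer_top_z=0.2, activation_radius=0.3))  # 3
```

An element whose centroid sits above the current layer top is never activated during that layer, however close the laser passes.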

Experimental Calibration Setup

For model calibration, pyrometer data were used from a thin wall built in the Optomec LENS™ 750 with a 1 kW Nd:YAG laser at the Center for Advanced Vehicular Systems (CAVS) at Mississippi State University (MSU), using the process parameters listed in Table 2. Additional details about the experimental setup, along with the data, can be found in the Data in Brief article [59]. During the build, a Stratonics dual-wave pyrometer captured in situ thermal images of the melt pool at a frequency of 4 to 7 Hz via Stratonics Thermaviz software, resulting in approximately 25 images per layer with an estimated error of ± 50 °C. Each pyrometer image consisted of a 752 × 480 matrix of temperature values, approximately 10% of which lay in the area of interest in and around the melt pool.

Thermal Model Calibration

The thermal model was calibrated to pyrometer images taken from the first layer of the thin wall build. Approximately 400 thermal simulations were performed, iterating on four Goldak heat source parameters: process efficiency, radius, depth, and the shape fitting term. A Taguchi L27 orthogonal array was used for the initial model calibration with these parameters. After each round of simulations, the temperature profile down the center of the melt pool was plotted as in Fig. 6, and the parameter sets that best fit within the upper and lower bounds of the experimental data were retained for further consideration.
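A 27-run, three-level orthogonal design for the four calibrated parameters could be generated as sketched below. Each column is a mod-3 linear combination of three base factors, which makes every pair of columns balanced; this is a standard construction but not Taguchi's tabulated L27 column order, and the parameter levels are hypothetical rather than those used in the study.

```python
from collections import Counter
from itertools import combinations, product

# Coefficient vectors over GF(3) for the four factor columns. No two are
# multiples of each other, so every pair of columns is balanced.
GENERATORS = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]


def orthogonal_array_27():
    """27 runs x 4 columns of levels 0, 1, 2 built from three base factors."""
    return [
        tuple(sum(g * b for g, b in zip(gen, base)) % 3 for gen in GENERATORS)
        for base in product(range(3), repeat=3)
    ]


def is_pairwise_balanced(runs):
    """True if each pair of columns contains all 9 level combinations exactly 3 times."""
    n_cols = len(runs[0])
    for i, j in combinations(range(n_cols), 2):
        counts = Counter((row[i], row[j]) for row in runs)
        if len(counts) != 9 or set(counts.values()) != {3}:
            return False
    return True


# Hypothetical levels for the four calibrated Goldak parameters.
LEVELS = {
    "efficiency": (0.30, 0.37, 0.45),
    "radius_mm": (0.40, 0.50, 0.60),
    "depth_mm": (0.30, 0.40, 0.50),
    "sigma": (2.0, 3.0, 4.0),
}

runs = orthogonal_array_27()
design = [{name: LEVELS[name][level] for name, level in zip(LEVELS, run)} for run in runs]
print(len(design), is_pairwise_balanced(runs))  # 27 True
```

Each dictionary in `design` is one simulation input set; the balance property means every level of every parameter is paired equally often with every level of every other parameter.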

The initial rounds of simulations were performed with Goldak's double ellipsoidal heat source model, but it was quickly found that switching to Goldak's ellipsoidal heat source model yielded more accurate results, and more physically based parameters, because of the symmetric TEM00 profile of the laser. Analysis revealed that a wide variety of heat source parameters could reproduce the maximum temperature in the experimental data, so the calibration methodology shifted to examining the temperature profile through the center of the melt pool in the scanning direction. Furthermore, the convection coefficient functions for the top and sides of the thin wall were simplified to the values listed in Table 1 because they produced negligible changes in melt pool temperature: near the melting temperature, radiative cooling dominates convective cooling. The original model did not account for thermocapillary flow in the melt pool. Scaling the thermal conductivity above the liquidus temperature by a factor of 1.5, similar to Lampa et al. [60], lowered the predicted temperatures above the liquidus and gave a better fit to the experimental data.
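The conductivity enhancement can be sketched as a simple step at the liquidus. The 1.5 factor and the 1660 °C liquidus are from the text; the base conductivity function is a hypothetical placeholder, not the Mills data, and the model's actual treatment near the liquidus may differ (some models ramp the factor over a temperature interval instead).

```python
T_LIQUIDUS_C = 1660.0      # liquidus temperature quoted in the text
MELT_POOL_K_FACTOR = 1.5   # scaling factor reported in the text


def effective_conductivity(temp_c, base_k):
    """Thermal conductivity (W/(m K)) with an enhancement above the liquidus.

    The step change mimics the extra heat transport of thermocapillary
    (Marangoni) flow that a pure conduction model cannot resolve.
    """
    k = base_k(temp_c)
    return MELT_POOL_K_FACTOR * k if temp_c > T_LIQUIDUS_C else k


def placeholder_k(temp_c):
    """Hypothetical linear conductivity; NOT the Mills data used in the model."""
    return 7.0 + 0.012 * temp_c


for t in (1600.0, 1660.0, 1700.0):
    print(t, effective_conductivity(t, placeholder_k))
```

Raising k in the liquid spreads heat away from the hottest region faster, which lowers the predicted peak temperature, consistent with the improved fit described above.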

Results and Discussion

First Build Layer

Figure 4 compares calibrated simulation results with an experimental pyrometer image; each image is taken from the midpoint of layer one. The raw Abaqus nodal temperatures are interpolated using a cubic scheme for comparison with the pyrometer data. In the pyrometer image, the temperature outside the melt pool appears constant at around 1400 °C; however, this is because 1400 °C is the lower bound of this set of pyrometer measurements, owing to significant noise in the data below 1400 °C. The calibrated heat source parameters, shown in Table 3, were selected because they fit the pyrometer data well and are physically motivated. Bramson's equation was used to calculate the process efficiency from the material's electrical resistivity and the laser's wavelength. Because the LENS™ laser operates in TEM00 mode, the fitting term was set to 3 so that the heat flux drops to 5% of its maximum at the outer radius and depth. The laser penetration depth was set to a value just larger than the layer height, which agrees with previous findings in the literature [36, 61]. Finally, the flux radius was set to half of the deposition width so that the heat source reflects the process parameters.

Figure 5 plots the maximum temperature over the entire first layer against the pyrometer data, with error bars showing the ± 50 °C measurement uncertainty, and demonstrates that the simulated temperatures agree with the experiment. The figure also demonstrates the value of the pyrometer dataset: the maximum temperature varies among pyrometer images over the first layer by as much as 70 °C, indicating that metrics other than maximum temperature should be used. This variation can be attributed partly to measurement uncertainty and partly to the inherent heterogeneity of the DED process; small fluctuations in laser power, powder size distribution, and powder flow rate, among other phenomena, can affect a component's temperature history. Using a pyrometer dataset for model calibration gives access to thermal data in the melt pool during the build that are otherwise unavailable when restricted to thermocouples or IR cameras.

Figure 6 compares the simulated temperature profile down the center of the melt pool with pyrometer data for the first layer. The profile shows a good fit and lies within the bounds of the experimental data. The simulation data in this plot are taken at the midpoint of the top surface of layer one, while the experimental data are an average of five pyrometer images taken around the middle of the layer to represent a group of steady-state temperatures. The error bars represent the ± 50 °C measurement uncertainty in addition to the peak-to-valley spread of the values used to calculate the mean. The only portion of the simulated melt pool profile that lies outside the experimental bounds is the trailing edge, likely because the convection boundary conditions near the laser deposition do not capture the impinging gas flow. This phenomenon is also observed in Fig. 7 for layers 2–5.
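One way to build the experimental envelope and score a simulated profile against it is sketched below. It assumes the five centerline profiles have already been resampled onto common positions and reads the error bars as the minimum and maximum across images widened by the ± 50 °C uncertainty; both are assumptions about the processing, and the temperatures in the example are invented.

```python
from statistics import fmean

MEASUREMENT_UNCERTAINTY_C = 50.0  # pyrometer uncertainty quoted in the text


def profile_envelope(profiles, uncertainty=MEASUREMENT_UNCERTAINTY_C):
    """Mean centerline profile and bounds from several aligned pyrometer profiles.

    Bounds are the min/max across images at each position, widened by the
    measurement uncertainty (an assumed reading of the error bars).
    """
    columns = list(zip(*profiles))
    mean = [fmean(col) for col in columns]
    lower = [min(col) - uncertainty for col in columns]
    upper = [max(col) + uncertainty for col in columns]
    return mean, lower, upper


def fraction_within(simulated, lower, upper):
    """Share of simulated points that fall inside the experimental envelope."""
    inside = sum(lo <= s <= hi for s, lo, hi in zip(simulated, lower, upper))
    return inside / len(simulated)


# Invented temperatures (deg C) at three positions along the scan direction.
images = [
    [1500, 1700, 1600],
    [1520, 1720, 1580],
    [1510, 1690, 1590],
    [1490, 1710, 1610],
    [1480, 1680, 1620],
]
mean, lower, upper = profile_envelope(images)
print(mean, fraction_within([1550, 1800, 1600], lower, upper))
```

Scoring the whole profile, rather than only its peak, reflects the calibration choice above: many parameter sets can match the maximum temperature, but far fewer match the full centerline shape.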

Additional Build Layers

To assess the applicability of the heat source parameters calibrated on layer one, the model is extended to a ten-layer thin wall and compared with pyrometer data. The maximum temperatures in each layer from the simulation and the pyrometer data are compared in Table 4, and the predicted values compare favorably with the measurements.

Figure 7 compares the temperature profile down the center of the melt pool from the simulation and the pyrometer data for layers two through five. Although the maximum temperature and melt pool diameter are overpredicted, the shape of the profile follows the trend of the pyrometer data. This deviation from the experimental data is likely due to an undersized substrate that cannot act as a sufficiently large heat sink. As a result, heat builds up with increasing layers, raising the maximum temperature and the overall melt pool temperature. Further discussion of model improvement can be found in “Thermal Model Validation”.

Thermal Model Validation

To validate the thermal model, two double-track, twenty-layer Ti–6Al–4V thin walls were fabricated on the Optomec LENS™ 750 at CAVS at MSU with scanning strategies and scanning speeds that differed from those of the calibration builds. The experimental process parameters are listed in Table 5, and the pyrometer data used in the validation study can be found in a publicly available data repository [62]. In contrast to the single-line deposition strategy used for the calibration specimen, the validation specimens were deposited with two lines per layer in a serpentine pattern. The Goldak heat source parameters used in the validation study are listed in Table 3. As with the calibrated heat source values, the penetration depth was set to approximately 1.06 times the layer height, and the laser spot size was set to the layer deposition width. The efficiency and fitting parameter remain unchanged.

To address a possible reason for the heat buildup (i.e., the increased temperatures) observed in the calibration simulations, a larger substrate was modeled to act as a heat sink. The mesh used in the validation build is shown in Fig. 8; the total number of elements is approximately 433,000. The element size in the thin wall is 0.083 mm × 0.083 mm × 0.083 mm, with the mesh coarsening out in the substrate away from the thin wall.

Figure 9 shows the temperature profile through the center of the melt pool observed with the pyrometer and predicted by the simulation for the validation build. Despite the faster scanning speed, the temperature gradient is much steeper and more nearly linear than in the melt pools observed in the calibration builds. This is likely due to the smaller deposition volume and a more concentrated heat source, resulting in a greater energy density. The greater energy density in the validation build leads to a higher peak temperature and a faster cooling rate than in the calibration build. Unlike the single-track simulations in the previous section, no temperature peak is observed in the trailing section of the melt pool, which is likely also due to the greater energy density in the double-track simulation [63]. The energy density, E [J/mm²], is defined as \(E = P/(2rv)\), where P is the laser power, r is the laser beam radius, and v is the scan speed. The double-track simulation has a global energy density nearly three times larger than that of the single-track deposition, resulting in a peak temperature approximately 500 °C above the liquidus temperature. The trailing peak in the single-track data could be attributed to insufficient energy to overcome the latent heat of fusion between the solidus (1600 °C) and liquidus (1660 °C) temperatures, which is where the slope of the temperature profile changes.

a Comparison of the experimental and FE simulation temperature profile through the center of the melt pool along the scan direction for layer one of the validation build. b Comparison of the experimental and FE simulation average center temperature profile taken at the midpoint of each layer for layers 1–20 for both the simulation and experimental data. The melt pool is traveling from right to left
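The energy density definition can be checked with a short calculation; since W/(mm·mm/s) = J/mm², the units follow directly. The power, radius, and speed values below are hypothetical, not the Table 2 or Table 5 parameters, so the resulting ratio is only illustrative.

```python
def energy_density(power_w: float, radius_mm: float, speed_mm_per_s: float) -> float:
    """Global energy density E = P / (2 r v) in J/mm^2."""
    return power_w / (2.0 * radius_mm * speed_mm_per_s)


# Hypothetical parameter sets, for illustration only.
e_single = energy_density(power_w=300.0, radius_mm=0.5, speed_mm_per_s=10.0)
e_double = energy_density(power_w=300.0, radius_mm=0.25, speed_mm_per_s=12.0)
print(e_single, e_double, e_double / e_single)
```

Halving the beam radius doubles E at fixed power and speed, which is why a smaller, more concentrated heat source can outweigh a faster scan speed.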

To further extend the analysis of the validation build, melt pool center profiles were taken at the midpoint of each layer of the build and averaged, as shown in Fig. 9. The results show that by setting the penetration depth to approximately 1.06 times the layer height, the applied laser radius to one half of the deposition width, and the coupling efficiency to 37.3%, a good prediction of the melt pool temperature profile can be achieved. Although not every simulated center temperature profile fell fully within the error bounds, the maximum temperatures and melt pool shape showed good agreement with the experimental results. This limitation could be due to the choice of the Goldak ellipsoidal heat source and to cooling boundary conditions that do not fully capture the effect of the powder-carrying shielding gas.
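The mapping from process parameters to heat source parameters summarized above can be written as a small helper. This is a sketch: the defaults (1.06 depth factor, 37.3% efficiency, σ = 3) are the values reported in the text for the validation study, while the deposition width and layer height in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GoldakParameters:
    efficiency: float  # process (coupling) efficiency, dimensionless
    radius_mm: float   # heat source radius r
    depth_mm: float    # penetration depth d
    sigma: float       # shape fitting term


def heat_source_from_process(
    deposition_width_mm: float,
    layer_height_mm: float,
    efficiency: float = 0.373,
    depth_factor: float = 1.06,
    sigma: float = 3.0,
) -> GoldakParameters:
    """Derive heat source parameters from deposition geometry, following the rules above."""
    return GoldakParameters(
        efficiency=efficiency,
        radius_mm=0.5 * deposition_width_mm,      # radius = half the deposition width
        depth_mm=depth_factor * layer_height_mm,  # depth slightly larger than the layer height
        sigma=sigma,                              # flux falls to exp(-3), about 5%, at the boundary
    )


# Hypothetical deposition geometry, not the Table 5 values.
params = heat_source_from_process(deposition_width_mm=1.0, layer_height_mm=0.5)
print(params)
```

Keeping the rule in one function makes it straightforward to generate heat source inputs for new geometries, which is the dataset-generation use case the study targets.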

While both the calibration and validation simulations show good agreement with the experimental data, there are potential avenues for model improvement, such as: (1) calibrating a machine-specific convection coefficient function through a hot-film anemometry experiment specific to the LENS™, as proposed by Gouge et al., to improve the temperature profile trailing the melt pool [64]; (2) implementing a temperature-dependent emissivity [65, 66]; and (3) studying the effect of different heat source models (e.g., cylindrical-involution-normal, 3D Gaussian) to better represent the specific laser in the LENS™ [67]. Calibrating a spatially dependent convection coefficient that accounts for the cooling effects of the shielding gas and powder-carrying gas, together with a temperature-dependent emissivity, would improve the cooling boundary conditions around the melt pool. Furthermore, implementing different heat sources while following the calibration methodology presented in this study could indicate which heat source best predicts the melt pool temperature profile.

Conclusions

The findings in this work suggest that physically based heat source parameters can provide a good temperature prediction compared with thermal images of the melt pool taken with a dual-wave pyrometer. The heat source parameters are based on the geometry of the deposition (e.g., layer width and height) and a coupling efficiency calculated from the material's electrical resistivity and the laser's wavelength. The calibration methodology is significant because coaxial dual-wave pyrometer images provide melt pool measurements that are unavailable with other in situ data collection techniques. Insight into the melt pool is crucial for model calibration because the thermal history of a part is driven by the melt pool. The methodology was first calibrated with single-track build data and then extended to a double-track thin wall geometry to validate the proposed approach. Furthermore, this physically based simulation framework can be used to generate large datasets of thermal histories and cooling rates that capture the full temperature history of a build. This capability stems from linking the melt pool to the processing parameters, rather than relying on thermocouple calibration away from the melt pool, which only captures the macro-response. Future paths for this research include extending the model to complex geometries, exploring different calibration methodologies, and sequentially coupling the thermal model with a mechanical model.

References

ASTM International (2010) Standard terminology for additive manufacturing technologies. ASTM International, West Conshohocken, pp 13–15. https://doi.org/10.1520/F2792-10.2

Bian L, Thompson SM, Shamsaei N (2015) Mechanical properties and microstructural features of direct laser-deposited Ti-6Al-4V. JOM 67:629–638. https://doi.org/10.1007/s11837-015-1308-9

Irwin J, Reutzel EW, Michaleris P, Keist J, Nassar AR (2016) Predicting microstructure from thermal history during additive manufacturing for Ti-6Al-4V. J Manuf Sci Eng 138:111007. https://doi.org/10.1115/1.4033525

Phan TQ, Strantza M, Hill MR, Gnaupel-Herold TH, Heigel J, D’Elia CR, DeWald AT, Clausen B, Pagan DC, Peter Ko JY, Brown DW, Levine LE (2019) Elastic residual strain and stress measurements and corresponding part deflections of 3D additive manufacturing builds of IN625 AM-bench artifacts using neutron diffraction, synchrotron X-ray diffraction, and contour method. Integr Mater Manuf Innov 8:318–334. https://doi.org/10.1007/s40192-019-00149-0

Rubenchik AM, King WE, Wu S (2018) Scaling laws for the additive manufacturing. J Mater Process Technol. https://doi.org/10.1016/j.jmatprotec.2018.02.034

Zheng B, Zhou Y, Smugeresky JE, Schoenung JM, Lavernia EJ (2008) Thermal behavior and microstructural evolution during laser deposition with laser-engineered net shaping: Part I. Numerical calculations. Metall Mater Trans A 39:2228–2236. https://doi.org/10.1007/s11661-008-9557-7

Foteinopoulos P, Papacharalampopoulos A, Stavropoulos P (2017) On thermal modeling of additive manufacturing processes. CIRP J Manuf Sci Technol. https://doi.org/10.1016/j.cirpj.2017.09.007

Irwin J, Michaleris P (2016) A line heat input model for additive manufacturing. J Manuf Sci Eng 138:111004. https://doi.org/10.1115/1.4033662

Mahmoudi M, Tapia G, Karayagiz K, Franco B, Ma J, Arróyave R, Karaman I, Elwany A (2018) Multivariate calibration and experimental validation of a 3D finite element thermal model for laser powder-bed fusion metal additive manufacturing. Integr Mater Manuf Innov 7:116–135

Raghavan N, Babu SS, Dehoff R, Pannala S, Simunovic S, Kirka M, Turner J, Carlson N (2017) Numerical modeling of heat-transfer and the influence of process parameters on tailoring the grain morphology of IN718 in electron beam additive manufacturing. Acta Mater 112:303–314. https://doi.org/10.1016/j.actamat.2017.08.067

Smith J, Xiong W, Yan W, Lin S, Cheng P, Kafka OL, Wagner GJ, Cao J, Liu WK (2016) Linking process, structure, property, and performance for metal-based additive manufacturing: computational approaches with experimental support. Comput Mech 57:583–610. https://doi.org/10.1007/s00466-015-1240-4

Megahed M, Mindt H-W, N’Dri N, Duan H, Desmaison O (2016) Metal additive-manufacturing process and residual stress modeling. Integr Mater Manuf Innov. https://doi.org/10.1186/s40192-016-0047-2

Cooke S, Ahmadi K, Willerth S, Herring R (2020) Metal additive manufacturing: technology, metallurgy and modelling. J Manuf Process 57:978–1003. https://doi.org/10.1016/j.jmapro.2020.07.025

Akram J, Chalavadi P, Pal D, Stucker B (2018) Understanding grain evolution in additive manufacturing through modeling. Addit Manuf 21:255–268. https://doi.org/10.1016/j.addma.2018.03.021

Zinovieva O, Zinoviev A, Ploshikhin V (2018) Three-dimensional modeling of the microstructure evolution during metal additive manufacturing. Comput Mater Sci 141:207–220. https://doi.org/10.1016/j.commatsci.2017.09.018

Rodgers TM, Bishop JE, Madison JD (2018) Direct numerical simulation of mechanical response in synthetic additively manufactured microstructures. Model Simul Mater Sci Eng 26:1–23. https://doi.org/10.1088/1361-651X/aac616

Rodgers TM, Madison JD, Tikare V (2017) Simulation of metal additive manufacturing microstructures using kinetic Monte Carlo. Comput Mater Sci 135:78–89. https://doi.org/10.1016/j.commatsci.2017.03.053

Lu LX, Sridhar N, Zhang YW (2018) Phase field simulation of powder bed-based additive manufacturing. Acta Mater 144:801–809. https://doi.org/10.1016/j.actamat.2017.11.033

Sahoo S, Chou K (2016) Phase-field simulation of microstructure evolution of Ti–6Al–4V in electron beam additive manufacturing process. Addit Manuf 9:14–24. https://doi.org/10.1016/j.addma.2015.12.005

Johnson KL, Rodgers TM, Underwood OD, Madison JD, Ford KR, Whetten SR, Dagel DJ, Bishop JE (2018) Simulation and experimental comparison of the thermo-mechanical history and 3D microstructure evolution of 304L stainless steel tubes manufactured using LENS. Comput Mech 61:559–574. https://doi.org/10.1007/s00466-017-1516-y

Patra A, Priddy MW, McDowell DL (2015) Modeling the effects of microstructure on the tensile properties and micro-fracture behavior of Mo–Si–B alloys at elevated temperatures. Intermetallics 64:6–17. https://doi.org/10.1016/j.intermet.2015.04.008

Lloyd JT, Matejunas AJ, Becker R, Walter TR, Priddy MW, Kimberley J (2019) Dynamic tensile failure of rolled magnesium: simulations and experiments quantifying the role of texture and second-phase particles. Int J Plast 114:174–195. https://doi.org/10.1016/j.ijplas.2018.11.002

Priddy MW, Paulson NH, Kalidindi SR, McDowell DL (2017) Strategies for rapid parametric assessment of microstructure-sensitive fatigue for HCP polycrystals. Int J Fatigue 104:231–242. https://doi.org/10.1016/j.ijfatigue.2017.07.015

Paulson NH, Priddy MW, McDowell DL, Kalidindi SR (2019) Reduced-order microstructure-sensitive protocols to rank-order the transition fatigue resistance of polycrystalline microstructures. Int J Fatigue 119:1–10. https://doi.org/10.1016/j.ijfatigue.2018.09.011

Popova E, Rodgers TM, Gong X, Cecen A, Madison JD, Kalidindi SR (2017) Process-structure linkages using a data science approach: application to simulated additive manufacturing data. Integr Mater Manuf Innov 6:54–68. https://doi.org/10.1007/s40192-017-0088-1

Brindley KA, Priddy MW, Neu RW (2019) Integrative materials design of three-phase Mo-Si-B alloys. Integr Mater Manuf Innov 8:1–16. https://doi.org/10.1007/s40192-019-0124-4

Kern PC, Priddy MW, Ellis BD, McDowell DL (2017) pyDEM: a generalized implementation of the inductive design exploration method. Mater Des 134:293–300. https://doi.org/10.1016/j.matdes.2017.08.042

Hashemi SM, Parvizi S, Baghbanijavid H, Alvin T, Tan L, Nematollahi M, Ramazani A, Fang NX (2021) Computational modelling of process–structure–property–performance relationships in metal additive manufacturing: a review. Int Mater Rev. https://doi.org/10.1080/09506608.2020.1868889

Lampa C, Kaplan AFH, Powell J, Magnusson C (1997) An analytical thermodynamic model of laser welding. J Phys D Appl Phys 30:1293–1299. https://doi.org/10.1088/0022-3727/30/9/004

Kazemi K, Goldak JA (2009) Numerical simulation of laser full penetration welding. Comput Mater Sci 44:841–849. https://doi.org/10.1016/j.commatsci.2008.01.002

Steuben JC, Iliopoulos AP, Michopoulos JG (2016) Discrete element modeling of particle-based additive manufacturing processes. Comput Methods Appl Mech Eng 305:537–561. https://doi.org/10.1016/j.cma.2016.02.023

Lee WH, Zhang Y, Zhang J (2017) Discrete element modeling of powder flow and laser heating in direct metal laser sintering process. Powder Technol 315:300–308. https://doi.org/10.1016/j.powtec.2017.04.002

McCallen CR (2012) ALE3D: Arbitrary Lagrange Eulerian three- and two-dimensional modeling and simulation capability. Lawrence Livermore National Laboratory, Livermore

Khairallah SA, Anderson AT, Rubenchik A, King WE (2016) Laser powder-bed fusion additive manufacturing: physics of complex melt flow and formation mechanisms of pores, spatter, and denudation zones. Acta Mater 108:36–45. https://doi.org/10.1016/j.actamat.2016.02.014

Yan W, Ge W, Qian Y, Lin S, Zhou B, Liu WK, Lin F, Wagner GJ (2017) Multi-physics modeling of single/multiple-track defect mechanisms in electron beam selective melting. Acta Mater 134:324–333. https://doi.org/10.1016/j.actamat.2017.05.061

Heigel JC, Michaleris P, Reutzel EW (2015) Thermo-mechanical model development and validation of directed energy deposition additive manufacturing of Ti–6Al–4V. Addit Manuf 5:9–19. https://doi.org/10.1016/j.addma.2014.10.003

Marshall G, Young WJ, Shamsaei N, Craig J, Wakeman T, Thompson SM (2015) Dual thermographic monitoring of Ti-6Al-4V cylinders during direct laser deposition. In: Proceedings of the solid freeform fabrication, pp 259–272

Dantin MJ, Furr WM, Priddy MW (2018) Towards an open-source, preprocessing framework for simulating material deposition for a directed energy deposition process. In: Proceedings of the solid freeform fabrication, pp 1903–1912

Huang Y, Khamesee MB, Toyserkani E (2019) A new physics-based model for laser directed energy deposition (powder-fed additive manufacturing): from single-track to multi-track and multi-layer. Opt Laser Technol 109:584–599. https://doi.org/10.1016/j.optlastec.2018.08.015

Wang L, Felicelli SD, Craig JE (2009) Experimental and numerical study of the LENS rapid fabrication process. J Manuf Sci Eng 131:041019. https://doi.org/10.1115/1.3173952

Marshall GJ, Young WJ, Thompson SM, Shamsaei N, Daniewicz SR, Shao S (2016) Understanding the microstructure formation of Ti-6Al-4V during direct laser deposition via in-situ thermal monitoring. JOM 68:778–790. https://doi.org/10.1007/s11837-015-1767-z

Shamsaei N, Yadollahi A, Bian L, Thompson SM (2015) An overview of direct laser deposition for additive manufacturing; Part II: mechanical behavior, process parameter optimization and control. Addit Manuf 8:12–35. https://doi.org/10.1016/j.addma.2015.07.002

Seifi SH, Tian W, Doude H, Tschopp MA, Bian L (2019) Layer-wise modeling and anomaly detection for laser-based additive manufacturing. J Manuf Sci Eng Trans ASME 141:1–12. https://doi.org/10.1115/1.4043898

Khanzadeh M, Chowdhury S, Tschopp MA, Doude HR, Marufuzzaman M, Bian L (2019) In-situ monitoring of melt pool images for porosity prediction in directed energy deposition processes. IISE Trans 51:437–455. https://doi.org/10.1080/24725854.2017.1417656

Yan W, Lin S, Kafka OL, Lian Y, Yu C, Liu Z, Yan J, Wolff S, Wu H, Ndip-Agbor E, Mozaffar M, Ehmann K, Cao J, Wagner GJ, Liu WK (2018) Data-driven multi-scale multi-physics models to derive process–structure–property relationships for additive manufacturing. Comput Mech 61:521–541. https://doi.org/10.1007/s00466-018-1539-z

Hibbett, Karlsson, Sorensen (1998) ABAQUS/standard: user’s manual, vol 1

Goldak J, Chakravarti A, Bibby M (1984) A new finite element model for welding heat sources. Metall Trans B 15:299–305. https://doi.org/10.1007/BF02667333

Pinkerton AJ, Li L (2004) An analytical model of energy distribution in laser direct metal deposition. Proc Inst Mech Eng Part B J Eng Manuf 218:363–374

Bramson M (1968) Infrared radiation: a handbook for applications. Plenum, New York

Boivineau M, Cagran C, Doytier D, Eyraud V, Nadal MH, Wilthan B, Pottlacher G (2006) Thermophysical properties of solid and liquid Ti-6Al-4V (TA6V) alloy. Int J Thermophys 27:507–529. https://doi.org/10.1007/s10765-005-0001-6

Denlinger ER, Heigel JC, Michaleris P (2015) Residual stress and distortion modeling of electron beam direct manufacturing Ti-6Al-4V. Proc Inst Mech Eng Part B J Eng Manuf 229:1803–1813. https://doi.org/10.1177/0954405414539494

Shen N, Chou K (2012) Thermal modeling of electron beam additive manufacturing process: powder sintering effects. In: ASME 2012 international manufacturing science and engineering conference, p 287. https://doi.org/10.1115/MSEC2012-7253

Chiumenti M, Lin X, Cervera M, Lei W, Zheng Y, Huang W (2017) Numerical simulation and experimental calibration of additive manufacturing by blown powder technology. Part I: Thermal analysis. Rapid Prototyp J 23:448–463. https://doi.org/10.1108/RPJ-10-2015-0136

Yang J, Sun S, Brandt M, Yan W (2010) Experimental investigation and 3D finite element prediction of the heat affected zone during laser assisted machining of Ti6Al4V alloy. J Mater Process Technol 210:2215–2222. https://doi.org/10.1016/j.jmatprotec.2010.08.007

Denlinger ER, Michaleris P (2016) Effect of stress relaxation on distortion in additive manufacturing process modeling. Addit Manuf. https://doi.org/10.1016/j.addma.2016.06.011

Wang L, Felicelli S (2006) Analysis of thermal phenomena in LENS™ deposition. Mater Sci Eng A 435–436:625–631. https://doi.org/10.1016/j.msea.2006.07.087

Mills KC (2002) Recommended values of thermophysical properties for selected commercial alloys. Woodhead Publishing Ltd., Cambridge

Jelinek B, Young WJ, Dantin M, Furr W, Doude H (2020) Two-dimensional thermal finite element model of directed energy deposition: matching melt pool temperature profile with pyrometer measurement. J Manuf Process 57:187–195. https://doi.org/10.1016/j.jmapro.2020.06.021

Marshall GJ, Thompson SM, Shamsaei N (2016) Data indicating temperature response of Ti–6Al–4V thin-walled structure during its additive manufacture via laser engineered net shaping. Data Brief 7:697–703

Lampa C, Kaplan AFH, Powell J, Magnusson C (1997) An analytical thermodynamic model of laser welding. J Phys D Appl Phys 30:1293–1299. https://doi.org/10.1088/0022-3727/30/9/004

Kummailil J (2004) Process models for laser engineered net shaping. http://www.wpi.edu/Pubs/ETD/Available/etd-0429104-103828/

Dantin MJ (2022) Thermal_Model_Validation_LENS_Bi_Directional_Ti64_Thin_Wall. https://doi.org/10.17632/zvztvdgxpj.1

Wolff SJ, Lin S, Faierson EJ, Liu WK, Wagner GJ, Cao J (2017) A framework to link localized cooling and properties of directed energy deposition (DED)-processed Ti-6Al-4V. Acta Mater 132:106–117. https://doi.org/10.1016/j.actamat.2017.04.027

Gouge MF, Heigel JC, Michaleris P, Palmer TA (2015) Modeling forced convection in the thermal simulation of laser cladding processes. Int J Adv Manuf Technol 79:307–320. https://doi.org/10.1007/s00170-015-6831-x

Hagqvist P, Sikström F, Christiansson AK (2013) Emissivity estimation for high temperature radiation pyrometry on Ti–6Al–4V. Meas J Int Meas Confed 46:871–880. https://doi.org/10.1016/j.measurement.2012.10.019

Li L, Yu K, Zhang K, Liu Y (2016) Study of Ti-6Al-4V alloy spectral emissivity characteristics during thermal oxidation process. Int J Heat Mass Transf 101:699–706. https://doi.org/10.1016/j.ijheatmasstransfer.2016.05.069

Laser Institute of America (2001) LIA handbook of laser materials processing, 1st edn. Springer, Berlin, Heidelberg

Acknowledgements

MWP would like to thank Vince Hammond of ARL for his feedback on this manuscript. This research was sponsored by the Army Research Laboratory (ARL) and was accomplished under Cooperative Agreement Number W911NF-12-R-0011-03. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Laboratory or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Dantin, M.J., Furr, W.M. & Priddy, M.W. Toward a Physical Basis for a Predictive Finite Element Thermal Model of the LENS™ Process Leveraging Dual-Wavelength Pyrometer Datasets. Integr Mater Manuf Innov 11, 407–417 (2022). https://doi.org/10.1007/s40192-022-00271-6

DOI: https://doi.org/10.1007/s40192-022-00271-6