Abstract

In this paper, we consider the following nonparametric regression model:

$$\begin{aligned} Y_{ni}=f(x_{ni})+\varepsilon _{ni},\quad i=1,2,\ldots ,n, \end{aligned}$$

where \(x_{ni}\) are known fixed design points from A, where \(A\subset {\mathbb {R}}^d\) is a given compact set for some \(d\ge 1\), \(f(\cdot )\) is an unknown regression function defined on A and \(\varepsilon _{ni}\) are random errors, which are assumed to be widely orthant dependent (WOD, for short). First, a general result on complete convergence for partial sums of WOD random variables is obtained, which is of independent interest. Under some mild conditions and based on this complete convergence result, we further establish the complete consistency of the weighted estimator in the nonparametric regression model, which improves the corresponding result of Wang et al. (TEST 23:607–629, 2014). As an application, the complete consistency of the nearest neighbor estimator is obtained. Finally, we provide a numerical simulation to verify the validity of our result.

1 Introduction

Random variables are usually assumed to be independent in many statistical applications. However, this is not always a realistic assumption. Therefore, many statisticians have extended this condition to various dependence structures and mixing structures. In this paper, we are interested in the widely orthant dependent (WOD, for short) structure, which includes independent random variables, negatively associated (NA, for short) random variables, negatively superadditive dependent (NSD, for short) random variables, negatively orthant dependent (NOD, for short) random variables, and extended negatively dependent (END, for short) random variables as special cases.

First, let us recall the concepts of complete convergence and stochastic domination. The concept of complete convergence was introduced by Hsu and Robbins [7] as follows: a sequence \(\{X_n,n\ge 1\}\) of random variables converges completely to a constant C if for all \(\varepsilon >0\),

$$\begin{aligned} \sum _{n=1}^{\infty }P\left( |X_n-C|>\varepsilon \right) <\infty . \end{aligned}$$

By the Borel-Cantelli lemma, complete convergence implies that \(X_{n}\rightarrow C\) a.s., and so complete convergence is stronger than a.s. convergence.
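The Borel-Cantelli step can be spelled out as follows. This is the standard argument, written with the event notation \(A_n(\varepsilon )\), which is introduced here only for illustration and does not appear elsewhere in the paper.

```latex
% A_n(\varepsilon) is illustrative notation, not taken from the paper.
\begin{aligned}
&\text{Let } A_n(\varepsilon )=\{|X_n-C|>\varepsilon \}.
 \text{ If } \sum _{n=1}^{\infty }P\big (A_n(\varepsilon )\big )<\infty
 \text{ for every } \varepsilon >0,\\
&\text{then } P\big (A_n(\varepsilon )\ \text{infinitely often}\big )=0
 \text{ by the Borel--Cantelli lemma, so }
 \limsup _{n\rightarrow \infty }|X_n-C|\le \varepsilon \ \text{a.s.}\\
&\text{Taking } \varepsilon =1/m,\ m=1,2,\ldots ,
 \text{ and intersecting these countably many a.s. events gives }
 X_n\rightarrow C\ \text{a.s.}
\end{aligned}
```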

The concept of stochastic domination below will be used frequently throughout the paper.

Definition 2.1

A sequence \(\{X_{n}, n\ge 1\}\) of random variables is said to be stochastically dominated by a nonnegative random variable X if there exists a positive constant C such that

$$\begin{aligned} P(|X_n|>x)\le CP(X>x) \end{aligned}$$

for all \(x\ge 0\) and \(n\ge 1\).

Now, let us recall the concept of WOD random variables, which was introduced by Wang et al. [27] as follows.

Definition 1.1

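A finite collection of random variables \(X_1,X_2,\ldots ,X_n\) is said to be widely upper orthant dependent (WUOD, for short) if there exists a finite real number \(g_{U}(n)\) such that for all finite real numbers \(x_{i}, 1\le i\le n\),

$$\begin{aligned} P(X_1>x_1,X_2>x_2,\ldots ,X_n>x_n)\le g_{U}(n)\prod _{i=1}^nP(X_i>x_i). \end{aligned}\qquad (1.1)$$

A finite collection of random variables \(X_1,X_2,\ldots ,X_n\) is said to be widely lower orthant dependent (WLOD, for short) if there exists a finite real number \(g_{L}(n)\) such that for all finite real numbers \(x_{i}, 1\le i\le n\),

$$\begin{aligned} P(X_1\le x_1,X_2\le x_2,\ldots ,X_n\le x_n)\le g_{L}(n)\prod _{i=1}^nP(X_i\le x_i). \end{aligned}\qquad (1.2)$$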

If \(X_1,X_2,\ldots ,X_n\) are both WUOD and WLOD, we then say that \(X_1,X_2,\ldots ,X_n\) are widely orthant dependent (WOD, for short) random variables, and \(g_{U}(n), g_{L}(n)\) are called dominating coefficients. A sequence \(\{X_n, n\ge 1\}\) of random variables is said to be WOD if every finite subcollection is WOD.

An array \(\{X_{ni}, 1\le i\le k_n, n\ge 1\}\) of random variables is said to be rowwise WOD if for every \(n\ge 1\), \(\{X_{ni}, 1\le i\le k_n\}\) are WOD random variables.

It follows from (1.1) and (1.2) that \(g_{U}(n)\ge 1\) and \(g_{L}(n)\ge 1\). If \(g_{U}(n)=g_{L}(n)=M\) for all \(n\ge 1\), where M is a positive constant, then \(\{X_n, n\ge 1\}\) is called END, a notion introduced by Liu [14]. If \(M=1\), then \(\{X_n, n\ge 1\}\) is called NOD, a notion introduced by Lehmann [12] and carefully studied by Joag-Dev and Proschan [11]. Note that NA implies NOD. Furthermore, Hu [10] pointed out that NSD random variables are NOD. Hence, the class of WOD random variables includes independent random variables, NA random variables, NSD random variables, NOD random variables, and END random variables as special cases. Studying the limit behavior of WOD random variables and its applications is therefore of great interest.
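To make the dominating coefficients concrete, the following minimal sketch computes the smallest admissible \(g_U\) and \(g_L\) for a pair of random variables with a finite joint distribution. The function name `dominating_coefficients` and the two toy distributions are hypothetical and chosen only for illustration; the sketch relies on the fact that the probabilities in the two orthant inequalities are step functions of the thresholds, so only thresholds at the support points (plus one point below the support) need to be tested.

```python
from itertools import product

def dominating_coefficients(pmf):
    """Smallest (g_U, g_L) for a pair with finite joint pmf {(a, b): prob}.

    Illustrative helper, not from the paper. Both coefficients start at 1
    because the orthant inequalities force g_U >= 1 and g_L >= 1.
    """
    xs = sorted({a for a, _ in pmf})
    ys = sorted({b for _, b in pmf})
    # Step functions: test thresholds at support points and one point below.
    tx = [xs[0] - 1.0] + xs
    ty = [ys[0] - 1.0] + ys

    def prob(event):
        return sum(p for (a, b), p in pmf.items() if event(a, b))

    g_upper = g_lower = 1.0
    for s, t in product(tx, ty):
        # Upper orthant: P(X1 > s, X2 > t) against the product of marginals.
        joint_u = prob(lambda a, b: a > s and b > t)
        marg_u = prob(lambda a, b: a > s) * prob(lambda a, b: b > t)
        if marg_u > 0:
            g_upper = max(g_upper, joint_u / marg_u)
        # Lower orthant: P(X1 <= s, X2 <= t) against the product of marginals.
        joint_l = prob(lambda a, b: a <= s and b <= t)
        marg_l = prob(lambda a, b: a <= s) * prob(lambda a, b: b <= t)
        if marg_l > 0:
            g_lower = max(g_lower, joint_l / marg_l)
    return g_upper, g_lower

# Positively dependent toy pair: X2 = X1 with X1 ~ Bernoulli(1/2).
comonotone = {(0, 0): 0.5, (1, 1): 0.5}
# Negatively dependent toy pair: X2 = 1 - X1.
countermonotone = {(0, 1): 0.5, (1, 0): 0.5}

g_pos = dominating_coefficients(comonotone)
g_neg = dominating_coefficients(countermonotone)
```

In this toy setting the negatively dependent pair needs no inflation (both coefficients equal 1, the NOD case), while the positively dependent pair is still covered by the definition at the price of coefficients larger than 1.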

Since Wang et al. [27] introduced the concept of WOD random variables, many authors have studied the corresponding probability limit theory and statistical large sample theory. Wang et al. [27] provided some examples which showed that the class of WOD random variables contains some common negatively dependent random variables, some positively dependent random variables and some others; in addition, they studied the uniform asymptotics for the finite-time ruin probability of a new dependent risk model with a constant interest rate. Wang and Cheng [31] presented some basic renewal theorems for a random walk with widely dependent increments and gave some applications. Wang et al. [32] studied the asymptotics of the finite-time ruin probability for a generalized renewal risk model with independent strong subexponential claim sizes and widely lower orthant dependent inter-occurrence times. Chen et al. [1] considered uniform asymptotics for the finite-time ruin probabilities of two kinds of nonstandard bidimensional renewal risk models with constant interest forces and diffusion generated by Brownian motions. Shen [19] established the Bernstein type inequality for WOD random variables and gave some applications. Wang et al. [29] studied the complete convergence for WOD random variables and gave its applications in nonparametric regression models. Yang et al. [34] established the Bahadur representation of sample quantiles for WOD random variables under some mild conditions. Shen [21] obtained the asymptotic approximation of inverse moments for a class of nonnegative random variables, including WOD random variables as special cases. Qiu and Chen [16] studied the complete and complete moment convergence for weighted sums of WOD random variables. Wang and Hu [28] investigated the consistency of the nearest neighbor estimator of the density function based on WOD samples. Chen et al. [2] established a more accurate inequality for identically distributed WOD random variables, and gave its application to limit theorems, including the strong law of large numbers, the complete convergence, the a.s. elementary renewal theorem and the weighted elementary renewal theorem. Shen et al. [23] obtained some exponential probability inequalities for WOD random variables and gave some applications, and so on. In this work, we will further study the convergence properties of WOD random variables, and then apply them to the nonparametric regression model.

Consider the following nonparametric regression model:

$$\begin{aligned} Y_{ni}=f(x_{ni})+\varepsilon _{ni},\quad i=1,2,\ldots ,n, \end{aligned}\qquad (1.3)$$

where \(x_{ni}\) are known fixed design points from A, where \(A\subset {\mathbb {R}}^d\) is a given compact set for some \(d\ge 1\), \(f(\cdot )\) is an unknown regression function defined on A and \(\varepsilon _{ni}\) are random errors. Assume that for each n, \(\{\varepsilon _{ni},1\le i\le n\}\) has the same distribution as that of \(\{\varepsilon _{i},1\le i\le n\}\). As an estimator of \(f (\cdot )\), the following weighted regression estimator will be considered:

$$\begin{aligned} f_n(x)=\sum _{i=1}^nW_{ni}(x)Y_{ni},\quad x\in A\subset {\mathbb {R}}^d, \end{aligned}\qquad (1.4)$$

where \(W_{ni}(x)= W_{ni}(x; x_{n1},x_{n2},\ldots ,x_{nn})\), \(i=1,2,\ldots ,n\) are the weight functions.

The above weighted estimator \(f_{n}(x)\) was first proposed by Stone [25], adapted by Georgiev [4] to the fixed design case, and subsequently studied by many authors. For instance, Georgiev and Greblicki [6], Georgiev [5] and Müller [15] among others studied the consistency and asymptotic normality of the weighted estimator \(f_n(x)\) when \(\varepsilon _{ni}\) are assumed to be independent. When \(\varepsilon _{ni}\) are dependent errors, many interesting results have also been obtained in recent years. Fan [3] extended the work of Georgiev [5] and Müller [15] in the estimation of the regression model to the case where \(\varepsilon _{ni}\) form an \(L_q\)-mixingale sequence for some \(1\le q\le 2\). Roussas [17] discussed strong consistency and quadratic mean consistency for \(f_n(x)\) under mixing conditions. Roussas et al. [18] established asymptotic normality of \(f_n(x)\) assuming that the errors are from a strictly stationary stochastic process satisfying the strong mixing condition. Tran et al. [26] again discussed asymptotic normality of \(f_n(x)\) assuming that the errors form a linear time series, more precisely, a weakly stationary linear process based on a martingale difference sequence. Hu et al. [8] studied the asymptotic normality for double array sums of linear time series. Hu et al. [9] gave the mean consistency, complete consistency and asymptotic normality of regression models with linear process errors. Liang and Jing [13] presented some asymptotic properties for estimates of nonparametric regression models based on negatively associated sequences. Shen [19] presented the Bernstein-type inequality for widely dependent sequences and gave its applications to nonparametric regression models. Wang et al. [29] studied the complete convergence for WOD random variables and gave its application to nonparametric regression models. Wang et al. [30] established some results on complete consistency for the weighted estimator of nonparametric regression models based on END random errors. Shen et al. [24] presented the Rosenthal-type inequality for NSD random variables and gave its application to nonparametric regression models. Yang et al. [35] provided the convergence rate for the complete consistency of the weighted estimator of nonparametric regression models based on END random errors, and so on.

Unless otherwise specified, we assume throughout the paper that \(f_n(x)\) is defined by (1.4). For any function f(x), we use c(f) to denote the set of all continuity points of f on A. The norm \(\Vert x\Vert \) is the Euclidean norm. For any fixed design point \(x \in A\), the following assumptions on the weight functions \(W_{ni}(x)\) will be used:

- (\(H_1\)): \(\sum _{i=1}^nW_{ni}(x)\rightarrow 1\) as \(n\rightarrow \infty \);

- (\(H_2\)): \(\sum _{i=1}^n\left| W_{ni}(x)\right| \le C<\infty \) for all n;

- (\(H_3\)): \(\sum _{i=1}^n\left| W_{ni}(x)\right| \cdot \left| f(x_{ni})-f(x)\right| I(\Vert x_{ni}-x\Vert >a)\rightarrow 0\) as \(n\rightarrow \infty \) for all \(a>0\).
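The three assumptions can be checked numerically for a concrete weight choice. The sketch below assumes uniform nearest neighbor weights on the one-dimensional design \(x_{ni}=i/n\), with \(k_n=\lfloor n^{1/p}\rfloor \) neighbors; the helper names `nn_weights` and `check_hypotheses`, the test function and the values of n, p and a are all illustrative assumptions rather than part of the paper.

```python
import math

def nn_weights(x, design, k):
    """Uniform k-nearest-neighbour weights W_ni(x) (an assumed, common choice)."""
    # Rank design points by distance to x; ties go to the smaller index.
    order = sorted(range(len(design)), key=lambda i: (abs(design[i] - x), i))
    nearest = set(order[:k])
    return [1.0 / k if i in nearest else 0.0 for i in range(len(design))]

def check_hypotheses(f, x, n, p, a):
    """Evaluate the left-hand sides of (H1)-(H3) for one n (illustrative)."""
    design = [i / n for i in range(1, n + 1)]   # x_ni = i/n on A = [0, 1]
    k = math.floor(n ** (1.0 / p))              # k_n = floor(n^{1/p})
    w = nn_weights(x, design, k)
    h1 = sum(w)                                 # (H1): should tend to 1
    h2 = sum(abs(v) for v in w)                 # (H2): should stay bounded
    h3 = sum(abs(v) * abs(f(xi) - f(x))         # (H3): should tend to 0
             for v, xi in zip(w, design) if abs(xi - x) > a)
    return h1, h2, h3, max(w), k

h1, h2, h3, w_max, k = check_hypotheses(lambda u: u * u, x=0.5, n=400, p=2.0, a=0.1)
```

For these weights the sums in (\(H_1\)) and (\(H_2\)) equal 1 up to rounding for every n, the largest weight is \(1/k_n\), and the sum in (\(H_3\)) vanishes as soon as all \(k_n\) neighbors lie within distance a of x.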

Recently, Wang et al. [29] established the following result on complete consistency for the weighted estimator \(f_n(x)\) based on the assumptions above.

Theorem A

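Let \(\{\varepsilon _{n}, n \ge 1\}\) be a sequence of WOD random variables with mean zero, which is stochastically dominated by a random variable X. Suppose that the conditions (\(H_1\))–(\(H_3\)) hold true, and

$$\begin{aligned} \max _{1\le i\le n}\left| W_{ni}(x)\right| =O\left( n^{-1/p}\right) \end{aligned}\qquad (1.5)$$

holds for some \(p \ge 1\). Assume further that there exists some \(0\le \lambda <1\) such that \(g(n)=O(n^{\lambda /p})\). If \(E|X|^{2p+\lambda }<\infty \), then for any \(x \in c(f)\),

$$\begin{aligned} f_n(x)\rightarrow f(x)\ \ \text {completely, as}\ n\rightarrow \infty . \end{aligned}\qquad (1.6)$$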

In Theorem A, the moment condition \(E|X|^{2p+\lambda }<\infty \) depends not only on p but also on \(\lambda \), which seems strange. We wonder whether \(E|X|^{2p+\lambda }<\infty \) could be weakened to \(E|X|^{2p}<\infty \). In addition, we ask whether \(g(n)=O(n^{\lambda /p})\) for some \(0\le \lambda <1\) and \(p \ge 1\) could be replaced by the more general condition \(g(n)=O(n^{\lambda })\) for some \(\lambda \ge 0\). The answers to both questions are positive. Our main result is as follows.

Theorem 1.1

Let \(\{\varepsilon _{n}, n \ge 1\}\) be a sequence of WOD random variables with mean zero, which is stochastically dominated by a random variable X. Suppose that the conditions (\(H_1\))–(\(H_3\)) hold true, and (1.5) holds for some \(p\ge 1\). Assume further that there exists some \(\lambda \ge 0\) such that \(g(n)=O(n^{\lambda })\). If \(E|X|^{2p}<\infty \), then (1.6) holds for any \(x \in c(f)\).

Remark 1.1

Comparing Theorem 1.1 with Theorem A, we can see that the moment condition \(E|X|^{2p}<\infty \) in Theorem 1.1 is weaker than \(E|X|^{2p+\lambda }<\infty \) in Theorem A. In addition, the condition on dominating coefficients \(g(n)=O(n^{\lambda })\) in Theorem 1.1 is also weaker than \(g(n)=O(n^{\lambda /p})\) in Theorem A. Hence, the result of Theorem 1.1 generalizes and improves the corresponding one of Theorem A.
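As a concrete instance of this comparison, take the illustrative values \(p=2\) and \(\lambda =1/2\) (chosen here only as an example). The two sets of requirements then read:

```latex
% Illustrative values p = 2, \lambda = 1/2; not taken from the paper.
\begin{aligned}
\text{Theorem A:}\quad & g(n)=O\big (n^{\lambda /p}\big )=O\big (n^{1/4}\big ),
 \qquad E|X|^{2p+\lambda }=E|X|^{4.5}<\infty ,\\
\text{Theorem 1.1:}\quad & g(n)=O\big (n^{\lambda }\big )=O\big (n^{1/2}\big ),
 \qquad E|X|^{2p}=E|X|^{4}<\infty .
\end{aligned}
```

Theorem 1.1 also admits any \(\lambda \ge 1\), a range that Theorem A excludes.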

Remark 1.2

If \(g(n)=O(1)\), then the sequence of WOD random variables reduces to a sequence of END random variables. Hence, Theorem 1.1 holds for END random variables. In addition, the result of Theorem 1.1 generalizes the corresponding one of Shen [22, Corollary 3.1] for END random variables to the case of WOD random variables.

2 An application to nearest neighbor estimation and numerical simulation

In this section, we will give an application of the main result to nearest neighbor estimation and carry out a numerical simulation to verify the result that we obtained. Wang et al. [29] have shown that conditions (\(H_{1}\))–(\(H_{3}\)) are satisfied for the nearest neighbor estimator by choosing \(k_{n}=\lfloor n^{1/p}\rfloor \) for some \(p>1\), where here and below \(\lfloor x\rfloor \) denotes the integer part of x. We immediately obtain the following result by Theorem 1.1. The details are omitted.

Theorem 2.1

Let \(\{\varepsilon _{n}, n \ge 1\}\) be a sequence of WOD random variables with mean zero, which is stochastically dominated by a random variable X. Suppose that \(f_{n}(x)\) is the nearest neighbor estimator of f(x) and \(k_{n}=\lfloor n^{1/p}\rfloor \) for some \(p>1\). Assume further that there exists some \(\lambda \ge 0\) such that \(g(n)=O(n^{\lambda })\). If \(E|X|^{2p}<\infty \), then (1.6) holds for any \(x \in c(f)\).

Now, we give the simulation study. The data are generated from model (1.3). For any fixed \(n\ge 3\), let \((\varepsilon _{1},\varepsilon _{2},\ldots ,\varepsilon _{n})\sim N_{n}({\varvec{0}},{\varvec{\Sigma }})\), where \({\varvec{0}}\) represents zero vector and

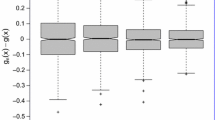

By Joag-Dev and Proschan [11], it can be seen that \((\varepsilon _{1},\varepsilon _{2},\ldots ,\varepsilon _{n})\) is an NA vector for each \(n\ge 3\), and thus is a WOD vector. Choosing \(k_{n}=\lfloor n^{0.5}\rfloor \), taking the points \(x=i/n\) for \(i=1,2,\ldots ,n\) and the sample sizes \(n=50,100,200\), respectively, we use R software to compute the estimator \(f_{n}(x)\) of f(x) with \(f(x)=\sin (2\pi x)\) and \(f(x)=x^{2}\) over 500 replications. The comparison of \(f_{n}(x)\) and f(x) is shown in Figs. 1, 2, 3, 4, 5 and 6.

Figures 1, 2 and 3 compare \(f_{n}(x)\) and f(x) for \(f(x)=\sin (2\pi x)\), and Figs. 4, 5 and 6 compare \(f_{n}(x)\) and f(x) for \(f(x)=x^2\), where the solid lines are the true functions and the dashed lines are the estimators. Figures 1, 2 and 3 show a good fit to the true function. There are some fluctuations in Figs. 2 and 3, because the values of x are divided into more fragments as n increases. In particular, when x is close to 0 or 1, the estimator approaches the true value as n increases. Figures 4, 5 and 6 reflect the same behavior, that is, the estimator converges to the true function as the sample size n increases. These observations basically agree with the results obtained in the paper.
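A simulation of this kind can be sketched as follows. This is not the authors' R code: it is a Python sketch using only the standard library, and the error covariance is an assumption, since the matrix \({\varvec{\Sigma }}\) is not reproduced here. The errors are generated as \(\varepsilon _i=z_i-\theta z_{i+1}\) with i.i.d. standard normal \(z_i\), which gives a Gaussian vector with nonpositive covariances and hence an NA (and WOD) vector by Joag-Dev and Proschan [11]. The function names, the value \(\theta =0.5\), the seed, the reduced number of replications and the mean absolute error summary are likewise illustrative choices.

```python
import math
import random

def na_gaussian_errors(n, theta, rng):
    # e_i = z_i - theta * z_{i+1}, z's i.i.d. N(0, 1): Gaussian with
    # Cov(e_i, e_{i+1}) = -theta and zero covariance otherwise, hence NA
    # for theta >= 0. ASSUMPTION: stands in for the paper's Sigma.
    z = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]
    return [z[i] - theta * z[i + 1] for i in range(n)]

def neighbour_table(design, k):
    # For every design point, indices of its k nearest design points
    # (ties broken in favour of the smaller index).
    table = []
    for x in design:
        order = sorted(range(len(design)), key=lambda i: (abs(design[i] - x), i))
        table.append(order[:k])
    return table

def mean_abs_error(f, n, reps=200, theta=0.5, seed=0):
    """Average of |f_n(x) - f(x)| over the points x = i/n and over replications."""
    rng = random.Random(seed)
    design = [i / n for i in range(1, n + 1)]
    k = math.isqrt(n)                         # k_n = floor(n^{0.5})
    table = neighbour_table(design, k)
    truth = [f(x) for x in design]
    total = 0.0
    for _ in range(reps):
        eps = na_gaussian_errors(n, theta, rng)
        y = [t + e for t, e in zip(truth, eps)]
        # Nearest neighbour estimate: plain average over the k neighbours.
        fit = [sum(y[i] for i in idx) / k for idx in table]
        total += sum(abs(a - b) for a, b in zip(fit, truth)) / n
    return total / reps

errors = {n: mean_abs_error(lambda u: math.sin(2 * math.pi * u), n)
          for n in (50, 100, 200)}
```

Under these assumptions the entries of `errors` can be compared across the three sample sizes; a decreasing pattern is what the consistency result suggests.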

3 Some lemmas

In this section, we will show some crucial lemmas which will be used to prove the main results. The first one is a basic property for WOD random variables, which can be found in Wang et al. [29].

Lemma 3.1

Let \(\{X_n, n\ge 1\}\) be a sequence of WOD random variables.

- (i): If \(\{f_n(\cdot ), n\ge 1\}\) are all nondecreasing (or all nonincreasing), then \(\{f_n(X_n), n\ge 1\}\) are still WOD.

- (ii): For each \(n\ge 1\) and any \(s\in {\mathbb {R}}\),

$$\begin{aligned} E\exp \left\{ s\sum _{i=1}^nX_i\right\} \le g (n)\prod _{i=1}^nE\exp \{sX_i\}. \end{aligned}$$

The next one is a complete convergence result for arrays of rowwise WOD random variables, which is of independent interest and plays a key role in proving the main result of the paper.

Lemma 3.2

Let \(\{X_{ni}, 1\le i\le n, n\ge 1\}\) be an array of rowwise WOD random variables with mean zero and \(\{a_n, n\ge 1\}\) be a sequence of positive constants. Suppose that the following conditions hold:

- (i): \(\max \nolimits _{1\le i\le n}|X_{ni}|\le Ca_n\) a.s.;

- (ii): \(\sum \nolimits _{i=1}^{n}EX_{ni}^2=o(a_n)\);

- (iii): there exists some \(\beta >0\) such that

$$\begin{aligned} \sum \limits _{n=1}^{\infty }g(n)e^{-\frac{\beta }{a_n}}<\infty . \end{aligned}$$

Then for any \(\varepsilon >0\),

$$\begin{aligned} \sum \limits _{n=1}^{\infty }P\left( \left| \sum \limits _{i=1}^{n}X_{ni}\right| \ge \varepsilon \right) <\infty , \end{aligned}\qquad (3.1)$$

i.e. \(\sum \limits _{i=1}^{n}X_{ni}\rightarrow 0\) completely as \(n\rightarrow \infty \).

Proof

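Noting that \(EX_{ni}=0\), we have by the elementary inequality \(e^x\le 1+x+\frac{1}{2}x^2 e^{|x|}\) for \(x\in {\mathbb {R}}\) and condition (i) that for any \(t>0\),

$$\begin{aligned} Ee^{tX_{ni}}\le 1+tEX_{ni}+\frac{1}{2}t^2E\left( X_{ni}^2e^{t|X_{ni}|}\right) \le 1+\frac{1}{2}t^2e^{tCa_n}EX_{ni}^2\le \exp \left\{ \frac{1}{2}t^2e^{tCa_n}EX_{ni}^2\right\} . \end{aligned}\qquad (3.2)$$

By Markov’s inequality and Lemma 3.1 (ii), we can get that

$$\begin{aligned} P\left( \sum _{i=1}^{n}X_{ni}\ge \varepsilon \right) \le e^{-t\varepsilon }E\exp \left\{ t\sum _{i=1}^{n}X_{ni}\right\} \le g(n)e^{-t\varepsilon }\prod _{i=1}^{n}Ee^{tX_{ni}}, \end{aligned}$$

which together with (3.2) and condition (ii) yields that

$$\begin{aligned} P\left( \sum _{i=1}^{n}X_{ni}\ge \varepsilon \right) \le g(n)\exp \left\{ -t\varepsilon +\frac{1}{2}t^2e^{tCa_n}\sum _{i=1}^{n}EX_{ni}^2\right\} =g(n)\exp \left\{ -t\varepsilon +\frac{1}{2}t^2e^{tCa_n}\cdot o(a_n)\right\} . \end{aligned}\qquad (3.3)$$

Setting \(t=\frac{\beta +1}{\varepsilon a_n}\) in (3.3), so that \(t\varepsilon =\frac{\beta +1}{a_n}\), \(e^{tCa_n}=e^{C(\beta +1)/\varepsilon }\) and \(t^2\cdot o(a_n)=\frac{o(1)}{a_n}\), we can see that

$$\begin{aligned} P\left( \sum _{i=1}^{n}X_{ni}\ge \varepsilon \right) \le g(n)\exp \left\{ -\frac{\beta +1}{a_n}+\frac{o(1)}{a_n}\right\} \le g(n)\exp \left\{ -\frac{\beta }{a_n}\right\} \end{aligned}\qquad (3.4)$$

holds for all n sufficiently large. Hence, we have by (3.4) and condition (iii) that

$$\begin{aligned} \sum _{n=1}^{\infty }P\left( \sum _{i=1}^{n}X_{ni}\ge \varepsilon \right) <\infty . \end{aligned}\qquad (3.5)$$

It follows by Lemma 3.1 (i) that \(\{-X_{ni},1\le i\le n,n\ge 1\}\) is still an array of rowwise WOD random variables with mean zero. Hence, applying (3.5) to this array, we have

$$\begin{aligned} \sum _{n=1}^{\infty }P\left( \sum _{i=1}^{n}X_{ni}\le -\varepsilon \right) =\sum _{n=1}^{\infty }P\left( \sum _{i=1}^{n}(-X_{ni})\ge \varepsilon \right) <\infty . \end{aligned}\qquad (3.6)$$

Note that for any \(\varepsilon >0\),

$$\begin{aligned} P\left( \left| \sum _{i=1}^{n}X_{ni}\right| \ge \varepsilon \right) \le P\left( \sum _{i=1}^{n}X_{ni}\ge \varepsilon \right) +P\left( \sum _{i=1}^{n}X_{ni}\le -\varepsilon \right) . \end{aligned}\qquad (3.7)$$

Therefore, the desired result (3.1) follows from (3.5)–(3.7) immediately. The proof is completed. \(\square \)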

The last one is a basic property for stochastic domination, which can be found in Wu [33], Shen [20] among others.

Lemma 3.3

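Let \(\{X_{ni},1\le i\le n,n\ge 1\}\) be an array of random variables which is stochastically dominated by a random variable X. For any \(\alpha >0\) and \(b>0\), it follows that

$$\begin{aligned} E|X_{ni}|^{\alpha }I(|X_{ni}|\le b)&\le C_1\left[ E|X|^{\alpha }I(|X|\le b)+b^{\alpha }P(|X|>b)\right] ,\\ E|X_{ni}|^{\alpha }I(|X_{ni}|>b)&\le C_2E|X|^{\alpha }I(|X|>b), \end{aligned}$$

where \(C_1\) and \(C_2\) are positive constants.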

4 Proof of Theorem 1.1

For any \(x\in c(f)\) and \(a>0\), it follows by (1.3) and (1.4) that

Because \(x\in c(f)\), it follows that for any \(\varepsilon >0\), there exists a \(\delta >0\) such that \(|f(x^*)-f(x)|<\varepsilon \) when \(\Vert x^*-x\Vert <\delta \). Letting \(a\in (0,\delta )\) in (4.1), we have

By (4.1) and \((H_1)\)–\((H_3)\), we can get that for any \(x\in c(f)\),

Since \(W_{ni}(x)=W_{ni}(x)^+-W_{ni}(x)^-\), we may assume without loss of generality that \(W_{ni}(x)>0\) and \(\max \nolimits _{1\le i\le n}W_{ni}(x)\le n^{-1/p}\) for any \(x\in c(f)\). In view of (4.3), to prove (1.6), we only need to show

i.e.

where \(T_{ni}=W_{ni}(x)\cdot \varepsilon _{ni}\).

For any \(\varepsilon >0\), take q such that \(p<q<2p\) and denote for \(i=1,2,\ldots ,n\) and \(n\ge 1\) that

where N is a positive integer, whose value will be specified later. Noting that \(X_{ni}(1)+X_{ni}(2)+X_{ni}(3)+X_{ni}(4)=\varepsilon _{ni}\), we know that

Thus, in order to prove (4.4), we only need to prove \(I_j<\infty \) for \(j=1,2,3,4\).

First, we will prove \(I_1<\infty \). Noting that \(E\varepsilon _{i}=0\), we have by Markov’s inequality, condition \((H_2)\) and Lemma 3.3 that

which implies that for all n large enough,

Thus, to prove \(I_1<\infty \), we only need to show

For fixed \(n\ge 1\) and \(x\in c(f)\), we can see that \(\{W_{ni}(x)(X_{ni}(1)-EX_{ni}(1)), 1\le i\le n\}\) are still mean zero WOD random variables by Lemma 3.1. To prove (4.6), we will make use of Lemma 3.2, where \(X_{ni}=W_{ni}(x)(X_{ni}(1)-EX_{ni}(1))\) and \(a_n=(\log n)^{-1}\). Now, we will check that all conditions of Lemma 3.2 hold true.

Since \(p<q\), we can get that

which implies that condition (i) of Lemma 3.2 is satisfied.

Noting that \(E|X|^{2p}<\infty \) implies \(EX^2<\infty \), since \(p\ge 1\), we have by Lemma 3.3, condition \((H_2)\) and (1.5) that

which implies that condition (ii) of Lemma 3.2 is satisfied.

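For condition (iii) of Lemma 3.2, note that \(a_n=(\log n)^{-1}\) gives \(e^{-\beta /a_n}=n^{-\beta }\), and that \(g(n)=O(n^\delta )\) for some \(\delta \ge 0\). Hence we only need to choose \(\beta >1+\delta \), so that \(\sum _{n}g(n)e^{-\beta /a_n}\le C\sum _{n}n^{\delta -\beta }<\infty \). In this case, condition (iii) of Lemma 3.2 is satisfied. Therefore, (4.6) follows from Lemma 3.2 immediately, and thus, \(I_1<\infty \) holds.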

Next, we will show that \(I_2<\infty \). By the definition of \(X_{ni}(2)\), we can see that \(0\le X_{ni}(2)<\varepsilon n^{1/p}/N\). On the other hand, we have \(0<W_{ni}(x)\le 1/n^{1/p}\). Hence, for any \(\varepsilon >0\), \(\left| \sum \nolimits _{i=1}^{n}W_{ni}(x)X_{ni}(2)\right| =\sum \nolimits _{i=1}^{n}W_{ni}(x)X_{ni}(2)>\varepsilon /4\) implies that there exist at least \(N\) nonzero \(X_{ni}(2)\). Thus, we have by the definition of WOD random variables and \(E|X|^{2p}<\infty \) that

Noting that \(g(n)=O(n^\delta )\) for some \(\delta \ge 0\), we have by (4.9) that

provided that \(N>\frac{q(\delta +1)}{2p-q}\).

For \(I_3\), due to \(-\varepsilon n^{1/p}/N<X_{ni}(3)\le 0\) and \(0<W_{ni}(x)\le 1/n^{1/p}\), \(\left| \sum \nolimits _{i=1}^{n}W_{ni}(x)X_{ni}(3)\right| =-\sum \nolimits _{i=1}^{n}W_{ni}(x)X_{ni}(3)>\varepsilon \) implies that there exist at least \(N\) nonzero \(X_{ni}(3)\). Analogous to the proof of \(I_2<\infty \), we have \(I_3<\infty \).

At last, we will show that \(I_4<\infty \). Noting that \(E|X|^{2p}<\infty \), we have

which implies that \(I_4<\infty \). Thus, (4.4) follows from (4.5) and \(I_1<\infty ,I_2<\infty ,I_3<\infty ,I_4<\infty \) immediately. This completes the proof of the theorem. \(\square \)

References

Chen, Y., Wang, L., Wang, Y.B.: Uniform asymptotics for the finite-time ruin probabilities of two kinds of nonstandard bidimensional risk models. J. Math. Anal. Appl. 401, 114–129 (2013)

Chen, W., Wang, Y.B., Cheng, D.Y.: An inequality of widely dependent random variables and its applications. Lith. Math. J. 56(1), 16–31 (2016)

Fan, Y.: Consistent nonparametric multiple regression for dependent heterogeneous processes: the fixed design case. J. Multivar. Anal. 33, 72–88 (1990)

Georgiev, A.A.: Local properties of function fitting estimates with applications to system identification. In: Grossmann, W., et al. (eds.) Mathematical Statistics and Applications. Proceedings 4th Pannonian Symposium on Mathematical Statistics, 4–10, September 1983, vol. B, pp. 141–151. Bad Tatzmannsdorf, Austria, Reidel, Dordrecht (1985)

Georgiev, A.A.: Consistent nonparametric multiple regression: the fixed design case. J. Multivar. Anal. 25(1), 100–110 (1988)

Georgiev, A.A., Greblicki, W.: Nonparametric function recovering from noisy observations. Journal of Statistical Planning and Inference 13, 1–14 (1986)

Hsu, P.L., Robbins, H.: Complete convergence and the law of large numbers. Proc. Natl. Acad. Sci. USA 33, 25–31 (1947)

Hu, S.H., Zhu, C.H., Chen, Y.B., Wang, L.C.: Fixed-design regression for linear time series. Acta Mathematica Scientia 22B(1), 9–18 (2002)

Hu, S.H., Pan, G.M., Gao, Q.B.: Estimate problem of regression models with linear process errors. Appl. Math. A J. Chin. Univ. 18A(1), 81–90 (2003)

Hu, T.Z.: Negatively superadditive dependence of random variables with applications. Chin. J. Appl. Probab. Stat. 16, 133–144 (2000)

Joag-Dev, K., Proschan, F.: Negative association of random variables with applications. Ann. Stat. 11, 286–295 (1983)

Lehmann, E.: Some concepts of dependence. Ann. Math. Stat. 37, 1137–1153 (1966)

Liang, H.Y., Jing, B.Y.: Asymptotic properties for estimates of nonparametric regression models based on negatively associated sequences. J. Multivar. Anal. 95, 227–245 (2005)

Liu, L.: Precise large deviations for dependent random variables with heavy tails. Stat. Probab. Lett. 79, 1290–1298 (2009)

Müller, H.G.: Weak and universal consistency of moving weighted averages. Periodica Mathematica Hungarica 18(3), 241–250 (1987)

Qiu, D.H., Chen, P.Y.: Complete and complete moment convergence for weighted sums of widely orthant dependent random variables. Acta Mathematica Sinica, English Series 30(9), 1539–1548 (2014)

Roussas, G.G.: Consistent regression estimation with fixed design points under dependence conditions. Stat. Probab. Lett. 8, 41–50 (1989)

Roussas, G.G., Tran, L.T., Ioannides, D.A.: Fixed design regression for time series: asymptotic normality. J. Multivar. Anal. 40, 262–291 (1992)

Shen, A.T.: Bernstein-type inequality for widely dependent sequence and its application to nonparametric regression models. Abstr. Appl. Anal. 2013, 9 (2013a) (Article ID 862602)

Shen, A.T.: On the strong convergence rate for weighted sums of arrays of rowwise negatively orthant dependent random variables. RACSAM 107(2), 257–271 (2013b)

Shen, A.T.: On asymptotic approximation of inverse moments for a class of nonnegative random variables. Stat. J. Theor. Appl. Stat. 48(6), 1371–1379 (2014)

Shen, A.T.: Complete convergence for weighted sums of END random variables and its application to nonparametric regression models. J. Nonparametr. Stat. 28(4), 702–715 (2016)

Shen, A.T., Yao, M., Wang, W.J., Volodin, A.: Exponential probability inequalities for WNOD random variables and their applications. RACSAM 110, 251–268 (2016)

Shen, A.T., Zhang, Y., Volodin, A.: Applications of the Rosenthal-type inequality for negatively superadditive dependent random variables. Metrika 78, 295–311 (2015)

Stone, C.J.: Consistent nonparametric regression. Ann. Stat. 5, 595–645 (1977)

Tran, L.T., Roussas, G.G., Yakowitz, S., Van, B.T.: Fixed-design regression for linear time series. Ann. Stat. 24, 975–991 (1996)

Wang, K.Y., Wang, Y.B., Gao, Q.W.: Uniform asymptotic for the finite-time ruin probability of a new dependent risk model with a constant interest rate. Methodol. Comput. Appl. Probab. 15, 109–124 (2013)

Wang, X.J., Hu, S.H.: The consistency of the nearest neighbor estimator of the density function based on WOD samples. J. Math. Anal. Appl. 429(1), 497–512 (2015)

Wang, X.J., Xu, C., Hu, T.C., Volodin, A., Hu, S.H.: On complete convergence for widely orthant-dependent random variables and its applications in nonparametric regression models. TEST 23, 607–629 (2014)

Wang, X.J., Zheng, L.L., Xu, C., Hu, S.H.: Complete consistency for the estimator of nonparametric regression models based on extended negatively dependent errors. Stat. J. Theor. Appl. Stat. 49(2), 396–407 (2015)

Wang, Y.B., Cheng, D.Y.: Basic renewal theorems for a random walk with widely dependent increments and their applications. J. Math. Anal. Appl. 384, 597–606 (2011)

Wang, Y.B., Cui, Z.L., Wang, K.Y., Ma, X.L.: Uniform asymptotic of the finite-time ruin probability for all times. J. Math. Anal. Appl. 390, 208–223 (2012)

Wu, Q.Y.: Probability Limit Theory for Mixing Sequences. Science Press, Beijing (2006)

Yang, W.Z., Liu, T.T., Wang, X.J., Hu, S.H.: On the Bahadur representation of sample quantiles for widely orthant dependent sequences. Filomat 28(7), 1333–1343 (2014)

Yang, W.Z., Xu, H.Y., Chen, L., Hu, S.H.: Complete consistency of estimators for regression models based on extended negatively dependent errors. Statistical Papers. 59(2), 449–465 (2018)

Additional information

Supported by the National Natural Science Foundation of China (11671012, 11871072, 11701004, 11701005), the Natural Science Foundation of Anhui Province (1508085J06), The Key Projects for Academic Talent of Anhui Province (gxbjZD2016005) and the Students Innovative Training Project of Anhui University (201610357496).

Cite this article

Zhang, R., Wu, Y., Xu, W. et al. On complete consistency for the weighted estimator of nonparametric regression models. RACSAM 113, 2319–2333 (2019). https://doi.org/10.1007/s13398-018-00621-0

Keywords

- Widely orthant dependent random variables

- Weighted estimator

- Nonparametric regression model

- Complete consistency