Abstract

This paper investigates the performance of combined and separate log type class of estimators of population mean under stratified ranked set sampling. The expressions of bias and mean square error of the proposed estimators are deduced. The theoretical comparison of the proposed estimators with the existing estimators is carried out and the efficiency conditions are reported. The credibility of theoretical results is extended by a simulation study conducted over various artificially generated symmetric and asymmetric populations. The results of the simulation study show that the proposed class of estimators dominate the well-known existing estimators.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The primary objective of any survey is to improve the efficiency of the estimate of the population which not only rely upon the size of sample and sampling fraction but also upon the heterogeneity or variability of the population. By far the most common sampling scheme used to reduce the heterogeneity of population is stratified simple random sampling (SSRS). Ranked set sampling (RSS) was first introduced by McIntyre [16]. Following McIntyre [16], Samawi [19] investigated the concept of stratified ranked set sampling (SRSS) as an alternative sampling scheme to SSRS which merge the profits of stratification and RSS to obtain an unbiased estimator of population mean with likely improvement in efficiency. In contrast to SSRS, quantified observations in SRSS do not lend identically to draw illation due to further structure that imposes ranking, permitting observations in order to tempt different dimensions of population and to obtain possible advantages in efficiency.

In theory of sample surveys, the aim of survey statistician is to improve the efficiency of the proposed estimators. RSS becomes the better alternative over the other sampling schemes provided the ranking of the units is possible. The procedure of RSS is based on quantifying m simple random samples each of size m units from the population and m units are ranked within each set according to the variable of interest visually or by any cost independent measure. From the first sample, the unit with rank 1 is taken for the measurement of element and remaining units of the sample are omitted. Again, from the second sample, the unit with rank 2 is taken for the measurement of element and remaining units of the sample are omitted. Iterate the process in the similar pattern until the unit with rank m is taken for the measurement of elements from the \(m^{th}\) sample. This whole process constitute a cycle. If this whole cycle is iterated r times then this yield a ranked set sample of size \(n=mr\).

The procedure of stratified ranked set sample is based on drawing \(m_h\) independent random samples each of size \(m_h\) units from the \(h^{th}\), \(h=1,2,...,L\), stratum of population. Now ranking is performed over observations of each sample and procedure of RSS is used to get L independent ranked set samples each of size \(m_h\) such that \(\sum _{h=1}^{L}m_h=m\). This process completes one cycle of SRSS. The whole procedure is iterated r times in order to obtain desired sample size \(n_h=m_hr\).

The literature on RSS is quite broad starting with McIntyre [16] and extending to many variations till date. SRSS as introduced by Samawi [19] is one of those variations of RSS. Samawi and Siam [20] suggested the ratio estimator under SRSS. By using auxiliary information, Mandowara and Mehta [15] analysed some modified ratio estimators under SRSS whereas Mehta and Mandowara [17] suggested an advance estimator under SRSS. Linder et al. [14] suggested regression estimator under SRSS. Khan and Shabbir [10] considered Hartley-Ross type unbiased estimators under RSS and SRSS. Saini and Kumar [18] investigated ratio type estimator using quartile as auxiliary information under SRSS. Bhushan et al. [4] considered the problem of mean estimation utilizing logarithmic estimators under SRSS, while Bhushan et al. [5] introduced an efficient estimation procedure of population mean under SRSS. Bhushan et al. [6] suggested some improved class of estimators employing SRSS. Bhushan et al. [7] investigated SRSS for the quest of an optimal class of estimators. The main objective of this paper is to study the performance of combined and separate log type class of estimators viz a viz the existing classes of estimators under SRSS.

The rest of the paper is drafted as follows. In Sect. 2, the notations used throughout this article will be defined. Section 3 will consider the review of the combined and separate classes of estimators with their properties. The suggested combined and separate classes of estimators will be presented in Sect. 4, whereas the efficiency comparison of combined and separate estimators will be given in Sect. 5. In support of theoretical results, a simulation study will be carried out in Sect. 6. Some formal concluding remarks will be given in Sect. 7.

2 Notaions

To obtain the estimate of the population mean Y. of the study variable y, let the ranking be executed on the auxiliary variable x. For \(r^{th}\) cycle and \(h^{th}\) stratum, let \((X_{h(1)r}, Y_{h[1]r})\), \((X_{h(2)r}, Y_{h[2]r})\),..., \((X_{h(m_h)r}, Y_{h[m_h]r})\) denote the stratified ranked set sample with bivariate probability density function \(f(x_h,y_h)\) and bivariate cumulative distribution function (c.d.f.) \(F(x_h,y_h)\).

The notations used throughout this article are defined below.

-

N; size of population,

-

\(N_h\); size of population in stratum h,

-

n; size of sample,

-

\(n_h\); size of sample in stratum h,

-

\(W_h=N_h/N\); weight of stratum h,

-

\(\bar{y}_h=\sum _{i=1}^{n_h}y_{h_i}/n_h\); sample mean of variable Y in stratum h,

-

\(\bar{y}_{st}=\sum _{h=1}^{L}W_hy_{h}\); sample mean of variable Y,

-

\(\bar{x}_h=\sum _{i=1}^{n_h}x_{h_i}/n_h\); sample mean of variable X in stratum h,

-

\(\bar{x}_{st}=\sum _{h=1}^{L}W_hx_{h}\); sample mean of variable X,

-

\(\bar{y}_{[\text {srss}]}=\sum _{h=1}^{L}W_h\bar{y}_{h{[{rss}]}}\); stratified ranked set sample mean of variable Y,

-

\(\bar{x}_{(\text {srss})}=\sum _{h=1}^{L}W_h\bar{x}_{h{({rss})}}\); stratified ranked set sample mean of variable X,

-

\(\bar{y}_{h{[\text {rss}]}}=\sum _{i=1}^{m_h}\sum _{j=1}^{r}{y}_{h{[i]}j}/m_hr\); ranked set sample mean of variable Y in stratum h,

-

\(\bar{x}_{h{(\text {rss})}}=\sum _{i=1}^{m_h}\sum _{j=1}^{r}{x}_{h{(i)}j}/m_hr\); ranked set sample mean of variable X in stratum h,

-

\(\bar{Y}_h=\sum _{i=1}^{N_h}y_{h_i}/N_h\); population mean of variable Y in stratum h,

-

\(\bar{Y}=\bar{Y}_{st}=\sum _{h=1}^{L}W_hY_{h}\); population mean of variable Y,

-

\(\bar{X}_h=\sum _{i=1}^{N_h}x_{h_i}/N_h\); population mean of variable X in stratum h,

-

\(\bar{X}=\bar{X}_{st}=\sum _{h=1}^{L}W_hX_{h}\); population mean of variable X,

-

\(R=\bar{Y}/\bar{X}\); population ratio,

-

\(R_h=\bar{Y}_h/\bar{X}_h\); population ratio in stratum h,

-

\(S_{y_h}^2=(N_h-1)^{-1}\sum _{h=1}^{N_h}(y_{h_i}-\bar{Y}_h)^2\); population variance of variable Y in stratum h,

-

\(S_{x_h}^2=(N_h-1)^{-1}\sum _{h=1}^{N_h}(x_{h_i}-\bar{X}_h)^2\); population variance of variable X in stratum h,

-

\(S_{xy_h}=(N_h-1)^{-1}\sum _{h=1}^{N_h}(x_{h_i}-\bar{X}_h)(y_{h_i}-\bar{Y}_h)\); population covariance between variables X and Y in stratum h,

-

\(\rho _{xy_h}=S_{xy_h}/S_{x_h}S_{y_h}\); population correlation coefficient between variables X and Y in stratum h,

-

\(C_{y_h}\); population coefficient of variation for variable Y in stratum h,

-

\(C_{x_h}\); population coefficient of variation for variable X in stratum h,

-

\(\beta _1(x_h)=(E(\bar{x}_h-\bar{X}_h)^3)^2/(E(\bar{x}_h-\bar{X}_h)^2)^2\); population coefficient of skewness for variable X in stratum h, and

-

\(\beta _2(x_h)=(E(\bar{x}_h-\bar{X}_h))^4/(E(\bar{x}_h-\bar{X}_h)^2)^2\); population coefficient of kurtosis for variable X in stratum h.

In order to find out bias and mean square error of combined estimators, the following notations will be used end to end in this paper.

Let \(\bar{y}_{[srss]}=\bar{Y}(1+\varepsilon _0),~ \bar{x}_{(srss)}=\bar{X}(1+\varepsilon _1),~\text {such that}~ E(\varepsilon _0)=E(\varepsilon _1)=0\)

Following (2.1), we can write

\(E({\varepsilon _0}^2)=\sum \nolimits _{h=1}^{L}W_h^2\left( \gamma _h\frac{S_{y_h}^2}{\bar{Y}^2}-D^2_{y_{h[i]}}\right) =V_{02},~~ E({\varepsilon _1}^2)=\sum \nolimits _{h=1}^{L}W_h^2\left( \gamma _h\frac{S_{x_h}^2}{\bar{X}^2}-D^2_{x_{h(i)}}\right) =V_{20} ~\text {and}~ E({\varepsilon _0\varepsilon _1})=\sum \nolimits _{h=1}^{L}W_h^2\left( \gamma _h\rho _{x_hy_h}\frac{S_{x_h}}{\bar{X}}\frac{S_{y_h}}{\bar{Y}}-D_{{x_hy_h}_{[i]}}\right) =V_{11}\)

where \(\gamma =1/m_hr,~~ D^2_{x_{h(i)}}=\sum \nolimits _{i=1}^{m_h}\tau _{x_{h(i)}}^2/m_h^2r\bar{X}^2,~~ D^2_{y_{h[i]}}=\sum \nolimits _{i=1}^{m_h}\tau _{y_{h[i]}}^2/m_h^2r\bar{Y}^2,~~ D_{{x_hy_h}_{[i]}}=\sum \nolimits _{i=1}^{m_h}\tau _{{x_hy_h}_{[i]}}/m_h^2r\bar{X}\bar{Y}\), \(\tau _{x_{_{h(i)}}}=(\mu _{x_{_{h(i)}}}-\bar{X}_h)\), \(\tau _{y_{_{h[i]}}}=(\mu _{y_{_{h[i]}}}-\bar{Y}_h)\), and \(\tau _{{x_hy_h}_{{[i]}}}=(\mu _{x_{{h(i)}}}-\bar{X}_h)(\mu _{y{_{h[i]}}}-\bar{Y}_h)\).

To find out bias and mean square error of separate estimators, the following notations will be used throughout this paper.

Let \(\bar{y}_{[srss]}=\bar{Y}(1+e_0),~~ \bar{x}_{(srss)}=\bar{X}(1+e_1),~\text {such that}~ E(e_0)=E(e_1)=0\),

\(E({e_0}^2)=\left( \gamma _h\frac{S_{y_h}^2}{\bar{Y }_h^2}-M^2_{y_{h[i]}}\right) =U_{0},~~ E({e_1}^2)=\left( \gamma _h\frac{S_{x_h}^2}{\bar{X}_h^2}-M^2_{x_{h(i)}}\right) =U_{1} ~\text {and}~ E({e_0e_1})=\left( \gamma _h\rho _{x_hy_h}\frac{S_{x_h}}{\bar{X }_h}\frac{S_{y_h}}{\bar{Y}_h}-M_{{x_hy_h}_{[i]}}\right) =U_{10}\),

where \(M^2_{x_{h(i)}}=\sum _{i=1}^{m_h}\tau _{x_{h(i)}}^2/m_h^2r\bar{X}_h^2,~~ M^2_{y_{h[i]}}=\sum _{i=1}^{m_h}\tau _{y_{h[i]}}^2/m_h^2r\bar{Y}_h^2,~~ M_{{x_hy_h}_{[i]}}=\sum _{i=1}^{m_h}\tau _{{x_hy_h}_{[i]}}/m_h^2r\bar{X}_h\bar{Y}_h\), \(\tau _{x_{_{h(i)}}}=(\mu _{x_{_{h(i)}}}-\bar{X}_h)\), \(\tau _{y_{_{h[i]}}}=(\mu _{y_{_{h[i]}}}-\bar{Y}_h)\), and \(\tau _{{x_hy_h}_{{[i]}}}=(\mu _{x_{_{h(i)}}}-\bar{X}_h)(\mu _{y_{_{h[i]}}}-\bar{Y}_h)\).

3 Review of estimators

The conventional mean estimator under SRSS can be defined as

having variance as

Further, in this section, we consider review of some well known existing combined and separate estimators for the estimation of population mean under SRSS.

3.1 Combined estimators

Samawi and Siam [20] considered the classical combined ratio estimator under SRSS as

Linder et al. [14] suggested the regression estimator under SRSS as

where \(\beta \) is the regression coefficient of y on x.

Utilizing the information on auxiliary variable, Mandowara and Mehta [15] proposed some ratio type estimators as

On the lines of Kadilar and Cingi [9], Mehta and Mandowara [17] suggested an advance ratio estimator under SRSS as

where k is a suitably chosen scalar.

Following Shabbir and Gupta [21], we define the combined regression cum ratio estimator under SRSS as

where \(z_{[srss]}=\sum _{h=1}^{L}W_h(\bar{x}_h+{X})\), \(\bar{Z}=\sum _{h=1}^{L}W_h(\bar{X}_h+{X})\) and X is the population total.

On the lines of Koyuncu and Kadilar [12], one may define the following combined estimator under SRSS as

where \(\alpha \) is a fixed constant and g is a suitably opted scalar which take values 1 and -1 to produce ratio and product type estimators, respectively, whereas \((a \ne 0)\) and b are either real numbers or the function of known parameters of auxiliary variable x.

Following Singh and Vishwakarma [24], one may consider a combined general procedure for estimating the population mean \(\bar{Y}\) under SRSS as

where \(\bar{x}^*_{(srss)}=\sum _{h=1}^{L}W_h(a_h\bar{x}_h+b_h)\), \(\bar{X}^*=\sum _{h=1}^{L}W_h(a_h\bar{X}_h+b_h)\) and \(\Lambda _1\), \(\Lambda _2\) are suitably chosen scalars.

Motivated by Singh and Solanki [23], we define a new family of combined estimators for population mean \(\bar{Y}\) under SRSS as

where \(\delta , g\), and \(\alpha \) are suitably opted constants, whereas \(\lambda _1\) and \(\lambda _2\) are optimizing scalars to be determined later.

Saini and Kumar [18] utilized quartiles as auxiliary information and suggested ratio estimator under SRSS as

where \(q_t,~t=1,3\) is the \(t^{th}\) quartile.

The MSEs of these estimators are given in Appendix A for ready reference and further analysis.

3.2 Separate estimators

Samawi and Siam [20] suggested the classical separate ratio estimator under SRSS as

where \(\bar{y}_{h{[rss]}}=\frac{1}{m_hr}\sum _{i=1}^{m_h}\sum _{j=1}^{r}{y}_{h{[i]}j}\) and \(\bar{x}_{h{(rss)}}=\frac{1}{m_hr}\sum _{i=1}^{m_h}\sum _{j=1}^{r}{x}_{h{(i)}j}\).

Linder et al. [14] evoked the separate regression estimator under SRSS as

where \(\beta _h\) is the regression coefficient of y on x.

The separate version of Mandowara and Mehta [15] estimator under SRSS is

The separate version of Mehta and Mandowara [17] estimator under SRSS is

where \(k_h\) is duly opted scalar.

On the line of Shabbir and Gupta [21], we define the separate regression cum ratio estimator under SRSS as

where \(z_{h[rss]}=\sum _{h=1}^{L}W_h(\bar{x}_h+{X}_h)\), \(\bar{Z}_h=\sum _{h=1}^{L}W_h(\bar{X}_h+{X}_h)\) and \(X_h\) is the population total in stratum h.

Motivated by Koyuncu and Kadilar [12], one may consider the following separate estimator under SRSS as

where \(\alpha _h\), g are fixed constants, and \(\lambda _{k_h}\) is a suitably opted scalar. Also, \((a_h \ne 0)\) and \(b_h\) are real values or the function of known parameters of auxiliary variable \(x_h\) in stratum h.

Following Singh and Vishwakarma [24], one may consider a separate general procedure for estimating population mean \(\bar{Y }\) under SRSS as

where \(\Lambda _{1_h}\) and \(\Lambda _{2_h}\) are duly chosen scalars in stratum h.

Motivated by Singh and Solanki [23], we define a new family of separate estimators for population mean \(\bar{Y }\) under SRSS as

where \(\delta ,~g\), and \(\alpha _h\) are suitably chosen scalars, whereas \(\lambda _{1_h}\) and \(\lambda _{2_h}\) are optimizing constants to be determined later.

The separate type of Saini and Kumar [18] estimator under SRSS is

The MSEs of these estimators are given in Appendix B for ready reference and further analysis.

4 Proposed estimators

The crux of this paper is to suggest some efficient combined and separate classes of estimators for the estimation of population mean \(\bar{Y}\) under SRSS. The suggested class of estimators are better choice over the existing class of estimators discussed in previous section. Motivated by Bhushan et al. [4], we extend the work of Bhushan and Kumar [1] and suggest some combined and separate class of estimators of population mean under SRSS.

4.1 Combined estimators

We propose some efficient combined log type class of estimators under SRSS as

where \(\alpha _i, i=1,2,3,4\) and \(\beta _i\) are suitably chosen scalars, whereas \(\bar{x}^*_{(srss)}=a\bar{x}_{(srss)}+b\) and \({\bar{X}}^*=a{\bar{X}}+b\) provided that \(a(\ne 0),~b\) are either real numbers or function of known parameters of the auxiliary variable x.

Theorem 4.1

The biases of the proposed estimators are given to the first order of approximation as

Proof

The precis of the derivations are given in Appendix C. \(\square \)

Theorem 4.2

The MSEs of the proposed estimators are given to the first order of approximation as

Proof

The precis of the derivations are given in Appendix C. \(\square \)

Corollary 4.1

The minimum MSEs at the optimum values of \(\alpha _{i}\) and \(\beta _i\) are given as

Proof

The precis of the derivations are given in Appendix C. \(\square \)

4.2 Separate estimators

We propose the some efficient separate log type class of estimator under SRSS as

where \(\alpha _{i_h},~i=1,2,3,4\) and \(\beta _{i_h}\) are suitably chosen scalars. Also, \(\bar{x}^*_{h(rss)}=a_h\bar{x}_{h(rss)}+b_h\) and \({\bar{X}}_h^*=a_h{\bar{X}_h}+b_h\) provided that \(a_h(\ne 0),~b_h\) are real values or function of known parameters of the auxiliary variable \(x_h\) in stratum h.

Theorem 4.3

The biases of the proposed estimators are given to the first order of approximation as

Proof

The precis of the derivations are given in Appendix C. \(\square \)

Theorem 4.4

The MSEs of the proposed estimators are given to the first order of approximation as

Proof

The precis of the derivations are given in Appendix C. \(\square \)

Corollary 4.2

The minimum MSEs at the optimum values of \(\alpha _{ih},~i=1,2,3,4\) and \(\beta _{ih}\) are given as

Proof

The precis of the derivations are given in Appendix C. \(\square \)

5 Theoretical comparison

5.1 Combined estimators

On comparing the minimum MSEs of proposed estimator \(T_{v_i},~i=1,2,3,4\) and the existing estimators from (4.1), with (3.1), (A.1), (A.2), (A.3), (A.4), (A.5), (A.6), (A.7), (A.8) and (A.9), we get the following theoretical conditions.

If the above conditions hold then the proposed class of estimators \(T_{v_i}^c,~i=1,2,3,4\) dominates the conventional mean estimator \(T_m^c\), classical ratio and regression estimator \(T_r^c\) and \(T_{\beta }^c\), Shabbir and Gupta [21] type estimator \(T_{sg}^c\), Koyuncu and Kadilar [12] type estimator \(T_{kk}^c\), Singh and Vishwakarma [24] type estimator \(T_{sv}^c\), Singh and Solanki [23] type estimator \(T_{ss}^c\), Mandowara and Mehta [15] estimator \(T_{mm_i}^c,~i=1,2,3,4\), Mehta and Mandowara [17] estimator \(T_{mm}^c\) and Saini and Kumar [18] estimator \(T_{sk_t}^c,~t=1,3\) which shows the theoretical justification of the proposed estimators \(T_{v_i}^c,~i=1,2,3,4\). As a matter of relief, it has been observed that the above conditions are readily met in practical situations.

5.2 Separate estimators

On comparing the minimum MSEs of proposed separate estimator \(T_{v_i}^s,~i=1,2,3,4\) with the exising separate estimators from (4.2) and (3.1), (B.10), (B.11), (B.14), (B.15), (B.12), (B.13), (B.16), (B.17) and (B.18), we get the following theoretical conditions.

Again, it follows from the above conditions that the proposed class of estimators \(T_{v_i}^s,~i=1,2,3,4\) dominates the conventional mean estimator \(T_m^s\), classical ratio and regression estimators \(T_r^s\) and \(T_{\beta }^s\), Shabbir and Gupta [21] type estimator \(T_{sg}^s\), Koyuncu and Kadilar [12] type estimator \(T_{kk}^s\), Singh and Vishwakarma [24] type estimator \(T_{sv}^s\), Singh and Solanki [23] type estimator \(T_{ss}^s\), Mandowara and Mehta [15] estimators \(T_{mm_i}^s,~i=1,2,3,4\), Mehta and Mandowara [17] estimators \(T_{mm}^s\), and Saini and Kumar [18] estimator \(T_{sk_t}^s\) which conclude the theoretical justification of the proposed separate estimators \(T_{v_i}^s,~i=1,2,3,4\). Also, as a matter of relief, it has been observed that the conditions are readily met in practical situations.

5.3 Comparison of proposed combined and separate estimators

On comparing minimum MSE of the proposed combined and separate class of estimators \(T_{v_i}^c,~i=1,2,3,4\) and \(T_{v_i}^s\), we get

In situations if the proposed class of estimators is reasonable and the relationship between auxiliary variable \(x_{(i)}\) and study variable \(y_{[i]}\) within each stratum is a straight line and passes through origine then the last term of (5.1) is generally small and get decreases.

Also, unless \(R_h\) is invariant from stratum to stratum, separate estimators probably becomes more efficient in each stratum if the sample in each stratum is large enough so that the approximate formula for \(MSE(T_{v_i}^s),~i=1,2,3,4\) is valid and cumulative bias that can alter the proposed estimators is neglegible whereas the proposed combibed estimators is to be preferably recommended with only a small sample in each stratum (see, Cochran [8]).

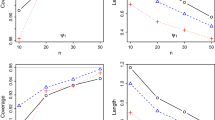

6 Simulation study

In order to enhance the theoretical justification, following Singh and Horn [22] and motivated by Bhushan and Kumar [2, 3] and Kumar et al. [13], we conducted a simulation study over some artificially generated symmetric (viz. Normal, Uniform, Logistic, and t) and asymmetric (viz. F, \(\chi ^2\), \(Beta-I\), Log-normal, Exponential, Gamma, and Weibull) populations of size \(N=1200\) units by using the models given as

where \(x_i^*\) and \(y_i^*\) are independent variates of respective parent distributions with reasonably chosen value of correlation coefficient \(\rho _{x_hy_h}=0.7\) as it seems to be neither very high nor very low. Now, we stratify the population into three equal mutually exclusive and exhaustive strata and drawn a ranked set sample of size 9 units with set size 3 and number of cycles 3 from each strata by using the sampling methodology discussed in earlier section. Using 10,000 iterations, the percent relative efficiency (PRE) of the proposed class of estimators with respect to the conventional mean estimator is computed as

where T denotes the combined and separate estimators. The results of the simulation study which reveals the ascendance of proposed estimators over other existing estimators are reported below in Tables 1 and 2 in terms of PRE for reasonably chosen value of correlation coefficient \(\rho _{xy}=0.7\).

It follows from the persual of simulation results summarized in Table 1 and Table 2 that:

-

(i).

The proposed combined estimator \(T_{v_i}^c,~i=1,3\) dominate the ratio and regression estimators \(T_r^c\) and \(T_{\beta }^c\), Shabbir and Gupta [21] type estimator \(T_{sg}^c\), Koyuncu and Kadilar [12] type estimator \(T_{kk}^c\), Singh and Vishwakarma [24] type estimator \(T_{sv}^c\), Singh and Solanki [23] type estimator \(T_{ss}^c\), Mandowara and Mehta [15] estimators \(T_{mm_i}^c,~i=1,2,3,4\), Mehta and Mandowara [17] estimator \(T_{mm}^c\) and Saini and Kumar [18] estimators \(T_{sk_t}^c,~t=1,3\) in each population.

-

(ii).

The proposed combined estimators \(T_{v_i}^c,~i=2,4\) are less efficient than Singh and Solanki [23] type estimator \(T_{ss}^c\), whereas more efficient than the ratio and regression estimators \(T_r^c\) and \(T_{\beta }^c\), Shabbir and Gupta [21] type estimator \(T_{sg}^c\), Koyuncu and Kadilar [12] type estimator \(T_{kk}^c\), Singh and Vishwakarma [24] type estimator \(T_{sv}^c\), Mandowara and Mehta [15] estimators \(T_{mm_i}^c,~i=1,2,3,4\), Mehta and Mandowara [17] estimator \(T_{mm}^c\) and Saini and Kumar [18] estimators \(T_{sk_t}^c,~t=1,3\) in each population.

-

(iii).

The proposed separate estimators \(T_{v_i}^s,~i=1,3\) dominate the ratio and regression estimators \(T_r^s\) and \(T_{\beta }^s\), Shabbir and Gupta [21] type estimator \(T_{sg}^s\), Koyuncu and Kadilar [12] type estimator \(T_{kk}^s\), Singh and Vishwakarma [24] type estimator \(T_{sv}^s\), Singh and Solanki [23] type estimator \(T_{ss}^s\), Mandowara and Mehta [15] estimators \(T_{mm_i}^s,~i=1,2,3,4\), Mehta and Mandowara [17] estimator \(T_{mm}^s\) and Saini and Kumar [18] estimators \(T_{sk_t}^s,~t=1,3\) in each population.

-

(iv).

The proposed separate estimators \(T_{v_i}^s,~i=2,4\) are less efficient than Singh and Solanki [23] type estimator \(T_{ss}^s\), whereas more efficient than the ratio and regression estimators \(T_r^s\) and \(T_{\beta }^s\), Shabbir and Gupta [21] type estimator \(T_{sg}^s\), Koyuncu and Kadilar [12] type estimator \(T_{kk}^s\), Singh and Vishwakarma [24] type estimator \(T_{sv}^s\), Mandowara and Mehta [15] estimators \(T_{mm_i}^s,~i=1,2,3,4\), Mehta and Mandowara [17] estimator \(T_{mm}^s\) and Saini and Kumar [18] estimators \(T_{sk_t}^s,~t=1,3\) in each population.

-

(v).

It can also be seen that the proposed combined and separate estimators \(T_{v_i}^c,~i=1,3\) and \(T_{v_i}^s,~i=1,3\) are most efficient among the proposed class of estimators.

7 Concluding remarks

This paper has considered some combined and separate log type class of estimators under SRSS along with their properties. The theoretical justification of the proposed combined and separate class of estimators has been provided. In order to enhance the credibility of the theoretical justification, a simulation study has been performed over some artificially generated symmetric (viz. Normal, uniform and Logistic, and t) and asymmetric (viz. F, \(\chi ^2\), \(Beta-I\), Log-normal, Exponential, Gamma, and Weibull) populations. The following noteworthy observations have been mentioned below:

-

i.

The simulation results reported in Table 1 and 2 shows that the proposed combined and separate estimators \(T_{v_i}^c,~i=1,3\) and \(T_{v_i}^s,~i=1,3\) dominate the combined and separate version of ratio and regression type estimator, Mandowara and Mehta [15] estimator, Mehta and Mandowara [17] estimator, Shabbir and Gupta [21] type estimator Koyuncu and Kadilar [12] type estimator, Singh and Vishwakarma [24] type estimator, Singh and Solanki [23] type estimator and Saini and Kumar [18] estimator as well as proposed class of estimators \(T_{v_i}^c,~i=2,4\) and \(T_{v_i}^s,~i=2,4\).

-

ii.

The proposed combined and separate estimators \(T_{v_i}^c,~i=2,4\) and \(T_{v_i}^s,~i=2,4\) are less efficient than combined and separate version of Singh and Solanki [23] type estimator and perform better than the other existing estimators of our study.

-

iii.

It can also be seen that the proposed combined estimators \(T_{v_i}^c,~i=1,2,3,4\) perform better than the proposed separate estimators \(T_{v_i}^s\) in each population.

-

iv.

Thus, the proposed comnined and separate class of estimator \(T_{v_i}^c,~i=1,3\) and \(T_{v_i}^s,~i=1,3\) is to be considered in practice.

In future studies, the proposed estimators may be examined under stratified double RSS, for more details, see Khan et al. [11].

Data availability

Not Applicable.

References

Bhushan, S., Kumar, A.: Novel log type class of estimators under ranked set sampling. Sankhya B 84(1), 421–447 (2022)

Bhushan, S., Kumar, A.: Novel predictive estimators using ranked set sampling. Concurr Computat Pract Exp 35(3), e7435 (2023)

Bhushan, S., Kumar, A.: novel logarithmic imputation methods under ranked set sampling. Measure Interdiscip Res Perspect (2024). https://doi.org/10.1080/15366367.2023.2247692

Bhushan, S., Kumar, A., Shahzad, U., Al-Omari, A.I., Almanjahie, A.I.: On some improved class of estimators by using stratified ranked set sampling. Mathematics 10(3283), 1–32 (2022)

Bhushan, S., Kumar, A., Shahab, S., Lone, S.A., Akhtar, M.T.: On efficient estimation of the population mean under stratified ranked set sampling. J. Math. 2022 (2022)

Bhushan, S., Kumar, A., Banerjie, J.: Mean estimation using logarithmic estimators in stratified ranked set sampling. Life Cycle Reliab. Saf. Eng. 12(1), 1–9 (2023)

Bhushan, S., Kumar, A., Hussam, E., Mustafa, M.S., Zakarya, M., Alharbi, W.R.: On stratified ranked set sampling for the quest of an optimal class of estimators. Alexand. Eng. J. 86, 79–97 (2024)

Cochran, W.G.: Sampling Techniques. Wiley, New York (1977)

Kadilar, C., Cingi, H.: A new ratio estimator in stratified random sampling. Commun. Stat. Theory Methods 34, 597–602 (2005)

Khan, L., Shabbir, J.: Hartley-Ross type unbiased estimators using ranked set sampling and stratified ranked set sampling. North Carolina J. Math. Stat. 2, 10–22 (2016)

Khan, L., Shabbir, J., Gupta, S.: Improved ratio-type estimators using stratified double-ranked set sampling. J. Stat. Theory Pract. 10, 755–767 (2016)

Koyuncu, N., Kadilar, C.: Ratio and product estimators in stratified random sampling. J. Statist. Plann. Infer. 139, 2552–2558 (2009)

Kumar, A., Siddiqui, A.S., Mustafa, M.S., Hussam, E., Aljohani, H.M., Almulhim, F.A.: Mean estimation using an efficient class of estimators based on simple random sampling: simulation and applications. Alexand. Eng. J. 91, 197–203 (2024)

Linder, D.F., Samawi, H., Yu, L., Chatterjee, A., Huang, Y., Vogel, R.: On stratified ranked set sampling for regression estimators. J. Appl. Stat. 42(12), 2571–2583 (2015)

Mandowara, V.L., Mehta, N.: Modified ratio estimators using stratified ranked set sampling. Hacettepe J. Math. Stat. 43(3), 461–471 (2014)

McIntyre, G.A.: A method of unbiased selective sampling using ranked set. Aust. J. Agric. Res. 3, 385–390 (1952)

Mehta, N., Mandowara, V.L.: Advanced estimator in stratified ranked set sampling using auxiliary information. Int. J. Appl. Math. Stat. Sci. 5(4), 37–46 (2016)

Saini, M., Kumar, A.: Ratio estimators using stratified random sampling and stratified ranked set sampling. Life Cycle Reliab. Saf. Eng. 84(5), 931–945 (2018)

Samawi, H.M.: Stratified ranked set sampling. Pak. J. Stat. 12(1), 9–16 (1996)

Samawi, H.M., Siam, M.I.: Ratio estimation using stratified ranked set sampling. Metron Int. J. Stat. LX I(1), 75–90 (2003)

Shabbir, J., Gupta, S.: A new estimator of population mean in stratified sampling. Commun. Stat. Theory Methods 35(7), 1201–1209 (2006)

Singh, H.P., Horn, S.: An alternative estimator for multi-character surveys. Metrika 48, 99–107 (1998)

Singh, H.P., Solanki, R.S.: Efficient ratio and product estimators in stratified random sampling. Commun. Stat. Theory Methods 42(6), 1008–1023 (2013)

Singh, H.P., Vishwakarma, G.K.: A general procedure for estimating the population mean in stratified sampling using auxiliary information. Metron LXVI I(1), 47–65 (2010)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

The optimum values of the scalars involved in the estimators discussed above are reported below.

where,

Appendix B

The optimum values of the scalars involved in the estimators discussed above are reported.

where

Appendix C

This section consider the proof of the Theorem 4.1 to Theorem 4.4.

Consider the combined estimator \(T_{v_1}^c\) as

Using the notations described earlier, we can write \(T_{v_1}^c\) as

Taking expectation both the sides of (C.19), we get

Similarlly, we can find out the biases of remaining combined and separate estimators.

Now, squaring both the sides of (C.19) and taking expectation, we will get the MSE of the estimator upto first order approximation as

where \(P_1=\left\{ 1+V_{02}+2\beta _1(\beta _1-1)V_{20}+4\beta _1V_{11}\right\} \) and \(Q_1=\left\{ \beta _1\left( \frac{\beta _1}{2}-1\right) V_{20}-\beta _1V_{11}\right\} \).

The optimum value of \(\alpha _{{1}}\) can be obtained by minimizing (C.21) w.r.t \(\alpha _{{1}}\) as

Putting \(\alpha _{1(opt)}\) in (C.21), we get the minimum MSE as

Note that the simultaneous minimization of \(\alpha _1\) and \(\beta _1\) is not possible. Therefore, putting \(\alpha _1=1\) in the estimator \(T_{v_1}^c\) and minimizing the MSE w.r.t. \(\beta _1\), we get

In similar lines, we can tabulate the minimum MSEs of other estimators \(T_{v_i},~i=2,3,4\) as

On similar lines, we can obtaine the MSE of the other separate class of estimators.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bhushan, S., Kumar, A. On some efficient logarithmic type estimators under stratified ranked set sampling. Afr. Mat. 35, 40 (2024). https://doi.org/10.1007/s13370-024-01180-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s13370-024-01180-x