Abstract

3D multi-view video (MVV) consists of multiple video streams captured simultaneously by several cameras around a single scene. Because this multiplies the amount of data to be transmitted, achieving high 3D MVV compression is an urgent task in order to meet future bandwidth constraints while maintaining high reception quality. However, 3D MVV coded bit-streams that are transmitted over wireless networks can suffer from error propagation in the space, time and view domains. Error concealment (EC) algorithms have the advantage of improving the received 3D video quality without any modification of the transmission rate or of the encoder hardware or software. To improve the quality of reconstructed 3D MVV, we propose an efficient adaptive EC algorithm with multi-hypothesis modes that conceals the erroneous Macro-Blocks (MBs) of intra-coded and inter-coded frames by exploiting the spatial, temporal and inter-view correlations between frames and views. The proposed algorithm adapts to the 3D MVV motion features and to the error locations. The lost MBs are recovered by motion and disparity matching between frames and views on a pixel-by-pixel basis. Our simulation results show that the proposed adaptive multi-hypothesis EC algorithm can significantly improve the objective and subjective 3D MVV quality.

1 Introduction

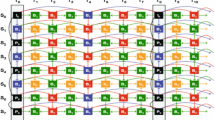

3D multi-view video (MVV) has received considerable attention lately and is expected to quickly replace traditional 2D video in different applications. In multi-view video coding (MVC), the original video content is a group of video sequences of the same scene captured simultaneously by multiple cameras. 3D MVV sequences exhibit high inter-view correlations between views, in addition to the spatio-temporal correlations within each view. 3D MVV transmitted over a wireless channel is subject to random and burst errors. Due to the predictive coding structure of 3D MVV shown in Fig. 1 [1], which uses intra- and inter-coded frames, errors can propagate to subsequent frames and to adjacent views and result in poor video quality [2]. It is not possible to retransmit all erroneous or lost packets because of the delay constraints on real-time video transmission. Therefore, there is a need for post-processing error concealment (EC) methods at the decoder.

Fig. 1 Multi-view video coding prediction structure (MVC-PS) [1]

EC algorithms are recommended since they reduce the visual artifacts caused by channel errors or erasures without increasing the latency or requiring difficult modifications of the encoder. They exploit the intra-view and inter-view correlations to conceal erroneous packets or frames [3]. They are also attractive because they neither require encoder changes nor increase the transmission delay or bit rate [4]. EC algorithms that were proposed to protect mono-view or stereoscopic videos against transmission errors [5–11] can be adopted to conceal erroneous frames in 3D MVV sequences. Moreover, they are expected to be more efficient in concealing errors in 3D MVV sequences, as they can additionally take advantage of the inter-view correlations [12].

Most EC algorithms at the decoder [3–11] rely on motion and disparity matching performed at the encoder on a block-by-block basis. These techniques reconstruct the lost Macro-Blocks (MBs) by employing the neighboring motion and disparity vectors that are obtained by block matching in the encoder. In this work, we propose an adaptive EC algorithm that is based on pixel matching in the decoder. We exploit the spatio-temporal and inter-view correlations between frames and views to estimate motion and disparity vectors using pixel-to-pixel matching rather than block-based matching. The proposed adaptive EC algorithm achieves significantly better objective and subjective quality than previous techniques that are based on block-matching EC methods. The rest of this paper is organized as follows: Sect. 2 presents the background on the MVC prediction structure and the basic EC algorithms, Sect. 3 presents the proposed EC algorithms for intra-frames and inter-frames, Sect. 4 describes our experimental simulation results and Sect. 5 concludes the paper.

2 Background

2.1 Multi-view Video Coding Prediction Structure (MVC-PS)

Unlike H.264/AVC coding [13] based on 2D simulcast, H.264/MVC coding exploits the high inter-view correlations between views in addition to the spatio-temporal correlations within a view. Motion compensation prediction (MCP) and disparity compensation prediction (DCP) [9] can be exploited at the encoder for high 3D video compression efficiency, as well as at the decoder for an effective EC mechanism. In the MVC-PS shown in Fig. 1, Motion Vectors (MVs) are calculated by MCP between frames in the same view and Disparity Vectors (DVs) are estimated by DCP between frames of adjacent views. Therefore, each frame in the MVC-PS can be predicted from frames of different views and/or from temporally adjacent frames.

The MVC-PS shown in Fig. 1 is used in this paper because of its effective performance. It represents a Group of Pictures (GOP) that contains pictures at 8 different time instants; the horizontal axis is the temporal axis, whereas the vertical axis shows the different camera views. The first view S0 is encoded using only the temporal correlations based on MCP. The other even views S2, S4 and S6 are also encoded based on temporal prediction (MCP), but their first frames are encoded using inter-view prediction (DCP). In the odd views S1, S3 and S5, both the temporal and inter-view predictions (MCP+DCP) are jointly used to enhance the compression efficiency. In this paper, we name each view according to its first key frame. Thus, as shown in Fig. 1, S0 is an I-view, the odd views (S1, S3 and S5) are B-views, and the even views (S2, S4 and S6) are P-views. The last view may be an odd or even view depending on the chosen GOP prediction structure; here it is a P-view.
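The view naming convention above can be written down in a few lines. The following is only an illustrative sketch: the function name `view_type` and the default of eight views are assumptions made here (the text lists views S0 to S6 plus a last view), not part of the H.264/MVC specification.

```python
def view_type(view_index: int, num_views: int = 8) -> str:
    """Return "I", "P" or "B" for view S<view_index> in the structure of Fig. 1.

    num_views is an assumed value; the last view is treated as a P-view,
    as stated in the text for the prediction structure used in this paper.
    """
    if not 0 <= view_index < num_views:
        raise ValueError("view index out of range")
    if view_index == 0:
        return "I"          # S0: temporal prediction only, starts with an I-frame
    if view_index == num_views - 1:
        return "P"          # last view is a P-view in this structure
    # odd views use MCP+DCP (B-views), even views are P-views
    return "B" if view_index % 2 == 1 else "P"


if __name__ == "__main__":
    print([f"S{i}:{view_type(i)}" for i in range(8)])
```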

The MVC-PS introduces two types of coded frames: intra-frames (I-frames) and inter-frames (P- and B-frames). Inter-frames in B-views are predicted from intra-frames in the I-view in addition to inter-frames in P-views. Therefore, if an error occurs in an I-view or P-view frame, it propagates to the temporally neighboring frames in the same view and also to the adjacent inter-view frames. For this reason, the proposed EC algorithm adaptively selects the appropriate EC mode, based on the types of the corrupted frame and view, to conceal the erroneous MBs. In our work, we propose multi-hypothesis EC modes for intra-frames and inter-frames in the I-view, P-views and B-views.

2.2 Error Concealment (EC) Techniques

3D MVV transmission over wireless networks may suffer from random and burst packet losses due to channel errors, which seriously degrade the received 3D video quality. Therefore, it is challenging to provide EC for reliable 3D video communication. EC is an effective way to repair the errors by replacing the missing parts of the video content with previously and correctly decoded parts of the video sequence, in order to eliminate or reduce the visual effects of bit-stream errors.

EC algorithms are attractive since they do not increase the bit rate or the transmission delay [12]. Therefore, we propose using EC algorithms to enhance the 3D video quality at the decoder by exploiting the inter-view correlations between the 3D video streams in addition to the spatio-temporal correlations within each view, as shown in Fig. 1.

EC algorithms that were proposed for mono-view H.264/AVC against transmission errors [5–11] can be adopted, with specific adaptive modifications, to conceal erroneous frames in 3D MVV sequences. They are expected to be more reliable for concealing errors in 3D H.264/MVC, as they can take advantage of the inter-view correlations. The frame temporal replacement algorithm (FTRA) [6] is a simple temporal EC method that is reasonable for static or very slow 3D video sequences; the lost MBs are replaced by the co-located MBs in the reference frame. The outer block boundary matching algorithm (OBBMA) and the decoder motion vector estimation algorithm (DMVEA) [7] are more sophisticated temporal EC techniques that are suitable for fast moving videos.

OBBMA determines the MVs by matching the two-pixel-wide outer boundary of the replacing MB against the same outer boundary of the lost MB. It only uses the outer borders of the reference MBs to check the highly correlated neighboring MVs, as shown in the left part of Fig. 2. DMVEA estimates the MVs of the lost MBs by using a full search in the reference frames, and it identifies the replacing MBs that minimize the boundary distortion error, as shown in the right part of Fig. 2. DMVEA provides stronger EC performance than OBBMA with approximately the same computational complexity. However, because DMVEA relies on full-search motion estimation, it is inherently slow.
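The boundary-matching idea shared by OBBMA and DMVEA can be sketched as follows. This is a minimal illustration, not the implementation of [7]: it assumes grey-level frames stored as lists of rows, a lost block that does not touch the frame border, a small hypothetical search window (`search=2`), and the sum of absolute differences (SAD) as the boundary distortion measure. The function names are chosen here for illustration.

```python
def boundary_sad(cur, ref, top, left, size, dy, dx, width=2):
    """SAD between the outer ring (width pixels wide) around the lost block
    in the current frame and the same ring displaced by (dy, dx) in ref."""
    total = 0
    for r in range(top - width, top + size + width):
        for c in range(left - width, left + size + width):
            if top <= r < top + size and left <= c < left + size:
                continue  # lost pixels carry no usable information
            total += abs(cur[r][c] - ref[r + dy][c + dx])
    return total


def best_motion_vector(cur, ref, top, left, size, search=2, width=2):
    """Full search over a (2*search+1)^2 window; returns (sad, dy, dx)."""
    height, width_px = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            r0, c0 = top - width + dy, left - width + dx
            r1, c1 = top + size + width + dy, left + size + width + dx
            if r0 < 0 or c0 < 0 or r1 > height or c1 > width_px:
                continue  # displaced ring would leave the reference frame
            sad = boundary_sad(cur, ref, top, left, size, dy, dx, width)
            if best is None or sad < best[0]:
                best = (sad, dy, dx)
    return best
```

The lost block would then be filled with the block at the returned displacement in the reference frame. Restricting the candidate displacements to the MVs of neighboring blocks instead of the full window gives a cheaper, OBBMA-like variant.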

The weighted pixel averaging algorithm (WPAA) and the directional interpolation EC algorithm (DIECA) [7] are used for spatial and inter-view EC. WPAA conceals the damaged pixels using the horizontal and vertical pixels in the neighboring blocks, as shown in the left part of Fig. 3. DIECA conceals the damaged MBs by calculating the object edge direction from the neighboring blocks; the edge direction with the largest magnitude is chosen as the direction used to conceal the damaged MBs, as shown in the right part of Fig. 3.
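A possible reading of WPAA is sketched below. The text only states that horizontal and vertical neighboring pixels are used, so the inverse-distance weighting is an assumption made here, as are the function name `wpaa_conceal` and the requirement that the lost block does not touch the frame border.

```python
def wpaa_conceal(frame, top, left, size):
    """Fill a size x size lost block from the nearest intact pixels above,
    below, left and right of it, weighted by inverse distance (assumed)."""
    out = [row[:] for row in frame]  # work on a copy
    for r in range(top, top + size):
        for c in range(left, left + size):
            # (pixel value, distance to the lost pixel) for each direction
            neighbours = [
                (frame[top - 1][c], r - top + 1),        # above the block
                (frame[top + size][c], top + size - r),  # below the block
                (frame[r][left - 1], c - left + 1),      # left of the block
                (frame[r][left + size], left + size - c) # right of the block
            ]
            weights = [1.0 / d for _, d in neighbours]
            weighted = sum(w * p for w, (p, _) in zip(weights, neighbours))
            out[r][c] = weighted / sum(weights)
    return out
```

With this weighting, a lost pixel close to one block edge is dominated by the intact pixel just across that edge, which keeps the concealed block continuous with its surroundings.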

The scene change detection algorithm [8] is used in our work to determine the degree of motion of the 3D video content. It divides the 3D video content into consecutive frames and then measures the match between each two consecutive frames by calculating the luminance, color and edge differences. It also calculates the motion vector between the two consecutive frames to quantify the degree of change between them and thus classify the 3D video content as slow or fast.
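The slow/fast decision can be illustrated with a much simpler stand-in than the algorithm of [8]. The sketch below uses only the mean absolute luminance difference between consecutive frames; the function names and the threshold of 10 grey levels are hypothetical and would need tuning on real sequences.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute luminance difference between two equally sized frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    return total / count


def classify_motion(frames, threshold=10.0):
    """Return "fast" or "slow" from the average frame-to-frame difference.

    threshold is an assumed value, not one taken from the paper.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    diffs = [mean_abs_diff(frames[i], frames[i + 1])
             for i in range(len(frames) - 1)]
    activity = sum(diffs) / len(diffs)
    return "fast" if activity > threshold else "slow"
```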

3 Proposed Pixel-level Matching based EC Algorithm

In this section, we present the proposed EC algorithms, which adaptively select the appropriate EC mode based on the types of the erroneous frame and view to conceal the erroneous MBs. We use multi-hypothesis EC modes that depend on the error pattern, which may occur in intra-frames or inter-frames of the I-view, a P-view or a B-view. The proposed EC modes also adapt to the motion characteristics of the transmitted 3D MVV. The proposed EC algorithms do not operate on a block-matching basis; instead, the lost MBs are recovered by motion and disparity matching between frames and views on a pixel-matching basis.

In pixel-matching based EC techniques, each neighboring pixel of a lost pixel has its own disparity and motion vector, unlike block-matching based EC methods, in which all lost pixels in one block share the same MV and DV. Accordingly, the proposed EC mechanisms use the estimated candidate MVs and DVs of the matching pixels in the reference frame and view to conceal the lost pixels on a pixel-by-pixel rather than a block-by-block basis. Consider a transmitted I-, P- or B-frame in which the vectors $\mathbf{P}_{U_1}$, $\mathbf{P}_{U_2}$, $\mathbf{P}_{R_1}$, $\mathbf{P}_{R_2}$, $\mathbf{P}_{B_1}$, $\mathbf{P}_{B_2}$, $\mathbf{P}_{L_1}$ and $\mathbf{P}_{L_2}$ consist of the outside boundary pixels on the upper, right, bottom and left sides of the missing pixel, respectively. The upper, right, bottom and left inner boundary pixels of the candidate pixel are represented by the vectors $\mathbf{X}_{U_1}$, $\mathbf{X}_{U_2}$, $\mathbf{X}_{R_1}$, $\mathbf{X}_{R_2}$, $\mathbf{X}_{B_1}$, $\mathbf{X}_{B_2}$, $\mathbf{X}_{L_1}$ and $\mathbf{X}_{L_2}$, respectively. The DVs or MVs between the adjacent pixels and their matching pixels can be calculated using Eqs. (1)–(6).

A high-level flow chart of the proposed joint intra-frame and inter-frame EC algorithms at the decoder, showing when each EC mode is applicable, is presented in Fig. 4. The proposed pixel-by-pixel based multi-hypothesis EC modes can be summarized as follows.

3.1 Hypothesis EC Mode 1: Adaptive Spatio-Temporal EC for I-Frame/I-View

1. Find the locations of the lost pixels inside the erroneous I-frame.
2. Determine whether the received 3D MVV is a slow or fast moving video by applying the scene change detection algorithm [8].
3. If the received video is a slow moving video, then
   a. Replace the lost pixels by the pixels located at the same spatial positions in the reference frame.

   If the received video is a fast moving video, then
   b. Apply the DMVEA algorithm to find the pixels adjacent to the lost pixel (up, down, left and right) and their matching pixels in the reference frame.
   c. Apply the DIECA algorithm to find the matching pixels surrounding the lost pixels, depending on the location of the lost pixels.
      - Find the Disparity Vectors (DVs) between the lost pixels and their surrounding pixels.
      - Select the candidate pixels that give the smallest sum of absolute differences (SAD).
4. Calculate the average value of the selected candidate MVs and DVs found in the previous step.
5. Compare the temporal information with the spatial information and set the coefficients of the averaged MVs and DVs (avg(MVs) and avg(DVs), respectively) accordingly:
   - If temporal information < spatial information: Candidate pixel = 1/3 avg(MVs) + 2/3 avg(DVs).
   - Otherwise: Candidate pixel = 2/3 avg(MVs) + 1/3 avg(DVs).
6. Replace the lost pixel with the candidate pixel calculated using the weighted average of MVs and DVs in step 5.
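The weighting rule of step 5 can be illustrated with a short sketch. It assumes that avg(MVs) and avg(DVs) have already been turned into one temporal and one spatial candidate pixel value, and that the temporal and spatial information are available as two comparable scalar measures; how these measures are computed is not specified here, so the parameter names are hypothetical.

```python
def blend_candidates(temporal_value, spatial_value,
                     temporal_info, spatial_info):
    """Weighted candidate pixel: the hypothesis backed by more
    information receives weight 2/3, the other one 1/3."""
    if temporal_info < spatial_info:
        w_temporal, w_spatial = 1.0 / 3.0, 2.0 / 3.0
    else:
        w_temporal, w_spatial = 2.0 / 3.0, 1.0 / 3.0
    return w_temporal * temporal_value + w_spatial * spatial_value


if __name__ == "__main__":
    # spatial information dominates, so the spatial candidate weighs more
    print(blend_candidates(90, 120, temporal_info=1.0, spatial_info=2.0))
```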

3.2 Hypothesis EC Mode 2: Adaptive Temporal EC for B-Frame in I-view or P-view

1. Find the locations of the lost pixels inside the erroneous B-frame in the I-view or P-view.
2. Apply steps (2), (3.a) and (3.b) of EC Mode 1 to find the matching pixels inside the forward and backward reference frames, depending on the received 3D MVV characteristics (slow or fast).
3. Find the candidate MVs that are most correlated with the lost pixels.
4. Average the MV values of the best-matching candidate pixels.
5. Replace the lost pixels with the candidate pixels obtained from the averaged value.

3.3 Hypothesis EC Mode 3: Adaptive Inter-View EC for P-Frame in P-view

1. Find the locations of the lost pixels inside the erroneous P-frame in the P-view.
2. Apply step (3.c) of EC Mode 1 to find the matching pixels inside the left reference frame.
3. Find the candidate DVs that are most correlated with the lost pixels.
4. Average the DV values of the candidate pixels.
5. Replace the lost pixels with the candidate pixels obtained from the averaged value.

3.4 Hypothesis EC Mode 4: Adaptive Temporal-Inter-view EC for B-Frame in B-view

1. Find the locations of the lost pixels inside the erroneous B-frame in the B-view.
2. Apply steps (2), (3.a) and (3.b) of EC Mode 1 to find the matching pixels inside the previous and subsequent reference frames, depending on the received 3D MVV characteristics (slow or fast).
3. Apply step (3.c) of EC Mode 1 to find the matching pixels inside the left and right reference frames.
4. Find the candidate MVs and DVs that best match the lost pixels.
5. Average the DV and MV values of the candidate pixels.
6. Set the coefficients of the averaged MVs and DVs (avg(MVs) and avg(DVs), respectively) depending on whether the temporal information is larger than the spatial information or vice versa, as in step 5 of EC Mode 1, by selecting between the following two cases:
   - If temporal information < spatial information: Candidate pixel = 1/3 avg(MVs) + 2/3 avg(DVs).
   - Otherwise: Candidate pixel = 2/3 avg(MVs) + 1/3 avg(DVs).
7. Replace the lost pixels with the candidate pixels obtained from the weighted average of MVs and DVs in the previous step.
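The applicability of the four modes, as given by their headings, amounts to a lookup on the frame type and the view type. The following sketch shows that dispatch; the table name `EC_MODES` and the function `select_ec_mode` are illustrative names introduced here, and combinations not covered by Sects. 3.1 to 3.4 are rejected rather than guessed.

```python
# (frame type, view type) -> hypothesis EC mode, following Sects. 3.1-3.4
EC_MODES = {
    ("I", "I"): 1,  # adaptive spatio-temporal EC
    ("B", "I"): 2,  # adaptive temporal EC
    ("B", "P"): 2,  # adaptive temporal EC
    ("P", "P"): 3,  # adaptive inter-view EC
    ("B", "B"): 4,  # adaptive temporal-inter-view EC
}


def select_ec_mode(frame_type: str, view_type: str) -> int:
    """Return the EC mode number for an erroneous frame."""
    try:
        return EC_MODES[(frame_type, view_type)]
    except KeyError:
        raise ValueError(
            f"no EC mode defined for {frame_type}-frame in {view_type}-view"
        ) from None
```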

$$\varepsilon_{U}^{2} = \sum_{i=1,2} \left\| \mathbf{X}_{U_i} - \mathbf{P}_{U_i} \right\|^{2}$$ (1)

$$\varepsilon_{R}^{2} = \sum_{i=1,2} \left\| \mathbf{X}_{R_i} - \mathbf{P}_{R_i} \right\|^{2}$$ (2)

$$\varepsilon_{B}^{2} = \sum_{i=1,2} \left\| \mathbf{X}_{B_i} - \mathbf{P}_{B_i} \right\|^{2}$$ (3)

$$\varepsilon_{L}^{2} = \sum_{i=1,2} \left\| \mathbf{X}_{L_i} - \mathbf{P}_{L_i} \right\|^{2}$$ (4)

$$\hat{X} = \arg\min_{\mathbf{X}_{U_1}, \mathbf{X}_{U_2}, \ldots, \mathbf{X}_{L_2}} \varepsilon^{2}$$ (5)

$$\varepsilon^{2} = \varepsilon_{U}^{2} + \varepsilon_{R}^{2} + \varepsilon_{B}^{2} + \varepsilon_{L}^{2}$$ (6)
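Equations (1)–(6) describe a boundary-matching cost and the selection of the candidate that minimizes it. A minimal sketch is given below under simplifying assumptions: each boundary vector is reduced to a single scalar pixel value, so every side is a list of two numbers (i = 1, 2), and the names `side_error`, `total_error` and `best_candidate` are introduced here for illustration.

```python
SIDES = ("U", "R", "B", "L")  # upper, right, bottom, left


def side_error(x_side, p_side):
    """Eqs. (1)-(4): squared error between the inner boundary X of a
    candidate and the outer boundary P of the missing pixel on one side."""
    return sum((x - p) ** 2 for x, p in zip(x_side, p_side))


def total_error(candidate, boundary):
    """Eq. (6): sum of the four side errors."""
    return sum(side_error(candidate[s], boundary[s]) for s in SIDES)


def best_candidate(candidates, boundary):
    """Eq. (5): the candidate with the smallest total error."""
    return min(candidates, key=lambda cand: total_error(cand, boundary))


if __name__ == "__main__":
    boundary = {"U": [10, 12], "R": [20, 22], "B": [30, 32], "L": [40, 42]}
    exact = {side: list(values) for side, values in boundary.items()}
    offset = {side: [v + 1 for v in values] for side, values in boundary.items()}
    print(total_error(offset, boundary), best_candidate([offset, exact], boundary) is exact)
```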

4 Simulation Results

To evaluate the performance of the proposed EC algorithms, we ran experiments on two well-known video sequences, Ballroom [14] and Uli [15]. Uli is a slow moving sequence, whereas Ballroom is a fast moving sequence. The JMVC reference software based on H.264/MVC [16] is employed as the platform for our simulation work. All encoding parameters are set according to the JVT common test conditions [17]. For each sequence, the coded bit-streams are transmitted over a Rayleigh fading wireless channel with different Signal to Noise Ratios (SNRs). The received bit-stream is then decoded using the proposed adaptive multi-hypothesis EC modes. In all the proposed methods, it is assumed that the slice information/view component information is not corrupted.

Figures 5 and 6 show the subjective results for the Uli and Ballroom sequences, respectively. We select the 209th intra-coded I-frame as a test frame at channel SNR = −5 dB. We recovered the 209th intra-frame with the spatial DIECA algorithm only, the temporal DMVEA algorithm only, and with the full hybrid spatio-temporal hypothesis EC mode 1 algorithm. The corresponding objective Peak Signal to Noise Ratio (PSNR) results for the same frame of the same video sequences are shown in Fig. 7 at different channel SNRs. We observe that the full hybrid spatio-temporal hypothesis EC mode 1 algorithm has the best subjective and objective results compared to using the spatial DIECA algorithm only or applying the temporal DMVEA algorithm only, as the hypothesis EC mode 1 algorithm efficiently exploits the redundant information in both space and time domains simultaneously.
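For reference, the PSNR used as the objective measure follows the standard definition, 10 log10(peak² / MSE), between the error-free and the concealed frame. The sketch below assumes 8-bit luminance frames stored as lists of rows; the function name `psnr` is chosen here and is not taken from the JMVC software.

```python
import math


def psnr(original, reconstructed, peak=255.0):
    """Peak Signal to Noise Ratio in dB between two equally sized frames."""
    squared_error = 0.0
    count = 0
    for row_o, row_r in zip(original, reconstructed):
        for o, r in zip(row_o, row_r):
            squared_error += (o - r) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(peak ** 2 / mse)
```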

To demonstrate the effectiveness of the proposed adaptive hypothesis EC modes for P and B inter-frames, the Uli and Ballroom sequences were tested with low complexity parameters [16] at the decoder, which makes the EC process more practical. In the experimental tests, the P and B inter-view frames within views S1 and S2 are corrupted by transmission over a Rayleigh fading wireless channel with different SNRs. The frames are recovered and concealed with the proposed adaptive hypothesis EC modes 2, 3 and 4 for inter-frames. Figures 8 and 9 show the subjective simulation results for the selected 209th inter B-frame in B-view S1 and inter P-frame in P-view S2, respectively, of the Uli and Ballroom sequences. In Fig. 8A-c and B-c, the 209th inter B-frame in view S1 is concealed using the proposed hypothesis EC mode 4 without first concealing the errors of the intra I-frame, while in Fig. 8A-d and B-d, the intra I-frame is also recovered using our hypothesis EC mode 1. This demonstrates the importance of intra I-frame EC. Figure 9 shows the subjective results for the 209th inter P-frame in P-view S2 of the Uli and Ballroom sequences, recovered using hypothesis EC mode 3 without the intra I-frame hypothesis EC mode 1 (Fig. 9A-c and B-c) and with intra I-frame EC (Fig. 9A-d and B-d).

Fig. 8 Subjective simulation results for the selected 209th inter B-frame in B-view S1 of the A “Uli” and B “Ballroom” sequences, respectively, at channel SNR = −5 dB: a error-free B209 frame, b erroneous inter B209 frame with SNR = −5 dB, c concealed inter B209 frame without intra I209 frame EC, d concealed inter B209 frame with intra I209 frame EC

Fig. 9 Subjective simulation results for the selected 209th inter P-frame in P-view S2 of the A “Uli” and B “Ballroom” sequences, respectively, at channel SNR = −5 dB: a error-free P209 frame, b erroneous inter P209 frame with SNR = −5 dB, c concealed inter P209 frame without intra I209 frame EC, d concealed inter P209 frame with intra I209 frame EC

Figures 10 and 11 present the objective simulation results of recovering the inter B-frame in B-view S1 and the inter P-frame in P-view S2, respectively, of the 3D multi-view Uli and Ballroom video sequences. We again observe that intra I-frame EC improves the performance of inter B- or P-frame EC. Table 1 shows the computational time complexity of the proposed EC modes for pixel-domain and block-domain operation on the 3D multi-view Uli and Ballroom video sequences.

5 Conclusions

In this paper, we have proposed multi-hypothesis EC modes for intra-frames and inter-frames of 3D MVV coded sequences corrupted by Rayleigh fading wireless channel errors. The core idea of the proposed algorithms is to adaptively and jointly use the spatial, temporal and inter-view correlations in MVC sequences for EC of both intra-frames and inter-frames. The lost MBs are recovered by motion and disparity matching between frames and views on a pixel-by-pixel basis. Our experimental results show that the proposed adaptive pixel-matching hybrid spatio-temporal-inter-view EC modes are significantly superior to conventional EC algorithms that exploit correlation in only the spatial domain or only the temporal domain, e.g. [5–11]. Our results also demonstrate that the proposed pixel-matching EC modes are objectively and subjectively superior to block-matching based EC methods [3–11]. We further show the importance of EC of intra I-frames, as it affects the quality of EC of inter P- and B-frames as well. We conclude that the proposed pixel-matching based EC modes can efficiently conceal errors and lost MBs of MVC intra-frames and inter-frames and deliver high quality 3D multi-view video.

References

Merkle, P., Smolic, A., Muller, K., & Wiegand, T. (2007). Efficient prediction structures for multiview video coding. IEEE Transactions on Circuits and Systems for Video Technology, 17(11), 1461–1473.

Zhou, Y., Hou, C., Pan, R., Yuan, Z., & Yang, L. (2010). Distortion analysis and error concealment for multi-view video transmission. In Broadband multimedia systems and broadcasting (BMSB), IEEE international symposium, March, pp. 1–5, 24–26.

Song, K., & Kim, C. (2009). Error concealment of multi-view video sequences using inter-view and intra-view correlations. Journal of Visual Communication and Image Representation, 20, 281–292.

Chung, T., Song, K., & Kim, C. (2007). Error concealment techniques for multi-view video sequences. In Proceedings of the multimedia 8th Pacific Rim conference on Advances in multimedia information processing, Springer-Verlag, Hong Kong (pp. 619–627).

Nasiopoulos, P., Mendozal, L., Mansour, H., & Golikeri, A. (2005). An improved error concealment algorithm for intra-frames in H.264/AVC. In IEEE international symposium on circuits and systems, ISCAS (pp. 320–323).

Hrusovsky, B., Mochnac, J., & Marchevsky, S. (2010). Temporal-spatial error concealment algorithm for intra-frames in H.264/AVC coded video. In Radioelektronika, 20th international conference (pp. 1–4), April 19–21.

Seiler, J., & Kaup, A. (2008). Adaptive joint spatio-temporal error concealment for video communication. In IEEE 10th workshop on multimedia signal processing (pp. 229–234). October 8–10.

Saurav, K., Zhenyu, W., & Jill, M. (2006). An error concealment scheme for entire frame losses for H.264/AVC. In Sarnoff Symposium, IEEE (pp. 1–4). March 27–28.

Xiang, X., Zhao, D., Wang, Q., Ji, X., & Gao, W. (2007). A novel error concealment method for stereoscopic video coding. In ICIP IEEE international conference on image processing (Vol. 5, pp. V-101–V-104). October 19.

Hwang, M., & Ko, S. (2008). Hybrid temporal error concealment methods for block-based compressed video transmission. IEEE Transactions on Broadcasting, 54(2), 198–207.

Chung, Y., Chen, L., Chen, X., Yeh, W., & Bae, C. (2008). A novel intra-frame error concealment algorithm for H.264 AVC. In Third international conference on convergence and hybrid information technology, ICCIT’08 (Vol. 1, pp. 881–886).

El-Shafai, W., Hrusovsky, B., El-Khamy, M., & El-Sharkawy, M. (2011). Joint Space-Time-View error concealment algorithms for 3D Multi-View video. In 18th IEEE international conference on image processing (IEEE ICIP2011) (pp. 2249–2252). Brussels.

http://www.wftp3.itu.int/avarch/jvt/2009_01_Geneva/JVTAD207.zip (2015).

ISO/IEC JTC1/SC29/WG11. (2006). Common test conditions for multiview video coding. JVT-U207, Hangzhou.


El-Shafai, W. Pixel-level Matching Based Multi-hypothesis Error Concealment Modes for Wireless 3D H.264/MVC Communication. 3D Res 6, 31 (2015). https://doi.org/10.1007/s13319-015-0064-5
