Abstract

Image compression techniques are widely used on 2D images, 2D video, 3D images and 3D video. There are many types of compression techniques, and among the most popular are JPEG and JPEG2000. In this research, we introduce a new compression method for high-resolution images based on a two-level discrete cosine transform (DCT) and a two-level discrete wavelet transform (DWT) combined with novel compression steps. The proposed image compression algorithm consists of four steps: (1) transform an image by a two-level DWT followed by a DCT to produce two matrices, the DC- and AC-Matrix (the low- and high-frequency matrices, respectively); (2) apply a second-level DCT to the DC-Matrix to generate two arrays, namely a nonzero-array and a zero-array; (3) apply the Minimize-Matrix-Size algorithm to the AC-Matrix and to the other high-frequency sub-bands generated by the second-level DWT; (4) apply arithmetic coding to the output of the previous steps. A novel decompression algorithm, the Fast-Match-Search algorithm (FMS), is used to reconstruct all high-frequency matrices. The FMS algorithm computes all possible compressed-data combinations in a table and then uses a binary search algorithm to find the decompressed data inside the table. Thereafter, all decoded DC-values are combined with the decoded AC-coefficients in one matrix, followed by a two-level inverse DCT and a two-level inverse DWT. The technique is tested by compression and reconstruction of 3D surface patches. Additionally, it is compared with the JPEG and JPEG2000 algorithms through 2D and 3D root-mean-square error following reconstruction. The results demonstrate that the proposed compression method has better visual properties than JPEG and JPEG2000 and is able to reconstruct surface patches in 3D more accurately.

1 Introduction

Compression methods for large data files such as images are developing rapidly, and data compression in multimedia applications has lately become more important. Although the price of storage has steadily decreased, the amount of generated image and video data has increased exponentially. This is most evident in large data repositories such as YouTube and the cloud storage offered by a number of suppliers. With the growth of network traffic and storage requirements, more efficient methods are needed to compress image and video data, retaining high quality while significantly reducing storage size. The discrete cosine transform (DCT) has been used extensively [3, 25] in image compression. The image is divided into segments and each segment is then subjected to the transform, creating a series of frequency components that correspond to detail levels of the image. Several forms of coding are applied in order to store only the coefficients found to be significant. This approach is used in the popular JPEG file format, and most video compression methods and multimedia applications are generally based on it [5, 11].

A step beyond JPEG is JPEG2000, which is based on the discrete wavelet transform (DWT), a mathematical tool for hierarchically decomposing functions. Image compression using wavelet transforms is a powerful method, preferred for obtaining compressed images at higher compression ratios with higher PSNR values [17, 18]. Its superiority in compression ratio, error resilience and other features made it a candidate for the next compression standard and led to the JPEG2000 ISO standard. JPEG takes its name from the Joint Photographic Experts Group; the JPEG2000 codec is more efficient than its predecessor JPEG and overcomes its drawbacks [6]. It also offers more flexibility than many other codecs, including region-of-interest coding, high dynamic range of intensity values, multiple components, lossy and lossless compression, efficient computation and compression-rate control. The robustness of JPEG2000 stems from its use of the DWT for encoding image data. The DWT is highly effective in image compression because it supports a multi-resolution representation in both the spatial and frequency domains. In addition, the DWT supports progressive image transmission and region-of-interest coding [1, 7].

Furthermore, the compression of 3D data introduces additional requirements. We have demonstrated that, while the geometry and connectivity of a 3D mesh can be tackled by a number of techniques such as high-degree polynomial interpolation [16] or partial differential equations [13, 15], the efficient compression of 2D images, both for 3D reconstruction and for texture mapping in structured light 3D applications, has not been addressed. Moreover, many applications need to transmit 3D models over the Internet: to share CAD/CAM models with e-commerce customers, to update content for entertainment applications, or to support collaborative design, analysis and display of engineering, medical and scientific datasets. Bandwidth imposes hard limits on the amount of data that can be transmitted and, together with storage costs, limits the complexity of the 3D models that can be transmitted over the Internet and other networked environments. It is envisaged that surface patches can be compressed as a 2D image together with 3D calibration parameters, transmitted over a network and remotely reconstructed (geometry, connectivity and texture map) at the receiving end with the same resolution as the original data [12, 22]. Siddeq and Rodrigues [22] proposed a 2D image compression method for 3D applications based on the DWT and DCT. The DWT is combined with the DCT to produce a series of high-frequency matrices, and the same transformation is applied again to the low-frequency matrix to produce another series of high-frequency data arrays. Finally, the series of data are coded by the Minimize-Matrix-Size algorithm (MMS), and the coded data are decoded by the Limited-Sequential Search algorithm (LSS) to reconstruct the 2D images. The advantage of this method is that it recovers high-resolution images at compression ratios of up to 98 %. However, the complexity of the algorithm means that compression and decompression take up to a few minutes.
In this research we describe a new method for image compression based on two separate transformations: a two-level DWT, whose low-frequency sub-band is then passed to a two-level DCT. This increases the number of high-frequency matrices, which are then shrunk using the Enhanced MMS (EMMS) algorithm. This paper demonstrates that our compression algorithm achieves compression ratios of up to 99.5 % and more accurate 3D reconstruction than standard JPEG and JPEG2000.

2 The Proposed 2D Image Compression Algorithm

This section presents a novel lossy compression algorithm implemented via the DWT and DCT. The algorithm starts with a two-level DWT. While all high-frequency sub-bands of the first level (HL1, LH1, HH1) are discarded, all sub-bands of the second level are further encoded. We then apply the DCT to the low-frequency sub-band of the second level (LL2); the main reason for using the DCT is to split LL2 into a further pair of low- and high-frequency matrices (DC-Matrix1 and AC-Matrix1). The EMMS algorithm is then applied to compress AC-Matrix1 and the high-frequency matrices (HL2, LH2, HH2). DC-Matrix1 is subjected to a second DCT, whose AC-Matrix2 is quantized and then arithmetic-coded together with DC-Matrix2 and the output of the EMMS algorithm, as depicted in Fig. 1.

2.1 Two Level Discrete Wavelet Transform (DWT)

The implementation of the wavelet compression scheme is very similar to that of a sub-band coding scheme: the signal is decomposed using filter banks [4, 24]. The output of the filter banks is down-sampled, quantized and encoded. The decoder decodes the coded representation, up-samples and recomposes the signal. The wavelet transform divides the information of an image into an approximation sub-band (LL) and detail sub-bands. The approximation sub-band shows the general trend of pixel values, and the three detail sub-bands show the vertical, horizontal and diagonal details in the image. If these details are very small, they can be set to zero without significantly changing the image [20]; for this reason the high-frequency sub-bands compress into fewer bytes. The DWT uses filters to decompose an image, and these filters help increase the number of zeros in the high-frequency sub-bands. A common filter used in decomposition and composition is the Daubechies filter. It concentrates much of the information about the original image in the LL sub-band, while the high-frequency sub-bands contain less significant details; this is an important property of the Daubechies filter for achieving a high compression ratio. A two-dimensional DWT is implemented as two one-dimensional passes over the image, first on each row and then on each column [11, 18]:

In the proposed method the high-frequency sub-bands of the first level are set to zero (i.e., HL1, LH1 and HH1 are discarded). These sub-bands have little effect on image details, and they contain only a small number of nonzero values. In contrast, the high-frequency sub-bands of the second level (HL2, LH2 and HH2) cannot be discarded, as this would significantly affect the image. For this reason, the high-frequency values at this level are quantized. The quantization depends on the maximum value in each sub-band, as shown by the following equation [23]:

where H2 represents each high-frequency sub-band of the second DWT level (i.e., HL2, LH2 and HH2), H2m represents the maximum value in that sub-band, and Ratio scales the maximum value to set the quantization step, which controls image quality. For example, if the maximum value in a sub-band is 60 and Ratio = 0.1, the quantization value is H2m × Ratio = 6, so all values in the sub-band are divided by 6.

Each sub-band has a different priority for preserving image details; in decreasing order of priority they are LH2, HL2 and HH2. Most of the information about image details is in HL2 and LH2. If most of the nonzero data in these sub-bands are retained, the image quality will be high even if some information is lost in HH2. For this reason, the Ratio value for HL2, LH2 and HH2 is chosen from the range {0.1, …, 0.5}.
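The two-level DWT stage and the sub-band quantization described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the Haar wavelet (db1, the simplest Daubechies wavelet) with averaging normalization stands in for the Daubechies filter, image sides are assumed divisible by 4, H2m is taken as the largest magnitude in a sub-band, and quantized values are assumed to be rounded to the nearest integer. The helper names are hypothetical.

```python
def haar_1d(x):
    """One level of a 1D Haar transform: pairwise averages and half-differences."""
    half = len(x) // 2
    low = [(x[2 * i] + x[2 * i + 1]) / 2 for i in range(half)]
    high = [(x[2 * i] - x[2 * i + 1]) / 2 for i in range(half)]
    return low, high

def transpose(m):
    return [list(col) for col in zip(*m)]

def haar_2d(img):
    """One 2D level: filter every row, then every column of each half.
    Returns (LL, LH, HL, HH); here the first letter names the row filter
    (sub-band naming conventions differ between texts)."""
    row_low, row_high = zip(*(haar_1d(row) for row in img))

    def filter_columns(m):
        lows, highs = zip(*(haar_1d(col) for col in transpose(m)))
        return transpose(lows), transpose(highs)

    ll, lh = filter_columns(row_low)
    hl, hh = filter_columns(row_high)
    return ll, lh, hl, hh

def quantize_subband(h2, ratio):
    """Divide a level-2 detail sub-band by H2m * Ratio and round (rounding assumed)."""
    h2m = max(abs(v) for row in h2 for v in row)   # assumption: largest magnitude
    if h2m == 0:
        return [[0 for _ in row] for row in h2], 0.0
    step = h2m * ratio
    return [[round(v / step) for v in row] for row in h2], step

def two_level_dwt(img, ratios):
    """Level-1 details are discarded; level-2 details are quantized."""
    ll1, _lh1, _hl1, _hh1 = haar_2d(img)           # HL1, LH1, HH1 set to zero
    ll2, lh2, hl2, hh2 = haar_2d(ll1)
    bands = {name: quantize_subband(band, ratios[name])[0]
             for name, band in (("LH2", lh2), ("HL2", hl2), ("HH2", hh2))}
    return ll2, bands

# Usage: a constant 8 x 8 image has no detail, so every level-2 detail band is zero.
ll2, bands = two_level_dwt([[5] * 8 for _ in range(8)],
                           {"LH2": 0.1, "HL2": 0.1, "HH2": 0.5})
```

With a maximum of 60 and Ratio = 0.1, `quantize_subband` divides by a step of 6, matching the worked example in the text.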

2.2 Two Level Discrete Cosine Transform (DCT)

In this section we describe the two-level DCT applied to the low-frequency sub-band LL2 (see Fig. 1). A quantization is first applied to LL2 as follows: the minimum of LL2 is subtracted from all values in LL2, which are then divided by 2 (a constant even number). Thereafter, a two-dimensional DCT is applied to produce de-correlated coefficients. In the frequency domain, each block (e.g., 8 × 8; the block size is variable) holds the DC value at its first location, while all the other coefficients are called AC coefficients. The main reason for using the DCT to split the final sub-band (LL2) into two different matrices is to produce a low-frequency matrix (DC-Matrix) and a high-frequency matrix (AC-Matrix). The following two steps are used in the two-level DCT implementation:

Organize LL2 into 8 × 8 non-overlapping blocks (other sizes can also be used such as 16 × 16), then apply DCT to each block followed by quantization. The following equations represent the DCT and its inverse [2, 18, 25]:

The quantization table is a matrix of the same size as the block and can be represented as follows:

where i, j = 1, 2,…, Block, Scale = 1, 2, 3,…, Block.

After applying the two-dimensional DCT to each 8 × 8 or 16 × 16 block, each block is divided element-wise by the matrix Q and the results are truncated. This process removes insignificant coefficients and increases the number of zeros in each block. In Eq. (4), the factor Scale is used to increase or decrease the values of Q; thus, Scale > 1 reduces image details. No fixed range is imposed on this factor, because the appropriate value depends on the DCT coefficients.
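The first step (block DCT followed by element-wise division by Q with truncation) can be sketched as below. The sketch assumes the orthonormal DCT-II, since the document's own DCT equations are not reproduced in this text, and it takes the quantization table Q as an input rather than computing Eq. (4). The function names are hypothetical, and the direct O(n^4) loops favour readability over speed.

```python
import math

def _alpha(k, n):
    """Normalization factor of the orthonormal DCT-II."""
    return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

def _basis(i, k, n):
    return math.cos((2 * i + 1) * k * math.pi / (2 * n))

def dct2(block):
    """Forward 2D DCT of a square n x n block; element [0][0] is the DC value."""
    n = len(block)
    return [[_alpha(u, n) * _alpha(v, n) *
             sum(block[x][y] * _basis(x, u, n) * _basis(y, v, n)
                 for x in range(n) for y in range(n))
             for v in range(n)] for u in range(n)]

def idct2(coeffs):
    """Inverse 2D DCT (orthonormal DCT-III)."""
    n = len(coeffs)
    return [[sum(_alpha(u, n) * _alpha(v, n) * coeffs[u][v] *
                 _basis(x, u, n) * _basis(y, v, n)
                 for u in range(n) for v in range(n))
             for y in range(n)] for x in range(n)]

def quantize_block(coeffs, q):
    """Divide element-wise by Q and truncate toward zero, as described in the text."""
    return [[math.trunc(c / qv) for c, qv in zip(c_row, q_row)]
            for c_row, q_row in zip(coeffs, q)]
```

For a constant 8 × 8 block of value 10, `dct2` concentrates all the energy in the DC value (80), and every AC coefficient truncates to zero after quantization.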

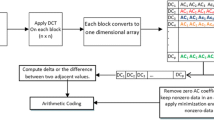

Convert each block to a 1D array, and then transfer its first value to a new matrix called DC-Matrix, while the remaining AC coefficients are saved into a new matrix called AC-Matrix. Similarly, the DC-Matrix is transformed again by the DCT into two further matrices: DC-Matrix2 and AC-Matrix2. Figure 2 illustrates the details of the two-level DCT applied to the sub-band LL2.
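The second step, splitting the quantized blocks into a DC-Matrix and an AC-Matrix, is sketched below. The row-by-row flattening order is an assumption (the text says only that each block is converted to a 1D array; a zig-zag scan would fit equally well), and `to_blocks` and `split_dc_ac` are hypothetical names. Applying a further DCT to the DC-Matrix and splitting it the same way would yield DC-Matrix2 and AC-Matrix2.

```python
def to_blocks(matrix, k):
    """Partition a matrix into a grid of non-overlapping k x k blocks."""
    return [[[row[c:c + k] for row in matrix[r:r + k]]
             for c in range(0, len(matrix[0]), k)]
            for r in range(0, len(matrix), k)]

def split_dc_ac(block_grid):
    """block_grid[r][c] is the quantized DCT block at block-row r, block-column c.
    Each block is flattened row by row (assumed scan order): its first value goes
    to the DC-Matrix at (r, c) and the rest forms one row of the AC-Matrix."""
    dc_matrix = [[blk[0][0] for blk in row] for row in block_grid]
    ac_matrix = [[v for blk_row in blk for v in blk_row][1:]
                 for row in block_grid for blk in row]
    return dc_matrix, ac_matrix

# Usage: two 2 x 2 "quantized blocks" side by side.
dc, ac = split_dc_ac(to_blocks([[9, 1, 7, 0], [2, 0, 0, 3]], 2))
```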

The resulting DC-Matrix2 is very small and can be represented in a few bytes. AC-Matrix2, on the other hand, contains many zeros and only a few nonzero values. All zeros can be erased so that just the nonzero data are retained. This process separates zeros from nonzero data, as shown in Fig. 3. The zero-array is computed by counting the number of zeros between two nonzero values. For example, for AC-Matrix = [0.5, 0, 0, 0, 7.3, 0, 0, 0, 0, 0, −7], the zero-array will be [0, 3, 0, 5, 0], where each 0 marks a position at which a nonzero value exists in the original AC-Matrix and each other number is the count of zeros between two consecutive nonzero values. To increase the compression ratio, the run "5" in the zero-array can be broken up into "3" and "2" to increase the probability (i.e., re-occurrence) of redundant data. Thus, the new equivalent zero-array becomes [0, 3, 0, 3, 2, 0].
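The separation of AC-Matrix2 into a nonzero-array and a zero-array, including the splitting of long zero runs, can be sketched as follows. The maximum run length of 3 is an assumption inferred from the example (where 5 is split into 3 and 2), and the function names are hypothetical. Because every run count is at least 1, a 0 in the zero-array unambiguously marks a nonzero position, which makes the inverse `merge_zeros` possible.

```python
def _emit_run(run, max_run, out):
    """Append a run of zeros as counts no larger than max_run."""
    while run > 0:
        chunk = min(run, max_run)
        out.append(chunk)
        run -= chunk

def split_zeros(values, max_run=3):
    """Return (nonzero_array, zero_array) for a 1D AC array."""
    nonzero, zero_array, run = [], [], 0
    for v in values:
        if v == 0:
            run += 1
            continue
        _emit_run(run, max_run, zero_array)   # zeros preceding this value
        run = 0
        zero_array.append(0)                  # marker: a nonzero value sits here
        nonzero.append(v)
    _emit_run(run, max_run, zero_array)       # trailing zeros, if any
    return nonzero, zero_array

def merge_zeros(nonzero, zero_array):
    """Inverse of split_zeros: rebuild the original AC array."""
    values, it = [], iter(nonzero)
    for z in zero_array:
        if z == 0:
            values.append(next(it))
        else:
            values.extend([0] * z)
    return values

ac = [0.5, 0, 0, 0, 7.3, 0, 0, 0, 0, 0, -7]
nz, za = split_zeros(ac)
```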

After this procedure, the nonzero-array contains positive and negative values (e.g., nonzero-array = [0.5, 7.3, −7]), each of which may occupy up to 32 bits, and these data can be compressed by a coding method. In that case the index (i.e., the header of the compressed file) is almost 50 % of the compressed data size. The index data are needed in the decompression stage and must therefore be stored; to compress them more efficiently, they are broken up into parts: each 32-bit value is partitioned into small 4-bit parts, which increases the probability of redundant data. Finally, these streams of data are subjected to arithmetic coding.
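Partitioning 32-bit values into 4-bit parts can be sketched as below. The text does not say how the nonzero values are represented in 32 bits; this sketch assumes IEEE-754 single-precision floats, packed with Python's standard `struct` module, so a value such as 7.3 is stored only to float32 precision. The function names are hypothetical.

```python
import struct

def to_nibbles(values):
    """Split each value's 32-bit float pattern into eight 4-bit parts (MSB first)."""
    nibbles = []
    for v in values:
        bits = struct.unpack(">I", struct.pack(">f", v))[0]
        for shift in range(28, -1, -4):
            nibbles.append((bits >> shift) & 0xF)
    return nibbles

def from_nibbles(nibbles):
    """Reassemble groups of eight 4-bit parts into float32 values."""
    values = []
    for i in range(0, len(nibbles), 8):
        bits = 0
        for n in nibbles[i:i + 8]:
            bits = (bits << 4) | n
        values.append(struct.unpack(">f", struct.pack(">I", bits))[0])
    return values
```

Each 4-bit part can take only 16 values, so repeated symbols become far more frequent, which is what the subsequent arithmetic coder exploits.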

2.3 The Minimize-Matrix-Size Algorithm

In this section we introduce a new algorithm that squeezes the high-frequency sub-bands into an array, minimizes that array using the MMS algorithm and then compresses the minimized array by arithmetic coding. Figure 4 illustrates the steps in this novel high-frequency compression technique.

Each high-frequency sub-band contains many zeros and only a few nonzero values. We propose a technique that eliminates blocks of zeros and stores blocks of nonzero data in an array. This technique, labelled eliminate zeros and store nonzero data (EZSN) in Fig. 4, is useful for squeezing all the high-frequency sub-bands and is applied to each of them independently. The EZSN algorithm partitions a high-frequency sub-band into non-overlapping [K × K] blocks and then searches for nonzero blocks (i.e., blocks containing at least one nonzero value). If a block contains any nonzero data, the block is stored, together with its position, in an array called Reduced-Array. Otherwise, the block is ignored, and the algorithm continues to search for other nonzero blocks. The EZSN algorithm is illustrated in List-1 below.
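The EZSN step can be sketched as below; it is not a transcription of List-1, which is not reproduced in this text. Assumptions: the sub-band dimensions are multiples of K, blocks are scanned row by row, and each block's top-left corner is recorded as its position. `ezsn` and `inverse_ezsn` are hypothetical names; the inverse corresponds to the placement step used during decompression.

```python
def ezsn(matrix, k):
    """Keep only k x k blocks that contain at least one nonzero value.
    Returns the flattened Reduced-Array and the top-left corner of each kept block."""
    reduced, positions = [], []
    for r in range(0, len(matrix), k):
        for c in range(0, len(matrix[0]), k):
            block = [row[c:c + k] for row in matrix[r:r + k]]
            if any(v != 0 for block_row in block for v in block_row):
                positions.append((r, c))
                for block_row in block:
                    reduced.extend(block_row)
    return reduced, positions

def inverse_ezsn(reduced, positions, shape, k):
    """Rebuild the full sub-band by placing each stored block at its position."""
    rows, cols = shape
    matrix = [[0] * cols for _ in range(rows)]
    idx = 0
    for r, c in positions:
        for i in range(k):
            for j in range(k):
                matrix[r + i][c + j] = reduced[idx]
                idx += 1
    return matrix
```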

After each sub-band is squeezed into an array, the MMS algorithm is applied to each Reduced-Array independently. This method reduces the array to one third of its size; the calculation depends on key values and on the coefficients of the Reduced-Array, and the result is stored in a new array called Coded-Array. The following equation represents the MMS algorithm [21–23]:

$$\text{Coded-Array}(P) = Key_1 \times RA(L) + Key_2 \times RA(L+1) + Key_3 \times RA(L+2) \tag{5}$$

Note that RA represents a Reduced-Array (HL2, LH2, HH2 or AC-Matrix1).

L = 1, 2, 3, …,N − 3, “N” is the size of Reduced-Array

P = 1, 2, 3, …, N/3.

The key values in Eq. (5) are generated by a key generator algorithm. First, the maximum value in the reduced sub-band is computed, and three keys are then generated according to the following steps:

Each reduced sub-band (see Fig. 4) has its own key values, which depend on the maximum value in that sub-band. The idea for the keys is similar to the weights used in a perceptron neural network: P = AW1 + BW2 + CW3, where the Wi are weight values generated randomly and A, B and C are data. The output of this summation is P, and given the Wi there is only one combination of data values that produces it (more details in Sect. 3). The MMS algorithm using the key generator is illustrated in List-2 [21–23].

Before applying the MMS algorithm, our compression algorithm computes the probability of the data in each Reduced-Array (i.e., for each of HL2, LH2, HH2 and AC-Matrix1). These probabilities are called Limited-Data and are used later in the decompression stage. The Limited-Data are stored as additional data in the header of the compressed file. Figure 5 illustrates the probability of data for a matrix.
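The weighted-sum coding of Eq. (5), together with the Limited-Data, can be sketched as follows. The key generator's actual steps are not reproduced in this text, so `make_keys` is a hypothetical stand-in: for integer data in [−M, M] it uses the keys 1, B and B² with B = 2M + 1, which guarantees that each sum corresponds to exactly one triplet (a balanced base-B representation). Padding the array with zeros to a multiple of 3 is also an assumption, and Limited-Data is represented here simply as the sorted set of distinct values (plus 0 for the padding), which is what the decoder needs.

```python
def make_keys(reduced):
    """HYPOTHETICAL key generator: balanced base B = 2M + 1 makes every sum unique."""
    m = max(abs(v) for v in reduced)
    b = 2 * m + 1
    return (1, b, b * b)

def limited_data(reduced):
    """Distinct values of a Reduced-Array (0 included because of the padding)."""
    return sorted(set(reduced) | {0})

def mms_encode(reduced, keys):
    """Eq. (5): each triplet RA(L), RA(L+1), RA(L+2) becomes one coded value."""
    k1, k2, k3 = keys
    ra = list(reduced) + [0] * ((-len(reduced)) % 3)   # pad to a multiple of 3
    return [k1 * ra[l] + k2 * ra[l + 1] + k3 * ra[l + 2]
            for l in range(0, len(ra), 3)]

ra = [3, -1, 0, 2, 2, -3, 1]
coded = mms_encode(ra, make_keys(ra))   # seven values become three
```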

The final step of the compression algorithm is arithmetic coding, one of the most important methods used in data compression: it takes a stream of data and converts it to a single floating-point value greater than zero and less than one which, when decoded, returns the exact stream of data. Arithmetic coding computes the probability of every symbol and assigns each symbol a range (low and high) in order to generate streams of bits [18].
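A minimal arithmetic coder illustrating the interval-narrowing idea is sketched below. It uses exact rational arithmetic (`fractions.Fraction`) and a static model built from symbol counts, which keeps the logic visible; practical coders instead use finite-precision integer arithmetic and emit bits incrementally. The decoder is assumed to know the message length, and the function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction

def build_model(symbols):
    """Static model: each symbol gets a sub-range of [0, 1) sized by its probability."""
    counts, total = Counter(symbols), len(symbols)
    ranges, low = {}, Fraction(0)
    for s in sorted(counts):
        high = low + Fraction(counts[s], total)
        ranges[s] = (low, high)
        low = high
    return ranges

def arith_encode(symbols, ranges):
    """Narrow [0, 1) once per symbol; return a value inside the final interval."""
    low, high = Fraction(0), Fraction(1)
    for s in symbols:
        width = high - low
        s_low, s_high = ranges[s]
        low, high = low + width * s_low, low + width * s_high
    return (low + high) / 2

def arith_decode(code, ranges, n):
    """Recover n symbols by locating code inside successive sub-intervals."""
    out, low, high = [], Fraction(0), Fraction(1)
    for _ in range(n):
        width = high - low
        target = (code - low) / width
        for s, (s_low, s_high) in ranges.items():
            if s_low <= target < s_high:
                out.append(s)
                low, high = low + width * s_low, low + width * s_high
                break
    return out

syms = [3, 0, 0, 2, 0, 3]
model = build_model(syms)
code = arith_encode(syms, model)
```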

3 The Decompression Algorithm: Fast Matching Search Algorithm (FMS)

The proposed decompression algorithm is the inverse of compression and consists of three stages:

(1) First-level inverse DCT to reconstruct DC-Matrix1;

(2) Apply the FMS algorithm to decode each sub-band independently (i.e., HL2, LH2, HH2 and AC-Matrix1);

(3) Apply the second-level inverse DCT and the two-level inverse DWT to reconstruct the 2D image.

Once the 2D image is reconstructed, we apply structured light 3D reconstruction algorithms to obtain an approximation of the original 3D surface, from which errors can be computed for the entire surface. Figure 6 shows the layout of the main steps in the proposed decompression algorithm.

The FMS algorithm has been designed to recover the original high-frequency data. The compressed data contain information about the compression keys and probability data (Limited-Data), followed by streams of compressed high-frequency data. The FMS algorithm therefore takes each compressed high-frequency stream, reads the associated information (key values and Limited-Data) and recovers the original high-frequency data from it. The FMS algorithm is illustrated through the following steps A and B:

(A) Initially, the Limited-Data are copied (in memory) three times into separate arrays. This is because expanding the compressed data with the three keys produces interconnected data, similar to a network, as shown in Fig. 7.

(B) Pick up a data item from D, the compressed array (i.e., Coded-HL2, Coded-LH2, Coded-HH2 or Coded-AC-Matrix1), and search for the combination (A, B, C) which, with the respective keys, satisfies D. Return the decompressed triplet (A, B, C).

Since the three arrays of Limited-Data contain the same values, that is A1 = B1 = C1, A2 = B2 = C2, and so on, the searching algorithm computes all possible combinations of A with Key1, B with Key2 and C with Key3 that yield a result D. As an example, consider Limited-Data1 = [A1 A2 A3], Limited-Data2 = [B1 B2 B3] and Limited-Data3 = [C1 C2 C3]. According to Eq. (5), these represent RA(L), RA(L + 1) and RA(L + 2), respectively, and the equation is evaluated 27 times (3³ = 27), testing all indices and keys. One of these combinations will match the data in D (i.e., the original high-frequency Coded-LH2, Coded-HL2, Coded-HH2 or Coded-AC-Matrix1), as described in Fig. 6b. The match indicates that this unique combination of A, B and C is the original data we are after.

The searching algorithm used in our decompression method is binary search, which finds the original data (A, B, C) for any input from array D. For binary search, the table of computed combinations must be arranged in ascending order. At each step, the algorithm compares the input value with the middle element of the table. If the values match, a matching element has been found and its position is returned [9]. Otherwise, if the input is less than the middle element, the algorithm repeats its action on the sub-array to the left of the middle element or, if it is greater, on the sub-array to the right. A "not matched" outcome cannot occur, because the FMS algorithm has already computed every possible combination of the compressed data.
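Steps A and B, with the binary search described above, can be sketched as follows. The three copies of the Limited-Data are represented by `itertools.product(limited, repeat=3)`, which enumerates every (A, B, C) combination; the table of weighted sums is sorted so that each coded value D can be located by binary search. The sketch assumes keys for which every sum is unique (as with the hypothetical keys 1, 7 and 49 in the usage line), and it raises an error rather than assuming a match always exists. Function names are hypothetical.

```python
from itertools import product

def build_table(limited, keys):
    """Sorted table of (weighted sum, (A, B, C)) for every combination."""
    k1, k2, k3 = keys
    return sorted((k1 * a + k2 * b + k3 * c, (a, b, c))
                  for a, b, c in product(limited, repeat=3))

def binary_search(sums, d):
    """Index of d in the ascending list sums, or -1 if it is absent."""
    lo, hi = 0, len(sums) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sums[mid] == d:
            return mid
        if d < sums[mid]:
            hi = mid - 1          # continue in the left half
        else:
            lo = mid + 1          # continue in the right half
    return -1

def fms_decode(coded, limited, keys):
    """Recover a Reduced-Array from its Coded-Array."""
    table = build_table(limited, keys)
    sums = [s for s, _ in table]
    decoded = []
    for d in coded:
        i = binary_search(sums, d)
        if i < 0:
            raise ValueError(f"no combination produces {d}")
        decoded.extend(table[i][1])
    return decoded

restored = fms_decode([-4, -131, 1], [-3, -1, 0, 1, 2, 3], (1, 7, 49))
```

Building the table costs one pass over all len(limited)³ combinations, after which each coded value is found in logarithmic time.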

After the Reduced-Arrays (HL2, LH2, HH2 and AC-Matrix1) are recovered, their full high-frequency matrices are rebuilt by placing the nonzero data in the exact locations recorded by the EZSN algorithm (see List-1). Then the sub-band LL2 is reconstructed by combining DC-Matrix1 and AC-Matrix1, followed by the inverse DCT. Finally, a two-level inverse DWT is applied to recover the 2D BMP image (see Fig. 6c). Once the 2D image is decompressed, its 3D geometric surface is reconstructed so that error analysis can be performed in 3D.

4 Experimental Results

The results described below use MATLAB R2013a for 2D image compression and decompression; 3D surface reconstruction was performed with our own software developed within the GMPR Group [12, 13, 15], running on an AMD quad-core microprocessor. The justification for introducing 3D reconstruction is that it provides a new set of metrics, in terms of error measurements and perceived quality of the 3D visualization, for assessing the quality of the compression/decompression algorithms. The principle of operation of 3D surface scanning is to project patterns of light onto the target surface, whose image is recorded by a camera. The shape of the captured pattern is combined with the spatial relationship between the light source and the camera to determine the 3D position of the surface along the pattern. The main advantages of the method are speed and accuracy: a surface can be scanned from a single 2D image and processed into a 3D surface in a few milliseconds [14]. Figure 8 shows a 2D BMP image captured by the scanner and converted to a 3D surface by our 3D software.

The results in this research are divided into two parts. First, we apply the proposed image compression and decompression methods to 2D grey scale images of human faces. The original and decompressed images are used to generate 3D surface models, from which direct comparisons are made in terms of the perceived quality of the mesh and objective error measures such as root-mean-square error (RMSE). Second, we repeat all procedures (2D compression, 2D decompression and 3D reconstruction), this time with colour images of objects other than faces. Additionally, the computed 2D and 3D RMSE are used directly for comparison with the JPEG and JPEG2000 techniques.

4.1 Compression, Decompression and 3D Reconstruction from Grey Scale Images

As described above, the proposed image compression starts with the DWT. The level of DWT decomposition affects both the image quality and the compression ratio, so we divide the results into two parts to show the effects of each independently: single-level DWT and two-level DWT. Figure 9 shows the original 2D human faces tested by the proposed algorithm. Tables 1, 2 and 3 show the compressed size obtained by our algorithm with single-level and two-level DWT for Face1, Face2 and Face3, respectively, and Fig. 10 illustrates the effect of the Scale parameter on compressed data size.

Original 2D images (left) with 3D surface reconstruction (right). a Original 2D Face1 dimensions 1392 × 1040, Image size: 1.38 Mbytes, converted to 3D surface. b Original 2D Face2 dimensions 1392 × 1040, Image size: 1.38 Mbytes, converted to 3D surface. c Original 2D Face3 dimensions 1392 × 1040, Image size: 1.38 Mbytes, converted to 3D surface

Tables 1, 2 and 3 plotted as charts of compressed size against Scale. a Table 1 (FACE1 image) for different Scale values in our approach; the results obtained by single-level DWT with DCT (block size 16 × 16) are close to those obtained by two-level DWT with DCT (block size 8 × 8). b Table 2 (FACE2 image) for different Scale values; the results obtained by single-level DWT with DCT (block size 16 × 16) are very close to those obtained by two-level DWT with DCT (block size 16 × 16). c Table 3 (FACE3 image) for different Scale values; the results obtained by single-level DWT with DCT (block size 16 × 16) are approximately equivalent to those obtained by two-level DWT with DCT (block size 16 × 16)

The proposed decompression algorithm (see Sect. 3) is applied to the compressed image data to recover the 2D images, which are then used by the 3D reconstruction to generate the respective 3D surfaces. Figures 11, 12 and 13 show high-quality, medium-quality and low-quality compressed images for Face1, Face2 and Face3. Tables 4, 5 and 6 show the execution time of the FMS algorithm.

Decompressed 2D image Face1 by our proposed decompression method, converted to a 3D surface. a Decompressed 2D BMP images at single-level DWT converted to 3D surfaces; the 3D surface with scale = 0.5 represents a high-quality image comparable to the original, and the 3D surface with scale = 2 represents a medium-quality image close to high quality. The 3D surface with scale = 5 is a low-quality image, with some parts of the surface failing to reconstruct. Additionally, using a DCT block size of 16 × 16 further degrades the 3D surface. b Decompressed 2D BMP images at two-level DWT converted to 3D surfaces; the 3D surfaces with scale = 2 and 3 and 8 × 8 DCT represent low-quality images with surface degradation. Additionally, using a DCT block size of 16 × 16 further degrades the 3D surface

Decompressed 2D image Face2 by our proposed decompression method, converted to a 3D surface. a Decompressed 2D BMP images at single-level DWT converted to 3D surfaces; the 3D surface with scale = 0.5 represents a high-quality image similar to the original, and the 3D surface with scale = 2 represents a medium-quality image. The 3D surface with scale = 4 is low quality, with the surface slightly degraded. Additionally, a DCT block size of 16 × 16 degrades some parts of the 3D surface. b Decompressed 2D BMP images at two-level DWT converted to 3D surfaces; the 3D surfaces with scale = 2 and 4 and 8 × 8 DCT represent low-quality images with surface degradation. Similarly, a DCT block size of 16 × 16 degrades the 3D surface

Decompressed 2D image Face3 by our proposed decompression method, converted to a 3D surface. a Decompressed 2D BMP images at single-level DWT converted to 3D surfaces; the 3D surface with scale = 0.5 represents a high-quality image similar to the original, the 3D surface with scale = 2 represents a medium-quality image, and the 3D surfaces with scale = 3 and 4 are low-quality images with slightly degraded surfaces, while a 16 × 16 block size does not seem to degrade the 3D surface. b Decompressed 2D BMP images at two-level DWT converted to 3D surfaces; the 3D surfaces with scale = 3 and 4 and 8 × 8 DCT represent low-quality images with degraded surfaces. However, a DCT block size of 16 × 16 gives a better-quality 3D surface at higher compression ratios

The figures and tables above show that the proposed compression algorithm is successfully applied to grey scale images. In particular, Tables 1, 2 and 3 show a reduction of more than 99 % in image size while the reconstructed 3D surfaces still preserve most of their quality. Some images compressed with a DCT block size of 16 × 16 are also capable of generating high-quality 3D surfaces. There is also little difference between block sizes of 8 × 8 and 16 × 16 for high-quality reconstructed images with Scale = 0.5.

4.2 Compression, Decompression and 3D Reconstruction from Colour Images

Colour images contain red, green and blue layers. In JPEG and JPEG2000 the colour layers are transformed to YCbCr layers before compression, because most of the image information is in the Y layer while the Cb and Cr layers contain less [7, 8]. The proposed image compression was first tested on YCbCr layers and then applied to the true colour layers (red, green and blue). Figure 14 shows the original colour images tested by our approach.
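The colour transform can be sketched as below. The text does not state which YCbCr variant was used; this sketch assumes the full-range ITU-R BT.601 coefficients used by JPEG (JFIF), and the function names are hypothetical. The inverse uses the standard rounded JFIF coefficients, so a round trip is accurate only to about 0.01.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JFIF) forward transform for 8-bit samples."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr):
    """Inverse JFIF transform."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b

def split_layers(rgb_image):
    """Convert rows of (R, G, B) tuples into separate Y, Cb and Cr planes,
    each of which can then be compressed independently."""
    planes = ([], [], [])
    for row in rgb_image:
        converted = [rgb_to_ycbcr(*px) for px in row]
        for k in range(3):
            planes[k].append([px[k] for px in converted])
    return planes
```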

Original 2D images with 3D surface conversion. a Original 2D “Wall” dimensions 1280 × 1024, Image size: 3.75 Mbytes, converted to 3D surface. b Original 2D “Room” dimensions 1280 × 1024, Image size: 3.75 Mbytes, converted to 3D surface. c Original 2D “Corner” dimensions 1280 × 1024, Image size: 3.75 Mbytes, converted to 3D surface

First, the Wall, Room and Corner images depicted in Fig. 14 were transformed to YCbCr before applying our proposed image compression, using both single-level and two-level DWT decomposition. Second, our approach was applied to the same colour images, this time using the true colour layers. Tables 7, 8 and 9 show the compressed size of the colour images obtained by the proposed compression algorithm, and Fig. 15 shows the effect of the Scale parameter on compressed data size. Figures 16, 17 and 18 show the decompressed colour images (Wall, Room and Corner, respectively) as 3D surfaces. Additionally, Tables 10, 11 and 12 give the execution time of the FMS algorithm at single-level DWT for the colour images, and Tables 13, 14 and 15 give the FMS execution time for the same colour images using two-level DWT.

Tables 7, 8 and 9 plotted as charts of compressed size against Scale. a Chart from Table 7 for the Wall image with different Scale values in our approach; applying our approach to the RGB layers gives a better compression ratio than using the YCbCr layers. b Chart from Table 8 for the Room image with different Scale values; applying our approach to the RGB layers gives a better compression ratio than using the YCbCr layers. c Chart from Table 9 for the Corner image with different Scale values; single-level DWT with DCT (16 × 16) applied to the YCbCr layers gives a better compression ratio than the other options illustrated in this chart

Decompressed colour Wall image by our proposed decompression approach, and then converted to 3D surface. a Decompressed 2D BMP images at single level DWT converted to 3D surface; decompressed 3D surface by using RGB layer has better quality than YCbCr layer. b Decompressed 2D BMP images at two levels DWT converted to 3D surface, also decompressed 3D surface using RGB layer has better quality than YCbCr layer at higher compression ratio

Decompressed colour Room image by our proposed decompression method, and then converted to 3D surface. a Decompressed 2D BMP images at Single level DWT results converted to 3D surface; decompressed 3D surface by using RGB layer has better quality than YCbCr layer at higher compression ratio using both block sizes of 8 × 8 or 16 × 16 by DCT. b Decompressed 2D BMP images at two levels DWT converted to 3D surface; decompressed 3D surface by using RGB layer has better quality than YCbCr layer at higher compression ratio using block sizes of 8 × 8, also by using block 16 × 16 the surface is still approximately non-degraded

Decompressed colour Corner image by our proposed decompression method, converted to a 3D surface. At single-level DWT, the decompressed 3D surface using YCbCr has better quality at higher compression ratios than using the RGB layers; at two-level DWT, degradation appears and some parts of the surface fail to reconstruct

It can be seen from Figs. 16, 17 and 18 and Tables 10, 11 and 12 that a single-level DWT is applied successfully to the colour images using both YCbCr and RGB layers. The two-level DWT (Tables 13, 14 and 15) also performs well; however, it did not perform well on the YCbCr layers at higher compression ratios. Both colour images, Wall and Room, contain green stripe lines, which makes the RGB layers more appropriate for use with the proposed approach. For the Corner image, on the other hand, the YCbCr layers proved more appropriate.
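The choice between RGB and YCbCr layers can be made concrete with a small sketch. The source does not state which colour transform was used; the code below assumes the full-range ITU-R BT.601 (JFIF) conversion used by baseline JPEG, and the helper names `rgb_to_ycbcr`, `ycbcr_to_rgb` and `split_layers` are hypothetical. Each returned layer is a 2D array that would presumably then be compressed layer by layer.

```python
def rgb_to_ycbcr(r, g, b):
    """Assumed full-range BT.601 (JFIF) forward transform for one pixel (0-255)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse JFIF transform; returns floats (round and clip for 8-bit output)."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b


def split_layers(image, to_ycbcr=True):
    """Split rows of (R, G, B) tuples into three 2D layers (Y/Cb/Cr or R/G/B)."""
    convert = rgb_to_ycbcr if to_ycbcr else (lambda r, g, b: (r, g, b))
    pixels = [[convert(*px) for px in row] for row in image]
    # One 2D list per channel, in the same row/column order as the input.
    return [[[px[c] for px in row] for row in pixels] for c in range(3)]
```

For example, `split_layers([[(0, 255, 0), (255, 255, 255)]])` returns the Y, Cb and Cr layers of a one-row image, while `to_ycbcr=False` returns the raw R, G and B layers for the RGB variant.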

4.3 Comparison with JPEG2000 and JPEG Compression Techniques

JPEG and JPEG2000 are both widely used in digital image and video compression, and also for streaming data over the Internet. In this research we apply these two methods to 3D image compression in order to compare their quality and compression ratio with those of our proposed method. The JPEG technique applies the 2D DCT to the image partitioned into 8 × 8 blocks, and each block is then encoded by RLE and Huffman encoding [2, 10]. JPEG2000 applies a multi-level DWT to the complete image, and each sub-band is then quantized and coded by arithmetic encoding [1, 6]. Most image compression applications depend on JPEG and JPEG2000 and allow the user to specify a quality parameter to choose a suitable compression ratio: if the image quality is increased, the compression ratio decreases, and vice versa [18]. We compare these methods with ours at similar compression ratios, measuring image quality by the RMSE. The RMSE is a very popular quality measure and can be calculated easily between the decompressed and original images [18, 19]. As a further means of comparison, we also visualize the 3D surfaces reconstructed from the 2D images decompressed by JPEG and JPEG2000. Figures 19–24 show the 3D surfaces reconstructed from images decompressed by JPEG and JPEG2000.
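The RMSE used for the comparison is the square root of the mean squared difference between corresponding pixels. The sketch below computes it for two images given as 2D lists; the function name `rmse` is hypothetical. The same expression could be applied to depth values of reconstructed surfaces, although the source does not specify exactly how its 3D RMSE is computed.

```python
import math


def rmse(original, decoded):
    """Root-mean-square error between two equally sized 2D images."""
    if len(original) != len(decoded) or any(
        len(row_o) != len(row_d) for row_o, row_d in zip(original, decoded)
    ):
        raise ValueError("images must have the same shape")
    total, count = 0.0, 0
    for row_o, row_d in zip(original, decoded):
        for a, b in zip(row_o, row_d):
            total += (a - b) ** 2  # squared per-pixel error
            count += 1
    if count == 0:
        raise ValueError("images must not be empty")
    return math.sqrt(total / count)
```

A lower RMSE at the same compressed size indicates a more faithful reconstruction, which is the basis of the comparison with JPEG and JPEG2000.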

2D Face3 image decompressed by the JPEG2000 and JPEG algorithms. Parts of the surface fail to reconstruct in 3D with JPEG2000 at 14 Kbytes, and degradation appears on the surface with JPEG2000 below 7 Kbytes; JPEG also degrades the surface at a compressed size of 27 Kbytes (JPEG fails to compress below 27 Kbytes)

Room image decompressed by the JPEG2000 and JPEG algorithms. Degradation appears on the surface with JPEG2000 below 6 Kbytes, and at 4 Kbytes JPEG2000 cannot reconstruct a 3D surface that matches the original surface (grey); similarly, JPEG fails to reconstruct a 3D surface matching the original surface at 27 Kbytes

Corner image decompressed by the JPEG2000 and JPEG algorithms. The top-left surface is decompressed successfully by JPEG2000, whereas the top-right surface decompressed by JPEG2000 does not match the original surface (grey) below 15 Kbytes; similarly, JPEG fails to compress successfully below 31 Kbytes

In Tables 16, 17, 18, 19, 20 and 21, “Not Applicable” means that the relevant algorithm cannot compress the image to the required size. The symbol “≈” indicates that JPEG2000 or JPEG could only approximately reach the required size (i.e., within ± 1 Kbyte of the stated compressed size).

5 Conclusion

This research has presented and demonstrated a novel method for image compression and compared the quality of compression through 3D reconstruction, 3D RMSE and the perceived quality of the 3D visualisation. The method is based on a two-level DWT and a two-level DCT in connection with the proposed MMS algorithm. The results showed that our approach gives better image quality at higher compression ratios than JPEG and JPEG2000 and is capable of accurate 3D reconstruction at higher compression ratios. On the other hand, it is more complex than JPEG2000 and JPEG. The most important aspects of the method and their role in providing high image quality at high compression ratios are as follows:

(1) In the two-level DCT, the first level separates the DC-values and AC-values into different matrices; the second-level DCT is then applied to the DC-values, generating two new matrices. These two matrices occupy only a few bytes because they contain a large number of zeros, which increases the compression ratio.

(2) Since most of the high-frequency matrices contain many zeros, we used the EZSN algorithm to eliminate the zeros and keep the non-zero data. This keeps the significant information while reducing matrix sizes by 50 % or more.

(3) The MMS algorithm replaces each group of three coefficients from the high-frequency matrices by a single floating-point value. This converts each high-frequency matrix into a one-dimensional array, increasing the compression ratio while preserving the quality of the high-frequency coefficients.

(4) The FMS-algorithm is the core of our search method: it recovers the exact original data from a one-dimensional array (the Reduced-Array) and converts it back into a matrix, relying on the organized key-values and Limited-Data. According to the execution-time tables, the FMS-algorithm finds values in a few microseconds, and for some high-frequency matrices in only a few nanoseconds at higher compression ratios.

(5) The key-values and Limited-Data are used both to encode and to decode an image; without this information, the image cannot be reconstructed.

(6) Our proposed image compression algorithm was tested on true colour (red, green and blue) images and achieved higher compression ratios and high image quality for images containing green striped lines. It was also tested on YCbCr layers, giving good quality at higher compression ratios.

(7) Our approach gives better visual image quality than JPEG and JPEG2000 because it removes most of the block artefacts caused by the 8 × 8 two-dimensional DCT used in JPEG. It also uses a single-level or two-level DWT rather than the multi-level DWT of JPEG2000, which avoids the blurring typical of JPEG2000. JPEG and JPEG2000 failed to reconstruct the surface in 3D at higher compression ratios, whereas our approach was shown to reconstruct it successfully and is thus superior to both in this respect.
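The two-level DCT described in item (1) can be sketched as follows: a block DCT separates each block's DC term from its AC terms, and a second DCT is then applied to the matrix of DC-values. This is a minimal illustration under stated assumptions: it uses the orthonormal DCT-II, treats the input as any 2D array (in the paper the DCT follows the DWT), applies one whole-matrix DCT to the DC-Matrix, and omits quantization and the split into nonzero-array and zero-array. The names `dct_1d`, `dct_2d`, `block_dct_split` and `two_level_dct` are hypothetical.

```python
import math


def dct_1d(x):
    """Orthonormal DCT-II of a sequence (direct O(n^2) evaluation)."""
    n = len(x)
    out = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * sum(
            v * math.cos(math.pi * k * (2 * i + 1) / (2 * n)) for i, v in enumerate(x)))
    return out


def dct_2d(block):
    """Separable 2D DCT: transform every row, then every column."""
    rows = [dct_1d(r) for r in block]
    cols = [dct_1d(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def block_dct_split(image, size=8):
    """First level: block DCT, returning the DC-Matrix and each block's AC terms."""
    h, w = len(image), len(image[0])
    if h % size or w % size:
        raise ValueError("image dimensions must be multiples of the block size")
    dc_matrix, ac_blocks = [], []
    for by in range(0, h, size):
        dc_row = []
        for bx in range(0, w, size):
            block = [row[bx:bx + size] for row in image[by:by + size]]
            coeffs = dct_2d(block)
            dc_row.append(coeffs[0][0])  # DC-value of this block
            ac_blocks.append([c for r in coeffs for c in r][1:])  # remaining AC terms
        dc_matrix.append(dc_row)
    return dc_matrix, ac_blocks


def two_level_dct(image, size=8):
    """Second level: apply a DCT to the DC-Matrix produced by the first level."""
    dc_matrix, ac_blocks = block_dct_split(image, size)
    return dct_2d(dc_matrix), ac_blocks
```

For a smooth image the DC-values vary slowly, so the second-level DCT concentrates their energy in a few coefficients and leaves many near zero; for a constant 16 × 16 image of value 100, only the first second-level coefficient (1600) is non-zero.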

However, the proposed compression and decompression algorithms involve more steps than JPEG and JPEG2000. In addition, the complexity of the FMS-algorithm increases the execution time of decompression, because the FMS-algorithm is based on a binary search method.
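The MMS/FMS pairing discussed above can be illustrated with a toy encoder and a table-plus-binary-search decoder. The source does not give the MMS formula here, so this sketch assumes, purely for illustration, that each triple (a, b, c) of integer coefficients is packed as K1·a + K2·b + K3·c with three integer key values, and that Limited-Data is the set of distinct coefficient values. The decoder then builds a sorted table of every candidate sum and locates each packed value with Python's `bisect`. The names `mms_encode`, `build_table` and `fms_decode` are hypothetical; this is not the authors' implementation.

```python
from bisect import bisect_left
from itertools import product


def mms_encode(coeffs, keys):
    """Pack each consecutive triple of integer coefficients into one value."""
    k1, k2, k3 = keys
    padded = list(coeffs) + [0] * (-len(coeffs) % 3)  # pad to a multiple of 3
    limited_data = sorted(set(padded))  # distinct values actually present
    reduced = [k1 * padded[i] + k2 * padded[i + 1] + k3 * padded[i + 2]
               for i in range(0, len(padded), 3)]
    return reduced, limited_data, len(coeffs)


def build_table(limited_data, keys):
    """Sorted table of every possible packed sum and the triple producing it."""
    k1, k2, k3 = keys
    table = sorted((k1 * a + k2 * b + k3 * c, (a, b, c))
                   for a, b, c in product(limited_data, repeat=3))
    sums = [s for s, _ in table]
    if any(x == y for x, y in zip(sums, sums[1:])):
        raise ValueError("keys do not give a unique sum for every triple")
    return sums, [t for _, t in table]


def fms_decode(reduced, limited_data, length, keys):
    """Recover the coefficients by binary-searching each packed value."""
    sums, triples = build_table(limited_data, keys)
    out = []
    for value in reduced:
        i = bisect_left(sums, value)
        if i == len(sums) or sums[i] != value:
            raise ValueError(f"packed value {value} not found in table")
        out.extend(triples[i])
    return out[:length]  # drop the padding zeros
```

Usage: `reduced, limited, n = mms_encode([3, 0, 0, -2, 0, 1, 0, 0, 4, 0], (1, 10, 100))` packs ten coefficients into four values, and `fms_decode(reduced, limited, n, (1, 10, 100))` restores them exactly. In this sketch the table grows with the cube of the Limited-Data size, and every packed value requires one search of that table.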

Future work includes optimizing the search in the FMS-algorithm, since in the current implementation it is the constraining step for real-time applications such as video streaming over the Internet.

References

Acharya, T., & Tsai, P. S. (2005). JPEG2000 standard for image compression: Concepts, algorithms and VLSI architectures. New York: Wiley.

Ahmed, N., Natarajan, T., & Rao, K. R. (1974). Discrete cosine transform. IEEE Transactions on Computers, C-23, 90–93.

Al-Haj, A. (2007). Combined DWT–DCT digital image watermarking. Science Publications, Journal of Computer Science, 3(9), 740–746.

Chen, P., & Chang, J.-Y. (2013). An adaptive quantization scheme for 2-D DWT coefficients. International Journal of Applied Science and Engineering, 11(1), 85–100.

Christopoulos, C., Askelof, J., & Larsson, M. (2000). Efficient methods for encoding regions of interest in the upcoming JPEG 2000 still image coding standard. IEEE Signal Processing Letters, 7(9), 247–249.

Esakkirajan, S., Veerakumar, T., Senthil Murugan, V., & Navaneethan, P. (2008). Image compression using multiwavelet and multi-stage vector quantization. WASET International Journal of Signal Processing, 4(4), 246–253.

Gonzalez, R. C., & Woods, R. E. (2001). Digital image processing. Boston: Addison Wesley Publishing Company.

Horiuchi, T., & Tominaga, S. (2008). Color image coding by colorization approach. EURASIP Journal on Image and Video Processing. doi:10.1155/2008/158273.

Knuth, D. (1997). Sorting and searching: Section 6.2.1: Searching an ordered table. In The art of computer programming (3rd Ed., Vol. 3, pp. 409–426). Reading, MA: Addison-Wesley. ISBN 0-201-89685-0.

Liu, K.-C. (2012). Prediction error preprocessing for perceptual color image compression. EURASIP Journal on Image and Video Processing. doi:10.1186/1687-5281-2012-3.

Richardson, I. E. G. (2002). Video codec design. New York: Wiley.

Rodrigues, M., Kormann, M., Schuhler, C., & Tomek, P. (2013). Structured light techniques for 3D surface reconstruction in robotic tasks. In J. Kacprzyk (Ed.), Advances in intelligent systems and computing (pp. 805–814). Heidelberg: Springer.

Rodrigues, M., Kormann, M., Schuhler, C., & Tomek, P. (2013b). Robot trajectory planning using OLP and structured light 3D machine vision. In Lecture notes in computer science, Part II (LNCS Vol. 8034, pp. 244–253). Heidelberg: Springer.

Rodrigues, M., Kormann, M., Schuhler, C., & Tomek, P. (2013d). An intelligent real time 3D vision system for robotic welding tasks. In Mechatronics and its applications. IEEE Xplore (pp. 1–6).

Rodrigues, M., Osman, A., & Robinson, A. (2013). Partial differential equations for 3D data compression and reconstruction. Journal of Advances in Dynamical Systems and Applications, 12(3), 371–378.

Rodrigues, M., Robinson, A., & Osman, A. (2010). Efficient 3D data compression through parameterization of free-form surface patches. In Proceedings of the 2010 International Conference on Signal Processing and Multimedia Applications (SIGMAP) (pp. 130–135). Athens: IEEE.

Sadashivappa, G., & Ananda Babu, K. V. S. (2002). Performance analysis of image coding using wavelets. International Journal of Computer Science and Network Security, 8(10), 144–151.

Sayood, K. (2006). Introduction to data compression (3rd Ed.). San Francisco: Morgan Kaufmann Publishers.

Schaefer, G. (2014). Soft computing-based colour quantization. EURASIP Journal on Image and Video Processing. doi:10.1186/1687-5281-2014-8.

Siddeq, M. M. (2012). Using Sequential Search Algorithm with Single level Discrete Wavelet Transform for Image Compression (SSA-W). Journal of Advances in Information Technology, 3(4), 236–249.

Siddeq, M. M., & Al-Khafaji, G. (2013). Applied Minimize-Matrix-Size Algorithm on the Transformed images by DCT and DWT used for image Compression. International Journal of Computer Applications, 70(15), 34–40.

Siddeq, M. M., & Rodrigues, M. (2014a). A new 2D image compression technique for 3D surface reconstruction. In 18th International conference on circuits, systems, communications and computers, Santorini Island, Greece (pp. 379–386).

Siddeq, M. M., & Rodrigues, M. A. (2014b). A Novel Image Compression Algorithm for high resolution 3D Reconstruction. 3D Research, Springer. doi:10.1007/s13319-014-0007-6.

Stamm, M. C., & Ray Liu, K. J. (2010). Wavelet-based image compression anti-forensics. In Proceedings of 2010 IEEE 17th international conference on image processing, Hong Kong, 26–29 September 2010 (pp. 1737–1740).

Suzuki, T., & Ikehara, M. (2013). Integer fast lapped transforms based on direct-lifting of DCTs for lossy-to-lossless image coding. EURASIP Journal on Image and Video Processing. doi:10.1186/1687-5281-2013-65.


About this article

Cite this article

Siddeq, M.M., Rodrigues, M.A. A Novel 2D Image Compression Algorithm Based on Two Levels DWT and DCT Transforms with Enhanced Minimize-Matrix-Size Algorithm for High Resolution Structured Light 3D Surface Reconstruction. 3D Res 6, 26 (2015). https://doi.org/10.1007/s13319-015-0055-6


DOI: https://doi.org/10.1007/s13319-015-0055-6