Abstract

This paper studies dynamic mechanism design in a Markovian environment and analyzes a direct mechanism model of a principal-agent framework in which the agent is allowed to exit at any period. The agent's private information, referred to as his state, evolves over time. At each period, the agent decides what to report and whether to stop or continue. The principal, on the other hand, chooses decision rules consisting of an allocation rule and a set of payment rules to maximize her ex-ante expected payoff. To influence the agent's stopping decision, one of the terminal payment rules is posted-price, i.e., it depends only on the realized stopping time of the agent. This work focuses on the theoretical design regime of dynamic mechanisms when the agent makes coupled decisions of reporting and stopping. A dynamic incentive compatibility constraint is introduced to guarantee the robustness of the mechanism to the agent's strategic manipulation. A sufficient condition for dynamic incentive compatibility is obtained by constructing the payment rules in terms of a set of functions parameterized by the allocation rule. The payment rules are then pinned down up to a constant in terms of the allocation rule by deriving a first-order condition. We show cases of relaxations of the principal's mechanism design problem and provide an approach to evaluate the loss of robustness of the dynamic incentive compatibility when the problem is relaxed due to analytical intractability. A case study is used to illustrate the theoretical results.


1 Introduction

Mechanism design theory provides a theoretical foundation for designing games that induce desired outcomes. The players of the game have private information that is not publicly observable. Hence, the mechanism designer's collective decisions have to rely on the players to reveal their private information. This information asymmetry is an important feature of mechanism design problems. The revelation principle allows the mechanism designer to focus on the class of incentive-compatible direct mechanisms, which replicate the equilibrium outcomes of indirect mechanisms. In the celebrated work by Vickrey ([52]), it has been shown that, within a large class of auctions, the seller receives the same expected revenue regardless of the mechanism. The Vickrey–Clarke–Groves (VCG) mechanism is an example of a truthful mechanism that achieves a socially optimal outcome. This work investigates mechanism design problems in a dynamic environment, in which a player, aka the agent, sends a sequence of messages to the designer based on the information he has gathered. The designer, aka the principal, chooses dynamic rules of encounter for the agent to maximize her profit based on these messages. Our model allows the agent to decide, at the same time, how to report his private information to the principal and whether to stop the mechanism immediately or continue. These two coupled decisions depend on the agent's dynamic private information, which in turn depends endogenously on the past outcomes of the mechanism. The model covers economic scenarios in which the agent establishes an agreement with the principal to, for example, dynamically purchase private or public goods when his valuation changes stochastically over time, or frequently consume experience goods when his preference is refined after every use, while the agent is allowed to terminate this agreement at any period.
Unlike mechanism design with a deadline or a fixed commitment period, our model grants the agent the right to stop. This additional freedom reduces the agent's risk in a long-term relationship with the principal under the uncertainty of the dynamic environment. However, this freedom complicates the principal's characterization of incentive compatibility in the dynamic environment. In this work, we aim to settle the design regimes of such dynamic mechanisms by characterizing the allocation and payment rules, and to elaborate on the incentive compatibility of the mechanism when an agent with time-varying private information couples his decision of how to reveal his private information with his optimal stopping decision.

Many real-world problems are fundamentally dynamic in nature. Research on dynamic mechanism design has studied many applications, including optimal auctions (e.g., [18, 30]), screening (e.g., [1, 15, 16]), optimal taxation (e.g., [19, 34]), contract design (e.g., [55, 57]), and matching markets (e.g., [2, 3]), to name a few. Some dynamic mechanism problems involve no private information. For example, in airline revenue management, an airline sets seat prices on a flight by taking into account the time-varying inventory and the time evolution of the customer base. In this paper, however, we consider an information-asymmetric dynamic environment in which the agent privately possesses information that evolves over time. The time evolution of the private information may be caused by external factors, past observations, and the decisions of the principal, as when the agent learns by doing. For example, in repeated sponsored search auctions, advertisers privately learn about the profitability of clicks on their ads from evaluations of past ads as well as from market analysis. In this work, we consider a dynamic environment in which the agent's private information changes endogenously with the outcomes of past decisions. Evidence from many economic scenarios has shown that people's past decisions often play a significant role in shaping their future preferences ([56]). Business models have taken into account that customers' preferences can change endogenously through the use of products or services, which helps form customer habits and encourages long-term purchasing. For example, many products and services, such as newspapers, online video streaming, software as a service, and food delivery, provide free trial offers to attract new customers to commit to long-term subscriptions.

Optimal stopping theory studies timing decisions under uncertainty and has been successfully applied in economics, finance, and engineering. Examples include gambling problems (e.g., [17, 23]), option trading (e.g., [4, 28, 33]), and quickest detection problems (e.g., [29, 43, 51]). This paper studies a class of general dynamic decision-making models in which the agent has the right to stop the mechanism at any period based on his current observations and his anticipation of the future. The agent adopts a stopping rule and may realize the stopping time in any period before the mechanism terminates naturally upon reaching the final period. In contract design problems, for example, the right to stop can be written as a clause of a dynamic contract that allows the agent to terminate the agreement at any period. However, our optimal stopping setting is fundamentally different from intra-deal renegotiation (i.e., renegotiation within the life of the contract) and from breach of contract. In general, a renegotiation occurs because one party fails to fulfill its obligations or is unable to meet its commitments. In such cases, one of the participants seeks relief from its commitments or wishes to terminate the agreement before its term has concluded (see, e.g., [49]). The renegotiation may result in the termination of the contract or in a new contract with modified terms and clauses. Our stopping setting, in contrast, is not caused by any participant's breach of the contract or inability to maintain the agreement; it is not a consequence of renegotiation. Instead, early termination under the stopping rule is a right of the agent and is part of the principal's commitment in the dynamic mechanism.
Once the agent decides to terminate the contract at a specific period, he cannot break the dynamic contract by refusing the outcomes (i.e., allocations and payments) that have already been realized up to that period.

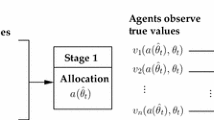

We consider a finite-horizon Markovian environment in which the agent observes his private information, referred to as the state, which arrives dynamically at the beginning of each period. The dynamic information structure is governed by a stochastic process characterized by the principal's decision rules, the transition kernels, and the agent's strategic behavior. After observing his state at each period, the agent chooses how to report his state to the principal and decides whether to stop immediately or to continue. Conditioned on the reported information (including the stopping decision), the principal provides an allocation to the agent and specifies a payment. The principal aims to maximize her ex-ante expected payoff by choosing feasible decision rules, consisting of a set of allocation rules and a set of payment rules. The principal uses three payment rules: an intermediate payment rule that specifies a payment based on the report when the agent decides to continue, and two terminal payment rules. One of the terminal payment rules is state-dependent; the other is posted-price in the sense that it depends only on the realized stopping time. The posted-price payment rule enables the principal to influence the agent's stopping decision without taking into account the agent's private information. This state-independent terminal payment rule could be an early termination fee that discourages the agent from stopping early (when the preferences of the principal and the agent are not aligned), or a reward that induces the agent to stop at certain periods before the final period in the principal's interest (when the preferences of the principal and the agent are aligned or partially aligned). Under some monotonicity conditions, the optimal stopping rule can be reformulated as a threshold rule with a time-dependent threshold function.
The threshold rule simplifies the principal's design of the posted-price payment rule as well as the agent's reasoning process for decision making.

The design problem in this work faces challenges arising from the multidimensional interdependence between the agent's joint reporting and stopping decision at the current period and his planned decisions for the future. On the one hand, fixing the current and planned future reporting strategies, the agent makes his stopping decision by comparing the payoff from stopping immediately with the best expected payoff he can anticipate from the future. On the other hand, fixing the stopping decision, the agent chooses his (current and planned future) reporting strategies by comparing the expected payoffs of different reporting strategies, which determine the expected instantaneous payoff at each period up to the effective time horizon pinned down by the stopping decision. Therefore, the agent's stopping decision enters the principal's characterization of dynamic incentive compatibility through this interdependence. Given the mechanism, the stopping and reporting decisions together determine the agent's optimal behavior. The coupling of these two decisions in the analysis of incentive compatibility distinguishes this work from other dynamic mechanism design problems.
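The comparison between stopping immediately and the best expected continuation payoff is the backward-induction (Bellman) recursion of finite-horizon optimal stopping. The sketch below computes it for a finite-state Markov chain in which the reporting strategy is held fixed and already folded into the payoffs. The arrays `stop_payoff`, `flow_payoff`, and `P` and the function `optimal_stopping` are hypothetical illustrations, not the model of this paper, and periods are indexed from 0 in the code.

```python
import numpy as np


def optimal_stopping(stop_payoff, flow_payoff, P, delta=1.0):
    """Backward induction for a finite-horizon optimal stopping problem (hypothetical model).

    stop_payoff[t, s]: payoff collected if the agent stops at period t in state s
    flow_payoff[t, s]: payoff collected at period t if the agent continues
    P[t, s, s2]: probability of moving from state s at t to state s2 at t + 1 (t = 0..T-2)
    delta: discount factor in (0, 1]
    Returns V[t, s] (optimal value) and stop[t, s] (True where stopping is optimal).
    """
    T, S = stop_payoff.shape
    V = np.empty((T, S))
    stop = np.zeros((T, S), dtype=bool)
    # At the final period the mechanism terminates, so the agent stops.
    V[T - 1] = stop_payoff[T - 1]
    stop[T - 1] = True
    for t in range(T - 2, -1, -1):
        # Best expected payoff from continuing: today's flow plus the discounted future value.
        cont = flow_payoff[t] + delta * P[t] @ V[t + 1]
        stop[t] = stop_payoff[t] >= cont  # ties are broken in favor of stopping
        V[t] = np.maximum(stop_payoff[t], cont)
    return V, stop


stop_payoff = np.array([[1.0, 3.0], [2.0, 0.0], [1.0, 1.0]])
flow_payoff = np.zeros_like(stop_payoff)
P = np.full((2, 2, 2), 0.5)  # next state is uniform on {0, 1} regardless of the current state
V, stop = optimal_stopping(stop_payoff, flow_payoff, P)
print(V[0].tolist(), stop[0].tolist())  # [1.5, 3.0] [False, True]
```

In this toy example, state 0 at the first period continues because its stopping payoff (1.0) is below the expected continuation value (1.5), whereas state 1 stops at once.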

We define dynamic incentive compatibility in terms of Bellman equations and address the challenge induced by the agent's dynamic multidimensional decision making by establishing a one-shot deviation principle (see, e.g., [12]). The one-shot deviation principle is a foundational optimality result in game theory. It states that if no single-period deviation from truthful reporting is profitable for the agent, then no finite sequence of deviations from truthfulness is profitable either. Monotonicity of the designer's allocation rules with respect to the agent's private information is an important condition for the implementability of a mechanism. Consider a single-good auction in which the states are the bidders' valuations for the good and the outcomes are the probabilities that the agent wins the good. Here, monotonicity means that the probability of winning the good is non-decreasing in the reported state (see, e.g., [11, 35]). Myerson [35] has shown that monotonicity is sufficient for implementability in a one-dimensional domain. In general, however, monotonicity is only a necessary condition. Rochet [46] has established a necessary and sufficient condition, called cyclic monotonicity, under which one can design a mechanism such that truthful reporting is optimal for the rational agent. In this work, we describe a set of monotonicity conditions through inequalities characterized by functions of the allocation rules, which we call potential functions. Given the optimality of the stopping rule, we represent each payment rule by the potential functions. By applying the envelope theorem, we formulate the potential functions in closed form in terms of the allocation rule. Our main results, Propositions 2–4, characterize dynamic incentive compatibility when an agent with time-varying private information makes coupled decisions of reporting and stopping in a Markovian dynamic environment.
These characterizations serve as design principles: they explicitly construct the three payment rules in terms of the allocation rule, formulate the sufficient and the necessary conditions of dynamic incentive compatibility, and provide a solid analytical view of dynamic mechanism design that elicits the agent's truthful reporting and optimal stopping. The sufficient and the necessary conditions yield a revenue equivalence property for the dynamic environment. We also show that, given the threshold function and the allocation rule, the state-independent payment rule is unique up to a constant. We observe that the posted-price payments of the state-independent payment rule are restricted by a class of regularity conditions. Due to analytical intractability, relaxation approaches are applied to the principal's mechanism design problem. We also provide a sufficient condition to evaluate the loss of robustness of dynamic incentive compatibility due to the relaxations and approximations used to solve the principal's problem.
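In the static, one-dimensional benchmark behind this approach (Myerson's setting), the envelope theorem pins down the payment from the allocation rule up to a constant, and a monotone allocation then makes truthful reporting optimal. The sketch below checks both facts on a discrete type grid, assuming a linear utility \(\theta \, q({\hat{\theta }}) + p({\hat{\theta }})\) in which the agent receives the payment \(p\), as in this paper. It is a textbook illustration, not the dynamic payment rules of Propositions 2–4, and the names `envelope_payments` and `is_truthful` as well as both allocation rules are hypothetical.

```python
import numpy as np


def envelope_payments(theta, q, u_low=0.0):
    """Payments p_k = U_k - theta_k * q_k implied by a discrete envelope condition.

    U_k = u_low + sum_{j<k} q_j * (theta_{j+1} - theta_j) is the truthful payoff of type k
    (a left Riemann sum of the allocation); u_low is the free constant.
    """
    U = u_low + np.concatenate(([0.0], np.cumsum(q[:-1] * np.diff(theta))))
    return U - theta * q


def is_truthful(theta, q, p, tol=1e-12):
    """True if no type on the grid gains by reporting another type."""
    payoff = theta[:, None] * q[None, :] + p[None, :]  # payoff[k, l]: type k reports l
    return bool(np.all(np.diag(payoff) >= payoff.max(axis=1) - tol))


theta = np.linspace(0.0, 1.0, 101)
q_monotone = theta ** 2                 # non-decreasing allocation (hypothetical)
q_nonmonotone = np.sin(6 * theta) ** 2  # decreasing on part of the grid (hypothetical)

print(is_truthful(theta, q_monotone, envelope_payments(theta, q_monotone)))             # True
print(is_truthful(theta, q_monotone, envelope_payments(theta, q_monotone, u_low=0.3)))  # True
print(is_truthful(theta, q_nonmonotone, envelope_payments(theta, q_nonmonotone)))       # False
```

Shifting every payment by the same constant (`u_low=0.3`) leaves the agent's incentives unchanged, which is the static counterpart of payments being unique only up to a constant; with the non-monotone allocation, some type gains by reporting a neighboring type.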

1.1 Related Work

General settings regarding the source of the dynamics in the related literature can be divided into two categories. On the one hand, part of the literature on dynamic mechanism design considers a dynamic population of participants with static private information. Parkes and Singh [39] have provided an elegant extension of the social-welfare-maximizing (efficient) VCG mechanism to an online mechanism design framework that studies sequential allocation problems in a dynamic population environment. In particular, they have considered the setting in which each self-interested agent arrives and departs dynamically over time. The private information in their model includes the arrival and departure times as well as the agent's valuations of different outcomes. However, the agents do not learn new private information or update their private information. Pai and Vohra [37] have proposed a dynamic mechanism model in a similar setting but focusing on the designer's profit maximization (optimality). Other works focusing on this setting include, e.g., [14, 21, 22, 37, 48, 54]. On the other hand, a number of works study problems with a static population in which the underlying framework is dynamic because of the time evolution of the private information. This category of research was pioneered by the work of Baron and Besanko [9] on the regulation of a monopoly and the contribution of Courty and Li [15] on a sequential screening problem. There is a large body of work in this category, including, for instance, dynamic pivot mechanisms (e.g., [10, 25]) and dynamic team mechanisms (e.g., [6, 8, 36]). Pavan et al. [42] have provided a general dynamic mechanism model in which the dynamics of the agents' private information are captured by a set of kernels that can represent different forms of time evolution, including learning-based and i.i.d. evolution.
They have used a Myersonian approach and designed a profit-maximizing mechanism with monotonic allocation rules. Kakade et al. [25] have studied a dynamic virtual-pivot mechanism, provided conditions on the dynamics of the agents' private information, and derived an optimal mechanism in an environment they call separable. Bergemann and Välimäki [10] and Parkes [38] have provided surveys of recent advances in dynamic mechanism design.

The challenges in both settings described above come from the information asymmetry between the designer and the agents. Most mechanism design problems study direct revelation mechanisms, in which guaranteeing incentive compatibility is essential. In many dynamic population mechanism problems with static private information, the incentive compatibility constraints are essentially static ([25]). Mechanisms with dynamic private information, however, require additional effort to guarantee incentive compatibility. Monotonicity is an important property of incentive-compatible mechanisms and is widely used in the literature on dynamic mechanism design (see, e.g., [18, 25, 42]).

Many situations in economics can be modeled as stopping problems, and there is a recent literature on mechanism design with stopping times. Kruse and Strack [26] have studied optimal stopping as a mechanism design problem with monetary transfers. In their model, the agent privately observes a stochastic process of a payoff-relevant state. The incentive-compatible mechanism considered in their work contains two rules: one maps the agent's report into a stopping decision, and the other maps the agent's report into a payment that is realized only at the stopping time. Hence, in their model the mechanism determines the stopping decision for the agent based on the report, and the agent has only one decision (i.e., reporting) to make. Pavan et al. [41] have described an application of their dynamic mechanism model to the optimal stopping problem, where the allocation rule provided by the principal is the stopping rule. Basic formats of stopping rules are summarized in Lovász and Winkler [31]; for rigorous mathematical formulations of general stopping problems, see Peskir and Shiryaev [44].

1.2 Organization

The rest of the paper is organized as follows. In Sect. 2, we introduce the dynamic environment and specify the key concepts and notations. Section 3 formulates the dynamic principal-agent problem and determines the optimal stopping rule for the agent. In Sect. 4, we describe the implementability of the dynamic principal-agent model by defining the dynamic incentive compatibility constraint and reformulating the optimal stopping rule as a threshold rule. Section 5 characterizes the dynamic incentive compatibility by establishing the sufficient and the necessary conditions and showing the properties of revenue equivalence. In Sect. 6, we formally describe the optimization problem of the principal and show examples of its relaxations due to the analytical intractability. We also provide an approach to evaluate the loss of robustness of the dynamic incentive compatibility due to the relaxations. A case study is given in Sect. 6.2 as a theoretical illustration. Section 7 concludes the work.

2 Dynamic Environment

In this section, we describe the dynamic environment of the model. As a convention, let \({\tilde{x}}_{t}\) represent a random variable such that \(x_{t}\in X_{t}\) is a realized sample of \({\tilde{x}}_{t}\). By \(h^{x}_{s,t}\equiv \{x_{s},\dots ,x_{t}\}\in \prod _{k=s}^{t}X_{k}\), we denote the history of x from period s up to t (including t), with \(h^{x}_{t}\equiv h^{x}_{1,t}\), \(h^{x}_{t,t}\equiv h^{x}_{t,t-1} \equiv x_{t}\), for all \(t\in {\mathbb {T}}\). For any measurable set X, \(\varDelta (X)\) is the set of probability measures over X. Table 1 summarizes the main notations of this paper.

There are two rational (risk-neutral, under the expected utility hypothesis) participants in the mechanism: a principal (indexed by 0, she) and an agent (indexed by 1, he). We consider a finite time horizon with discrete time \(t \in {\mathbb {T}} \equiv \{1,2,\dots , T\}\). Let \({\mathbb {T}}_{t}\equiv \{t,t+1,\dots , T\}\), for all \(t\in {\mathbb {T}}\). At each period t, the agent observes his state \(\theta _{t}\in \varTheta _{t}\equiv {[{\underline{\theta }}_{t}, \bar{\theta }_{t}]} \subset {\mathbb {R}}\). Based on \(\theta _{t}\), the agent sends a report \(m_{t}\in M_{t}\) to the principal. In this paper, we restrict our attention to direct mechanisms ([20]), in which the message space coincides with the state space, i.e., \(M_{t}=\varTheta _{t}\), for all \(t\in {\mathbb {T}}\). Upon receiving the report \({\hat{\theta }}_{t}\), the principal specifies an allocation \(a_{t}\in A_{t}\) and a payment \(p_{t}\in {\mathbb {R}}\). Each \(A_{t}\) is a measurable space of all possible allocations. Let \(\sigma _{t}: \prod _{s=1}^{t}\varTheta _{s} \times \prod _{s=1}^{t-1}\varTheta _{s} \times \prod _{s=1}^{t-1} A_{s} \mapsto \varTheta _{t}\) be the reporting strategy at t, such that \({\hat{\theta }}_{t}=\sigma _{t} (\theta _{t}|h^{\theta }_{t-1}, h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1})\) is the report sent to the principal at period t, given the agent's period-t true state \(\theta _{t}\) and the histories \(h^{\theta }_{t-1}, h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1}\).
The allocation and the payment are chosen, respectively, by the decision rules \(\{\alpha , \phi \}\), where \(\alpha \equiv \{\alpha _{t} \}_{t\in {\mathbb {T}} }\) is a collection of (instantaneous) allocation rules \(\alpha _{t}: \prod _{s=1}^{t}\varTheta _{s} \mapsto A_{t}\) and \(\phi \equiv \{\phi _{t} \}_{t\in {\mathbb {T}}}\) is a collection of (instantaneous) payment rules \(\phi _{t}:\prod _{s=1}^{t}\varTheta _{s}\mapsto {\mathbb {R}}\), such that the principal specifies an allocation \(a_{t}=\alpha _{t}({\hat{\theta }}_{t}|h^{{\hat{\theta }}}_{t-1})\) and a payment \(p_{t} = \phi _{t}({\hat{\theta }}_{t}|h^{{\hat{\theta }}}_{t-1})\) when \({\hat{\theta }}_{t}\) is reported at the current period and \(h^{{\hat{\theta }}}_{t-1}\) has been reported up to \(t-1\).

The mechanism allows the agent to leave at any period \(t\in {\mathbb {T}}\): at each period, he decides whether to stop or continue according to his optimal stopping rule. Hence, the agent's choice of \(\sigma _{t}\) is coupled with his stopping decision at each period t, and the principal's characterization of incentive compatibility has to take these coupled decisions into account. To influence the agent's stopping decision, the principal uses a terminal payment rule \(\rho : {\mathbb {T}} \mapsto {\mathbb {R}}\), with \(\rho (T)=0\), which is independent of the agent's reports and specifies an additional payment \(\rho (t)\) at period t if the agent decides to stop at t. To distinguish the intermediate periods from the terminal period, let \(\xi _{t}\) denote the period-t (state-dependent) payment rule used in place of \(\phi _{t}\) when the agent realizes his stopping time at period t, so that the agent receives a payment \(p_{t} = \xi _{t}({\hat{\theta }}_{t}|h^{{\hat{\theta }}}_{t-1}) + \rho (t)\).

The mechanism is information-asymmetric because \(\theta _{t}\) is privately possessed by the agent for every \(t\in {\mathbb {T}}\) and the principal can learn the true state only through the report \({\hat{\theta }}_{t}\). The mechanism is dynamic because the agent’s state \(\theta _{t}\) evolves endogenously over time and the decisions of both the agent and the principal are made over multiple periods. The state dynamics lead to the time evolution of probability measures of the states. As a result, the expectations of future behaviors at different periods are in general different from each other.

2.1 Markovian Dynamics

We consider an agent whose state evolves endogenously over time in a Markovian environment, and we describe the details of these dynamics and of the underlying stochastic process that governs them.

Definition 1

(Markovian Dynamics) The Markovian (endogenous) dynamics are characterized by a set of transition kernels \(K=\{K_{t}\}_{t\in {\mathbb {T}}}\), where \(K_{t}: \varTheta _{t-1} \times \prod _{s=1}^{t-1} A_{s} \mapsto \varDelta (\varTheta _{t})\) is the period-t transition kernel of the state, i.e., \({\tilde{\theta }}_{t}\sim K_{t}(\theta _{t-1}, h^{a}_{t-1})\). Let \(F_{t}(\cdot |\theta _{t-1}, h^{a}_{t-1})\) be the cumulative distribution function (c.d.f.) of \({\tilde{\theta }}_{t}\), with \(f_{t}(\cdot | \theta _{t-1}, h^{a}_{t-1})\) as the probability density function (p.d.f.). \(F_{1}\) and \(f_{1}\) are given at the initial period.

In Markovian dynamics, the next-period state \(\theta _{t+1}\) is generated from the current-period state \(\theta _{t}\) and the history of allocations \(h^{a}_{t}\). Hence, given \(\theta _{t}\) and \(h^{a}_{t}\), \(\theta _{t+1}\) is independent of the history of true states \(h^{\theta }_{t-1}\). By the Ionescu–Tulcea theorem (see, e.g., [5]), the transition kernel K, the allocation rule \(\alpha \), and the agent's reporting strategy \(\sigma \) define a unique stochastic process (i.e., a probability measure) that governs the state dynamics. Let \(\varXi _{\alpha ; \sigma }\) denote this stochastic process. Given any realization of the period-t state and the history of states, we define the period-t interim process as follows.

Definition 2

(Interim Process) The interim process \(\varXi _{\alpha ;\sigma }\big [h^{\theta }_{t}\big ]\) at period \(t\in {\mathbb {T}}\) consists of

(i) a deterministic process of the realized \(h^{\theta }_{t}\in \prod _{s=1}^{t} \varTheta _{s}\) up to time t, and

(ii) a stochastic process starting from \(t+1\) that is uniquely characterized by period-t state \(\theta _{t}\), history of allocations \(h^{a}_{t}\in \prod _{s=1}^{t} A_{s}\), the allocation rule \(\alpha ^{T}_{t+1}\equiv \{\alpha _{s}\}_{s\in {\mathbb {T}}_{t+1}}\), and the planned reporting strategies \(\sigma ^{T}_{t+1}=\{\sigma _{s}\}_{s\in {\mathbb {T}}_{t+1}}\), given the kernels \(K^{T}_{t+1}\equiv \{K_{s}\}_{s\in {\mathbb {T}}_{t+1}}\).
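Operationally, the interim process keeps the realized history fixed and generates the remaining states period by period from the kernels, which mirrors the sequential construction behind the Ionescu–Tulcea theorem. The sketch below assumes truthful reporting, a hypothetical kernel in which the next state equals the current state plus a drift proportional to the latest allocation plus Gaussian noise, clipped to a common interval \([0, 1]\), and a hypothetical allocation rule that depends only on the current report. None of these functional forms come from the paper, and the kernel's dependence on \(h^{a}_{t-1}\) is simplified to the last allocation.

```python
import numpy as np

THETA_LOW, THETA_HIGH = 0.0, 1.0  # hypothetical state space [0, 1] in every period


def allocation_rule(report):
    """Hypothetical allocation rule: allocate (a_t = 1) iff the report is at least 0.5."""
    return float(report >= 0.5)


def kernel_draw(theta_prev, a_prev, rng, drift=0.1, noise=0.2):
    """Hypothetical kernel K_t: depends on theta_{t-1} and on the last allocation only."""
    step = drift * a_prev + noise * rng.normal()
    return float(np.clip(theta_prev + step, THETA_LOW, THETA_HIGH))


def sample_interim_path(realized_states, T, rng):
    """Draw one path of the interim process given the realized history h^theta_t.

    The realized prefix is kept fixed (the deterministic part); the remaining states up to T
    are drawn sequentially from the kernel under truthful reporting (the stochastic part).
    An empty prefix gives a draw from the ex-ante process, with theta_1 ~ U[0, 1] assumed.
    """
    states = list(realized_states)
    if not states:
        states.append(float(rng.uniform(THETA_LOW, THETA_HIGH)))
    allocations = [allocation_rule(s) for s in states]  # truthful reports equal true states
    while len(states) < T:
        theta_next = kernel_draw(states[-1], allocations[-1], rng)
        states.append(theta_next)
        allocations.append(allocation_rule(theta_next))
    return states, allocations


rng = np.random.default_rng(1)
states, allocations = sample_interim_path([0.4, 0.7], T=5, rng=rng)
print(states[:2], len(states))  # [0.4, 0.7] 5

# Monte Carlo estimate of an interim expectation, here E[theta_5 | h^theta_2 = (0.4, 0.7)].
estimate = np.mean([sample_interim_path([0.4, 0.7], 5, rng)[0][-1] for _ in range(2000)])
```

Averaging over many continuations of the same prefix approximates expectations under \(\varXi _{\alpha }[h^{\theta }_{t}]\), while an empty prefix approximates expectations under the ex-ante process.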

Given the processes \(\varXi _{\alpha ;\sigma }\) and \(\varXi _{\alpha ;\sigma }[h^{\theta }_{t}]\), we specify the timing of the mechanism as follows.

I. Ex-ante stage: no state has been realized yet, i.e., the mechanism has not started. At this stage, the randomness of the future is characterized by \(\varXi _{\alpha ;\sigma }\).

II. Interim stage (at period t): \(h^{\theta }_{t}\) is realized according to the Markovian dynamics. At each (period-t) interim stage:

1. Given the current state \(\theta _{t}\), the agent chooses a report \({\hat{\theta }}_{t}=\sigma _{t}(\theta _{t}|h^{\theta }_{t-1}, h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1})\) and decides whether to stop immediately or continue to the next period.

2. Upon receiving \({\hat{\theta }}_{t}\), the principal specifies an allocation \(a_{t}= \alpha _{t}({\hat{\theta }}_{t}|h^{{\hat{\theta }}}_{t-1})\) and a payment \(p_{t} = \phi _{t}({\hat{\theta }}_{t}|h^{{\hat{\theta }}}_{t-1})\) if the agent decides to continue, or \(p_{t}=\xi _{t}({\hat{\theta }}_{t}|h^{{\hat{\theta }}}_{t-1})+\rho (t)\) if the agent decides to stop.

At each interim stage, the randomness of the future is characterized by \(\varXi _{\alpha ;\sigma }[h^{\theta }_{t}]\). We denote the corresponding expectations of \(\varXi _{\alpha ;\sigma }\) and \(\varXi _{\alpha ;\sigma }[h^{\theta }_{t}]\) by \({\mathbb {E}}^{\varXi _{\alpha ;\sigma }}\big [\cdot \big ]\) and \({\mathbb {E}}^{\varXi _{\alpha ;\sigma }[h^{\theta }_{t}]}\big [\cdot \big ]\), respectively. We suppress the notation \(\sigma \) when it is a truthful reporting strategy.
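The timing can be traced on a single run of the mechanism. The sketch below follows one realized sequence of truthful reports, applies the intermediate payment rule while the agent continues and the terminal payment \(\xi _{t} + \rho (t)\) at the stopping period, and then ends the interaction. The rules passed as `alpha`, `phi`, `xi`, and `rho` are hypothetical placeholders that depend only on the current report (the paper's rules may depend on the whole reported history), the stopping period is taken as given rather than derived from the agent's optimal stopping rule, and a negative payment means that the agent pays the principal.

```python
from typing import Callable, List, Tuple


def run_mechanism(reports: List[float], stop_period: int,
                  alpha: Callable[[float], float],
                  phi: Callable[[float], float],
                  xi: Callable[[float], float],
                  rho: Callable[[int], float]) -> List[Tuple[int, float, float]]:
    """Outcomes (t, a_t, p_t) for periods 1..stop_period under the stated timing.

    reports[t - 1] is the report at period t; the agent stops at stop_period.
    """
    T = len(reports)
    if not 1 <= stop_period <= T:
        raise ValueError("the agent must stop by the final period T")
    outcomes = []
    for t in range(1, stop_period + 1):
        report = reports[t - 1]
        a_t = alpha(report)
        if t < stop_period:
            p_t = phi(report)          # agent continues: intermediate payment rule
        else:
            p_t = xi(report) + rho(t)  # agent stops: terminal rule plus posted price
        outcomes.append((t, a_t, p_t))
    return outcomes


T = 4


def early_termination_fee(t: int) -> float:
    """Hypothetical posted-price rule with rho(T) = 0 and a fee for stopping early."""
    return 0.0 if t == T else -1.0


outcomes = run_mechanism([0.25, 0.75, 1.0, 0.5], stop_period=2,
                         alpha=lambda r: float(r >= 0.5),
                         phi=lambda r: -0.5 * r,
                         xi=lambda r: -0.25 * r,
                         rho=early_termination_fee)
print(outcomes)  # [(1, 0.0, -0.125), (2, 1.0, -1.1875)]
```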


3 Dynamic Principal-Agent Problem

In this section, we describe the principal-agent problem by identifying their respective objectives. Let \(u_{i,t}: \varTheta _{t} \times A_{t} \mapsto {\mathbb {R}}\) denote the (instantaneous) utility of the participant i for \(i\in \{0,1\}\) such that \(u_{i,t}(\theta _{t}, a_{t})\) is the utility that the participant i receives when the agent’s true state is \(\theta _{t}\) and the allocation is \(a_{t}\) for all \(t\in {\mathbb {T}}\). Given any allocation rule \(\alpha \), we assume that \(u_{i,t}\) is Lipschitz continuous in \(\theta _{t}\), for all \(\theta _{t}\in \varTheta _{t}\), all \(t\in {\mathbb {T}}\).

Given any reporting strategy \(\sigma \), define the ex-ante expected values of the principal and the agent, respectively, for any time horizon \(\tau \in {\mathbb {T}}\) as follows:

and

where \(\delta \in (0,1]\) is the discount factor. Let \(J^{\alpha ,\phi ,\xi ,\rho }_{0}(\cdot ;\sigma ):{\mathbb {T}} \mapsto {\mathbb {R}}\) and \(J^{\alpha ,\phi ,\xi ,\rho }_{1}(\cdot ;\sigma ): {\mathbb {T}} \mapsto {\mathbb {R}}\) denote the ex-ante expected payoffs of the principal and the agent, respectively, given as follows:

Principal:

$$J^{\alpha , \phi , \xi , \rho }_{0}(\tau ;\sigma ) \;\equiv\; Z^{\alpha , \phi , \xi }_{0}(\tau ;\sigma ) - \rho (\tau ); \tag{1}$$

Agent:

$$J^{\alpha , \phi , \xi , \rho }_{1}(\tau ;\sigma ) \;\equiv\; Z^{\alpha , \phi , \xi }_{1}(\tau ;\sigma ) + \rho (\tau ). \tag{2}$$

Similarly, we define the interim expected value of the agent evaluated at period t, when the histories are \((h^{\theta }_{t-1}, h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1})\), \(\theta _{t}\) is observed, and \(\sigma _{t}(\theta _{t})\) is reported, as follows, for any \(\tau \in {\mathbb {T}}_{t}\):

with \(Z^{\alpha , \phi , \xi }_{1,t}(\tau , \theta _{t}|h^{\theta }_{t-1})\equiv Z^{\alpha , \phi , \xi }_{1,t}(\tau , \theta _{t},\theta _{t};\sigma |h^{\theta }_{t-1})\) when \(\sigma \) is truthful at every period. Then, the corresponding period-t interim expected payoff of the agent can be defined as follows:

with \(J^{\alpha , \phi , \xi , \rho }_{1,t}(\tau , \theta _{t}|h^{\theta }_{t-1}) \equiv J^{\alpha , \phi , \xi , \rho }_{1,t}(\tau , \theta _{t},\theta _{t};\sigma |h^{\theta }_{t-1})\) when \(\sigma \) is truthful at every period.

At the ex-ante stage, the principal makes a take-it-or-leave-it offer to the agent, taking into account the agent's stopping rule. Given the stopping rule, the time horizon \(\tau \) of the ex-ante expected payoffs can be calculated. The principal aims to maximize her ex-ante expected payoff (1) by choosing the decision rules \(\{\alpha ,\phi ,\xi , \rho \}\) while anticipating the agent's planned reporting strategy and stopping time. The agent, with his planned reporting strategy \(\sigma \), decides whether to accept the offer by checking the following rational participation (RP) constraint:

under which the agent expects a nonnegative payoff from participating. Besides the RP constraint, the principal also wants to incentivize the agent to truthfully reveal his state in the direct mechanism. To achieve this, the principal imposes an incentive compatibility (IC) constraint, in addition to the RP constraint, which guarantees that truthful reporting at each period is in the agent's best interest.

With knowledge of the Markovian dynamics, the agent can determine his current reporting strategy and plan his future behavior. Suppose that at period t, the agent observes \(\theta _{t}\) and reports \({\hat{\theta }}_{t}\). The report leads to a current-period allocation \(a_{t}=\alpha _{t}({\hat{\theta }}_{t}|h^{{\hat{\theta }}}_{t-1})\). As a rational participant, the agent makes his current-period decision to maximize his interim expected payoff (4). Due to the Markovian dynamics, the probability measure of each future state depends on the current state \(\theta _{t}\) and the history of allocations \(h^{a}_{t}\). Hence, the agent's decision of how to report his current-period state is independent of the past true states but depends on \(h^{a}_{t-1}\) and on \(h^{{\hat{\theta }}}_{t-1}\) (through \(h^{a}_{t-1}\)), i.e., \({\hat{\theta }}_{t}=\sigma _{t}(\theta _{t}| h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1})\). (Hereafter, we drop \(h^{\theta }\) from the notation of the agent's reporting strategy.) Therefore, guaranteeing IC when the agent has reported truthfully in all past periods is sufficient to ensure IC when the agent has a history of arbitrary reports. Given the dependence of future states on the histories, the agent can plan his future reporting strategies from period \(t+1\) onward to obtain an optimal interim expected payoff. As a result, the principal's construction of the IC constraint at each period needs to account for both the agent's current and his planned future behavior. As described in Sect. 3.2, the agent's adoption of a stopping rule further complicates the principal's task of guaranteeing IC.
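Checking incentives period by period, as above, relies on the one-shot deviation principle. The sketch below verifies that principle numerically for a generic single-agent, finite-horizon decision problem with finitely many states and actions (a Markov decision process with randomly drawn payoffs and transitions, not the reporting-and-stopping problem of this paper): every deterministic policy that admits no profitable one-shot deviation attains the optimal value computed by backward induction. The sizes `T`, `S`, `A`, the arrays `P` and `R`, and the function names are hypothetical.

```python
import itertools

import numpy as np

rng = np.random.default_rng(0)
T, S, A = 3, 2, 2  # hypothetical horizon, number of states, number of actions
P = rng.dirichlet(np.ones(S), size=(T, S, A))  # P[t, s, a] = next-state distribution
R = rng.normal(size=(T, S, A))                 # R[t, s, a] = instantaneous payoff


def evaluate(policy):
    """Value V[t, s] of following the deterministic policy[t, s] from period t onward."""
    V = np.zeros((T + 1, S))  # V[T] = 0 after the final period
    for t in reversed(range(T)):
        for s in range(S):
            a = policy[t, s]
            V[t, s] = R[t, s, a] + P[t, s, a] @ V[t + 1]
    return V


def has_profitable_one_shot_deviation(policy, tol=1e-12):
    """True if deviating at a single (t, s) and then reverting to policy strictly helps."""
    V = evaluate(policy)
    Q = R + np.einsum("tsan,tn->tsa", P, V[1:])  # value of each one-shot deviation
    return bool(np.any(Q > V[:T, :, None] + tol))


# Optimal values and policy by backward induction.
V_star = np.zeros((T + 1, S))
pi_star = np.zeros((T, S), dtype=int)
for t in reversed(range(T)):
    Q_t = R[t] + P[t] @ V_star[t + 1]
    pi_star[t], V_star[t] = Q_t.argmax(axis=1), Q_t.max(axis=1)

# Every policy without a profitable one-shot deviation is optimal.
for actions in itertools.product(range(A), repeat=T * S):
    policy = np.array(actions).reshape(T, S)
    if not has_profitable_one_shot_deviation(policy):
        assert np.allclose(evaluate(policy)[0], V_star[0])
print(has_profitable_one_shot_deviation(pi_star))  # False
```

Enumerating all policies is only feasible for tiny problems; here it serves to verify the principle, not as a design tool.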

3.1 Main Assumptions

We introduce the following assumptions to support our theoretical analysis of the dynamic model with optimal stopping time.

Assumption 1

Given any \(\{\alpha , \phi , \xi , \rho \}\) and \(\sigma \), the following holds, for all \(t\in {\mathbb {T}}\),

Assumption 1 guarantees that the expected absolute value of any period-t interim expected payoff is bounded for any time horizon \(\tau \in {\mathbb {T}}_{t}\). This assumption can be satisfied, for example, when the instantaneous payoff \(u_{i,t}(\theta _{t}, a_{t}) + p_{t}\) is uniformly bounded for any \(\theta _{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\), given \(\{\alpha , \phi , \xi , \rho \}\) and \(\sigma \). This assumption is essential to the existence of the optimal stopping rule described in Sect. 3.2.

Define \(\chi ^{\alpha ,\phi ,\xi }_{1,t}\) as the difference between the interim expected values when \(\tau =t\) and \(\tau =t+1\), respectively, evaluated at period t as follows,

Here, \(\chi ^{\alpha , \phi , \xi }_{1,t}(\theta _{t})\) can be interpreted as the expected marginal change from deferring the stopping time from the current period t to the next period \(t+1\). Hence, \(\chi ^{\alpha , \phi , \xi }_{1,t}(\theta _{t})\) characterizes the marginal incentive behind the agent’s stopping decision at period t. General optimal stopping problems do not require monotonicity assumptions on \(\chi ^{\alpha , \phi , \xi }_{1,t}(\theta _{t})\). However, many economic models impose a monotonicity condition known as the single crossing property (see, e.g., [45]): \(\chi ^{\alpha , \phi , \xi }_{1,t}(\theta _{t})\) crosses the horizontal axis just once, from negative to positive (resp. from positive to negative), as the state \(\theta _{t}\) increases (resp. decreases). The following assumption gives a version of the single crossing property suited to our dynamic model.
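Once \(\chi ^{\alpha , \phi , \xi }_{1,t}\) has been evaluated on a grid of states, the single crossing property can be checked numerically. The Python sketch below assumes the values are sampled on an increasing grid. The sample functions in the usage lines are hypothetical stand-ins, since \(\chi ^{\alpha , \phi , \xi }_{1,t}\) itself depends on the mechanism through (7). The sketch also shows that a non-decreasing \(\chi \), as required by Assumption 2, always passes the check.

```python
def crosses_once_upward(values, tol=0.0):
    """True if values (sampled on an increasing state grid) change sign at most
    once, and only from negative to non-negative, as the state increases."""
    seen_nonneg = False
    for v in values:
        if v >= -tol:
            seen_nonneg = True
        elif seen_nonneg:
            # a negative value after a non-negative one is a downward crossing
            return False
    return True


def is_non_decreasing(values):
    """True if values are weakly increasing along the grid (Assumption 2)."""
    return all(a <= b for a, b in zip(values, values[1:]))


# Usage with hypothetical stand-ins for chi on the grid 0.0, 0.1, ..., 1.0
grid = [k / 10 for k in range(11)]
print(crosses_once_upward([th - 0.3 for th in grid]))              # True
print(crosses_once_upward([(th - 0.5) ** 2 - 0.1 for th in grid]))  # False
```

Any non-decreasing sequence passes `crosses_once_upward`, because once it becomes non-negative it stays non-negative. This is why Assumption 2 is sufficient, though not necessary, for single crossing.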

Assumption 2

\(\chi ^{\alpha , \phi , \xi }_{1,t}(\theta _{t})\) is non-decreasing in \(\theta _{t}\) for all \(t\in {\mathbb {T}}\).

This assumption is imposed to establish a threshold-based optimal stopping rule in Sect. 4.1. To ensure that Assumption 2 holds, we can, for example, require the derivative of \(\chi ^{\alpha ,\phi ,\xi }_{1,t}\) with respect to \(\theta _{t}\) to be nonnegative. Alternatively, we can impose monotonicity on the utility function, the payment rules, and their combinations, e.g., require each of \({\mathbb {E}}^{\varXi _{\alpha ;\sigma }[h^{\theta }_{t}] }\Big [\xi _{t+1}({\tilde{\theta }}_{t+1}|h^{\theta }_{t})-\xi _{t}(\theta _{t}|h^{\theta }_{t-1})\Big ]\), \(\phi _{t}(\theta _{t})\), and \({\mathbb {E}}^{\varXi _{\alpha ;\sigma }[h^{\theta }_{t}] }\Big [u_{1,t+1}({\tilde{\theta }}_{t+1}, \alpha _{t+1}({\tilde{\theta }}_{t+1}|h^{\theta }_{t}))\Big ]\) to be non-decreasing in \(\theta _{t}\). This assumption has a natural interpretation in many economic scenarios. For example, suppose that the principal aims to establish a multiperiod cooperation with an agent who completes the tasks assigned by the principal over time and whose productivity (i.e., state) evolves over time. Assumption 2 then requires that the mechanism give an agent with higher productivity a stronger incentive to continue, rather than stop immediately, than an agent with lower productivity.

Assumption 3

The probability density \(f_{t}(\theta _{t}|\theta _{t-1}, h^{a}_{t-1})>0\) for all \(\theta _{t}\in \varTheta _{t}\), \(\theta _{t-1}\in \varTheta _{t-1}\), \(h^{a}_{t-1}\in \prod _{s=1}^{t-1} A_{s}\), \(t\in {\mathbb {T}}\backslash \{1\}\).

Assumption 3 restricts our attention to a full-support environment, in which the transition density is strictly positive at every state at each period. This assumption is imposed to support the uniqueness of the threshold-based optimal stopping rule and to ensure that the marginal effect of a change in the current state on the future states is bounded (as used in Lemma 8). Finally, the following assumption imposes first-order stochastic dominance on the dynamics of the agent’s state.

Assumption 4

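For all \(\theta '_t \ge \theta _t\in \varTheta _{t}\), \({\bar{\theta }}_{t+1}\in \varTheta _{t+1}\), \(h^{a}_{t}\in \prod _{s=1}^{t} A_{s}\), \(t\in {\mathbb {T}}\backslash \{T\}\),

$$\begin{aligned} F_{t+1}({\bar{\theta }}_{t+1}|\theta '_{t}, h^{a}_{t}) \le F_{t+1}({\bar{\theta }}_{t+1}|\theta _{t}, h^{a}_{t}). \end{aligned}$$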

Assumption 4 states that a larger state at the current period leads to a larger state at the next period in the sense of first-order stochastic dominance, i.e., the partial derivative of \(F_{t+1}\) with respect to \(\theta _{t}\) is non-positive. It is straightforward to see that Assumptions 1, 3, and 4 are mutually compatible. However, the monotonicity of the state dynamics influences the probability measures of the expectations taken in the term \(Z^{\alpha ,\phi ,\xi }_{1,t}\). As a result, the monotonicity of \(\chi ^{\alpha ,\phi ,\xi }_{1,t}\) specified by Assumption 2 is related to Assumption 4. Given its definition in (7), the monotonicity of \(\chi ^{\alpha ,\phi ,\xi }_{1,t}\) also relates to the monotonicity of the utility function, the allocation rule, and the payment rules. In Sect. 5, the characterizations of dynamic incentive compatibility consider both the case in which all four assumptions above are satisfied and a more general case without Assumption 2.
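To make Assumption 4 concrete, the Python sketch below checks first-order stochastic dominance for a hypothetical Gaussian AR(1) transition \(\theta _{t+1} = a\theta _{t} + b + s\varepsilon _{t+1}\), with \(\varepsilon _{t+1}\) standard normal, ignoring the dependence on \(h^{a}_{t}\). This kernel is an assumption chosen for simplicity. Its support is the whole real line rather than a bounded \(\varTheta _{t+1}\), and it satisfies Assumption 4 exactly when \(a \ge 0\).

```python
import math


def ar1_cdf(x, theta, a=0.8, b=0.0, s=1.0):
    """F_{t+1}(x | theta_t = theta) for the hypothetical kernel
    theta_{t+1} = a * theta_t + b + s * eps, eps ~ N(0, 1)."""
    z = (x - a * theta - b) / s
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def satisfies_fosd(cdf, states, next_states, tol=1e-12):
    """Check F(x | theta') <= F(x | theta) for all theta' >= theta in the
    increasing grid `states` and all x in `next_states`."""
    for i, theta in enumerate(states):
        for theta_prime in states[i:]:
            for x in next_states:
                if cdf(x, theta_prime) > cdf(x, theta) + tol:
                    return False
    return True


states = [-1.0, -0.5, 0.0, 0.5, 1.0]
next_states = [-2.0, -1.0, 0.0, 1.0, 2.0]
print(satisfies_fosd(ar1_cdf, states, next_states))  # True: a = 0.8 >= 0
print(satisfies_fosd(lambda x, th: ar1_cdf(x, th, a=-0.5), states, next_states))  # False
```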

3.2 Optimal Stopping Rule

In this section, we construct the optimal stopping rule for the agent and identify the dynamic incentive compatibility constraints when the agent makes coupled decisions of reporting and stopping at each period. Let \(\varOmega [\sigma ]\) denote the agent’s stopping rule when he uses reporting strategy \(\sigma \). The optimality of \(\varOmega [\sigma ]\) is defined as follows.

Definition 3

(Optimal Stopping) Given \(\{\alpha ,\phi ,\xi , \rho \}\) and any reporting strategy \(\sigma \), the agent’s stopping rule \(\varOmega [\sigma ]\) is optimal if there exists a \(\tau ^{*}\) such that

Let \(\varOmega ^{*}[\sigma ]\) denote the optimal stopping rule when the agent adopts \(\sigma \).

Fix \(\{\alpha ,\phi ,\xi ,\rho \}\). To study the optimal stopping problem (9), we introduce the agent’s valuation function at period t as follows, for all \(\theta _{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\), and any reporting strategy \(\sigma \):

\(V^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t})=V^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t};\sigma )\) when \(\sigma \) is truthful, where the supremum is taken over all time horizons \(\tau \) of the process \(\varXi _{\alpha }[h^{\theta }_{t}]\) starting from t. The valuation function \(V^{\alpha , \phi , \xi , \rho }_{t}(\theta _{t}; \sigma )\) is the maximum interim expected payoff the agent can obtain at period t by varying the time horizon, given that he has reported \(h^{{\hat{\theta }}}_{t-1}\), observes the current state \(\theta _{t}\), reports \({\hat{\theta }}_{t}=\sigma _{t}(\theta _{t}| h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1})\), and plans to report future states according to \(\{\sigma _{s}\}_{s\in {\mathbb {T}}_{t+1}}\). Note that the agent can modify his planned future reporting strategies at different periods when evaluating the valuation (10).

Suppose that Assumption 1 holds. Backward induction leads to the following Bellman equation, for any reporting strategy \(\sigma \):

(i) for all \(t\in {\mathbb {T}}\backslash \{T\}\),

$$\begin{aligned} \begin{aligned}&V^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t}; \sigma ) \\&\quad = \max \Big ( J^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t, \theta _{t},\sigma _{t}(\theta _{t}| h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1});\sigma |h^{\theta }_{t-1}), {\mathbb {E}}^{\varXi _{\alpha ;\sigma }[h^{\theta }_{t}]}\big [ V^{\alpha ,\phi ,\xi ,\rho }_{t+1}({\tilde{\theta }}_{t+1};\sigma )\big ] \Big ); \end{aligned} \end{aligned}\tag{11}$$

(ii) for \(t=T\),

$$\begin{aligned} V^{\alpha ,\phi ,\xi ,\rho }_{T}(\theta _{T};\sigma ) = J^{\alpha ,\phi ,\xi ,\rho }_{1,T}(T, \theta _{T},\sigma _{T}(\theta _{T}| h^{{\hat{\theta }}}_{T-1}, h^{a}_{T-1}); \sigma |h^{\theta }_{T-1}). \end{aligned}\tag{12}$$

Backward induction yields an equivalent representation of \(V^{\alpha ,\phi ,\xi ,\rho }_{t}\). This representation compares the interim expected payoff if the agent stops at t with the expected valuation of the next period if he continues. Formulations (11) and (12) naturally lead to the following definition of a stopping region, for any reporting strategy \(\sigma \), \(t\in {\mathbb {T}}\):

Hence, the period-t stopping region is the set of period-t states at which the period-t valuation equals the period-t interim expected payoff from stopping at t. Based on the stopping region (13), we define the stopping rule, for any reporting strategy \(\sigma \):

The stopping rule \(\varOmega ^{*}[\sigma ]\) shown in (14) calls for stopping at period t if the realized state \(\theta _{t}\) is in the stopping region. By Theorem 1.9 of Peskir and Shiryaev [44], \(\varOmega ^{*}[\sigma ]\) given in (14) solves (10). Since \(J^{\alpha ,\phi , \xi , \rho }(\tau ^{*};\sigma ) = {\mathbb {E}}^{{\tilde{\theta }}_{1}\sim K_{1}}\Big [V_{1,1}^{\alpha ,\phi , \xi , \rho }({\tilde{\theta }}_{1};\sigma )\Big ]\), \(\varOmega ^{*}[\sigma ]\) given in (14) is an optimal stopping rule. Interested readers may refer to Chapter 1 of Peskir and Shiryaev [44] for a rigorous characterization of general optimal stopping problems for Markov processes.
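The recursion (11)-(12) and the stopping region (13) can be illustrated on a finite state grid. The Python sketch below rests on several assumptions:

- the agent reports truthfully;
- each period has a Markov transition matrix that already embeds the allocation rule;
- stopping payoffs depend only on the current state, so the dependence on \(h^{\theta }_{t-1}\) is suppressed.

The inputs `J_stop` and `P` are hypothetical, and the code indexes periods from 0.

```python
def backward_induction(J_stop, P):
    """Solve V_T = J_T and V_t = max(J_t, E[V_{t+1}]) on a finite grid.

    J_stop[t][i]: payoff from stopping at period t in state i (periods 0..T-1).
    P[t][i][j]:   transition probability from state i at t to state j at t+1.
    Returns V[t][i] and, for each t, the set of state indices where
    V_t = J_t, i.e. where stopping is optimal (ties are resolved as stopping).
    """
    T = len(J_stop)
    V = [None] * T
    V[T - 1] = list(J_stop[T - 1])  # final period: the agent must stop
    for t in range(T - 2, -1, -1):
        # expected next-period valuation from each current state
        cont = [sum(p * v for p, v in zip(row, V[t + 1])) for row in P[t]]
        V[t] = [max(js, c) for js, c in zip(J_stop[t], cont)]
    regions = [{i for i, (v, js) in enumerate(zip(V[t], J_stop[t])) if v == js}
               for t in range(T)]
    return V, regions


# Two periods, two states (low = 0, high = 1); all numbers are illustrative.
J_stop = [[3.0, 0.0], [2.0, 3.0]]
P = [[[0.5, 0.5], [0.0, 1.0]]]
V, regions = backward_induction(J_stop, P)
print(V)        # [[3.0, 3.0], [2.0, 3.0]]
print(regions)  # [{0}, {0, 1}]
```

In this toy, the low state stops at the first period and the high state continues. This is the kind of pattern that the threshold rule of Sect. 4.1 formalizes.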

The coupling of the reporting strategy and the optimal stopping decision is captured in (13) and (14). Given \(\{\alpha ,\phi ,\xi ,\rho \}\), the agent chooses the current \(\sigma _{t}\) and plans the future \(\{\sigma _{s}\}_{s\in {\mathbb {T}}_{t+1}}\) to maximize the current period valuation \(V^{\alpha ,\phi ,\xi , \rho }_{t}(\theta _{t};\sigma )\). For any reporting strategy \(\sigma \), \(\theta _{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\), we introduce

Here, \(\tau ^{\sup }_{t}[\theta _{t};\sigma ]\) is the smallest time horizon that attains the maximum period-t interim expected payoff when the agent uses \(\sigma \) and his true state is \(\theta _{t}\). On the one hand, the agent’s current \(\sigma _{t}\) and the planned future \(\{\sigma _{s}\}_{s\in {\mathbb {T}}_{t+1}}\) determine \(\tau ^{\sup }_{t}[\theta _{t};\sigma ]\). On the other hand, \(\tau ^{\sup }_{t}[\theta _{t};\sigma ]\) determines how far the agent should look into the future to maximize \(V^{\alpha ,\phi ,\xi , \rho }_{t}(\theta _{t};\sigma )\). This coupling complicates the characterization of incentive compatibility, a challenge we address in the next section.

4 Implementability

In this section, we formally define the incentive compatibility in our dynamic environment and formulate the optimal stopping rule as a threshold-based rule.

Definition 4

(Dynamic Incentive Compatibility) The dynamic mechanism \(\{\alpha ,\phi ,\xi , \rho \}\) is dynamic incentive-compatible (DIC) if, for all reporting strategies \(\sigma \),

(1) for \(t\in {\mathbb {T}}\backslash \{T\}\),

$$\begin{aligned} \begin{aligned}&\max \Big (J^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t, \theta _{t}|h^{\theta }_{t-1}),\;\;\; {\mathbb {E}}^{\varXi _{\alpha }[h^{\theta }_{t}]}\big [ V^{\alpha ,\phi ,\xi ,\rho }_{t+1}({\tilde{\theta }}_{t+1})\big ] \Big )\\&\quad \ge \max \Big (J^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t, \theta _{t}, \sigma _{t}(\theta _{t}| h^{{\hat{\theta }}}_{t-1}, h^{a}_{t-1}); \sigma |h^{\theta }_{t-1}), {\mathbb {E}}^{\varXi _{\alpha ;\sigma }[h^{\theta }_{t}]}\big [ V^{\alpha ,\phi ,\xi ,\rho }_{t+1}({\tilde{\theta }}_{t+1};\sigma )\big ] \Big ); \end{aligned} \end{aligned}\tag{16}$$

(2) for \(t=T\),

$$\begin{aligned} J^{\alpha ,\phi ,\xi ,\rho }_{1,T}(T, \theta _{T}|h^{\theta }_{T-1}) \ge J^{\alpha ,\phi ,\xi ,\rho }_{1,T}(T, \theta _{T}, \sigma _{T}(\theta _{T}| h^{{\hat{\theta }}}_{T-1}, h^{a}_{T-1}); \sigma |h^{\theta }_{T-1}); \end{aligned}\tag{17}$$

i.e., the agent of state \(\theta _{t}\) maximizes his value at period t by reporting truthfully at all \(t\in {\mathbb {T}}\). The rules \(\{\alpha ,\phi ,\xi ,\rho \}\) are called implementable if the corresponding mechanism is dynamic incentive-compatible.

At the final period \(t=T\), the agent stops with probability 1, so his incentive to misreport the true state depends only on the instantaneous payoff. Condition (17) guarantees that misreporting is not profitable and thus disincentivizes untruthful reporting. At each non-final period \(t\in {\mathbb {T}}\backslash \{T\}\), the agent’s search for profitable deviations from the truthful reporting strategy takes into account both misreporting of the current state and any planned future misreporting. In particular, when the agent’s optimal stopping rule calls for stopping under truthful reporting, \(V_{t}^{\alpha ,\phi ,\xi , \rho }(\theta _{t}) = J^{\alpha ,\phi ,\xi , \rho }_{1,t}(t,\theta _{t}|h^{\theta }_{t-1})\). When it calls for continuing under truthful reporting, \(V_{t}^{\alpha ,\phi ,\xi , \rho }(\theta _{t}) = {\mathbb {E}}^{\varXi _{\alpha }[h^{\theta }_{t}]}\big [ V^{\alpha ,\phi ,\xi ,\rho }_{t+1}({\tilde{\theta }}_{t+1}) \big ]\). There are three types of deviations:

(i) misreporting at t and stopping at t;

(ii) misreporting at t, planning to misreport in the future, and continuing;

(iii) reporting truthfully at t, planning to misreport in the future, and continuing.

Condition (16) ensures that none of these deviations from truthful reporting is profitable.

Let \({\hat{\sigma }}[t]=\{{\hat{\sigma }}[t]_{s}\}_{s\in {\mathbb {T}}}\) be any reporting strategy that differs from the truthful reporting strategy \(\sigma ^{*}\) at only one period \(t\in {\mathbb {T}}\), i.e., \({\hat{\sigma }}[t]\) is truthful at all periods before t and is planned to be truthful at all periods after t. We call \({\hat{\sigma }}[t]\) a one-shot deviation strategy at t, for any \(t\in {\mathbb {T}}\). To simplify notation, we denote the process \(\varXi _{\alpha ;{\hat{\sigma }}[t]}[h^{\theta }_{t}]\) with \({\hat{\theta }}_{t}={\hat{\sigma }}[t]_{t}(\theta _{t}| h^{\theta }_{t-1}, h^{a}_{t-1})\) by \(\alpha |\theta _{t}, {\hat{\theta }}_{t}\), and let \(\alpha |\theta _{t} = \alpha |\theta _{t}, \theta _{t}\) when the agent reports truthfully. Additionally, unless otherwise stated, we omit \({\hat{\sigma }}[t]\) in the payoff and valuation functions and show only \({\hat{\theta }}_{t}\) as a typical state reported using \({\hat{\sigma }}[t]_{t}\). We denote the corresponding expectation by \({\mathbb {E}}^{\alpha |\theta _{t}, {\hat{\theta }}_{t}}[\cdot ]\).

Proposition 1

Suppose that Assumption 1 holds. In the Markovian dynamic environment, suppose the agent obtains no gain from untruthful reporting by using any one-shot deviation strategy \({\hat{\sigma }}[t]\), for any \(t\in {\mathbb {T}}\), \(\theta _{t}\in \varTheta _{t}\), and any history of truthful reports \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1}\varTheta _{s}\). Then he obtains no gain by using any untruthful reporting strategy for any report history \(h^{{\hat{\theta }}}_{t-1}\in \prod _{s=1}^{t-1}\varTheta _{s}\), i.e.,

(i) for \(t\in {\mathbb {T}}\backslash \{T\}\),

$$\begin{aligned} \begin{aligned}&\max \Big (J^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t, \theta _{t}|h^{\theta }_{t-1}),\;\;\; {\mathbb {E}}^{\alpha |\theta _{t} }\big [ V^{\alpha ,\phi ,\xi ,\rho }_{t+1}({\tilde{\theta }}_{t+1})\big ] \Big )\\&\quad \ge \max \Big (J^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t, \theta _{t},{\hat{\theta }}_{t}|h^{\theta }_{t-1}),\;\;\; {\mathbb {E}}^{\alpha |\theta _{t},{\hat{\theta }}_{t}}\big [ V^{\alpha ,\phi ,\xi ,\rho }_{t+1}({\tilde{\theta }}_{t+1})\big ] \Big ), \end{aligned} \end{aligned}\tag{18}$$

(ii) for \(t=T\),

$$\begin{aligned} J^{\alpha ,\phi ,\xi ,\rho }_{1,T}(T, \theta _{T}|h^{\theta }_{T-1}) \ge J^{\alpha ,\phi ,\xi ,\rho }_{1,T}(T, \theta _{T}, {\hat{\theta }}_{T}|h^{\theta }_{T-1}). \end{aligned}\tag{19}$$

Proof

See Appendix A. \(\square \)

Proposition 1 establishes a one-shot deviation principle for our dynamic mechanism. The one-shot deviation principle enables us to reduce the complexity of the characterization of the DIC and to focus on the analysis of the agent’s incentive compatibility at each period when he has reported truthfully at all past periods and plans to report truthfully at all future periods. As a result, we can restrict attention to the conditions (18) and (19) as the DIC constraints when the agent uses any one-shot deviation strategy at each period \(t\in {\mathbb {T}}\). In the rest of this paper, when it comes to the agent’s deviation from truthfulness, we focus on his one-shot deviation strategy \({\hat{\sigma }}[t]\) that reports \({\hat{\theta }}_{t} = {\hat{\sigma }}[t]_{t}(\theta _{t}| h^{\theta }_{t-1}, h^{a}_{t-1})\) at any single period \(t\in {\mathbb {T}}\). With a slight abuse of notation, we use \(h^{{\hat{\theta }}_{t}}_{s}\), \(s\ge t\), to denote the history of reports (including the planned reports) when the agent uses one-shot deviation strategy at t and reports \({\hat{\theta }}_{t}\).
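Because of Proposition 1, checking DIC on a finite grid at a fixed period and history reduces to a state-by-state comparison. For each true state, the truthful value is compared with the value of each single misreport. The Python sketch below evaluates (18), or (19) at the final period, from two hypothetical tables:

- the stopping payoff \(J^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t, \theta _{i},\theta _{r}|h^{\theta }_{t-1})\);
- the continuation value \({\mathbb {E}}^{\alpha |\theta _{i},\theta _{r}}[V^{\alpha ,\phi ,\xi ,\rho }_{t+1}({\tilde{\theta }}_{t+1})]\).

Computing these tables from an actual mechanism is outside the scope of the sketch.

```python
def one_shot_violations(J_stop, C=None, tol=1e-12):
    """Return pairs (i, r) where reporting state r instead of the true state i
    is a profitable one-shot deviation at period t.

    J_stop[i][r]: payoff from stopping at t after reporting r with true state i.
    C[i][r]:      expected next-period valuation after reporting r at t and
                  reporting truthfully afterwards; pass None at t = T, where
                  condition (19) compares stopping payoffs only.
    """
    n = len(J_stop)

    def value(i, r):
        # one side of (18) or (19); r == i gives the truthful side
        return J_stop[i][r] if C is None else max(J_stop[i][r], C[i][r])

    return [(i, r) for i in range(n) for r in range(n)
            if r != i and value(i, r) > value(i, i) + tol]


J_stop = [[1.0, 0.5], [0.8, 2.0]]
print(one_shot_violations(J_stop, [[1.5, 1.2], [1.0, 2.5]]))  # []
print(one_shot_violations(J_stop, [[1.5, 1.6], [1.0, 2.5]]))  # [(0, 1)]
print(one_shot_violations([[1.0, 2.0], [0.0, 3.0]]))           # [(0, 1)]
```

In the second call, the deviation is profitable only through the continuation branch. This mirrors deviation type (ii)/(iii) discussed above, where misreporting pays off by changing what the agent expects after continuing.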

Define the continuing value as, for any \(\theta _{t}, {\hat{\theta }}_{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\backslash \{T\}\),

with \(\mu ^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t},\theta _{t}) \equiv \mu ^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t})\) when the agent uses the truthful reporting strategy. The continuing value captures the agent’s maximum expected gain when he decides to continue to the next period instead of stopping immediately. Then, the stopping rule in (14) can be characterized by the continuing value as follows, for any one-shot deviation strategy \({\hat{\sigma }}[t]\) at any \(t\in {\mathbb {T}}\):

Define the marginal value \(L^{\alpha , \phi , \xi ,\rho }_{t}\) as follows, for any \(\theta _{t}, {\hat{\theta }}_{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\backslash \{T\}\):

with \(L^{\alpha , \phi , \xi ,\rho }_{t}(\theta _{t})= L^{\alpha , \phi , \xi ,\rho }_{t}(\theta _{t}, \theta _{t})\) when the agent uses the truthful reporting strategy. Hence, \(L^{\alpha , \phi , \xi ,\rho }_{t}(\theta _{t},\hat{\theta }_{t}) - \rho (t)\) captures the marginal change in the interim expected payoffs evaluated at t if the agent plans to stop at period \(t+1\) instead of stopping immediately at t, when his true state is \(\theta _{t}\) and he reports \({\hat{\theta }}_{t}\).

Lemma 1

Given \(\{\alpha , \phi , \xi ,\rho \}\), we have, for any \(\tau \in {\mathbb {T}}\),

and, for any \(\theta _{t}\in \varTheta _{t}\), \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1} \varTheta _{s}\), \(t\in {\mathbb {T}}\), \(\tau \in {\mathbb {T}}_{t}\),

Proof

See Appendix B. \(\square \)

Lemma 2

Suppose that Assumption 2 holds. Then, \(L^{\alpha , \phi , \xi , \rho }_{t}(\theta _{t})\) is non-decreasing in \(\theta _{t}\), for all \(t\in {\mathbb {T}}\backslash \{T\}\).

The proof of Lemma 2 follows directly from the formulation of \(J^{\alpha ,\phi ,\xi , \rho }_{1}\) in Lemma 1. Lemma 1 shows that the agent’s ex-ante expected payoff and his period-t interim expected payoff can be represented in terms of \(L^{\alpha , \phi , \xi , \rho }\), \(\rho \), and the expected payoffs when the agent stops at the starting period (i.e., period 1 or period t, respectively). Lemma 2 establishes a single crossing condition on which the existence of the threshold-based stopping rule relies (see Sect. 4.1).

From Lemma 1, we can represent the continuing value in terms of \(L^{\alpha , \phi , \xi , \rho }_{t}\) as follows, for any \(\theta _{t}, {\hat{\theta }}_{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\backslash \{T\}\):

Let, for any \(\theta _{t}, {\hat{\theta }}_{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\backslash \{T\}\),

with \({\bar{\mu }}^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t},\theta _{t}) \equiv {\bar{\mu }}^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t})\). Then, we define the following auxiliary functions, for any \(\theta _{t}, {\hat{\theta }}_{t}\in \varTheta _{t}\), \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1}\varTheta _{s}\), \(t\in {\mathbb {T}}\),

and

with \(U_{j,t}^{\alpha ,\phi , \xi , \rho }(\theta _{t},\theta _{t}|h^{\theta }_{t-1}) \equiv U_{j,t}^{\alpha ,\phi , \xi , \rho }(\theta _{t}|h^{\theta }_{t-1})\), for \(j\in \{S,{\bar{S}}\}\), where the subscripts S and \(\bar{S}\) represent “stop” and “nonstop,” respectively. In words, \(U^{\alpha ,\phi , \xi , \rho }_{S,t}\) and \(U^{\alpha ,\phi , \xi , \rho }_{{\bar{S}},t}\) are the agent’s expected payoffs when he decides to stop at t and to continue to \(t+1\), respectively. Both are directly determined by his current reporting strategy \({\hat{\sigma }}[t]_{t}\) and his planned truthful reporting strategies \(\{{\hat{\sigma }}[t]_{s}\}_{s\in {\mathbb {T}}_{t+1}}\). We can rewrite the DIC constraints in Proposition 1 in terms of these auxiliary functions in the following lemma.

Lemma 3

The IC constraints (18) and (19) are equivalent to the following

and

for all \(\theta _{t}\), \( {\hat{\theta }}_{t}\in \varTheta _{t}\), \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1} \varTheta _{s}\), \(t\in {\mathbb {T}}\).

Conditions (29) and (30) reformulate the incentive constraints in (18) and (19). They ensure that misreporting is not profitable in the instantaneous payoff when the agent stops and continues, respectively. If (29) (resp. (30)) is satisfied, the optimality of the stopping rule guarantees that continuing (resp. stopping) is not profitable when the stopping rule calls for stopping (resp. continuing).

4.1 Threshold Rule

In this section, we revisit the optimal stopping rule and introduce a class of threshold-based stopping rules (see, e.g., [24, 27, 53]). Given the definition of \({\bar{\mu }}^{\alpha , \phi ,\xi , \rho }_{t}(\theta _{t}, {\hat{\theta }}_{t})\) in (26), we can rewrite the optimal stopping rule in (21) as, for any \({\hat{\sigma }}[t]\) that reports \({\hat{\theta }}_{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\),

with the corresponding stopping region

Hence, the principal can adjust \(\rho (t)\) to influence the agent’s stopping time by changing the stopping region \(\varLambda ^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t;{\hat{\theta }}_{t})\). In general, the stopping rule partitions the state space \(\varTheta _{t}\) into multiple zones, which leads to multiple stopping subregions. Suppose that, given \(\{\alpha ,\phi ,\xi ,\rho \}\) and \({\hat{\sigma }}[t]\), there are \(n_{t}\) stopping subregions at period t. Let \(\{\theta ^{\ell ;k}_{t}, \theta ^{r;k}_{t}\}\), \({\underline{\theta }}_{t}\le \theta ^{\ell ;k}_{t}\le \theta ^{r;k}_{t} \le {\bar{\theta }}_{t}\), denote the boundaries of the kth stopping subregion, such that the stopping region (32) is equivalent to

with \(\mathbf {\varLambda }^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t|n_{t})=\mathbf {\varLambda }^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t;\theta _{t}|n_{t})\) when the agent reports truthfully.
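On a discretized state space, the stopping subregions and their boundaries \(\theta ^{\ell ;k}_{t}\), \(\theta ^{r;k}_{t}\) can be read off from the states at which the stopping rule calls for stopping. The Python sketch below assumes an increasing grid approximating \(\varTheta _{t}\) and a boolean stopping flag per grid point, which is a hypothetical input. It returns the subregions, so \(n_{t}\) is their count. It also tests whether there is a single subregion containing the lower bound of \(\varTheta _{t}\), which matches a stopping condition of the form \(\theta _{t}\le \eta (t)\).

```python
def stopping_subregions(grid, stop_mask):
    """Group grid states flagged for stopping into maximal contiguous runs.

    grid:      increasing list of states approximating Theta_t.
    stop_mask: list of booleans, True where the rule calls for stopping.
    Returns [(theta_left, theta_right), ...], one pair per subregion.
    """
    regions, start = [], None
    for k, (theta, stop) in enumerate(zip(grid, stop_mask)):
        if stop and start is None:
            start = theta  # a new subregion opens here
        if start is not None and (not stop or k == len(grid) - 1):
            # close the subregion at the last flagged state
            regions.append((start, theta if stop else grid[k - 1]))
            start = None
    return regions


def is_lower_threshold_rule(grid, stop_mask):
    """One subregion that starts at the lower bound: stop iff theta <= eta."""
    regions = stopping_subregions(grid, stop_mask)
    return len(regions) == 1 and regions[0][0] == grid[0]


grid = [0.0, 0.25, 0.5, 0.75, 1.0]
print(stopping_subregions(grid, [True, True, False, False, False]))  # [(0.0, 0.25)]
print(stopping_subregions(grid, [True, False, False, True, True]))   # [(0.0, 0.0), (0.75, 1.0)]
print(is_lower_threshold_rule(grid, [False, False, True, False, False]))  # False
```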

If there is only one stopping subregion, i.e., the stopping rule partitions the state space \(\varTheta _{t}\) into two regions, then the stopping rule is a threshold rule. The existence of a threshold rule depends on the monotonicity of \({\bar{\mu }}^{\alpha ,\phi ,\xi ,\rho }_{t}\) with respect to \(\theta _{t}\). The following lemma follows directly from Lemma 2.

Lemma 4

Suppose that Assumption 2 holds. Then, \({\bar{\mu }}^{\alpha , \phi , \xi , \rho }_{t}(\theta _{t}, {\hat{\theta }}_{t})\) is non-decreasing in \(\theta _{t}\), for all \(t\in {\mathbb {T}}\backslash \{T\}\), any \({\hat{\theta }}_{t}\in \varTheta _{t}\).

Since \(\rho (t)\) is independent of states and reports, the monotonicity in Lemma 4 suggests a threshold-based stopping rule for the agent. Let \(\eta \) be a threshold function with \(\eta (t)\in \varTheta _{t}\) for all \(t\in {\mathbb {T}}\), such that the agent stops the first time the state satisfies \(\theta _{t}\le \eta (t)\). Since the agent must stop at the final period, we require \(\eta (T)={\bar{\theta }}_{T}\). Let \(\varOmega [{\hat{\sigma }}[t]]|\eta \) denote the stopping rule with the threshold function \(\eta \).
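A threshold rule is straightforward to apply once a path of states and a threshold function are given. The Python sketch below returns the first period t with \(\theta _{t}\le \eta (t)\). It assumes, hypothetically, that \(\varTheta _{t} = [0, 1]\) for every t, so that \(\eta (T) = 1\) guarantees stopping by period T. The paths and thresholds in the usage lines are illustrative.

```python
def threshold_stopping_time(path, eta):
    """First period t (1-indexed) with theta_t <= eta(t).

    path: realized states theta_1, ..., theta_T.
    eta:  thresholds eta(1), ..., eta(T); eta(T) should be the upper bound
          of Theta_T so that the agent stops at T at the latest.
    """
    if len(path) != len(eta):
        raise ValueError("path and eta must both have length T")
    for t, (theta, threshold) in enumerate(zip(path, eta), start=1):
        if theta <= threshold:
            return t
    raise ValueError("eta(T) is below theta_T; the agent must stop at T")


print(threshold_stopping_time([0.5, 0.4, 0.2], [0.1, 0.3, 1.0]))  # 3
print(threshold_stopping_time([0.5, 0.2, 0.9], [0.1, 0.3, 1.0]))  # 2
```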

Definition 5

(Threshold Rule) Fix any \({\hat{\sigma }}[t]\), \(t\in {\mathbb {T}}\). We say that the stopping rule \(\varOmega [{\hat{\sigma }}[t]]|\eta \) is a threshold rule if there exists a threshold function \(\eta \) such that

We establish that the optimal stopping rule shown in (31) is a threshold rule in Lemma 5.

Lemma 5

Suppose that Assumption 2 holds. If the stopping rule \(\varOmega [{\hat{\sigma }}[t]]\) is optimal in the mechanism \(\{\alpha ,\phi ,\xi ,\rho \}\), then it is a threshold rule with a threshold function \(\eta \), denoted as \(\varOmega [{\hat{\sigma }}[t]]|\eta \).

Proof

See Appendix C. \(\square \)

Lemma 6 shows the uniqueness of the threshold function.

Lemma 6

Suppose that Assumptions 2 and 3 hold. Then, each threshold rule has a unique threshold function.

Proof

See Appendix D. \(\square \)

From (34), the payment rule \(\rho \) can be fully characterized by the threshold function \(\eta \), so that the principal can influence the agent’s stopping time by manipulating \(\eta \). For example, setting \(\eta (t)={\underline{\theta }}_{t}\) (resp. \(\eta (t) = {\bar{\theta }}_{t}\)) forces the agent to continue (resp. stop) at period t with probability 1.

5 Characterization of Incentive Compatibility

In this section, we characterize the dynamic incentive compatibility of the mechanism. We first introduce the length functions and the potential functions and show a sufficient condition by constructing the payment rules in terms of the potential functions based on the relationship between the length and the potential functions. Second, we pin down the payment rules in terms of the allocation rule by applying the envelope theorem.
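A static, one-period sketch may help fix ideas about how the envelope theorem pins down payments in terms of the allocation rule. It is not the dynamic formula derived below. It assumes a quasilinear payoff \(u(\theta , a) + p\), the hypothetical choices \(u(\theta , a) = \theta a\) and \(\alpha (\theta ) = \theta \) on a grid over [0, 1], and the reference state \(\theta _{\epsilon } = 0\). Under these assumptions, the envelope condition \(U(\theta ) = U(\theta _{\epsilon }) + \int _{\theta _{\epsilon }}^{\theta } \partial _{\theta } u(r, \alpha (r))\, dr\) determines the payment \(p(\theta ) = U(\theta ) - u(\theta , \alpha (\theta ))\) up to the constant \(U(\theta _{\epsilon })\). The integral is approximated with the trapezoid rule.

```python
def envelope_payments(grid, alloc, u, du_dtheta, U_eps=0.0):
    """Static sketch: U(theta) = U_eps + integral from grid[0] to theta of
    du_dtheta(r, alloc(r)) dr (trapezoid rule on the increasing grid),
    and payment p(theta) = U(theta) - u(theta, alloc(theta))."""
    U = [U_eps]
    for left, right in zip(grid, grid[1:]):
        slope_left = du_dtheta(left, alloc(left))
        slope_right = du_dtheta(right, alloc(right))
        U.append(U[-1] + 0.5 * (right - left) * (slope_left + slope_right))
    return [U_k - u(theta, alloc(theta)) for U_k, theta in zip(U, grid)]


def alloc(theta):          # hypothetical allocation rule alpha(theta) = theta
    return theta


def u(theta, a):           # hypothetical utility u(theta, a) = theta * a
    return theta * a


def du_dtheta(theta, a):   # partial derivative of u with respect to theta
    return a


grid = [k / 10 for k in range(11)]
p0 = envelope_payments(grid, alloc, u, du_dtheta)
p1 = envelope_payments(grid, alloc, u, du_dtheta, U_eps=1.0)

# Truthful reporting is optimal on the grid, and the two payment schedules
# differ only by the constant U_eps.
best = [max(range(len(grid)), key=lambda j: theta * alloc(grid[j]) + p0[j])
        for theta in grid]
print(best == list(range(len(grid))))                        # True
print(all(abs(b - a - 1.0) < 1e-12 for a, b in zip(p0, p1)))  # True
```

Changing `U_eps` shifts every payment by the same constant while leaving incentives unchanged. This is the static counterpart of the "up to a constant" statements established later in this section.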

Let, for any \(\theta _{t}\), \({\hat{\theta }}_{t}\in \varTheta _{t}\), \(\tau \in {\mathbb {T}}_{t}\), \(t\in {\mathbb {T}}\backslash {\{T\}}\),

with \(\pi ^{\alpha }_{t}(\theta _{t};\tau )=\pi ^{\alpha }_{t}(\theta _{t},\theta _{t};\tau )\). The term \(\pi ^{\alpha }_{t}(\cdot ;\tau )\) denotes the agent’s period-t expected payoff to go for a time horizon \(\tau >t\) without the current-period payment specified by \(\phi _{t}\). When \(\tau =t\), the term \(\pi ^{\alpha }_{t}(\cdot ;\tau )\) is the agent’s period-t instantaneous payoff if he stops at t. Define the length functions as, for any \(\theta _{t}\), \({\hat{\theta }}_{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\),

and, for any \(\tau \in {\mathbb {T}}_{t}\),

The length function \(\ell _{{\bar{S}},t}({\hat{\theta }}_{t},\theta _{t})\) (resp. \(\ell _{S,t}({\hat{\theta }}_{t},\theta _{t})\)) describes the change in the value of \(\pi ^{\alpha }_{t}\) (resp. \(\delta u_{1,t}\)) when the report is held fixed at \({\hat{\theta }}_{t}\) but the agent’s true state is \({\hat{\theta }}_{t}\) instead of \(\theta _{t}\). From the definition of \(\pi ^{\alpha }_{t}\), it is straightforward to see that \(\ell ^{\alpha }_{S,t}({\hat{\theta }}_{t}, \theta _{t}) = \ell ^{\alpha }_{{\bar{S}},t}({\hat{\theta }}_{t}, \theta _{t};t)\).

Let \(\beta ^{\alpha }_{S,t}(\cdot ):\varTheta _{t}\rightarrow {\mathbb {R}}\) and \(\beta ^{\alpha }_{{\bar{S}},t}(\cdot ):\varTheta _{t}\rightarrow {\mathbb {R}}\) be potential functions that depend only on \(\alpha \). Proposition 2 gives a sufficient condition for DIC in two parts. First, it constructs the payment rules in terms of the potential functions and the threshold function (for \(\rho \)). Second, it specifies the relationships between the potential functions and the length functions.

Proposition 2

Fix an allocation rule \(\alpha \) and a threshold function \(\eta \). Suppose that Assumptions 1, 3, and 4 hold. The dynamic mechanism is dynamic incentive-compatible if the following statements are satisfied:

(i) The payment rules \(\phi \), \(\xi \), and \(\rho \), respectively, are constructed as, for all \(t\in {\mathbb {T}}\),

$$\begin{aligned} \phi _{t}(\theta _{t}) = \delta ^{-t}\beta ^{\alpha }_{\bar{S},t}(\theta _{t}) - \delta ^{-t}{\mathbb {E}}^{\alpha |\theta _{t}}\Big [\beta ^{\alpha }_{{\bar{S}},t+1}({\tilde{\theta }}_{t+1}) \Big ] -u_{1,t}(\theta _{t}, \alpha _{t}(\theta _{t}|h^{\theta }_{t-1} )), \end{aligned}\tag{38}$$

$$\begin{aligned} \xi _{t}(\theta _{t}) = \delta ^{-t}\beta ^{\alpha }_{S,t}(\theta _{t})-u_{1,t}(\theta _{t},\alpha _{t}(\theta _{t}|h^{\theta }_{t-1})), \end{aligned}\tag{39}$$

$$\begin{aligned} \rho (t) = {}& \delta ^{-t}{\mathbb {E}}^{\alpha |\eta (t)}\Big [\sum _{s=t}^{T-1} \big (\beta ^{\alpha }_{S,s+1}({\tilde{\theta }}_{s+1}\vee \eta (s+1))-\beta ^{\alpha }_{S,s}({\tilde{\theta }}_{s}\vee \eta (s)) \big ) \\ &\quad - \big (\beta ^{\alpha }_{{\bar{S}},s+1}({\tilde{\theta }}_{s+1}\vee \eta (s+1))-\beta ^{\alpha }_{{\bar{S}},s}({\tilde{\theta }}_{s}\vee \eta (s)) \big ) \Big ]. \end{aligned}\tag{40}$$

(ii) \(\beta ^{\alpha }_{{\bar{S}},t}\) and \(\beta ^{\alpha }_{S,t}\) satisfy, for all \(\theta _{t}\), \({\hat{\theta }}_{t}\in \varTheta _{t}\), \(t\in {\mathbb {T}}\),

$$\begin{aligned} \beta ^{\alpha }_{S,t}({\hat{\theta }}_{t})- \beta _{S,t}^{\alpha }(\theta _{t})\le \ell ^{\alpha }_{S,t}({\hat{\theta }}_{t},\theta _{t}), \end{aligned}\tag{41}$$

$$\begin{aligned} \beta ^{\alpha }_{{\bar{S}},t}({\hat{\theta }}_{t})-\beta ^{\alpha }_{{\bar{S}},t}(\theta _{t})\le \inf _{\tau \in {\mathbb {T}}_{t}}\Big \{\ell ^{\alpha }_{{\bar{S}},t}({\hat{\theta }}_{t},\theta _{t};\tau )\Big \}-\sup _{\tau '\in {\mathbb {T}}_{t}}\rho (\tau '), \end{aligned}\tag{42}$$

and

$$\begin{aligned} \beta ^{\alpha }_{{\bar{S}},t}(\theta _{t}) \ge \beta ^{\alpha }_{S,t}(\theta _{t}) \text { and } \beta ^{\alpha }_{{\bar{S}},T}(\theta _{T}) = \beta ^{\alpha }_{S,T}(\theta _{T}). \end{aligned}\tag{43}$$

(iii) \(\beta ^{\alpha }_{{\bar{S}},t}\) and \(\beta ^{\alpha }_{S,t}\) are constructed in terms of \(\alpha \) such that the following term is non-decreasing in \(\theta _{t}\), for all \(t\in {\mathbb {T}}\):

$$\begin{aligned} \chi ^{\alpha }_{1,t}(\theta _{t}) = {\mathbb {E}}^{\alpha |\theta _{t}}\Big [\beta ^{\alpha }_{S,t+1}({\tilde{\theta }}_{t+1}) - \beta ^{\alpha }_{{\bar{S}},t+1}({\tilde{\theta }}_{t+1}) \Big ] -\big (\beta ^{\alpha }_{S,t}(\theta _{t})-\beta ^{\alpha }_{{\bar{S}},t}(\theta _{t}) \big ). \end{aligned}\tag{44}$$

Proof

See Appendix E. \(\square \)

In Proposition 2, statement (i) gives the constructions (38)-(40) of the three payment rules in terms of the allocation rule and the threshold function (for \(\rho \)), through the potential functions and the utility function. If the potential functions satisfy conditions (41)-(43) in statement (ii), then the mechanism \(\{\alpha , \phi ,\xi ,\rho \}\) is DIC. Importantly, the construction of \(\rho \) in (40) assumes that the agent’s optimal stopping rule is a threshold rule with a unique threshold function. The existence of such a threshold rule is established under Assumption 2. Therefore, statement (iii) of Proposition 2 imposes an additional condition on the construction of the potential functions to guarantee the monotonicity required by Assumption 2. The explicit constructions of the potential functions are derived from the necessary conditions for DIC (Proposition 3).
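Once the potential functions are known, the constructions (38)-(40) are mechanical. The Python sketch below evaluates them on a finite, increasing state grid under the following assumptions:

- a Markov transition matrix per period already embeds the allocation rule;
- the dependence of \(u_{1,t}\) on \(h^{\theta }_{t-1}\) is suppressed;
- all numerical inputs are hypothetical.

The sketch evaluates \(\phi _{t}\) only for \(t < T\), since (38) at \(t = T\) refers to \(\beta ^{\alpha }_{{\bar{S}},T+1}\), which this toy does not define. The operation \(\theta \vee \eta \) is implemented as the larger grid index.

```python
def payment_rules(beta_S, beta_Sbar, P, u, eta_idx, delta):
    """Evaluate (38)-(40) on an increasing finite state grid (illustrative).

    Periods t = 1..T are stored at list position t - 1.
    beta_S[t-1][i], beta_Sbar[t-1][i]: potential functions at grid state i.
    P[t-1][i][j]: probability of state j at t+1 given state i at t (t < T),
                  assumed to already reflect the allocation rule alpha.
    u[t-1][i]:    u_{1,t}(theta_i, alpha_t(theta_i)), history suppressed.
    eta_idx[t-1]: grid index of the threshold eta(t).
    Returns phi for t = 1..T-1, xi for t = 1..T, and rho for t = 1..T.
    """
    T, n = len(beta_S), len(beta_S[0])

    def expect(dist, values):
        return sum(p * v for p, v in zip(dist, values))

    def clipped(beta_row, k):
        # max(theta, eta) on an increasing grid: use the larger grid index
        return [beta_row[max(i, k)] for i in range(n)]

    # (38): E^{alpha|theta_t}[beta_Sbar_{t+1}] is a row of P times beta_Sbar at t+1
    phi = [[delta ** -t * (beta_Sbar[t - 1][i] - expect(P[t - 1][i], beta_Sbar[t]))
            - u[t - 1][i] for i in range(n)] for t in range(1, T)]
    # (39)
    xi = [[delta ** -t * beta_S[t - 1][i] - u[t - 1][i] for i in range(n)]
          for t in range(1, T + 1)]

    # (40): propagate the state distribution forward from theta_t = eta(t)
    rho = []
    for t in range(1, T + 1):
        dist = [1.0 if i == eta_idx[t - 1] else 0.0 for i in range(n)]
        total = 0.0
        for s in range(t, T):  # s = t, ..., T-1 (empty when t = T)
            nxt = [sum(dist[i] * P[s - 1][i][j] for i in range(n)) for j in range(n)]
            for beta, sign in ((beta_S, 1.0), (beta_Sbar, -1.0)):
                total += sign * (expect(nxt, clipped(beta[s], eta_idx[s]))
                                 - expect(dist, clipped(beta[s - 1], eta_idx[s - 1])))
            dist = nxt
        rho.append(delta ** -t * total)
    return phi, xi, rho


# Illustrative inputs: T = 3 periods, 3 grid states; (43) holds.
beta_S = [[0.0, 0.1, 0.2], [0.0, 0.2, 0.4], [1.0, 1.0, 1.0]]
beta_Sbar = [[0.5, 0.6, 0.9], [0.3, 0.5, 0.8], [1.0, 1.0, 1.0]]
P = [[[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.3, 0.6]]] * 2
u = [[0.0, 0.5, 1.0]] * 3
phi, xi, rho = payment_rules(beta_S, beta_Sbar, P, u, eta_idx=[0, 1, 2], delta=0.9)
```

This sketch reads (40) with both differences inside the sum. Under that reading, the sum telescopes, and with \(\beta ^{\alpha }_{{\bar{S}},T} = \beta ^{\alpha }_{S,T}\) from (43), the computed \(\rho (t)\) equals \(\delta ^{-t}\big (\beta ^{\alpha }_{{\bar{S}},t}(\eta (t)) - \beta ^{\alpha }_{S,t}(\eta (t))\big )\). This provides a convenient sanity check for an implementation of this kind.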

Define with a slight abuse of notation, for any \(\theta _{t}, {\hat{\theta }}_{t}\in \varTheta _{t}\), \(\tau \in {\mathbb {T}}_{t}\), \(t\in {\mathbb {T}}\),

with \({\bar{\mu }}^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t};\tau )= {\bar{\mu }}^{\alpha ,\phi ,\xi ,\rho }_{t}(\theta _{t}, \theta _{t};\tau )\), and, for any \(\theta _{t}, {\hat{\theta }}_{t}\in \varTheta _{t}\), \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1}\varTheta _{s}\), \(\tau \in {\mathbb {T}}_{t}\), \(t\in {\mathbb {T}}\)

with \(U^{\alpha ,\phi ,\xi ,\rho }_{t}(\tau , \theta _{t}|h^{\theta }_{t-1})=U^{\alpha ,\phi ,\xi ,\rho }_{t}(\tau , \theta _{t}, \theta _{t}|h^{\theta }_{t-1})\).

The following lemma takes advantage of the quasilinearity of the payoff function and formulates the partial derivative of \(U^{\alpha ,\phi ,\xi ,\rho }_{t}(\tau , \theta _{t}|h^{\theta }_{t-1})\) with respect to \(\theta _{t}\).

Lemma 7

Suppose that Assumption 3 holds. Any DIC mechanism \(\{\alpha ,\phi ,\xi ,\rho \}\) satisfies, for any \(\theta _{t}\in \varTheta _{t}\), \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1}\varTheta _{s}\), \(\tau \in {\mathbb {T}}_{t}\), \(t\in {\mathbb {T}}\),

where

Proof

See Appendix F. \(\square \)

We establish the explicit formulations of the potential functions in the following proposition.

Proposition 3

Fix any allocation rule \(\alpha \). Suppose that Assumptions 1, 3, and 4 hold. Suppose additionally that \(u_{1,t}(\theta _{t}, a_{t})\) is a non-decreasing function of \(\theta _{t}\). In any DIC mechanism \(\{\alpha , \phi , \xi , \rho \}\), with \(\phi \), \(\xi \), and \(\rho \) constructed in (38)-(40) respectively, the potential functions \(\beta ^{\alpha }_{S,t}\) and \(\beta ^{\alpha }_{{\bar{S}},t}\) are constructed in terms of \(\alpha \) as follows, for any arbitrary fixed state \(\theta _{\epsilon ,t}\in \varTheta _{t}\), any \(\theta _{t}\), \({\hat{\theta }}_{t}\in \varTheta _{t}\), \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1}\varTheta _{s}\), \(t\in {\mathbb {T}}\),

where \(\gamma _{t}^{\alpha }(\tau ,\theta _{t}|h^{\theta }_{t-1}) \equiv \frac{\partial U^{\alpha ,\phi ,\xi ,\rho }_{t}(\tau ,r|h^{\theta }_{t-1}) }{ \partial r }\Big |_{r= \theta _{t}} \), and \(\alpha \) satisfies the conditions (41)-(43).

Proof

See Appendix G. \(\square \)

Proposition 3 provides explicit formulations of the potential functions that depend only on the allocation rule \(\alpha \), up to a constant shift determined by \(\theta _{\epsilon ,t}\). As a result, the payment rules \(\phi \) and \(\xi \) are pinned down by \(\alpha \) up to a constant. This is the basis of revenue equivalence, a central result in mechanism design in static settings (e.g., [35, 52]) as well as in dynamic environments (e.g., [27, 42]). The following proposition states the revenue equivalence property of our dynamic mechanism.
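The role of the lower limit \(\theta _{\epsilon ,t}\) can be seen numerically: if a potential function is an integral from \(\theta _{\epsilon ,t}\) to \(\theta _{t}\), then changing \(\theta _{\epsilon ,t}\) only adds a state-independent constant. The sketch below assumes a hypothetical integrand `g` standing in for the integrand of (47)-(48) (built from \(\gamma _{t}^{\alpha }\)), which is not reproduced here, and uses a simple trapezoid rule on a discretized \(\varTheta _{t}\).

```python
import numpy as np

def integral_from(grid, integrand, lower_index):
    """Cumulative trapezoid integral over `grid`, shifted so that it vanishes
    at grid[lower_index] (the role played by theta_{epsilon,t})."""
    increments = 0.5 * (integrand[1:] + integrand[:-1]) * np.diff(grid)
    cumulative = np.concatenate(([0.0], np.cumsum(increments)))
    return cumulative - cumulative[lower_index]

grid = np.linspace(0.0, 1.0, 101)                # hypothetical discretized Theta_t
g = 1.0 + grid**2                                # hypothetical integrand
beta_a = integral_from(grid, g, lower_index=0)   # lower limit theta^a = 0.0
beta_b = integral_from(grid, g, lower_index=50)  # lower limit theta^b = 0.5
shift = beta_a - beta_b                          # same value at every state
print(shift.max() - shift.min())
```

The difference `shift` equals the integral of `g` between the two lower limits at every grid point, which mirrors why payments built from these potential functions agree up to a constant.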

Proposition 4

Fix an allocation rule \(\alpha \). Suppose that Assumptions 1, 3, and 4 hold. Suppose additionally that \(u_{1,t}(\theta _{t}, a_{t})\) is a non-decreasing function of \(\theta _{t}\). Let \(\theta ^{a}_{\epsilon ,t}\), \(\theta ^{b}_{\epsilon ,t}\in \varTheta _{t}\) be any two arbitrary states. Let \(\phi ^{a} \equiv \{\phi ^{a}_{t}\}\) and \(\phi ^{b}\equiv \{\phi ^{b}_{t}\}\) (resp. \(\xi ^{a}\) and \(\xi ^{b}\)) be two payment rules constructed according to (38) (resp. (39)) by the potential function \(\beta ^{\alpha }_{{\bar{S}},t}\) formulated in (48) (resp. \(\beta ^{\alpha }_{S,t}\) formulated in (47)) with \(\theta ^{a}_{\epsilon ,t}\) and \(\theta ^{b}_{\epsilon ,t}\), respectively, as the lower limit of the integral. Define \(\rho ^{a}\) and \(\rho ^{b}\) in a similar way, for some threshold functions \(\eta ^{a}\) and \(\eta ^{b}\), respectively. Then, there exist constants \(\{C_{\tau }\}_{\tau \in {\mathbb {T}}}\) such that, for any \(\theta _{t}\in \varTheta _{t}\), \(\tau \in {\mathbb {T}}_{t}\), \(t\in {\mathbb {T}}\),

Proof

See Appendix I. \(\square \)

Proposition 4 implies that different DIC mechanisms with the same allocation rule yield the same expected sum of payments by the principal, up to a constant, for any time horizon \(\tau \in {\mathbb {T}}_{t}\) and any \(t\in {\mathbb {T}}\). The following corollary shows that the state-independent payment rule \(\rho \) is unique for any given threshold function \(\eta \).

Corollary 1

Fix an allocation rule \(\alpha \). Suppose that Assumptions 1, 3, and 4 hold. Suppose additionally that \(u_{1,t}(\theta _{t}, a_{t})\) is a non-decreasing function of \(\theta _{t}\). Let \(\eta \) be any threshold function such that \(\eta (t)\in \varTheta _{t}\), for each \(t\in {\mathbb {T}}\), and let \(\theta ^{a}_{\epsilon ,t}\), \(\theta ^{b}_{\epsilon ,t}\in \varTheta _{t}\) be any two arbitrary states. Construct two state-independent payment rules \(\rho ^{a}\) and \(\rho ^{b}\) according to (40) with \(\eta \), where the potential functions are formulated as in (47) and (48) with \(\theta ^{a}_{\epsilon ,t}\) and \(\theta ^{b}_{\epsilon ,t}\), respectively, as the lower limit of the integral. Then, there exist constants \(\{C^{\rho }_{t}\}_{t\in {\mathbb {T}}}\) such that, for any \(t\in {\mathbb {T}}\),

with \(\rho ^{a}(T)=\rho ^{b}(T)=C^{\rho }_{t}=0\).

If the mechanism does not satisfy the monotonicity condition specified in Assumption 2, a threshold rule with a unique threshold function is no longer guaranteed. Instead, as shown in (33), the optimal stopping rule partitions the state space into multiple stopping subregions. Let \(\mathbf {\varTheta }_{t}\equiv \{\theta ^{k;j}_{t}\}_{k=1, j=\{k, r\} }^{k=n_{t}}\) denote the set of boundaries of the stopping region \(\mathbf {\varLambda }^{\alpha ,\phi ,\xi ,\rho }_{1,t}(t|n_{t})\) given in (33). Define an operator

Here, \(\theta _{t} \sqcup \mathbf {\varTheta }_{t}\) maps any \(\theta _{t}\in \varTheta _{t}\) that lies in a stopping subregion to its closest boundary. The following corollary gives a sufficient condition for DIC when Assumption 2 does not hold.
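On a discretized state space, the boundary set \(\mathbf {\varTheta }_{t}\) and the operator \(\sqcup \) can be sketched as follows. This is a hypothetical illustration, not the paper's construction: stopping decisions are given as a boolean mask over a sorted grid, consecutive stopping points form one subregion, ties between the two boundaries go to the left boundary, and states outside every stopping subregion are left unchanged (the text only specifies the map for states inside a subregion).

```python
from typing import List, Sequence, Tuple

def stopping_intervals(grid: Sequence[float], stop: Sequence[bool]) -> List[Tuple[float, float]]:
    """Group consecutive grid points flagged as 'stop' into
    (left boundary, right boundary) pairs; n_t is the number of pairs."""
    intervals = []
    start = None
    for i, flag in enumerate(stop):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            intervals.append((grid[start], grid[i - 1]))
            start = None
    if start is not None:  # a subregion that runs to the end of the grid
        intervals.append((grid[start], grid[len(stop) - 1]))
    return intervals

def project_to_boundary(theta: float, intervals: List[Tuple[float, float]]) -> float:
    """Hypothetical version of theta ⊔ Theta_t: move a state inside a stopping
    subregion to its closest boundary (ties go left); other states are unchanged."""
    for left, right in intervals:
        if left <= theta <= right:
            return left if theta - left <= right - theta else right
    return theta

grid = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
stop = [True, True, True, False, False, True, True, True, True, False, False]
regions = stopping_intervals(grid, stop)
print(regions)                            # [(0.0, 0.2), (0.5, 0.8)], so n_t = 2
print(project_to_boundary(0.6, regions))  # 0.5
```

The example also shows why the general case is harder for the principal: both the number of subregions and each of their boundaries must be tracked, rather than a single threshold.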

Corollary 2

Fix an allocation rule \(\alpha \). Suppose that Assumptions 1 and 3 hold. The dynamic mechanism is dynamic incentive-compatible if the payment rules \(\phi \) and \(\xi \) are constructed according to (38) and (39), respectively, and \(\rho \) is constructed as:

where the potential functions \(\beta ^{\alpha }_{S,t}\) and \(\beta ^{\alpha }_{{\bar{S}},t}\) satisfy the conditions (41)-(43).

Given the stopping region \(\mathbf {\varLambda }_{1,t}^{\alpha ,\phi ,\xi ,\rho }\) with boundary set \(\mathbf {\varTheta }_{t}\), the proof of Corollary 2 follows directly from Proposition 2. The construction of \(\rho \) in (51) is equivalent to (40) if the formulations of the potential functions satisfy the monotonicity condition (44) in Proposition 2 (so that Assumption 2 holds). The following corollary states that the main results of Propositions 3 and 4 and Corollary 1 extend to the general optimal stopping rule.

Corollary 3

Fix an allocation rule \(\alpha \). Suppose that Assumptions 1 and 3 hold. In any DIC mechanism with \(\phi \), \(\xi \), and \(\rho \) constructed according to (38), (39), and (51), respectively, (i) the potential functions are formulated in terms of \(\alpha \) as in (47) and (48); and (ii) the revenue equivalence results in Proposition 4 and Corollary 1 hold.

However, the sufficient condition given by Corollary 2 requires the principal to determine \(n_{t}\) and all the boundaries of the \(n_{t}\) stopping subregions. If the formulations of the potential functions maintain the monotonicity specified by Assumption 2, then the principal’s mechanism design problem only needs to deal with a unique threshold function \(\eta (t)\).

From the construction of \(\rho \) in (40) and the formulations of the potential functions in Proposition 3, \(\rho \) is completely determined by \(\alpha \) and \(\eta \), given the transition kernels. Specifically, at each \(t\in {\mathbb {T}}\), each choice of \(\eta (t)\in \varTheta _{t}\) yields a \(\rho \) that is constructed by (40) and satisfies (50). Let \(r\equiv \{r_{1},\dots ,r_{T}\}\in {\mathbb {R}}^{T}\) be any sequence of real values. Define

Hence, \(\mathring{{\mathscr {R}}}\) is the set of payment sequences that \(\rho \) can specify from \(t=1\) to \(t=T\), given \(\alpha \) and \(\eta \).

Corollary 4

Fix an allocation rule \(\alpha \). Suppose that Assumptions 1, 3, and 4 hold. Suppose additionally that \(u_{1,t}(\theta _{t}, a_{t})\) is a non-decreasing function of \(\theta _{t}\). Let \(\eta \) be a threshold function. Suppose that \(\rho \) specifies a sequence of payments \(r=\{r_{1}, \dots , r_{T}\}\), where \(r_{t} = \rho (t)\), \(t\in {\mathbb {T}}\). Then, the following statements hold.

(i) In DIC mechanisms, \(\rho \) cannot punish the agent for stopping; i.e., \(r_{t}\ge 0\), for all \(t\in {\mathbb {T}}\).

(ii) \(\rho \) with \(\alpha \) implements the optimal stopping rule (14) if and only if \(r\in \mathring{{\mathscr {R}}}\).

(iii) Fix any \(r\in \mathring{{\mathscr {R}}}\). Let \(r'\) differ from \(r\) in some periods, such that \(r'_{t} = r_{t} + \epsilon _{t}\) for some nonzero \(\epsilon _{t}\in {\mathbb {R}}\) in each such period \(t\). Suppose that these perturbations make \(r'\not \in \mathring{{\mathscr {R}}}\). Then, posting \(r'\) may violate the incentive compatibility constraints.

(iv) Let \(\phi \) and \(\xi \) be constructed as in Proposition 2. If the mechanism does not involve \(\rho \), then \(\{\alpha ,\phi ,\xi \}\) is dynamic incentive-compatible if and only if there exists \(\eta (t)\in \varTheta _{t}\) such that, for all \(t\in {\mathbb {T}}\),

$$\begin{aligned}&{\mathbb {E}}^{\alpha |\eta (t)}\Big [\sum _{s=t}^{T-1} \big (\beta ^{\alpha }_{S,s+1}({\tilde{\theta }}_{s+1}\vee \eta (s+1))-\beta ^{\alpha }_{S,s}({\tilde{\theta }}_{s}\vee \eta (s)) \big ) \\&\quad - \big (\beta ^{\alpha }_{{\bar{S}},s+1}({\tilde{\theta }}_{s+1}\vee \eta (s+1))-\beta ^{\alpha }_{{\bar{S}},s}({\tilde{\theta }}_{s}\vee \eta (s)) \big ) \Big ] = 0, \end{aligned} \tag{53}$$

where \(\beta ^{\alpha }_{{\bar{S}},t}\) and \(\beta ^{\alpha }_{S,t}\) are constructed in terms of \(\alpha \) in Proposition 3.
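Reading the summation in (53) as applying to both bracketed differences, the left-hand side telescopes, which gives a more compact but equivalent form of condition (iv). Write, as a shorthand introduced only for this step, \(f_{s}(\theta ) \equiv \beta ^{\alpha }_{S,s}(\theta \vee \eta (s)) - \beta ^{\alpha }_{{\bar{S}},s}(\theta \vee \eta (s))\). Then

$$\sum _{s=t}^{T-1}\big (f_{s+1}({\tilde{\theta }}_{s+1}) - f_{s}({\tilde{\theta }}_{s})\big ) = f_{T}({\tilde{\theta }}_{T}) - f_{t}({\tilde{\theta }}_{t}).$$

Since the expectation \({\mathbb {E}}^{\alpha |\eta (t)}\) conditions on \(\theta _{t} = \eta (t)\) and \(\eta (t)\vee \eta (t) = \eta (t)\), condition (53) is equivalent to

$${\mathbb {E}}^{\alpha |\eta (t)}\Big [\beta ^{\alpha }_{S,T}({\tilde{\theta }}_{T}\vee \eta (T)) - \beta ^{\alpha }_{{\bar{S}},T}({\tilde{\theta }}_{T}\vee \eta (T))\Big ] = \beta ^{\alpha }_{S,t}(\eta (t)) - \beta ^{\alpha }_{{\bar{S}},t}(\eta (t)).$$

In this form, only the terminal-period potential functions and the period-\(t\) values at the threshold enter the condition.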

The following proposition gives a necessary condition for DIC that follows from Lemma 7.

Proposition 5

Suppose that Assumptions 1 and 3 hold. In any DIC mechanism \(\{\alpha , \phi , \xi ,\rho \}\), the following conditions hold: for any arbitrary fixed state \(\theta _{\epsilon ,t}\in \varTheta _{t}\), any \(\theta _{t}\), \({\hat{\theta }}_{t}\in \varTheta _{t}\), \(h^{\theta }_{t-1}\in \prod _{s=1}^{t-1}\varTheta _{s}\), \(t\in {\mathbb {T}}\),

where \(\gamma _{t}^{\alpha }(\tau ,\theta _{t}|h^{\theta }_{t-1}) \equiv \frac{\partial U^{\alpha ,\phi ,\xi ,\rho }_{t}(\tau ,r|h^{\theta }_{t-1}) }{ \partial r }\Big |_{r= \theta _{t}}\).

Proof

See Appendix H. \(\square \)

Proposition 5 establishes a first-order necessary condition for DIC. It uses the envelope condition established in Lemma 7 to express this necessary condition in terms of the allocation rule. Note that conditions (54) and (55) hold under both constructions of \(\rho \), namely the one in Proposition 2 and the one in Corollary 2.

6 Principal’s Optimal Mechanism Design

At the ex-ante stage, the principal makes a take-it-or-leave-it offer by designing the decision rules \(\{\alpha ,\phi ,\xi ,\rho \}\) to maximize her ex-ante expected payoff (1). The time horizon of the principal’s optimization problem is the mean first passage time \({\bar{\tau }}\). Given the agent’s optimal stopping rule, \({\bar{\tau }}\) is determined by \(\{\alpha ,\phi ,\xi ,\rho \}\) and the stochastic process \(\varXi _{\alpha }\). Propositions 2 and 3 imply that \(\phi \) and \(\xi \) can be expressed in terms of \(\alpha \), and by Corollary 4, \(\rho \) is fully characterized by \(\alpha \) and \(\eta \). Hence, \({\bar{\tau }}\) is determined by \(\alpha \), \(\eta \), and \(\varXi _{\alpha }\), i.e., \({\bar{\tau }} = \lambda (\alpha ,\eta ; \varXi _{\alpha })\). Specifically,

Since the principal’s mechanism design problem has a finite horizon, i.e., \(T<\infty \), \({\bar{\tau }}\) always exists.
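For a finite-state discretization, \({\bar{\tau }}\) can be computed from the identity \({\mathbb {E}}[\tau ] = \sum _{t=1}^{T}\Pr (\tau \ge t)\), which holds for any stopping time taking values in \(\{1,\dots ,T\}\). The sketch below is hypothetical: the state spaces are finite grids, the transition kernels induced by \(\alpha \) are row-stochastic matrices, the agent is assumed to stop when \(\theta _{t} < \eta (t)\) (the direction of the threshold is an assumption), and all numbers are placeholders. The function name `mean_stopping_time` is illustrative and is not the map \(\lambda \) of the text.

```python
import numpy as np

def mean_stopping_time(p0, kernels, stop_masks):
    """Expected stopping time E[tau] = sum_{t=1}^{T} Pr(tau >= t).

    p0            -- distribution of theta_1 over a finite grid of states
    kernels[t]    -- row-stochastic matrix moving the period-(t+1) state
                     distribution to period t+2 (hypothetical discretization)
    stop_masks[t] -- boolean array, True where the agent stops in period t+1
    The agent is assumed to leave by period T = len(stop_masks) at the latest.
    """
    T = len(stop_masks)
    alive = np.asarray(p0, dtype=float)  # probability mass that has not stopped yet
    expected = 0.0
    for t in range(T):
        expected += alive.sum()          # adds Pr(tau >= t + 1)
        if t == T - 1:
            break
        alive = alive * np.logical_not(stop_masks[t])  # remove agents who stop now
        alive = alive @ kernels[t]                     # move survivors forward
    return expected

# Placeholder example: three states, T = 3, stop when theta_t < eta(t)
grid = np.array([0.0, 1.0, 2.0])
P = np.array([[0.5, 0.5, 0.0],
              [0.25, 0.5, 0.25],
              [0.0, 0.5, 0.5]])
eta = [1.0, 1.0, 1.0]
masks = [grid < e for e in eta]
p0 = np.array([0.0, 0.5, 0.5])
tau_bar = mean_stopping_time(p0, [P, P], masks)
print(tau_bar)  # 2.875
```

Because the loop runs for at most \(T\) periods, the computed value is always finite, consistent with the existence argument above.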

Given \(\{\alpha ,\phi ,\xi ,\rho \}\), the agent decides at the ex-ante stage whether to participate by checking the rational participation (RP) constraint,

i.e., the agent’s ex-ante expected payoff is nonnegative. Since decision making at the ex-ante stage involves no private information, the principal’s evaluation of \({\bar{\tau }}\) coincides with the agent’s. Hence, the principal’s problem is

The mechanism design problem formulated in (58) is applicable to principal-agent models in a variety of economic environments in which the agent’s private information changes endogenously over time. The mechanism could be a dynamic contract, in which the principal commits to a T-period contract and the agent is allowed to terminate the contract unilaterally without advance notice. As an illustration, suppose that the principal is an employer and the agent is an employee. The employee’s state is his attitude towards work, which changes over time as employee loyalty develops. The employer’s mechanism design problem is to find an optimal way to design and allocate inter-temporal work and salary arrangements that foster the ongoing development of employee loyalty, and to specify a compensation or penalty policy that influences the employee’s unilateral termination of the contract and hedges the corresponding risk of lost profit. Other applications include dynamic subscription policies (e.g., magazines, online courses, and customized products), insurance, and leasing. Allowing early termination gives the agent additional flexibility and reduces his risk from the uncertainty of the dynamic environment, thereby making the principal’s offer more attractive.