Abstract

An autonomous correlation device in a multistage game is a device that, before every stage, chooses for each player a private signal, possibly in a correlated way, and reveals to each player the signal chosen for him. The chosen signals depend only on previous signals, and not on the actions of the players. An extensive-form correlated \(\varepsilon \)-equilibrium in a multistage game is an \(\varepsilon \)-equilibrium in an extended game that includes an autonomous correlation device. In this paper we prove that every stochastic game with Borel measurable bounded payoffs has an extensive-form correlated \(\varepsilon \)-equilibrium, for every \(\varepsilon >0\).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many situations in economics and biology do not have a natural termination time; Nations as well as species have a very long future to consider. A mathematical abstraction for this phenomenon is the concept of infinite-horizon games.

A broad literature deals with infinite-horizon games with perfect information, where at each stage only one player is active; the active player chooses an action and all other players observe his move. A play of the game is an infinite sequence of moves, and the payoff of each player is a function of the play. Martin [10] studied a two-player zero-sum games where the payoff function is \(\{0,1\}\)-valued. That is, player 1 wins the game and gain 1 if the play generated by the players is in a given set of plays, and player 2 wins otherwise. Martin proved that if the winning set of player 1 is Borel measurable, then the game is determined: either player 1 has a winning strategy or player 2 has a winning strategy. This result implies that every two-player zero-sum perfect-information game has a value, provided the payoff function is bounded and Borel measurable. Mertens and Neyman [12] used the existence of the value in two-player zero-sum perfect-information games to prove that for every \(\varepsilon > 0\), every multi-player non-zero-sum perfect-information game has a pure \(\varepsilon \)-equilibrium, provided the payoff functions are bounded and Borel measurable. A recent result of Flesch et al. [5] demonstrates that a subgame perfect \(\varepsilon \)-equilibrium needs not exist in all perfect-information games with bounded Borel payoff functions.

While a lot is known regarding the class of infinite-horizon perfect information games, the same is not true for the class of infinite-horizon imperfect-information games. In this paper we concentrate on stochastic games, a class of infinite-horizon games with imperfect information, in which players move simultaneously at each stage and payoffs are determined by Borel measurable bounded functions on the set of plays. Such payoff functions which were considered by Maitra and Sudderth [9] are more general than the standard approach of using daily payoffs.

In 1969, Blackwell [3] proved the existence of a value in every Blackwell game where the payoff function is the indicator function of a \(G_\delta \) set, and conjectured that every Blackwell game with bounded payoffs has a value. Footnote 1 Blackwell’s conjecture was proved by Martin [11] who also showed how to generalize this result to zero-sum stochastic games. However, the existence of an \(\varepsilon \)-equilibrium in all stochastic games with Borel measurable bounded payoffs is still an open problem, and was only proved for subclasses of these games (see e.g., two-player stochastic game with finite state and action spaces [20, 21], three-player absorbing games [16], and a subclass of multi-player quitting games (Solan and Vieille, [18]). In the current paper we deal with the existence of a weaker concept of equilibrium in stochastic games: an extensive-form correlated \(\varepsilon \)-equilibrium.

Correlated equilibria were introduced by Aumann [1, 2] for one-shot games. A correlated equilibrium is a probability distribution over the set of joint actions, such that if a joint action is drawn from this distribution (presumably by an external mediator), and each player is privately recommended to follow his part in the joint action, then no player can profit by disobeying the recommendation, given that all other players follow their recommendations. Equivalently, consider an extended game in which a correlation device chooses, before the start of the play, a signal to each player according to some known joint distribution over the set of signals. Any Nash equilibrium in this extended game, where the players can base their choice of action on the signal they receive from the device, is a correlated equilibrium in the original game.

For multistage games, several variants of correlation equilibrium have been studied in the literature, each one is based on a different class of correlation devices. First, as in one-shot games, the device can choose one private signal for each player before the start of play and reveal to each player his chosen signal (correlation device, [6, 7]). A more general device may choose a private signal for each player before every stage, and reveal to each player his chosen signal before the players choose actions for that stage. In this case, the data on which the device bases its choices may vary. In the most general case, the device may base its choices on stage messages that it receives from the players (communication device, [6, 7, 14], or [13]. One can restrict oneself to devices that base their choices only on previous signals that they chose (autonomous device, [6, 7]).

In the present paper we are interested in autonomous devices: devices that choose a signal for each player at every stage, and the signals depend only on previous signals, and not on the actions of the players or on previous states. Thus, there is no communication between the players, and a correlation is achieved by the lotteries of the device. An extensive-form correlated \(\varepsilon \)-equilibrium in a multistage game is an \(\varepsilon \)-equilibrium in an extended game that includes an autonomous device.

Solan [17] characterized the set of extensive-form correlated \(\varepsilon \)-equilibria in stochastic games. In particular, he proved that every feasible and individually rational payoff in a stochastic game is an extensive-form correlated equilibrium payoff, by defining a correlation device that mimics that profile. The device chooses for each player an action according to the probability distribution given by the profile and recommends that each player plays that action. To make deviations nonprofitable, the device reveals to all players the recommendations in the previous stage. This way, a deviation is detected immediately and can be punished. Solan leaves open the question whether there exists a feasible and individually rational payoff.

The existence of a correlated equilibrium in stochastic games was known only in special cases. Nowak [15] proved the existence of correlated equilibria in discounted stochastic games with general state space given that the payoffs are continuous with respect to joint actions. Solan and Vieille [19] proved the existence of an extensive-form correlated equilibrium in an undiscounted stochastic game. In addition, if the game is positive and recursive, a stationary correlated equilibrium payoff exists. In their proof for stochastic games Solan and Vieille construct a “good” profile that yields all players a high payoff, in which no player can profit by a unilateral deviation that is followed by an indefinite punishment. They then follow Solan [17] and define a correlation device that mimics that profile.

The main result of the paper is the existence of extensive-form correlated equilibria in stochastic games with Borel measurable bounded payoffs. Our proof is also based on the characterization theorem of Solan [17]. However, since the payoffs are not necessarily continuous it was not clear that stochastic games with Borel and bounded payoffs have a “good” profile that yields all players a high payoff, in which no player can profit by a unilateral deviation that is followed by an indefinite punishment. The crucial step of the proof is a special property for one-shot normal-form games that ensures that such a “good” profile indeed exists.

The paper is organized as follows. The model, some basic definitions, and the main result appear in Sect. 2. Section 3 contains an example that illustrates the main ideas of the construction. The proof is presented in Sect. 4.

2 The Model and the Main Result

Definition 1

A stochastic game with Borel measurable bounded payoffs \(\Gamma \) is given by

-

A nonempty finite set of players \(I \).

-

A nonempty countable set of states \(S\).

-

An initial state \(s_1\in S\).

-

For every player \(i\in I\), a nonempty finite set of actions \(A^i\). Denote by \(A=\prod _{i\in I} A^i\) the set of joint actions. Denote by \(H_\infty =\{s_1\}\times (A\times S)^{\mathbf{N}}\) the set of plays. We endow \(A\) with the discrete topology, and \(H_\infty \) with the \(\sigma \)-algebra generated by all the finite cylinders.

-

A transition rule \(q\) that assigns for each pair \((s,a)\in S\times A\) of state and joint action a probability distribution \(q(\cdot |s,a)\) over \(S\).

-

For every player \(i\in I\), a Borel measurable bounded payoff function \(u^i:H_\infty \rightarrow {\mathbf{R}}\).

The game is played as follows. The initial state \(s_1\) is given. At stage \(t\), the current state \(s_t\) is announced to the players and they simultaneously choose actions. Each player \(i\) chooses an action \(a^i_t\in A^i\), independent of the others. The joint action \(a_t=( a^i_t)_{i\in I}\) is publicly announced, a state \(s_{t+1}\) is drawn according to \(q(\cdot |s_t,a_t)\), and the game proceeds to stage \(t+1\). The payoff to each player \(i\) is \(u^i\left( s_1,a_1,s_2, a_2,\ldots \right) \in {\mathbf{R}}\).

The set of finite histories at stage \(t\) is \(H_t = \{s_1\}\times (A\times S)^{t-1}\). Denote by \(H= \bigcup _{t \in {\mathbf{N}}} H_{t}\) the sets of all finite histories.

Definition 2

A (behavior) strategy for player \(i\) is a function \(\sigma ^i : H \rightarrow \Delta \left( A^i\right) \). A (strategy) profile \(\sigma =\left( \sigma ^i\right) _{i\in I}\) is a vector of strategies, one for each player.

Let \(\Sigma ^i\) be the set of (behavior) strategies of player \(i\) and \(\Sigma =\prod _{i\in I}\Sigma ^i\) be the profile space. We denote by \(\sigma ^{-i}=\left( \sigma ^j\right) _{j\ne i}\) a vector of strategies of all the players excluding player \(i\).

Every profile \(\sigma \in \Sigma \) induces a probability distribution \(P_\sigma \) over \(H_\infty \). Let \(E_\sigma \) be the expectation operator that corresponds to \(P_\sigma \). The expected payoff for player \(i\) under \(\sigma \) is

Definition 3

A correlated profile is a function \(\sigma : H \rightarrow \Delta \left( A\right) \).

Note that every behavior profile induces a correlated profile, but the converse is not true.

Let \(\Sigma _*\) be the correlated profile space. We denote by \(\sigma \) a behavior profile as well as a correlated profile.

Every correlated profile \(\sigma \in \Sigma _*\) induces a probability distribution over \(H_\infty \). We denote by \(u^i\left( \sigma \right) \) the expected payoff for player \(i\) under the correlated profile \(\sigma \).

Definition 4

An autonomous device for \(\Gamma \) is a pair \({\mathcal {C}}=\left( \left( M^i_t\right) _{i\in I,t\in {\mathbf{N}}},\left( \xi _t\right) _{t\in {\mathbf{N}}}\right) \) where

-

\(M^i_t\) is a nonempty finite set of signals for player \(i\) at stage \(t\). Set \(M_t=\prod _{i\in I} M^i_t\), the set of joint signals at stage \(t\). For every \(t\ge 1\), set \(M(t)=M_1\times M_2 \times ...\times M_t\), the set of histories of joint signals at the first \(t\) stages, and \(M\left( 0\right) =\{\emptyset \}\).

-

\(\xi _t: M\left( t-1\right) \rightarrow \Delta \left( M_t\right) \) is a function that assigns for every history of joint signals in \( M\left( t-1\right) \) a distribution over the set of joint signals at stage \(t\).

Given an autonomous device \({\mathcal {C}}\), we define an extended game \(\Gamma \left( {\mathcal {C}}\right) \) as follows. The game \(\Gamma \left( {\mathcal {C}}\right) \) is played in the same manner as the game \(\Gamma \), but at each stage \(t\) once the current state \(s_t\) is announced to the players, a joint signal \(m_t\) is drawn from \(M_t\) according to \(\xi _t\left( m_1,m_2,...,m_{t-1}\right) \) and each player \(i\) privately receives the signal \(m_t^i\). Then, each player chooses an action \(a_t^i\), based on his current private signal and his previous private signals, as well as previous states and the moves of all the players during the first \(t-1\) stages.

Denote by \(H_t^i\left( {\mathcal {C}}\right) \) the set of histories player \(i\) can observe at stage \(t\); that is \(H_1^i\left( {\mathcal {C}}\right) =\{s_1\}\times M^i_1\) and \(H_t^i\left( {\mathcal {C}}\right) =\{s_1\}\times \prod _{\left\{ t'<t\right\} }\left( M_{t'}^i\times A\times S\right) \times M_{t}^i\) for every \(t>1\). Set \(H^i\left( {\mathcal {C}}\right) =\bigcup _{t\in {\mathbf{N}}} H_t^i\left( {\mathcal {C}}\right) \) the set of all finite histories of player \(i\) in \(\Gamma \left( \mathcal C\right) \).

Definition 5

A strategy for player \(i\) in the extended game \(\Gamma \left( {\mathcal {C}}\right) \) is a function \(\sigma ^i : H^i\left( {\mathcal {C}}\right) \rightarrow \Delta \left( A^i\right) \). A profile in the game \(\Gamma \left( {\mathcal {C}}\right) \) is a vector of strategies, one for each player.

Every autonomous device \({\mathcal {C}}\) and profile \(\sigma \) in the game \(\Gamma \left( {\mathcal {C}}\right) \) define a probability distribution \(P_{{\mathcal {C}},\sigma }\) over the set \(H_\infty \) of plays in \(\Gamma \). Let \(E_{{\mathcal {C}},\sigma }\) be the expectation operator that corresponds to \(P_{{\mathcal {C}},\sigma }\). The expected payoff for player \(i\) under \(\sigma \) in \(\Gamma \left( {\mathcal {C}}\right) \) is

Definition 6

Let \(\varepsilon >0\). A profile \(\sigma _*\) in the game \(\Gamma (\mathcal C)\) is an \(\varepsilon \)-equilibrium if for every player \(i\in I\) and every strategy \(\sigma ^i\) of player \(i\) in \(\Gamma \left( {\mathcal {C}}\right) \)

The vector \(u_{\mathcal {C}}\left( \sigma _*\right) \) is called an \(\varepsilon \)-equilibrium payoff vector in \(\Gamma \left( {\mathcal {C}}\right) \).

That is, a profile \(\sigma _*\) is an \(\varepsilon \)-equilibrium in the game \(\Gamma \left( {\mathcal {C}}\right) \) if no player can gain more than \(\varepsilon \) by a unilateral deviation.

Definition 7

Let \(\varepsilon >0\). An extensive-form correlated \(\varepsilon \)-equilibrium in the game \(\Gamma \) is a pair of an autonomous device \({\mathcal {C}}\) for \(\Gamma \) and an \(\varepsilon \)-equilibrium in an extended game \(\Gamma \left( {\mathcal {C}}\right) \).

The main result of the paper is the following.

Theorem 8

Let \(\varepsilon >0\). Every stochastic game with Borel measurable bounded payoffs has an extensive-form correlated \(\varepsilon \)-equilibrium.

3 An Example

In this section we illustrate the main ideas in the construction using an example. For simplicity, we look at a game with only one state.

Consider the following two-player game played by Alice and Bob, where there is only one state and the action sets of both players are \(\{a,b\}\). The game is played in stages. At each stage \(t\), Alice and Bob simultaneously choose actions. The joint action is publicly announced, and the game proceeds to stage \(t+1\). The payoffs are as follows. As soon as Alice plays \(b\), the game terminates Footnote 2 and the payoffs are determined by the last action taken by Bob: if he played \(a\) then the payoffs are \((-2,1)\) (i.e., Alice receives \(-2\) and Bob receives \(1\)); if he played \(b\) then the payoffs are \((1,0)\). If Alice always plays \(a\) then her payoff is \(0\), while the payoff of Bob is \(0\) only if there is a period \(t\) such that Bob plays \(b\) at all periods from t onwards; otherwise, his payoff is \(-\)2. That is,

This game may be displayed as a version of the Big Match’ Game (see Blackwell and Ferguson, [4]) where at every stage Bob plays either \(a\) or \(b\) while Alice tries to predict when he plays \(b\), but she has only one opportunity to do so. The payoff of Alice depends on whether she exploits her opportunity to match the \(b\)-action of Bob and on her success or failure in doing so. Bob is better off if Alice exploits her opportunity and fails. However, if she does not play \(b\) at all, his payoff is maximal when he plays \(b\) from some stage onward, but by doing so he exposes himself to the risk of Alice playing \(b\).

In this example, as well as in the proof, we consider a special class of autonomous devices that was introduced by Solan [17]. At every stage, each player receives a private signal which includes two components. The first one is the action he is recommended to play at the current stage. The device chooses for each player an action according to a probability distribution given by some correlated profile and recommends that each player plays that action. The second component is the actions his opponents were recommended to play in previous stage. This way all players can compare the realized actions of each player to the recommended action, detect deviations, and punish the deviator by playing a minmax strategy that minimizes the payoffs of the deviator. Footnote 3

In this game, the (minmax) value of each player after any non-terminating history is 0. Indeed, if the game has not terminated yet, by playing \(a\) in all future stages a player ensures that the other player’s payoff is at most 0. In addition, each player can ensure that his payoff is at least 0 by following his maxmin strategy: Alice by always playing \(a\) and Bob by playing \(b\) with probability 1 from some stage onward.

We next examine some natural correlated profiles and show that they may fail to produce an extensive-form correlated \(\varepsilon \)-equilibrium.

We first consider the profile according to which both players follow their maxmin strategies. That is, Alice plays \(a\) and Bob plays \(b\), and each player switches to the punishment strategy if a deviation is detected. The payoff under this profile is \((0,0)\). This profile does not induce an extensive-form correlated \(\varepsilon \)-equilibrium, since Alice can deviate by playing \(b\) at the first stage and gain 1.

Another way to see why this suggested profile does not induce an extensive-form correlated \(\varepsilon \)-equilibrium is to consider the one-shot game that the players face when they consider deviating after some history, given the game did not terminate so far. The payoffs in each cell in this one-shot game are the punishment payoffs in case of deviation. In general, for each finite history, there might be different such one-shot game, however, in our case there is only one state hence the one-shot game is the same for all histories.

Bob | |||

|---|---|---|---|

\(a\) | \(b\) | ||

Alice | \(a\) | (0,0) | (0,0) |

\(b\) | (\(-\)2,1) | (1,0) | |

In this one-shot game, the joint action according to which “Alice plays \(a\) and Bob plays \(b\)” is not an equilibrium neither an \(\varepsilon \)-equilibrium for a sufficiently small \(\varepsilon \). This implies that Alice is better off deviating and being punished rather than following the suggested profile.

Consider a correlated profile that (i) yields to each player after each finite history at least the value up to \(\varepsilon \) and (ii) after every finite history the players play a (correlated) \(\varepsilon \)-equilibrium in the above one-shot game. By Solan [17] such a correlated profile induces an extensive-form correlated \(\varepsilon \)-equilibrium. Therefore, we next try to alter the maxmin strategies so as it also satisfies (ii).

We next investigate the profile according to which both players follow an equilibrium in the preceding one-shot game.

A known feature of multistage games with continuous payoffs is that if the players play at every stage \(t\) an equilibrium (or a correlated equilibrium) in the one-shot game where its payoffs are the players’ values at stage \(t+1\), then each player receives at least his value in the whole game. We now show that, this is not necessarily true in case that the payoffs are not continuous, and thus this profile may fail inducing an extensive-form correlated equilibrium.

We will alter the previous profile according to which the players follow their maxmin strategies in a way that whenever it is not an \(\varepsilon \)-equilibrium in the above one-shot game for a sufficiently small \(\varepsilon \), it is replaced by some correlated \(\varepsilon \)-equilibrium. In that one-shot game, for instance, “Alice plays \(a\) and Bob plays \(b\)” is not an \(\varepsilon \)-equilibrium so whenever Bob plays \(b\) with probability greater than \(\varepsilon \) we replace it by the equilibrium in which “both players play a.” In particular, we obtain a profile such that both players play \(a\) as long as no deviation occurs. The amended profile does not induce an extensive-form correlated \(\varepsilon \)-equilibrium. Indeed, Bob’s payoff under this profile is \(-\)2, less than his maxmin value. This result occurs since the payoff functions are not continuous.

Finally, we introduce a profile according to which both players follow a special \(\varepsilon \)-equilibrium in the preceding one-shot game such that it induces an extensive-form correlated \(\varepsilon \)-equilibrium.

Note that if one alters the maxmin profile as suggested in previous paragraph using only finitely many replacements with probability 1, then the new profile necessarily guarantees that each player’s payoff is at least his or her value. Furthermore, if whenever such a replacement is executed, the expected payoff for at least one player in the one-shot game increases by some constant over his value while the payoffs of the others are at least their values, then by the boundedness of the payoffs only finitely many such replacement is needed with probability 1. In Sect. 4.1 we prove that if the profile in which all players follow their maxmin strategy is not an \(\varepsilon \)-equilibrium in the one-shot game, then there is a correlated \(3\varepsilon \)-equilibrium that satisfies the last property, hence one can alter the maxmin profile as suggested in previous paragraph so it induces an extensive-form correlated \(\varepsilon \)-equilibrium.

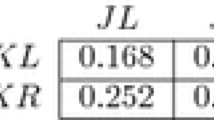

In the example, this method may lead to the correlated profile according to which, as long as the game goes on, an action is chosen by the following distribution.

Bob | |||

|---|---|---|---|

\(a\) | \(b\) | ||

Alice | \(a\) | \(1-\varepsilon \) | \(\varepsilon (1-\varepsilon )\) |

\(b\) | 0 | \(\varepsilon ^2\) | |

The payoffs under this profile in the one-shot game are \((\varepsilon ^2,0)\), and Alice’ payoff is greater than her value by \(\varepsilon ^2\) in any one-shot game. Thus, Alice eventually plays \(b\) with probability 1. In particular, only finitely many replacements are done with probability 1. Furthermore, under this profile the payoffs in the whole game are \((1,0)\), so both players receive at least their value. One can verify that no player can gain more than \(\varepsilon \), by deviating in the game. Hence, this correlated profile induces an extensive-form correlated \(\varepsilon \)-equilibrium.

In the proof, we follow the ideas presented in the example, and show that indeed one can repair the maxmin profile as suggested before using only finitely many replacement with probability 1.

4 The Proof of Theorem 8

Since the payoffs are bounded, one can assume w.l.o.g. that they are in \(\left[ 0,1\right] \).

For every correlated profile \(\sigma \) in \(\Gamma \), one can define an autonomous device \({\mathcal {C}}_\sigma \) that mimics that profile [17]. For each player, the device chooses an action according to the probability distribution given by \(\sigma \) and recommends that he plays that action. To make deviations unprofitable, the device reveals to all players the recommendations it made to all players in the previous stage. This way, a deviation is detected immediately and the deviator can be punished. As is shown in Sect. 4.2, coordination between the players should continue during the punishment phase. Since we have to construct an autonomous correlation device that does not observe the play, the device chooses at each stage \(t\) a vector of recommendations, which includes one recommendation for each possible history of length \(t\), and for every possible deviation by some player that could have occurred in the past, an action is part of the punishment strategy against this player. The players, who observe past play, know which recommendation to take into account, and which to disregard.

From now on we only consider such autonomous devices. We denote by \(\sigma \) the correlated profile in \(\Gamma \) as well as the profile in \(\Gamma \left( {\mathcal {C}}_\sigma \right) \) according to which the players follow the recommendation of the device.

Fix \(\varepsilon >0\) throughout the proof. In due course, we define the punishment in case of deviation. We then present some sufficient conditions introduced by Solan [17] under which a correlated profile in \(\Gamma \) induces an extensive-form correlated \(\varepsilon \)-equilibrium such that the punishment is applied whenever a deviation occurs. We conclude the proof by presenting a correlated profile that satisfies these sufficient conditions. Beforehand, we present a certain normal-form game, which plays a crucial role in the proof of Theorem 8, and study its properties.

4.1 A Result on Correlated Equilibria in Normal-Form Games

A normal-form game is given by a triplet \(G=\left( I, \left( A^i\right) _{i\in I},\left( g^i\right) _{i\in I}\right) \), where \(I\) is a finite set of players, \(A^i\) is a finite set of actions for player \(i\), \(A=\prod _{i\in I}A^i\) is the set of joint actions, and \(g^i:A\rightarrow {\mathbf{R}}\) is the payoff function of player \(i\), for every \(i\in I\).

A mixed action for player \(i\in I\) in \(G\) is a probability distribution \(x^i\in \Delta \left( A^i\right) \). An action profile is a vector of actions, one for each player. For every \(\lambda \ge 0\), a profile \(x\in \prod _{i\in I}\Delta (A^i)\) is a \(\lambda \)-equilibrium if every player \(i\in I\) can gain at most \(\lambda \) by deviating given the other players follow \(x^{-i}\).

A correlated profile in \(G\) is a probability distribution \(x\in \Delta \left( A\right) \). For every \(a\in A\), denote by \(x\left[ a\right] \) the probability that the joint action \(a\) is chosen according to \(x\), and by \(x[a^i]\) the probability that the action \(a^i\) is chosen according to \(x\).

Definition 9

A correlated profile \(x\in \Delta \left( A\right) \) is a correlated \(\lambda \)-equilibrium in \(G\) if for every player \(i\in I\) and every pair of actions \(a^i,\hat{a}^i\in A^i\) such that \(x[a^i]>0\) one has

That is, in a correlated \(\lambda \)-equilibrium \(x\), a joint action \(a\) is chosen according to \(x\), each player \(i\) is informed of \(a^i\) and he can gain at most \(\lambda \) by unilaterally deviating from this recommended action given that all other players follow their recommendations. This concept is an ex-post correlated \(\lambda \)-equilibrium, unlike the concept of an extensive-form correlated \(\varepsilon \)-equilibrium.

Every normal-form game has a correlated 0-equilibrium. This follows from the existence of Nash equilibria (which requires a fixed-point argument; see [1]) or can be proven directly by duality arguments (Hart and Schmeidler [8]). We will prove that every normal-form game also has a correlated \(\lambda \)-equilibrium with a special property that we need in the sequel.

For every player \(i\in I\), and every mixed action \(x^i\in \Delta \left( A^i\right) \) define

This is the minimal payoff of player \(i\) when he follows the mixed action \(x^i\). Note that \(b^i(x^i)\) is at most the maxmin value of player \(i\) in \(G\).

The following theorem asserts that if the profile \(\left( x^i\right) _{i\in I}\) is not a \(\lambda \)-equilibrium in \(G\), then there is a correlated \(3\lambda \)-equilibrium in \(G\) according to which the expected payoff of every player \(i\) is at least \(b^i(x^i)\) and there is at least one player whose payoff is even higher than that.

Theorem 10

Let \(\lambda >0\). If the profile \(x=\left( x^i\right) _{i\in I}\in \prod _{i\in I}\Delta \left( A^i\right) \) is not a \(\lambda \)-equilibrium in \(G\), then the game has a correlated \(3\lambda \)-equilibrium \(x_*\in \Delta \left( A\right) \) such that

and for at least one player \(j\in I\) one has

This theorem is the heart of the proof of Theorem 8. As explained in Sect. 3, we are going to construct an extensive-form correlated \(\varepsilon \)-equilibrium in \(\Gamma \) by modifying the maxmin profile in it. Whenever the joint mixed action is not a \(\lambda \)-equilibrium in a proper one-shot game, we will instruct the players to play a correlated \(3\lambda \)-equilibrium in that one-shot game. Theorem 10 and the boundedness of the payoffs will ensure that only finitely many modifications are necessary.

Proof

Every \(\lambda \)-equilibrium in \(G\) is also a \(\lambda '\)-equilibrium in \(G\), for \(\lambda '>\lambda \). Thus, one can assume w.l.o.g. that \(\lambda <\frac{1}{2}\).

Let \(x\) be a profile that is not a \(\lambda \)-equilibrium in \(G\). In particular, there are a player \(l\in I\) and an action \(a^l\in A^l\) such that

By definition, for every other player \(i\in I\setminus \left\{ l\right\} \) one has \(g^i\left( a^l,x^{-l}\right) \ge b^i(x^i)\).

We next prove that there must be a correlated \(3\lambda \)-equilibrium \(x_*\in \Delta \left( A\right) \) that satisfies the required conditions. The proof will consist of several steps. First we define an auxiliary one-shot game. We then show that every 0-equilibrium in the auxiliary game induces either a \(\lambda \)-equilibrium in the original game that satisfies Eqs. (2) and (3); or a correlated \(3\lambda \)-correlated equilibrium that satisfies Eqs. (2) and (3).

\(\underline{\hbox {Defining an auxiliary game}.}\)

Let \(\bar{G}=\left( I, \left( \bar{A}^i\right) _{i\in I},\left( \bar{g}^i\right) _{i\in I}\right) \) be an auxiliary normal-form game with the set of players of \(G\). The set of actions for player \(i\), \(\bar{A}^i\), is obtained by adding the mixed action \(x^i\) to the set \(A^i\) as a pure action. To distinguish between the “pure” action \(x^i\) in \(\bar{G}\) and the mixed one in \(G\), we denote the first one by \(\bar{x}^i\). Denote by \(\bar{A}=\prod _{i\in I}\bar{A}^i\) the set of joint actions in \(\bar{G}\). For every player \(i\in I\) and every joint action \(\bar{a}\in \bar{A}\), denote by \(a\) the mixed profile in \(\prod _{i\in I}\Delta (A^i)\) that correspond to \(\bar{a}\). The payoff \(\bar{g}^i\left( \bar{a}\right) \) for player \(i\) is as follows:

In other words, the game \(\bar{G}\) is obtained by adding one action to each player \(i\), which is identical to \(x^i\), and to motivate the players to use this action, the payoff of a player who chooses it increases by \(\lambda \).

\(\underline{\hbox {The 0-equilibrium in the auxiliary game}.}\)

By playing the action \(\bar{x}^i\) in \(\bar{G}\), player \(i\) guarantees that his payoff is at least \(b^i(x^i)+\lambda \). It follows that in every 0-equilibrium \(\bar{p}_*=\left( \bar{p}_*^i\right) _{i\in I}\) of \(\bar{G}\), the payoff of each player \(i\) is at least \(b^i(x^i)+\lambda \). Since the payoffs in \(G\) and \(\bar{G}\) differ by at most \(\lambda \), the 0-equilibrium \(\bar{p}_*=\left( \bar{p}_*^i\right) _{i\in I}\) in \(\bar{G}\) induces a \(\lambda \)-equilibrium \( p_*=\left( p_*^i\right) _{i\in I}\) in \(G\) such that the expected payoff of every player \(i\in I\) is at least \(b^i(x^i)\).

\(\underline{\hbox {Constructing a specific correlated}}\) \({3\lambda }\)-\(\underline{\hbox {equilibrium in}}\) \(G\).

We now use \(p_*\) to construct a correlated \({3\lambda }\)-equilibrium in \(G\) that satisfies Eqs. (2) and (3). We distinguish between two cases.

Case 1: there is a player \(j\in I\) that plays \(\bar{x}^{j}\) in \(\bar{p}_*\) with probability at most \({1 \over 2}\).

We claim that in this case \(p_*\) itself is a \(\lambda \)-equilibrium in \(G\) that satisfies Eqs. (2) and (3). Indeed, as we already mentioned, \(p_*\) is a \(\lambda \)-equilibrium in \(G\) that satisfies Eq. (2). It is left to show that \(p_*\) also satisfies Eq. (3).

Denote by \(\bar{p}_*^j\left[ a^j\right] \) (respectively, \( p_*^j\left[ a^j\right] \)) the probability that player \(j\) plays the action \(a^j\) in \(\bar{p}_*^j\) (respectively, \( p_*^j\)). Then

Since \(\lambda <\frac{1}{2}\), one has \(b^j(x^j)+\lambda ^3<b^j(x^j)+\lambda ^2<g^j\left( p_*\right) \), and Eq. (3) holds.

Case 2: every player \(i\in I\) plays \(\bar{x}^i\) in \(\bar{p}_*\) with probability higher than \(\frac{1}{2}\).

Define the following correlated profile \(x_*\) in \(G\): with probability \(1-\lambda \) a joint action \(a\in A\) is chosen according to \(p_*\); with probability \(\lambda \left( 1-\lambda \right) \) a joint action \(a\in A\) is chosen according to \(x\); and with probability \( \lambda ^2\), the action \(a^l\) is chosen for player \(l\) while a joint action \(a^{-l}\in A^{-l}\) for players \(I\setminus \left\{ l\right\} \) is chosen according to \(x^{-l}\).

By following \(x_*\) in \(G\), the expected payoff of each player \(i\in I\setminus \left\{ l\right\} \) is at least \(b^i(x^i)\) and Eq. (2) is satisfied. Indeed,

In addition, player \(l\)’s expected payoff is at least

where the inequality follows from Eq. (4). Thus, Eq. (3) is satisfied as well.To conclude the proof, we need to prove that \(x_*\) is a correlated \(3\lambda \)-equilibrium in \(G\). a joint action \(a\) is drawn from \(A\) according to \(x_*\) and player \(i\in I\) is recommended to play \(a^i\).

If \(i\ne l\) then \(a^i\) is necessarily in the support of \(p_*\). Hence, with probability at least \(\frac{\frac{1}{2}\left( 1-\lambda \right) }{\frac{1}{2}\left( 1-\lambda \right) +\lambda }\) the other players play according to \(p_*^{-i}\), so player \(i\) can gain by deviating at most

If \(i=l\) then either \(a^i=a^l\) or \(a^i\ne a^l\). If the former holds, then either the other players play according to \(p_*^{-i}\) (in case that \(a^l\) is in the support of \(p_*\)) and by deviating he can gain at most \(\lambda \), or the other players play according to \(x^{-l}\) so he is recommended to play his best response, thus by deviating he can not gain more than \(\lambda \). Finally, if \(a^i\ne a^l\) then \(a^i\) is necessarily in the support of \(p_*\), in which case, as shown for the other players, player \(l\) can gain at most \(3\lambda \) by deviating. It follows that \(x_*\) is a correlated \(3\lambda \)-equilibrium in \(G\), as desired. \(\square \)

4.2 The Punishment

Let \(\Gamma \) be a stochastic game with Borel bounded payoffs and let \(h\in H\) be a finite history in \(\Gamma \). Denote by \(\Gamma _{h}\) the subgame that starts after history \(h\) occurs. Every profile \(\sigma \) in \(\Gamma \) induces a profile \(\sigma _{h}\) in the subgame \(\Gamma _{h}\). Denote by \(u^i\left( \sigma |h\right) \) the expected payoff of player \(i\) in the subgame \(\Gamma _{h}\) under this profile.

Fix a player \(i\in I\). Consider a zero-sum stochastic game \(\Gamma ^i_h\) where player 1, the maximizer, represents player \(i\), player 2, the minimizer, represents all other players, and the payoff function is \(u^i(\cdot |h)\). By (Martin [11], Theorem 11), this game has a value \(v^i(h)\) called the value of player \(i\in I\) in the subgame \(\Gamma _h\); that is,

A \(\lambda \)-maxmin (behavior) strategy in \(\Gamma ^i_h\) is a strategy \(\sigma ^i\in \Sigma ^i\) that attains the supremum in the right-hand side of Eq. (5) up to \(\lambda \). A subgame perfect \(\lambda \)-maxmin strategy is a strategy \(\sigma ^i\in \Sigma ^i\) that attains the supremum in the right-hand side of Eq. (5) up to \(\lambda \), for every history \(h\in H\). A \(\lambda \)-punishing strategy in \(\Gamma ^i_h\) is a correlated strategy \(\sigma ^{-i}\in \Sigma ^{-i}_*\) that attains the infimum in the middle term of Eq. (5) up to \(\lambda \).

The next proposition states that every player has a subgame perfect \(\lambda \)-maxmin strategy, and the same holds for all other players.

Proposition 11

Let \(i\in I\) and \(\lambda >0\). Then,

-

(I)

There is a strategy \(\tilde{\sigma }^{i}_\lambda \in \Sigma ^i\) such that for every correlated profile \(\sigma ^{-i}\in \Sigma ^{-i}_*\) of the other players and every finite history \(h\in H\),

$$\begin{aligned} u^i\left( \tilde{\sigma }^i_\lambda ,\sigma ^{-i}|h\right) \ge v^i\left( h\right) -\lambda . \end{aligned}$$ -

(II)

There is a correlated strategy \(\hat{\sigma }^{-i}_{\lambda }\in \Sigma ^{-i}_*\), such that for every strategy \(\sigma ^i\in \Sigma ^i\) of player \(i\) and every finite history \(h\in H\),

$$\begin{aligned} u^i\left( \sigma ^i,\hat{\sigma }^{-i}_{\lambda } \mid h\right) \le v^i\left( h\right) +\lambda . \end{aligned}$$

Proof

Note that (I) implies (II) by exchanging the roles of the players. Thus, it is sufficient to prove that (I) holds.

Construct a strategy \(\tilde{\sigma }^i_{\lambda }\) of player \(i\) in the following way. Player \(i\) starts by following a \(\frac{\lambda }{4}\)-maxmin strategy at the history \(h_1=s_1\). As soon as the game reaches an history \(h\in H\) in which the \(\frac{\lambda }{4}\)-maxmin strategy at the history \(h_1\) is not \(\frac{\lambda }{2}\)-maxmin in \(\Gamma ^i_h\), he switches to a \(\frac{\lambda }{4}\)-maxmin strategy in \(\Gamma ^i_h\). Once he reaches some later history \(h'\) such that the \(\frac{\lambda }{4}\)-maxmin in \(\Gamma ^i_h\) is no longer a \(\frac{\lambda }{2}\)-maxmin in \(\Gamma ^i_{h'}\), he switches to a \(\frac{\lambda }{4}\)-maxmin in \(\Gamma ^i_{h'}\), and so on.

We now show that the strategy \(\tilde{\sigma }^i_{\lambda }\) of player \(i\) is \(\lambda \)-maxmin at every history \(h\). The proof uses a similar ideas to those presented in Sect. 3. If in the construction of \(\tilde{\sigma }^i_{\lambda }\) the number of switches is finite with probability 1, then the new profile is necessarily a \(\lambda \)-maxmin at every history \(h\). To prove that only finitely many switches are needed, we demonstrate that, by the construction, whenever a player switches to a new \(\frac{\lambda }{4}\)-maxmin strategy, the lower bound of his value increases. Thus, by the boundedness of payoffs, with probability 1 only finitely many switches are done. \(\square \)

Lemma 12

For every correlated strategy \(\sigma ^{-i}\in \Sigma ^{-i}_*\) and every history \(h\in H\) one has

Proof

Let \(\sigma ^{-i}\in \Sigma ^{-i}_*\) be a correlated profile of all players excluding player \(i\). We are going to prove that Eq. (6) holds for \(\sigma ^{-i}\) and for the history \(h_1 =s_1\) at the beginning of the game. The proof that it also holds for every other history \(h \in H\) is similar.

Denote by \(\sigma ^i_{T,n}\) the strategy that is obtained by the process defined above for constructing \(\tilde{\sigma }^i_{\lambda }\) under the following modification: the process stops as soon as either the game reaches stage \(T\in {\mathbf{N}}\) or \(n\) switches have been executed. In particular, \(\sigma ^i_{T,0}\) is the \(\frac{\lambda }{4}\)-maxmin strategy of player \(i\) at the history \(h_1=s_1\) with which we started.

We now prove that the strategy \(\sigma ^i_{T,n}\) of player \(i\) is a \(\frac{\lambda }{2}\)-maxmin strategy at \(h_1=s_1\), for every \(T\) and \(n\). In addition, we prove that \(u^i(\sigma ^i_{T,n},\sigma ^{-i})\) converges to \(u^i(\sigma ^i,\sigma ^{-i})\) as \(T\) and \(n\) go to infinity. We then conclude that \(\sigma ^i\) is a \(\lambda \)-maxmin strategy in \(\Gamma ^i_{h_1}\).

We start with several notations that are needed for the proof. For every strategy \(\mu ^i\in \Sigma ^i\) of player \(i\), denote by \(b^i(\mu ^i|h)\) the amount that player \(i\) can guarantees for himself by following \(\mu ^i\) in \(\Gamma _h\):

The following two properties hold for \(b^i\): (a) For every history \(h\in H\) and every correlated profile \(\mu ^{-i}\in \Sigma ^{-i}_*\) one has \(u^i(\mu ^i,\mu ^{-i}|h)\ge b^i(\mu ^i|h) ;\) (b) The process \(b^i(\mu ^i|\cdot )\) is a submartingale. Hence for every two bounded stopping times \(\tau _1\le \tau _2\) and every correlated profile \(\mu ^{-i}\in \Sigma ^{-i}_*\) one has

Let \(P_{T,n}\) be the probability distribution induced by \((\sigma ^i_{T,n},\sigma ^{-i})\). Let \(S_{T,n}\) be the minimum of \(T\) and the stage in which the \(n\)’th switch in the definition of \(\sigma ^i_\lambda \) occurs. Denote by \(R_T(h)\) the number of switches that have been executed in \(h\) according to \(\sigma ^i_{T,T}\). We now show that for every \(n\le T\) one has

We prove Eq. (7) by induction. Eq. (7) holds for \(n=0\) since \(\sigma ^i_{T,0}\) is \(\frac{\lambda }{4}\)-maxmin strategy of player \(i\) at the history \(h_1=s_1\). Suppose that it holds for \(n<T\), we prove that it also holds for \(n+1\).

where Eq. (8) is satisfied since \(\sigma ^i_{T,n}\) and \(\sigma ^i_{T,n+1}\) are identical until stage \(S_{T,n+1}\); Inequality (9) follows by (a); Inequality (10) follows from the construction of \(\sigma ^i_{T,n+1}\) according to which the continuation strategy of \(\sigma ^i_{T,n+1}\) at \(h\in H_{S_{T,n}}\) is not identical to that of \(\sigma ^i_{T,n}\) if and only if \(b^i(\sigma ^i_{T,n}|h)<v^i(h)-\frac{\lambda }{2}\le b^i(\sigma ^i_{T,n+1}|h)+\frac{\lambda }{4}\); Inequality (11) follows by (b); and Inequality (12) follows from the induction hypothesis and since \(\{R_T\ge n+1\}\subseteq \{R_T\ge n\}\).

It follows that Eq. (7) holds \(\forall n\le T\). In particular, \(\sigma ^i_{T,n}\) is \(\frac{\lambda }{2}\)-maxmin at \(h_1=s_1\). Furthermore, since the payoffs are bounded, \(P_{T,T}\left( R_T\ge n \right) \) goes to 0 as \(T\) and \(n\) go to infinity. Indeed, otherwise \(u^i(\sigma ^i_{T,n},\sigma ^{-i})\) goes to infinity, a contradiction. In conclusion, there are two positive integers \(T'\) and \(n'\) sufficiently large such that \(P_{T,T}\left( R_T\ge n \right) <\frac{\lambda }{4}\) for every \(T>T'\) and \(n>n'\). In particular, \(\sigma ^i_{T,T}\) and \(\tilde{\sigma }^i_\lambda \) consolidate over a set of plays whose probability is higher than \(1-\frac{\lambda }{4}\). Thus, \(u^i(\tilde{\sigma }^i_{\lambda },\sigma ^{-i})\ge u^i(\sigma ^i_{T,n},\sigma ^{-i})-2\cdot \frac{\lambda }{4}\cdot 1\ge v^i(h_1)-\lambda ,\) as desired. \(\square \)

Fix \(\varepsilon _1\) such that \(0<\varepsilon _1<\varepsilon \). In the autonomous device that will be constructed below, if player \(i\in I\) deviates and an history \(h\in H\) follows, then a punishment against player \(i\) is executed: all other players start to play according to \(\hat{\sigma }^{-i}_{{\varepsilon _1}}\) from the history \(h\) and forth, so player \(i\)’s payoff is at most \(v^i\left( h\right) + {\varepsilon _1}\). Since \(\hat{\sigma }^{-i}_{{\varepsilon _1}}\) is a correlated strategy, the coordination between the players should continue during the punishment phase.

4.3 Sufficient Conditions

In this section we will present sufficient conditions under which a correlated profile in \(\Gamma \) induces an autonomous device that mimics the profile such that the extended game has an \(\varepsilon \)-equilibrium.

Let \(h\in H\) be a finite history. Denote by \(G_{\varepsilon _1}\left( h\right) \) the normal-form game with the payoffFootnote 4 functions \(g^i_h\left( a\right) := \sum _{s\in S}q(s|h,a)v^i\left( h,a,s\right) +\varepsilon _1\) where \(a=\left( a^i\right) _{i\in I}\) and \(q(s|h,a)\) is the probability that the game reaches \(s\) from current state given the players choose \(a\). One may refer to the game \(G_{\varepsilon _1}\left( h\right) \) as the game every player faces when he considers deviating at history \(h\).

The next proposition presents sufficient conditions under which a correlated profile in \(\Gamma \) induces an extensive-form correlated \(\varepsilon \)-equilibrium. The proposition is a special case of ([17], Proposition 5.2), and hence its proof is omitted.

Proposition 13

Let \(\varepsilon >0\). Let \(\varepsilon _1,\varepsilon _2,\varepsilon _3>0\) such that \(\varepsilon _1+\varepsilon _2+\varepsilon _3<\varepsilon \). Let \(\sigma \) be a correlated profile in \(\Gamma \) such that

-

(i)

\(u^i\left( \sigma |h \right) \ge v^i\left( h\right) -\varepsilon _2, \ \ \ \forall h\in H\) and

-

(ii)

the correlated action \(\sigma \left( h\right) \) is a correlated \(\varepsilon _3\)-equilibrium in \(G_{\varepsilon _1}\left( h\right) \);

Let \(\mathcal C\) be a device that chooses for each player an action according to \(\sigma \) and recommends that each player plays that action. In addition, the device reveals to all players the recommendations it made to all players in the previous stage. Then the profile according to which each player follows his recommendation else the punishment presented in Section 4.2 is applied is an \(\varepsilon \)-equilibrium in \(\Gamma \left( \mathcal C\right) \).

Condition (i) requires that the payoff of each player in each subgame under \(\sigma \) is at least the amount he can guarantee by following his \(\varepsilon _2\)-maxmin strategy. Condition (ii) requires that in each normal-form game induced by \(\sigma \) the players play an ex-post correlated \(\varepsilon _3\)-equilibrium.

Note that by (i) and by the definition of the payoffs in the normal-form game \(G_{\varepsilon _1}\left( h\right) \), the payoff of player \(i\) in \(G_{\varepsilon _1}\left( h\right) \) under \(\sigma \) may exceed the payoff in \(\Gamma \left( h\right) \) under this profile but by no more than \(\varepsilon _1+\varepsilon _2\). Therefore, by (ii), no player can gain more than \(\varepsilon _1+\varepsilon _2+\varepsilon _3< \epsilon \) by deviating after each history. Furthermore, since at each play at most one deviation can occur, it follows that no player can gain more than \(\epsilon \) by deviating in the game \(\Gamma \).

4.4 Constructing a Correlated Profile

We next construct a correlated profile \(\sigma \) that satisfies conditions (i) and (ii) in Proposition 13, so that an extensive-form correlated \(\varepsilon \)-equilibrium exists.

Fix \(\varepsilon _1, \varepsilon _2,\varepsilon _3>0\) such that \(\varepsilon _1+\varepsilon _2+\varepsilon _3<\varepsilon \). Let \(\tilde{\sigma }=\left( \tilde{\sigma }^i_{\varepsilon _2}\right) _{i\in I}\) be a profile in which each player follows his subgame perfect \(\varepsilon _2\)-maxmin strategy. In particular, \(u^i\left( \tilde{\sigma }|h\right) \ge v^i\left( h\right) -\varepsilon _2\), for every player \(i\in I\) and every finite history \(h\in H\).

We define the profile \(\sigma \) as follows. Let \(h\in H\) be a finite history. If \(\tilde{\sigma }\left( h\right) \) is an \(\frac{\varepsilon _3}{3}\)-equilibrium in the normal-form game \(G_{\varepsilon _1}\left( h\right) \), set \( \sigma \left( h\right) :=\tilde{\sigma }\left( h\right) ; \) otherwise, by Theorem 10 there are a correlated \(\varepsilon _3\)-equilibrium \(x_h\) in the game \(G_{\varepsilon _1}\left( h\right) \) and a player \(i_h\) such that the payoff of player \(i_h\) is at least \(v^{i_h}\left( h\right) -\varepsilon _2+\left( \frac{\varepsilon _3}{3}\right) ^3\) and the payoff of every other player \(i\in I\setminus \left\{ i_h\right\} \) is at least \(v^{i}\left( h\right) -\varepsilon _2\) . Set \( \sigma _t\left( h\right) :=x_h. \)

By the construction, \(\sigma \) satisfies condition (ii) in Proposition 13, and it is left to prove that it also satisfies condition (i).

Lemma 14

For every history \(h\in H\) one has

Proof

We are going to prove that Eq. (13) holds for the history \(h_1=s_1\). The proof that it holds for every other history \(h\in H\) is analogous.

Let \(\sigma _{T,n}\) be a correlated profile that is obtained by the same process as \(\sigma \) but if the game reaches either stage \(T\) or the first history after that \(n\) replacements have been executed, then the players continue by following \(\tilde{\sigma }\). Let \(P_{T,n}\) be the probability distribution induced by \(\sigma _{T,n}\). Let \(S_{T,n}\) be the minimal stopping time in which the play reaches a history at which either the \(n\)-th replacements have been executed or the continuation profiles under \(\sigma _{T,n}\) and \(\tilde{\sigma }\) are identical. Denote by \(R_T^i(h)\) the number of replacements that have been executed in \(h\) according to \(\sigma _{T,T}\) in which player \(i\) was the one whose lower bound increases by \(\left( \frac{\varepsilon _3}{3}\right) ^3\).

We now prove that for every \(T\ge n\) one has

We prove Eq. (14)by induction over \(n\). For \(n=0\), Eq. (14) holds since \(\sigma _{T,0}=\tilde{\sigma }\), according to which each player follows his \(\varepsilon _2\)-maxmin strategy. Assume that Eq (14) holds for \(n<T\). We prove that it also holds for \(n+1\).

Inequality (15) holds since \(\sigma _{T,n}\) and \(\tilde{\sigma }\) are identical from stage \(S_{T,n}\) and on. Inequality (16) follows from the construction and since the players play according to \(\tilde{\sigma }\) from stage \(S_{T,n}\) until \(S_{T,n+1}\). Eq. (17) derives from the induction hypothesis.

Since the payoffs are bounded, \(\sum _{i\in I} P_{T,T} (R_T^i\ge n)\) goes to 0 as \(T\) and \(n\) goes to infinity, otherwise, \(\sum _{i\in I} u^i(\sigma _{ T ,n})\) goes to infinity, a contradiction. In conclusion, for every \(\delta >0\), there are \(T'\) and \(n'\) sufficiently large such that \(\sum _{i\in I} P_{T,T} (R_T^i\ge n) < \delta \) for every \(T > T'\) and \(n > n'\). Thus, for every \(\delta >0\)

Hence, \(u^i\left( \sigma \right) \ge v^i\left( h_1\right) -\varepsilon _2\), as desired. \(\square \)

Notes

A Blackwell game is a two-player zero-sum game that is played for infinitely many stages. At each stage the players simultaneously make their moves and are then informed of each other’s moves. Payoff is determined by a Borel measurable function on the set of possible resulting sequences of moves.

One could assume that play goes on without any further influence on the payoffs, so as to make the horizon infinite.

If \(|I|>2\), the players may use a correlated profile to punish a deviator. In this case, the device is also used to implement the punishment phase.

Throughout the paper, we use the notation \(g^i_h\) also for the expected payoff of player \(i\) under a mixed or a correlated profile.

References

Aumann RJ (1974) Subjectivity and correlation in randomized strategies. J Math Econ 1:67–96

Aumann RJ (1987) Correlated equilibrium as an expression of Bayesian rationality. Econometrica 55:1–18

Blackwell D (1969) Infinite \(G_\delta \) games with imperfect information. Zastosowania Matematyki (Appl Math) 10:99–101

Blackwell D, Ferguson TS (1968) The big match. Ann Math Stat 39:159–163

Flesch J, Kuipers J, Mashiah-Yaakovi A, Schoenmakers G, Shmaya E, Solan E, Vrieze K (2014) Non-existence of subgame-perfect \(ep\)-equilibrium in perfect-information games with infinite horizon. Int J Game Theory doi:10.1007/s00182-014-0412-3

Forges F (1986) An approach to communication equilibria. Econometrica 54:1375–1385

Forges F (1988) Communication equilibria in repeated games with incomplete information. Math Oper Res 13:77–117

Hart S, Schmeidler D (1989) Existence of correlated equilibria. Math Oper Res 14:18–25

Maitra A, Sudderth W (1998) Finitely additive stochastic games with borel measurable payoffs. Int J Game Theory 27:257–267

Martin DA (1975) Borel determinacy. Ann Math 2:363–370

Martin DA (1998) The determinacy of Blackwell games. J Symb Logic 63(4):1565–1581

Mertens J-F (1987) Repeated games. In: Proceedings of the International Congress of Mathematicians, 0, (Berkeley, California, 986), pp 0528–0577, American Mathical Society, Providence

Mertens J-F (1994) Correlated and communication equilibria. In: Mertens JF, Sorin S (eds) Game theoretic methods in general equilibrium analysis. Kluwer Academic, Dordrecht

Myerson RB (1986) Multistage games with communication. Econometrica 54:323–358

Nowak AS, Raghavan TES (1992) Existence of stationary correlated equilibria with symmetric information for discounted stochastic games. Math Oper Res 17:519–526

Solan E (1999) Three-player absorbing games. Math Oper Res 24:669–698

Solan E (2001) Characterization of correlated equilibria in stochastic games. Int J Game Theory 30:259–277

Solan E, Vieille N (2001) Quitting games. Math Oper Res 26:265–285

Solan E, Vieille N (2002) Correlated equilibrium in Stochastic games. Games Econ Behav 38:362–399

Vieille N (2000a) Equilibrium in 2-person Stochastic games. I. A reduction. Israel J Math 119:55–91

Vieille N (2000b) Equilibrium in 2-person Stochastic games. II. The case of recursive games. Israel J Math 119:93–126

Acknowledgments

The research leading to these results has received funding from the European Research Council under the European Community’s Seventh Framework Programme (FP7/2007–2013)/ ERC Grant agreement no [249159]. I would like to thank Sergiu Hart and Eilon Solan for insightful discussions and many useful comments.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Mashiah-Yaakovi, A. Correlated Equilibria in Stochastic Games with Borel Measurable Payoffs. Dyn Games Appl 5, 120–135 (2015). https://doi.org/10.1007/s13235-014-0122-2

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13235-014-0122-2