Abstract

This paper introduces a mean field modeling framework for consumption-accumulation optimization. The production dynamics are generalized from stochastic growth theory by addressing the collective impact of a large population of similar agents on efficiency. This gives rise to a stochastic dynamic game with mean field coupling in the dynamics, where we adopt a hyperbolic absolute risk aversion (HARA) utility functional for the agents. A set of decentralized strategies is obtained by using the Nash certainty equivalence approach. To examine the long-term behavior we introduce a notion called the relaxed stationary mean field solution. The simple strategy computed from this solution is used to investigate the out-of-equilibrium behavior of the mean field system. Interesting nonlinear phenomena can emerge, including stable equilibria, limit cycles and chaos, which are related to the agent’s sensitivity to the mean field.


1 Introduction

Recent years have seen rapid growth of research on large-population stochastic dynamic games with mean field coupling, where the players are individually insignificant but collectively have a significant impact on each player. To overcome the dimensionality difficulty in designing strategies, consistent mean field approximations provide a powerful approach. The fundamental idea is that in the infinite population limit each agent optimally responds to a certain mean field, which in turn is replicated by the closed-loop behaviors of the agents. Based on this procedure, the mean field is determined by a fixed point analysis. Subsequently, one may construct a set of decentralized strategies for the large but finite population initially considered, where each agent only needs to know its own state information and the mass effect computed off-line. One may further establish an ε-Nash equilibrium property for the set of strategies, where the individual optimality loss level ε depends on the population size. This methodology, called the Nash certainty equivalence (NCE) method, has been developed in our past work on linear-quadratic-Gaussian (LQG) games [28, 29] and McKean–Vlasov dynamics [31]. A closely related approach was independently developed in [36]. For mean field models in industry dynamics, the notion of oblivious equilibrium is proposed in [56], and a further generalization is presented in [2]. The survey [14] on differential games reports recent progress on mean field game theory. The mean field approximation approach also appeared in anonymous sequential games [32] with a continuum of players. However, the heuristic application of the law of large numbers to a continuum of independent processes leads to measurability difficulties, and the resulting problem of mathematical rigor has long been recognized (see related references in [44]).

Further study of this methodology in LQG models can be found in [8, 12, 28, 38]. A risk-sensitive linear-quadratic model is studied in [54]. Adaptation with unknown model information in the LQG setting is investigated in [33, 46]. Mean field game analysis with nonlinear diffusions can be found in [16, 31, 36]; this approach relies on Hamilton–Jacobi–Bellman (HJB) equations, Fokker–Planck equations, and McKean–Vlasov equations. The reader is also referred to [15] for a systematic account of the basic theory. A very general conceptual framework is developed in [34] by using the notion of nonlinear Markov processes. Numerical solutions for coupled HJB and Fokker–Planck equations have been developed in [1]. Models with discrete states/actions are analyzed in [22, 53]. By using the machinery of HJB–Fokker–Planck equations, synchronization and phase transition of oscillator games are studied in [57]. An interesting application to power systems is presented in [41] for decentralized recharging control of large populations of plug-in electric vehicles. Economic applications of mean field games to exhaustible resource production and human capital optimization are described in [24].

To address mean field interactions in the presence of one or a few agents with strong influence, mixed player models with a major player are studied in [27, 44, 55]. The economic background can be found in [25], which considered static cooperative games. The nonlinear extension of the major player model is developed in [11, 45]. In a different setting, mean field optimal control, which involves a single optimizer, has been studied in [4, 58]. A mean field limit is studied for Markov decision processes in [23]. For mean field social optimization with decentralized information, see [30].

In this paper, we present an application of the NCE methodology to a consumption-accumulation optimization problem involving many competing agents. We adopt an affine production function to model the input (investment)-output relation of each individual agent, and HARA utility [21] is used for the performance measure. This combination of dynamics and utility enables explicit computation of the control while still capturing complex behavior that has been observed in economic systems [10]. For the reader’s convenience, some basic economic background on stochastic growth theory will be presented before the mean field extension of the production model is described. Based on the related game theoretic literature on production optimization [3, 7, 20], the problem studied in this paper will be called mean field capital accumulation games.

After the formulation of the mean field game for consumption-accumulation, we apply the NCE methodology to design decentralized strategies and present the performance analysis. Next, we turn to the so-called out-of-equilibrium analysis [5], and for this purpose we introduce a notion called the relaxed stationary mean field solution. The simple strategy determined by this solution notion will be used to reveal interesting nonlinear phenomena of the mean field system: stable equilibria, limit cycles, and chaos. The success in mean field stochastic control achieved so far has crucially depended on the rational anticipation of the mean field behavior by individual agents. The emergence of the chaotic behavior and the associated unpredictability suggest new perspectives and challenges for future studies of such optimization problems.

The organization of the paper is as follows. The mean field capital accumulation game is formulated in Sect. 2. Section 3 studies an auxiliary optimal control problem. The NCE fixed point equation is constructed and analyzed in Sect. 4. Section 5 presents the error analysis of the mean field approximation. Section 6 introduces the relaxed stationary mean field solution and Sect. 7 examines the out-of-equilibrium property of the mean field dynamics. Finally, Sect. 8 presents concluding remarks.

To end this introduction, we state a notational convention. The integer superscripts i,k,j,1, etc. appearing in a random variable (state \(X_{t}^{i}\), control \(u_{t}^{1}\), noise \(W_{t}^{j}\), etc.) always denote an agent index, not an exponent, unless otherwise indicated. For two numbers a and b, a∨b=max(a,b) and a∧b=min(a,b). For two random variables X and Y, EXY=E(XY). If a sequence of random variables \(\{Y_{n}, n\geq1\}\) converges to Y in probability, we write \(Y_{n}\stackrel{P}{\rightarrow}Y\).

2 The Mean Field Model

The mean field capital accumulation game to be formulated in this section is closely related to the economic literature on optimal stochastic growth.

2.1 The Classical Modeling of Optimal Stochastic Growth

In stochastic optimal growth theory, a hypothetical central planner undertakes the inter-temporal allocation of capital and consumption in an economy where the production process is subject to random disturbances (also called random shocks). This area has its roots in deterministic optimal growth [17, 49, 51] and has led to the development of a substantial literature. A comprehensive survey is available in [47].

Within the classical stochastic one-sector growth model, the economy at stage t involves two basic quantities: the capital stock \(\kappa_{t}\) (used for investment) and consumption \(c_{t}\). The output \(y_{t+1}\) at the next stage is characterized by the relation

$$y_{t+1}=f(\kappa_{t},r_{t}),\quad t\geq0,$$

where f(⋅,⋅) is called the production function, \(r_{t}\) is the random disturbance, and \(y_{0}\) is the initial output (see e.g. [13, 47]). If the output remaining after investment is all consumed, one has the constraint \(\kappa_{t}+c_{t}=y_{t}\). Early research usually assumed the so-called Inada condition for the production function f(⋅,r) when r is fixed: f(⋅,r) is a smooth, strictly increasing and strictly concave function of the capital stock κ, satisfying f(0,r)=0, f′(0,r)=∞ and f′(∞,r)=0 [13]. The shape of the function captures diminishing returns as the capital stock increases; in particular, f′(∞,r)=0 implies that the output is only a negligible fraction of the capital stock when the latter is very abundant. The condition f′(0,r)=∞ indicates extremely high efficiency when the capital stock is very low. Later, economists devoted considerable attention to general production functions without strict concavity [6, 42, 48], one practical motivation being production involving renewable resources.

The utility from consumption is denoted by \(\nu(c_{t})\), where ν is usually taken to be an increasing concave function defined on [0,∞). The objective of the planning problem is to maximize the expected discounted sum of utility

where \(y_{0}\) is given. Under quite standard conditions on the sequence of disturbances, this problem may be solved by dynamic programming. Although the model is relatively simple, it allows the examination of important issues such as the asymptotic behavior of the economy [13]. There are also extensive works on multi-sectoral extensions (see references in [47]).

An obvious limitation of the one-agent-based optimization is that it does not directly consider the fact that in reality the same sector of the economy may involve many agents which optimize for their own utility and interact with each other. Hence, it is well motivated to adopt a game theoretic framework. For such related work on capital accumulation, see [3, 7, 20]. These works assume a joint ownership of the productive asset by different agents, which are associated with a common production function. Such an assumption is appropriate when all the agents have strategic consumption of a shared resource.

2.2 Mean Field Production Modeling

The model consists of N agents involved in a certain type of production activity of the economy. At time \(t\in{\mathbb{Z}}_{+}:=\{0,1,2,\ldots\}\), the output or wealth of agent i is denoted by \(X^{i}_{t}\). The agent invests the amount \(u^{i}_{t}\) for production. Borrowing is not allowed, so the investment satisfies the constraint \(u^{i}_{t}\in[0, X^{i}_{t}]\). The amount used for consumption is

$$X^{i}_{t}-u^{i}_{t}.$$

Denote \(u^{(N)}_{t}=(1/N) \sum_{j=1}^{N} u^{j}_{t}\), which is the aggregate (or average) investment level. After a period of investment the output of agent i, measured in units of capital, is modeled by the stochastic dynamics

$$X^{i}_{t+1}=G\big(u^{(N)}_{t},W^{i}_{t}\big)u^{i}_{t},\quad t\geq0, \tag{1}$$

where \(u^{(N)}_{t}\) indicates the influence of the mean field investment behavior and \(W^{i}_{t}\) is a random variable modeling uncertainty. To obtain a meaningful large population analysis, it is necessary to include the scaling factor 1/N within \(u_{t}^{(N)}\). This kind of scaling by the population size is typical for the analysis of large systems (see e.g. [35]). We may think of \(u_{t}^{(N)}\) as a quantity measured according to a macroscopic unit. For simplicity we consider a population of uniform agents which share the same form of growth dynamics. The following assumptions are made throughout the paper:

- (A1) (i) Each sequence \(\{W^{i}_{t}, t\in{\mathbb{Z}}_{+} \}\) consists of i.i.d. random variables with support \(D_{W}\) and distribution function \(F_{W}\). The N noise sequences \(\{W^{i}_{t}, t\in{\mathbb{Z}}_{+} \}\), i=1,…,N, are i.i.d. (ii) The initial states \(\{X^{i}_{0}, 1\leq i\leq N\}\) are i.i.d. positive random variables with distribution \(F_{X_{0}}\) and mean \(m_{0}\), which are also independent of the N noise sequences.

The distribution functions \(F_{W}\) and \(F_{X_{0}}\) do not depend on N. For convenience of notation, we introduce an auxiliary random variable W with distribution \(F_{W}\).

- (A2) (i) The function \(G:[0,\infty)\times D_{W}\rightarrow[0,\infty)\) is continuous; (ii) for each fixed \(w\in D_{W}\), G(z,w) is a decreasing function of z on [0,∞).

- (A3) EG(0,W)<∞ and EG(p,W)>0 for each p∈[0,∞).

It is implied by (A2) that when the aggregate investment level increases, the production becomes less efficient. We give some motivation for using the multiplicative factor G. For illustration, consider the production of the same type of commodity in an agricultural economy. Suppose the model consists of many relatively small wheat farms. When a farm increases its investment, its product quantity increases. However, this alone is not enough to determine its profit. The aggregate investment level of all the farms affects the product price via the aggregate supply. It may also possibly affect labor wages. Therefore, the accumulated wealth of a farm is directly related to both its own investment and the aggregate investment. The simple model (1) explicitly reflects the mean field impact.

The affine production function \(Gu^{i}\) in (1) is closely related to [42, 52]. If \(u^{(N)}_{t}\) is replaced by a fixed value \(\overline{u}\), \(G(\overline{u}, W_{t}^{i})u^{i}_{t}\) becomes a particular form of the production functions in [42, 52]. A production function with multiplicative noise is also adopted in [26] to solve a resource allocation problem as a Markov decision process. Our production function may be viewed as a mean field variant of the models in [26, 42, 52], obtained by using the linear term \(u_{t}^{i}\) and incorporating the mean field effect \(u_{t}^{(N)}\). Apart from the justification in terms of price, our model can address negative production externalities as well, which can be used to model a congestion effect when agents compete for the use of public resources [9, 40]. Our model is similar to the deterministic affine production function in [9, p. 650], which includes negative production externalities.

For model (1), there is no notable diminishing return effect resulting from the investment of agent i for large N. It is intended as a simple approximation to certain more general systems and will ensure tractability. Our main interest is to address the negative mean field impact which is captured by the production efficiency loss due to increasing aggregate investment.

The agent’s objective is the optimization of its utility of consumption. We adopt a hyperbolic absolute risk aversion (HARA) utility function of the form

where we take γ∈(0,1). This is a concave function of the consumption. Denote \(X=(X^{1},\ldots,X^{N})\) and \(u=(u^{1},\ldots,u^{N})\). Let \(u^{-i}\) denote the controls of all other N−1 agents excluding agent i. The expected discounted sum utility (also called the utility functional) is

where ρ∈(0,1] is the discount factor.

For t≥0, let \(\mathcal{F}_{t}=\sigma(X_{s}, u_{s-1}, s\leq t)\) be the σ-algebra generated by the random variables \(\{X_{s},u_{s-1},s\leq t\}\). Similarly, define the σ-algebra \(\mathcal {F}^{j}_{t}=\sigma(X_{s}^{j}, u_{s-1}^{j}, s\leq t)\).

Definition 1

We call \(\{u_{t}=(u^{1}_{t}, \ldots, u^{N}_{t}), 0\leq t\leq T\}\) a set of centralized strategies if (i) for each j, \(u_{t}^{j}\) is adapted to \(\mathcal{F}_{t}\), and (ii) for each pair (j,t), \(u_{t}^{j}\in[0, X_{t}^{j}]\), where \(X_{t}^{j}\) is recursively generated. We call it a set of decentralized strategies if for each j, \(u^{j}_{t}\) is adapted to \(\mathcal{F}_{t}^{j}\) and (ii) still holds.

If a rule is given to determine the dependence of \((u_{t}^{j}, j=1, \ldots, N)\) on \(\{X_{s},u_{s-1},s\leq t\}\), then \(\mathcal{F}_{t}\) and \((\mathcal{F}_{t}^{1}, \ldots, \mathcal{F}_{t}^{N}) \) can be recursively determined. Letting \(\{u_{t},0\leq t\leq T\}\) be a set of decentralized strategies, we call it a set of decentralized state feedback strategies if each \(u_{t}^{j}\) depends only on \((t, X_{t}^{j})\).

To end this section, we introduce an example for the function G.

Example 2

Suppose \(G(z,w)= \frac{\alpha w}{1+\delta z^{\eta}}\), where α>0, δ>0, η>0 are parameters. Let \(\{W^{i}_{t}, t\geq0\}\), 1≤i≤N, be positive i.i.d. noise processes with mean \(EW^{i}_{0}=1\). Then \(D_{W}\subset[0,\infty)\) and (A2)–(A3) hold.
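A minimal numerical sketch of Example 2, offered only as an illustration: the parameter values α=1.2, δ=0.5, η=1 and the two-point noise law (W=0.5 or 1.5 with probability 1/2 each, so EW=1) are hypothetical choices, as are the helper names. It spot-checks (A2)(ii) and (A3) on a grid; analytically, \(EG(z,W)=\alpha/(1+\delta z^{\eta})\) in this case.

```python
import numpy as np

# Hypothetical parameters for Example 2 (alpha, delta, eta > 0).
ALPHA, DELTA, ETA = 1.2, 0.5, 1.0
# Assumed two-point noise law: W = 0.5 or 1.5 with probability 1/2, so EW = 1.
W_VALUES = np.array([0.5, 1.5])
W_PROBS = np.array([0.5, 0.5])


def G(z, w):
    """Efficiency factor of Example 2: G(z, w) = alpha * w / (1 + delta * z**eta)."""
    return ALPHA * w / (1.0 + DELTA * np.power(z, ETA))


def expected_G(z):
    """E G(z, W), computed exactly as a finite sum over the noise support."""
    return float(np.sum(W_PROBS * G(z, W_VALUES)))


z_grid = np.linspace(0.0, 10.0, 201)
# (A2)(ii): G(., w) is decreasing in the aggregate investment z for each w.
decreasing = all(bool(np.all(np.diff(G(z_grid, w)) <= 0.0)) for w in W_VALUES)
# (A3): E G(0, W) is finite and E G(z, W) stays positive on the grid.
eg = np.array([expected_G(z) for z in z_grid])
print(decreasing, eg[0], bool(np.all(eg > 0.0)))
```

A grid check is of course not a proof; for this G both properties follow directly from the formula.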

3 The Mean Field Limit of the Decision Problem

3.1 The Mean Field Approximation

Our starting point is to approximate \(u^{(N)}_{t}\), 0≤t≤T−1, by a deterministic sequence \((p_{t})_{0}^{T-1}\). There is no need to approximate \(u^{(N)}_{T}\), since it does not enter the agent’s decision at time T, when the optimal strategy always consumes all the wealth. Now agent i considers the optimal control problem with dynamics

$$X^{i}_{t+1}=G(p_{t},W^{i}_{t})u^{i}_{t},\quad 0\leq t\leq T-1, \tag{2}$$

where \(u^{i}_{t}\in[0, X^{i}_{t}]\) and is adapted to \(\mathcal{F}^{i}_{t}\), and the utility functional is now written as

where the argument 0 indicates the initial time 0. Once \((p_{t})_{0}^{T-1}\) is fixed, the decision problem of agent i is decoupled from that of the other N−1 agents, and for this reason, \(\bar{J}_{i}\) depends only on \(u^{i}\) and \((p_{t})_{0}^{T-1}\). Further, denote the utility functional with initial time t by

which is affected only by \((p_{l})_{t}^{T-1}\). The value function is defined as

3.2 The Dynamic Programming Equation

We have the dynamic programming equation

where t=0,1,…,T−1. The terminal condition is

We look for a solution of the value function in the form

$$V_{i}(x,t)=\frac{1}{\gamma}D_{t}^{\gamma-1}x^{\gamma}, \tag{4}$$

where \(D_{T}=1\). Now the main task is to determine \(D_{t}\). This will be accomplished by finding its recursion.

Denote the functions

$$\Phi(z)=\rho EG^{\gamma}(z,W),\qquad \phi(z)=\Phi(z)^{-1/(1-\gamma)},\qquad z\in[0,\infty).$$

By (A3), Φ(z)∈(0,∞) for each z. The main result for the dynamic programming equation is the following.
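The functions Φ and ϕ are easy to tabulate. The sketch below assumes Example 2's G with hypothetical parameters (α=1.2, δ=0.5, η=1, γ=0.5, ρ=0.9) and a two-point noise law W∈{0.5, 1.5} with equal probabilities. It takes \(\Phi(z)=\rho EG^{\gamma}(z,W)\), as in the proof of Theorem 6, and assumes \(\phi(z)=\Phi(z)^{-1/(1-\gamma)}\), the exponent produced by the first-order condition behind (5)–(6). Under these assumptions Φ is positive and decreasing while ϕ is increasing, so a larger mean field investment lowers the fraction \(1/(1+\phi(p_t)D_{t+1})\) of wealth that an agent invests.

```python
import numpy as np

# Hypothetical parameters: Example 2 form of G, HARA exponent gamma, discount rho.
ALPHA, DELTA, ETA, GAMMA, RHO = 1.2, 0.5, 1.0, 0.5, 0.9
W_VALUES = np.array([0.5, 1.5])   # assumed noise support, EW = 1
W_PROBS = np.array([0.5, 0.5])


def G(z, w):
    return ALPHA * w / (1.0 + DELTA * z**ETA)


def Phi(z):
    """Phi(z) = rho * E[G(z, W)**gamma], an exact finite sum here."""
    return RHO * float(np.sum(W_PROBS * G(z, W_VALUES) ** GAMMA))


def phi(z):
    """phi(z) = Phi(z)**(-1/(1-gamma)); it enters the feedback gain 1/(1 + phi*D)."""
    return Phi(z) ** (-1.0 / (1.0 - GAMMA))


zs = np.linspace(0.0, 5.0, 51)
Phis = np.array([Phi(z) for z in zs])
phis = np.array([phi(z) for z in zs])
print(Phis[0], phis[0])
```

For this particular G the expectation factors out, giving \(\phi(z)=\phi(0)(1+\delta z^{\eta})^{\gamma/(1-\gamma)}\), which is a convenient check on any numerical implementation.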

Theorem 3

- (i) The value function \(V_{i}(x,t)\) takes the form (4), where

$$D_{t}= \frac{ \phi(p_{t}) D_{t+1}}{ 1+ \phi(p_{t}) D_{t+1} },\quad t\leq T-1,\qquad D_T=1. \tag{5}$$

- (ii) The optimal control has the feedback form

$$u^i_t=\frac{ X^i_t}{ 1+ \phi(p_{t}) D_{t+1}}, \quad t\leq T-1, \qquad u_T^i=0. \tag{6}$$

Proof

(i) First we have \(D_{T}=1\) and \(u^{i}_{T}=0\). Next suppose \(V_{i}(x,t+1)= \frac{1}{\gamma}D_{t+1}^{{\gamma-1}} x^{\gamma}\) for t+1≤T. Let us continue to determine \(D_{t}\), t≤T−1. The dynamic programming equation gives

The maximum in the last equality is attained at the unique point

$$u^{i}_{t}=\frac{x}{1+\phi(p_{t})D_{t+1}},$$

and the maximum is given as \(V_{i}(x,t)=\frac{1}{\gamma}D_{t}^{{\gamma-1}} x^{\gamma}\), where \(D_{t}\) is given by (5).

(ii) By the expression of the maximizer \(u_{t}^{i}\), it is clear that (6) is the optimal control law. □
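Theorem 3 can be spot-checked numerically. Assuming the HARA utility is \(z^{\gamma}/\gamma\) (the form consistent with (4) at \(D_T=1\)), one step of the dynamic programming equation with the continuation value (4) at t+1 reduces to maximizing \(\frac{1}{\gamma}[(x-u)^{\gamma}+\Phi(p_t)D_{t+1}^{\gamma-1}u^{\gamma}]\) over u∈[0,x]. The values of γ, Φ(p_t), D_{t+1} and x below are hypothetical, and \(\phi=\Phi^{-1/(1-\gamma)}\) is assumed (the exponent implied by the first-order condition). A brute-force grid search should reproduce the feedback law (6) and the value (4) with \(D_t\) from (5).

```python
import numpy as np

# Hypothetical inputs for one dynamic programming step at time t.
GAMMA = 0.5      # HARA exponent, gamma in (0, 1)
PHI_CAP = 0.95   # assumed value of Phi(p_t) = rho * E G(p_t, W)**gamma
D_NEXT = 0.6     # assumed D_{t+1} in (0, 1]
x = 2.0          # current wealth X_t^i = x

phi_t = PHI_CAP ** (-1.0 / (1.0 - GAMMA))   # phi(p_t) = Phi(p_t)**(-1/(1-gamma))


def step_objective(u):
    """Utility of consuming x - u plus rho * E V_i(G u, t+1), with V_i of the form (4)."""
    return ((x - u) ** GAMMA + PHI_CAP * D_NEXT ** (GAMMA - 1.0) * u ** GAMMA) / GAMMA


u_grid = np.linspace(0.0, x, 200_001)
values = step_objective(u_grid)
u_num, v_num = u_grid[np.argmax(values)], values.max()

u_star = x / (1.0 + phi_t * D_NEXT)                 # feedback law (6)
D_t = phi_t * D_NEXT / (1.0 + phi_t * D_NEXT)       # recursion (5)
v_star = D_t ** (GAMMA - 1.0) * x ** GAMMA / GAMMA  # value of the form (4)
print(u_num, u_star, v_num, v_star)
```

Because the one-step objective is strictly concave in u, the grid maximizer lands within one grid spacing of the analytic maximizer.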

Lemma 4

Denote \(\phi_{t}=\phi(p_{t})\). We have the relation

Proof

The relation follows by iterating (5) backward from \(D_{T}=1\). □

Let \(\phi_{i}\cdots\phi_{j}\), i≥j, denote the successive product \(\prod_{k=j}^{i} \phi_{k}\).
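Unrolling (5) gives a closed form for \(D_t\) that is consistent with Lemma 4 and with the coefficients appearing in Theorem 5. The short derivation below uses \(S_t\) as a shorthand of our own for the recurring sums:

$$S_{t}=1+\phi_{T-1}+\phi_{T-1}\phi_{T-2}+\cdots+\phi_{T-1}\cdots\phi_{t},\quad t\leq T-1,\qquad S_{T}=1,$$

so that \(S_{t}=S_{t+1}+\phi_{T-1}\cdots\phi_{t}\). By (5), \(1/D_{t}=1+1/(\phi_{t}D_{t+1})\). If \(D_{t+1}=\phi_{T-1}\cdots\phi_{t+1}/S_{t+1}\) (true for t+1=T, with the empty product equal to 1), then

$$\frac{1}{D_{t}}=1+\frac{S_{t+1}}{\phi_{T-1}\cdots\phi_{t}}=\frac{S_{t}}{\phi_{T-1}\cdots\phi_{t}},\qquad\text{i.e.}\qquad D_{t}=\frac{\phi_{T-1}\cdots\phi_{t}}{S_{t}},$$

and the feedback gain in (6) becomes

$$\frac{1}{1+\phi_{t}D_{t+1}}=\frac{S_{t+1}}{S_{t+1}+\phi_{T-1}\cdots\phi_{t}}=\frac{S_{t+1}}{S_{t}}.$$

Multiplying these gains along the path \(X^{i}_{t+1}=G(p_{t},W^{i}_{t})u^{i}_{t}\) produces the ratios \(S_{t}/S_{0}\) and \(S_{t+1}/S_{0}\) that appear in Theorem 5.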

Theorem 5

- (i) The closed-loop state of agent i satisfies

$$X^i_t=\left \{ \begin{array}{l@{\quad}l} \frac{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_t }{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_0} G(p_{t-1},W^i_{t-1} )\cdots G(p_{0},W^i_0 )X^i_0, & 1\leq t\leq T-1,\\ \frac{1}{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_0} G(p_{T-1},W^i_{T-1} )\cdots G(p_{0},W^i_0 )X^i_0, & t=T. \end{array} \right . $$

- (ii) The optimal control has the representation

$$u^i_t= \left \{ \begin{array}{l@{\quad}l} \frac{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_{t+1} }{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_0} G(p_{t-1},W^i_{t-1} ) \cdots G(p_{0},W^i_0 )X^i_0, & t\leq T-2, \\ \frac{1 }{ 1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_0 } G(p_{T-2},W^i_{T-2} ) \cdots G(p_{0},W^i_0 )X^i_0, & t=T-1, \\ 0 & t=T, \end{array} \right . \tag{8}$$

where \(G(p_{t-1},W^{i}_{t-1} ) \cdots G(p_{0},W^{i}_{0} ):=1\) if t=0.

Proof

For 0<t≤T, by using the optimal control law,

If 0<t≤T−1, Lemma 4 gives

For the case t=T, we use \(D_{T}=1\) and Lemma 4 to obtain

Then part (i) follows readily. Part (ii) follows from part (i) and (6). □
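The closed form in Theorem 5 can be checked against the recursion directly. The sketch below draws arbitrary positive values for \(\phi_t\) and for one realized path of \(G(p_t,W^i_t)\) (hypothetical inputs; the identity does not depend on them), runs the feedback law (6) forward with \(D_t\) from (5), and compares the result with the formulas of Theorem 5, writing \(S_t=1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_t\) with \(S_T=1\) (our shorthand).

```python
import numpy as np


def backward_D(phi):
    """D_T = 1 and D_t = phi_t D_{t+1} / (1 + phi_t D_{t+1}), as in (5)."""
    T = len(phi)
    D = np.ones(T + 1)
    for t in range(T - 1, -1, -1):
        D[t] = phi[t] * D[t + 1] / (1.0 + phi[t] * D[t + 1])
    return D


def S_sums(phi):
    """S_t = 1 + phi_{T-1} + ... + phi_{T-1}...phi_t for t < T, and S_T = 1."""
    T = len(phi)
    S = np.ones(T + 1)
    prod = 1.0
    for t in range(T - 1, -1, -1):
        prod *= phi[t]
        S[t] = S[t + 1] + prod
    return S


rng = np.random.default_rng(0)
T, x0 = 5, 1.7
phi = rng.uniform(0.5, 2.0, size=T)   # arbitrary phi_t = phi(p_t) > 0
g = rng.uniform(0.2, 2.0, size=T)     # one realized path g_t = G(p_t, W_t^i)

# Forward recursion with the feedback law (6); u_T = 0.
D = backward_D(phi)
X, u = np.empty(T + 1), np.zeros(T + 1)
X[0] = x0
for t in range(T):
    u[t] = X[t] / (1.0 + phi[t] * D[t + 1])
    X[t + 1] = g[t] * u[t]

# Closed form of Theorem 5, with G_prod[t] = g_{t-1}...g_0 (and G_prod[0] = 1).
S = S_sums(phi)
G_prod = np.concatenate(([1.0], np.cumprod(g)))
X_closed = S / S[0] * G_prod * x0
u_closed = np.append(S[1:] / S[0] * G_prod[:T] * x0, 0.0)
print(np.max(np.abs(X - X_closed)), np.max(np.abs(u - u_closed)))
```

Both printed discrepancies should be at the level of floating point rounding.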

3.3 An Example

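We consider a simple model with T=2. Let \((p_{0},p_{1})\) be given and write \(\phi_{t}=\phi(p_{t})\). Then, by Theorem 5, we have

$$X^{i}_{1}=\frac{1+\phi_{1}}{1+\phi_{1}+\phi_{1}\phi_{0}}G(p_{0},W^{i}_{0})X^{i}_{0},\qquad X^{i}_{2}=\frac{1}{1+\phi_{1}+\phi_{1}\phi_{0}}G(p_{1},W^{i}_{1})G(p_{0},W^{i}_{0})X^{i}_{0}.$$

The controls are given by

$$u^{i}_{0}=\frac{1+\phi_{1}}{1+\phi_{1}+\phi_{1}\phi_{0}}X^{i}_{0},\qquad u^{i}_{1}=\frac{1}{1+\phi_{1}+\phi_{1}\phi_{0}}G(p_{0},W^{i}_{0})X^{i}_{0},\qquad u^{i}_{2}=0.$$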

4 The Fixed Point Condition for Consistency

Recall that in Sect. 3, the strategy of agent i is obtained by assuming a known sequence \((p_{t})_{0}^{T-1}\) which is intended to approximate the mean field investment process \((u_{t}^{(N)})_{0\leq t\leq T-1}\). Now we apply the NCE methodology to specify \((p_{t})_{0}^{T-1}\) by imposing a consistency condition. Let \(u^{i}\) be given by Theorem 5 and implemented by N agents described by (2). It is desired to replicate the mean field \(p_{t}\) by \(\lim_{N\rightarrow\infty } u_{t}^{(N)}\). Also, note that the deterministic coefficients on the right-hand side of (8) depend only on \((p_{0},\ldots,p_{T-1})\). Denote

$$\Psi(z)=EG(z,W),\quad z\in[0,\infty).$$

The idea of the construction is to take the expectation of \(u_{t}^{i}\) in (8) for t=0,…,T−1 to obtain T functions of \((p_{0},\ldots,p_{T-1})\). By (A1) and the strong law of large numbers, \(\lim_{N\rightarrow\infty} u_{t}^{(N)}\) is equal to this expectation with probability one.
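A minimal Monte Carlo sketch of this construction. It assumes Example 2's G with hypothetical parameters, a two-point noise law with EW=1, \(X^i_0\sim\) Uniform(0.5, 1.5) (so \(m_0=1\)), \(\Psi(z)=EG(z,W)\) and \(\phi=\Phi^{-1/(1-\gamma)}\); none of these numerical choices come from the text. Because \(W^i_t\) is independent of \(X^i_t\) under (A1), the expectation of (8) can be accumulated gain by gain as \(Eu^i_t=K_t\prod_{s<t}K_s\Psi(p_s)\,m_0\) with \(K_t=1/(1+\phi(p_t)D_{t+1})\). For a fixed guess p, the empirical average investment of N agents under (2) should approach it as N grows.

```python
import numpy as np

# Hypothetical model: Example 2 form of G, two-point noise with EW = 1, m_0 = 1.
ALPHA, DELTA, ETA, GAMMA, RHO, M0 = 1.2, 0.5, 1.0, 0.5, 0.9, 1.0
W_VALUES, W_PROBS = np.array([0.5, 1.5]), np.array([0.5, 0.5])


def G(z, w):
    return ALPHA * w / (1.0 + DELTA * z**ETA)


def phi(z):  # Phi(z)**(-1/(1-gamma)) with Phi(z) = rho * E G(z, W)**gamma
    return (RHO * float(np.sum(W_PROBS * G(z, W_VALUES) ** GAMMA))) ** (-1.0 / (1.0 - GAMMA))


def Psi(z):  # E G(z, W)
    return float(np.sum(W_PROBS * G(z, W_VALUES)))


def gains(p_seq):
    """K_t = 1 / (1 + phi(p_t) D_{t+1}) with D_t from the backward recursion (5)."""
    T = len(p_seq)
    D, K = np.ones(T + 1), np.empty(T)
    for t in range(T - 1, -1, -1):
        f = phi(p_seq[t])
        K[t] = 1.0 / (1.0 + f * D[t + 1])
        D[t] = f * D[t + 1] * K[t]
    return K


def expected_investment(p_seq):
    """E u_t^i = K_t * prod_{s<t} K_s Psi(p_s) * m_0, the expectation of (8)."""
    K, out, mean_x = gains(p_seq), np.empty(len(p_seq)), M0
    for t in range(len(p_seq)):
        out[t] = K[t] * mean_x
        mean_x *= K[t] * Psi(p_seq[t])
    return out


def simulated_investment(p_seq, N, seed=0):
    """Average investment (1/N) sum_i u_t^i of N agents using (6) under dynamics (2)."""
    rng = np.random.default_rng(seed)
    K, X, avg = gains(p_seq), rng.uniform(0.5, 1.5, size=N), []
    for t in range(len(p_seq)):
        u = K[t] * X
        avg.append(u.mean())
        X = G(p_seq[t], rng.choice(W_VALUES, size=N, p=W_PROBS)) * u
    return np.array(avg)


p_guess = np.array([0.6, 0.3, 0.2])   # arbitrary, not necessarily consistent, guess
print(expected_investment(p_guess), simulated_investment(p_guess, N=200_000))
```

Note that p_guess is not required to be consistent here; the comparison only illustrates the law of large numbers step. Consistency is what the fixed point equation below imposes.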

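For 0≤k≤T−2, define

$$\varLambda_{k}(p_{0},\ldots,p_{T-1})=\frac{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_{k+1}}{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_{0}}\Psi(p_{k-1})\cdots\Psi(p_{0})m_{0},$$

where \(\phi_{t}=\phi(p_{t})\) and \(\Psi(p_{k-1})\cdots\Psi(p_{0}):=1\) if k=0.

For k=T−1, define

$$\varLambda_{T-1}(p_{0},\ldots,p_{T-1})=\frac{1}{1+\phi_{T-1}+\cdots+\phi_{T-1}\cdots\phi_{0}}\Psi(p_{T-2})\cdots\Psi(p_{0})m_{0}.$$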

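Now we define the vector function

$$\varLambda(p_{0},\ldots,p_{T-1})=\big(\varLambda_{0}(p_{0},\ldots,p_{T-1}),\ldots,\varLambda_{T-1}(p_{0},\ldots,p_{T-1})\big)$$

from \(\mathbb{R}_{+}^{T}\) to \(\mathbb{R}_{+}^{T}\), and we further introduce the fixed point equation

$$(p_{0},\ldots,p_{T-1})=\varLambda(p_{0},\ldots,p_{T-1}),$$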

where \((p_{0}, \ldots, p_{T-1})\in\mathbb{R}_{+}^{T}\). This equation specifies the consistency requirement for the NCE-based solution procedure in that the infinite population limit should replicate the mean field which had been initially assumed.
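A minimal sketch of solving the fixed point equation numerically, under hypothetical assumptions of the same kind (Example 2's G with illustrative parameters, two-point noise with EW=1, \(m_0=1\), \(\Psi(z)=EG(z,W)\), \(\phi=\Phi^{-1/(1-\gamma)}\)). Here Λ is evaluated as the expectation of (8), accumulated gain by gain. Theorem 6 below guarantees that a fixed point exists but says nothing about how to compute one; the damped iteration is our own choice, not the paper's, and it may fail to converge when G is very sensitive to the mean field, in which case it raises an error.

```python
import numpy as np

# Hypothetical model: Example 2 form of G, two-point noise with EW = 1, m_0 = 1.
ALPHA, DELTA, ETA, GAMMA, RHO, M0 = 1.2, 0.5, 1.0, 0.5, 0.9, 1.0
W_VALUES, W_PROBS = np.array([0.5, 1.5]), np.array([0.5, 0.5])


def G(z, w):
    return ALPHA * w / (1.0 + DELTA * z**ETA)


def phi(z):  # Phi(z)**(-1/(1-gamma)), Phi(z) = rho * E G(z, W)**gamma
    return (RHO * float(np.sum(W_PROBS * G(z, W_VALUES) ** GAMMA))) ** (-1.0 / (1.0 - GAMMA))


def Psi(z):  # E G(z, W)
    return float(np.sum(W_PROBS * G(z, W_VALUES)))


def Lambda(p):
    """Lambda_k(p) = E u_k^i from (8), via gains K_t = 1/(1 + phi(p_t) D_{t+1})."""
    T = len(p)
    D, K = np.ones(T + 1), np.empty(T)
    for t in range(T - 1, -1, -1):
        K[t] = 1.0 / (1.0 + phi(p[t]) * D[t + 1])
        D[t] = phi(p[t]) * D[t + 1] * K[t]
    out, mean_x = np.empty(T), M0
    for k in range(T):
        out[k] = K[k] * mean_x
        mean_x *= K[k] * Psi(p[k])
    return out


def solve_fixed_point(T, theta=0.5, tol=1e-12, max_iter=10_000):
    """Damped iteration p <- (1 - theta) p + theta Lambda(p), started at p = 0."""
    p = np.zeros(T)
    for _ in range(max_iter):
        p_new = (1.0 - theta) * p + theta * Lambda(p)
        if np.max(np.abs(p_new - p)) < tol:
            return p_new
        p = p_new
    raise RuntimeError("no convergence; try a smaller damping factor theta")


p_star = solve_fixed_point(T=3)
residual = np.max(np.abs(Lambda(p_star) - p_star))
kappa0 = Psi(0.0)   # bounds from Theorem 6: 0 <= p_k <= kappa0**k * m_0
print(p_star, residual)
```

Damping trades speed for robustness: for mildly sensitive G the undamped map is already contractive, while stronger sensitivity may require a smaller theta or a root finder applied to p − Λ(p).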

Theorem 6

Denote \(\kappa_{0}=\Psi(0)\) and

$$M=[0,m_{0}]\times[0,\kappa_{0}m_{0}]\times\cdots\times[0,\kappa_{0}^{T-1}m_{0}].$$

Then Λ has the following properties:

- (i) Λ is a continuous mapping from M to M.

- (ii) Λ has a fixed point.

Proof

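(i) We first check continuity. Consider \(\Phi(z)=\rho EG^{\gamma}(z,W)\), where z∈[0,∞). Note that Φ(z)>0. Fix z. By the monotonicity of G, we have \(0\leq G^{\gamma}(z',W)\leq G^{\gamma}(0,W)\) for all z′≥0, and \(EG^{\gamma}(0,W)\leq(EG(0,W))^{\gamma}<\infty\) by Jensen’s inequality and (A3). By the continuity of G and the dominated convergence theorem, \(\lim_{z'\rightarrow z}\Phi(z')=\Phi(z)\). So Φ, and subsequently ϕ, are continuous on [0,∞). The same argument with the integrable bound G(0,W) shows that Ψ is continuous. Therefore, Λ is continuous.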

For t=0,…,T−1, observe that \(u_{t}^{i}\) satisfies

This implies that \(\varLambda_{k}(p_{0}, \ldots, p_{T-1})\in[0, \kappa_{0}^{k}m_{0}]\), and consequently, Λ is a mapping from M to M.

(ii) Since M is a nonempty, compact and convex subset of \(\mathbb{R}^{T}\), Brouwer’s fixed point theorem implies that Λ has a fixed point in M. □

5 Error Estimate of the Mean Field Approximation

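Let \((p_{t})_{0}^{T-1}\in M\) be a fixed point of Λ, and let \(D_{t}\) be given by (5) for this sequence. We call

$$u^{i}_{t}=\frac{X^{i}_{t}}{1+\phi(p_{t})D_{t+1}},\quad 0\leq t\leq T-1,\qquad u^{i}_{T}=0, \tag{12}$$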

the NCE-based control law. We follow the procedure in [31, Sect. 8] to develop the error estimate. This is done by first defining two reference systems of N agents. Denote \(K_{t}= \frac{1}{1+\phi(p_{t})D_{t+1}}\) for 0≤t≤T−1. Define \(X_{t}^{(N)}=(1/N)\sum_{i=1}^{N} X_{t}^{i}\), and \(\hat{X}_{t}^{(N)} \), \(\check{X}_{t}^{(N)}\), \(\mathring{X}_{t}^{(N)}\), etc. are defined similarly. The first system is based on (2) and the control law (12) for N agents

where \(\hat{X}_{0}^{i}=X_{0}^{i}\). The second system is based on (1) and the control law (12):

where we have used \(u_{t}^{(N)}=K_{t} \check{X}^{(N)}_{t}\). Finally, for each i we construct the envelope process on [0,T−1] by the recursion

By \(K_{t}<1\) and the monotonicity of G, \(\mathring{X}_{t}^{i}\) is an upper bound for both \(\hat{X}_{t}^{i}\) and \(\check{X}_{t}^{i}\), 0≤t≤T.

Lemma 7

We have

Proof

For each fixed t≤T−1, since the sequence \(\{\hat{X}^{(N)}_{t}, N\geq1\}\) is generated by i.i.d. \(L^{1}\) random variables, it is uniformly integrable (see [18, Sect. 4.2]). In addition, by the strong law of large numbers and the fixed point property of \((p_{t})_{0}^{T-1}\), \(\lim_{N\rightarrow\infty}K_{t} \hat{X}_{t}^{(N)}=p_{t}\) with probability one. So \(\lim_{N\rightarrow\infty}E|K_{t}\hat{X}^{(N)}_{t}-p_{t}|=0\). □

Lemma 8

We have

Proof

Denote \(\epsilon_{t,N}=\sup_{1\leq i\leq N}E| \check{X}_{t}^{i}-\hat{X}_{t}^{i}|\), 0≤t≤T. We recursively estimate \(\epsilon_{t,N}\) for t=0,1,…,T. Obviously \(\epsilon_{0,N}=0\). If t=1, we consider agent 1 and

By continuity of G and \(K_{0}X_{0}^{(N)}\stackrel{P}{\rightarrow} p_{0}\), the right-hand side converges to zero in probability when N→∞, and is bounded by \(\mathring{X}_{1}^{1}\). By dominated convergence, \(\lim_{N\rightarrow\infty}E|\check{X}_{1}^{1} -\hat{X}_{1}^{1} |=0\). Hence by symmetric dynamics of the N agents,

We continue to check t=2. Note that

It is clear that \(E\xi_{2}\leq\epsilon_{1,N}EG(0,W)\). By the result with t=1 and Lemma 7,

as N→∞. So by (18), \(\xi_{1}\) converges to zero in probability as N→∞ and is upper bounded by \(\mathring{X}_{2}^{1}\), which implies \(\lim_{N\rightarrow\infty}E\xi_{1}=0\). Hence

So \(\lim_{N\rightarrow\infty}\epsilon_{2,N}=0\). Repeating the above argument, the lemma is proved. □

Corollary 9

We have \(\lim_{N\rightarrow\infty} \sup_{t\leq T-1}E| u_{t}^{(N)}-p_{t}|=0\).

Proof

We have \(E|K_{t} \check{X}_{t}^{(N)}-p_{t}|\leq K_{t} E|\check{X}_{t}^{(N)}-\hat{X}_{t}^{(N)}|+ E|K_{t} \hat{X}_{t}^{(N)}-p_{t}|\). The corollary follows from Lemmas 7 and 8. □
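Corollary 9 can be illustrated by simulation. The sketch below assumes a hypothetical setting (Example 2's G with illustrative parameters, two-point noise with EW=1, \(X^i_0\sim\) Uniform(0.5, 1.5) so that \(m_0=1\), \(\Psi(z)=EG(z,W)\), \(\phi=\Phi^{-1/(1-\gamma)}\)), computes a consistent mean field by damped iteration of Λ (our choice of solver), and then lets N agents apply the NCE-based law \(u^i_t=K_tX^i_t\) under the coupled dynamics (1), where the realized average investment \(u^{(N)}_t\) enters G. The gap between \(u^{(N)}_t\) and \(p_t\) should shrink as N grows.

```python
import numpy as np

ALPHA, DELTA, ETA, GAMMA, RHO, M0 = 1.2, 0.5, 1.0, 0.5, 0.9, 1.0   # hypothetical
W_VALUES, W_PROBS = np.array([0.5, 1.5]), np.array([0.5, 0.5])


def G(z, w):
    return ALPHA * w / (1.0 + DELTA * z**ETA)


def phi(z):  # Phi(z)**(-1/(1-gamma)), Phi(z) = rho * E G(z, W)**gamma
    return (RHO * float(np.sum(W_PROBS * G(z, W_VALUES) ** GAMMA))) ** (-1.0 / (1.0 - GAMMA))


def Psi(z):  # E G(z, W)
    return float(np.sum(W_PROBS * G(z, W_VALUES)))


def gains(p_seq):
    """K_t = 1 / (1 + phi(p_t) D_{t+1}), D_t from the backward recursion (5)."""
    D, K = np.ones(len(p_seq) + 1), np.empty(len(p_seq))
    for t in range(len(p_seq) - 1, -1, -1):
        K[t] = 1.0 / (1.0 + phi(p_seq[t]) * D[t + 1])
        D[t] = phi(p_seq[t]) * D[t + 1] * K[t]
    return K


def Lambda(p_seq):
    """Expectation of (8): K_t * prod_{s<t} K_s Psi(p_s) * m_0."""
    K, out, mean_x = gains(p_seq), np.empty(len(p_seq)), M0
    for t in range(len(p_seq)):
        out[t] = K[t] * mean_x
        mean_x *= K[t] * Psi(p_seq[t])
    return out


def solve_fixed_point(T, theta=0.5, tol=1e-12, max_iter=10_000):
    p = np.zeros(T)
    for _ in range(max_iter):
        p_new = (1.0 - theta) * p + theta * Lambda(p)
        if np.max(np.abs(p_new - p)) < tol:
            return p_new
        p = p_new
    raise RuntimeError("damped iteration did not converge")


def coupled_average_investment(p_seq, N, seed=1):
    """u_t^{(N)} when all N agents use u_t^i = K_t X_t^i under the coupled dynamics (1)."""
    rng = np.random.default_rng(seed)
    K, X, u_bar = gains(p_seq), rng.uniform(0.5, 1.5, size=N), []
    for t in range(len(p_seq)):
        u = K[t] * X
        u_bar.append(u.mean())
        # G is evaluated at the realized average u_t^{(N)}, not at p_t.
        X = G(u_bar[-1], rng.choice(W_VALUES, size=N, p=W_PROBS)) * u
    return np.array(u_bar)


p_star = solve_fixed_point(T=3)
for N in (100, 10_000, 1_000_000):
    print(N, np.max(np.abs(coupled_average_investment(p_star, N) - p_star)))
```

For a single seed the printed gap is random, but its typical size decreases roughly like \(1/\sqrt{N}\) in this setting.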

Now, in a system of N agents, suppose all agents except agent 1 apply the NCE-based strategies. Let \(u^{1}=(u_{t}^{1})_{0}^{T}\) (adapted to \(\mathcal{F}_{t}\)) be the control of agent 1. Denote \(X_{t}^{(-i)}=(1/N)\sum_{k\neq i} X_{t}^{k}\). We introduce the system

The initial conditions are \(X_{0}^{j}\) for j=1,…,N, and \(u_{T}^{i}=0\) for 2≤i≤N. It should be pointed out that the N−1 random variables \(X_{t}^{i}\), 2≤i≤N, may have different marginal distributions, creating a form of asymmetry, since \(u^{1}\) is allowed to use information asymmetrically with respect to these N−1 agents. For the analysis below, we need to carry out the estimates carefully, uniformly with respect to \(u^{1}\) and k∈{2,…,N}. The lemma below uses a notion of convergence in probability that is uniform with respect to \(u^{1}\).

Lemma 10

Let T be fixed and s≤T−1, and let the two positive random variables \(\xi_{1,N}\) and \(\xi_{2,N}\) depend on \(u^{1}\). Suppose (i) \((\xi_{1,N}+u_{s}^{1}/N)\vee\xi_{2,N}\leq\mathring{X}_{s}^{(N)}\), and (ii) for any δ>0, \(\lim_{N\rightarrow\infty} \sup_{u^{1}}P(|\xi_{1,N}+u_{s}^{1}/N-\xi_{2, N}|>\delta)=0\). Then for any δ>0,

Proof

For an arbitrary ϵ>0, we select a sufficiently large \(C_{\epsilon}>0\) such that

where \(C_{\epsilon}\) does not depend on k, s due to the i.i.d. assumption on the noises and the initial states. Now let \(C_{\epsilon}\) be fixed. Note that G is uniformly continuous on \(B_{C_{\epsilon}}=[0,C_{\epsilon}]\times[-C_{\epsilon}, C_{\epsilon}]\). For the given δ in (21), there exists \(\tau_{\delta,\epsilon}>0\) such that \(|G(y_{1},w_{1})-G(y_{2},w_{2})|\leq\delta\) whenever \(|y_{1}-y_{2}|+|w_{1}-w_{2}|\leq\tau_{\delta,\epsilon}\) and \((y_{l},w_{l})\in B_{C_{\epsilon}}\), l=1,2.

We select \(N_{\delta,\epsilon}\) such that for all \(N\geq N_{\delta,\epsilon}\),

Denote \(\varDelta =|G(\xi_{1,N}+u_{s}^{1}/N, W_{s}^{k})-G(\xi_{2,N},W_{s}^{k})|\). The following estimate holds: for all \(N\geq N_{\delta,\epsilon}\),

where the last inequality follows from (22). Denote the event \(A= \{\varDelta >\delta, |W_{s}^{k}|\leq C_{\epsilon}, |\xi_{1,N}+u^{1}_{s}/N-\xi _{2, N}|\leq\tau_{\delta,\epsilon} \}\). Then

which implies \(P(\varDelta>\delta)\leq\epsilon\) for all \(N\geq N_{\delta,\epsilon}\). Since \(N_{\delta,\epsilon}\) does not depend on \(u^{1}\) or k, the lemma follows. □

Lemma 11

Let \(X^{j}_{t}\) be defined by (19)–(20). Then we have

Proof

It is clear that \(u_{t}^{1}\leq\mathring{X}_{t}^{1}\), where the superscript indicates agent 1. For t≤T, denote \(\epsilon _{t,N}^{1}=\sup_{u^{1}}\sup_{2\leq k\leq N} E|X_{t}^{k} -\check{X}_{t}^{k}|\). Note that \(X_{t}^{k}\) depends on \(u^{1}\) only through \((u^{1}_{s})_{s\leq t-1}\). Consider k≥2 for the estimate below. Take any ϵ>0. For t=1,

Denote \(\zeta_{0}^{k}=G(K_{0} X_{0}^{(-1)}+u_{0}^{1}/N, W_{0}^{k}) -G(K_{0} X_{0}^{(N)}, W_{0}^{k})\). We see that for any δ>0,

For any δ>0, by Lemma 10,

Denote \(\mathring{L}=1+2\sup_{0\leq t\leq T} E\mathring{X}_{t}^{1}\). We have

The collection of random variables \(\{\mathring{X}_{s}^{i}, 0\leq s\leq T, i=1,2, \ldots\}\) is uniformly integrable. There exists \(\delta_{\epsilon}>0\) such that \(E\mathring{X}_{s}^{i} 1_{A}\leq\epsilon/2\) whenever \(P(A)\leq\delta_{\epsilon}\). We have

provided that \(N\geq N_{\epsilon}\) for a sufficiently large \(N_{\epsilon}\) to ensure \(\sup_{u^{1}, k}P(|\zeta_{0}^{k}|>\epsilon/\mathring{L})\leq\delta _{\epsilon}\) by (24). Therefore, \(\epsilon_{1,N}^{1}\leq\epsilon\) for all \(N\geq N_{\epsilon}\). Hence the lemma follows for t=1.

Suppose the lemma is true for t≤s. We check t=s+1. We have

For any ϵ>0, we may select \(N_{2,\epsilon}\) such that for all \(N\geq N_{2,\epsilon}\), \(\epsilon_{s,N}^{1} E G(0, W)\leq\epsilon/2\) by the induction assumption for s.

We continue to estimate \(\xi_{1}\). Take any δ>0. In order to apply Lemma 10, we estimate

which tends to 0 as N→∞. We then use Lemma 10 and the argument in (25) to show that there exists \(N_{\epsilon}\) such that for all \(N>N_{\epsilon}\), \(\sup_{u^{1},k}E|X_{s+1}^{k}-\check{X}_{s+1}^{k}|\leq \epsilon\). So the lemma holds for s+1. Finally, the lemma follows by induction. □

Let \(\{X_{0}^{1},(X_{t+1}^{1}, u_{t}^{1}), 0\leq t\leq T-1\}\) be given in (19)–(20). Now we introduce the new recursion,

where \(\widehat{X}_{0}^{1}=X_{0}^{1}\). Our plan is to set \(\widehat{u}_{t}^{1}\) as close to \(u_{t}^{1}\) as possible. However, a modification is sometimes needed so that the admissibility condition \(\widehat{u}_{t}^{1}\leq\widehat{X}_{t}^{1}\) is satisfied. We take \(\widehat{u}_{0}^{1}=u_{0}^{1}\). Given \(\widehat{u}_{s}^{1}\) generating \(\widehat{X}_{s+1}^{1}\), we determine

Therefore, we have the well-defined collection \(\{\widehat{X}_{0}^{1}, (\widehat{X}_{t+1}^{1}, \widehat{u}_{t}^{1}), 0\leq t\leq T-1\}\).

Lemma 12

We have

Proof

The case of t=0 is trivial. Note the fact that

We begin by checking t=1. We have

Notice that for any δ>0, \(\lim_{N\rightarrow\infty}\sup_{u^{1}}P(|K_{0}X_{0}^{(-1)}+u_{0}^{1}/N- p_{0}|>\delta)=0\). By using Lemma 10 and following the argument in (25) we obtain \(\lim_{N\rightarrow\infty}\sup_{u^{1}} E|\widehat{X}_{1}^{1}-X_{1}^{1}|=0\), which further gives \(\lim_{N\rightarrow\infty}\sup_{u^{1}} E|\widehat{u}_{1}^{1}-u_{1}^{1}|=0\).

For t=2, we have

It follows that

We have the estimate

By Corollary 9, \(\lim_{N\rightarrow\infty}E\xi_{2}=0\). By Lemma 11, as N→∞,

Hence for any δ>0, \(\sup_{u^{1}}P(|K_{1}X_{1}^{(-1)}+u_{1}^{1}/N-p_{1}|>\delta)\leq\frac{\sup_{u^{1}} E\xi_{1}+E\xi_{2}}{\delta}\rightarrow0\). Again, by using Lemma 10 and the argument in (25) we obtain \(\lim_{N\rightarrow\infty} \sup_{u^{1}}E|\widehat{X}_{2}^{1}-X_{2}^{1}|= 0\), which implies \(\lim_{N\rightarrow\infty} \sup_{u^{1}}E|\widehat{u}_{2}^{1}-u_{2}^{1}|= 0\). Repeating the above argument, the lemma follows. □

The following theorem presents the fundamental fact in mean field decision problems: centralized information has diminishing value for the decision making of individual agents. This property has been established for a wide range of models (see e.g. [27, 29, 31]). For a population of N agents, let the NCE-based strategies determined by (12) be denoted as \(\{\check {u}_{t}^{i}, 0\leq t\leq T, 1\leq i\leq N\}\), which is a set of decentralized state feedback strategies.

Theorem 13

The set of NCE-based strategies \(\{\check{u}_{t}^{i}, 0\leq t\leq T, 1\leq i\leq N\}\) is an \(\varepsilon_{N}\)-Nash equilibrium, i.e., for any i∈{1,…,N},

where \(u^{i}\) is a centralized strategy (so it is adapted to \(\mathcal {F}_{t}\)) and \(0\leq\varepsilon_{N}\rightarrow0\) as N→∞.

Proof

For γ∈(0,1), the elementary inequality holds:

It further implies that

Step 1. If agent i also applies the NCE-based control law, its utility functional is

where \(\check{u}_{t}^{i}=K_{t} \check{X}_{t}^{i}\) for t≤T−1 and \(\check{u}_{T}^{i}=0\). For t≤T−1, (30) implies

By (31), \(|\check{X}_{t}^{i}-K_{t} \check{X}_{t}^{i}|^{\gamma}\geq|(1-K_{t})\hat{X}_{t}^{i}|^{\gamma}-|(1-K_{t}) (\check{X}_{t}^{i}-\hat{X}_{t}^{i})|^{\gamma}\). For time T, we have \(\check{u}_{T}^{i}=0\), and \(|\check{X}_{T}^{i}|^{\gamma}= | \hat{X}_{T}^{i}+ (\check{X}_{T}^{i}-\hat{X}_{T}^{i})|^{\gamma}\) which leads to

Moreover, by Jensen’s inequality, \(E|\check{X}_{t}^{i}-\hat{X}_{t}^{i}|^{\gamma}\leq(E|\check{X}_{t}^{i}-\hat{X}_{t}^{i}|)^{\gamma}\) for γ∈(0,1), 0≤t≤T. Denote \(\tau_{N}=(E|\check{X}_{T}^{i}-\hat{X}_{T}^{i}|) \vee\sup_{0\leq t\leq T-1}(1-K_{t}) E |\check{X}_{t}^{i}-\hat{X}_{t}^{i}|\). By Lemma 8, \(\lim_{N\rightarrow\infty}\tau_{N}=0\). It is easy to show that

Here \(\hat{u}^{i}\) denotes the control process generated by the NCE-based feedback applied to (2). With reuse of notation, the generic control \(u^{i}\) appears in both \(\bar{J}_{i}\) and \(J_{i}\), associated with different dynamics.

Step 2. We consider agent 1 and check its deviation from the NCE strategy \(\check{u}^{1}\). Suppose now that a general admissible control \(u^{1}\) satisfying \(u_{t}^{1}\leq X_{t}^{1}\) is used. Let \((\widehat{X}_{t}^{1}, \widehat{u}_{t}^{1} )\) be defined by (26)–(27). By (30),

where t≤T−1. Note that

where the last inequality is due to (28). We further have \(|E |X_{T}^{1}|^{\gamma}-E |\widehat{X}_{T}^{1}|^{\gamma}| \leq E |X_{T}^{1}-\widehat{X}_{T}^{1}|^{\gamma}\).

Denote \(\delta_{N}=\sup_{0\leq t\leq T}\sup_{u^{1}} E|\widehat{X}_{t}^{1}- X_{t}^{1}|\). By taking \(\widehat{u}_{T}^{1}=0\), we have

where \(\lim_{N\to\infty}\delta_N=0\) by Lemma 12. Hence

By Steps 1 and 2, the \(\varepsilon_N\)-Nash equilibrium property follows by taking \(\varepsilon_{N}= (T+1)(\tau_{N}^{\gamma}+ 2\delta_{N}^{\gamma})/\gamma\). □
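The proof relies on two elementary inequalities valid for γ∈(0,1). Reading (30) as subadditivity of \(|x|^{\gamma}\) and (31) as its reverse-triangle consequence (our reading of the two displays), a quick randomized sanity check can be sketched in Python. It proves nothing; it only illustrates that no counterexample turns up for γ∈(0,1), while subadditivity already fails for γ=2. The helper names are hypothetical.

```python
import random

def subadditive_holds(x, y, gamma):
    """|x + y|^gamma <= |x|^gamma + |y|^gamma (our reading of (30))."""
    return abs(x + y) ** gamma <= abs(x) ** gamma + abs(y) ** gamma + 1e-12

def reverse_holds(x, y, gamma):
    """||x|^gamma - |y|^gamma| <= |x - y|^gamma (our reading of (31))."""
    return abs(abs(x) ** gamma - abs(y) ** gamma) <= abs(x - y) ** gamma + 1e-12

def random_check(samples=100_000, seed=0):
    """Test both inequalities on random (x, y, gamma) with gamma in (0, 1)."""
    rng = random.Random(seed)
    for _ in range(samples):
        gamma = rng.uniform(0.01, 0.99)
        x, y = rng.uniform(-10.0, 10.0), rng.uniform(-10.0, 10.0)
        if not (subadditive_holds(x, y, gamma) and reverse_holds(x, y, gamma)):
            return False
    return True

print(random_check())                    # True: no counterexample for gamma in (0, 1)
print(subadditive_holds(1.0, 1.0, 2.0))  # False: 2^2 = 4 > 1 + 1 when gamma = 2
```

The small tolerance 1e-12 only absorbs floating-point rounding in the equality cases (for example when x or y is zero).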

6 Stationary Solution Analysis

An interesting problem is to investigate the NCE-based solution when the time horizon T tends to infinity, or when an infinite horizon is used in the first place; one might expect a certain steady-state behavior to appear, as occurs in standard Markov decision processes [26]. To carry out this program, it will be necessary to use a time-varying sequence \((p_{t})_{0}^{\infty}\) in place of p (see (32) below) to capture the transient phase in the mean field approximation. Owing to the nonlinearity of G, the analysis and computation of the decentralized control are expected to be more challenging than in linear models [29].

To reduce the computational complexity, we turn to a type of stationary solution that ignores the transient behavior. Before analyzing the infinite horizon game problem, we start with an optimal control problem.

6.1 The Optimal Control Problem with Infinite Horizon

Let p∈[0,∞) be a constant. Consider the model

Let the utility functional be

Although the transient phase is neglected, the use of the auxiliary model (32) will provide interesting insights into understanding the mean field behavior.

We construct the iteration

where \(Z_{0}=X^{i}_{0}\). This process will serve as an upper bound for \(X_{t}^{i}\) regardless of the choice of the control.

Some care is needed with the infinite horizon control problem under HARA utility. Notably, even when 0<ρ<1, the value function may be infinite. This feature is well known in optimal control with unbounded utility [21, 37, 43]. We give the following necessary and sufficient condition for the utility functional to have a finite upper bound. The sufficiency part has been proved for an optimal savings model in [37].

Proposition 14

Assume \(X_{0}^{i}>0\). Then \(\sup J_{[0,\infty)}<\infty\) if and only if \(\rho EG^{\gamma}(p,W)<1\).

Proof

(i) Assume \(\rho EG^{\gamma}(p,W)<1\). Denote the consumption by \(c_{t}=X^{i}_{t}-u^{i}_{t}\). It was shown in [37] that, for some fixed constant C,

(ii) Consider the case \(\rho EG^{\gamma}(p,W)\geq1\). Take θ>1, \(c_{0}=c_{1}=0\) and \(c_{t}= X^{i}_{t} /t^{\theta}\) for t≥2. Set

Since θ>1, there exists a fixed constant \(C_{0}\) such that

Therefore

Hence

We take any \(\theta\in(1, \frac{1}{\gamma}]\). Then

which implies \(\sup J_{[0,\infty)}=\infty\). Hence \(\rho EG^{\gamma}(p,W)<1\) is a necessary condition for \(\sup J_{[0,\infty)}<\infty\). □

The necessity part of the proof rests on the following intuition: if \(\rho EG^{\gamma}(p,W)\geq1\), it pays to follow a policy of “high accumulation for a better future”, which eventually yields an infinite utility functional.
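Proposition 14 reduces finiteness of the optimal utility to the scalar test \(\rho EG^{\gamma}(p,W)<1\). When \(EG^{\gamma}(p,W)\) has no closed form, it can be estimated by Monte Carlo. The sketch below assumes, purely for illustration, the multiplicative form G(p,W)=g(p)W with lognormal W normalized so that EW=1; the parameter values and function names are hypothetical and not taken from the paper. In the lognormal case \(EW^{\gamma}=e^{\gamma(\gamma-1)\sigma^{2}/2}\), which serves as a cross-check.

```python
import math
import random

def rho_E_G_gamma_mc(rho, gamma, g_p, sigma, n=100_000, seed=1):
    """Monte Carlo estimate of rho * E[G(p, W)^gamma].

    Hypothetical setup: G(p, W) = g_p * W, W = exp(sigma*Z - sigma^2/2), Z ~ N(0, 1),
    so E W = 1; g_p is the value g(p) at the fixed mean field p.
    """
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(n):
        w = math.exp(sigma * rng.gauss(0.0, 1.0) - 0.5 * sigma ** 2)
        acc += (g_p * w) ** gamma
    return rho * acc / n

def rho_E_G_gamma_exact(rho, gamma, g_p, sigma):
    """Closed form for the lognormal case: E W^gamma = exp(gamma (gamma - 1) sigma^2 / 2)."""
    return rho * g_p ** gamma * math.exp(0.5 * gamma * (gamma - 1.0) * sigma ** 2)

# Illustrative parameters only.
est = rho_E_G_gamma_mc(rho=0.9, gamma=0.5, g_p=1.1, sigma=0.3)
exact = rho_E_G_gamma_exact(rho=0.9, gamma=0.5, g_p=1.1, sigma=0.3)
print(est, exact, "sup J finite" if exact < 1.0 else "sup J infinite")
```

With these values the exact quantity is about 0.933, so the test of Proposition 14 is passed and the optimal utility is finite.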

For the analysis below, fix p≥0 and assume \(\rho EG^{\gamma}(p,W)<1\). Let \(V_{i}(x)\), x∈(0,∞), be the value function of the optimal control problem (32)–(33). We introduce the stationary version of the dynamic programming equation

We look for a solution of the form

The optimal control is

where D>0 satisfies

which is a stationary version of (6). We recall that \(\phi (p)=[\rho E G^{\gamma}(p,W)]^{\frac{1}{\gamma-1}}> 1\); the inequality holds because \(\rho EG^{\gamma}(p,W)<1\) and \(\frac{1}{\gamma-1}<0\).
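Since \(\frac{1}{\gamma-1}<0\), \(\phi(p)>1\) holds exactly when \(\rho EG^{\gamma}(p,W)<1\). The sketch below evaluates φ in a hypothetical multiplicative setup G(p,W)=g(p)W with lognormal W, EW=1, σ=0.3 (illustrative parameters and hypothetical names). It also checks a simple algebraic identity for the multiplicative case: if \(\rho E(W^{\gamma})g(p)=1\), then \(\rho g^{\gamma}(p)EW^{\gamma}=g^{\gamma-1}(p)\), so φ(p)=g(p).

```python
import math

def phi(rho, gamma, E_G_gamma):
    """phi(p) = [rho * E G^gamma(p, W)]^(1/(gamma - 1)); E_G_gamma stands for E G^gamma(p, W)."""
    return (rho * E_G_gamma) ** (1.0 / (gamma - 1.0))

rho, gamma, sigma = 0.9, 0.5, 0.3      # illustrative values, not from the paper
# Hypothetical multiplicative case G(p, W) = g(p) W with lognormal W, E W = 1.
EW_gamma = math.exp(0.5 * gamma * (gamma - 1.0) * sigma ** 2)

for g_p in (1.1, 2.0):
    E_G_gamma = g_p ** gamma * EW_gamma
    cond = rho * E_G_gamma < 1.0       # test of Proposition 14
    print(g_p, cond, phi(rho, gamma, E_G_gamma))

# Multiplicative case: if rho * E(W^gamma) * g(p) = 1, then phi(p) = g(p).
g_hat = 1.0 / (rho * EW_gamma)
print(phi(rho, gamma, g_hat ** gamma * EW_gamma), g_hat)
```

For g(p)=1.1 the test holds and φ exceeds 1; for g(p)=2 it fails and the computed φ drops below 1, which is why the assumption \(\rho EG^{\gamma}(p,W)<1\) is kept throughout.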

6.2 Applying the Consistency Requirement

Below we re-examine (32)–(33) in a game setting. We now determine p so that it is regenerated in the large population limit when each individual applies the stationary feedback (34). The consistency requirement says that at each t, the empirical mean \(u_{t}^{(N)}\) of the i.i.d. sequence \(\{u_{t}^{j}, 1\leq j\leq N\}\) converges to p with probability one, which imposes the condition \(Eu_{t}^{i}=p\) for each t. Consequently, \(EX_{t}^{i}\) also remains constant as t increases.

By the feedback control (34) and the closed-loop state equation

now the consistency requirement is equivalent to

and the constraint on the initial mean

by \(Eu_{0}^{i}=p\). Note that (37) is decoupled from (35)–(36): one can first solve the latter and then identify the right initial condition to fulfill the consistency requirement. For this reason, we may study (35)–(36) separately without imposing the constraint (37) on \(X_{0}^{i}\). By (35)–(36) and the requirement D>0, we introduce the equivalent system of equations

where \(\phi(p) = [\rho E G^{\gamma}(p,W)]^{\frac{1}{\gamma-1}}\). If (38) holds and the control is given by (34), then \(EX_{t}^{i}\) remains a constant by (36).

Definition 15

The pair (p,D) is called a relaxed stationary mean field (RSMF) solution of (32)–(33) if it satisfies (38), D>0, p≥0 and

The pair (p,D) is so named for two reasons: (i) the first equation in (38) is based on the stationary policy (34) for an associated infinite horizon optimal control problem; (ii) the restriction (37) on the initial mean is not imposed.

6.3 Multiplicative Noise

We give an existence result for a class of models with multiplicative noise, i.e.,

$$G(p,W)=g(p)W, \tag{40}$$

for some function g>0 on [0,∞), W≥0 and EW=1.

Theorem 16

Assume (i) 0<ρ<1, (ii) g is continuous and strictly decreasing on [0,∞), (iii) \(\rho E(W^{\gamma})g(0)\geq1\) and \(\rho E(W^{\gamma})g(\infty)<1\), where \(g(\infty)=\lim_{p\to\infty}g(p)\). Then there exists a unique RSMF solution.

Proof

The second equation in (38) reduces to

$$\rho E(W^{\gamma})g(p)=1. \tag{41}$$

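By (ii) and (iii), the left-hand side of (41) is continuous and strictly decreasing in p, is at least 1 at p=0, and has a limit below 1 as p→∞. So there exists a unique \(\hat{p}\in[0,\infty)\) solving (41). Since γ∈(0,1), Jensen's inequality gives \(EW^{\gamma}\leq(EW)^{\gamma}=1\). Together with ρ<1, (41) yields \(g(\hat{p})=1/(\rho EW^{\gamma})>1\). In this case,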

which implies \(\phi(\hat{p})>1\). Hence

Therefore, \((\hat{p},\hat{D})\) is an RSMF solution, which is unique since \(\hat{p}\) is unique. □

Condition (iii) in the theorem has interesting implications. Suppose \(E(W^{\gamma})g(0)>1\). The parameter ρ measures time preference in the utility functional. If ρ is so small that \(\rho E(W^{\gamma})g(0)<1\), then there is no RSMF solution, since g is decreasing and (41) cannot hold for any p≥0. Intuitively, when ρ is too small, the optimizer places too much weight on short-term utility, and this can cause the output process to decline. By contrast, an RSMF solution requires the mean output \(EX_{t}^{i}\) to stay constant by (36) (a requirement weaker than regenerating the initially assumed constant mean field p), so the output cannot decline.
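Under hypotheses (ii)–(iii) of Theorem 16, the root \(\hat{p}\) of (41) can be found by bisection, because \(k(p)=\rho E(W^{\gamma})g(p)\) is continuous and strictly decreasing with k(0)≥1 and a limit below 1. A minimal sketch, assuming the caller supplies k directly; the function name `solve_41` and the test functions are hypothetical. If k(0)<1, the sketch reports that no RSMF solution exists, matching the discussion above.

```python
def solve_41(k, hi=1.0, tol=1e-12, max_iter=200):
    """Bisection for k(p) = 1 on [0, infinity).

    k(p) plays the role of rho * E(W^gamma) * g(p) in (41) and is assumed
    continuous and strictly decreasing (hypotheses (ii)-(iii) of Theorem 16).
    """
    lo = 0.0
    if k(lo) < 1.0:
        raise ValueError("rho E(W^gamma) g(0) < 1: no RSMF solution")
    while k(hi) >= 1.0:          # enlarge the bracket until k(hi) < 1
        lo, hi = hi, 2.0 * hi
        if hi > 1e12:
            raise ValueError("k(p) does not fall below 1")
    for _ in range(max_iter):    # invariant: k(lo) >= 1 > k(hi)
        mid = 0.5 * (lo + hi)
        if k(mid) >= 1.0:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)

# Hypothetical k(p) = 2 / (1 + p^3): k(0) = 2 >= 1, k(p) -> 0, root p_hat = 1.
print(solve_41(lambda p: 2.0 / (1.0 + p ** 3)))
```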

7 The Out-of-Equilibrium Mean Field Dynamics

Suppose a unique RSMF solution \((\hat{p}, \hat{D})\) exists for (38). We consider the game with a large population whose initial condition does not satisfy the requirement (37). A natural experiment is to let each individual apply the feedback control (34). This is particularly plausible if the initial mean differs only slightly from the one prescribed by (37). One may further ask whether the population behavior asymptotically settles down, in some sense, to a steady state as time tends to infinity. However, as the analysis below shows, interesting nonlinear phenomena can arise under quite reasonable conditions on the agents' dynamics.

We start with a population of N agents with state equation

which uses the actual mean field \(u_{t}^{(N)}\) and differs from (32). Based on (34), let each agent apply the RSMF-solution-based control

The closed-loop state equation is given by

We obtain the relation

We proceed to consider the large population limit (i.e., the number of agents tends to infinity) so that \(u_{t}^{(N)}\) is replaced by a deterministic function \(p_t\), which is to be generated recursively. For a generic agent (still labeled by the superscript i), its control iteration (42) is then approximated by

where \(\hat{u}_{0}^{i}=u_{0}^{i}\) and we set \(p_{0}=Eu_{0}^{i}\). Clearly, \(p_{0}\neq\hat{p}\) if (37) does not hold with \(p=\hat{p}\). We use \(\hat{u}_{t}^{i}\) to distinguish this process from the original control \(u_{t}^{i}\). Taking expectations on both sides of (43), we obtain

The mean of \(\hat{u}_{t}^{i}\) should coincide with \(p_t\), and hence we derive the mean field dynamics

where \(p_{0}=E u_{0}^{i}\). By analogy with the error estimates in Sect. 5, similar ideas can be applied to show that the errors \(E|u_{t}^{i} -\hat{u}_{t}^{i}|\) and \(E|u_{t}^{(N)}-p_{t}|\) vanish as N→∞ on any fixed interval [0,T]. We do not provide the details here; instead we directly analyze the properties of (44), which are interesting in their own right.

Equation (44) shows that, in the infinite population limit, the aggregate investment level is updated from \(p_t\) to \(p_{t+1}\) when all agents apply the RSMF-solution-based investment strategies. The following proposition is immediate.

Proposition 17

If \((\hat{p},\hat{D})\) is an RSMF solution and \(\hat{p}>0\), then \(\hat{p}\) is a fixed point of (44).

Denote

To check whether \(\hat{p}\) is an attracting fixed point of (44), we may examine the ratio of the perturbation \(| Q(p)-Q(\hat{p})|\) to \(|p-\hat{p}|\) for p near \(\hat{p}\). Below, we reveal some interesting nonlinear phenomena associated with (44), which characterizes the evolution of the mean field.
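For differentiable Q, the ratio \(|Q(p)-Q(\hat{p})|/|p-\hat{p}|\) tends to \(|Q'(\hat{p})|\) as \(p\to\hat{p}\); the standard criterion is that \(|Q'(\hat{p})|<1\) makes \(\hat{p}\) locally attracting and \(|Q'(\hat{p})|>1\) makes it repelling. A small sketch that estimates the slope by a central difference; the function name and step size are our own choices, and the two test maps are those listed as Cases 2 and 3 in Sect. 7.1.1.

```python
def classify_fixed_point(Q, p_hat, h=1e-6):
    """Estimate Q'(p_hat) by a central difference and classify the fixed point."""
    slope = (Q(p_hat + h) - Q(p_hat - h)) / (2.0 * h)
    if abs(abs(slope) - 1.0) < 1e-6:
        kind = "inconclusive"   # |Q'| = 1: linearization decides nothing
    elif abs(slope) < 1.0:
        kind = "attracting"
    else:
        kind = "repelling"
    return slope, kind

print(classify_fixed_point(lambda p: 2.0 * p / (1.0 + p ** 3), 1.0))        # Case 2 map
print(classify_fixed_point(lambda p: 4.0 * p / (1.0 + 3.0 * p ** 3), 1.0))  # Case 3 map
```

A negative slope, as in both maps here, means the perturbation alternates in sign from one step to the next.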

Example 18

For (40), we take

$$g(p)=\frac{C}{\rho EW^{\gamma}\,[1+(C-1)p^{3}]},$$

where C>1 is a parameter. The factor \(\frac{1}{\rho EW^{\gamma}}\) is chosen so that Q(p) will take a simple form. By Theorem 16, (38) has a unique solution \((\hat{p}, \hat{D})\). In fact, \(\hat{p}=1\) by (41).

Let g be given by Example 18. Recalling EW=1 in (40), (44) reduces to

$$p_{t+1}=Q_{C}(p_{t}):=\frac{Cp_{t}}{1+(C-1)p_{t}^{3}}. \tag{45}$$

7.1 Numerical Examples

To facilitate the computation, we examine some concrete models. The point p=0 appears as an unstable equilibrium in each of these examples; since it is of less interest, we focus only on \(\hat{p}>0\) within the RSMF solution \((\hat{p}, \hat{D})\).

7.1.1 Stable Equilibrium and Limit Cycle

Note that \(\hat{p}=1\) is a fixed point of (45). If the iterates stay in a small neighborhood of \(\hat{p}\), the slope of \(Q_{C}(p)\) at \(\hat{p}\) determines the asymptotic behavior of the iteration. We have

$$Q_{C}'(p)=\frac{C\,[1-2(C-1)p^{3}]}{[1+(C-1)p^{3}]^{2}}.$$

So, at \(\hat{p}=1\),

$$Q_{C}'(1)=\frac{3-2C}{C}.$$

If 1<C<3 (resp., C>3), there exists a neighborhood \(B_{1}(\hat{p})\) (resp., \(B_{2}(\hat{p})\)) centered at \(\hat{p}=1\) such that \(-1<Q'_{C}(p)<1\) (resp., \(Q'_{C}(p)<-1\)) for all \(p\in B_{1}(\hat{p})\) (resp., \(p\in B_{2}(\hat{p})\)). We select different values of C in (45) for numerical illustration.

Case 1

With C=1.2, we obtain

$$\begin{aligned} p_{t+1}= \frac{1.2p_t}{1+0.2p^3_t} , \qquad Q'_C( \hat{p})=0.5. \end{aligned}$$

Case 2

With C=2, we obtain

$$\begin{aligned} p_{t+1}= \frac{2p_t}{1+p^3_t} ,\qquad Q_C'(\hat{p})= -0.5. \end{aligned}$$

For Cases 1 and 2, \(\hat{p}=1\) is a stable equilibrium of the iteration; see Fig. 1. If we interpret \(p_t\) as \(Eu_{t}^{i}= \frac{EX_{t}^{i}}{\phi(\hat{p})}\) for agent i in an infinite population, then

This means that \(EX_{t}^{i}\), starting from any positive initial value, asymptotically returns to the mean determined by (37).


Case 3

We take C=4 to obtain

$$p_{t+1}= \frac{4p_t}{1+3p_t^3},\qquad Q_C'(\hat{p})= -1.25, $$

which has a period-two limit cycle formed by the points 0.560360 and 1.467041, i.e., by the two points (0.560360, 1.467041) and (1.467041, 0.560360) on the graph of \(Q_C\); see Fig. 2. The function \(Q_C(p)\) attains its maximum 1.467523 at p=0.550321.
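The three cases can be reproduced by direct iteration of \(Q_C(p)=Cp/(1+(C-1)p^{3})\), the common form of the three maps listed above. A sketch with hypothetical function names: for C=1.2 and C=2 the orbit from \(p_0=0.3\) should settle at \(\hat{p}=1\), while for C=4 it should settle on the two-point cycle. The maximizer of \(Q_C\) solves \(2(C-1)p^{3}=1\), which for C=4 gives \(p=6^{-1/3}\).

```python
def Q(p, C):
    """Q_C(p) = C p / (1 + (C - 1) p^3), the map iterated in Cases 1-3."""
    return C * p / (1.0 + (C - 1.0) * p ** 3)

def orbit(p0, C, steps):
    """Return [p_0, p_1, ..., p_steps] for p_{t+1} = Q_C(p_t)."""
    traj = [p0]
    for _ in range(steps):
        traj.append(Q(traj[-1], C))
    return traj

for C in (1.2, 2.0, 4.0):
    last = orbit(0.3, C, 2000)[-2:]    # last two iterates
    print(C, [round(x, 6) for x in last])

# Maximizer of Q_4: Q_4'(p) = 0 when 2 (C - 1) p^3 = 1, i.e. p = 6^(-1/3).
p_star = (1.0 / 6.0) ** (1.0 / 3.0)
print(round(p_star, 6), round(Q(p_star, 4.0), 6))  # 0.550321 1.467523
```

Since \(p^{3}=1/6\) at the maximizer, \(Q_4(p^{*})=4p^{*}/1.5\), which lies just above the upper cycle point 1.467041, as it must.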

7.1.2 Chaotic Dynamics

We describe another example.

Example 19

Define \(h_{0}(p)= \frac{2.5 (1-p)^{0.2}}{1+0.4p}\), and

where \(h_{0}(0.99)=0.712943\). Select ϵ such that h(1.05)=0.35. Define \(g(p)=\frac{h(p)}{\rho EW^{\gamma}} \) for (40), where EW=1. By Theorem 16, (38) has a unique solution \((\hat{p}, \hat{D})\), where \(\rho EW^{\gamma}g(\hat{p})=1\) by (41) and \(\hat{p}=0.948836\).

The graph of h is shown in Fig. 3; h, and hence g, is monotonically decreasing on [0,∞). The sharp decrease of g as p approaches 1 models a “pollution effect” [19]: when the aggregate investment level approaches a certain reference level (here equal to 1), production becomes very inefficient. The interested reader is referred to [19] for production functions whose efficiency drops quickly as capital becomes more concentrated.

By EW=1,

which yields the mean field dynamics

$$p_{t+1}=p_{t}h(p_{t}). \tag{46}$$

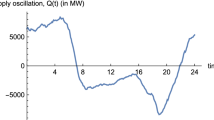

The function Q(p)=ph(p) attains its maximum 1.0984 at p=0.7950 on the interval [0,1.12]. Take \(p_{0}=0.4\). Then \(p_{0}<p_{1}<p_{2}\) and \(p_{3}<p_{0}\), so the period-three condition of Li and Yorke holds, which implies that the difference equation is chaotic (see [39] for the definition). The chaotic behavior of (46) is illustrated in Fig. 4.
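Part of Example 19 can be checked numerically. The ϵ-dependent definition of h beyond p=0.99 is not reproduced here, so the sketch assumes h=h_0 on [0,0.99] only; that assumption is ours. Under it, the snippet recomputes \(h_0(0.99)\), the root \(\hat{p}\) of h(p)=1, the maximum of Q(p)=ph(p) on [0,0.99], and the first two iterates from \(p_0=0.4\). Because h is decreasing, \(ph(p)\leq1.12\,h_0(0.99)<1\) for p∈(0.99,1.12], so the maximum on [0,1.12] is attained on [0,0.99]. The third iterate needs h near 1.1 and is therefore not computed.

```python
def h0(p):
    """h_0(p) = 2.5 (1 - p)^0.2 / (1 + 0.4 p) from Example 19 (valid for 0 <= p <= 1)."""
    return 2.5 * (1.0 - p) ** 0.2 / (1.0 + 0.4 * p)

# Assumption: h = h_0 on [0, 0.99]; the epsilon-dependent part of h is not modeled.
print(round(h0(0.99), 6))  # 0.712943

# p_hat solves h(p) = 1; h_0 is strictly decreasing, so bisect on [0, 0.99].
lo, hi = 0.0, 0.99
for _ in range(100):
    mid = 0.5 * (lo + hi)
    if h0(mid) > 1.0:
        lo = mid
    else:
        hi = mid
p_hat = 0.5 * (lo + hi)

# Maximum of Q(p) = p h_0(p) over a fine grid of [0, 0.99] (spacing 0.99e-5).
q_max = max(k * 0.99e-5 * h0(k * 0.99e-5) for k in range(100_001))

# First iterates of p_{t+1} = p_t h(p_t) from p_0 = 0.4; p_0 and p_1 lie in [0, 0.99].
p0 = 0.4
p1 = p0 * h0(p0)
p2 = p1 * h0(p1)
print(round(p_hat, 6), round(q_max, 4), p0 < p1 < p2)
```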

7.2 The Role of the Initial Condition

For Examples 18 (C=1.2, C=4) and 19, there exists a unique initial mean \(\hat{m}_{0}\) determined by (37). The triple \((\hat{m}_{0},\hat{p}, \hat{D})\) has a consistent mean field approximation interpretation: each agent implements an optimal response to the mean field \(\hat{p}\), and all agents in the large population limit replicate the same \(\hat{p}\). For Example 18 (C=4) and Example 19, after a deviation of the true initial mean from \(\hat{m}_{0}\), no matter how small, the actual mean field under the control based on \((\hat{p}, \hat{D})\) follows an entirely different course. Such high sensitivity of the population behavior to the initial condition is striking.
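The sensitivity in Example 18 with C=4 can be seen numerically: since \(|Q_C'(\hat{p})|=1.25>1\), a deviation of size ε from \(\hat{p}=1\) grows roughly by a factor 1.25 per step until the orbit leaves a neighborhood of 1, after which it settles on the two-point cycle. The sketch below (hypothetical function names, radius 0.1 chosen by us) measures the exit time for two initial deviations; shrinking ε only delays the departure, roughly by \(\log(1/\varepsilon)/\log1.25\) steps.

```python
def Q4(p):
    """Map (45) with C = 4: p_{t+1} = 4 p_t / (1 + 3 p_t^3); fixed point p_hat = 1."""
    return 4.0 * p / (1.0 + 3.0 * p ** 3)

def exit_time(p0, radius=0.1, max_steps=10_000):
    """First t with |p_t - 1| > radius, or None if the orbit stays close."""
    p = p0
    for t in range(max_steps):
        if abs(p - 1.0) > radius:
            return t
        p = Q4(p)
    return None

def tail(p0, steps=2000):
    """Iterate `steps` times, then return the sorted pair (p_T, p_{T+1})."""
    p = p0
    for _ in range(steps):
        p = Q4(p)
    return tuple(sorted((p, Q4(p))))

for eps in (1e-4, 1e-8):
    print(eps, exit_time(1.0 + eps), tail(1.0 + eps))
```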

8 Concluding Remarks

This paper has developed a mean field game framework for consumption-accumulation optimization with a large number of agents. A key feature of our model is that it captures the negative mean field effect on production efficiency, and it adopts HARA utility for the individual optimization objectives.

We use the consistent mean field approximation scheme to obtain decentralized strategies and show an ε-Nash equilibrium property. To address long-term optimizing behavior, we introduce the notion of a relaxed stationary mean field solution. Implementing the resulting simple strategy by a representative agent in an infinite population, we study the mean field dynamics out of equilibrium. The population behavior exhibits interesting nonlinear phenomena: stable equilibria, limit cycles, and chaos. These dramatically different behaviors may be roughly attributed to different levels of sensitivity of the individual production function to the mean field.

Although erratic oscillatory or chaotic phenomena are well known in macroeconomics and social science [5, 10, 19, 50], our work uncovers such phenomena at the microscopic level of a game involving self-optimizing agents, and it may shed light on related economic systems.

The general framework developed in this paper can be extended further. For example, the mean field effect that an agent receives may depend on more detailed distributional information about other agents' investment than just the empirical mean \(u_{t}^{(N)}\). This can be modeled by introducing a pairwise nonlinearity and averaging across the population. For long-term optimization, the transient behavior can be included in the mean field approximation.

References

Achdou Y, Capuzzo-Dolcetta I (2010) Mean field games: numerical methods. SIAM J Numer Anal 48(3):1136–1162

Adlakha S, Johari R, Weintraub G, Goldsmith A (2008) Oblivious equilibrium for large-scale stochastic games with unbounded costs. In: Proc 47th IEEE CDC, Cancun, Mexico, pp 5531–5538

Amir R (1996) Continuous stochastic games of capital accumulation with convex transitions. Games Econ Behav 15:111–131

Andersson D, Djehiche B (2011) A maximum principle for SDEs of mean-field type. Appl Math Optim 63(3):341–356

Arthur WB (1999) Complexity and the economy. Science 284:107–109

Askenazy P, Le Van C (1999) A model of optimal growth strategy. J Econ Theory 85:24–51

Balbus L, Nowak AS (2004) Construction of Nash equilibria in symmetric stochastic games of capital accumulation. Math Methods Oper Res 60:267–277

Bardi M (2012) Explicit solutions of some linear-quadratic mean field games. Netw Heterog Media 7(2):243–261

Barro RJ, Sala-I-Martin X (1992) Public finance in models of economic growth. Rev Econ Stud 59(4):645–661

Benhabib J (ed) (1992) Cycles and chaos in economic equilibrium. Princeton University Press, Princeton

Bensoussan A, Frehse J, Yam P (2012) Overview on mean field games and mean field type control theory. Preprint

Bensoussan A, Sung KCJ, Yam SCP, Yung SP (2011) Linear-quadratic mean-field games. Preprint

Brock WA, Mirman LJ (1972) Optimal economic growth and uncertainty: the discounted case. J Econ Theory 4:479–513

Buckdahn R, Cardaliaguet P, Quincampoix M (2011) Some recent aspects of differential game theory. Dyn Games Appl 1(1):74–114

Cardaliaguet P (2012) Notes on mean field games

Carmona R, Delarue F (2012) A probabilistic analysis of mean field games. Preprint

Cass D (1965) Optimum growth in an aggregative model of capital accumulation. Rev Econ Stud 32(3):233–240

Chow YS, Teicher H (1997) Probability theory: independence, interchangeability, martingales, 3rd edn. Springer, New York

Day RH (1982) Irregular growth cycles. Am Econ Rev 72(3):406–414

Dockner EJ, Nishimura K (2005) Capital accumulation games with a non-concave production function. J Econ Behav Organ 57:408–420

Duffie D, Fleming W, Soner HM, Zariphopoulou T (1997) Hedging in incomplete markets with HARA utility. J Econ Dyn Control 21:753–782

Gomes DA, Mohr J, Souza RR (2010) Discrete time, finite state space mean field games. J Math Pures Appl 93:308–328

Gast N, Gaujal B, Le Boudec J-Y (2012) Mean field for Markov decision processes: from discrete to continuous optimization. IEEE Trans Autom Control 57(9):2266–2280

Guéant O, Lasry J-M, Lions P-L (2011) Mean field games and applications. In: Carmona AR et al. (eds) Paris-Princeton lectures on mathematical finance 2010. Springer, Berlin, pp 205–266

Hart S (1973) Values of mixed games. Int J Game Theory 2:69–86

Hernández-Lerma O, Lasserre JB (1996) Discrete-time Markov control processes: basic optimality criteria. Springer, New York

Huang M (2010) Large-population LQG games involving a major player: the Nash certainty equivalence principle. SIAM J Control Optim 48(5):3318–3353

Huang M, Caines PE, Malhamé RP (2003) Individual and mass behaviour in large population stochastic wireless power control problems: centralized and Nash equilibrium solutions. In: Proc 42nd IEEE CDC, Maui, HI, pp 98–103

Huang M, Caines PE, Malhamé RP (2007) Large-population cost-coupled LQG problems with nonuniform agents: individual-mass behavior and decentralized ε-Nash equilibria. IEEE Trans Autom Control 52(9):1560–1571

Huang M, Caines PE, Malhamé RP (2012) Social optima in mean field LQG control: centralized and decentralized strategies. IEEE Trans Autom Control 57(7):1736–1751

Huang M, Malhamé RP, Caines PE (2006) Large population stochastic dynamic games: closed-loop McKean–Vlasov systems and the Nash certainty equivalence principle. Commun Inf Syst 6(3):221–251

Jovanovic B, Rosenthal RW (1988) Anonymous sequential games. J Math Econ 17:77–87

Kizilkale AC, Caines PE (2013) Mean field stochastic adaptive control. IEEE Trans Autom Control 58(4):905–920

Kolokoltsov VN, Li J, Yang W (2011) Mean field games and nonlinear Markov processes. Preprint

Lambson VE (1984) Self-enforcing collusion in large dynamic markets. J Econ Theory 34:282–291

Lasry J-M, Lions P-L (2007) Mean field games. Jpn J Math 2(1):229–260

Levhari D, Srinivasan TN (1969) Optimal savings under uncertainty. Rev Econ Stud 36(2):153–163

Li T, Zhang J-F (2008) Asymptotically optimal decentralized control for large population stochastic multiagent systems. IEEE Trans Autom Control 53(7):1643–1660

Li TY, Yorke JA (1975) Period three implies chaos. Am Math Mon 82(10):985–992

Liu W-F, Turnovsky SJ (2005) Consumption externalities, production externalities, and long-run macroeconomic efficiency. J Public Econ 89:1097–1129

Ma Z, Callaway D, Hiskens I (2013) Decentralized charging control for large populations of plug-in electric vehicles. IEEE Trans Control Syst Technol 21(1):67–78

Mendelssohn R, Sobel MJ (1980) Capital accumulation and the optimization of renewable resource models. J Econ Theory 23:243–260

Merton R (1969) Lifetime portfolio selection under uncertainty: the continuous time case. Rev Econ Stat 51(3):247–257

Nguyen SL, Huang M (2012) Linear-quadratic-Gaussian mixed games with continuum-parameterized minor players. SIAM J Control Optim 50(5):2907–2937

Nourian M, Caines PE (2012) ε-Nash mean field game theory for nonlinear stochastic dynamical systems with mixed agents. In: Proc 51st IEEE CDC, Maui, HI, pp 2090–2095

Nourian M, Caines PE, Malhamé RP, Huang M (2012) Mean field control in leader-follower stochastic multi-agent systems: likelihood ratio based adaptation. IEEE Trans Autom Control 57(11):2801–2816

Olson LJ, Roy S (2006) Theory of stochastic optimal economic growth. In: Dana R-A, Le Van C, Mitra T, Nishimura K (eds) Handbook on optimal growth I. Springer, Berlin, pp 297–335

Olson LJ, Roy S (2000) Dynamic efficiency of conservation of renewable resources under uncertainty. J Econ Theory 95:186–214

Ramsey FP (1928) A mathematical theory of saving. Econ J 38(152):543–559

Saari D (1995) Mathematical complexity of simple economics. Not Am Math Soc 42(2):222–230

Solow RM (1956) A contribution to the theory of economic growth. Q J Econ 70(1):65–94

Stokey NL, Lucas RE Jr., Prescott EC (1989) Recursive methods in economic dynamics. Harvard University Press, Cambridge

Tembine H, Le Boudec J-Y, El-Azouzi R, Altman E (2009) Mean field asymptotics of Markov decision evolutionary games and teams. In: Proc GameNets, Istanbul, Turkey, pp 140–150

Tembine H, Zhu Q, Basar T (2011) Risk-sensitive mean-field stochastic differential games. In: Proc 18th IFAC world congress, Milan, Italy

Wang B-C, Zhang J-F (2012) Distributed control of multi-agent systems with random parameters and a major agent. Automatica 48(9):2093–2106

Weintraub GY, Benkard CL, Van Roy B (2008) Markov perfect industry dynamics with many firms. Econometrica 76(6):1375–1411

Yin H, Mehta PG, Meyn SP, Shanbhag UV (2012) Synchronization of coupled oscillators is a game. IEEE Trans Autom Control 57(4):920–935

Yong J (2011) A linear-quadratic optimal control problem with mean-field stochastic differential equations. Preprint

Acknowledgements

This work was partially supported by Natural Sciences and Engineering Research Council (NSERC) of Canada.


Cite this article

Huang, M. A Mean Field Capital Accumulation Game with HARA Utility. Dyn Games Appl 3, 446–472 (2013). https://doi.org/10.1007/s13235-013-0092-9


DOI: https://doi.org/10.1007/s13235-013-0092-9