Abstract

We explore properties of Cauchy-Stieltjes Kernel (CSK) families that have counterparts in natural exponential families (NEFs). We determine the variance function of finite mixtures of a CSK family with its length-biased family. We also prove that, with a more natural definition of the domain of means, the domain of means scales nicely under affine transformations.


1 Introduction

According to Wesołowski [10], the kernel family generated by a kernel \(k(x,\theta )\) with generating measure \(\nu\) is the set of probability measures

$$\begin{aligned} \left\{ P_{\theta }(dx)=\frac{k(x,\theta )}{L(\theta )}\nu (dx):\ \theta \in \Theta \right\} , \end{aligned}$$

where \(\Theta\) is a set of parameters for which \(L(\theta )=\int k(x,\theta )\nu (dx)\) is finite; \(L(\theta )\) is the normalizing constant and \(\nu\) is the generating measure. The theory of natural exponential families (NEFs) is based on the exponential kernel \(k(x,\theta )=\exp (\theta x)\). The theory of Cauchy-Stieltjes Kernel (CSK) families was introduced more recently; it arises from a procedure analogous to the definition of NEFs, using the Cauchy-Stieltjes kernel \(1/(1-\theta x)\) instead of the exponential kernel. Bryc [1] initiated the study of CSK families for compactly supported probability measures \(\nu\). He showed that such families can be parameterized by the mean and that, under this parametrization, the family (and the measure \(\nu\)) is uniquely determined by the variance function V(m) and the mean \(m_0\) of \(\nu\). In [2], Bryc and Hassairi extend the results of [1] to measures \(\nu\) with unbounded support: they provide a method to determine the domain of means and introduce the “pseudo-variance” function, which has no direct probabilistic interpretation but has properties similar to those of the variance function. They also introduce the notion of reciprocity between two CSK families by defining a relation between the R-transforms of the corresponding generating probability measures. This leads to the description of a class of cubic CSK families (with support bounded from one side) which is related to the quadratic class by a relation of reciprocity. A general description of polynomial variance functions of arbitrary degree is given in [4]; in particular, a complete description of cubic compactly supported CSK families is given there. Other properties and characterizations of CSK families, involving the mean of the reciprocal and orthogonal polynomials, are given in [7] and [6].

The aim of this paper is to continue the study of CSK families. We explore properties of CSK families that have counterparts in NEFs. In the rest of this section, we recall a few features of CSK families. In Section 2, we present some facts about length-biased distributions and determine the variance function of the mixture of a CSK family with its length-biased family. In Section 3, we prove that, with a more natural definition of the domain of means, the latter scales nicely under affine transformations, as in the case of NEFs.

The notations used in what follows are the ones used in [2, 4, 6] and [7].

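Definition of CSK families. Let \(\nu\) be a non-degenerate probability measure with support bounded from above. Then

$$\begin{aligned} M_{\nu }(\theta )=\int \frac{1}{1-\theta x}\nu (dx) \end{aligned}$$

is well defined for all \(\theta \in [0,\theta _+)\) with \(1/\theta _+=\max \{0, \sup \mathrm{supp} (\nu )\}\), and

$$\begin{aligned} \mathcal {K}_+(\nu )=\left\{ P_{(\theta ,\nu )}(dx)=\frac{1}{M_{\nu }(\theta )(1-\theta x)}\nu (dx):\ 0<\theta <\theta _+\right\} \end{aligned}$$

is the one-sided CSK family generated by \(\nu\). Equivalently,

$$\begin{aligned} \mathcal {K}_+(\nu )=\left\{ Q_m(dx):\ m\in (m_0(\nu ),m_+(\nu ))\right\} , \end{aligned}$$

where \(Q_m(dx)\) is the corresponding parametrization by the mean, given by \(Q_m(dx)=f_{\nu }(x,m)\nu (dx),\) with

$$\begin{aligned} f_{\nu }(x,m)=\frac{\mathbb {V}_{\nu }(m)}{\mathbb {V}_{\nu }(m)+m(m-x)}, \end{aligned}$$

which involves the pseudo-variance function \(\mathbb {V}_{\nu }(m)\) introduced below.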

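Domain of means. Let \(k_{\nu }(\theta )=\int x P_{(\theta ,\nu )}(dx)\) denote the mean of \(P_{(\theta ,\nu )}\). Then the map \(\theta \mapsto k_{\nu }(\theta )\) is strictly increasing on \((0,\theta _+)\) and is given by the formula

$$\begin{aligned} k_{\nu }(\theta )=\frac{M_{\nu }(\theta )-1}{\theta M_{\nu }(\theta )}. \end{aligned}$$(1.3)

The image of \((0,\theta _+)\) under \(k_{\nu }\) is called the (one-sided) domain of means of the family \(\mathcal {K}_+(\nu )\); it is denoted by \((m_0(\nu ),m_+(\nu ))\). From [2, Remark 3.3] we read off the following: for a non-degenerate probability measure \(\nu\) with support bounded from above, define

$$\begin{aligned} B=B(\nu )=\max \{0, \sup \mathrm{supp} (\nu )\}=1/\theta _+. \end{aligned}$$(1.4)

Then the one-sided domain of means \((m_0(\nu ),m_+(\nu ))\) of \(\mathcal {K}_+(\nu )\) is determined by the formulas

$$\begin{aligned} m_0(\nu )=\lim _{z\rightarrow \infty }\left( z-\frac{1}{G_{\nu }(z)}\right) \end{aligned}$$(1.5)

and, with \(B=B(\nu )\),

$$\begin{aligned} m_+(\nu )=B-\lim _{z\downarrow B}\frac{1}{G_{\nu }(z)}, \end{aligned}$$(1.6)

where \(G_{\nu }(z)=\int \frac{1}{z-x}\nu (dx)\) is the Cauchy transform of \(\nu\).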
Remark 1.1

One may also define the one-sided CSK family for a generating measure with support bounded from below. Then the one-sided CSK family \({\mathcal {K}}_-(\nu )\) is defined for \(\theta _-<\theta <0\), where \(\theta _-=1/b(\nu )\) if \(b(\nu )<0\) and \(\theta _-=-\infty\) if \(b(\nu )=0\), with \(b=b(\nu )=\min \{0,\inf \mathrm{supp}(\nu )\}\). In this case, the domain of means of \({\mathcal {K}}_-(\nu )\) is the interval \((m_-(\nu ),m_0(\nu ))\) with \(m_-(\nu )=b-1/G_\nu (b)\). If \(\nu\) has compact support, the natural domain of the parameter \(\theta\) of the two-sided CSK family \(\mathcal {K}(\nu )=\mathcal {K}_+(\nu )\cup \mathcal {K}_-(\nu )\cup \{\nu \}\) is \(\theta _-<\theta <\theta _+\).
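The domain-of-means formulas can be illustrated numerically. The following minimal Python sketch (standard library only; the helper names `semicircle_expect`, `M`, `k` and `G` are our own and not notation from [2]) takes for \(\nu\) the standard semicircle law on [-2,2], for which \(B=2\) and \(\theta _+=1/2\), and evaluates \(M_{\nu }\), the mean function \(k_{\nu }(\theta )=(M_{\nu }(\theta )-1)/(\theta M_{\nu }(\theta ))\) and the Cauchy transform \(G_{\nu }\) by quadrature. It is an illustration under these assumptions, not part of the theory.

```python
import math


def semicircle_expect(g, center=0.0, n=4000):
    """Approximate E[g(X)] for X ~ semicircle law on [center-2, center+2].

    Substituting x = center + 2*cos(t) turns sqrt(4-(x-center)^2)/(2*pi) dx
    into (2/pi)*sin(t)^2 dt on (0, pi); the midpoint rule is then very accurate.
    """
    h = math.pi / n
    total = 0.0
    for j in range(n):
        t = (j + 0.5) * h
        total += g(center + 2.0 * math.cos(t)) * math.sin(t) ** 2
    return 2.0 / math.pi * total * h


def M(theta, center=0.0):
    # M_nu(theta) = integral of 1/(1 - theta*x) nu(dx)
    return semicircle_expect(lambda x: 1.0 / (1.0 - theta * x), center)


def k(theta, center=0.0):
    # mean of P_(theta, nu): (M - 1) / (theta * M)
    m = M(theta, center)
    return (m - 1.0) / (theta * m)


def G(z, center=0.0):
    # Cauchy transform G_nu(z) = integral of 1/(z - x) nu(dx), for z >= B
    return semicircle_expect(lambda x: 1.0 / (z - x), center)


B = 2.0                                  # B(nu) = max{0, sup supp nu}, theta_+ = 1/B
thetas = [0.05, 0.1, 0.2, 0.3, 0.4, 0.49]
means = [k(th) for th in thetas]
print(means)                             # increasing, inside (0, 1)
print(B - 1.0 / G(B))                    # m_+(nu) = B - 1/G_nu(B), close to 1
print(k(0.5))                            # k_nu at theta_+ = 1/2, also close to 1
```

The computed means increase with \(\theta\) and approach \(m_+(\nu )=B-1/G_{\nu }(B)=1\) at \(\theta _+\), in agreement with the domain of means (0,1) of the semicircle family used in Example 2.4.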

Variance and pseudo-variance functions. The variance function

$$\begin{aligned} V_{\nu }(m)=\int (x-m)^2Q_m(dx) \end{aligned}$$(1.7)

is a fundamental concept of the theory of CSK families as presented in [1]. Unfortunately, if \(\nu\) does not have a first moment (which is the case for the 1/2-stable law), all measures in the CSK family generated by \(\nu\) have infinite variance. Therefore, the authors of [2] introduced the pseudo-variance function \(\mathbb {V}_{\nu }\). It is easy to describe explicitly when \(m_0(\nu )=\int x d\nu\) is finite: by [2, Proposition 3.2],

$$\begin{aligned} \mathbb {V}_{\nu }(m)=\frac{m}{m-m_0(\nu )}V_{\nu }(m). \end{aligned}$$(1.8)

In particular, \(\mathbb {V}_{\nu }=V_{\nu }\) when \(m_0(\nu )=0\). In general,

$$\begin{aligned} \mathbb {V}_{\nu }(m)=m\left( \frac{1}{\psi _{\nu }(m)}-m\right) , \end{aligned}$$(1.9)

where \(\psi _{\nu }:(m_0(\nu ),m_+(\nu ))\rightarrow (0,\theta _+)\) is the inverse of the function \(k_{\nu }(\cdot )\).

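The generating measure \(\nu\) is determined uniquely by the pseudo-variance function \(\mathbb {V}_{\nu }\) through the following identities (for technical details, see [2]): if

$$\begin{aligned} z=z(m)=m+\frac{\mathbb {V}_{\nu }(m)}{m}, \end{aligned}$$(1.10)

then the Cauchy transform

$$\begin{aligned} G_{\nu }(z)=\int \frac{1}{z-x}\nu (dx) \end{aligned}$$(1.11)

satisfies

$$\begin{aligned} G_{\nu }(z(m))=\frac{m}{\mathbb {V}_{\nu }(m)}. \end{aligned}$$(1.12)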
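These identities can be illustrated on the semicircle law shifted to [0,4] (the measure \(\varphi (\nu )\) of Example 2.4), which has mean \(m_0=2\) and variance function \(V\equiv 1\); by (1.8) its pseudo-variance is \(\mathbb {V}(m)=m/(m-2)\), which differs from \(V\). The minimal sketch below (standard library only; helper names are hypothetical) checks, for a few means \(m\in (2,3)\), that \(z=m+\mathbb {V}(m)/m\) satisfies \(G(z)=m/\mathbb {V}(m)\) and, in line with (1.9), that \(\theta =1/z\) is the parameter whose mean is \(m\).

```python
import math


def shifted_semicircle_expect(g, n=4000):
    """E[g(X)] for X ~ semicircle law on [0, 4] (mean 2, variance 1), midpoint rule."""
    h = math.pi / n
    s = 0.0
    for j in range(n):
        t = (j + 0.5) * h
        s += g(2.0 + 2.0 * math.cos(t)) * math.sin(t) ** 2
    return 2.0 / math.pi * s * h


m0 = 2.0


def V(m):
    return 1.0                            # variance function of the shifted semicircle law


def PV(m):
    return m * V(m) / (m - m0)            # pseudo-variance from the variance function


def mean_at(theta):
    # mean of P_(theta, nu): (M - 1)/(theta*M) with M = E[1/(1 - theta*X)]
    M = shifted_semicircle_expect(lambda x: 1.0 / (1.0 - theta * x))
    return (M - 1.0) / (theta * M)


for m in (2.2, 2.5, 2.9):
    z = m + PV(m) / m                                             # z(m)
    G = shifted_semicircle_expect(lambda x, z=z: 1.0 / (z - x))   # Cauchy transform at z(m)
    print(m, G, m / PV(m), mean_at(1.0 / z))                      # G = m/PV(m), mean = m
```

Using \(\mathbb {V}\) rather than \(V\) in \(z(m)\) matters here: with \(V\equiv 1\) in its place, the relation \(G(z(m))=m/\mathbb {V}(m)\) would fail because \(m_0\ne 0\).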
The effects of affine transformations. Here we collect formulas that describe the effects on the corresponding CSK family of applying an affine transformation to the generating measure. For \(\delta \ne 0\) and \(\gamma \in \mathbb {R}\), let \(f(\nu )\) be the image of \(\nu\) under the affine map \(x\longmapsto (x-\gamma )/\delta\). In other words, if X is a random variable with law \(\nu\) then \(f(\nu )\) is the law of \((X-\gamma )/\delta\), or \(f(\nu )=D_{1/\delta }(\nu \boxplus \delta _{-\gamma })\), where \(D_r(\mu )\) denotes the dilation of measure \(\mu\) by a number \(r\ne 0\), i.e. \(D_r(\mu )(U)=\mu (U/r)\).

The effects of the affine transformation on the corresponding CSK family are as follows:

-

Point \(m_0\) is transformed to \((m_0-\gamma )/\delta\). In particular, if \(\delta <0\), then \(f(\nu )\) has support bounded from below and it generates the left-sided CSK family \(\mathcal {K}_-(f(\nu ))\).

-

For m close enough to \((m_0-\gamma )/\delta\), the pseudo-variance function is

$$\begin{aligned} \mathbb {V}_{f(\nu )}(m)=\frac{m}{\delta (m\delta +\gamma )}\mathbb {V}_\nu (\delta m+\gamma ). \end{aligned}$$(1.13)

In particular, if the variance function exists, then

$$\begin{aligned} V_{f(\nu )}(m)=\frac{1}{\delta ^2}V_\nu (\delta m+\gamma ). \end{aligned}$$

A special case worth noting is the reflection \(f(x)=-x\). If \(\nu\) has support bounded from above and its right-sided CSK family \(\mathcal {K}_+(\nu )\) has domain of means \((m_0,m_+)\) and pseudo-variance function \(\mathbb {V}_\nu (m)\), then \(f(\nu )\) generates the left-sided CSK family \(\mathcal {K}_-(f(\nu ))\) with domain of means \((-m_+,-m_0)\) and pseudo-variance function \(\mathbb {V}_{f(\nu )}(m)=\mathbb {V}_\nu (-m)\).
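Formula (1.13) is consistent with (1.8) and with the transformation rule for the variance function. A minimal numerical sketch, assuming the centered Marchenko-Pastur variance function \(V_\nu (m)=1+am\) of Example 2.5 and the map \(x\mapsto ax+1\) (that is, \(\delta =1/a\), \(\gamma =-1/a\)); the function names are ours:

```python
def pseudo_from_variance(V, m0, m):
    """Pseudo-variance from a variance function V and mean m0, formula (1.8)."""
    return m * V(m) / (m - m0)


a = 0.5                                   # any 0 < a^2 < 1
m0_nu = 0.0


def V_nu(m):
    return 1.0 + a * m                    # centered Marchenko-Pastur law


# f(nu) is the law of (X - gamma)/delta; here (X - gamma)/delta = a*X + 1
delta, gamma = 1.0 / a, -1.0 / a
m0_f = (m0_nu - gamma) / delta            # transformed mean, equal to 1


def V_f(m):
    return V_nu(delta * m + gamma) / delta ** 2   # variance function of f(nu)


def PV_f_113(m):
    # right-hand side of (1.13)
    u = delta * m + gamma
    return m / (delta * u) * pseudo_from_variance(V_nu, m0_nu, u)


for m in (1.1, 1.3, 1.45):
    # three expressions for the pseudo-variance of f(nu) at m
    print(m, PV_f_113(m), pseudo_from_variance(V_f, m0_f, m), a * a * m * m / (m - 1.0))
```

The last column uses \(V_{f(\nu )}(m)=a^2m\) from Example 2.5; all three columns should agree for any m with \(\delta m+\gamma \ne 0\).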

Remark 1.2

The upper end of the domain of means does not satisfy a simple formula under affine transformations. This question is studied in Section 3.

2 Finite mixtures of CSK families

2.1 Motivation

Weighted distributions are widely used in many fields, such as medicine, ecology and reliability, to name a few, for the development of proper statistical models. They are a cornerstone of efficient statistical modeling and prediction when the standard distributions are not appropriate. Suppose X is a non-negative continuous random variable with pdf (probability density function) f(x). The pdf of the weighted random variable \(X_w\) is given by

$$\begin{aligned} f_w(x)=\frac{w(x)f(x)}{m_w}, \end{aligned}$$

where w(x) is a non-negative weight function and \(m_w=\mathbb {E}(w(X))<\infty\). A similar definition can be stated for discrete random variables. For the weight function \(w(x) = x\), the resulting model is called the length-biased (or size-biased) distribution, and its pdf is given by

$$\begin{aligned} f^*(x)=\frac{xf(x)}{m}, \end{aligned}$$

with \(m=\mathbb {E}(X)<\infty .\)

Patil and Rao [8] examined some general models leading to weighted distributions and showed how the weight \(w(x) = x\) occurs naturally in many sampling problems. Size-biasing occurs in many unexpected contexts, such as statistical estimation, renewal theory, infinite divisibility of distributions and number theory. Mixtures of distributions are also of great importance in such areas, since most populations of components are heterogeneous.

On the other hand, most of the probability distributions of classical probability theory belong to NEFs. Seshadri [9] considers finite mixtures of an NEF where one component is a length-biased version of the other component. Since the variance function is the fundamental concept for NEFs, some examples of variance functions of the form

$$\begin{aligned} V(m)=P(m)+Q(m)\sqrt{\Delta (m)} \end{aligned}$$(2.1)

(where P, Q and \(\Delta\) are polynomials in m with the degree of \(P\le 3\), while the degrees of Q and \(\Delta\) are \(\le 2\)) are explained as the result of mixing an NEF with its length-biased family. A general classification of the class of variance functions of the form (2.1) is given in [5].

The theory of CSK families is based on notions from free probability theory, and it provides probability distributions of importance in the literature. In the rest of this section we are interested in the CSK version of finite mixtures where one component is a length-biased version of the other component. We obtain a new form of variance function, given by

$$\begin{aligned} V({\overline{m}})=\frac{P({\overline{m}})+Q({\overline{m}})\sqrt{\Delta ({\overline{m}})}}{T({\overline{m}})+\sqrt{\Delta ({\overline{m}})}}, \end{aligned}$$(2.2)

where Q and T are polynomials in \({\overline{m}}\) of degree 1, while P and \(\Delta\) are polynomials of degree 2.

2.2 Variance function of finite mixtures

In this subsection, we relate the variance function of the mixed CSK family to that of the original CSK family. The following result is used in the proof of Theorem 2.3.

Proposition 2.1

Let \({\mathcal {K}_{+}}(\nu )\) be the one-sided CSK family generated by a compactly supported probability measure \(\nu\) concentrated on the positive real line with mean \(m_0(\nu )\). Consider the probability measure \(\mu (dx)=(\frac{\gamma +x}{\gamma +m_0(\nu )})\nu (dx)\) with \(\gamma \ge 0\). We have that

-

(i)

\(M_{\mu }(\theta )\) exists for \(\theta \in (0,\theta _+)\), and is given by

$$\begin{aligned} M_{\mu }(\theta )=\displaystyle \frac{M_{\nu }(\theta )(\gamma +\frac{1}{\theta })-\frac{1}{\theta }}{\gamma +m_0(\nu )}. \end{aligned}$$

-

(ii)

For all \(\theta \in (0,\theta _+)\),

$$\begin{aligned} k_{\mu }(\theta )=\displaystyle \frac{k_{\nu }(\theta )(1+\gamma \theta +\theta m_0(\nu ))-m_0(\nu )}{\theta (\gamma +k_{\nu }(\theta ))}. \end{aligned}$$(2.3)

-

(iii)

For \(\theta \in (0,\theta _+)\), denote \(P^*_{(\theta ,\nu )}(dx)=\frac{x}{k_{\nu }(\theta )}P_{(\theta ,\nu )}(dx)\); then

$$\begin{aligned} P_{(\theta ,\mu )}(dx)=\frac{\gamma }{\gamma +k_{\nu }(\theta )}P_{(\theta ,\nu )}(dx)+\frac{k_{\nu }(\theta )}{\gamma +k_{\nu }(\theta )}P^*_{(\theta ,\nu )}(dx). \end{aligned}$$

Thus each member of the CSK family \({\mathcal {K}_{+}}(\mu )\) is a mixture of \(P_{(\theta ,\nu )}(dx)\) and \(P^*_{(\theta ,\nu )}(dx)\).

Proof

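(i) The probability measure \(\nu\) is compactly supported and the function \(x \longmapsto \displaystyle \frac{\gamma +x}{\gamma +m_0(\nu )}\) is bounded on the support of \(\nu\), so \(M_{\mu }(\theta )\) exists whenever \(M_{\nu }(\theta )\) is well defined. Moreover, \(\frac{\gamma +x}{1-\theta x}=\left( \gamma +\frac{1}{\theta }\right) \frac{1}{1-\theta x}-\frac{1}{\theta }\), and integrating with respect to \(\nu\) gives the formula in (i).

(ii) Using formula (1.3) and (i), we have that

$$\begin{aligned} k_{\mu }(\theta )=\frac{M_{\mu }(\theta )-1}{\theta M_{\mu }(\theta )}=\frac{M_{\nu }(\theta )(\gamma +\frac{1}{\theta })-\frac{1}{\theta }-\gamma -m_0(\nu )}{\theta \left( M_{\nu }(\theta )(\gamma +\frac{1}{\theta })-\frac{1}{\theta }\right) }. \end{aligned}$$

From the fact that \(M_{\nu }(\theta )=\displaystyle \frac{1}{1-\theta k_{\nu }(\theta )}\), multiplying the numerator and the denominator by \(\theta (1-\theta k_{\nu }(\theta ))\), we obtain

$$\begin{aligned} k_{\mu }(\theta )=\frac{k_{\nu }(\theta )(1+\gamma \theta +\theta m_0(\nu ))-m_0(\nu )}{\theta (\gamma +k_{\nu }(\theta ))}. \end{aligned}$$

(iii) For \(\theta \in (0,\theta _+)\), by (i) and \(M_{\nu }(\theta )=1/(1-\theta k_{\nu }(\theta ))\), we have \((\gamma +m_0(\nu ))M_{\mu }(\theta )/M_{\nu }(\theta )=\gamma +\frac{1}{\theta }-\frac{1-\theta k_{\nu }(\theta )}{\theta }=\gamma +k_{\nu }(\theta )\). Hence

$$\begin{aligned} P_{(\theta ,\mu )}(dx)= & {} \frac{\gamma +x}{(\gamma +m_0(\nu ))M_{\mu }(\theta )(1-\theta x)}\nu (dx)=\frac{\gamma +x}{\gamma +k_{\nu }(\theta )}P_{(\theta ,\nu )}(dx)\\= & {} \frac{\gamma }{\gamma +k_{\nu }(\theta )}P_{(\theta ,\nu )}(dx)+\frac{k_{\nu }(\theta )}{\gamma +k_{\nu }(\theta )}P^*_{(\theta ,\nu )}(dx). \end{aligned}$$

\(\square\)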
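Proposition 2.1 can be checked numerically. The sketch below is a minimal illustration under our own choices: \(\nu\) is the semicircle law on [0,4] (mean 2, \(\theta _+=1/4\)), \(\gamma =1.5\) and \(\theta =0.2\); integrals are computed with a midpoint rule, and the helper names are hypothetical. It compares quadrature values of \(M_{\mu }\), \(k_{\mu }\) and of the second moment of \(P_{(\theta ,\mu )}\) with (i), (2.3) and the mixture in (iii).

```python
import math


def expect(g, n=4000):
    """E[g(X)] for X ~ nu = semicircle law on [0, 4] (mean 2), midpoint rule in x = 2 + 2 cos t."""
    h = math.pi / n
    s = 0.0
    for j in range(n):
        t = (j + 0.5) * h
        s += g(2.0 + 2.0 * math.cos(t)) * math.sin(t) ** 2
    return 2.0 / math.pi * s * h


gamma, theta = 1.5, 0.2                 # gamma >= 0 and 0 < theta < theta_+ = 1/4
m0 = expect(lambda x: x)                # m_0(nu) = 2


def expect_mu(g):
    # integrate against mu(dx) = (gamma + x)/(gamma + m0) nu(dx)
    return expect(lambda x: g(x) * (gamma + x) / (gamma + m0))


def kernel(x):
    return 1.0 / (1.0 - theta * x)      # Cauchy-Stieltjes kernel at the fixed theta


M_nu = expect(kernel)
k_nu = (M_nu - 1.0) / (theta * M_nu)
M_mu = expect_mu(kernel)
k_mu = expect_mu(lambda x: x * kernel(x)) / M_mu                   # mean of P_(theta, mu)

M_mu_i = (M_nu * (gamma + 1.0 / theta) - 1.0 / theta) / (gamma + m0)                 # (i)
k_mu_ii = (k_nu * (1 + gamma * theta + theta * m0) - m0) / (theta * (gamma + k_nu))  # (2.3)

# (iii): second moment under P_(theta, mu) versus the mixture of P_(theta, nu) and P*
lhs = expect_mu(lambda x: x * x * kernel(x)) / M_mu
E_P = expect(lambda x: x * x * kernel(x)) / M_nu
E_Pstar = expect(lambda x: x ** 3 * kernel(x)) / (M_nu * k_nu)
rhs = gamma / (gamma + k_nu) * E_P + k_nu / (gamma + k_nu) * E_Pstar

print(M_mu, M_mu_i)
print(k_mu, k_mu_ii)
print(lhs, rhs)
```

Each printed pair should agree to roughly machine precision; the second moment is only one test function, so (iii) is checked here in a weak sense.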

Remark 2.2

If \(\nu\) is a non-degenerate probability measure with support bounded from one side (say from below), it may happen that \(\int x \nu (dx)\) is infinite, as is the case, for example, for all probability measures generating the cubic CSK families given in [2]. Therefore, we restrict ourselves to compactly supported probability measures \(\nu\), so that \(\mu (dx)=(\frac{\gamma +x}{\gamma +m_0(\nu )})\nu (dx)\) is also a compactly supported probability measure.

Next, we relate the variance function of the CSK family generated by \(\mu\) to the variance function of the CSK family generated by \(\nu\).

Theorem 2.3

Let \({\mathcal {K}_{+}}(\nu )\) be the one-sided CSK family generated by a compactly supported probability measure \(\nu\) concentrated on the positive real line with mean \(m_0(\nu )\), and let \(B=B(\nu )\). Consider the probability measure \(\mu (dx)=(\frac{\gamma +x}{\gamma +m_0(\nu )})\nu (dx)\) with \(\gamma \ge 0\). We have that

-

(i)

For \(\theta \in (0,\theta _{+})\), denote \(m=k_{\nu }(\theta )\) and \({\overline{m}}=k_{\mu }(\theta )\); then

$$\begin{aligned} {\overline{m}}=\frac{V_{\nu }(m)}{\gamma +m}+m. \end{aligned}$$(2.4)

-

(ii)

The one-sided domain of means of the CSK family \({\mathcal {K}_{+}}(\mu )\) is given by

$$\begin{aligned} \left( m_0(\mu ),m_{+}(\mu )\right) =\left( \frac{V_{\nu }(m_0(\nu ))}{\gamma +m_0(\nu )}+m_0(\nu ),\ m_{+}(\nu )+\frac{(m_{+}(\nu )-m_0(\nu ))(B-m_{+}(\nu ))}{\gamma +m_{+}(\nu )}\right) , \end{aligned}$$(2.5)

where \(V_{\nu }(m_0(\nu ))=\lim _{m\rightarrow m_0(\nu )}V_{\nu }(m)\) is the variance of \(\nu\). When \(m_0(\nu )=0\), the right end reduces to \(\frac{(\gamma +B)m_{+}(\nu )}{\gamma +m_{+}(\nu )}\).

-

(iii)

The variance function of the CSK family \({\mathcal {K}_{+}}(\mu )\) is

$$\begin{aligned} V_{\mu }({\overline{m}})=({\overline{m}}-m_0(\mu ))\left( \frac{V_{\nu }(m)}{m-m_0(\nu )}+m-{\overline{m}}\right) . \end{aligned}$$(2.6)

Proof

-

(i)

From (2.3) with \(\theta =\psi _{\nu }(m)\), it is easy to see that

$$\begin{aligned} {\overline{m}}=\frac{m-m_{0}(\nu )+m(\gamma +m_{0}(\nu ))\psi _{\nu }(m)}{(\gamma +m)\psi _{\nu }(m)}. \end{aligned}$$(2.7)

Using (1.9) and (1.8), that is, \(1/\psi _{\nu }(m)=\mathbb {V}_{\nu }(m)/m+m\) and \((m-m_0(\nu ))\mathbb {V}_{\nu }(m)/m=V_{\nu }(m)\), equation (2.7) becomes

$$\begin{aligned} {\overline{m}}= & {} \frac{\frac{m-m_{0}(\nu )}{\psi _{\nu }(m)}+m(\gamma +m_{0}(\nu ))}{\gamma +m}=\frac{(m-m_{0}(\nu ))\left( \frac{\mathbb {V}_{\nu }(m)}{m}+m\right) +m(\gamma +m_{0}(\nu ))}{\gamma +m}\\= & {} \frac{V_{\nu }(m)+m(m-m_{0}(\nu ))+m(\gamma +m_{0}(\nu ))}{\gamma +m}=\frac{V_{\nu }(m)}{\gamma +m}+m. \end{aligned}$$

-

(ii)

Since \(k_{\nu }\) is increasing, \(\theta \rightarrow 0\) (respectively \(\theta \rightarrow \theta _+\)) if and only if \(m\rightarrow m_0(\nu )\) (respectively \(m\rightarrow m_+(\nu )\)). Using the definition of the domain of means and (2.4), we obtain

$$\begin{aligned} m_0(\mu )= & {} \lim _{\theta \longrightarrow 0}k_{\mu }(\theta )=\lim _{m\longrightarrow m_{0}(\nu )}\left( \frac{V_{\nu }(m)}{\gamma +m}+m\right) =\frac{V_{\nu }(m_0(\nu ))}{\gamma +m_0(\nu )}+m_0(\nu ),\\ m_+(\mu )= & {} \lim _{\theta \longrightarrow \theta _+}k_{\mu }(\theta )=\lim _{m\longrightarrow m_{+}(\nu )}\left( \frac{V_{\nu }(m)}{\gamma +m}+m\right) . \end{aligned}$$

By (1.8) and (1.9), \(V_{\nu }(m)=(m-m_0(\nu ))\left( \frac{1}{\psi _{\nu }(m)}-m\right)\), and \(1/\psi _{\nu }(m)\rightarrow 1/\theta _+=B\) as \(m\rightarrow m_{+}(\nu )\). Hence \(V_{\nu }(m)\rightarrow (m_+(\nu )-m_0(\nu ))(B-m_+(\nu ))\), which gives the right end in (2.5). When \(m_0(\nu )=0\), we have \(m_+(\nu )+\frac{m_+(\nu )(B-m_+(\nu ))}{\gamma +m_+(\nu )}=\frac{(\gamma +B)m_+(\nu )}{\gamma +m_+(\nu )}\).

-

(iii)

For \(\theta \in (0,\theta _+)\), we have \(\psi _{\mu }({\overline{m}})=\theta =\psi _{\nu }(m)\). By (1.8) and (1.9) applied to \(\mu\), \(V_{\mu }({\overline{m}})=({\overline{m}}-m_0(\mu ))\left( \frac{1}{\psi _{\mu }({\overline{m}})}-{\overline{m}}\right)\), while, applied to \(\nu\), they give \(\frac{1}{\psi _{\nu }(m)}=\frac{V_{\nu }(m)}{m-m_0(\nu )}+m\). Substituting the latter into the former yields (2.6). \(\square\)

Note that the function \(m\longmapsto {\overline{m}}=k_{\mu }(\psi _{\nu }(m))\) is a bijection from \((m_0(\nu ),m_+(\nu ))\) onto \((m_0(\mu ),m_+(\mu ))\). To obtain \(V_{\mu }({\overline{m}})\) explicitly, we express m in terms of \({\overline{m}}\) from (2.4) and insert it into (2.6).
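Theorem 2.3 can likewise be tested numerically. In the minimal sketch below (our choices: \(\nu\) is the semicircle law on [0,4], with \(m_0(\nu )=2\), \(V_{\nu }\equiv 1\) and \(B=4\), and \(\gamma =1\); helper names are hypothetical), the mean and the variance of \(P_{(\theta ,\mu )}\) are computed by quadrature and compared with (2.4) and (2.6). The last lines evaluate \(k_{\mu }\) at \(\theta _+=1/4\), which gives the right end \(m_+(\mu )\), and compare it with the limit of the right-hand side of (2.4) as \(m\rightarrow m_+(\nu )=3\).

```python
import math


def expect(g, n=4000):
    """E[g(X)] for X ~ nu = semicircle law on [0, 4], midpoint rule in x = 2 + 2 cos t."""
    h = math.pi / n
    s = 0.0
    for j in range(n):
        t = (j + 0.5) * h
        s += g(2.0 + 2.0 * math.cos(t)) * math.sin(t) ** 2
    return 2.0 / math.pi * s * h


gamma, m0_nu = 1.0, 2.0


def V_nu(m):
    return 1.0


def weight(x):
    return (gamma + x) / (gamma + m0_nu)     # density of mu with respect to nu


m0_mu = expect(lambda x: x * weight(x))       # mean of mu, (2*gamma + 5)/(gamma + 2)


def family_stats(theta, w):
    """Mean and variance of P_(theta, rho), where rho(dx) = w(x) nu(dx)."""
    K = lambda x: w(x) / (1.0 - theta * x)
    M = expect(K)
    mean = expect(lambda x: x * K(x)) / M
    var = expect(lambda x: (x - mean) ** 2 * K(x)) / M
    return mean, var


for theta in (0.1, 0.2, 0.24):
    m, _ = family_stats(theta, lambda x: 1.0)               # m = k_nu(theta)
    mbar, var_mu = family_stats(theta, weight)               # mbar = k_mu(theta), V_mu(mbar)
    mbar_24 = V_nu(m) / (gamma + m) + m                                   # (2.4)
    var_26 = (mbar - m0_mu) * (V_nu(m) / (m - m0_nu) + m - mbar)          # (2.6)
    print(theta, mbar, mbar_24, var_mu, var_26)

mbar_plus, _ = family_stats(0.25, weight)                    # k_mu at theta_+ = 1/4
print(mbar_plus, V_nu(3.0) / (gamma + 3.0) + 3.0)            # both approximately 3.25
```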

The following special cases are of interest as they exhibit mixtures of CSK families generated by laws of importance in free probability.

Example 2.4

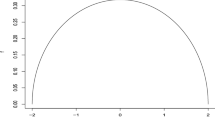

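Consider the (one-sided) CSK family generated by the semicircle law

$$\begin{aligned} \nu (dx)=\frac{1}{2\pi }\sqrt{4-x^2}\mathbf{1 }_{(-2,2)}(x)dx, \end{aligned}$$

with \(m_0(\nu )=0\). The variance function is \(V_{\nu }(m)=\mathbb {V}_{\nu }(m)=1\) and the domain of means is (0, 1). Consider the image \(\varphi (\nu )\) of \(\nu\) under the map \(\varphi :x\longmapsto x+2\). The CSK family generated by

$$\begin{aligned} \varphi (\nu )(dx)=\frac{1}{2\pi }\sqrt{x(4-x)}\mathbf{1 }_{(0,4)}(x)dx, \end{aligned}$$

with \(m_0(\varphi (\nu ))=2\), has variance function \(V_{\varphi (\nu )}(m)=1\). We have that \(B(\varphi (\nu ))=4\) and

$$\begin{aligned} m_+(\varphi (\nu ))=4-\frac{1}{G_{\varphi (\nu )}(4)}=4-\frac{1}{G_{\nu }(2)}=3, \end{aligned}$$

so the domain of means of \({\mathcal {K}_{+}}(\varphi (\nu ))\) is (2, 3). For \(\gamma \ge 0\), the probability measure

$$\begin{aligned} \mu (dx)=\frac{\gamma +x}{\gamma +2}\varphi (\nu )(dx)=\frac{(\gamma +x)\sqrt{x(4-x)}}{2\pi (\gamma +2)}\mathbf{1 }_{(0,4)}(x)dx \end{aligned}$$

generates the CSK family whose domain of means, obtained by letting m tend to 2 and to 3 in (2.4), is

$$\begin{aligned} \left( 2+\frac{1}{\gamma +2},\ 3+\frac{1}{\gamma +3}\right) . \end{aligned}$$

From equation (2.4), solving for m in terms of \({\overline{m}}\), we get

$$\begin{aligned} m^2+(\gamma -{\overline{m}})m+1-\gamma {\overline{m}}=0. \end{aligned}$$(2.8)

Since \({\overline{m}}>m_0(\mu )=\frac{2\gamma +5}{\gamma +2}\) and \(\gamma (2\gamma +5)\ge \gamma +2\) exactly when \(\gamma \ge \sqrt{2}-1\), the quantity \(1-\gamma {\overline{m}}\) is negative for \(\gamma \ge \sqrt{2}-1\). In that case the product of the roots of (2.8) is negative and m must be the positive root of (2.8), that is

$$\begin{aligned} m=\frac{{\overline{m}}-\gamma +\sqrt{\Delta ({\overline{m}})}}{2}, \end{aligned}$$(2.9)

with \(\Delta ({\overline{m}})=({\overline{m}}-\gamma )^2+4(\gamma {\overline{m}}-1)\). On the other hand, from (2.4), we have that \(V_{\varphi (\nu )}(m)=({\overline{m}}-m)(\gamma +m)\). This, with equations (2.6) and (2.9), implies that the variance function of \({\mathcal {K}_{+}}(\mu )\) is

$$\begin{aligned} V_{\mu }({\overline{m}})=\frac{(\gamma +2){\overline{m}}-(2\gamma +5)}{(m-2)(m+\gamma )}, \end{aligned}$$

with m given by (2.9). It can be written in the form

$$\begin{aligned} V_{\mu }({\overline{m}})=\frac{P({\overline{m}})+Q({\overline{m}})\sqrt{\Delta ({\overline{m}})}}{T({\overline{m}})+\sqrt{\Delta ({\overline{m}})}}, \end{aligned}$$(2.10)

with \(P({\overline{m}})=({\overline{m}}+\gamma )[(\gamma +2){\overline{m}}-(2\gamma +5)]\), \(\Delta ({\overline{m}})=({\overline{m}}-\gamma )^2+4(\gamma {\overline{m}}-1)\), \(Q({\overline{m}})=(2\gamma +5)-(\gamma +2){\overline{m}}\) and \(T({\overline{m}})={\overline{m}}-(\gamma +4)\).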
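The closed form can be checked against (2.6) directly. The sketch below (plain Python; the function name is hypothetical) takes a mean \(m\in (2,3)\) of \({\mathcal {K}_{+}}(\varphi (\nu ))\), computes \({\overline{m}}\) from (2.4), and compares (2.6) with \((P+Q\sqrt{\Delta })/(T+\sqrt{\Delta })\) built from the polynomials P, Q, T and \(\Delta\) listed above; it also recovers m from \({\overline{m}}\) through the root \(({\overline{m}}-\gamma +\sqrt{\Delta })/2\).

```python
import math


def check_example_24(gamma, m):
    """Return (2.6), the closed form and the recovered m, at mbar = k_mu(psi_nu(m))."""
    m0_nu, m0_mu = 2.0, 2.0 + 1.0 / (gamma + 2.0)
    mbar = 1.0 / (gamma + m) + m                               # (2.4) with V = 1
    v_26 = (mbar - m0_mu) * (1.0 / (m - m0_nu) + m - mbar)     # (2.6)
    r = math.sqrt((mbar - gamma) ** 2 + 4.0 * (gamma * mbar - 1.0))   # sqrt(Delta)
    P = (mbar + gamma) * ((gamma + 2.0) * mbar - (2.0 * gamma + 5.0))
    Q = (2.0 * gamma + 5.0) - (gamma + 2.0) * mbar
    T = mbar - (gamma + 4.0)
    v_closed = (P + Q * r) / (T + r)
    m_back = (mbar - gamma + r) / 2.0                          # (2.9)
    return v_26, v_closed, m_back


for gamma in (0.5, 1.0, 3.0):
    for m in (2.2, 2.5, 2.9):
        print(gamma, m, check_example_24(gamma, m))
```

Note that \(T+\sqrt{\Delta }=2(m-2)>0\) on the domain of means, so the closed form never divides by zero there.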

Example 2.5

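For \(0<a^2<1\), the (absolutely continuous) centered Marchenko-Pastur distribution

$$\begin{aligned} \nu (dx)=\frac{\sqrt{4-(x-a)^2}}{2\pi (1+ax)}\mathbf{1 }_{(a-2,a+2)}(x)dx \end{aligned}$$

generates the CSK family with variance function \(V_{\nu }(m)=1+am={\mathbb {V}}_{\nu }(m)\) and domain of means (0, 1).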

-

Suppose that \(0<a<1\) and consider the image \(\varphi (\nu )\) of \(\nu\) under the map \(\varphi :x\longmapsto ax+1\). The CSK family generated by

$$\begin{aligned} \varphi (\nu )(dx)=\frac{\sqrt{((a+1)^2-x)(x-(a-1)^2)}}{2\pi a^2 x}\mathbf{1 }_{((a-1)^2,(a+1)^2)}(x)dx, \end{aligned}$$

with \(m_0(\varphi (\nu ))=1\), has variance function \(V_{\varphi (\nu )}(m)=a^2m.\) With \(B(\varphi (\nu ))=(a+1)^2\) and using [1, formula 3.9], the upper end of the domain of means is

$$\begin{aligned} m_+(\varphi (\nu ))= & {} B(\varphi (\nu ))-\lim _{z\longrightarrow B(\varphi (\nu ))}\frac{1}{G_{\varphi (\nu )}(z)}=(a+1)^2-\lim _{z\longrightarrow (a+1)^2}\frac{a}{G_{\nu }((z-1)/a)}\\= & {} (a+1)^2-\frac{a}{G_{\nu }(a+2)}=(a+1)^2-a(a+1)=a+1. \end{aligned}$$

For \(\gamma \ge 0\), the probability measure \(\mu (dx)=(\frac{\gamma +x}{\gamma +1})\varphi (\nu )(dx)\) generates the CSK family with (one-sided) domain of means

$$\begin{aligned} \left( 1+\frac{a^2}{\gamma +1},a+1+ \frac{a^2(a+1)}{\gamma +a+1}\right) . \end{aligned}$$

From equation (2.4), solving for m in terms of \({\overline{m}}\), we get

$$\begin{aligned} m^2+(a^2+\gamma -{\overline{m}})m-\gamma {\overline{m}}=0. \end{aligned}$$(2.11)

For \(\gamma >0\), the product of the roots of (2.11) is negative, so m must be the positive root of (2.11), that is

$$\begin{aligned} m=\frac{{\overline{m}}-\gamma -a^2+\sqrt{\Delta ({\overline{m}})}}{2}, \end{aligned}$$(2.12)

with \(\Delta ({\overline{m}})=({\overline{m}}-\gamma -a^2)^2+4\gamma {\overline{m}}\). The variance function of \({\mathcal {K}_{+}}(\mu )\) is given by (2.10) with \(P({\overline{m}})=[(\gamma +1){\overline{m}}-(\gamma +1+a^2)]({\overline{m}}+\gamma +a^2)\), \(Q({\overline{m}})=-(\gamma +1){\overline{m}}+(\gamma +1+a^2)\), \(T({\overline{m}})={\overline{m}}-(\gamma +a^2+2)\) and \(\Delta ({\overline{m}})=({\overline{m}}-\gamma -a^2)^2+4\gamma {\overline{m}}\).

-

Suppose that \(-1<a<0\) and consider the image \(\varphi (\nu )\) of \(\nu\) under the map \(\varphi :x\longmapsto ax+1\). The CSK family generated by

$$\begin{aligned} \varphi (\nu )(dx)=\frac{\sqrt{((a+1)^2-x)(x-(a-1)^2)}}{2\pi a^2 x}\mathbf{1 }_{((a+1)^2,(a-1)^2)}(x)dx, \end{aligned}$$

with \(m_0(\varphi (\nu ))=1\), has variance function \(V_{\varphi (\nu )}(m)=a^2m.\) With \(B(\varphi (\nu ))=(a-1)^2\), the upper end of the domain of means is \(m_+(\varphi (\nu ))=1-a\). For \(\gamma \ge 0\), the probability measure \(\mu (dx)=(\frac{\gamma +x}{\gamma +1})\varphi (\nu )(dx)\) generates the CSK family with (one-sided) domain of means

$$\begin{aligned} \left( 1+\frac{a^2}{\gamma +1},1-a+ \frac{a^2(1-a)}{\gamma +1-a}\right) . \end{aligned}$$

The variance function of \({\mathcal {K}_{+}}(\mu )\) is given by (2.10) with \(P({\overline{m}})=[(\gamma +1){\overline{m}}-(\gamma +1+a^2)]({\overline{m}}+\gamma +a^2)\), \(Q({\overline{m}})=-(\gamma +1){\overline{m}}+(\gamma +1+a^2)\), \(T({\overline{m}})={\overline{m}}-(\gamma +a^2+2)\) and \(\Delta ({\overline{m}})=({\overline{m}}-\gamma -a^2)^2+4\gamma {\overline{m}}\).
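Both cases of Example 2.5 can be checked with a few lines of Python. The sketch below (hypothetical function name, plain floating point, \(\gamma =1\) chosen arbitrarily) maps m to \({\overline{m}}\) with (2.4), verifies that m solves the quadratic \(m^2+(a^2+\gamma -{\overline{m}})m-\gamma {\overline{m}}=0\) obtained by clearing denominators in (2.4), recovers m from (2.12), and compares (2.6) with \((P+Q\sqrt{\Delta })/(T+\sqrt{\Delta })\) for the P, Q, T and \(\Delta\) given above.

```python
import math


def check_example_25(a, gamma, m):
    """phi(nu) has m0 = 1 and V(m) = a^2 m; mu(dx) = (gamma + x)/(gamma + 1) phi(nu)(dx)."""
    a2 = a * a
    mbar = a2 * m / (gamma + m) + m                                   # (2.4)
    residual = m * m + (a2 + gamma - mbar) * m - gamma * mbar         # quadratic in m
    r = math.sqrt((mbar - gamma - a2) ** 2 + 4.0 * gamma * mbar)      # sqrt(Delta)
    m_back = (mbar - gamma - a2 + r) / 2.0                            # (2.12)
    m0_mu = 1.0 + a2 / (gamma + 1.0)
    v_26 = (mbar - m0_mu) * (a2 * m / (m - 1.0) + m - mbar)           # (2.6)
    P = ((gamma + 1.0) * mbar - (gamma + 1.0 + a2)) * (mbar + gamma + a2)
    Q = -(gamma + 1.0) * mbar + (gamma + 1.0 + a2)
    T = mbar - (gamma + a2 + 2.0)
    v_closed = (P + Q * r) / (T + r)
    return residual, m_back, v_26, v_closed


for a in (0.5, -0.5):                      # the cases 0 < a < 1 and -1 < a < 0
    for m in (1.1, 1.3, 1.45):             # means of K_+(phi(nu)), inside (1, 1 + |a|)
        print(a, m, check_example_25(a, 1.0, m))
```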

Example 2.6

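For \(a^2>1\), the Marchenko-Pastur distribution is

$$\begin{aligned} \nu (dx)=\frac{\sqrt{4-(x-a)^2}}{2\pi (1+ax)}\mathbf{1 }_{(a-2,a+2)}(x)dx+\left( 1-\frac{1}{a^2}\right) \delta _{-1/a}(dx). \end{aligned}$$

It generates the CSK family with variance function \(V_{\nu }(m)=1+am={\mathbb {V}}_{\nu }(m)\).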

-

If \(a>1\), \(B(\nu )=a+2\) and the upper end of the domain of means is \(m_+(\nu )=1\). In this case the domain of means is (0, 1). Consider the image \(\varphi (\nu )\) of \(\nu\) under the map \(\varphi :x\longmapsto ax+1\). The CSK family generated by

$$\begin{aligned} \varphi (\nu )(dx)=\frac{\sqrt{((a+1)^2-x)(x-(a-1)^2)}}{2\pi a^2 x}\mathbf{1 }_{((a-1)^2,(a+1)^2)}(x)dx +(1-1/a^2)\delta _{0}(dx), \end{aligned}$$

with \(m_0(\varphi (\nu ))=1\), has variance function \(V_{\varphi (\nu )}(m)=a^2m.\) With \(B(\varphi (\nu ))=(a+1)^2\), the upper end of the domain of means is \(m_+(\varphi (\nu ))=a+1\). For \(\gamma \ge 0\), the probability measure \(\mu (dx)=(\frac{\gamma +x}{\gamma +1})\varphi (\nu )(dx)\) generates the CSK family with (one-sided) domain of means

$$\begin{aligned} \left( 1+\frac{a^2}{\gamma +1},a+1+ \frac{a^2(a+1)}{\gamma +a+1}\right) . \end{aligned}$$

The variance function of \({\mathcal {K}_{+}}(\mu )\) is given by (2.10) with \(P({\overline{m}})=[(\gamma +1){\overline{m}}-(\gamma +1+a^2)]({\overline{m}}+\gamma +a^2)\), \(Q({\overline{m}})=-(\gamma +1){\overline{m}}+(\gamma +1+a^2)\), \(T({\overline{m}})={\overline{m}}-(\gamma +a^2+2)\) and \(\Delta ({\overline{m}})=({\overline{m}}-\gamma -a^2)^2+4\gamma {\overline{m}}\).

-

If \(a<-1\), \(B(\nu )=-1/a\) and the upper end of the domain of means is \(m_+(\nu )=-1/a\). In this case the domain of means is \((0,-1/a)\). Consider the image \(\varphi (\nu )\) of \(\nu\) under the map \(\varphi :x\longmapsto ax+1\). The CSK family generated by

$$\begin{aligned} \varphi (\nu )(dx)=\frac{\sqrt{((a+1)^2-x)(x-(a-1)^2)}}{2\pi a^2 x}\mathbf{1 }_{((a+1)^2,(a-1)^2)}(x)dx +(1-1/a^2)\delta _{0}(dx), \end{aligned}$$

with \(m_0(\varphi (\nu ))=1\), has variance function \(V_{\varphi (\nu )}(m)=a^2m.\) With \(B(\varphi (\nu ))=(a-1)^2\), the upper end of the domain of means is \(m_+(\varphi (\nu ))=1-a\). For \(\gamma \ge 0\), the probability measure \(\mu (dx)=(\frac{\gamma +x}{\gamma +1})\varphi (\nu )(dx)\) generates the CSK family with (one-sided) domain of means

$$\begin{aligned} \left( 1+\frac{a^2}{\gamma +1},1-a+ \frac{a^2(1-a)}{\gamma -a+1}\right) . \end{aligned}$$

The variance function of \({\mathcal {K}_{+}}(\mu )\) is given by (2.10) with \(P({\overline{m}})=[(\gamma +1){\overline{m}}-(\gamma +1+a^2)]({\overline{m}}+\gamma +a^2)\), \(Q({\overline{m}})=-(\gamma +1){\overline{m}}+(\gamma +1+a^2)\), \(T({\overline{m}})={\overline{m}}-(\gamma +a^2+2)\) and \(\Delta ({\overline{m}})=({\overline{m}}-\gamma -a^2)^2+4\gamma {\overline{m}}\).

Example 2.7

For \(a\ne 0\), the standard free gamma distribution

generates the CSK family with \(m_0(\nu )=0\) and variance function \(V_{\nu }(m)=(1+am)^2={\mathbb {V}}_{\nu }(m)\). Suppose that \(a>0\) and consider the image \(\varphi (\nu )\) of \(\nu\) by the map \(\varphi :x\longmapsto ax+1\). The probability measure

generates the CSK family with \(m_0(\varphi (\nu ))=1\) and variance function \(V_{\varphi (\nu )}(m)=a^2m^2\).

From equation (2.4), solving for m in terms of \({\overline{m}}\), we get

$$\begin{aligned} (a^2+1)m^2+(\gamma -{\overline{m}})m-\gamma {\overline{m}}=0. \end{aligned}$$(2.13)

For \(\gamma >0\), the product of the roots of (2.13) is negative, so m must be the positive root of (2.13), that is

$$\begin{aligned} m=\frac{{\overline{m}}-\gamma +\sqrt{\Delta ({\overline{m}})}}{2(a^2+1)}, \end{aligned}$$(2.14)

with \(\Delta ({\overline{m}})=({\overline{m}}-\gamma )^2+4\gamma (a^2+1){\overline{m}}\).

For \(\gamma \ge 0\), the probability measure \(\mu (dx)=(\frac{\gamma +x}{\gamma +1})\varphi (\nu )(dx)\) generates the CSK family with variance function given by (2.10) with \(P({\overline{m}})=[(\gamma +1){\overline{m}}-(\gamma +1+a^2)]((2a^2+1){\overline{m}}+\gamma )\), \(Q({\overline{m}})=(\gamma +1+a^2)-(\gamma +1){\overline{m}}\), \(T({\overline{m}})={\overline{m}}-\gamma -2(a^2+1)\) and \(\Delta ({\overline{m}})=({\overline{m}}-\gamma )^2+4\gamma (a^2+1){\overline{m}}\).
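The same kind of check applies to Example 2.7. In the sketch below (hypothetical function name; a = 0.7 and \(\gamma =1.5\) chosen arbitrarily), m is mapped to \({\overline{m}}\) by (2.4), then (2.13) and (2.14) are verified, and (2.6) is compared with \((P+Q\sqrt{\Delta })/(T+\sqrt{\Delta })\). The comparison uses \(Q({\overline{m}})=(\gamma +1+a^2)-(\gamma +1){\overline{m}}\), the same sign convention as in Examples 2.4 and 2.5; the identities checked are algebraic, so any m > 1 can be used.

```python
import math


def check_example_27(a, gamma, m):
    """phi(nu) has m0 = 1 and V(m) = a^2 m^2; mu(dx) = (gamma + x)/(gamma + 1) phi(nu)(dx)."""
    a2 = a * a
    b = a2 + 1.0
    mbar = a2 * m * m / (gamma + m) + m                               # (2.4)
    residual = b * m * m + (gamma - mbar) * m - gamma * mbar          # (2.13)
    r = math.sqrt((mbar - gamma) ** 2 + 4.0 * gamma * b * mbar)       # sqrt(Delta)
    m_back = (mbar - gamma + r) / (2.0 * b)                           # (2.14)
    m0_mu = 1.0 + a2 / (gamma + 1.0)
    v_26 = (mbar - m0_mu) * (a2 * m * m / (m - 1.0) + m - mbar)       # (2.6)
    N = (gamma + 1.0) * mbar - (gamma + 1.0 + a2)
    P = N * ((2.0 * a2 + 1.0) * mbar + gamma)
    Q = -N                                                            # (gamma+1+a^2) - (gamma+1) mbar
    T = mbar - gamma - 2.0 * b
    v_closed = (P + Q * r) / (T + r)
    return residual, m_back, v_26, v_closed


for m in (1.2, 1.5, 2.0):
    print(m, check_example_27(0.7, 1.5, m))
```

Here \(T+\sqrt{\Delta }=2(a^2+1)(m-1)\), which is positive for m > 1.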

3 Domain of means under affine transformation

3.1 A more natural definition for the domain of means

It is well known that the domain of means of natural exponential families scales nicely under affine transformations: denote by \(M_F\) the domain of means of an NEF F generated by a probability measure \(\mu\), let \(\varphi\) be an affine transformation, and denote by \(\varphi (F)\) the NEF generated by \(\varphi (\mu )\). Then \(M_{\varphi (F)}=\varphi (M_F)\).

One notes that the lower end of the one-sided domain of means of the family \(\mathcal {K}_+(\nu )\) scales nicely under an affine transformation \(\varphi\), that is, \(m_0(\varphi (\nu ))=\varphi (m_0(\nu ))\), but there is no general formula for the upper end of the natural domain of means of \(\mathcal {K}_+(\nu )\). The following examples show that \(m_+(\varphi (\nu ))\) need not equal \(\varphi (m_+(\nu ))\).

Example 3.1

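Consider the inverse semicircle distribution

$$\begin{aligned} \nu (dx)=\frac{\sqrt{-4x-1}}{2\pi x^2}\mathbf{1 }_{(-\infty ,-1/4)}(x)dx. \end{aligned}$$(3.1)

It generates the CSK family with pseudo-variance function \(\mathbb {V}_{\nu }(m)=m^3\), and the domain of means is \(\mathcal {D}_+(\nu )=(m_0(\nu ),m_+(\nu ))=(-\infty ,-1)\). The image \(\varphi (\nu )\) of \(\nu\) under the map \(x\longmapsto \varphi (x)=x+1/2\) is

$$\begin{aligned} \varphi (\nu )(dx)=\frac{\sqrt{1-4x}}{2\pi (x-1/2)^2}\mathbf{1 }_{(-\infty ,1/4)}(x)dx, \end{aligned}$$(3.2)

and it generates the CSK family with pseudo-variance function \(\mathbb {V}_{\varphi (\nu )}(m)=m(m-1/2)^2.\) Since, by (1.9), \(1/\theta =\mathbb {V}_{\varphi (\nu )}(m)/m+m=m^2+1/4\), we have that

$$\begin{aligned} k_{\varphi (\nu )}(\theta )=-\sqrt{\frac{1}{\theta }-\frac{1}{4}} \end{aligned}$$

for all \(\theta\) in \(\Theta (\varphi (\nu ))=(0,4)\). The domain of means is \((m_0(\varphi (\nu )),m_+(\varphi (\nu )))=(-\infty ,0).\) In this case we have \(m_+(\varphi (\nu ))=0\ne -1/2=\varphi (m_+(\nu )).\)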
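The discrepancy in this example can be traced numerically. The minimal sketch below (helper names hypothetical) uses the mean functions obtained from (1.9): for \(\nu\), \(1/t=\mathbb {V}_{\nu }(m)/m+m=m^2+m\), whose root below \(-1/2\) is \(k_{\nu }(t)=-(1+\sqrt{1+4/t})/2\); for \(\varphi (\nu )\), \(1/\theta =m^2+1/4\). Anticipating Proposition 3.3, it checks \(k_{\varphi (\nu )}(\theta )=k_{\nu }(t)+1/2\) with \(t=\theta /(1-\theta /2)\), and shows that t is negative (below -4) once \(\theta >2\). So the limit \(k_{\varphi (\nu )}(\theta )\rightarrow 0\) as \(\theta \rightarrow 4\) comes from values of the formula for \(k_{\nu }\) outside \((0,\theta _+(\nu ))=(0,\infty )\).

```python
import math


def k_nu(t):
    # root below -1/2 of m^2 + m = 1/t (inverse semicircle law; t > 0 or t < -4)
    return -(1.0 + math.sqrt(1.0 + 4.0 / t)) / 2.0


def k_phi(theta):
    # negative root of m^2 + 1/4 = 1/theta, for theta in (0, 4)
    return -math.sqrt(1.0 / theta - 0.25)


alpha, beta = 1.0, 0.5                    # phi(x) = x + 1/2
for theta in (0.5, 1.0, 1.5, 2.5, 3.0, 3.9, 3.9999):
    t = alpha * theta / (1.0 - theta * beta)   # t < -4 as soon as theta > 2
    print(theta, t, k_phi(theta), alpha * k_nu(t) + beta)

print(k_phi(3.999999))     # near m_+(phi(nu)) = 0, far from phi(m_+(nu)) = -1/2
```

The value \(\theta =2\) is skipped on purpose: there \(1-\theta \beta =0\) and t is not defined.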

Example 3.2

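Consider the (two-sided) CSK family generated by the semicircle law

$$\begin{aligned} \nu (dx)=\frac{1}{2\pi }\sqrt{4-x^2}\mathbf{1 }_{(-2,2)}(x)dx, \end{aligned}$$(3.3)

with variance function \(V_{\nu }(m)=\mathbb {V}_{\nu }(m)=1\) and domain of means \((-1,1)\), that is

$$\begin{aligned} \mathcal {K}(\nu )=\left\{ Q_m(dx)=\frac{\sqrt{4-x^2}}{2\pi (1+m^2-mx)}\mathbf{1 }_{(-2,2)}(x)dx:\ m\in (-1,1)\right\} . \end{aligned}$$

The image \(\varphi (\nu )\) of \(\nu\) under the map \(x\longmapsto \varphi (x)=x-3\) is

$$\begin{aligned} \varphi (\nu )(dx)=\frac{1}{2\pi }\sqrt{4-(x+3)^2}\mathbf{1 }_{(-5,-1)}(x)dx. \end{aligned}$$(3.4)

With \(B(\varphi (\nu ))=\max \{0, \sup \mathrm{supp} (\varphi (\nu ))\}=0\) and \(b(\varphi (\nu ))=-5\), the (two-sided) range of the parameter is \((\theta _-,\theta _+)=(-1/5,+\infty )\). The probability measure \(\varphi (\nu )\) generates the (two-sided) CSK family

$$\begin{aligned} \mathcal {K}(\varphi (\nu ))=\left\{ Q_m(dx)=\frac{\sqrt{4-(x+3)^2}}{2\pi (1+(m+3)(m-x))}\mathbf{1 }_{(-5,-1)}(x)dx:\ m\in \left( -4,-\frac{3+\sqrt{5}}{2}\right) \right\} \end{aligned}$$

with pseudo-variance function \(\mathbb {V}_{\varphi (\nu )}(m)=\frac{m}{m+3}.\) Indeed, since \(G_{\varphi (\nu )}(z)=G_{\nu }(z+3)\) and \(G_{\nu }(z)=(z-\sqrt{z^2-4})/2\) for \(z>2\), we have that

$$\begin{aligned} m_-(\varphi (\nu ))=-5-\frac{1}{G_{\nu }(-2)}=-4,\qquad m_+(\varphi (\nu ))=0-\frac{1}{G_{\nu }(3)}=-\frac{3+\sqrt{5}}{2}. \end{aligned}$$

Hence

$$\begin{aligned} m_+(\varphi (\nu ))=-\frac{3+\sqrt{5}}{2}\ne -2=\varphi (m_+(\nu )). \end{aligned}$$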
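The end points in Example 3.2 can be reproduced by quadrature. The minimal sketch below (standard library only; names hypothetical) computes \(m_{\pm }(\varphi (\nu ))\) from \(m_+=B-1/G(B)\) and \(m_-=b-1/G(b)\) (Remark 1.1) with \(B=0\) and \(b=-5\), and cross-checks \(m_+(\varphi (\nu ))\) against \(k_{\nu }(1/3)-3\), in line with Theorem 3.4 (A)(b)(ii) for \(\alpha =1\), \(\beta =-3\).

```python
import math


def semicircle_expect(g, center=0.0, n=4000):
    """E[g(X)] for X ~ semicircle law on [center-2, center+2], midpoint rule in x = center + 2 cos t."""
    h = math.pi / n
    s = 0.0
    for j in range(n):
        t = (j + 0.5) * h
        s += g(center + 2.0 * math.cos(t)) * math.sin(t) ** 2
    return 2.0 / math.pi * s * h


# phi(nu) is the semicircle law on [-5, -1]: B(phi(nu)) = 0 and b(phi(nu)) = -5
G_at_0 = semicircle_expect(lambda x: 1.0 / (0.0 - x), center=-3.0)
G_at_m5 = semicircle_expect(lambda x: 1.0 / (-5.0 - x), center=-3.0)
m_plus = 0.0 - 1.0 / G_at_0            # m_+(phi(nu)) = B - 1/G(B)
m_minus = -5.0 - 1.0 / G_at_m5         # m_-(phi(nu)) = b - 1/G(b)

# k_nu(1/3) for the standard semicircle law nu, from (M - 1)/(theta M)
th = 1.0 / 3.0
M = semicircle_expect(lambda x: 1.0 / (1.0 - th * x))
k_third = (M - 1.0) / (th * M)

print(m_minus, m_plus, k_third - 3.0)  # about -4, -(3 + sqrt 5)/2, -(3 + sqrt 5)/2
print(1.0 - 3.0)                       # phi(m_+(nu)) = -2, different from m_plus
```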
The purpose of the rest of this subsection is to give a more natural definition of the domain of means of a CSK family, one that behaves nicely under affine transformations. In several references, the range of the parameter \(\theta\) is taken such that \(1/\theta \in (\sup \mathrm{supp} \ \nu , \infty )\cap [0,\infty )\). Indeed, the authors of [2] pushed forward the theory of CSK families by extending the results of [1] to measures \(\nu\) with unbounded support. In that setting, the family is parameterized by a ’one-sided’ range of \(\theta\) of fixed sign: generating measures have support bounded from above, and the CSK families are parameterized by \(\theta >0\), which gives the domain of means \((m_0,m_+)\). An additional range of \(\theta\) can be included only when the support of \(\nu\) is contained in \((-\infty ,0)\). In this case we may also allow \(1/\theta \in (\sup \mathrm{supp} \ \nu , 0)\), so that the extended range of \(\theta\) has the simpler description

$$\begin{aligned} \Theta (\nu )=\left\{ \theta \in \mathbb {R}\setminus \{0\}:\ \frac{1}{\theta }>\sup \mathrm{supp} (\nu )\right\} , \end{aligned}$$

that is, \(\Theta (\nu )\) is the set on which the transform \(M_{\nu }\) exists, and, with \(A= A(\nu )=\sup \mathrm{supp}(\nu )\), it can be written as

$$\begin{aligned} \Theta (\nu )=\left\{ \begin{array}{ll} (0,1/A), & \text{ if } A>0,\\ (0,+\infty ), & \text{ if } A=0,\\ (-\infty ,1/A)\cup (0,+\infty ), & \text{ if } A<0. \end{array}\right. \end{aligned}$$

This extension of the range of the parameter \(\theta\) was considered in [3], where the authors prove that, in the natural parametrization of a CSK family by the mean, one can sometimes extend the family beyond the natural domain of means while preserving the variance function when the variance exists. The extension in [3] proceeds in two separate steps and leads to a nice formula for the upper end of the extended domain of means under powers of free convolution. We recall some facts about the first extension of a CSK family given in [3], which uses the range \(\theta \in \Theta (\nu )\), and we prove that this extension provides a more natural definition of the domain of means, one that behaves nicely under affine transformations. The first extension of the CSK family \(\mathcal {K}_+(\nu )\) is defined as the set of probability measures

$$\begin{aligned} \overline{\mathcal {K}}_+(\nu )=\left\{ P_{(\theta ,\nu )}(dx)=\frac{1}{M_{\nu }(\theta )(1-\theta x)}\nu (dx):\ \theta \in \Theta (\nu )\right\} . \end{aligned}$$

Note that \(\overline{\mathcal {K}}_+(\nu )=\mathcal {K}_+(\nu )\) when \(A(\nu )\ge 0\), because in this case \(\Theta (\nu )=(0,1/A)=(0, 1/B)=(0,\theta _+)\) (with \(1/A=+\infty\) when \(A=0\)). Therefore, the first extension is non-trivial only when \(A< 0\). In that case \(B=0\), \(\theta _+=+\infty\) and

$$\begin{aligned} \Theta (\nu )=\left( -\infty ,1/A\right) \cup (0,+\infty ). \end{aligned}$$

It is known [2] that the mean function \(k_{\nu }(.)\) is strictly increasing on \((0,\theta _+)=(0,+\infty )\) and that \((m_0(\nu ),m_+(\nu ))=k_{\nu }((0,+\infty ))\). It is also proved in [3, Proposition 3.5] that the function \(k_{\nu }(.)\) is strictly increasing on \(\left( -\infty ,1/A\right)\).

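We have that

$$\begin{aligned} \lim _{\theta \rightarrow -\infty }k_{\nu }(\theta )=\lim _{\theta \rightarrow +\infty }k_{\nu }(\theta )=-\frac{1}{G_{\nu }(0)}=m_+(\nu ). \end{aligned}$$

Define \({\mathbf {m}}_+(\nu )=\displaystyle \lim _{\theta \longrightarrow 1/A}k_{\nu }(\theta )\). Then the function \(k_{\nu }(.)\) defines a bijection from \(\left( -\infty ,1/A\right)\) onto its image \((m_+(\nu ),{\mathbf {m}}_+(\nu ))\). We then define the function \(\psi _{\nu }\) on \((m_0(\nu ),m_+(\nu ))\) as the inverse of the restriction of \(k_{\nu }(.)\) to \((0,+\infty )\), and on \((m_+(\nu ),{\mathbf {m}}_+(\nu ))\) as the inverse of the restriction of \(k_{\nu }(.)\) to \(\left( -\infty ,1/A\right)\). This leads to the parametrization of the family \(\overline{\mathcal {K}}_+(\nu )\) by the mean \(m\in (m_0(\nu ),m_+(\nu ))\cup (m_+(\nu ),{\mathbf {m}}_+(\nu ))\). The definition of the pseudo-variance function can also be extended using the function \(\psi _{\nu }\). Following (1.9), we define \(\mathbb {V}_{\nu }(.)\) for \(m\in (m_0(\nu ),m_+(\nu ))\cup (m_+(\nu ),{\mathbf {m}}_+(\nu ))\) as

$$\begin{aligned} \mathbb {V}_{\nu }(m)=m\left( \frac{1}{\psi _{\nu }(m)}-m\right) . \end{aligned}$$

We have that

$$\begin{aligned} \lim _{m\rightarrow m_+(\nu )}\mathbb {V}_{\nu }(m)=\lim _{\theta \rightarrow \pm \infty }k_{\nu }(\theta )\left( \frac{1}{\theta }-k_{\nu }(\theta )\right) =-(m_+(\nu ))^2, \end{aligned}$$

so we define \(\mathbb {V}_{\nu }(.)\) at \(m_+(\nu )\) by \(\mathbb {V}_{\nu }(m_+(\nu ))=-(m_+(\nu ))^2\). Note that

$$\begin{aligned} f_{\nu }(x,m_+(\nu ))=\frac{-(m_+(\nu ))^2}{-(m_+(\nu ))^2+m_+(\nu )(m_+(\nu )-x)}=\frac{m_+(\nu )}{x} \end{aligned}$$

is well defined for \(A(\nu )< 0\), since then \(x\le A<0\) on the support of \(\nu\). The explicit parametrization by the mean of the enlarged family is then

$$\begin{aligned} \overline{\mathcal {K}}_+(\nu )=\left\{ Q_m(dx)=f_{\nu }(x,m)\nu (dx):\ m\in (m_0(\nu ),{\mathbf {m}}_+(\nu ))\right\} . \end{aligned}$$

Note that the function \(m\longmapsto \psi _{\nu }(m)=\frac{1}{\mathbb {V}_{\nu }(m)/m+m}\) is increasing and negative on \((m_+(\nu ),{\mathbf {m}}_+(\nu ))\), so the function \(m\longmapsto \mathbb {V}_{\nu }(m)/m+m\) is decreasing on \((m_+(\nu ),{\mathbf {m}}_+(\nu ))\) and

$$\begin{aligned} \lim _{m\rightarrow {\mathbf {m}}_+(\nu )}\left( \frac{\mathbb {V}_{\nu }(m)}{m}+m\right) =A. \end{aligned}$$

This implies, by (1.10) to (1.12), that

$$\begin{aligned} {\mathbf {m}}_+(\nu )=A-\lim _{z\downarrow A}\frac{1}{G_{\nu }(z)}. \end{aligned}$$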
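It is worth mentioning that if \(\nu\) is the inverse semicircle distribution (3.1) of Example 3.1 and \(\varphi (\nu )\), given by (3.2), is its image under \(x\longmapsto \varphi (x)=x+1/2\), then \(\Theta (\nu )=(-\infty ,-4)\cup (0,+\infty )\) and

$$\begin{aligned} k_{\nu }(\theta )=-\frac{1+\sqrt{1+4/\theta }}{2},\quad \theta \in \Theta (\nu ), \end{aligned}$$

with \({\mathbf {m}}_+(\nu ) =-1/2\). We have that

$$\begin{aligned} m_+(\varphi (\nu ))=0=\varphi ({\mathbf {m}}_+(\nu )). \end{aligned}$$

Also, if \(\nu\) is the semicircle distribution (3.3) of Example 3.2 and \(\varphi (\nu )\), given by (3.4), is its image under \(x\longmapsto \varphi (x)=x-3\), then \({\mathbf {m}}_+(\nu )=m_+(\nu )=1\) and \(\Theta (\varphi (\nu ))=(-\infty ,-1)\cup (-1/5,+\infty )\). The (two-sided, extended) CSK family generated by \(\varphi (\nu )\) is

$$\begin{aligned} \overline{\mathcal {K}}(\varphi (\nu ))=\left\{ Q_m(dx)=\frac{\sqrt{4-(x+3)^2}}{2\pi (1+(m+3)(m-x))}\mathbf{1 }_{(-5,-1)}(x)dx:\ m\in (-4,-2)\right\} \end{aligned}$$

with

$$\begin{aligned} {\mathbf {m}}_+(\varphi (\nu ))=-2=\varphi ({\mathbf {m}}_+(\nu )). \end{aligned}$$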
3.2 Domain of means under affine transformation

Given a probability measure \(\nu\) with support bounded from above and an affine transformation \(\varphi\), the following result links the mean function of the CSK family generated by \(\varphi (\nu )\) to the mean function of the CSK family generated by \(\nu\).

Proposition 3.3

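Let \(\nu\) be a non-degenerate probability measure with support bounded from above and let \(\varphi (\nu )\) be the image of \(\nu\) under the map \(\varphi :x\longmapsto \alpha x+\beta\), \(\alpha \ne 0\). If \(\Theta (\nu )\) and \(\Theta (\varphi (\nu ))\) are respectively the sets on which the transforms \(M_{\nu }\) and \(M_{\varphi (\nu )}\) exist, and \(h:x \longmapsto 1/x\), then

$$\begin{aligned} h(\Theta (\varphi (\nu )))=\varphi (h(\Theta (\nu ))), \end{aligned}$$(3.5)

and for \(\theta \in \Theta (\varphi (\nu ))\),

$$\begin{aligned} k_{\varphi (\nu )}(\theta )=\left\{ \begin{array}{ll} \alpha k_{\nu }\left( \frac{\alpha \theta }{1-\theta \beta }\right) +\beta , & \text{ if } 1-\theta \beta \ne 0,\\ \beta -\frac{\alpha }{G_{\nu }(0)}, & \text{ if } 1-\theta \beta =0. \end{array}\right. \end{aligned}$$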
Proof

If \(1-\theta \beta =0\), then

If \(1-\theta \beta \ne 0\), then

We have that

That is

which is nothing but (3.5).

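It is clear that \(h(\Theta (\nu ))\) is the complement of the convex support of \(\nu\). Suppose that \(1-\theta \beta =0\) for some \(\theta \in \Theta (\varphi (\nu ))\), that is, \(\varphi ^{-1}(1/\theta )=0\). Since \(1/\theta\) lies outside the convex support of \(\varphi (\nu )\), and \(\varphi\) maps the convex support of \(\nu\) onto that of \(\varphi (\nu )\), we get \(0\in h(\Theta (\nu ))\). Hence \(G_{\nu }(0)\) is well defined.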

If \(1-\theta \beta =0\), then

If \(1-\theta \beta \ne 0\), then

Given that \(M_{\nu }(t)=\displaystyle \frac{1}{1-tk_{\nu }(t)}\), we obtain

\(\square\)
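The identities behind Proposition 3.3 can be checked on a finitely supported measure. Write \(t=\alpha \theta /(1-\theta \beta )\). The factorization \(1-\theta (\alpha x+\beta )=(1-\theta \beta )(1-tx)\) gives \(M_{\varphi (\nu )}(\theta )=M_{\nu }(t)/(1-\theta \beta )\) when \(1-\theta \beta \ne 0\). Combining this with \(M_{\nu }(t)=1/(1-tk_{\nu }(t))\) yields \(k_{\varphi (\nu )}(\theta )=\varphi (k_{\nu }(t))\), which is the relation used in the proof of Theorem 3.4. When \(1-\theta \beta =0\), one gets \(M_{\varphi (\nu )}(1/\beta )=(\beta /\alpha )G_{\nu }(0)\). These formulas are derived here from \(M_{\nu }(\theta )=\int \nu (dx)/(1-\theta x)\) and \(G_{\nu }(z)=\int \nu (dx)/(z-x)\), and they may be arranged differently from the displayed statement. The atoms, weights, \(\alpha\) and \(\beta\) below are hypothetical.

```python
# Hypothetical finitely supported nu; sup supp(nu) = -0.5 < 0, so 0 lies outside
# the convex support of nu and G_nu(0) is finite.
XS = [-3.0, -2.0, -1.0, -0.5]
WS = [0.1, 0.2, 0.3, 0.4]
ALPHA, BETA = 2.0, 0.5


def M(theta, atoms, weights=WS):
    """M(theta) = sum_i w_i / (1 - theta x_i)."""
    return sum(w / (1.0 - theta * x) for x, w in zip(atoms, weights))


def k(theta, atoms, weights=WS):
    """Mean function k(theta) = (M(theta) - 1) / (theta M(theta)), theta != 0."""
    m = M(theta, atoms, weights)
    return (m - 1.0) / (theta * m)


def G(z, atoms, weights=WS):
    """Cauchy transform G(z) = sum_i w_i / (z - x_i)."""
    return sum(w / (z - x) for x, w in zip(atoms, weights))


def phi(x):
    return ALPHA * x + BETA


YS = [phi(x) for x in XS]  # atoms of phi(nu), carrying the same weights WS


def gaps(theta):
    """Absolute errors in the M- and k-relations at a theta with 1 - theta*BETA != 0."""
    t = ALPHA * theta / (1.0 - theta * BETA)
    err_M = abs(M(theta, YS) - M(t, XS) / (1.0 - theta * BETA))
    err_k = abs(k(theta, YS) - phi(k(t, XS)))
    return err_M, err_k


# The convex support of phi(nu) is [-5.5, -0.5], so Theta(phi(nu)) = (-inf, -2) U (-1/5.5, +inf).
for theta in (0.3, 1.0, 5.0, -0.1, -3.0):
    print(theta, gaps(theta))

# The case 1 - theta*BETA = 0, i.e. theta = 1/BETA = 2:
print(M(1.0 / BETA, YS), BETA / ALPHA * G(0.0, XS))
```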

The following result shows that the domain of means of the extended CSK family behaves well under affine transformations, in a manner analogous to the domain of means of NEFs: its endpoint \({\mathbf {m}}_+(\nu )\) is simply mapped to \(\varphi ({\mathbf {m}}_+(\nu ))\). We also describe how \(m_+(\nu )\) is transformed under an affine transformation.

Theorem 3.4

Consider a probability measure \(\nu\) with support bounded from above and let \(\varphi (\nu )\) be the image of \(\nu\) by the map \(\varphi :x\longmapsto \alpha x+\beta\), where \(\alpha \in \mathbb {R}\backslash \{0\}\) and \(\beta \in \mathbb {R}\).

(A) Suppose that \(\alpha >0\). The domain of means of \(\overline{{\mathcal {K}}}_+(\varphi (\nu ))\) is \((m_0(\varphi (\nu )), {\mathbf {m}}_+(\varphi (\nu )))\) with \({\mathbf {m}}_+(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu ))\). Furthermore:

(a) If \(\beta =0\), then \(m_+(\varphi (\nu ))=\varphi (m_+(\nu ))\).

(b) If \(\beta \ne 0\), we have that:

(i) If \(A\ge 0\) and \(\alpha A+\beta \ge 0\), then \(m_+(\varphi (\nu ))=\varphi (m_+(\nu ))\).

(ii) If \(A\ge 0\) and \(\alpha A+\beta <0\), then \(m_+(\varphi (\nu ))=\alpha k_{\nu }(-\alpha /\beta )+\beta\).

(iii) If \(A<0\) and \(\alpha A+\beta \ge 0\), then \(m_+(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu ))\).

(iv) If \(A<0\) and \(\alpha A+\beta <0\), then \(m_+(\varphi (\nu ))=\alpha k_{\nu }(-\alpha /\beta )+\beta\).

(B) Suppose that \(\alpha <0\). Then the probability measure \(\varphi (\nu )\) has support bounded from below, so we are dealing with a left-sided CSK family. The domain of means of \({\overline{\mathcal {K}}}_-(\varphi (\nu ))\) is \(({\mathbf {m}}_-(\varphi (\nu )),m_0(\varphi (\nu )))\) with \({\mathbf {m}}_-(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu )).\) Furthermore,

(a) If \(\beta =0\), then \(m_-(\varphi (\nu ))=\varphi (m_+(\nu ))\).

(b) If \(\beta \ne 0\), we have that:

(i) If \(A\ge 0\) and \(\alpha A+\beta \ge 0\), then \(m_-(\varphi (\nu ))=\alpha k_{\nu }(-\alpha /\beta )+\beta\).

(ii) If \(A\ge 0\) and \(\alpha A+\beta <0\), then \(m_-(\varphi (\nu ))=\varphi (m_+(\nu ))\).

(iii) If \(A<0\) and \(\alpha A+\beta \ge 0\), then \(m_-(\varphi (\nu ))=\alpha k_{\nu }(-\alpha /\beta )+\beta .\)

(iv) If \(A<0\) and \(\alpha A+\beta <0\), then \(m_-(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu )).\)

Proof

(A) Suppose that \(\alpha >0\). Then the probability measure \(\varphi (\nu )\) has support bounded from above, and the one-sided domain of means of the extended CSK family \({\overline{\mathcal {K}}}_+(\varphi (\nu ))\) is \((m_0(\varphi (\nu )), {\mathbf {m}}_+(\varphi (\nu )))\) with

and

Furthermore,

(a) If \(\varphi (x)=\alpha x\), then \(m_+(\varphi (\nu ))=\varphi (m_+(\nu ))\).

(b) If \(\varphi (x)= \alpha x+\beta\), with \(\beta \ne 0\), we have that:

(i) If \(A\ge 0\) and \(\alpha A+\beta \ge 0\), then \(m_+(\nu )={\mathbf {m}}_+(\nu )\) and \(m_+(\varphi (\nu ))={\mathbf {m}}_+(\varphi (\nu )).\) In this case we have

$$\begin{aligned} m_+(\varphi (\nu ))={\mathbf {m}}_+(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu ))=\varphi (m_+(\nu )). \end{aligned}$$

(ii) If \(A\ge 0\) and \(\alpha A+\beta <0\), then \(\theta _+(\varphi (\nu ))=+\infty\) and

$$\begin{aligned} m_+(\varphi (\nu ))=\lim _{\theta \longrightarrow +\infty }k_{\varphi (\nu )}(\theta )=\lim _{\theta \longrightarrow +\infty }\varphi \left( k_{\nu }\left( \frac{\alpha \theta }{1-\theta \beta }\right) \right) =\varphi (k_{\nu }(-\alpha /\beta ))=\alpha k_{\nu }(-\alpha /\beta )+\beta . \end{aligned}$$

(iii) If \(A<0\) and \(\alpha A+\beta \ge 0\), then \(m_+(\varphi (\nu ))={\mathbf {m}}_+(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu )).\)

(iv) If \(A<0\) and \(\alpha A+\beta <0\), then \(\theta _+(\varphi (\nu ))=+\infty\) and

$$\begin{aligned} m_+(\varphi (\nu ))=\lim _{\theta \longrightarrow +\infty }k_{\varphi (\nu )}(\theta )=\lim _{\theta \longrightarrow +\infty }\varphi \left( k_{\nu }\left( \frac{\alpha \theta }{1-\theta \beta }\right) \right) =\varphi (k_{\nu }(-\alpha /\beta ))=\alpha k_{\nu }(-\alpha /\beta )+\beta . \end{aligned}$$

(B) Suppose that \(\alpha <0\). Then the probability measure \(\varphi (\nu )\) has support bounded from below, and the one-sided domain of means of the extended CSK family \({\overline{\mathcal {K}}}_-(\varphi (\nu ))\) is \(({\mathbf {m}}_-(\varphi (\nu )),m_0(\varphi (\nu )))\) with

$$\begin{aligned} {\mathbf {m}}_-(\varphi (\nu ))=\lim _{\theta \longrightarrow \frac{1}{A(\varphi (\nu ))}}k_{\varphi (\nu )}(\theta ) = \lim _{\theta \longrightarrow \frac{1}{\alpha A(\nu )+\beta }}\varphi \left( k_{\nu }\left( \frac{\theta \alpha }{1-\theta \beta }\right) \right) = \lim _{t\longrightarrow \frac{1}{A(\nu )}}\varphi (k_{\nu }(t))=\varphi ({\mathbf {m}}_+(\nu )). \end{aligned}$$

Furthermore,

(a) If \(\varphi (x)=\alpha x\), then \(m_-(\varphi (\nu ))=\varphi (m_+(\nu ))\).

(b) If \(\varphi (x)= \alpha x+\beta\), with \(\beta \ne 0\), we have that:

(i) If \(A\ge 0\) and \(\alpha A+\beta \ge 0\), then \(\theta _-(\varphi (\nu ))=-\infty\) and

$$\begin{aligned} m_-(\varphi (\nu ))=\lim _{\theta \longrightarrow -\infty }k_{\varphi (\nu )}(\theta )=\lim _{\theta \longrightarrow -\infty }\varphi \left( k_{\nu }\left( \frac{\alpha \theta }{1-\theta \beta }\right) \right) =\varphi (k_{\nu }(-\alpha /\beta ))=\alpha k_{\nu }(-\alpha /\beta )+\beta . \end{aligned}$$

(ii) If \(A\ge 0\) and \(\alpha A+\beta <0\), then \(m_-(\varphi (\nu ))={\mathbf {m}}_-(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu ))=\varphi (m_+(\nu ))\).

(iii) If \(A<0\) and \(\alpha A+\beta \ge 0\), then \(\theta _-(\varphi (\nu ))=-\infty\), and the same calculation as in (i) gives \(m_-(\varphi (\nu ))=\alpha k_{\nu }(-\alpha /\beta )+\beta .\)

(iv) If \(A<0\) and \(\alpha A+\beta <0\), then \(m_-(\varphi (\nu ))={\mathbf {m}}_-(\varphi (\nu ))=\varphi ({\mathbf {m}}_+(\nu ))\).

\(\square\)
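The case analysis of Theorem 3.4 can be condensed into a small function. In this sketch, \(A\) is read as the upper end of the support of \(\nu\), so that the finite end of the support of \(\varphi (\nu )\) is \(\alpha A+\beta\), as in the proof of (B). Cases (i) and (iii) of (A)(b) then both give \(\varphi ({\mathbf {m}}_+(\nu ))\), because \(m_+(\nu )={\mathbf {m}}_+(\nu )\) when \(A\ge 0\). The result is cross-checked against an independent evaluation of \(k=u-1/G_{\varphi (\nu )}(u)\), with \(u=1/\theta\), at the end \(B\) of the \(u\)-range. Here \(B=\max (0,\alpha A+\beta )\) for \(\alpha >0\) and \(B=\min (0,\alpha A+\beta )\) for \(\alpha <0\). This formula for \(k\) follows from \(M_{\nu }(\theta )=uG_{\nu }(u)\) and \(M_{\nu }(\theta )=1/(1-\theta k_{\nu }(\theta ))\). The two test measures (the standard semicircle law and the inverse semicircle law, with closed-form \(k_{\nu }\) and \(G_{\nu }\)) are assumptions for illustration, and all helper names are hypothetical.

```python
import math


def boundary_mean(alpha, beta, A, k_nu, m_plus, m_plus_bold):
    """Case table of Theorem 3.4: m_+(phi(nu)) if alpha > 0, m_-(phi(nu)) if alpha < 0.

    A is the upper end of supp(nu), k_nu its (extended) mean function,
    m_plus = m_+(nu) and m_plus_bold = bold m_+(nu).
    """
    def phi(x):
        return alpha * x + beta

    if beta == 0:
        return phi(m_plus)                               # (A)(a) and (B)(a)
    edge = alpha * A + beta                              # finite end of supp phi(nu)
    use_k = (edge < 0) if alpha > 0 else (edge >= 0)
    if use_k:                                            # (A)(b)(ii),(iv); (B)(b)(i),(iii)
        return alpha * k_nu(-alpha / beta) + beta
    return phi(m_plus if A >= 0 else m_plus_bold)        # remaining cases of (b)


def boundary_mean_via_G(alpha, beta, A, G_nu):
    """Independent check: k(u) = u - 1/G(u), u = 1/theta, at the end B of the u-range."""
    edge = alpha * A + beta
    B = max(0.0, edge) if alpha > 0 else min(0.0, edge)
    G_phi = G_nu((B - beta) / alpha) / alpha             # G_{phi(nu)}(B)
    return B - 1.0 / G_phi


# Assumed example 1: standard semicircle law on [-2, 2]; A = 2, m_+ = bold m_+ = 1.
def k_semi(theta):
    return (1.0 - math.sqrt(1.0 - 4.0 * theta * theta)) / (2.0 * theta)


def G_semi(z):
    return (z - math.copysign(math.sqrt(z * z - 4.0), z)) / 2.0


# Assumed example 2: inverse semicircle law on (-inf, -1/4]; A = -1/4, m_+ = -1, bold m_+ = -1/2.
def k_inv(theta):
    return (-1.0 - math.sqrt(1.0 + 4.0 / theta)) / 2.0


def G_inv(u):
    return 2.0 / (2.0 * u + 1.0 + math.sqrt(1.0 + 4.0 * u))


SEMI = (2.0, k_semi, 1.0, 1.0, G_semi)
INV = (-0.25, k_inv, -1.0, -0.5, G_inv)
CASES = [
    (SEMI, (1.0, -3.0)), (SEMI, (1.0, 1.0)), (SEMI, (-1.0, 1.0)), (SEMI, (-1.0, 3.0)),
    (INV, (1.0, 0.5)), (INV, (1.0, 0.1)), (INV, (2.0, 0.0)),
    (INV, (-1.0, 0.0)), (INV, (-1.0, -1.0)), (INV, (-1.0, 1.0)),
]

for (A, k_nu, mp, mpb, G_nu), (alpha, beta) in CASES:
    print(alpha, beta, boundary_mean(alpha, beta, A, k_nu, mp, mpb),
          boundary_mean_via_G(alpha, beta, A, G_nu))
```

The cases are evaluated lazily: \(k_{\nu }(-\alpha /\beta )\) is computed only in the branches that use it, because \(-\alpha /\beta\) can fall outside the domain of \(k_{\nu }\) in the other branches.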

References

Bryc, W. (2009). Free exponential families as kernel families. Demonstr. Math., XLII(3):657–672. arXiv:math/0601273.

Bryc, W. and Hassairi, A. (2011). One-sided Cauchy-Stieltjes kernel families. J. Theoret. Probab., 24(2):577–594. arXiv:0906.4073.

Bryc, W., Fakhfakh, R. and Hassairi, A. (2014). On Cauchy-Stieltjes kernel families. J. Multivariate Anal., 124:295–312.

Bryc, W., Fakhfakh, R. and Młotkowski, W. (2019). Cauchy-Stieltjes families with polynomial variance functions and generalized orthogonality. Probab. Math. Statist., 39(2):237–258. https://doi.org/10.19195/0208-4147.39.2.1.

Kokonendji, C. C. (1994). Exponential families with variance functions in \(\sqrt{\Delta }P(\sqrt{\Delta })\): Seshadri’s class. Ann. Statist., 18:1–37.

Fakhfakh, R. (2018). Characterization of quadratic Cauchy-Stieltjes kernel families based on the orthogonality of polynomials. J. Math. Anal. Appl., 459:577–589.

Fakhfakh, R. (2017). The mean of the reciprocal in a Cauchy-Stieltjes family. Statist. Probab. Lett., 129:1–11.

Patil, G. P. and Rao, C. R. (1978). Weighted distributions and size-biased sampling with applications to wildlife populations and human families. Biometrics, 34:179–189.

Seshadri, V. (1991). Finite mixtures of natural exponential families. Canad. J. Statist., 19(4):437–445.

Wesołowski, J. (1999). Kernels families. Unpublished manuscript.


Additional information

Communicated by Rahul Roy, Ph. D.


Cite this article

Fakhfakh, R. On some properties of Cauchy-Stieltjes Kernel families. Indian J Pure Appl Math 52, 1186–1200 (2021). https://doi.org/10.1007/s13226-021-00020-z
