Abstract

Optimization techniques can be seen as part of nature's way of adjusting to the changes happening around it. Several factors determine the optimum working conditions of a process or the production of any value-added product, and a model is accepted for a particular process only after its suitability has been verified statistically and analytically. Optimization techniques can be divided into statistical, nature-inspired, and artificial neural network (ANN) categories, each with its own benefits and uses in particular cases. A brief introduction to the subcategories of these techniques and their computational effectiveness is given. The main focus of the study is the applicability of these techniques to operations such as submerged fermentation (SmF) and solid state fermentation (SSF), their use in producing secondary metabolites, and their usefulness at laboratory and industrial scale. Primary studies determining the enzyme activity of different microorganisms, such as bacteria, fungi, and yeast, are also discussed. l-Asparaginase, one of the most commonly used drugs in the treatment of acute lymphoblastic leukemia (ALL), is taken as an example, and the models used in its production by SmF and SSF are briefly discussed to illustrate the optimization techniques covered. This discussion is expected to help in selecting the proper technique for a given optimization problem and in making such processes less time-consuming, with better output.



Introduction

Optimization methods first came into existence when Euclid, around 300 BC, showed that the shortest distance between two points is the length of the straight line joining them (Agrawal and Sharma 2015). It was later shown that, among all rectangles with a given perimeter, the square has the largest area. As early as 100 BC, Heron showed that light reflected from a mirror follows the shortest possible path (Agrawal and Sharma 2016). Optimization techniques are gaining acceptance in all fields of study because they make work much easier than conventional trial-and-error methods. They have become a cornerstone of many fields, and dedicated algorithms and software have been developed to extend their applicability (Williams et al. 2004; Xu et al. 2012). The basic methodology of any optimization technique is to examine the structure and sensitivity of the problem and arrive at the best way to compute the objective function subject to the constraints that come with it (Fasham et al. 1995; Li et al. 2001; Yam et al. 2010). Linear and nonlinear constraints in an optimization problem can be handled by the penalty method, in which one or more terms are added to the objective function so that it becomes less optimal as the solution violates (or, in barrier variants, approaches) a constraint (Ba and Boyaci 2007; Mandal et al. 2015).
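As a minimal sketch of the penalty idea, assuming a hypothetical two-variable problem chosen only for illustration, the Python code below minimizes f(x, y) = (x - 3)^2 + (y - 2)^2 subject to x + y <= 4 by adding a quadratic penalty mu * max(0, x + y - 4)^2 and increasing mu step by step. Plain gradient descent is used for simplicity; it is not presented as the method of the cited studies.

```python
def objective(x, y):
    """Hypothetical objective: squared distance from the unconstrained optimum (3, 2)."""
    return (x - 3.0) ** 2 + (y - 2.0) ** 2


def constraint(x, y):
    """Hypothetical inequality constraint g(x, y) = x + y - 4, feasible when <= 0."""
    return x + y - 4.0


def penalized_gradient(x, y, mu):
    """Gradient of f(x, y) + mu * max(0, g(x, y))**2."""
    violation = max(0.0, constraint(x, y))
    gx = 2.0 * (x - 3.0) + 2.0 * mu * violation
    gy = 2.0 * (y - 2.0) + 2.0 * mu * violation
    return gx, gy


def solve_with_penalty(mus=(1.0, 10.0, 100.0), steps=5000):
    """Gradient descent on the penalized objective, warm-started as mu increases."""
    x, y = 0.0, 0.0
    for mu in mus:
        # Step size 1/L, where L = 2 + 4*mu bounds the curvature of the penalized function
        lr = 1.0 / (2.0 + 4.0 * mu)
        for _ in range(steps):
            gx, gy = penalized_gradient(x, y, mu)
            x -= lr * gx
            y -= lr * gy
    return x, y


x_opt, y_opt = solve_with_penalty()
print(f"x = {x_opt:.3f}, y = {y_opt:.3f}, g = {constraint(x_opt, y_opt):.4f}")
```

As mu grows, the iterate approaches the constrained optimum (2.5, 1.5) from the slightly infeasible side, which is characteristic of exterior penalty methods; barrier methods instead keep the iterate strictly feasible.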

In any operation, it is best to work under the conditions that give the most successful output, which here means the largest quantity of product. To improve yield, the factors associated with the procedure should be optimized; for most microbial processes these include temperature, pH, carbon source, and nitrogen source (Sindhu et al. 2009; Kumar et al. 2010; Faisal et al. 2014; Irfan et al. 2016). A complete set of optimized parameters that accounts for most of these factors must be determined and then tested to show whether the process succeeds in delivering the final output. A wide range of optimization methods is available: some are simple (e.g., one factor at a time, OFAT), while others are more complex, such as central composite design (CCD) and Plackett–Burman design (PBD), each with its own methodology, as described in Fig. 1 (Müller et al. 2002; Kenari et al. 2011; Hoa and Hung 2013; Bruns et al. 2014). The simple techniques are long and time-consuming, which makes them costly. In OFAT, moreover, the critical interactions between the parameters being tested are ignored, so the results may be erroneous. It is therefore better to determine the requirements of the experiment and build the optimization around the required parameters after careful statistical analysis; this minimizes errors, copes with most problems that may arise in the process, and saves time and money (Garsoux et al. 2004; Venil et al. 2009; Elias et al. 2010).

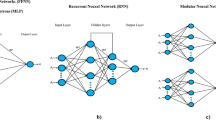
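To make the Plackett–Burman screening design concrete, the sketch below builds the standard 12-run design for up to 11 two-level factors by cyclically shifting the classical generator row and appending a row of low levels, then estimates main effects. The responses are hypothetical values generated from an assumed noise-free model in which only factors 0 and 4 matter; with real data, the estimated effects would be screened for significance before a more detailed RSM or CCD study.

```python
# 12-run Plackett-Burman design (coded levels -1/+1), built from the classical
# generator row by cyclic shifts plus a final row of all -1.
GENERATOR_12 = [+1, +1, -1, +1, +1, +1, -1, -1, -1, +1, -1]


def plackett_burman_12():
    n = len(GENERATOR_12)  # 11 factor columns
    rows = [[GENERATOR_12[(j - i) % n] for j in range(n)] for i in range(n)]
    rows.append([-1] * n)
    return rows


def main_effects(design, y):
    """Effect of each factor = mean(y at +1) - mean(y at -1)."""
    n_runs = len(design)
    return [
        2.0 * sum(row[j] * yi for row, yi in zip(design, y)) / n_runs
        for j in range(len(design[0]))
    ]


design = plackett_burman_12()
# Hypothetical responses from an assumed model: y = 10 + 3*x0 - 2*x4 (no noise)
y = [10 + 3 * row[0] - 2 * row[4] for row in design]
effects = main_effects(design, y)
for j, e in enumerate(effects):
    print(f"factor {j:2d}: effect {e:+.2f}")
```

Because every pair of columns is orthogonal and each column is balanced (six high, six low), each effect is estimated independently of the others, which is what lets 12 runs screen 11 factors.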

Artificial neural networks (ANNs) are parallel computing systems made up of a large number of simple, interconnected processors (Ying et al. 2009; Funes et al. 2015). ANNs were designed as an alternative approach to problems of prediction, optimization, control, and similar tasks. They are modeled on biological neural networks, most commonly the brain, which can handle multiple operations simultaneously (Funes et al. 2015; Pappu and Gummadi 2016), and they are expected to use the organizational principles of the human brain. A further advantage is that using ANNs to model biological nervous systems can help in understanding biological functions, which is valuable for this study.

The production of enzymes used in the commercial manufacture of pharmaceutical drugs for different diseases is one example where the application of optimization techniques is essential, because loss of material is unacceptable and the quality of the drugs must not be compromised in any way. l-Asparaginase is one such important enzyme. It is an amidohydrolase that breaks down asparagine into aspartic acid and ammonia, and it is used in the medical field for its anti-leukemic activity in the treatment of acute lymphoblastic leukemia (ALL) and in the food industry to prevent acrylamide formation in foods fried at high temperatures (Couto and Sanromán 2006; El-Bakry et al. 2015). Because of these applications, production capacity has to be increased to meet growing demand. Certain leukemic cells depend on an external supply of l-asparagine, and the ability of l-asparaginase to control the growth of leukemic cells selectively, relative to normal cells, is what gives it priority over other enzymes (Venil et al. 2009; Farag et al. 2015). The most widely used sources of l-asparaginase are Escherichia coli and Erwinia carotovora, but enzyme preparations from these microbes have shown a large number of side effects over time, and the clinical approach has been shifting toward producing the enzyme from other microbial sources found in biodiverse locations (Cammack et al. 1972; Rizzari et al. 2000; Kenari et al. 2011; Kim et al. 2015).

This paper gives a brief overview of commonly used techniques and also discusses the possibility of using artificial neural networks (ANNs) as an alternative to current techniques. This discussion is expected to simplify the selection of an appropriate technique for fermentation processes and to help researchers understand the parameters required for the process. A brief introduction to the available techniques is given in Table 1.

Nature inspired optimization techniques

In computational studies, many techniques can be used to analyze the problem at hand, including fuzzy logic, nature-inspired optimization, and neural network designs. These techniques have the advantages of being fast and useful, and they help in understanding the problem statement much better (Yang 2014; Funes et al. 2015). Advancing these fields has been a major research focus in recent years.

Many optimization techniques are based on models found in nature, which has been the backbone for their development. Different methodologies have been derived from studies of natural phenomena, and most recent additions to optimization methodology can be traced to some form of natural process (Fister et al. 2013). Nature-inspired algorithms are broadly classified into two subcategories, evolutionary and swarm-based: evolutionary methods include genetic algorithms and differential evolution, while ant colony, beehive, and similar methods fall under swarm optimization (Afshinmanesh et al. 2005; Fister et al. 2013). Within nature, biological systems are the most prominent source of inspiration because of their specific and favorable characteristics, so most of these techniques can be considered bio-inspired. Examples of bio-inspired optimization include ant colony optimization (ACO), particle swarm optimization (PSO), and firefly optimization (FO) (Afshinmanesh et al. 2005; Dorigo and Stützle 2009; Arora and Singh 2013). Some techniques have also been modeled on physical or chemical systems. Each technique has its own advantages over the others, and these particular features help in solving some difficult, complicated problems. The strategies built into these techniques introduce randomness into the search path, so a good solution can often be obtained quickly.
Applied to fermentation, these techniques can help determine cell culture conditions: the physical and chemical parameters can be studied, and the results of each study can be tabulated to determine the best, optimum solution for the experimental set (Hongwen et al. 2005; Kunamneni et al. 2015).

Optimization has always helped to improve the purity and productivity of a process. The effectiveness with which it finds a solution defines the success of a particular optimization technique, which must at least be robust and not time-consuming to be considered successful (Varalakshmi and Raju 2013).

The fermentation process as a whole is divided into three stages: upstream processing, fermentation, and downstream processing. Optimizing the factors at each stage is important for understanding the overall process, since the factors that can be varied in each stage bring about the required changes (Kumar et al. 2010; Irfan et al. 2016; de Melo et al. 2014). The upstream stage involves pre-treatment, in which the materials and parameters are set for the process to run. It is the primary optimization stage, because most of the parameters are decided and set here, and parameters optimized at this stage make it easier for the overall process to run smoothly. The most important stage is fermentation itself, where the reactions take place and the desired products are formed, so it must be handled with the utmost care; optimizing this stage is expected to give the greatest improvement for the entire process. The physical and chemical parameters set at this stage determine the outcome, and multiple runs are needed to arrive at the best result (Hoa and Hung 2013; Meng et al. 2015). Downstream processing mainly deals with purification of the product. The steps involved depend on the product and on whether submerged or solid state fermentation is used, because a liquid product is treated very differently from a solid one. Optimization at this stage may cover the order in which the individual steps are carried out, how often each step is repeated, and the quantities of materials involved (Rodríguez-Fernández et al. 2011; Kunamneni et al. 2015; Chang and Chang 2016).

Application of optimization techniques in SSF and SmF

The basic difference between SSF and SmF is the type of substrate used: a solid in SSF and a liquid broth in SmF. This section considers which optimization techniques can be used to study each of these variations and, where possible, suggests a better technique to reduce redundant time in the process (Subramaniyam and Vimala 2012; Doriya et al. 2016). Evolutionary techniques, for example, include selection, crossover, and mutation as essential steps.

Statistical techniques

One factor at a time (OFAT)

OFAT is the most common optimization technique; although it is time-consuming, it is one of the simplest methods to apply. Physical and chemical parameters are much easier to control in SmF than in SSF because of the liquid nature of SmF, so OFAT is best suited to SmF processes (Xu et al. 2003). As an example, Fig. 2a shows a typical output of the procedure, in which the yield is plotted against process temperature to find the optimum.

Fig. 2 a Optimum yield (%) using the OFAT technique, b finding the least value using the Taguchi method, c three-level factorial design using four parameters (Kalantar and Deopurkar 2007)
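The weakness of OFAT when parameters interact can be illustrated with a small sketch. Assuming a hypothetical yield surface in two coded factors (for instance temperature and pH) that contains an a·b interaction term, a single OFAT pass from the baseline settles on a suboptimal combination, whereas evaluating the full grid of level combinations finds the true best point. The response function and levels are invented for illustration only.

```python
from itertools import product

LEVELS = [-2, -1, 0, 1, 2]  # hypothetical coded levels for each factor


def response(a, b):
    # Hypothetical yield; expands to 70 + 5a + 5b - 4a^2 + 8ab - 4b^2,
    # i.e. a ridge along a == b created by the a*b interaction term.
    return 70 + 5 * (a + b) - 4 * (a - b) ** 2


def ofat(start=(0, 0)):
    """One pass of OFAT: vary a with b fixed, then vary b with a fixed at its 'best'."""
    a, b = start
    a = max(LEVELS, key=lambda level: response(level, b))
    b = max(LEVELS, key=lambda level: response(a, level))
    return (a, b), response(a, b)


def full_grid():
    """Evaluate every level combination (a full factorial over LEVELS)."""
    best = max(product(LEVELS, LEVELS), key=lambda p: response(*p))
    return best, response(*best)


print("OFAT:", ofat())
print("Full grid:", full_grid())
```

Here OFAT stops at coded levels (1, 2) with a yield of 81, while the full grid reaches (2, 2) with 90, because the best level of one factor depends on the level of the other.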

Taguchi method

The suitability of this technique depends on the number of parameters to be studied. When only physical parameters are considered, it is advantageous for SmF processes because these parameters are easy to manipulate there. The Taguchi method combines multiple parameters in a designed set of runs to find the optimum for improving process quality (Athreya and Venkatesh 2012; Mandal et al. 2015). Because parameter design is the most important step in the Taguchi methodology, the optimum parameters it yields are intended to be robust to changes in environmental conditions (Biswas et al. 2014). Figure 2b shows the effectiveness of the Taguchi method compared with the traditional method of determining the best output for the process.
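A minimal sketch of a Taguchi analysis, assuming three factors at three levels each and hypothetical single-replicate yields, is shown below. It uses the standard L9(3^4) orthogonal array, computes the larger-the-better signal-to-noise (S/N) ratio for each run, and picks, for each factor, the level with the highest mean S/N. The smaller-the-better ratio, which applies when the aim is the least value, is included for comparison.

```python
import math

# Standard L9(3^4) orthogonal array; only the first three columns are used here.
L9 = [
    (1, 1, 1, 1), (1, 2, 2, 2), (1, 3, 3, 3),
    (2, 1, 2, 3), (2, 2, 3, 1), (2, 3, 1, 2),
    (3, 1, 3, 2), (3, 2, 1, 3), (3, 3, 2, 1),
]


def sn_larger_the_better(ys):
    """S/N = -10 log10(mean(1 / y^2)); use when a higher response is better."""
    return -10.0 * math.log10(sum(1.0 / y ** 2 for y in ys) / len(ys))


def sn_smaller_the_better(ys):
    """S/N = -10 log10(mean(y^2)); use when a lower response is better."""
    return -10.0 * math.log10(sum(y ** 2 for y in ys) / len(ys))


def best_levels(array, responses, n_factors, sn=sn_larger_the_better):
    """For each factor, return the level (1, 2 or 3) with the highest mean S/N."""
    ratios = [sn(ys) for ys in responses]
    best = []
    for f in range(n_factors):
        mean_sn = {}
        for level in (1, 2, 3):
            vals = [r for row, r in zip(array, ratios) if row[f] == level]
            mean_sn[level] = sum(vals) / len(vals)
        best.append(max(mean_sn, key=mean_sn.get))
    return best


# Hypothetical yields (one replicate per run), listed in L9 run order.
yields = [[58], [60], [52], [69], [61], [55], [70], [64], [66]]
print(best_levels(L9, yields, n_factors=3))
```

With these invented yields the selected levels are A = 3, B = 1, C = 2. In a real study, replicate runs would be entered per row so that the S/N ratio also reflects run-to-run variability.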

Factorial designs

Factorial design is the most commonly preferred technique when a large number of parameters is involved. It has been efficient in designs where the chemical parameters matter more than the physical ones, so it may be less preferred for SmF (Dange and Peshwe 2015; Kalantar and Deopurkar 2007). In SSF, the parameters must be managed and studied with more care than in SmF. The average influence of a single factor on the response is called its main effect, and often one factor's main effect dominates the overall process. Studying the interactions among the factors and building a quantitative regression model of the process, fitted by least-squares estimation, is therefore better suited to SSF than to SmF (Weng and Sun 2006; Montgomery 2013). Figure 2c shows a three-level factorial design run on four parameters to establish the effectiveness of the process being studied.
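The least-squares step described above can be sketched for the simplest case, a two-level full factorial design in three coded factors (A, B, C). The responses below are hypothetical, generated without noise from an assumed model containing an A×B interaction, so the fit recovers the assumed coefficients exactly; in coded ±1 units, each effect equals twice its regression coefficient. NumPy is assumed to be available.

```python
from itertools import product

import numpy as np


def full_factorial(k):
    """All 2^k runs of a two-level design in coded units (-1, +1)."""
    return np.array(list(product([-1, 1], repeat=k)), dtype=float)


def model_matrix(X):
    """Columns: intercept, main effects A, B, C and two-factor interactions."""
    A, B, C = X.T
    return np.column_stack([np.ones(len(X)), A, B, C, A * B, A * C, B * C])


X = full_factorial(3)
# Hypothetical responses from an assumed "true" model (no noise): 40 + 3A - 2B + C + 2.5AB
y = 40 + 3 * X[:, 0] - 2 * X[:, 1] + X[:, 2] + 2.5 * X[:, 0] * X[:, 1]

M = model_matrix(X)
coef, *_ = np.linalg.lstsq(M, y, rcond=None)
names = ["intercept", "A", "B", "C", "AB", "AC", "BC"]
for name, b in zip(names, coef):
    print(f"{name:>9}: coefficient {b:+.2f}")
```

With real, noisy data, the eighth run leaves one degree of freedom for error, so replicates or center points would normally be added before testing which effects are significant.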

Response surface methodology (RSM)

In this technique, several variables contribute to determining the optimum solution, and it is particularly compatible with physical process parameters such as pressure and temperature (Venil et al. 2009). The response surface is modeled with a first-order or second-order polynomial, depending on the requirements of the process, and experimental data are collected after the parameters involved have been properly validated, which makes estimation easier. RSM is a sequential procedure: it either locates the optimum directly or leads the experimenter to the region where the optimum lies, so it can be considered more suitable for SmF than for SSF (Ghosh et al. 2013; El-Naggar et al. 2015). Figure 3a shows a response surface plot for the interaction of two components with a third parameter held at a typical value; the optimum is established according to the requirements of the process.
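As a sketch of second-order RSM, assuming two coded factors, a 3 × 3 grid of runs, and hypothetical noise-free responses from an assumed quadratic model, the code below fits y = b0 + b1·x1 + b2·x2 + b11·x1^2 + b22·x2^2 + b12·x1·x2 by least squares and then locates the stationary point by setting the gradient to zero. The signs of the Hessian eigenvalues indicate whether that point is a maximum, a minimum, or a saddle point. NumPy is assumed.

```python
import numpy as np


def quad_terms(x1, x2):
    """Second-order model columns: 1, x1, x2, x1^2, x2^2, x1*x2."""
    return np.column_stack([np.ones_like(x1), x1, x2, x1 ** 2, x2 ** 2, x1 * x2])


# 3 x 3 grid of coded levels (hypothetical experimental runs)
levels = np.array([-1.0, 0.0, 1.0])
g1, g2 = np.meshgrid(levels, levels)
x1, x2 = g1.ravel(), g2.ravel()

# Hypothetical "true" coefficients used to generate noise-free responses
true_b = np.array([80.0, 2.0, -1.0, -2.0, -3.0, 0.5])
y = quad_terms(x1, x2) @ true_b

# Least-squares fit of the second-order model
b, *_ = np.linalg.lstsq(quad_terms(x1, x2), y, rcond=None)
b0, b1, b2, b11, b22, b12 = b

# Stationary point: gradient [b1 + 2 b11 x1 + b12 x2, b2 + b12 x1 + 2 b22 x2] = 0
H = np.array([[2 * b11, b12], [b12, 2 * b22]])
x_s = np.linalg.solve(H, -np.array([b1, b2]))

eig = np.linalg.eigvalsh(H)
if np.all(eig < 0):
    kind = "maximum"
elif np.all(eig > 0):
    kind = "minimum"
else:
    kind = "saddle point"
print(f"stationary point (coded): {x_s}, type: {kind}")
```

If the stationary point fell outside the experimental region, the sequential nature of RSM would suggest moving the design (for example by steepest ascent) rather than trusting the extrapolation.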

Central composite design (CCD)

CCD is most commonly used to build second-order response surface models and is well suited to designs with many parameters where both physical and chemical parameters significantly affect the output of the process. Because the design works better with a larger number of parameters, and more parameters influence SSF than SmF, CCD, which can be seen as an extension of factorial designs and RSM, gives better control over the parameters involved in SSF processes (Karmakar and Ray 2011; de Melo et al. 2014). Figure 3b gives a simple representation of the point sets used to establish the optimum with CCD: factorial points at the corners of the cube, axial (star) points along the axis of each factor, and replicated points at the center of the cube.
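A CCD in k coded factors can be generated as in the sketch below: 2^k factorial points at ±1, 2k axial points at ±alpha on each axis, and replicated center points. The default alpha = (2^k)^(1/4) gives a rotatable design; the number of center points (six here) is an assumption for illustration, and real studies choose it according to the design criteria they need. NumPy is assumed.

```python
from itertools import product

import numpy as np


def central_composite(k, n_center=6, alpha=None):
    """Return (design, alpha) for a CCD in k coded factors."""
    if alpha is None:
        alpha = (2 ** k) ** 0.25  # rotatable choice of axial distance
    # Factorial (cube) points at every combination of -1 and +1
    factorial = np.array(list(product([-1.0, 1.0], repeat=k)))
    # Axial (star) points: one factor at -alpha or +alpha, the rest at 0
    axial = np.zeros((2 * k, k))
    for i in range(k):
        axial[2 * i, i] = -alpha
        axial[2 * i + 1, i] = alpha
    # Replicated center points
    center = np.zeros((n_center, k))
    return np.vstack([factorial, axial, center]), alpha


design, alpha = central_composite(3)
print(design.shape, round(alpha, 3))
```

For three factors this gives 8 factorial, 6 axial, and 6 center runs (20 in total), with alpha ≈ 1.682. Coded levels would then be mapped to real settings, for example temperature = center value + coded level × step size, with the center value and step size chosen by the experimenter.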

Artificial neural network (ANN)

ANN is an exhaustive technique that can deal with any number of parameters, physical or chemical. It is suitable in almost all cases because it can adapt to small changes incorporated into the system; like human neurons, it can detect even slight changes and respond to them. It is widely used at present and has been gaining importance for a long time (Desai et al. 2008; Funes et al. 2015). ANN is well suited to identifying changes in a process with better precision. Changes in SSF have always been harder to understand and control than those in SmF, and ANN is expected to bring clarity to that process (Chang et al. 2006). In SSF, changes are more rapid and subtle than in SmF, and this technique makes it possible to pinpoint the exact changes and their effect on the final solution (Ying et al. 2009; Baskar and Renganathan 2012). Figure 4 describes the basic working of an ANN: the input variables, one per process parameter, are passed through one or more hidden layers of neurons; the trained network is then used to determine the set of parameters that gives the optimum output, and the results are tabulated to give the final output for that set of processes.

Fig. 4 ANN methodology (Falahian et al. 2015)
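The feed-forward structure described for Fig. 4 can be sketched as a network with one hidden layer of tanh neurons and a linear output, trained by full-batch gradient descent on mean squared error. The inputs stand for two coded process parameters and the target for yield; the data, network size, learning rate, and number of epochs are all hypothetical choices for illustration, not the settings of the cited studies. NumPy is assumed.

```python
import numpy as np


def train_mlp(X, y, n_hidden=8, lr=0.05, epochs=3000, seed=0):
    """Train a 1-hidden-layer tanh network with full-batch gradient descent on MSE."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, size=(X.shape[1], n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
    b2 = np.zeros(1)

    def forward(inputs):
        hidden = np.tanh(inputs @ W1 + b1)  # hidden-layer activations
        return hidden, hidden @ W2 + b2       # linear output neuron

    def mse(pred):
        return float(np.mean((pred - y) ** 2))

    initial_loss = mse(forward(X)[1])
    for _ in range(epochs):
        hidden, pred = forward(X)
        grad_out = 2.0 * (pred - y) / len(X)                   # dLoss/dOutput
        grad_hidden = (grad_out @ W2.T) * (1.0 - hidden ** 2)  # back through tanh
        W2 -= lr * (hidden.T @ grad_out)
        b2 -= lr * grad_out.sum(axis=0)
        W1 -= lr * (X.T @ grad_hidden)
        b1 -= lr * grad_hidden.sum(axis=0)
    final_loss = mse(forward(X)[1])
    return (lambda inputs: forward(inputs)[1]), initial_loss, final_loss


# Hypothetical data: two coded inputs (e.g. temperature, pH) -> yield
rng = np.random.default_rng(42)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = (1.0 - X[:, 0] ** 2 - 0.5 * X[:, 1] ** 2 + 0.3 * X[:, 0] * X[:, 1]).reshape(-1, 1)

predict, loss_before, loss_after = train_mlp(X, y)
print(f"MSE before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Once trained, the returned prediction function can be evaluated over a grid of candidate settings, or searched with a GA or PSO, to estimate the input combination with the highest predicted yield.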

Nature inspired techniques

Genetic algorithm

Evolutionary optimization techniques are used in physicochemical parametric studies under different experimental conditions. They are based on the biological mechanisms of evolution, such as reproduction and mutation. These techniques are better suited when chemical parameters take precedence over physical ones. A fitness function is defined to measure the quality of candidate solutions, and the procedure is repeatable, which is an added advantage. Problems arise only when complex evolutionary schemes are involved; otherwise these techniques are considered robust, simple, and easy to handle (Coello 1999). Figure 5a shows the steps of the genetic algorithm methodology: selection, crossover, and mutation.
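The selection, crossover, and mutation steps can be sketched as a small real-coded GA, assuming a hypothetical fitness function: a yield surface in two coded factors with its maximum of 80 at (0.4, -0.3). Tournament selection, blend crossover, Gaussian mutation, and keeping the best individual in each generation (elitism) are common choices made here for illustration; the population size, rates, and number of generations are likewise assumptions.

```python
import random


def fitness(x):
    # Hypothetical yield surface in coded units; maximum of 80 at (0.4, -0.3)
    return 80.0 - 10.0 * (x[0] - 0.4) ** 2 - 10.0 * (x[1] + 0.3) ** 2


def clip(v, lo=-1.0, hi=1.0):
    """Keep a gene inside the coded range [lo, hi]."""
    return max(lo, min(hi, v))


def tournament(pop, k=3):
    """Selection: the fittest of k randomly chosen individuals."""
    return max(random.sample(pop, k), key=fitness)


def crossover(p1, p2):
    """Blend (arithmetic) crossover: a random convex combination of two parents."""
    w = random.random()
    return [w * a + (1 - w) * b for a, b in zip(p1, p2)]


def mutate(x, rate=0.2, sigma=0.1):
    """Gaussian mutation applied gene by gene with probability `rate`."""
    return [clip(v + random.gauss(0, sigma)) if random.random() < rate else v for v in x]


def genetic_algorithm(pop_size=30, generations=60, n_genes=2, seed=1):
    random.seed(seed)
    pop = [[random.uniform(-1, 1) for _ in range(n_genes)] for _ in range(pop_size)]
    for _ in range(generations):
        elite = max(pop, key=fitness)  # elitism: the best individual survives unchanged
        children = [elite]
        while len(children) < pop_size:
            child = crossover(tournament(pop), tournament(pop))
            children.append(mutate(child))
        pop = children
    return max(pop, key=fitness)


best = genetic_algorithm()
print(f"best x = ({best[0]:.3f}, {best[1]:.3f}), fitness = {fitness(best):.3f}")
```

Because the elite individual is always carried over, the best fitness never decreases from one generation to the next; in a fermentation study each fitness evaluation would be an experimental run or a prediction from a fitted model.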

Swarm optimization

Swarm-based, bio-inspired techniques include particle swarm, beehive (bee colony), and ant colony optimization. They are guided search techniques that help in understanding the random changes occurring in a process. The steps in each of these methods are complicated and somewhat difficult to manage, but the precision of their results outweighs the lengthy procedures involved (Biswas et al. 2007; Dhanarajan et al. 2014). Figure 5b shows example results from running PSO on multiple factors; although many candidate results are produced, the best one, marked at the bottom, is the lowest value obtained in the set of experiments. Figure 5c shows the ACO methodology, in which a particular path is established over multiple runs and then used as the optimum for all further runs. These techniques are expected to give a better understanding of SSF processes. Their applicability to SmF cannot be ruled out, but because they take more time than the other techniques used to study SmF, their use tends to be limited to SSF (Roy et al. 2006; Doriya et al. 2016). SmF has already been scaled up to industrial level, whereas the difficulties in scaling up SSF make very precise calculations important when dealing with SSF processes (Mitchell et al. 2000).
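A minimal global-best PSO for minimization, in the spirit of the lowest-value search shown in Fig. 5b, can be sketched as follows. Each particle's velocity is pulled toward its own best position and toward the swarm's best position; the inertia and acceleration coefficients are widely used constriction-based values (w ≈ 0.7298, c1 = c2 ≈ 1.49618), while the cost function, bounds, swarm size, and iteration count are hypothetical.

```python
import random


def cost(x):
    # Hypothetical cost to minimize; minimum 0 at (1.0, -0.5)
    return (x[0] - 1.0) ** 2 + (x[1] + 0.5) ** 2


def pso(f, dim=2, n_particles=20, iters=100, lo=-5.0, hi=5.0,
        w=0.7298, c1=1.49618, c2=1.49618, seed=0):
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]              # each particle's best position so far
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # swarm's best position so far
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # cognitive pull
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # social pull
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


best_pos, best_val = pso(cost)
print(f"best position = ({best_pos[0]:.4f}, {best_pos[1]:.4f}), cost = {best_val:.2e}")
```

Only the swarm-best record is reported at the end, which mirrors the way many candidate results are generated but a single lowest value is marked as the outcome.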

The most important part of any of these processes is the growth of the microorganisms involved. Each type of microorganism has its own growth requirements, e.g., temperature, pH, moisture content, and agitation, and each of these factors has a specific effect on growth. For instance, bacteria and yeasts generally require high-moisture environments, whereas fungi can grow at low moisture content, which makes bacteria better suited to SmF and fungi to SSF, where these respective conditions are available (Chen et al. 2011; Abbas Ahmed 2015; Singh and Srivastava 2013; Sinha and Singh 2016). The genetic algorithm (GA) offers a useful analogy for microbial growth, since it involves crossover (recombination) and mutation: the first step selects individuals of a particular generation on the basis of how effective they are likely to be, crossover is particularly relevant when dealing with recombinant microorganisms, and mutation helps reveal any changes occurring during growth. Advanced techniques are generally more useful than the simpler OFAT and Taguchi methods for understanding the growth of microorganisms.

Scale-up studies using optimization techniques

Scale-up is one of the most vital tasks in any process and must be studied with the utmost care: the effectiveness of a process lies in its ability to reproduce the results of small-scale or lab-scale studies in the mass production of enzymes. Large-scale production cannot proceed by trial and error; it begins only after the process has been clearly characterized using statistical techniques (Schutyser et al. 2004; Varalakshmi and Raju 2013). Each technique has its own advantages for enzyme production, and the choice should always be the technique that gives better productivity at reduced cost and time (Ashok et al. 2017). Low-cost enzyme preparation can rely on simpler techniques such as randomized blocks and Taguchi methods, but as production becomes more complicated, for example as the number of factors affecting the process increases, it is wiser to use advanced techniques such as ANN or nature-inspired optimization (Athreya and Venkatesh 2012; Ali et al. 2016), which give a better understanding of the process and increase production efficiency. For example, in the production of l-asparaginase from bacterial or fungal sources by SmF, the factors are easy to manage, so simpler techniques such as the Taguchi method or factorial design can be used. In SSF, however, the number of factors to be controlled, and the difficulty of controlling them, increase sharply; the number of factors also grows when recombinant microorganisms are used, so more efficient methods are needed to analyze the process. Because factors are much harder to control in SSF than in SmF, techniques such as GA, ANN, and ACO are preferred for such processes (Kumar et al. 2010; Pallem et al. 2010).
Figure 6 summarizes the techniques that can be used effectively for SmF and SSF when scale-up of the process is being studied.

Conclusion

Statistical techniques are considered best suited to simpler process parameters and to scale-up in submerged fermentation, where parameters are easier to control; some SmF processes do require advanced techniques, but these cases are few. In solid state fermentation, by contrast, parameters are much harder to handle and multiple efforts are needed to reach the best solution, so advanced techniques are recommended. Flask-scale studies can be carried out with statistical methods, but when the process is scaled up to a larger laboratory or industrial scale, it is better to shift the focus to advanced optimization techniques. This review should help identify the techniques required when working with submerged and solid state fermentation at laboratory scale, and it is expected to reduce process time by focusing attention on the critical variables, thereby lowering the overall cost of running the process.

References

Abbas Ahmed MM (2015) Production, purification and characterization of l-asparaginase from marine endophytic Aspergillus sp. ALAA-2000 under submerged and solid state fermentation. J Microb Biochem Technol 7:165–172

Afshinmanesh F, Marandi A, Rahimi-Kian A (2005) A novel binary particle swarm optimization method using artificial immune system. In: Computer as a Tool, 2005. EUROCON 2005. pp 217–220

Agrawal H, Sharma M (2015) Optimization of NTRU cryptosystem using genetic algorithm. Int J Adv Res Comput Sci Softw Eng 5:944–947

Agrawal H, Sharma M (2016) Optimization of NTRU cryptosystem using ACO and PSO algorithm. Int J Sci Eng Technol Res 5:617–621

Ali U, Naveed M, Ullah A et al (2016) l-asparaginase as a critical component to combat acute lymphoblastic leukaemia (ALL): a novel approach to target ALL. Eur J Pharmacol 771:199–210

Arora S, Singh S (2013) The firefly optimization algorithm: convergence analysis and parameter selection. Int J Comput Appl 69:48–52

Ashok A, Doriya K, Rao DRM, Kumar DS (2017) Design of solid state bioreactor for industrial applications: an overview to conventional bioreactors. Biocatal Agric Biotechnol 9:11–18

Athreya S, Venkatesh YD (2012) Application of Taguchi method for optimization of process parameters in improving the surface roughness of lathe facing operation. Int Ref J Eng Sci 1:13–19

Ba D, Boyaci IH (2007) Modeling and optimization II: comparison of estimation capabilities of response surface methodology with artificial neural networks in a biochemical reaction. J Food Eng 78:846–854

Baskar G, Renganathan S (2012) Optimization of l-asparaginase production by Aspergillus terreus MTCC 1782 using response surface methodology and artificial neural network-linked genetic algorithm. Asia Pac J Chem Eng 7:212–220

Biswas A, Dasgupta S, Das S, Abraham A (2007) Synergy of PSO and bacterial foraging optimization—a comparative study on numerical benchmarks. Adv Soft Comput 44:255–263

Biswas T, Kumari K, Ramsingh H, Jha SK (2014) Optimization of three-phase partitioning system for enhanced recovery of l-asparaginase from Escherichia coli K12 using design of experiment (DOE). Int J Adv Res 2:1142–1147

Bruns RE, Luis S, Ferreira C et al (2014) Statistical designs and response surface techniques for the optimization of chromatographic systems. J Chromatogr 1158:2–14

Cammack KA, Marlborough DI, Miller DS (1972) Physical properties and subunit structure of l-asparaginase isolated from Erwinia carotovora. Biochem J 126:361–379

Carreiro MM, Sinsabaugh RL, Repert DA, Parkhurst DF (2017) Microbial enzyme shifts explain litter decay responses to simulated nitrogen deposition. Wiley Ecol Soc Am 81:2359–2365

Chang BV, Chang YM (2016) Biodegradation of toxic chemicals by Pleurotus eryngii in submerged fermentation and solid-state fermentation. J Microbiol Immunol Infect 49:175–181

Chang JS, Lee JT, Chang AC (2006) Neural-network rate-function modeling of submerged cultivation of Monascus anka. Biochem Eng J 32:119–126

Chen L, Yang X, Raza W et al (2011) Solid-state fermentation of agro-industrial wastes to produce bioorganic fertilizer for the biocontrol of fusarium wilt of cucumber in continuously cropped soil. Bioresour Technol 102:3900–3910

Coello CAC (1999) A comprehensive survey of evolutionary-based multiobjective optimization techniques. Knowl Inf Syst 1:129–156

Couto SR, Sanromán MA (2006) Application of solid-state fermentation to food industry—a review. J Food Eng 76:291–302

Dange VU, Peshwe SA (2015) Statistical assessment of media components by factorial design for l-asparaginase production by Aspergillus niger in surface fermentation. Eur J Exp Biol 5:57–61

de Melo AG, Pedroso RCF, Guimarães LHS, Alves JGLF, Dias ES, de Resende MLV, Cardoso PG (2014) The optimization of Aspergillus sp. GM4 tannase production under submerged fermentation. Adv Microbiol 4:143–150

Desai KM, Survase SA, Saudagar PS et al (2008) Comparison of artificial neural network (ANN) and response surface methodology (RSM) in fermentation media optimization: a case study of fermentative production of scleroglucan. Biochem Eng J 41:266–273

Dhanarajan G, Mandal M, Sen R (2014) A combined artificial neural network modeling-particle swarm optimization strategy for improved production of marine bacterial lipopeptide from food waste. Biochem Eng J 84:59–65

Dorigo M, Stützle T (2009) Ant colony optimization. Encycl Mach Learn 1:28–39

Doriya K, Jose N, Gowda M, Kumar DS (2016) Solid-state fermentation vs submerged fermentation for the production of l-asparaginase. Adv Food Nutr Res 78:115–135

El-Bakry M, Abraham J, Cerda A et al (2015) From wastes to high value added products: novel aspects of SSF in the production of enzymes. Crit Rev Environ Sci Technol 45:1999–2042

Elias F, Soares F, Braga FR et al (2010) Optimization of medium composition for protease production by Paecilomyces marquandii in solid-state-fermentation using response surface methodology. Afr J Microbiol Res 4:2699–2703

El-Naggar NEA, Moawad H, El-Shweihy NM, El-Ewasy SM (2015) Optimization of culture conditions for production of the anti-leukemic glutaminase free l-asparaginase by newly isolated Streptomyces olivaceus NEAE-119 using response surface methodology. Biomed Res Int 2015:1–17

El-Sersy NA, Ebrahim HA, Abou-Elela GM (2010) Response surface methodology as a tool for optimizing the production of antimicrobial agents from Bacillus licheniformis SN2. Curr Res Bacteriol 3:1–14

Faisal PA, Hareesh ES, Priji P et al (2014) Optimization of parameters for the production of lipase from Pseudomonas sp. BUP6 by solid state fermentation. Adv Enzyme Res 2:125–133

Falahian R, Mehdizadeh Dastjerdi M, Molaie M et al (2015) Artificial neural network-based modeling of brain response to flicker light. Nonlinear Dyn 81:1951–1967

Farag AM, Hassan SW, Beltagy EA, El-Shenawy MA (2015) Optimization of production of anti-tumor l-asparaginase by free and immobilized marine Aspergillus terreus. Egypt J Aquat Res 41:295–302

Fasham MJR, Evans GT, Kiefer DA et al (1995) The use of optimization techniques to model marine ecosystem dynamics at the JGOFS station at 47°N 20°W [and discussion]. Philos Trans R Soc Lond B Biol Sci 348:203–209

Fenech A, Fearn T, Strlic M (2012) Use of Design-of-Experiment principles to develop a dose-response function for colour photographs. Polym Degrad Stab 97:621–625

Fister I Jr, Yang XS, Fister I et al (2013) A brief review of nature-inspired algorithms for optimization. Elektroteh Vestn/Electrotech Rev 80:116–122

Funes E, Allouche Y, Beltrán G, Jiménez A (2015) A review: artificial neural networks as tool for control food industry process. J Sens Technol 5:28–43

Garsoux G, Lamotte J, Gerday C, Feller G (2004) Kinetic and structural optimization to catalysis at low temperatures in a psychrophilic cellulase from the Antarctic bacterium Pseudoalteromonas haloplanktis. Biochem J 384:247–253

Ghosh S, Murthy S, Govindasamy S, Chandrasekaran M (2013) Optimization of l-asparaginase production by Serratia marcescens (NCIM 2919) under solid state fermentation using coconut oil cake. Sustain Chem Process 1:9

Hoa BT, Hung PV (2013) Optimization of nutritional composition and fermentation conditions for cellulase and pectinase production by Aspergillus oryzae using response surface methodology. Int Food Res J 20:3269–3274

Hongwen C, Baishan F, Zongding H (2005) Optimization of process parameters for key enzymes accumulation of 1,3-propanediol production from Klebsiella pneumoniae. Biochem Eng J 25:47–53

Irfan M, Asghar U, Nadeem M et al (2016) Optimization of process parameters for xylanase production by Bacillus sp. in submerged fermentation. J Radiat Res Appl Sci 9:139–147

Kalantar E, Deopurkar R (2007) Application of factorial design for the optimized production of antistaphylococcal metabolite by Aureobasidium pullulans. Jundishapur J Nat Pharm Prod 2:69–77

Karmakar M, Ray RR (2011) Statistical optimization of FPase production from water hyacinth using Rhizopus oryzae PR 7. J Biochem 3:225–229

Kenari SLD, Alemzadeh I, Maghsodi V (2011) Production of l-asparaginase from Escherichia coli ATCC 11303: optimization by response surface methodology. Food Bioprod Process 89:315–321

Khaleel H, Ali Q, Zulkali M et al (2012) Economic benefit from the optimization of citric acid production from rice straw through Plackett–Burman design and central composite design. Turk J Eng Environ Sci 36:81–93

Kim S-K, Min W-K, Park Y-C, Seo J-H (2015) Application of repeated aspartate tags to improving extracellular production of Escherichia coli l-asparaginase isozyme II. Enzyme Microb Technol 79–80:49–54

Kumar GM, Knowles NR (1993) Changes in lipid peroxidation and lipolytic and free-radical scavenging enzyme activities during aging and sprouting of potato (Solanum tuberosum) seed-tubers. Plant Physiol 102:115–124

Kumar R, Balaji S, Uma TS et al (2010) Optimization of influential parameters for extracellular keratinase production by Bacillus subtilis (MTCC9102) in solid state fermentation using horn meal—a biowaste management. Appl Biochem Biotechnol 160:30–39

Kunamneni A, Singh S (2016) Response surface optimization of enzymatic hydrolysis of maize starch for higher glucose production. Biochem Eng J 27:179–190

Kunamneni A, Raju KVVSNB, Zargar MI et al (2015) Optimization of process parameters for production of lipase in solid-state fermentation by newly isolated Aspergillus species. Indian J Biotechnol 3:65–69

Li S, Lin S, Chien YW et al (2001) Statistical optimization of gastric floating system for oral controlled delivery of calcium. AAPS PharmSciTech 2:11–22

Mandal A, Kar S, Dutta T et al (2015) Parametric optimization of submerged fermentation conditions for xylanase production by Bacillus cereus BSA1 through Taguchi Methodology. Acta Biol Szeged 59:189–195

Meng F, Xing G, Li Y et al (2015) The optimization of Marasmius androsaceus submerged fermentation conditions in 5-l fermentor. Saudi J Biol Sci 23:S99–S105

Mitchell DA, Krieger N, Stuart DM, Pandey A (2000) New developments in solid-state fermentation II. Rational approaches to the design, operation, and scale-up of bioreactors. Process Biochem 35:1211–1225

Mohana S, Shrivastava S, Divecha J, Madamwar D (2008) Response surface methodology for optimization of medium for decolorization of textile dye direct black 22 by a novel bacterial consortium. Bioresour Technol 99:562–569

Montgomery DC (2013) Design and analysis of experiments, 8th edn. Wiley, New Jersey

Müller SD, Marchetto J, Airaghi S, Koumoutsakos P (2002) Optimization based on bacterial chemotaxis. IEEE Trans Evol Comput 6:16–29

Negi S, Banerjee R (2006) Optimization of amylase and protease production from Aspergillus awamori in single bioreactor through EVOP factorial design technique. Food Technol Biotechnol 44:257–261

Pallem C, Manipati S, Somalanka SR (2010) Process optimization of l-glutaminase production by Trichoderma koningii under solid state fermentation (SSF). 1168–1174

Pappu JSM, Gummadi SN (2016) Multi response optimization for enhanced xylitol production by Debaryomyces nepalensis in bioreactor. 3 Biotech 6:1–10

Rizzari C, Zucchetti M, Conter V et al (2000) l-asparagine depletion and l-asparaginase activity in children with acute lymphoblastic leukemia receiving i.m. or i.v. Erwinia C. or E. coli l-asparaginase as first exposure. Ann Oncol 11:189–193

Rodríguez-Fernández DE, Rodríguez-León JA, De Carvalho JC et al (2011) The behavior of kinetic parameters in production of pectinase and xylanase by solid-state fermentation. Bioresour Technol 102:10657–10662

Roy RV, Das M, Banerjee R, Bhowmick AK (2006) Comparative studies on rubber biodegradation through solid-state and submerged fermentation. Process Biochem 41:181–186

Schutyser MAI, Briels WJ, Boom RM, Rinzema A (2004) Combined discrete particle and continuum model predicting solid-state fermentation in a drum fermentor. Biotechnol Bioeng 86:405–413

Sindhu R, Suprabha GN, Shashidhar S (2009) Optimization of process parameters for the production of α-amylase from Penicillium janthinellum (NCIM 4960) under solid state fermentation. Afr J Microbiol Res 3:498–503

Singh Y, Srivastava K (2013) Statistical and evolutionary optimization for enhanced production of an antileukemic enzyme, l-asparaginase, in a protease-deficient Bacillus aryabhattai ITBHU02 isolated from the soil contaminated with hospital waste. Indian J Exp Biol 51:322–335

Sinha RK, Singh HR (2016) Statistical optimization of the recombinant l-asparaginase from Pseudomonas fluorescens by Taguchi DOE. Int J Pharm Tech Res 9:254–260

Subramaniyam R, Vimala R (2012) Solid state and submerged fermentation for the production of bioactive substances: a comparative study. Int J Sci Nat 3:480–486

Varalakshmi V, Raju K (2013) Optimization of l-asparaginase production by Aspergillus terreus MTCC 1782 using bajra seed flour under solid state fermentation. Int J Res Eng Technol 2:121–129

Venil CK, Nanthakumar K, Karthikeyan K (2009) Production of l-asparaginase by Serratia marcescens SB08: optimization by response surface methodology. Iran J Biotechnol 7:10–18

Weng XY, Sun JY (2006) Kinetics of biodegradation of free gossypol by Candida tropicalis in solid-state fermentation. Biochem Eng J 32:226–232

Williams JC, ReVelle CS, Levin SA (2004) Using mathematical optimization models to design nature reserves. Front Ecol Environ 2:98–105

Xu CP, Kim SW, Hwang HJ et al (2003) Optimization of submerged culture conditions for mycelial growth and exo-biopolymer production by Paecilomyces tenuipes C240. Process Biochem 38:1025–1030

Xu H, Caramanis C, Mannor S (2012) Statistical optimization in high dimensions. In: Proceedings of the 15th international conference on artificial intelligence and statistics (AISTATS) 2012, La Palma, Canary Islands, pp 1332–1340

Yam CH, Izzo D, Biscani F (2010) Towards a high fidelity direct transcription method for optimisation of low-thrust trajectories. In: 4th International Conference on Astrodynamics Tools and Techniques, pp 1–9

Yang X (2014) Nature-inspired optimization algorithms. Elsevier, London

Ying Y, Shao P, Jiang S, Sun P (2009) Artificial neural network analysis of immobilized lipase catalyzed synthesis of biodiesel from rapeseed. Comput Comput Technol Agric 2:1239–1249

Acknowledgements

The authors sincerely thank the Director, Indian Institute of Technology Hyderabad, for continued encouragement and support. This work is supported by a research grant from the Department of Science and Technology-Science and Engineering Research Board (SERB) (SB-EMEQ-048/2014), Government of India. The authors also extend their gratitude to all members of the IBBL for their unconditional support.

Ethics declarations

Conflict of interest

All the authors declare that they have no conflicts of interest regarding this paper.

About this article

Cite this article

Ashok, A., Kumar, D.S. Different methodologies for sustainability of optimization techniques used in submerged and solid state fermentation. 3 Biotech 7, 301 (2017). https://doi.org/10.1007/s13205-017-0934-z

DOI: https://doi.org/10.1007/s13205-017-0934-z