Abstract

\(l_{q}\) (\(0< q < 1\)) regularization is a dominating strategy for matrix completion problems. The main goal of nonconvex \(l_{q}\) regularization based algorithm is to find a so-called low-rank solution.Unfortunately, most existing algorithms suffer from full singular value decomposition (SVD), and thus become inefficient for large-scale matrix completion problems. To alleviate this limitation, in this paper we propose an accelerated inexact algorithm to handle such problem. The key idea is to employ the closed-form q-thresholding (\(q = 1/2, 2/3\)) operator to approximate the rank of a matrix. The power method and the special “sparse plus low-rank” structure of the matrix iterates are adopted to allow efficient SVD. Besides, we employ Nesterov’s accelerated gradient method and continuation technique to further accelerate the convergence speed of our proposed algorithm. A convergence analysis shows that the sequence \(\{X_{t}\}\) generated by our proposed algorithm is bounded and has at least one accumulation point. Extensive experiments have been conducted to study its recovery performance on synthetic data, image recovery and recommendation problems. All results demonstrate that our proposed algorithm is able to achieve comparable recovery performance, while being faster and more efficient than state-of-the-art methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In various machine learning and data analysis areas, such as collaborative filtering [1, 2], dimensionality reduction [3], subspace clustering [4], multiple labels learning [5, 6], and image processing [7, 8], one needs to consider the following matrix completion problem:

where \(M \in {\mathbb {R}}^{m \times n}\) is the incomplete low-rank matrix to be reconstructed, X is the considered low-rank matrix in \({\mathbb {R}}^{m \times n}\), rank(X) is the rank of X, \(\varOmega \) is the location of the observed entries, and \({\mathscr {P}}_{\varOmega }\) is the orthogonal projection onto the span of matrices vanishing outside of \(\varOmega \). The goal of problem (1) is to find the lowest-rank solution \(X^{*}\) of \({\mathscr {P}}_{\varOmega }(X) = {\mathscr {P}}_{\varOmega }(M)\), which is minimal to rank(X). More often, we consider the following regularization problem, which can be cast as:

where \(\| \cdot \| _{F}\) is the Frobenius norm, \(\lambda \) is a positive regularization parameter.

However, since the nonconvexity and discontinuous nature of rank(X), problems (1) and (2) are NP-hard [9] and cannot be solved in polynomial time. To alleviate this difficulty, a widely used strategy is to adopt the nuclear norm as a convex surrogate of \({ {rank}}(\cdot )\). Theoretical studies show that the nuclear norm is the tightest convex lower bound of the rank [9]. Candès and Recht [10] have been proven that the missing values can be perfectly recovered by solving nuclear norm regularization minimization problem if incomplete matrix M satisfies certain assumptions, e.g., \(|\varOmega | \ge {\mathscr {O}}(N^{1.2}r{\text {log}}(N))\)\((N = { {max}}(m, n), r = { {rank}}(M))\). Generally speaking, the nuclear norm regularization minimization problem can be treated as a Semidefinite Program (SDP) problem [11]. However, SDP solvers are only suitable for \(m \times n\) matrices with \(m, n \le 100\). In order to overcome this drawback, various methods have been proposed to tackle nuclear norm regularization minimization problem. Examples of first-order methods include singular value thresholding (SVT) algorithm [12] and accelerated proximal gradient with linesearch (APGL) algorithm [13]. Although these two sophisticated algorithms can attain promising results with a strong theoretical guarantee, they all involve expensive SVD operations in each iteration and cannot suitable for large-scale matrices. With the aim of alleviating this shortcoming, fixed point continuation with approximate (FPCA) [14] SVD addresses the same problem as APGL while utilizing a fast Monte Carlo algorithm for SVD calculations. Another state-of-the-art algorithm is Soft-impute algorithm [15] which utilizes a special “sparse plus low-rank” structure associated with the SVT to allow efficient SVD in each iteration. Very recently, Soft-impute algorithm has been accelerated by using Nesterov’s rule [16].

Although the nuclear norm regularization minimization problem can be efficiently solved and has been accepted as a powerful tool for the matrix completion problems. Fan [17] pointed out that the nuclear norm penalty shrinks all singular values equally, which leads to over-penalize large singular values. In other words, the nuclear norm may make the solution deviate from the original solution. With the aim of making the larger singular values get less penalized, there has been a significant interest in the use of the nonconvex surrogates of \(rank(\cdot )\), such as capped-\(l_{1}\) penalty, log-sum penalty (LSP), truncated nuclear norm (TNN), smoothly clipped absolute deviation (SCAD), minimax concave penalty (MCP). In [18], the learning formulations with capped-\(l_{1}\) objective functions are solved by means of multi-stage convex relaxation scheme. In [19], the sparse signal recovery problems are solved by a sequence of weighted \(l_{1}\)-minimization problems. In [20], the matrix completion algorithm based on the TNN is employed in achieving a better approximation to the rank of matrix. In [17], the penalized likelihood methods are proposed to deal with nonparametric regression by select variables and estimate coeffcients simultaneously. In [21], a fast, continuous, nearly unbiased and accurate method is proposed for solving high-dimensional linear regression. Empirically, these nonconvex regularization methods achieve better recovery performance than the convex nuclear norm regularization methods.

Another line of nonconvex surrogates of \({ {rank}}(\cdot )\) is \(l_{q} \; (0< q < 1 )\) or Schatten-q quasi-norm, which has received significant interest in the area of matrix completion. The key idea is that it allows a less biased and/or lower rank solution to be found than using the nuclear norm. However, the resultant nonconvex \(l_{q}\) regularization problems are much more difficult to solve. A popular used method for optimization the nonconvex \(l_{q}\) regularization problems is \(l_{q}\)-Proximal-Gradient (\(l_{q}PG\)) algorithm [22]. Since the noncontinuous of objective function (\(q = 0\)), most existing convergence theory that work with convex cases cannot be applied. By employing the Zangwill’s global convergence theory, a non-trivial convergence result of \(l_{q}PG\) is obtained. However, each \(l_{q}PG\) iteration involves computing the approximate solutions [23,24,25,26] and SVD steps. Thus, this method is not very accurate and may becomes slow when addressing large-scale matrices. To improve the accuracies and speed of \(l_{q}PG\) algorithm, some improved methods based on Schatten 1/2 quasi-norm [27] and Schatten 2/3 quasi-norm [28] have recently been proposed. Peng et al. [29] proposed a fixed point iterative scheme with the singular value half thresholding operator. The convergence analysis of this method reveals that it is faster and more efficient than \(l_{q}PG\) algorithm. Most recently, by observing that the Schatten 2/3 quasi-norm regularization model has shown better performance than Schatten 1/2 quasi-norm minimization as it requires fewer measurements, Wang et al. [30] build a \(L_{2/3}\)-PA algorithm for matrix completion. Although, the closed-form thresholding formulas of Schatten 1/2 quasi-norm and Schatten 2/3 quasi-norm regularization problems are obtained, a computationally expensive SVD still be required in each iteration.

With the aim of improving the drawbacks of above, this paper proposes a novel faster and more accurate algorithm for matrix completion. The proposed method extends HFPA and \(L_{2/3}\)-PA algorithms by using inexact proximal operator and Nesterov’s accelerated gradient method. More precisely, our algorithm is based on HFPA and \(L_{2/3}\)-PA algorithms, but achieves faster convergence rate and better recovery performance than HFPA and \(L_{2/3}\)-PA algorithms do. Besides, our proposed algorithm is simple and easy to use for large-scale matrix completion problems. The main contributions of our paper include the following:

-

1.

We first propose an efficient and fast algorithm to solve matrix completion problem, which is based on an inexact proximal operator and the Nesterov’s accelerated gradient method;

-

2.

In addition, we study the convergence of our proposed method, and show that our proposed algorithm is guaranteed to converge to a critical point of the nonconvex objective. Furthermore, our proposed algorithm is simple and easy to use;

-

3.

Finally, we apply the proposed algorithm to synthetic data, image recovery and large-scale recommendation problems, and achieve faster convergence rate and better recovery performance than most of state-of-the-art algorithms, which demonstrate that our proposed algorithm has the great potentials in matrix completion applications.

The remainder of this paper can be organized as follows. Section 2 describes the related works; Sect. 3 introduces the proposed algorithm; Sect. 4 reports and analyses the experimental results in both speed and quality; Finally, Sect. 5 gives the conclusion of this paper.

Notation We summarize the notations that will be used in this paper. For \(X \in {\mathbb {R}}^{m \times n}, \sigma (X) = (\sigma _{1}(X), \sigma _{2}(X), \ldots , \sigma _{r}(X))^{T}\) denotes the vector of singular value of X arranged in nonincreasing order; \(Diag(\sigma (X))\) denotes a diagonal matrix whose diagonal vector is \(\sigma (X)\). The Frobenius norm and Schatten q quasi-norm of X are defined as \(\| X \| _{F} = (\sum _{i,j}X_{ij}^{2})^{1/2} = (\sum _{i = 1}^{r}\sigma _{i}(X)^{2})^{1/2}\) and \(\| X \| _{q} = (\sum _{i = 1}^{r}\sigma _{i}(X)^{q})^{1/q}\), respectively. For \(X, Y \in {\mathbb {R}}^{m \times n}\), \(<X, Y>= tr(Y^{T}X)\) denotes their inner product.

2 Related work

2.1 Proximal algorithms

The APGL algorithm pioneered uses proximal operator [31] to solve matrix completion problems. It considers the following low-rank matrix completion problem:

where f, g are convex, and f is smooth but g is possibly nonsmooth. Besides, f is differentiable with Lipschitz continuous gradient L, i.e., \(\| \bigtriangledown f(X_{1}) - \bigtriangledown f(X_{2}) \le L\| X_{1} - X_{2}\| \). At the tth iteration, the APGL algorithm generates \(X_{t + 1}\) as

where \(\mu < 1/L\), and \(\hbox {prox}_{\mu g}(\cdot )\) denotes the proximal operator. By using the Nesterov’s accelerated gradient method, Y can be obtained as follows:

The convergence analysis of APGL algorithm reveals that this algorithm converges at a rate of \({\mathscr {O}}(1/T^{2})\), where T is the iteration number. This is also known to be the best possible rate for the problem (3) [32].

Since the considerable attention of nonconvex and nonsmooth problems in machine learning, it is natural to ask that if the accelerated proximal algorithm can be extended to problems where f and/or g may be nonconvex. The answer is positive. In [33], Li and Lin have extended accelerated proximal algorithm to solve nonconvex and nonsmooth problems. The key idea is adopting a monitor that satisfies the sufficient descent property. Subsequently, they proposed a nonmonotone accelerated proximal gradient algorithm (nmAPG). Although the nmAPG algorithm is much faster than most of state-of-the-art, its convergence rate is still unknown.

2.2 Existing completion methods with \(l_{q}\) regularization

Using the Schatten-q quasi-norm minimization instead of the \(rank(\cdot )\) minimization is one of the most successful approaches in the area of matrix completion. The state-of-the-art matrix completion algorithms based on \(l_{q}\) regularization are \(l_{q}PG\) [22], HFPA [29], and \(L_{2/3}\)-PA [30].

(1) \(l_{q}PG\): Considering the \(l_{q}\) penalized model:

where \(q \in [0, 1]\). It should be note that \(q = 0\) is equivalent to the problem (2) and \(q = 1\) is equivalent to the nuclear norm penalized problem. It requires computing the following non-trivial optimization problem:

where z is a constant and \(q \in (0, 1)\). This has the following non-trivial solutions.

Theorem 1

[22] Let \(q \in (0, 1)\), \(b = [2\lambda (1 - q)]^{1/(2 - q)}\), and \(c = b + \lambda q b^{q - 1}\). Then the solutions \(x^{*} = \tau _{\lambda }(z)\)to the problem (9) are:

where for \(|z| > c\), \({\hat{x}} \in (b, |z|)\)solves:

When \(|z| > c\)there are two solution to (11) and \({\hat{x}}\)is the larger one which can be computed from the iteration:

with the initial condition \(x_{(0)} \in [b, |z|]\).

Using the above theorem, Marjanovic et al. have proposed a MM-based algorithm namely \(l_{q}PG\) for iteratively reducing objective function F. Moreover, experiments are performed on matrix completion problems show that \(l_{q}PG\) algorithm is superior to the state-of-the-art. Although the \(l_{q}PG\) algorithm further accelerated using warm-starting and Nesterov’s method, it is still suffer from heavy SVD which takes \({\mathscr {O}}(mn^{2})\) time.

(2) HFPA: Recently, Peng et al. proposed HFPA to improve FPCA and \(l_{q}PG\) algorithms by using the Schatten 1/2 quasi-norm. The key idea is that when \(q = 1/2\) the solution of problem (9) has an analytical expression [27], that is:

with \(\phi _{\lambda }(z) = {\text {arccos}}(\frac{\lambda }{8}(\frac{z}{3})^{-3/2})\). The HFPA algorithm consists of two loops. For inner iterations, a special gradient descent method and the matrix half thersholding operator are employed to obtain the approximation solution. In the outer loops, the continuation technique is used to accelerate the convergence speed of HFPA. Besides, the authors further provide the global necessary optimality condition for the \(L_{1/2}\) regularization problem and the exact mathematical convergence analysis of the HFPA. Actually, HFPA is a fixed point method, but used for nonconvex optimization problem. Although, a fast Monte Carlo algorithm is adopted in HFPA to approximate SVD procedure, it is still time-consuming on large matrices.

(3) \(L_{2/3}\)-PA: Another state-of-the-art algorithm, \(L_{2/3}\)-PA, combines a gradient descent method in inner iterations that approximation the solutions by 2/3-thresholding operator with continuation technique that accelerates the convergence speed. The main idea in \(L_{2/3}\)-PA is to employ the following analytical expression of solutions of problem (9) when \(q = 2/3\):

where \(\varphi _{\lambda }(z) = (2/\sqrt{3})\lambda ^{1/4}({\text {cosh}}(\phi _{\lambda }(z)/3))^{1/2}\), with \(\phi _{\lambda }(z) = {\hbox {arccosh}}(27z^{2}\lambda ^{-3/2}/16)\). Based on the empirical studies, we found that \(L_{2/3}\)-PA is not very accurate though it improves the recovery performance of HFPA. Moreover, it is not efficient enough as still involve time-consuming SVD steps and cannot suitable for large-scale matrices completion.

2.3 Existing completion methods with inexact proximal operator

In machine learning research, the proximal gradient methods are popular for solving various optimization problems with non-smooth regularization. However, it requires the full SVD to solve the proximal operator, which may be time-consuming. Thus, inexact proximal gradient methods are extremely important.

Based on this line, Yao et al. [34] use the power method to approximate the SVD scheme, and propose Accelerated and Inexact Soft-Impute (AIS-Impute) algorithm for matrix completion. The convergence analysis of the AIS-Impute algorithm illustrates that it still converges at a rate of \({\mathscr {O}}(1/T^{2})\). For the nonconvex problems, a Fast NonConvex Low-rank (FaNCL) [35] algorithm is proposed for matrix completion and robust principal component analysis (RPCA). To improve the convergence speed, the inexact proximal gradient method is also employed in the procedure of FaNCL. Besides, Gu et al. [36] have proposed nonmontone accelerated inexact proximal gradient method (nmAIPG) which extends the nmAPG from exact case to inexact case. They also point out that the inexact version of nmAPG shares the same convergence rate as the nmAPG. Observing that the nmAIPG may requires two proximal steps in each iteration, and can be inefficient for solving large-scale matrices. With the aim of alleviating this shortcoming, the noconvex inexact APG (niAPG) algorithm has been proposed in [37] which requires only one inexact proximal step in each iteration. Thus, the niAPG algorithm is much faster, while achieves the comparable performance as the state-of-the-art.

3 Proposed method

In this section, we introduce our proposed algorithm and discuss some of its basic properties.

3.1 Motivation

In this section, we illustrate that how the proximal gradient algorithm can be solving the following problems:

where \(f(X) = \frac{1}{2}\| {\mathscr {P}}_{\varOmega }(X - M)\| _{F}^{2}\) and \(q \in \{\frac{1}{2}, \frac{2}{3}\}\).

First, we give the following definition.

Definition 1

(q-thresholding operator) Suppose \(x = (x_{1},x_{2},\ldots ,x_{n})^{T}\), for any \(\lambda > 0\), the q-thresholding operator \({\mathscr {T}}_{\lambda }(\cdot )\) is defined as

Now, we consider the following quadratic approximation model of the objective function (15) at Y:

where \(\mu \in (0, 1/L)\). It should be note that \(Q_{\lambda , \mu }(X, X) = F(X)\) and \(Q_{\lambda , \mu }(X, Y) \ge F(X)\) for any \(X, Y \in {\mathbb {R}}^{m \times n}\). By using simple algebra, Eq. (17) can be recasted as:

Ignoring the constant terms in (18) and let \(Y = X_{t - 1}\), the minimizer \(X_{t}\) of \(Q_{\lambda , \mu }(X, Y)\) can be obtained by

Thus, the above \(l_{q}\) regularization problem can be solved by the proximal operator as shown in the following lemma.

Lemma 2

[29] Let \(G = X_{t - 1} - \mu {\mathscr {P}}_{\varOmega }(X_{t - 1} - M)\)and the SVD of G is \(U\varSigma V^{T}\). Then

where \(q \in \{\frac{1}{2}, \frac{2}{3}\}\). Specifically, \({ {prox}}_{\lambda \mu , q}(G) = U { {Diag}}({\mathscr {T}}_{\lambda \mu }(\sigma (G)))V^{T}\).

3.2 Method for computing inexact proximal operator

As shown in Lemma 2, solving the proximal operator \({ {prox}}_{\lambda \mu , q}(\cdot )\) only needs the singular values/vectors which are greater than \( \frac{\root 3 \of {54}}{4}(\lambda \mu )^{2/3}\) or \(\frac{\root 4 \of {48}}{3}(\lambda \mu )^{3/4}\). It means that the full SVD in Lemma 2 is time-consuming and unnecessary. In order to overcome this drawback, it is natural to ask that if there is a faster and more effective way to solve this problem. Fortunately, there are some researcher focus on this problem [35, 38]. They suggest to apply SVD on small matrix instead of the original large matrix, thus the complexity could be dramatically down. The main idea of their methods is to extract a small core matrix by finding orthonormal based with the unitary invariant property. For problem (20), we can obtain similar result.

Proposition 1

Suppose G has \({\hat{k}}\)singular values larger than \( \frac{\root 3 \of {54}}{4}(\lambda \mu )^{2/3}\)or \(\frac{\root 4 \of {48}}{3}(\lambda \mu )^{3/4}\), and \(G = QQ^{T}G\), where \(Q \in {\mathbb {R}}^{m \times k}, k > {\hat{k}}\), is orthogonal. Then

-

(i)

range(G) \(\subseteq \)range(Q);

-

(ii)

\({ {prox}}_{\lambda \mu , q}(G) = Q{ {prox}}_{\lambda \mu , q}(Q^{T}G)\).

Proof

-

(i)

Since \(G = Q(Q^{T}G)\), we can obtained the first part of Proposition 1 obviously.

-

(ii)

Consider an arbitrary matrix \(X \in {\mathbb {R}}^{m \times n}\) admits the SVD as \(X = U\varSigma V^{T}\), then \(QX = (QU)\varSigma V^{T}\). Since Q is orthogonal and using the unitary invariant norm property, we can get \(\| X \| _{q}^{q} = \| QX \| _{q}^{q}\). Therefore,

$$\begin{aligned} Q{ {prox}}_{\lambda \mu , q}(Q^{T}G)& {} = Q\mathop {{\text {argmin}}}_{Z \in {\mathbb {R}}^{m \times n}}\frac{1}{2}\| Z - Q^{T}G \| _{F}^{2} + \lambda \| Z\| _{q}^{q}\nonumber \\& {} = \mathop {{\text {argmin}}}_{X \in {\mathbb {R}}^{m \times n}}\frac{1}{2}\| QZ - QQ^{T}G \| _{F}^{2} + \lambda \| Q^{T}X\| _{q}^{q}, \end{aligned}$$(21)the second equality follows from \(X =QZ\).

Since \(\| Q^{T}X \| _{q}^{q} = \| Z \| _{q}^{q} = \| QZ \| _{q}^{q} = \| X \| _{q}^{q}\) and \(QQ^{T} = I\), we get

which is the second part of Proposition 1. We complete the proof. \(\square \)

In the traditional way, we need full SVD on the original large matrix and its complexity is \({\mathscr {O}}(mn^{2})\). From Proposition 1, we can easily find that the complexity can be dramatically down as performing SVD on a smaller matrix. Specifically, we perform SVD on matrix \(Q^{T}G\) and its complexity becomes \({\mathscr {O}}(mk^{2})\). Thus, when \(k \ll n\), the computation speed can be significantly improved.

It should be note that the proof process of Proposition 1 partially follows but is different from [38]. Actually, we deal with the \(l_{q}\) regularization problem instead of nuclear norm regularization problem.

Next, we will address that how to determine the orthogonal matrix Q. Indeed, orthogonal matrix Q can be approximated by using power method [39], which is wildly used to approximate the SVD in nuclear norm and nonconvex regularization minimization problem [35, 40]. More precisely, we can use Algorithm 2 to obtain the orthogonal matrix Q.

Similar to [35, 40], we fix the number of iterations to 3 for PowerMethod. The matrix R is used for warm-start which is particularly useful for proximal algorithm. As pointed out by [35], the PROPACK algorithm [41] can also be used to obtain Q and the complexity is the same as PowerMethod. Empirically, PROPACK is much less efficient than PowerMethod. Besides, a fast Monte Carlo algorithm [14, 29, 30] is used for computing an approximate SVD. Although this method greatly reduces the computational effort, it has some tunable parameters that need to be set, which makes it not easy to use.

3.3 Inexact proximal operator

Based on the PowerMethod algorithm, we can compute \({ {prox}}_{\lambda \mu , q}(\cdot )\) more efficiently. Specifically, as in Algorithm 3, we first adopt the PowerMethod algorithm to obtain orthogonal matrix Q. Then, we perform SVD on \(Q^{T}G\) which is much smaller than the original matrix G as \(k \ll n\), and the complexity is reduced from \({\mathscr {O}}(mn^{2})\) to \({\mathscr {O}}(mk^{2})\). The next steps are obtain the approximate \({ {prox}}_{\lambda \mu , q}(\cdot )\) by solving problem (9), where q is fixed to 1/2 or 2/3.

According to Algorithm 3, the \({ {prox}}_{\lambda \mu , q}(\cdot )\) step will be inexact. Thus, we should monitor the progress of F to make sure the proposed algorithm converges to a critical point. Motivated by [42], Yao et al. [35, 37] suggest employing the following condition to control the inexactness, that is,

where \(\delta > 0\) is a constant. Obviously, this condition makes the objective function F always decreased. However, in real application, this monotonically decreasing may makes the algorithm fall into narrow curved valley. A nonmontone condition is also used. Specifically, we accept \(X_{t + 1}\), if \(X_{t + 1}\) makes the value of F smaller than the maximum over previous m \((m > 1)\) iterations, that is,

Based on the analysis of above, we propose Algorithm 4 to obtain the approximation solution at iteration t.

3.4 The proposed algorithm

In this section, we will introduce our proposed \(l_{q}\) inexact APG (\(l_{q}\)iAPG) algorithm and discuss three techniques to accelerate the convergence of our proposed algorithm.

First, we use Nesterov’s accelerated gradient method. In the area of convex optimization, the Nesterov’s accelerated gradient method is a widely used technique for speed up the convergence of most machine learning algorithms, e.g., proximal algorithm. Recently, this method has also been employed to accelerate the convergence of nonconvex optimization. nmAPG [33], FaNCL-acc [35] and niAPG [37] are the state-of-the-art algorithms. Thus, similar to nmAPG, we will use the following steps in \(l_{q}\)iAPG algorithm,

Due to the (25) and (26) strategies, extensive experiments have shown that the convergence rate of \(l_{q}\)iAPG algorithm is significantly improved.

Since the regularization parameter \(\lambda \) plays an important role in our proposed algorithm, the tuning of \(\lambda \) becomes a subtle issue. Fortunately, this problem has been already considered in [29, 30], and a most reliable choice of the optimal regularization parameters of (15) are

Besides, the continuation technique is used in our proposed algorithm. As shown in [14, 30, 35], continuation technique is a commonly used method to improve the convergence speed of machine learning algorithms. The key idea of continuation technique is to choose a decreasing sequence \(\lambda _{t}: \lambda _{1}> \lambda _{2}> \ldots> {\bar{\lambda }} > 0\), then at tth iteration, use \(\lambda = \lambda _{t}\). Therefore, based on continuation technique, we suggest use the following regularization parameter at the \((t + 1)\)th iteration, that is,

where \(\eta \in (0, 1)\) is a constant, \(r_{t + 1}\) is the rank of \(X_{t + 1}\), and \({\bar{\lambda }}\) is a sufficiently small but positive real number, e.g., \(10^{-4}\).

Now, we outline our proposed algorithm as follows,

The third technique to accelerate the convergence rate of our proposed algorithm is to use the “sparse plus low-rank” structure [15]. Step 4 in \(l_{q}\)iAPG algorithm performs inexact proximal step. Obviously, for any \(t > 1\), there are two cases will be happen. When \(X_{t}\) is equal to \(Z_{t}\), \(Y_{t + 1} = X_{t} + \left( \frac{\theta _{t} - 1}{\theta _{t + 1}}\right) (X_{t} - X_{t - 1})\) . Therefore, step 1 of Algorithm 4 has

The first two terms are low-rank matrices, while the last term is a sparse matrix. This kind of “sparse plus low-rank” structure can speed up matrix multiplications. More precisely, for any \(V \in {\mathbb {R}}^{m \times k}\), GV can be obtained as

where \(\alpha _{t} = \frac{\theta _{t} - 1}{\theta _{t + 1}}\). Similarly, for any \(U \in {\mathbb {R}}^{m \times k}\), \(U^{T}G\) can be obtained as

When \(X_{t} = X_{t - 1}\), \(Y_{t + 1}\) becomes \(X_{t} + \left( \frac{\theta _{t}}{\theta _{t + 1}}\right) (Z_{t} - X_{t})\). Thus, step 1 of Algorithm 4 has

where \(\alpha _{t} = \frac{\theta _{t}}{\theta _{t + 1}}\). The same as above, we have

and

It should be note that the \(l_{q}\)iAPG algorithm is different from \(l_{q}\)PG algorithm. First, the \(l_{q}\)iAPG algorithm employs closed-form thresholding formulas, while \(l_{q}\)PG algorithm uses q-thresholding function. The q-thresholding function is often solved by numerical methods and only obtained its approximate solutions. Besides, the \(l_{q}\)iAPG algorithm allows inexact proximal step (Step 4), which makes the algorithm more efficient and faster. Moreover, the \(l_{q}\)iAPG algorithm uses more robust acceleration scheme, in which involves \(X_{t}\), \(X_{t - 1}\) and \(Z_{t}\). Finally, the \(l_{q}\)iAPG algorithm exploits the “sparse plus low-rank” structure to improve the speed of matrix multiplications.

The convergence of F(X) is shown as follows:

Theorem 3

Let \(\{X_{t}\}\) be the sequence generated by \(l_{q}\) iAGP algorithm, then

-

(i)

\(\{X_{t}\}\) is a minimization sequence and bounded, and has at least one accumulation point;

-

(ii)

\(F(X_{t})\)converges to \(F(X_{*})\), where \(X_{*}\)is any accumulation point of \(\{X_{t}\}\).

Proof

-

(i)

According to the control condition (23) in \(l_{q}\)iAGP algorithm, for any \(t = 1, 2, \ldots ,\) we have

$$\begin{aligned} F(X_{t}) \le F(X_{t - 1}) \le \cdots \le F(X_{0}). \end{aligned}$$(35)Thus, \(\{X_{t}\}\) is a minimization sequence of F(X), and \(\{F(X_{t})\}\) is bounded. Since \(\{X_{t}\} \subset \{X: F(X) \le F(X_{0})\}\), \(\{X_{t}\}\) is bounded and has at least one accumulation point.

-

(ii)

From above description, we also have \(F(X_{t})\) converges to \(F_{*}\), where \(F_{*}\) is a constant. Suppose an accumulation point of \(\{X_{t}\}\) is \(X_{*}\). Using the continuity of F(X), we obtain \(F(X_{t}) \rightarrow F_{*} = F(X_{*})\) as \(t \rightarrow \infty \). We complete the proof.□

4 Numerical experiments

In this section, we validate our proposed \(l_{q}\)iAPG algorithm for matrix completion problems by conducting a series of experiments. We compare our proposed method with the following state-of-the-art matrix completion algorithms.

-

(1)

Three nuclear norm minimization algorithms: APGL [13], AIS-Impute [34], and Active [40];

-

(2)

Two low-rank matrix decomposition-based methods: low-rank matrix fitting (LMaFit) [43], and alternating steepest descent algorithm (ASD) [45];

-

(3)

Two methods for solving models with schatten-q regularizers: HFPA [29], and multi-schatten-q norm surrogate (MSS) [46];

-

(4)

Three methods for solving models with nonconvex low-rank regularizers: iterative reweighted nuclear norm (IRNN) [47] algorithm, FaNCL [35], and niAPG [37].

We also tested singular value projection (SVP) [48], rank-one matrix pursuit method (R1MP ) [44], iterative reweighted least square(IRucLp) [49], and Soft-AIS [50]. In the following tests, however, these methods are slow or require large memory, so their results are not reported here.

In the following experiments, we follow the recommended settings of the parameters for these algorithms. For our proposed algorithm, we set \({\bar{\lambda }} = 10^{-4}\), \(\mu = 1.99\), and \(\eta = 0.75\). To prove the effectiveness of our proposed algorithm, we consider three cases: synthetic data, image recovery and recommendation problems. Besides, all the algorithms are stopped when the difference in objective values between consecutive iterations becomes smaller than \(10^{-5}\). All the algorithms are implemented in MATLAB R2014a on a Windows server 2008 system with Intel Xeon E5-2680-v4 CPU(3 cores, 2.4 GHz) and 256 GB memory.

4.1 Synthetic data

The test matrix \(M \in {\mathbb {R}}^{m \times n}\) with rank r is generated as \(M = M_{L}M_{R} + N\), where the entries of random matrices \(M_{L} \in {\mathbb {R}}^{m \times r}\) and \(M_{R} \in {\mathbb {R}}^{r \times n}\) are sampled i.i.d. from the standard normal distribution \({\mathscr {N}}(0, 1)\), and entries of N sampled from \({\mathscr {N}}(0, 0.1)\). Without loss of generality, we set \(m = n\) and \(r = 5\). We then sampled a subset \(\varOmega \) of p entries uniformly at random as the observations, where \(p = 2mrlog(m)\).

Similar to [35], we evaluate the recovery performance of the algorithms based on the i) normalized mean squared error NMSE = \(\| {\mathscr {P}}_{\varOmega ^{\perp }}(X - UV)\| _{F}/\| {\mathscr {P}}_{\varOmega ^{\perp }}(UV)\| _{F}\), where X is the recovered matrix and \(\varOmega ^{\perp }\) stands for the unobserved positions; ii) rank of X; and iii) running time. We vary m in the range \(\{1000, 2000, 3000, 5000\}\). For each algorithm, we present its average NMSE, rank and running time with 10 runs.

The average NMSE, rank, and running time are reported in Table 1. The results in Table 1 demonstrate that our proposed \(l_{q}\)iAPG is a competitive algorithm. More precisely, \(l_{q}\)iAPG algorithm runs fastest among these algorithms. In terms of accuracy, \(l_{q}\)iAPG attained satisfying performance. In Table 1, we can find that \(l_{q}\)iAPG algorithm achieves most accurate solutions in nearly all problems. We also observe that as the size of the matrix increases, the \(l_{q}\)iAPG algorithm will be more faster than other algorithms. Moreover, \(l_{q}\)iAPG algorithm is able to solve large-scale random matrix completions. Specifically, the running time of \(l_{q}\)iAPG algorithm for solving problem with \(m = 10^{5}\), \(sr = 0.12\% \) is within 1159.2 s (NMSE is smaller than 0.0141), while most other algorithms cannot get satisfactory results within this time. Therefore, taking both accuracy and converge speed into consideration, our proposed \(l_{q}\)iAPG algorithm has the best recovery performance among these algorithms.

4.2 Image recovery

In this section, we will apply \(l_{q}\)iAPG algorithm to image inpainting problems. In image inpainting problems, the values of some of the pixels of the image are missing, and our mission is to find out the missing values. If the image is low rank or numerical low rank, we can solve the image inpainting problem as a matrix completion problem. In this tests, we use the following benchmark images: Barbara, Bridge, Clown, Couple, Crowd, Fingerprint, Girlface, Houses, Kiel, Lighthouse, Tank, Truck, Trucks, and Zelda. The size of each image is \(512 \times 512\). We directly deal with the original images. As the image matrix is not guaranteed to be low rank, we use rank 50 for the estimated matrix for test. We randomly exclude \(50\%\) of the pixels in the images, and the remaining ones are used as the observations. We use peak signal-to-noise ratio (PSNR) [51] and running time to evaluate the recovery performance of the algorithms. We represent their average results with 5 runs.

We list the results in terms of the PSNR in Table 2. We also exhibit the results of image recovery by using different algorithms for Zelda in Fig. 1. The results in Fig. 1 demonstrate that our proposed \(l_{q}\)iAPG algorithm performs best among 12 algorithms. We also can easily find from Table 2 that our \(l_{q}\)iAPG, niAPG, HFPA, and ASD algorithms achieve the best results. Although, the results obtained by HFPA and ASD algorithms are slightly better than \(l_{q}\)iAPG. Our algorithm is much faster than HFPA and ASD algorithms. In addition, although niAPG algorithm is slightly faster than our algorithm, the solutions obtained by our algorithm are more accurate. More precisely, \(l_{q}\)iAPG algorithm achieves most accurate solutions 7 times on all images, while niAPG achieves most accurate solutions only 4 times. Again, taking both accuracy and converge speed into consideration, our proposed \(l_{q}\)iAPG algorithm is a competitive algorithm in the field of image recovery.

4.3 Recommendation

To further demonstrate the effectiveness of our proposed method, in this section we apply \(l_{q}\)iAPG algorithm on Jester and MovieLens datasets. We consider six datasets: Jester1, Jester2, Jester3, Jester-all, MovieLens-100K, and MovieLens-1M. The characteristics of these datasets are shown in Table 3. The Jester datasets are collected from a joke recommendation system. The whole data is stored in three excel files with the following characteristics [13].

-

(1)

jester-1: 24,983 users who have rated 36 or more jokes;

-

(2)

jester-2: 23,500 users who have rated 36 or more jokes;

-

(3)

jester-3: 24,938 users who have rated between 15 and 35 jokes.

The MovieLens datasets are collected from the MovieLens website. The characteristics of these data sets are list as follows [13]:

-

(1)

movie-100K: 100,000 ratings for 1682 movies by 943 users;

-

(2)

movie-1M: 1 million ratings for 3900 movies by 6040 users.

The Jester-all is obtained by combining Jester1, Jester2, and Jester3 datasets. In the following test, we follow the setup in [35], and randomly pick up \(50\%\) of the observed for training and use the remaining \(50\%\) for testing. We use the root mean squared error (RMSE) and running time to evaluate the recovery performance of the algorithms. The RMSE is defined as \({\hbox {RMSE}} = \sqrt{\| {\mathscr {P}}_{{\bar{\varOmega }}}(X - M)\| _{F}^{2}/|{\bar{\varOmega }}|_{1}}\), where \({\bar{\varOmega }}\) is the test set, X is the recovered matrix. The test of each algorithm is repeated 5 times.

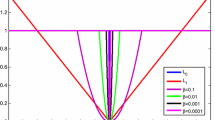

Matrix completion results on Jester and MovieLens datasets by using different algorithms. In the first and second rows, we apply different algorithms on Jester datasets and depict the RMSE along the CPU times. In the last row, we apply different algorithms on MovieLens datasets and depict the RMSE along the CPU times

The reconstruction results in terms of RMSE and running time are listed in Table 4. We depict the RMSE along the CPU times and show these results in Fig. 2. As can be seen form Table 4, our proposed algorithm, APGL, and niAPG achieve the lowest RMSE in nearly all problems. We also observe that the results obtained by APGL are slightly better than ours, but our method is much faster. Besides, our proposed algorithm can be runs on all six data sets, while many algorithms only run partial data sets. Furthermore, Fig. 2 demonstrate that our proposed algorithm decreases the RMSE much faster than others. This is the third time to show that our proposed algorithm is a competitive algorithm in the field of matrix completion.

5 Conclusion

In this paper, we focus on the large-scale low-rank matrix completion problems with \(l_{q}\) regularizers and proposed an efficient and fast inexact thresholding algorithm called \(l_{q}\)iAPG algorithm to handle such problems. The key idea is to employ the closed-form q-thresholding operator to approximate the rank of a matrix and power method to approximate the SVD procedure. At the same time, our proposed algorithm inherits the great efficiency advantages of first-order gradient-based methods and is simple and easy to use, which is more suitable for large-scale matrix completion problems. In addition, we adopted three techniques to accelerate the convergence rate of our proposed algorithm, which are Nesterov’s accelerated gradient method, continuation technique, and the “sparse plus low-rank” structure. Furthermore, a convergence analysis of the \(l_{q}\)iAPG algorithm has shown that the sequence \(\{X_{t}\}\) generated by \(l_{q}\)iAPG algorithm is bounded and has at least one accumulation point. More important, we also shown that the objective function F(X) converges to \(F(X_{*})\), where \(X_{*}\) is any accumulation point of \(\{X_{t}\}\). Finally, extensive experiments on matrix completion problems validated that our proposed algorithm is more efficient and faster. Specifically, we compare our proposed algorithm with state-of-the-art algorithms on a series of scenarios, including synthetic data, image recovery and recommendation problems. All results demonstrated that our proposed algorithm is able to achieve comparable recovery performance, while being faster and more efficient than state-of-the-art methods.

References

Rennie J, Srebro N (2005) Fast maximum margin matrix factorization for collaborative prediction. In: Proceedings of the 22nd international conference on machine learning (ICML-05), pp 713–719

Koren Y (2008) Factorization meets the neighborhood: A multifaceted collaborative filtering model. In: Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining, pp 426–434

Li X, Wang Z, Gao C, Shi L (2017) Reasoning human emotional responses from large-scale social and public media. Appl Math Comput 310:128–193

Peng X, Zhang Y, Tang H (2016) A unified framework for representation-based subspace clustering of out-of-sample and large-scale data. IEEE Trans Neural Netw Learn Syst 27(12):2499–2512

Yang L, Nie F, Gao Q (2018) Nuclear-norm based semi-supervised multiple labels learning. Neurocomputing 275:940–947

Yang L, Gao Q, Li J, Han J, Shao L (2018) Zero shot learning via low-rank embedded semantic autoencoder. In: Proceedings of the international joint conference on artificial intelligence, pp 2490–2496

Cabral R, De la Torre F, Costeira JP, Bernardino A (2015) Matrix completion for weakly-supervised multi-label image classification. IEEE Trans Pattern Anal Mach Intell 37(1):121–135

Yang L, Shan C, Gao Q, Gao X, Han J, Cui R (2019) Hyperspectral image denoising via minimizing the partial sum of singular values and superpixel segmentation. Neurocomputing 330:465–482

Recht B, Fazel M, Parrilo PA (2010) Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev 52(3):471–501

Candès EJ, Recht B (2009) Exact matrix completion via convex optimization. Found Compt Math 9(6):717–772

Fazel M (2002) Matrix rank minimization with applications. Ph.D. thesis, Stanford University

Cai J-F, Candès EJ, Shen Z (2010) A singular value thresholding algorithm for matrix completion. SIAM J Optim 20(4):1956–1982

Toh K-C, Yun S (2010) An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pac J Optim 6(3):615–640

Ma S, Goldfarb D, Chen L (2011) Fixed point and Bregman iterative methods for matrix rank minimization. Math Program 128(1–2):321–353

Mazumder R, Hastie T, Tibshirani R (2010) Spectral regularization algorithms for learning large incomplete matrices. J Mach Learn Res 11:2287–2322

Yao Q, Kwok JT (2015) Accelerated inexact soft-impute for fast largescale matrix completion. In: Proceedings of the international joint conference on artificial intelligence, pp 4002–4008

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its Oracle properties. J Am Stat Assoc 96:1348–1361

Zhang T (2010) Analysis of multi-stage convex relaxation for sparse regularization. J Mach Learn Res 11:1081–1107

Candès EJ, Wakin MB, Boyd SP (2008) Enhancing sparsity by reweighted \(l_{1}\) minimization. J Fourier Anal Appl 14(5–6):877–905

Hu Y, Zhang D, Ye J, Li X, He X (2013) Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans Pattern Anal Mach Intell 35(19):2117–2130

Zhang C-H (2010) Nearly unbiased variable selection under minimax concave penalty. Ann Stat 38(2):894–942

Marjanovic G, Solo V (2012) On \(l_{q}\) optimization and matrix completion. IEEE Trans Signal Process 60(11):5714–5724

Abu Arqub O, Abo-Hammour Z (2014) Numerical solution of systems of second-order boundary value problems using continuous genetic algorithm. Inf Sci 279:396–415

Abu Arqub O, AL-Smadi M, Momani S, Hayat M (2016) Numerical solutions of fuzzy differential equtions using reproducing kernel Hilbert space method. Soft Comput 20(8):3283–3302

Abu Arqub O, AL-Smadi M, Momani S, Hayat M (2017) Application of reproducing kernel algorithm for solving second-order, two-point fuzzy boundary value problems. Soft Comput 21(23):7191–7206

Abu Arqub O (2017) Adaptation of reproducing kernel algorithm for solving fuzzy Fredholm–Volterra integrodifferential equations. Neural Comput Appl 28(7):1591–1610

Xu Z, Chang X, Xu F, Zhang H (2012) \(L_{1/2}\) regularization: a thresholding representation theory and a fast solver. IEEE Trans Neural Netw Learn Syst 23(7):1013–1027

Cao W, Sun J, Xu Z (2013) Fast image deconvolution using closed-form thresholding formulas of \(l_{q}(q = 1/2, 2/3)\) regularization. J Vis Commun Image R 24(1):31–41

Peng D, Xiu N, Yu J (2017) \(S_{1/2}\) regularization methods and fixed point algorithms for affine rank minimization problems. Comput Optim Appl 67:543–569

Wang Z, Wang W, Wang J, Chen S (2019) Fast and efficient algorithm for matrix completion via closed-form 2/3-thresholding operator. Neurocomputing 330:212–222

Qian W, Cao F (2019) Adaptive algorithms for low-rank and sparse matrix recovery with truncated nuclear norm. Int J Mach Learn Cyb 10(6):1341–1355

Nesterov Y (2013) Gradient methods for minimizing composite functions. Math Program 140(1):125–161

Li H, Lin Z (2015) Accelerated proximal gradient methods for noncovex programming. In: Proceedings of the advances in neural information processing systems, pp 379–387

Yao Q, Kwok J (2019) Accelerated and inexact soft-impute for large-scale matrix and tensor completion. IEEE Trans Knowl Data En 31(9):1665–1679

Yao Q, Kwok J, Wang T, Liu T (2019) Large-scale low-rank matrix learning with nonconvex regularizers. IEEE Trans Pattern Anal Mach Intell 41(11):2628–2643

Gu B, Wang D, Huo Z, Huang H (2018) Inexact proximal gradient methods for non-convex and non-smooth optimization. In: AAAI conference on artificial intelligence

Yao Q, Kwok J, Gao F, Chen W, Liu T (2017) Efficient inexact proximal gradient algorithm for nonconvex problems. In: Proceedings of the international joint conference on artificial intelligence, pp 3308–3314

Oh T, Matsushita Y, Tai Y, Kweon I (2018) Fast randomized singular value thresholding for low-rank optimization. IEEE Trans Pattern Anal Mach Intell 40(2):376–391

Halko N, Martinsson P-G, Tropp J (2011) Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev 53(2):1805–1811

Hsieh C.-J, Olsen P (2014) Nuclear norm minimization via active subspace selection. In: Proceedings of the 31st international conference on machine learning (ICML-14), pp 575–583

Larsen R (1998) Lanczos bidiagonalization with partial reorthogonalization. Department of Computer Science, Aarhus University, DAIMI PB-357

Gong P, Zhang C, Lu Z, Huang J, Ye J (2013) A general iterative shrinkage and tresholding algorithm for non-convex regularized optimization problems. In: Proceedings of the 30th international conference on machine learning (ICML-13), pp 37–45

Wen Z, Yin W, Zhang Y (2012) Solving a low-rank factorization model for matrix completion by a nonlinear successive over-relaxation algorithm. Math Program Comput 44(4):333–361

Wang Z, Lai M, Lu Z, Fan W, Davulcu H, Ye J (2015) Orthogonal rank-one matrix pursuit for low rank matrix completion. SIAM J Sci Comput 37(1):A488–A514

Tanner J, Wei K (2016) Low rank matrix completion by alternating steepest descent methods. Appl Comput Harmon A 40:417–420

Xu C, Lin Z, Zha H (2017) A unified convex surrogate for the schatten-\(p\) norm. In: AAAI conference on artificial intelligence

Lu C, Tang J, Yan S, Lin Z (2016) Noncovex nonsmooth low rank minimization via iteratively reweighted nuclear norm. IEEE Trans Image Process 25(2):829–839

Jain P, Meka R, Dhillon I (2010) Guaranteed rank minimization via singular value projection. In: Proceedings of the advances in neural information processing systems, pp 937–945

Lai M, Xu Y, Yin W (2013) Improved iteratively rewighted least squares for unconstrained smoothed \(l_{p}\) minimization. SIAM J Numer Anal 5:927–957

Hastie T, Mazumder R, Lee J, Zadeh R (2015) Matrix completion and low-rank SVD via fast alternating least squares. J Mach Learn Res 16:3367–3402

Thu Q, Ghanbari M (2008) Scope of validity of PSNR in image/video quality assesment. Electron Lett 44(13):800–801

Acknowledgements

This work is supported in part by the Natural Science Foundation of China under Grant 61273020, and in part by the Fundamental Research Funds for the Central Universities under Grant XDJK2019B063.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Wang, Z., Gao, C., Luo, X. et al. Accelerated inexact matrix completion algorithm via closed-form q-thresholding \((q = 1/2, 2/3)\) operator. Int. J. Mach. Learn. & Cyber. 11, 2327–2339 (2020). https://doi.org/10.1007/s13042-020-01121-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13042-020-01121-7