Abstract

The global exponential stability of the equilibrium point for uncertain memristor-based recurrent neural networks is studied in this paper. The memristor-based recurrent neural networks considered here are based on a realistic memristor model and can be regarded as an extension of some existing memristor-based recurrent neural networks. By virtue of homeomorphism theory, it is proved that the uncertain memristor-based recurrent neural networks have a unique equilibrium point under some mild assumptions. Moreover, the unique equilibrium point is proved to be globally exponentially stable by constructing a suitable Lyapunov functional. Finally, the obtained results are applied to determine the dynamical behaviors and the circuit design of memristor-based recurrent neural networks through some numerical examples.

1 Introduction

In 1971, Professor Chua [1] theoretically predicted the existence of a new two-terminal circuit element called the memristor (a contraction of memory and resistor). Chua argued that the memristor has every right to be regarded as the fourth fundamental passive circuit element. However, it was not until 2008 that the Williams group built the first solid-state memristor, which was modeled as a thin semiconductor film (\(\hbox {TiO}_2\)) sandwiched between two metal contacts [2]. Because of its memory feature, the memristor has generated unprecedented worldwide interest for its potential applications in next-generation computers and powerful brain-like neural computers (see [3, 4]).

In recent years, various recurrent neural networks have been proposed, and their dynamic behaviors have been studied extensively because of their wide applications in pattern recognition, image processing, associative memory, neurodynamic optimization, and so on (see [5,6,7,8,9,10,11,12,13,14,15]). Meanwhile, more and more researchers have observed that time delays are unavoidable and can greatly influence the dynamical behaviors of neural networks (see [16,17,18,19,20]). In general, these delays include discrete delays, time-varying delays, and distributed delays. However, the connection weights of a conventional recurrent neural network are implemented by resistors and do not have any memory property. The memristor works like a biological synapse, with its conductance varying with experience, or with the current flowing through it over time [21, 22]. Compared with the resistor, the memristor is better suited to serve as a synapse in neural networks because of its nanoscale size, automatic information storage, and nonvolatility over long periods of power-down [23]. This special behavior can be exploited in artificial neural networks, i.e., memristor-based neural networks (see [21]). Memristor-based neural networks have proven to be a promising architecture for neuromorphic systems owing to their non-volatility, high density, and unique memristive characteristics. There exist several different mathematical models for memristor-based neural networks. For example, in 2010, Hu and Wang [24] proposed a mathematical model for memristor-based neural networks and studied its global uniform asymptotic stability by constructing a proper Lyapunov functional. In [25], combining the typical current–voltage characteristics of the memristor, Wu et al. introduced a simple model of memristor-based recurrent neural networks.

It is well known that the applications of neural networks rely heavily on the dynamical behaviors of the networks, such as stability, periodic oscillation, and chaos. Meanwhile, the analysis of the dynamical behaviors of memristor-based neural networks has been found useful in a number of engineering applications and has therefore been studied extensively (see [22, 26,28,29,30,31,32,32]). Based on a realistic memristor model and differential inclusion theory, the authors of [22] studied the convergence and attractivity of memristor-based cellular neural networks with time delays. Wu et al. [28] introduced some criteria, dependent on the network parameters, for the Lagrange stability of memristor-based recurrent neural networks with discrete and distributed delays. The paper [33] presented some new theoretical results on the invariance and attractivity of memristor-based cellular neural networks with time-varying delays. In [34], Wen et al. designed a simple memristor-based neural network model. Based on fuzzy theory and the Lyapunov method, they studied the global exponential synchronization of a class of memristor-based recurrent neural networks with time-varying delays. In [35], the global asymptotic stability and synchronization of a class of fractional-order memristor-based delayed neural networks were investigated.

Meanwhile, estimation errors are unavoidable in the numerical values of the neural network parameters, including the neuron firing rate and the weight coefficients, which depend on certain resistances and capacitances. Moreover, external disturbances such as noise are also unavoidable. It should be noted that such uncertainty may change the stability of the neural network. On the other hand, transmission delay is also unavoidable when signals are communicated among neurons, and it may lead to undesired complex dynamical behaviors. In general, transmission delays include discrete delays, time-varying delays, and distributed delays. For example, by exploiting all possible information in mixed time delays, two discrete-time mixed-delay neural networks were studied separately in [36, 37]. So, it is reasonable to study the dynamical behaviors of uncertain memristor-based neural networks with time delays. Recently, a growing body of literature has focused on the stability of uncertain neural networks with mixed time delays (see [36,38,39,39]). For example, in [38], the author studied the global asymptotic robust stability of delayed neural networks with norm-bounded uncertainties. The problems of robust stability analysis and robust controller design for uncertain memristive neural networks were studied in [40]. Reference [41] was concerned with the global robust synchronization of multiple memristive neural networks with nonidentical uncertain parameters.

However, as far as we know, very few works address the stability of uncertain memristor-based recurrent neural networks with time-varying delays and distributed delays. Motivated by the above works, we study the existence and global exponential stability of the equilibrium point for a class of uncertain memristor-based recurrent neural networks with time-varying delays and distributed delays. The neural network considered in this paper can be regarded as an extension of the neural network in [34]. The paper is organized as follows. In Sect. 2, we introduce the memristor-based recurrent neural network model and some related preliminaries. In Sect. 3, we prove the existence and global exponential stability of the equilibrium point for a class of uncertain memristor-based recurrent neural networks. In Sect. 4, we present several numerical simulations to show the effectiveness of our results. Finally, the main conclusions drawn in the paper are summarized.

\(\mathbf{Notation }\) Given the vector \(x=(x_1,x_2,\ldots ,x_n)^T\), where the superscript T is the transpose operator, we let \(\Vert x\Vert :=(\sum \nolimits _{i=1}^nx_i^2)^\frac{1}{2}\). \({\mathbb{R}}\) is the set of real numbers. Let \(A=(a_{ij})\in {\mathbb{R}}^{n\times n}\) and define \(\Vert A\Vert =\sqrt{\lambda _M(A^TA)}\), where \(\lambda _M(A)\) stands for the operation of taking the maximum eigenvalue of A. \(I\in {\mathbb{R}}^{n\times n}\) is the \(n\times n\) identity matrix. For a real symmetric matrix A, \(A<0(>0)\) means that A is negative (positive) definite.
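The norms and the definiteness test in the Notation can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper names `spectral_norm` and `is_positive_definite` and the sample matrix are illustrative choices, not part of the paper.

```python
import numpy as np

def spectral_norm(A):
    """Return sqrt(lambda_M(A^T A)), the matrix norm defined in the Notation."""
    # A^T A is symmetric positive semidefinite, so eigvalsh is applicable.
    eigenvalues = np.linalg.eigvalsh(A.T @ A)
    # Guard against tiny negative round-off before taking the square root.
    return float(np.sqrt(max(eigenvalues.max(), 0.0)))

def is_positive_definite(S):
    """Check S > 0 for a real symmetric matrix S via its smallest eigenvalue."""
    return bool(np.linalg.eigvalsh(S).min() > 0.0)

A = np.array([[2.0, -1.0], [0.5, 1.5]])  # arbitrary illustrative matrix
x = np.array([3.0, 4.0])

print(np.linalg.norm(x))   # Euclidean norm of x, here 5.0
print(spectral_norm(A))    # agrees with np.linalg.norm(A, 2)
print(is_positive_definite(A.T @ A + np.eye(2)))
```

The same `is_positive_definite` check applies to conditions such as \({\underline{D}}-{\widehat{M}}>0\) once the matrices are available.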

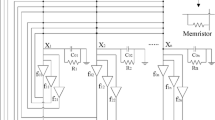

Fig. 1 Circuit of memristor-based recurrent neural network in [34]

2 Neural network model and preliminaries

As shown in [34], the memristor-based recurrent neural network can be implemented by very-large-scale integration (VLSI) circuits with memristors (see Fig. 1). By Kirchhoff’s current law, the following memristor-based recurrent neural network was introduced in [34],

where \(f_j\) is the activation function, \(\tau _j(t)\) is the time-varying delay, and \(x_i(t)\) is the voltage of the capacitor \(C_i\). \(R_{f_{ij}}\) is the resistor between the feedback function \(f_j(x_j(t))\) and \(x_i(t)\), and \(R_{g_{ij}}\) is the resistor between the feedback function \(f_j(x_j(t-\tau _j(t)))\) and \(x_i(t)\). \(\text {sign}_{ij}\) is defined as

\(W_i\) is the memductance of the i-th memristor satisfying

\(I_i\) is an external input or bias. Let

and

Then, by (3), we obtain that

For simplicity, we let

It follows that \([\sum \nolimits _{j=1}^n(\frac{1}{C_{i}R_{f_{ij}}}+\frac{1}{C_{i}R_{g_{ij}}})+\frac{W_i(x_i)}{C_{i}}]x_i =[d_i+{m_i}s(x_i)]x_i=d_ix_i+{m_i}|x_i|\). Hence, the memristor-based neural network (1) can be simplified as follows,

or,

where \(D=\text {diag}\{d_1,d_2,\ldots ,d_n\}\), \({M}=\text {diag}\{{m}_1,{m}_2,\ldots ,{m}_n\}\), \(A=(a_{ij})_{n\times n}\), \(B=(b_{ij})_{n\times n}\), \(|x(t)|=(|x_1(t)|,\ldots ,|x_n(t)|)^T\), \(\tau (t)=(\tau _1(t),\tau _2(t),\ldots ,\tau _n(t))^T\), \(x (t-\tau (t))=(x_1(t-\tau _1(t)),\ldots ,x_n(t-\tau _n (t)))^T\), and \(U=(U_{1},U_{2},\ldots ,U_{n})^{T}.\)

Throughout the paper, we also need the following assumptions introduced in [34].

\({\mathbf{(A_1)}}\) For \(i\in \{1, 2,\ldots , n\}\), the activation function \(f_i\) is Lipschitz continuous. That is, there exists \(l_i>0\) such that for all \(r_1, r_2\in {\mathbb{R}}\) with \(r_1\ne r_2\),

Here, we let \(L=\text {diag}\{l_1,l_2,\ldots ,l_n\}\).

\({\mathbf{(A_2)}}\) For \(i\in \{1, 2, \ldots , n\}\), \(\tau _i(t)\) satisfies

Here, we let \({\overline{\tau }}=\max \{{\overline{\tau }}_1,\ldots ,{\overline{\tau }}_n\}\).

Different from [34], we will study the following memristor-based neural network with time-varying delays and distributed delays,

\(i=1,2,\ldots ,n\). Neural network (11) can be considered as an extension of neural network (7). \(e_{ij}\) and the time delay \(\mu _j>0\) are two constants, and \(\int _{t-\mu _{j}}^{t} f_j(x_j(s))ds\) is the distributed delay. The memristor-based neural network (11) can be rewritten as follows,

where \(E=(e_{ij})_{n\times n}\). It is clear that the neural network (12) can be considered as an extension of the neural network in [34] (i.e., (7) in this paper).
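For orientation, a vector form that is consistent with the notation above and with the map \(H(x)=-Dx-{M}|x|+(A+B+E\mu )f(x)+U\) used in the proof of Theorem 1 would read as follows. This is a sketch reconstructed from those definitions, with the integral taken componentwise and \(\mu =\text {diag}\{\mu _1,\ldots ,\mu _n\}\); it is not a verbatim copy of (12).

\[
\dot{x}(t)=-Dx(t)-{M}|x(t)|+Af(x(t))+Bf(x(t-\tau (t)))+E\int _{t-\mu }^{t}f(x(s))\,ds+U,
\]

where the j-th component of the integral is \(\int _{t-\mu _{j}}^{t} f_j(x_j(s))ds\). At a constant state \(x(t)\equiv x^*\), the delayed term equals \(Bf(x^*)\) and the integral equals \(\mu f(x^*)\), so the right-hand side reduces to \(H(x^*)\).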

Next, we introduce the assumptions about the connection weight matrices as follows.

\({\mathbf{(A_3)}}\) The parameters \(D=\text {diag}\{d_1,d_2,\ldots ,d_{n}\}\), \({M}=\text {diag}\{{m}_1,{m}_2,\ldots ,{m}_n\}\), \(A=(a_{ij})_{n \times n}\), \(B=(b_{ij})_{n \times n}\), and \(E=(e_{ij})_{n \times n}\) are assumed to be intervalised as follows,

Here, we let \({\underline{A}}=({\underline{a}}_{ij})_{n\times n}\), \({\underline{B}}=({\underline{b}}_{ij})_{n\times n}\), \({\underline{E}}=({\underline{e}}_{ij})_{n\times n}\), \(\overline{A}=(\overline{a}_{ij})_{n\times n}\), \({\overline{B}}=({\overline{b}}_{ij})_{n\times n}\), and \({\overline{E}}=({\overline{e}}_{ij})_{n\times n}\). From the above assumption \({\mathbf{(A_3)}}\), the diagonal matrix D is invertible.

Lemma 1

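If \(H(x)\in {\mathcal {C}}^{0}\) satisfies the following conditions

(i) \(H(x)\) is injective on \({\mathbb{R}}^{n}\);

(ii) \(\Vert H(x)\Vert \rightarrow \infty\) as \(\Vert x\Vert \rightarrow \infty\),

then H(x) is a homeomorphism of \({\mathbb{R}}^{n}\).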

The following lemmas are necessary for proving the existence and global exponential stability of the equilibrium point for the uncertain memristor-based recurrent neural network (11).

Lemma 2

[42] For \(x\in {\mathbb{R}}^{n}\), \(A\in A_{\mathcal {I}}\) and any positive diagonal matrix P, we have

where \(A^{*}=\frac{1}{2}({\underline{A}}+\overline{A})\) and \(A_{*}=\frac{1}{2}(\overline{A}-{\underline{A}}).\)

Lemma 3

[42] For any \(B\in B_{\mathcal {I}}\) and \(E\in E_{\mathcal {I}}\), we have

where

\(B^{*}=\frac{1}{2}({\underline{B}}+{\overline{B}})\), \(B_{*}=\frac{1}{2}({\overline{B}}-{\underline{B}})\), \(E^{*}=\frac{1}{2}({\underline{E}}+{\overline{E}})\), \(E_{*}=\frac{1}{2}({\overline{E}}-{\underline{E}})\), \(\hat{B}=(\hat{b}_{ij})_{n\times n}\) with \(\hat{b}_{ij}=\max \{|{\underline{b}}_{ij}|,|{\overline{b}}_{ij}|\}\), and \(\hat{E}=(\hat{e}_{ij})_{n\times n}\) with \(\hat{e}_{ij}=\max \{|{\underline{e}}_{ij}|,|{\overline{e}}_{ij}|\}\).
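The midpoint, radius, and entrywise-maximum matrices used in Lemmas 2 and 3 are simple to compute from the interval bounds. The sketch below assumes NumPy; the function name `interval_summary` and the numerical bounds are hypothetical and serve only as an illustration.

```python
import numpy as np

def interval_summary(lower, upper):
    """Return (midpoint, radius, hat) for an interval matrix [lower, upper]."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    if np.any(lower > upper):
        raise ValueError("lower bound exceeds upper bound")
    mid = 0.5 * (lower + upper)   # B^* = (B_low + B_up) / 2
    rad = 0.5 * (upper - lower)   # B_* = (B_up - B_low) / 2
    hat = np.maximum(np.abs(lower), np.abs(upper))  # max{|b_low|, |b_up|} entrywise
    return mid, rad, hat

# Hypothetical bounds, chosen only for illustration.
B_low = np.array([[-0.2, 0.1], [0.0, -0.5]])
B_up = np.array([[0.4, 0.3], [0.2, -0.1]])
B_mid, B_rad, B_hat = interval_summary(B_low, B_up)

# Any B inside the interval satisfies |B - B^*| <= B_* and |B| <= B_hat entrywise.
rng = np.random.default_rng(0)
B = B_low + rng.random(B_low.shape) * (B_up - B_low)
print(np.all(np.abs(B - B_mid) <= B_rad + 1e-12))
print(np.all(np.abs(B) <= B_hat + 1e-12))
```

Working with the pair (midpoint, radius) instead of the raw bounds is what lets the lemmas bound every admissible matrix in the interval at once.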

Lemma 4

[43] For any constant matrix \(\chi \in {\mathbb{R}}^{n\times n}\), \(\chi =\chi ^{T}\), scalar \(v>0\), and vector function \(F:[0,v]\rightarrow {\mathbb{R}}^{n}\) such that the integrations concerned are well-defined, we have

3 Main results

In this section, we will study the existence and global exponential stability of the equilibrium point for the uncertain memristor-based neural network (11). For simplicity, we let

where \({\underline{{m}}}_{i},{\overline{{m}}}_{i}\) are from (13), and \(\mu _i\) is from (11).

Theorem 1

Under the assumptions (\({\mathbf{A_1}}\)), (\({\mathbf{A_2}}\)), and (\({\mathbf{A_3}}\)), if the diagonal matrix \({\underline{D}}-{\widehat{M}}>0\) and there exists a positive diagonal matrix \(P=\text {diag}\{p_1,p_2,\ldots ,p_n\}\) such that

then, the memristor-based neural network (11) has a unique equilibrium point.

Proof

Let \(H(x)=-Dx-{M}|x|+(A+B+E\mu )f(x)+U\). It is obvious that \(H(x^*)=0\) if and only if \(x^*\) is an equilibrium point of the memristor-based neural network (11). Next, based on Lemma 1, we will prove that \(H(\cdot )\) is a homeomorphism of \({\mathbb{R}}^{n}\), so that the memristor-based neural network (11) has a unique equilibrium point.

\(\mathbf{Step\,1: }\) We first prove \(H(\cdot )\) is injective, i.e., the hypothesis (i) in Lemma 1 holds. In fact, for any \(x,y\in {\mathbb{R}}^{n}\) with \(x\ne y\), we have

The proof of this step is divided into the following two cases:

\(\mathbf{Case\,1: }\)\(f(x)-f(y)=0\). If \(f(x)-f(y)=0\), then \(H(x)-H(y)=-D(x-y)-{M}(|x|-|y|)\), and

Hence, \(H(x)-H(y)\ne 0\).

\(\mathbf{Case\,2: }\)\(f(x)-f(y)\ne 0\). In this case, multiplying both sides of (16) by \(2(f(x)-f(y))^TP\), we have

By the assumption (\({\mathbf{A_1}}\)) and the fact that P and M are two diagonal matrices, it is clear that

Meanwhile,

Substituting (20) into (18), we have

Hence, by (21), considering the assumptions that \(\varPsi\) is negative definite and \(f(x)-f(y)\ne 0\), we obtain

which means \(H(x)-H(y)\ne 0\).

Thus, from \(\mathbf{Cases\,1 }\) and \(\mathbf{2 }\), it follows that H is injective.

\(\mathbf{Step\,2: }\) We next prove that the hypothesis (ii) in Lemma 1 holds, i.e., \(\Vert H(x)\Vert \rightarrow \infty\) as \(\Vert x\Vert \rightarrow \infty\). In fact, by the definition of H, we have

It is noted that \(\Vert {\underline{D}}\Vert -\Vert {\widehat{M}}\Vert>0\) since the diagonal matrix \({\underline{D}}-\widehat{M}\) is positive definite. On the other hand, letting \(y=0\) in (21), we have

That is,

Then, by (24), it follows that \(\Vert 2P\Vert \Vert H(x)-H(0)\Vert \ge -\lambda _M(\varPsi )\Vert f(x)-f(0)\Vert\), and consequently,

It is also noted that \(-\lambda _M(\varPsi )>0\). Hence,

Thus, we obtain that \(\Vert H(x)\Vert \rightarrow \infty\) as \(\Vert x\Vert \rightarrow \infty\).

Then, by Lemma 1, \(H(\cdot )\) is a homeomorphism of \({\mathbb{R}}^{n}\), and consequently the memristor-based neural network (11) has a unique equilibrium point. \(\square\)
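Theorem 1 guarantees that \(H(x)=0\) has exactly one solution; it does not say how to compute it. As a numerical illustration, the sketch below locates the zero of \(H\) by a plain fixed-point iteration. It assumes NumPy, \(f=\tanh\) as the activation, and small hypothetical matrices for which the iteration is a contraction; this is a swapped-in numerical technique, not the homeomorphism argument of the proof.

```python
import numpy as np

def H(x, D, M, W, U, f=np.tanh):
    """H(x) = -D x - M |x| + W f(x) + U, where W stands for A + B + E mu."""
    return -D @ x - M @ np.abs(x) + W @ f(x) + U

def find_equilibrium(D, M, W, U, x0, f=np.tanh, tol=1e-12, max_iter=10_000):
    """Iterate x <- D^{-1}(-M|x| + W f(x) + U) until successive iterates agree."""
    d = np.diag(D)
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        x_next = (-M @ np.abs(x) + W @ f(x) + U) / d
        if np.max(np.abs(x_next - x)) < tol:
            return x_next
        x = x_next
    raise RuntimeError("fixed-point iteration did not converge")

# Hypothetical parameters (not the values of the paper's tables).
D = np.diag([3.0, 3.0])
M = np.diag([0.5, 0.5])
A = np.array([[0.3, -0.2], [0.1, 0.4]])
B = np.array([[0.2, 0.1], [-0.1, 0.2]])
E = np.array([[0.1, 0.0], [0.0, 0.1]])
mu = np.diag([0.6, 0.6])
U = np.array([1.0, -0.5])
W = A + B + E @ mu

x_star = find_equilibrium(D, M, W, U, x0=np.zeros(2))
x_alt = find_equilibrium(D, M, W, U, x0=np.array([5.0, -5.0]))
print(x_star, np.linalg.norm(H(x_star, D, M, W, U)))
```

With these values the iteration map is a contraction in the maximum norm, since \((|m_i|+\sum _j|w_{ij}|)/d_i<1\) for each i, so both starting points reach the same zero of \(H\). For parameters that satisfy Theorem 1 but not this stronger condition, a general root finder would be the safer choice.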

We next study the global exponential stability of the equilibrium point for the memristor-based neural network (11).

Theorem 2

Under the assumptions in Theorem 1, the unique equilibrium point of the memristor-based neural network (11) is globally exponentially stable.

Proof

From Theorem 1, the memristor-based neural network (11) has a unique equilibrium point. Let \(x^*=(x_1^*,x_2^*,\ldots ,x_n^*)^T\) be the unique equilibrium point of the memristor-based neural network (11). Then, by the definition of the equilibrium point, we have

To simplify the proof, we make the following transformation

Then, the memristor-based neural network (11) can be expressed equivalently as follows,

where \(g(z(t))=f(z(t)+x^*)-f(x^*)\) and \(g(z(t-\tau (t)))=f(z(t-\tau (t))+x^*)-f(x^*)\).

We consider the following Lyapunov function,

where

Here, \(\alpha ,\beta _j,\gamma _j\), and \(\delta\) are some positive constants to be determined, \(j=1,2\).

First, calculating the time derivative of \(V_1(t,z)\) along the trajectories of the memristor-based neural network (28), we have

Since D and M are all diagonal matrices, we have

Substituting (32) into (31), we obtain that

where k is a positive constant to be determined later.

Second, we calculate the time derivative of \(V_2(t,z)\) along the trajectories of the memristor-based neural network (28) as follows,

Similarly to (32), and by the fact that \(g_i\) is nondecreasing with \(g_i(0)=0\), we have

Based on the assumption that \({\underline{D}}-{\widehat{M}}\) is positive definite, it can be obtained that the diagonal matrix \(D-|{M}|\) is positive definite. Hence, we can choose a sufficiently small constant \(\delta>0\) such that

Meanwhile, by the assumption (\({\mathbf{A_1}}\)) and the transformation (27), we have

Thus, substituting (35) and (37) into (34), we obtain

since \(2g^T(z (t))PAg(z (t)) =g^T(z (t))(PA+A^TP)g(z (t))\) and \(2g^T(z (t))PBg(z (t-\tau (t)))\)\(\le g^T(z (t))\Vert PB\Vert g(z (t))\)\(+g^T(z (t-\tau (t)))\Vert PB\Vert g(z (t-\tau (t))).\)
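Both relations follow from elementary facts. As a short worked derivation, write \(a=g(z(t))\), \(b=g(z(t-\tau (t)))\), and \(X=PB\); these symbols are shorthand introduced here only for the derivation. Since \(a^TPAa\) is a scalar and P is diagonal (hence symmetric),

\[
a^{T}PAa=(a^{T}PAa)^{T}=a^{T}A^{T}Pa \quad \Longrightarrow \quad 2a^{T}PAa=a^{T}(PA+A^{T}P)a .
\]

For the cross term, the Cauchy–Schwarz inequality, the definition of the induced norm \(\Vert X\Vert\), and \(2uv\le u^{2}+v^{2}\) give

\[
2a^{T}Xb\le 2\Vert a\Vert \,\Vert X\Vert \,\Vert b\Vert \le \Vert X\Vert \left( \Vert a\Vert ^{2}+\Vert b\Vert ^{2}\right) =a^{T}\Vert X\Vert a+b^{T}\Vert X\Vert b .
\]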

Third, calculating the time derivative of \(V_3(t,z)\) along the trajectories of the memristor-based neural network (28), we have

Meanwhile, according to Lemma 4, we have

Hence, by (33), (38), and (39), the time derivative of V(t, z) can be calculated as follows,

Next, we let

Then, under the assumption \({\mathbf{(A_3)}}\), by Lemmas 2 and 3, we have

Here, \(\varPsi\) is from (15). By Lemma 3 and the choices of \(\gamma _1\) and \(\beta _1\) in (41), we have

Similarly, we also have

Then, letting \(\alpha =k^2\) and \(\delta =\frac{1}{k}\), and substituting (43) and (44) into (42), we obtain that

The facts that

imply that we can choose a sufficiently large k such that both (36) and the following inequalities hold,

Hence, by (45), we have

which means that

More precisely,

where \(M=[d_{m}V(0,z(0))]^\frac{1}{2}\) and \(d_{m}=\max \{d_i:i=1,\ldots ,n\}\). That is, the unique equilibrium point \(x^*\) of the memristor-based neural network (11) is globally exponentially stable. \(\square\)

Remark 1

Recently, researchers have proposed several different mathematical models of memristor-based neural networks and studied their dynamical behaviors extensively [24, 25, 33, 34]. These dynamical behaviors include the stability of the equilibrium point, periodic solutions, almost-periodic solutions, synchronization, and so on. For example, the global exponential synchronization and periodic solution of memristor-based neural network (11) were separately studied in [34, 44]. However, as far as we know, there are very few related conclusions about the uncertain memristor-based recurrent neural network (11) with distributed delays.

Meanwhile, Theorems 1 and 2 can also be used to verify the global exponential stability of the equilibrium point not only for the memristor-based recurrent neural network in [34] but also for the general uncertain recurrent neural networks in [16, 18]. Hence, the conclusions in this paper can be considered as the generalization and improvement of the previous related works.

Corollary 1

Under the assumptions (\({\mathbf{A_1}}\)) and (\({\mathbf{A_2}}\)), if the diagonal matrix \({D}-{\widehat{M}}>0\) and there exists a positive diagonal matrix \(P=\text {diag}\{p_1,p_2,\ldots ,p_n\}\) such that

then, memristor-based neural network (1) has a unique equilibrium point, which is globally exponentially stable.

4 Numerical examples

In this section, we present some illustrative examples to show the effectiveness and application of the obtained results.

4.1 Analysis of dynamical behaviors of network (11)

First, we choose randomly the values of capacitor \(C_{i}\), external input \(I_{i}\), memductance \(W'_{i}\), \(W''_{i}\), resistors \(R_{f_{ij}}\), and \(R_{g_{ij}}\) in (1) in Tables 1 and 2, and let \(R_{f_{ij}}=R_{g_{ij}}\) for \(i,j=1,2,3,4.\)

Then, substituting the parameter values in Tables 1 and 2 into (11), we have

Additionally, the parameters \({e_{ij}}\) in (11) are uniformly distributed pseudorandom numbers generated by rand in Matlab. In this numerical experiment,

The activation functions are given:

Then, by (9), we have \(L=\text {diag}\{1,1,1,1\}.\) Let \(\mu =\text {diag}\{0.6,0.6,0.6,0.6\}\) and \(\tau _{i}(t)=1\) for \(i=1,2,3,4\) in (11).

Second, the assumption \(({\mathbf{A_3}})\) about the matrices D, M, A, B and E in (12) is shown as follows,

Furthermore, by Lemmas 2, 3, and (14), we can calculate \(A^{*}\), \(A_{*}\), \(\widehat{M}\), \(\varrho =2.1649\), \({\mathrm {b}}= 0.5515\), and \(\mu _{0}=0.6\). It is clear that \({\underline{D}}-{\widehat{M}}\) is positive and there exists a positive definite diagonal matrix

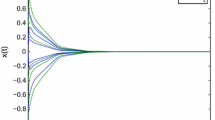

such that (15) holds. Thus, by Theorems 1 and 2, the unique equilibrium point of the memristor-based neural network (11) is globally exponentially stable. The initial values of the neural network (11) are set to be \((0.1,0.1,0.1,0.1)^{T}\), \((0.5,0.5,0.5,0.5)^{T}\), and \((1,1,1,1)^{T}\), respectively. The solution trajectories of (11) are illustrated in Fig. 2.

Fig. 2 Solution trajectories of the memristor-based neural network (11)
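An experiment of this kind can be sketched with a fixed-step forward-Euler scheme. The code below assumes NumPy, the right-hand side \(-Dx-{M}|x|+Af(x(t))+Bf(x(t-\tau ))+E\int _{t-\mu }^{t}f(x(s))ds+U\) (inferred from the map H in the proof of Theorem 1), \(f=\tanh\) as a stand-in activation with Lipschitz constant 1, and a constant initial history. The delay values \(\tau =1\), \(\mu =0.6\) and the three initial states follow the text; all matrices are hypothetical because the tabulated values are not reproduced here.

```python
import numpy as np

def simulate(D, M, A, B, E, U, x0, tau=1.0, mu=0.6, h=0.01, T=30.0, f=np.tanh):
    """Forward-Euler integration with constant history x(s) = x0 for s <= 0."""
    n_tau = int(round(tau / h))   # steps spanned by the discrete delay
    n_mu = int(round(mu / h))     # steps spanned by the distributed delay
    n_hist = max(n_tau, n_mu)
    steps = int(round(T / h))
    x = np.empty((n_hist + steps + 1, len(x0)))
    x[: n_hist + 1] = x0          # history buffer plus the state at t = 0
    for k in range(n_hist, n_hist + steps):
        delayed = f(x[k - n_tau])
        # Left Riemann sum approximating the integral of f(x(s)) over [t - mu, t].
        distributed = h * f(x[k - n_mu : k]).sum(axis=0)
        dx = (-D @ x[k] - M @ np.abs(x[k]) + A @ f(x[k])
              + B @ delayed + E @ distributed + U)
        x[k + 1] = x[k] + h * dx
    return x[n_hist:]             # trajectory on [0, T]

# Hypothetical 4-neuron parameters with strong self-decay.
rng = np.random.default_rng(0)
n = 4
D, M = 3.0 * np.eye(n), 0.5 * np.eye(n)
A = 0.3 * (rng.random((n, n)) - 0.5)
B = 0.3 * (rng.random((n, n)) - 0.5)
E = 0.3 * (rng.random((n, n)) - 0.5)
U = np.array([1.0, -0.5, 0.5, 0.2])

finals = [simulate(D, M, A, B, E, U, np.full(n, c))[-1] for c in (0.1, 0.5, 1.0)]
print(max(np.max(np.abs(finals[0] - v)) for v in finals[1:]))
```

The printed value is the largest gap between the final states of the three runs; a value near zero is what a unique globally attracting equilibrium would produce. Forward Euler and the Riemann sum are chosen for brevity, and a dedicated delay-differential-equation solver would be preferable for quantitative work.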

4.2 Applications

In this section, we apply the proposed results to analyze the dynamic behaviors and design the circuit of the memristor-based neural network in [34].

4.2.1 Analysis of the dynamical behaviors of (1)

We fix the values of the parameters \(C_{i}\), \(I_{i}\), \(R_{f_{ij}}\), \(R_{g_{ij}}\), \(\tau _{j}(t)\) for \(i,j=1,2,3,4\) as in Sect. 4.1. It is clear that \({D}-{\widehat{M}}\) is positive definite and there exists a positive definite diagonal matrix

such that (47) holds. Thus, by Corollary 1, the unique equilibrium point of the memristor-based neural network (1) is globally exponentially stable. The initial values of the neural network (1) are set to be \((0.1,0.1,0.1,0.1)^{T}\), \((0.5,0.5,0.5,0.5)^{T}\), and \((1,1,1,1)^{T}\), respectively. The solution trajectories of (1) are illustrated in Fig. 3.

Fig. 3 Solution trajectories of the memristor-based neural network (1)

4.2.2 Design of network (1)

In this section, we apply the results in Corollary 1 to design the circuit of a memristor-based neural network with a unique globally exponentially stable equilibrium point. First, we fix the values of the parameters \(C_{i}\), \(I_{i}\), \(R_{f_{ij}}\), and \(R_{g_{ij}}\) in (1). Then, we apply the obtained results to determine \(d_{i}\) and \(m_i\) in (1). Finally, we calculate the memductances \(W_{i}'\) and \(W_{i}''\) of the i-th memristor, which completes the design of the circuit.

The design process of memristor-based neural network is described by three steps as follows.

Step 1 We fix a positive definite matrix P in (47). Based on the matrix inequality (47), we add the following two matrix inequalities

to solve for the matrix \({D}-|\widehat{M}|\). Here, conditions (49) and (50) guarantee that \(D-|\widehat{M}|\) and \(|\widehat{M}|\) are positive definite, as required in Theorem 1 and Corollary 1.

Step 2 By (5) and (6), if \(m_{i}\ge 0\), then we have

Furthermore, we calculate \(W_{i}'\) for \(i\in \{1,2,\cdots ,n\}\). Meanwhile, the corresponding \(W_{i}''\) can, in theory, be assigned any value satisfying \(m_{i}=\frac{W_{i}''-W_{i}'}{2C_{i}}>0\).

If \(m_{i}<0\), then we have

Similarly, we obtain the \(W_{i}'\) and \(W_{i}''\) in (1).

Step 3 Substituting \(W_{i}'\) and \(W_{i}''\) into (5) and (6), we obtain \(d_{i}\) and \(m_{i}\). That is, we complete the design of memristor-based neural network.
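The conversion between the circuit memductances and the model coefficients in Steps 2 and 3 can be sketched as follows. The code assumes NumPy and the relations \(m_{i}=\frac{W_{i}''-W_{i}'}{2C_{i}}\) (stated in Step 2) and \(d_i=\sum _{j}(\frac{1}{C_{i}R_{f_{ij}}}+\frac{1}{C_{i}R_{g_{ij}}})+\frac{W_{i}'+W_{i}''}{2C_{i}}\), which is inferred from the identity \([\cdots ]x_i=[d_i+m_is(x_i)]x_i\) under the assumption that \(W_i\) takes the value \(W_i''\) where \(s(x_i)=1\) and \(W_i'\) where \(s(x_i)=-1\). The capacitor and resistor values are hypothetical; the memductances are those reported in the design example of this section.

```python
import numpy as np

def leak_terms(C, Rf, Rg):
    """sum_j 1/(C_i R_f_ij) + 1/(C_i R_g_ij) for each neuron i."""
    return (1.0 / Rf + 1.0 / Rg).sum(axis=1) / C

def memductances_to_dm(C, Rf, Rg, W_low, W_high):
    """Map (W', W'') to (d, m); W_low plays the role of W', W_high of W''."""
    d = leak_terms(C, Rf, Rg) + (W_low + W_high) / (2.0 * C)
    m = (W_high - W_low) / (2.0 * C)
    return d, m

def dm_to_memductances(C, Rf, Rg, d, m):
    """Inverse map: recover (W', W'') from the designed (d, m)."""
    leak = leak_terms(C, Rf, Rg)
    return C * (d - m - leak), C * (d + m - leak)

# Hypothetical circuit values (the paper's Tables 1 and 2 are not reproduced here).
C = np.ones(4)
Rf = np.full((4, 4), 4.0)
Rg = Rf.copy()  # the paper sets R_f_ij = R_g_ij

# Memductances reported in the design example.
W_low = np.array([-1.7109, 0.1170, -0.6748, 4.8468])
W_high = np.array([1.2891, 3.1170, 2.3252, 7.8468])

d, m = memductances_to_dm(C, Rf, Rg, W_low, W_high)
W_low_back, W_high_back = dm_to_memductances(C, Rf, Rg, d, m)
print(m)  # every W'' - W' equals 3, so m_i = 1.5 when C_i = 1
```

Keeping the forward and inverse maps side by side makes it easy to confirm that a designed pair \((d_i,m_i)\) is realized by the chosen memductances.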

Now we take the activation function \(f_{i}(x)\) given by (48), and the values of the parameters \(C_{i}\), \(I_{i}\), \(R_{f_{ij}}\), and \(R_{g_{ij}}\) in (1) to be the same as in Tables 1 and 2. The delays \(\tau _{i}(t)\) are the same as in Sect. 4.1. Consequently, we obtain the same matrices U, A, B, and L as in Sect. 4.1. Next, we give a positive definite matrix

By Step 1, we have

By Step 2, letting \(m_{i}>0\) for \(i=1,2,3,4\), we have \(W_{1}'= -1.7109,\, W_{2}'= 0.1170,\, W_{3}'= -0.6748,\, W_{4}'= 4.8468.\) Moreover, fix \(W_{1}''=1.2891,\, W_{2}''=3.1170,\, W_{3}''=2.3252,\) and \(W_{4}''=7.8468.\) Then, by Step 3, we obtain

The initial values of the neural network (1) are set to be \((0.2,0.2,0.2,0.2)^{T}\), \((0.8,0.8,0.8,0.8)^{T}\), and \((2,2,2,2)^{T}\), respectively. We depict the solution trajectories of (1) in Fig. 4.

Fig. 4 Solution trajectories of the designed memristor-based neural network (1)

Remark 2

It should be noted that the obtained conclusions in this paper can also be applied to verify the global exponential stability of the equilibrium point for the general uncertain recurrent neural networks as follows,

which has been studied extensively (see [17, 38, 39]). However, the influence of distributed delays was not considered in [17, 38, 39]. Hence, the conclusions obtained in this paper improve on the previous related works.

5 Conclusion

The analysis of dynamical behaviors of the memristor-based neural networks is necessary when the engineering applications of such networks become more and more popular. In this paper, we study the existence and global exponential stability of the equilibrium point for a class of memristor-based recurrent neural networks. By virtue of homeomorphic theory, we prove that the memristor-based neural network has a unique equilibrium point. Furthermore, we prove that the unique equilibrium point is globally exponentially stable by constructing a suitable Lyapunov functional. From the circuit of memristor-based recurrent network, we present some conditions for the amplifiers, connection resistors between the amplifiers, the capacitors, and the memductances of memristor to guarantee the existence and global exponential stability of the equilibrium point of the circuit. Finally, some numerical examples are used to show the effectiveness of our main results. In the future, we will focus on the delay-distribution probability problem for memristor-based recurrent neural networks.

References

Chua LO (1971) Memristor-the missing circuit element. IEEE Trans Circuit Theory 18:507–519

Strukov DB, Snider GS, Stewart DR, Williams RS (2008) The missing memristor found. Nature 453:80–83

Lu W (2012) Memristors: going active. Nat Mater 12(2):93–94

Thomas A (2013) Memristor-based neural networks. J Phys D Appl Phys 46:093001/1–093001/12

Qin S, Bian W, Xue X (2013) A new one-layer recurrent neural network for nonsmooth pseudoconvex optimization. Neurocomputing 120:655–662

Qin S, Xue X (2015) A two-layer recurrent neural network for nonsmooth convex optimization problems. IEEE Trans Neural Netw Learn Syst 26(6):1149–1160

Qin S, Fan D, Wu G, Zhao L (2015) Neural network for constrained nonsmooth optimization using Tikhonov regularization. Neural Netw 63:272–281

Zhu Q, Cao J, Rakkiyappan R (2015) Exponential input-to-state stability of stochastic Cohen–Grossberg neural networks with mixed delays. Nonlinear Dyn 2(79):1085–1098

Zhu Q, Cao J (2014) Mean-square exponential input-to-state stability of stochastic delayed neural networks. Neurocomputing 131:157–163

Zhu Q, Cao J (2012) Stability analysis of Markovian jump stochastic BAM neural networks with impulse control and mixed time delays. IEEE Trans Neural Netw Learn Syst 23(3):467–479

Zhu Q, Cao J (2012) Stability of Markovian jump neural networks with impulse control and time varying delays. Nonlinear Anal Real World Appl 13(5):2259–2270

Xie W, Zhu Q (2015) Mean square exponential stability of stochastic fuzzy delayed Cohen–Grossberg neural networks with expectations in the coefficients. Neurocomputing 166:133–139

Ali MS, Gunasekaran N, Zhu Q (2017) State estimation of T–S fuzzy delayed neural networks with Markovian jumping parameters using sampled-data control. Fuzzy Sets Syst 306:87–104

Liu L, Zhu Q (2015) Almost sure exponential stability of numerical solutions to stochastic delay Hopfield neural networks. Appl Math Comput 266:698–712

Zhu Q, Cao J, Hayat T, Alsaadi F (2015) Robust stability of Markovian jump stochastic neural networks with time delays in the leakage terms. Neural Process Lett 41(1):1–27

Qin S, Xue X, Wang P (2012) Global exponential stability of almost periodic solution of delayed neural networks with discontinuous activations. Inf Sci 220:367–378

Qin S, Fan D, Yan M, Liu Q (2014) Global robust exponential stability for interval delayed neural networks with possibly unbounded activation functions. Neural Process Lett 40(1):35–50

Qin S, Xu J, Shi X (2014) Convergence analysis for second-order interval Cohen–Grossberg neural networks. Commun Nonlinear Sci Numer Simul 19(8):2747–2757

Zhou C, Zhang W, Yang X, Xu C, Feng J (2017) Finite-time synchronization of complex-valued neural networks with mixed delays and uncertain perturbations. Neural Process Lett 46(1):271–291

Yang X, Feng Z, Feng J, Cao J (2016) Synchronization of discrete-time neural networks with delays and Markov jump topologies based on tracker information. Neural Netw 85(C):157–164

Anthes G (2011) Memristors: pass or fail? Commun ACM 54(3):22–24

Qin S, Wang J, Xue X (2015) Convergence and attractivity of memristor-based cellular neural networks with time delays. Neural Netw 63:223–233

Wang L, Duan M, Duan S (2013) Memristive perceptron for combinational logic classification. Math Probl Eng 4:211–244

Hu J, Wang J (2010) Global uniform asymptotic stability of memristor-based recurrent neural networks with time delays. IEEE Congress Comput Intell Barc Spain 2010:2127–2134

Wu A, Zeng Z, Zhu X, Zhang J (2011) Exponential synchronization of memristor-based recurrent neural networks with time delays. Neurocomputing 74(17):3043–3050

Wu A, Zeng Z (2012) Exponential stabilization of memristive neural networks with time delays. IEEE Trans Neural Netw Learn Syst 23:1919–1929

Wu H, Zhang X, Li R, Yao R (2015) Adaptive anti-synchronization and anti-synchronization for memristive neural networks with mixed time delays and reaction-diffusion terms. Neurocomputing 168:726–740

Wu A, Zeng Z (2014) Lagrange stability of memristive neural networks with discrete and distributed delays. IEEE Trans Neural Netw Learn Syst 25(4):690–703

Yang S, Guo Z, Wang J (2015) Robust synchronization of multiple memristive neural networks with uncertain parameters via nonlinear coupling. IEEE Trans Syst Man Cybern Syst 45(7):1077–1086

Wu A, Zeng Z (2013) Anti-synchronization control of a class of memristive recurrent neural networks. Commun Nonlinear Sci Numer Simul 18:373–385

Wang G, Shen Y (2014) Exponential synchronization of coupled memristive neural networks with time delays. Neural Comput Appl 24(6):1421–1430

Wen S, Zeng Z, Huang T (2013) Dynamic behaviors of memristor-based delayed recurrent networks. Neural Comput Appl 23(3–4):815–821

Guo Z, Wang J, Yan Z (2014) Attractivity analysis of memristor-based cellular neural networks with time-varying delays. IEEE Trans Neural Netw Learn Syst 25(4):704–717

Wen S, Bao G, Zeng Z, Chen Y, Huang T (2013) Global exponential synchronization of memristor-based recurrent neural networks with time-varying delays. Neural Netw 48:195–203

Chen L, Wu R, Cao J, Liu J-B (2015) Stability and synchronization of memristor-based fractional-order delayed neural networks. Neural Netw 71:37–44

Li JN, Li LS (2015) Mean-square exponential stability for stochastic discrete-time recurrent neural networks with mixed time delays. Neurocomputing 151:790–797

Li JN et al (2016) Exponential synchronization of discrete-time mixed delay neural networks with actuator constraints and stochastic missing data. Neurocomputing 207(2016):700–707

Arik S (2014) New criteria for global robust stability of delayed neural networks with norm-bounded uncertainties. IEEE Trans Neural Netw Learn Syst 25(25):1045–1052

Jarina Banu L, Balasubramaniam P (2016) Robust stability analysis for discrete-time neural networks with time-varying leakage delays and random parameter uncertainties. Neurocomputing 179:126–134

Wang X, Li C, Huang T (2014) Delay-dependent robust stability and stabilization of uncertain memristive delay neural networks. Neurocomputing 140:155–161

Yang S, Guo Z, Wang J (2015) Robust synchronization of multiple memristive neural networks with uncertain parameters via nonlinear coupling. IEEE Trans Syst Man Cybern Syst 45(7):1077–1086

Faydasicok O, Arik S (2012) Robust stability analysis of a class of neural networks with discrete time delays. Neural Netw 29–30(5):1407–1414

Qin S, Cheng Q, Chen G (2016) Global exponential stability of uncertain neural networks with discontinuous Lurie-type activation and mixed delays. Neurocomputing 198(C):12–19

Feng J, Ma Q, Qin S (2017) Exponential stability of periodic solution for impulsive memristor-based Cohen–Grossberg neural networks with mixed delays. Int J Pattern Recogn Artif Intell 31(27):1750022

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant (11201100, 11401142, 61403101), and Heilongjiang Province Science and Technology Agency Funds of China (A201213).

Cite this article

Wang, J., Liu, F. & Qin, S. Global exponential stability of uncertain memristor-based recurrent neural networks with mixed time delays. Int. J. Mach. Learn. & Cyber. 10, 743–755 (2019). https://doi.org/10.1007/s13042-017-0759-4

DOI: https://doi.org/10.1007/s13042-017-0759-4