Abstract

We propose a new active learning method for classification, which handles label noise without relying on multiple oracles (i.e., crowdsourcing). We propose a strategy that selects (for labeling) instances with a high influence on the learned model. An instance x is said to have a high influence on the model h, if training h on x (with label \(y = h(x)\)) would result in a model that greatly disagrees with h on labeling other instances. Then, we propose another strategy that selects (for labeling) instances that are highly influenced by changes in the learned model. An instance x is said to be highly influenced, if training h with a set of instances would result in a committee of models that agree on a common label for x but disagree with h(x). We compare the two strategies and we show, on different publicly available datasets, that selecting instances according to the first strategy while eliminating noisy labels according to the second strategy, greatly improves the accuracy compared to several benchmarking methods, even when a significant amount of instances are mislabeled.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In order to learn a classification model, supervised learning algorithms need a training dataset where each instance is manually labeled. With a large amount of unlabeled instances, one needs to manually label as much instances as possible. Such instances are randomly selected by a human labeler or oracle (i.e., passive learning). With this setting, the learning methods need huge labeled data to produce an optimized classifier. Note that labeling is costly and time consuming. Semi-supervised learning methods like [21] learn using both labeled and unlabeled data, and can therefore be used to reduce the labeling cost to some extent. Nonetheless, instead of randomly selecting the instances to be labeled, active learning methods allow to further reduce the labeling cost by allowing interaction between the learning algorithm and the oracle. Unlike a passive learning, active learning lets the learner choose which instances are more appropriate for labeling, according to an informativeness measure.

The main problem that active learning addresses is about defining informativeness in a way that reduces the number of instances to be labeled along with the improvement of the classifier’s performance. This is an important problem because in most real-world applications a large amount of unlabeled data are cheaply available as compared to the labeled ones.

We refer to [24] for a survey of active learning strategies. The most widely used active learning strategies are based on uncertainty sampling [11, 19, 26, 27]. Those strategies select instances in regions of the feature space, where the classifier is most uncertain about its prediction. Such instances are typically close to the decision boundary and allow to fine-tune the boundary regardless of the change which is made to the classifier. Examples of those strategies are presented in [14]. Other uncertainty based active learning methods, such as [5], define uncertainty in terms of the change that a weighted instance brings to the model so that the model changes its prediction regarding this instance. The classifier is then considered uncertain about its prediction, if a small weight is sufficient to change the predicted label. Active learning strategies based on query-by-committee [10] can also be regarded as an uncertainty sampling because they select instances on which the members of the committee are most uncertain. Those methods implicitly assume that the decision boundary is stable and needs just to be finely tuned. Indeed, stability of a decision boundary is expected to increase as training progresses (in terms of the number of labeled instances). However, since our objective is to reduce the labeling cost, active learning is initialized with few labeled instances and starts with a poor decision boundary. Therefore, the performance of those strategies may be limited. In the light of the aforementioned issues, some active learning strategies define informative instances as those having a great influence on the model [6, 23, 25, 33]. The influence of a candidate instance can be measured by the reduction in the overall uncertainty of the model [23, 33], the change in the probabilistic output of the model [6], or most commonly the change in specific parameters of the model before and after training on that instance. As an example, the authors in [25] use this strategy specifically with a discriminative probabilistic model where gradient-based optimization is used. The influence of an instance on the model is measured by the magnitude of the training gradient if the model is trained based on that instance. However, unlike the uncertainty-based active learning, those strategies highly depend on the type of the used classification model, because they evaluate the change in specific parameters of the model. The active learning method that we propose in this paper, measures the informativeness of instances by their influence on the predictive capability of the classification model, not on its parameters, and can be used with any base classification model.

Further, most existing active learning methods assume that the labels given by the oracle are perfectly correct. However, the oracle is usually subject to accidental labeling errors, especially in complex applications such as document analysis [15], entity recognition in text [7], biomedical image processing [1] and video annotation [29], where the labeling task is tedious and time consuming. Such labeling errors not only reduce the accuracy of the classifier, but also mislead the active learner, causing it to query for the label of instances that are not necessarily informative. Many existing methods, like [17] and those surveyed in [9], address the problem of noisy labels in a passive supervised learning setting. Such methods allow to repeatedly correct or remove the possibly mislabeled instances from a dataset of labeled instances which is already available. This is different from the active learning setting where labeling errors only affects instances whose labels are queried during training. Such instances are located in regions of high informativeness, which naturally makes labeling errors not equally likely for all possible instances in the feature space. In this context, active learning with noisy labels is primarily tackled in the literature based on crowdsourcing techniques [2, 13, 16, 32]. However, those techniques can not be used with a single oracle because they rely on the redundancy of labels that are queried for each instance from multiple oracles, which induces a high additional labeling cost. Very few methods that are independent of a specific classifier, try to address this problem without relying on crowdsourcing. A strategy proposed in [20] rely on the classifier’s confidence to actively ask the correction of the suspected (i.e., possibly mislabeled) instances from an expert, however, the learning in itself is passive. The same strategy has been investigated for active learning in [4, 12], where suspected instances can be relabeled or discarded. The active learning method proposed in [33], suggests that a suspiciously mislabeled instance is the one that minimizes the expected entropy over the unlabeled dataset if it is labeled with a new label other than the one given by the oracle. Some more restrictive active learning methods like [8, 28] assume that labeling errors are due to uncertain domain knowledge of the oracle. They address this problem by modeling the knowledge of the oracle and querying for the label of an instance only if it is part of his/her knowledge. However, those methods do not handle labeling errors that are simply due to inattention, and they require the oracle to remain the same over time. The active learning method that we propose in this paper, handles labeling errors without using crowdsourcing and without making assumptions about the oracle’s domain knowledge.

More specifically, the proposed active learning method relies on two strategies. In order to measure the informativeness of an instance x under a classification model h, the first strategy (see Sect. 3) trains h on x and evaluates its output on other unlabeled instances, whereas the second strategy (see Section 4) trains h on other instances and evaluates the output on x. A modification of the later strategy (see Sect. 6), allows to characterize mislabeled instances. The experimental evaluation that we present in Sect. 7 shows that querying labels according to the first strategy and reducing noisy labels according to the second strategy, allows to improve the performance of the active learner compared to several commonly used active learning strategies from the literature.

2 Preliminaries and notations

A brief summary of the active learning can be generalized as follows. Let \(X \subseteq \mathbb {R}^d\) be a d dimensional feature space. The input \(x \in X\) is called an instance. Let Y be a finite set of classes where each class \(y \in Y\) is a discrete value called class label. The classifier is then a function h that associates an instance \(x \in X\) with a class \(y \in Y\) (see Eq. 1). Most classifiers not only return the predicted class y but also give a score or an estimate of the posterior probability P(y|x, h), i.e., probability that x belongs to class y under the model h.

Let U be a set of unlabeled instances, L be the set of labeled instances that are queried so far, and h be the current classification model trained on L. In active learning, the learner is given the set U and has to iteratively select an instance \(x \in U\) in order to ask an oracle for the corresponding class label \(y \in Y\) and add it to L. In this way, the goal is to learn an efficient classification model \(h : X \longrightarrow Y\) using a minimum number of queried labels.

The uncertainty based active learning strategies select (for labeling) instances for which the model h is most uncertain about their class. For example, if \(y_1 = {\mathrm{max}}_{y \in Y} P(y | x, h)\) is the most probable class label for x, then the most common uncertainty strategy simply selects instances with a low confidence \(P(y_1|x, h)\) or with a high conditional entropy \(- \sum _{y \in Y} P(y | x, h) \log {P(y | x, h)}\) which is a measure of uncertainty.

A general active learning procedure is illustrated in Algorithm 1. The input is a classification model h, a set of unlabeled instances U and an initial set of labeled instances L. At each iteration the algorithm queries for the true class label of the instance which maximizes some informativeness measure F (e.g., uncertainty).

In the next sections, we will use the notation \(h_x\) to denote the classification model after being trained on L in addition to some labeled instance x (i.e., trained on \(L \cup \{(x, y)\}\)), and the notation \(\bar{x}\) to denote an unlabeled instance which is candidate for labeling.

3 Disagreement 1 (selecting the most influencing instance)

This strategy measures the informativeness of an unlabeled instance \(\bar{x}\) (candidate for labeling) based on the disagreement between the current classification model h and \(h_{\bar{x}}\) (the model trained on L after including \(\bar{x}\)). If the two models greatly disagree on labeling the unlabeled instances of U, then \(\bar{x}\) is informative, and its true class label is queried from an oracle.

In order to define the disagreement between two classification models a and b, let us assume that instances are drawn i.i.d. from an underlying probability distribution D. We can then define a metric d which represents the disagreement between a and b as follows:

As shown in Eq. 2, we can practically define this metric as the number of unlabeled instances on which the two models disagree about their predicted labels.

Let us consider the candidate instance \(\bar{x} \in U\) for labeling. If we decide to query for the true class label of \(\bar{x}\), then \(d(h, h_{\bar{x}})\) would express how many instances are affected by this decision. In order to compute the informativeness of \(\bar{x}\) before querying for its true label, we define \(h_{\bar{x}}\) as the classification model trained on \(L \cup \{(\bar{x}, h(\bar{x}))\}\). In this way, if we query for the true label of \(\bar{x}\), then the resulting model will most likely be \(h_{\bar{x}}\) because \(h(\bar{x})\) is the most probable class label for \(\bar{x}\). Based on Eq. 2 we can define the informativeness of \(\bar{x}\) as

where \(\mathbbm {1}(C)\) is the 0–1 indicator function of condition C, defined as

Note that in Eq. 3, the informativeness of the candidate instance \(\bar{x}\) is determined by training a model \(h_{\bar{x}}\) on L including \(\bar{x}\), and testing on every instance \(x \in U\).

Instead of expressing disagreement 1 as how many instances are affected (i.e., their predicted label change), we can also express it as how much those instances are affected. This is done by introducing a weight as described in Eq. 4

where \(w_{x} = |\mathop {\mathrm{max}} \limits _{y \in Y} P(y | x, h_{\bar{x}}) - \mathop {\mathrm{max}} \limits _{y \in Y} P(y | x, h)|\) is the difference in the confidence of the predicted label of x under \(h_{\bar{x}}\) and h, respectively.

The time H required for training a model h on a set of instances L depends mainly on the size of L. However, in our case, L increases by only one instance after each iteration, and its final size |L| is very small compared to |U|. As the final |L| is bounded by a fixed labeling budget, we can consider H as a constant (representing the upper bound training time) in our time complexity analysis. At each iteration, the disagreement 1 is computed (using Eqs. 3 or 4) for all instances \(\bar{x} \in U\). Then, the instance with the highest disagreement 1 is selected for labeling. Since H is constant, the complexity of computing disagreement 1 for a single instance \(\bar{x}\) is \(\mathcal {O}(n)\) where \(n = |U|\). Since the disagreement 1 needs to be computed for all instances (to select the one with highest score), the complexity of this query strategy is \(\mathcal {O}(n^2)\).

4 Disagreement 2 (selecting the most influenced instance)

We define another disagreement measure that we call disagreement 2. While the objective of disagreement 1 was to favour instances having a large impact on the model output, the objective of disagreement 2 is to favour the instances whose label is wrongly predicted by the current classification model h.

Let us consider the committee (or ensemble) of classification models \(C = \{h_x: x \in U\}\). The disagreement 2 strategy computes how many models in the committee C disagree with h on the label of a candidate instance \(\bar{x}\). If many members of the committee disagree with \(h(\bar{x})\), then \(h(\bar{x})\) is likely to be wrong, and the true label of \(\bar{x}\) is worth querying:

Note that while Eq. 5 looks similar to Eq. 3, it is different. Eq. 3 trains a model \(h_{\bar{x}}\) on the candidate instance \(\bar{x}\) and tests the output on every instance \(x \in U\), and Eq. 5 trains a model \(h_{x}\) on each instance \(x \in U\) and tests on \(\bar{x}\).

According to Eq. 5, it is likely that \(h(\bar{x})\) is wrong if many members of the committee C disagree with \(h(\bar{x})\). However, we can get more confidence about that if such committee members agree on a common label (different from \(h(\bar{x})\)). Therefore, another version of disagreement 2 which quantifies how much a committee of models disagree with \(h(\bar{x})\) and agree on a common label for \(\bar{x}\), would be expressed as follows:

Instead of using the 0–1 indicator function \(\mathbbm {1} (h_{x}(\bar{x}) = y)\) to indicate the agreement of a committee member \(h_{x}\) on the label y for \(\bar{x}\), we can rather consider the probability \(P(y | \bar{x}, h_x)\), that is, the confidence of the committee member \(h_x\) about assigning the label y for \(\bar{x}\). The weighted version of disagreement 2 can then be expressed (according to Eq. 6) as

Regarding the time complexity of disagreement 2, it is worth noting that at any given iteration, the committee of models \(C = \{h_x: x \in U\}\) is independent of the candidate instance \(\bar{x} \in U\). At each iteration, the set of models C can be computed once and used to evaluate the disagreement 2 for each instance \(\bar{x} \in U\) (based on Eqs. 5 or 6). Therefore, the complexity of this query strategy is also \(\mathcal {O}(n^2)\).

5 Discussion on disagreements 1 and 2

To summarize, the simple version of disagreement 1 (Eq. 3) is quantifying how many predictions change if the model is trained by adding the candidate instance \(\bar{x}\). The weighted version (Eq. 4) is quantifying how big is the change in those predictions. There is a relation between this proposed (disagreement 1) strategy and an optimal active learning strategy. Indeed, since the ultimate objective of active learning is to produce a high accuracy classifier with a minimum number of labeled training instances, an optimal strategy would be to select at each iteration the instance \(\bar{x}\) that leads to the maximum increase in accuracy if labeled and used for training.Footnote 1 However, this strategy can never be used because it requires knowing beforehand the true class labels of the instances in U to evaluate the gain in accuracy of the classifier.

For simplification purposes, let us consider a binary classification task (i.e., with only two possible classes). Let \(y^*_x\) be the (unknown) true class label of an instance \(x \in U\). The overall gain in accuracy of the model induced by training on a candidate instance \(\bar{x}\) is expressed as

where g(x) is the gain in accuracy regarding a single instance x.

The value of g(x) would be 1 if the label of x is correctly predicted by \(h_{\bar{x}}\) only, \(-1\) if it is correctly predicted by h only, and 0 if the two models h and \(h_{\bar{x}}\) predict the same label for x (either correctly or wrongly). To illustrate the relation between disagreement 1 and the optimal active learning, g(x) can be re-written as a factor of \(\mathbbm {1}(h(x) \ne h_{\bar{x}}(x))\) (which is used in Eqs. 3 and 4) as follows:

While it is impossible to evaluate the left-hand side factor in Eq. 7 because \(y^*_x\) is unknown, it is obvious that if \(\mathbbm {1}(h_{\bar{x}}(x) \ne h(x)) = 0\), there is no gain in accuracy regarding the instance x. Therefore, the disagreement between h and \(h_{\bar{x}}\) is a necessary (but not necessarily sufficient) condition to improve the accuracy.

Disagreement 2 is a measure of how likely \(h(\bar{x})\) is wrong. The simple version of disagreement 2 (Eq. 5) is quantifying how many models disagree with h regarding the predicted label of the candidate instance \(\bar{x}\). The weighted version (Eq. 6) is quantifying how much the different models commonly agree to disagree with h regarding the predicted label of \(\bar{x}\). Conceptually, the proposed (disagreement 2) strategy has some similarity with the active learning strategies based on query-by-committee and uncertainty sampling, because it allows to query for the label of instances on which h is uncertain (i.e., instances whose labels are likely wrongly predicted by h). However, those strategies define the most uncertain instance as the one being closest to the current decision boundary. This may result in querying for the label of an instance which has not a great impact on the model even if its label is wrongly predicted by h. Unlike those strategies, disagreement 2 selects at each iteration (for labeling) an instance which is still close to the decision boundary (not completely far from it) but not necessarily the closest one, which makes it have a larger influence on the classification model. The reason why this may improve the results is that the current decision boundary (especially at early iterations of the active learning) is poorly defined. Fine tuning the poorly defined decision boundary by always selecting the closest instance to it, is likely to slow down the early stages of the learning process.Footnote 2

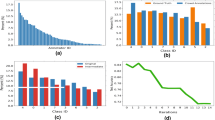

It is worth mentioning that the disagreement 1 and the disagreement 2 measures have different objectives and there is not a simple linear correlation between them. This is demonstrated by Fig. 1 which shows the informativeness of some instances according to the weighted versions of disagreement 1 and disagreement 2, for different datasets. As disagreement 1 and 2 have different objectives, they may give a conflicting informativeness regarding some candidate instances. For example, disagreement 1 may be large while disagreement 2 is small. Indeed, if we look at Eq. 3, we see that for a candidate instance, disagreement 1 maximizes the number of unlabeled instances whose predicted labels change. However, we are not guaranteed that all those predicted labels change towards the true class labels. For this reason, disagreement 1 may, in some cases, over-estimate (to some degree) the informativeness of a candidate instance, while this informativeness is still small with disagreement 2. These observations opens-up for further future work on combining the two disagreement measures.

In the next section, we show that a simple modification of the disagreement 2 measure, allows it to be used as a mislabeling measure to characterize noisy labels.

6 Dealing with noisy labels

As indicated in Sect. 1, the oracle is potentially subject to labeling errors. We consider random labeling errors, where the oracle has a probability \(\alpha \in [0, 1]\) for giving a wrong label for each query (i.e., \(\alpha\) represents the noise intensity).

Let \((x_q, y_q)\) be a labeled instance from L whose label \(y_q\) was queried from the oracle. As a reminder, we previously used the notation \(h_x\) to denote the classification model after being trained on L in addition to the instance x with its predicted label h(x). In other words, \(h_x\) is the classifier trained on \(L \cup \{(x, h(x))\}\). Let us now use the notation \(h_{x\setminus {x_q}}\) to denote the same model as \(h_x\), but trained after (temporarily) excluding the instance \(x_q\) from L.

According to the idea of disagreement 2, if many models highly agree on a common label for \(x_q\), which is different from the queried label \(y_q\) (i.e., agreeing to disagree with \(y_q),\) then we can suspect \(y_q\) to be wrong. Therefore, a mislabeling measure can be expressed based on Eq. 6 for a labeled instance \((x_q, y_q)\), as follows:

A labeled instance with a high value of \(M(x_q, y_q)\) is potentially mislabeled. In order to reduce the effect of such instance on the active learning, three main alternatives exist in the literature (with different mislabeling measures).

The first alternative is to manually review and correct the label of the instance by an expert oracle. This alternative may induce an additional relabeling cost, because the expert is assumed to be reliable. The second alternative simply consists in removing the instance from L. Note that this alternative may occasionally remove an informative instance that was actually correctly labeled. The third alternative is softer than the previous ones. If the classifier accepts training with weighted instances [22, 30], then every instance in \((x_q, y_q) \in L\) can be weighted by a weight \(\frac{1}{M(x_q, y_q)}\) which is the inverse of the mislabeling measure, that is, instances with a higher mislabeling measure have a smaller weight (i.e., smaller impact on the model). However, this alternative highly depends on the used classifier.

As one can notice, each alternative has its benefits and drawbacks. For our experiments, in order to remain independent of any specific classifier, we evaluate active learning with the proposed mislabeling measure (Eq. 8) against other mislabeling measures from the literature, for the two first alternatives (i.e., relabeling and removing). Those alternatives require to periodically select an instance with the highest mislabeling measure from L. To ensure a fair comparison between the mislabeling measures, we simply set this period to \(\frac{1}{\alpha }\). For example, if \(\alpha = 0.1\), then after each 10 queries, the most likely mislabeled instance is either relabeled or removed from the set L.

As a recapitulation, Fig. 2 shows a general flowchart of the developed system. First, a model h is trained on an initial set of labeled instances L. If a stopping criterion is not yet fulfilled (e.g. reaching a given labeling budget), then the most informative instance is selected for labeling according to one of the proposed disagreement measures, and is added to L. If the number of iterations is multiple of \(\frac{1}{\alpha }\) then the most likely mislabeled instance is either relabeled or removed from L. The whole process is then repeated again for another iteration.

7 Experiments

In this section, we evaluate the proposed active learning strategies. First, we present the datasets and evaluation metrics as well as the benchmarking methods used for comparison. Then, we present the experimental results.

7.1 Datasets

We consider in our experimental evaluation seven different datasets of a variable size and number of classes, where six of them are considered as publicly available datasets and are described in the UCI machine learning repository [3]. The other dataset is a set of real-world administrative documents that are represented as bag of textual words (i.e., sparse vector containing the occurrence count of each word in the document). Table 1 shows a brief summary of each dataset including the number of categories (column class), the dimensionality (column dim), the number of instances (column size), and the percentage of instances kept for testing (column test). This percentage is presented for each dataset as it is originally available in the UCI repository.

7.2 Evaluation metrics

There is no absolute measure for evaluating active learning strategies. Most authors demonstrate the performance of active learning strategies visually by plotting curves of the classifier’s accuracy (on a test set) with respect to the number of labeled samples used for training. The higher the curve, the better the active learning strategy. We also present some of such plots in our experiments (see Figs. 3, 4). Nonetheless, this method does not provide a quantitative evaluation of the active learning strategy for the whole learning session. A straightforward way to achieve this is to compute the average accuracy (over iterations) for the whole active learning session:

where \(Acc_t\) is the test accuracy achieved by the classifier after querying for the t’th label. However, this measure gives a higher score to strategies that achieve higher accuracy at the end of the learning session (where accuracy is pretty high) even if they perform relatively poorly at early stages (where accuracy is pretty low). Therefore, we propose an alternative evaluation measure which quantifies the performance of a given active learning strategy relatively to the optimal strategy (that we referred to in Sect. 5) and the random sampling strategy which selects instances independently of their informativeness. This measure aims to be independent of the dataset by quantifying the area between the maximum achievable accuracy and the accuracy which is achievable by a random sampling, and can be computed as

where \(Acc_t(AL)\) is the test accuracy achieved at time t for a given strategy AL.

For the results presented in this paper, we noticed that using the average accuracy measure will lead to the same conclusions as the proposed evaluation metric E, although this is not necessarily true in general. Nevertheless, the metric E have some readability benefits. It shows how much a given active learning strategy is close to the optimal one independently of the dataset. Moreover, it clearly gives a negative value if there is no benefit in using the active learning strategy over the usual passive learning where data is randomly selected by the oracle.

7.3 Benchmarking methods

In order to evaluate the proposed active learning strategies (without considering noisy labels for now), we compare them to five active learning strategies described below.

-

1.

Entropy uncertainty [24]: it determines the most uncertain instance with respect to all possible classes based on the entropy measure. This strategy selects at each iteration the instance \(\bar{x}\) with the highest conditional entropy. \(\bar{x} = \mathop {\mathrm{argmax}} \limits _{x \in U} H(y | x, h),\) where \(H(y | x, h) = - \sum _{y \in Y} P(y | x, h) \log {P(y | x, h)}\).

-

2.

Least certain strategy [14]: it selects at each iteration the instance \(\bar{x}\) which is closest to the decision boundary. For classifiers that output an estimate of the prediction probability, this strategy is equivalent to selecting \(\bar{x} = \mathop {\mathrm{argmax}} \limits _{x \in U} P(y_1 | x, h)\), where \(y_1 = \mathop {\mathrm{argmax}} \limits _{y \in Y} P(y | x, h)\) is the most probable class for x.

-

3.

Sufficient weight strategy [5]: it computes for each instance x a sufficient weight which is defined as the smallest weight that should be associated with x so that the prediction of the classifier h(x) changes from one label to another. Then, it selects (for labeling) the instance \(\bar{x}\) with the smallest sufficient weight.

-

4.

Expected entropy reduction (EER) [23, 33]: this strategy selects the instance \(\bar{x}\) which minimizes the expected entropy of the model regarding all the other unlabeled instances. The expected entropy is computed by averaging over all possible labels \(y_i \in Y\) for each instance \(x \in U\).

$$\begin{aligned} \bar{x} = \mathop {\mathrm{argmin}} \limits _{x \in U} \sum _{y_i \in Y} p(y_i | x, h) \sum _{u \in U-x} H(y | u, h_{(x, y_i)}), \end{aligned}$$where \(h_{(x, y_i)}\) is the model after being trained on \(L \cup \{(x, y_i)\}\) and \(H(y | u, h_{(x, y_i)})\) is the conditional entropy for the instance u as described in the first strategy.

-

5.

Change in probabilities [6]: this strategy measures the variation between two models in terms of the difference in their probabilistic output. Let \(v_h\) denote a vector containing the prediction probabilities of the model h on all the available instances (labeled and unlabeled). Given the current model h and the model after being trained on L with an additional instance \(h_x\), the informativeness of the instance x is measured proportionally to the distance between \(v_h\) and \(v_{h_x}\).

To evaluate the active learning in the presence of noisy labels, we use the proposed mislabeling measure to filter mislabeled instances as described in Sect. 6. We compare the results based on two other mislabeling measures (described below) that are independent of the classifier, and has been used to mitigate the effect of noisy labels on active learning in [4, 12, 33].

-

1.

Entropy reduction based mislabeling measure [33]: this measure suggests that a suspiciously mislabeled instance is the one that minimizes the expected entropy over the set U, if it is labeled with a new label other than the one given by the oracle.

-

2.

Margin based mislabeling measure [4, 12]: this measure simply suggest that a mislabeled instance is the one having a high prediction probability and a low probability for the label given by the oracle. The mislabeling measure for a labeled instance \((x_q, y_q) \in L\) is then simply defined as \(p(y_1 | x, h_{L-{x_q}}) - p(y_q | x, h_{L-{x_q}})\), where \(y_1\) is the most probable label for \(x_q\), \(y_q\) is the label given by the oracle, and \(h_{L-{x_q}}\) is the model trained after excluding \(x_q\) from L.

SVM is used as a base classifier for all the considered active learning strategies. We consider two variants of the SVM classifier, a simple one (with a linear kernel) and a complex one (with an RBF kernel). We use the Python implementation of SVM which is available in the scikit-learn library [18]. As the traditional SVM is a binary classification model, we applied the one-against-one multiclass strategy, which constructs one classifier per pair of classes. At prediction time, the class which receives the most votes is selected.Footnote 3 Prediction probabilities are estimated and calibrated based on the SVM scores using the method described in [31]. For all the scenarios that we consider in this paper, the initial SVM model is trained on a fixed set of 50 initially labeled instances chosen randomly from U while ensuring that at least two instances from each class are included. The only exception is for the Letter-recognition dataset where 52 instances were selected (exactly two instances from each class).

The main SVM parameters taken into consideration are a penalty parameter C and a kernel coefficient parameter \(\gamma\) (only for the RBF kernel). The parameter C is a trade off between the training error and the simplicity of the decision surface. The parameter \(\gamma\) is the inverse of the radius of influence of samples selected by the model as support vectors. Those parameter values are simply selected based on an exhaustive grid search over pre-specified parameter values where the score is obtained using cross-validation on a separate dataset ( 15% of the whole dataset)3. The values retained for those parameters for each dataset are indicated on Table 1 (column SVM).

7.4 Results and analysis

We firstly evaluate the active learning without considering noisy labels. Table 2 shows the average accuracy for each active learning strategy based on the SVM (RBF) classifier. Each method was run on each dataset five times, by considering each time a random split of the training/testing sets. This allows to have five accuracy values for each method and dataset. The average accuracy (of the five values) is reported on Table 2, together with the corresponding confidence intervals (by considering a confidence level of 95%). Table 2 reports the p-value obtained based on Student’s t-test which shows how much significantly is the best performing strategy (among the proposed ones) different from the second best strategy (among the other reference strategies). Table 2 also reports the p-value obtained based on the statistical analysis of variance method (ANOVA), which is used to analyze the differences among all strategies. The smaller the p-values, the more significant is the difference. We can see that the most outstanding result is achieved on the Letter-recognition dataset where the weighted version of disagreement 1 achieves a much significantly different accuracy than the other strategies. Table 3 shows the average E metric (defined in section 7.2) over all classifiers for each individual dataset. Table 4 shows the average E metric as well as the average accuracy over all datasets for each individual classifier (columns RBF SVM and Linear SVM), and for all datasets and classifiers (column All SVMs). Figs. 3 and 4 show the test accuracy of the model h with respect to the number of labeled samples (chosen according to different strategies) used to train h, for 7 different datasets and two variants of h (SVM with RBF kernel in the top of each figure, and SVM with a linear kernel in the bottom of each figure). Fig. 3 (respectively Fig. 4) compares the proposed simple and weighted versions of disagreement 1 (respectively disagreement 2) to the other reference strategies. For clarity purposes, the curve of only one baseline strategy is presented in the figures, however, results of all the considered strategies are summarized in Tables 3 and 4.

First, we can observe that all the considered active learning strategies perform generally better than the passive random sampling. This can be seen from Figs. 3 and 4 where the active learning curves are predominantly higher, and from Tables 3 and 4, where the E metric values are rarely negative. This confirms that any active learning method can, in general, improve the results over the usual passive learning (random sampling).

Second, we can see that the simple and weighted versions of the proposed disagreement 1 and disagreement 2 strategies, all achieve a better overall performance than the Entropy and the Least certain strategies. This can be directly observed from the column All SVMs of Table 4. Moreover, the Entropy and the Least certain strategies are occasionally less reliable than the random one. This is especially true for the Letter-recognition dataset where the two strategies are significantly worse than the random sampling (see the Letter-recognition column in Table 3, and the Letter-recognition curves in Figs. 3 and 4). Indeed, for this dataset, the initial classifier achieves a low test accuracy (around \(30\%\)) and the learning progresses slowly. Therefore, selecting the most uncertain instances will allow to fine-tune a poorly defined decision boundary, which slows down the learning progress further. The same observation can be made for the EER strategy which achieved a lower performance than the random strategy on the Letter-recognition dataset (see Table 3). This is seemingly due to the fact that the EER strategy computes an expected entropy by averaging over all possible labels, which makes it less reliable when the number of classes is high (i.e., 26 classes for the Letter-recognition dataset as shown on Table 1).

Third, from Table 4 we can see that the strategy based on the change in probabilities achieved the closest performance to our proposed strategies and a slightly better performance than the simple disagreement 1 strategy. However, the weighted version of disagreement 1 achieves the best overall result. This is confirmed by the column All SVMs which shows the average E metric over all datasets and classifiers. This proves that the instances chosen using the proposed weighted disagreement 1 strategy are usually more informative.

Finally, the active learning results in the presence of noisy labels are summarized in Table 5. The weighted disagreement 1 has been used as a query strategy, as it achieved the best performance in the previous experiments. Table 5 shows the average E metric over all datasets when different mislabeling measures (including the one proposed in Sect. 6) are used to filter (i.e., relabel or remove) the potentially mislabeled instances. Different intensities of noise \(\alpha\) are considered. We can observe from Table 5 that for all the considered mislabeling measures, and for a fixed value of \(\alpha\), relabeling the potentially mislabeled instances improves the accuracy better than removing them. However, as discussed in Sect. 6, relabeling may require an additional cost, while removing does not. Further, Table 5 shows that the mislabeling measure that we proposed in Sect. 6 (which is based on the weighted disagreement 2 measure) allows to better mitigate the effect of noisy labels than the margin or the entropy reduction based mislabeling measures. This proves that relaying on a committee of models that highly agree on a common label and disagree with the label given by the oracle, allows to better characterize mislabeled instances, even when the noise intensity is significantly high.

8 Conclusion and future work

In this paper, we proposed a new active learning method which is able to query for the label of instances based on how much they impact the output of the classification model. The method is also able to measure how much the queried instance’s label is likely to be wrong, based on models that agree to disagree with the current classification model, without relaying on crowdsourcing techniques. This method is generic and can be used with any base classifier. The experimental evaluation demonstrate that the proposed query strategy achieves a higher accuracy compared to several active learning strategies, and that the proposed mislabeling measure efficiently characterize mislabeled instances.

As a future work, we will focus on how to automatically estimate the noise intensity from the data and from previous interactions with the oracle, so that the number of relabeled or removed instances could be adapted according to this noise intensity. We will also focus on improving computational efficiency of the proposed method. A first direction for this, is to explore heuristics that can significantly improve the computational efficiency by reducing the size of U. For example, as times go, unlabeled instances whose predicted labels never change during subsequent iterations of the active learning, could be safely removed from U. Another possibility is to use a fast-to-compute informativeness measure (e.g., uncertainty) in order to pre-select at each iteration a sufficiently large subset \(U' \subset U\) of a fixed size \(|U'| = m\) such that \(m \ll |U|\). Then, we can compute the disagreement measures for the instances in \(U'\) instead of all instances in U, which will allow us to reduce the complexity from \(\mathcal {O}(n^2)\) to \(\mathcal {O}(n)\) (which is similar to \(\mathcal {O}(n \times m),\) because m is constant). Another possible direction is to explore whether the computational efficiency could be improved if the proposed method is specialized for on a limited class of machine learning algorithms. Furthermore, we would like to investigate ways of combining the disagreement 1 and disagreement 2 strategies to benefit from their synergy. As a simple idea, Eq. 7 contains two factors that allow to improve the classifier’s accuracy. The right-hand side factor has been used in disagreement 1, but the left-hand side factor is not possible to estimate because it requires knowing if the label of x has been correctly or incorrectly predicted. However, since disagreement 2 allows to characterize instances whose label is probably wrongly predicted, then it can be possibly used as a weight in place of the left-hand side factor of Eq. 7.

Notes

This is optimal given that we are only allowed to query for the label of one instance at each iteration, and it is only optimal for the given classifier.

As the decision boundary becomes more stable (over time), fine tuning it becomes more effective.

For more information about the used one-against-one multiclass strategy and the hyper-parameter selection, please visit the APIs sklearn.multiclass.OneVsOneClassifier and sklearn.model_selection.GridSearchCV and sklearn.svm.SVC on http://scikit-learn.org.

References

Abedini M, Codella N, Connell J, Garnavi R, Merler M, Pankanti S, Smith J, Syeda-Mahmood T (2015) A generalized framework for medical image classification and recognition. IBM J Res Dev 59(2/3):1–18

Agarwal A, Garg R, Chaudhury S (2013) Greedy search for active learning of ocr. In: International conference on document analysis and recognition (ICDAR). IEEE, pp 837–841

Bache K, Lichman M (2013) Uci machine learning repository. Irvine, CA : University of California, School of Information and Computer Science. http://archive.ics.uci.edu/ml

Bouguelia MR, Belaid Y, Belaid A (2015) Identifying and mitigating labelling errors in active learning. In: Fred A, De Marsico M, Figueiredo M (eds) Pattern recognition: applications and methods. Lecture Notes in Computer Science, vol 9493. Springer, Cham, pp 35–51

Bouguelia MR, Belaid Y, Belaid A (2016) An adaptive streaming active learning strategy based on instance weighting. Pattern Recogn Lett 70:38–44

Bouneffouf D, Laroche R, Urvoy T, Fraud R, Allesiardo R (2014) Contextual bandit for active learning: active thompson sampling. Int Conf Neural Inf Process 26(12):405–412

Ekbal A, Saha S, Sikdar UK (2014) On active annotation for named entity recognition. Int J Mach Learn Cybern

Fang M, Zhu X (2014) Active learning with uncertain labeling knowledge. Pattern Recogn Lett 43:98–108

Frnay B, Verleysen M (2014) Classification in the presence of label noise: a survey. IEEE Trans Neural Netw Learn Syst 25(5):845–869

Gilad-Bachrach R, Navot A, Tishby N (2005) Query by committee made real. Adv Neural Inf Process Syst 5:443–450

Hamidzadeh J, Monsefi R, Yazdi HS (2016) Large symmetric margin instance selection algorithm. Int J Mach Learn Cybern 7(1):25–45

Henter D, Stahl A, Ebbecke M, Gillmann M (2015) Classifier self-assessment: active learning and active noise correction for document classification. In: 13th International conference on document analysis and recognition (ICDAR). IEEE, pp 276–280

Ipeirotis PG, Provost F, Sheng VS, Wang J (2014) Repeated labeling using multiple noisy labelers. Data Min Knowl Discov 28(2):402–441

Kremer J, Steenstrup Pedersen K, Igel C (2014) Active learning with support vector machines. Wiley Interdiscip Rev Data Min Knowl Discov 4(4):313–326

Krithara A, Amini MR, Renders JM, Goutte C (2008) Semi-supervised document classification with a mislabeling error model. In: Macdonald C, Ounis I, Plachouras V, Ruthven I, White RW (eds) Advances in information retrieval, ECIR 2008. Lecture Notes in Computer Science, vol 4956. Springer, Berlin, Heidelberg, pp 370–381

Lin CH, Weld DS (2016) Re-active learning: active learning with relabeling. In: AAAI conference on artificial intelligence, pp 1845–1852

Natarajan N, Dhillon IS, Ravikumar PK,Tewari A (2013) Learning with noisy labels. In: Advances in neural information processing systems, pp 1196–1204

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Ramirez-Loaiza ME, Sharma M, Kumar G, Bilgic M (2016) Active learning: an empirical study of common baselines. Data Min Knowl Discove 31:287–313

Rebbapragada U, Brodley CE, Sulla-Menashe D, Friedl MA (2012) Active label correction. In: IEEE 12th international conference on data mining (ICDM). IEEE, pp 1080–1085

Ren W, Li G (2015) Graph based semi-supervised learning via label fitting. Int J Mach Learn Cybern. doi:10.1007/s13042-015-0458-y

Rosenberg A (2012) Classifying skewed data: importance weighting to optimize average recall. In: INTERSPEECH, pp 2242–2245

Roy N, McCallum A (2001) Toward optimal active learning through sampling estimation of error reduction. In: International conference on machine learning, pp 441–448

Settles B (2012) Active learning. Synth Lect Artif Intell Mach Learn 6(1):1–114

Settles B, Craven M, Ray S (2008) Multiple-instance active learning. Adv Neural Inf Process Syst 20:1289–1296

Sharma M, Bilgic M (2013) Most-surely vs. least-surely uncertain. In: IEEE 12th International conference on data mining (ICDM). IEEE, pp 667–676

Small K, Roth D (2010) Margin-based active learning for structured predictions. Int J Mach Learn Cybern 1(1–4):3–25

Tuia D, Munoz-Mari J (2013) Learning user’s confidence for active learning. IEEE Trans Geosci Remote Sens 51(2):872–880

Vijayanarasimhan S, Grauman K (2012) Active frame selection for label propagation in videos. In: Fitzgibbon A, Lazebnik S, Perona P, Sato Y, Schmid C (eds) Computer Vision–ECCV 2012. Lecture Notes in Computer Science, vol 7576. Springer, Berlin, Heidelberg, pp 496–509

Wu J, Pan S, Cai Z, Zhu X, Zhang C (2014) Dual instance and attribute weighting for naive bayes classification. In: IEEE international conference on neural networks. IEEE, pp 1675–1679

Wu TF, Lin CJ, Weng RC (2004) Probability estimates for multi-class classification by pairwise coupling. J Mach Learn Res 5:975–1005

Zhang J, Wu X, Shengs VS (2015a) Active learning with imbalanced multiple noisy labeling. IEEE Trans Cybern 45(5):1095–1107

Zhang XY, Wang S, Yun X (2015b) Bidirectional active learning: a two-way exploration into unlabeled and labeled data set. IEEE Trans Neural Netw Learn Syst 26(12):3034–3044

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Bouguelia, MR., Nowaczyk, S., Santosh, K.C. et al. Agreeing to disagree: active learning with noisy labels without crowdsourcing. Int. J. Mach. Learn. & Cyber. 9, 1307–1319 (2018). https://doi.org/10.1007/s13042-017-0645-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13042-017-0645-0