Abstract

The increasing availability of remotely sensed data offers a new opportunity to address landslide hazard assessment at larger spatial scales. A prototype global satellite-based landslide hazard algorithm has been developed to identify areas that may experience landslide activity. This system combines a calculation of static landslide susceptibility with satellite-derived rainfall estimates and uses a threshold approach to generate a set of ‘nowcasts’ that classify potentially hazardous areas. A recent evaluation of this algorithm framework found that while this tool represents an important first step in larger-scale near real-time landslide hazard assessment efforts, it requires several modifications before it can be fully realized as an operational tool. This study draws upon a prior work’s recommendations to develop a new approach for considering landslide susceptibility and hazard at the regional scale. This case study calculates a regional susceptibility map using remotely sensed and in situ information and a database of landslides triggered by Hurricane Mitch in 1998 over four countries in Central America. The susceptibility map is evaluated with a regional rainfall intensity–duration triggering threshold and results are compared with the global algorithm framework for the same event. Evaluation of this regional system suggests that this empirically based approach provides one plausible way to approach some of the data and resolution issues identified in the global assessment. The presented methodology is straightforward to implement, improves upon the global approach, and allows for results to be transferable between regions. The results also highlight several remaining challenges, including the empirical nature of the algorithm framework and adequate information for algorithm validation. Conclusions suggest that integrating additional triggering factors such as soil moisture may help to improve algorithm performance accuracy. 
The regional algorithm scenario represents an important step forward in advancing regional and global-scale landslide hazard assessment.

Introduction

Landslide hazards generate more economic losses and fatalities than is generally acknowledged, due in large part to the reality that most casualties from landslide disasters occur in the developing world (Guzzetti et al. 1999). In such areas, complex topographic, lithologic, and vegetation signatures coupled with heavy rainfall events can lead to extensive mass wasting. Recent examinations of rainfall-induced landslides have increased understanding of the triggering mechanisms and surface conditions underlying slope instability. However, investigations remain largely site-specific and rely on high-resolution surface observable data, in situ rainfall gauge information and detailed landslide inventories. Such information is frequently unavailable at larger spatial scales due to spatial and temporal heterogeneities in surface information, rainfall gauge networks, and sparse landslide inventories.

The increasing availability of remotely sensed surface and atmospheric data offers a new opportunity to address landslide hazard assessment at larger spatial scales. A preliminary global landslide hazard algorithm developed by Hong et al. (2006, 2007) seeks to identify areas that exhibit a high potential for landslide activity by combining a calculation of landslide susceptibility with satellite-derived rainfall estimates. The algorithm framework is currently running at a 0.25° × 0.25° spatial resolution and outputs a set of landslide ‘nowcasts’ that can be compared with landslide inventories to assess algorithm performance. In this study, the authors define an algorithm ‘nowcast’ as a near real-time estimate of landslide event occurrence, highlighting the potential for landslide activity over a defined area. The algorithm considers all rapidly occurring mass movement types (e.g. landslides, debris flows, mudslides) that are directly triggered by rainfall.

Kirschbaum et al. (2009a) compare the prototype ‘nowcast’ algorithm framework with a global catalog of landslide events. The research concludes that the chief limitations of this system stem from the coarse spatial resolution and weighting scheme used in developing the susceptibility map and from the global rainfall intensity–duration threshold employed. To improve the accuracy and utility of this system, the study recommends that the landslide hazard algorithm be considered at the regional level.

This research draws on the algorithm evaluation and recommendations described in Kirschbaum et al. (2009a) to develop one approach to estimating regional susceptibility and hazard assessment using satellite and in situ surface data and detailed landslide event information. The regional investigation draws upon a large landslide inventory obtained from Hurricane Mitch, which affected several countries in Central America in 1998. This case study integrates a statistically derived landslide susceptibility map with a regionally based intensity–duration threshold to provide one plausible approach for enhancing near real-time algorithm ‘nowcasts’. This paper introduces the framework, methodology, and setting for the regional landslide algorithm investigation. A new regional susceptibility map is presented and rainfall triggering threshold relationships are tested against previous work. The authors compare the regional case study framework to the existing global algorithm for the Hurricane Mitch event. Remaining issues and potential improvements are discussed.

Satellite-based landslide hazard algorithm

The intensity of rainfall over short time periods can serve as a triggering mechanism by increasing pore water pressures, either through a rise in ground water levels or through the formation of perched water tables within the unsaturated zone (Wieczorek 1996; Iverson 2000). Historically, this triggering relationship has been derived empirically using information on the duration and intensity of rainfall events that triggered landslides. Rainfall intensity–duration (I–D) thresholds have been calculated at scales ranging from global (Caine 1980; Hong et al. 2006; Guzzetti et al. 2008) to local (Larsen and Simon 1993; Ahmad 2003) and specify a lower rainfall bound above which a mass movement may be triggered.

To identify ‘nowcast’ areas of potential landslide activity, the algorithm couples a static susceptibility map with a rainfall I–D curve, assigning minimum thresholds for susceptibility and rainfall values at specified temporal durations. If a pixel has a susceptibility index value greater than the defined threshold and the rainfall accumulation exceeds the I–D threshold value, then an algorithm ‘nowcast’ is issued. This approach is intended to provide a regional to global perspective for near real-time landslide hazard assessment. The algorithm nowcasts are intended to be used over areas larger than 2,500 km². These scales are currently limited by the coarsest data input, namely the 0.25° × 0.25° Tropical Rainfall Measuring Mission (TRMM) Multisatellite Precipitation Analysis (TMPA) product (Huffman et al. 2007). Higher resolution surface data are also employed to calculate the static susceptibility values. The algorithm framework is outlined in Fig. 1.
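The decision rule described above can be sketched in a few lines. This is a minimal illustration rather than the operational algorithm: it assumes the I–D curve has the conventional power-law form I = αD^(−β), and the function names, the susceptibility cutoff, and the coefficients `ALPHA` and `BETA` are hypothetical placeholders, not values taken from Hong et al. (2006) or Guzzetti et al. (2008).

```python
def id_threshold_intensity(duration_h, alpha, beta):
    """Minimum mean rainfall intensity (mm/h) on a power-law I-D curve.

    Assumes the form I = alpha * D**(-beta); alpha and beta are placeholders.
    """
    return alpha * duration_h ** (-beta)


def issue_nowcast(susceptibility, rain_accum_mm, duration_h,
                  si_threshold, alpha, beta):
    """Return True when both conditions of the nowcast rule are met:
    the pixel is susceptible enough AND its rainfall exceeds the I-D curve."""
    mean_intensity = rain_accum_mm / duration_h  # mm/h over the window
    return (susceptibility > si_threshold
            and mean_intensity > id_threshold_intensity(duration_h, alpha, beta))


# Hypothetical coefficients and cutoff, for illustration only.
ALPHA, BETA = 10.0, 0.5
SI_THRESHOLD = 4.0

# 120 mm in 24 h over a pixel with susceptibility 4.2
print(issue_nowcast(4.2, 120.0, 24.0, SI_THRESHOLD, ALPHA, BETA))
```

Because the two conditions are combined with a logical AND, heavy rainfall over a low-susceptibility pixel does not produce a nowcast, and neither does a highly susceptible pixel under light rainfall.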

Global framework

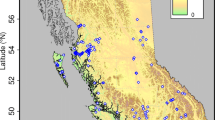

The global algorithm framework uses a global landslide susceptibility map, which was calculated using several remotely sensed and surface products. Shuttle Radar Topography Mission (SRTM) data at a 3 arc-second (~90 m) resolution is used to derive topographic parameters including elevation, slope, and drainage density (Rabus et al. 2003). Other products include 1-km Moderate Resolution Imaging Spectroradiometer (MODIS) land cover (Friedl et al. 2002), and 0.25°–0.5° resolution soil characteristics information (FAO/UNESCO 2003; Batjes 2000). Each product is aggregated or interpolated from its base spatial resolution to 0.25° × 0.25°. Soil type (classification of soil clay mineralogy, soil depth, moisture capacity, etc.), soil texture (percentage of sand, clay, and loam), and land cover were assigned a qualitative weight derived from previous literature. The six parameters chosen to estimate susceptibility (slope, soil type, soil texture, elevation, land cover, and drainage density) were normalized globally and combined using a weighted linear combination approach. The resulting global susceptibility map includes susceptibility values ranging from 0 (low susceptibility) to 5 (high susceptibility) (Hong et al. 2007) (Fig. 2).
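A weighted linear combination of this kind can be sketched as follows. The sketch assumes min-max normalization of each layer and rescales the weighted sum to the 0 to 5 range; the layer names, weights, and pixel values are hypothetical and are not the weights used by Hong et al. (2007).

```python
def normalize(values):
    """Min-max normalize a list of values to [0, 1] (assumed normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def weighted_linear_combination(layers, weights, scale_max=5.0):
    """Combine co-registered layers into one susceptibility value per pixel.

    layers  : dict of layer name -> list of per-pixel values (equal lengths)
    weights : dict of layer name -> non-negative weight
    Returns a list of values between 0 and scale_max.
    """
    names = list(layers)
    total_weight = sum(weights[name] for name in names)
    normalized = {name: normalize(layers[name]) for name in names}
    n_pixels = len(layers[names[0]])
    return [
        scale_max * sum(weights[name] * normalized[name][i] for name in names)
        / total_weight
        for i in range(n_pixels)
    ]


# Three pixels, two hypothetical layers with equal weights.
layers = {"slope": [0.0, 10.0, 20.0], "elevation": [100.0, 100.0, 300.0]}
weights = {"slope": 0.5, "elevation": 0.5}
print(weighted_linear_combination(layers, weights))
```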

Global landslide susceptibility map described in Hong et al. (2007), with inset maps showing global susceptibility values for a Central America, and b Southeast Asia

Hong et al. (2006) developed an I–D threshold for the global algorithm framework that utilizes satellite-based TMPA rainfall information. This merged satellite product provides precipitation estimates at 0.25° × 0.25° resolution every 3 h from 50°N to 50°S. Forecasts from the global algorithm are updated every 3 h.

The global algorithm framework was evaluated using a newly compiled global catalog for rainfall-triggered landslide events (Kirschbaum et al. 2009b). Landslide information was obtained from online news reports, hazard databases, and other credible sources. The landslide catalog includes events from 2003, and 2007–2010 and generally provides a minimum number of reported landslide events worldwide due to influencing factors such as regional reporting biases and accuracy of reports.

Evaluation of the global landslide hazard algorithm in Kirschbaum et al. (2009a) identifies several limitations of the existing system. First, all surface observables are aggregated to a 0.25° resolution, which serves to strongly decrease the strength of surface signals such as topography or slope by averaging values over a large area. Second, the susceptibility map incorporates two fairly coarse resolution soil products, which serves to over-emphasize the soils information and bias susceptibility values in many areas. Third, the globally consistent rainfall threshold is shown to under-estimate actual rainfall triggering conditions in many environments. The paper also stresses the dearth of landslide inventory information for accurate and comprehensive validation of the algorithm framework.

Kirschbaum et al. (2009a) outline a set of recommendations for improving algorithm performance. These include consideration of surface data products at higher spatial resolutions, employment of a more physically based methodology for calculating susceptibility, and re-evaluation of the rainfall intensity–duration threshold to better account for regional climatology. The study emphasizes that the validation efforts would be greatly enhanced by using more detailed and comprehensive landslide inventories and that this approach may be better suited for a regional scale analysis.

Regional setting

Drawing upon the recommendations outlined in the global evaluation, this paper presents a more statistically based methodology for approaching the issue of regional landslide hazard assessment. This research selects study areas in four Central American countries that were significantly affected by Hurricane Mitch in late October and early November 1998. Hurricane Mitch made landfall as a Category 1 hurricane (Saffir-Simpson scale) on October 29, 1998 and moved across northern Honduras before turning north to hit eastern Guatemala. In its wake, it triggered hundreds of thousands of landslides in Nicaragua, El Salvador, Honduras and Guatemala, causing an estimated 18,800 fatalities and over USD 14.8 billion in damages (EM-DAT 2008). Following the hurricane, teams of landslide experts from the U.S. Geological Survey (USGS) and others used multi-temporal aerial photographs and field mapping to estimate the locations and perimeters of over 24,000 landslides in selected study areas over the four countries (Bucknam et al. 2001; Harp et al. 2002; Rodriguez et al. 2006; Devoli et al. 2007). The locations of the study areas and mapped landslides are shown in Fig. 3.

Hurricane Mitch storm track and mapped landslide inventory study areas. The total inventory contains over 24,000 landslides, covering a total study area of 35,474 sq km. The 19 landslide events not associated with Hurricane Mitch (non-Mitch landslides) are extracted from the global landslide catalog described in Kirschbaum et al. (2009b)

Previous studies have used portions of the Hurricane Mitch landslide inventory for landslide susceptibility analysis at local scales, employing empirical, statistical, and deterministic methodologies (Menéndez-Duarte et al. 2003; Coe et al. 2004; Guinau et al. 2005; Liao et al. 2011). In this evaluation, the authors employ a more dynamic approach at the regional scale to statistically model landslide susceptibility and hazard over the four affected countries in order to provide a more spatially homogeneous assessment. This study is intended to serve as a regional investigation of landslide hazard assessment where the climatology and geologic setting are fairly homogeneous and where the methodology is general enough to replicate in other geographic regions.

The Hurricane Mitch landslide inventories are statistically evaluated with several satellite-based and in situ surface observables to develop a regional landslide susceptibility map. The regional susceptibility map uses a statistical methodology described below to integrate surface observables into a 90-m resolution map. Surface observables include a 90-m SRTM DEM, which was used to derive elevation, slope, curvature (slope concavity), and aspect (slope orientation). While this DEM was calculated after the Hurricane Mitch event, the vertical error for the SRTM system components has been found to be within the range of any incident elevation changes caused by landslides (Farr et al. 2007). Land cover information was obtained from the Central American Vegetation/Land Cover Classification and Conservation Status dataset (1 km resolution), developed by Proyecto Ambiental Regional de Centroamerica/Central America Protected Areas Systems (PROARCA/CAPAS 1998), and was re-classified into 10 classes based on a relative landslide susceptibility categorization presented in Hong et al. (2007). Country-level lithologic maps were obtained for each of the four countries, with a mapping scale of 1:100,000 for El Salvador (SNET/MARN 2008), and 1:500,000 for Honduras (Wieczorek et al. 1998), Guatemala (Lira, personal communication), and Nicaragua (INTER 1995). The data were divided into classes according to their lithologic characteristics, rock type and age. Lithologic classes were assigned using information on landslide distribution in a selection of the mapped study areas and classification guidelines from Nadim et al. (2006). Figure 4 provides a listing of the surface observable datasets, category classifications, and sources used in the regional susceptibility evaluations.

Surface observable data, binned categories, and corresponding frequency ratio values used in the regional susceptibility evaluation. The black dashed line marks Fr = 1; as a frequency ratio value diverges from 1, there is a more pronounced relationship between the landslide and total pixel areas

Recent work by Guzzetti et al. (2008) and Nadim et al. (2009) suggests that using a single global I–D threshold does not account for diverse climatologies at the regional level, where rainfall signatures can vary greatly. As a result, Guzzetti et al. (2008) propose several different regional I–D thresholds to more accurately resolve triggering relationships in climatologically similar geographic areas, using a database of over 2,600 landslide events and several hundred I–D thresholds from previous literature. The new set of defined regional thresholds is consistently lower than previous global thresholds, which Guzzetti et al. (2008) attribute to the increased availability of data and a more representative sample of events and thresholds globally.

There are very few landslide events with a precise time of landslide occurrence within the study area, and as a result, a new landslide I–D threshold cannot be calculated for the regional case study. As a substitute, the authors use an I–D threshold calculated by Guzzetti et al. (2008) for humid subtropical environments within the regional algorithm framework. Figure 5 plots the global and regional I–D curves used in the algorithm frameworks along with additional examples of I–D curves at the global scale and in climatologically similar regions. The regional I–D curve for humid subtropical environments is considerably lower than previous regional and global I–D thresholds, which suggests that less rainfall may actually be required to trigger a landslide in this area. This threshold may also be a more realistic value for satellite rainfall products, which tend to report lower rainfall intensities compared to surface rain gauges.

Rainfall intensity–duration graph for global curves: a Hong et al. (2006), b Caine (1980), and c Guzzetti et al. (2008)—Global. I–D thresholds for regional curves in humid, subtropical regions: d Guzzetti et al. (2008)—Regional, e Ahmad (2003)—Jamaica, and f Larsen and Simon (1993)—Puerto Rico. The algorithm framework uses the I–D threshold curves from Hong et al. (2006) and Guzzetti et al. (2008)—Regional for the global and regional algorithms, respectively

Landslide susceptibility map

Methodology

As discussed above, and shown in Fig. 2, the global landslide susceptibility map from Hong et al. (2007) is based on a weighted linear combination method to calculate susceptibility. This method assigns a weight to each surface observable based on previous literature and sums the results to create a composite map at 0.25° × 0.25° resolution.

The derivation of the regional susceptibility map differs in two important respects from the global map of Hong et al. (2007): (1) a statistically derived bivariate, grid-cell unit technique is employed to estimate susceptibility using the Hurricane Mitch landslide inventory dataset; and (2) susceptibility values are calculated based on 90-m to 1-km data and are aggregated to the 1-km scale. To prepare the susceptibility data, the landslide inventory vector files were transformed into 90-m resolution grids using a maximum-area transformation algorithm in a Geographic Information System (GIS). The resulting grid files provide a binary dataset where pixels equal to one denote areas with one or more mapped landslides and pixels with a value of zero indicate no mapped landslides. Each of the surface observables was also transformed from its base resolution into a 90-m grid using a resampling tool.

This study employs a frequency ratio methodology described by Lee and Pradhan (2007) and Lee et al. (2007), which considers each surface observable individually (e.g., slope), and classifies values into a set of defined bins (e.g., <5°, 5–10°, 10–15°). A frequency ratio value is calculated for each bin as the percentage of landslide pixels in each bin divided by the percentage of pixels within the study area having the same bin values, written as:

$$Fr_{cb} = \frac{L_{cb} / L_{T}}{A_{cb} / A_{T}}$$

where $Fr_{cb}$ is the frequency ratio value for each surface observable or category c = (1, 2,…,m), at each bin b = (1, 2,…,n), $L_{cb}$ and $A_{cb}$ are the landslide area and the total pixel area within that bin, and T denotes the total landslide or study area for the surface observable. When Fr ≈ 1, the percentage of landslide pixels is proportional to the total pixel area within the bin; when the frequency ratio diverges from Fr = 1, there is a more pronounced relationship between the landslide and total pixel areas. Figure 4 provides a description of the surface observable categories tested and their corresponding frequency ratio values over the study area.
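As a worked illustration of the frequency ratio, the following sketch computes one Fr value per bin for a single surface observable from a binary landslide grid. The bin labels and the ten-pixel example are hypothetical; only the ratio itself (share of landslide pixels in a bin divided by share of all pixels in that bin) follows the description above.

```python
from collections import Counter


def frequency_ratios(bin_ids, landslide_mask):
    """Frequency ratio per bin for one surface observable.

    bin_ids        : bin label of each pixel (e.g. a slope class)
    landslide_mask : 1 if the pixel contains a mapped landslide, else 0
    """
    total_area = len(bin_ids)
    total_landslide = sum(landslide_mask)
    if total_landslide == 0:
        raise ValueError("no landslide pixels in the study area")
    area = Counter(bin_ids)
    slides = Counter(b for b, ls in zip(bin_ids, landslide_mask) if ls)
    return {
        b: (slides[b] / total_landslide) / (area[b] / total_area)
        for b in area
    }


# Ten hypothetical pixels in two slope bins.
bins = ["<5"] * 6 + ["5-10"] * 4
mask = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
print(frequency_ratios(bins, mask))
```

In this toy case the steeper bin holds 75% of the landslide pixels but only 40% of the area, so its ratio is well above 1, while the flatter bin falls below 1.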

The frequency ratio values are calculated for each set of surface observable bins and the values are summed on a pixel-by-pixel basis to estimate landslide susceptibility over the study area, shown as:

$$SI = Fr_{\mathrm{factor}\,1} + Fr_{\mathrm{factor}\,2} + \dots + Fr_{\mathrm{factor}\,N}$$

where $Fr_{\mathrm{factor}\,N}$ can be any combination of surface observable factors, with c = (1, 2,…,m) representing the number of surface observables, and b = (1, 2,…,n) showing the number of bins. For example, a pixel with a slope between 25° and 30°, elevation between 1,500 and 1,750 m, south-facing aspect, and convex slope in tropical evergreen forest with a surficial alluvium lithology would generate an SI value of 9.26. Fr values are shown in Fig. 4. The numerical value of the Susceptibility Index varies according to the number of surface observables considered; however, high index values indicate an increased likelihood of landslide occurrence and low values suggest that the types of surface observables present are indicative of low landslide frequency.
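The pixel-by-pixel summation can be sketched as a simple table lookup. The Fr values in `FR_TABLES` below are hypothetical stand-ins, not the values plotted in Fig. 4, so the result is not meant to reproduce the 9.26 example.

```python
def susceptibility_index(pixel_bins, fr_tables):
    """Sum the frequency ratio of the bin a pixel falls into, over all observables.

    pixel_bins : dict of observable name -> bin label for this pixel
    fr_tables  : dict of observable name -> {bin label: frequency ratio}
    """
    return sum(fr_tables[obs][bin_label] for obs, bin_label in pixel_bins.items())


# Hypothetical frequency ratio tables for two observables.
FR_TABLES = {
    "slope": {"<5": 0.2, "25-30": 1.8},
    "aspect": {"N": 0.9, "S": 1.2},
}

pixel = {"slope": "25-30", "aspect": "S"}
print(susceptibility_index(pixel, FR_TABLES))
```

Adding a further observable simply adds one more term to the sum, which is why the absolute range of the index depends on how many observables are included.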

This methodology provides a straightforward calculation of susceptibility. The use of a grid-based technique allows the frequency ratio approach to be applied at any spatial scale. However, the methodology requires that surface observable data have homogeneous coverage over the study area and that there are adequate landslide inventories. This methodology also tends to simplify the relationship between surface observables and landslides across the study area by generalizing their contribution so that only one frequency ratio value describes the relationship between a range of surface observable values in each classified bin (e.g., one Fr value for elevations greater than 2,250 m) (Kojima et al. 2000). Despite the limitations, the bivariate frequency ratio approach provides a clear, direct comparison between landslide and non-landslide areas using minimal processing time.

Frequency ratios were calculated for each of the six surface observables and results were tested with the landslide inventories within each country. A sensitivity analysis tested individual surface observables, as well as combinations of them, against landslide inventory data over several different study areas in the four countries. Results indicate that all surface observable categories provide information that improves the susceptibility calculations (Kirschbaum 2009). The susceptibility map was calculated at a 90-m pixel resolution but was aggregated to 1 km using the median value for easier integration into the algorithm framework (Fig. 6).
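Median aggregation of a fine grid to a coarser one can be sketched as below. The sketch assumes square, non-overlapping blocks and an integer aggregation factor, which is a simplification: 90 m does not divide evenly into 1 km, and the resampling actually used in the study is not described here.

```python
from statistics import median


def aggregate_median(grid, factor):
    """Aggregate a 2-D grid by taking the median of each factor x factor block.

    Blocks at the right and bottom edges may be smaller than factor x factor.
    """
    rows, cols = len(grid), len(grid[0])
    out = []
    for r0 in range(0, rows, factor):
        out_row = []
        for c0 in range(0, cols, factor):
            block = [
                grid[r][c]
                for r in range(r0, min(r0 + factor, rows))
                for c in range(c0, min(c0 + factor, cols))
            ]
            out_row.append(median(block))
        out.append(out_row)
    return out


# Hypothetical 4 x 4 susceptibility grid aggregated by a factor of 2.
fine = [
    [1, 2, 5, 5],
    [3, 4, 5, 9],
    [0, 0, 7, 8],
    [0, 6, 9, 10],
]
print(aggregate_median(fine, 2))
```

The median is less sensitive than the mean to a few extreme pixels inside a block, such as the single value of 6 in the lower-left block of the example.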

The numerical SI values were mapped to five susceptibility index classes using a quantile-based categorization. The five classes were defined from equal intervals (20%) on a receiver operating characteristic (ROC) curve, explained below. The authors found this categorization scheme to most effectively maximize the true-positive rate (successful nowcasts) while minimizing the false-positive rate (false alarms) for each susceptibility index category. These results are consistent with previous mapping schemes (Dai et al. 2004; Can et al. 2005; Guzzetti et al. 2006a; Lee et al. 2007). The five defined susceptibility index classes range from Low (SI < 4.5) to High (SI > 7.7) susceptibility.
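One plain reading of a quantile-based categorization is to cut the SI distribution at its 20th, 40th, 60th, and 80th percentiles. The sketch below does only that; how the class breaks were tied to intervals on the ROC curve is not specified above, so treating them as quintiles of the SI values is an assumption, and the sample values are hypothetical.

```python
from bisect import bisect_right
from statistics import quantiles


def quintile_breaks(si_values):
    """Four cut points that split the SI values into five equal-count groups."""
    return quantiles(si_values, n=5)


def classify(si, breaks):
    """Map an SI value to a class from 1 (lowest) to 5 (highest)."""
    return bisect_right(breaks, si) + 1


# Hypothetical SI values for ten pixels.
si_values = [float(v) for v in range(1, 11)]
breaks = quintile_breaks(si_values)
print(breaks)
print([classify(v, breaks) for v in si_values])
```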

Global versus regional susceptibility

Receiver operating characteristic evaluation is employed to compare the relative success of the susceptibility maps for the regional and global approaches. ROC analysis depicts the tradeoff between successful hit rates (true-positives) and false alarm rates (false-positives). The resulting ROC curve graphs the cumulative true-positive rate against the false-positive rate for a set of classifiers (Fawcett 2006). Landslide and non-landslide pixels are extracted and summed into equal interval bins. Rates are plotted cumulatively, with the true-positive rate (accurately resolved landslide pixels) on the y-axis and the false-positive rate (non-landslide pixels classified as landslides) on the x-axis. The success of the susceptibility map is determined by taking the area under the ROC curve (AUC), where an AUC value of 1 indicates a perfect model fit and a value of 0.5 represents a fit indistinguishable from random occurrence.
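The ROC construction and the area under the curve can be sketched with a threshold sweep and the trapezoidal rule. This is a generic implementation of the technique described by Fawcett (2006), not the authors' code; the scores and labels in the example are hypothetical.

```python
def roc_points(scores, labels):
    """Cumulative (false-positive rate, true-positive rate) points.

    scores : susceptibility value per pixel (higher = more susceptible)
    labels : 1 for landslide pixels, 0 for non-landslide pixels
    Pixels with identical scores are added to the curve together.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need both landslide and non-landslide pixels")
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        current = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == current:
            if pairs[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# A map that ranks every landslide pixel above every non-landslide pixel.
print(auc(roc_points([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0])))
# A map that assigns the same score everywhere carries no information.
print(auc(roc_points([1.0, 1.0, 1.0, 1.0], [1, 0, 1, 0])))
```

The first call returns 1.0 and the second 0.5, matching the perfect-fit and random-occurrence limits described above.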

The susceptibility index values are compared for the regional and global maps over the same area. Figure 7 plots the ROC validation results for the study areas in each country. The global susceptibility map has a fit barely distinguishable from random occurrence in many of the study areas, whereas the regional model demonstrates fairly robust model fits. Given that the regional susceptibility map was calculated using the landslide inventory data, it is not surprising that the regional ROC and AUC results are higher than those of the global susceptibility map over the same area. The ROC result for Nicaragua suggests that susceptibility is difficult to characterize from the available data. This may be a result of the small study area size in this country or topographic heterogeneities that may not be adequately resolved.

ROC validation comparing results from this study (black line) and the Hong et al. (2007) global susceptibility map (blue line) over the study areas in a Guatemala, b Honduras, c El Salvador, and d Nicaragua. The 1:1 line (red line) represents a model fit indistinguishable from random occurrence. AUC values are shown for each country for the global (blue) and regional (black) evaluations

A confusion matrix is calculated for both the global and regional susceptibility index maps using a threshold of SI ≥ 4 from the qualitative category descriptions. The matrix includes calculations for the true-positive (TP), true-negative (TN), false-positive (FP), and false-negative (FN) values, which can be used to compute several statistics:

N represents all of the negative nowcasts, P are all the positive nowcasts, POD is the percentage of landslide pixels that are successfully predicted by the susceptibility map, FAR is the percentage of pixels defined as high susceptibility where no landslides were documented, and accuracy defines the percentage of susceptible and non-susceptible pixels accurately identified by the susceptibility map. The Cohen’s Kappa index (κ) is used as a second validation method, which accounts for model agreement by chance and is therefore considered to be a more robust statistical measure (Cohen 1960; Hoehler 2000). The Kappa index computes the observed probability P obs of pixels accurately identified as landslide or non-landslide, compared to the expected probability P exp based on chance assignment:

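$$\kappa = \frac{P_{obs} - P_{exp}}{1 - P_{exp}}$$

$$P_{obs} = \mathrm{accuracy}; \quad P_{exp} = \frac{(TP + FN)(TP + FP) + (TN + FN)(TN + FP)}{(TP + TN + FP + FN)^{2}}$$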

The κ values for the relative level of agreement between the two models have been subjectively classified in the literature into five categories: slight (<0.2), fair (0.2–0.4), moderate (0.4–0.6), substantial (0.6–0.8), and almost perfect (0.8–1) (Landis and Koch 1977).
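The statistics discussed above can be computed from the four confusion matrix counts as sketched below. Several choices here are assumptions rather than the authors' stated definitions: POD is taken as TP / (TP + FN), FAR is taken as the false-positive rate FP / (FP + TN) used in ROC analysis (the alternative false alarm ratio FP / (TP + FP) is also common), and the counts in the example are hypothetical. The Kappa formula is the standard Cohen (1960) form for a two-class table.

```python
def confusion_stats(tp, tn, fp, fn):
    """POD, FAR, accuracy and Cohen's Kappa from confusion matrix counts."""
    total = tp + tn + fp + fn
    pod = tp / (tp + fn)            # share of landslide pixels detected
    far = fp / (fp + tn)            # assumed: false-positive rate
    accuracy = (tp + tn) / total    # observed agreement, P_obs
    # Agreement expected by chance from the row and column totals, P_exp
    p_exp = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / total ** 2
    kappa = (accuracy - p_exp) / (1.0 - p_exp)
    return {"POD": pod, "FAR": far, "accuracy": accuracy, "kappa": kappa}


def kappa_category(kappa):
    """Agreement label following the Landis and Koch (1977) ranges."""
    if kappa < 0.2:
        return "slight"
    if kappa < 0.4:
        return "fair"
    if kappa < 0.6:
        return "moderate"
    if kappa < 0.8:
        return "substantial"
    return "almost perfect"


# Hypothetical pixel counts.
stats = confusion_stats(tp=40, tn=40, fp=10, fn=10)
print(stats)
print(kappa_category(0.3))
```

Accuracy alone can look favorable when non-landslide pixels dominate a map, which is the reason a chance-corrected measure such as Kappa is reported alongside it.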

Both the global map and regional scenario were compared at 0.01° resolution and results of the statistical analysis are shown in Table 1. The regional POD is lower than the global map, which is likely due to the fact that some of the landslide events are mapped along the total runout of the landslide and consequently are occasionally mistaken for streams or rivers. When only landslide initiation points are considered (information was only available for Guatemala), the POD of the regional map increases to 86%. The lower POD values may also be a result of the spatial resolution of the regional susceptibility map, which is expected to produce a lower false alarm rate but also possibly lower detection. Comparatively, the high POD of the global map also results in a high FAR. One challenge in comparing susceptibility maps at different spatial resolution is the issue of representation errors. Despite discrepancies between the 0.25° and 0.01° resolution of the two maps, comparing their results highlights some interesting trends which are worth exploring.

Several studies have used Kappa indices to evaluate the relationship between landslide susceptibility maps (Guzzetti et al. 2006b; Van Den Eeckhaut et al. 2006; Baeza et al. 2009), noting values ranging from 0.53 to 0.87. While the Kappa values cited here are lower than those of previous studies, the cited studies calculated and validated their susceptibility maps over small areas, ranging from 40 to 200 km², using 15 surface observables or more to compute their susceptibility indices. These studies are therefore expected to have better model fits than the current evaluation, where the mapped landslide areas alone cover over 35,000 km². Despite the discrepancies in study area size, the regional SI map falls into the ‘fair agreement’ category, which suggests that it has some statistical significance.

Rainfall intensity–duration triggering threshold

With the exception of the I–D threshold developed by Hong et al. (2006), nearly all previous rainfall thresholds were derived from in situ rainfall gauge information. These threshold relationships require sufficient knowledge of the timing and precise amount of rainfall that accumulated during the landslide event. This information is often challenging to obtain due to the spatial variability of rainfall gauging stations and difficulties in attributing past landslides to specific rainfall events. To test applicability of the Guzzetti et al. (2008) regional I–D threshold to landslide events in Central America, available rainfall gauge information is compared with TMPA satellite rainfall for several landslide events within the four countries. Nineteen reported landslides were identified from the global landslide inventory presented in Kirschbaum et al. (2009b) in addition to six different landslide-affected areas from the Hurricane Mitch dataset. Gauge and satellite rainfall signatures were extracted for the storm events that were believed to have triggered the landslides and results are plotted according to the cumulative storm precipitation (storm depth) and storm duration. Gauge information is available for 14 of the 25 landslide events. Since it is difficult to ascertain the exact timing of the slope failures during Hurricane Mitch, the study assumes that the landslides were triggered in the latter portion of the storm’s passage.

Figure 8 plots the peak intensity and cumulative precipitation for each storm in order to resolve both the near-instantaneous and longer term rainfall signatures. Results are plotted with the global and regional I–D thresholds used in the algorithm frameworks. Figure 8 illustrates that the regional I–D threshold resolves significantly more events than the global I–D curve. The landslide events where the cumulative rainfall does not exceed the regional threshold may be due to an underestimation of rainfall intensities, particularly by the TMPA satellite rainfall; inaccurate identification of the landslide time or location; or other contributing factors to landslide initiation such as antecedent moisture. These issues are discussed below.

Storm depth (cumulative rainfall) versus duration graph comparing rainfall totals for a selection of Hurricane Mitch and non-Mitch landslide events in Central America. Rainfall signatures are calculated from surface rainfall gauges (NCDC daily global precipitation data; http://www.ncdc.noaa.gov/) and the TMPA product (Huffman et al. 2007). The global and regional I–D thresholds are plotted as cumulative rainfall versus storm duration
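Plotting an I–D threshold as cumulative rainfall versus duration amounts to multiplying the threshold intensity by the duration. Assuming the conventional power-law form I = αD^(−β), the threshold depth becomes αD^(1−β). The sketch below applies that conversion; the coefficients and storm totals are hypothetical and do not correspond to the curves in Fig. 8.

```python
def threshold_depth_mm(duration_h, alpha, beta):
    """Cumulative rainfall (mm) implied by a power-law I-D threshold.

    With I = alpha * D**(-beta) in mm/h, depth = I * D = alpha * D**(1 - beta).
    """
    return alpha * duration_h ** (1.0 - beta)


def exceeds_threshold(storm_depth_mm, duration_h, alpha, beta):
    """True when a storm's cumulative rainfall lies above the threshold curve."""
    return storm_depth_mm > threshold_depth_mm(duration_h, alpha, beta)


# Hypothetical coefficients: a 16 h storm must exceed 40 mm to cross the curve.
ALPHA, BETA = 10.0, 0.5
print(threshold_depth_mm(16.0, ALPHA, BETA))
print(exceeds_threshold(50.0, 16.0, ALPHA, BETA))
print(exceeds_threshold(30.0, 16.0, ALPHA, BETA))
```

For any β below 1 the threshold depth grows with duration even though the threshold intensity falls, so long, moderate storms can cross the curve as readily as short, intense ones.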

Regional approach versus global algorithm evaluation

The global and regional algorithms were run retrospectively from October 20 to November 8, 1998, representing the window in which Hurricane Mitch impacted Central America. The algorithm scenarios were run within the Land Information System (LIS) platform, a NASA Goddard Space Flight Center software framework with high performance land surface modeling and data assimilation capabilities (Kumar et al. 2006; Peters-Lidard et al. 2007). Both the global and regional 1-day intensity–duration thresholds were tested for each susceptibility map. Two- and three-day I–D thresholds were also tested and were found to have similar results. The global and regional algorithms were run at 0.25° and 0.01° resolution, respectively, with the TMPA rainfall data simply interpolated to 0.01° for the regional trials. Landslide areas were calculated in 0.01° grids, denoting pixels with at least one landslide in their area. The resulting global algorithm forecasts were disaggregated to 0.01° resolution for more direct comparison with the regional results. Figure 9 shows the algorithm forecasts for the four different scenarios tested. An algorithm nowcast is considered to be successful if the nowcast and landslide pixels overlap. From this evaluation, a confusion matrix was calculated for each of the tested scenarios (Table 2).
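The comparison step can be sketched as a disaggregation of the coarse nowcast grid followed by a pixel-wise overlap count. The sketch assumes simple replication of each coarse cell (a factor of 25 would take 0.25° cells to 0.01°), which may differ from the disaggregation performed within LIS; the small grids in the example are hypothetical.

```python
def disaggregate(coarse, factor):
    """Replicate every coarse cell into a factor x factor block of fine cells."""
    fine = []
    for row in coarse:
        fine_row = [value for value in row for _ in range(factor)]
        fine.extend(list(fine_row) for _ in range(factor))
    return fine


def confusion_counts(nowcast, landslide):
    """Count TP, TN, FP, FN between two binary grids of equal shape."""
    tp = tn = fp = fn = 0
    for nowcast_row, landslide_row in zip(nowcast, landslide):
        for n, l in zip(nowcast_row, landslide_row):
            if n and l:
                tp += 1      # nowcast overlaps a landslide pixel
            elif n and not l:
                fp += 1      # nowcast issued, no landslide mapped
            elif l:
                fn += 1      # landslide mapped, no nowcast
            else:
                tn += 1
    return tp, tn, fp, fn


# One coarse nowcast cell and one quiet cell, split by a factor of 2.
fine_nowcast = disaggregate([[1, 0]], 2)
landslides = [[1, 0, 0, 0],
              [0, 0, 1, 0]]
print(fine_nowcast)
print(confusion_counts(fine_nowcast, landslides))
```

Replication inflates the number of fine pixels flagged by a single coarse nowcast, which is one way a coarse map can score a high POD together with a high FAR.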

Fig. 9 Comparison of algorithm nowcasts for the regional case study under four scenarios: a regional susceptibility with regional I–D threshold, b regional susceptibility with global I–D threshold, c global susceptibility with regional I–D threshold, and d global susceptibility with global I–D threshold. The four maps plot results for the Hurricane Mitch event, displaying algorithm nowcasts (black), high susceptibility area from the regional or global susceptibility index maps (SI ≥ 4; grey), and study areas used for algorithm evaluation (green).

When the regional I–D threshold is applied to the regional map (Fig. 9a) and global map (Fig. 9c), the POD and FAR statistics are similar to those of the static susceptibility map evaluation, but the regional framework produces higher Accuracy results (Table 2). By comparison, when the global I–D threshold is applied to the regional (Fig. 9b) and global (Fig. 9d) susceptibility maps, the POD and FAR are significantly lower, due in part to the coarse spatial resolution of the global framework. While the global I–D threshold actually produces a higher accuracy value, the resulting nowcasts have extremely low POD and Kappa Index values.
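A high accuracy value alongside very low POD and Kappa values is a typical effect of class imbalance, since landslide pixels are rare relative to non-landslide pixels. The sketch below computes the four statistics from confusion-matrix counts. It assumes FAR is the false alarm rate FP/(FP + TN); the definition used with Table 2 may differ (for example the false alarm ratio FP/(TP + FP)). The counts are hypothetical and are not values from Table 2.

```python
def skill_scores(tp, fp, fn, tn):
    """POD, FAR, accuracy, and Cohen's kappa from confusion-matrix counts."""
    n = tp + fp + fn + tn
    pod = tp / (tp + fn)   # probability of detection
    far = fp / (fp + tn)   # false alarm rate (assumed definition)
    accuracy = (tp + tn) / n
    # Agreement expected by chance, from the row and column totals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {"pod": pod, "far": far, "accuracy": accuracy, "kappa": kappa}


# Hypothetical counts: almost no nowcasts are issued over a rare-event grid.
scores = skill_scores(tp=1, fp=5, fn=99, tn=9895)
for name, value in scores.items():
    print(f"{name}: {value:.4f}")
```

In this example nearly 99% of pixels are classified correctly simply because most pixels contain neither a nowcast nor a landslide, yet only 1% of landslide pixels are detected and Kappa stays close to zero.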

The two algorithm components were also evaluated using events from the global landslide catalog described above. Of the nine landslides identified within the four countries, eight events fell within high susceptibility areas in the global map and all nine events were categorized as high susceptibility by the regional map. By comparison, only one event exceeded the global I–D threshold while four events exceeded the regional I–D threshold. These results suggest that the I–D threshold may be the most significant limiting factor in generating accurate nowcasts within the algorithm framework and, as a result, should be more closely evaluated against other empirical or modeled triggering relationships.

Discussion

The regional susceptibility map and intensity–duration threshold were designed to present a feasible approach to near real-time landslide hazard assessment at the regional level. Because only one extreme event was considered for algorithm development, this regional framework serves as one plausible scenario for landslide hazard evaluation and is not representative of all landslide-triggering rainfall events.

Comparison of the global and regional algorithm frameworks suggests that the regionally focused input parameters and I–D threshold may serve as a more realistic approach to landslide ‘nowcasting’; however, there are several additional factors that must be considered when making a framework such as this operational. First, obtaining adequate surface information, including geologic, geomorphologic, and hydrologic information, is extremely challenging. This issue is particularly relevant when the analysis is performed over larger study areas, which require both comprehensive and consistent surface observable data. This analysis also assumes that the landslide-controlling factors are mutually independent in the frequency ratio analysis. In reality, this assumption rarely holds and could produce an overestimation of susceptibility and high autocorrelation values, particularly in higher susceptibility classes (Guinau et al. 2005).
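The effect of the independence assumption can be illustrated with a minimal frequency ratio sketch. It assumes the common bivariate form, in which the ratio for a factor class is the share of landslide pixels in that class divided by the share of area in that class, and the susceptibility index is the sum of the ratios over all factors. The function names and the toy grids are hypothetical, and the exact index construction in this study may differ.

```python
import numpy as np


def frequency_ratio(class_map, landslide_mask):
    """Frequency ratio per class: landslide share divided by area share."""
    class_map = np.asarray(class_map)
    landslide_mask = np.asarray(landslide_mask, dtype=bool)
    total_slides = landslide_mask.sum()
    ratios = {}
    for cls in np.unique(class_map):
        in_class = class_map == cls
        slide_share = (landslide_mask & in_class).sum() / total_slides
        area_share = in_class.sum() / class_map.size
        ratios[int(cls)] = float(slide_share / area_share)
    return ratios


def susceptibility_index(class_maps, landslide_mask):
    """Sum the frequency ratios of every factor, treating factors as independent."""
    index = np.zeros(np.shape(landslide_mask), dtype=float)
    for class_map in class_maps:
        class_map = np.asarray(class_map)
        for cls, ratio in frequency_ratio(class_map, landslide_mask).items():
            index[class_map == cls] += ratio
    return index


# Toy factor map with two classes; both landslides fall in class 2.
slope_class = np.array([[1, 1, 2, 2], [1, 1, 2, 2]])
landslides = np.array([[0, 0, 1, 0], [0, 0, 0, 1]], dtype=bool)

print(frequency_ratio(slope_class, landslides))
# A second factor that is perfectly correlated with the first doubles the index.
print(susceptibility_index([slope_class, slope_class], landslides))
```

When two factors carry the same information, as in the last line, the summed index counts that information twice, which is one way the overestimation in higher susceptibility classes can arise.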

A second issue with the susceptibility map is that the calibration data were obtained from a single extreme rainfall event. As a result, the susceptibility map may be biased towards resolving specific landslide typologies (over 95% of the Hurricane Mitch landslide inventory was reported as shallow debris flow events) and topographic aspects resulting from the storm’s directionality. Due to the extreme nature of the hurricane rainfall, many slopes that ordinarily remain stable during seasonal rainfall events may have been preferentially affected by this event. Potential biases in the landslide susceptibility map also arise from using the Hurricane Mitch inventory for both map calibration and validation. Kirschbaum (2009) tested ten different groupings of the landslide study areas to calibrate the susceptibility maps, choosing separate study areas for validation. Results suggested that the AUC values from the map tests vary by <6%, which the authors consider to be within an acceptable confidence range for the regional results. Spatial autocorrelation of the susceptibility map and Hurricane Mitch landslide inventory may also represent a bias in the map calculation, which in some cases is unavoidable.
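For readers unfamiliar with the AUC statistic used in these map tests, the sketch below computes it directly from its rank interpretation: the probability that a randomly chosen landslide pixel has a higher susceptibility score than a randomly chosen non-landslide pixel, with ties counted as one half. The function name and the toy scores are hypothetical. The pairwise comparison is adequate for small samples but would need a rank-based formulation for full-resolution grids.

```python
import numpy as np


def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of scores."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    positives = scores[labels]    # susceptibility at landslide pixels
    negatives = scores[~labels]   # susceptibility at non-landslide pixels
    greater = (positives[:, None] > negatives[None, :]).sum()
    ties = (positives[:, None] == negatives[None, :]).sum()
    return float((greater + 0.5 * ties) / (positives.size * negatives.size))


# Toy validation sample: susceptibility index and landslide presence per pixel.
susceptibility = [1, 2, 3, 4, 5]
has_landslide = [0, 0, 1, 0, 1]
print(round(auc(susceptibility, has_landslide), 3))
```

Repeating this calculation for each calibration and validation grouping and comparing the resulting values is one way to obtain the kind of spread reported above.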

A third challenge of the existing framework is its non-physically based, empirical nature, particularly with respect to the intensity–duration threshold. Results shown in Fig. 8 demonstrate that Hurricane Mitch had anomalously high rainfall intensities compared to other rainfall-triggered landslide events recorded over the same area. While the Hurricane Mitch rainfall signatures exceeded the regional I–D threshold, only 4 of the 14 rainfall signatures exceeded the global threshold for the TMPA satellite data. By comparison, only one non-Mitch landslide exceeded the global I–D threshold and many events failed to exceed even the regional threshold. While I–D threshold curves have been widely used in the literature, the purely empirical curves lack a physical, hydrologically justifiable basis, particularly when considered as the only triggering component and over a large study area.

Research has indicated that slope stability may be accurately computed at larger spatial scales by employing more physically based soil mechanics methodologies (Baum et al. 2010). The Transient Rainfall Infiltration and Grid-based Regional Slope-stability Model (TRIGRS; Baum et al. 2008) is designed to model the timing and distribution of shallow, rainfall-induced landslides and has been modified for larger-area applications and tested within Macon County, North Carolina (Liao et al. 2010). Another prototype physical model, SLope-Infiltration-Distributed Equilibrium (SLIDE), has been tested over a 1,200 km² area in Honduras and employs simplified hypotheses on water infiltration to define a direct relationship between factor of safety and the rainfall depth on an infinite slope (Liao et al. 2011). While these model approaches are outside the scope of the current research due to the algorithm's spatial scale and the dearth of detailed soils information, they are an area of current investigation for smaller-area studies.
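To indicate what a physically based calculation involves, the sketch below evaluates a generic infinite-slope factor of safety with a pressure-head term, in the form commonly used with transient infiltration models (Iverson 2000; Baum et al. 2008). It is an illustration only and is not the SLIDE formulation; the function name, default unit weights, and input values are assumptions.

```python
import math


def factor_of_safety(slope_deg, depth_m, cohesion_kpa, friction_deg,
                     pressure_head_m, soil_unit_weight=20.0,
                     water_unit_weight=9.81):
    """Infinite-slope factor of safety at a given depth.

    Unit weights are in kN/m^3 and cohesion in kPa. Values below 1 indicate
    that driving stress exceeds resisting stress.
    """
    slope = math.radians(slope_deg)
    friction = math.radians(friction_deg)
    frictional = math.tan(friction) / math.tan(slope)
    cohesive = (
        (cohesion_kpa - pressure_head_m * water_unit_weight * math.tan(friction))
        / (soil_unit_weight * depth_m * math.sin(slope) * math.cos(slope))
    )
    return frictional + cohesive


# Hypothetical slope: stability drops as infiltration raises the pressure head.
for head_m in (0.0, 0.5, 1.0):
    fs = factor_of_safety(slope_deg=35, depth_m=2.0, cohesion_kpa=4.0,
                          friction_deg=32, pressure_head_m=head_m)
    print(f"pressure head {head_m:.1f} m -> factor of safety {fs:.2f}")
```

The inputs make the data requirement clear: cohesion, friction angle, soil depth, and pressure head must be known for every grid cell, which is the detailed soils information noted above as unavailable at the regional scale.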

Results from the algorithm testing also indicate that the choice of I–D threshold strongly dictates the algorithm's hazard assessment capability and is responsible for missed algorithm nowcasts. The 1-day global I–D threshold missed nearly all the landslide events mapped in Guatemala, El Salvador, and portions of Honduras. These missed events may have resulted from the difficulty TRMM data have in accurately resolving rainfall structures in areas influenced by orography, which can lead to underestimation or misidentification of rainfall in landslide-prone areas. However, failure to resolve mapped landslide events may also be due to the fact that a simplified cumulative satellite-derived rainfall total does not adequately account for the hydrologic conditions influencing slope instability.

Many of the non-Mitch landslide reports noted that some landslides occurred after the peak rainfall event, suggesting that antecedent moisture plays an important role in slope destabilization and must be incorporated into the algorithm framework to provide more dynamic hydrologic indicators. Studies have proposed several different models, including a simple antecedent rainfall threshold curve (Chleborad et al. 2006), an empirical Antecedent Daily Rainfall Model (Glade et al. 2000), an Antecedent Water Status Model (Crozier 1999), and relationships to normalized cumulative critical rainfall (Aleotti 2004), among others. Work is underway to incorporate antecedent soil moisture information into the algorithm.
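A simple way to represent antecedent moisture from rainfall alone is a decay-weighted sum of the preceding days' rainfall, in which older rainfall contributes less. The sketch below shows this generic form. It is not the specific formulation of any of the models cited above, and the function name, decay constant, and rainfall values are hypothetical.

```python
def antecedent_rainfall_index(daily_rain_mm, decay):
    """Decay-weighted sum of prior daily rainfall.

    daily_rain_mm[0] is the rainfall one day before the event,
    daily_rain_mm[1] two days before, and so on.
    """
    return sum(rain * decay ** days_before
               for days_before, rain in enumerate(daily_rain_mm, start=1))


# Hypothetical record for the four days preceding a landslide (mm/day).
prior_rain = [10.0, 20.0, 0.0, 5.0]
print(antecedent_rainfall_index(prior_rain, decay=0.5))
```

An index of this kind could be paired with the event-day rainfall so that a slope wetted by earlier storms requires less rainfall to reach a triggering condition, which is consistent with landslides reported after the peak rainfall.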

A final challenge of the existing algorithm framework at any level is to adequately account for exogenous factors leading to increased surface instability. At present, the algorithm framework does not account for landslides triggered by seismic activity or anthropogenic impact. Tectonic weakening of the hillslopes can destabilize the surface and failure planes, increasing the potential for a landslide during and subsequent to heavy rainfall events. Anthropogenic impact on surface instability and landslide frequency is somewhat more difficult to quantify due to the multifaceted impact development can have on slopes. Road cuts, improper building, and inadequate water drainage weaken surface materials, which serves to increase shear stresses and slope instability. While the completeness and accuracy of landslide inventories continue to be a limiting factor in algorithm validation, integrating additional indicators such as population density, proximity to road networks, and economic fragility may help to better constrain locations where populations may be at higher risk from landslide occurrences.

Despite the outlined challenges, results suggest that the regional investigation provides one plausible way to approach some of the data and resolution issues identified in the global assessment and, in this scenario, provides more realistic landslide nowcasts. Applying this empirical methodology over diverse regions is contingent on the availability of landslide inventory information and consistency of data products between regions. Remotely sensed surface data offer the homogeneity, regional consistency, and high spatial resolution necessary to make the described methodology transferable to other regions. Regional investigations may also build upon existing regional or local investigations to evaluate susceptibility, possibly using the global landslide catalog to relate landslide initiation conditions amongst regions. Ensemble regional susceptibility index models as well as climatologically based I–D thresholds may then be synthesized in order to develop a new global algorithm framework.

Conclusions

This study draws upon the recommendations outlined in Kirschbaum et al. (2009a) to develop a regional approach to near real-time hazard assessment, employing higher resolution satellite and surface datasets and a statistically derived methodology. The Hurricane Mitch case study provides a valuable opportunity to apply the bivariate frequency ratio methodology at the regional scale. Due to the vast differences in their calculation methodologies and landslide information, it is difficult to compare the global and regional algorithm strategies directly. However, results suggest that the regional map and algorithm nowcasts demonstrate some statistical skill in terms of overall accuracy and that the Kappa Index value for the regional approach is within a realistic range compared to previous studies.

While the regional approach suggests that algorithm performance accuracy may be improved when considering landslide hazard at regional scales, several challenges must be resolved in order to successfully apply the regional framework in disparate areas and scale up results to a new global framework. These include landslide inventory availability in other regions, re-evaluation of the intensity–duration thresholds and inclusion of soil moisture as a precursor to landslide initiation, and incorporation of more physically based parameters that may better predict slope instability. Future work will approach the issue of slope stability from a mechanical perspective, testing the existing empirical algorithm framework against deterministic infiltration models to determine how algorithm nowcast capabilities may be improved.

Globally consistent rainfall information for accurate and continuous monitoring of rainfall intensities is imperative for improved system performance. Future missions such as Global Precipitation Measurement (GPM; http://gpm.nasa.gov/) and Soil Moisture Active & Passive (SMAP; http://smap.jpl.nasa.gov/) set the stage for improved rapid assessment of landslide-prone areas through more accurate and consistent precipitation and soil moisture status information. These future products, along with many existing global satellite datasets, provide the necessary tools to advance the prototype algorithm framework and improve near real-time landslide hazard assessment capabilities at regional and global scales.

Notes

The near real-time algorithm nowcasts and the global landslide catalogs are available at http://trmm.gsfc.nasa.gov/publications_dir/potential_landslide.html.

References

Ahmad R (2003) Developing early warning systems in Jamaica: rainfall thresholds for hydrological hazards. In: National Disaster Management Conference, Ocho Rios, St. Ann, Jamaica

Aleotti P (2004) A warning system for rainfall-induced shallow failures. Eng Geol 73:247–265

Baeza C, Lantada N, Moya J (2009) Validation and evaluation of two multivariate statistical models for predictive shallow landslide susceptibility mapping of the Eastern Pyrenees (Spain). Environ Earth Sci. doi:10.1007/s12665-009-0361-5

Batjes NH (2000) Global data set of derived soil properties, 0.5-degree grid (ISRIC-WISE). Oak Ridge National Laboratory Distributed Active Archive Center

Baum RL, Savage WZ, Godt JW (2008) TRIGRS–a Fortran program for transient rainfall infiltration and grid-based regional slope-stability analysis, Version 2.0. U.S. Geological Survey, Open-File, pp 1–75

Baum RL, Godt JW, Savage WZ (2010) Estimating the timing and location of shallow rainfall induced landslides using a model for transient, unsaturated infiltration. J Geophys Res 115(F03013):26. doi:10.1029/2009JF001321

Bucknam RC, Coe JA, Chavarría MM, Godt JW, Tarr AC, Bradley L, Rafferty S, Hancock D, Dart RL, Johnson ML (2001) Landslides triggered by Hurricane Mitch in Guatemala—inventory and discussion. U.S. Geological Survey Open-File Report 01-443, pp 1–40

Caine N (1980) The rainfall intensity: duration control of shallow landslides and debris flows. Geogr Ann 62:23–27

Can T, Nefeslioglu HA, Gokceoglu C, Sonmez H, Duman TY (2005) Susceptibility assessments of shallow earthflows triggered by heavy rainfall at three catchments by logistic regression analyses. Geomorphology 72:250–271

Chleborad AF, Baum RL, Godt JW (2006) Rainfall thresholds for forecasting landslides in the Seattle, Washington, Area-Exceedance and Probability. U.S. Geological Survey Open-File Report 2006-1064

Coe JA, Godt JW, Baum RL, Bucknam RC, Michael JA (2004) Landslide susceptibility from topography in Guatemala. In: Lacerda WA, Ehrlich M, Fontura SA, Sayão ASF (eds) Landslides: evaluation and stabilization. Taylor & Francis Group, London, pp 69–78

Cohen J (1960) A coefficient of agreement for nominal scales. Educ Psychol Meas 20:37–46

Crozier MJ (1999) Prediction of rainfall-triggered landslides: a test of the antecedent water status model. Earth Surf Process Landf 24:825–833

Dai FC, Lee CF, Tham LG, Ng KC, Shum WL (2004) Logistic regression modelling of storm-induced shallow landsliding in time and space on natural terrain of Lantau Island, Hong Kong. Bull Eng Geol Environ 63:315–327

Devoli G, Strauch W, Chávez G, Høeg K (2007) A landslide database for Nicaragua: a tool for landslide-hazard management. Landslides 4:163–176

EM-DAT (2008) The OFDA/CRED International Disaster Database. Université Catholique de Louvain. http://www.emdat.be/

FAO/UNESCO (2003) Digital soil map of the world and derived soil properties. Food and Agriculture Organization. http://www.fao.org/ag/agl/agll/dsmw.htm

Farr TG, Rosen PA, Caro E et al (2007) The shuttle radar topography mission. Rev Geophys 45. doi:10.1029/2005RG000183

Fawcett T (2006) An introduction to ROC analysis. Pattern Recognit Lett 27:861–874

Friedl MA, McIver DK, Hodges JC et al (2002) Global land cover mapping from MODIS: algorithms and early results. Remote Sens Environ 83:287–302

Glade T, Crozier M, Smith P (2000) Applying probability determination to refine landslide-triggering rainfall thresholds using empirical “Antecedent Daily Rainfall Model”. Pure Appl Geophys 157:1059–1079

Guinau M, Pallàs R, Vilaplana JM (2005) A feasible methodology for landslide susceptibility assessment in developing countries: a case-study of NW Nicaragua after Hurricane Mitch. Eng Geol 80:316–327. doi:10.1016/j.enggeo.2005.07.001

Guzzetti F, Carrara A, Cardinali M, Reichenbach P (1999) Landslide hazard evaluation: a review of current techniques and their application in a multi-scale study, Central Italy. Geomorphology 31:181–216

Guzzetti F, Galli M, Reichenbach P, Ardizzone F, Cardinali M (2006a) Landslide hazard assessment in the Collazzone area, Umbria, Central Italy. Nat Hazard Earth Syst Sci 6:115–131

Guzzetti F, Reichenbach P, Ardizzone F, Cardinali M, Galli M (2006b) Estimating the quality of landslide susceptibility models. Geomorphology 81:166–184. doi:10.1016/j.geomorph.2006.04.007

Guzzetti F, Peruccacci S, Rossi M, Stark CP (2008) The rainfall intensity-duration control of shallow landslides and debris flows: an update. Landslides 5:3–17

Harp EL, Hagaman KW, Held MD, Mckenna JP (2002) Digital inventory of landslides and related deposits in Honduras triggered by Hurricane Mitch. U.S. Geological Survey Open-File Report 2004-1348, pp 1–22

Hoehler FK (2000) Bias and prevalence effects on kappa viewed in terms of sensitivity and specificity. J Clin Epidemiol 53:499–503

Hong Y, Adler R, Huffman G (2006) Evaluation of the potential of NASA multi-satellite precipitation analysis in global landslide hazard assessment. Geophys Res Lett 33:1–5. doi:10.1029/2006GL028010

Hong Y, Adler R, Huffman G (2007) Use of satellite remote sensing data in the mapping of global landslide susceptibility. Nat Hazard 43:245–256

Huffman GJ, Adler RF, Bolvin DT, Gu G, Nelkin EJ, Bowman KP, Hong Y, Stocker EF, Wolff DB (2007) The TRMM multisatellite precipitation analysis (TMPA): quasi-global, multiyear, combined-sensor precipitation estimates at fine scales. J Hydrometeorol 8:38–55

INTER (1995) Mapa Geológico Minero de la Republica de Nicaragua a escala 1:500.000, personal communication

Iverson RM (2000) Landslide triggering by rain infiltration. Water Resour Res 36:1897–1910

Kirschbaum DB (2009) Multi-scale landslide hazard and risk assessment: a modeling and multivariate statistical approach. Doctoral Dissertation, Columbia University

Kirschbaum DB, Adler R, Hong Y, Lerner-Lam A (2009a) Evaluation of a preliminary satellite-based landslide hazard algorithm using global landslide inventories. Nat Hazard Earth Syst Sci 9:673–686

Kirschbaum DB, Adler R, Hong Y, Hill S, Lerner-Lam A (2009b) A global landslide catalog for hazard applications: method, results, and limitations. Nat Hazard 52:561–575

Kojima H, Chung CJ, van Westen CJ (2000) Strategy on the landslide type analysis based on the expert knowledge and the quantitative prediction model. Int Arch Photogramm Remote Sens XXXIII:701–708

Kumar SV, Peters-Lidard CD, Tian Y, Houser PR, Geiger J, Olden S, Lighty L, Eastman JL, Doty B, Dirmeyer P, Adams J, Mitchell K, Wood EF, Sheffield J (2006) Land information system—an interoperable framework for high resolution land surface modeling. Environ Model Softw 21:1402–1415

Landis JR, Koch GG (1977) The measurement of observer agreement for categorical data. Biometrics 33:159–174

Larsen MC, Simon A (1993) A rainfall intensity-duration threshold for landslides in a humid-tropical environment, Puerto Rico. Geogr Ann 75:13–23

Lee S, Pradhan B (2007) Landslide hazard mapping at Selangor, Malaysia using frequency ratio and logistic regression models. Landslides 4:33–41. doi:10.1007/s10346-006-0047-y

Lee S, Ryu J-H, Kim I-S (2007) Landslide susceptibility analysis and its verification using likelihood ratio, logistic regression, and artificial neural network models: case study of Youngin, Korea. Landslides 4:327–338. doi:10.1007/s10346-007-0088-x

Liao Z, Hong Y, Kirschbaum D, Adler RF, Gourley JJ, Wooten R (2010) Evaluation of TRIGRS (transient rainfall infiltration and grid-based regional slope-stability analysis)’s predictive skill for hurricane-triggered landslides: a case study in Macon County, North Carolina. Nat Hazard. doi:10.1007/s11069-010-9670-y

Liao Z, Hong Y, Kirschbaum D, Liu C (2011) Assessment of shallow landslides from Hurricane Mitch in Central America using a physically based model. Environ Earth Sci (accepted)

Menéndez-Duarte R, Marquínez J, Devoli G (2003) Slope instability in Nicaragua triggered by Hurricane Mitch: distribution of shallow mass movements. Environ Geol 44:290–300

Nadim F, Kjekstad O, Peduzzi P, Herold C, Jaedicke C (2006) Global landslide and avalanche hotspots. Landslides 3:159–173. doi:10.1007/s10346-006-0036-1

Nadim F, Cepeda J, Sandersen F, Jaedicke C, Heyerdahl H (2009) Prediction of rainfall-induced landslides through empirical and numerical models. In: Rainfall-induced landslides: mechanisms, monitoring techniques and nowcasting models for early-warning systems. First Italian Workshop on Landslides, Naples, pp 1–10

Peters-Lidard CD, Houser PR, Tian Y et al (2007) High-performance Earth system modeling with NASA/GSFC’s land information system. Innov Syst Softw Eng 3:157–165

PROARCA/CAPAS (1998) Central American vegetation/land cover classification and conservation status. http://sedac.ciesin.columbia.edu/conservation/proarca.html. Accessed Sept 2007

Rabus B, Eineder M, Roth A, Bamler R (2003) The shuttle radar topography mission—a new class of digital elevation models acquired by spaceborne radar. ISPRS J Photogramm Remote Sens 57:241–262

Rodriguez CE, Torres AT, León EA (2006) Landslide Hazard in El Salvador. In: Nadim F, Pöttler R, Einstein H, Klapperich H, Kramer S (eds) ECI conference on geohazards Lillehammer, Paper 6, pp 10

SNET/MARN (2008) El Salvador geology, G. Molina. Centro de Investigaciones Geotecnicas, MOP, Universidad Politecnica de Madrid, Sistema de Referencia Territorial

Van Den Eeckhaut M, Vanwalleghem T, Poesen J, Govers G, Verstraeten G, Vandekerckhove L (2006) Prediction of landslide susceptibility using rare events logistic regression: a case-study in the Flemish Ardennes (Belgium). Geomorphology 76:392–410

Wieczorek GF (1996) Landslide triggering mechanisms. In: Turner KA, Schuster RL (eds) Landslides: investigations and mitigation. TRB Special Transportation Research Board, Washington, D.C., pp 76–88

Wieczorek GF, Newell WL, Chirico PG, Gohn GS, Nardini R, Putbrese T (1998) Introduction and metadata for preliminary digital geologic map database for Honduras. U.S. Geological Survey Open-file Report 98-774

Acknowledgments

This research was supported by an appointment to the NASA Postdoctoral Program at the Goddard Space Flight Center, administered by Oak Ridge Associated Universities through a contract with NASA. The authors thank Yudong Tian for his computing help and support. Thanks also go to Chiara Lepore for her help in evaluating susceptibility methodologies. Thank you to those at the U.S. Geological Survey for providing the landslide inventory data used in this analysis and to Graziella Devoli, Giovanni Molina, Estuardo Lira and Gerald Wieczorek for providing lithology information within the evaluated areas. The global landslide algorithm studies are supported by NASA’s Applied Sciences program. The contributions of Dr. Peters-Lidard and Dr. Kumar were supported by a NASA Advanced Information System Technology program project (AIST-08-0077, PI: Christa D Peters-Lidard). This support is gratefully acknowledged.

About this article

Cite this article

Kirschbaum, D.B., Adler, R., Hong, Y. et al. Advances in landslide nowcasting: evaluation of a global and regional modeling approach. Environ Earth Sci 66, 1683–1696 (2012). https://doi.org/10.1007/s12665-011-0990-3

DOI: https://doi.org/10.1007/s12665-011-0990-3