Abstract

Emotion recognition from electroencephalography (EEG) is a better choice for people with facial deformity, such as burned or paralyzed faces, for whom facial data is inaccurate or unavailable. This research exploits the image processing capability of the convolutional neural network (CNN) and proposes a CNN model to classify different emotions from scalogram images of EEG data. The scalogram images are obtained by applying the continuous wavelet transform (CWT) to the EEG signals. The proposed model is subject independent: the objective is to extract emotion-specific features from EEG data irrespective of the source of the data. The model is evaluated on two public benchmark databases, DEAP and SEED. To show that the model is purely subject independent, a cross-database criterion is also used for evaluation. The performance evaluation experiments show that the proposed model is comparable to existing methods in terms of emotion classification accuracy.


1 Introduction

EEG is a complex time series, and researchers use it frequently for several applications because it is recorded noninvasively. Emotion recognition is a subtask of affective computing, which studies how computers process and recognize emotions. Emotions assist us in decision making, reasoning, planning and other mental tasks. An emotionally balanced person with plenty of positive emotions may have a more successful life than an emotionally unbalanced one. Moreover, negative emotions may affect one’s decision making as well as health. Emotion recognition has several applications, for example in e-learning, the entertainment industry, adaptive advertisement, adaptive games, and emotion-enabled music players.

Researchers use EEG to recognize emotions because an EEG cap is portable, which makes it convenient for various applications of emotion recognition. Several attempts have been made to develop an emotion recognition system using deep learning. The EEG data recorded from the brain is highly dynamic in nature, so the model processing this data should be nonlinear. In such cases, a recurrent neural network (RNN) is one option for the classifier, as it is a popular choice for processing time series data. Li et al. (2018) proposed an LSTM-based bi-hemisphere domain adversarial neural network for emotion recognition. The main drawback of the RNN is that it cannot remember long sequences because of the vanishing or exploding gradient problem. Therefore, the convolutional neural network (CNN), which is mostly used for image-related tasks, has also proved to be a good choice for emotion recognition. In a CNN-based work, Song et al. (2018) proposed a dynamical graph CNN. They worked on two databases, SEED and DREAMER. On SEED they achieved an average accuracy of 90.4% for subject dependent emotion recognition and 79.95% for subject independent emotion recognition.

It is difficult to create a model that is applicable across persons since there are individual differences in EEG (Jayaram et al. 2016). Therefore, many works in the literature use a subject dependent approach. In this approach, data from some sessions of a subject is used to train the classifier, and data from a different session of the same subject is used to test it. For the subject dependent case, good accuracy has been achieved on the DEAP database. Moon et al. (2018) used brain connectivity features with a CNN on the DEAP database. They considered three connectivity features (Pearson correlation coefficient, phase lag index and phase locking value) and fed each separately into the CNN. Salama et al. (2018) represented EEG data from the DEAP database in three-dimensional space and used a 3D CNN as the classifier. They reported an accuracy of 87.44% for valence and 88.49% for arousal using k-fold cross validation with k set to 5. Li et al. (2016) used scalogram images with a hybrid classification model combining a CNN and an LSTM. They reported 5-fold cross validation accuracy of 74.12% for arousal and 72.06% for valence, so this work was also subject dependent. A few authors worked on each subject individually and then reported the average classification accuracy, as in Wang et al. (2011), who worked on self-created data and obtained 66.51% accuracy for four emotions with only five subjects. Liang et al. (2019) used a clustering-based approach to classify emotions from EEG. On the DEAP database they obtained an accuracy of 61.02% for arousal and 52.58% for valence under the subject dependent criterion.

Lakhan et al. (2019) created and worked on their own database to examine the suitability of OpenBCI, compared with costly EEG amplifiers, for data acquisition. Moreover, they validated their method on other available benchmark databases. On the DEAP data they reported a mean valence accuracy of 57.60% and a mean arousal accuracy of 62.00%.

In the subject independent approach, the training data comes from one set of subjects and the test data comes from entirely different subjects. For the subject independent case, very few works have been reported, and with limited classification accuracy. Rayatdoost and Soleymani (2018) worked with a subject independent approach on the DEAP database and obtained an accuracy of 59.22% for valence and 55.70% for arousal. Fazli et al. (2009) constructed an ensemble classifier using temporal and spatial filters for EEG data related to brain-computer interfaces. Li et al. (2019) proposed a transfer learning approach for fast deployment of emotion recognition models. They improved the classification accuracy by 12.72% compared to the non-transfer-learning approach on SEED data.

Statistical features from the time domain, the frequency domain, or both are used to represent EEG. The short-time Fourier transform (Ackermann et al. 2016), the CWT (Li et al. 2016) and the discrete wavelet transform (Murugappan et al. 2010; Pandey and Seeja 2019a, b) are some of the techniques used for EEG signal analysis. The Fourier transform gives the phase and amplitude of the sinusoids in a signal, whereas wavelet coefficients give the correlation between the wavelet function and the signal at a particular time. A few works use the fractal dimension (Sourina and Liu 2011) as a feature of EEG signals. Fractals are based on the concept of self-similarity. A higher-order-crossing (HOC) based feature vector was developed (Petrantonakis and Hadjileontiadis 2009) to overcome the problem of subject-to-subject variability in EEG signals corresponding to emotions. HOC is a time series analysis method that counts the zero crossings of the filtered EEG signal; the zero-crossing count measures the oscillating property of the signal. Some works (Mert and Akan 2018) have extracted intrinsic mode functions (IMFs) from EEG, and several statistical or power spectral density based features are computed from these IMFs (Pandey and Seeja 2019c).

People who have burnt or paralyzed faces are more prone to emotional disorders, which may lead to various psychological problems. Therefore, continuous monitoring of emotions is required. Moreover, in situations where labeled data of a particular subject (the subject dependent case) is not available for training the model, as in the case of a paralyzed person, a subject independent model is the only way to identify emotions. Therefore, this paper proposes subject independent EEG-based emotion recognition for people with facial deformity. The major contributions of this research are:

- A pure subject independent emotion recognition system is proposed using the cross-database criterion.

- An optimized CNN model is proposed for EEG-based emotion recognition that takes scalogram images of EEG as input and classifies emotions.

2 Materials and methods

2.1 EEG

EEG captures and records the electrical activity of the brain along the scalp. There are primarily five EEG frequency bands: δ (< 4 Hz), θ (≥ 4 and < 8 Hz), α (≥ 8 and ≤ 14 Hz), β (> 14 and ≤ 40 Hz) and γ (between 40 and 100 Hz). The main difficulty with EEG is its poor spatial resolution. Subjects wear an electrode cap while watching the stimuli for a predetermined amount of time, and the EEG signals are recorded using EEG recording software. The electrodes in the cap should be placed according to the international 10/20 electrode placement system (Klem et al. 1999).
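The band boundaries listed above can be expressed as a small lookup. This is only an illustrative sketch: the function name `eeg_band` is hypothetical, and a frequency of exactly 40 Hz is assigned to β because the text gives β as "≤ 40".

```python
def eeg_band(freq_hz: float) -> str:
    """Map a frequency in Hz to an EEG band name using the boundaries in the text."""
    if freq_hz < 0 or freq_hz > 100:
        raise ValueError("frequency outside the 0-100 Hz range covered by the text")
    if freq_hz < 4:
        return "delta"   # below 4 Hz
    if freq_hz < 8:
        return "theta"   # 4 Hz up to, but not including, 8 Hz
    if freq_hz <= 14:
        return "alpha"   # 8 Hz to 14 Hz inclusive
    if freq_hz <= 40:
        return "beta"    # above 14 Hz up to 40 Hz inclusive
    return "gamma"       # above 40 Hz up to 100 Hz
```

For example, `eeg_band(10)` falls in the alpha band, while `eeg_band(14.5)` falls in the beta band.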

2.2 Valence arousal model

Researchers describe the emotional space using two models (Mauss and Robinson 2009): the discrete model and the dimensional model. The discrete model is based on fundamental emotions: happiness, sadness, fear, disgust, anger and surprise. These are called “fundamental” or “basic” emotions because they are present from birth. In the dimensional model, proposed in (Lang 1995), emotions are represented as discrete points in the valence-arousal space, where valence and arousal both range from 1.0 to 9.0 on a discrete point scale, as shown in Fig. 1.

The main challenge in devising a subject independent emotion recognition system is the variation in EEG among individuals. That is, two persons respond emotionally in different ways to the same stimulus. Moreover, for the same emotion (say joy) but different stimuli (a sunrise over hills and a smiling baby), the levels of valence and arousal will be different.

2.3 Databases

This work uses two databases to recognize emotions. Moreover, a cross-database criterion is also assessed for subject independent emotion recognition using these two databases.

2.3.1 DEAP Database

The DEAP database (Koelstra et al. 2011) is selected for the proposed work. Videos of 1 min length were shown to the participants, who rated each on the valence, arousal, dominance and like/dislike scales. While watching the videos they wore an electrode cap in which the electrodes were placed according to the 10–20 electrode placement system (Klem et al. 1999). Emotions are modeled on the valence/arousal scale (Russell 1980) and rating is done using the self-assessment manikin (SAM) (Bradley and Lang 1994). The statistics of the database are shown in Table 1.

The database is already preprocessed, and the artifacts were removed by its authors. A blind source separation technique was used to remove eye artifacts. The delta band, which is the lowest frequency band of the EEG signal, is removed from the signals in the database. The signals are down-sampled to 128 Hz.

2.3.2 SEED database

The SEED database (Zheng and Lu 2015) was recorded in three sessions. Each session contains 15 videos (trials), so the three sessions give 45 trials in the database. Signals were downsampled to 200 Hz. Other statistics are shown in Table 2.

2.4 Scalogram

Continuous wavelet transform (CWT) decomposition is useful for evaluating the distribution of signal energy in a time-scale representation. This representation of spectral parameters is called a scalogram. In the proposed study, the scalogram (Bolós and Benítez 2014) representation of the CWT of EEG is the input given to the CNN for classification. The CWT of a time series/signal \( f(t) \) at time u and scale s can be obtained using Eq. (1).

Here \( \Psi_{u,s} \) is the wavelet function. The scalogram \( S_c \) of the signal \( f(t) \) can be determined by Eq. (2).

The scalogram \( S_c \) represents the energy of \( f_{w} \) at scale s. It shows which scales/frequencies of a signal contribute the most to the total energy of the signal. In other words, the time-varying energy content over a span of frequencies can be obtained by plotting the squared modulus of the wavelet transform as a function of frequency and time to create a scalogram (Kareem and Kijewski 2002). Figure 2 shows a sample EEG signal from the DEAP database and its scalogram image.
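For reference, the standard textbook forms of the continuous wavelet transform and of the scalogram in the sense of Bolós and Benítez (2014) can be written as follows. This is a sketch under assumptions rather than a reproduction of Eqs. (1) and (2): it assumes that \( f_{w}(u,s) \) denotes the wavelet transform of \( f(t) \), that \( \Psi_{u,s} \) is the mother wavelet \( \Psi \) shifted by u and dilated by s, and that the overline denotes complex conjugation.

\[ f_{w}(u,s) = \int_{-\infty}^{+\infty} f(t)\, \overline{\Psi_{u,s}(t)}\, dt, \qquad \Psi_{u,s}(t) = \frac{1}{\sqrt{s}}\, \Psi\!\left( \frac{t-u}{s} \right) \]

\[ S_c(s) = \left( \int_{-\infty}^{+\infty} \left| f_{w}(u,s) \right|^{2} du \right)^{1/2} \]

Under these definitions, the squared modulus \( |f_{w}(u,s)|^{2} \) plotted over time u and scale s gives the scalogram image, and \( S_c(s) \) summarizes its energy at a single scale.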

2.5 CNN

A CNN is a deep learning technique that provides sparse connectivity as well as weight sharing. A CNN consists mainly of three types of layers: convolution, pooling and fully connected. The convolution layer is the backbone of the CNN. A learnable filter is convolved across the input volume. Weight sharing is achieved by applying the same filter at different positions of the image. This results in fewer parameters and hence a faster network. The pooling operation can be average pooling or max pooling.
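The effect of weight sharing on the parameter count can be illustrated with a short calculation. The sizes used here (a 91 × 91 input, sixteen 4 × 4 filters) match values reported elsewhere in this paper, but the single input channel and the comparison against a dense layer with 16 units are assumptions made purely for illustration.

```python
def conv_params(kernel_h: int, kernel_w: int, in_channels: int, n_filters: int) -> int:
    """Learnable parameters of a convolution layer: shared weights plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * n_filters


def dense_params(n_inputs: int, n_units: int) -> int:
    """Learnable parameters of a fully connected layer: one weight per input plus a bias, per unit."""
    return (n_inputs + 1) * n_units


# The convolution count does not depend on the image size, because the same
# filter weights are reused at every position of the image.
conv_count = conv_params(4, 4, in_channels=1, n_filters=16)
dense_count = dense_params(91 * 91, n_units=16)
```

With these illustrative sizes the convolution layer has 272 parameters, whereas a dense layer with 16 units over the same 91 × 91 input would need 132,512.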

2.6 Proposed methodology

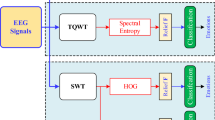

In this work, deep learning is used to detect emotions from EEG data. The EEG signals were recorded while the subjects watched stimuli, and artifacts were removed by the authors of the databases. The signals from the EEG database are converted into scalogram images using the continuous wavelet transform. These images give suitable textural information about the signal from which better features can be computed. A scalogram is a pictorial representation of the CWT and gives time–frequency information about the signal. It represents the wavelet coefficients, where the x axis corresponds to time and the y axis to scale. Not all of the full scalogram image carries information about the EEG signal, so it is cropped before being fed into the CNN classifier. Cropping reduces the size of the image and hence saves computation. Moreover, it is observed that the cropped image gives better classification accuracy than the full image. Figure 3 represents the complete approach used for the proposed study. This work is subject independent: for the DEAP database, data from 30 subjects is used for training and data from 2 subjects for testing at a time, for both valence and arousal. For SEED, data from 12 subjects is used to train the CNN model and data from 3 subjects to test it.
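The signal-to-image step can be sketched with a naive, pure-Python CWT. This is not the implementation used in this work: it substitutes a complex Morlet wavelet for simplicity, evaluates the transform by direct summation (slow, suitable only for short signals), and the crop bounds passed to `crop` are hypothetical, since the crop region is not specified in the text.

```python
import cmath
import math


def morlet(t: float, w0: float = 6.0) -> complex:
    """Complex Morlet mother wavelet with centre frequency w0 (rad per unit time)."""
    return math.pi ** -0.25 * cmath.exp(1j * w0 * t) * math.exp(-0.5 * t * t)


def cwt_scalogram(signal, scales, dt=1.0):
    """Return |W(u, s)|^2 as a list of rows: one row per scale, one column per time shift."""
    n = len(signal)
    rows = []
    for s in scales:
        row = []
        for u in range(n):
            acc = 0j
            for k in range(n):
                t = (k - u) * dt / s
                if abs(t) > 5.0:
                    continue  # the Gaussian envelope is negligible this far out
                acc += signal[k] * morlet(t).conjugate()
            coeff = acc * dt / math.sqrt(s)  # discretised integral with 1/sqrt(s) scaling
            row.append(abs(coeff) ** 2)
        rows.append(row)
    return rows


def crop(image, top, bottom, left, right):
    """Keep rows top..bottom-1 and columns left..right-1 of a row-major image."""
    return [row[left:right] for row in image[top:bottom]]
```

As a usage note, a 10 Hz sine sampled at 128 Hz concentrates its energy in the row whose scale is `6.0 / (2 * math.pi * 10)`, the scale at which this Morlet wavelet is centred on 10 Hz.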

In order to implement a pure subject independent approach and to obtain better generalization, a database independent criterion is utilized: the model is trained with one database and tested with another. Two benchmark EEG emotion databases, DEAP and SEED, are utilized for this purpose. To do so, the EEG from all frontal electrodes of DEAP and SEED is first converted into scalogram images using the CWT, and the images are distributed into three classes of emotion (positive, neutral and negative) based on valence rating.

2.6.1 Proposed CNN architecture

The proposed model comprises twelve layers in total. The first is the input layer, which takes a scalogram of size 91 × 91. It is followed by two sets of convolution, batch normalization, ReLU and max pooling layers. After that comes a fully connected layer, which is connected to all outputs of the previous layer. Convolution is a widely used operation in signal processing, image processing and other engineering and science applications. A centered convolution operation is used in this model. If I is the pixel value of the scalogram image obtained by applying the CWT to the EEG signal x, a centered convolution operation can be defined as shown in Eq. (3).

Here \( A_{ij} \) is the revised entry and \( I_{ij} \) is the center pixel of the image block through which the filter is convolved. The two-dimensional filter is of size \( m \times n \), and K is the weight assigned to the neighborhood pixels. Batch normalization is applied between the convolution and ReLU layers to normalize the feature maps produced by the convolution layer. The ReLU (rectified linear unit) layer converts each negative value to 0, and its output is fed into the pooling layer, which performs down-sampling; max pooling is used in this study. Next is the fully connected layer, which is connected to all outputs of the previous layer. Figure 4 depicts the proposed CNN architecture.
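The convolution, ReLU and max pooling steps described above can be sketched in plain Python. The form assumed here for the centered convolution is \( A_{ij} = \sum_{a}\sum_{b} K_{ab}\, I_{i+a,\,j+b} \), with the offsets a and b running symmetrically around the center pixel. Zero padding at the border, an odd-sized filter, the absence of a kernel flip (as is usual in CNN layers) and a 2 × 2 pooling window are all assumptions, and batch normalization is omitted for brevity.

```python
def centered_conv2d(image, kernel):
    """Centered 2-D filtering with zero padding; the output has the same size as the input."""
    m, n = len(kernel), len(kernel[0])
    if m % 2 == 0 or n % 2 == 0:
        raise ValueError("this sketch assumes an odd-sized kernel so the centre is well defined")
    rows, cols = len(image), len(image[0])
    half_m, half_n = m // 2, n // 2
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for a in range(-half_m, half_m + 1):
                for b in range(-half_n, half_n + 1):
                    r, c = i + a, j + b
                    if 0 <= r < rows and 0 <= c < cols:  # pixels outside the image count as 0
                        acc += kernel[a + half_m][b + half_n] * image[r][c]
            out[i][j] = acc
    return out


def relu(feature_map):
    """Replace every negative value with 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2x2(feature_map):
    """Down-sample by taking the maximum of each non-overlapping 2 x 2 block."""
    rows = len(feature_map) // 2 * 2
    cols = len(feature_map[0]) // 2 * 2
    return [
        [max(feature_map[i][j], feature_map[i][j + 1],
             feature_map[i + 1][j], feature_map[i + 1][j + 1])
         for j in range(0, cols, 2)]
        for i in range(0, rows, 2)
    ]
```

Chaining the three functions as `max_pool2x2(relu(centered_conv2d(image, kernel)))` mirrors one convolution, ReLU and pooling stage of the layer sequence.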

A simple CNN architecture is more appropriate for the proposed work than pre-trained models such as AlexNet or GoogLeNet. The task here is to classify two or three classes of emotion, which requires a less complex CNN architecture than models pre-trained on ImageNet with a thousand classes of images. Moreover, the domain of the training data is entirely different: pre-trained CNNs are trained on images of everyday objects, so domain adaptation (transfer learning) would not be accurate for scalogram images. Hence, in the proposed CNN model, two sets of convolution, batch normalization, ReLU and max pooling layers are used to recognize emotions.

3 Implementation

The proposed methodology is implemented on a system with 12 GB RAM and an Intel(R) Core(TM) i5 processor running at 2.50 GHz, using a single-GPU environment. This work is implemented in MATLAB R2018b.

In the proposed work, EEG signals are converted into scalogram images using the CWT, and these images are fed into the CNN. Since a CNN is a deep learning approach, it performs the entire feature engineering itself. When implementing the CWT, the elementary decomposition steps are determined by the number of voices per octave, so the parameters for data creation were the voices per octave (VOP) and the mother wavelet. VOP ranged from 12 to 48, and the best observed value for EEG emotion recognition is 38. Three mother wavelets were analyzed: Morse, Bump and Amor (analytic Morlet). The Morse wavelet gave the best results. The CWT analyzes a signal over a large number of frequency components, and the resulting time–frequency representation of the signal is termed a scalogram. To generate the scalogram, a filter bank is first created whose signal length is the length of the EEG from one electrode: 7680 data values for the DEAP database and 8000 for the SEED database. The filter bank is then used to compute the continuous wavelet transform of the signal. The time-bandwidth product is kept at 60. The CWT does not use a constant window size: it uses smaller scales for high frequencies and larger scales for low frequencies. The CWT filter bank uses 120 scales to obtain the scalogram of one EEG signal, with a minimum value of 0.689988916500468 and a maximum value of 666.893121863546.
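How the number of voices per octave determines the set of scales can be sketched as a geometric progression. The helper name `geometric_scales` is hypothetical, and the progression \( s_k = s_{\min} \cdot 2^{k/v} \) is the usual convention for CWT filter banks rather than something stated in the text. The value of 12 voices per octave in the usage lines is an illustrative choice from the explored range of 12 to 48.

```python
def geometric_scales(s_min: float, voices_per_octave: int, n_scales: int):
    """Scales spaced so that each octave (doubling of scale) contains `voices_per_octave` steps."""
    return [s_min * 2.0 ** (k / voices_per_octave) for k in range(n_scales)]


# Illustrative inputs: the smallest scale and the scale count quoted in the text,
# with 12 voices per octave assumed for the sake of the example.
scales = geometric_scales(0.689988916500468, voices_per_octave=12, n_scales=120)
largest = scales[-1]
```

With these particular inputs, `largest` evaluates to roughly 666.89, the same order as the largest scale quoted above; more voices per octave would give a finer spacing over a narrower range for the same number of scales.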

The activation function used at the fully connected layer is softmax. With the proposed CNN model, several parameters were evaluated at different values: the number of filters, filter size, number of convolutional layers, number of epochs, initial learning rate, bias learning rate and batch size. Good results are obtained with a batch size of 100, a filter size of 4 × 4, 16 filters, 2 convolution layers, an initial learning rate of 0.0001 and a bias learning rate of 0.001, with the number of epochs varying between cases.
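The spatial size of the feature maps through the two convolution and pooling stages can be traced with a short calculation. The 91 × 91 input and 4 × 4 filters come from the text; a convolution stride of 1 with no padding and 2 × 2 max pooling with stride 2 are assumptions, since these settings are not reported.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output width/height of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, window: int = 2, stride: int = 2) -> int:
    """Output width/height of a pooling layer along one spatial dimension."""
    return (size - window) // stride + 1


def feature_map_sizes(input_size: int = 91, kernel: int = 4, n_blocks: int = 2):
    """Sizes after the input and after each convolution and pooling layer, in order."""
    sizes = [input_size]
    for _ in range(n_blocks):
        sizes.append(conv_out(sizes[-1], kernel))
        sizes.append(pool_out(sizes[-1]))
    return sizes


sizes = feature_map_sizes()
flattened = sizes[-1] * sizes[-1] * 16  # 16 filters in the last convolution layer
```

Under these assumptions the sizes run 91, 88, 44, 41, 20, so the fully connected layer would receive 20 × 20 × 16 = 6400 values; different stride or padding settings would change these figures.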

Three different experiments were carried out to demonstrate the proposed subject independent model. In experiments 1 and 2, data from different subjects were used for training and testing, and in experiment 3, different databases were used for training and testing.
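A subject-wise split of the kind used in experiments 1 and 2 can be sketched as follows. The function names, the random selection of held-out subjects and the `"subject"` key of each sample are hypothetical; the text does not state how the test subjects were chosen.

```python
import random


def split_by_subject(subject_ids, n_test, seed=0):
    """Partition subject IDs into disjoint train and test sets so no subject appears in both."""
    ids = sorted(set(subject_ids))
    if not 0 < n_test < len(ids):
        raise ValueError("n_test must leave at least one subject on each side")
    rng = random.Random(seed)  # fixed seed for a reproducible split
    rng.shuffle(ids)
    return set(ids[n_test:]), set(ids[:n_test])


def select(samples, subjects):
    """Keep only the samples recorded from the given subjects."""
    return [s for s in samples if s["subject"] in subjects]


# DEAP-style usage: 32 subjects, 2 held out for testing.
train_subjects, test_subjects = split_by_subject(range(1, 33), n_test=2)
```

The same helper covers the SEED setting by calling `split_by_subject(range(1, 16), n_test=3)`, which leaves 12 subjects for training.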

3.1 Experiment 1: emotion recognition with DEAP database

The DEAP database was created using 40 videos (stimuli), and the EEG data was collected from 32 electrodes. In this experiment, two cases were considered. In the first case, all frontal electrodes, namely Fp1, Fp2, F3, F4, F7, F8, FC5, FC6, FC1 and FC2, are used to obtain the scalograms using the CWT, since the frontal brain is related to emotions (Alarcao and Fonseca 2017). Data from 30 subjects is used to train the classifier and data from two subjects to test it. In the second case, all 32 electrodes are used to obtain the scalograms. The data size is shown in Table 3.

The emotions are classified into high/low valence and high/low arousal. To cope with the class imbalance problem in both the valence and arousal classes, the valence and arousal thresholds are adjusted. For arousal, the threshold obtained is 5.23: if the arousal rating is less than or equal to 5.23, the corresponding EEG is placed in the low arousal class, otherwise in the high arousal class. Similarly, the threshold for valence is 5.04: if the participant’s rating is less than or equal to 5.04, the corresponding EEG belongs to the negative (low) valence class, and if it is greater than 5.04, the corresponding EEG belongs to the positive (high) valence class.

For three-emotion classification, the valence ratings are divided into three parts: ratings of one to three belong to the negative class, four to six to the neutral class, and seven to nine to the positive class. With these ranges, however, the data distribution across the three classes was highly imbalanced. To deal with this problem, the rating ranges were adjusted so that the data distribution becomes nearly balanced. The updated valence ranges are 1.0 to 4.1 for the negative class, greater than 4.1 to less than 6.7 for the neutral class, and 6.7 to 9.0 for the positive class. The results are shown in Table 4.
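The labelling rules of this experiment can be written as two small functions. The thresholds (5.23 for arousal, 5.04 for valence, and 4.1 and 6.7 for the three-class case) are those reported above; the function and constant names are hypothetical.

```python
AROUSAL_THRESHOLD = 5.23
VALENCE_THRESHOLD = 5.04


def binary_label(rating: float, threshold: float) -> str:
    """Ratings at or below the threshold are 'low'; ratings above it are 'high'."""
    return "low" if rating <= threshold else "high"


def three_class_valence(rating: float) -> str:
    """Map a valence rating in [1.0, 9.0] to negative, neutral or positive."""
    if not 1.0 <= rating <= 9.0:
        raise ValueError("valence rating must lie between 1.0 and 9.0")
    if rating <= 4.1:
        return "negative"   # 1.0 to 4.1
    if rating < 6.7:
        return "neutral"    # greater than 4.1, less than 6.7
    return "positive"       # 6.7 to 9.0
```

For instance, a trial rated 5.04 for valence is labelled low valence by `binary_label(5.04, VALENCE_THRESHOLD)` and neutral by `three_class_valence(5.04)`.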

3.2 Experiment 2: emotion recognition with SEED database

SEED contains recordings of 4 min at 200 samples/s. In this experiment, the last 1 min of data is used. The data was recorded in three sessions, and only the first session’s data is used for the study. The data of all 15 subjects for all 15 stimuli is selected for the experiment. As with DEAP, two cases of electrode selection are considered for SEED: the 10 frontal electrodes, and all 62 electrodes. The data size is shown in Table 5.

For high/low valence classification, the data corresponding to neutral stimuli is dropped. The data of the negative class is used for low valence and that of the positive class for high valence. Table 6 shows the results obtained.

3.3 Experiment 3: emotion recognition with cross database criteria

Since SEED data is recorded for three classes (positive, neutral and negative) whereas DEAP data is recorded according to valence-arousal ratings, a mapping is required to implement a cross-database approach to emotion recognition. For this experiment, videos are selected from the DEAP database according to valence rating. The valence rating thresholds for dividing the data into three classes of emotion are selected as described in Sect. 3.1. Figure 5 shows the mapping between DEAP and SEED data and the data distribution among the three classes of valence based on participants’ ratings.

Scalogram images from DEAP, using the data of all 32 subjects, are then used to train the CNN model, which is tested with the scalogram images from SEED with 15 subjects. The best results obtained are shown in Table 7. As stated earlier, DEAP is recorded with a 32-electrode cap and SEED with a 62-electrode cap. In the cross-database experiment, the 10 frontal electrodes are selected.

For two-class classification, the neutral class data in SEED is dropped. The positive class of SEED is mapped to the high valence class of DEAP, and the negative class of SEED is mapped to the low valence class of DEAP. Arousal classification is not possible in this case, since arousal statistics for the videos are not provided in the SEED database. Results are shown in Table 8.
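The two-class label mapping between the databases amounts to a lookup that discards neutral samples. The string labels and the `(features, label)` pair layout are hypothetical, chosen only to make the mapping explicit.

```python
# SEED emotion class -> DEAP-style valence class; "neutral" has no counterpart and is dropped.
SEED_TO_VALENCE = {"positive": "high", "negative": "low"}


def seed_to_two_class(samples):
    """Convert (features, seed_label) pairs to (features, valence_class), skipping neutral ones."""
    return [(x, SEED_TO_VALENCE[label]) for x, label in samples if label in SEED_TO_VALENCE]
```

Applied to a list containing one positive, one neutral and one negative sample, the function returns two samples labelled high and low valence respectively.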

4 Results and discussion

A comparison graph of the different experiments for three-emotion classification is shown in Fig. 6. The first bar shows the classification accuracy when the CNN is trained with DEAP data and tested with DEAP data. The second bar shows the classification accuracy when the CNN is trained with DEAP data and tested with SEED data, and so on.

The experiments show the following:

(a) From experiments 1 and 2 (Tables 4 and 6), it is clear that frontal electrodes are better for emotion recognition than all electrodes for valence and arousal classification, since the results obtained with frontal electrodes are better than those obtained with all 32 electrodes for DEAP and all 62 electrodes for SEED.

(b) Experiment 2 shows that the SEED-to-SEED accuracy is higher, which may be due to the stimulus videos used in creating the SEED dataset; that is, the videos were able to induce distinct emotions. For example, consider a video in which two persons are fighting. On watching it, one subject may feel sad while another may feel angry at one of the fighting persons, depending on the situation portrayed. Sadness and anger are different emotions with different valence levels. From the results, it seems that the SEED stimulus videos were strong enough to portray distinct emotions.

(c) Experiment 3 shows that the accuracy of the classifier is higher when the model is trained with the DEAP dataset, which indicates that the DEAP dataset gives better generalization than the SEED dataset. Generalization refers to how well the model has learnt, so that it can accurately classify data from new subjects that were not seen during training. From Tables 7 and 8, it is observed that the recognition accuracy is better when the model is trained with DEAP data and tested with SEED data.

The proposed method is also compared with other research based on subject independent methodology. Table 9 compares its performance with existing methods for high/low valence and high/low arousal classification on the DEAP database. Table 10 compares performance for three-emotion classification using the cross-dataset criterion.

5 Conclusion

This paper proposes a deep CNN model for subject independent emotion recognition from EEG data. To exploit the image processing capability of the CNN, the scalogram image of the EEG is used as its input. The low/high valence and arousal thresholds are selected in such a way that the dataset is almost balanced for classification. The experiments show that the EEG from frontal electrodes contains more information about emotion than that from other electrodes, which supports the existing theory. To evaluate the proposed model as a pure subject independent emotion recognition system, a cross-database criterion is also used, in which the model is trained with one dataset and tested on another for better generalization. The proposed model performs better when it is trained using the benchmark DEAP dataset. The proposed model is found to be effective in subject independent emotion recognition in terms of classification accuracy compared to state-of-the-art models. In future work, an attention mechanism over different brain regions may be employed to improve the classification accuracy of subject independent emotion recognition with EEG signals.

References

Ackermann P, Kohlschein C, Bitsch JÁ, Wehrle K, Jeschke S (2016) EEG-based automatic emotion recognition: feature extraction, selection and classification methods. In: e-Health networking, applications and services (Healthcom), 2016 IEEE 18th international conference on. IEEE, pp 1–6

Alarcao SM, Fonseca MJ (2017) Emotions recognition using EEG signals: a survey. IEEE Trans Affect Comput 10(3):374–393

Bolós VJ, Benítez R (2014) The wavelet scalogram in the study of time series. In: Advances in differential equations and applications. Springer, Cham, pp 147–154

Bradley MM, Lang PJ (1994) Measuring emotion: the self-assessment manikin and the semantic differential. J Behav Ther Exp Psychiatry 25(1):49–59

Fazli S, Popescu F, Danóczy M, Blankertz B, Müller KR, Grozea C (2009) Subject-independent mental state classification in single trials. Neural Netw 22(9):1305–1312

Jayaram V, Alamgir M, Altun Y, Scholkopf B, Grosse-Wentrup M (2016) Transfer learning in brain-computer interfaces. IEEE Comput Intell Mag 11(1):20–31

Jirayucharoensak S, Pan-Ngum S, Israsena P (2014) EEG-based emotion recognition using deep learning network with principal component based covariate shift adaptation. Sci World J 2014. https://doi.org/10.1155/2014/627892

Kareem A, Kijewski T (2002) Time-frequency analysis of wind effects on structures. J Wind Eng Ind Aerodyn 90(12–15):1435–1452

Klem GH, Lüders HO, Jasper HH, Elger C (1999) The ten-twenty electrode system of the International Federation. Electroencephalogr Clin Neurophysiol 52(3):3–6

Koelstra S, Muhl C, Soleymani M, Lee JS, Yazdani A, Ebrahimi T et al (2011) Deap: a database for emotion analysis; using physiological signals. IEEE Trans Affect Comput 3(1):18–31

Lakhan P, Banluesombatkul N, Changniam V, Dhithijaiyratn R, Leelaarporn P, Boonchieng E et al (2019) Consumer grade brain sensing for emotion recognition. IEEE Sens J 19(21):9896–9907

Lan Z, Sourina O, Wang L, Scherer R, Müller-Putz GR (2018) Domain adaptation techniques for EEG-based emotion recognition: a comparative study on two public datasets. IEEE Trans Cogn Dev Syst 11(1):85–94

Lang PJ (1995) The emotion probe: studies of motivation and attention. Am Psychol 50(5):372

Li X, Song D, Zhang P, Yu G, Hou Y, Hu B (2016) Emotion recognition from multi-channel EEG data through convolutional recurrent neural network. In: 2016 IEEE international conference on bioinformatics and biomedicine (BIBM). IEEE, pp 352–359

Li Y, Zheng W, Zong Y, Cui Z, Zhang T, Zhou X (2018) A bi-hemisphere domain adversarial neural network model for EEG emotion recognition. IEEE Trans Affect Comput. https://doi.org/10.1109/TAFFC.2018.2885474

Li J, Qiu S, Shen Y, Liu C, He H (2019) Multisource transfer learning for cross-subject EEG emotion recognition. IEEE Trans Cybern 20(7):3281–3293

Liang Z, Oba S, Ishii S (2019) An unsupervised EEG decoding system for human emotion recognition. Neural Netw 116:257–268

Mauss IB, Robinson MD (2009) Measures of emotion: a review. Cogn Emot 23(2):209–237

Mert A, Akan A (2018) Emotion recognition from EEG signals by using multivariate empirical mode decomposition. Pattern Anal Appl 21(1):81–89

Moon SE, Jang S, Lee JS (2018) Convolutional neural network approach for EEG-based emotion recognition using brain connectivity and its spatial information. In: 2018 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, pp 2556–2560

Murugappan M, Ramachandran N, Sazali Y (2010) Classification of human emotion from EEG using discrete wavelet transform. J Biomed Sci Eng 3(04):390

Pandey P, Seeja KR (2019a) Emotional state recognition with EEG signals using subject independent approach. In: Mishra D, Yang XS, Unal A (eds) Data science and big data analytics. Lecture Notes on Data Engineering and Communications Technologies, vol 16. Springer, Singapore, pp 117–124

Pandey P, Seeja KR (2019b) Subject-independent emotion detection from EEG signals using deep neural network. In: International conference on innovative computing and communications. Springer, Singapore, pp 41–46

Pandey P, Seeja KR (2019c) Subject independent emotion recognition from EEG using VMD and deep learning. J King Saud Univ-Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2019.11.003

Petrantonakis PC, Hadjileontiadis LJ (2009) Emotion recognition from EEG using higher order crossings. IEEE Trans Inf Technol Biomed 14(2):186–197

Rayatdoost S, Soleymani M (2018). Cross-corpus eeg-based emotion recognition. In: 2018 IEEE 28th international workshop on machine learning for signal processing (MLSP). IEEE, pp 1–6

Russell JA (1980) A circumplex model of affect. J Pers Soc Psychol 39(6):1161–1178

Salama ES, El-Khoribi RA, Shoman ME, Shalaby MAW (2018) EEG-based emotion recognition using 3D convolutional neural networks. Int J Adv Comput Sci Appl 9(8):329–337

Song T, Zheng W, Song P, Cui Z (2018) EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans Affect Comput. https://doi.org/10.1109/TAFFC.2018.2817622

Sourina O, Liu Y (2011) A fractal-based algorithm of emotion recognition from EEG using arousal-valence model. In: International Conference on Bio-inspired Systems and Signal Processing, Vol 2. SCITEPRESS, pp 209–214

Wang XW, Nie D, Lu BL (2011) EEG-based emotion recognition using frequency domain features and support vector machines. In: International conference on neural information processing. Springer, Berlin, pp 734–743

Zheng WL, Lu BL (2015) Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans Auton Ment Dev 7(3):162–175


Cite this article

Pandey, P., Seeja, K.R. Subject independent emotion recognition system for people with facial deformity: an EEG based approach. J Ambient Intell Human Comput 12, 2311–2320 (2021). https://doi.org/10.1007/s12652-020-02338-8


DOI: https://doi.org/10.1007/s12652-020-02338-8