Abstract

Methods to numerically quantify uncertainties in hyperbolic equations can be divided into intrusive and non-intrusive techniques. Standard intrusive methods such as Stochastic Galerkin yield oscillatory solutions in the vicinity of shocks and require a new implementation. The more advanced Intrusive Polynomial Moment (IPM) method necessitates a costly solution reconstruction, but promises bounds on oscillatory over- and undershoots. Non-intrusive methods such as Stochastic Collocation (SC) can suffer from aliasing errors, and their black-box nature comes at the cost of loosing control over the time evolution of the solution. In this paper, we derive an intrusive method, which adaptively switches between SC and IPM updates by locally refining the quadrature set on which the solution is calculated. The IPM reconstruction of the solution is performed on the quadrature set and uses suitable basis vectors, which reduces numerical costs and allows non-oscillatory reconstructions. We test the method on Burger’s equation, where we obtain non-oscillating solution approximations fulfilling the maximum principle.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Hyperbolic equations play an important role in various research areas, ranging from the Euler equations in fluid mechanics to the magnetohydrodynamics (MHD) equations in plasma physics. The general form of a scalar, one-dimensional hyperbolic equation is

where \(u:{{\mathbb {R}}}^+\times {{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\) is the unknown solution which depends on time \(t\in {{\mathbb {R}}}^+\) and the spatial variable \(x\in {{\mathcal {D}}}\subset {{\mathbb {R}}}\). The physical flux is given by \(f:{{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\). To allow discontinuous solutions, the notion of weak solutions is used. A weak solution fulfills

meaning that u does not need to be differentiable in the spatial variable and time. Since condition (2) allows multiple possible solutions, the concept of entropy solutions has been introduced to pick a unique physically meaningful weak solution. A convex function \(s:{{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\) is called an entropy function for (1) if the integrability condition \(s'(u)f'(u) = F'(u)\) holds. If a weak solution fulfills

for all entropy functions s, it is called an entropy solution. For further details, see [1, Chapter 3.8]. Important properties of the entropy solution u are

where v is an entropy solution to (1) with initial condition \(v_{\text {IC}}\) [2, Chapter 2.4]. Property (3a), known as \(L^1\) stability, guarantees boundedness of oscillations, playing a key role in the construction of numerical schemes for solving scalar problems (1) as this ensures convergence to a unique, physically meaningful solution [see (1, Chapter 15.3)]. Property (3b) is known as the maximum principle and ensures bounds on the solution. Numerical schemes which are constructed to satisfy these bounds can be found in [3,4,5,6,7].

To incorporate the fact that solutions to (1) are in practice influenced by uncertainties, various methods to calculate moments of the solutions such as expected value and variance have been constructed. Uncertainties can enter through model parameters as well as initial or boundary conditions. Since uncertain parameters can be expressed by uncertain initial conditions [see (8)], this type of problem is studied in the following. The general form of a scalar hyperbolic conservation law with uncertain initial condition is

where \(\xi \in \Theta\) is a random variable with the probability density function \(f_{\Xi }(\xi )\). To avoid a high-dimensional solution, one aims at calculating the moments of u for a given set of basis functions \(\varphi _i(\xi )\) where \(i\in \{0,1,\ldots ,N\}\) is the polynomial order. A common choice of the basis functions are so-called chaos polynomials [9], which for smooth data guarantee spectral convergence toward the exact solution when using the moments as coefficients of a polynomial approximation, see [10]. The moments are defined to be

Methods to calculate these moments can be divided into two classes, namely intrusive and non-intrusive methods. A standard non-intrusive method is the so-called Stochastic Collocation (SC) method, see [11,12,13,14]. It makes use of a quadrature rule to calculate the moments, meaning that

where the solution u needs to be calculated at \(N_q\) quadrature points \(\xi _k\) with the corresponding quadrature weights \(w_k\). Since the solution evaluated at a fixed quadrature point \(\xi _k\) is deterministic, the time evolution of the moments can be described by standard deterministic solvers applied to equation (4) for fixed \(\xi\). Hence, the deterministic function \(u(t,x,\xi _k)\) in (6) is given by the solution of

Given a numerical scheme to solve the deterministic problem (1), the implementation of Stochastic Collocation can be treated as a black-box approach. Furthermore, the resulting computer program is embarrassingly parallel, allowing an efficient computation of the SC solution. The error of this solution is given by the classical quadrature error. As a consequence, the gPC approximation can suffer from aliasing effects, see [15, Chapter 2]. Furthermore, the black-box approach which facilitates the implementation comes at the cost of lacking control over the solution at intermediate time steps.

Intrusive methods aim at solving the moment system, which describes the exact time evolution of the moments. This system can be derived by multiplying (4) with the basis functions \(\varphi _i\) for \(i = 0,\ldots ,N\) as well as the probability density \(f_{\Xi }\) and integrating over \(\xi\). Using the vector notation \(\hat{\varvec{{{{u}}}}} = ({{\hat{u}}}_0,\ldots ,{{\hat{u}}}_N)^T\) and \(\varvec{\varphi } = (\varphi _0,\ldots ,\varphi _N)^T\), the resulting moment system becomes

This system describes the exact time evolution of the first \(N+1\) moments. However, to actually solve the moment system (7), one needs to find a closure, as the flux term depends on the unknown solution u, whereas the only known variables are the first \(N+1\) moments. Hence, one needs to find a function

to be able to describe the time evolution of the first \(N+1\) moments via the moment system (7). Plugging the closure (8) into the moment system (7) yields the closed moment system

which can now be solved as the \(N+1\) equations describe the time evolution of \(N+1\) moments. The closure \({{\mathcal {U}}}\) should be constructed such that the so-called moment constraint

is fulfilled while guaranteeing a hyperbolic moment system. A well-known closure which satisfies these two desirable properties for scalar equations is the Stochastic Galerkin (SG) method [16]

If all integrals appearing in the moment system (9) can be computet exactly, the method does not suffer from interpolation errors. Consequently, we replace the aliasing error of SC methods with a closure error. Smooth problems can nicely be approximated using the SG method, see, for example, [17]. Spectral convergence has been proven in [18] for kinetic equations and in [8] for Burgers’ equation assuming sufficiently smooth solutions. However, solutions of hyperbolic equations tend to develop discontinuities in the physical as well as the random space. Therefore, the Stochastic Galerkin method generally converges slowly since the closure (10) suffers from Gibbs phenomena. The oscillatory over- and undershoots furthermore violate important properties of the entropy solution as given in (3), hence violating fundamental analytic findings for hyperbolic equations. Furthermore, the implementation of the SG method requires writing an entirely new code since the deterministic solver cannot be reused. All these shortcomings of Stochastic Galerkin indicate that the Stochastic Galerkin method is not suited for closing the moment system of hyperbolic problems. A non-oscillatory closure which fulfills the maximum principle is the Intrusive Polynomial Moment (IPM) closure [19]. For applications and further properties of the IPM method, see [8, 19,20,21,22,23,24]. The IPM closure is constructed such that

where s(u) is a convex entropy for the deterministic problem (1). To calculate the closure, one makes use of the adjoint approach, which lets us rewrite the closure problem as

Here, the Legendre transformation of the entropy \(s^{*}(\varvec{\lambda }^T \varvec{\varphi })\) is used. For more details on the adjoint approach, see [25, Chapter 5]. The need to solve an optimization problem with \(N+1\) parameters to calculate the IPM closure (11) makes the IPM method numerically expensive, especially if a large number of moments are needed. However, nice approximation properties, the hyperbolicity of the IPM moment system and the maximum principle make IPM an attractive method for hyperbolic problems.

In this paper, we connect intrusive and non-intrusive methods to come up with a strategy to combine benefits of both approaches, i.e., saving computational costs while achieving a good solution approximation. We review the connection of collocation and intrusive methods: When discretizing the moment system (7) with an inaccurate quadrature rule, the computed solution will match the collocation result. To avoid the resulting aliasing errors in regions with high uncertainty, the quadrature is locally refined, meaning that we switch to intrusive methods. Consequently, a closure needs to be used. The arising computationally expensive calculation of a non-oscillatory closure on the refined quadrature set is performed by introducing a set of basis vectors spanning the solution at the quadrature points. By choosing basis functions lying in the null space of the moment constraint, the numerical reconstruction costs can be reduced. Computing the closure on the quadrature points allows choosing reconstructions with minimal total variation. The resulting method is intrusive; however, when switching to the collocation solution, we circumvent the expensive reconstruction step of the standard intrusive method.

The paper is structured as follows: After the introduction in Sect. 1, we investigate the numerical approximation of integrals showing up in the moment system in Sect. 2. This investigation shows a connection between intrusive and non-intrusive methods. In Sect. 3, we introduce the quadrature-based closure (QBC) approach. The implementation of the closure as well as the quadrature refinement is discussed in Sect. 4. Section 5 shows the connection between the QBC and the IPM method. In Sect. 6, we present numerical results, and Sect. 7 summarizes our findings and gives an outlook on future work.

2 Transition from stochastic collocation to Stochastic Galerkin

We first investigate the moment system (7) discretized in the random variable \(\xi\). This gives a connection between collocation and intrusive methods, which we use to discuss potential error sources of collocation methods. Furthermore, we use this link to later adaptively switch between a collocation and an intrusive method. Note that this connection is well known in various areas such as Discontinuous Galerkin (DG) (see, for example, [26]) and is reviewed in this section for the sake of completeness.

Let us start by looking at the moment system (7), when making use of the definition of the moments (5), which gives

for \(i = 0,\ldots ,N\). This system describes the exact time evolution of the first \(N+1\) moments. In order to calculate the time evolution numerically, one needs to discretize the moment system. For now, x and t remain continuous and we only discretize the integral evaluations with the help of a quadrature rule

where \(\xi _k\) is the \(k{\text {th}}\) quadrature point with the corresponding quadrature weight \(w_k\). Approximating the terms of the moment system with this quadrature yields

Now we must choose a suitable number of quadrature points \(N_q\) to capture the correct time evolution of the moments. First, we see what happens if \(N_q\) equals the number of moments, meaning that \(N_q = N+1\). This appears to be a striking choice, since it means that especially the time evolution equations for the higher moments are approximated poorly. Nevertheless, we discuss this case because it yields the Stochastic Collocation solution:

Proposition 1

Assume a quadrature rule with\(N+1\)quadrature points being exact for polynomials\(p\in {{\mathbb {P}}}^{2N}\)under the integral density\(f_{\Xi }\)exists, i.e.,

When making use of this quadrature rule to discretize the exact moment system (7), the resulting time evolution of the moments is equivalent to the evolution of the Stochastic Collocation method (6).

Proof

Using the quadrature rule (13) to discretize the exact moment system (7) yields the discrete moment system

Making use of the matrix \(\varvec{A}\in {{\mathbb {R}}}^{N+1\times N+1}\) with \(a_{ik}:=w_k\varphi _i(\xi _k)f_{\Xi }(\xi _k)\), the discrete moment system can be rewritten as

The inverse of \(\varvec{A}\) exists and is given by \(\varvec{A}^{-1} = \left( \varphi _i(\xi _j)\right) _{i,j = 0,\ldots ,N}\), since

Multiplication of the discrete moment system (15) with \(\varvec{A}^{-1}\) decouples this system leading to

which is the Stochastic Collocation (SC) method. \(\square\)

Remark 1

The extension to multidimensional problems is straightforward when using tensorized grids. However, this strategy cannot be applied for sparse grids.

This result shows that the Stochastic Collocation solution can be seen as the solution of the moment system when making use of an inaccurate quadrature rule. Consequently, especially the time evolution of high-order moments is described poorly due to integration errors when evaluating the physical flux.

Our goal is to improve the time evolution of the moment system in regions with high uncertainty. To gain further accuracy, one needs to add more quadrature points to the discrete moment system (12). Again defining a matrix \(\varvec{A}\in {{\mathbb {R}}}^{N+1\times N_q}\) with \(a_{ik}:=w_k\varphi _i(\xi _k)f_{\Xi }(\xi _k)\), the discrete moment system (12) can be rewritten as

In this case, the \(N+1\) equations of the moment system no longer fully determine the time evolution of the solution u, due to the fact that one needs to know this solution at \(N_q>N+1\) quadrature points. Hence, to obtain a better integral approximation of the flux, we need to feed additional information to the moment equations (i.e., define a closure). In order to actually achieve an improved result compared to collocation, this information should not violate the moment constraint and at the same time fulfill properties of the entropy solution (3). Before specifying the choice of the remaining degrees of freedom, we derive a method for choosing the degrees of freedom in \(u(t,x,\xi _k)\) for \(k = 1,\ldots ,N_q\) without violating the moment constraint.

Remark 2

The inexact integration of the flux function when using collocation methods also arises in DG methods when applying nodal instead of modal schemes: Nodal DG schemes can be seen as collocation methods. They suffer from quadrature errors of the physical fluxes, which can lead to poor results as well as stability issues. A strategy to avoid these problems has been presented in [26], where the flux evaluation uses the solution values belonging to a lower-order polynomial (i.e., high-order coefficients are set to zero), guaranteeing an exact integration. The approach taken in this paper is different since we intend to keep all moments fixed but add quadrature points to obtain an improved accuracy of integral approximations.

3 Quadrature-based closure approach

The moment constraint computed with the chosen quadrature rule (13) can be rewritten as

where \(\varvec{u} := (u(t,x,\xi _1),\ldots ,u(t,x,\xi _{N_q}))^T\) is the solution evaluated at the quadrature points. Now the vector \(\varvec{u}\) needs to be chosen such that the moment constraint (18) is fulfilled.

The main idea of the quadrature-based closure is to find a suitable basis to span the solution evaluated at the quadrature points. This facilitates the task of adding admissible information to the moment system. For this, we calculate the kernel of \(\varvec{A}\), which is given by

Choosing the vectors \(\varvec{v}_i\) to represent the solution, one needs \(N+1\) additional linearly independent vectors \(\varvec{b}_0,\ldots ,\varvec{b}_N\) to span the entire solution space \({{\mathbb {R}}}^{N_q}\).Footnote 1 The solution is now given by

and hence we shifted the problem of choosing \(\varvec{u}\) to choosing the coefficient vectors \(\varvec{\alpha }\) and \(\varvec{\beta }\). Luckily, the coefficient vector \(\varvec{\beta }\) is directly given by the moment constraint: Plugging the solution reconstruction (19) into the moment constraint (18) leads to

with \(c_{ij} = \sum _{l} a_{il}b_{lj}\), where \(b_{lj}:=\left( \varvec{b}_j\right) _l\). Hence, the coefficients \(\varvec{\beta }\) are directly given by the moment vector \(\hat{\boldsymbol{{u}}}\). The coefficients \(\varvec{\alpha }\) do not influence the moments of the solution and can therefore be picked freely. These coefficients resemble the additional information we need to give to the moment system in order to apply an improved quadrature rule. Plugging \(\varvec{u}\) into the discretized moment system (12) gives

It can be seen that we do not have an equation describing the time evolution of \(\alpha _i\). Clearly, the choice of \(\varvec{\alpha }\) is important since it influences the time evolution of \(\hat{\varvec{{{{u}}}}}\). This is due to the fact that the vectors \(\varvec{v}_i\) span the kernel of the moment constraint, but do not lie in the null space of the flux term. The degrees of freedom we obtain by adding more quadrature points to the moment system to improve the Collocation solution can now easily be picked by choosing values for \(\varvec{\alpha }\). Our first choice yields the Stochastic Galerkin solution.

Proposition 2

Representing the solution by (19) while choosing the degrees of freedom\(\varvec{\alpha }\)such that the solution minimizes

yields the Stochastic Galerkin method.

Proof

We need to pick \(\varvec{\alpha }\) such that we minimize the discrete \(L^2\) norm (21), hence

meaning that

Hence, one needs to choose \(\varvec{\alpha }\) such that

therefore \(\alpha _i = 0\). It remains to show that

meaning that we must show \((\varvec{B}\varvec{C}^{-1})_{ki}{\mathop {=}\limits ^{!}} \varphi _i(\xi _k)\). For this, we note that \(\varvec{B}\) can be written as

where \((l_{ni})_{n,i} =: \varvec{L}\in {{\mathbb {R}}}^{N+1\times N+1}\) is a transformation matrix. Hence,

Defining \(\varvec{T}\in {{\mathbb {R}}}^{N+1\times N_q}\) with \(t_{kn} = \varphi _n(\xi _k)\) yields

Hence \((\varvec{B}\varvec{C}^{-1})_{ki} = t_{ki} = \varphi _i(\xi _k)\), which concludes the proof. \(\square\)

Remark 3

Note that for linear problems (e.g., \(f(u) = \xi u\)), \(N+1\) quadrature points suffice to discretize the SG moment system in \(\xi\). Therefore, \(\varvec{\alpha }\) has dimension zero, meaning that the SG and collocation yield the same result.

The choice of picking the degrees of freedom such that one minimizes the weighted \(L^2\) norm of the solution is convenient in the context of spectral convergence for smooth solutions. However, it does not fit to the theory of hyperbolic equations, which is based on \(L^1\) properties. Particularly, in non-smooth regimes, the minimization of the \(L^2\) norm leads to oscillatory approximations violating the maximum principle, see Sect. 6.2. Therefore, we choose the resulting degrees of freedom in accordance with the \(L^1\) stability property (3a). When including the random variable \(\xi\), this stability result becomes

where u and v are entropy solutions of (4) with two different initial conditions \(u_{\text {IC}},v_{\text {IC}}\). One chooses \(v_{\text {IC}}(x,\xi ) = u_{\text {IC}}(x,\xi +h)\), multiplies (22) with 1 / h, and lets h go to zero to get

which shows that oscillations w.r.t. \(\xi\) are bounded by oscillations of the initial condition. Therefore, \(\varvec{\alpha }\) is now picked such that the reconstruction has minimal oscillations: The total variation in \(\xi\) is denoted by

Since we are only interested in the solution on a finite set of quadrature points, the discrete total variation

is used. The coefficient vector \(\varvec{\alpha }\) is now picked such that \(\text {TV}_{\Delta } (\varvec{u})\) is minimized. The full QBC moment system is then given by

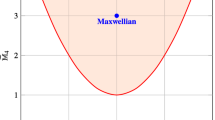

To demonstrate the non-oscillatory behavior of the chosen QBC reconstruction (23b) and (23c), we approximate a shock which is shown in Fig. 1: After computing the moments of this shock, the solution is reconstructed on the quadrature points when using the discrete \(L^2\) norm as well as the total variation. The reconstructions match the first ten moments at 15 quadrature points, i.e., \(\varvec{\alpha }\in {{\mathbb {R}}}^{5}\) needs to be determined. A non-oscillatory discrete solution which nicely represents the shock on the quadrature points is obtained when choosing \(\varvec{\alpha }\) such that the solution minimizes the discrete total variation. When minimizing the \(L^2\) norm, the solution at the quadrature points oscillates, destroying the maximum principle and leading to a poor approximation. Comparing the solution to the continuous Stochastic Galerkin reconstruction \(u_{SG} = \hat{\varvec{{{{u}}}}}^T\varvec{\varphi }\) shows that the QBC reconstruction ansatz when minimizing the \(L^2\) norm resembles the Stochastic Galerkin solution evaluated at the quadrature points as shown in Proposition 2.

As discussed in Sect. 2, non-intrusive methods yield a poor approximation of the exact moment system. Therefore, our goal is to add quadrature points in regions with high uncertainty. The QBC allows adding these quadrature points without violating the moment constraint or introducing oscillations. In the following section, we discuss the implementation of the refinement process. Furthermore, we point out how the QBC moment system (23) can be implemented efficiently.

4 Implementation and refinement

4.1 Implementation

The implementation of the QBC moment system (23) does not require writing an entirely new program. In fact, most of the code of a given finite-volume (FV) implementation for solving the deterministic problem (1) can be reused.

The key idea of FV solvers is to divide the spatial domain into cells. The numerical solution is now a collection of intermediate cell values at discrete time steps \(t_n\)

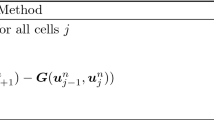

Since only the discrete solution for \(n = 0\) is known, a time update formula H, updating the solution by a time step of \(\Delta t,\) needs to be derived. The FV implementation now calls H repeatedly until the end time \(t_{\text {end}} = N_t\Delta t\) is reached. A common choice for the update formula is

where

The function g is the numerical flux, which needs to be consistent with the physical flux, i.e., \(g(u,u) = f(u)\). For better readability, we assume that the numerical fluxes only depend on two states. The extension to higher order stencils is straightforward.

Our goal is to calculate the solution of the QBC moment system (23). To solve this system, we again use a finite-volume approach, meaning that we represent the moments by

where \(\varvec{u}_{j}^n = (u_{j,1}^n,\ldots ,u_{j,N_q}^n)^T\) with

One strategy to evolve the moment vector \(\hat{\varvec{{{{u}}}}}_{j}^n\) in time according to (23) is to reconstruct the solution at every quadrature point, meaning that we compute \(\varvec{u}\). On the discrete level, the reconstructed solution is given by

where \(\hat{\varvec{{{\alpha }}}}_j^n\) is given by

After that, the reconstructed solution in every cell at each quadrature point is evolved in time using the time update function of the FV implementation

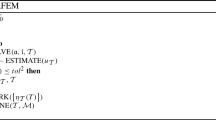

The time-updated moments can then be calculated by (25). This process is repeated until \(t_{\text {end}}\) is reached. The extension of a given finite-volume implementation to the QBC scheme can be achieved according to Fig. 2. We use \(\varvec{u}_{\Delta }^n\) to denote the field containing the solution at all spatial cells and quadrature points for time step \(t_n\).

4.2 Refinement

One can now think about which quadrature should be chosen. For this, we would like to take advantage of the fact that intrusive methods know the behavior of the solution in every time step. In contrast to the non-intrusive black-box approach, adapting the quadrature is possible: If a cell \(I^*\) with ‘high uncertainty’ is detected at spatial position \(x_i\) and time step \(t_m\), we are now able to refine the quadrature locally by performing the reconstruction step (26) in \(I^*\) with a more accurate quadrature, which we denote by

where \({\bar{N}}_q > N_q\). For ease of notation, the solution at the new quadrature points is denoted by

Defining the matrix \(\varvec{{\bar{A}}}\in {{\mathbb {R}}}^{N+1\times {\bar{N}}_q}\) with \({\bar{a}}_{il} = {\bar{w}}_l\varphi _i({\bar{\xi }}_l)f_{\Xi }({\bar{\xi }}_l)\), we compute \(\varvec{{\bar{v}}},\varvec{{\bar{b}}}\) and \(\varvec{{\bar{C}}}\) analogous to before making use of \(\varvec{{\bar{A}}}\) instead of \(\varvec{A}\). The reconstruction on the finer quadrature set given a moment vector \(\hat{\varvec{{{{u}}}}}_j^n\) becomes

with

Assume that we wish to perform a time update after each cell has been reconstructed by either the standard or the refined quadrature rule. We now update the solution in the refined cell \(I^*\) in time by

for \(l = 1,\ldots ,{\bar{N}}_q\). Here, one notices that solution values of the neighboring cells of \(I^*\) also need to be known on the refined quadrature points. Therefore, cells which have neighbors with a fine as well as a standard reconstruction need to be reconstructed on both the fine and the standard quadrature set. Using Clenshaw–Curtis quadrature sets allows reconstructing these interface cells only for the fine quadrature rule since the quadrature points of different refinement levels are nested. In the following, we present the QBC algorithm with refinement for non-nested quadrature rules.

Assume that a spatial grid as depicted in Fig. 3 is given and on each cell we know the moment vector. The cell types are then identified by choosing a function which indicates high uncertainty. Here, we use the highest order moments to identify non-smooth regimes in the uncertain domain similar to [27]. Note that this strategy has also been applied for DG methods [28]. To avoid unwanted artifacts from even or odd functions, we take the last two moments and divide by the solution’s squared L\(^2\) norm as smoothness indicator. If the indicator in a given cell lies above a specified value \(\eta\), i.e.,

we indicate it as a fine cell. Cells which are not fine, but have a neighboring fine cell are transition cells. The cells which have a transition neighbor and are not fine are called interface cells. All remaining cells are coarse. In our example in Fig. 3, the two red cells have a variance lying above a specified value and are therefore fine (f). The neighboring cells are identified as transition (t), since they have fine neighbors, interface (i), since they are not fine and have a transition neighbor and coarse (c). In a next step, the reconstructions need to be computed: We do this on the fine quadrature grid if the cells are fine and on the coarse grid if they are coarse. For interface and transition cells, we compute both a coarse and a fine reconstruction. The next task is to evolve the reconstructions in time. One needs to perform a coarse time update for coarse and interface cells and a fine time update for the remaining cells, which are exemplarily illustrated once for each cell type in Fig. 3. The coarse time update stencil of (27) is depicted by downward pointing, and the fine stencil of (29) is depicted by upward pointing arrows. By looking at the dependencies of the stencils, one sees why it is necessary to reconstruct both the fine and the coarse solution for interface and transition cells. We then compute the time-updated moments using \(\varvec{A}\varvec{u}_j^{n+1}\) on coarse and interface cells and \(\varvec{{\bar{A}}}\varvec{{\bar{u}}}_j^{n+1}\) on transition and fine cells. The entire process is then repeated until the end time is reached.

We use the proposed refinement strategy to locally switch between collocation and standard QBC, i.e., the coarse quadrature set has \(N+1\) quadrature points. If a cell has high uncertainty, we add quadrature points by our refinement strategy, to improve the time evolution approximation of the moment system. We refer to this method as adaptive QBC. Note that switching to collocation updates does not mean the proposed method is non-intrusive. However, we can circumvent the expensive computation of a closure (i.e., picking \(\varvec{\alpha }\in {{\mathbb {R}}}^{N_q-N-1}\) by solving (23c)), since \(N_q-N-1=0\).

5 Interpretation as IPM closure

The derived closure can be interpreted as an Intrusive Polynomial Moment (IPM) method. This method represents the solution by the ansatz \({{\mathcal {U}}}_{ME}\) which minimizes the convex entropy \(\int _{\Theta } s(u) f_{\Xi }(\xi )\,{\mathrm{d}}\xi\) under the moment constraints \(\hat{\varvec{{{{u}}}}} = \int _{\Theta } u \varvec{\varphi } f_{\Xi }(\xi )\,{\mathrm{d}}\xi\), i.e.,

A standard computation using duality converts this problem into an unconstrained finite-dimensional problem. Indeed, using the Lagrangian L, we have

The Legendre transform \(s^{*}\) of the entropy fulfills

with \(u_{ME}(\varvec{\lambda }^T\varvec{\varphi }) = \left( s' \right) ^{-1}(\varvec{\lambda }^T\varvec{\varphi })\). Calculating the closure by solving an infinite-dimensional constrained optimization problem has now been reduced to finding the dual variables \(\varvec{\lambda }\) by solving the dual problem (32). This problem is finite-dimensional as well as unconstrained. Thus, we have

Inserting the IPM closure into (7) leads to the closed moment system

This is the system of equations of the IPM method. The solution to the IPM system has nice properties. First, it dissipates the entropy

Second, the IPM system is hyperbolic provided that the entropy density s is strictly convex. Additionally, the IPM method fulfills a maximum principle in the case of scalar problems. However, these two advantages come at the cost of solving an optimization problem with \(N+1\) unknowns at each time step in every spatial cell. In the following, we interpret the quadrature-based closure approach as an efficient strategy to solve the IPM closure if the number of quadrature points is only slightly larger than the number of moments: First, let us revisit the constraint optimization problem (31). The strategy of the dual ansatz is to parametrize all possible solutions which are potential minimizers of the given entropy. To determine the parameters such that the solution fulfills the moment constraint, the dual problem is solved. We take the approach of first ensuring that the moment constraint is fulfilled and after that, we minimize the entropy: In the case of orthogonal basis functions, one way to construct an ansatz which fulfills the moment constraint is

Note that we need to truncate the series by choosing a finite \(M>N\). Consequently, a truncation error is introduced, meaning that we cannot recover the IPM solution. However, we can avoid introducing a truncation error by first discretizing the integrals of the primal problem. In this case, the solution only needs to be reconstructed on a finite set of quadrature points. Again using \(u_k:=u(t,x,\xi _k)\), one obtains

Now the task of finding a parameterization which fulfills the constraint is easy: We again span the solution vector \(\varvec{u}\) as in (19), i.e.,

The coefficient vector \(\varvec{\beta }\) is uniquely defined by the moment constraint, see (20). Hence, we have a parametrization of all solutions fulfilling the moment constraint, where the number of parameters \(\alpha _i\) is finite, namely \(N_q-N-1\). We then choose these parameters such that the discrete entropy

is minimized. In our case, we choose this entropy to be the total variation. The main difference to the IPM method is that we construct the closure on the discretized level, enabling us to find a finite-dimensional parameterization of all admissible reconstructions from which we pick the one with the smallest entropy. Consequently, the problem of finding \(N+1\) dual variables \({{\hat{\boldsymbol{\lambda }}}}\) has been shifted to finding \(N_q-N-1\) reconstruction parameters \(\varvec{\alpha }\). Hence, the computational costs decrease if \(N_q-N-1<N+1\), i.e., in the transition between collocation and intrusive methods.

6 Results

In the following, we present results of the QBC method and compare them to results obtained with the SG method. To compare these different strategies, we follow [19] and solve the uncertain Burgers’ equation

on \(x\in [0,3]\) with the uncertain initial condition

The initial condition is a forming shock with uncertain position. We choose \(\xi\) to be uniformly distributed in \(\Theta = [-1,1]\). To ensure good integral approximations on \(\Theta\), we use a Gauss–Legendre quadrature rule. Parameters of the initial condition and the numerical scheme can be found in the following table:

The basis vectors \(\varvec{v}_i\) of the QBC are calculated by performing a singular value decomposition of \(\varvec{A},\) and the vectors \(\varvec{b}_i\) are chosen as

for \(i = 0,\ldots ,N\).

6.1 Convergence of expected value

In the following, we study the effects of varying the number of quadrature points \(N_q\) to study the \(L^1\) error of the expected value by

where \({{\hat{u}}}_{0,\text {ex}}(t_{\text {end}}, x)\) is the expected value (which is equal to the zeroth moment) of the exact solution at the final time \(t_{\text {end}}\). As discussed in Sect. 2, choosing \(N_q > N+1\) necessitates feeding information to the collocation moment system. The QBC picks these degrees of freedom to minimize the total variation, whereas the SG method minimizes the \(L^2\) norm. When applying the adaptive QBC, we can switch between different quadrature rules to locally refine the set of integration points. In the following, the number of quadrature points on the coarse level \(N_q^c\) equals the number of moments, i.e., \(N_q^c = N+1 = 15\). Hence, we perform collocation updates on the coarse level. We switch to intrusive methods whenever the smoothness indicator lies above a value of \(5 \times 10^{-4}\). The number of quadrature points on the fine level \(N_q\) is then varied to study the error of the expected value.

The resulting errors for different \(N_q\) are shown in Fig. 4. If \(N_q = 15\), all three methods resemble Stochastic Collocation, which is why they all have the same error. The methods differ in the choice of degrees of freedom when adding quadrature points. Taking a look at the SG method, improving the numerical integration has little effect. Moreover, the resulting error will start to grow after having added one quadrature point, leaving the SG method with a poorer approximation as the collocation result. In contrast to this, the QBC steadily decreases the error when adding quadrature points. Hence, the choice of degrees of freedom helps in improving the approximation of the integrals appearing in the moment system. The adaptive QBC shows a similar error decrease. Locally switching to collocation whenever the solution shows low uncertainty decreases the computational costs while leading to a slightly increased error.

6.2 Comparison expectation value and variance

In the following, we fix the number of quadrature points at 25. The adaptive QBC switches between collocation as well as QBC with 25 quadrature points. We start by looking at the expectation value and the variance in Fig. 5. The SG method shows a step-like approximation of the expectation value, whereas QBC and adaptive QBC show only small variations from the exact solution. Furthermore, one observes that the expected values computed with QBC and its adaptive version differ only slightly. Consequently, the poor approximation of integrals in the moment system in cells with low smoothness indicator does not destroy the accuracy achieved with the standard QBC. A similar result can be found when investigating the variance: While the variance of SG oscillates heavily, both QBC and adaptive QBC show mitigated oscillations.

Lastly, we take a look at the reconstruction of the solution at a fixed spatial position \(x^* = 2.1\) for the final time \(t_{\text {end}}\). The exact solution is a reversed shock from \(u_R\) to \(u_L\). Note that since we use a quadrature rule with \(N_q = 25\), we only need to know the solution at the corresponding 25 quadrature points. A continuous reconstruction of the SG method can be computed by interpolating the given solution points with Legendre polynomials. In the QBC case, a continuous reconstruction can be calculated with the adjoint approach of IPM (11). Since our interest lies in computing the solution’s moments, we skip this step. Figure 6 shows that the SG method has great difficulties when representing the reversed shock, because this leads to oscillations. The solution violates the maximum principle and shows high total variation in \(\xi\). Both the QBC and adaptive QBC yield a reconstruction which does not violate the bounds of the initial condition, namely \(u_R\) and \(u_L\). The solution nicely captures the exact shock structure. Also note that the adaptive QBC makes use of 25 instead of 15 quadrature points at the shock position, meaning that the spatial cell at \(x^*\) is identified as a fine cell. Consequently, the shock position is nicely localized.

7 Conclusion and outlook

In this paper, we have presented a method to locally add quadrature points to the collocation solution in regions with high uncertainty, allowing a transition from non-intrusive to intrusive methods. Refining the quadrature set requires choosing resulting degrees of freedom, which are described by the kernel of the moment constraint. Using this kernel as basis functions for our solution led to an efficient way of choosing the added degrees of freedom. It turned out that the choice of these parameters is crucial. By choosing the parameters such that the \(L^2\) norm of the solution is minimized, we added characteristics which contradict analytic findings of hyperbolic equations. The resulting Stochastic Galerkin solution showed bad approximation results such as oscillations and violations of the maximum principle. Nice approximation results which fulfill the maximum principle were achieved by the quadrature-based closure (QBC) method, which uses the degrees of freedom to minimize the total variation. Adaptive refinement of the quadrature set allows switching between non-intrusive and the derived intrusive QBC method. The adaptive QBC yields an efficient computation of moments and reconstructions, which closely agree with the standard QBC results.

In future work, we plan to use the presented refinement strategy solely on collocation methods. Refining the quadrature set by a reconstruction allows adding collocation points without having to recalculate the entire solution. Furthermore, we wish to investigate different functions indicating high uncertainty such as shock indicators to refine the solution. Additionally, more than one refinement levels should be added. To avoid having to introduce new transition and interface cells especially for high-order spatial discretizations, one should use a DG implementation or nested quadrature grids. In addition to that, the refinement strategy should be tested on sparse quadrature grids for high-dimensional problems.

Notes

Since \(\varvec{A}\) is a priori known, all basis vectors can be computed before running the program.

References

LeVeque, R.J.: Numerical Methods for Conservation Laws. Basel, Birkhauser (1992)

Risebro, H.H., Henrik, N.: Front Tracking for Hyperbolic Conservation Laws, vol. 152. Springer, Berlin (2015)

Bell, J.B, Dawson, C.N, Shubin, G.R: An unsplit, higher order godunov method for scalar conservation laws in multiple dimensions. J. Comput. Phys. 74(1), 1–24 (1988)

Colella, P.: Multidimensional upwind methods for hyperbolic conservation laws. J. Comput. Phys. 87(1), 171–200 (1990)

Liu, X.-D.: A maximum principle satisfying modification of triangle based adapative stencils for the solution of scalar hyperbolic conservation laws. SIAM J. Numer. Anal. 30(3), 701–716 (1993)

Zhang, X., Shu, C.W.: Maximum-principle-satisfying and positivity-preserving high-order schemes for conservation laws: survey and new developments. Proc. R. Soc. Math. Phys. Eng. Sci. 467(2134), 2752–2776 (2011)

Guermond, J.-L., Nazarov, M., Popov, B., Yang, Y.: A second-order maximum principle preserving lagrange finite element technique for nonlinear scalar conservation equations. SIAM J. Numer. Anal. 52(4), 2163–2182 (2014)

Després, B., Poëtte, G., Lucor, D.: Robust uncertainty propagation in systems of conservation laws with the entropy closure method. In: Uncertainty Quantification in Computational Fluid Dynamics, pp. 105–149. Springer (2013)

Wiener, N.: The homogeneous chaos. Am. J. Math. 60(4), 897–936 (1938)

Canuto, C., Quarteroni, A.: Approximation results for orthogonal polynomials in sobolev spaces. Math. Comput. 38(157), 67–86 (1982)

Tatang, M.A.: Direct incorporation of uncertainty in chemical and environmental engineering systems, Ph.D. thesis, Massachusetts Institute of Technology (1995)

Mathelin, L., Hussaini, M.Y., Zang, T.A.: Stochastic approaches to uncertainty quantification in cfd simulations. Numer. Algorithms 38(1–3), 209–236 (2005)

Xiu, D., Hesthaven, J.S.: High-order collocation methods for differential equations with random inputs. SIAM J. Sci. Comput. 27(3), 1118–1139 (2005)

Babuška, Ivo, Nobile, Fabio, Tempone, Raul: A stochastic collocation method for elliptic partial differential equations with random input data. SIAM J. Numer. Anal. 45(3), 1005–1034 (2007)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods. Springer, Berlin (2006)

Ghanem, R.G., Spanos, P.D.: Stochastic Finite Elements: A Spectral Approach. Courier Corporation, North Chelmsford (2003)

Gottlieb, D., Xiu, D.: Galerkin method for wave equations with uncertain coefficients. Commun. Comput. Phys. 3(2), 505–518 (2008)

Jin, S., Liu, J.-G., Ma, Z.: Uniform spectral convergence of the stochastic galerkin method for the linear transport equations with random inputs in diffusive regime and a micro-macro decomposition-based asymptotic-preserving method. Res. Math. Sci. 4(1), 15 (2017)

Poëtte, G., Després, B., Lucor, D.: Uncertainty quantification for systems of conservation laws. J. Comput. Phys. 228(7), 2443–2467 (2009)

Poëtte, G., Després, B., Lucor, D.: Treatment of uncertain material interfaces in compressible flows. Comput. Methods Appl. Mech. Eng. 200(1), 284–308 (2011)

Kusch, J., Alldredge, G.W., Frank, M.: Maximum-principle-satisfying second-order intrusive polynomial moment scheme. SMAI J. Comput. Math. 5, 23–51 (2019)

Schlachter, L., Schneider, F.: A hyperbolicity-preserving stochastic galerkin approximation for uncertain hyperbolic systems of equations. J. Comput. Phys. 375, 80–98 (2018)

Kusch, J., Frank, M.: Intrusive methods in uncertainty quantification and their connection to kinetic theory. Int. J. Adv. Eng. Sci. Appl. Math. 10(1), 54–69 (2018)

Kusch, J., McClarren, R.G., Frank, M.: Filtered stochastic galerkin methods for hyperbolic equations. (2018) arXiv preprint arXiv:1808.00819

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Gassner, G.J., Beck, A.D.: On the accuracy of high-order discretizations for underresolved turbulence simulations. Theor. Comput. Fluid Dyn. 27(3–4), 221–237 (2013)

Kröker, I., Rohde, C.: Finite volume schemes for hyperbolic balance laws with multiplicative noise. Appl. Numer. Math. 62(4), 441–456 (2012)

Persson, P.O., Peraire, J.: Sub-cell shock capturing for discontinuous Galerkin methods. In: 44th AIAA Aerospace Sciences Meeting and Exhibit, p. 112. (2006)

Funding

This work was supported by the German Research Foundation (DFG) under Grant FR 2841/6-1.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Kusch, J., Frank, M. An adaptive quadrature-based moment closure. Int J Adv Eng Sci Appl Math 11, 174–186 (2019). https://doi.org/10.1007/s12572-019-00252-7

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12572-019-00252-7