Abstract

The extreme learning machine (ELM) was proposed for training single-layer feed-forward networks (SLFNs) with fast learning speed and has been shown to be effective and efficient for pattern classification and regression in many fields. ELM research has so far addressed supervised, semi-supervised, and unsupervised learning problems, but only within a single domain. To the best of our knowledge, the cross-domain learning capability of ELM in subspace learning has not been well exploited. Inspired by the cognitive-based extreme learning machine technique (Cognit Comput. 6:376–390, 2014; Cognit Comput. 7:263–278, 2015), this paper proposes a unified subspace transfer framework called cross-domain extreme learning machine (CdELM), which aims at learning a common (shared) subspace across domains. The proposed CdELM has three merits: (1) a cross-domain subspace shared by the source and target domains is obtained based on domain adaptation; (2) ELM is exploited in the cross-domain shared subspace learning framework, which brings a new perspective to ELM theory in heterogeneous data analysis; (3) the proposed method is a subspace learning framework and can be combined with different classifiers in the recognition phase, such as ELM, SVM, and nearest neighbor. Experiments on our electronic nose olfaction datasets demonstrate that the proposed CdELM method significantly outperforms the compared methods.

Introduction

Although many cognitive computational models (e.g., neural networks and support vector machines) have been proposed to solve classification problems, these methods face many challenges, such as poor computational scalability and tedious human intervention. Recently, extreme learning machines (ELM) [1,2,3,4,5] were proposed as a cognitive-based technique for “generalized” single-layer feed-forward networks (SLFNs) [1, 2, 4, 6, 7]. By adopting the squared loss of the prediction error, ELM can determine the output-layer weights analytically using the Moore-Penrose generalized inverse. Huang et al. [4, 6, 8, 9] have rigorously proved that, in theory, ELM can approximate any continuous function, and have also proved the classification capability of ELM [4]. Moreover, unlike traditional learning algorithms [10], ELM tends to achieve not only the smallest training error but also the smallest norm of output weights, which leads to better generalization performance [3, 7]. Its variants [11,12,13,14,15,16,17,18,19] also focus on regression, classification, and pattern recognition applications.

In recent years, a number of improved versions of ELM have been proposed. A weighted ELM was proposed for binary and multiclass classification tasks with both balanced and imbalanced data distributions [20]. Bai et al. [21] proposed a sparse ELM that reduces storage space and testing time. Huang et al. [22] proposed a semi-supervised ELM for classification, in which manifold regularization with a graph Laplacian is imposed as a constraint, and also explored an unsupervised ELM for clustering. Liu et al. [23,24,25] proposed several methods to address tactile object recognition. More recently, Liu et al. [26] proposed an extreme kernel sparse learning method for tactile object recognition, which combines ELM and kernel dictionary learning.

Data collected at different stages or under different sampling conditions generally follow different distributions, which is known as the cross-domain problem. Traditional ELM assumes that the training and testing data follow similar distributions and therefore cannot address this issue. To handle this problem, domain adaptation has been proposed for heterogeneous data (i.e., the cross-domain problem), leveraging a few labeled instances from another domain with similar semantics [27, 28]. Inspired by domain adaptation, Zhang et al. [29] proposed a domain adaptation ELM (DAELM) for classification across tasks (source domain vs. target domain), which was the first work to study the cross-domain learning mechanism in ELM. However, DAELM was proposed as a cross-domain classifier, and how to learn a shared (common) subspace for the source and target domains has, to our knowledge, never been studied in ELM. Therefore, in this paper, we extend ELM to handle cross-domain problems through transfer learning and subspace learning, and explore its capability in multi-domain applications. Inspired by DAELM, we propose a cross-domain extreme learning machine (CdELM) for common subspace learning. The basic idea of the proposed CdELM method is illustrated in Fig. 1, in which we aim at learning a shared subspace β. Notably, the proposed CdELM differs from DAELM in that it learns a shared subspace for the source and target domains instead of a shared classifier.

Fig. 1 Schematic diagram of the proposed CdELM method. After a subspace projection β, the source domain and target domain, which have different distributions, lie in a latent subspace with good distribution consistency (the centers of both domains become very close and drift is removed). The upper coordinate system shows the raw data points of the source domain and target domain in three dimensions. We use the word “center” to denote the mean of the data in each domain. In the upper figure, the difference between the mean of the source domain and the mean of the target domain is large. After the subspace projection β, shown in the lower figure, the values of d 2 become smaller, which demonstrates that the distribution difference becomes small and that both domains lie in a latent common subspace with good distribution consistency

The remainder of this paper is organized as follows. Section 2 contains a brief review of ELM. Section 3 presents the proposed CdELM method with its detailed model formulation and optimization algorithm. The experiments and results are discussed in Section 4. Finally, Section 5 concludes this paper.

Related Work

Review of ELM

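Briefly, the principle of ELM is described as follows. Let \( X=\left[{x}_1,{x}_2,\dots, {x}_N\right]\in {\Re}^{N\times n} \) denote the training data, where n is the dimensionality and N is the number of training samples, and let \( T=\left[{t}_1,{t}_2,\dots, {t}_N\right]\in {\Re}^{N\times m} \) denote the labels with respect to the data X, where m is the number of classes. The output of the hidden layer is denoted as \( \mathcal{H}\left({x}_i\right)\in {\Re}^{1\times L} \), where L is the number of hidden nodes and ℋ(⋅) is the activation function. The output weights between the hidden layer and the output layer, which are to be learned, are denoted as \( \beta \in {\Re}^{L\times m} \). The regularized ELM aims at minimizing both the training error and the norm of the output weights for better generalization performance, formulated as follows:

\[ \underset{\beta }{\min}\ \frac{1}{2}{\left\Vert \beta \right\Vert}^2+C\cdot \frac{1}{2}\sum_{i=1}^N{\left\Vert {\xi}_i\right\Vert}^2,\quad \mathrm{s.t.}\quad \mathcal{H}\left({x}_i\right)\beta ={t}_i-{\xi}_i,\quad i=1,\dots, N \tag{1} \]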

where \( {\xi}_i \) denotes the prediction error with respect to the ith training pattern \( {x}_i \) and C is a penalty constant on the training errors.

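By substituting the constraint term in (1) into the objective function, an equivalent unconstrained optimization problem can be obtained as follows:

\[ \underset{\beta }{\min}\ \mathcal{L}=\frac{1}{2}{\left\Vert \beta \right\Vert}^2+C\cdot \frac{1}{2}{\left\Vert T- H\beta \right\Vert}^2 \tag{2} \]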

where \( H=\left[\mathcal{H}\left({x}_1\right);\mathcal{H}\left({x}_2\right);\dots; \mathcal{H}\left({x}_N\right)\right]\in {\Re}^{N\times L} \).

The minimization problem (2) is a regularized least squares problem. By setting the gradient of ℒ with respect to β to zero, we obtain the closed-form solution of β. There are two cases when solving for β. First, if N is larger than L, the gradient equation is over-determined, and the closed-form solution can be obtained as

\[ {\beta}^{\ast }={\left({H}^T H+\frac{I}{C}\right)}^{-1}{H}^T T \tag{3} \]

where I denotes the identity matrix of size L.

Second, if the number N of training samples is smaller than L, an under-determined least squares problem has to be handled. In this case, the solution of (1) can be obtained as

\[ {\beta}^{\ast }={H}^T{\left( H{H}^T+\frac{I}{C}\right)}^{-1} T \tag{4} \]

where I denotes the identity matrix of size N.

Therefore, for a classification problem, β can be computed using Eq. (3) or Eq. (4), depending on whether N or L is larger. We refer interested readers to [3] for more details on ELM theory and algorithms.
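As a minimal illustration of the two closed-form cases described above, the following NumPy sketch computes the output weights with whichever form requires the smaller matrix inverse. The sigmoid activation, the synthetic data, the value of C, and the function names `elm_hidden` and `elm_output_weights` are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def elm_hidden(X, W, b):
    """Hidden-layer output H (N x L) for row-sample data X (N x n), sigmoid activation."""
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))

def elm_output_weights(H, T, C=1.0):
    """Regularized least-squares output weights beta (L x m)."""
    N, L = H.shape
    if N >= L:
        # Over-determined case: solve with an L x L system.
        return np.linalg.solve(H.T @ H + np.eye(L) / C, H.T @ T)
    # Under-determined case: solve with an N x N system.
    return H.T @ np.linalg.solve(H @ H.T + np.eye(N) / C, T)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 6))           # N = 50 samples, n = 6 features (synthetic)
labels = rng.integers(0, 3, size=50)   # m = 3 classes (synthetic)
T = np.eye(3)[labels]                  # one-hot label matrix, N x m
W = rng.normal(size=(6, 20))           # random input weights, n x L
b = rng.normal(size=(1, 20))           # random biases, 1 x L

H = elm_hidden(X, W, b)
beta = elm_output_weights(H, T, C=10.0)
predicted = np.argmax(H @ beta, axis=1)  # predicted class index per training sample
```

Both branches give the same β in exact arithmetic; the branch only decides which of the two matrix sizes is inverted.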

Subspace Learning

Subspace learning aims at learning a low-dimensional subspace. Common methods include principal component analysis (PCA) [30], linear discriminant analysis (LDA) [31], and manifold learning-based locality preserving projections (LPP) [32]. All of these methods assume that the data distribution is consistent, so they are only applicable to a single domain. However, this assumption is often violated in real-world applications. Therefore, for heterogeneous data, we propose a new cross-domain learning method for learning a shared subspace, called the cross-domain extreme learning machine.

The Proposed CdELM Method

Notations

In this paper, the source domain and target domain are denoted by the subscripts S and T, respectively. The training data of the source and target domains are denoted as \( {X}_S=\left[{x}_S^1,\dots, {x}_S^{N_S}\right]\in {\Re}^{D\times {N}_S} \) and \( {X}_T=\left[{x}_T^1,\dots, {x}_T^{N_T}\right]\in {\Re}^{D\times {N}_T} \), respectively, where D is the number of dimensions, and \( {N}_S \) and \( {N}_T \) are the numbers of training samples in the two domains. Let \( \beta \in {\Re}^{L\times d} \) represent the basis transformation that maps the ELM space of the source and target data to a subspace of dimension d. \( {\left\Vert \cdot \right\Vert}_F \) and \( {\left\Vert \cdot \right\Vert}_2 \) denote the Frobenius norm and the \( {l}_2 \)-norm, respectively. Tr(∙) denotes the trace operator and \( {\left(\cdot \right)}^T \) denotes the transpose operator. Throughout this paper, matrices are written in capital boldface, vectors in lowercase boldface, and variables in italics.

The Proposed Method

As illustrated in Fig. 1, the distributions of the source domain and the target domain differ. Therefore, the performance of a classifier learned on the source domain degrades dramatically on the target domain. Inspired by subspace learning and ELM, the main idea of the proposed CdELM is to learn a shared subspace β in the ELM space rather than a classifier β, so that the source domain and target domain share a similar feature distribution after the latent projection β.

First, the source data and target data are mapped into the ELM space, yielding \( {H}_S=\left[{h}_S^1,\dots, {h}_S^{N_S}\right]\in {\Re}^{L\times {N}_S} \) and \( {H}_T=\left[{h}_T^1,\dots, {h}_T^{N_T}\right]\in {\Re}^{L\times {N}_T} \), where \( {h}_S^i= g\left({W}^T{x}_S^i+{b}^T\right) \) and \( {h}_T^j= g\left({W}^T{x}_T^j+{b}^T\right) \) are the output (column) vectors of the hidden layer with respect to the inputs \( {x}_S^i \) and \( {x}_T^j \), respectively, \( i=1,2,\dots, {N}_S \), \( j=1,2,\dots, {N}_T \), g(∙) is an activation function, L is the number of randomly generated hidden nodes, and \( W\in {\Re}^{D\times L} \) and \( b\in {\Re}^{1\times L} \) are the randomly generated input weights and biases.
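The mapping into the ELM space can be sketched in a few lines of NumPy using the column-sample convention of the notation above. The `tanh` activation, the uniform sampling range for W and b, the synthetic inputs, and the function name `elm_feature_map` are assumptions for illustration only; the key point is that the same random W and b are applied to both domains.

```python
import numpy as np

def elm_feature_map(X, W, b, g=np.tanh):
    """Map column-sample data X (D x N) to the ELM space H (L x N)."""
    # b has shape (1, L); b.T broadcasts as an (L, 1) column over all N samples.
    return g(W.T @ X + b.T)

rng = np.random.default_rng(0)
D, L, N_S, N_T = 6, 100, 40, 30
W = rng.uniform(-1.0, 1.0, size=(D, L))    # shared random input weights
b = rng.uniform(-1.0, 1.0, size=(1, L))    # shared random biases

X_S = rng.normal(size=(D, N_S))            # synthetic source data
X_T = rng.normal(loc=0.5, size=(D, N_T))   # synthetic target data with a mean shift

H_S = elm_feature_map(X_S, W, b)           # L x N_S
H_T = elm_feature_map(X_T, W, b)           # L x N_T
```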

In the learned subspace β, we expect not only that the distributions of the source domain and target domain are consistent, but also that the discrimination is improved for recognition. Inspired by linear discriminant analysis (LDA), we aim at minimizing the intra-class scatter matrix \( {S}_W^S \) and simultaneously maximizing the inter-class scatter matrix \( {S}_B^S \) of the source data, such that separability is preserved in the learned linear subspace. Therefore, for the source domain, it is rational to maximize the following term

\[ \underset{\beta }{\max}\ \frac{\mathrm{Tr}\left({\beta}^T{S}_B^S\beta \right)}{\mathrm{Tr}\left({\beta}^T{S}_W^S\beta \right)} \tag{5} \]

where the inter-class scatter matrix and intra-class scatter matrix are computed as \( {S}_B^S=\sum_{c=1}^C\left({\mu}_S^c-{\mu}_S\right){\left({\mu}_S^c-{\mu}_S\right)}^T \) and \( {S}_W^S=\sum_{c=1}^C\sum_{k=1,{h}_S^k\in {G}_c}^{n_c}\left({h}_S^k-{\mu}_S^c\right){\left({h}_S^k-{\mu}_S^c\right)}^T \). Here \( {\mu}_S \) represents the center of the source data, \( {\mu}_S^c \) represents the center of class c of the source data in the ELM space, C represents the number of categories, \( {G}_c \) represents the set of samples belonging to class c, and \( {n}_c \) represents the number of samples in class c.
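To make the two scatter matrices concrete, the following NumPy sketch accumulates them class by class from column-sample features. It follows the definitions in the text, including the unweighted sum over class centers in the inter-class term; the function name `scatter_matrices` and the synthetic data are assumptions for illustration.

```python
import numpy as np

def scatter_matrices(H, y):
    """Return (S_B, S_W) for column-sample features H (L x N) and labels y (N,)."""
    L = H.shape[0]
    mu = H.mean(axis=1, keepdims=True)            # overall center
    S_B = np.zeros((L, L))
    S_W = np.zeros((L, L))
    for c in np.unique(y):
        H_c = H[:, y == c]                        # samples of class c
        mu_c = H_c.mean(axis=1, keepdims=True)    # class center
        S_B += (mu_c - mu) @ (mu_c - mu).T        # inter-class term (unweighted)
        S_W += (H_c - mu_c) @ (H_c - mu_c).T      # intra-class term
    return S_B, S_W

rng = np.random.default_rng(0)
H_S = rng.normal(size=(8, 60))                    # synthetic ELM features, L = 8
y_S = np.arange(60) % 6                           # six synthetic classes
S_B, S_W = scatter_matrices(H_S, y_S)
```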

When learning a subspace β that maximizes (5), we should also ensure that the projection does not distort the data from the target domain, so that as much useful information as possible is kept in the new subspace representation. Therefore, it is rational to maximize the following term:

\[ \underset{\beta }{\max}\ \mathrm{Tr}\left({\beta}^T{H}_T{H}_T^T\beta \right) \tag{6} \]

Naturally, after projection by β, the feature distributions of the mapped source domain \( {H}_S=\left[{h}_S^1,\dots, {h}_S^{N_S}\right]\in {\Re}^{L\times {N}_S} \) and target domain \( {H}_T=\left[{h}_T^1,\dots, {h}_T^{N_T}\right]\in {\Re}^{L\times {N}_T} \) should become similar. It is therefore rational to minimize the mean distribution discrepancy (MDD) between \( {H}_S \) and \( {H}_T \); that is, the distance between the centers of the two projected domains should be minimized. The MDD minimization is formulated as

\[ \underset{\beta }{\min}\ {\left\Vert \frac{1}{N_S}\sum_{i=1}^{N_S}{\beta}^T{h}_S^i-\frac{1}{N_T}\sum_{j=1}^{N_T}{\beta}^T{h}_T^j\right\Vert}_2^2 \tag{7} \]
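The MDD term can be checked numerically with a short NumPy sketch. It computes the squared distance between the projected domain centers and compares it with the equivalent trace form built from the outer product of the center difference, which is the form that appears in the matrix A later in the paper. The function name `mdd`, the random β, and the synthetic features are assumptions for illustration.

```python
import numpy as np

def mdd(beta, H_S, H_T):
    """Squared distance between the projected centers of the two domains."""
    mu_S = H_S.mean(axis=1, keepdims=True)
    mu_T = H_T.mean(axis=1, keepdims=True)
    gap = beta.T @ (mu_S - mu_T)                 # d x 1 difference of projected centers
    return float(np.sum(gap ** 2))

rng = np.random.default_rng(0)
L, d = 8, 3
H_S = rng.normal(size=(L, 40))                   # synthetic source features
H_T = rng.normal(loc=0.5, size=(L, 30))          # synthetic target features with a shift
beta = rng.normal(size=(L, d))                   # arbitrary projection for the check

diff = H_S.mean(axis=1, keepdims=True) - H_T.mean(axis=1, keepdims=True)
trace_form = float(np.trace(beta.T @ (diff @ diff.T) @ beta))
direct_form = mdd(beta, H_S, H_T)                # agrees with trace_form up to rounding
```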

Following the merits of ELM, we also expect the norm of β to be minimized,

\[ \underset{\beta }{\min}\ {\left\Vert \beta \right\Vert}_F^2 \tag{8} \]

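Having described the four parts of the proposed CdELM model, we combine Eq. (5) to Eq. (8) and formulate the complete CdELM model as follows

\[ \underset{\beta }{\min}\ \frac{\mathrm{Tr}\left({\beta}^T{S}_W^S\beta \right)+{\lambda}_0{\left\Vert \beta \right\Vert}_F^2+{\lambda}_1{\left\Vert \frac{1}{N_S}\sum_{i=1}^{N_S}{\beta}^T{h}_S^i-\frac{1}{N_T}\sum_{j=1}^{N_T}{\beta}^T{h}_T^j\right\Vert}_2^2}{\mathrm{Tr}\left({\beta}^T{S}_B^S\beta \right)+{\lambda}_2\mathrm{Tr}\left({\beta}^T{H}_T{H}_T^T\beta \right)} \tag{9} \]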

where \( {\lambda}_0 \), \( {\lambda}_1 \), and \( {\lambda}_2 \) denote the trade-off parameters.

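Let \( {\mu}_S=\frac{1}{N_S}\sum_{i=1}^{N_S}{h}_S^i \) and \( {\mu}_T=\frac{1}{N_T}\sum_{j=1}^{N_T}{h}_T^j \) be the centers of the source domain and target domain in the ELM space; then the minimization problem in Eq. (9) can finally be written as

\[ \underset{\beta }{\min}\ \frac{\mathrm{Tr}\left({\beta}^T\left({S}_W^S+{\lambda}_0 I+{\lambda}_1\left({\mu}_S-{\mu}_T\right){\left({\mu}_S-{\mu}_T\right)}^T\right)\beta \right)}{\mathrm{Tr}\left({\beta}^T\left({S}_B^S+{\lambda}_2{H}_T{H}_T^T\right)\beta \right)} \tag{10} \]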

where I is an identity matrix of size L.

Model Optimization

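The minimization problem in Eq. (10) has many possible solutions for β (i.e., the solution is not unique). To guarantee uniqueness, we impose an equality constraint on the optimization problem, and Eq. (10) can then be written as

\[ \underset{\beta }{\min}\ \mathrm{Tr}\left({\beta}^T\left({S}_W^S+{\lambda}_0 I+{\lambda}_1\left({\mu}_S-{\mu}_T\right){\left({\mu}_S-{\mu}_T\right)}^T\right)\beta \right),\quad \mathrm{s.t.}\quad \mathrm{Tr}\left({\beta}^T\left({S}_B^S+{\lambda}_2{H}_T{H}_T^T\right)\beta \right)=\eta \tag{11} \]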

where η is a positive constant.

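To solve Eq. (11), the Lagrangian is written as

\[ \mathcal{L}\left(\beta, \rho \right)=\mathrm{Tr}\left({\beta}^T\left({S}_W^S+{\lambda}_0 I+{\lambda}_1\left({\mu}_S-{\mu}_T\right){\left({\mu}_S-{\mu}_T\right)}^T\right)\beta \right)-\rho \left(\mathrm{Tr}\left({\beta}^T\left({S}_B^S+{\lambda}_2{H}_T{H}_T^T\right)\beta \right)-\eta \right) \tag{12} \]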

where ρ denotes the Lagrange multiplier coefficient.

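By setting the partial derivative of ℒ(β, ρ) with respect to β to zero, we have

\[ \left({S}_W^S+{\lambda}_0 I+{\lambda}_1\left({\mu}_S-{\mu}_T\right){\left({\mu}_S-{\mu}_T\right)}^T\right)\beta -\rho \left({S}_B^S+{\lambda}_2{H}_T{H}_T^T\right)\beta =0 \tag{13} \]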

From Eq. (13), we can observe that β can be obtained by solving the following eigenvalue decomposition problem,

\[ A\beta =\rho \beta \tag{14} \]

where \( A={\left({S}_B^S+{\lambda}_2{H}_T{H}_T^T\right)}^{-1}\left({S}_W^S+{\lambda}_0 I+{\lambda}_1\left({\mu}_S-{\mu}_T\right){\left({\mu}_S-{\mu}_T\right)}^T\right) \) and ρ denotes the eigenvalues.

From (14), it is clear that the columns of β are eigenvectors of A. Because model (11) is a minimization problem, the optimal subspace is spanned by the eigenvectors corresponding to the d smallest eigenvalues \( \left[{\rho}_1,\dots, {\rho}_d\right] \), represented by

\[ {\beta}^{\ast }=\left[{\beta}_1,{\beta}_2,\dots, {\beta}_d\right] \tag{15} \]

For easy implementation, the proposed algorithm is summarized in Algorithm 1.
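The optimization steps described in this section can be sketched end to end in NumPy: build the two matrices that make up A, solve the eigenvalue problem, and keep the eigenvectors of the d smallest eigenvalues. This is an illustrative sketch under stated assumptions, not the authors' Algorithm 1: the `tanh` activation, the synthetic data, the parameter values, and the names `cdelm_matrices` and `cdelm_subspace` are all hypothetical, and the code assumes that the matrix being inverted is nonsingular (which holds here because L is small relative to the number of target samples).

```python
import numpy as np

def cdelm_matrices(H_S, y_S, H_T, lam0, lam1, lam2):
    """Return (M, B) such that A = B^{-1} M, following the definition of A in the text."""
    L = H_S.shape[0]
    mu_S = H_S.mean(axis=1, keepdims=True)
    mu_T = H_T.mean(axis=1, keepdims=True)
    S_B = np.zeros((L, L))
    S_W = np.zeros((L, L))
    for c in np.unique(y_S):
        H_c = H_S[:, y_S == c]
        mu_c = H_c.mean(axis=1, keepdims=True)
        S_B += (mu_c - mu_S) @ (mu_c - mu_S).T
        S_W += (H_c - mu_c) @ (H_c - mu_c).T
    diff = mu_S - mu_T
    M = S_W + lam0 * np.eye(L) + lam1 * (diff @ diff.T)
    B = S_B + lam2 * (H_T @ H_T.T)
    return M, B

def cdelm_subspace(M, B, d):
    """Eigenvectors of A = B^{-1} M for the d smallest eigenvalues (assumes B is invertible)."""
    A = np.linalg.solve(B, M)
    eigvals, eigvecs = np.linalg.eig(A)
    order = np.argsort(eigvals.real)[:d]          # indices of the d smallest eigenvalues
    return eigvecs[:, order].real, eigvals.real[order]

rng = np.random.default_rng(0)
D, L, d = 6, 10, 3
W = rng.uniform(-1.0, 1.0, size=(D, L))           # shared random input weights
b = rng.uniform(-1.0, 1.0, size=(1, L))           # shared random biases
X_S = rng.normal(size=(D, 120))                   # synthetic source data
y_S = np.arange(120) % 6                          # six synthetic classes
X_T = rng.normal(loc=0.3, size=(D, 100))          # synthetic, shifted target data
H_S = np.tanh(W.T @ X_S + b.T)
H_T = np.tanh(W.T @ X_T + b.T)

M, B = cdelm_matrices(H_S, y_S, H_T, lam0=1.0, lam1=1.0, lam2=1.0)
beta, rho = cdelm_subspace(M, B, d)
Z_S = beta.T @ H_S                                # projected source features, d x N_S
Z_T = beta.T @ H_T                                # projected target features, d x N_T
```

The projected features `Z_S` and `Z_T` would then be passed to any classifier (ELM, SVM, nearest neighbor), in line with the framework's stated design. When L exceeds the number of target samples plus the number of classes, the inverted matrix becomes rank deficient and some form of regularization or pseudo-inverse would be needed; the paper does not specify how this case is handled.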

Experiments

Data Description

We validate the proposed method on our own E-nose dataset, which includes three subsets: master data (collected 5 years ago), slave 1 data (collected now), and slave 2 data (collected now). The master and the two slave E-nose systems used for data acquisition were developed in [33]. Each system consists of four TGS series sensors and an extra temperature and humidity module. Therefore, the dimension of each sample is 6. The dataset covers six kinds of gaseous contaminants (i.e., six classes): formaldehyde, benzene, toluene, carbon monoxide, nitrogen dioxide, and ammonia. A detailed description of the dataset is given in Table 1. To visualize the heterogeneous E-nose data, the PCA scatter plots of the master data, slave 1 data, and slave 2 data are shown in Fig. 2, in which the points from different classes overlap.

Experimental Settings

The master data collected 5 years ago are used as the source domain data (no drift). The slave 1 and slave 2 data collected now are used as the target domain data (with drift). We conduct the experiments under the following two settings.

- Setting 1. During CdELM training and classifier learning, the labels of the target domain data are unavailable, and only the source labels are used. The classification accuracy on slave 1 data (or slave 2 data) is reported.

- Setting 2. The only difference between Setting 1 and Setting 2 is that, in classifier training, partial labeled data of target domain can be used. Specifically, for each class in the target domain, k labeled samples can be used for classifier learning, where the values k = 1, 3, 5, 7, and 9 are discussed in this paper.
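Setting 2 requires choosing k labeled target samples per class. The sketch below shows one way this could be done, assuming the samples are drawn uniformly at random without replacement; the paper does not state how the k samples are selected, so the random selection, the function name `sample_k_per_class`, and the synthetic label vector are assumptions.

```python
import numpy as np

def sample_k_per_class(y, k, rng):
    """Return indices of k randomly chosen samples from each class in y."""
    picked = []
    for c in np.unique(y):
        idx = np.flatnonzero(y == c)                       # all samples of class c
        picked.append(rng.choice(idx, size=k, replace=False))
    return np.concatenate(picked)

rng = np.random.default_rng(0)
y_T = np.repeat(np.arange(6), 20)      # synthetic target labels: 6 classes, 20 samples each

labeled_sets = {k: sample_k_per_class(y_T, k, rng) for k in (1, 3, 5, 7, 9)}
```

Each entry of `labeled_sets` holds the target indices whose labels would be made available to the classifier; the remaining target samples would be used for testing.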

Compared Methods

To show the effectiveness of the proposed method, we have chosen 12 machine learning methods for comparison. First, three baseline methods are compared: support vector machine (SVM), principal component analysis (PCA), and linear discriminant analysis (LDA). Second, five semi-supervised learning methods based on manifold learning are explored and compared: locality preserving projections (LPP) [32], multidimensional scaling (MDS) [34], neighborhood component analysis (NCA) [35], neighborhood preserving embedding (NPE) [36], and local Fisher discriminant analysis (LFDA) [37]. Finally, the popular subspace transfer learning method, sampling geodesic flow (SGF) [38], is also explored and compared.

Results

In this section, the experimental results on each setting are reported to validate the performance of the proposed CdELM method. Under each setting, two tasks including master → slave 1 and master → slave 2 are conducted.

Under Setting 1, we first observe the qualitative result of applying the proposed CdELM method to master → slave 1 and master → slave 2, respectively. The result is shown in Fig. 3, in which the separability among data points from different classes (represented by different symbols) is much improved in the learned common subspace compared with Fig. 2. Further, the odor classification accuracy on the target domain data is presented in Table 2. From the results, we can observe that the proposed CdELM achieves the highest accuracy on both tasks. The best performance of CdELM is achieved when the activation function in the hidden layer is Gaussian (RBF function). This demonstrates that the proposed CdELM performs well in cross-domain pattern recognition scenarios.

Under Setting 2, k labeled samples for each class in the target domain are leveraged for classifier learning. The recognition accuracy of the first task (i.e., master → slave 1) is reported in Table 3 and that of the second task (i.e., master → slave 2) is reported in Table 4. Notably, all the compared methods follow the same setting conditions. From Tables 3 and 4, we can observe that the proposed CdELM still outperforms the other methods. Therefore, we confirm that the proposed method is effective in handling heterogeneous measurement data.

Parameter Sensitivity Analysis

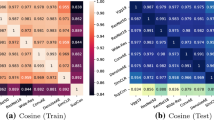

In the proposed CdELM model, there are three parameters: \( {\lambda}_0 \), \( {\lambda}_1 \), and d. We focus on observing the performance variations when tuning \( {\lambda}_0 \) and \( {\lambda}_1 \) over the values \( {10}^t \), where \( t\in \left\{-6,-4,-2,0,2,4,6\right\} \). To show the performance with respect to each parameter, one parameter is tuned while the other is fixed. The results of tuning \( {\lambda}_1 \) with \( {\lambda}_0 \) fixed are shown in Fig. 4, and the results of tuning \( {\lambda}_0 \) with \( {\lambda}_1 \) fixed are shown in Fig. 5, from which the best values of \( {\lambda}_0 \) and \( {\lambda}_1 \) can be identified. Further, we tune the subspace dimensionality d over the set d = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10} with the other model parameters fixed, and the result is shown in Fig. 6.
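The one-at-a-time tuning protocol described above can be sketched as follows. The `score` argument stands for any user-supplied function that trains and evaluates the model for a given pair of trade-off parameters; here it is replaced by a toy function so that the sketch runs on its own. The function name `sweep_one_at_a_time`, the fixed values, and the toy score are assumptions and do not reproduce any result from the paper.

```python
import math

def sweep_one_at_a_time(score, fixed_lam0=1.0, fixed_lam1=1.0):
    """Tune lam1 with lam0 fixed, then lam0 with lam1 fixed, over the grid 10^t."""
    grid = [10.0 ** t for t in (-6, -4, -2, 0, 2, 4, 6)]
    lam1_curve = [(lam1, score(fixed_lam0, lam1)) for lam1 in grid]
    lam0_curve = [(lam0, score(lam0, fixed_lam1)) for lam0 in grid]
    return lam0_curve, lam1_curve

def toy_score(lam0, lam1):
    """Placeholder for a real accuracy measurement; peaks at lam0 = 1 and lam1 = 100."""
    return -abs(math.log10(lam0)) - abs(math.log10(lam1) - 2.0)

lam0_curve, lam1_curve = sweep_one_at_a_time(toy_score)
best_lam0 = max(lam0_curve, key=lambda pair: pair[1])[0]
best_lam1 = max(lam1_curve, key=lambda pair: pair[1])[0]
```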

Conclusion

In this paper, we present a cross-domain common subspace learning approach for heterogeneous data classification, called the cross-domain extreme learning machine (CdELM). The method is motivated by subspace learning, domain adaptation, and the cognitive-based extreme learning machine, so that the advantages of ELM, such as good generalization, are inherited. Traditional ELM assumes that the training data and testing data follow similar distributions; once this assumption is violated in multi-domain scenarios, ELM may no longer be applicable. The aim of this paper is to bring a new perspective to ELM in multi-domain subspace learning scenarios. Experiments on E-nose data demonstrate that the proposed method outperforms the compared methods.

References

Huang GB. An insight into extreme learning machines: random neurons, random features and kernels. Cognit Comput. 2014;6(3):376–90.

Huang GB. What are extreme learning machines? Filling the gap between Frank Rosenblatt's dream and John von Neumann's puzzle. Cognit Comput. 2015;7(3):263–78.

Huang GB, Zhu QY, Siew CK. Extreme learning machine: theory and applications. Neurocomputing. 2006;70(1–3):489–501.

Huang GB, Zhou H, Ding X, Zhang R. Extreme learning machine for regression and multiclass classification. IEEE Trans Syst Man Cybern B Cybern. 2012;42(2):513–29.

Cambria E. Extreme learning machines [trends & controversies]. IEEE Intell Syst. 2013;28(6):30–59.

Huang GB, Chen L, Siew CK. Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans Neural Netw. 2006;17(4):879–92.

Huang GB, Zhu QY, Siew CK. Extreme learning machine: a new learning scheme of feedforward neural networks. In: Proc. IEEE Int Joint Conf Neural Netw. vol. 2. pp. 985–990, 2004.

Huang GB, Chen L. Convex incremental extreme learning machine. Neurocomputing. 2007;70(16–18):3056–62.

Huang GB, Chen L. Enhanced random search based incremental extreme learning machine. Neurocomputing. 2008;71(16–18):3460–8.

Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature. 1986;323:533–6.

Huang GB, Zhu QY, Mao KZ, Siew CK, Saratchandran P. Can threshold networks be trained directly? IEEE Trans Circ Syst II Exp Briefs. 2006;53(3):187–91.

Liang NY, Huang GB, Saratchandran P, Sundararajan N. A fast and accurate on-line sequential learning algorithm for feedforward networks. IEEE Trans Neural Netw. 2006;17(6):1411–23.

Li MB, Huang GB, Saratchandran P, Sundararajan N. Fully complex extreme learning machine. Neurocomputing. 2005;68:306–14.

Feng G, Huang GB, Lin Q, Gay R. Error minimized extreme learning machine with growth of hidden nodes and incremental learning. IEEE Trans Neural Netw. 2009;20(8):1352–7.

Rong HJ, Huang GB, Sundararajan N, Saratchandran P. Online sequential fuzzy extreme learning machine for function approximation and classification problems. IEEE Trans Syst Man Cybern B Cybern. 2009;39(4):1067–72.

Huang Z, Yu Y, Gu J and Liu H. An efficient method for traffic sign recognition based on extreme learning machine. IEEE Trans Cybern, Appear Online, 2016.

Yu Y, Sun Z. Sparse coding extreme learning machine for classification. Neurocomputing, accepted, 2016.

Zhang L, Zhang D. Evolutionary cost-sensitive extreme learning machine. IEEE Trans Neural Netw Learn Syst. 2016; doi:10.1109/TNNLS.2016.2607757.

Zhang L, Zhang D. Robust visual knowledge transfer via extreme learning machine-based domain adaptation. IEEE Trans Image Process. 2016;25(10):4959–73.

Zong W, Huang GB, Chen Y. Weighted extreme learning machine for imbalance learning. Neurocomputing. 2013;101:229–42.

Bai Z, Huang GB, Wang D, Wang H, Westover MB. Sparse extreme learning machine for classification. IEEE Trans Cybern. 2014;44(10):1858–70.

Huang GB, Song S, Gupta JND, Wu C. Semi-supervised and unsupervised extreme learning machines. IEEE Trans. Cybern. 2014; doi:10.1109/TCYB.2014.2307349.

Liu H, Yu Y, Sun F, et al. Visual-tactile fusion for object recognition. IEEE Trans Autom Sci Eng. 2016;14:1–13.

Liu H, Guo D, Sun F. Object recognition using tactile measurements: kernel sparse coding methods. IEEE Trans Instrum Meas. 2016;65(3):1–10.

Liu H, Liu Y, Sun F. Robust exemplar extraction using structured sparse coding. IEEE Trans Neural Netw Learn Syst. 2014;26(8):1816–21.

Liu H, Qin J, Sun F, et al. Extreme kernel sparse learning for tactile object recognition. IEEE Trans Cybern. 2016.

Pan SJ, Tsang IW, Kwok JT, Yang Q. Domain adaptation via transfer component analysis. IEEE Trans Neural Netw. 2011;22(2):199–210.

Zhang L, Zuo W, Zhang D. LSDT: latent sparse domain transfer learning for visual adaptation. IEEE Trans Image Process. 2016;25(3):1177–91.

Zhang L, Zhang D. Domain adaptation extreme learning machines for drift compensation in e-nose systems. IEEE Trans Instrum Meas. 2015;64(7):1790–801.

Turk M, Pentland A. Eigenfaces for recognition. J Cogn NeuroSci. 1991;3(1):71–86.

Belhumeur PN, Hespanha J, Kriegman DJ. Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell. 1997;19(7):711–20.

He X, Niyogi P. Locality preserving projections. NIPS, 2004.

Zhang L, Tian F. Performance study of multilayer perceptrons in a low-cost electronic nose. IEEE Trans Instrum Meas. 2014;63(7):1670–9.

Torgerson WS. Multidimensional scaling: I. Theory and method. Psychometrika. 1952;17(4):401–19.

Goldberger J, Roweis S, Hinton G, Salakhutdinov R. Neighborhood component analysis. NIPS, 2004.

He X, Cai D, Yan S, and Zhang HJ. Neighborhood preserving embedding. ICCV, 2005.

Sugiyama M. Local fisher discriminant analysis for supervised dimensionality reduction. ICML, pp. 905–912, 2006.

Gopalan R, Li R, and Chellappa R. Domain adaptation for object recognition: an unsupervised approach. ICCV, 2011.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant 61401048, the Fundamental Research Funds for the Central Universities, and Chongqing University Postgraduates’ Innovation Project (No.CYB15030).

Ethics declarations

Conflict of Interest

Yan Liu, Lei Zhang, Pingling Deng, and Zheng He declare that they have no conflict of interest.

Informed Consent

Informed consent was not required as no human or animals were involved.

Human and Animal Rights

This article does not contain any studies with human or animal subjects performed by any of the authors.

Cite this article

Liu, Y., Zhang, L., Deng, P. et al. Common Subspace Learning via Cross-Domain Extreme Learning Machine. Cogn Comput 9, 555–563 (2017). https://doi.org/10.1007/s12559-017-9473-5

DOI: https://doi.org/10.1007/s12559-017-9473-5