Abstract

Background

Big social data analysis is the area of research focusing on collecting, examining, and processing large multi-modal and multi-source datasets in order to discover patterns/correlations and extract information from the Social Web. This is usually accomplished through the use of supervised and unsupervised machine learning algorithms that learn from the available data. However, these are usually highly computationally expensive, either in the training or in the prediction phase, as they are often not able to handle current data volumes. Parallel approaches have been proposed in order to boost processing speeds, but this clearly requires technologies that support distributed computations.

Methods

Extreme learning machines (ELMs) are an emerging learning paradigm, presenting an efficient unified solution to generalized feed-forward neural networks. ELM offers significant advantages such as fast learning speed, ease of implementation, and minimal human intervention. However, ELM cannot be easily parallelized, due to the presence of a pseudo-inverse calculation. Therefore, this paper aims to find a reliable method to realize a parallel implementation of ELM that can be applied to large datasets typical of Big Data problems with the employment of the most recent technology for parallel in-memory computation, i.e., Spark, designed to efficiently deal with iterative procedures that recursively perform operations over the same data. Moreover, this paper shows how to take advantage of the most recent advances in statistical learning theory (SLT) in order to address the issue of selecting ELM hyperparameters that give the best generalization performance. This involves assessing the performance of such algorithms (i.e., resampling methods and in-sample methods) by exploiting the most recent results in SLT and adapting them to the Big Data framework. The proposed approach has been tested on two affective analogical reasoning datasets. Affective analogical reasoning can be defined as the intrinsically human capacity to interpret the cognitive and affective information associated with natural language. In particular, we employed two benchmarks, each one composed by 21,743 common-sense concepts; each concept is represented according to two models of a semantic network in which common-sense concepts are linked to a hierarchy of affective domain labels.

Results

The labeled data have been split into two sets: The first 20,000 samples have been used for building the model with the ELM with the different SLT strategies, while the rest of the labeled samples, numbering 1743, have been kept apart as reference set in order to test the performance of the learned model. The splitting process has been repeated 30 times in order to obtain statistically relevant results. We ran the experiments through the use of the Google Cloud Platform, in particular, the Google Compute Engine. We employed the Google Compute Engine Platform with NM = 4 machines with two cores and 1.8 GB of RAM (machine type n1-highcpu-2) and an HDD of 30 GB equipped with Spark. Results on the affective dataset both show the effectiveness of the proposed parallel approach and underline the most suitable SLT strategies for the specific Big Data problem.

Conclusion

In this paper we showed how to build an ELM model with a novel scalable approach and to carefully assess the performance, with the use of the most recent results from SLT, for a sentiment analysis problem. Thanks to recent technologies and methods, the computational requirements of these methods have been improved to allow for the scaling to large datasets, which are typical of Big Data applications.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

The advent of social networks, web communities, blogs, Wikipedia, and other online collaborative media has deeply changed the ways people express their opinions and sentiments. A growing amount of content and ideas are continuously expressed by the millions of people connected to the World Wide Web. As a major consequence, the distillation of knowledge from this huge quantity of unstructured information can be a key tool for marketers who want to create a brand or product image and identity in the minds of their customers. Such a scenario has led to the emerging fields of opinion mining and sentiment analysis [1–4], which deal with information retrieval and knowledge discovery from text using data mining and natural language processing (NLP) techniques [5–8]. However, mining opinions and sentiments from natural language is an extremely difficult task as it involves a deep and broad understanding of the explicit and implicit, regular and irregular, syntactical and semantic rules proper of a language.

Sentic computing [9] tackles these crucial issues by exploiting affective common-sense reasoning, i.e., the intrinsically human capacity to interpret cognitive and affective information associated with natural language, and thus differs from standard statistical approaches to big social data analysis. Common-sense computing techniques are applied in different contexts (including multi-modality [10], handwriting recognition [11], e-health [12], and more) to bridge the semantic gap between word-level natural language data and the concept-level opinions conveyed by these. To achieve this goal, the sentic computing framework takes advantage of AffectNet [13], a semantic network in which common-sense concepts are linked to a hierarchy of affective domain labels. In particular, the vector space representation of one such semantic network, termed AffectiveSpace [14], enables affective analogical reasoning on natural language concepts.

Current research shows that the emerging field of big social data analysis [15–18] can take advantage of inductive learning systems to support emotion recognition in natural language text. In this context, every common-sense concept is represented according to AffectiveSpace and defined by four affective dimensions [19]: Pleasantness, Attention, Sensitivity, and Aptitude. This representation leads to a further polarity detection task. The current emotion recognition problem is complicated by the fact that labeling all the common-sense concepts of AffectiveSpace is often difficult, expensive, and time-consuming. Therefore, affective dimensions labeling is only available for a set of concepts. The need to properly tackle these issues leads to the use of a semi-supervised classifier. Eventually, a semi-supervised version of the extreme learning machine (ELM) framework [20–23] is adopted.

The interest in semi-supervised learning [4, 24–26] has recently increased, especially because application domains exist (e.g., text mining, natural language processing, image and video retrieval, bioinformatics). In this context, semi-supervised learning can be formalized as a supervised learning problem biased by an unsupervised reference solution. First, a general biased-regularization scheme that encompasses the biased version of ELM is introduced. Then, a semi-supervised learning model based on the biased-regularization [27] scheme follows a two-step procedure. In the first step, an unsupervised clustering of the whole dataset (including both labeled and unlabeled data) obtains a reference solution. As a result, the eventual semi-supervised classification framework can derive a reference function from any clustering algorithm, thus providing remarkable flexibility. In the second step, the clustering outcomes drive the learning process in a biased-regularization ELM to acquire the class information provided by labels. The ultimate result is that the overall learned function exploits both labeled and unlabeled data. The integrated framework applies to both linear and nonlinear data distributions: In the former, one works under a cluster assumption on data; in the latter, one works under a manifold hypothesis [28].

In this paper, we want to address the problem of assessing the performance of a predictive model, i.e., the semi-supervised version of ELM, and quantify its uncertainty. Similar problems have been addressed in the field of statistical inference since the last century [29]. The now classic approach of parametric statistics identifies a family of models (e.g., linear functions) and a noise assumption (e.g., Gaussian). Given some data, it easily provides an assessment of the performance of the fitted model, along with a quantification of the uncertainty or, in modern terms, an estimation of the generalization error and the related confidence intervalFootnote 1. On the contrary, data-driven models exploit nonparametric inference, where it is expected that an effective model would stem out directly from the data, without any assumption on the model family nor any other information that is external to the dataset itself [31, 32].

Statistical learning theory (SLT) tries to find necessary and sufficient conditions for nonparametric inference to build predictive models from data [33–35] or, using the language of SLT, learn an optimal model from data. The main SLT results have been obtained by deriving nonasymptotic bounds on the generalization error of a model or, to be more precise, upper bounds on the excess risk between the optimal predictor and the learned model, as a function of the possibly infinite family of models and the number of available samples [36]. For a long time, SLT has been considered only a theoretical, albeit very sound and deep, statistical framework, without any real applicability to practical problems [37]. It was only in the last decade, after important advances in this field [38], that SLT has been shown to be able to provide practical answers, at least when targeting the inference of data-driven models for classification purposes [39, 40].

This paper shows how to exploit unlabeled samples in the usual semi-supervised learning context so as to tune and assess the performance of a learning algorithm, with particular reference to the ELM applied to an affective analogical reasoning problem. We review all most recent methodologies of model selection (MS) and error estimation (EE) that can be applied to the ELM, as well as how these methodologies can take advantage of unlabeled samples. In brief, among the several methods proposed in the literature for MS and EE, we identify two main categories: out-of-sample and in-sample methods [40]. The first works well in many situations and allows the application of simple statistical techniques in order to estimate quantities of interest by splitting data in different sets, each for a different purpose (training, validation, and test). Some examples of out-of-sample methods are the well-known holdout (HO) and k-fold cross-validation (KCV) [41], leave-one-out (LOO), and bootstrap [42]. In contrast, the in-sample methods exploit the whole set of available data for training the model, assessing its performance and estimating its generalization error, thanks to the application of rigorous statistical procedures. We describe how in-sample methods can be further divided into two subgroups: the hypothesis space-based methods and the algorithm-based methods [43]. The first subgroup requires knowledge of the hypothesis space from which the algorithm chooses the model. Some examples of these methods are the Vapnik–Chervonenkis (VC) theory [36, 44], (local) Rademacher complexity (RC) theory [38, 45–48], and PAC Bayes theory [39, 49–52]. The second subgroup of methods does not require advance knowledge of the hypothesis space, instead just relying on application of the algorithm on a series of modified training sets. Some examples are the compression bound [53, 54] and algorithmic stability theory (AS) [43, 55, 56]. We also mention the distinctions between the frequentist and Bayesian approaches, although some approaches combine aspects from these two [57].

Semi-supervised Binary Classification

Before getting into the discussion proper, let us recall some common preliminary definitions [36, 55]. Let us consider a set of labeled samples \({\mathscr{D}}_{n} =\) \(\{ ({x} _1, y_1),\ldots ,({x} _{n}, y_{n}) \} =\{ {z} _1, \ldots , {z} _{n} \}\) and another set of unlabeled ones \({\mathscr{D}}_{n_u} = \{ {x} _{n + 1},\ldots ,{x} _{n + n_u} \}\) drawn i.i.d., according to an unknown probability distribution \(\mu\) over the cartesian product between the input space \({\mathscr{X}} \subseteq {\mathbb{R}}^d\) and the output space \({\mathscr{Y}} = \{ -1, +1 \}\) defined as \({\mathscr{Z}} = {\mathscr{X}} \times {\mathscr{Y}}\). Let us also consider a function \(f \in {\mathscr{F}}\) where \(f: {\mathscr{X}} \rightarrow \overline{{\mathscr{Y}}} = {\mathbb{R}}\). The error of f in approximating \(\mu\) is measured with reference to some [0, 1]-bounded loss function \(\ell : {\mathscr{F}} \times \mathscr{{z} } \rightarrow [0,1]\). The risk of f can be then defined as such:

Since \(\mu\) is unknown, L(f) cannot be computed though we can compute its empirical estimators. Before defining them, let us introduce two modified training sets \({\mathscr{D}}_{n}^{{\setminus} i}\) and \({\mathscr{D}}_{n}^{i}\), where the ith element is respectively removed or replaced by another sample \({z} '_i\) sampled i.i.d. from \(\mu\):

If \({\widehat{f}} = {\mathscr{A}}_{({\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u},{\mathscr{H}})}\), \({\widehat{f}}^{{\setminus} i} = {\mathscr{A}}_{({\mathscr{D}}_{n}^{{\setminus} i} \cup {\mathscr{D}}_{n_u},{\mathscr{H}})}\), and \({\widehat{f}}^{i} = {\mathscr{A}}_{({\mathscr{D}}_{n}^{i} \cup {\mathscr{D}}_{n_u},{\mathscr{H}})}\) are chosen form of functions \({\mathscr{F}}_{\mathscr{H}}\) according to some criteria, or algorithm, \({\mathscr{A}}_{\mathscr{H}}\), where \({\mathscr{H}}\) is a set of hyperparameters that must be tuned, and based respectively on \({\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}\), \({\mathscr{D}}_{n}^{{\setminus} i} \cup {\mathscr{D}}_{n_u}\), and \({\mathscr{D}}_{n}^{i} \cup {\mathscr{D}}_{n_u}\) (\({\mathscr{D}}_{n_u}\) can be exploited or not based on \({\mathscr{A}}\)), we can define the empirical risk of a function \(f \in {\mathscr{F}}_{\mathscr{H}}\) and the LOO risk of the algorithm \({\mathscr{A}}\) [55] as:

If the loss function is not specified, all the results hold for any [0, 1]-bounded loss function. Instead, some results just hold for particular loss functions; in such cases, we specify which loss function must be adopted as \(L^\ell (f)\), \({\widehat{L}}^\ell _{\text{emp}}(f)\), \({\widehat{L}}^\ell _{\text{loo}}({\mathscr{A}}_{\mathscr{H}})\), etc.

Semi-supervised Extreme Learning Machines

The biased model adopted as a semi-supervised approach exploits both unlabeled and labeled data to learn a classification function empirically. The model is based on the biased-regularization theory, defined as follows: A reference solution (e.g., a hyperplane) is used to bias the solution of a regularization-based learning machine.

Extreme Learning Machines

The ELM model [20, 58–60] implements a single-hidden layer feed-forward neural network (SLFN) with \(N_h\) mapping neurons. Thus, the hypothesis space can be formalized as follows

where \(w_j \in {\mathbb{R}}\), and \(a({x}, \zeta )\) is a nonlinear piecewise continuous function satisfying ELM universal approximation capability theorems [20]. In general, the activation function is characterized by a set of parameters \(\zeta\), and the jth neuron has its \(\zeta _j\). Sigmoid function, RBF, and polynomial functions represent three popular choices for the activation function.

The peculiar aspect of ELM is that the parameters \(\zeta _j\) are set randomly. Accordingly, the hidden layer implements an explicit mapping of the original input space \({\mathscr{X}}\) into a new space \({\mathbb{R}}^{N_h}\). Hence, training ELMs is equivalent to solving a regularized least squares (RLS) problem in a linear space [20]. Let \(H \in {\mathbb{R}}^{n \times N_h}\) be an activation matrix such that the entry \(H_{i,j}\) is the activation value of the jth hidden neuron for the ith input pattern. Then, the training problem reduces to the minimization of the convex cost:

A matrix pseudo-inversion yields the unique \(L_2\) solution, as proven in [20]:

Furthermore, the theory derived in [61] proves that regularization strategies can further improve the generalization performance of the model. As a result, the cost function of Eq. (5) is augmented by a \(L_2\) regularization factor as follows:

The vector of weights \({w}^{\ast}\) is then obtained as follows:

where \(I \in {\mathbb{R}}^{N_h \times N_h}\) is an identity matrix.

A Biased Regularization

The general biased-regularization model works via biasing the solution of a regularization-based learning machine by a reference function (e.g., a hyperplane) [62]. The nature of this reference function is a crucial aspect that concerns the learning theory in general. In the linear domain one can define a generic convex loss function \(\ell\), and a biased-regularizing term, with the resulting cost function being

where \({w} _0\) is a reference hyperplane, \(\lambda _1\) is the classical regularization parameter that controls smoothness, and \(\lambda _2\) controls the adherence to the reference solution \({w} _0\). The expression of Eq. (9) is a convex functional and thus admits a global solution. From Eq. (9) one obtains:

The role played by parameter\(\lambda _2\) is indeed critical from both the theoretical and the practical point of view [62]. This parameter allows the cost function (10) to exploit a strong or weak bias on the hypothesis space; i.e., by adjusting \(\lambda _2\) one can modulate the contribution provided by \({w} _0\) to the cost function. Hence, one can take advantage of biased regularization even when the reference solution is not optimal. The crucial aspect is the ability to shrink the space to be explored in order to get an optimal solution, which in turn means the ability to reduce the complexity of the hypothesis space [62].

Note that given a reference hyperplane \({w} _0\), a regularization constant \(\lambda _1\), and a biasing constant \(\lambda _2\), the problem:

has solution:

The proof is trivial [62]. Note, also, that thanks to the representer theorem [63, 64] it is possible to write \({w}^{\ast}\) as:

A Semi-supervised Learning Scheme Based on Biased Regularization

The biased version of the ELM can support a semi-supervised framework for the classification task [62]. Let H denote the activation matrix of the whole dataset \({\mathscr{D}}_n \cup {\mathscr{D}}_{n_u}\), \(H_n\) denote the activation matrix of \({\mathscr{D}}_n\), \({y} _n\) denote the corresponding vector of labels, and \(H_{n_u}\) denote the activation matrix of \({\mathscr{D}}_{n_u}\). The semi-supervised learning scheme then requires one to apply the following four-step procedure:

-

1.

Clustering: Use any clustering algorithm to perform an unsupervised partition of the dataset \({\mathscr{D}}_n \cup {\mathscr{D}}_{n_u}\) by discarding the available labels (a bipartition in the simplest case).

-

2.

Calibration: For every cluster, a majority voting scheme is adopted to set the cluster label; this is done by exploiting the labeled samples. Then, for each cluster, assign to each sample the cluster label. Let \({\widehat{y}}\) denote this new set of labels.

-

3.

Mapping: Given \({\mathscr{D}}_n \cup {\mathscr{D}}_{n_u}\) and \({\widehat{y}}\), train the selected learning machine and obtain the solution \({w} _0\).

-

4.

Biasing: Given \({\mathscr{D}}_n\), train the biased version of the learning machine (biased by \({w} _0\)). The solution w carries information derived from both the labeled data \({\mathscr{D}}_n\) and the unlabeled data \({\mathscr{D}}_{n_u}\).

This procedure, Step 4, Biasing, has similarities to that adopted in deep learning architectures [65, 66]. In the latter case, the training algorithm performs a preliminary unsupervised stage and then uses labels only to adjust the network for the specific classification task; the eventual representation still mostly reflects the outcome of the learning process completed in the pre-training phase. Likewise, in the proposed framework, a pre-training phase builds \({w} _0\) and a final adjustment derives the final w .

The semi-supervised learning scheme possesses some interesting features:

-

Since the proposed method could be applied to both linear and nonlinear domains, the result is a completely generalizable learning scheme.

-

Clustering and biasing can be tackled independently. If one wants to adopt a particular solution for biasing or a new clustering algorithm is designed, then the two actions can be controlled and adjusted separately.

-

If the learning machine is a single-layer learning machine whose cost is convex then convexity is preserved and a global solution is granted.

-

Every clustering method can be used to build the reference solution.

Model Selection

The selection of the optimal hyperparameters of a predictive model is the fundamental problem of SLT and is still the target of current research [38, 40, 41, 57, 67, 68]. The approaches can be divided into two large families: out-of-sample methods, like HO, cross-validation, and the bootstrap [40–42, 69], and more recent in-sample methods, like the class of function-based methods [40] (based on the VC dimension [36], RC [45–47, 70], PAC Bayes theory [49, 50]), algorithm-based methods [43] (based on compression bounds [53], and AS theory [55, 56]).

Out-of-sample methods [40, 71] are favored by practitioners because they work well in many situations and allow the application of simple statistical techniques for estimating the quantities of interest. Some examples of out-of-sample methods are the well-known HO, the KCV, the LOO, and the bootstrap (BOO) [41, 42, 72]. All these techniques rely on a similar idea: The original dataset is resampled, with or without replacement, to build two independent datasets called, respectively, the training and validation (or estimation) sets. The first one is used for training a classifier, while the second one is exploited to estimate its generalization error, so that the hyperparameters can be tuned to achieve its minimum value. Note that both error estimates computed through the training and validation sets are, obviously, optimistically biased; therefore, if a generalization estimate of the final model is desired, it is necessary to build a third independent set, called the test set, by nesting two of the resampling procedures mentioned above. Furthermore, the resampling procedure itself can introduce artifacts in the estimation process and so must be carefully designed.

In-sample methods [40, 71], instead, allow the whole set of available data for both training the model and estimating its generalization error to be exploited, thanks to the application of rigorous statistical procedures [38, 50, 55]. Despite their unquestionable advantage with respect to out-of-sample methods, their use is not widespread: One of the reasons is the common belief that though in-sample methods are very useful for gaining deep theoretical insights on the learning process or for developing new learning algorithms, they are not suitable for practical purposes. However, recent advances and deeper insights on these methods demonstrate that this is no longer true [73].

Note that the conventional out-of-sample and in-sample techniques neither take into account nor take advantages of the unlabeled samples available in the semi-supervised learning framework. For more details about the advantages and disadvantages of the different methods one can refer to [39, 40, 43].

Out-of-Sample methods

As described earlier, these techniques rely on a similar idea: The original dataset \({\mathscr{D}}_{n}\) is resampled once or many (\(n_r\)) times, with or without replacement, to build two independent datasets called training and validation sets, respectively \({\mathscr{T}}^r_{n_t}\) and \({\mathscr{V}}^r_{n_v}\), with \(r \in \{ 1, \ldots , n_r \}\). Note that \({\mathscr{T}}^r_{n_t} \cap {\mathscr{V}}^r_{n_v} = \oslash\) and \({\mathscr{T}}^r_{n_t} \cup {\mathscr{V}}^r_{n_v} = {\mathscr{D}}_{n}\). Then, in order to select the best set of hyperparameters \({\mathscr{H}}\) from a set of possible ones \({\mathfrak{H}} = \{ {\mathscr{H}}_1, {\mathscr{H}}_2, \ldots , {\mathscr{H}}_{n_{\mathscr{H}}} \}\) for the algorithm \({\mathscr{A}}_{\mathscr{H}}\) or, in other words, to perform the MS, we have to apply the following procedure:

Since the data in \({\mathscr{T}}^r_{n_t}\) are i.i.d. from the ones in \({\mathscr{V}}^r_{n_v}\), the idea is that \({\mathscr{H}}^{\ast}\) should be the set of hyperparameters which result in a small error on a dataset that is independent from the training set. This approach is theoretically grounded by the following reasoning: Since the data in \({\mathscr{T}}^r_{n_t}\) are i.i.d. from the ones in \({\mathscr{V}}^r_{n_v}\), we can state, thanks to the Hoeffding’s inequality [74], that the generalization error of the function trained using \({\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u}\) can be bounded as:

and the bound holds with probability \((1-\delta )\). Since we are choosing \({\mathscr{H}}^{\ast} \in {\mathfrak{H}}\) (i.e., we are choosing over \(n_{\mathscr{H}}\) functions trained with different configurations of the hyperparameters) we have to apply the union bound [36] and we have that, with probability \((1-\delta )\), the generalization error of the function chosen between the \(n_{\mathscr{H}}\) functions is:

In repeating the training/validation split procedure \(n_r\) times, then, we choose the set of hyperparameters \({\mathscr{H}} \in {\mathfrak{H}}\) and obtain the generalization error of the classifier \(f_{\mathscr{H}}\) which randomly selects one of the functions \({\mathscr{A}}_{({\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u},{\mathscr{H}}^{\ast})}\) with \(r \in \{ 1, \ldots , n_r \}\). Each time a new sample must be classified, it can be bounded with the probability \((1-\delta )\):

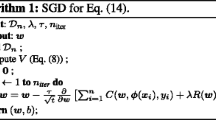

Based on the SLT, we have to choose the set of hyperparameters that minimize the estimated generalization error and obtain that:

The first approximation is due to the fact that the distribution of the data is unknown and hence the true generalization error of \(L(f_{{\mathscr{H}}})\) cannot be computed [36]. Since we have its rigorous upper bound, we can use it based on SLT [36]. The last equality holds because the last two terms, \(\sqrt{\frac{\ln \left( \frac{1}{\delta } \right) }{2 n_v}}\) and \(\sqrt{\frac{\ln \left( n_{\mathscr{H}} \right) }{2 n_v}}\), are constants and do not affect the choice of \({\mathscr{H}}^{\ast}\). Consequently, we have retrieved the criteria of Eq. (14). Note that after the best set of hyperparameters is found, one usually replaces the model \(f_{{\mathscr{H}}^{\ast}}\) with the model obtained by training the algorithm with the whole dataset \({\mathscr{A}}_{({\mathscr{D}}_{n},{\mathscr{H}}^{\ast})}\) [75]. Moreover, note that this is an approximation, since for classifying a new sample we use the function retrained with \({\mathscr{H}}^{\ast}\) over the whole set of data and so, basically, we are not directly optimizing the hyperparameters for \({\mathscr{A}}_{({\mathscr{D}}_{n},{\mathscr{H}})}\) with \({\mathscr{H}} \in {\mathfrak{H}}\).

If \(n_r=1\), if t and v are aprioristically set such that \(n = t+v\), and if the resample procedure is performed without replacement, we obtain the HO method [40]. In order to implement the KCV method, we have to set \(n_r \le \left( {\begin{array}{l}n\\ k\end{array}}\right) \), \(t = (k-1) \frac{n}{k}\), and \(v = \frac{n}{k}\) and the resampling must be done without replacement [40, 41, 67]. Finally, for implementing the BOO method, \(t = n\) and \({\mathscr{T}}^r_t\) must be sampled with replacement from \({\mathscr{D}}_{n}\), while \({\mathscr{V}}^r_{n_v} = {\mathscr{D}}_{n} {\setminus} {\mathscr{T}}^r_t\) and \({\mathscr{T}}^r_{n_t}\) [40, 42]. Note that for the bootstrap procedure \(n_r \le \left( {\begin{array}{l}2n-1\\ n\end{array}}\right) \).

It is worthwhile noting that the only hypothesis needed in order to rigorously apply the out-of-sample technique is the i.i.d. hypothesis on the data in \({\mathscr{D}}_{n}\) and that all these techniques work for any deterministic algorithm.

In-Sample Methods

For the in-sample methods, there are two subfamilies of techniques: the class of function-based ones and the algorithm-based ones [43]. The difference between the two is that the first requires the knowledge of \({\mathscr{F}}_{\mathscr{H}}\) and so cannot be applied to some algorithms (e.g., the k-nearest neighbor algorithm) while the second can be applied to any deterministic algorithm without additional knowledge. Both subfamilies, like the out-of-sample methods, require the i.i.d. hypothesis.

Vapnik–Chervonenkis Theory

The milestone result from the class of function-based methods in SLT is the VC theory [36]. In this case, the analysis holds just for the semi-supervised learning problems where the hard loss function \(\ell _H\) is exploited:

In the VC theory the following quantity is defined:

which is the set of distinct functions that shatter the dataset. Then the VC entropy \(H_{n}({\mathscr{F}}_{\mathscr{H}})\) and the annealed VC entropy \(A_{n}({\mathscr{F}}_{\mathscr{H}})\), together with their empirical counterparts \({\widehat{H}}_{n}({\mathscr{F}}_{\mathscr{H}})\) and \({\widehat{A}}_{n}({\mathscr{F}}_{\mathscr{H}})\) [36], can be recalled:

where \(\left| \cdot \right|\) is the cardinality of a set. Based on previous definitions, it is possible to define the growth function and the VC dimension, respectively \(G_{n}({\mathscr{F}}_{\mathscr{H}})\) and \(d_{\rm VC}({\mathscr{F}}_{\mathscr{H}})\), as:

Note that \(G_{n}({\mathscr{F}}_{\mathscr{H}}) \le d_{\rm VC}({\mathscr{F}}_{\mathscr{H}}) \ln (n).\) Thanks to the Vapnik results it is possible to prove that [76]:

Consequently, we have that with probability \((1 - \delta )\):

Moreover, since \({\mathscr{F}}_{\mathscr{H}}\) is chosen in a set of possible spaces \({\mathfrak{F}} = \{{\mathscr{F}}_{{\mathscr{H}}_1}, \ldots , {\mathscr{F}}_{{\mathscr{H}}_{n_{\mathscr{H}}}} \}\), we have to apply the union bound [36, 77], so we have that with probability \((1 - 2 \delta )\):

The VC theory is basically a more refined form of union bound that is able to deal with the class of functions which have an infinite number of functions [36]. In particular, the entropies and the growth function measure the number of distinct functions with respect to the distribution of the data, while the \(d_{\rm VC}\) is a measure of dimensionality for a general nonlinear class of functions [36, 44].

Recently in [78, 79] it has been proved that with probability \((1 - \delta )\):

Consequently, we can state that with probability \((1 - \delta )\):

Based on this last result, we can state that with probability \((1 - 2 \delta )\) the following inequality holds:

Moreover, since \({\mathscr{F}}_{\mathscr{H}} \in {\mathfrak{F}}\) by applying the union bound [36, 77] we have that with probability \((1 - 2 \delta )\):

Based on the results of Eqs. (27) and (31) we can present the two MS procedures based on the VC theory, noting that some terms are constants. In particular, the original approach based on Eq. (27) says that:

The second approach, based on Eq. (31), says that:

In order to extend the analysis to a real-valued loss we have to exploit a result of [36] which states that:

Then, we define:

Based on these definitions, it is possible to define the VC entropy, the growth function, and the VC dimension for real-valued functions:

and finally state that:

since \(G^\beta _{n}({\mathscr{F}}_{\mathscr{H}}) \le d^\beta _{\rm VC}({\mathscr{F}}_{\mathscr{H}}) \ln (n).\) By following the same argument presented before we can state that with probability \((1 - \delta )\):

Consequently, we can state that with probability \((1 - \delta )\):

By following the same argument presented before, it is possible to propose the two approaches for MS based on the VC theory for real-valued loss functions. The first approach states that:

The second approach, based on Eq. (31), instead says that:

(Local) Rademacher Complexity

One of the most powerful classes of function-based methods is based on the RC [40, 70]. In particular, it is possible to prove that the following bound holds with probability \((1- \delta )\) [80]:

where

RC is essentially a more refined form of union bound that is able to deal with class of functions with infinite number of functions [80]. Another interpretation of the RC is that it measures the ability of the class of functions to fit random labels [38]. More refined interpretations and the advantages and disadvantages with respect to the VC theory can be found in [44].

Therefore, based on the same principles described above, we have that:

Let us use the following loss function:

also called soft loss function. Let us also suppose that the \(f \in {\mathscr{F}} \in {\mathfrak{F}}\) can be expressed as:

where w is constrained such that

In such a case, instead of computing \({\widehat{R}}_{n}({\mathscr{F}}_{\mathscr{H}})\) we can bound it as [80]:

Consequently, we obtain that:

Recently, a more refined version of the RC, named the local Rademacher complexity (LRC), which is able to discard those functions from the class of functions which are not useful during the learning process, has been proposed in the literature [46, 47]. The result is the following bound which holds with probability \((1 - 3 n_{\mathscr{H}} \delta )\):

Unfortunately, computing the above LRC-based bound is not a trivial task and can be undertaken only when the number of samples is limited [45–47]. Furthermore, when there are a large number of samples, the advantages of using the LRC with respect to RC are not so evident [45].

PAC Bayes Theory

The PAC Bayes theory is the last major theory in the class of function-based methods. In the PAC Bayes theory, we do not bound the error of a \(f \in {\mathscr{F}}_{\mathscr{H}} \in {\mathfrak{H}}\) but instead bound the error of the stochastic Gibbs classifier (also called randomized classifier) and the majority vote classifier (also called Bayes classifier) [49–51, 68, 81, 82]. The Gibbs classifier draws an \(f \in {\mathscr{F}}_{\mathscr{H}}\) according to a probability distribution \(Q_{\mathscr{H}}\) over \({\mathscr{F}}_{\mathscr{H}}\) each time a label for an input \(X \in {\mathscr{X}}\) is required. One can also choose from different distributions \(Q_{\mathscr{H}} \in {\mathfrak{Q}} = \{Q^1_{\mathscr{H}}, \ldots , Q^{n^Q_{\mathscr{H}}}_{\mathscr{H}} \}\) [50]. The Bayes classifier is the majority voting of the different classifiers according to the distribution \(Q_{\mathscr{H}}\) [51]. This framework, despite being really powerful, is not suited for algorithm like the ELM since it is built for ensemble methods [51] like Bagging [83, 84], Boosting [85, 86] or Bayesian approaches [87].

Algorithmic Stability Theory

Algorithmic-based methods circumvent the problem of knowing the class of functions by defining the properties that an algorithm should satisfy in order to achieve good generalization performances [43]. The AS theory [43, 55, 88], in particular, states that it is possible to prove that the following bounds hold with probability \((1- \delta )\) [55]:

where

Basically, AS states that if an algorithm selects similar functions, the training set being slightly changed results in the algorithm having good generalization performances [56].

It has been proven recently in [43] that \(\beta _{\text{loo}}({\mathscr{A}}_{\mathscr{H}},n)\) can be estimated directly from the data, if \(\beta _{\text{loo}}({\mathscr{A}}_{\mathscr{H}},n)\) decreases with n. We wish to highlight that this property is a desirable requirement for any learning algorithm, since we need that in order to be able to prove the learnability in the AS framework:

or that, in other words, the impact on the learning procedure of removing or replacing one sample from \({\mathscr{D}}_{n}\) should decrease, on average, as n grows. Numerous researchers have already studied this property in the past. In particular, it is related to the consistency concept [89]. However, connections can also be identified with the trend of the learning curves of an algorithm [90]. Such quantity is also strictly linked to the concept of Smart Rule [89]. It is worth underlining that, in many of the above-referenced works, the required property is proved satisfied by many well-known algorithms like least squares, regularized least squares, and kerneled regularized least squares. Consequently, the property is also true for ELM, which is itself represented by a random protection with a regularized least squares.

Therefore in [43] it is proved that with probability \((1-\delta )\):

where

By plugging this last result in the bound of Eq. (52) we obtain the fully empirical-based bound of [43], where every quantity involved in the bound can be computed from the available data. Note also that \({\widehat{H}}_{\text{loo}}({\mathscr{A}}_{\left( {\mathscr{D}}_{{\sqrt{n}}/{2}},{\mathscr{H}}\right) }, {\mathscr{D}}_{{\sqrt{n}}/{2}})\) can be effectively estimated via a Monte Carlo procedure: This enables computing a subset \(s_{MC}\) of the required steps, i.e., \(s_{MC} \ll \frac{n\sqrt{n}}{8}\).

The bounds of Eqs. (51) and (52) are polynomial bounds in n (so not very tight when n is small), while \(\beta _{\text{emp}}\) and \(\beta _{\text{loo}}\) are two versions of hypothesis stability (HS) which are able to take into account both the properties of the algorithm and the property of the distribution that has generated the data \({\mathscr{D}}_{n}\) [43, 55]. It is possible to improve the bounds of Eqs. (51) and (52) by exploiting a stronger notion of AS, known as the uniform stability (US). In particular, the following bounds hold with probability \((1- \delta )\):

where

Note that \(\beta^{i} \le 2 \beta^{{\setminus} i}\).

Unfortunately, the US (\(\beta^{i}\) or \(\beta^{{\setminus} i}\)) is not able to take into account the properties of the distribution that has generated the data \({\mathscr{D}}_{n}\) and sometimes is not even able to capture the properties of the algorithm because it deals with a worst-case learning scenario [43].

All the four AS-based bounds of Eqs. (51), (52), (59), and (60) can be used to select the best set of hyperparameters \({\mathscr{H}} \in {\mathfrak{H}}\) for the algorithm \({\mathscr{A}}_{\mathscr{H}}\). In particular, all the bounds are in the form: \(L({\mathscr{A}}_{({\mathscr{D}}_{n},{\mathscr{H}})}) \le \epsilon ({\mathscr{A}}_{\mathscr{H}},{\mathscr{D}}_{n},n,\delta ,n_{\mathscr{H}})\). In order to perform the MS procedure we have:

The procedure of Eq. (63) can be exploited with any algorithm for which it is possible to compute one notion of AS.

Compression Bound

The compression bound is the result of the approximation of the Kolmogorov theory [91] and, in particular, the minimum description length principle [92]. The compression bound [53] states that the less data of \({\mathscr{D}}_n\) we use for learning the better generalization performance our model will have. Unfortunately, this approach is not suited for ELM but just for algorithms which produce sparse models like SVM [39].

The Use of Unlabeled Samples for Extreme Learning Machine Model Selection

As we described before, it is not possible (or it does not make sense) to apply some of the methodologies described above (e.g., the PAC Bayes theory and the compression bound theory). In this section, we show how to apply the out-of-sample methods, the VC theory, the RC theory, and the AS theory to the ELM and how to take advantage of unlabeled samples both for training a more accurate model thanks to the regularization framework depicted in “Semi-supervised Extreme Learning Machines” section, and during the MS process. In particular, we show how to perform the MS effectively with and without exploiting the unlabeled samples for the three version of ELM presented in this paper:

-

ELM-NoR: the easier ELM which does not implement any regularization strategy [see Eq. (5)],

-

ELM-R: the now-standard ELM which implements the typical Tikhonov regularization schema [93] [see Eq. (7)],

-

ELM-SemiR: the ELM which implements the semi-supervised regularization schema presented in Eq. (11).

The ELM-NoR has just one hyperparameter: the number of hidden neurons, so \({\mathscr{H}} = \{ N_h \}\). For ELM-R the hyperparameters are the number of hidden neurons and the regularization hyperparameter, so we have that \({\mathscr{H}} = \{ N_h, \lambda \}\). Finally, for the ELM-SemiR the hyperparameters are the number of hidden neutrons and the two regularization hyperparameters, so we have that \({\mathscr{H}} = \{ N_h, \lambda _1, \lambda _2 \}\).

Out-of-Sample Methods

The main problem of the out-of-sample methods, as described in “Out-of-Sample Methods” section, is that instead of tuning the hyperparameters for the classifier we are tuning the performance of an ensemble classifier. Since we are dealing with binary classification a reasonable choice is to use the hard loss function \(\ell _H\) which counts the number of errors of a classifier trained with \({\mathscr{A}}_{\mathscr{H}}\) over a dataset. In particular, if we use the ELM we can define:

where \({\mathscr{A}}_{\mathscr{H}}\) can be the solution of ELM-NoR or ELM-R or ELM-SemiR trained over \({\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u}\) for \({w}^{{\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\), trained over \({\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}\) for \({w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\), etc. Note that \({\mathscr{D}}_{n_u}\) can be exploited or not based on \({\mathscr{A}}\), in fact ELM-NoR and ELM-R do not use it. The procedure of Eq. (18) states that:

We remember that (see “Out-of-Sample Methods” section) we are minimizing the error of the classifier which randomly chooses one of the \({w}^{{\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\) with \(r \in \{1, \ldots , n_r \}\) while at the end of the procedure we classify a new sample with \({w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\). This produces a sub-optimal result [40, 57]. In order to fix this bias we can employ the unlabeled samples. In particular, we can estimate the difference between the error of \({w}^{{\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\) with \(r \in \{1, \ldots , n_r \}\) and the one of \({w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\). In fact, when the hard loss function is used, the error of \({w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\) is bounded by the average error of \({w}^{{\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\) with \(r \in \{1, \ldots , n_r \}\) plus the average difference between the prediction of \({w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\) and \({w}^{{\mathscr{T}}^r_{n_t} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}\) with \(r \in \{1, \ldots , n_r \}\):

The first term can be bounded as we have done in “Out-of-Sample Methods” section, while the second term can be bounded by using the unlabeled patterns. In fact, if we define the following quantity:

since the data in \({\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}\) are i.i.d., the quantity of Eq. (67) is an unbiased estimator of

Consequently by exploiting the Hoeffing’s inequality we can state that with probability \((1-\delta )\):

Consequently, we have that with probability \((1 - 2 \delta )\):

Note that, if we have just few unlabeled samples the bound is very loose, while if we have a lot of unlabeled samples (in the semi-supervised learning framework usually \(n_u \gg n\)) the bound fast converges to the conventional bound [see Eq. (17)], plus \({\widehat{D}}_{\mathscr{H}}\) which takes into account the bias discussed above. Based on this last result we can derive the out-of-sample MS procedure for ELM which exploits also the unlabeled samples:

where \({\widehat{D}}_{\mathscr{H}}\) is defined above.

Vapnik–Chervonenkis Theory

Let us start by considering the ELM-NoR. Let us consider the VC theory when the hard loss function is employed. Since the ELM searches a linear separator in the space defined by the random protection of the original input space \({\mathbb{R}}^r\) into the space defined by the \(N_h\) hidden neurons we have that \(d_{\rm VC} \le N_h\) [36].

Unfortunately, this is a loose upper bound which does not depend on the distribution of the data [36]. In order to be able to take into account the distribution of the data we have to use the VC entropy \({\widehat{A}}_{n}({\mathscr{F}}_{\mathscr{H}})\). Note that, from Eq. (33), in order to perform the MS procedure with the VC entropy we need to compute \({{\widehat{A}}_{n}({\mathscr{F}}_{\mathscr{H}})}/{n} \in [0, \ln (2)]\). The VC entropy is the number of configurations of the labels that can be shattered by \({\mathscr{F}}_{\mathscr{H}}\). Consequently, let \(\varvec{\sigma }_i \in \{-1,1 \}^n\) be one of the possible \(2^n\) configurations of the labels; we have to search how many of them can be shattered by a linear separator in the random projection space, then we have to check for how many of the following problems

at least one solution exists. Note that the above problem is a linear programming (LP) problem [94]. Searching for a feasible solution of an LP problem is again an LP problem [94] which can be solved in polynomial time [94]. We have to solve \(2^n\) problems and this represents an NP problem. The issue can be circumvented by noting that we can estimate \({\widehat{A}}_{n}({\mathscr{F}}_{\mathscr{H}})\) through a Monte Carlo procedure by checking just a random subset, and in particular \(n_{MC} \le 2^n\) realizations of the labels [44]. If we indicate with \(\widehat{{\widehat{A}}}_{n}({\mathscr{F}}_{\mathscr{H}})\) the logarithm number of configurations of the \(n_{MC}\) that can be shattered, thanks to the Serfling’s bound [95] (since we are bounding the expected value of an hypergeometric distribution), we can state that with probability \((1-\delta )\):

Note that the quality of the estimation does not depend on n but just on \(n_{MC}\). Consequently, \({\widehat{{\widehat{A}}}_{n}({\mathscr{F}}_{\mathscr{H}})}/{n_{MC}}\) rapidly converges to its mean \({{\widehat{A}}_{n}({\mathscr{F}}_{\mathscr{H}})}/{n}\). Consequently, let us suppose that \({w} _{{\mathscr{H}}}\) is the solution of the ELM-NoR for a value of its hyperparameters, thanks to the procedure of Eqs. (32) and (33), we have that, based on the VC theory:

We now show that the unlabeled samples can be useful to improve the quality of the estimation of the VC entropy. In particular, let us suppose that \({\mathscr{D}}_{n_u}\) contains more samples than \({\mathscr{D}}_{n}\), in particular \(n_u \ge 2 n\). This is a reasonable hypothesis since usually the number of unlabeled samples exceeds by a large amount the number of labeled ones [96]. In particular, let us define \(m = \lfloor {n + n_u}/{2 n} \rfloor\) and let us consider the original bound of [33]:

We show that \(A_{2 n}({\mathscr{F}}_{\mathscr{H}})\) can be estimated more effectively with the use of the unlabeled samples. In particular, let us define the following quantity:

which is basically the VC entropy for sample size of 2n averaged over m different realizations. Thanks to the results of [78, 79] we can state that with probability \((1 - \delta )\):

which is a much tighter estimate with respect to the one presented in Eq. (28). Moreover, \({\widehat{A}}^m_{2n}({\mathscr{F}}_{\mathscr{H}})\) can be estimated from the data since we do not need the labels of the unlabeled samples; hence, by following the same reasoning presented above we can define a Monte Carlo estimation of \({\widehat{A}}^m_{2n}({\mathscr{F}}_{\mathscr{H}})\) through \(\widehat{{\widehat{A}}}^m_{2n}({\mathscr{F}}_{\mathscr{H}})\), which is VC entropy-based MS procedure which exploits also the unlabeled samples [the counterpart of the method of Eq. (75)]:

Regarding the ELM-R and the ELM-SemiR the reasoning is more complex. In fact, in this case we cannot use the hard loss function \(\ell _H\) since we would eliminate the effect of the regularization hyperparameter [76, 97]. For this reason we have to employ a smooth loss function like the \(\ell _S\). In particular, it is straightforward to see that \(\ell _H \le \ell _S\). So, even if we use \(\ell _S\) we still have information about \(\ell _H\) [80]. In order to apply the procedure for real-valued losses [see Eqs. (40) and (41)] we have to compute the VC entropy and the VC dimension for real-valued functions which are \(d^\beta _{\rm VC}({\mathscr{F}}_{\mathscr{H}})\) and \({\widehat{A}}^\beta _{n}({\mathscr{F}}_{\mathscr{H}})\). Unfortunately, upper bounding the \(d^\beta _{\rm VC}({\mathscr{F}}_{\mathscr{H}})\) is a rather complex phase while estimating \({\widehat{A}}^\beta _{n}({\mathscr{F}}_{\mathscr{H}})\) cannot be transformed to a polynomial problem as we have done for the \({\widehat{A}}_{n}({\mathscr{F}}_{\mathscr{H}})\). Moreover, \({\widehat{A}}^\beta _{n}({\mathscr{F}}_{\mathscr{H}})\) requires the knowledge of the labels so the unlabeled samples cannot be exploited for improving the MS strategy. Other extensions to real-valued functions of the VC theory have been proposed in [98, 99], but their applications in real world are not feasible.

Rademacher Complexity Theory

By exploiting the same notation adopted in “Out-of-Sample Methods” section and by noting again that \(\ell _H \le \ell _S\) we can state, thanks to the result of “(Local) Rademacher Complexity” section, that with probability \((1- \delta )\):

and

When the ELM-NoR is exploited one should use the bound of Eq. (80) in order to control the generalization performance of the ELM, since no regularization is applied, while for ELM-R and ELM-SemiR the bound of Eq. (81) should be used. Unfortunately, computing the RC when the hard loss function is exploited results in an NP-hard problem [44, 80]. For this reason we can retrieve the Massart’s Lemma [100] which states that:

By exploiting this result we retrieve the one reported in “Vapnik–Chervonenkis Theory” section for the VC theory.

For ELM-R and ELM-SemiR, instead, we use the \(\ell _S\). In this case, we exploit the property of Eq. (48) which is proven in [80].

For ELM-R we can state that:

The Tikhonov regularization problem of Eq. (7):

is equivalent to an Ivanov-based one [69, 101, 102]

for a suitable value of \(W = \left\| {w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}} \right\|\). Note that this bound can be used also for ELM-NoR by exploiting the soft loss function instead of the hard one, but since no regularization is applied in ELM-NoR \(W = \left\| {w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}} \right\|\) can assume any value. In fact, for ELM-R from \(\lambda = \infty\) we have that \(W = 0\) while for \(\lambda = 0\) we retrieve the ELM-NoR.

For ELM-SemiR we can state that:

The Tikhonov regularization problem of Eq. (11):

is equivalent to an Ivanov-based one:

for a suitable value of \(W = \left\| {w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}} - \lambda _1 {w} _0 \right\|\).

Based on these results we can propose the RC-based MS for ELM-R and ELM-SemiR:

where for ELM-R \(W = \left\| {w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}} \right\|\) while for ELM-SemiR, which exploits also the unlabeled samples, \(W = \left\| {w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}}- \lambda _1 {w} _0 \right\|\).

In order to exploit the unlabeled samples also for the MS process, we have to exploit a recent result reported in [103, 104] which states that:

where \(m = \lfloor {n + n_u}/{n} \rfloor\), \({\mathscr{D}}_n \cup {\mathscr{D}}_{n_u} = \left\{ {x} _1, \ldots , {x} _{n + n_u} \right\}\) and

Note that the bound of Eq. (90) is tighter than the one of Eq. (42) since we have a better estimation of the RC thanks to the unlabeled samples. Basically, the unlabeled samples give us the ability of computing the average over m different realizations of the RC. Based on the previous results we can state that if we use the soft loss function, for the ELM-R we have that:

while for the ELM-SemiR

Based on this result we can propose the RC-based MS for ELM-R and ELM-SemiR which takes into account also the unlabeled samples:

where \(W = \left\| {w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}} \right\|\) for ELM-R and \(W = \left\| {w}^{{\mathscr{D}}_{n} \cup {\mathscr{D}}_{n_u}}_{{\mathscr{H}}} - \lambda _1 {w} _0 \right\|\) for ELM-SemiR.

Also for the LRC it has been proved that the unlabeled samples can improve the reliability of the estimation of the generalization error of the model [47] but, unfortunately, the open question remains how to effectively compute the LRC in practice.

Algorithmic Stability Theory

In order to apply the AS to ELM we have to exploit some known results and prove some new ones. We use the same notation of “Out-of-Sample Methods” section.

Let us start with the US. If we use the hard loss function, it is straightforward to prove that for any ELM we have [43, 46, 56]:

which is the only possible, and trivial, result. If instead we use the soft loss function in [55] it is proved that for a Tikhonov regularization problem like the ELM-R we have that:

Note that ELM-R is equal to ELM-NoR if \(\lambda \rightarrow 0\), which results in \(\left( \beta^{{\setminus} i}({\mathscr{A}}_{\mathscr{H}},n) \right)^\ell _H \rightarrow +\infty\). This means that without regularization the ELM is not stable. For the ELM-SemiR we have that:

Consequently, we can use the US just for ELM-R and ELM-SemiR and by exploiting the results of “Algorithmic Stability Theory” section we can state that:

Note that \(\beta^{{\setminus} i}\) must be computed based on Eqs. (96) and (97), respectively, for ELM-R and ELM-SemiR. Note that there is no advantage in having unlabeled samples.

With the HS the approach is quite different. In this case, we can exploit the hard loss function as described also in [43]. Since \(\beta _{\text{emp}}({\mathscr{A}}_{\mathscr{H}},n)\) cannot be estimated from the data [43] we can just use the bound which takes into the LOO error [see Eq. (52)]. In order to compute the \(\beta _{\text{loo}}({\mathscr{A}}_{\mathscr{H}},n)\) of Eq. (56) for ELM with the hard loss function we have to take Eq. (56) and note that with probability \((1-\delta )\):

Note that \({\widehat{\beta }}^{\ell _H}_{\text{loo}}({\mathscr{A}}_{\mathscr{H}}, {\sqrt{n}}/{2})\) can be computed from the data and so by applying the bound of Eq. (52) we have the HS MS strategyFootnote 2:

This approach can be applied to ELM-NoR, ELM-R, or ELM-SemiR.

It can also be shown that the bound of Eq. (56), as well as the MS strategy, can be improved, if some unlabeled data are available. In particular, from Eq. (100) it is possible to note that, if the hard loss function is exploited, \({\widehat{\beta }}_{\text{loo}}({\mathscr{A}}_{\mathscr{H}},{\sqrt{n}}/{2})\) does not require the knowledge of the labels. In particular, let us suppose to have at least \(n_u = n\) unlabeled data, since for the ELM \(\beta _{\text{loo}}({\mathscr{A}}_{\mathscr{H}},n)\) decreases with n we have that:

Let us define now the following quantity:

where

Note that the label in \({\mathscr{D}}_{n_u}\) are unknown but, if the hard loss function is used, we have that:

which does not require the knowledge of the labels. Moreover, \({\widehat{\beta }}^{\ell _H}_{\text{loo}}({\mathscr{A}}_{\mathscr{H}},\sqrt{n})\) is an empirical unbiased estimator of \({\beta }^{\ell _H}_{\text{loo}}({\mathscr{A}}_{\mathscr{H}},\sqrt{n})\) (based on the same reasoning proposed in [43]) and therefore thanks to the Hoeffding’s inequality we can state that:

By plugging these results into the bound of Eq. (52) and by following the procedure adopted for deriving Eq. (101), we can derive the HS MS strategy which takes advantage also of the unlabeled samples:

This approach can be applied to ELM-NoR, ELM-R, or ELM-SemiR.

Affective Analogical Reasoning Dataset

The AffectiveSpace model

AffectNet is a semantic network in which common-sense concepts (e.g., ‘read book,’ ‘payment,’ ‘play music’) are linked to a hierarchy of affective domain labels (e.g., ‘joy,’ ‘amazement,’ ‘fear,’ ‘admiration’). In order to enable affective analogical reasoning on natural language concepts, AffectiveSpace [13] is obtained as the vector space representation of such a semantic network. Therefore, in AffectiveSpace, concepts conveying similar semantic and affective information, e.g., ‘enjoy conversation’ and ‘chat with friends,’ tend to fall near each other in the multi-dimensional space.

Both AffectNet and AffectiveSpace are publicly available at http://sentic.net. AffectiveSpace has been obtained applying principal component analysis (PCA) on the matrix representation of AffectNet. Due to computational cost issues, truncated singular value decomposition (TSVD) has been preferred to other dimensionality reduction techniques. TSVD uses an orthogonal transformation to convert the set of common-sense features associated with each concept into a set of uncorrelated variables (the principal components of the SVD).

Indicating AffectNet as A, a low-rank approximation of it is obtained: \({\tilde{A}} = U_M \, \Sigma _M \, V^T_M\). This approximation is based on minimizing the Frobenius norm of the difference between A and \({\tilde{A}}\), under the constraint \(rank({\tilde{A}})=M\); according to the Eckart–Young theorem [105], this represents the best approximation of A in the least-square sense:

assuming that \({\tilde{A}} = USV^{\rm T}\), where S is diagonal and has M nonzero diagonal entries for the rank constraint. The minimum of the above equation may be obtained as follows:

In fact, in the Frobenius norm sense the minimum is obtained when \(\sigma _i=s_i\) \((i=1,\ldots ,M)\) and the corresponding singular vectors are the same as those of A. Thus, if only the first M principal components are kept, common-sense concepts are represented by vectors of M coordinates.

As already said, concepts with the same affective orientation are likely to have similar features; i.e., concepts conveying the same emotion tend to fall near each other in AffectiveSpace. Concept similarity does not depend on their absolute positions in the vector space, but rather on the angle they make with the origin, as it can be seen in Fig. 1.

The number of singular values M, which indicates the dimensionality of the AffectiveSpace, represents the trade-off between efficiency and precision: The bigger is M, the more precisely AffectiveSpace represents AffectNet’s knowledge, but generating the vector space is slower, while the smaller is M, the more efficiently AffectiveSpace can be obtained.

The hourglass of emotions [9], used in Fig. 2, is employed to reason on the disposition of concepts in AffectiveSpace. In the model, affective states are represented by four concomitant but independent dimensions (Pleasantness, Attention, Sensitivity, and Aptitude), each one characterized by six levels of activation, which determine the intensity of the expressed/perceived emotion.

Such levels represent a set of 24 basic emotions (six for each affective dimension). Therefore, a four-dimensional vector can potentially synthesize the level of activation of each affective dimension of a concept. Beyond emotion detection, the hourglass model is also used for polarity detection tasks. Polarity is defined in terms of the four affective dimensions, according to the formula:

where \(c_i\) is an input concept, N the total number of concepts, and 3 the normalization factor (as the hourglass dimensions are defined as float \(\in\) [−1, +1]).

In the equation, Attention is taken as absolute value since both its positive and negative intensity values correspond to positive polarity values (e.g., ‘surprise’ is negative in the sense of lack of Attention, but positive from a polarity point of view). Similarly, Sensitivity is taken as negative absolute value since both its positive and negative intensity values correspond to negative polarity values (e.g., ‘anger’ is positive in the sense of level of activation of Sensitivity, but negative in terms of polarity).

Dataset Description

The proposed MS framework was tested on a benchmark of 23,244 common-sense concepts. Each concept is represented according to the AffectiveSpace model discussed in “The AffectiveSpace Model” section, with dimension M equal to 50 and 100.

The publicly available Sentic API (on http://sentic.net/api) was used to obtain for each concept the level of activation for each affective dimension. According to the hourglass model presented in “The AffectiveSpace Model” section, the Sentic API expresses the levels of activation as an analog number in the range \([-1, 1]\), which are eventually mapped into the associated polarity according to equation Eq. (111). Only 6813 concepts of the benchmark are labeled, while the others are left unlabeled.

Experimental Results

In this section, we compare the performance of different ELMs (ELM-noR, ELM-R, and ELM-SemiR) over the dataset described in “Dataset Description” section, tuned with the different MS strategies described in “Model Selection” section. In particular, for the ELMs we have that:

-

ELM-noR: The set of possible configurations of hyperparameters is every possible combination of the hyperparameters such that \({\mathfrak{H}} = \{ N_h:\ N_h \in \{100;\) 250; 500; 750; \(1000\} \}\)

-

ELM-R: The set of possible configurations of hyperparameters is every possible combination of the hyperparameters such that \({\mathfrak{H}} = \{ N_h:\ N_h \in \{100;\) 250; 500; 750; \(1000\}, \lambda \in 10^{\{-6, -5.5, \ldots , 2.5, 3\}} \}\)

-

ELM-SemiR: The set of possible configurations of hyperparameters is every possible combination of the hyperparameters such that \({\mathfrak{H}} = \{ N_h:\ N_h \in \{100;\) 250; 500; 750; \(1000\},\) \(\lambda _1\) \(\in\) \(10^{\{-6, -5.5, \ldots , 2.5, 3\}} \},\) \(\lambda _2\) \(\in\) \(10^{\{-6, -5.5, \ldots , 2.5, 3\}} \} \}\)

For the MS strategies, the possible options are several but some of them cannot be applied to every version of ELM exploited in this paper. Therefore, Table 1 reports on the match between MS methods and the type of ELM in which the method can be adopted. In Table 1 we refer to the methods with the following acronyms:

-

HO: indicates the usual HO procedure where no unlabeled samples are exploited (see Eq. (65) in “Out-of-Sample Methods” section). Note that \(r=1\), \(v = \lfloor 0.1 n \rfloor\) and the resample procedure is performed without replacement;

-

HO-SEMI: indicates the usual HO procedure where also the unlabeled samples are exploited (see Eq. (71) in “Out-of-Sample Methods” section). Note that we employed the same parameters of HO;

-

KCV: indicates the k-fold cross-validation procedure where no unlabeled samples are exploited (see Eq. (65) in “Out-of-Sample Methods” section). Note that \(n_r = 10\), \(v = \lfloor 0.1 n \rfloor\) (\(k = 10\)) and the resample procedure is performed without replacement;

-

KCV-SEMI: indicates the k-fold cross-validation procedure where also the unlabeled samples are exploited (see Eq. (71) in “Out-of-Sample Methods” section). Note that we employed the same parameters of KCV;

-

BOO: indicates the bootstrap procedure where no unlabeled samples are exploited (see Eq. (65) in “Out-of-Sample Methods” section). Note that \(n_r = 30\), \(t = n\) (\(k = 10\)) and the resample procedure is performed with replacement;

-

BOO-SEMI: indicates the bootstrap procedure where also the unlabeled samples are exploited (see Eq. (71) in “Out-of-Sample Methods” section). Note that we employed the same parameters of BOO;

-

VC-DIMENSION: exploits the VC dimension without employing the unlabeled samples (see Eq. (74) in “Vapnik–Chervonenkis Theory” section)

-

VC-ENTROPY: exploits the VC entropy without employing the unlabeled samples (see Eq. (75) in “Vapnik–Chervonenkis Theory” section)

-

VC-ENTROPY-SEMI: exploits the VC entropy with the employment of the unlabeled samples (see Eq. (79) in “Vapnik–Chervonenkis Theory” section)

-

RC: exploits the Rademacher complexity without employing the unlabeled samples (see Eq. (89) in “Rademacher Complexity Theory” section)

-

RC-SEMI: exploits the Rademacher complexity with the employment of the unlabeled samples (see Eq. (94) in “Rademacher Complexity Theory” section)

-

US\(_\text{EMP}\): exploits the US and the empirical error without employing the unlabeled samples (see Eq. (98) in “Algorithmic Stability Theory” section)

-

US\(_\text{LOO}\): exploits the US and the LOO error without employing the unlabeled samples (see Eq. (99) in “Algorithmic Stability Theory” section)

-

HS: exploits the hypothesis stability without employing the unlabeled samples (see Eq. (101) in “Algorithmic Stability Theory” section)

-

HS-SEMI: exploits the hypothesis stability with the employment of the unlabeled samples (see Eq. (108) in “Algorithmic Stability Theory” section)

The labeled data have been split into two sets: The first 5000 samples have been used for building the model with the different ELMs (ELM-noR, ELM-R, and ELM-SemiR) and with the different MS strategies (HO, HO-SEMI, KCV, KCV-SEMI, BOO, BOO-SEMI, VC-DIMENSION, VC-ENTROPY, VC-ENTROPY-SEMI, RC, RC-SEMI, \(\hbox{US}_{\mathrm{EMP}}\), \(\hbox{US}_{\mathrm{LOO}}\), HS, HS-SEMI) as reported in Table 1, while the rest of the labeled samples, which are 1813, have been kept apart as reference set in order to test the performance of the learned model. The splitting process has been repeated 30 times in order to obtain statistically relevant results.

The experiments have been performed on a Workstation equipped with one Solid Stata Drive disk of 100 GB, one Hard Disk Drive of 1 TB, 128 GB of RAM, 4 Intel Xeon CPU E5-4620 @2.20 GHz, and Windows Server 2012 R2. The code has been written in Fortran 90 and compiled with the Intel Parallel Studio XE 2016 Composer Edition.

In Tables 2 and 3 we reported the error on the reference set and the time needed to build the model for the different combination of ELM and MS strategies (see Table 1), for \(M = 50\) in Table 2 and \(M = 100\) in Table 3, for the five binary classification tasks (Polarity, Pleasantness, Attention, Sensitivity, and Aptitude).

From the results reported in Tables 2 and 3 it is possible to derive three main conclusions:

-

With \(M = 100\) we retrieve models with generally higher accuracy with respect to \(M = 50\). This is reasonable since the more information we feed the learning machine the more accurate results the final model.

-

ELM-SemiR produces models with higher accuracy with respect to ELM-noR and ELM-R. This means that the algorithm is able to exploit and take advantage of the hidden information given by the unlabeled samples. Anyway, note that ELM-SemiR requires more time to build the model because of the unsupervised pre-training phase.

-

The MS strategies which exploit also the unlabeled samples (HO-SEMI, KCV-SEMI, BOO-SEMI, VC-ENTROPY-SEMI, RC-SEMI, HS-SEMI) select models with higher accuracy with respect to their counterparts where the unlabeled samples are not taken into account (HO, KCV, BOO, VC-DIMENSION, VC-ENTROPY, RC, US\(_\text{EMP}\), US\(_\text{LOO}\), HS). As expected from theory, the information hidden in the unlabeled samples helps to improve the performance of the MS strategy. Generally, the difference in terms of time to build the model between the MS methods which exploit the unlabeled samples and the ones which do not is not noticeable.

Besides these general considerations it is possible to derive some other interesting insights from the results of Tables 2 and 3 about the characteristics of each ELM and MS strategy.

-

The in-sample methods (VC-DIMENSION, VC-ENTROPY, RC, US\(_\text{EMP}\), US\(_\text{LOO}\)) usually perform worse, in terms of accuracy of the selected models, with respect to the out-of-sample ones (HO, KCV, BOO), when the unlabeled samples are not exploited. Anyway, the in-sample methods require less computational effort. The only exception is the HS method which generally produces models with higher accuracy than the in-sample methods.

-

When the unlabeled data are exploited for MS purposes, the in-sample methods (RC-SEMI, HS-SEMI) produce models with higher accuracy compared to the out-of-sample ones (HO-SEMI, KCV-SEMI, BOO-SEMI), even if the models selected by the latter methods possess higher accuracy with respect to their counterparts when the unlabeled samples are not exploited (HO, KCV, BOO). The only exception is the VC-ENTROPY-SEMI, which selects more accurate models compared to the VC-ENTROPY but less accurate models than the ones selected with the out-of-sample methods.

-

The out-of-sample method which selects the most accurate models is the bootstrap (BOO and BOO-SEMI), while the less accurate models are selected by the HO method (HO and HO-SEMI). This is due to the fact that the bootstrap represents the statistical method which extracts more information from data (as described in “Out-of-Sample Methods” section). In fact, the bootstrap is also the out-of-sample method which requires more time to build the model, while the HO method is the most computational inexpensive out-of-sample method.

-

The in-sample method which selects the most accurate models is the hypothesis stability (HS and HS-SEMI), while the less accurate models are selected by the US-based methods (US\(_\text{EMP}\) and US\(_\text{LOO}\)) together with the VC-based methods (VC-ENTROPY and VC-ENTROPY-SEMI). This is due to the fact that the VC dimension and the US techniques are not able to properly take into account the properties of the algorithms and the probability distributions that have generated the data (as described in “In-Sample Methods” section). Again, the in-sample method which selects the most accurate models is the also the one with the higher computational requirements.

-

Overall, the HS-SEMI method is the one which selects the most accurate model while requiring less computational effort with respect to the out-of-sample methods.

Finally, we would like to stress that the proposed approach is quite general and can be applied in other applications and other learning algorithms.

Conclusion

In this work, we have addressed the problem of exploiting unlabeled samples to perform an emotion recognition task. In particular, we have shown that the unlabeled samples can be exploited during the formulation of the learning algorithm with particular reference to the ELM. More in details, we proposed a different regularization procedure which is able to incapsulate an unsupervised pre-training hint in a form of a reference hyperplane into the ELM formulation. Moreover, we have shown that unlabeled samples can be extremely useful during another key phase of the learning process, the model selection phase, where the hyperparameters which influence the generalization performances of the learned model must be tuned based on the available data. In particular, we have reviewed all the most important state-of-the-art theoretical approaches to model selection and we have shown how to modify the theoretical framework in order to explicitly take advantage of the available information hidden in the unlabeled samples. The results performed on an affective analogical reasoning problem show that our method is indeed able to exploit the information given by unlabeled samples in order to build models with higher generalization performances with respect to the models built without exploiting them.

Notes

In this paper, we deal with a frequentist approach, which derives confidence intervals for quantities of interest, but the credible intervals of the Bayesian approach can be addressed equally well in the parametric setting [30].

We have exploited the property \(\sqrt{a 2b} \le \frac{a}{2} + b\) in order to remove all the constant terms which do not depend on \({\widehat{\beta }}_{\text{loo}}({\mathscr{A}}_{\mathscr{H}}, {\sqrt{n}}/{2})\).

References

Cambria E. Affective computing and sentiment analysis. IEEE Intell Syst. 2016;31(2):102–7.

Saif H, He Y, Fernandez M, Alani H. Contextual semantics for sentiment analysis of twitter. Inf Process Manag. 2016;52(1):5–19.

Xia R, Xu F, Yu J, Qi Y, Cambria E. Polarity shift detection, elimination and ensemble: a three-stage model for document-level sentiment analysis. Inf Process Manag. 2016;52(1):36–45.

Balahur A, Jacquet G. Sentiment analysis meets social media-challenges and solutions of the field in view of the current information sharing context. Inf Process Manag. 2015;51(4):428–32.

Google. Announcing syntaxnet: the world’s most accurate parser goes open source. http://googleresearch.blogspot.it/2016/05/announcing-syntaxnet-worlds-most.html. 2016.

Roy RS, Agarwal S, Ganguly N, Choudhury M. Syntactic complexity of web search queries through the lenses of language models, networks and users. Inf Process Manag. 2016;52(5):923–48.

Abainia K, Ouamour S, Sayoud H. Effective language identification of forum texts based on statistical approaches. Inf Process Manag. 2016;52(4):491–512.

Sun J, Wang G, Cheng X, Fu Y. Mining affective text to improve social media item recommendation. Inf Process Manag. 2015;51(4):444–57.

Cambria E, Hussain A. Sentic computing: a common-sense-based framework for concept-level sentiment analysis. Switzerland: Cham; 2015.

Poria S, Cambria E, Howard N, Huang G-B, Hussain A. Fusing audio, visual and textual clues for sentiment analysis from multimodal content. Neurocomputing. 2016;174:50–9.

Wang Q, Cambria E, Liu C, Hussain A. Common sense knowledge for handwritten chinese recognition. Cogn Comput. 2013;5(2):234–42.

Cambria E, Hussain A, Durrani T, Havasi C, Eckl C, Munro J. Sentic computing for patient centered application. In: IEEE ICSP, Beijing; 2010. p. 1279–82.

Cambria E, Gastaldo P, Bisio F, Zunino R. An ELM-based model for affective analogical reasoning. Neurocomputing. 2015;149:443–55.

Cambria E, Fu J, Bisio F, Poria S. AffectiveSpace 2: enabling affective intuition for concept-level sentiment analysis. In: AAAI, Austin; 2015. p. 508–14.

Cambria E, Wang H, White B. Guest editorial: big social data analysis. Knowl Based Syst. 2014;69:1–2.

Chakraborty M, Pal S, Pramanik R, Chowdary CR. Recent developments in social spam detection and combating techniques: a survey. Inf Process Manag. 2016;52(6):1053–73.

Kranjc J, Smailović J, Podpečan V, Grčar M, Žnidaršič M, Lavrač N. Active learning for sentiment analysis on data streams: methodology and workflow implementation in the clowdflows platform. Inf Process Manag. 2015;51(2):187–203.

Fersini E, Messina E, Pozzi FA. Expressive signals in social media languages to improve polarity detection. Inf Process Manag. 2016;52(1):20–35.

Cambria E, Livingstone A, Hussain A. The hourglass of emotions. In: Esposito A, Esposito AM, Vinciarelli A, Hoffmann R, Müller CC, editors. Cognitive behavioural systems. Berlin Heidelberg: Springer; 2012. p. 144–57.

Huang G-B, Wang DH, Lan Y. Extreme learning machines: a survey. Int J Mach Learn Cybern. 2011;2(2):107–22.

Huang G, Song S, Gupta JND, Wu C. Semi-supervised and unsupervised extreme learning machines. IEEE Trans Cybern. 2014;44(12):2405–17.

Cambria E, Huang G-B, et al. Extreme learning machines. IEEE Intell Syst. 2013;28(6):30–59.

Huang G-B, Cambria E, Toh K-A, Widrow B, Xu Z. New trends of learning in computational intelligence. IEEE Comput Intell Mag. 2015;10(2):16–7.

Chapelle O, Schölkopf B, Zien A, et al. Semi-supervised learning. Cambridge: MIT Press; 2006.

Zhu X. Semi-supervised learning literature survey. Madison: University of Wisconsin; 2005.

Habernal I, Ptáček T, Steinberger J. Supervised sentiment analysis in Czech social media. Inf Process Manag. 2014;50(5):693–707.

Guo Z, Zhang ZM, Xing EP, Faloutsos C. Semi-supervised learning based on semiparametric regularization, vol. 8. In: SDM, SIAM; 2008. p. 132–42.

Belkin M, Niyogi P, Sindhwani V. Manifold regularization: a geometric framework for learning from labeled and unlabeled examples. J Mach Learn Res. 2006;7:2399–434.

Draper NR, Smith H, Pownell E. Applied regression analysis. New York: Wiley; 1966.

MacKay DJC. Bayesian interpolation. Neural Comput. 1992;4(3):415–47.

Breiman L. Statistical modeling: the two cultures (with comments and a rejoinder by the author). Stat Sci. 2001;16(3):199–231.

Dhar V. Data science and prediction. Commun ACM. 2013;56(12):64–73.

Vapnik VN. An overview of statistical learning theory. IEEE Trans Neural Netw. 1999;10(5):988–99.

Wolpert DH. The lack of a priori distinctions between learning algorithms. Neural Comput. 1996;8(7):1341–90.

Magdon-Ismail M. No free lunch for noise prediction. Neural Comput. 2000;12(3):547–64.

Vapnik VN. Statistical learning theory. New York: Wiley-Interscience; 1998.

Valiant LG. A theory of the learnable. Commun ACM. 1984;27(11):1134–42.

Bartlett PL, Boucheron S, Lugosi G. Model selection and error estimation. Mach Learn. 2002;48(1–3):85–113.

Langford J. Tutorial on practical prediction theory for classification. J Mach Learn Res. 2006;6(1):273.

Anguita D, Ghio A, Oneto L, Ridella S. In-sample and out-of-sample model selection and error estimation for support vector machines. IEEE Trans Neural Netw Learn Syst. 2012;23(9):1390–406.

Kohavi R, et al. A study of cross-validation and bootstrap for accuracy estimation and model selection. In: International joint conference on artificial intelligence; 1995.

Efron B, Tibshirani RJ. An introduction to the bootstrap. London: Chapman & Hall; 1993.