Abstract

This paper presents the development of a system that uses inertial sensors, wireless transceivers and virtual models to monitor upper-limb motor rehabilitation exercises based on Kabat's method. This method involves complex rehabilitation exercises that patients cannot easily reproduce on their own, so they require the permanent assistance of a qualified professional. However, having an expert assist the patient throughout the treatment is very expensive, which makes technologies to monitor this type of exercise necessary. Kabat's method has several applications, e.g. the motor rehabilitation of stroke patients. Stroke is considered the second most common cardiovascular disorder; it affects about 9.6 million people in Europe alone, and an estimated 6 million people worldwide die from it. In addition, the natural aging process increases the number of strokes and, with it, the demand for healthcare and motor rehabilitation services. To help address this problem, we propose an experimental system consisting of inertial sensors, wireless transceivers and virtual models based on the Denavit & Hartenberg model and on Euler and Tait Bryan angles. Using the inertial sensors, the system characterizes the movement performed by the patient, compares it with a predefined movement and indicates whether the movement was performed correctly. Patients can watch both their own movement and the reference (correct) movement. All movements are stored in a database, allowing continuous review by a qualified professional. Experimental tests showed an average system error of 0.97°, which is adequate for the proposed system.

1 Introduction

This article contributes to the characterization of human motion for motor rehabilitation using inertial sensors that form a wireless network. The main novelty is the use of inertial sensors and virtual models to monitor the user's execution of the movements developed by Kabat. Inertial sensors, such as accelerometers, gyroscopes and magnetometers, are often used in industrial applications. In recent years, however, they have been widely used in Biomedical Engineering for the following reasons: they are inexpensive compared to kinematic measurement equipment; their use is not restricted to the laboratory; their small size allows a wide range of motions to be captured in real time; and they are available in various types, models and sensitivities [1–11].

The characterization of human body segment movements has received considerable attention in recent years. For example, Pérez et al. [12] proposed an inertial sensor-based system for measuring and analyzing upper limb movements. Four inertial sensors (Xsens MTi) mounted on a special garment worn by the patient provide quaternions representing the orientation of the patient's upper limb in space. A kinematic model was built to estimate 3D upper limb motion for accurate therapeutic evaluation. The mean correlation coefficient over all movements was 0.957, indicating that the compared signals are almost identical in shape for all degrees of freedom (DOF). Sheikh et al. [13] used the Xsens MTi sensor (Xsens Technologies) to determine the suitability of inertial sensors for motion analysis research. The Xsens MTi results were compared against an electromagnetic motion tracking system (Fastrak, Polhemus) for the motions of an artificial hinge joint and for random 3D motions. The authors concluded that inertial sensors are sufficiently accurate for clinical assessment. Vargas et al. [14] presented a systematic review of studies comparing inertial sensors with several gold standards (electrogoniometry, optoelectronic systems, electromagnetic systems, etc.) and concluded that inertial sensors can offer an accurate and reliable method to study human motion, although the degree of accuracy and reliability is site- and task-specific.

Zhou et al. [15] presented a human motion tracking system that uses two wearable inertial sensors (MT9B, Xsens Technologies) placed near the wrist and elbow joints of the upper limb. The gyroscope turning rates were used to localize the wrist and elbow joints, assuming that the lengths of the two upper limb segments were known in advance. To determine the translation and rotation of the shoulder joint, an equality-constrained optimization technique was adopted that incorporates measurements from the tri-axial accelerometer and gyroscope. Compared with an optical motion tracker, the system had RMS position errors below 0.01 m and RMS angle errors between 2.5° and 4.8°.

Shin et al. [16] used a smartphone inclinometer to measure range of motion in the physical examination and functional evaluation of the shoulder joint. According to the authors, commercially available digital inclinometers are expensive, which may limit their widespread use. The smartphone measurements showed acceptable reliability compared with classical goniometric measurements, and the correlation between the two was fairly high. Hadjidj et al. [17] reviewed sensors used for motor rehabilitation, sensor node designs and communication protocols. According to the authors, rehabilitation supervision has emerged as a new application of wireless sensor networks, with unique communication, signal processing and hardware design requirements.

Zhou & Hu [18] presented a comprehensive review of human motion tracking systems for rehabilitation, comparing the performance of different technologies such as inertial, magnetic and ultrasound sensors. In general, inertial sensors offer high accuracy, compactness, efficient computation and low cost; their main drawback is drift. In another study, Zhou & Hu [19] used two commercially available inertial sensors: one placed on the lower arm, 2 cm from the wrist joint, and the other on the upper arm, about 5 cm from the elbow joint. The purpose of that study was to present an inertial motion tracking system for monitoring upper limb movements in support of a home-based rehabilitation scheme, in which the recovery of stroke patients' motor function through repetitive exercises must be continuously monitored and appropriately evaluated.

People who have experienced trauma and must perform physical rehabilitation exercises, such as stroke patients and car accident victims, often require specialized medical assistance to regain normal motor function. The path to full rehabilitation is often long and intensive, requiring exercises to be repeated for weeks or even months. Hospital treatment, or continuous care by a doctor or physiotherapist at home, is very expensive. However, patients who perform the exercises alone are likely to do them incorrectly, which may hinder their rehabilitation. One way to mitigate this issue is a system that continuously monitors the execution of the rehabilitation protocol, allowing patients to watch their movements and correct them when necessary while performing the set of exercises prescribed by the doctor or physiotherapist, i.e., allowing them to perform the rehabilitation exercises autonomously and correctly. Such a system also reduces the need for hospitalization and the time trained professionals spend on monitoring.

Therefore, this work aims to develop an assistive technology system able to characterize upper limb movements performed according to the method developed by Kabat. The system is expected to:

-

monitor the movements performed by the patient’s arm in 3D space;

-

produce data from the patient's movement for immediate comparison with a predefined movement, called the standard virtual model;

-

store the generated data in a database so that the movement can be reconstructed for further analysis;

-

be robust enough to be used in the patient's home;

-

be inexpensive enough for each patient to have a unit at home.

First, the paper briefly describes the main concepts related to the development of the mechanical model that interacts with the virtual model, the wireless transceivers and the inertial sensors. The following sections describe the developed system, emphasizing the inertial sensors and their integration into a wireless network based on the IEEE 802.15.4 protocol [20], as well as a virtual model that responds to the motions measured by the sensors. Finally, the results of this study and the conclusions drawn from the developed system are presented.

1.1 Mechanical model of human upper limb

The level of detail of the virtual model is determined by the number of polygons that compose it. Processing time grows with the number of polygons, which slows the system response. This project does not require a high-quality model of the human body: the virtual model should be rendered as quickly as possible to provide real-time feedback to the users of the system. Therefore, a virtual model that allows the user to identify the movement being performed in detail is sufficient for the purpose of this project.

To characterize the movements of each segment of the upper limb, the arm joints (shoulder, elbow and wrist) were modeled as mechanical linkages. Seven degrees of freedom (DOF) are used to represent the human arm [21]:

-

shoulder: three rotational DOF (spheroidal joint);

-

elbow: two rotational DOF (ellipsoidal joint);

-

wrist: two rotational DOF (ellipsoidal joint).

These upper limb joints are represented as shown in Fig. 1, with the arm in its resting position, i.e., extended vertically alongside the body with the palm facing the body. Two mechanical models were evaluated in this work, the Denavit & Hartenberg model and the Euler and Tait Bryan angles model, summarized below.

1.2 Denavit & Hartenberg model

Denavit & Hartenberg established a convention for representing mechanical joints that is widely used in robotics. The convention assigns a coordinate frame to each joint and makes it possible to determine the position and configuration of each joint type (pivot or prismatic) in the representation of, for example, a mechanical arm. A pivot joint allows only one DOF (rotation) and no translation, while a prismatic joint allows only one DOF (translation) [22–25]. Each joint has its own axis and reference frame, which must be defined according to the following rules:

-

$O_i$ is the origin of the frame of joint $i$;

-

$X_i$ is the X axis of joint $i$;

-

$A_i$ is the rotation axis of joint $i$;

-

$O_i$ lies on $A_i$ and on the common perpendicular between $A_i$ and $A_{i-1}$;

-

$X_i$ lies along the common perpendicular between $A_i$ and $A_{i-1}$ and is oriented from $A_{i-1}$ to $A_i$;

-

$Z_i$ lies along the joint's rotation axis, and its orientation is arbitrary;

-

$Y_i$ completes the right-handed frame, i.e., it results from the cross product $Y_i = Z_i \times X_i$.

The Denavit & Hartenberg model requires the definition of the parameters α and θ, which describe the inclination of the axes of the second joint, and the parameters r and a, which define the position of the origin of the second joint (see Fig. 2). In this study, only the α and θ parameters had to be identified, according to the following rules:

-

$\alpha_i$ is the angle between $Z_i$ and $Z_{i+1}$, measured about the $X_{i+1}$ axis;

-

$\theta_i$ is the angle between $X_i$ and $X_{i+1}$, measured about the $Z_i$ axis.

For example, to express the axes of the second joint in the reference frame as a function of $\theta_i$, one can use the homogeneous matrix $T_{1,2}$; in general, the axes of joint $i+1$ are obtained from those of joint $i$ using the homogeneous transformation matrix $T_{i,i+1}$ (see Eq. (1)):

The matrix of Eq. (1) is extremely useful because it fully describes the axes of joint $i+1$ as a function of the axes of joint $i$. The first three rows of the first column give the components of the $X_{i+1}$ axis along $X_i$, $Y_i$ and $Z_i$, respectively; the second column describes the $Y_{i+1}$ axis and the third column the $Z_{i+1}$ axis. The first three rows of the last column give the position of point $O_{i+1}$ in X, Y and Z coordinates relative to point $O_i$. In this project the fourth column can be neglected, since the purpose is to describe only the orientation of the axes. The matrix of Eq. (1) therefore reduces to:

The matrix of Eq. (2) can also describe the axes of any joint as a function of the axes of an earlier joint by multiplying the matrices of all intermediate joints, as shown in Eq. (3):

Using the inverse Denavit & Hartenberg model, it is possible to calculate the angles $\theta_i$ from the description of the subsequent axes in terms of the reference axes, using the inverse matrix, as indicated, for example, in Eq. (4):
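
The computations described in Eqs. (1) to (4) can be illustrated with a short Python sketch. Because the equations themselves are not reproduced here, the sketch assumes the standard Denavit & Hartenberg orientation block, a rotation θ about $Z_i$ followed by a rotation α about the new X axis, which agrees with the rules for $\alpha_i$ and $\theta_i$ listed above. The function names (`dh_rotation`, `chain`, `joint_angles`) are illustrative only.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def dh_rotation(theta: float, alpha: float) -> Matrix:
    """3x3 orientation block of T_{i,i+1}: Rz(theta) @ Rx(alpha).

    Column 0 is X_{i+1}, column 1 is Y_{i+1}, column 2 is Z_{i+1},
    each expressed in the axes X_i, Y_i, Z_i of joint i.
    """
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa],
        [st, ct * ca, -ct * sa],
        [0.0, sa, ca],
    ]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def chain(params: Sequence[Tuple[float, float]]) -> Matrix:
    """Compose R_{1,n} = R_{1,2} R_{2,3} ... R_{n-1,n} from (theta, alpha) pairs."""
    result = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for theta, alpha in params:
        result = matmul(result, dh_rotation(theta, alpha))
    return result


def joint_angles(r: Matrix) -> Tuple[float, float]:
    """Invert a single dh_rotation block and recover (theta, alpha)."""
    theta = math.atan2(r[1][0], r[0][0])  # sin(theta), cos(theta)
    alpha = math.atan2(r[2][1], r[2][2])  # sin(alpha), cos(alpha)
    return theta, alpha
```

Because rotation matrices are orthonormal, their inverse is their transpose, so the block of an intermediate joint can be isolated as `matmul(transpose(r12), r13)` and passed to `joint_angles`, which mirrors the inverse-model step described above.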

1.3 Euler and Tait Bryan angles model

Euler angles describe the spatial orientation of rigid bodies: they express any three-dimensional rotation relative to a Cartesian coordinate system with three parameters [26]. Starting from a reference coordinate system, a rotation of α is performed about the z axis. A rotation of β is then performed about the axis N, which is the x axis after the first rotation about z. Finally, a rotation of γ is performed about the z axis resulting from the two preceding rotations. The angles α, β and γ are called Euler angles; this representation is widely used in aeronautics and computer graphics [27]. Tait Bryan angles are very similar to Euler angles and can also describe any three-dimensional rotation of a coordinate system. However, instead of rotating about z, N and z again, the Tait Bryan convention is more general: it rotates about three distinct axes in an arbitrary order, giving six possible rotation sequences.
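
The difference between the two conventions can be made concrete with a minimal Python sketch. It assumes intrinsic rotations (each one about the axes produced by the previous ones), which compose by right-multiplication, so the z, N, z sequence described above corresponds to `intrinsic("zxz", ...)`. The helper names are illustrative.

```python
import math
from itertools import permutations
from typing import List, Sequence

Matrix = List[List[float]]


def axis_rotation(axis: str, angle: float) -> Matrix:
    """Right-handed rotation matrix about a single coordinate axis."""
    c, s = math.cos(angle), math.sin(angle)
    if axis == "x":
        return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    if axis == "y":
        return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]
    if axis == "z":
        return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    raise ValueError(f"unknown axis {axis!r}")


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def intrinsic(sequence: str, angles: Sequence[float]) -> Matrix:
    """Rotate about the moving axes in the given order (right-multiplication)."""
    result = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for axis, angle in zip(sequence, angles):
        result = matmul(result, axis_rotation(axis, angle))
    return result


# Proper Euler angles: the first and last rotations share an axis (z, N, z).
EULER_ZXZ = "zxz"
# Tait Bryan angles use three distinct axes: 3! = 6 possible orders.
TAIT_BRYAN_ORDERS = ["".join(p) for p in permutations("xyz")]
```

With β = 0 the z, N, z sequence degenerates into a single rotation about z by α + γ, which is why Euler angles lose one degree of freedom at that configuration.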

2 Methods

2.1 Experimental system

Figure 3 shows a simplified block diagram of the proposed system, which consists of four main blocks: inertial sensors, data transmission, virtual model and database. The inertial sensor module (ArduIMUV3 units, see Fig. 4, which include accelerometers, gyroscopes and magnetometers) is positioned on the body (shoulder, elbow and wrist joints) and measures the 3D inclination of the upper limb segments. The sensors provide the tilt about three axes (x, y, z) with respect to a rest position, which is calibrated at system startup.

The data transmission module consists of XBee transceivers based on the IEEE 802.15.4 protocol, which provide communication between the inertial sensor modules and a computer. The virtual model block contains a model that replicates the Diagonal Movement of Kabat's method and displays the movement performed by the patient, allowing it to be compared with the standard virtual model. This block was developed with the MakeHuman and Blender software and allows interaction between the patient and the virtual system. A Python program was developed to handle the data and the communication between the XBee transceivers and Blender. Each data packet sent through the XBee network contains data from 9 inertial sensors (accelerometers, gyroscopes and magnetometers) positioned on the body.

The flowchart in Fig. 5 shows the path of the data through the system. Communication between the XBee and the computer was configured in API mode so that the module that sent each packet can be identified. The developed program receives the data and identifies the sensor that sent it. To control the virtual model, a script was developed that opens a socket connection and waits for information about the rotations to be applied to a specific segment of the arm. Upon receiving the data, Blender animates the virtual model accordingly. The Blender virtual model, with strategically placed sensors monitoring the arm movement, is shown in Fig. 6.
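
The sensor-identification step can be sketched in Python for an XBee 802.15.4 module in API mode without byte escaping (AP=1) that receives "RX Packet: 16-bit Address" frames (type 0x81). The frame layout (start delimiter 0x7E, two-byte length, frame data, checksum) follows Digi's API frame format. The address-to-segment table and the payload layout of nine little-endian 16-bit values are hypothetical, since the packet format used in this work is not given.

```python
import struct
from typing import Dict, NamedTuple, Sequence, Tuple

START_DELIMITER = 0x7E
RX_16BIT = 0x81  # "RX Packet: 16-bit Address" frame type (XBee 802.15.4)
PAYLOAD_FORMAT = "<9h"  # HYPOTHETICAL payload: nine little-endian int16 values

# HYPOTHETICAL mapping from each module's 16-bit source address to an arm segment.
SEGMENT_BY_ADDRESS: Dict[int, str] = {0x0001: "arm", 0x0002: "forearm", 0x0003: "hand"}


class Reading(NamedTuple):
    segment: str
    rssi_dbm: int
    values: Tuple[int, ...]


def build_rx16_frame(source: int, rssi_dbm: int, values: Sequence[int]) -> bytes:
    """Assemble a frame, useful for testing the parser without hardware."""
    data = bytes([RX_16BIT, source >> 8, source & 0xFF, -rssi_dbm & 0xFF, 0x00])
    data += struct.pack(PAYLOAD_FORMAT, *values)
    checksum = 0xFF - (sum(data) & 0xFF)
    return bytes([START_DELIMITER, len(data) >> 8, len(data) & 0xFF]) + data + bytes([checksum])


def parse_rx16_frame(frame: bytes) -> Reading:
    """Validate one API frame and identify the module that sent it."""
    if len(frame) < 5 or frame[0] != START_DELIMITER:
        raise ValueError("not an API frame")
    length = (frame[1] << 8) | frame[2]
    if len(frame) != length + 4:
        raise ValueError("length field does not match frame size")
    if length < 5:
        raise ValueError("frame too short for an RX16 packet")
    data, checksum = frame[3:-1], frame[-1]
    # Frame data bytes plus checksum must sum to 0xFF (lowest 8 bits).
    if (sum(data) + checksum) & 0xFF != 0xFF:
        raise ValueError("bad checksum")
    if data[0] != RX_16BIT:
        raise ValueError(f"unexpected frame type 0x{data[0]:02X}")
    source = (data[1] << 8) | data[2]
    rssi_dbm = -data[3]  # the RSSI byte holds the magnitude of a negative dBm value
    # data[4] is the options byte; the RF payload starts at data[5].
    values = struct.unpack(PAYLOAD_FORMAT, data[5:])
    segment = SEGMENT_BY_ADDRESS.get(source, f"unknown-0x{source:04X}")
    return Reading(segment, rssi_dbm, values)
```

Each `Reading` could then be converted into segment rotations and forwarded over the socket connection to the Blender script; keeping the parser separate from the socket code makes it testable without hardware, as the `build_rx16_frame` helper shows.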

2.2 Conversion of data from sensors

To determine the spatial orientation of the ArduIMUV3 inertial module and, consequently, of each segment of the virtual model, the axes of the inertial module must be described relative to the initially determined reference axes. This results in the matrix shown in Eq. (5), where, for example, PrXY is the projection of the x axis of the inertial module on the y axis of the reference, and so forth:

The axes of the inertial module are therefore represented by the columns of this matrix relative to the original axes. The accelerometers were used as inclinometers: the angle α between the axis of an accelerometer and the gravitational acceleration g (see Fig. 7a) determines the projection of gravity Pg on that axis, given by Eq. (6):

The direction of gravity is opposite to the reference z axis, so the output of each accelerometer corresponds to the projection of its axis on the z direction, with the sign reversed, multiplied by the value of gravity. In the proposed application, gravity is the only significant acceleration, so its value corresponds to the magnitude of the vector sum of the accelerations measured on the three axes (see Eq. (7)), where acelX is the value measured by the accelerometer on the x axis of the inertial module, and so on:

Therefore, the normalized projection is given by Eq. (8):
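
Eqs. (6) to (8) amount to treating the static accelerometer reading as the gravity vector. The minimal Python sketch below assumes the sign convention stated above (accelerometer output equals minus g times the projection of the module axis on the reference z axis); real sensors often use the opposite sign, so the convention should be checked against the datasheet. Function names are illustrative.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]


def gravity_magnitude(acel: Vector) -> float:
    """Magnitude of the vector sum of the three axes (Eq. (7)-style): equals g at rest."""
    return math.sqrt(sum(c * c for c in acel))


def reference_z_in_module(acel: Vector) -> Vector:
    """Normalized projection with the sign reversed (Eq. (8)-style):
    the reference z axis expressed in the module's x, y, z axes."""
    g = gravity_magnitude(acel)
    if g == 0.0:
        raise ValueError("no gravity signal")
    return (-acel[0] / g, -acel[1] / g, -acel[2] / g)


def angle_to_gravity_deg(acel: Vector, axis: int) -> float:
    """From Pg = g cos(alpha) (Eq. (6)-style): alpha = acos(Pg / g), in degrees."""
    ratio = acel[axis] / gravity_magnitude(acel)
    return math.degrees(math.acos(max(-1.0, min(1.0, ratio))))  # clamp rounding noise
```

Readings can be passed in any consistent unit (counts, m/s² or g), since only their ratios matter after normalization.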

Only the horizontal component of the magnetometer data is used to determine the horizontal axes. The projection of the magnetic field vector on the vertical axis was determined according to Eq. (9), and this vertical component was then subtracted from the vector measured by the magnetometers:

It now remains to determine the angle between the magnetic field and the Y axis of the human body. Figure 7b shows the y axis in the lateral direction, pointing away from the body. At least one horizontal reference is needed to determine the angle α between the magnetometer measurement and the y axis. This reference can be obtained from a known position of the magnetometer sensors. With the arm extended vertically, as shown in Fig. 8, and the ArduIMUV3 module positioned with its x axis along the longitudinal direction of the upper limb (in this study, the y axis for the arm), the position of the magnetometer relative to the axes of the body is known.

In this position, the axes of the magnetometer coincide with the axes of the system. For the arm, the angle α between the magnetometer measurement and the Y axis is then determined through Eq. (10):

The angle α about the Z axis, measured with the magnetometer, is therefore used to determine the direction of the Y axis, and the X axis is calculated from the cross product of Y and Z. In summary, the reference axes are obtained from the initial position as follows:

-

the Z axis is obtained from the accelerometer measurements, with the sign inverted and normalized;

-

the Y axis is the magnetometer measurement minus its projection on the Z axis, rotated by α about the Z axis and normalized;

-

the X axis is calculated from the cross product of Y and Z.
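
The three-step procedure above can be sketched in Python as follows. The sketch assumes that all vectors are expressed in the module's own x, y, z axes, that the accelerometer follows the sign convention described earlier, and that α is measured counterclockwise about Z (right-hand rule); Eq. (10) is not reproduced here, so α is simply taken as an input. Names are illustrative.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]


def dot(a: Vector, b: Vector) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vector, b: Vector) -> Vector:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(v: Vector) -> Vector:
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def reference_axes(acel: Vector, mag: Vector, alpha_deg: float) -> Tuple[Vector, Vector, Vector]:
    """Return the reference X, Y, Z axes (in module coordinates) from the start-up pose."""
    # Z: accelerometer reading with the sign inverted, normalized.
    z = normalize((-acel[0], -acel[1], -acel[2]))
    # Horizontal magnetic field: subtract the projection on Z (Eq. (9)-style).
    m_dot_z = dot(mag, z)
    horizontal = normalize(tuple(m - m_dot_z * zc for m, zc in zip(mag, z)))
    # Y: rotate the horizontal field by alpha about Z. Because horizontal is
    # perpendicular to Z, Rodrigues' formula reduces to v cos(a) + (Z x v) sin(a).
    a = math.radians(alpha_deg)
    z_cross_h = cross(z, horizontal)
    y = normalize(tuple(h * math.cos(a) + c * math.sin(a) for h, c in zip(horizontal, z_cross_h)))
    # X: cross product of Y and Z, giving a right-handed frame.
    x = cross(y, z)
    return x, y, z
```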

With the reference axes normalized, the dot products between the axes calculated from the sensors and the reference axes are computed; these give the values of the second and third rows of the projection matrix.
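
The dot-product step can be written as a small function that builds a matrix in the form of Eq. (5) from two sets of unit axes expressed in a common frame. The indexing below (row = reference axis, column = module axis) follows the statement that the module axes are the columns of the matrix; it is a sketch, and the function name is illustrative.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, float, float]


def dot(a: Vector, b: Vector) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def projection_matrix(module_axes: Sequence[Vector], reference_axes: Sequence[Vector]) -> List[List[float]]:
    """Entry [j][i] is (reference axis j) . (module axis i).

    For example, [1][0] is PrXY, the projection of the module x axis on the
    reference y axis, so column i lists module axis i in reference coordinates.
    """
    return [[dot(ref, mod) for mod in module_axes] for ref in reference_axes]
```

For a module rotated 90° about the reference z axis, the result is the familiar rotation matrix about z, which is a quick sanity check on the row and column ordering.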

3 Results

Figure 9 shows a photo of the prototype (inertial sensor (IMU) and XBee). A 9 V battery powers the ArduIMU, and an output port of the ArduIMU powers the XBee. The XBee network was configured in star topology, i.e., all XBees send data to the full-function device (FFD) connected to the computer. Table 1 shows the configuration of the star network.

Tests were performed to verify the transmission and reception of packets with standardized data; the results are shown in Table 2. They show that the wireless network performs adequately for the purpose of this study: packet errors below 10 % do not interfere with the operation of the system, and on this basis a transmission frequency of 20 Hz was selected.

3.1 Application of Denavit & Hartenberg model

According to Fig. 1, the human upper limb is represented by three major joints, the shoulder, elbow and wrist, modeled as a ball joint, an ellipsoid and another ellipsoid, respectively. However, in the Denavit & Hartenberg model all rotations must be represented as pivot joints. The ball joint is therefore represented by three orthogonal pivot joints, and each ellipsoid by two pivot joints. Figure 10 illustrates the joints in the form of pivot links. All matrices determined for this system are based on Eqs. (1) to (4).

The axes $O_1$ of the first pivot link are invariant, while all other axes rotate according to the rotations of the preceding joints. The axes $O_4$ represent the axes of the arm after the shoulder rotations, $O_6$ the axes of the forearm and $O_8$ the axes of the hand. The arm is always rotated relative to the axes of the body, the forearm relative to the axes of the arm, and the hand relative to the axes of the forearm. Note, however, that the axes of the Denavit & Hartenberg model do not correspond directly to the axes used by Blender; Table 3 presents the conversion rules that solve this problem. The Denavit & Hartenberg parameters for the upper limb are shown in Table 4.

Determining the rotation angles from the sensor inclinations requires the inverse Denavit & Hartenberg model. In this particular case, however, the problem can be split into three parts, i.e., the rotation angles of each segment are determined relative to the previous segment. The rotations of the arm were defined relative to the absolute axes, the rotations of the forearm relative to the axes of the arm after its rotation, and the rotations of the hand relative to the axes of the forearm. This segmentation is useful because only two variables, the DOF of the wrist, must be considered to define, for example, the rotations of the hand; these rotations can thus be defined without evaluating the rotations of the forearm. This is possible for two reasons: there is a sensor on each segment of the upper limb that provides its inclination with respect to the absolute axes, and Blender receives as input the rotation about the axes of the previous segment rather than about the absolute axes. The angles of rotation of the arm relative to the body, of the forearm relative to the arm and of the hand relative to the forearm were therefore assessed independently. The sensor on the user's arm provides the inclination of the axes $O_4$ with respect to the absolute axes, i.e., $O_1$. The matrix provided by the sensor is therefore the generic 3 × 3 matrix of Eq. (11):

The inclination of the axes $O_4$ depends only on the three possible rotations of the shoulder joint, $\theta_1$, $\theta_2$ and $\theta_3$; that is, the matrix describing the axes of the arm as a function of the absolute axes is equal to $T_{14}$, shown in Eq. (12):

In $T_{14}$, the term in the third row and second column depends solely on $\theta_2$, so $\theta_2$ can be determined by equating the corresponding terms of the two matrices. The inverse transformation is given in Eq. (13):
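
Because Table 4 and Eq. (12) are not reproduced here, the following Python sketch assumes, purely for illustration, that the three shoulder pivots compose as $R = R_z(\theta_1) R_x(\theta_2) R_y(\theta_3)$. With this order the element in the third row and second column equals $\sin\theta_2$ alone, as the text describes for $T_{14}$. The sketch shows where the two valid solutions come from: $\cos\theta_2$ can take either sign. Function names are illustrative.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def rot(axis: str, angle: float) -> Matrix:
    c, s = math.cos(angle), math.sin(angle)
    return {
        "x": [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]],
        "y": [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]],
        "z": [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]],
    }[axis]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def shoulder_rotation(t1: float, t2: float, t3: float) -> Matrix:
    """ASSUMED shoulder chain: Rz(t1) Rx(t2) Ry(t3)."""
    return matmul(matmul(rot("z", t1), rot("x", t2)), rot("y", t3))


def shoulder_solutions(r: Matrix) -> List[Tuple[float, float, float]]:
    """Both (t1, t2, t3) triples that reproduce r under the assumed chain."""
    # Under this chain: r[2][1] = sin t2, r[0][1] = -sin t1 cos t2,
    # r[1][1] = cos t1 cos t2, r[2][0] = -cos t2 sin t3, r[2][2] = cos t2 cos t3.
    c2_abs = math.hypot(r[0][1], r[1][1])
    if c2_abs < 1e-9:
        raise ValueError("cos(t2) = 0: t1 and t3 cannot be separated")
    solutions = []
    for c2 in (c2_abs, -c2_abs):  # the two signs of cos(t2)
        t2 = math.atan2(r[2][1], c2)
        t1 = math.atan2(-r[0][1] / c2, r[1][1] / c2)
        t3 = math.atan2(-r[2][0] / c2, r[2][2] / c2)
        solutions.append((t1, t2, t3))
    return solutions
```

The second triple differs from the first by π in $\theta_1$ and $\theta_3$ and replaces $\theta_2$ by π − $\theta_2$ (wrapped to the range of `atan2`), yet both reproduce the same matrix.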

This yields two valid solutions for $\theta_1$, $\theta_2$ and $\theta_3$, both of which produce the same rotation matrix. The same reasoning, now with $O_6$ rotating relative to $O_4$, can be used to find the rotation angles of the forearm relative to the arm. However, the inclination matrix provided by the sensor is measured relative to the absolute axes, not to the axes $O_4$. The inclination of the axes $O_6$ must therefore be rewritten in terms of the axes $O_4$ by projecting the $O_6$ axes onto the $O_4$ axes, i.e., by computing the scalar products between the axes of $O_6$ and $O_4$. For example, the scalar product of the x axis of $O_6$ with the x axis of $O_4$ gives the first term of the matrix. The inclination matrix of the axes $O_6$ relative to the axes $O_4$ ($I_{46}$) is given by Eq. (14):

where $I_4$ is the inclination of the axes $O_4$ relative to the absolute axes and the symbol ′ denotes the matrix transpose. Equating $I_{46}$ to the matrix $T_{46}$ determines the two angles of the forearm rotation, given by Eq. (15):
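
Eq. (14) and its wrist counterpart $I_{68}$ both compute the orientation of a child segment in the axes of its parent. A minimal Python sketch, assuming each inclination matrix stores the segment axes as columns in absolute coordinates (as in Eq. (5)), is shown below; `rot_x` and `rot_z` are included only to build example orientations.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def relative_inclination(parent: Matrix, child: Matrix) -> Matrix:
    """I_parent,child = I_parent' I_child.

    Entry [i][j] is the scalar product of parent axis i with child axis j,
    e.g. [0][0] = (x axis of the parent) . (x axis of the child).
    """
    return matmul(transpose(parent), child)


def rot_x(angle: float) -> Matrix:
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(angle: float) -> Matrix:
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
```

With the sensor matrices of the three segments, `relative_inclination(I4, I6)` would give $I_{46}$ and `relative_inclination(I6, I8)` would give $I_{68}$ (variable names hypothetical).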

In this case there is only one correct solution. As for the forearm, the rotation of the hand requires projecting the $O_8$ axes onto the $O_6$ axes, according to Eq. (16):

Equating the matrix $I_{68}$ to the matrix $T_{68}$ gives the rotation values for the wrist (see Eq. (17)). Again, there is only one correct solution in this case.

3.2 Application of Tait Bryan angles

To select the best way to represent the rotation angles of each upper limb segment, it is useful to analyze how Blender performs rotations of the segments of the virtual model. Since Blender uses extrinsic Tait Bryan angles to orient its bones in space, it is convenient to transform the projected axes into the three extrinsic Tait Bryan rotation angles. The order of the rotation axes is arbitrary; it only has to be specified to Blender. The order Z, Y, X was selected, with the rotation about the Z axis called γ, about the Y axis β and about the X axis α. The matrix resulting from the three rotations must equal the matrix of projected axes, i.e., the generic matrix shown in Eq. (11). The rotation matrices about the x, y and z axes are given in Eqs. (18) to (20):

The matrix of the combined rotation about the three axes is given by Eqs. (21) and (22):

After extensive algebraic manipulation, Eq. (23) is obtained:

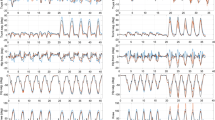
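
Eqs. (21) to (23) are not reproduced here, but the extraction they lead to can be sketched in Python. For extrinsic rotations about the fixed axes in the order Z (γ), Y (β), X (α), later rotations premultiply, so the combined matrix is $R = R_x(\alpha) R_y(\beta) R_z(\gamma)$. Its first row is $(\cos\beta\cos\gamma, -\cos\beta\sin\gamma, \sin\beta)$ and its last column is $(\sin\beta, -\sin\alpha\cos\beta, \cos\alpha\cos\beta)$. This is an independent derivation under that assumption, valid away from $\cos\beta = 0$, and is not necessarily written in the same form as Eq. (23).

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def rot_x(a: float) -> Matrix:
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(b: float) -> Matrix:
    c, s = math.cos(b), math.sin(b)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(g: float) -> Matrix:
    c, s = math.cos(g), math.sin(g)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def extrinsic_zyx(alpha: float, beta: float, gamma: float) -> Matrix:
    """Rotate gamma about fixed Z, then beta about fixed Y, then alpha about fixed X."""
    return matmul(rot_x(alpha), matmul(rot_y(beta), rot_z(gamma)))


def tait_bryan_angles(r: Matrix) -> Tuple[float, float, float]:
    """Return (alpha, beta, gamma) with |beta| < 90 deg for r = Rx(alpha) Ry(beta) Rz(gamma)."""
    cos_beta = math.hypot(r[0][0], r[0][1])
    if cos_beta < 1e-9:
        raise ValueError("beta = +/-90 deg: alpha and gamma are not unique")
    beta = math.atan2(r[0][2], cos_beta)
    gamma = math.atan2(-r[0][1], r[0][0])
    alpha = math.atan2(-r[1][2], r[2][2])
    return alpha, beta, gamma
```

Applied to the projection matrix of a segment, `tait_bryan_angles` returns the three angles in the order in which they would be handed to the animation.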

3.3 Calibration of sensors

The accelerometers were configured for a full-scale range of ±4 g and the gyroscopes for ±2000°/s. The accelerometer and gyroscope signals were digitized by a 16-bit ADC, and the magnetometer signals by a 12-bit ADC. The data were then filtered by a 10 Hz low-pass filter (available in the ArduIMU itself). Each sensor was calibrated to determine its offset and sensitivity, and all inertial sensor data were compared with a commercial inertial sensor (Xsens MTi-100). The offset is determined by Eq. (24):

where Max and Min are the maximum and minimum values measured by the sensor. The sensitivity of each sensor is determined by Eq. (25):

The calibrated output of each sensor is given by Eq. (26):
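
Eqs. (24) to (26) are not reproduced here. The Python sketch below assumes the common two-position calibration, in which Max and Min are the readings taken with an accelerometer axis pointing along and against gravity (±1 g): the offset is their midpoint, the sensitivity is half their difference per unit of the reference magnitude, and the calibrated output removes the offset and divides by the sensitivity. These forms, the parameter `reference` and the example counts are assumptions.

```python
from typing import Tuple


def calibrate(max_raw: float, min_raw: float, reference: float = 1.0) -> Tuple[float, float]:
    """Return (offset, sensitivity) from the extreme readings of one axis.

    reference is the physical magnitude applied at Max and Min (ASSUMED 1 g
    for an accelerometer axis), so sensitivity is in counts per unit.
    """
    offset = (max_raw + min_raw) / 2.0  # midpoint of the extremes
    sensitivity = (max_raw - min_raw) / (2.0 * reference)  # ASSUMED form of Eq. (25)
    return offset, sensitivity


def to_physical(raw: float, offset: float, sensitivity: float) -> float:
    """ASSUMED form of Eq. (26): remove the offset, then scale."""
    return (raw - offset) / sensitivity
```

For example, hypothetical extremes of 8300 and −8100 counts give an offset of 100 counts and a sensitivity of 8200 counts/g, so a raw reading of 8300 maps back to 1 g.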

This procedure was performed for all sensors; Table 5 shows, as an example, the transfer functions of the accelerometers used.

After many trials, the error for each axis of each sensor, and hence for each motion, was determined; for example, the error for a 45° rotation about the X axis was:

For all inertial sensors used, the calculated error was similar to the one presented above (Eq. (27)), i.e., typically less than 0.06. The array of projections could be transformed into rotation angles using either Euler or Tait Bryan angles, and the developed system converted the array of projections into the exact angles in a form compatible with Blender. The virtual model generated by MakeHuman and animated by Blender was very user friendly, making it possible to identify the position of the upper limb measured by the sensors. However, Blender does not support a very high update rate for the positions of the model segments: with a data rate of 100 Hz, the animation showed a significant delay, whereas with a rate of 20 Hz per sensor it was satisfactory, with no noticeable delay. Moreover, 20 Hz is compatible with the movements performed in this type of test.
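
The text does not state how the angular errors, or the 0.97° average reported in the abstract, were computed. One common way to express the orientation error between a measured and a reference inclination matrix as a single angle is the rotation angle of $R_{ref}' R_{meas}$, obtained from its trace. The Python sketch below implements that metric as an illustration, not as the procedure used in this work; the function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def rot_x_deg(deg: float) -> Matrix:
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def orientation_error_deg(reference: Matrix, measured: Matrix) -> float:
    """Angle of the rotation taking reference to measured:
    acos((trace(R_ref' R_meas) - 1) / 2), in degrees."""
    # trace(A' B) equals the sum of element-wise products, so no transpose is needed.
    trace = sum(reference[i][j] * measured[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp rounding noise
    return math.degrees(math.acos(cos_angle))
```

Unlike per-axis differences, this single angle does not depend on how the rotation is parameterized, which makes it convenient for comparing Euler and Tait Bryan outputs.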

Figure 11 shows a picture of the system, with a volunteer performing Kabat's diagonal movement. The time delay of the system did not exceed 0.2 s, which is consistent with its intended use. Ongoing trials with stroke patients have demonstrated the effectiveness of the proposed system: according to health professionals, its use has reduced the training time needed to perform Kabat's movement correctly.

4 Conclusion

The initial design goal was achieved: the developed system can monitor the movements of Kabat's method. It is portable, inexpensive and built with open-source software, uses only inertial sensors, and allows the movement performed by the user to be compared with a standard movement. Errors did not exceed 0.97°, which indicates that the system is suitable for the proposed application.

References

Côrrea D, Balbinot A. Accelerometry for the motion analysis of the lateral plane of the human body during gait. Health Technol. 2011;1(1):35–46.

Armstrong WJ, McGregor SJ, Yaggie JA, Bailey JJ, Johnson SM, Goin AM, et al. Reliability of mechanomyography and triaxial accelerometry in the assessment of balance. J Electromyogr Kinesiol. 2010;20:726–31.

Moreau M, Sibert S, Buerket A, Schlecht E. Use of a tri-axial accelerometer for automated recording and classification of goat’s grazing behavior. Appl Anim Behav Sci. 2009;119:158–70.

Robert B, White BJ, Renter DG, Larson RL. Evaluation of three-dimensional accelerometers to monitor and classify behavior patterns in cattle. Comput Electron Agric. 2009;67:80–4.

Bruxel Y, Balbinot A, Zaro MA. Evaluation of impact transmissibility on individuals with shoes and barefoot during human gait. Measurement. 2013;46:2547–54.

Schulc E, Unterberger I, Saboor S, Hilbe J, Ertl M, Ammenwerth E, et al. Measurement and quantification of generalized tonic-clonic seizures in epilepsy patients by means of accelerometry—an explorative study. Epilepsy Res. 2011;95(1–2):173–83. doi:10.1016/j.eplepsyres.2011.02.010.

Rodes CE, Chillrud SN, Haskell WL, Intille SS, Albinali F, Rosenberger ME. Predicting adult pulmonary ventilation volume and wearing compliance by on-board accelerometry during personal level exposure assessments. Atmos Environ. 2012;57:126–37.

Young DJ, Zurcher MA, Semaan M, Megerian CA, Ko WH. MEMS capacitive accelerometer-based middle ear microphone. IEEE Trans Biomed Eng. 2012;59(12):3283–92.

Tura A, Rocchi L, Raggi M, Cutti AG, Chiari L. Recommended number of strides for automatic assessment of gait symmetry and regularity in above-knee amputees by means of accelerometry and autocorrelation analysis. J Neuroeng Rehab. 2012;9(11):1–8.

LeMoyne R, Coroian C, Mastroianni T, Grundfest W. Accelerometers for quantification of gait and movement disorders: a perspective review. J Mech Med Biol. 2008;8(2):137–52.

Kim H, Shin SH, Kim JK, Park YJ, Oh HS, Park YB. Cervical coupling motion characteristics in healthy people using a wireless inertial measurement unit. Evid Based Complement Alternat Med. 2013;2013:8. doi:10.1155/2013/570428.

Pérez R, Costa Ú, Torrent M, Solana J, Opisso E, Cáceres C, et al. Upper limb portable motion analysis system based on inertial technology for neurorehabilitation purposes. Sensors. 2010;10:10733–51.

Sheikh KS, Bryant EC, Glazzard C, Hamel A, Lee RYW. Feasibility of using inertial sensors to assess human movement. Man Ther. 2010;15:122–5.

Vargas AIC, Mercant AG, Williams JM. The use of inertial sensors system for human motion analysis. Phys Ther Rev. 2010;15(6):462–73.

Zhou H, Stone T, Hu H, Harris N. Use of multiple wearable inertial sensors in upper limb motion tracking. Med Eng Phys. 2008;30:123–33.

Shin SH, Ro DH, Lee OS, Oh JH, Kim SH. Within-day reliability of shoulder range of motion measurement with a smartphone. Man Ther. 2012;17:298–304.

Hadjidj A, Souil M, Bouabdallah A, Challal Y, Owen H. Wireless sensor networks for rehabilitation applications: challenges and opportunities. J Netw Comput Appl. 2013;36:1–15.

Zhou H, Hu H. Human motion tracking for rehabilitation: a survey. Biomed Signal Process Control. 2008;3:1–18.

Zhou H, Hu H. Inertial sensors for motion detection of human upper limbs. Sens Rev. 2007;27(2):151–8.

IEEE Standard for Information technology. Telecommunications and information exchange between systems— Local and metropolitan area networks— Specific requirements— Part 15.4: Wireless Medium Access Control (MAC) and Physical Layer (PHY) Specifications for Low-Rate Wireless Personal Area Networks (WPANs)

Tortora GJ, Derrickson BH. Principles of anatomy and physiology. New York: Wiley; 2013. p. 1344.

Denavit J, Hartenberg RS. A kinematic notation for lower-pair mechanisms based on matrices. Trans ASME J Appl Mech. 1955;23:215–21.

Paul R. Robot manipulators: mathematics, programming, and control: the computer control of robot manipulators. Cambridge: MIT Press; 1981.

Spong MW, Vidyasagar M. Robot dynamics and control. New York: Wiley; 1989.

Legnani G, Casolo F, Righettini P, Zappa B. A homogeneous matrix approach to 3D kinematics and dynamics — I. Theory. Mech Mach Theory. 1996;31(3):573.

Genta G, Morello L. The automotive chassis: system design. New York: Springer; 2009. p. 690.

Faugeras O. Three-dimensional computer vision—a geometric viewpoint. Cambridge: The MIT Press; 1993.

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical statement

The authors declare that they have no competing interests.

We declare that no party having a direct interest in the results of the research supporting this article has or will confer a benefit on us or on any organization with which we are associated.

All subjects undertook informed-consent procedures as approved by the Research Ethics Committee.

About this article

Cite this article

Balbinot, A., de Freitas, J.C.R. & Côrrea, D.S. Use of inertial sensors as devices for upper limb motor monitoring exercises for motor rehabilitation. Health Technol. 5, 91–102 (2015). https://doi.org/10.1007/s12553-015-0110-6

DOI: https://doi.org/10.1007/s12553-015-0110-6