Abstract

We present an effective heuristic for the Steiner Problem in Graphs. Its main elements are a multistart algorithm coupled with aggressive combination of elite solutions, both leveraging recently-proposed fast local searches. We also propose a fast implementation of a well-known dual ascent algorithm that not only makes our heuristics more robust (by dealing with easier cases quickly), but can also be used as a building block of an exact branch-and-bound algorithm that is quite effective for some inputs. On all graph classes we consider, our heuristic is competitive with (and sometimes more effective than) any previous approach with similar running times. It is also scalable: with long runs, we could improve or match the best published results for most open instances in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Given an edge-weighted, undirected graph \(G = (V,E)\) and a set \(T \subseteq V\) of terminals, the Steiner Problem in Graphs (SPG) is that of finding a minimum-cost tree that contains all vertices in T. This has applications in many areas, including computational biology, networking, and circuit design [9]. Unfortunately, it is NP-hard not only to find an optimal solution [30], but also to approximate it within a factor of 96/95 [10]. The best known approximation ratio is 1.39 [8, 24] (see [46] for a 1.55 approximation). Given its practical importance, there is a wealth of exact algorithms [12, 18, 25, 31, 33, 38, 40, 41, 45, 51] and heuristics [3, 6, 17, 18, 37, 44, 45, 52] to deal with real-world instances. State-of-the-art algorithms use a diverse toolkit that includes linear relaxations, branch-and-bound, reduction tests (preprocessing), and primal and dual heuristics.

Our goal in this paper is to develop an algorithm that is, above all, robust. For any input instance, regardless of its characteristics, we want to quickly produce a good solution. Moreover, the algorithm should scale well: when given more time to run, it should produce better solutions.

Our basic algorithm follows the principles of a heuristic proposed by Ribeiro, Uchoa, and Werneck [45]: it is a multistart algorithm with an evolutionary component, using perturbation for randomization. Under the hood, however, we introduce significant improvements that lead to much better results.

First, we leverage fast local search algorithms recently proposed by Uchoa and Werneck [52], which are asymptotically faster (in theory and practice) than previous approaches. Second, we propose a cascaded combination strategy, which immediately combines each fresh (newly-created) solution with multiple entries from a pool of elite solutions, leading to much quicker convergence. Third, we counterbalance this intensification strategy with a series of diversification measures (including careful perturbation and replacement policies in the pool) in order to explore the search space more comprehensively.

As a result, long runs of our algorithm can match or even improve the best published solutions (at the time of writing) for several open instances in the literature. For easier inputs, our basic algorithm still finds very good results, but for some graph classes it can be slower than alternative approaches that rely heavily on preprocessing and small duality gaps [12, 40].

To make our overall approach more robust, we include some preprocessing routines. Moreover, we propose a Guarded Multistart algorithm, which runs a branch-and-bound routine at the same time as our basic (primal-only) algorithm. For easy instances, these two threads can help each other, often leading to drastic reductions in total CPU time. To compute lower bounds, we propose a novel and efficient implementation of a well-known combinatorial dual ascent algorithm due to Wong [58]. For several hard instances, our branch-and-bound routine finds provably optimal solutions faster than any published algorithm.

Even with these optimizations, there are important graph classes (such as some VLSI instances) in which our method is not as effective as other approaches, notably those based on advanced reduction techniques and linear programming [12, 40] or on dynamic programming [25] (when the number of terminals is small). Even in such cases, however, the solutions found by our approach are not much worse, confirming its robustness. Overall, our algorithm provides a reliable, general-purpose solution for the Steiner Problem in Graphs.

This paper is organized as follows. Section 2 explains our multistart algorithm. Section 3 discusses our lower-bounding techniques, including branch-and-bound. Section 4 shows how preprocessing and lower-bounding make our basic algorithm more robust. Section 5 has experiments, and we conclude in Sect. 6.

Notation The input to the Steiner Problem in Graphs is an undirected graph \(G = (V,E)\) and a set \(T \subseteq V\) of terminals. Each edge \(e = (v,w)\) has an associated nonnegative cost (length) denoted by \( cost (e)\) or \( cost (v,w)\). A solution \(S = (V_S, E_S)\) is a tree with \(T \subseteq V_S \subseteq V\) and \(E_S \subseteq E\); its cost is the sum of the costs of its edges. Our goal is to find a solution of minimum cost.

2 Basic algorithm

Our basic algorithm follows the multistart approach and runs in M iterations (where M is an input parameter). Each iteration generates a new solution from scratch using a constructive algorithm (with randomization), followed by local search. We also maintain a pool of elite solutions with good solutions found so far, including the very best one. The main feature of our algorithm is a cascaded combination strategy, which aggressively combines a new solution with several existing ones. This finds very good solutions soon, but has a very strong intensification effect. To counterbalance it, we look for diversification in other aspects of the algorithm. The outline of the entire algorithm is as follows:

-

1.

Create an empty pool P of elite solutions with capacity \(\lceil {\sqrt{M/2}}\rceil \).

-

2.

Repeat for M iterations:

-

(a)

Generate a new solution S using a constructive algorithm, local search, and randomization.

-

(b)

Try to add S to the pool P.

-

(c)

Generate a solution \(S'\) by combining S with solutions in the pool P.

-

(d)

Try to add \(S'\) to the pool P.

-

(a)

-

3.

Return the best solution in the pool P.

The remainder of this section describes details omitted from this outline. Section 2.1 explains the local search routines, Sect. 2.2 describes how fresh solutions are generated, Sect. 2.3 deals with the cascaded combination algorithm, and Sect. 2.4 addresses the insertion and eviction policies for the pool.

2.1 Local search

A local search algorithm tries to improve an existing solution S by examining a neighborhood \(\mathcal{N}(S)\) of S, a set of solutions obtainable from S by performing a restricted set of operations. Evaluating \(\mathcal{N}(S)\) consists of either finding an improving solution \(S'\) (i.e., one with \(cost(S') < cost(S)\)) or proving that no such \(S'\) exists in \(\mathcal{N}(S)\). A local search heuristic repeatedly replaces the current solution by an improving neighbor until it reaches a local optimum. Uchoa and Werneck [52] present algorithms to evaluate in \(O(|E| \log |V|)\) time four natural (and well-studied) neighborhoods: Steiner-vertex insertion, Steiner-vertex elimination, key-path exchange, and key-vertex elimination.

The first two represent a solution \(S = (V_S,E_S)\) in terms of its set \(V_S {\setminus } T\) of Steiner vertices. The minimum spanning tree (MST) of the subgraph of G induced by \(V_S\) (which we denote by \(\text{ MST }(G[V_S])\)) costs no more than S. In particular, if S is optimal, so is \(\text{ MST }(G[V_S])\). Uchoa and Werneck use dynamic graph techniques to efficiently evaluate neighborhoods defined by the insertion or removal of a single Steiner vertex [35, 36, 48, 54]. These neighborhoods had been considered in metaheuristics before [3, 44, 45], but evaluation required \(O(|V|^2)\) time for insertions and O(|E| |V|) time for removals.

The other two neighborhoods represent a solution S in terms of its key vertices \(K_S\), which are Steiner vertices with degree at least three in S. If S is optimal, it costs the same as the MST of its distance network restricted to \(K_S \cup T\) (the complete graph on \(|K_S \cup T|\) vertices whose edge lengths reflect shortest paths in G). Uchoa and Werneck show that the neighborhood corresponding to the elimination of a single key vertex can be evaluated in \(O(|E| \log |V|)\) time. They prove the same bound for the key-path exchange local search [14, 17, 53], which attempts to replace an existing key path (linking two vertices of \(K_S \cup T\) in S) by a shorter path between the components it connects. Both implementations improve on previous time bounds by a factor of O(|T|).

In this paper, we take the local searches mostly as black boxes, but use the fact that these implementations work in passes. If there is an improving move in the neighborhood, a pass is guaranteed to find one in \(O(|E| \log |V|)\) time. To accelerate convergence, the algorithms may perform multiple independent moves in the same pass (within the same time bound). In practice, there are almost always fewer than 10 passes, with most of the improvements achieved early on [52].

We follow Uchoa and Werneck and use what they call the vq local search within our algorithm. It alternates between a pass that evaluates Steiner-vertex insertion (v) and a pass that evaluates (simultaneously) both key-vertex removal and key-path exchange (q). Since in practice Steiner vertex removal (u) is rarely better than key-vertex removal, our algorithm does not use it.Footnote 1

2.2 Generating new solutions

New solutions are generated by a constructive algorithm followed by local search, using randomization. Instead of making our algorithms (constructive heuristic and local search) randomized, we follow Ribeiro et al. [45] and apply perturbations to the edge costs instead, preserving the running time guarantees of all algorithms.

Using the perturbation To build a constructive solution, we apply a random perturbation to the edge costs (details will be given later), then run a near-linear time (in practice) implementation [39] of the shortest-path heuristic (SPH) [49]. Starting from a random root vertex, SPH greedily adds to the solution the entire shortest path to the terminal that is closest (on the perturbed graph) to previously picked vertices.

Ribeiro et al. [45] suggest applying local search to the constructive solution, but using the original (unperturbed) costs during local search. Since the constructive solution can be quite far from the local optimum, however, the effects of the perturbation may disappear quite soon, hurting diversification.

We propose an alternative approach, which leverages the fact that our local searches work in passes. We start the local search on the perturbed instance and, after each pass it makes, we dampen the perturbation, bringing all costs closer to their original values. For each edge e with original (unperturbed) cost \( cost (e)\), let \( cost _i(e)\) be its (perturbed) cost at the end of pass i. For pass \(i+1\), we set \( cost _{i+1}(e) = \alpha cost _i(e) + (1 - \alpha ) cost (e)\), where \(0< \alpha < 1\) is a decay factor (we use \(\alpha = 0.5\)). This dampened approach makes better use of the guidance provided by the perturbation, thus increasing diversification. For efficiency, after three passes with perturbation, we restore the original (unperturbed) costs and run the local search until a local optimum is reached. Uchoa and Werneck [52] (Table 5) show that, on non-trivial graph classes, the local search takes between three and four passes (on average) to reach a local minimum (on the unperturbed graph). By making the local search operate on a (decreasingly) perturbed graph for the first three iterations, we improve diversity with minimal effect on the time to reach an (unperturbed) local optimum, as Sect. 5.1 will show.

Computing the perturbation We now return to the issue of how initial perturbations are computed. Ribeiro et al. propose a simple edge-based approach, in which the cost of each edge is multiplied by a random factor (between 1.0 and 1.2). This is reasonably effective, but has a potential drawback: because edges are independent, the perturbations applied to each edge incident to any particular vertex tend to cancel out one another. We thus propose a vertex-based perturbation, which associates an independent random factor to each vertex in the graph. The perturbed cost of an edge (u, v) is then its original cost multiplied by the average factors of its two endpoints (u and v).

To enhance diversification, the choice of parameters that control the perturbation itself is randomized. Each iteration chooses either edge-based or vertex-based perturbation with equal probability. It then picks a maximum perturbation Q uniformly at random in the range [1.25, 2.00]. Finally, it defines the actual perturbation factors: for each element (vertex or edge), it sets the factor to \(1 + \rho Q\), where \(\rho \) is generated (for each element) uniformly at random in [0, 1].

For further diversification, we actually use a slightly non-uniform distribution parameterized by a small threshold \(\tau = (\log _2 n) / n\). If the random number \(\rho \) is at least \(\tau \) (as is usually the case), we use the formula above. Otherwise, we use a perturbation factor of \(\rho /\tau \). Note that this factor is between 0.00 and 1.00, while the standard factor is always between 1.25 and 2.00. This means that a small fraction of the elements can become significantly cheaper than others, and are thus more likely to appear in the solution. This allows us to test key-vertices that our standard local searches would not normally consider, since they do not include a fast implementation of key-vertex insertion.

We stress that the algorithm already works reasonably well with the standard edge-based perturbation proposed by Ribeiro et al. [45]; although we observed some improvement with the vertex-based perturbation (and the non-uniform distribution), the effects were relatively minor. Given that these these alternatives are quite simple to implement, they are still worth using.

2.3 Cascaded combination

The cascaded combination algorithm takes as input an initial solution \(S_0\), the pool of elite solutions, and the maximum number of allowed failures, denoted by \(\phi \) (we use \(\phi =3\)). The procedure combines \(S_0\) with elements in the pool, generating a (potentially better) solution \(S^*\).

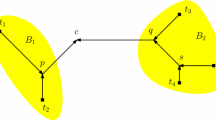

The basic building block of this procedure is the randomized merge operation [45], which takes as input two solutions (\(S_a\) and \(S_b\)) and produces a third (potentially cheaper) one. It does so by first generating a perturbed graph from G by perturbing each edge cost depending on which of the original solutions (\(S_a\) and/or \(S_b\)) it appears in. If an edge appears in both solutions, it keeps its original cost. If it appears in none of the solutions, its cost is multiplied by 1000. If it appears in exactly one solution (\(S_a\) or \(S_b\)), its cost is multiplied by a random number between 100 and 500. We run the SPH heuristic on the resulting instance. Note that, regardless of graph size, the combined solution preserves all edges that appear in both \(S_a\) and \(S_b\) (since they are relatively much cheaper); the remaining edges are likely to come from either \(S_a\) or \(S_b\) (since such edges are still significantly cheaper than those that appear in neither solution). We then remove all perturbations and apply local search to the combined solution, producing \(S_c\), the result of the perturbed combination.

The cascaded combination procedure maintains an incumbent solution \(S^*\), originally set to \(S_0\). In each step, it performs a randomized merge of \(S^*\) and a solution \(S'\) picked uniformly at random from the pool. Let \(S''\) be the resulting solution. If \( cost (S'') < cost (S^*)\), we make \(S''\) the new incumbent (i.e., we set \(S^* \leftarrow S''\)). Otherwise, we say that the randomized merge failed and keep \(S^*\) as the incumbent. When the number of failures reaches \(\phi \), the cascaded combination algorithm stops and returns \(S^*\).

Note that the resulting solution \(S^*\) may have elements from several other solutions in the pool. This makes it a powerful intensification agent, helping achieve good solutions quite quickly. That said, the first few solutions added to the pool will have a disproportionate influence on all others, potentially confining the multistart algorithm to a very restricted region of the search space. This is why we prioritize diversification elsewhere in the algorithm.

On average, each multistart iteration touches a constant number of solutions in the pool. We set the capacity of the elite pool to \(\varTheta (\sqrt{M})\) to ensure that most pairs of elite solutions are (indirectly) combined with one another at some point during the algorithm. We set the precise capacity to \(\lceil \sqrt{M/2} \rceil \), but the algorithm is not too sensitive to this constant; results were not much different with \(\lceil \sqrt{M} \rceil \) or \(\lceil \sqrt{M/4} \rceil \).

2.4 Pool management

We now address the insertion and eviction policies for the pool of elite solutions. When our algorithm attempts to add a solution S to the pool, we must consider three simple cases and a nontrivial one. First, if S is identical to a solution already in the pool, it is not added. Second, if the pool is not full and S is not identical to any solution, S is simply added. Third, if the pool is full and S is not better than any solution in the pool, S is not added.

The nontrivial case happens when the pool is full, S is different from all solutions in the pool, and S is better than the worst current solution. In this case, S replaces a solution that is at least as bad as S, with (randomized) preference for solutions that are similar to S. More precisely, we define the relative symmetric difference between S and \(S'\) as \(\varDelta (S,S') = |E(S) \cap E(S')| / |E(S) \cup E(S')|\); this is 0 if the solutions are identical, and 1 if they have no edge in common. When inserting S into the pool, we pick a solution to replace among all solutions \(S'\) that cost at least as much as S, with probability proportional to a score given by \(1/((1 - \varDelta (S,S'))^{2})\). Note that solutions that are similar to S are much more likely to be picked; for example, if S and \(S'\) are nearly identical, the score is close to 1; if they share only half their edges, the score is close to 0.25. This technique (biased towards replacing similar solutions) has been shown to increase diversification for other problems [43].

2.5 Discussion

Although our algorithm borrows some elements from the multistart approach of Ribeiro et al. [45], Sect. 5.1.3 will show that our algorithm significantly outperforms theirs. This section outlines the most relevant similarities and differences between the two approaches.

Ribeiro et al. run a pure multistart routine, in which solutions generated by the end of each iteration are mostly independent from previous ones; these solutions are used to populate an elite pool. At the very end, all pairs of elite solutions in the pool are combined with one another. The resulting solutions populate a second pool (generation). This procedure is repeated until it reaches a generation whose average solution value does not improve upon the previous one. The algorithm makes extensive use of randomization: each iteration chooses between three types of constructive algorithm, three types of perturbation (used to randomize the construction), and two local search schemes. The post-optimization phase chooses between two types of combination (randomized merges or path relinking by complementary moves).

Although there are many differences between the algorithms, the main reason for our good performance is that we leverage asymptotically faster implementations of the local searches. Like us, Ribeiro et al. use local searches based on adding and removing Steiner vertices and a local search based on key-path exchanges (we also add a local search based on key-vertex removals). While they must explicitly build each neighboring solution in linear or quasi-linear time, we can evaluate them implicitly in logarithmic amortized time by applying the recent algorithms from Uchoa and Werneck [52]. This allows us to reach local optima much faster in practice. Our local optima tend to be slightly worse, however, since explicitly building neighboring solutions allows Ribeiro et al. to look for “opportunistic” moves (such as removing non-terminal leaves) during the evaluation. As Sect. 5.1.3 will show, this is a worthy trade-off within the overall multistart algorithm, allowing us to find better results within the same time limit.

Faster local searches allow us not only to run more iterations within the same time limit, but we can also do more in each iteration using cascaded combinations, another contribution of this paper. Both cascaded combinations and the algorithm of Ribeiro et al. use randomized merges as building blocks. While Ribeiro et al. perform randomized merges only at the very end, cascaded combinations (as proposed in this paper) use randomized merges much more aggressively, with combinations happening during the multistart phase itself. The result of one iteration is thus heavily dependent on others. As our experiments will show (see Fig. 2), this allows very good solutions to be generated much earlier in the execution.

Despite being less relevant to explain the performance gap, there are several other differences between the algorithms. We use a single constructive algorithm (Ribeiro et al. choose between three in each iteration) and a single local search scheme (Ribeiro et al. alternate between two). We use a difference-based replacement scheme when updating the pool (Ribeiro et al. do not). We alternate between vertex-based and edge-based perturbations, while Ribeiro et al. use only the latter. We stress that these are minor differences, however. The parameters we reported are the ones we ended up using in the final version of our code, but Sect. 5.1 will show that our algorithm is not very sensitive to these parameters.

3 Lower bounds

We now turn our attention to finding lower bounds. We propose an efficient implementation of a known greedy combinatorial algorithm (due to Wong [58]) associated with a powerful linear programming formulation. We first describe the formulation and an abstract version of the algorithm, then discuss our implementation.

3.1 Formulation and dual ascent

We use the dual of the well-known directed cut formulation for the Steiner problem in graphs [58]. It takes a terminal \(r \in T\) as the root. A set \(W \subset V\) is a Steiner cut if W contains at least one terminal but not the root. Let \(\delta ^-(W)\) be the set consisting of all arcs (u, v) such that \(u \not \in W\) and \(v \in W\).

The dual formulation associates a nonnegative variable \(\pi _W\) with each Steiner cut W. The set \(\mathcal W\) of all Steiner cuts has exponential size. Given a dual solution \(\pi \), the reduced cost \(\bar{c}(a)\) of an arc a is defined as \( cost (a) - \sum _{W \in \mathcal{W}: a \in \delta ^-(W)} \pi _W\). The dual formulation maximizes the sum of all \(\pi _W\) variables, subject to all reduced costs being nonnegative. An arc whose reduced cost is zero is said to be saturated.

The technique we use for finding lower bounds is a dual ascent routine proposed by Wong [58], which finds a greedy feasible solution to the dual formulation. The algorithm maintains a set of C of active terminals, initially consisting of \(T {\setminus } \{r\}\). For a terminal \(t \in T\), let \( cut (t)\) be the set of vertices that can reach t through saturated arcs only. We say that t induces a root component if t is the only active terminal in \( cut (t)\).

The algorithm (implicitly) initializes with 0 the variables associated with all Steiner cuts. In each iteration, it picks a vertex \(v \in C\) and checks if \( cut (v)\) is a root component. If it is not, it makes v inactive and removes it from C; if it is a root component, the algorithm increases \(\pi _{\delta ^-( cut (v))}\) until one of its arcs is saturated (and keeps v in C). We stop when C becomes empty, at which point every terminal can be reached from the root using only saturated arcs.

Each iteration takes O(|E|) time to process a candidate root component. There are O(|T|) unsuccessful iterations, since each reduces the number of active vertices. A successful iteration saturates at least one arc and increases the size (number of vertices) of at least one root component. There can thus be at most \(\min \{|V||T|, |E|\}\) such iterations, bounding the total running time by \(O(|E| \min \{|V||T|, |E|\})\) [15].

3.2 Our implementation

We now describe details of our implementation of Wong’s algorithm that are crucial to its good performance in practice.

Processing a root component Once a vertex v is picked at the beginning of an iteration, we process its (potential) root component in three passes.

The first pass performs a graph search (we use BFS) from v following only saturated incoming arcs. If the search hits another active vertex, the iteration stops immediately: v does not define a root component. Otherwise, the search finishes with two data structures: a set S consisting of all vertices in \( cut (v)\), and a list L which includes all unsaturated arcs (a, b) such that \(a \not \in cut (v)\) and \(b \in cut (v)\). To ensure both structures can be built during the BFS, we allow L to also contain some unsaturated arcs (a, b) such that both a and b belong to \( cut (v)\). These may appear because, when the BFS scans b, it may not know yet whether its neighbor a is part of \( cut (v)\); to be safe, we add (a, b) to L anyway.

The second pass traverses L with two aims: (1) remove from L all arcs (a, b) that are invalid (with \(a \in cut (v)\)); and (2) pick, among the remaining arcs, the one with the minimum residual capacity \(\varDelta \).

The third pass performs an augmentation by reducing the residual capacity of each arc in L by \(\varDelta \). It also builds a set X with the tails of all arcs that become saturated, which will be part of the new root component of v (after augmentation).

Note that only the first pass of the algorithm performs a graph search; the other two passes are much cheaper, as they merely traverse arrays.

Selection rules and lazy evaluation The bound given by the algorithm depends on which active vertex (root component) it selects in each iteration. Without loss of generality, we assume each iteration picks the active vertex that minimizes some score function. Poggi de Aragão, Uchoa, and Werneck [38] (see also [56]) found that using the number of incident arcs as the score works well in practice. Polzin and Vahdati Daneshmand [12, 40] show that a related (but coarser) measure, the number of vertices in the component, also works well. For either score function (and others), the main challenge is to maintain scores efficiently for all root components during the algorithm, since augmenting on one root component may affect several others.

For efficiency, we focus on nondecreasing score functions: as the root component grows, its score can either increase or stay the same. This allows us to use lazy evaluation. We maintain each active vertex v in a priority queue, with a priority \(\sigma (v)\) that is a lower bound on the score of its root component. Each round of the algorithm removes the minimum element t from the queue. It then verifies (using the procedure above) if t defines a root component; if it does not, we just discard t. Otherwise, we perform the corresponding augmentation as long as the actual score is not higher than the priority of the second element in the queue. Finally, we reinsert t into the priority queue.

When we do augment, computing the new exact score of t can be expensive. Instead, we update the score assuming the vertices in the root component are the union of X (the tails of all arcs saturated during the augmentation) and the original vertices (at the beginning of the iteration). Although we may miss some vertices, this is relatively cheap to compute and provides a valid lower bound on the actual score.

Since the number of arcs incident to a root component may decrease, we cannot use it as score function. Instead, we use a refined version of the number of vertices in the root component. Given a component c, let \(\text{ vc }(c)\) be its number of vertices and let \(\text{ deg }(c)\) be the sum of their in-degrees. We use \(\text{ deg }(c) - (\text{ vc }(c) - 1)\) as the score. This is an upper bound on the number of incoming arcs on the component (the \(\text{ vc }(c) - 1\) term discards arcs in a spanning tree of the component, which must exist). This function is nondecreasing, as cheap to compute as the number of vertices, and gives a better estimate on the number of incoming arcs.

Eager evaluation Even with lazy evaluation, we may process a root component multiple times before actually performing an augmentation (or discarding the component). To make the algorithm more efficient, we also use eager evaluation: after removing a component from the priority queue, we sometimes perform an augmentation even its real score does not match the priority in the queue. More precisely, as long as the actual score is no more than 25% higher than the priority, the augmentation is performed. This has almost no effect on solution quality but makes the algorithm significantly faster. Note that any constant factor (including 25%) implies a logarithmic bound on the number of times any component can be reevaluated during the algorithm. The maximum time spent reevaluating components (without subsequent augmentations) is thus \(O(|E||T|\log |V|)\).

Last component Typically, the initial cuts found by the dual ascent algorithm have much fewer vertices than later ones. In particular, when there is only one active vertex v left, we may have to perform several expensive augmentations until it becomes reachable from the root. We can obtain the same bounds faster by dealing with this case differently: we run (forward) Dijkstra’s algorithm from the root to v, using reduced costs as arc lengths. We then use a linear pass to update the reduced costs of the remaining arcs appropriately [56].

3.3 Branch-and-bound

We use our dual ascent algorithm within a simple branch-and-bound procedure. We follow the basic principles of most previous work [12, 38, 40, 56, 58], using dual ascent for lower bounds and branching on vertices. The remainder of this section describes other features of our implementation.

The dual ascent root is picked uniformly at random (among the terminals) and independently for each node of the branch-and-bound tree.

To find primal (upper) bounds, we run the SPH heuristic on the (directed) subgraph consisting only of arcs saturated by the dual ascent procedure, using the same root. We then run a single pass of the Steiner vertex insertion and elimination local search procedures (using all edges, not just saturated ones).

We branch on the vertex that has maximum degree in the primal solution found in the current branch-and-bound node. In case of ties, we look beyond the current primal solution and prefer vertices that maximize the sum of incoming saturated arcs, outgoing saturated arcs, and total degree. Remaining ties are broken at random. If v is the chosen vertex, we remove it from the graph on the “zero” side and make it a terminal on the “one” side. We traverse the branch-and-bound tree in DFS order, visiting the “one” side first. This tends to find good primal solutions quicker than other approaches we tried.

We can eliminate an arc (u, v) if its reduced cost is at least as high as the difference between the best known primal solution and the current dual solution. We actually take the extended reduced cost [41], which also considers the distance (using reduced costs) from the root to u. Since the root can change between nodes in the branch-and-bound tree, we only eliminate an (undirected) edge when both corresponding arcs (directions) could be fixed by reduced cost. If we eliminate at least |E| / 5 edges in a branch-and-bound node, we create a single child node rather than branching.

4 Improving robustness

While the algorithm from Sect. 2 works well on many graph classes, there are still opportunities to make it more robust (compared to other approaches) for very easy or very hard instances. Section 4.1 shows how the lower bounds described in Sect. 3 allow our heuristic to stop sooner. Section 4.2 describes some basic preprocessing techniques to reduce the size of the graph on certain classes of instances. Finally, Sect. 4.3 describes a two-level version of the multistart algorithm that achieves greater diversification on longer runs.

4.1 Guarded multistart

Being a pure heuristic, the multistart algorithm described in Sect. 2 can be wasteful. Because it cannot prove that the best solution it found is optimal (even if it actually is), it cannot stop until it completes its scheduled number of iterations. Ideally, we would like to stop sooner on easy instances.

To that end, we propose a Guarded Multistart (GMS) algorithm. It runs two threads in parallel: the first runs our standard multistart algorithm, while the second runs the branch-and-bound routine from Sect. 3.3. The algorithm terminates as soon as either the multistart thread completes its iterations, or the branch-and-bound thread proves that the incumbent solution is optimal. Communication between threads is limited: the threads inform one another about termination and share the best incumbent solution.

The benefits of this approach are twofold. First, as already mentioned, on easy instances the branch-and-bound algorithm can often prove optimality well before the scheduled number of multistart iterations is reached, making the algorithm faster. Moreover, sometimes the branch-and-bound algorithm quickly finds better primal solutions by itself, leading to better quality as well.

Of course, these advantages are not free: the cycles spent on the branch-and-bound computation could have been used for the multistart itself. On harder instances, we can only afford to perform roughly half as many iterations within the same CPU time. We could make this problem less pronounced using a simple heuristic to detect cases in which the branch-and-bound computation is obviously unhelpful: if its depth reaches (say) 100, we could stop it and proceed only with the multistart computation, saving CPU time. Another potential drawback is nondeterminism: due to scheduling, multiple runs of GMS (even with the same random seed) may find different results. Although one could make the algorithm deterministic by carefully controlling when communication occurs, it is not clear this is worth the extra effort and complexity.

Similarly, it is possible that greater integration between the algorithms (for instance, by tentatively adding branch-and-bound solutions to the multistart pool) would help. In practice, however, we found that the greatest advantage of GMS is saving time on easy instances by allowing the algorithm to stop sooner. Since the primal solutions found by dual ascent tend to be of lower quality for harder instances (with larger duality gaps), it is unlikely that greater integration would justify the extra effort.

4.2 Reduction techniques

To be competitive with state-of-the-art algorithms on standard benchmark instances, we must deal with “easy” inputs effectively. We thus use some basic reduction (preprocessing) techniques that transform the input into a potentially much smaller instance with the same solution.

In particular, we delete non-terminal vertices of degree one (alongside their incident edges). Also, if there is a non-terminal vertex v with exactly two neighbors, u and w, we replace edges (u, v) and (v, w) with a single edge (u, w) with cost \( cost (u,w) = cost (u,v) + cost (v,w)\).

Finally, we implemented a limited version of the Bottleneck Steiner Distance [16] test, which states that an edge (u, v) can be removed from the graph if there is a (bottleneck) path \(P_{uv}\) between u and v such that (1) \(P_{uv}\) excludes edge (u, v) and (2) every subpath of \(P_{uv}\) without an internal terminal has length at most \( cost (u,v)\). Identifying all removable edges can be expensive, so we consider only a couple of common (and cheap) special cases, which can be seen as restricted versions of existing algorithms [12, 40].

The first case is simple. If the combined degree of u and v is small (up to 20), we scan both vertices looking for a common neighbor x such that \( cost (u,x) + cost (x,v) \le cost (u,v)\); we also check if a parallel (u, v) edge exists. This heuristic helps with some grid-like graphs, such as VLSI instances.

The second case may find more elaborate paths. We first use a modified version of Dijkstra’s algorithm [13] to build the Voronoi diagram associated with the terminals of G [34, 39, 52]. For each vertex v, this structure defines \( vb (v)\) (the closest terminal to v), \( vd (v)\) (the distance from that terminal), and \( vp (v)\) (the parent edge on the path from \( vb (v)\)). By looking at boundary edges of this Voronoi diagram, we compute the MST of the distance network of G [34, 39, 52]. Let \(E_t\) be the set of edges of this MST, each corresponding to a path in the original graph. Let \(E_f \subseteq E\) be the set of free edges, i.e., original edges that neither are parents in the Voronoi diagram nor belong to a path in \(E_t\).

We try to eliminate edges in \(E_f\), using \(E_t\) and the Voronoi diagram to find bottleneck paths. To do so efficiently, we first sort the union of \(E_t\) and \(E_f\) in increasing order of cost, breaking ties in favor of entries in \(E_t\). We also initialize a union-find data structure [50] with |V| disjoint sets. We then traverse this list in order. Consider edge \(e_i = (u,v)\). If \(e_i \in E_t\), we join groups u and v in the union-find data structure. Otherwise (if \(e_i \in E_f\)) we delete \(e_i\) if all of the following three conditions hold: (1) u and v belong to the same component in the union-find data structure; (2) \( dist ( vb (u),u) \le cost (e_i)\); and (3) \( dist ( vb (v),v) \le cost (e_i)\). This Voronoi-based test is particularly effective on random and dense Euclidean graphs.

4.3 Two-phase multistart

Even with all the measures we take to increase diversification, our multistart algorithm can still be strongly influenced by the first few solutions it finds, since they will be heavily used during cascaded combination. If the algorithm is unlucky in the choice of the first few solutions, it may be unable to escape a low-quality local optimum.

When the number M of iterations is large (in the thousands), we obtain more consistent results with a two-phase version of our algorithm. The first phase independently runs the standard algorithm four times, with M / 8 iterations each. The second phase runs the standard algorithm with M / 2 iterations, but starting from a pool of elite solutions obtained from the union of the four pools created in the first phase. Note that the combined size of all pools in the first phase is \(4 \sqrt{M/16} = \sqrt{M}\), while the second-phase pool can hold only \(\sqrt{M/4} = \sqrt{M}/2\) solutions. We thus take the elite solutions from the first phase in random order and try to add them to the (initially empty) final pool, using the criteria outlined in Sect. 2.4 to decide which solutions are kept.

We call this two-phase version of our multistart algorithm MS2. Since it has multiple independent starts, it is less likely than the single-phase version of our algorithm (which we call MS) to be adversely influenced by a particularly bad set of initial solutions.

4.4 Time-bounded algorithm

The standard version of our multistart algorithm (as described in Sect. 2) is parameterized by the number of iterations. In some real-world applications, one would like to have instead a time-bounded algorithm, whose goal is to find the best possible solution within a time budget \(\tau \). (This was in fact how the software competition from the 11th DIMACS Implementation Challenge [28] was set up.) Recall, however, that our multistart algorithm sets the size of the elite pool to be proportional to the square root of the number of iterations, which is unknown in the time-bounded setup. A solution would be to fix the size of the elite pool to some constant, and just stop the algorithm when it runs out of time.

We get better results by computing an initial estimate on the number of iterations in the obvious way: we run a single iteration of the multistart algorithm (i.e., constructive algorithm (SPH) followed by local search). Here we use actual edge weights, without perturbation. Let its running time by \(\tau _1\).

We then run the standard multistart algorithm described in Sect. 2, using an (initially empty) elite pool of size \(\sqrt{M'}\). Here \(M' = \tau / (2.5 \tau _1)\) is an estimate on the number of iterations the algorithm can run within the time budget \(\tau \), meaning that we estimate that a standard iteration of the algorithm will take about 2.5 times the first one, which does not use combinations. This constant (2.5) was found empirically, and the algorithm is not too sensitive to it. Note that the algorithm actually stops when the time limit is reached, so its number of iterations may be lower or higher than \(M'\).

The same approach can be used to make the two-phase MS2 algorithm time-bounded: we run a single iteration to get an estimate \(M'\) on the total number of iterations, then run MS2 parameterized by \(M'\). In fact, we can even have an adaptive algorithm that picks either MS or MS2 for the main runs depending on the value of \(M'\). As Sect. 5 will show, a good strategy is to use MS for \(M' \le 2048\) and MS2 otherwise.

5 Experiments

We implemented all algorithms in C++ and compiled them using Visual Studio 2013 (optimizing for speed). We ran our main experiments on a machine with two 3.33 GHz Intel Xeon X5680 processors running Windows 2008R2 Server with 96 GB of DDR3-1333 RAM. This machine scores 388.914 according to the benchmark code made available for the 11th DIMACS Implementation Challenge (http://dimacs11.zib.de/downloads.html). All runs we report are sequential, except those of the Guarded Multistart algorithm, which use two cores. In every case, we report total CPU times, i.e., the sum of the times spent by each CPU involved in the computation.

Our main experiments evaluate all 1437 instances available by August 1, 2014 from the 11th DIMACS Implementation Challenge [28] (accessible from http://dimacs11.zib.de/downloads.html) and report error rates relative to the best solutions published by then (http://dimacs11.zib.de/instances/bounds20140801.txt). Section 5.3 discusses recent developments.

For ease of exposition, we group the original series into classes, as shown in Table 1 (augmented from [52]). More detailed information about the dimensions of the instances in each series can be found in Table 12, in the Appendix. Most instances are available from the SteinLib [32], with two exceptions: cph14 (graphs obtained from rectilinear problems [27]) and vienna (road networks from telecommunication applications [33]).

For experiments on the entire set of benchmark instances, we use a single (identical) random seed for each of the 1437 instances, since they are already quite numerous. Experiments restricted to a subset of the benchmark use multiple random seeds for each instance. These cases will be noted explicitly.

5.1 Multistart

In our first experiment, we ran the default version of our multistart algorithm on all instances from the DIMACS Challenge [28]. Recall that this version is not guarded (no branch-and-bound) and uses the lightweight preprocessing routine. We vary the number of multistart iterations from 1 to 256 (by factors of 4). Table 2 shows average running times (in seconds) and percent errors relative to the best known solutions (http://dimacs11.zib.de/instances/bounds20140801.txt). Error rates are also shown in Fig. 1. Results aggregated by series can be found in Tables 13 and 14, in the Appendix. To improve readability, errors smaller than 0.001% are shown multiplied by 1000 and in brackets. Such small errors are particularly common for wrp instances as a side effect of a reduction from the Group Steiner Tree problem, but occasionally appear for other classes (including euclidean and vienna).

Our algorithm is quite effective. With as little as 16 multistart iterations, the average error rate is below 0.5% on all classes except hard, which consists of adversarial synthetic instances. With 256 iterations, the average error falls below 0.5% for hard, and below 0.06% for all other classes. Average running times are still quite reasonable: the only outlier is vienna, which has much bigger graphs on average (see Table 12, in the Appendix). These instances are relatively easy: a single iteration is enough to find solutions that are on average within 0.1% of the best previously known bounds.

One reason for the success of our approach is its use of cascaded combination. To confirm it is important, we tested six variants of our algorithm on 30 nontrivial representative instances chosen for the DIMACS Challenge competition. (The set is available at http://dimacs11.zib.de/contest/instances/SPG.tgz.) The variants differ only on the maximum number \(\phi \) of failures allowed during cascaded combination. Figure 2 summarizes the results. There is one curve for each value of \(\phi \) (0 to 5, with 0 meaning “no combination”), with different numbers of iterations (\(1, 2, 4, \ldots , 256\)). Each point is the mean of 5 runs with different random seeds. The x-axis shows the (geometric) mean running time, whereas the y-axis represents the (geometric) mean solution value for all 30 instances, normalized so that one iteration with \(\phi =0\) has value 1.00 (the actual mean solution for \(\phi =0\) was 36,871.1873).

The figure shows that, even though cascaded combination significantly increases the time per iteration, it leads to much better solutions within the same allotted time. We use \(\phi = 3\) by default, but any small positive \(\phi \) also works well—there is very little difference between the curves with \(\phi > 0\).

In contrast to cascaded combination, other parameters we considered have only minor effect on solution quality. For instance, recall that each iteration picks either vertex-based or edge-based perturbation with equal probability. With 256 iterations, our default algorithm found a mean solution value (on the same set of 30 instances, with nine runs per instance) of 35,822.2. Using only edge-based perturbations increases this to 35,827.0; vertex-based perturbations increase it to 35,832.9. Running times are essentially the same in all three cases, and the difference in quality is negligible.

Similarly, using dampened perturbations (i.e., running up to three local search passes on the perturbed graph, with some decay) does improve quality, but only slightly. On the same set of instances (and nine seeds per instance), the mean solution value without dampened perturbations is 35,830.2, which is marginally higher than the baseline of 35,822.2. Running times typically increase by less than 10%.

5.1.1 Guarded multistart

We now consider the Guarded Multistart (GMS) algorithm from Sect. 4.1, which runs the standard multistart in parallel with a branch-and-bound algorithm. We tested GMS with 2, 8, 32, and 128 multistart iterations. Table 3 reports the error rates and average running times (see also Tables 15 and 16, in the Appendix). For consistency, we report total CPU times; since GMS uses two cores, the actual wall-clock time is lower.

The CPU time spent by GMS with i iterations cannot be (by design) much worse that the unguarded algorithm (MS) with 2i iterations. A comparison of Tables 2 and 3 shows that running times are indeed similar for several classes, such as hard, vlsi, and wrp. But GMS can stop much sooner on “easy” instances, when its branch-and-bound portion can prove the optimality of the incumbent. For random, incidence, and especially euclidean, GMS becomes significantly faster than MS as the number of allowed iterations increases.

The relative solution quality of the two variants also depends on the type of instance. For classes such as hard and wrp, the guarded variant finds slightly worse results (within the same CPU time), since most of the useful computation is done by the multistart portion of the algorithm, which has fewer iterations to work with. For a few classes (such as incidence), the guarded version actually finds much better solutions, thanks to the branch-and-bound portion of the algorithm. In most cases, the difference is quite small. On balance, the guarded version is more robust and should be used unless there is reason to believe the branch-and-bound portion will be ineffective.

5.1.2 Comparison with Polzin and Vahdati Daneshmand

Table 4 compares Guarded Multistart (GMS) against the three state-of-the-art heuristics by Polzin and Vahdati Daneshmand [12, 40]: prune, ascend&prune, and slack-prune. As the authors report [12, 40], these heuristics dominate the multistart approach by Ribeiro et al. [45].

Since the three algorithms have very different time/quality tradeoffs, we report results for GMS with 1, 8, 32, and 128 iterations. For consistency with how the results are reported in [12, 40], Table 4 shows the lin series separately from the remaining vlsi instances. Running times for their algorithms are scaled (divided by 6.12) to roughly match our machine.Footnote 2

The table shows that the algorithms have different profiles. Both prune and ascend&prune are quite fast, with running times comparable to GMS1, which runs a single multistart iteration. They provide much better solutions on series d and e, whereas GMS1 is significantly better on 1r, i080, i160, i320, and x. Error rates are usually within a factor of two of one another otherwise.

The slack-prune algorithm usually finds better solutions, but takes much longer; it should then be compared with GMS with a few dozen iterations. The slack-prune approach is superior when advanced reduction techniques (exploiting small duality gaps) work very well: 2r, e, and vlsi are good examples. When these techniques are less effective, our algorithm dominates: see es10000fst, i080, i160, i320, mc, and wrp, for example. Performance is comparable for several cases in between, such as es1000fst, lin, or tspfst.

Unfortunately, Polzin and Vahdati Daneshmand [12, 40] only report results for series in which all optimal solutions are known, which consist mostly of small inputs or instances for which reduction techniques work well. It is encouraging that GMS is competitive even in the absence of very hard instances. This confirms that, although reduction and dual-based techniques are powerful, primal heuristics based on local search (the core of our approach) are essential for a truly robust algorithm.

5.1.3 Comparison with Ribeiro et al.

For completeness, we also compare our algorithm to the hybrid multistart heuristic of Ribeiro et al. [45], on which our approach builds. Both codes were compiled with g++ and full optimization and run on an Intel Core i7-6700K CPU with 24 GB of DDR4-2133 RAM; this machine scores 486.387 according to the benchmark routine from the 11th DIMACS Implementation Challenge. For this analysis, we consider the reference set of 30 representative instances from the DIMACS Challenge.Footnote 3 For a fair comparison, neither algorithm uses preprocessing.

We first compare (in Table 5) their performance with a single multistart iteration (i.e., a single constructive heuristic followed by local search until a local optimum is reached). It shows that the local search used by Ribeiro et al. [45] tends to find better results than the one we use (from Uchoa and Werneck [52]), since it can exploit opportunistic moves. Average solution values are 1.1% lower for Ribeiro et al.’s local search, and its advantage is clearer on instances with small solution values, which tend to have more ties. Due to its worse asymptotics, however, their approach is prohibitively slow for a robust general-purpose solver. We are hundreds of times faster on the larger instances tested (with a few tens of thousands of vertices), and never slower by much more than a factor of two. These results confirm the findings by Uchoa and Werneck [52].

Table 6 compares the same two algorithms, but now using 128 multistart iterations and a pool of 10 initial solutions, the setup recommended by Ribeiro et al. [45]. Once again, we used nine random seeds for almost all runs. For the six slowest instances (G106ac1, I064ac1, alue7080, es10000fst01, fnl4461fst, s5), we ran Ribeiro et al.’s code only once, since each run took from 4 h to more than a week (in fact, s5 failed to finish within 10 days). Although there are some cases in which Ribeiro et al. have marginally better results (notably some of the cc instances), our approach finds solutions that are 0.2% better on average, overcoming most of the limitations of its more strict local search. Its greatest advantage, however, is speed. On larger instances, it is orders of magnitude faster and still finds better solutions.

5.1.4 Long runs

To test the scalability of our algorithm, we consider the 41 SteinLib instances that had no published proof of optimality by August 1, 2014. We consider three versions of our algorithm. The baseline is MS, the multistart algorithm described in Sect. 2. MS2 is the two-phase version of MS, as described in Sect. 4.3. Finally, MSK augments plain MS by also using the key-vertex insertion local search implemented as calls to the SPH algorithm (as proposed in [37]); it is very expensive, but can potentially find better results. None of these variants is guarded, since branch-and-bound is ineffective on hard instances. To find near-optimal solutions, we test up to 262,144 (\(2^{18}\)) iterations (1024 for MSK). Since these experiments were very time-consuming, we could only afford to test each set of parameters with a single random seed; we used the same seed for all runs.

For each variant (and number of iterations), Table 7 shows the (geometric) mean time in seconds and average error with respect to the best published solutions. Figure 3 represents the same data visually.

With 1024 iterations, MSK finds better results than other variants, but is much slower: increasing the number of iterations of either MS or MS2 to 16,384 is cheaper and leads to better solutions. Unsurprisingly, MS and MS2 have comparable running times for the same number of iterations. As argued in Sect. 4.3, increasing the number of iterations is more effective for MS2 than for MS. In fact, the average solution quality for MS does not even improve when we increase the number of iterations from 65,536 to 262,144. By starting from four independent sets of solutions, MS2 is less likely to be confined to a particularly bad region of the search space.

Considering all 13 runs from Table 7 (five runs each for MS and MS2, and three for MSK), there were only eight cases (out of 41) for which we could not at least match the best bound published by August 1, 2014. (See Table 17, in the Appendix.) In 19 cases, we found a strictly better solution. Most of these were found by MS2 (see Table 18, in the Appendix); MSK was better only for cc11-2u and cc12-2u.

5.1.5 Time-bounded algorithms

We now consider the time-bounded versions of our multistart algorithms, as described in Sect. 4.4. We ran three variants: single-phase always runs MS; two-phase always runs MS2; and adaptive runs MS if the expected number of iterations is at most 2048 (and MS2 otherwise). In this experiment, we consider the 30 representative instances tested in Fig. 4, each with seven time bounds, from 2 s to 2 h.

Figure 4 shows how the (geometric) mean solution improves with time for all three variants. For clarity, solution values are given relative to the single-phase algorithm with a 2-s time bound (for which the actual mean solution value was 36,195.018). As expected, all three algorithms scale well with time. Moreover, the adaptive algorithm is effective in picking a good strategy for all regimes (single-phase for short runs and two-phase for longer ones). Within 5 h, the adaptive algorithm achieves a mean solution value of 35,715.321, an improvement of more than 1.3% over the baseline.

5.2 Branch-and-bound

We now consider the effectiveness of our branch-and-bound procedure as a standalone exact algorithm. Unlike our heuristics, it is not robust. There are some graphs (such as large vlsi or fst instances) for which it will not produce a good solution in reasonable time, let alone prove its optimality. On large instances with small duality gaps, exact algorithms based on dual ascent are generally not competitive with those using linear programming.

We thus focus on small instances with large duality gaps. Series i080, i160, and i320 have been solved to optimality [31, 38], as have 95 of 100 instances from i640 [12, 40] (all but i640-311, i640-312, i640-313, i640-314, and i640-315). We also consider all solved instances from the bip and cc series. Our method could solve every such instance in less than 2 h; most took fractions of a second.

For perspective, Table 8 compares our exact algorithm against a state-of-the-art approach (as of August 1, 2014) for such instances, due to Polzin and Vahdati Daneshmand [12, 40]. The table has all instances they solved from series i160, i320, i640, cc, and bip. For each series, we show the number of instances tested, our average time in seconds, the average time of their method (divided by 6.12 to match our machine), and the ratio between them. The remaining columns use geometric means instead of averages.

Our method is quite competitive for these instances. Running times are comparable for bip instances and we are faster for other graph classes. The relative difference is higher when we consider averages rather than mean times, indicating that our advantage is greater on harder instances (which have a more pronounced effect on the average). This confirms that the engineering effort outlined in Sect. 3 does pay off.

We stress, however, that Table 8 contains only a very small (and not particularly representative) subset of all instances tested. Because Polzin and Vahdati Daneshmand use linear programming and advanced reduction techniques, there are several classes of instances (such as vlsi) that they can easily solve but we cannot. This is true for other algorithms as well [25].

For completeness, we also compare our algorithm with the branch-and-ascent implementation proposed by Poggi de Aragão et al. [38] (described in further detail in [56]). This method (which we call B&A) can be seen as a precursor of our approach. We limit our tests to i320, a series that was first solved to optimality by B&A itself [38]. This series has 100 random graphs with 320 vertices and adversarial (incidence) costs. The instances are divided in 20 groups of 5; instances in each group are generated with the same number of terminals and edges, but different random seeds.

For conciseness, Table 9 only reports results for the first instance in each group (see Table 21 in the Appendix for full results). To solve all 100 instances, B&A needs 767.8 s on average; the (geometric) mean time is 770 ms. Our new algorithm takes an average time 11.7 s and a mean time of 55 ms. (Both codes were evaluated using the same compiler and machine as in Tables 5 and 6.) The numbers of branch-and-bound nodes visited is comparable: B&A visits 27,913 nodes on average, while we visit 19,976 (the geometric means are 24.4 and 51.3, respectively). This indicates that most of our advantage comes from processing each node much faster; the bounds themselves are not stronger. For easy instances, in particular, we actually tend to visit more nodes, partly because of different accounting: B&A may run dual ascent multiple times on the same node, as long as each run reduces the number of edges by at least 1% (in such cases, we create a new child node). For harder instances, we often visit fewer nodes.

Finally, we note that our branch-and-bound algorithm could prove that 35,535 is the optimal solution for i640-313, a formerly open incidence instance. On a machine about 28% faster than the one we used for our main experiments, it took 15.16 days and visited 7.31 billion branch-and-bound nodes. For this particular run, we gave the algorithm 35,536 (one unit above the optimum) as the initial upper bound and used strong branching.

5.3 Recent developments

So far, we have considered only instances and results available before August 1, 2014; these roughly correspond to the state-of-the-art before the final phase of the 11th DIMACS Implementation Challenge.Footnote 4 This section considers subsequent developments, which were motivated by the challenge itself.

Algorithms First, Polzin and Vahdati Daneshmand reran their exact algorithm on a newer machine with different sets of parameters and an up-to-date version of CPLEX and made the results available on the challenge web page [42]. Although the results are mostly consistent with their previous publications [12, 40], the additional tuning has made the algorithm more competitive for some “hard” graph classes—notably those in Table 8. For series i160, i320, i640, and bip, their (scaled) average running times in seconds are now 0.08, 65.2, 169.3, and 389.4. These improvements (of at least a factor of three) bring their algorithm closer to ours. For cc, however, scaled average times are only slightly better (4890 instead of 5385), which makes our method still more than 20 times faster. In addition, their algorithm can solve all vienna instances to optimality within an hour or so.

Another contribution to the challenge was the SCIP-Jack algorithm by Gamrath, Koch, Maher, Rehfeldt, and Shinano [22] (see also [23]), which can be seen as an updated version of the MIP-based work by Koch and Martin [31] made massively parallel using the SCIP [1] framework. On instances with small duality gaps, it is essentially dominated by the work of Polzin and Vahdati Daneshmand, which use more modern reduction techniques. For small, hard instances, however, running on hundreds of cores allowed them to improve the best known bounds for several instances. Other notable contributions were the algorithms of Althaus and Blumenstock [2] and Biazzo, Braunstein, and Zecchnia [7]. The latter works particularly well on small adversarial instances, notably the hard class. For VLSI (and other) instances with a small number of terminals, Hougardy, Silvanus, and Vygen [25] (see also [26]) presented an exact algorithm based on dynamic programming that can significantly outperform any other approach. None of these algorithms, however, is particularly robust. Although they slightly outperform our method on their core classes, they are much worse on others (often being more than 10% off or failing to produce a solution in reasonable time).

The most robust contribution to the challenge (besides ours) was the algorithm of Fischetti, Leitner, Ljubic, Luipersbeck, Monaci, Resch, Salvagnin, and Sinnl [19] (see also [20]), which combines several techniques. Besides including the local search implementations proposed by Uchoa and Werneck [52], their algorithm relies heavily on mathematical programming techniques, such as using local branching to search large neighborhoods or to fix almost-feasible solutions produced by a set covering heuristic. Depending on some characteristics of the input instance, their algorithm decides which techniques and strategies to use. In particular, on instances with uniform or near-uniform costs, a new lightweight integer programming formulation based on node separators can replace the usual directed cut formulation [58]. This works extremely well on some hard instances, especially those from series hc and bip. On more general instances, however, they do not outperform Polzin and Vahdati Daneshmand [12, 40]. One advantage of their approach relative to ours is that it can also handle other variants of the Steiner problem (such as prize-collecting).

With these new developments, some bounds have been improved by other submissions to the 11th DIMACS Challenge [28]. Together, Gamrath et al. [22] and Fischetti et al. [19] managed to improve the best known bounds by August 1, 2014 for 25 of the 41 open SteinLib instances. Compared against these improved bounds, our algorithm is still strictly better on 9 and matches a further 14.

The challenge included a contest comparing long (2-h) runs of the algorithms above on the set of 30 representative instances we considered in Figs. 2 and 4. The best performers were the algorithm of Fischetti et al. and our algorithm (our entry was the time-bounded single-phase multistart approach); Biazzo et al. [7] had the best performance for some hard instances, but was less consistent overall. Despite being very different in nature, the top two contenders had very similar performance. If one simply considers the mean solution quality, Fischetti et al. had a slight advantage; in a points-based system (in the style of Formula 1, with algorithms assigned points for each instance based on their relative rank), the slight advantage was ours. The algorithms were also evaluated on how fast they found good solutions (rather than just what the final solution was), using the primal integral method [5]. Once again, our algorithm was worse in terms of the mean value but better in the points-based system. Since the points-based system requires consistency, this confirms the robustness of our approach. Moreover, we note that the time limit (2 h) was rather large. Figure 4 shows that the algorithm can produce a reasonable solution in a couple of seconds even for large instances, as it does not have to solve a large linear program. Finally, although the adaptive version of our time-bounded algorithm outperforms our (single-phase) entry in the contest (see Fig. 4), it was only developed later, using the results of contest as motivation. We expect other algorithms to have improved since the challenge as well.

New instances We now discuss instances made available after August 1, 2014.

To highlight the strengths of their algorithm, Fischetti et al. [19] introduced the ccn series, created from the existing cc series [57] by setting all edge costs to one. Five of its instances can be solved to optimality by both Fischetti et al. and our branch-and-bound (although we are two orders of magnitude slower for cc6-3n, the hardest among those). For the remaining (open) instances, Table 10 shows the performance of two versions of our heuristic (MS with 256 iterations and MS2 with 65,536 iterations). Even the faster version is quite competitive: it finds a better bound on the largest instance and is never worse by more than two edges. With longer runs, our two-phase algorithm improves the best solutions found by Fischetti et al. [19] in five cases.

Table 11 reports the performance of our multistart algorithm on the efst class [29], which consists of graph instances generated by the GeoSteiner package [29, 55] from Euclidean inputs (each graph is the union of a small set of full Steiner trees). The class is divided into four series, depending on whether the terminals originate from TSPLib instances (tspefst) or from random points on the plane (r25kefst, r50kefst, r100kefst). For each series and number of iterations, the table shows the average running time (in seconds) as well as the percent error relative to the best known solution obtained by Juhl et al. [29], available at http://dimacs11.zib.de/instances/bounds-efst-20150324.txt.

Although we cannot match the quality of their specialized algorithm (which is not based on a graph problem), a single iteration of our algorithm is enough to get within half a percent of the best known solution on average. With more iterations, the error rate decreases to below 0.2%. These graphs are quite sparse, with average degrees ranging from 2.4 (for random instances) to about 4.3 (for very regular tsp instances). In contrast, the ratio of nonterminals to terminals, which is roughly 0.58 for random instances (such as rk*efst), reaches more than 240 for some tspefst instances (such as fl1400efst and p654efst). These high-ratio instances are the hardest for our method. That said, even the highest error we observed with 256 iterations (1.37% on instance u1432efst, with 98 nonterminals for each terminal) was still fairly small.

Finally, we consider a class of synthetic instances created to illustrate duality gaps for certain graph classes. Series gap-csd, gap-g, and gap-smc consist of very small instances, every one of which we can solve to optimality in less than 10 ms (by either preprocessing or branch-and-bound). For series gap-s, a single iteration of our multistart algorithm can find the optimal solutions (as proven by [19]) of all five instances.

6 Conclusion

We presented a new heuristic approach for the Steiner problem in graphs, based on fast local searches, multistart with an evolutionary component, and fast combinatorial algorithms for finding lower bounds. Although the algorithm could be further improved, notably by incorporating more elaborate preprocessing techniques, it is already quite robust. For short runs, it is competitive with any previous approach on a wide variety of instance classes. Moreover, it is scalable: when given more time, it improved the best published solutions for several hard instances from the literature. Overall, our results show that primal heuristics can be an important component of robust solvers for the Steiner problem in graphs.

Notes

Uchoa and Werneck [52] state in passing that key-vertex removal dominates Steiner vertex removal, but this is not strictly true. Some local optima for key-vertex removal can be improved by Steiner vertex removal by transforming a degree-two vertex (on a key-path) into a key vertex. We found such cases to be extremely rare in practice, however.

We know from http://www.cpubenchmark.net/singleThread.html that our machine is 3.111 times faster than an 1.53 GHz AMD Athlon XP 1800+, which is 1.967 times faster (based on Tables 5.3 and A.6 from [40]) than the 900 MHz SPARC III+ Sunfire 15,000 used in their main experiments.

The code from Ribeiro et al. [45] cannot handle instances G106ac and I064ac because they have a small number (454 and 6, respectively) of zero-length edges; we reset these edge lengths to 1 for this experiment, creating instances G106ac1 and I064ac1. This may increase the solution value by a negligible amount (no more than 0.001%).

Detailed competition results can be found at http://dimacs11.zib.de/contest/results/results.html.

References

Achterberg, T.: SCIP: solving constraint integer programs. Math. Program. Comput. 1(1), 1–41 (2009)

Althaus, E., Blumenstock, M.: Algorithms for the maximum weight connected subgraph and prize-collecting Steiner tree problems. In: Manuscript Presented at the 11th DIMACS/ICERM Implementation Challenge (2014)

Bastos, M.P., Ribeiro, C.C.: Reactive tabu search with path-relinking for the Steiner problem in graphs. In: Ribeiro, C.C., Hansen, P. (eds.) Essays and Surveys in Metaheuristics, pp. 39–58. Kluwer, Alphen aan den Rijn (2001)

Beasley, J.: OR-Library: distributing test problems by electronic mail. J. Oper. Res. Soc. 41, 1069–1072 (1990). http://mscmga.ms.ic.ac.uk/info.html

Berthold, T.: Measuring the impact of primal heuristics. Oper. Res. Lett. 41(6), 611–614 (2013)

Biazzo, I., Braunstein, A., Zecchina, R.: Performance of a cavity-method-based algorithm for the prize-collecting Steiner tree problem on graphs. Phys. Rev. E 86 (2012). http://arxiv.org/abs/1309.0346

Biazzo, I., Muntoni, A., Braunstein, A., Zecchina, R.: On the performance of a cavity method based algorithm for the prize-collecting Steiner tree problem on graphs. In: Presentation at the 11th DIMACS/ICERM Implementation Challenge (2014)

Byrka, J., Grandoni, F., Rothvoß, T., Sanità, L.: Steiner tree approximation via iterative randomized rounding. J. ACM 60(1), 6:1–6:33 (2013)

Cheng, X., Du, D.Z.: Steiner Trees in Industry. Springer, Berlin (2002)

Chlebík, M., Chlebíková, J.: Approximation hardness of the Steiner tree problem on graphs. In: Proceedings of 8th Scandinavian Workshop on Algorithm Theory (SWAT), LNCS, vol. 2368, pp. 95–99. Springer (2002)

Chopra, S., Gorres, E.R., Rao, M.R.: Solving the Steiner tree problem on a graph using branch and cut. ORSA J. Comput. 4, 320–335 (1992)

Daneshmand, S.V.: Algorithmic approaches to the Steiner problem in networks. Ph.D. thesis, Universität Mannheim. http://d-nb.info/970511787/34 (2003)

Dijkstra, E.W.: A note on two problems in connexion with graphs. Numer. Math. 1, 269–271 (1959)

Dowsland, K.: Hill-climbing, simulated annealing and the Steiner problem in graphs. Eng. Optim. 17, 91–107 (1991)

Duin, C.: Steiner’s problem in graphs: approximation, reduction, variation. Ph.D. thesis, Institute for Actuarial Science and Economics, University of Amsterdam (1993)

Duin, C., Volgenant, A.: Reduction tests for the Steiner problem in graphs. Networks 19, 549–567 (1989)

Duin, C., Voß, S.: Efficient path and vertex exchange in Steiner tree algorithms. Networks 29, 89–105 (1997)

Duin, C., Voß, S.: The Pilot method: a strategy for heuristic repetition with application to the Steiner problem in graphs. Networks 34, 181–191 (1999)

Fischetti, M., Leitner, M., Ljubic, I., Luipersbeck, M., Monaci, M., Resch, M., Salvagnin, D., Sinnl, M.: Thinning out Steiner trees: a node-based model for uniform edge costs. In: Manuscript Presented at the 11th DIMACS/ICERM Implementation Challenge (2014)

Fischetti, M., Leitner, M., Ljubić, I., Luipersbeck, M., Monaci, M., Resch, M., Salvagnin, D., Sinnl, M.: Thinning out Steiner trees: a node-based model for uniform edge costs. Math. Program. Comput. 9(2), 203–229 (2017)

Frey, C.: Heuristiken und genetisch algorithmen für modifizierte Steinerbaumprobleme. Ph.D. thesis (1997)

Gamrath, G., Koch, T., Maher, S.J., Rehfeldt, D., Shinano, Y.: SCIP-Jack: a solver for STP and variants with parallelization extensions. In: Manuscript Presented at the 11th DIMACS/ICERM Implementation Challenge (2014)

Gamrath, G., Koch, T., Maher, S.J., Rehfeldt, D., Shinano, Y.: SCIP-Jack–a solver for STP and variants with parallelization extensions. Math. Program. Comput. 9(2), 231–296 (2017)

Goemans, M.X., Olver, N., Rothvoß, T., Zenklusen, R.: Matroids and integrality gaps for hypergraphic Steiner tree relaxations. In: ACM Symposium on Theory of Computing (STOC), pp. 1161–1176. ACM (2012)

Hougardy, S., Silvanus, J., Vygen, J.: Dijkstra meets Steiner: A fast exact goal-oriented Steiner tree algorithm. Technical Report abs/1406.0492, CoRR (2014)

Hougardy, S., Silvanus, J., Vygen, J.: Dijkstra meets Steiner: a fast exact goal-oriented Steiner tree algorithm. Math. Program. Comput. 9(2), 135–202 (2017)

Huang, T., Young, E.F.Y.: ObSteiner: an exact algorithm for the construction of rectilinear Steiner minimum trees in the presence of complex rectilinear obstacles. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 882–893

Johnson, D.S., Koch, T., Werneck, R.F., Zachariasen, M.: 11th DIMACS Implementation Challenge in Collaboration with ICERM: Steiner Tree Problems. http://dimacs11.zib.de

Juhl, D., Warme, D.M., Winter, P., Zachariasen, M.: The GeoSteiner software package for computing Steiner trees in the plane: an updated computational study. In: Manuscript Presented at the 11th DIMACS/ICERM Implementation Challenge (2014)

Karp, R.: Reducibility among combinatorial problems. In: Miller, R., Thatcher, J. (eds.) Complexity of Computer Computations, pp. 85–103. Plenum, New York (1972)

Koch, T., Martin, A.: Solving Steiner tree problems in graphs to optimality. Networks 32, 207–232 (1998)

Koch, T., Martin, A., Voß, S.: SteinLib: an updated library on Steiner tree problems in graphs. Tech. Rep. ZIB-Report 00-37, Konrad-Zuse-Zentrum für Informationstechnik Berlin. http://elib.zib.de/steinlib (2000)

Leitner, M., Ljubic, I., Luipersbeck, M., Prossegger, M., Resch, M.: New real-world instances for the Steiner tree problem in graphs. Tech. rep., ISOR, Uni Wien. http://homepage.univie.ac.at/ivana.ljubic/research/STP/realworld-stp-report-short.pdf (2014)

Mehlhorn, K.: A faster approximation algorithm for the Steiner problem in graphs. Inf. Process. Lett. 27, 125–128 (1988)

Minoux, M.: Efficient greedy heuristics for Steiner tree problems using reoptimization and supermodularity. INFOR 28, 221–233 (1990)

Osborne, L., Gillett, B.: A comparison of two simulated annealing algorithms applied to the directed Steiner problem on networks. ORSA J. Comp. 3(3), 213–225 (1991)

Poggi de Aragão, M., Ribeiro, C.C., Uchoa, E., Werneck, R.F.: Hybrid local search for the Steiner problem in graphs. In: Ext. Abstracts of the 4th Metaheuristics International Conference, pp. 429–433. Porto (2001)

Poggi de Aragão, M., Uchoa, E., Werneck, R.F.: Dual heuristics on the exact solution of large Steiner problems. In: Proceedings of Brazilian Symposium on Graphs, Algorithms and Combinatorics (GRACO), Elec. Notes in Disc. Math. vol. 7 (2001)

Poggi de Aragão, M., Werneck, R.F.: On the implementation of MST-based heuristics for the Steiner problem in graphs. In: Mount, D.M., Stein, C., (eds.) Proceedings of 4th Workshop on Algorithm Engineering and Experiments (ALENEX), LNCS, vol. 2409, pp. 1–15. Springer (2002)

Polzin, T.: Algorithms for the Steiner problem in networks. Ph.D. thesis, Universität des Saarlandes (2003)

Polzin, T., Vahdati Daneshmand, S.: Improved algorithms for the Steiner problem in networks. Discrete Appl. Math. 112(1–3), 263–300 (2001)

Polzin, T., Vahdati Daneshmand, S.: The Steiner tree challenge: an updated study. In: Manuscript Contributed to the 11th DIMACS/ICERM Implementation Challenge. http://dimacs11.zib.de/downloads.html (2014)