Abstract

Quadratic programming problems (QPs) that arise from dynamic optimization problems typically exhibit a very particular structure. We address the ubiquitous case where these QPs are strictly convex and propose a dual Newton strategy that exploits the block-bandedness similarly to an interior-point method. Still, the proposed method features warmstarting capabilities of active-set methods. We give details for an efficient implementation, including tailored numerical linear algebra, step size computation, parallelization, and infeasibility handling. We prove convergence of the algorithm for the considered problem class. A numerical study based on the open-source implementation qpDUNES shows that the algorithm outperforms both well-established general purpose QP solvers as well as state-of-the-art tailored control QP solvers significantly on the considered benchmark problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A large class of practical algorithms for the solution of dynamic optimization problems, as they appear for example in optimal control and dynamic parameter estimation, is based on sequential quadratic programming (SQP) [8, 9, 12, 28]. Particularly for their online variants, model predictive control (MPC) and moving horizon estimation (MHE), fast solution of the arising quadratic programming (QP) subproblems is crucial [12, 28, 38], exploiting all problem-inherent structure.

In a generic form the QP subproblems arising in this class of methods can be formulated as follows:

Discretized state variables are denoted by \(x_k \in \mathbb {R}^{n_x}\), while control variables are denoted by \(u_k \in \mathbb {R}^{n_u}\) for each discretization stage \(k \in \mathcal {S}:= \{ 0, \dots , N \}\) and \(k \in \mathcal {S}_{N}\), respectively. In general we use subscripts to indicate exclusions from a set, e.g., \(\mathcal {S}_{i,j} := \mathcal {S} \backslash \{ i,j \}\). For notational simplicity, we hide time-constant parameters in the state vector. The stages are coupled over the discretized time horizon of length \(N\) by constraints (1b), while constraints (1c) arise from \(n_d\) discretized path constraints. The objective (1a) is often a quadratic approximation to the Lagrangian of the original control or estimation problem. We assume throughout this paper that (1a) is strictly convex, i.e.,

In a typical SQP scheme for dynamic optimization, QP (1) is solved repeatedly, each time with updated (relinearized) data, until a termination criterion is met. While the QP data changes, one often assumes that the active-set, i.e., those equations in (1c) that are satisfied tightly with equality, is similar from one iteration to the next, particularly in later iterations. In the large class of MPC and MHE algorithms that feature linear time-invariant dynamic systems, QP (1) is solved repeatedly for different initial conditions, i.e., only few entries of the vector data of the QP change.

QP (1) is very sparse for long time horizons \(N\). The exploitation of these characteristic, predetermined sparsity patterns in combination with an exploitation of the problem similarity for algorithm warmstarting is one of the central challenges for efficiency of QP solvers in dynamic optimization. A large variety of attempts has been made to address these two requirements and we will briefly highlight several of these classes in the following.

The block-banded structure exhibited by (1) is typically exploited well by tailored interior-point (e.g., [13, 32, 37]) and first-order methods similar to Nesterov’s fast-gradient method [34] (e.g., [4, 39]). However, a well-known drawback of these methods is their limited wamstarting capability to exploit the knowledge of similarity between the solutions of subsequently solved QPs.

Tailored active-set-methods like [14, 15], on the other hand, can exploit this similarity for higher efficiency, but typically do not benefit from the problem-inherent sparsity as much as interior-point methods, as they, on the average, require significantly more iterations, each of which is dominated by the solution of a rather large linear system. One popular approach to overcome this bottleneck is to make use of a so-called condensing routine to express overparameterized state variables in terms of the control inputs and thus reduce the vector of optimization variables to only the control inputs [26, 30]. In general, this condensing step needs to be performed at every sampling time, with a typically quadratic or cubic runtime complexity in the horizon length (depending on the chosen implementation, cf. [2, 3, 20]). Furthermore, the initial factorization of the dense QP Hessian is of similar runtime complexity in the horizon length, see [27]. The active-set strategy suggested in [26] can exploit the sparsity structure, but is most useful when only few active-set changes occur between the iterations, since each change requires a new sparse matrix factorization.

In this paper, we follow up on the idea of a different QP algorithm that was presented for linear MPC problems in [16]. This approach aims at combining the benefits in terms of structure exploitation of interior-point methods with the wamstarting capabilities of active-set methods, and comes at only a linear runtime complexity in the horizon length. Based on ideas from [10] and [31], the stage coupling constraints (1b) induced by the MPC structure are dualized and the resulting QP is solved in a two level approach, using a non-smooth/semismooth Newton method in the multipliers of the stage coupling constraints on the higher level, and a primal active-set method in the decoupled parametric QPs of each stage on the lower level. Note that in contrast to classical active-set methods, this approach permits several active-set changes at the cost of one block-banded matrix factorization. We refer to this procedure as a dual Newton strategy. More details on the method are given in Sect. 2.

While in [16] only a limited prototype Matlab implementation was described, this work extends the algorithm to a more general problem class and gives details on an efficient implementation of the method. We further provide a theoretical foundation to the algorithm and prove convergence. We discuss parallelization aspects of the method and present a novel algorithm for the solution of the structured Newton system, that results in an parallel runtime complexity of the dual Newton strategy of \(\mathcal {O}( \log N)\) per iteration. Most importantly, we present qpDUNES, an open-source, plain C implementation of the DUal NEwton Stategy. This software comes with interfaces for C/C++ and Matlab. We compare runtimes of qpDUNES to state of the art structure-exploiting QP solvers for dynamic optimization problems based on three challenging benchmark control problems.

This paper bases in parts on the conference proceedings paper [19]. In contrast to [19], however, we provide significant additional details in this paper, such as in-depth algorithmic and theoretical investigations, implementation details, and benchmark results from linear MPC.

2 Method description

For clarity of presentation we group the optimization variables of dynamic optimization QP problems, the system states \(x_k \in \mathbb {R}^{n_x}\) and the control inputs \(u_k \in \mathbb {R}^{n_u}\), into the stage variables \(z_k = \begin{bmatrix}\, x_k^\top&u_k^\top \,\end{bmatrix}^\top \in \mathbb {R}^{n_z}\) for each stage \(k \in \mathcal {S}_N\), and \(z_N = \begin{bmatrix}\, x_N&0 \,\end{bmatrix}^\top \in \mathbb {R}^{n_z}\) for the terminal stage. Here, we define \(n_z := n_x + n_u\). Problem (1) then reduces to

which is the problem that we consider here. The cost function on each stage consists of a positive definite second-order term \(0 \prec H_k \in \mathbb {R}^{n_z \times n_z}\) and a first-order term \(g_k \in \mathbb {R}^{n_z}\) for each \(k \in \mathcal {S}\). Note that therefore primal solutions (if existent) are unique. Two subsequent stages \(k \in \mathcal {S}\) and \(k+1 \in \mathcal {S}\) are coupled by first-order terms \(C_k, E_{k+1} \in \mathbb {R}^{n_x \times n_z}\) and a constant term \(c_k\). We assume that all \(C_k\) have full row rank, i.e., \(\text {rank}(C_k) = n_x, ~\forall \, k \in \mathcal {S}_N\), and that all \(E_k\) have the special structure \(E_k = \begin{bmatrix}\, \mathrm {I} \,&\, 0 \,\end{bmatrix}\), where \(\mathrm {I} \in \mathbb {R}^{n_x \times n_x}\) is an identity matrix and \(0\) is a zero matrix of appropriate dimensions. Vectors \(\underline{d}_k, \overline{d}_k \in \mathbb {R}^{n_d}\), and a matrix \(D_k \in \mathbb {R}^{n_d \times n_z}\) of full row rank denote affine stage constraints.

Unless stated otherwise, we assume in the following that a feasible solution \(z^* := \begin{bmatrix} {z_0^*}^\top ,&\ldots ,&{z_N^*}^\top \end{bmatrix}^\top \) of (P) exists that fulfills the linear independence constraint qualification (LICQ). Section 5 discusses the consequences resulting from infeasibility of (P) and how it can be detected.

2.1 Dual decomposition

We decouple the QP stages by dualizing constraints (P2). By introducing the vector of Lagrange multipliers

we can express (P1) and (P2) by the partial Lagrangian function

where we define zero matrices \(E_{0} = C_{N} = 0 \in \mathbb {R}^{n_x \times n_z}\) and redundant multipliers \(\lambda _0 = \lambda _{N+1} := 0 \in \mathbb {R}^{n_x}\) only for notational convenience in this context (note that they are not among the optimization variables of the dual problem defined below).

By Lagrangian duality, the solution of (P) can be computed as

Because this Problem is separable in the stage variables \(z_k\), minimization and summation can be interchanged, and a solution to (P) can be obtained by solving

where

We refer to \((\text {QP}_k)\) as the kth stage QP. Note that each \((\text {QP}_k)\) depends on at most two block components of the vector of dual optimization variables \(\lambda \) defined in (3).

Remark 1

Since \(\lambda \) only enters in the objective of each \((\text {QP}_k)\), feasibility of \((\text {QP}_k)\), and thus existence of \(f^*_k(\lambda )\) is independent of the choice of \(\lambda \in \mathbb {R}^{N n_x}\). In particular, since the constraints of \((\text {QP}_k)\) are a subset of (P3), feasibility of (P) implies feasibility of \((\text {QP}_k)\).

Remark 2

Each \(f_k^*(\lambda )\) implicitly defines a vector \(z_k^*(\lambda )\), the solution of \((\text {QP}_k)\).

2.2 Characterization of the dual function

It was shown in [16] (based on results from [5, 17, 45]) that \(f^*(\lambda )\) is concave, piecewise quadratic, and once continuously differentiable. We establish the relevant findings in the following.

Definition 1

For a stage \(k \in \mathcal {S}\), the optimal active-set at \(\lambda \) is given by

i.e., the set of row indices of the constraints of \((\text {QP}_k)\) that are satisfied as an equality. Here, \(D_k^i\) refers to the \(i^{\text {th}}\) row of \(D_k\), and \(\underline{d}_k^i\) and \(\overline{d}_k^i\) refer to the \(i^{\text {th}}\) entry of the vector.

Definition 1 naturally extends to a definition of the active-set in the full space of primal variables by \(\mathcal {A}^*(z^*(\lambda )) := \mathcal {A}^*_0(z_0^*(\lambda )) \times \cdots \times \mathcal {A}^*_N(z_N^*(\lambda ))\). The finite number of disjoint active-sets further induces a subdivision of the dual \(\lambda \) space.

Definition 2

Each active-set defines a region \(A \subseteq \mathbb {R}^{N n_x}\) in the dual \(\lambda \) space. For a representative \(\lambda ^{(j)} \in \mathbb {R}^{N n_x}\) we have

By choosing representatives of pairwise distinct regions we can define an arbitrary, but fixed order that allows us to uniquely identify each region \(A^{(j)}\).

The name region is anticipatory, but it will become clear from Corollary 2 that the sets \(A^{(j)}\) indeed are connected. The number of regions \(n_r\) clearly is finite, as there is only a finite number of distinct active-sets. From Remark 1 we can conclude that each \(\lambda \in \mathbb {R}^{N n_x}\) is contained in a region \(A^{(j)}\), and thus

Remark 3

Two distinct regions \(A^{(j_1)}\) and \(A^{(j_2)}\) do not need to be disjoint. Values of \(\lambda \) that lead to weakly active stage constraints at \(z^*(\lambda )\) are contained in two or more regions. These values of \(\lambda \) form the seams of the regions.

Next, we substantiate Remark 2 by characterizing the nature of the dependency of \(z_k^*\) on the dual variables \(\lambda \) in the stage problems \((\text {QP}_k)\).

Lemma 1

Let \((\text {QP}_k)\) be feasible. Then, the optimal solution of \((\text {QP}_k)\), \(z_k^*(\lambda )\), is a piecewise affine and continuous function in \(\lambda \). In particular, the dependency is affine on each region \(A^{(j)}, 1 \le j \le n_r\).

Proof

(cf. [16], Lemma 2]; [45]) For stage Lagrange multipliers \(\mu _k \in \mathbb {R}^{2 n_d}\) the solution of (P) is given by (see, e.g., [18])

where \(\underline{D}_k^*\), \(\underline{d}_k^*\) and \(\overline{D}_k^*\), \(\overline{d}_k^*\) consist of the rows of \(D_k\), \(\underline{d}_k\), and \(\overline{d}_k\) that correspond to the constraints that are active (i.e., fulfilled with equality) at the lower or, respectively, the upper bound in the solution \(z_k^*\), and \(\mu _k^*\) is the vector of consistent dimension that contains the corresponding multipliers. The remaining stage multiplier entries are \(0\) in the solution of (P). As \(\lambda \) enters affinely only on the right-hand side, it is clear that for identical active-sets it holds that \(z_k^*\) depends affinely on \(\lambda \). Continuity has been shown in [17]. \(\square \)

Corollary 2

Each region \(A^{(j)}, ~1 \le j \le n_r\), of the dual space is convex and polyhedral.

Proof

Each stage problem \((\text {QP}_k)\) is constrained by affine constraints. For a given representative \(\lambda ^{(j)}\) the set \(\mathcal {F}_k := \{ z_k \in \mathbb {R}^{n_z} ~|~ \mathcal {A}_k(z_k) = \mathcal {A}^*_k(z_k^*(\lambda ^{(j)})) \}\) is therefore convex and polyhedral. From Lemma 1 we have that \(z_k^*\) is affine in \(\lambda \) for a certain (fixed) active-set. A region \(A^{(j)}\) is therefore the intersection of \(N+1\) (i.e., a finite number) preimages of convex sets, and therefore convex. \(\square \)

Lemma 1 is the basis for the following exhaustive characterization of the dual function \(f^*(\lambda )\), which we take from [16] without proof.

Lemma 3

([16], Lemma 3]) If all stage QPs \((\text {QP}_k)\) are feasible, then the dual function \(f^*(\lambda )\) exists and is described by a concave, continuously differentiable, and piecewise quadratic spline in \(\lambda \) space.

Remark 4

In particular \(f^*(\lambda )\) is quadratic on each region \(A^{(j)}\).

2.3 Solution by a (non-smooth) Newton method

Because (D) is an unconstrained problem and \(f^*(\lambda )\) is a piecewise-quadratic spline we employ a non-smooth Newton method, as originally proposed in [31], and also used in [16]. The dual iterates are updated via the iteration

(the Newton iterates \(\lambda ^{i}\) are not to be confused with the region representatives \(\lambda ^{(j)}\) from Sect. 2.2) for an initial guess \(\lambda ^{0}\) and a suitably chosen step size \(\alpha \) until \(f^*(\lambda ^i)\) is stationary. The step direction \(\varDelta \lambda \) is computed from

where \(\mathcal {M}(\lambda ^i) := - \frac{\partial ^2 {f^*}}{\partial {\lambda }^2}(\lambda ^i)\) and \(\mathcal {G}(\lambda ^i) := \frac{\partial {f^*}}{\partial {\lambda }}(\lambda ^i)\). By Remarks 3 and 4, \(\mathcal {M}(\lambda )\) is unique everywhere but on the seams of \(f^*(\lambda )\) (a null set), so almost everywhere. On the seams we assume that pointwise an arbitrary, but fixed second derivative from the finite number of possible choices is used, thus ensuring general well-definedness of \(\mathcal {M}(\lambda )\). As we will see later on, the specific choice of \(\mathcal {M}(\lambda )\) on the seams is not crucial for the convergence of the method. In Sect. 3.3 we show that the choice of \(\mathcal {M}(\lambda )\) on the seams is done implicitly and automatically by the degeneracy handling mechanism of the stage subproblem solver. We give more details on how the Newton system is set up in Sects. 3.2 and 3.3.

Clearly, stationarity of \(f^*(\lambda )\) is equivalent to optimality of (D). Observe that by definition of (D), \(z^*(\lambda ^i)\) (i.e., the canonical concatenation of all \(z_k^*(\lambda ^i)\)) is always optimal and (P3) are always fulfilled in the spirit of an active-set method. Lemma 4 will show that feasibility of (P2) is identical with stationarity of \(f^*(\lambda )\).

The complete QP solution method is given in Algorithm 1, where we denote the Lagrange multipliers of the stage constraints (P3) by \(\mu _k \in \mathbb {R}^{2 n_d}\) for each stage \(k \in \mathcal {S}\). Note that the parametric solution of the stage problems \((\text {QP}_k)\) for the current iterate \(\lambda ^i\) in Step 2, and, as we will see in Sect. 3, also the setup of \(\mathcal {G}(\lambda ^i)\) and \(\mathcal {M}(\lambda ^i)\) in Steps 3 and 6 permit an independent, concurrent execution on all stages (see also Sect. 6).

We discuss details of the respective steps in Sect. 3 and give a convergence proof for the algorithm in Sect. 4.

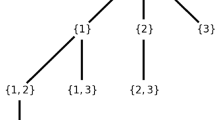

2.4 Characterization of the dual Newton iterations

A full QP solution by Algorithm 1 could be visualized as in Fig. 1. Each cell corresponds to a region \(A^{(j)}\) in \(\lambda \)-space, for which the primal active-set is constant. Starting from an initial guess \(\lambda ^0\), a Newton direction is computed from Eq. (6), leading to \(\lambda ^1_{\mathrm {FS}}\). Using a globalization strategy (see Sect. 3.6), a suitable step rescaling is found, leading to \(\lambda ^1_{\bar{\alpha }}\). In contrast to classical active-set methods, multiple active-set changes are possible in one iteration. For future reference we also indicate a minimum guaranteed step, which (in the regular case) leads at least to \(\lambda ^1_{\alpha _{\min }}\) in the neighboring region. In the second iteration of this illustration, \(\lambda ^2_{\mathrm {FS}}\) already provides sufficient progress, thus no globalization needs to be applied. In the following iteration \(\lambda ^*\) is found. We prove in Lemma 8 that a one-step terminal convergence is guaranteed, once the correct region is identified.

3 Algorithmic details of the dual Newton strategy

The dynamic optimization origin induces a specific structure in Problem (D), that we strive to exploit as explained in the following section.

3.1 Solution of decoupled parametric stage QPs

On each stage \(k \in \mathcal {S}\) we have to repeatedly solve a QP of size \((n_z, n_d)\) that only changes in the first-order term (and the negligible constant term) with the current guess of \(\lambda \). We rephrase problem \((\text {QP}_k)\) as

with

In general, \(H_k\) and \(D_k\) are dense. Such QPs can be solved efficiently (see [7, 14]), for example by employing the online active set strategy, which is implemented in the open-source QP solver qpOASES [15].

In the special, yet practically relevant case where \(H_k\) is a diagonal matrix and \(D_k\) is an identity matrix (i.e., only bounds on states and controls exist) the optimal solution \(z_k^*\) can conveniently be computed by component-wise “clipping” of the unconstrained solution as it was presented in [16]:

3.2 Structure of the Newton system

The right-hand-side vector \(\mathcal {G}: \mathbb {R}^{N n_x} \rightarrow \mathbb {R}^{N n_x}\) of the Newton system (6) is easily seen to only depend on two neighboring stages in each block \(\lambda _k\). It holds that

The left-hand side Newton matrix \(\mathcal {M} : \mathbb {R}^{N n_x} \rightarrow \mathbb {R}^{N n_x \times N n_x}\) has a block tri-diagonal structure, because only neighboring multipliers \(\lambda _k, \lambda _{k+1}\) can have a joint contribution to \(f^*\). At a fixed \(\lambda \)

where the diagonal and off-diagonal block components are given by

3.3 Gradient and Hessian computation

Lemma 4

(cf. [6], App.C]) Let all \((\text {QP}_k)\) be feasible. Then the derivative of \(f_k^*\) with respect to \(\lambda \) exists and its nonzero entries are given by

Proof

The derivative \(\frac{\partial {f_k^*}}{\partial {\lambda }}\) exists by Lemma 3. From (7) and (8) we observe that only \(\lambda _k\) and \(\lambda _{k+1}\) enter in \((\text {QP}_k)\), and therefore the only nonzero blocks of \(\frac{\partial {f_k^*}}{\partial {\lambda }}\) are given by \(\frac{\partial {f_k^*}}{\partial {\breve{\lambda }}}\), where we use \(\breve{\lambda } := \begin{bmatrix} \lambda _k&\lambda _{k+1} \end{bmatrix}\) to denote the projection of \(\lambda \) onto the respective relevant entries. We derive a closed form for these values by regarding the stage QP Lagrangian

Since \(H_k \succ 0\) and \((\text {QP}_k)\) is feasible by assumption, it holds that \((\text {QP}_k)\) has a (finite) optimal primal and dual solution \((z_k^*,\mu _k^*)\), and, by Danskin’s Theorem [11], we can interchange optimization and derivation in the sense that

holds. We then have

where the second and the third term vanish because of stationarity and the complementarity requirement of the optimal stage solution \((z_k^*, \mu _k^*)\). \(\square \)

Remark 5

We can see from Lemma 4 that \(\left\| \, \mathcal {G}(\lambda )\, \right\| \) is indeed a measure for both stationarity of \(f^*(\lambda )\) and infeasibility of (P2), as claimed in Sect. 2.3.

The second derivative of \(f^*\) can be computed as follows:

Lemma 5

Let \(Z^*_k \in \mathbb {R}^{n_z \times (n_z - n_\mathrm {act})}, \; k \in \mathcal {S}\) (where \(n_\mathrm {act}\) denotes the number of active constraints, which depends on \(z_k^*(\lambda )\)) be a basis matrix for the nullspace of the matrix of active constraint rows of \((\text {QP}_k)\), \(D_k^*\), which depends by \(z_k^*\) on \(\lambda \). \(P^*_k := Z^*_k {({Z^*_k}^\top H_k Z^*_k)}^{-1} {Z^*_k}^\top \in \mathbb {R}^{n_z \times n_z}\) denote the elimination matrix for this nullspace. Then \(\mathcal {M}(\lambda )\) is given by

where \(\mathcal {P} := \mathrm {diag}(\begin{bmatrix} P_0^*&P_1^*&\cdots&P_N^* \end{bmatrix})\) and

Proof

We compute the Hessian blocks in (11) explicitly. Differentiating (13) once more with respect to \(\lambda \), we obtain

and

Within a fixed active-set the optimal solution of \((\text {QP}_k)\) at \(\lambda \) is given by (see, e.g., [35])

Accordingly, the Hessian blocks (cf. Eq. (12)) are computed as

and

which concludes the proof. \(\square \)

Remark 6

From Lemma 5 we can see in detail why \(\mathcal {M}(\lambda )\) is naturally well-defined almost everywhere. Only cases where \(\lambda \) leads to weakly active constraints in some \((\text {QP}_k)\), we observe ambiguity of \(Z^*_k\) and need to resort to a point-wise arbitrary but fixed specification of \(Z^*_k\) for well-definedness of \(\mathcal {M}(\lambda )\). In practice, this ambiguity is naturally resolved by the degeneracy handling mechanism of the stage QP solver; it will in particular become clear from Theorem 9 that the convergence of the algorithm is not affected by the specific choice of \(Z^*_k\).

Remark 7

Under the assumption of LICQ, a nullspace basis matrix \(Z^*_k\) can be constructed as  by partitioning the matrix of active constraint rows in the solution \(z_k^* (\lambda )\), \(D^*_{k} =: \begin{bmatrix}\, B&N \,\end{bmatrix} \in \mathbb {R}^{n_{\text {act}} \times n_z}\), into an invertible matrix \(B \in \mathbb {R}^{n_{\text {act}} \times n_{\text {act}}}\) (without loss of generality the first \(n_{\text {act}}\) columns by reordering) and a \(n_{\text {act}} \times (n_z - n_{\text {act}})\) matrix \(N\) (see [23, 35] for details).

by partitioning the matrix of active constraint rows in the solution \(z_k^* (\lambda )\), \(D^*_{k} =: \begin{bmatrix}\, B&N \,\end{bmatrix} \in \mathbb {R}^{n_{\text {act}} \times n_z}\), into an invertible matrix \(B \in \mathbb {R}^{n_{\text {act}} \times n_{\text {act}}}\) (without loss of generality the first \(n_{\text {act}}\) columns by reordering) and a \(n_{\text {act}} \times (n_z - n_{\text {act}})\) matrix \(N\) (see [23, 35] for details).

Remark 8

It is important to note that \(P^*_k\) can be obtained relatively cheaply from a null-space QP solver like qpOASES [15] that directly provides \(Z^*_k\) and a Cholesky factor \(R\) for \(R^\top R = {Z^*_k}^\top H_k Z^*_k\); see [14]. For the special case of diagonal Hessian matrices \(H_k = \text {diag}(h_k^1, \ldots , h_k^{n_z})\) and simple bounds, the projection \(P^*_{k}\) is simply a diagonal matrix with either \(1{/}h_k^{i}\) or \(0\) entries depending on whether the corresponding variable bound is inactive or active. The calculation of the Hessian blocks can then be accelerated even further using diadic products as proposed in [16].

Remark 9

From the construction of \(\mathcal {M}\) in Lemma 5 we can see that the dual Newton strategy is, in principle, also capable of dealing with certain indefinite problems of the form (P), as long as the reduced Hessian blocks \({Z^*_k}^\top {H_k} {Z^*_k}\) remain positive (semi-)definite for all \(k \in \mathcal {S}\). This could, for example, be the case when equality stage constraints eliminate the negative definite directions from the stage Hessians. Basic requirement for this work is of course the stage QP solvers’ support of such indefinite QPs.

3.4 Solution of the Newton system and regularization

By Lemma 3, \(\mathcal {M}(\lambda )\) is positive semidefinite, because \(f^*(\lambda )\) is concave. The block-tridiagonal structure of the \(\mathcal {M}(\lambda )\), cf. Eq. (11), can be exploited for the efficient solution of the Newton system (6). Observing that a lower triangular factor \(L\) of \(\mathcal {M}(\lambda ) = L L^\top \) possesses the same structural zero-blocks below the diagonal, we suggest to employ a banded Cholesky decomposition. This factorization differs from a regular Cholesky decomposition (see, e.g., [35]) by skipping all redundant blocks left and below the subdiagonal block \(U_k^\top \) of each block column \(k\), thus reducing the computational complexity from \(O(N^3 n_x^3)\) to \(O(N n_x^3)\) floating point operations (FLOPs).

In the case of jointly redundant active constraints in several \((\text {QP}_k)\) via the stage coupling constraints (P2), \(\mathcal {M}(\lambda )\) may become rank-deficient [31] and the Newton system (6) ill-posed. We propose to overcome this by applying regularization. In our dual Newton software package qpDUNES both a Levenberg-Marquadt-type regularization and an “on-the-fly” regularization are available. On detection of singularity during the initial banded Cholesky factorization, the Levenberg-Marquadt approach replaces \(\mathcal {M}(\lambda ^i)\) in (6) by

where \(\gamma \in \mathbb {R}^+\) is a (small) constant regularization parameter. The “on-the-fly” regularization changes those diagonal elements for which the crucial division step in the Cholesky decomposition cannot be performed due to singularity (similarly to the modified Cholesky factorization described in [35], which is based on [22]). We note that the “on-the-fly” regularization ensures positive definiteness of the resulting \(\tilde{\mathcal {M}}(\lambda ^i)\) (because it has a unique Cholesky decomposition) and avoids the need of restarting the factorization, but may be numerically less stable.

the latter only regularizes those diagonal elements for which the crucial division step in the Cholesky decomposition cannot be performed due to singularity (similarly to the modified Cholesky factorization described in [35], which is based on [22]). While the former one uses

3.5 A reverse Cholesky factorization for improved stability

In the context of MPC, one expects rather many active constraints in the beginning of the control horizon and few to none towards the end of the horizon in many applications. This may, for example, be a result of the chosen objective being of tracking nature or of the rejection of a perturbation of the controlled process which enters at the first stage. We aim to exploit this knowledge by applying a Cholesky factorization to \(\mathcal {M}\) (we omit the \(\lambda \)-dependency in this section for notational convenience) in reverse order, i.e., starting from the last row/column, as detailed in Algorithm 2. Instead of a factorization \(\mathcal {M}= L L^\top \), we obtain a Cholesky-like factorization \(\mathcal {M}= \mathcal {R} \mathcal {R}^\top \) that is equally well suited for an efficient solution of the Newton system (6). To see this, observe that Algorithm 2 is equivalent to a standard Cholesky factorization applied to \(\hat{\mathcal {M}} :=\mathcal {J} \mathcal {M}\mathcal {J}^\top \) after a full row and column permutation through  .

.

The advantage of applying this reverse Cholesky factorization in the dual Newton strategy is twofold. First, observe that a diagonal block \(W_k\) only changes from one Newton iteration to the next if the active-set on stage \(k\) or stage \(k-1\) changes, and an off-diagonal block \(U_k\) only changes if the active-set on stage \(k\) changes (in particular note that \(\mathcal {M}\) only needs to be recomputed in blocks with active-set changes). As Algorithm 2 only uses data from the last \(k\) block rows (and columns) in block iteration \(k\), it is sufficient to restart the factorization from the block row that corresponds to the last active-set change. Furthermore, we can also expect better numerical properties of \(R\), as the principal submatrix corresponding to stages without active state constraints is positive definite (recall that rank-deficiency of \(\mathcal {M}\) can only arise from a redundancy in active stage constraints over several stages) and of similar conditioning as the original problem; a significant worsening of the conditioning can only appear in block-rows with active stage constraints, which, in a typical MPC setting, tend to appear rather on the earlier than on the later stages, and thus enter later in Algorithm 2 compared to the standard Cholesky factorization.

To formalize this, we identify the reverse Cholesky factorization with the discrete time Riccati recursion in the following. Let us regard the (possibly regularized) Newton Hessian \(\mathcal {M}\succ 0\) in block form as defined in (11). Then, the reverse Cholesky factorization (Algorithm 2) is easily seen to be given by the recursion

where the Cholesky factor \(\mathcal {R}\) in block form is given by

with upper triangular blocks \(\mathcal {R}_{k,k}\) given implicitly (but uniquely) by \(X_k =: \mathcal {R}_{k,k} \mathcal {R}_{k,k}^\top ~~ \forall \, k \in \mathcal {S}_{0}\) and dense blocks \(\mathcal {R}_{k,k+1} = U_{k} \times {\mathcal {R}_{k+1,k+1}} ~~ \forall \, k \in \mathcal {S}_{0,N}\). Note that in this context the subscripts \(\mathcal {R}_{k,k}\) refer to the block entries of \(\mathcal {R}\) rather than to the individual entries (as used in Algorithm 2). We refer to \(X_k\) as Cholesky iterates in the following.

For linear time-invariant systems without active constraints it follows from Lemma 5 that

for \(k \in \mathcal {S}_{0,N}\), where (analogously to QPs (1) and (P))  are the dynamics, \(E = \begin{bmatrix} \mathrm {I}&0 \end{bmatrix}\) is the state selection matrix, and \(H = \small {\begin{bmatrix} Q&S^\top \\ S&R \end{bmatrix}}\) is the objective Hessian. Due to a possibly different choice of the Hessian on the last interval (\(H \equiv P\)),

are the dynamics, \(E = \begin{bmatrix} \mathrm {I}&0 \end{bmatrix}\) is the state selection matrix, and \(H = \small {\begin{bmatrix} Q&S^\top \\ S&R \end{bmatrix}}\) is the objective Hessian. Due to a possibly different choice of the Hessian on the last interval (\(H \equiv P\)),

Theorem 6

If P is the solution to the discrete time algebraic Riccati equation

then the Cholesky iterates \(X\) are constant, i.e., recursion (17) is stationary. In particular, \(X_k := P^{-1} + {\textit{CH}}^{-1} C^\top = W_N ~~\forall \, k \in \mathcal {S}_0\).

Proof

See Appendix.

This proof is easily seen to also extend to the linear time-varying case without active constraints, where we have (cf. Lemma 5):

with \(C_k = \begin{bmatrix} A_k&B_k \end{bmatrix}, ~\forall \, k \in \mathcal {S}_N\), \(E_k = \begin{bmatrix} \mathrm {I}&0 \end{bmatrix}, ~\forall \, k \in \mathcal {S}_N\), \(H_k = \begin{bmatrix} Q_k&S_k^\top \\ S_k&R_k \end{bmatrix}, ~\forall \, k \in \mathcal {S}_N\), and \(H_N = Q_N\).

Corollary 7

Let \(W_k,~k\in \mathcal {S}_0\) and \(U_k,~k\in \mathcal {S}_{0,N}\) be computed from an linear time-varying system without (active) state constraints. Then, the Cholesky iterates \(X_k, ~k \in \mathcal {S}_{0}\) from the Cholesky recursion (17) can be identified with the discrete time time-varying algebraic Riccati recursion

via \(X_k = P_k^{-1} + C_{k-1} H_{k-1}^{-1} C_{k-1}^\top ~~\forall \, k \in \mathcal {S}_0\).

Proof

See Appendix. \(\square \)

3.6 Choice of the Newton step size

The piecewise quadratic nature of \(f^*(\lambda )\) implies that a globalization strategy is needed for the algorithms to converge reliably. For computational efficiency close to the solution, where we assume the quadratic model of the dual function \(f^*(\lambda )\) to be accurate, we propose a line search technique to find an (approximate) solution to

In contrast to general nonsmooth optimization, an exact line search is possible at reasonable cost in our context. In particular, \(f^*(\lambda ^i + \alpha \varDelta \lambda )\) is a one-dimensional piecewise quadratic function along the search direction \(\varDelta \lambda \). An exact quadratic model in search direction can be built up by evaluating each \(f_k^*({\lambda ^i} + \alpha _j {\varDelta \lambda })\), \(k \in \mathcal {S}\) at each value of \(\alpha _j \in [0,1]\) that corresponds to an active-set change on this stage. Alongside, slope information in search direction can be obtained by

cf. Eq. (13). As the second derivate is constant within each region, it can be cheaply and accurately computed as the difference quotient of the slope at the left and the right side of the intersection of each region with the search direction \({\varDelta \lambda }\). Note that a parametric active-set strategy like qpOASES traverses the required points \({z_k^*}({\lambda ^i} + \alpha _j \, {\varDelta \lambda })\) for values of \(\alpha _j\) corresponding to an active-set change on stage \(k\) naturally while computing \({z_k^*}({\lambda ^i} + {\varDelta \lambda })\) for the full step \({\varDelta \lambda }\) (cf. [14]). When employing the clipping operation (9) for the solution of the stage problems \((\text {QP}_k)\), the points of active-set changes along the search direction can analogously be determined by a simple ratio test.

With the piecewise quadratic model in search direction built up on each stage \(k \in \mathcal {S}\), the dual objective value can be cheaply evaluated at each \(\alpha _j\), where an active-set change occurs on any stage \(k \in \mathcal {S}\). Taking the maximum over all these values (e.g., by performing a bisection search) identifies, in conjunction with the slope information, the region containing the maximum dual function value in search direction, and the optimal \(\alpha ^*\) can be found as the maximum of the one-dimensional quadratic model of this region.

Alternatively, also heuristic backtracking-based search strategies seem appropriate in this context. Particularly the fact that the gradient evaluation \(\mathcal {G}({\lambda })\) comes almost at the same cost as a function evaluation \(f^*({\lambda })\) can be exploited within the line search.

A search strategy that seemed to perform particularly well in practice was a combination of a fast backtracking line search, allowing to quickly detect very small step sizes, with a bisection interval search for refinement.

While we make use of a backtracking line search to quickly decrease the maximum step size \(\alpha _{\max }\), the minimum step size \(\alpha _{\min }\) is given by the minimum scaling of the search direction that leads to an active-set change on any stage. While this is intuitively clear, as each region with a constant active-set is quadratic and Newton’s method takes a step towards the minimum of a local quadratic function approximation, we give a formal proof for this in the following section, in the context of convergence (Lemma 8). This guaranteed minimum step size is indicated through \(\lambda ^i_{\alpha _{\min }}\) in Fig. 1.

Remark 10

We obtain all \(\alpha \)-values at which active-set changes occur at no extra cost when employing an online active-set strategy to solve each \((\text {QP}_k)\). Therefore, taking the minimum over all these \(\alpha \)-values over all stages \(k \in \mathcal {S}\) provides us with a lower bound for \(\alpha _{\min }\). If the solution to \((\text {QP}_k)\) is computed by Eq. (9), points of active-set changes can still be obtained cheaply by comparing the unconstrained to the clipped solution in each component.

4 Finite convergence of the algorithm

Convergence of non-smooth Newton methods has been proven before for functions with similar properties [21, 31, 36]. In the following we show convergence in the specific setting present in this paper; this allows us to present a shorter, more straightforward proof following the generic proof concept for descent methods. We furthermore require these details to establish the theory an infeasibility detection mechanism in Sect. 5, which according to our knowledge is novel to QP solvers based on nonsmooth Newton methods.

A bit of notation is needed throughout this and the following section. By \(\mathcal {M}(\lambda )\) we refer to the Hessian matrix of (D), as defined in Eqs. (11–12). The possibly regularized version of \(\mathcal {M}(\lambda )\) used in the solution step of the Newton system (6) is denoted by \(\tilde{\mathcal {M}}(\lambda )\). We omit the dependency on \(\lambda \) occasionally for notational convenience when it is clear from the context. The active-set of stage constraints at \(\lambda \) is denoted by \(\mathcal {A}^*(z^*(\lambda ))\).

We start with a rather obvious result, that nonetheless is crucial for the practical performance of the Dual Newton Strategy.

Lemma 8

(Local one-step convergence) Let (P) be feasible. Let \(\lambda ^i\) be the current dual iterate in Algorithm 1. Let \(\mathcal {M}(\lambda ^i)\) be positive definite, i.e., no regularization is needed during Step 7 in Algorithm 1, and let \(\varDelta \lambda \) be the solution of the Newton equation (6). Then, if \(\mathcal {A}^*(z^*(\lambda ^i)) = \mathcal {A}^*(z^*(\lambda ^i + \varDelta \lambda ))\), it holds that \(\lambda ^{i+1} := \lambda ^i + \varDelta \lambda \) solves Problem (D). In particular it holds that

Proof

By Lemma 3 \(f^*\) is piecewise quadratic in \(\lambda \). By the construction in Lemma 5 we know that \(\mathcal {M}(\lambda ^i)\) is constant within each region \(A(\lambda ^i)\), since the active-set is fixed. By its definition, the Newton step \(\varDelta \lambda \) points to the maximum of the quadratic function characterizing \(A(\lambda ^i)\). By concavity of \(f^*\) it follows that \(\lambda ^i + \varDelta \lambda \) has to be the maximum of \(f^*\) and thus solves (D). The claim (21) follows immediately. \(\square \)

Lemma 8 is applied twofold in the dual Newton strategy. First, it allows us to make our line search smarter by only considering step sizes that lead to at least one active-set change (or full steps) as mentioned above in Sect. 3.6. Second, it shows that once the correct region of the solution is identified we have a one-step convergence to the exact solution (up to numerical accuracy), cf. Sect. 2.4. Next, we show global convergence.

Theorem 9

(Global convergence) Let (P) be feasible. Let \(\lambda ^0 \in \mathbb {R}^{N n_x}\) and let \(\lambda ^i \in \mathbb {R}^{N n_x}\) be defined recursively by \(\lambda ^{i+1} := \lambda ^{i} + \alpha ^i {\varDelta \lambda }^i\), where \({\varDelta \lambda }^i\) is the (possibly regularized) solution to Eq. (6), and \(\alpha ^i\) is the solution to Eq. (20). Then the sequence \(\{\lambda ^i \}_{i \in \mathbb {N}_0} \subset \mathbb {R}^{N n_x}\) converges to the unique maximum \(\hat{\lambda }\) with \(\mathcal {G}(\hat{\lambda }) = 0\).

Proof

The sequence \(\{\lambda ^i \}_{i \in \mathbb {N}_0}\) induces a sequence \(\{ f^i := f^*(\lambda ^i) \}_{i \in \mathbb {N}_0} \subseteq \mathbb {R}\). By definition of the exact line search (20) it holds that \(f^{i+1} \ge f^i\), i.e., \(\{ f^i\}_{i \in \mathbb {N}_0}\) is monotonically increasing. Since (P) is feasible, \(f^*(\lambda )\) is a bounded, concave function by Lemma 3 and duality theory. By the Bolzano-Weierstrass Theorem \(\{f^i \}_{i \in \mathbb {N}_0}\) thus converges to an accumulation point \(\hat{f}\).

Due to monotonicity of \(\{ f^i\}_{i \in \mathbb {N}_0}\) it holds that \(\{\lambda ^i \}_{i \in \mathbb {N}_0}\) is contained in the superlevel set

which is compact since \(f^*(\lambda )\) is a bounded concave function. A convergent subsequence \(\{\lambda ^{i^{(1)}} \} \subseteq \{\lambda ^i \}_{i \in \mathbb {N}_0}\) therefore has to exist and its limit \(\hat{\lambda }\) fulfills \(f^*(\hat{\lambda }) =\hat{f}\) because of the induced monotonicity of \(f^*(\lambda ^{i^{(1)}})\).

What remains to show is that \(\hat{\lambda }\) indeed maximizes \(f^*(\lambda )\), i.e., \(\mathcal {G}(\hat{\lambda }) = 0\). Assume contrarily \(\mathcal {G}(\hat{\lambda }) \ne 0\). Because \(\tilde{\mathcal {M}}(\lambda ^i)\) is strictly positive definite and uniformly bounded away from \(0\) in norm, it holds that \(\hat{\varDelta \lambda } = {\tilde{\mathcal {M}}(\hat{\lambda })}^{-1} \mathcal {G}(\hat{\lambda }) \ne 0\) (cf. Eq. (6)) is an ascent direction. Then \(\hat{\alpha }> 0\) holds for the solution of Eq. (20), and by \(C^1\)-continuity of \(f^*(\lambda )\) we can conclude that there is a \(\delta > 0\) with

Since \(\{\lambda ^{i^{(1)}} \}\) converges to \(\hat{\lambda }\), an index \(\bar{i} \in \mathbb {N}\) exists, such that for all \(i^{(1)} \ge \bar{i}\) we have \({\varDelta \lambda }^{i^{(1)}}\) sufficiently close to \(\hat{\varDelta \lambda }\) and \(\lambda ^{i^{(1)}}\) close enough to \(\hat{\lambda }\) such that

where the first inequality holds by the maximum property of the line search in each iteration, and the second one holds by continuity of \(f^*(\lambda )\). This, however, would be a contradiction to \(\hat{\lambda }\) being an accumulation point of a monotonically increasing sequence, so \(\mathcal {G}(\hat{\lambda }) = 0\), and our claim holds. \(\square \)

Lemma 10

Let \(z^*(\lambda ^*)\) be a feasible solution for (P), that fulfills the LICQ. Then \(\mathcal {M}(\lambda ^*)\) is strictly positive definite.

Proof

From Lemma 5 we have that \(\mathcal {M}(\lambda ^*) = \mathcal {C}\, \mathcal {P(\lambda ^*)}\, \mathcal {C}^\top \), where

with \(\mathcal {Z}^* := \mathrm {block\,diag}( Z_0^*, Z_1^*, \ldots , Z_N^* )\) and \(\mathcal {H} := \mathrm {block\,diag}( H_0, H_1, \ldots , H_N )\). As in Lemma 5, each \(Z_k^*, \, k \in \mathcal {S}\) denotes a basis matrix for the active constraints in the solution of \((\text {QP}_k)\), in this context the solution given the subproblem parameter \(\lambda ^*\). Consider now

Since \(\mathcal {H}\) is positive definite and \(\mathcal {Z^*}\), being a block diagonal composition of basis matrices, has full row rank, we have \(\mathcal {Z^*}^\top \mathcal {H} \mathcal {Z^*} \succ 0\), and thus \((\mathcal {Z^*}^\top \mathcal {H} \mathcal {Z^*})^{-1} \succ 0\). Using Eq. (22), this implies \(\lambda ^\top \mathcal {M}(\lambda ^*) \lambda \ge 0\).

Assume \(\lambda ^\top \mathcal {M}(\lambda ^*) \lambda = 0\). Since \((\mathcal {Z^*}^\top \mathcal {H} \mathcal {Z^*})^{-1} \succ 0\) it must hold that \(\lambda ^\top \mathcal {C} \mathcal {Z^*} = 0\).

The columns of \(\mathcal {Z^*}\) however are linearly independent and span the nullspace of the active stage constraints, i.e., every vector from the nullspace of \(\mathcal {Z^*}\) can be expressed by a linear combination of active stage constraints. If now \(\lambda ^\top \mathcal {C}\) lies in the nullspace of the active stage constraints this means there is a linear combination of active stage constraints that represents \(\lambda ^\top \mathcal {C}\), which is a linear combination of the stage coupling equality constraints. Since LICQ holds we can conclude that \(\lambda = 0\), and thus \(\mathcal {M}(\lambda ^*) \succ 0\) holds. \(\square \)

Corollary 11

(Finite termination of Algorithm 1) Let \(z^*(\lambda ^*)\) be a feasible solution for (P) that fulfills the LICQ. Let \(\lambda ^0, \lambda ^1, \ldots \) be computed from Algorithm 1 (in exact arithmetic). Then \(\{\lambda ^i \}_{i \in \mathbb {N}_0}\) becomes stationary after finitely many iterations, i.e., \(\exists \, \bar{i} ~:~ \lambda ^{i} = \lambda ^* ~\forall \, i \ge \bar{i}\).

Proof

From Lemma 1 we know that \(z_k^*(\lambda )\) depends continuously on \(\lambda \). If no stage constraints of \((\text {QP}_k)\) are weakly active in \(\lambda ^*\), then \(\lambda ^*\) lies in the strict interior of a region \(A^*\) (with \(\mathcal {M}(\lambda )\) constant on \(A^*\)) in the dual \(\lambda \) space. According to Theorem 9 there is a finite iteration index \(\bar{i}\) with \(\lambda ^{\bar{i}} \in A^*\). Due to Lemma 10 we have that \(\mathcal {M}(\lambda )\) is non-singular on \(A^*\), and Lemma 8 guarantees convergence in the next iteration.

If there are weakly active constraints in \(\lambda ^*\) (i.e., \(\lambda ^*\) lies on the boundary between several, but a finite number of, nonempty regions \(A^{(j_1)}, \ldots , A^{(j_n)}\) in Fig. 1), then due to \(C^1\) continuity of \(f\), each quadratic function defining \(f\) on \(A^{(j_i)}, \; i \in \{1, \ldots , n\}\) needs to have its maximum in \(\lambda ^*\). We can define a ball \(B_\epsilon (\lambda ^*)\) of fixed radius \(\epsilon > 0\) around \(\lambda ^*\) with the property that for every \(\lambda \in B_\epsilon (\lambda ^*)\) the dual function \(f\) on a region \(A^{(j_i)}\) containing \(\lambda \) is again defined by a quadratic function having its maximum in \(\lambda ^*\). By the identical argument as before we can conclude finite termination of algorithm 1 with Theorem 9, Lemmas 10 and 8. \(\square \)

5 Infeasibility handling

If the primal problem (P) is infeasible, two possible consequences for the dual problem (D) arise. If a stage \(k\) and a selection of stage constraints \(\{ \underline{d}_k^i \le D_k^i z_k \le \overline{d}_k^i \}_{i \in \mathcal {I}_k}\), \(\mathcal {I}_k \subseteq \{1, ..., n_{d} \}\) exists that cannot be satisfied by any \(z_k \in \mathbb {R}^{n_z}\), then and only then the dual problem (D) is infeasible as well, as no choice of \(\lambda \) will render \((\text {QP}_k)\) feasible (see also Remark 1).

In the following, we describes the behavior of the algorithm when all problems \((\text {QP}_k)\) are feasible, and yet (P) is infeasible.

Lemma 12

Let all \((\text {QP}_k)\) be feasible. If (P) is infeasible, the dual function \(f^*(\lambda )\) is unbounded and there exists (at least) one region in \(\lambda \) space, \(\emptyset \ne A^\mathrm {inf} \subseteq \mathbb {R}^{N n_x}\), with constant \(\mathcal {M}_{\mathrm {inf}}\) on \(A^\mathrm {inf}\) and

-

(i)

\(\mathcal {M}_{\mathrm {inf}}\) singular, i.e., \(\exists \, \lambda \ne 0 \;:\; \mathcal {M}_{\mathrm {inf}} \lambda = 0\),

-

(ii)

\(\forall \, \bar{f} \in \mathbb {R}~ \exists \, \hat{\lambda } \in A^{\mathrm {inf}} \;:\; f^*(\hat{\lambda }) > \bar{f}\),

-

(iii)

for all \(A^{(j)}\), defined by a representative \(\lambda ^{(j)}\), with \(\mathcal {M}(\lambda ^{(j)}) \succ 0\) it holds

$$\begin{aligned} \exists \, \hat{\lambda } \in A^{\mathrm {inf}} ~ \forall \, \bar{\lambda }\in A^{(j)} \;:\; \; f^*(\hat{\lambda }) > f^*(\bar{\lambda }). \end{aligned}$$

Proof

Since all \((\text {QP}_k)\) are feasible, \(f^*(\lambda )\) exists and (D) is feasible. Thus, (D) has to be unbounded by duality theory.

Let \(\lambda ^{(j)} \in A^{(j)}\) for any \(A^{(j)}\). The mapping \(\lambda \rightarrow f^*(\lambda )\) is onto an interval that contains the half-open interval \([ f^*(\lambda ^{(j)}), +\infty )\) since \(f^*(\lambda )\) is continuous and unbounded. Since there is only a finite number of regions by Definition 2, property (ii) holds.

Assume now (i) is violated, i.e., \(\exists \, A^{(j)}\) with \(\mathcal {M}(\cdot ) \succ 0\) on \(A^{(j)}\) and \( \forall \, \bar{f} \in \mathbb {R}~ \exists \, \lambda \in A^{(j)} : f^*(\lambda ) > \bar{f}\). Since \(\mathcal {M}(\cdot ) \succ 0\) is constant on \(A^{(j)}\) we have that \(f^*(\lambda )\) is strictly, and even strongly concave on \(A^{(j)}\). Therefore \(\exists \, \bar{f} < \infty \) with \(f^*(\lambda ) \le \bar{f} ~\forall \, \lambda \in \mathcal {A}^{(j)}\), a contradiction. Therefore (i) holds.

In particular \(f^*(\lambda )\) is bounded (from above) on each \(A^{(j)}\) with \(\mathcal {M}(\cdot ) \succ 0\). Since there is only a finite number of regions, property (ii) implies (iii). \(\square \)

Lemma 12 tells us that unboundedness of the dual objective function value can only occur in regions with singular Newton Hessian matrix \(\mathcal {M}(\cdot )\). We further characterize these unbounded regions in the following.

Definition 3

(Infinite ray) For \(\varDelta \lambda \ne 0\) we call a pair \((\bar{\lambda }, \varDelta \lambda )\) an infinite ray, if there is a region \(A^\mathrm {inf} \subseteq \mathbb {R}^{N n_x}\) represented by \(\bar{\lambda }\in A^\mathrm {inf}\) such that the ray is

-

1.

contained in the region: \(\bar{\lambda }+ \delta \cdot \varDelta \lambda \in A^\mathrm {inf} ~~\forall \, \delta >0\),

-

2.

in the nullspace of the region’s Hessian: \(\mathcal {M}(\bar{\lambda }) \varDelta \lambda = 0\),

-

3.

an ascent direction: \(\mathcal {G}(\bar{\lambda })^\top \varDelta \lambda > 0\).

Clearly every region containing an infinite ray is an unbounded region in the sense of Lemma 12. We additionally have the following characterizations.

Remark 11

Because \(\varDelta \lambda \) lies in the nullspace of the negated dual Hessian \(\mathcal {M}(\cdot )\), the dual gradient \(\mathcal {G}(\cdot )\) along a ray \((\bar{\lambda }, \varDelta \lambda )\) is constant.

Lemma 13

Let \((\bar{\lambda },\varDelta \lambda )\) be an infinite ray contained in \(A^\mathrm {inf}\). For every \(\tilde{\lambda } \in A^\mathrm {inf}\) it holds \(\tilde{\lambda }+ \gamma \cdot \varDelta \lambda \in A^\mathrm {inf} ~~\forall \gamma > 0\), i.e., \((\tilde{\lambda }, \varDelta \lambda )\) is an infinite ray as well.

Proof

Since \(A^\mathrm {inf}\) is convex by Lemma 2 and both \(\tilde{\lambda } \in A^\mathrm {inf}\) and \(\bar{\lambda }+ \gamma \cdot \varDelta \lambda \in A^\mathrm {inf}\) for each choice of \(\gamma >0\), it holds

From Lemma 2 we also have that \(A^\mathrm {inf}\) is polyhedral and therefore closed in \(\overline{\mathbb {R}}^{N n_x}\), where \(\overline{\mathbb {R}} := \mathbb {R}\cup \{ -\infty , +\infty \}\). Thus, the limit of (23) for \(\gamma \rightarrow \infty \) is contained in \(A^\mathrm {inf}\), and the claim holds. \(\square \)

Lemma 13 is interesting because it tells us that \(A^\mathrm {inf}\) has a cone- or beam-like shape. This will play a role in the following, when we characterize certificates for an unbounded dual problem.

Definition 4

(Ridge) Let \(({\lambda }^\ddagger ,\varDelta \lambda ^\ddagger )\) be an infinite ray with \(\varDelta \lambda ^\ddagger \ne 0\). We call \(({\lambda }^\ddagger ,\varDelta \lambda ^\ddagger )\) a ridge if it holds \(\varDelta \lambda ^\ddagger = \gamma \cdot \mathcal {G}(\lambda ^\ddagger )\) for a \(\gamma > 0\), i.e., if \(\varDelta \lambda ^\ddagger \) is aligned with the gradient of the dual function \(f^*\) along the ray it is defining.

Theorem 14

Let all \((\text {QP}_k)\) be feasible. If (P) is infeasible, then a ridge \(({\lambda }^\ddagger ,\varDelta \lambda ^\ddagger )\) exists.

Proof

From Lemma 12 we know that a non-empty region \(A^\mathrm {inf}\) exists. We further know that \(f^*(\lambda )\) is an unbounded concave quadratic function on \(A^\mathrm {inf}\). An infinite ray \((\bar{\lambda },\varDelta \lambda )\) in the sense of Definition 3 therefore has to exist in \(A^\mathrm {inf}\).

From Lemma 13 we have that in this case \((\tilde{\lambda }, \varDelta \lambda )\) is an infinite ray as well for every \(\tilde{\lambda } \in A^\mathrm {inf}\).

Let \(A^\mathrm {inf}_\cup := A^{\mathrm {inf},1} \cup A^{\mathrm {inf},2} \cup \ldots \cup A^{\mathrm {inf},n}\) be the union of all unbounded regions in the sense of Lemma 12. Since \(f^*({\lambda })\) is concave, \(A^\mathrm {inf}_\cup \) is connected. For the same reason it also holds that the superlevel set

is convex if we choose \(\bar{f} \in \mathbb {R}\) sufficiently large. Since \(f^*(\lambda )\) is continuous and unbounded on \(\bar{A}^\mathrm {inf}_\cup \), it holds that \(\bar{A}^\mathrm {inf}_\cup \) is \((N n_x)\)-dimensional, i.e., full-dimensional; otherwise a directional vector \(e \in \mathbb {R}^{N n_x}\) would exist with \(\tilde{\lambda }\in \mathrm {int}(\bar{A}^\mathrm {inf}_\cup )\), i.e., \(f^*(\tilde{\lambda }) > \bar{f}\) and \(f^*(\tilde{\lambda }+ \epsilon \cdot e) < \bar{f} ~~\forall \, \epsilon > 0\), a violation of continuity.

Assume for the moment that every region \(A^{\mathrm {inf},j}\) only contains one infinite ray (up to translation and scaling). Consider the nonempty intersection of \(\bar{A}^\mathrm {inf}_\cup \) with a \((N n_x-1)\)-dimensional Hyperplane \(F \subset \mathbb {R}^{N n_x}\). If \(F \cap \bar{A}^\mathrm {inf}_\cup \) does not contain a singular ray, \(f^*(\lambda )\) has to be bounded on \(F \cap \bar{A}^\mathrm {inf}_\cup \), as \(f^*(\lambda )\) is composed from only a finite number of concave quadratic functions. In particular \(f^*(\lambda )\) attains a maximum \(\hat{\lambda }\) somewhere in the intersection.

This maximum \(\hat{\lambda }\) is characterized by the fact that the dual gradient \(\mathcal {G}(\hat{\lambda })\) is orthogonal to \(F\) if \(\hat{\lambda } \in \mathrm {int}(F \cap \bar{A}^\mathrm {inf}_\cup )\). Since the dual \(\lambda \) space is \((N n_x)\)-dimensional \(\mathcal {G}(\hat{\lambda })/\Vert \mathcal {G}(\hat{\lambda })\Vert \) is uniquely defined by \(F\) and vice versa. Recall that \((\hat{\lambda }, \varDelta \lambda )\) is an infinite ray in every \(\hat{\lambda }\in \bar{A}^\mathrm {inf}_\cup \) for one fixed \(\varDelta \lambda \) as shown above. Since \(\mathcal {G}(\lambda )\) is continuous, varying (i.e., “rotating”) \(F\) eventually has to yield a \(\hat{\lambda }\) with \(\mathcal {G}(\hat{\lambda }) = \gamma \varDelta \lambda \) for some \(\gamma > 0\).

If now there is a region \(A^\mathrm {inf}_j\) with more than one infinite ray (i.e., the directional vectors \(\varDelta \lambda \) of the infinite rays span a space of dimensionality \(k > 1\)) the same argument can be applied, but with a \((N n_x-k)\)-dimensional Hyperplane \(F \subset \mathbb {R}^{N n_x}\). In this case \(\mathcal {G}(\hat{\lambda })\) is not uniquely defined anymore, but lies in the normal space of \(F\). By the same argument as above there has to be an \(F\) whose normal space coincides with the space spanned by the directional vectors \(\varDelta \lambda \) of the infinite rays of \(A^\mathrm {inf}_j\), and thus again there is an infinite ray \((\hat{\lambda }, \varDelta \lambda )\) coinciding with the gradient \(\mathcal {G}(\hat{\lambda })\) at its base point \(\hat{\lambda }\). \(\square \)

Remark 12

Let \((\lambda ^\ddagger , \mathcal {G}(\lambda ^\ddagger )) \subseteq A^\ddagger \subseteq \bar{A}^\mathrm {inf}_\cup \) be a ridge. Clearly, \(\mathcal {M}(\lambda ^\ddagger )\) is rank deficient (and constant on \(A^\ddagger \)) and thus regularized with \(\delta I \succ 0\) by Algorithm 1. By Theorem 14 and Definitions 3 and 4 it holds \(\mathcal {M}(\lambda ^\ddagger ) \mathcal {G}(\lambda ^\ddagger ) = 0\) and we have

i.e., Algorithm 1 only performs gradient steps and thus remains on the ridge. Therefore we can see a ridge as the analogon to a fixed point in the case where the dual function \(f^*(\lambda )\) is unbounded.

Lemma 15

(Minimality of the ridge gradient) Let \((\lambda ^\ddagger , \varDelta \lambda ^\ddagger )\) be a ridge. Then

for all \(\lambda \in \mathbb {R}^{N n_x}\). Furthermore, inequality (25) is strict except for those \(\lambda \) that lie on a ridge, i.e., for which there is a ridge \((\bar{\lambda },\overline{\varDelta \lambda })\) such that \(\lambda = \bar{\lambda } + \gamma \overline{\varDelta \lambda }\) for a \(\gamma \ge 0\). In particular the ridge \((\lambda ^\ddagger , \varDelta \lambda ^\ddagger ) \subseteq A^\ddagger \) is unique up to scaling of \(\varDelta \lambda ^\ddagger \) and translations from the nullspace of \(\mathcal {M}(\lambda ^\ddagger )\).

Proof

Let \(\lambda \in \mathbb {R}^{N n_x}\) and let \((\lambda ^\ddagger , \varDelta \lambda ^\ddagger )\) be a ridge. Due to concavity of \(f^*(\cdot )\) we have \(( \mathcal {G}(\lambda ) - \mathcal {G}(\lambda ^\ddagger ))^\top (\lambda ^\ddagger -\lambda ) \ge 0\). Since the \(\mathcal {G}(\cdot )\) is constant along the ridge, \((\lambda ^\ddagger , \varDelta \lambda ^\ddagger )\) and \(\varDelta \lambda ^\ddagger \) and \(\mathcal {G}(\lambda ^\ddagger )\) only differ by a positve scalar factor, and also

for all \(\gamma > 0\). Since concavity of \(f^*(\cdot )\) clearly also holds on the extended domain \(\overline{\mathbb {R}}^{N n_x}\), where \(\overline{\mathbb {R}} = \mathbb {R}\cup \{ -\infty , +\infty \}\), we have \(( \mathcal {G}(\lambda ) - \mathcal {G}(\lambda ^\ddagger ))^\top \mathcal {G}(\lambda ^\ddagger ) \ge 0\) in the limit for \(\gamma \rightarrow \infty \). This is equivalent to

where the last inequality is the Cauchy-Schwarz inequality. Thus (25) holds.

For the proof of the second part of the Lemma, we note that the Cauchy-Schwarz inequality is strict unless \(G(\lambda )\) is a positive multiple of \(\mathcal {G}(\lambda ^\ddagger )\), so \(\Vert \mathcal {G}(\lambda ) \Vert _2 = \Vert \mathcal {G}(\lambda ^\ddagger ) \Vert _2\) implies \(\mathcal {G}(\lambda ) = \mathcal {G}(\lambda ^\ddagger )\). Let us therefore consider an arbitrary \(\lambda \in \mathbb {R}^{N n_x}\) with \(\mathcal {G}(\lambda ) = \mathcal {G}(\lambda ^\ddagger )\). Due to concavity we have

for \(\beta \in [\,0\,,1\,]\), and analogously

We can multiply (26) by \(\beta \) and (27) by \((1-\beta )\), add both inequalities up and, using \(\mathcal {G}(\lambda ) = \mathcal {G}(\lambda ^\ddagger )\), obtain

By concavity of \(f^*(\cdot )\) also the converse inequality holds and we have

i.e., linearity of \(f^*(\cdot )\) on the interval between \(\lambda \) and \(\lambda ^\ddagger \). Once more since \(\mathcal {G}(\cdot )\) is constant along the ridge \((\lambda ^\ddagger , \varDelta \lambda ^\ddagger )\), (28) holds analogously for all \(\tilde{\lambda }^\ddagger := \lambda ^\ddagger + \gamma \, \varDelta \lambda ^\ddagger \) in place of \(\lambda ^\ddagger \), i.e., \(f^*\) is linear on the interval \([\, \lambda \;,\, \lambda ^\ddagger + \gamma \, \varDelta \lambda ^\ddagger \,]\) for all choices of \(\gamma > 0\). Since the space of linear functions from \(\mathbb {R}^{N n_x}\) to \(\mathbb {R}\) is closed, linearity also holds in the limit for \(\gamma \rightarrow \infty \), which is the half-open interval \(\{ \lambda + \gamma \cdot \varDelta \lambda ^\ddagger ~|~ \gamma \in [\, 0 \;, \infty \,) \}\). Since \(\mathcal {G}(\lambda ) = \mathcal {G}(\lambda ^\ddagger )\) (which itself is a positive multiple of \(\varDelta \lambda ^\ddagger \)) we therefore have that \((\lambda , \mathcal {G}(\lambda ))\) is itself a ridge, which is moreover parallel to \((\lambda ^\ddagger , \varDelta \lambda ^\ddagger )\).

Since \(f^*(\cdot )\) is specifically linear on \([\, \lambda \;,\, \lambda ^\ddagger \,]\), \((\lambda , \mathcal {G}(\lambda ))\) differs from \((\lambda ^\ddagger , \varDelta \lambda ^\ddagger )\) only by a shift that lies in the nullspace of \(\mathcal {M}(\lambda ^\ddagger )\) as we claimed above. \(\square \)

Theorem 16

(Convergence to an infinite ray) If (P) is infeasible, then exactly one of the two following statements is true:

-

1.

\((\text {QP}_k)\) is infeasible for at least one \(k \in \mathcal {S}\);

-

2.

Algorithm 1 converges to an infinite ray (i.e., the distance of the iterates to the half-open set characterized by the ray vanishes) if the regularization parameter \(\delta \) is chosen sufficiently large.

Proof

Let (P) be infeasible. Because of the special time coupling structure of (P) either there exists a minimal infeasible set (a selection of constraints of minimal size that cannot be fulfilled at the same time) that is contained in the set of local stage constraints of one \((\text {QP}_k)\), or all minimal infeasible sets consist of local stage constraints (P3) of several stages \(k_1 < k_2 < \cdots < k_n\) and the corresponding time coupling constraints between \(k_1\) and \(k_n\) (a subset of constraints (P2)). In the former case Statement 1 holds, and infeasibility is detected by the stage QP solver on first execution. In the latter case we have that the partial dual function \(f^*(\lambda )\) exists and can be evaluated. In particular \(f^*(\lambda )\) is unbounded by Lemma 12. In the remainder of the proof we show that in this case Algorithm 1 indeed converges to an infinite ray.

As we have seen in the proof of Theorem 9 the iterates \(\lambda ^i\) of Algorithm 1 defined by Eqs. (5), (6), and (20) induce a monotonically increasing sequence \(\{f^*(\lambda ^i)\}_{i \in \mathbb {N}_0}\). We claim that \(\{f^*(\lambda ^i)\}_{i \in \mathbb {N}_0}\) does not converge if \(f^*(\lambda )\) is unbounded.

Assume to the contrary that \(\{f^*(\lambda ^i)\}_{i \in \mathbb {N}_0}\) converges. Clearly \(\mathcal {G}(\lambda )\) does not vanish since \(\mathcal {G}(\lambda ) = 0\) would imply the existence of a feasible solution of (P) by Remark 5. By Lemma 15 we further know the existence of a

If now \(\{f^*(\lambda ^i)\}_{i \in \mathbb {N}_0}\) converges, clearly the updates \(\alpha ^i \cdot {\tilde{\mathcal {M}}(\lambda ^i)}^{-1} \mathcal {G}(\lambda ^i)\), have to vanish. Since there are only finitely many different \(\tilde{\mathcal {M}}(\cdot ) \succ 0\) and \(\mathcal {G}(\cdot )\) is bounded away from \(0\) by Eq. (29) this implies that

with \(\varDelta \lambda = {\tilde{\mathcal {M}}(\lambda ^i)}^{-1} \mathcal {G}(\lambda ^i)\), cf. Eq. (20), has to drop to \(0\).

At each iteration \(i\) three cases for \(\alpha ^i\) could appear:

-

(i)

\(\alpha ^i = 1\);

-

(ii)

\(\alpha ^i = 0\). We have \(\mathcal {G}(\lambda ^i) \ne 0\) and \(\tilde{\mathcal {M}}(\lambda ^i) \succ 0\), so \({\tilde{\mathcal {M}}(\lambda ^i)}^{-1} \mathcal {G}(\lambda ^i)\) is an ascent direction. By \(\mathrm {C}^1\)-continuity of \(f^*(\cdot )\) an ascent is possible and thus \(\alpha ^i \ne 0 ~~\forall \, i \in \mathbb {N}_0\);

-

(iii)

\(0 < \alpha ^i < 1\). Then by maximality \(\alpha ^i\) fulfills \(\mathcal {G}(\lambda ^i + \alpha ^i \varDelta \lambda )^\top \varDelta \lambda = \mathcal {G}(\lambda ^i + \alpha ^i \varDelta \lambda )^\top {\tilde{\mathcal {M}}(\lambda ^i)}^{-1} \mathcal {G}(\lambda ^i) = 0\). Regard \(\phi (\alpha ) := \mathcal {G}(\lambda ^i + \alpha \varDelta \lambda )^\top {\tilde{\mathcal {M}}(\lambda ^i)}^{-1} \mathcal {G}(\lambda ^i)\) as a function in \(\alpha \). Clearly \(\phi (0) = \mathcal {G}(\lambda ^i)^\top {\tilde{\mathcal {M}}(\lambda ^i)}^{-1} \mathcal {G}(\lambda ^i)\) is bounded away from \(0\), since there are only finitely many different \(\tilde{\mathcal {M}}(\cdot ) \succ 0\) and \(\mathcal {G}(\cdot )\) is bounded away from \(0\) by Eq. (29). Further \(\phi (\alpha )\) is continuous and its derivative \(\phi '(\alpha ) = - {\varDelta \lambda }^\top \mathcal {M}(\lambda ^i + \alpha \varDelta \lambda ) \varDelta \lambda \) is bounded from below (for a fixed \(\varDelta \lambda \)), again since there are only finitely many different \(\tilde{\mathcal {M}}(\cdot )\); note that the ascent direction \(\varDelta \lambda \) cannot grow to infinity for \(i \rightarrow \infty \) since \(f^*(\lambda )\) is concave and \(f^*(\lambda ^i)\) is increasing. Therefore \(\alpha ^i\) has to be bounded away from \(0\) by a problem-data specific constant that is independent from the iteration index \(i\).

Since also \(\alpha ^i\) does not converge to \(0\) we have a contradiction, and our claim that \(\{f^*(\lambda ^i)\}_{i \in \mathbb {N}_0}\) diverges (even monotonically) holds true. In particular every fixed function value \(\bar{f}\) will be exceeded and for every suitably large \(\bar{f}\) the iterates of Algorithm 1 will remain in \(\bar{A}^\mathrm {inf}_\cup \) (as defined in Eq. (24)) after a finite number of iterations.

In the remainder of the proof we first show local and subsequently global attractiveness of a ridge in the sense of Theorem 14.

Let \(A^\ddagger \) denote the region that contains a ridge \((\lambda ^\ddagger , \mathcal {G}(\lambda ^\ddagger ))\). For two subsequent iterates both contained in \(A^\ddagger \) (characterized by a constant Newton matrix \(\mathcal {M}\)) we have the exact linear model

The cone-like quadratic shape of \(A^\ddagger \) (cf. Lemma 13) implies that each subsequent iterate \({\lambda ^{i+1}}\) is contained in \(A^\ddagger \) if \({\lambda ^{i}} \in A^\ddagger \). The linear expansion of the gradient (30), becomes \(\mathcal {G}({\lambda ^{i+1}}) = \left( \mathcal {I}- \mathcal {M}\, {\tilde{\mathcal {M}}}^{-1} \right) \mathcal {G}({\lambda ^i})\). Clearly \(\mathcal {I}- \mathcal {M}\, {\tilde{\mathcal {M}}}^{-1} = \mathcal {I}- \left( {\tilde{\mathcal {M}}} - \delta \,\mathcal {I}\right) \, {\tilde{\mathcal {M}}}^{-1} = \delta \, {\tilde{\mathcal {M}}}^{-1}\) is positive definite. On the other hand \(\mathcal {M}\, {\tilde{\mathcal {M}}}^{-1} = \mathcal {I}- \delta \, {\tilde{\mathcal {M}}}^{-1}\) is positive semidefinite, so \(\Vert \mathcal {G}({\lambda ^{i+1}}) \Vert \le \Vert \mathcal {G}({\lambda ^{i}}) \Vert \).

Whenever \(\mathcal {M}\, {\tilde{\mathcal {M}}}^{-1} \mathcal {G}({\lambda ^{i}}) \ne {0}\) we have \(\delta \, \mathcal {G}({\lambda ^{i}})^\top {\tilde{\mathcal {M}}}^{-1} \mathcal {M}\, {\tilde{\mathcal {M}}}^{-1} \mathcal {G}({\lambda ^{i}}) > 0\) for any \(\delta > 0\). This can be easily verified, e.g., by using the fact that \({0} \preceq \mathcal {M}=: {B} \, {B}^\top \) has a (not necessarily full rank) symmetric factorization. Assuming \(\mathcal {M}\, {\tilde{\mathcal {M}}}^{-1} \mathcal {G}({\lambda ^{i}}) \ne {0}\) it then holdsFootnote 1

This implies that

for all iterates with \(\mathcal {M}\, {\tilde{\mathcal {M}}}^{-1} \mathcal {G}({\lambda ^{i}}) \ne {0}\), and together with (30) we can conclude that the sequence of gradients converges to a limit \({\mathcal {G}^*}\) that fulfills

the ridge property. Recall that by Lemma 15 the ridge is unique.

It remains to show that the iterates \(\lambda ^i\) of Algorithm (1) also converge globally to \(A^\ddagger \). To see this, we consider the auxiliary function

This function is clearly piecewise quadratic and convex, just like \(f^*\), and its second derivative equals the second derivative of \(f^*\), while the first derivative of \(\tilde{f}\) is given by \(\frac{\partial {\tilde{f}}}{\partial {\lambda }} = \mathcal {G}(\lambda )^\top - \mathcal {G}(\lambda ^\ddagger )^\top \). It is furthermore bounded, since due to concavity we have for every \(\lambda \in \mathbb {R}^{N n_x}\)

The iterates \(\lambda ^i\) of Algorithm (1) are increasing in \(\tilde{f}\) if and only if the step directions \({\tilde{\mathcal {M}}(\lambda ^i)}^{-1} \mathcal {G}(\lambda ^i)\) are always ascent directions, i.e., if

holds. We have shown above that \(\alpha ^i\) is bounded away from \(0\) by an iteration-independent constant and therefore we equivalently have the condition

where \(\delta \) is the regularization parameter in Algorithm 1. Since \(\delta \,{\tilde{\mathcal {M}}(\lambda ^i)}^{-1}\) is positive definite, each of its finitely many distinct values \(\delta \,{\tilde{\mathcal {M}}_{(j)}}^{-1}\) defines a scalar product that induces a norm on \(\mathbb {R}^{N n_x}\). Applying the Cauchy-Schwarz inequality to (31) we have that the iterates \(\lambda ^i\) are increasing iff

While \(\lambda ^i\) is not contained in a ridge, we have \(\Vert G(\lambda ^i) \Vert _2 > \Vert G(\lambda ^\ddagger ) \Vert _2\) from Lemma 15; furthermore \(I - \delta \, \tilde{\mathcal {M}}_{(j)}^{-1} = I - \delta \, (\mathcal {M}_{(j)} + \delta I)^{-1}\) vanishes for sufficiently large choices of \(\delta \), fulfilling (32) and thus showing that the iterates \(\lambda ^i\) are also ascending in the auxiliary function \(\tilde{f}\). Since \(\tilde{f}\) is bounded, we can conclude that the region \(A^\ddagger \), containing a ridge, is eventually reached, thus concluding the proof of global convergence. \(\square \)

Theorem 16 can be used algorithmically to detect infeasibility of (P). If any \((\text {QP}_k)\) is infeasible, it will be detected by the stage QP solver (either qpOASES, or during the clipping operation) on the first execution and (P) is then immediately known to be infeasible. Otherwise, in the case of infeasibility through the coupling constraints, we know by Theorem 16 that the iterates of Algorithm 1 will eventually converge to a ridge. Here, we could gradually increase the regularization parameter \(\delta \), if the iterates remain in regions with singular Hessians. A check whether \(\mathcal {G}(\lambda ^i)\) is in the nullspace of \(\mathcal {M}(\lambda ^i)\) (i.e., \(\mathcal {M}(\lambda ^i) \mathcal {G}(\lambda ^i) \approx 0\)) every few (regularized) iterations without an active-set change, combined with a check whether any active-set change occurs at all in the Newton direction will eventually conclude infeasibility as stated by Theorem 16. Note that the check for active-set changes in the Newton direction can cheaply be performed both in qpOASES and the clipping QP solver by simply considering the signs in the ratio test of the active and inactive constraints, cf. Sect. 3.6. Additionally, practical infeasibility can be concluded, when the objective function value exceeds a certain large threshold (caused by an explosion of the norm of the iterates \(\lambda ^i\)).

Remark 13

In practice, the dual iterates \(\lambda ^i\) in Algorithm 1 were indeed always observed to grow very fast and reach a ridge quickly in infeasible problems because of the initially small regularization.

We note that the theoretical result of Theorem 16 is somewhat unsatisfactory, since it requires a modification of the regularization parameter \(\delta \) (or even to only do gradient steps in regions with a singular Hessian). We initially aimed at proving Theorem 16 for generic regularized Newton steps, i.e., independent of \(\delta \). Despite some considerable effort, we could not derive a formal argument that links condition (32) with Lemma 15, since the ordering relations in the Euclidian norm on the one hand and the norm induced by \(\delta \,{\tilde{\mathcal {M}}}_{(j)}^{-1}\) on the other thand might be different. Still, we are confident that also regularized Newton updates in general, independent of the choice of \(\delta \), are increasing in \(\tilde{f}\) on a global scale (though not necessarily monotonically), and thus formulate the following conjecture.

Conjecture 17

Theorem 16 holds independently for all choices of the regularization parameter \(\delta > 0\).

6 Concurrency in the dual Newton strategy

One important advantage of the Dual Newton Strategy is that, as opposed to conventional active-set or interior-point methods, it is an easily parallelizable algorithm. Analyzing Algorithm 1 in this respect, we observe that all stage QPs can be solved concurrently in \(N+1\) threads in Step 2. Next, each block of the dual gradient \(\mathcal {G}(\lambda )\) only depends on the solution of two neighboring stage QPs (cf. Eq. 10), and therefore the setup can be done concurrently in \(N\) threads (Step 3). Also in the setup of the symmetric Newton matrix \(M\), Step 6, each diagonal block only depends on the solution of two adjacent stage QP solutions, while each off-diagonal block only depends on the solution of one stage QP; therefore the workload of the setup of \(M\) can be distributed on \(N\) threads almost equally. During the line search procedure in Step 8 of Algorithm 1, the expensive steps in each iteration consist of solving all stage QPs for the new step size guess and computing the corresponding gradient, both of which can be spread over \(N+1\) threads with almost equal workload.

Therefore, so far all steps of significant workload can be run fully in parallel in each major iteration of Algorithm 1, except for the solution of the structured linear system, Step 7. In a sequential implementation, solving the system based on a structure-exploiting reverse Cholesky factorization as proposed in Sect. 3.5 seems most efficient. This algorithm, however, is completely serial on the higher level and therefore cannot be expected to scale well with the number of available computing threads on modern and forthcoming multi-core CPU architectures.

We therefore propose a parallelizable solution algorithm for the structured Newton system in Sect. 6.1, as an alternative to Algorithm 2. The overall cost of this algorithm is roughly twice as high in number of FLOPs as Algorithm 2, but it comes at a parallel complexity of only \(\mathcal {O}(\log N)\) on \(N\) threads; therefore, the overall time complexity of one iteration of the dual Newton strategy comes down to only \(\mathcal {O}(\log N)\) FLOPs, compared to \(\mathcal {O}(N^3)\) in active-set methods, and \(\mathcal {O}(N)\) in common tailored interior-point method.

6.1 A parallel solution algorithm for the Newton system

The core idea behind the algorithm proposed in this section is to exploit the block-tridiagonal structure of the Newton matrix \(\mathcal {M}\) in Eq. (6) in a cyclic reduction strategy. We note that a similar and to some extent more general algorithm has been proposed in [44] for the parallel solution of a linear system of similar structure, that originated form a related but slightly different problem. The work [44] contains a deeper analysis of the applicability and extensions of the cyclic reduction strategy, as well as extensive numerical comparisons. In the following we only present the central algorithmic idea in our context and refer to [44] for complementary reading. Note that in contrast to the setting of [44], we have general applicability, since \(\mathcal {M}\) is positive definite and therefore the diagonal minors are invertible.

We consider the Newton system given by

where \(\lambda _i \in \mathbb {R}^{n_x}, ~ i \in \mathcal {S}_0\) denote the block components of \(\lambda \) defined in Eq. (3). We assume the Newton matrix \(\mathcal {M}\) strictly positive definite (otherwise we regularize). We can use the 2nd, 4th,\(\dots \) equation of (33) to eliminate \(\lambda _2, \lambda _4,\ldots \) concurrently from (33). This yields a reduced-size system of equations, which is again block-tridiagonal,

with block components given by

where \(i- = i-1\), and \(i+ = i+1\). Applying this reduction step recursively, we obtain a system

after \(\Theta (\log N)\) iterations, from which we can eliminate \(\lambda _N\), yielding

a dense system of size \(n_x \times n_x\). This system can efficiently be solved by a direct Cholesky decomposition, followed by two backsolve steps.

With \(\lambda _1\) we can recover \(\lambda _N\) from

and in general, we can recover \(\lambda _i\) from \(\lambda _{i-}\) and \(\lambda _{i+}\) by

concurrently in reverse level order of the previous elimination procedure. Here \(\lambda _{i-}\) and \(\lambda _{i+}\) denote the \(\lambda \)-blocks preceding and succeeding \(\lambda _i\) in the system of equations remaining in the reduction step that eliminated \(\lambda _i\).

The complete solution algorithm can be summarized as follows:

Remark 14

Obviously the products of the block matrices \(U_{\bullet }\) and \(D_{\bullet }^{-1}\) in Equations (34) and (35) are most efficiently computed by a backsolve with a Cholesky factor of the diagonal blocks of the reduced size system, \(D_{\bullet }\). If the system of Eq. (33) is linearly dependent, i.e., if \(\mathcal {M}\) is rank deficient, the Cholesky factorization of one of these blocks will fail (otherwise, i.e., if all \(D_{\bullet }\) have full rank (35–37) would constitute an linear injective mapping \(\lambda \rightarrow \mathcal {G}\)), and we can restart the factorization with a regularized \(\mathcal {M}\), analogously to Sect. 3.4.

Remark 15

We note that Algorithm 3 can also be employed in the factorization step of tailored interior-point methods, thus obtaining also \(\mathcal {O} ( \log N )\) parallel time complexity versions of this class of methods. This has been established in [29] for a structure-exploiting variant of Mehrotra’s predictor-corrector scheme [33].

7 Open-source software implementation