Abstract

In this paper, we present a cooperative primal-dual method to solve the uncapacitated facility location problem exactly. It consists of a primal process, which performs a variation of a known and effective tabu search procedure, and a dual process, which performs a lagrangian branch-and-bound search. Both processes cooperate by exchanging information which helps them find the optimal solution. Further contributions include new techniques for improving the evaluation of the branch-and-bound nodes: decision-variable bound tightening rules applied at each node, and a subgradient caching strategy to improve the bundle method applied at each node.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

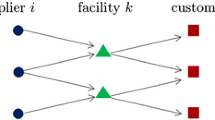

The uncapacitated facility location problem is a well-known combinatorial optimization problem, also known as the warehouse location problem and as the simple plant location problem. The problem consists in choosing among \(n\) locations where plants can be built to service \(m\) customers. Building a plant at a location \(i \in \{1,\ldots ,n\}\) incurs a fixed opening cost \(\varvec{\mathbf {c}}_i\), and servicing a customer \(j \in \{1,\ldots ,m\}\) from this location incurs a service cost \(\varvec{\mathbf {s}}_{ij}\). The objective is to minimize the total cost:

Note that all solutions in \(\{0,1\}^n\) are feasible except for \(\varvec{\mathbf {0}}\). The problem can be formulated as a 0–1 mixed integer programming problem. This requires the introduction of a vector of auxiliary variables \(\varvec{\mathbf {y}} \in [0,1]^{nm}\) in which each component \(\varvec{\mathbf {y}}_{ij}\) represents the servicing of customer \(j\) by location \(i\). The following model is the so-called ‘strong’ formulation, denoted by \((P)\):

Note that this model has at least one optimal solution in which \(\varvec{\mathbf {y}}\) is integer. Note also that vectors are represented by symbols in boldface font while scalars are in normal font, a convention which we shall adhere to throughout this paper.

Many different approaches to solving the UFLP have been studied over the years to the extent that a comprehensive overview of the literature falls beyond the scope of this paper. We refer the reader to [9, 24, 26] for relevant survey articles. More recent publications include approximation algorithms [7, 12, 29, 30], lagrangian relaxation[2, 27], metaheuristics [10, 14, 18, 19, 21, 22, 25, 31, 35, 36], etc.

Most recent methods for solving the UFLP exactly are centered on the dual ascent methods proposed by Erlenkotter [11] and by Bilde and Krarup [5]. The DualLoc method of Erlenkotter, subsequently improved by Körkel [23] and recently by Letchford and Miller [28], is a branch-and-bound method in which a lower bound at each node is evaluated by a dual ascent heuristic. Being a linear program, the dual can also be solved by general-purpose solvers such as CPLEX or Gurobi, or by other specialized methods [8]. It seems however that dual ascent heuristics have been preferred, possibly because the exact resolution of such (often large) linear programs was not found to be cost-effective from a computational point of view. A study of the optimality gap of the dual ascent heuristic can be found in [32].

A different method which was proposed recently for solving the UFLP exactly is the semi-lagrangian approach of Beltran-Royo et al. [4], originally developed for solving the \(k\)-median problem [3]. Other methods include the primal-dual method proposed by Galvão and Raggi [13], which combines a primal procedure to find a good solution and a dual procedure to close the optimality gap. The heuristic proposed by Hansen et al. [19] can be adapted to solve the UFLP exactly. On a related note, Goldengorin et al. [16, 17] propose some preprocessing rules to improve branch-and-bound search methods applied to the UFLP. These rules analyze the relationships between the opening costs \(\varvec{\mathbf {c}}\) and the service costs \(\varvec{\mathbf {s}}\) to try to determine the optimal state of each location. However, many of the more difficult instances in the UflLib collection are purposely designed to have a contrived cost structure on which few deductions can be made. General purpose MIP solvers such as CPLEX or Gurobi struggle to solve these instances, in many cases spending a very long time just to evaluate the root node. Indeed, the constraints (2) make the linear relaxation of \((P)\) difficult to solve using simplex algorithms because of degeneracy issues. There are also \(mn\) of them, and for many instances in UflLib we have \(n = m = 1000\). It is possible to aggregate these constraints into \(\sum _{j=1}^m\varvec{\mathbf {y}}_{ij} \le m \varvec{\mathbf {x}}_i\) for all \(i \in \{1,\ldots ,n\}\), but the bound induced by the corresponding linear relaxation is too weak to be of any practical use, even when strengthened with cuts.

We propose a new method for solving \((P)\) exactly, in which a metaheuristic and a branch-and-bound procedure cooperate to reduce the optimality gap. In this respect, this is somewhat similar to the work of Galvão and Raggi [13], however our method is cooperative: the metaheuristic and the branch-and-bound procedure exchange information such that they perform better than they would in isolation. Information is exchanged by message-passing between two processes. Each message has a sender, a receiver, a send date, a type and maybe some additional data. Each process sends and receives messages asynchronously at certain specific moments during their execution, and if at any of these moments there are several incoming messages of the same type, then all but the most recent are discarded. Similar approaches have been proposed before, a recent example is the work of Muter et al. [33] on solving the set-covering problem, but to our knowledge this paper introduces the first cooperative approach specifically for solving the UFLP exactly.

Section 2 describes the primal process, which improves an upper bound by searching for solutions through a metaheuristic. Section 3 describes the dual process, which improves a lower bound by enumerating a branch-and-bound search tree, using lower bounds derived from the lagrangian relaxation obtained by dualizing constraints (1). Contrary to most work in the literature which use dual-ascent heuristics (e.g., DualLoc), we use a bundle method to optimize the corresponding lagrangian dual. Additional contributions of this paper include new strategies for partitioning \((P)\), as well as a subgradient caching technique to improve the performance of bundle method in the context of our branch-and-bound procedure.

Section 4 concludes this paper by presenting our computational results. Our method is very effective on almost all UFLP instances in UflLib, and solved many to optimality for the first time. These new optimal values can be found in the appendix, along with several implementation details which do not enrich this paper.

2 Primal process

The purpose of the primal process is to find good solutions for \((P)\). Specifically, we use a variant of the tabu search procedure proposed by Michel and Van Hentenryck [31] which we shall now briefly describe. This local search method explores the 1OPT neighborhood of a given solution \(\check{\varvec{\mathbf {x}}}\in \{0,1\}^n\). This neighborhood is defined as the set of solutions obtained by flipping one component of \(\check{\varvec{\mathbf {x}}}\): either opening a closed location, or closing an open location.

We let \(\check{\varvec{\mathbf {x}}}^i\) denote the neighbor of \(\check{\varvec{\mathbf {x}}}\) obtained by flipping its \(i\)-th component. For all components \(i \in \{1,\ldots ,n\}\), we let \(\varvec{\mathbf {\delta }}_i\) denote the transition cost \(f(\check{\varvec{\mathbf {x}}}^i) - f(\check{\varvec{\mathbf {x}}})\) associated with moving from \(\check{\varvec{\mathbf {x}}}\) to \(\check{\varvec{\mathbf {x}}}^i\). Michel and Van Hentenryck [31] show how to update the vector of transition costs \(\varvec{\mathbf {\delta }}\) in \(O(m \log n)\) time after each move in the 1OPT neighborhood (see also Sun [36]). They argue that this efficient evaluation of the neighborhood is the main reason why their local search scheme performs as well as it does. The basic idea is to maintain t he open locations in a heap for each customer \(j \in \{1,\ldots ,m\}\), ordered in increasing supply costs \(\varvec{\mathbf {s}}_{ij}\): when opening a closed location add this location inside each heap, and when closing an open location remove this location from each heap.

Our tabu search procedure is a variant of that presented in [31]. Our main modification is that it allows some solution components to be fixed to their current values by having a tabu status with infinite tenure. Tabu status is stored in an array \(\varvec{\mathbf {\tau }}\) of dimension \(n\), where each component can take any value in \(\mathbb {N} \cup \{\infty \}\) specifying the iteration at which the tabu status expires.

At each iteration, the search performs a 1OPT-move on a location \(w \in \{1,\ldots ,n\}\) selected as follows. The location \(w\) is the one decreasing the objective function the most. Furthermore the move should not be tabu or it should satisfy the aspiration criterion. If no such move exists, then we perform a diversification by selecting \(w\) at random among all non-tabu locations. If none of these exist, then we select \(w\) at random among all non-fixed locations. If none of these exist either, then there is nothing left to do and we end the search.

We modify the tabu status \(\varvec{\mathbf {\tau }}_w\) according to the current tenure variable tenure, which indicates the number of subsequent iterations during which \(\check{\varvec{\mathbf {x}}}_w\) cannot change. The value of tenure, initially set to 10, remains within the interval \([2, 10]\). During the search it is decreased by 1 following each improving move (if tenure \(>2\)) and it is increased by 1 following each non-improving move (if tenure \(<10\)).

Before performing the next iteration, the primal process performs its communication duties, dealing with incoming messages and sending outgoing messages to the dual process. Although this tabu search procedure works well enough for finding good solutions [31] on its own, communication with the dual process nonetheless allows us (among other things) to fix some solution components to their optimal values, thereby narrowing the search space. The following list specifies the kinds of messages our primal process sends and receives:

-

Outgoing:

-

send the incumbent solution \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\),

-

send a request for a guiding solution.

-

-

Incoming:

-

receive a solution \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\),

-

receive an improving partial solution \(\bar{\varvec{\mathbf {p}}}\),

-

receive a guiding solution \(\underline{\varvec{\mathbf {x}}}\).

-

Let \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\) be the best solution known by the primal process. Whenever \(\check{\varvec{\mathbf {x}}}\) is such that \(f(\check{\varvec{\mathbf {x}}}) < f(\bar{\varvec{\mathbf {x}}}^\mathtt{primal })\), the primal process sets \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\) to \(\check{\varvec{\mathbf {x}}}\) and sends it to the dual process as soon as possible. The dual process likewise maintains its own incumbent solution \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\), which like \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\) is a 0-1 \(n\)-vector. As we shall see further on, the dual process may improve \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) through its own efforts. In this case, the dual process sends \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) to the primal process, which will update \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\) if (as is likely) it is improved upon.

A guiding solution \(\underline{\varvec{\mathbf {x}}}\in \{0,1\}^n\) is used to perturb \(\varvec{\mathbf {\tau }}\) such that a move involving any location \(i \in \{1,\ldots ,n\}\) such that \(\check{\varvec{\mathbf {x}}}_i = \underline{\varvec{\mathbf {x}}}_i\) becomes tabu. As a consequence, \(\check{\varvec{\mathbf {x}}}\) moves closer to \(\underline{\varvec{\mathbf {x}}}\) during the next iterations. This is similar in spirit to path-relinking [15].

An improving partial solution \(\bar{\varvec{\mathbf {p}}}\in \{0,1,*\}^n\) defines a solution subspace which is known to contain all solutions cheaper than \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\), and hence \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\) upon synchronization. In other words, given any solution \(\varvec{\mathbf {x}} \in \{0,1\}^n\), if there exists \(i \in \{1,\ldots ,n\}\) for which \(\bar{\varvec{\mathbf {p}}}_i \in \{0,1\}\) and such that \(\varvec{\mathbf {x}}_i \ne \bar{\varvec{\mathbf {p}}}_i\), then we know that \(f(\varvec{\mathbf {x}}) \ge f(\bar{\varvec{\mathbf {x}}}^\mathtt{primal })\). We shall see further down how the dual process generates \(\bar{\varvec{\mathbf {p}}}\) at any given time by identifying common elements in all the remaining unfathomed leaves of the branch-and-bound search tree. In any case, having such a partial solution allows us to restrict the search space by fixing the corresponding components of \(\check{\varvec{\mathbf {x}}}\), and consequently this speeds up the tabu search procedure.

Algorithm 1 summarizes our primal process. In the following section, we present our dual process.

3 Dual process

Our dual process computes and improves a lower bound for \((P)\) by enumerating a lagrangian branch-and-bound search tree. In other words, the problem \((P)\) is recursively partitioned into subproblems, and for each of these subproblems we compute a lower bound by optimizing a lagrangian dual. Recall that a lagrangian dual function maps a vector of lagrangian multipliers to the optimal value of the lagrangian relaxation parameterized by these multipliers.

This section is organized into subsections as follows. In Sect. 3.1 we specify the subproblems into which we recursively partition \((P)\) and we explain how we compute a lower bound for them, given any vector of multipliers. In Sect. 3.2 we present our branch-and-bound procedure and the dual process. In Sect. 3.4 we explain how we search for a vector of multipliers inducing a good lower bound for a given node.

3.1 Subproblems and lagrangian relaxation

In general, a branch-and-bound method consists in expanding a search tree by separating open leaf nodes into subnodes. This is typically done by selecting a particular 0–1 variable which is unfixed in the open node, then fixing it to 0 in one subnode and to 1 in the other. In our method we apply this to the location variables \(\varvec{\mathbf {x}}_i\), however we also separate on the number of open locations, i.e. by limiting the value of \(\sum _{i=1}^n\varvec{\mathbf {x}}_i\) within an interval \([\underline{n}, \overline{n}]\), with \(1 \le \underline{n}\le \overline{n}\le n\). For this reason, we shall consider subproblems of \((P)\) defined by the following parameters:

-

a partial solution vector \(\varvec{\mathbf {p}} \in \{0,1,*\}^n\) specifying some locations which are forced to be open or closed,

-

two integers \(\underline{n}\) and \(\overline{n}\) specifying the minimum and maximum number of open locations.

We let \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) denote the subproblem formulated as follows:

We compute lower bounds for such subproblems using the lagrangian relaxation obtained by dualizing the \(m\) constraints (1). We let \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) denote the lagrangian relaxation of \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) using the vector of multipliers \(\varvec{\mathbf {\mu }}\in \mathbb {R}^m\), and let \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) denote the lagrangian dual at \(\varvec{\mathbf {\mu }}\):

To eliminate the decision variables \(\varvec{\mathbf {y}}\) from the formulation, we introduce a vector of reduced costs \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\) defined as follows for all \(i \in \{1,\ldots ,n\}\):

This yields the following formulation for \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\):

If \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) has no feasible solution, then for the sake of consistency we let \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}}) = \infty \).

Since \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) is a lower bound on the optimal value of \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) for all \(\varvec{\mathbf {\mu }}\in \mathbb {R}^m\), we are interested in searching for

There exist several methods for this purpose, we shall discuss this matter further in Sect. 3.4. We now show how to efficiently evaluate \(\phi \).

Evaluating a lagrangian dual function \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\) for a particular vector of multipliers \(\varvec{\mathbf {\mu }}\in \mathbb {R}^m\) consists in searching for an optimal solution \(\underline{\varvec{\mathbf {x}}}\) of the corresponding lagrangian relaxation \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\). We do this in three stages:

-

1.

we compute the reduced costs \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\);

-

2.

we try to generate an optimal partition \(\varPi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}}) = (F^1, L^1, L^*, L^0, F^0)\) of the set of locations, i.e. \(F^1\cup L^1\cup L^*\cup L^0\cup F^0= \{1,\ldots ,n\}\);

-

3.

if successful, we generate \(\underline{\varvec{\mathbf {x}}}\) using \(\varPi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) and \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\).

We generate the location sets \(F^1\), \(L^1\), \(L^*\), \(L^0\) and \(F^0\) as follows. The set \(F^1\) contains the locations fixed open, i.e. \(F^1\) is the set of all locations \(i \in \{1,\ldots ,n\}\) for which \(\varvec{\mathbf {p}}_i = 1\). Likewise, \(F^0\) contains the locations fixed closed. Now consider constraint (5), and notice that it cannot be satisfied if \(|F^1| > \overline{n}\) or if \(n - |F^0| < \underline{n}\). In this case \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) is infeasible, we fail to generate the partition \(\varPi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\), and \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}}) = \infty \). Otherwise, note that if \(|F^1| < \underline{n}\), then in order to satisfy constraint (5) at least \(\underline{n}- |F^1|\) additional unfixed locations must be open. Let \(L^1\) be this set, the optimal decision is for \(L^1\) to contain the unfixed locations which are the least expensive in terms of \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\). Likewise, if \(n - |F^0| < \overline{n}\), then at least \(\overline{n}- n + |F^0|\) unfixed locations must be closed. Let \(L^0\) be this set, the optimal decision is for \(L^0\) to contain the unfixed locations which are the most expensive in terms of \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\). Let \(L^*\) be the set of locations in \(\{1,\ldots ,n\}\) not in \(F^1\), \(L^1\), \(L^0\) or \(F^0\). The optimal decision for each location \(i \in L^*\) is to be open or closed if \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}_i\) is negative or positive, respectively.

The optimal value of a lagrangian relaxation \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) which is feasible, i.e. if and only if \(\underline{n}\le n - |F^0|\) and \(|F^1| \le \overline{n}\), is

We can use the reduced costs and the optimal partition to identify an optimal solution \(\underline{\varvec{\mathbf {x}}}\):

Proposition 1

The lagrangian relaxation \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) can be solved in \(O(mn)\) time.

Proof

To begin with, the computation of the reduced costs \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\) requires \(O(mn)\) time, and the generation of the sets \(F^0\) and \(F^1\) requires \(O(n)\) time. Next, the generation of \(L^1\) requires us to select a certain number of the cheapest locations in \(\{1,\ldots ,n\}{\setminus }(F^0\cup F^1)\). This can be done using an appropriate selection algorithm such as quickselect which requires \(O(n)\) time. We generate \(L^0\) in a similar fashion, and generate \(L^*\) in \(O(n)\) time also. Finally, we generate \(\underline{\varvec{\mathbf {x}}}\) simply by enumerating the elements in these sets and hence the whole resolution is done in \(O(mn)\) time. \(\square \)

We now use the concepts introduced in this subsection to present our dual process.

3.2 Branch-and-bound procedure and dual process

Throughout the search, the dual process maintains a incumbent solution \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) as well as a global upper bound \(\bar{z}^\mathtt{dual }= f(\bar{\varvec{\mathbf {x}}}^\mathtt{dual })\). Initially, \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) is undefined and \(\bar{z}^\mathtt{dual }\) is set to \(\infty \). The dual process then performs a preprocessing phase before solving the problem \((P)\) by branch-and-bound. The preprocessing phase consists in computing, for all ranks \(k \in \{1,\ldots ,n\}\), a lower bound \(\varvec{\mathbf {\ell }}_k\) on the cost of any solution of \((P)\) with \(k\) open locations:

These lower bounds are subsequently used in the following manner during the resolution of \((P)\). Note that

During the resolution of \((P)\) we therefore discard all solutions whose number of open locations is not in the interval

In practice, given good enough bounds \(\varvec{\mathbf {\ell }}\) and \(\bar{z}^\mathtt{dual }\), this interval is small enough to justify the extra effort required for computing \(\varvec{\mathbf {\ell }}\).

Before giving an overview of the dual process, we begin this subsection by specifying our Branch-and-bound procedure. We shall see that it is applied both to compute \(\varvec{\mathbf {\ell }}\) during the preprocessing phase as well as to solve \((P)\) afterwards.

3.2.1 Branch-and-bound

The Branch-and-bound procedure described here performs a lagrangian branch-and-bound search on \((P)\), updating \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) and \(\bar{z}^\mathtt{dual }\) whenever it finds a cheaper solution. At all times, our Branch-and-bound procedure maintains an open node queue \(\mathcal {Q}\) storing all the open leaf nodes of the search tree. Any node of the search tree is represented by a 4-tuple \((\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}})\): the subproblem corresponding to this node is \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) and a lower bound for this node is \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}})\). Let \({\varvec{\mathbf {*}}}\) be the \(n\)-dimensional vector of \(*\)-components. We compute

using a bundle method as explained in Sect. 3.4, and initialize the open node queue \(\mathcal {Q}\) with \(({\varvec{\mathbf {*}}},1,n,\bar{\varvec{\mathbf {\mu }}}^{root})\). This 4-tuple corresponds to the root node of the search tree. The Branch-and-bound procedure then iteratively expands the search tree until all leaf nodes have been closed, i.e. until \(\mathcal {Q}= \emptyset \).

At the beginning of each iteration of Branch-and-bound, we select a node \((\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}}) \in \mathcal {Q}\) according to a predefined strategy NodeSelect. For example, our implementation applies one of the following two node selection strategies:

-

LFS, which selects the node with the lowest lower bound in \(\mathcal {Q}\) (lowest-first search, also known as best-first search),

-

DFS, which selects the node most recently added into \(\mathcal {Q}\) (depth-first search).

Note that since LFS always selects the node with the smallest lower bound, i.e. \((\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}}) \in \mathcal {Q}\) which minimizes \(\phi \), this lower bound is then also a global lower bound for \((P)\).

We then improve this lower bound by updating \(\bar{\varvec{\mathbf {\mu }}}\) with a bundle method such that

In the case where the lower bound does not exceed the upper bound \(\bar{z}^\mathtt{dual }\), we try to separate this node into subnodes by applying a given branching procedure Branch. We define a branching procedure Branch as a procedure which, when applied to a 4-tuple \((\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}})\) returns either nothing or two 3-tuples \((\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'})\) and \((\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''})\) which satisfy the following property: for all solutions \(\varvec{\mathbf {x}}\) feasible for \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) and cheaper than \(\bar{z}^\mathtt{dual }\), \(\varvec{\mathbf {x}}\) must be feasible either for \(SP(\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'})\) or for \(SP(\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''})\). If \(\phi (\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'},{\bar{\varvec{\mathbf {\mu }}}}) < \bar{z}^\mathtt{dual }\), then we insert the subnode \((\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'},{\bar{\varvec{\mathbf {\mu }}}})\) into the open node queue \(\mathcal {Q}\), and likewise for \((\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\bar{\varvec{\mathbf {\mu }}}})\).

The final step of our Branch-and-bound iteration consists in communicating with the primal process and possibly replacing \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) to decrease \(\bar{z}^\mathtt{dual }= f(\bar{\varvec{\mathbf {x}}}^\mathtt{dual })\). Let us list all types of messages which our dual process may send and receive (mirroring the list in Sect. 2) before discussing how to handle them:

-

incoming:

-

receive a solution \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\),

-

receive a request for a guiding solution.

-

-

outgoing:

-

send the solution \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\),

-

send the improving partial solution \(\bar{\varvec{\mathbf {p}}}\),

-

send the guiding solution \(\underline{\varvec{\mathbf {x}}}\).

-

The first step is to try replacing \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) by a cheaper solution. Consider \(\underline{\varvec{\mathbf {x}}}\), the optimal solution of \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}})\): if \(f(\underline{\varvec{\mathbf {x}}}) < \bar{z}^\mathtt{dual }\), then we replace \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) with \(\underline{\varvec{\mathbf {x}}}\) and \(\bar{z}^\mathtt{dual }\) with \(f(\underline{\varvec{\mathbf {x}}})\). Likewise, if we have received a solution \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\) from the primal process and if \(f(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }) < \bar{z}^\mathtt{dual }\), then we replace \(\bar{\varvec{\mathbf {x}}}^\mathtt{dual }\) with \(\bar{\varvec{\mathbf {x}}}^\mathtt{primal }\) and \(\bar{z}^\mathtt{dual }\) with \(f(\bar{\varvec{\mathbf {x}}}^\mathtt{primal })\). Note that an improved upper bound \(\bar{z}^\mathtt{dual }\) may allow us to fathom some open nodes in \(\mathcal {Q}\).

Next, we occasionally update the improving partial solution \(\bar{\varvec{\mathbf {p}}}\) and send it to the primal process if it has changed. The improving partial solution (a notion already introduced in Sect. 2) is a partial solution \(\bar{\varvec{\mathbf {p}}}\in \{0,1,*\}^n\) which satisfies the following for all \(i \in \{1,\ldots ,n\}\):

Since the effort required to compute an improving partial solution \(\bar{\varvec{\mathbf {p}}}\) with a minimum number of \(*\)-components scales with the size of \(\mathcal {Q}\), the frequency of the updates of \(\bar{\varvec{\mathbf {p}}}\) must not be too high (this is set by a predefined parameter).

Finally, if a request for a guiding solution was received from the primal process, then we send \(\underline{\varvec{\mathbf {x}}}\) as a guiding solution. If the open node queue \(\mathcal {Q}\) is now empty, then we end the search, otherwise we perform the next iteration of the Branch-and-bound procedure.

We specify the whole Branch-and-bound procedure in Algorithm 2. The procedure takes two callback procedures as arguments: NodeSelect which selects a node in the open node queue, and a branching procedure Branch.

3.2.2 Dual process overview

At the beginning of this subsection we mentioned that the dual process begins by performing a preprocessing phase to generate a vector \(\varvec{\mathbf {\ell }}\in \mathbb {R}^n\) which satisfies

For this purpose, we initialize \(\varvec{\mathbf {\ell }}_k \leftarrow \infty \) for all \(k \in \{1,\ldots ,n\}\), and apply Branch-and-bound using an appropriate branching procedure which will dictate how the nodes are subdivided in step 3b of Algorithm 2. This particular branching procedure is denoted by BrP and is specified recursively in Algorithm 3. Its input is a 4-tuple \((\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}})\) specifying a node to be split, and its final output is either nothing or two 3-tuples specifying the subnodes. By defining this branching procedure recursively, we may effectively tighten the constraint (5) in each of the two resulting subproblems, which we hope improves the search overall. Since this procedure branches on the number of open locations \(\sum _{i=1}^n\varvec{\mathbf {x}}_i\), by applying it we separate \((P)\) into subproblems \(SP({\varvec{\mathbf {*}}},\underline{n},\overline{n})\) with \(1 \le \underline{n}\le \overline{n}\le n\).

Note that this branching procedure requires several evaluations of \(\phi \). In general this is done in \(O(mn)\) time, however we shall see in Sect. 3.3 that it is possible to compute \(\varPi (\varvec{\mathbf {p}},\underline{n}+1,\overline{n},\bar{\varvec{\mathbf {\mu }}})\) and \(\phi (\varvec{\mathbf {p}},\underline{n}+1,\overline{n},\bar{\varvec{\mathbf {\mu }}})\) in \(O(n)\) time by reusing \(\bar{\varvec{\mathbf {c}}}^{\bar{\varvec{\mathbf {\mu }}}}\), \(\varPi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}})\) and \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\bar{\varvec{\mathbf {\mu }}}})\). The same can also be done for \((\varvec{\mathbf {p}},\underline{n},\overline{n}-1,\bar{\varvec{\mathbf {\mu }}})\). When Branch-and-bound terminates, we set \(\varvec{\mathbf {\ell }}_k \leftarrow \bar{z}^\mathtt{dual }\) for all \(k \in \{1,\ldots ,n\}\) for which \(\varvec{\mathbf {\ell }}_k = \infty \). In this manner, for all \(k \in \{1,\ldots ,n\}\), \(\varvec{\mathbf {\ell }}_k\) is a lower bound on the solutions of \((P)\) with \(k\) open locations, i.e. on the solutions of \(SP({\varvec{\mathbf {*}}},k,k)\).

Having completed preprocessing, we solve \((P)\) by applying Branch-and-bound using another recursive branching procedure BrS, which we specify in Algorithm 4. In this case, recursion allows us to tighten constraint (4) as well as (5) in the resulting subproblems. For all \(\varvec{\mathbf {p}} \in \{0,1,*\}^n\) and for all \(k \in \{1,\ldots ,n\}\), we define \(\varvec{\mathbf {p}}^{+k}\) and \(\varvec{\mathbf {p}}^{-k}\) as follows. For all \(i \in \{1,\ldots ,n\}\),

In a sense, for all \(i \in \{1,\ldots ,n\}\) such that \(p_i = *\), the value \(|\bar{\varvec{\mathbf {c}}}^{\bar{\varvec{\mathbf {\mu }}}}_i|\) is a measure of the impact of the decision to branch on the unfixed location \(i\), and BrS chooses to branch on the unfixed location with the smallest impact. Like BrS, this procedure is recursive and seemingly requires many \(O(mn)\) evaluations of \(\phi \) which in fact can be performed in \(O(n)\). We explain how in the following subsection.

3.3 Reoptimization

Given any vector \(\varvec{\mathbf {\mu }}\in \mathbb {R}^m\), suppose that we have evaluated \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) and that this value is not \(\infty \). We thus also have the reduced costs \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\) as well as an optimal partition \(\varPi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}}) = (F^1, L^1, L^*, L^0, F^0)\). The following proposition and its corollary specify how to reuse these to compute \(\phi (\varvec{\mathbf {p}},\underline{n}+k,\overline{n},\varvec{\mathbf {\mu }})\) and \(\phi (\varvec{\mathbf {p}},\underline{n},\overline{n}-k,\varvec{\mathbf {\mu }})\) in \(O(n)\) time, for all \(k > 0\). Without loss of generality, suppose that the values in \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\) are all different.

Proposition 2

For all \(k > 0\), if \(\underline{n}+ k > \min \{\overline{n}, n - |F^0|\}\) then

else if \(\underline{n}+ k \le |F^1|\) then

else, let \(\tilde{L}\) be the set of the \(k\) least expensive locations in \(L^*\) in terms of \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\), we have

Proof

If \(\underline{n}+ k > \overline{n}\), then by definition of \(SP(\varvec{\mathbf {p}},\underline{n}+k,\overline{n})\), this subproblem is infeasible, hence \(\phi (\varvec{\mathbf {p}},\underline{n}+k,\overline{n},\varvec{\mathbf {\mu }}) = \infty \). Likewise, if \(\underline{n}+ k > n - |F^0|\), then there exists no \(\varvec{\mathbf {x}} \in \{0,1\}^n\) which can satisfy both \(\underline{n}+ k \le \sum _{i=1}^n\varvec{\mathbf {x}}_i\) and \(\varvec{\mathbf {x}}_i = 0\) for all \(i \in \{1,\ldots ,n\}\) for which \(\varvec{\mathbf {p}}_i = 0\), i.e. for all \(i \in F^0\).

Suppose henceforth that \(\underline{n}+ k \le \min \{\overline{n}, n - |F^0|\}\), and let \(\varPi (\varvec{\mathbf {p}},\underline{n}+k,\overline{n},\varvec{\mathbf {\mu }}) = (F'^1, L'^1, L'^*, L'^0, F'^0)\). We know that \(F'^1= F^1\) and \(F'^0= F^0\) because both these sets are induced solely by \(\varvec{\mathbf {p}}\).

If \(\underline{n}+ k \le |F^1|\), then all \(\varvec{\mathbf {x}} \in \{0,1\}^n\) which satisfy \(\varvec{\mathbf {x}}_i = 1\) for all \(i \in F^1\) also satisfy \(\underline{n}+ k \le \sum _{i=1}^n\varvec{\mathbf {x}}_i\), consequently \(L^1\) and \(L'^1\) are empty by definition of these sets. Likewise, \(L'^0= L^0\) and \(L'^*= L^*\), hence \(\varPi (\varvec{\mathbf {p}},\underline{n}+ k,\overline{n},\varvec{\mathbf {\mu }}) = \varPi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\) and \(\phi (\varvec{\mathbf {p}},\underline{n}+ k,\overline{n},\varvec{\mathbf {\mu }}) = \phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\).

Suppose now that \(\underline{n}+ k > |F^1|\). The set \(L'^1\) consists of the \(\underline{n}+ k - |F^1|\) unfixed locations which are least expensive in terms of \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\), and we know that this is a subset of \(L^1\cup L^*\) because

hence \(L'^1= L^1\cup \tilde{L}\) and \(L'^*= L^*{\setminus }\tilde{L}\). Consequently,

\(\square \)

Corollary 1

For all \(k > 0\), if \(\overline{n}- k < \max \{\underline{n}, |F^1|\}\) then

else if \(n - (\overline{n}- k) \le |F^0|\) then

else, let \(\tilde{L}\) be the set of the \(k\) most expensive locations in \(L^*\) in terms of \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\), we have

The next propositions show how to reoptimize \(LR(\varvec{\mathbf {p}}^{+k},\underline{n},\overline{n},\varvec{\mathbf {\mu }})\) for all \(k \in \{1,\ldots ,n\}\) for which \(\varvec{\mathbf {p}}_k = *\), i.e. for all \(k \in (L^1\cup L^*\cup L^0)\). For this purpose we need to identify the following locations:

Each proposition also has a corollary stating a symmetric result for \(\varvec{\mathbf {p}}^{-k}\).

Proposition 3

For all \(k \in L^1\),

Proof

Let \(\varPi ({\varvec{\mathbf {p}}^{+k}},\underline{n},\overline{n},\varvec{\mathbf {\mu }}) = (F'^1,L'^1,L'^*,L'^0,F'^0)\). Since \({\varvec{\mathbf {p}}^{+k}}\) differs from \(\varvec{\mathbf {p}}\) only in its \(k\)-th component, for which we have \(p_k = *\) and \(\varvec{\mathbf {p}}^{+k} = 1\), it follows that \(F'^1= F^1\cup \{k\}\) and that \(F'^0= F^0\). Recall that by definition, if \(|F'^1| \ge \underline{n}\) then \(L'^1= \emptyset \) else \(L'^1\) contains the \(\underline{n}- |F'^1|\) cheapest unfixed locations in terms of \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\). Consequently, we have \(L'^1= L^1{\setminus }\{k\}\), and by using a similar reasoning we also have \(L'^0= L^0\), hence \(L'^*= L^*\). Note that \(F'^1\cup L'^1= F^1\cup L^1\), hence \(\phi ({\varvec{\mathbf {p}}^{+k}},\underline{n},\overline{n},\varvec{\mathbf {\mu }}) = \phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}})\). \(\square \)

Corollary 2

For all \(k \in L^0\),

Proposition 4

For all \(k \in L^*\), if \(L^1\) is empty then

else

Proof

Let \(\varPi ({\varvec{\mathbf {p}}^{+k}},\underline{n},\overline{n},\varvec{\mathbf {\mu }}) = (F'^1,L'^1,L'^*,L'^0,F'^0)\). As in the proof of Proposition 3, we have \(F'^1= F^1\cup \{k\}\), \(L'^0= L^0\), and \(F'^0= F^0\). If \(L^1\) is empty, then \(|F^1| \ge \underline{n}\) and therefore \(L'^1\) is empty also, hence:

If \(L^1\) is not empty, then \(L'^1\) must contains one location less than \(L^1\), which therefore must be \(i_>^1\). Consequently, \(i_>^1 \in L'^*\) and

\(\square \)

Corollary 3

For all \(k \in L^*\), if \(L^0\) is empty then

else

Proposition 5

For all \(k \in L^0\), if \(L^1\) and \(L^*\) are both empty then

else if \(L^1\) is nonempty and \(L^*\) is empty then

else if \(L^1\) is empty and \(L^*\) is nonempty then

else

Proof

If \(L^1= L^*= \emptyset \), then \(|\{i \in \{1,\ldots ,n\}: \varvec{\mathbf {p}}_i = 1\}| = |F^1| = \overline{n}\) and thus \(SP({\varvec{\mathbf {p}}^{+k}},\underline{n},\overline{n})\) is infeasible and \(\phi ({\varvec{\mathbf {p}}^{+k}},\underline{n},\overline{n},\varvec{\mathbf {\mu }}) = \infty \). Suppose henceforth that \(L^1\cup L^*\ne \emptyset \), and let \(\varPi ({\varvec{\mathbf {p}}^{+k}},\underline{n},\overline{n},\varvec{\mathbf {\mu }}) = (F'^1,L'^1,L'^*,L'^0,F'^0)\). Again, as in the proof of Proposition 3, we have \(F'^1= F^1\cup \{k\}\) and \(F'^0= F^0\). Also, \(k \in L^0\) implies that \(L^0\) is not empty and hence \(|F^0| + |L^0| = n - \overline{n}\).

In the case where \(L^1\ne \emptyset \) and \(L^*= \emptyset \), we have \(|F^1| + |L^1| = \underline{n}= \overline{n}\). Consequently \(L'^1\) must contain one location less than \(L^1\), this location therefore being \(i_>^1\), and \(L'^0\) must remain the same size as \(L^0\), hence \(L'^0= (L^0{\setminus }\{k\}) \cup \{i_>^1\}\).

In the case where \(L^1= \emptyset \) and \(L^*= \emptyset \), we have \(|F^1| \ge \underline{n}\) and thus \(L'^1\) is also empty. Consequently \(L'^0\) must remain the same size as \(L^0\), hence \(L'^0= (L^0{\setminus }\{k\}) \cup \{i_>^*\}\).

In the final case where both \(L^1\) and \(L^*\) are nonempty, we have \(|F^1| + |L^1| = \underline{n}\). Similarly as in both previous cases, \(L'^1\) must contain one location less than \(L^1\) and hence \(L^1= L^1{\setminus }\{i_>^*\}\), and \(L'^0\) must remain the same size as \(L^0\). Note that \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}_{i_>^*} = \max _{i \in L^*} \bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}_i < \bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}_{i_>^1}\), hence \(L'^0= (L^0{\setminus }\{k\}) \cup \{i_>^*\}\). We deduce the values of \(\phi ({\varvec{\mathbf {p}}^{+k}},\underline{n},\overline{n},\varvec{\mathbf {\mu }})\) like in the proof of Proposition 4. \(\square \)

Corollary 4

For all \(k \in L^1\), if \(L^*\) and \(L^0\) are both empty then

else if \(L^*\) is empty and \(L^0\) is nonempty then

else if \(L^*\) is nonempty and \(L^0\) is empty then

else

3.4 Bundle method

In this subsection we describe how we adapt a bundle method to optimize the lagrangian dual of any subproblem \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) with \(\varvec{\mathbf {p}} \in \{0,1,*\}^n\), \(1 \le \underline{n}\le \overline{n}\le n\):

Recall that such subproblems correspond to nodes evaluated by the Branch-and-bound procedure, and that the optimization of the lagrangian duals takes place in steps 0 and 2 of Algorithm 2.

There exist many different methods to compute \(\bar{\varvec{\mathbf {\mu }}}\): the simplex method, dual-ascent heuristics [11], the volume algorithm [1], simple subgradient search, Nesterov’s algorithm [34], etc. However, our preliminary experiments have indicated that bundle methods works well for this problem. Indeed, on the one hand, optimizing \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\) exactly with the simplex method is too difficult in practice for all but the easiest and smallest instances of the UFLP, and in any case we are not interested in exact optimization per se. On the other hand, subgradient search methods might not capture enough information about \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\) during the optimization process to be as effective as the bundle method, as suggested by some preliminary experiments with a bundle method using very small bundle size limits. Bundle methods therefore seem like a good compromise between two extremes. After giving a brief summary of the bundle method, we shall explain how we adapted it to solve our problem. A detailed description of bundle and trust-region methods in general lies beyond the scope of this paper, see, e.g., [20] for a detailed treatment on the subject.

3.4.1 Overview

The bundle method is a trust-region method which optimizes a lagrangian dual function iteratively until a termination criterion is met. At each iteration \(t\), a trial point \(\varvec{\mathbf {\mu }}^{(t)}\in \mathbb {R}^m\) is determined. In the case of the first iteration (i.e. \(t = 0\)), the trial point depends on where we are in the dual process:

-

during preprocessing, in step 0 of Algorithm 2: this is the very first application of the bundle method, and the initial trial point is the result of a dual-ascent heuristic such as DualLoc;

-

during the resolution of \((P)\), in step 0 of Algorithm 2: the initial trial point is \(\bar{\varvec{\mathbf {\mu }}}^\mathtt{root}\), the best vector of multipliers for the root node (computed during preprocessing);

-

otherwise, i.e. in step 2 of Algorithm 2: the initial trial point is \(\bar{\varvec{\mathbf {\mu }}}\), the best vector of multipliers for the parent node.

At any subsequent iteration \(t > 0\), \(\varvec{\mathbf {\mu }}^{(t)}\) is determined by optimizing a model approximating \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\).

Next, \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}^{(t)}})\) is evaluated, and this value and a subgradient are used to update the model of the dual. A new iteration is then performed, unless either a convergence criterion, or a predefined iteration limit, or a bound limit is reached, in which case we update the best multipliers:

The convergence criterion is satisfied when the bundle method determines that its model of the dual \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\) provides a good enough approximation of the dual in the neighborhood of an optimal vector of multipliers. The bound limit is reached when the current trial point \(\varvec{\mathbf {\mu }}^{(t)}\) satisfies \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}^{(t)}}) \ge \bar{z}\), where \(\bar{z}\) is an upper bound on the optimal value of \((P)\).

The model of the lagrangian dual is specified using a bundle \(\mathcal {B}\), defined by a set of vector-scalar pairs \((\varvec{\mathbf {\sigma }}, \epsilon )\) such that the vector \(\varvec{\mathbf {\sigma }} \in \mathbb {R}^m\) and the scalar \(\epsilon \in \mathbb {R}\) define a first-order outer approximation of \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\), i.e.

At each iteration \(t\), the bundle \(\mathcal {B}\) [and hence, the model of \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\)] is updated using a pair \((\varvec{\mathbf {\sigma }}^{(t)}, \epsilon ^{(t)})\) generated as follows. First, we determine an optimal solution \(\underline{\varvec{\mathbf {x}}}^{(t)}\) of the lagrangian relaxation \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}^{(t)}})\). Assuming that it exists, let

To determine a subgradient \(\varvec{\mathbf {\sigma }}^{(t)}\in \mathbb {R}^m\), we specify a vector \(\underline{\varvec{\mathbf {y}}}^{(t)}\) associated with the optimal solution \(\underline{\varvec{\mathbf {x}}}^{(t)}\) of \(LR(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}^{(t)}})\):

For all suitable \(\underline{\varvec{\mathbf {y}}}^{(t)}\), the vector in \(\mathbb {R}^m\) with components \(\left( 1 - \sum _{i=1}^n\underline{\varvec{\mathbf {y}}}^{(t)}_{ij}\right) _j\) for all \(j \in \{1,\ldots ,m\}\) is a subgradient of \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\). We let

and

The function \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\) is concave, thus \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}}) \le \sum _{j=1}^m\varvec{\mathbf {\sigma }}^{(t)}_j \varvec{\mathbf {\mu }}_j + \epsilon ^{(t)}\) for all \(\varvec{\mathbf {\mu }}\in \mathbb {R}^m\). We therefore update the bundle \(\mathcal {B}\) using the pair \((\varvec{\mathbf {\sigma }}^{(t)}, \epsilon ^{(t)})\) specifying a first-order outer approximation of \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\) at \(\varvec{\mathbf {\mu }}^{(t)}\).

3.4.2 Subgradient cache

Recall that the subproblems of the form \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) are associated with the nodes in a branch-and-bound search tree, therefore if a bundle method is to be performed at each node, then it stands to reason that some lagrangian dual functions may be very similar to one another. In particular, one \((\varvec{\mathbf {\sigma }}, \epsilon )\) pair generated during the optimization of one dual function may also specify a valid first-order outer approximation function of another dual function. For this reason, our method maintains a cache \(\mathcal {C}\) in which \((\varvec{\mathbf {\sigma }}, \epsilon )\) pairs are stored as soon as they are generated. Thus, before optimizing any dual function \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\), our method retrieves some suitable pairs from \(\mathcal {C}\) to populate the current bundle (which is otherwise empty), thus improving the model and hopefully also the quality of the trial points. Note that although there exist other ways of re-using the subgradient information generated during the application of the bundle method, ours is simple, effective, and requires only a limited amount of memory. We now introduce the result which tells us if and how a pair is suitable.

Proposition 6

Suppose that \(\underline{\varvec{\mathbf {x}}}\) is an optimal solution of any lagrangian relaxation \(LR(\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'},{\varvec{\mathbf {\mu }}})\), and let \((\varvec{\mathbf {\sigma }},\epsilon )\) be the corresponding pair which defines a first-order outer approximation of \(\phi (\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'},{\,\cdot \,})\). This pair defines a first-order outer approximation of any other function \(\phi (\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\,\cdot \,})\) if \(\underline{\varvec{\mathbf {x}}}\) is also an optimal solution of \(LR(\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\varvec{\mathbf {\mu }}})\).

Proof

Since \(\varvec{\mathbf {\sigma }}\) is generated using only \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}\) and \(\underline{\varvec{\mathbf {x}}}\), and since \(\underline{\varvec{\mathbf {x}}}\) is optimal for \(LR(\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\varvec{\mathbf {\mu }}})\) as well as for \(LR(\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'},{\varvec{\mathbf {\mu }}})\), then:

-

\(\phi \left( \varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\varvec{\mathbf {\mu }}}\right) = \phi \left( \varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'},{\varvec{\mathbf {\mu }}}\right) = \sum _{j=1}^m\varvec{\mathbf {\mu }}_j + \sum _{i=1}^n\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}}_i\,\underline{\varvec{\mathbf {x}}}_i\),

-

\(\varvec{\mathbf {\sigma }}\) is a subgradient of \(\phi (\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\,\cdot \,})\) as well as of \(\phi (\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'},{\,\cdot \,})\).

This last function is concave, therefore for all \(\tilde{\varvec{\mathbf {\mu }}} \in \mathbb {R}^m\):

\(\square \)

Remark 1

Suppose that \(\varvec{\mathbf {p}}''\), \(\underline{n}''\) and \(\overline{n}''\) are such that \(SP(\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''})\) is a subproblem of \(SP(\varvec{\mathbf {p}}{'},\underline{n}{'},\overline{n}{'})\). In this special case, \(\underline{\varvec{\mathbf {x}}}\) only needs to be feasible for \(SP(\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''})\), because it is then also optimal for \(LR(\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\varvec{\mathbf {\mu }}})\).

We therefore specify the cache \(\mathcal {C}\) as containing key-value pairs of the form \(\left( (\underline{\varvec{\mathbf {x}}}, \varvec{\mathbf {p}}', \underline{n}', \overline{n}'), \,(\varvec{\mathbf {\sigma }}, \epsilon )\right) \). Prior to optimizing a dual function \(\phi (\varvec{\mathbf {p}}{''},\underline{n}{''},\overline{n}{''},{\,\cdot \,})\), we update the bundle using \((\varvec{\mathbf {\sigma }}, \epsilon )\) pairs obtained by traversing \(\mathcal {C}\) for keys which match the following conditions:

-

\(\underline{n}' \le \underline{n}'' \le \sum _{i=1}^n\underline{\varvec{\mathbf {x}}}_i \le \overline{n}'' \le \overline{n}'\), and

-

for all \(i \in \{1,\ldots ,n\}\): either \(\underline{\varvec{\mathbf {x}}}_i = \varvec{\mathbf {p}}''_i\) or \(\varvec{\mathbf {p}}''_i = *\), and either \(\varvec{\mathbf {p}}''_i = \varvec{\mathbf {p}}'_i\) or \(\varvec{\mathbf {p}}'_i = *\).

When properly implemented, these tests can be performed quickly enough for the cache to be of practical use.

Consider now the problem of populating such a cache \(\mathcal {C}\). The straightforward way to do so is as follows: at each iteration \(t\) of the bundle method applied to any dual function \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\,\cdot \,})\), simply add the key-value pair \(\left( (\underline{\varvec{\mathbf {x}}}^{(t)}, \varvec{\mathbf {p}}, \underline{n}, \overline{n}), \,(\varvec{\mathbf {\sigma }}^{(t)}, \epsilon ^{(t)})\right) \). However, we can instead try to improve this key in terms of increasing the likelihood of subsequently reusing \((\varvec{\mathbf {\sigma }}^{(t)}, \epsilon ^{(t)})\). For this purpose we generate a vector \(\tilde{\varvec{\mathbf {p}}}\) in the following manner, using the partition \(\varPi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}^{(t)}}) = (F^1, L^1, L^*, L^0, F^0)\) as well as the reduced costs \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}^{(t)}}\) computed during the evaluation of \(\phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}^{(t)}})\). Let

we initialize \(\tilde{\varvec{\mathbf {p}}} \leftarrow \varvec{\mathbf {p}}\) and set \(\tilde{\varvec{\mathbf {p}}}_i \leftarrow *\) for all \(i \in \tilde{F}^1 \cup \tilde{F}^0\).

Proposition 7

This partial solution \(\tilde{\varvec{\mathbf {p}}}\) is such that \(\underline{\varvec{\mathbf {x}}}^{(t)}\) is also an optimal solution of \(LR(\tilde{\varvec{\mathbf {p}}}, \underline{n}, \overline{n}, \varvec{\mathbf {\mu }}^{(t)})\).

Proof

The locations in \(\tilde{F}^1\) form a subset of \(F^1\) and are not more expensive (in terms of \(\bar{\varvec{\mathbf {c}}}^{\varvec{\mathbf {\mu }}^{(t)}}\)) than any location in \(L^*\) or \(L^0\), therefore there exists an optimal partition \(\varPi (\tilde{\varvec{\mathbf {p}}},\underline{n},\overline{n},\varvec{\mathbf {\mu }}^{(t)})= (F'^1, L'^1, L'^*, L'^0, F'^0)\) such that \(\tilde{F}^1 \subset L'^1\). Likewise, there also exists \(L'^0\) such that \(\tilde{F}^0 \subset L'^0\). Consequently, \(F'^1\cup L'^1= F^1\cup L^1\), \(F'^0\cup L'^0= F^0\cup L^0\) and \(L'^*= L^*\). \(\square \)

Corollary 5

\(\phi (\tilde{\varvec{\mathbf {p}}},\underline{n},\overline{n},\varvec{\mathbf {\mu }}^{(t)}) = \phi (\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{},{\varvec{\mathbf {\mu }}^{(t)}})\).

This result ensures that \((\varvec{\mathbf {\sigma }}^{(t)}, \epsilon ^{(t)})\) is also a first-order outer approximation of \(\phi (\tilde{\varvec{\mathbf {p}}},\underline{n},\overline{n},\,\cdot \,)\) at \(\varvec{\mathbf {\mu }}^{(t)}\). By associating the key \((\underline{\varvec{\mathbf {x}}}^{(t)},\tilde{\varvec{\mathbf {p}}},\underline{n},\overline{n})\) instead of \((\underline{\varvec{\mathbf {x}}}^{(t)},\varvec{\mathbf {p}},\underline{n},\overline{n})\) with the pair \((\varvec{\mathbf {\sigma }}^{(t)}, \epsilon ^{(t)})\) in \(\mathcal {C}\), we increase its potential for reuse.

A proper implementation of such a cache is crucial to ensure good performance. Details are provided in the Appendix.

4 Computational results

We begin this section by outlining how our implementation executes the primal and dual processes concurrently. Following this, we present our test parameters and methodology, and then we present our results. The complete source code, in C, can be freely obtained from the authors.

4.1 Concurrency

Recall that the primal and dual processes both consist of a loop: in the primal process one iteration corresponds to one 1OPT move, and in the dual process one iteration corresponds to one branch-and-bound node. Although it could be, our implementation is not concurrent. The main reason behind this choice is that concurrency induces significant variance in execution times, as we found out during preliminary tests.Footnote 1 Instead of being concurrent, our implementation performs iterations of the primal and dual processes sequentially and alternately. The dual and primal iterations are not performed in equal proportions: at the beginning of the search 100 primal iterations are performed for every dual iteration, and this ratio progressively inverts itself until 100 dual iterations are performed for every primal iteration. The inversion speed is set by a predefined parameter, and in our implementation the default setting is such that a few thousand primal iterations should have been performed before the steady state of 100 dual per 1 primal iterations is reached. This policy seems to work well for reducing overall CPU time for all instances. Indeed the primal process is much more likely to find an improving solution at the beginning of the search than later on, and vice-versa for the dual process. Additionally, the dual process also benefits from having a good upper bound early in the search.

4.2 Parameters

The machines which we used for our tests are identical workstations equipped with Intel i7-2600 CPUs clocked at 3.4 GHz. We have tested our implementation on all instances in UflLib, with a maximum execution time limit set at 2 h of CPU time. To date, the UflLib collection consists of the following instance sets: Bilde-Krarup; Chessboard; Euclidian; Finite projective planes (k = 11, k = 17); Galvão-Raggi; Körkel-Ghosh (symmetric and asymmetric, \(n = m \in \{250,500,750\}\)); Barahona-Chudak; Gap (a, b, c); M*; Beasley (a.k.a. ORLIB); Perfect codes; Uniform. Most of these instances are such that \(n = m = 100\), but in the largest case (some instances in Barahona-Chudak) we have \(n = m = 3000\). Part of the motivation behind applying our method on so many instances stems from how few results on the exact resolution of the UFLP have been published in the last decade.

We performed some preliminary experiments to determine good maximal bundle size settings, and we noticed that execution times seem to be little affected by this parameter. Without going into the details, it appears that for comparatively small \((n = m = 100)\) and easy UflLib instances, a maximal bundle size between 20 and 50 is best. However since we hope to solve the larger and more difficult instances, we set this parameter to 100 for all of our tests. We use the following bundle method iteration limits: iter_limit_initial = 2000 for the very first application of the bundle method during the dual process; iter_limit_other = 120 for all the subsequent applications. Other search parameters are set as follows: the improving partial solution \(\bar{p}\) is updated after every 250 consecutive iterations of the dual process, or whenever the best-known solution is updated; likewise, the primal process sends requests for guiding solutions after 250 consecutive primal process iterations with no improvement of the best-known solution. Again, these settings are not very sensitive.

4.3 Tests

Our first series of tests aims to illustrate the effectiveness of our branching rules and of maintaining a cache \(\mathcal {C}\). We performed these tests on the 30 instances in Gap-a, which are the easier instances in Gap. The instances in this set are such that \(n = m = 100\), and they are characterized by their large duality gap, inducing suitably large search trees for our tests to be meaningful. Because we are not measuring anything related to the primal process, in our tests we disable the primal process and initialize the upper bound \(\bar{z}^\mathtt{dual }\) with the optimal value of the corresponding instance. As a consequence, when solving any one instance, the search trees obtained at the end of the dual process should in theory be identical to one another whichever node selection strategy is used (LFS or DFS). In practice, many components of our implementation are not numerically stable and hence results differ a little. In fact the mean difference in the number of nodes of the search trees obtained by solving with DFS and with LFS is 5.4 %, with a standard deviation of 3.3 %.

Figure 1 illustrates the profile curves corresponding to solving with DFS and the following settings:

-

‘-cache’ without cache,

-

‘+cache’ with cache, limited to 1,024 elements,

-

‘-branch’ without preprocessing, and only considering the first and last cases in the branching procedures BrP and BrS (i.e. cases ‘\(\underline{n}= \overline{n}\)’, ‘\(\varvec{\mathbf {p}} \in \{0,1\}^n\)’ and ‘otherwise’),

-

‘+branch’ with preprocessing, with BrP and BrS as specified in Algorithms 3 and 4.

Observe that ‘+branch’ is always worthwhile when used in conjunction with ‘+cache’, despite the extra computational effort involved. In fact, performance without the cache is still improved when solving the more difficult instances. For this reason, we use ‘+branch’ in all of our subsequent tests.

Figure 2 illustrates the profile curves corresponding to solving with DFS (unless otherwise specified) with the associated maximal cache size. For example, the curve ‘1024’ corresponds to the results using DFS and \(|\mathcal {C}| < 1024\). Notice that the cache does not improve performance when used in conjunction with LFS, unlike with DFS. This is not surprising because in large branch-and-bound trees, the nodes consecutively selected by LFS often bear little relation to one another and too few elements of the cache are reused for it to be worthwhile. Conversely, the nodes consecutively selected by DFS are often closely related. Otherwise, we can see that the use of a cache in conjunction with DFS improves performance provided that the cache remains small enough.

4.4 Solving UflLib

For purposes of comparison, we solve all instances with the Gurobi solver (version 5.0.1) in single-threaded mode, and summarize the execution time results in Table 1. The columns ‘min’, ‘median’, ‘max’ list the execution time of the easiest, median and hardest instance in each set, respectively. The column ‘mean’ lists the mean execution time for the instances in each set which were solved before reaching the time limit, and the column ‘std.dev.’ lists the corresponding standard deviation. Timeouts are represented by a dash (recall that the time limit is 2 CPU-hours), all other times are in minutes and seconds, rounded up.

Finally, we apply our method on all instances in UflLib with the following two settings:

-

1.

DFS and a cache with less than \(128\) elements,

-

2.

LFS and no cache.

Tables 2 and 3 summarize the respective execution time results for each instance set. Figure 3 illustrates the corresponding profile curves for the instance sets whose hardest instance required at least one minute: Barahona-Chudak, Finite projective planes, Gap, Körkel-Ghosh and M*.

First, note that the only instance set for which our method does not work well is Barahona-Chudak. These are large instances, for which

We noticed that the tabu search procedure does not always work very well, and that our branch-and-bound approach often fails to partition \((P)\) adequately. Conversely, the Gurobi solver solved 15 out of 18 instances within 2 h, and even more dramatically, the semi-lagrangian approach of Beltran-Royo et al. [4] was able to solve 16 out of these 18 instances to optimality in a matter of seconds. Likewise, the primal-dual approach of Hansen et al. [19] also works very well on instances of this type.

Nonetheless, our method works well for all other instances in UflLib and can solve many instances which until now were beyond the capabilites of other general or specialized solvers. We can see that several instance sets are better solved by one setting than by the other. This is especially the case for the Finite projective planes instances, for which ‘DFS with \(|\mathcal {C}| < 128\)’ performs very badly, in stark contrast to ‘LFS’. Looking more closely we noticed that these poor results were correlated to a very poor incumbent solution. Therefore it follows that the tabu search procedure in the primal process remains stuck in a local optimality trap very early in the search. It is therefore up to the dual process to send information to the primal process to enable it to escape from its trap. Evidently this does occur with LFS node selection, but not with DFS. We performed another series of tests on the Finite projective planes instances using LFS node selection but with the primal process disabled. We found that the dual process was able to find the optimal solution on its own in a matter of seconds, but not as quickly as in the original ‘LFS’ tests. This illustrates the pertinence of the cooperative aspect of our approach.

Note that in the case of the Gap instance set, the easier instances in the a and b subsets are better solved with ‘LFS’ while the opposite is true of the harder instances in the c subset. On the other hand, the ‘DFS with \(|\mathcal {C}| < 128\)’ setting performs better for M* and Körkel-Ghosh. The instances in these sets have not yet all been solved to optimality, unlike all other sets in UflLib. Until now, only about half of those in M* had known optima, yet our method solves all instances but the largest one to optimality in less that a minute on a modern computer, and the largest one in less than an hour. Our method solves all instances in M* and a good proportion of those in Körkel-Ghosh to optimality. Also, to the best of our knowledge, only 2 of the 90 instances in Körkel-Ghosh had known optima [4], yet our method was able to find 28 more.

Table 4 illustrates our results for the instances of the Körkel-Ghosh set which we were able to solve to optimality. To the best of our knowledge, most of these were unknown until now. Table 5 illustrates our results for the M* set, which we were able to solve to optimality in its entirety in less than an hour. The column ‘#nodes’ lists the number of nodes in the search tree for each instance, and the column ‘#lag.eval.’ lists the number of times a lagrangian relaxation was solved as per Sect. 3.1. The column ‘#moves’ lists the number of 1OPT moves performed by the primal process. As before, all times are rounded up.

5 Concluding remarks

In this paper, we presented a cooperative method to solve \((P)\) exactly, in which a primal process and a dual process exchange information to improve the search. The primal process performs a variation of the tabu search procedure proposed by Michel et al. [31], and the dual process performs a lagrangian branch-and-bound search. We selected this particular metaheuristic for its simplicity and its good overall performance, however any other metaheuristic can be used instead.

Our main contributions lie in the dual process. Partitionning \((P)\) into subproblems \(SP(\varvec{\mathbf {p}}{},\underline{n}{},\overline{n}{})\) allows us to apply sophisticated branching strategies. Our branching rules rely on results for quickly reoptimizing lagrangian relaxations with modified parameters \(\varvec{\mathbf {p}}\), \(\underline{n}\) or \(\overline{n}\), and hence require relatively little computational effort. Note that the branch-and-bound method presented in this paper could be supplemented with the preprocessing and branching rules presented by Goldengorin et al. [16, 17].

Furthermore, we introduced a subgradient caching technique which helps improve performance of the bundle method, which we apply to compute a lower bound at each node. The subgradients are stored in the cache as soon as they are generated during the application of the bundle method, and may be retrieved to initialize the bundle at the beginning of a subsequent application. We introduced results to improve the potential for reuse of a subgradient within the specific context of the UFLP, but these possibly could be extended to lagrangian branch-and-bound methods for other problems. Maintaining a subgradient cache may not be the ideal way of reusing information obtained during the optimization of the lagrangian dual, but this technique has certain practical advantages: it requires constant time and space, it is conceptually simple (and hence, easy to implement), its behavior is easy to control and it works well enough in conjunction with a depth-first node selection strategy.

Note also that by computing the lower bound at each node using a bundle method allows us a certain level of control on the computational effort expended at each node, which more or less directly translates into bound quality. This is in contrast to the dual-ascent heuristic of DualLoc, and also to integer programming solvers such as CPLEX or Gurobi which apply the dual simplex algorithm to completion.

Finally, we presented extensive computational results. Our method performs well for a wide variety of problem instances, several of which having been solved to optimality for the first time.

Notes

Naturally, in a practical setting, this argument may be completely irrelevant. Furthermore, our method may benefit from having more than just one primal process. We can easily imagine that better results may be achieved by running several different primal heuristics concurrently in addition to our tabu search procedure.

References

Barahona, F., Anbil, R.: The volume algorithm: producing primal solutions with a subgradient method. Math. Program. 87(3), 385–399 (2000)

Beasley, J. E.: Lagrangean heuristics for location problems. Eur. J. Oper. Res. 65(3), 383–399 (1993)

Beltran, C., Tadonki, C., Vial, J. P.: Solving the p-median problem with a semi-lagrangian relaxation. Comput. Optim. Appl. 35(2), 239–260 (2006)

Beltran-Royo, C., Vial, J.P., Alonso-Ayuso, A.: Semi-lagrangian relaxation applied to the uncapacitated facility location problem. Comput. Optim. Appl. 51(1), 387–409 (2012)

Bilde, O., Krarup, J.: Sharp lower bounds and efficient algorithms for the simple plant location problem. Ann. Discret. Math. 1, 79–97 (1977)

Bloom, B. H.: Space/time trade-offs in hash coding with allowable errors. Commun. ACM 13(7), 422–426 (1970)

Byrka, J., Aardal, K.: An optimal bifactor approximation algorithm for the metric uncapacitated facility location problem. SIAM J. Comput. 39(6), 2212–2231 (2010)

Conn, A. R., Cornuejols, G.: A projection method for the uncapacitated facility location problem. Math. Program. 46(1), 273–298 (1990)

Cornuejols, G., Nemhauser, G.L., Wolsey, L.A.: The uncapacitated facility location problem. In: Mirchandani, P.B., Francis, R.L. (eds.) Discrete Location Theory, pp. 1–54. Wiley, New York (1983)

Cura, T.: A parallel local search approach to solving the uncapacitated warehouse location problem. Comput. Ind. Eng. 59(4), 1000–1009 (2010)

Erlenkotter, D.: A dual-based procedure for uncapacitated facility location. Oper. Res. 26(6), 992–1009 (1978)

Flaxman, A. D., Frieze, A. M., Vera, J. C.: On the average case performance of some greedy approximation algorithms for the uncapacitated facility location problem. Comb. Probab. Comput. 16(05), 713–732 (2007)

Galvão, R. D., Raggi, L. A.: A method for solving to optimality uncapacitated location problems. Ann. Oper. Res. 18(1), 225–244 (1989)

Ghosh, D.: Neighborhood search heuristics for the uncapacitated facility location problem. Eur. J. Oper. Res. 150(1), 150–162 (2003)

Glover, F.: A template for scatter search and path relinking. In: Artificial evolution, pp. 1–51. Springer, Berlin (1998)

Goldengorin, B., Ghosh, D., Sierksma, G.: Branch and peg algorithms for the simple plant location problem. In: Computers and operations research. Comput. Oper. Res. 30(1), 967–981 (2003)

Goldengorin, B., Tijssen, G. A., Ghosh, D., Sierksma, G.: Solving the simple plant location problem using a data correcting approach. J. Global Optim. 25(4), 377–406 (2003)

Guner, A. R., Sevkli, M.: A discrete particle swarm optimization algorithm for uncapacitated facility location problem. J. Artif. Evol. Appl. 2008, 1–9 (2008)

Hansen, P., Brimberg, J., Urošević, D., Mladenović, N.: Primal-dual variable neighborhood search for the simple plant-location problem. INFORMS J. Comput. 19(4), 552 (2007)

Hiriart-Urruty, J.B., Lemaréchal, C.: Convex analysis and minimization algorithms: Fundamentals, vol. 305. Springer, Berlin (1996)

Homberger, J., Gehring, H.: A two-level parallel genetic algorithm for the uncapacitated warehouse location problem. In: Hawaii international conference on system sciences, proceedings of the 41st Annual, p. 67. IEEE (2008)

Julstrom, B.: A permutation coding with heuristics for the uncapacitated facility location problem. Recent advances in evolutionary computation for combinatorial optimization. pp. 295–307 (2008)

Körkel, M.: On the exact solution of large-scale simple plant location problems. Eur. J. Oper. Res. 39(2), 157–173 (1989)

Krarup, J., Pruzan, P. M.: The simple plant location problem: survey and synthesis. Eur. J. Oper. Res. 12(1), 36–81 (1983)

Kratica, J., Tošic, D., Filipović, V., Ljubić, I.: Solving the simple plant location problem by genetic algorithm. Oper. Res. 35(1), 127–142 (2001)

Labbe, M., Louveaux, F.: Location problems. In: Dell’Amico, M., Maffioli, F., Martello, S. (eds.) Annotated bibliographies in combinatorial optimization, pp. 261–281. Wiley, New York (1997)

Letchford, A. N., Miller, S. J.: An aggressive reduction scheme for the simple plant location problem. Tech. rep., Department of Management Science, Lancaster University (2011)

Letchford, A. N., Miller, S. J.: Fast bounding procedures for large instances of the simple plant location problem. Comput. Oper. Res. 39(5), 985–990 (2012)

Li, S.: A 1.488 approximation algorithm for the uncapacitated facility location problem. Automata, languages and programming, pp. 77–88 (2011)

Mahdian, M., Ye, Y., Zhang, J.: Approximation algorithms for metric facility location problems. SIAM J. Comput. 36(2), 411–432 (2006)

Michel, L., Van Hentenryck, P.: A simple tabu search for warehouse location. Eur. J. Oper. Res. 157(3), 576–591 (2004)

Mladenović, N., Brimberg, J., Hansen, P.: A note on duality gap in the simple plant location problem. Eur. J. Oper. Res. 174(1), 11–22 (2006)

Muter, İ., Birbil, S. I., Sahin, G.: Combination of metaheuristic and exact algorithms for solving set covering-type optimization problems. INFORMS J. Comput. 22(4), 603–619 (2010)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Resende, M. G.C., Werneck, R. F.: A hybrid multistart heuristic for the uncapacitated facility location problem. Eur. J. Oper. Res. 174(1), 54–68 (2006)

Sun, M.: Solving the uncapacitated facility location problem using tabu search. Comput. Oper. Res. 33(9), 2563–2589 (2006)

Acknowledgments

We wish to thank Paul-Virak Khuong who kindly helped us with our code, improving its performance significantly. We are also grateful to Antonio Frangioni for his bundle method implementation and for taking the time to answer our questions. Finally, we wish to thank the referees and editors which helped improve this paper.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix A: Cache implementation details

An efficient implementation of \(\mathcal {C}\) is crucial to its performance. In our implementation, \(\mathcal {C}\) is a FIFO queue whose maximal size is a predefined parameter. Consequently, an insertion of a new element into a full \(\mathcal {C}\) is preceded by the removal of its oldest element. When inserting a new element into \(\mathcal {C}\), our implementation does not examine the contents of \(\mathcal {C}\) to detect if it is already present, but instead it tests its presence using a counting Bloom filter. A Bloom filter is a data structure originally proposed by Bloom [6] which allows testing efficiently whether a set contains an element (with occasional false positives, but no false negatives). A counting Bloom filter is a variant defined using any vector \(\varvec{\mathbf {\beta }}\) of positive integers and a set \(H\) of different hash functions \(h \in H\) which each map any element possibly in \(\mathcal {C}\) to an index of \(\varvec{\mathbf {\beta }}\):

-

if an element \(e\) is added into \(\mathcal {C}\), then increment \(\varvec{\mathbf {\beta }}_{h(e)}\) by 1 for all \(h \in H\),

-

therefore an element \(e\) is certainly not in \(\mathcal {C}\) if there exists \(h \in H\) for which \(\varvec{\mathbf {\beta }}_{h(e)} = 0\),

-

if an element \(e\) is removed from \(\mathcal {C}\), then decrement \(\varvec{\mathbf {\beta }}_{h(e)}\) by 1 for all \(h \in H\).

Bloom filters are efficient in time and in space even for very large sets. By hard-coding the maximum size of \(\mathcal {C}\) to \(2^{16}-1\) elements, we implemented \(\varvec{\mathbf {\beta }}\) as an array of 16-bit unsigned integers. In our implementation, the dimension of this array is in the low hundred thousands, and \(|H| = 7\). As a consequence, the probability of false positives when testing whether \(e \in \mathcal {C}\) is less than 1%.

When trying to insert a new element \(e\) into \(\mathcal {C}\), the following steps are performed:

-

1.

If \(\varvec{\mathbf {\beta }}_{h(e)} > 0\) for all \(h \in H\), then \(e\) is probably already in \(\mathcal {C}\): go to step 4.

-

2.

If \(\mathcal {C}\) is full, then remove the oldest element \(e'\) in \(\mathcal {C}\) and decrement \(\varvec{\mathbf {\beta }}_{h(e')}\) by 1 for all \(h \in H\).

-

3.

Insert \(e\) into \(\mathcal {C}\) and increment \(\varvec{\mathbf {\beta }}_{h(e)}\) by 1 for all \(h \in H\).

-

4.

Done.

The counting Bloom filter thus ensures the unicity of the elements in \(\mathcal {C}\).

Appendix B: Bundle method iteration limits