Abstract

We consider a class of optimization problems for sparse signal reconstruction which arise in the field of compressed sensing (CS). A plethora of approaches and solvers exist for such problems, for example GPSR, FPC_AS, SPGL1, NestA, \(\mathbf{\ell _1\_\ell _s}\), PDCO to mention a few. CS applications lead to very well conditioned optimization problems and therefore can be solved easily by simple first-order methods. Interior point methods (IPMs) rely on the Newton method hence they use the second-order information. They have numerous advantageous features and one clear drawback: being the second-order approach they need to solve linear equations and this operation has (in the general dense case) an \({\mathcal {O}}(n^3)\) computational complexity. Attempts have been made to specialize IPMs to sparse reconstruction problems and they have led to interesting developments implemented in \(\mathbf{\ell _1\_\ell _s}\) and PDCO softwares. We go a few steps further. First, we use the matrix-free IPM, an approach which redesigns IPM to avoid the need to explicitly formulate (and store) the Newton equation systems. Secondly, we exploit the special features of the signal processing matrices within the matrix-free IPM. Two such features are of particular interest: an excellent conditioning of these matrices and the ability to perform inexpensive (low complexity) matrix–vector multiplications with them. Computational experience with large scale one-dimensional signals confirms that the new approach is efficient and offers an attractive alternative to other state-of-the-art solvers.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We are concerned with the solution of the underdetermined system, \(m < n\), of linear equations

where \(A\in {\mathbb {R}}^{m\times n},\,x\in {\mathbb {R}}^n,\,\hat{b}\in {\mathbb {R}}^m\). In particular, we are interested in the solution \(x\) with the smallest possible number of nonzero elements, otherwise known as the sparsest solution \(\hat{x}\). Such problems arise in the fields of Statistics [32] and Signal processing [9].

The sparsest solution \(\hat{x}\) of system (1) can be found by solving the following problem:

where \(\Vert x\Vert _0=\{\# \text{ of } \text{ nonzero } \text{ entries } \text{ in } x\}\) and “s.t.” stands for “subject to”. The use of zero-norm makes the problem combinatorial and untractable in practice. Recent advances in the field of CS show that in certain situations [9] exact recovery of the sparsest solution \(\hat{x}\) of (1) can be achieved with an overwhelming probability by solving the following Basis Pursuit [14] problem:

where \(\Vert x\Vert _1=\sum \nolimits _{i=1}^n|x_i|\). The problem (3) has a major advantage over (2). Unlike the zero-norm objective in (2), the \(\ell _1\)-norm objective in (3) can be reformulated as a linear function and therefore the problem (3) may be recast as a linear problem and becomes computationally tractable. Having a linear reformulation of (3), standard efficient optimization methods can be used to recover the sparsest solution \(\hat{x}\).

In real-life applications the right hand side of (1) is often corrupted with noise and (1) is replaced with:

where \(e\in {\mathbb {R}}^m\) denotes the error: we assume it has a normal distribution \(e_i \sim {\mathcal {N}}(0,\sigma ^2) \ \forall i=1,2,\dots ,m\). For the noisy case (4) the sparsest solution \(\hat{x}\) can be found by solving one of the following problems:

where \(\tau , \epsilon _1\) and \(\epsilon _2\) are positive scalars that regulate the sparsity and the upper bound on the noise error, respectively. Problem (5a) is the well-known Basis Pursuit Denoising introduced in [14], problem (5b) is the Least Absolute Shrinkage and Selection Operator (LASSO) used frequently in the field of computational statistics [32]. It can be shown using Theorem 27.4 from [34] that the problems in (5) are equivalent for specific values of scalars \(\tau ,\,\epsilon _1\) and \(\epsilon _2\).

Practical problems have large dimensions and off-the-shelf approaches such as the simplex method or the (standard) IPM are often impractical. However, matrices \(A\) that appear in CS problems display several attractive features which may be exploited within an optimization algorithm. This has created an interest in developing specialized approaches to solving such problems.

There have been various first-order methods developed for the solution of (3) and (5). Let us mention the ones known to be the most efficient.

-

Gradient Projection Sparse Reconstruction GPSR [20] defines new variables \(u,v\in {\mathbb {R}}^n\) such that

$$\begin{aligned} |x_i| = u_i + v_i \ \ \forall \, i=1,2,\dots ,n, \end{aligned}$$(6)where \(u_i = \max (x_i,0)\) and \(v_i = \max (-x_i,0)\). Then linearization of the \(\ell _1\)-norm is performed

$$\begin{aligned} \Vert x \Vert _1 = 1_n^{\mathsf {T}} u + 1_n^{\mathsf {T}} v, \end{aligned}$$(7)with \( u,v \ge 0\) and \(1_n \in {\mathbb {R}}^n\) being a column vector of all ones. Using the above linearization technique, GPSR solves the following constrained smooth reformulation of problem (5a)

$$\begin{aligned} \begin{array}{ll} \displaystyle \min _{z\in {\mathbb {R}}^{2n}} &{} \tau 1_{2n}^{\mathsf {T}}z + \dfrac{1}{2}\Vert F^{\mathsf {T}} z- b \Vert _2^2 \\ \text{ s.t.: } &{} z \ge 0, \\ \end{array} \end{aligned}$$(8)where \(z=[u\;;\;v]\in {\mathbb {R}}^{2n},\,F^{\mathsf {T}} = [A \ -A]\in {\mathbb {R}}^{m\times 2n}\). Once optimal values of variables \(u\) and \(v\) are found the solution \(x\) of the initial problem is retrieved by computing

$$\begin{aligned} x = u - v. \end{aligned}$$The price for the linearization is that comparing to the initial BPDN problem (5a) the dimension of the problem is doubled and \(2n\) new non-negativity constraints are added. At each step of the algorithm a line search is performed along the negative gradient direction and the new iterate is projected to the feasible set defined by the imposed constraints \(z\ge 0\).

-

Fixed Point Continuation Active Set FPC_AS [39] solves problem (5a). FPC_AS is a two stage algorithm. At the first stage a shrinkage scheme is employed which aims to spot quickly the nonzero components of the sparse representation. Then the second stage is enabled to solve a smooth version of (5a) limited to the indexes of nonzero components found by the first stage of the algorithm.

-

Spectral Projected Gradient SPGL1 [4] solves any of the problems (3), (5b) and (5c). The SPGL1 is a spectral projection gradient algorithm which iteratively solves (5b) for some values of \(\epsilon _1\), each approximate solution of (5b) is used to build a root-finding problem, which is equivalent to (5c), and is solved by employing a Newton method.

-

NestA [3] solves problem (5c) by using a variant of the Nesterov’s smoothing gradient algorithm [33], which has been proved to have the optimal bound \({\mathcal {O}}({1}/{\epsilon })\) on the number of iterations, where \(\epsilon \) is the required accuracy.

Independently there have been several attempts to design suitable IPM implementations. The most efficient among them, which can also handle large scale CS problems, are listed below.

-

\(\ell _1\_\ell _s\) algorithm [29] solves a constrained smooth reformulation of problem (5a) which allows a straightforward preconditioning of the Newton equation system that is solved with a conjugate gradient method.

-

PDCO algorithm [36] solves regularized constrained smooth reformulations of problems (3) and (5a). The Newton equation system is solved by applying an Least Squares QR factorization (LSQR) method.

Both \(\mathbf{\ell _1\_\ell _s}\) and PDCO have been demonstrated to be robust in comparison with other IPM implementations. However, they are not as accurate and as fast as state-of-the-art first-order methods.

In this paper we present a primal–dual feasible IPM specialized to CS problems. Primal–dual because it iterates simultaneously on primal and dual variables of a smooth reformulation of problem (5a) and feasible because the smooth reformulation of (5a) consists only of conic constraints which are always satisfied. Primal–dual methods have been shown to have the best theoretical convergence properties [30] among various IPMs, but they also enjoy the best practical convergence [24, 40]. Here we give a brief introduction of the structure of primal–dual IPM methods and we discuss important modifications that result in the proposed approach. The actual implementation used in this paper is given in Sect. 5.1.

Primal–dual methods rely on Newton method to calculate primal–dual directions at each iteration. Newton method for primal–dual IPMs finds roots for linearized Karush–Kuhn–Tucker (KKT) systems or their reduced versions known as augmented and normal equations systems. These systems arise as first-order optimality conditions of log-barrier primal–dual pairs. The linearized KKT systems, referred as Newton linear systems, can be solved in two ways,

-

by employing a direct linear solver, or

-

by using an iterative solver, such as Krylov subspace methods [28].

The first option delivers a very robust primal–dual IPM where exact Newton directions are calculated. Despite its robustness this approach has the potential drawback of being computationally expensive. Especially in the case when the Newton linear system does not have an exploitable sparsity pattern and the computational effort per iteration reaches \({\mathcal {O}}(n^3)\).

The second option involves the use of approximate Newton directions. Although this might slightly increase the number of IPM iterations [26, 31], one hopes that the decreased computational effort per iteration should offset such a disadvantage. The performance of iterative methods depends on the spectral properties of the Newton linear system [28] and benefit from the use of appropriate preconditioning techniques which cluster the eigenvalues of the Newton linear system. If the Newton linear system is ill-conditioned and no low-cost preconditioner is applicable, then a direct approach might be more efficient. To conclude, a criterion to select between the two approaches of solving the Newton linear systems should take into account

-

1.

the sparsity pattern of the systems,

-

2.

the existence of a computationally inexpensive preconditioner,

-

3.

the memory requirements of storing problem’s data,

-

4.

the existence of fast matrix–vector product implementations with the matrix of the linear system to be solved.

In this paper, we focus on the situation where there is no particular sparsity pattern, the memory requirements can be high but conditions \(2\) and \(4\) are satisfied. For this reason, a preconditioned conjugate gradient method is more attractive than a direct method. Indeed, in the approach proposed in this paper, at each step of the primal–dual IPM the preconditioned conjugate gradient method is applied to compute an approximate Newton direction. Since we rely on an iterative method for linear algebra, the proposed primal–dual IPM is matrix-free [25], i.e. the explicit problem formulation is avoided and the measurement matrix \(A\) is used only as an operator to produce results of matrix–vector products \(Ax\) and \(A^{\mathsf {T}}y\). Although matrices \(A\) used in CS can be completely dense, i.e. Gaussian, partial Fourier, partial Discrete Cosine Transform (DCT), partial Discrete Sine Transform (DST), Haar wavelets etc, they do have interesting (exploitable) features. Arguments \(3\) and \(4\) are satisfied because for many measurement matrices that appear in sparse signal reconstruction problems there are super-fast algorithms (e.g. \({\mathcal {O}}(n)\) or \({\mathcal {O}}(n\log n)\) complexity) for multiplication by a vector. For example, for Fourier, DCT and DST matrices there exists the FFTW implementation (“Fastest Fourier Transform in the West”) [22] with complexity \({\mathcal {O}}(n\log n)\), for Haar wavelet and Noiselet matrices there exist algorithms of complexity \({\mathcal {O}}(n)\), see [1] and [15], respectively. Finally, to satisfy argument \(2\) we propose a preconditioner efficient on certain problems that is based on the fact that sub-matrices of \(A\) with a given number of columns are uniformly well-conditioned (this is called the Restricted Isometry Property, see the discussion in Sect. 2).

The objective of our developments is to design an IPM which preserves the main advantage of IPM, that is, it converges in merely a few iterations, and removes the main drawback of IPM, that is, avoids expensive computations of the Newton direction. Ideally, we would like to solve the CS problems in \({\mathcal {O}}(\log n)\) IPM iterations and keep the cost of a single IPM iteration as low as possible and not exceeding \({\mathcal {O}}(n \log n)\).

The paper is organized as follows. In Sect. 2 we discuss the particular features of CS matrices that are exploited in our approach. In Sect. 3 we reformulate sparse recovery optimization problems (3) and (5a) to make them suitable for matrix-free IPM. Section 4 concerns finding approximate Newton directions required at each step of the IPM. We calculate the normal equations system formulation of the above stated problem and analyze its properties. We propose an efficient preconditioner that can be used in the preconditioned conjugate gradient method. We prove that under certain conditions (that are satisfied in practice) eigenvalues of the preconditioned matrix are well clustered around 1. In Sect. 5 we compare the proposed matrix-free IPM with other state-of-the-art first and second-order solvers.

2 Properties of compressed sensing matrices

Matrices which appear in sparse reconstruction problems originate from different bases in which signals are represented. What they all have in common are the conditions that guarantee recoverability of the sparsest solution of (1) by means of the \(\ell _1\)-norm minimization (3). The restricted isometry property (RIP) [13] is one of such conditions which shows how efficiently a measurement matrix captures information about sparse signals.

Definition 1

The restricted isometry constant \({\delta }_k\) of a matrix \(A\in {\mathbb {R}}^{m\times n}\) is defined as the smallest \({\delta }_k\) such that

for all at most \(k\)-sparse \(x\in {\mathbb {R}}^n\).

In words, for small \(\delta _k\), statement (9) requires that all column sub-matrices of \(A\) with at most \(k\) columns are well-conditioned. Informally, \(A\) is said to satisfy the RIP if \({\delta }_k\) is small for a reasonably large \(k\). The next theorem due to [21] establishes the relation between the RIP property and the sparse recovery.

Theorem 1

Every \(k\)-sparse vector \(x\in {\mathbb {R}}^{n}\) satisfying \(A x = \hat{b}\) is the unique solution of (3) if

The restricted isometry property also implies stable recovery by \(\ell _1\)-norm minimization for vectors that can be well approximated by sparse ones, and it further implies robustness under noise on the measurements [13].

RIP is a very restrictive condition that depends on the size of the measurement matrix \(A\). Clearly, the more columns \(n\) matrix \(A\) has (the larger the size of the vector \(x\) to recover) the larger \({\delta }_k\) in (9) is (the harder it is to guarantee sparse recovery). On the other hand, number of rows \(m\) of \(A\) is the number of measurements taken and, hence, the RIP constant \(\delta _k\) decreases with \(m\). Currently known measurement matrices satisfying RIP with small number of measurements fall into two categories [35]: (i) random matrices with i.i.d. sub-Gaussian variables, e.g., normalized i.i.d. Gaussian or Bernoulli matrices; (ii) random partial bounded orthogonal matrices obtained by choosing \(m\) rows uniformly at random from a normalized \(n\times n\) Fourier or Walsh–Hadamard transform matrices. Number of measurements required to satisfy the RIP property for both classes of matrices is given in the Table 1.

Although it follows from Table 1 that Gaussian matrices are optimal for sparse recovery, they have limited use in practice because many applications impose structure on the matrix. Furthermore, recovery algorithms are significantly more efficient when the matrix admits a fast matrix–vector product. Due to the two former practical reasons, and since we are dealing with large-scale CS applications we limit ourselves to applications with measurement matrices \(A\) that

-

are not stored explicitly,

-

admit a low-cost matrix–vector product with \(A\) (e.g. \({\mathcal {O}}(n\log n)\) or \({\mathcal {O}}(n)\)).

An important broad class of CS matrices comes from random sampling in bounded orthonormal systems. Partial Fourier matrix mentioned earlier is just one example of this type. Other examples are matrices related to systems of real trigonometric polynomials (partial discrete cosine (DCT) and discrete sine (DST) matrices), Haar wavelets and noiselets. Quite often in applications a signal is sparse with respect to a basis different from the one in which measurements are made. Then it is said that a measurement/sparsity pair is given [9]. Assume that a vector \(z\) is sparse with respect to the basis of columns of a unitary matrix \(\Psi \) (sparsity matrix), i.e. \(z=\Psi x\) for a \(k\)-sparse vector \(x\). Further, assume that \(z\) is sampled with respect to the basis of columns of a unitary matrix \(\Phi \) (measurement matrix): \(y = R_{m}\Phi ^{\mathsf {T}}z\), where \(R_m\) is a random sampling operator which satisfies \(R_mR_m^{\mathsf {T}}=I\). Hence, matrix \(A\) in (1) is equal to \(R_{m}\Phi ^{\mathsf {T}}\Psi \) and its rows are orthonormal:

The recoverability property of matrix \(A\) depends on the value of the so-called mutual coherence \(\mu (\Phi ,\Psi )\) of the measurement/sparsity pair (see [16]):

where \(\phi _i,\psi _i\) are the \(i\)th columns of matrices \(\Phi ,\Psi \), respectively. Coherence simply measures the largest correlation between any two elements of \(\Phi \) and \(\Psi \). Next theorem due to [12] shows that the smaller the value of mutual coherence the better the recoverability property of matrix \(A\).

Theorem 2

Fix \(z\in {\mathbb {R}}^n\) and suppose that the coefficient sequence \(x\) of \(z\) in the unitary \(n\times n\) basis \(\Psi \) is \(k\)-sparse. Select \(m\) measurements in the unitary \(n\times n\, \Phi \) domain uniformly at random. Then if

for some positive constants \(C,C'\), then with overwhelming probability exceeding \(1-p\), the vector \(x\) is the unique solution to the \(\ell _1\)-minimization problem (3) with \(A=R_{m}\Phi ^{\mathsf {T}}\Psi \), where \(R_mR_m^{\mathsf {T}}=I\) and \(A\) has orthonormal rows (10).

Let us note that condition (12) differs from those given in Table 1. Conditions in Table 1 ensure that once the random matrix is chosen, then with high probability all sparse signals can be recovered (uniform recovery). Although, (12) only guarantees that each fixed sparse signal can be recovered with high probability using a random draw of the matrix (nonuniform recovery).

To conclude, CS matrices have many useful properties that must be taken into account in the development of an efficient matrix-free IPM solver. In the current paper we make use only of the most general of them that are satisfied by every CS matrix. First, we weaken a little bit the condition of orthonormality (10) to include random matrices such as Gaussian and Bernoulli:

-

P1: Rows of matrix \(A\) are close to orthonormal, i.e. there exists a small \({\delta }\) such that

$$\begin{aligned} \Vert AA^{\mathsf {T}} - I_m\Vert _2\le {\delta }. \end{aligned}$$(13)

Restricted isometry property (9) on the contrary assumes that columns of \(A\) are normalized. So, our interpretation of the RIP property that will be used throughout the paper is as follows.

-

P2: Every \(k\) columns of \(A\) with \(k \ll m\) are almost orthogonal and have similar norms, i.e. for every matrix \(B\) composed of arbitrary \(k\) columns of \(A\)

$$\begin{aligned} \left\| \frac{n}{m} B^{\mathsf {T}}B - I_k\right\| _2\le {\delta }_k. \end{aligned}$$(14)

By treating property P2 as the chosen RIP, the bound for the RIP constant in Theorem 1 which relies on RIP in (9) will change. The following theorem is a modified version of Theorem 1 when property P2 is used as a RIP.

Theorem 3

Every \(k\)-sparse vector \(x\in {\mathbb {R}}^{n}\) satisfying \(A x = \hat{b}\) is the unique solution of (3) if

where \(\delta _{2k}\) is the minimum constant such that property P2 holds for every \(2k\) columns of matrix \(A\), denoted by matrix \(B\) in P2.

Proof

Let \(x\in {\mathbb {R}}^{n}\) have \(k\) nonzero components and \(B\) in P2 be any \(k\) column submatrix of \(A\). Then from P2 it follows that

Proposition \(2\) in [21] gives bounds for \(\delta _{2k}\) by using the RIP in (9). In our case, we replaced the RIP in (9) with (15). Therefore, the four modified conditions for \(\delta _{2k}\) in Proposition \(2\) in [21] which guarantee that every \(k\)-sparse vector \(x\in {\mathbb {R}}^n\) which satisfies \(A x = \hat{b}\) is the unique solution of (3), take the following forms:

where \(\omega =0,1,\dots \) is an integer variable. Table 2 shows with bold font which condition of the above four is the weakest for \(2\le s\le 8\). This table is equivalent of the table in proof of Theorem 1 in [21]. However, in [21] the table has exact values, where our Table 2 has functions depending on the ratio \({m}/{n}\) instead. Using the same arguments as in proof of Theorem \(1\) in [21] and Table 2 we conclude that every \(k\)-sparse vector \(x\in {\mathbb {R}}^{n}\) satisfying \(A x = \hat{b}\) is the unique solution of (3) if

This completes the proof. \(\square \)

Comparing the two bounds of the RIP constants in Theorems 1 and 3 we observe that the former is smaller, see Fig. 1. For the purpose of the proposed preconditioner, discussed in Sect. 4, the smaller bound on \(\delta _{2k}\) in Theorem 3 results in tighter bounds of the spectral properties of the preconditioned systems. The former is an advantage of property P2 against RIP in (9), used in Theorem 3. However, property P2 and Theorem 3 result in a limitation of the maximum number of sparsity \(k\) for which problem (3) guarantees an exact recovery of the sparsest solution of \(Ax= \hat{b}\). Fortunately, both results in Theorems 1 and 3 are rather pessimistic. It has been shown in [7] that RIP conditions of the form (9) and their scaled versions (P2) or (15) provide worst case scenarios of \(\delta _{2k}\) and consequently of the sparsity level \(k\) such that problem (3) guarantees exact sparse recovery. To support the former argument, we refer the reader to [18], where it is shown that for Gaussian measurement matrices the average maximum sparsity level \(k\) that is guaranteed to be reconstructable by (3) is much greater than the one shown in Theorems 1 and 3. Moreover, it has been shown empirically in [18] that approximately the same result holds for various types of measurement matrices \(A\), i.e. partial Fourier, partial Hadamard, Bernoulli etc. In Sect. 5.8 it is shown that the proposed algorithm satisfies approximately the average maximum sparsity \(k\) shown in [18]. Therefore, we conclude that by replacing RIP (9) with property P2:

-

improved bounds on the spectral properties of the preconditioned systems in Sect. 4 are obtained,

-

a better approximation of matrix \(B^{\mathsf {T}}B\) with a scaled diagonal \(\rho I\) by choosing appropriate constant \(\rho \) is possible and

-

the empirical average reconstruction properties as shown in Sect. 5.8 are maintained.

3 Primal–Dual problems in matrix-free IPM

Non-smooth Basis Pursuit (3) and Basis Pursuit Denoising (5a) optimization problems can be reformulated into equivalent linear and convex quadratic problems, respectively. This is achieved via linearization of the non-smooth \(\ell _1\)-norm in the objective function.

After reformulating the BPDN problem (5a) to (8) as proposed in [20] for GPSR algorithm, we solve the latter using a primal–dual IPM. The reader interested in the theory of primal–dual IPMs is referred to the book of Wright [40]. Aspects of practical implementation have been addressed in a recent survey [24]. A description of the primal–dual IPM used in this paper is given in Sect. 5.1. For the primal problem (8) of interest the dual is

where \({\small \begin{array}{c} c = \left[ \begin{array}{c} \tau 1_n - A^{\mathsf {T}} b \\ \tau 1_n + A^{\mathsf {T}} b \end{array} \right] \in {\mathbb {R}}^{2n}. \end{array}} \)

At each step of the primal–dual IPM applied to the primal–dual pair (8) and (16) the corresponding Newton direction \((\Delta z,\Delta s)\) is computed by solving the following system of linear equations:

where \(S\) and \(Z\) are diagonal matrices with vectors \(s\) and \(z\) on the diagonal, respectively, \(I_{2n}\) denotes an identity matrix of dimension \(2n\) and

\(\mu ={z^{\mathsf {T}}s}/{(2n)}\) is the barrier term of the IPM and \(0\le \sigma \le 1\) the centering parameter. In the matrix-free framework the dual variables \(\Delta s\) in (17) are eliminated to get:

where \(\varTheta = S^{-1}Z \in {\mathbb {R}}^{2n \times 2n}\). The reduced Newton system (19a), also known as normal equations, is solved by an appropriate preconditioned iterative method for which only matrix–vector product with the constraint matrix \(F\) is allowed. Thus, the matrix-free IPM approach has two major components:

-

iterative solver for the normal equations,

-

special-purpose preconditioner that exploits matrix structure.

The next section addresses these two issues.

4 Preconditioned conjugate gradient method

The system (19a) has a symmetric positive definite matrix and the conjugate gradient (CG) method can be employed to solve it in a matrix-free regime. However, the convergence of the CG method can be too slow when a matrix is ill-conditioned and/or its eigenvalues are not clustered. In this section we discuss an efficient spectrally-equivalent diagonal matrix preconditioner for (19a). In particular, we give theoretical and practical justification of our approach to fast iterative solution of the system.

The proposed preconditioner for the system of equations (19a) is based on the exploitation of general properties of CS matrices and the behavior of the \(\varTheta \) matrix in (19a) close to optimality. Let us recall that in the notation of primal–dual pair (8)–(16), variable \(s \in {\mathbb {R}}^{2n}\) is a Lagrange multiplier associated with the non-negativity constraint \(z \ge 0\). Hence, at optimality \(s_j z_j = 0\, \forall \, j = 1,2,\dots , 2n\). IPMs force the convergence to the optimal solution by perturbing this condition \(s_j z_j = \mu \, \forall \, j\), where \(\mu \) is the barrier term of the IPM, and gradually reducing the perturbation \(\mu \) to zero. At optimality indices \(j \in \{ 1, 2,\dots , 2n \}\) are split into two disjoint sets:

that determine the activity of constraints. This partitioning has highly undesirable consequences for the diagonal scaling matrix \(\varTheta = S^{-1} Z\). Indeed, when \(\mu \) approaches zero, for indices \(j \in {\mathcal {B}},\,\varTheta _j\) goes to infinity and for indices \(j \in {\mathcal {N}},\,\varTheta _j\) goes to zero.

Recall that \(z=[u\;;\;v]\), where \(u\) and \(v\) are the positive and negative components of vector \(x\) [see (6)], respectively. For sparse signals there are merely \(k\) (\(k \ll 2n\)) nonzero components in the optimal solution. The positive ones will contribute a nonzero element in \(u\) and the negative ones will contribute a nonzero element in \(v\). At optimality the cardinality of set \({\mathcal {B}}\) is \(k\). Hence, at later iterations of an IPM

Let us now return to the question of preconditioning of the system of equations (19a). Its matrix is

The behavior of matrix \(\varTheta \) near optimality is described by (21). It is clear that matrix \(\varTheta ^{-1}\) has many large entries and only few small entries well before the IPM reaches the optimal solution. Let us introduce a number \(C\gg 1\) that separates entries of \(\varTheta ^{-1}\) of different magnitudes:

Here \(l\) is just the number of small entries in \(\varTheta ^{-1}\) and may be different from the sparsity \(k\) of the optimal solution. In the regime \(l < m\), the second term \(\textit{FF}^{\mathsf {T}}\), whose rank is exactly \(m\), works as a low-rank perturbation for the matrix \(\varTheta ^{-1}\) in (22). Since, in Frobenius norm the first term \(\varTheta ^{-1}\) dominates the second term \(\textit{FF}^{\mathsf {T}}\), we propose to replace \(\textit{FF}^{\mathsf {T}}\) in the preconditioner by a simple approximant. First, let us write system’s matrix of (19a) in the block form by using the facts that \(\varTheta ={{\mathrm{diag}}}(\varTheta _u, \varTheta _v)\) and \(F^{\mathsf {T}} = [A \ -A]\):

Our preconditioner is based on the approximation of \(A^{\mathsf {T}}A\) by the closest (in Frobenius norm) scaled identity matrix \(\rho I_n,\,\rho =m/n\):

To simplify the analysis of the preconditioner, we first consider the case of \(n\times n\) matrices \(H\) and \(P\) rather than block \(2n\times 2n\) ones as defined by (24) and (25). The following lemma establishes spectral properties of the preconditioned matrix \(P^{-1}H\) in the non-block case.

Lemma 1

Define matrix \(H\) as

where \(\varTheta ={{\mathrm{diag}}}(\varTheta _1,\varTheta _2,\dots ,\varTheta _n)\)—diagonal \(n\times n\) matrix with \(\varTheta _j>0\), and \(A\)—\(m\times n\) matrix with \(m \le n/2\). Let \(C\) be any positive constant and \(l\) be defined as in (23), \(\#(\varTheta _{j}^{-1}<C)=l\). Additionally, let \(A\) satisfy property P2 for \(k=l\) with some constant \(\delta _l\). If matrix \(A\) has orthonormal rows (10), then the eigenvalues of matrix \(H\) preconditioned by matrix \(P\):

are clustered around 1, i.e.

If matrix \(A\) has nearly orthonormal rows, i.e. satisfies P1 defined on page 8, then

where \(\delta \) has been defined in P1.

Proof

Let \(C\) be any positive constant, then the following two disjoint sets of indices can be defined:

Let \(B\) and \(N\) be matrices of columns of \(A\) with indices from \({{\mathcal {B}}_C}\) and \({{\mathcal {N}}_C}\), respectively. Without loss of generality we can assume that \({{\mathcal {B}}_C}\) are the first \(l\) indices, then

Let \(\lambda \) be an eigenvalue of the preconditioned matrix \(P^{-1}H\) corresponding to an eigenvector \(v=[v_{{\mathcal {B}}_C}\;;\;v_{{\mathcal {N}}_C}]\) of norm one, then

or, in the block form,

Obviously, eigenvalues of \(P^{-1}H\) are all real, hence \(\tau \) is also real. Multiplication of (28) by \([v_{{\mathcal {B}}_C}\;;\;v_{{\mathcal {N}}_C}]^{\mathsf {T}}\) from the left gives

Let us denote \(\Vert v_{{\mathcal {B}}_C}\Vert _2^2\) by \({\alpha }\), then \(\Vert v_{{\mathcal {N}}_C}\Vert _2^2=1-{\alpha }\) since \(v=[v_{{\mathcal {B}}_C}\;;\;v_{{\mathcal {N}}_C}]\) has unit norm. Bounding left hand side of (29) from below is trivial:

Next, let us bound right hand side of (29) from above. We will distinguish two cases, orthonormal and nearly orthonormal rows of matrix \(A\). First, we study the case of nearly orthonormal rows of matrix \(A\). For this purpose we will use the SVD decompositions of matrices \(B\) and \(N\):

and

Restricted isometry property P2 implies that

First, notice that

Using property P1 we have

Next, using (32) obtain

and, hence,

Finally,

because our assumptions \(m \le n/2\) and \({\delta }_l<1\) imply \(\sigma _i^2\le \sigma _1^2\le \rho (1+{\delta }_l)<1\). We conclude that

Bounds (33) and (34) are sharp and can be used to obtain very tight estimate on \(\tau \) but we do not need them that sharp to obtain a sufficiently good estimate. So, we will release them a little bit to simplify the analysis:

Using (30), (31) and (35) we finally get

Let us denote \(\xi =(1+\delta -\rho +2\sqrt{1+\delta })\) and show that \(\varepsilon \) is small for large values of \(C\). Indeed (36) implies that

It can be checked by simple calculus, that \(\sqrt{x}\le C_1x+C_2\) on \([0,1]\) whenever \(C_1\ge {1}/{(4C_2)}\). In our case this implies

The largest solution of the quadratic equation in \(\varepsilon \)

is

Hence, it is sufficient to take any \(\varepsilon \ge {\xi ^2}/{(4\rho {\delta }_l^2 C)}\) to satisfy the inequality (36):

This completes the proof for matrix \(A\) which satisfies property P1. For the case of orthonormal rows of matrix \(A\), i.e. \(AA^{\mathsf {T}}=I_m\) simply set \(\delta =0\) in property P1 to get

This completes the proof. \(\square \)

For the result of the theorem to be useful we obviously need the bound in the right-hand side of inequalities in (37) and (38) to be sufficiently smaller than one. Let us take a closer look at the terms forming this bound. We are free to choose any value for the constant \(C\) we want, the larger the better. However, according to (23), \(l\) increases with the increase in \(C\) and, consequently, the restricted isometry constant \({\delta }_l\) also increases. Inequalities (37) and (38) hold for any value of \(C\), hence we can replace it with

and

and choose constant \(C\) that delivers the minimum.

For number of measurements \(m\) just a fraction \(\rho =1/4\) of the length \(n\) of the unknown signal, it is natural to assume the restricted isometry constant \({\delta }_{2l}\) to be \(<1/4\) (see Theorem 3), hence, according to [6], \({\delta }_{2l}<1/4\), implies \({\delta }_l<1/4\). Therefore, to have \(|\tau |\le 17/20\) we need \(C=20(0.75+\delta +2\sqrt{1+\delta })^2/3\) in (23). For nearly orthonormal rows of matrix \(A\) we can assume that \(\delta \le 1\), which gives us \(C\approx 139.74\) and certainly holds near optimality in the IPM. For orthonormal rows of matrix \(A\) we have \(\delta =0\), hence, \(C\approx 50.41\).

The bounds in (37) and (38) are rather pessimistic. Computational experience suggests that eigenvalues of the preconditioned matrix get well clustered around 1 as long as \(l=\#(\varTheta _{j}^{-1}<1)\) is such that the RIP constant \({\delta }_l <1\). For example, for the discrete cosine (DCT) matrix with \(n=2^{10}\) and \(m=2^8\) the corresponding \(l\le 74\) (this number is obtained in a series of random tests).

Now we are ready to state the spectral properties of the preconditioned matrix \(P^{-1}H\) for the system of equations (19a). We leave the theorem without a proof as it a straightforward corollary of Lemma 1.

Theorem 4

Let \(H\) and \(P\) be block matrices defined in (24) and (25), respectively. Then the preconditioned matrix \(P^{-1}H\) has

-

1.

the eigenvalue \(1\) of multiplicity \(n\);

-

2.

remaining \(n\) eigenvalues defined in Lemma 1 with \(\varTheta =\varTheta _u+\varTheta _v\).

Theorem 4 establishes the clustering of eigenvalues of \(P^{-1}H\) around \(1\). Hence, iterative method such as conjugate gradient applied to the system of equations (19a) is expected to converge in just a few iterations if the preconditioner \(P\) in (25) is used. The previous theoretical results are also confirmed in practical experiments. Figure 3 demonstrates clustering of eigenvalues \(\lambda (H)\) and \(\lambda (P^{-1}H)\) in the case that the \(A\) matrix in \(H\) (24) is a Discrete Cosine Transform (DCT) matrix with normalized rows, \(AA^T=I\). The parameters for the size of the problem are set to \(m=2^{10},\,n=2^{12}\) and the sparsity level is fixed to \(k=51\). In the left sub-Fig. 2a the clustering of the eigenvalues \(\lambda (H)\) is shown. Every vertical line presents the spreading of \(\lambda (H)\) at a particular CG call as the matrix-free IPM progresses. One can observe that the clustering worsens as the matrix-free IPM approaches optimality. On the contrary, eigenvalues of the preconditioned matrices \(P^{-1}H\) show the opposite behavior. In particular, as the matrix-free IPM progresses eigenvalues \(\lambda (P^{-1}H)\) start to cluster around one. The latter is depicted with the vertical columns in the right sub-Fig. 2b.

Clustering of the eigenvalues for the matrices \(H\) and \(P^{-1}H\) as the matrix-free IPM approaches optimality. The matrix \(A\) in \(H\) (24) is a DCT matrix with normalized rows. The parameters of the problem set to \(m=2^{10},n=2^{12}\) and \(k=51\). Twenty systems for the matrices \(H\) and \(P^{-1}H\) are solved in total

5 Computational experience

We illustrate our developments by comparing the matrix-free IPM’s efficiency with those of the state-of-the-art first-order methods, FPC_AS and SPGL1 and with two other interior point based solvers, \(\mathbf{\ell _1\_\ell _s}\) and PDCO. The experiments are made on Sparco test suite [5].

We use the FPC_AS CG version of FPC_AS algorithm, where “CG” stands for the conjugate gradient method. The FPC_AS CG has been shown in [39] to be considerably faster than other versions of FPC and FPC_AS software packages. The FPC_AS CG solves problem (5a). The code of FPC_AS CG package can be found at http://www.caam.rice.edu/~optimization/L1/FPC_AS/. We use the SPGL1_bp version of SPGL1 software package for noiseless signals and the SPGL1_bpdn version for noisy signals, where “bp” stands for basis pursuit and “bpdn” for basis pursuit denoising, respectively. The SPGL1_bp solves problem (3) and the “bpdn” version solves problem (5c). The code of SPGL1 package can be found at http://www.cs.ubc.ca/labs/scl/spgl1. Those versions of the FPC_AS and SPGL1 software packages were found to be faster and more accurate than other first-order methods mentioned in Sect. 5.2. Therefore, GPSR and NestA solvers are excluded from the comparison. The \(\ell _1\_\ell _s\) solver implements problem (5a), it can be found at http://www.stanford.edu/~boyd/l1_ls/. The PDCO solver is used through the file SolveFasBP.m of SparseLab software package. The PDCO solver can be found at http://www.stanford.edu/group/SOL/software/pdco.html and the SparseLab software package at http://sparselab.stanford.edu/. The PDCO solver implements problems (3) and (5a).

In addition, three more experiments are performed. The first one tests the robustness of solvers matrix-free IPM, SPGL1_bpdn, FPC_AS CG and \(\ell _1\_\ell _s\), on problems of Sparco test suite, given a fixed level of noise. The second, replaces the core of matrix-free IPM, which is the preconditioned CG with a direct solver and shows how the CPU time required for reconstruction scales for each case. The third, demonstrates that the empirical phase transition properties of matrix-free IPM fit the theoretical average phase transition properties shown in [18].

All solvers used in this section, including the matrix-free IPM are MATLAB implementations. All experiments were performed using MATLAB version R\(2012\)b (\(8.0.0.783\)) \(64\)-bit on a Dual \(8\) Core Intel Xeon (Sandybridge) running Redhat Enterprise Linux in \(64\)-bit mode. Finally, the RICE Wavelet toolbox, included in Sparco test suite, was compiled using gcc compiler version \(4.4.6 20120305\) (Red Hat 4.4.6-4). The matrix-free IPM, the data files and the MATLAB scripts used to generate the results in this section can be downloaded from http://www.maths.ed.ac.uk/ERGO/mfipmcs/.

Before proceeding to the following subsections it would be convenient for the reader to be familiarized with symbols and abbreviations used in the subsequent figures and comparison tables explained in Table 3.

5.1 Single centrality corrector primal–dual matrix-free IPM

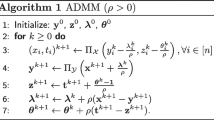

The implementation used in this paper is a single-corrector primal–dual IPM [23]. The original version proposed in [23] makes use of multiple centrality correctors, however, after computational experimentation it was observed that a single corrector was enough for the fast convergence of the IPM in few iterations. In a standard multiple-corrector variant at every iteration multiple centrality corrector directions are calculated, which are combined with a predictor direction in order to produce the final primal–dual direction [23]. To compute the corrector and predictor directions one needs to solve multiple linear systems (19) where only the right hand side varies. In case that a direct solver is used to solve the linear systems, the extra cost of solving several equations instead of one is negligible, because the dominating cost is the decomposition of the matrix \((\varTheta ^{-1} + \textit{FF}^{\mathsf {T}})\). However, this is not the case when iterative method (PCG) is used to solve systems (19). In particular, the cost of calculating every term in composite direction is approximately the same. In order to avoid the high cost of computing extra corrector directions at every iteration in our single-corrector matrix-free IPM we slightly bias the predictor direction to point to the central path and perform corrector directions only when necessary. Like a long-step variant of primal–dual IPM [40] this guarantees that at every iteration the objective function is decreased rapidly while the algorithm maintains the small distance to the central path. As proposed in [23], the criterion to decide whether a corrector direction is calculated is the value of the primal and dual step sizes. When many biased predictor directions are performed the primal–dual iterates tend to approach the boundary of the feasible region. This results in small step sizes of the subsequent iterations. When this happens a strong re-centering corrector is employed which pushes the next iteration to the vicinity of central path such that next step sizes are more likely to have large values. Ideally, the values of the step sizes of the primal and dual directions should be bounded away from zero while global convergence of the method is guaranteed. This would allow fast practical convergence of matrix-free IPM, which translates into few iterations. Indeed, one can observe from the computational experience reported in Sect. 5.7 that \(10\) to \(20\) iterations of the matrix-free IPM is enough for convergence. This behaviour has been observed also in all computational experiments discussed in Sect. 5.5. We make our software available to the research community so that the interested reader can reproduce any numerical experiments from this paper. The pseudo-code of the implemented single-corrector primal–dual matrix-free IPM follows.

The input parameters \(\sigma _1,\sigma _2\) are user-defined and control the centering bias of the predictor directions, while \(\sigma _3\) parameter controls the strong centering in the corrector directions. For all experiments they have been set to \(\sigma _1=0.1,\,\sigma _2=0.5\) and \(\sigma _3=0.8\). The input parameters \(\bar{\alpha }\) and \(\tilde{\alpha }\) are user-defined, \(\bar{\alpha }\) controls whether \(\sigma _1\) or \(\sigma _2\) will be used as a centering parameter for the predictor directions and \(\tilde{\alpha }\) controls the frequency of the corrector updates. For all experiments they have been set to \(\bar{\alpha }=0.5\) and \(\tilde{\alpha }=0.1\).

5.2 Benchmarks

In order to have a base of comparison we choose to show the efficiency of the matrix-free IPM on already existing benchmarks, which have been used by several researchers including [4, 39]. Experiments are performed on \(18\) real valued sparse reconstruction problems, see Table 4, from the Sparco collection [5]. In total, Sparco collection consists of \(26\) problems, out of which \(6\) are complex valued and \(20\) real valued. For the experiments in this section, the complex valued problems with IDs \(1,\,4,\,8,\,501\)–\(503\), are ignored since the matrix-free IPM manipulates only real data. Moreover, \(2\) out of the \(20\) real valued problems, with IDs \(703\) and \(901\), are also ignored because of their difficulty to be generated on any machine in a stand-alone approach, since, they require external packages such as CurveLab [8] and FFTW [22]. For problems in Table 4 with IDs \(401\)–\(403\), 601–603, \(701\) and \(702\), the optimal representation \(\hat{x}\) is not given by Sparco toolbox. Therefore, the SPGL1_bp solver is used to obtain \(\hat{x}\) with required high accuracy. In particular to obtain \(\hat{x}\), the parameters of SPGL1_bp are set to

where bpTol controls the tolerance for identifying a basis pursuit solution, optTol controls the optimality tolerance and decTol controls the frequency of Newton updates. Some of the components of the obtained solution from the SPGL1_bp might be nearly but not exactly zero, hence, as nonzero components are considered the ones in the set \(nnz(x) := \{k=1,2,\ldots ,n \ | \ \sum \nolimits _{i=1}^k|\bar{x}_i|\le 0.999\Vert x\Vert _1\}\), where \(\bar{x}\) is the vector \(x\) sorted in decreasing order of absolute values of its components. Then we set \(\hat{x}_i=x_i\) if \(i\in W\) otherwise \(\hat{x}_i=0\), where \(W := \{i=1,2,\ldots ,n \ | \ i\in nnz (x) \}\).

Noise is introduced to the noiseless measurements \(\hat{b}\) using the following command in MATLAB:

The function awgn is a MATLAB function from Communications Systems Toolbox which adds white Gaussian noise to signal \(\hat{b}\). The SNR is the signal to noise ratio, measured in dB. The ‘measured’ option specifies that the power of the signal is calculated first before the addition of the noise.

5.3 Equivalence of \(\text{ BP }_{\epsilon _2}\) and BPDN

It has already being stated in Sect. 1 that problems \(\text{ BP }_{\epsilon _2}\) in (5c) and BPDN in (5a) are equivalent given particular parameters \(\epsilon _2\) and \(\tau \). In this paper the tested solvers implement problem \(\text{ BP }_{\epsilon _2}\), i.e. SPGL1_bpdn, or problem BPDN, i.e. matrix-free IPM, FPC_AS CG, \(\ell _1\_\ell _s\) and PDCO. In order to perform a fair comparison among these solvers it has to be made certain that all codes solve equivalent problems. Otherwise, different optimal solutions will be obtained, therefore, a straightforward and clear comparison would be impossible. Unfortunately, exact values of \(\epsilon _2\) and \(\tau \) which make problems \(\text{ BP }_{\epsilon _2}\) and BPDN equivalent are not known a priori, except for the case of orthogonal matrix \(A\). However, given \(\epsilon _2\) an approximate \(\tau \) can be computed such that an approximate equivalence holds.

According to [4] given \(\epsilon _2\) the parameter \(\tau \) which makes problems \(\text{ BP }_{\epsilon _2}\) and BPDN equivalent, is the optimal Lagrange multiplier of the dual problem of \(\text{ BP }_{\epsilon _2}\). Since, SPGL1_bpdn outputs both the primal iterates and the optimal Lagrange multiplier of \(\text{ BP }_{\epsilon _2}\), it can be used to approximately find \(\tau \). Having such a parameter \(\tau \) the BPDN solvers, matrix-free IPM, FPC_AS CG \(\ell _1\_\ell _s\), PDCO and the \(\text{ BP }_{\epsilon _2}\) solver SPGL1_bpdn can be legitimately compared.

Moreover, in order to be able to compare the quality of the reconstructed representations for each solver when solving equivalent problems, the optimal sparsest representation for a particular level of noise needs to be known in advance. This is definitely not the case when noise is added manually by the user to a noiseless signal \(\hat{b}\) using (42). Due to manual corruption of signal \(\hat{b}\), the energy of the added noise \(\epsilon _2=\Vert e\Vert _2\) is known in advance. Hence, solving \(\text{ BP }_{\epsilon _2}\) will give the optimal sparsest representation for this particular level of noise, \(\epsilon _2\). This solution is obtained by first calling SPGL1_bpdn solver to solve \(\text{ BP }_{\epsilon _2}\) by setting \(\epsilon _2=\Vert e\Vert _2\) with required high accuracy, see (41). During this process the approximate \(\tau \) which makes problems \(\text{ BP }_{\epsilon _2}\) and BPDN equivalent is obtained from SPGL1_bpdn as has been described before. Hence, it is concluded that approximate \(\tau \) and optimal sparse representations can be calculated such that a fair comparison can be conducted.

Finally, for noiseless signals \(\hat{b}\), the problem is easier. Problems BP (3) and BPDN (5a) are almost equivalent for sufficiently small \(\tau \), i.e. 1.0e\(-\)10. However, such a small \(\tau \) can make the \(\ell _1\)-norm in BPDN numerically negligible, see Figure 6.2 in [4] for numerical examples. For the former reason, if such a case is observed for BPDN solvers, parameter \(\tau \) is set experimentally to a larger value, their values are given in Table 6.

5.4 Termination criteria and parameter tuning

Termination of the compared solvers is forced when a solution of similar quality to the one of matrix-free IPM is obtained. In order to do so, the termination criteria of the compared solvers are changed. In particular, SPGL1 solver is terminated when the following criteria are satisfied

where \(x_W^k\) is the projected representation at the \(k\)th iteration of SPGL1 and \(x_W^m\) is the projected representation obtained by matrix-free IPM. Solvers FPC_AS, \(\ell _1\_\ell _s\) and PDCO are terminated when the following conditions are satisfied

Using these criteria for the compared solvers it is made certain that the reconstructed representations have approximately the same \(\ell _1\)-norm, \(\ell _2\)-norm of residual \(Ax_W-b\) and number of non zero elements in \(x_W\). The differentiation of the termination criteria for solver SPGL1 is done because SPGL1 solves problem \(\text{ BP }_{\epsilon _2}\), unlike all other codes which solve the BPDN problem. Hence, it is more natural and fair for SPGL1 to be compared with other solvers using termination criteria in SPGL1 way.

Occasionally, certain solvers required too many matrix–vector products without achieving a solution of similar quality to the one delivered by the matrix-free IPM. In this case the solvers were terminated when \({ nMat} > 40{,}000\).

Regarding the parameter tuning of the compared solvers, all their parameters are set to their default values. For the matrix-free IPM the following parameters need to be set.

-

tol: Relative duality gap of primal–dual pair (8) and (16). For noisy problems, this parameter varies between 1.0e\(-\)6 and 1.0e\(-\)10. For noiseless problems it varies between 1.0e\(-\)7 and 1.0e\(-\)14.

-

maxiters: Maximum number of iterations. For all problems this parameter is set to \(100\).

-

tolpcg: Tolerance of preconditioned CG method. For noisy problems this parameter varies between 1.0e\(-\)1 and 1.0e\(-\)2 and for noiseless ones it varies between 1.0e\(-\)1 and 1.0e\(-\)6.

-

mxiterpcg: Maximum number of iterations of preconditioned CG method. For all problems this parameter is set to \(200\).

Since a large number of experiments has been performed, the exact parameter tuning of matrix-free IPM is not given here. However, it can be found in the MATLAB scripts which reproduce the results in this section, see http://www.maths.ed.ac.uk/ERGO/mfipmcs/.

Finally, the parameters \(\epsilon _2\) and \(\tau \) in problems (5c) and (5a), respectively, for noisy problems are set as described in Sect. 5.3 for \(\epsilon _2=\Vert e\Vert _2\). For noiseless problems \(\tau \) is set to arbitrarily small values given in Table 6.

5.5 Comparison

In this section we present computational results obtained for the Sparco collection problems discussed in the Benchmarks section. Both noisy and noiseless measurements are considered. Noise is added to measurements using (42) by fixing the \(\text{ SNR }=60\) dB. A comparison among the previously mentioned solvers is made in terms of the quality of reconstruction and computational effort. The results of experiments are shown in Table 5. The first column in Table 5 shows the IDs of the Sparco problems. For each ID the first and second sub-rows give results for noisy and noiseless measurements, respectively. The second column reports the \(\ell _1\)-norm of the projected reconstructed representation for matrix-free IPM. The third column shows the relative error \(r.e\), see Table 3, of the projected reconstructed representation that was achieved by matrix-free IPM. The forth column shows the \(\ell _2\)-norm of the residual, denoted by \(res\) in Table 3, for matrix-free IPM. The rest of the table shows the number of matrix–vector products, nMat, that were needed by each solver to reconstruct a solution of similar quality to the one of matrix-free IPM. In cases when number of matrix–vector products required by a solver exceeded \(40{,}000\), the solver was terminated with a failure status. To be precise, it is a failure to converge to a solution similar to the one obtained by matrix-free IPM. Problems for which the matrix-free IPM converged with the lowest number of matrix–vector products among all solvers compared are denoted in bold. In Table 6 are shown the regularization parameters \(\tau \) for noiseless signals that were used for BPDN for solvers matrix-free IPM, FPC_AS, \(\ell _1\_\ell _s\) and PDCO. Finally, for noiseless signals the version SPGL1_bp of SPGL1 solver is called.

One can observe in Table 5 that the matrix-free IPM was the fastest solver in 11 out of 36 noisy and noiseless problems, while it was the second fastest for another 14 problems, denoted by italic font. It is important to be mentioned that the performance of the compared solvers crucially depends on the condition number of matrices build of subsets of columns of matrix \(A\) with cardinality \(\kappa \), less than \(m\), i.e. full-rank sub-matrices of \(A\). Unfortunately, it is a computationally demanding task to check the condition number of every full-rank sub-matrix for the problems shown in Table 4. Nevertheless, by experimenting with a few sub-matrices one can get a picture of how well-conditioned sub-matrices of \(A\) might be.

Based on the previous criterion we observed that on problems that the matrix-free IPM was first or second, matrix \(A\) had relatively ill-conditioned sub-matrices, at least for the ones that we experimented with. The previous implies that the proposed preconditioner was not as efficient as predicted in Sect. 4. However, the ill-conditioning also adversely affected the performance of SPGL1 and FPC_AS, as shown in Table 5. On the contrary, on problems that matrix \(A\) seemed to have well-conditioned sub-matrices, the preconditioner was very efficient, which resulted in a very fast matrix-free IPM. However, SPGL1 and FPC_AS were faster. For example, see problems with IDs \(2,\,3,\,7\) and \(902\).

5.6 Robustness to noise

In this subsection we compare the matrix-free IPM with SPGL1, FPC_AS CG, \(\ell _1\_\ell _s\), in terms of their reconstruction capabilities for different levels of noise. The results collected in Table 5 and analysed in Sect. 5.5 reveal that PDCO and \(\ell _1\_\ell _s\) demonstrate comparable efficiency but the latter is usually faster. Therefore, solver PDCO will not be used in our further experiment.

For this experiment, the level of noise is varied from \(\text{ SNR }=10\) dB to \(\text{ SNR }=120\) dB with a step of \(10\) dB. The quality of reconstruction for all solvers is measured using the amplitude criterion [38]

The main purpose of using the \(amp\) criterion, instead of \(r.e\), is that the former amplifies the \(r.e\), the nominator of \(amp\), as \(\Vert e\Vert _2\rightarrow 0\). Hence, less accurate representations will be emphasized.

As in Sect. 5.5 when the optimal representation \(\hat{x}\) of BP is unknown it is calculated approximately using solver SPGL1_bp with required high accuracy (41). In order to have a fair comparison it is necessary to know at least approximately the parameter \(\tau \) which makes problems \(\text{ BP }_{\epsilon _2}\) and BPDN equivalent and moreover, the optimal sparse representation of \(\text{ BP }_{\epsilon _2}\) for \(\epsilon _2=\Vert e\Vert _2\). The former issues are solved as described in Sect. 5.3.

To compare the solvers the following criterion is defined

where \(rampd\) stands for relative amplitude difference, \(x_W^*\) is the reconstructed projected representation by solvers matrix-free IPM, FPC_AS CG, \(\ell _1\_\ell _s\), and \(x_W^s\) is the reconstructed projected representation of solver SPGL1_bpdn. Notice that if \(rampd\) equals zero, then the representation \(x_W^*\) is of better quality than \(x_W^s\), otherwise the inverse is true.

In Table 7 is shown the average value of \(rampd\) over all SNR for each solver. The first column of Table 7 reports the ID of every Sparco problem. From the second to the forth column the average \(rampd\) over all SNR for each solver is shown. The last three columns report the average \(rampd\) for SNRs from \(10\) to \(60\) dB for each solver. Notice in Table 7 that matrix-free IPM for problems with IDs \(2\)–\(11\) and \(701\)–\(903\) was consistently recovering a high quality solution. For problems with IDs \(401\)–\(603\) for \(\text{ SNR }>60\) dB all BPDN solvers, matrix-free IPM, FPC_AS CG and \(\ell _1\_\ell _s\), were unable to reconstruct an adequate representation and this is in contrast to SPGL1. A similar observation has been reported in [4]. In this work the authors mentioned that this issue of BPDN solvers might be due to very small regularisation parameter \(\tau \), obtained from SPGL1 solver as the energy of noise is decreased. In this case, the regularization effect of the \(\ell _1\)-norm starts to be negligible and the solvers face considerable numerical difficulties. However, in our experiments we observed for these problems that not always the \(\tau \) parameter was small and additionally, there were other problems were \(\tau \) was even smaller but successful reconstruction was possible. Therefore, we conclude that this failure of BPDN solvers might be problem dependent.

5.7 Preconditioned conjugate gradient method against direct linear solver

In this subsection we replace PCG in steps \(8\) and \(10\) of matrix-free IPM with a direct linear solver. It has been mentioned in Sect. 1 that direct linear solvers are efficient when the system to be solved is sufficiently sparse. However, for CS the systems (19) to be solved are completely dense due to density of matrix \(A\). For this reason, large scale problems are not storable in a moderate computer with \(8\) GB of random access memory. Even worse, matrix \(A\) might be an algorithmic operator, i.e. DCT, therefore, direct solvers cannot be employed. Hence, direct linear solvers for CS inside an IPM are only applicable when the measurement matrix \(A\) is explicitly available, i.e. Gaussian matrix, and only for small scale problems, i.e. \(n=2^{12}\) or smaller. In addition to the former disadvantages of a direct solver for CS problems, its computational complexity for systems (19) will be of order \({\mathcal {O}}(n^3)\). This is a well known result, for completely dense linear systems. Therefore, it is expected that for very small instances the two approaches might require similar CPU time to converge, while as dimensions grow the CPU time of the IPM version with the direct linear solver will increase rapidly. Indeed, this is confirmed by Fig. 3a.

Despite the higher computational effort required by direct solvers for CS problems, such an approach will produce exact Newton directions, hence, one would expect that IPM iterations will be the minimum possible. Surprisingly, in Fig. 3b we show, that matrix-free IPM with PCG requires as few iterations as its IPM version with a direct linear solver. Indeed, recent analysis of [26] indicates that allowing the use of inexact Newton directions in an IPM does not adversely affect the worst-case complexity result of this method.

In the experiments reported in Fig. 3a, b matrix \(A\) is Gaussian, the sparsity pattern of the optimal representation \(\hat{x}\) is chosen at random, while the nonzero components follow a standard normal distribution. The noiseless measurements are produced by \(\hat{b}=A\hat{x}\). The size of problem \(n\), is varied from \(2^5\) to \(2^{12}\) with a step of times \(2\), the measurements \(m\) are varied from \(2^3\) to \(2^{10}\) with a step of times \(2\) and the sparsity level \(k\) is set to \(\lceil m/{20}\rceil \). Finally, the \(\tau \) parameter in BPDN problem (5a) is set to \(\tau =\)1.0e\(-\)3. To solve the linear systems we use the mldivide function of MATLAB, which in case of symmetric real matrices with positive diagonal, i.e. (19), performs Cholesky factorisation. For details of the mldivide function we refer the reader to http://www.mathworks.co.uk/help/matlab/math/systems-of-linear-equations.html.

5.8 Average phase transition

Recently, it has been shown in [18] that for any problem instance (\(A,b\)), where \(A\) is Gaussian, there is a maximum ratio \(\bar{\nu }_{\rho }=k/m\) given \(\rho =m/n\) that below of it the problems (3) or (5a) guarantee on average reconstruction of the optimal sparse representation. The previous has been introduced as the notion of average phase transition for Gaussian matrices. Moreover, it has been shown empirically that other measurement matrices such as partial Fourier, partial Hadamard, Bernoulli etc, have the same average phase transition properties. Ideally, an efficient \(\ell _1\)-regularization solver should have empirical average phase transition at the same level \(\bar{\nu }_{\rho }\).

In this section we show that the empirical phase transition properties of matrix-free IPM fit the average Gaussian phase transition properties by reproducing a similar experiment as that in Section \(2\) of [18]. Let us now explain the experiment. The parameter \(n\) is fixed to \(n=1{,}000\). The measurements \(m\) are varied from \(m=100\) to \(m=900\) with a step of \(100\). For each of the nine measurements \(m\) the sparsity of the optimal representation is varied from \(k=1\) to \(k=m\) with a step of one and for each \(k,\,100\) trials are conducted. The sensing matrix \(A\) is chosen by taking randomly \(m\) rows from an \(n\times n\) normalized discrete cosine transform matrix. Each nonzero coefficient of the sparse representation is set to \(\pm 1\) with equal probability, while the sparsity pattern is chosen at random. All the generated problems are solved using the matrix-free IPM solver, the reconstruction is considered successful when \(r.e\le \) 1.0e\(-\)5. For each ratio \(\nu _{\rho }\) we compute the success ratio \(p(\nu _{\rho })=S/100\), where \(S\) is the number of trials for which the \(r.e\le \) 1.0e\(-\)5. It has been demonstrated empirically in [18] that for any problem instance (\(A,b\)), where \(A\) is a partial DCT matrix a solver with average phase transition properties has \(\displaystyle \max \{\nu _{\rho } \ | \ p(\nu _{\rho })\ge 0.5 \}\approx \bar{\nu }_{\rho }\). The previous means that the empirical average phase transition for \(50~\%\) success rate overlaps with the theoretical average phase transition for Gaussian matrices. In Fig. 4, we plot the empirical phase transition for \(50~\%\) success rate of matrix-free IPM and the theoretical average phase transition. The two curves overlap.

6 Conclusions

We propose and implement a computationally inexpensive matrix-free primal–dual interior point method, based on [25] and [23], for the \(\ell _1\)- regularized problems arising in the field of Compressed Sensing. At every iteration of the proposed primal–dual interior point method the direction is obtained by solving the linear system (19a) using the conjugate gradient method. Unfortunately, the matrices \(\varTheta ^{-1} + \textit{FF}^{\mathsf {T}}\) in these systems tend to be ill-conditioned as the algorithm converges, hence, the conjugate gradient method might get slow. To remedy this ill-conditioning we propose a low-cost preconditioner for the conjugate gradient method. The proposed preconditioning technique exploits features of Compressed Sensing matrices as well as interior point methods. Its efficiency is justified theoretically and confirmed in numerical experiments.

Computational experience presented in this paper shows that although the Compressed Sensing research community seems to favor first-order methods, a specialized (matrix-free) interior point method is very competitive and offers a viable alternative.

References

Ahmed, N., Rao, K.R.: Orthogonal transforms for digital signal processing. Springer, Berlin (1975)

Baraniuk, R., Davenport, M., DeVore, R., Wakin, M.: A simple proof of the restricted isometry property for random matrices. Constr. Approx. 28(3), 253–263 (2008)

Becker, S., Bobin, J., Candés, E.J.: Nesta: a fast and accurate first-order method for sparse recovery. SIAM J. Imaging Sci. 4(1), 1–39 (2011)

Van Den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31(2), 890–912 (2008)

Van Den Berg, E., Friedlander, M.P., Hennenfent, G., Herrman, F.J., Saab, R., Yılmaz, Ö.: Sparco: a testing framework for sparse reconstruction. ACM Trans. Math. Softw. 35(4), 1–16 (2009)

Blanchard, J.D., Cartis, C., Tanner, J.: Decay properties of restricted isometry constants. Comput. Optim. Appl. 16(7), 572–575 (2009)

Blanchard, J.D., Cartis, C., Tanner, J.: Compressed sensing: how sharp is the restricted isometry property? SIAM Rev. 53(1), 105–125 (2011)

Candés, E., Demanet, L., Donoho, D., Ying, L.: Curvelab. http://www.curvelet.org/software.html

Candés, E.J.: Compressive sampling. In: Proceedings of the International Congress of Mathematicians (2006)

Candés, E.J., Romberg, J.: Practical signal recovery from random projections. In: Proceedings of the SPIE Conference on Wavelet Applications in Signal and Image Processing XI, 5914 (2004)

Candés, E.J., Romberg, J.: \(\ell _1\)-magic. Technical Report, Caltech. http://users.ece.gatech.edu/justin/l1magic/ (2007)

Candés, E.J., Romberg, J.: Sparsity and incoherence in compressive sampling. Inverse Probl. 23(3), 969–985 (2007)

Candés, E.J., Romberg, J., Tao, T.: Stable signal recovery from incomplete and inaccurate measurements. Comm. Pure Appl. Math. 59(8), 1207–1223 (2006)

Chen, S.S., Donoho, D.L., Saunders, M.A.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20(1), 33–61 (1998)

Coifman, R., Geshwind, F., Meyer, Y.: Noiselets. Appl. Comput. Harmon. Anal. 10(1), 27–44 (2001)

Donoho, D.L., Huo, X.: Uncertainty principles and ideal atomic decomposition. IEEE Trans. Inf. Theory 47(7), 2845–2862 (2001)

Donoho, D.L., Johnstone, I.M.: Ideal spatial adaptation by wavelet shrinkage. Biometrika 81(3), 425–455 (1994)

Donoho, D.L., Tanner, J.: Precise undersampling theorems. Proc. IEEE 98(6), 913–924 (2010)

Dossal, C., Mallat, S.: Sparse spike deconvolution with minimum scale. Proc. Signal Process. Adapt. Sparse Struct. Represent. 81(3), 123–126 (1994)

Figueiredo, M.A.T., Nowak, R.D., Wright, S.J.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1(4), 586–597 (2007)

Foucart, S.: A note on guaranteed sparse recovery via 1-minimization. Appl. Comput. Harmon. Anal. 29(1), 97–103 (2010)

Frigo, M., Johnston, S.G.: The design and implementation of FFTW3. Proc. IEEE 93(2), 216–231 (2005)

Gondzio, J.: Multiple centrality corrections in a primal–dual method for linear programming. Comput. Optim. Appl. 6, 137–156 (1996)

Gondzio, J.: Interior point methods 25 years later. Eur. J. Oper. Res. 218(3), 587–601 (2012)

Gondzio, J.: Matrix-free interior point method. Comput. Optim. Appl. 51(2), 457–480 (2012)

Gondzio, J.: Convergence analysis of an inexact feasible interior point method for convex quadratic programming. SIAM J. Optim. 23(3), 1510–1527 (2013)

Hennenfent, G., Herrmann, F.J.: Random sampling: new insights into the reconstruction of coarsely-sampled wavefields. In: SEG International Exposition and 77th Annual Meeting (2007)

Kelley, C.T.: Iterative methods for linear and nonlinear equations, volume 16 of Frontiers in Applied Mathematics. SIAM, Philadelphia (1995)

Kim, S.-J., Koh, K., Lustig, M., Boyd, S., Gorinevsky, D.: An interior-point method for large-scale \(\ell _1\)-regularized least squares. IEEE J. Sel. Top. Signal Process. 1(4), 606–617 (2007)

Kojima, M., Megiddo, N., Mizuno, S.: A primal-dual infeasible-interior-point algorithm for linear programming. Math. Progr. 61, 263–280 (1993)

Lu, Z., Monteiro, R.D.S., O’Neal, J.W.: An iterative solver-based infeasible primal–dual path-following algorithm for convex quadratic programming. SIAM J. Optim. 17, 287–310 (2006)

Miller, A.J.: Subset selection in regression. Chapmain & Hall/CRC, London (2002)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Rockafellar, R.T.: Convex analysis, Princeton landmarks in mathematics and physics. Princeton University Press, USA (1970)

Rudelson, M., Vershynin, R.: On sparse reconstruction from Fourier and Gaussian measurements. Commun. Pure Appl. Math. 61(8), 1025–1045 (2008)

Saunders, M., Kim, B.: PDCO: Primal-dual interior method for convex objectives. Technical Report, Stanford University. http://www.stanford.edu/group/SOL/software/pdco.html (2002)

Takhar, D., Laska, J.N., Wakin, M., Duarte, M., Baron, D., Sarvotham, S., Kelly, K. K., Baraniuk, R.G.: A new camera architecture based on optical-domain compression. In: Proceedings of the IS &T/SPIE Symposium on Electronic Imaging: Computational, Imaging, 6065 (2006)

Thomson, A.: Compressive single-pixel imaging. In: Proceedings of the 2nd IMA Conference on Mathematics in Defence, Defence Academy, Shrivenham, UK (2011)

Wen, Z., Yin, W., Goldfarb, D., Zhang, Y.: A fast algorithm for sparse reconstruction based on shrinkage, subspace optimization and continuation. SIAM J. Sci. Comput. 32(4), 1809–1831 (2010)

Wright, S.J.: Primal–dual interior-point methods. SIAM, Philadelphia (1997)

Author information

Authors and Affiliations

Corresponding author

Additional information

J. Gondzio is supported by EPSRC Grant EP/I017127/1.

Rights and permissions

About this article

Cite this article

Fountoulakis, K., Gondzio, J. & Zhlobich, P. Matrix-free interior point method for compressed sensing problems. Math. Prog. Comp. 6, 1–31 (2014). https://doi.org/10.1007/s12532-013-0063-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12532-013-0063-6

Keywords

- Matrix-free interior point

- Preconditioned conjugate gradient

- Compressed sensing

- Compressive sampling

- \(\ell _1\)-regularization