Abstract

We present an exact rational solver for mixed-integer linear programming that avoids the numerical inaccuracies inherent in the floating-point computations used by existing software. This allows the solver to be used for establishing theoretical results and in applications where correct solutions are critical due to legal and financial consequences. Our solver is a hybrid symbolic/numeric implementation of LP-based branch-and-bound, using numerically-safe methods for all binding computations in the search tree. Computing provably accurate solutions by dynamically choosing the fastest of several safe dual bounding methods depending on the structure of the instance, our exact solver is only moderately slower than an inexact floating-point branch-and-bound solver. The software is incorporated into the SCIP optimization framework, using the exact LP solver QSopt_ex and the GMP arithmetic library. Computational results are presented for a suite of test instances taken from the Miplib and Mittelmann libraries and for a new collection of numerically difficult instances.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Mixed-integer programming (MIP) is a powerful and flexible tool for modeling and solving decision problems. Software based on these ideas is utilized in many application areas. Despite their widespread use, few available software packages provide any guarantee of correct answers or certification of results. Possible inaccuracy is caused by the use of floating-point (FP) numbers [25]. FP-calculations necessitate the use of built-in tolerances for testing feasibility and optimality, and can lead to calculation errors in the solution of linear-programming (LP) relaxations, in the methods used for creating cutting planes to improve these relaxations and in pre-solving routines applied to strengthen models.

Due to a number of reasons, for many industrial MIP applications near optimal solutions are sufficient. Cplex [26], for example, terminates if the relative gap between upper and lower bound is less then 0.001 (relative MIP optimality tolerance). Moreover, when data describing a problem arises from imprecise sources, exact feasibility is usually not necessary. Nonetheless, accuracy is important in many settings. Direct examples arise in the use of MIP models to establish fundamental theoretical results and in subroutines for the construction of provably accurate cutting planes. Furthermore, industrial customers of MIP software request modules for exact solutions in critical applications. Such settings include the following.

-

Chip design verification in the VLSI design process [2].

-

Compiler optimization, including instruction scheduling [45].

-

Combinatorial auctions [19], where serious legal and financial consequences can result from incorrect solutions.

Chip design verification and compiler optimization are applications where demonstrating that a particular MIP instance has no feasible solutions is equivalent to verifying the correctness of a proposed point. For pure feasibility problems such as these, accurate answers are extremely important.

The article describing the latest version of the mixed-integer programming library, Miplib 2010, discusses the limitations of finite-precision arithmetic in the context of mixed-integer programming [29]. Problem instances were collected from a wide range of applications and a number of the instances were classified as numerically unstable. We now report some computational behavior observed on these instances after they were passed to different solvers using a variety of parameter settings. When called to solve the instance transportmoment, under default parameter settings, SCIP 2.1 [2, 3, 46] (using the SoPlex 1.6 [47] LP solver) reports to have found an optimal solution, while Cplex 12.3 claims that the instance is infeasible or unbounded. However, if presolving and cutting planes are disabled, SCIP claims the problem to be unbounded, (but warns of an error in the proof of unboundedness), and Cplex reports finite primal and dual bounds. Another example from Miplib 2010 is the instance ns2122603 which at the printing of the paper [29] was incorrectly thought to be infeasible, the answer returned by Cplex 12.2 (and 12.3); after disabling presolving in Cplex, a feasible solution can quickly be identified.

Other examples of numerically difficult MIPs occur in the chip design verification instances collected by Tobias Achterberg [2]. There are a total of 98 instances, which are publicly available for download [1]. These instances model property checking on simple arithmetic logical units (ALU). Proving infeasibility of an alu instance certifies the correctness of the unit, whereas a feasible solution gives a counter example to the correctness of the design. Although the instances are pure IPs defined by integral data, incorrect conclusions are reached on some of them. For example, the instance alu10_7, when calling SCIP 2.1 or Cplex 12.3 with default settings or with cutting planes and presolving disabled, we get the three different solution values 83, 84, 91. However, none of these values are correct as, by construction, the instance is known to be infeasible. The solutions returned by the solvers only violate the constraints by a small amount and satisfy the relative tolerance thresholds used to measure feasibility, so they are accepted as valid solutions and returned to the user. Further numerically difficult instances are presented in Sect. 6.

Software libraries such as the GNU Multiple Precision Arithmetic Library (GMP) [24] offer routines for infinite-precision rational arithmetic; in contrast to the commonly used finite-precision arithmetic systems, GMP dynamically allocates as much memory as is necessary to exactly represent numbers and is limited only by the available system memory. We use the terms symbolic or exact when referring to this type of exact computation over the rational numbers; we use the terms numeric or approximate when referring to the use of inexact finite-precision and floating-point computation. One straightforward strategy to solve MIPs exactly would be to implement the standard solution procedures entirely in exact arithmetic. Unfortunately, it has been observed that optimization software relying exclusively on exact arithmetic can be prohibitively slow [8]. This motivates the development of more sophisticated algorithms to compute exact solutions. Significant progress has been made recently toward computationally solving LP models exactly over the rational numbers using hybrid symbolic/numeric methods [8, 20, 22, 27, 30], including the release of the software QSopt_ex [7]. Exact MIP has seen less computational progress, but significant first steps have been taken. An article by Neumaier and Shcherbina [35] describes methods for safe MIP computation, including strategies for generating safe LP bounds, infeasibility certificates, and cutting planes. Their methods include directed rounding and interval arithmetic with FP-numbers to avoid incorrect results.

This article introduces a hybrid branch-and-bound approach for solving MIPs exactly over the rational numbers. It can be extended to a branch-and-cut algorithm with primal heuristics and presolving; but the focus of this article is on the development of the basic branch-and-bound approach. Section 2 describes how exact rational and safe-FP computation can be coupled together, providing a fast and general framework for exact computation. Section 3 discusses several methods for computing valid LP bounds, a critical component of the hybrid approach. It describes an exact branch-and-bound implementation within SCIP and includes detailed computational results on a range of test libraries comparing different dual bounding strategies. In Sect. 4, the implementation is further improved by incorporating sophisticated branching rules. The resulting exact solver is compared against a floating-point solver restricted to pure branch-and-bound and observed to be only moderately slower. In Sect. 5, it is used to test the accuracy of current floating-point MIP solvers and in Sect. 6 it is applied to a test set of numerically difficult instances. As our focus has been exclusively on the branch-and-bound procedure, the exact solver is still not directly competitive with the full version of SCIP. However, it is realistic to think that the future inclusion of additional MIP machinery such as cutting planes, presolving, and primal heuristics into this exact framework could lead to a full featured exact MIP solver that is not prohibitively slower than its inexact counterparts.

2 Hybrid rational/safe floating-point approach

Two ideas for exact MIP proposed in the literature, and tested to some extent, are the pure rational approach [8] and the safe-FP approach [17, 35]. Both utilize LP-based branch-and-bound. The difference lies in how they ensure the computed results are correct.

In the pure rational approach, correctness is achieved by storing the input data as rational numbers, by performing all arithmetic operations over the rational numbers, and by applying an exact LP solver [22] in the dual bounding step. This approach is especially interesting because it can handle a broad class of problems: MIP instances described by rational data. However, replacing all FP-operations by rational computation increases running times significantly. For example, while the exact LP solver QSopt_ex avoids many unnecessary rational computations and is efficient on average, Applegate et al. [8] observed a greater slowdown when testing an exact MIP solver that relied on rational arithmetic and called QSopt_ex for each node LP computation (see also Sect. 3.1).

In order to limit the degradation in running time, the idea of the safe-FP approach is to continue to use FP-numbers as much as possible, particularly within the LP solver. However, extra work is necessary to ensure correct decisions in the branch-and-bound algorithm. Correctness of certain computations can be ensured by controlling the rounding mode for FP-operations. Valid dual bounds can often be obtained by post-processing approximate LP solutions; this type of safe dual bounding technique has been successfully implemented in Concorde [6] for the traveling salesman problem. A generalization of the method for MIPs is described in [35]. Furthermore, the idea of manipulating the rounding mode can be applied to cutting-plane separation. In [17], this idea was used to generate numerically safe Gomory mixed-integer cuts. Nevertheless, whether the safe-FP approach leads to acceptable running times for general MIPs has not been investigated. Although the safe-FP version of branch-and-bound has great advantages in speed over the pure rational approach, it has several disadvantages. Everything, including input data and primal solutions, is stored as FP-numbers. Therefore, correct results can only be ensured for MIP instances that are given by FP-representable data and that have a FP-representable optimal solution if they are feasible. Some rationally defined problems can be scaled to have FP-representable data. However, this is not always possible due to the limited representation of floating-point numbers, and the resulting large coefficients can lead to numerical difficulties. The applicability is even further limited as the safe dual bounding method discussed in [35] requires, in general, lower and upper bounds on all variables. Weakness in the safely generated bound values may also increase the number of nodes processed by the branch-and-bound solver. Additionally, due to numerical difficulties, some branch-and-bound nodes may only be processable by an exact LP solver.

To summarize, the pure rational approach is always applicable but introduces a large overhead in running time while the safe-FP approach is more efficient but of limited applicability.

Since we want to solve MIPs that are given by rational data efficiently and exactly we have developed a version of branch-and-bound that attempts to combine the advantages of the pure rational and safe-FP approaches, and to compensate for their individual weaknesses. The idea is to work with two branch-and-bound procedures. The main procedure implements the rational approach. Its result is surely correct and will be issued to the user. The other one serves as a slave procedure , where the faster safe-FP approach is applied. To achieve reasonable running time, whenever possible the expensive rational computation of the main procedure will be skipped and certain decisions from the faster safe-FP procedure will be substituted. In particular, safe dual bound computations in the slave procedure can often replace exact LP solves in the main procedure. The rational procedure provides the exact problem data, allows for the storage of exact primal solutions, and makes exact LP solves possible whenever needed.

The complete procedure is given in Algorithm 1. The set of FP-representable numbers is denoted by \(\mathbb{M }\); lower and upper approximations of \(x\in \mathbb{Q }\) are denoted \(\underline{x} \in \mathbb{M }\) and \(\overline{x} \in \mathbb{M }\), respectively. We now explain the details of the algorithm.

The slave procedure, which utilizes the safe-FP approach, works on a MIP instance with FP-representable data. It is set up in Step 1 of the algorithm. If the input data are already FP-representable, both procedures solve the same MIP instance, i.e., \(\widetilde{P} := P \) and \(\widetilde{c} := c \) in Step 1. Otherwise, an approximation of the MIP with \(P \approx \widetilde{P} \), \(c \approx \widetilde{c} \) or a relaxation with \(P \subseteq \widetilde{P} \), \(c = \widetilde{c} \) is constructed; called FP-approximation and FP-relaxation , respectively. The choice depends on the dual bounding method applied in the slave procedure (see Sect. 3).

On the implementation side, we maintain only a single branch-and-bound tree. At the root node of this common tree, we store the LP relaxations of both procedures: \(\max \{c ^{T}x: x \in LP \}\) with \(LP :=\{x \in \mathbb{R }^{n}:A x \le b \}\) and \(\max \{\widetilde{c} ^{T}x: x \in \widetilde{LP } \}\) with \(\widetilde{LP }:=\{x \in \mathbb{R }^{n}: \widetilde{A} x \le \widetilde{b} \}\). In addition, for each node, we know the branching constraint that was added to create the subproblem in both procedures. Branching on variables, performed in Step 8, introduces the same bounds for both procedures.

The use of primal and dual bounds to discard subproblems (see Steps 5, 6, and 7) is a central component of the branch-and-bound algorithm. In particular, in the exact MIP setting, the efficiency highly depends on the strength of the dual bounds and the time spent generating them (Step 5). The starting point of this step is an approximate solution of the LP relaxation of the MIP. It is obtained in the slave procedure by an LP solver that works on FP-numbers and allows rounding errors; referred to as inexact LP solver. Depending on the result, we check whether the exact LP relaxation is also infeasible or we compute a safe dual bound by post-processing the approximate LP solution. Different techniques are discussed in Sect. 3 and are computationally evaluated in Sect. 3.6.

Dual and primal bounds are stored as FP-numbers and the bounding in Step 6 is performed without tolerances; a computed bound that is not FP-representable is relaxed in order to be safe. For the primal (lower) bound \(L\), this means \(L < c ^{\text{ MIP }} \) if the objective value \(c ^{\text{ MIP }}\) of the incumbent solution \(x ^{\text{ MIP }}\) is not in \(\mathbb{M }\).

Algorithm 1 identifies primal solutions by checking LP solutions for integrality. This check, performed in Step 7, depends on whether the LP was already solved exactly at the current node. If so, we test, without tolerances, the integrality of the rational LP solution. Otherwise, we decide if it is worth solving the LP exactly. We deem it worthy if the approximate LP solution is nearly integral. In this case, we solve the LP exactly, using the corresponding basis to warm start the LP solver (hopefully with few pivots and no need to increase the precision) and perform the exact integrality test on the rational LP solution. In order to correctly report the optimal solution found at the end of Step 3, the incumbent solution (that is, the best feasible MIP solution found thus far) and its objective value are stored as rational numbers.

3 Safe dual bound generation

This section describes several methods for computing valid LP dual bounds in Step 5 of Algorithm 1. The overall speed of the MIP solver will be influenced by several aspects of the dual bounding strategy; how generally applicable the method is, how quickly the bounds can be computed and how strong the bounds are.

3.1 Exact LP solutions

The most straightforward way to compute valid LP bounds is to solve each node LP relaxation exactly. This strategy is always applicable and produces the tightest possible bound. The fastest exact rational LP solver currently available is QSopt_ex [7]. The strategy used by this solver can be summarized as follows: the basis returned by a double-precision LP solver is tested for optimality/feasibility by symbolically computing the corresponding basic solution, if it is suboptimal or infeasible then additional simplex pivots are performed with an increased level of precision and this process is repeated until the optimal basis is identified. When possible, the extended precision pivots are warm started with the previously identified LP basis. This method is considerably faster than using rational arithmetic exclusively; it was observed to be only two to five times slower than inexact LP solvers on average over a large test set [8].

In most cases, the double-precision LP run already produced an optimal basis, so the overhead mainly came from computing and verifying the exact rational basic solution. For some instances, this dominates the overall solution time. The costs associated with solving each basis systems exactly may be especially noticeable in the MIP setting. Within a branch-and-bound framework the dual simplex algorithm can be warm started with the final basis computed at the parent node, usually resulting in a small number of dual simplex pivots.

If the basis determined by the double-precision subroutines of QSopt_ex is not optimal, the additional increased precision simplex pivots and additional exact basic solution computations significantly increase the solution time. It is important to note that the solution time of the exact LP solver is influenced not only by the dimension, density, structure, etc., of the LP, but also by the number of bits required to encode the data and solution.

3.2 Basis verification

This strategy avoids the extended precision simplex pivoting that can occur when solving each node LP exactly, but sometimes results in more nodes being processed.

Any exactly feasible dual solution provides a valid dual bound, even if it is not optimal. Therefore, instead of solving each node LP exactly, valid dual bounds can be determined by symbolically computing only the dual solution from a numerically obtained LP basis. If the obtained dual solution is feasible, its objective value gives a valid bound. If it is infeasible, then instead of performing the extra steps required to identify the exact optimal solution, an infinite dual bound is returned. However, if a finite bound was computed at the node’s parent, this bound can be inherited, strengthening an infinite dual bound from basis verification. Within the branch-and-bound algorithm, infinite or weak dual bounds can lead to more branching, but due to the fixing of variables, branching often remediates numerical problems in the LP relaxations down in the tree.

3.3 Primal-bound-shift

Valid bounds can also be produced by correcting approximate dual solutions to be exactly feasible. A special case occurs when all primal variables have finite upper and lower bounds. The following technique was employed by Applegate et al. in the Concorde software package [6] and is described more generally for MIPs by Neumaier and Shcherbina [35]. Consider a primal problem of the form \(\max \{c ^{T}x: Ax \le b, 0\le x \le u \}\) with dual \(\min \{b^{T}y + u^{T}z: A^{T}y + z \ge c,\;y,\,z \ge 0\}\). The dual variables \(z \), which correspond to the primal variable bounds, appear as non-negative slack variables in the dual constraints; they can be used to correct any errors existing in an approximate dual solution. Given an approximate dual solution \((\tilde{y},\,\tilde{z})\), an exactly feasible dual solution \((\hat{y},\, \hat{z})\) is constructed by setting \(\hat{y} _i := \max \{0,\,\tilde{y} _i\}\) and \(\hat{z} _i := \max \{0,\,(c-A^{T}\hat{y})_i\}\). This gives the valid dual bound \(b^{T}\hat{y} + u^{T} \hat{z} \). This bound can be computed using floating-point arithmetic with safe directed rounding to avoid the symbolic computation of the dual feasible solution, but note that this requires the slave procedure to work on an FP-relaxation of the original problem (Step 1 of Algorithm 1).

The simplicity of computing this bound means that it is an excellent choice when applicable. However, if some primal variable bounds are large or missing it may produce weak or infinite bounds, depending on the feasibility of \((\tilde{y},\, \tilde{z})\).

3.4 Project-and-shift

Correcting an approximate dual solution to be exactly feasible in the absence of primal variable bounds is still possible. Consider a primal problem of the form \(\max \{c ^{T}x: Ax \le b\}\) with dual \(\min \{b^{T}y: A^{T}y = c,\, y \ge 0\}\). An approximate dual solution \(\tilde{y} \) can be corrected to be feasible by projecting it into the affine hull of the dual feasible region and then shifting it to satisfy all of the non-negativity constraints, while maintaining feasibility of the equality constraints. These operations could involve significant computation if performed on a single LP. However, under some assumptions explained below, the most time consuming computations need only be performed once, at the root node of the branch-and-bound tree, and reused for each node bound computation.

To efficiently reuse information through the tree we assume that \(A^{T}\) has full row rank, and that none of the dual variables are implied to be zero. In this case, an LU factorization of a full rank subset of columns \(S\) of \(A^{T}\) is computed, this can be reused at every subsequent node of the branch-and-bound tree to compute projections. Also, a point \(y ^*\) satisfying \(A^{T}y = c,\, y \ge 0\) and \(y _i > 0~\forall \, i \in S\) is computed at the root node, and will remain dual feasible at all nodes in the branch-and-bound tree. If the root node dual problem is as above, the dual problem at any node can be represented as \(\min \{b^{\prime T}y + b^{\prime \prime T} z : A^{T}y + A^{\prime \prime T}z = c,~ y,\,z \ge 0 \}\) where \(b^{\prime T}\le b^{T}\) and the additional dual variables \(z\) correspond to newly introduced primal variable bounds or cutting planes.

An approximately feasible node dual solution \((\tilde{y},\, \tilde{z}) \ge 0\) can be corrected to be exactly feasible by performing the following two steps. First, the violation of the constraints is calculated exactly as \(r := c-A^{T}\tilde{y} - A^{\prime \prime T}\tilde{z} \) and a correction vector \(w\) satisfying \(A^{T}w = r\) is computed in exact arithmetic using the pre-computed LU factorization; adding \(w\) to the approximate solution projects it to satisfy the equality constraints of the problem exactly. This solution \((\tilde{y} + w,\, \tilde{z})\) might violate the non-negativity constraints, but can only have negative components in the set \(S\). Second, a convex combination of this projected point and \(y ^*\) is computed as \((\hat{y},\, \hat{z}) := (1-\lambda )(\tilde{y} + w,\, \tilde{z}) + \lambda (y ^*,\, 0 )\), such that \((\hat{y},\, \hat{z}) \ge 0\). The resulting point \((\hat{y},\, \hat{z})\) is then exactly feasible since it is a convex combination of two points satisfying all of the equality constraints and gives a valid dual bound \(b^{\prime T}\hat{y} + b^{\prime \prime T}\hat{z} \).

Thus, the root node computations involve solving an auxiliary LP exactly to obtain the point \(y ^*\) and symbolically LU factoring a matrix; the cost of each node bound computation is dominated by performing a back-solve of a pre-computed symbolic LU factorization, which is often faster than solving a node LP exactly. This method is more generally applicable than the primal-bound-shift method, but relies on some conditions that are met by most, but not all, of the problems in our test set. Namely, it is assumed that \(A^{T}\) has full row rank and that no dual variables are implied to be zero. A detailed description and computational study of this algorithm can be found in [43]. A related method is also described by Althaus and Dumitriu [5].

3.5 Combinations and beyond

The ideal dual bounding method is generally applicable, produces tight bounds, and computes them quickly. Each of the four methods described so far represents some trade-off between these conflicting characteristics. The exact LP method is always applicable and produces the tightest possible bound, but is computationally expensive. The primal-bound-shift method computes valid bounds very quickly, but relies on problem structure that may not always be present. The basis verification and project-and-shift methods provide compromises in between, with respect to speed and generality. The relative performance of these dual bounding methods highly depends on the (sub)problem structure, which may change throughout the tree. Therefore, a strategy that combines and switches between the bounding techniques is the best choice for an exact MIP solver intended to efficiently solve a broad class of problems.

In Sect. 3.6, we will evaluate the performance of each dual bounding method presented here and analyze in what situations which technique works best. In a final step, we then study different strategies to automatically decide how to compute safe dual bounds for a given MIP instance. The central idea of the automatic selection strategy is to apply fast primal-bound-shift as often as possible and if necessary employ another method depending on the problem structure. In this connection, we will address the question of whether this decision should be static or dynamic.

In the first version (“Auto ”), Algorithm 1 decides on the method dynamically in Step 5. At each node primal-bound-shift is applied, and in case it produces an infinite bound one of the other methods is applied. The drawbacks are that it allows for unnecessary computations and that it requires an FP-relaxation for the slave procedure in order to support primal-bound-shift. Alternatively, we can guess whether primal-bound-shift will work (“Auto-Static ”). Meaning the dual bounding method is selected depending on the problem structure at the beginning of Algorithm 1, in Step 1, and remains fixed throughout the tree. This allows us to work with FP-approximations whenever we do not select primal-bound-shift. As we will see in the following section, dynamically choosing the dual bounding method at each node achieves superior performance.

After establishing that the dynamic choice of the bounding method is a good strategy, we consider additional ideas, giving two different variants of the “Auto ” setting. First, in the “Auto ” setting, safe dual bounds are computed at every branch-and-bound node. We will analyze (“Auto-Limited ”), whether it is better to compute them only at those nodes where it is required, i.e., if the unsafe dual bound coming from the approximate LP solution would lead to pruning (using tolerances for the comparison with the primal bound). Pruning decisions are critical for the correctness of the final result and have to be safely verified, whereas correct dual bounds are not required for subproblems which will be further processed by branching.

Second, we experiment with interleaving our selection strategy “Auto ” with exact LP solves (“Auto-Ileaved ”). A safe dual bound obtained by primal-bound-shift can be weaker than the exact LP bound. Sometimes this difference slows down the solution process unnecessarily strong because the solver keeps branching in subtrees that would have been cut off by the exact LP bound. To eliminate some of these cases, we compute the exact LP bound whenever the safe bound from primal-bound-shift is very close to cutting off the node, but not close enough. More precisely, if the bound is within a tolerance smaller than or equal to the primal bound, but not without the tolerance. The hope is that the exact LP bound is slightly stronger and then cuts off the node. Computational results and additional discussion about these ideas are given in Sect. 3.6.3.

3.6 Computational study

In this section, we investigate the performance of our exact MIP framework employing the different safe dual bounding techniques discussed above: primal-bound-shift (“BoundShift ”), project-and-shift (“ProjectShift ”), basis verification (“VerifyBasis ”), and exact LP solutions (“ExactLP ”). We will first look at each method at the root node, to study their behavior when applied to a single LP, then examine them within the branch-and-bound algorithm. At the end, we discuss and test strategies to automatically switch between the most promising bounding techniques.

As explained in Sect. 3.3, we have to create an FP-relaxation of the original problem in Step 1 of Algorithm 1 when we want to apply primal-bound-shift, whereas we can use an FP-approximation for the other bounding methods. The discussed algorithms were implemented into the branch-and-bound algorithm provided by the MIP framework SCIP 1.2.0.8 [2, 3, 46], using best bound search with plunging for node selection and first fractional variable branching. All additional features of SCIP, like cutting planes, presolving, and primal heuristics, were disabled. For comparison, we also consider the corresponding inexact version of SCIP, i.e., the pure branch-and-bound algorithm with the same node selection strategy and branching rule as in the exact setting (“Inexact ”). To solve LPs approximately and exactly we call Cplex 12.2 [26] and QSopt_ex 2.5.5 [7], respectively. Rational computations are based on the GMP library 4.3.1 [24]. In the following, we will refer to the above version numbers of the software packages if not otherwise stated. All benchmark runs were conducted on 2.5 GHz Intel Xeon E5420 CPUs with 4 cores and 16 GB RAM each. To maintain accurate results only one computation was run at the same time. We imposed a time limit of 24 h and a memory limit of 13 GB. The timings used to measure computation times are always rounded up to one second if they are smaller. We used the same set-up for the experiments in Sect. 4, 5, and 6.

Our test set contains all instances of the Miplib 3.0 [10] and Miplib 2003 [4] libraries and from the Mittelmann collections [33] that can be solved within 2 h by the inexact branch-and-bound version of SCIP (“Inexact ”). This gives a test suite of 57 MIP instances (see Table 2), which we call easy test set . Note that we also analyzed the performance on the remaining, harder, instances of the libraries. The conclusions drawn here, on the smaller suite, were confirmed by these results. At the root node, the individual dual bounding methods were applicable for a similar percentage of instances and also computed bounds of good quality. The overall slowdown factor of the exact solver (“Auto-Ileaved ” versus “Inexact ”) can be expected to be in the same order as for the easy test set. We drew this conclusion by looking at the number of branch-and-bound nodes which both solvers had processed when they hit a certain time limit (but had not solved the instance to optimality). On the easy test set, as we will see later, the exact and the inexact solver require a similar number of branch-and-bound nodes to solve an instance to optimality. This is because the considered benchmarking libraries contain mainly instances that do not cause serious numerical difficulties, and therefore, we expect the number of nodes processed within the time limit to be a good indicator of how much slower the exact code will be.

The easy test set will also be used in Sects. 4 and 5 for studying different branching rules and checking the accuracy of the inexact version of SCIP, respectively. In Sect. 6, we will analyze our exact solver on numerically more difficult instances.

3.6.1 Root node performance

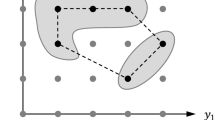

We start by evaluating the root node behavior of the dual bounding methods. Our performance measures are: time overhead and bound quality. The performance profile, see [21], in Fig. 1 visualizes the relative overhead times for the safe dual bounding methods. For each of them, it plots the number of instances for which the safe dual bounding step was performed within a given factor of the bounding time of the fastest method. Table 1 presents the geometric mean of these additional safe dual bounding times in seconds (“DB” ) and states the number of instances for which a certain dual bound quality was achieved.

This quality is given by the relative difference between the computed safe dual bound \(c ^{\star } \) and the exact LP value \(c ^{\star \star }:= \max \{c ^{T}x: x \in LP_{j} \}\). However, we actually compare the FP-representable upper approximations of both values, as used in Algorithm 1, and define the relative difference as \(d:= (\overline{c ^{\star }} - \overline{c ^{\star \star }}) / \max \{1, |\overline{c ^{\star }} |, |\overline{c ^{\star \star }} | \} \). The corresponding columns in Table 1 are: “Zero” difference for \(d = 0\), “S(mall)” difference for \(d \in (0,10^{-9}]\), “M(edium)” difference for \(d \in (10^{-9},10^{-3}]\), and “L(arge)” difference for \(d \in (10^{-3},\infty )\). Column “\(\infty \)” counts the worst case behavior, i.e., infinite dual bounds.

We observe that basis verification has a similar behavior as exact LP for the root node. Still, as we will see in the next section, it gives an improvement over the exact LP solver when expensive basis repair steps are required to find the exact LP solution at certain branch-and-bound nodes.

As expected, primal-bound-shift is the fastest method. However, it produces infinite dual bounds on 16 instances in contrast to only two failsFootnote 1 for project-and-shift and no fails for basis verification. This is, the bases obtained by Cplex are often dual feasible and even optimal and project-and-shift meets its requirements most of the time. Still, the finite bounds provided by primal-bound-shift are of very good quality; most of them fall into the “Zero” and “S(mall)” categories. Thus, when primal-bound-shift works we expect to obtain strong bounds and whenever it fails we assume basis verification or project-and-shift to be applicable.

Where basis verification is in most cases only up to 10 times slower than primal-bound-shift, project-and-shift is up to 100 times slower at the root node because of the expensive initial set-up step. In the next section, we will see that the overhead incurred by the set-up step of project-and-shift often pays off when it is applied within the entire branch-and-bound tree.

3.6.2 Overall performance

We will now analyze the effect of the dual bound methods on the overall performance of the exact MIP solver and compare it with the inexact branch-and-bound version of SCIP (“Inexact ”). Table 3 reports the number of instances that were solved within the imposed limits (Column “slv”), for each setting. On 37 instances all settings succeeded. For this group, we present in Table 3, the number of branch-and-bound nodes “Nodes”, the solution time “Time” in seconds, and the additional time spent in the safe dual bounding step “DB” in seconds, all in geometric mean for each method. In addition, Fig. 2 gives a performance profile comparing the solution times. For a setting where an instance had a timeout, it is reported with an infinite ratio to the fastest setting. Thus, the intersection points at the right border of the graphic reflect the “slv” column. “Nodes” and “Time” for the individual instances are reported in Table 2. When a dual bounding method leads to a solving time that is within 5 % of the fastest run, the “Time” entry is put in bold. Details for the inexact run can be found in Table 5 (“Inexact-Firstfrac ”). Note that in the next section, “Inexact ” will be referred to as “Inexact-Firstfrac ” in order to emphasis the applied branching rule.

The observations made for the root node carry forward to the application in the branch-and-bound algorithm. Primal-bound-shift leads to the fastest node processing. Basis verification has a slightly better performance than solving LPs exactly. However, basis verification is often outperformed by project-and-shift.

Concerning the quality of the safe dual bounds, project-and-shift, basis verification, and exact LP solves perform equally well, which is reflected in a similar (e.g., bell3a, misc07, and rentacar), or even identical (e.g., acc-0, nug08, and vpm1), number of branch-and-bound nodes, see Table 2. Minor node count variations between these exact versions can be explained by slightly different safe dual bounds leading to different node selection decisions. This can, for example, change the point in time when nodes can be cut off due to a new primal solution. It also explains why weaker dual bounds occasionally result in lower overall node counts (e.g., “VerifyBasis ” can solve neos21 using fewer nodes than “ExactLP ”). On the instances, where no bounds on the variables are missing, i.e., where primal-bound-shift will always work, the node count is often even similar for all four dual bounding methods. However, the variation is slightly more significant for primal-bound-shift, because an FP-relaxation of the original problem is set up in Step 1 of Algorithm 1 instead of an FP-approximation; relative to the others, this may produce different approximate LP solutions. Sometimes this even leads to fewer nodes for primal-bound-shift (e.g., rgn). Table 2 also gives an example (khb05250) for an instance where primal-bound-shift works even though bounds are missing on some variables; these bounds were not required in the correction step.

Concerning the overall solution time, we observe that, when applicable, primal-bound-shift computes valid dual bounds with very little overhead. For the instances it solved we usually experience a slow-down of at most 2, relative to the inexact branch-and-bound solver. The few large slow-down factors of up to 10 can be explained by a node increase due to a small number of missing variable bounds and by expensive exact LP calls for computing primal bounds. The one extreme slow-down factor comes from rentacar, which is solved by pure enumeration; primal-bound-shift produces infinite bounds at all nodes. However, due to its limited applicability it solved only 43 instances within the imposed limits.

In contrast, project-and-shift solves 49 instances. The dual bound quality was strong enough such that instances could be solved without requiring a significant increase in the number of nodes processed, relative to the “ExactLP ” strategy. In the previous section we observed a large overhead required at the root node by this method, making it impractical for computing valid bounds on a single LP; however, we observe that amortized over the entire branch-and-bound tree, the resulting solution time is competitive. In mean, it is only 6 times slower than the inexact code. In this fashion, most of the instances were solved within 20 times the time used by the inexact code.

If we compare project-and-shift with basis verification (Table 2; Fig. 2) we see a similar and often better performance for project-and-shift. Still, on some instances basis verification works better. For example, it solves two more instances of our test set. To figure out when we should choose basis verification instead of project-and-shift, i.e., when (the setup step of) project-and-shift is too expensive, we tested different, easy to compute, problem characteristics. Since the setup costs of project-and-shift are dominated by an exact LP solve and a symbolic LU factorization (see Sect. 3.4), we tested criteria in connection with the constraint matrix: dimension of the matrix, ratio between number of rows and columns, percentage of nearly parallel rows (sometimes introduced to build an FP-relaxation), number of non-zeros, percentage of integral non-zeros, ratio between largest and smallest absolute value. Another idea was to estimate whether project-and-shift will be called often enough such that the setup step pays off. Here, we looked at the percentage of variables with missing bounds. The best results were obtained with the number of non-zeros in the constraint matrix. In the automatic dual bound selection strategies, discussed below, we prefer project-and-shift as long as the matrix has at most 10,000 non-zeros.

Only one instance, markshare1_1, could not be solved within the imposed limits by any of the four exact versions. In contrast to the other instances, the node count for markshare1_1 significantly increases with the exact solvers, see Tables 2 and 5. The reason is that in the course of the branch-and-bound processes some of the nearly integral approximate LP solutions do not correspond to integral exact LP solutions, which causes many additional branchings; in particular, this holds for the final primal solution found by the inexact solver.

3.6.3 Combinations

We already gave some remarks concerning a strategy that automatically chooses a dual bounding method. Another important observation for this purpose is that replacing FP-approximations by FP-relaxations does not affect the performance much: on our test set, running project-and-shift on an FP-relaxation gave similar results to running it on an FP-approximation. Therefore, we decided to always set up an FP-relaxation in Step 1 of Algorithm 1. This way, we are allowed to apply primal-bound-shift at any node we want to.

The automatic decision process used in the “Auto ” run works as follows. At every node, we first test whether primal-bound-shift produces a finite bound. If not, we choose project-and-shift or basis verification depending on the constraint matrix as explained above. The root node results for the combined versions are presented in Table 1 and Fig. 1; the overall performance results can be found in Table 3 and Fig. 2. Note that we excluded “Auto-Limited ” from Table 1 as it never computed safe finite bounds at the root node and that we only included the best auto setting in the performance profiles as their graphs look very similar. Detailed results for the inexact run and the best auto setting are given in Table 5. Notice that in this table for reasons of clarity, “Inexact ” and “Auto-Ileaved ” are called “Inexact-Firstfrac ” and “Exact-Firstfrac ”, respectively.

The experiments show that “Auto ” combines the advantages of all dual bounding methods. We can solve all 43 instances that primal-bound-shift solved as well as 11 additional ones by automatically switching to other dual bounding methods at the nodes. In Sect. 3.5, we discussed three possible improvements for the automatic dual bound selection procedure. The first one, to only guess whether primal-bound-shift will work, is implemented in the test run “Auto-Static ”. The guess is static, i.e., does not change throughout the tree; we skip primal-bound-shift if more than 20% of the problem variables have lower or upper bounds with absolute value larger than \(10^{6}\). Comparing both automatic settings shows that it is no problem to actually test at each node whether primal-bound-shift works, and it even leads to a slightly improved performance.

The second idea was to interleave the strategy with exact LP calls (“Auto-Ileaved ”). This strategy tries to avoid situations when branching is applied repeatedly on nodes that could be safely cut off if their LPs were solved exactly, but not if a weaker bound was computed. Examples are nodes where the exact LP dual bound is equal to the best known primal bound. This situation does not occur on many instances in our test set, but when it does, the interleaving strategy is helpful. We solve one more instance (30:70:4_5:0_95:100) to optimality without introducing a significant time overhead on the other instances.

The third extension was to only compute bounds safely at nodes where the (unsafe) bound coming from the approximate dual solution would lead to cutting off the node. Looking at the overall behavior for the corresponding test run, “Auto-Limited ”, it is not clear whether this is a good idea in general. It solved fewer instances than the other automatic settings and processed more nodes. On harder instances the node count at timeout was higher than the other methods, i.e., the node processing is much faster on average. However, we cannot draw strong conclusions about the quality of this approach on harder instances, as in this setting the exact primal-dual-gap does not improve steadily. Moreover, one advantage of computing safe dual bounds at more nodes of the branch-and-bound tree is that these safe bounds are inherited by a node’s children. Therefore, if safe bounds were computed previously, the discovery of an improved primal bound may allow immediate pruning of many unprocessed nodes. In a similar situation, the setting “Auto-Limited ” may incur extra cost computing safe bounds at each of these nodes individually.

4 Branching rules

So far we have introduced a branch-and-bound algorithm for solving MIPs exactly and developed an advanced strategy for computing the dual bounds safely in this framework (“Auto-Ileaved ”, which we refer to as “Exact-Firstfrac ” in the following). Here we will improve the branching step of the current implementation. Choosing which variable to branch on is crucial for MIP solvers. The experiments in [2] (using SCIP version 0.90f) showed that replacing the default branching rule in SCIP by other less sophisticated ones increases the running time by a factor of up to 4. For comparison, disabling cutting plane separation doubles the solution time.

The inexact version of SCIP supports various branching rules. We tested the following ones in the exact MIP setting, listing them in increasing order of their performance in the inexact full version of SCIP as evaluated by Achterberg [2].

-

“Exact-Leastinf ”: Least infeasible branching

-

“Exact-Firstfrac ”: First fractional branching

-

“Exact-Mostinf ”: Most infeasible branching

-

“Exact-Fullstrong ”: Full strong branching

-

“Exact-Pseudocost ”: Pseudocost branching

-

“Exact-Reliability ”: Reliability branching

Least infeasible and most infeasible branching consider the fractional parts of the integer variables in the LP solution. By solving the LP relaxation of the potential subproblems for all branching candidates, full strong branching chooses the variable which leads to the best dual bound improvement. Pseudocost branching tries to estimate this improvement by analyzing the dual bound gains achieved by previous branchings. Reliability branching uses strong branching only on variables with unreliable pseudocosts, i.e., with a limited branching history. First fractional branching, the rule used so far in our implementation, simply decides to branch on the first integer variable (w.r.t. variable index) with fractional LP solution value. SCIP applies this scheme when no special branching rule is implemented. It was not tested in [2], but the performance is in the range of most infeasible and least infeasible branching.

When selecting the branching variable in the exact MIP setting, exact branching score calculation is not required to obtain a correct solution. In particular, the strong branching LPs do not need to be solved exactly. The only restriction is that all other conclusions drawn from strong branching LPs are ignored; they are not safe anymore if the LPs are only solved by an inexact LP solver. These additional conclusions include prunable subproblem detection (if we find a branching candidate for which both strong branching LPs are infeasible), domain reduction (of all variables for which one of the strong branching LPs is infeasible), and dual bound improvement (the weaker objective function value of the two strong branching LP solutions of a variable provides a valid dual bound for the current subtree).

For full strong branching, this significantly reduces its potential. Table 4 summarizes, alongside others, the results for running the inexact branch-and-bound algorithm of SCIP without additional conclusions from strong branching LP solutions (“Inexact-Fullstrong ”) and with them (“Inexact-Fullstrong+ ”). Supporting this step, as done in standard floating-point MIP solvers, speeds up the branch-and-bound process by a factor of \(1.8\). The node count is reduced by a factor of \(3.7\). So the positive impact of full strong branching is not only due to good branching decisions based on additional LP solves; to a certain extent it is also achieved by drawing further conclusions from these strong branching LPs, which includes variable fixings, domain reductions and node cutoffs. Regarding the node count improvement, one should keep in mind that if full strong branching “creates” subproblems and detects them to be prunable, they are not counted as branch-and-bound nodes. Performing the same experiment with reliability branching, i.e., if strong branching is only used in case of unreliable pseudocosts, we observe that the additional conclusions have only a very small impact (“Inexact-Reliablity ” versus “Inexact-Reliablity+ ” in Table 4).

For the exact MIP setting, the impact of each tested branching rule is summarized in Table 4. The ranking is similar to what was experienced for the floating-point version of SCIP in [2]; except for full strong branching which performs in our tests worse than most infeasible branching for the reasons explained above. The best results were obtained with reliability branching.

Tables 4 and 5 compare the performance of first fractional branching and reliability branching in the inexact branch-and-bound version of SCIP (“Inexact-Firstfrac ” versus “Inexact-Reliablity ”) and in the exact version (“Exact-Firstfrac ” versus “Exact-Reliability ”). In both settings, the impact of reliability branching is of the same range. In mean, the running time with this rule improves by a factor of \(3.9\) for the inexact and \(4.4\) for the exact code. In addition, Fig. 3 visualizes the changes in running time and in the number of branch-and-bound nodes between the inexact and the exact code when both apply reliability branching. The performance degradation is similar to what was experienced with first fractional branching in the previous section (see Fig. 2; Table 3). In geometric mean, the exact version is only \(2.2\) times slower than the inexact one. However, with reliability branching the branch-and-bound tree diverges more between the inexact and the exact solver; in Table 5 we observe that the node count differs more with reliability branching than with first fractional branching. In the extreme, both solvers need the same number of branch-and-bound nodes with first fractional branching, while having different node counts with reliability branching (e.g., air05, egout, and vpm1). The reason is that the sophisticated strategy of reliability branching is more sensitive to small changes, for example, in the dual bounds and the number of LP iterations (see [2] for details on the reliability branching algorithm). To summarize, the exact code benefits from better branching rules in the same way as the inexact one.

In addition to standard branching strategies, one that aims at making the fast safe dual bounding method primal-bound-shift (see Sect. 3.3) work more often would be interesting. If a missing bound constraint is necessary to repair the approximate dual solution by primal-bound-shift, the method will fail and we apply one of the more expensive dual bounding methods at this branch-and-bound node. Branching on such variables would introduce a missing bound in one of the created subproblems and this way could increase the chance of primal-bound-shift to be applicable in this subtree. On the easy test set, 29 of the 57 instances contain variables with infinite lower or upper bounds. They are marked by a “\(\times \)” in Table 5. However, examining the problem characteristics we noticed that all missing bounds were on continuous variables only. That is, we are not able to introduce the required bounds through branching decisions; branching is only performed on integer variables. On numerically difficult instances, considered in the next section, we observed a similar situation. In Table 11, 28 out of 50 instances had infinite bounds, but only in a few cases this involved integer variables (dfn6_load, dfn6fp_load, dfn6f_cost, and dfn6fp_cost).

5 How accurate are current MIP solvers?

On the easy test set, with reliability branching, we are able to solve all but one instances exactly (markshare1_1). Thus, having exact objective function values for nearly all instances at hand, we now want to analyze how accurate the floating-point version of SCIP is. In the inexact setting, errors in the branch-and-bound process can be introduced at several different places: while reading in the instance, in the bounding step and in the feasibility test (because of the FP-arithmetic and the consequent usage of tolerances), and because of inaccurate LP solutions. See [29, 42] for further discussion regarding possible sources and types of errors that might be encountered.

We considered our best exact branch-and-bound version (“Exact-Reliability ”) and its inexact counterpart (“Inexact-Reliablity ”) and present in Table 6 the “Difference” between the objective function values returned by the exact and the inexact run (“Exact Objval ” Footnote 2 and “Approx Objval ”).

We mark cases where an instance was not solved to optimality within the limits (see Table 5) by a “—” and also use “—” in the “Difference ” column then. Otherwise, the exact absolute difference is computed. If it is non-zero, the closest FP-number is displayed.

For the majority of the instances, the objective values are identical. On 12 instances, the inexact branch-and-bound solver reports results that differ from the exact objective values, but the differences are not significant. This indicates that no dramatic mistakes were made by the FP branch-and-bound solver. But this is not surprising as the instances come from standard MIP libraries, for which numerical troubles are very seldom.

Only markshare1_1, which we were not able to solve, is numerically less stable. As explained in Sect. 3.6.2, in contrast to the other instances, the node count for markshare1_1 significantly increased with the exact solver. The reason is that in the course of the branch-and-bound process some of the nearly integral approximate LP solutions do not correspond to integral exact LP solutions (best primal bound found within the imposed limits is \(235/398953428\).), which causes additional branchings. On all other easy instances, this did not happen.

Notice that this experiment also shows that all studies on the easy test set were fair. We did not compare solution times for instances where the inexact code terminates quickly, but computes a result that is far from correct. The picture is more diverse on numerically more difficult instances as considered in the next section.

6 Numerically difficult MIP instances

In the last section, we showed that the exact branch-and-bound code was able to solve the problems in our easy test set within a reasonable factor of the time required by the inexact branch-and-bound solver. Here we will analyze its behavior on numerically difficult instances.

6.1 Selecting the test set

Before going any further we must ask: what does it mean for a MIP to be numerically difficult? It would be nice if there were some clear, well defined properties that would predict which instances could be solved easily using floating-point computation, and which instances would succumb to numerical issues in the solution process. Unfortunately, this question does not have a simple answer.

We first focus our attention to linear programming where a number of authors have studied the related idea of condition measures [13, 16, 36, 39, 40]. LPs are considered ill-conditioned if small modifications in the problem data can have a large effect on the solution; in particular, if they lead to changes in the optimal objective value, changes in primal or dual feasibility, or changes in the structure of the final LP basis. Connections have been made between LP condition measures and the complexity of solving them [14, 15, 41, 44]; ill-conditioned LPs may require higher precision arithmetic or more interior point iterations. Computational studies have also investigated these ideas [12, 37]. However, LP condition numbers are not always a good predictor that LP instances will or will not be solvable by floating-point software packages. For example, in [37], 71 % of the Netlib LP instances [9, 23] were observed to have infinite condition measures, 19 % after pre-processing. However, in [27], double-precision LP solvers were used to identify the optimal basis for all Netlib LP instances; this could be seen as an indication that, in some practical sense, these instances are not numerically difficult. Conversely, one could easily construct well conditioned LPs that are unsolvable by double-precision based software by, e.g., scaling the data to contain entries too large or small to be represented by a double-precision number.

Turning our attention back to MIPs, to the best of our knowledge no study has defined or discussed the notion of a condition measure. When switching from continuous to discrete variables arbitrarily small changes in the data defining an instance is more likely to alter the feasibility or optimality. As the nature of our study is computational we will prefer a test set that is numerically difficult in the practical sense – meaning it is composed of instances on which software packages available today compute incorrect or conflicting results or exhibit evidence of incorrect computations within the solution process.

Starting from a total of over 600 instances taken from the unstable test set of the Miplib 2010 library [29], the Cor@l MIP collection [31, 32], instances that were submitted to the NEOS server [18, 34] to be solved exactly, and instances from projects at ZIB, we collected a test suite of 50 instances, which we will call the numerically difficult test set . Table 7 states the origin and a short description of the chosen instances. Furthermore, Table 8 shows the statistics that were relevant for the selection of these instances; met criteria are put in bold.

We now describe the empirically motivated criteria which we have used to classify instances as numerically difficult. They are based directly on the behavior of floating-point MIP solvers applied to the instances.

One attempt to identify numerical issues during the solving process was recently, with version 12.2, introduced in Cplex. It considers the condition number of the optimal LP bases at the branch-and-bound nodes and classifies them as stable, suspicious, unstable, and ill-posed (see [29] for more details). Even though this measure is highly dependent on the solution process and may not help to identify numerically unstable instances, we found it reasonable to take it as one criterion for the selection of our test set. In Table 8, the first block of columns, “\(\mathsf p _\mathsf{susp }\)”, “\(\mathsf p _\mathsf{unstab }\)”, and “\(\mathsf p _\mathsf{illpo }\)”, states the relative frequency of the sampled bad condition number categories. We used set mip strategy kappastats 2, i.e., computed LP condition numbers for every subproblem. Furthermore, Cplex weights these three groups into one estimate for the probability of numerical difficulties. It is called attention level (column “AL ”). Since the estimate depends on the solution process, we run the solver with five different parameter settings: default settings, presolving disabled, cuts disabled, primal heuristics disabled, and all three components disabled. The statistics in Table 8 refer to the worst (largest) values observed among the runs (time limit of 2 h), and we display only non-zero values.

Our second indicator of numerical issues is, whether the input data contain values of very different magnitude. The columns “\(\mathsf r _\mathsf{coef }\)”, “\(\mathsf r _\mathsf{rhs }\)”, and “\(\mathsf r _\mathsf{obj }\)” state the ratio between the largest and the smallest absolute non-zero entry in the coefficient matrix, the right hand side vector, and the objective function vector, respectively. Largest and smallest values are taken from the log files of Cplex.

As a third point, we checked for inconsistent results returned by different MIP solvers on various parameter settings. We run SCIP 2.0.2 and Cplex (with mipkappa computation, and without) and in both solvers, applied the five settings mentioned above. Columns “db ” and “pb ” report the maximum dual bound and the minimum primal bound returned at termination among all runs. Notice that all instances have minimization form. In case of infeasibility detection, we work with primal and dual bounds of \(10^{{20}}\) and display “Infeas” in Table 8. We selected instances that meet one of the following criteria

-

Unstable LPs: “AL ” \(\ge 0.1\), “\(\mathsf p _\mathsf{susp }\)” \(\ge 0.5\), “\(\mathsf p _\mathsf{unstab }\)” \(\ge 0.3\), or “\(\mathsf p _\mathsf{illpo }\)” \(\ge 0.1\)

-

Wide input data range: “\(\mathsf r _\mathsf{coef }\)” \(\ge 10^{10}\), “\(\mathsf r _\mathsf{rhs }\)” \(\ge 10^{10}\), or “\(\mathsf r _\mathsf{obj }\)” \(\ge 10^{10}\)

-

Inconsistent results: \((\text{``db } \text{'' }-\text{``pb } \text{'' })/ \max \{|\text{``db } \text{'' }|, |\text{``pb } \text{'' }|, 1\} > 10^{-6}\).

6.2 Computational study

We will discuss three topics on the numerically difficult instances: the error-proneness of the inexact solver, the performance of the exact solver, and the relevance of branching decisions based on exact LP solutions. The last point will be addressed in the next section on possible improvements.

For this purpose, we evaluated the results and performance of our best exact branch-and-bound version of SCIP (“Exact-Reliability ” with reliability branching and dual bounding strategy: automatic selection interleaved by exact LP calls) and its inexact counterpart (“Inexact-Reliablity ”). The set-up of the experiment is the same as for the easy test set, described in Sect. 3.6; in particular, we use a time limit of 24 h and a memory limit of 13 GB. Note that during the test set selection, very hard instances for which both solvers failed to terminate within the imposed limits were removed.

First, we check how often the inexact run produced wrong results. Like Table 6 for the easy instances, Table 9 presents the absolute “Difference ” between the objective function values returned by the exact and the inexact run (“Exact Objval ” and “Approx Objval ”). Since bernd2 and ns1770598 have very long exact objective function values, we only print the number of digits of their numerators and denominators. Again, we mark cases where a solver did not terminate at optimality within the limits (see Table 11 discussed later) by a “—”. For non-zero values, we report in column “Difference ” the closest FP-number.

We can compare the results for 26 instances, on the others, one of the solvers did not terminate within the limits. For half of them, the returned objective values were different, where for five instances (alu10_1, bernd2, dfn6fp_cost, ns1866531, and opti_157_0) this difference was significant. Furthermore, it is known that except for alu10_9 and alu16_9 all of the alu instances in our test set are infeasible, meaning the inexact run fails on at least five more instances.

Now we evaluate the performance. On the easy test set, the exact solver was only moderately slower than the inexact one and could solve all but one instance within the limits. In geometric mean, the solution time doubled, where most instances were only up to 20 times slower and the largest slowdown factor was 40. The node count in geometric mean was similar in the exact and the inexact branch-and-bound runs. Here, the picture is more diverse. Table 11 presents the solution times and the node count for the individual instances; they are split into four subsets depending on the accuracies of the inexact solver (zero, small, significant (\(>\!10^{-6}\)), or unknown “Difference ” in Table 9). The results are summarized in Table 10 and visualized in Fig. 4. They have the same layout as the tables and plots in Sect. 3.6.

First of all, on 9 instances, we actually benefit from taking care of the numerics. There were 4 instances (alu16_5, cnr_dual_mip1, p4, and x01) that were solved within the limits by the exact solver, but not by the inexact one, and 5 instances (norm-aim, neos-839838, sp98ir, tkat3T, and tkat3TV) that were solved by both versions, but where the exact solver was faster; the speed factors vary between 1.5 and 15.

We now analyze the other 41 instances, where the exact code is slower than the inexact one. Notice that, by definition of the numerically difficult test set (it contains only instances which one of the solvers can process within the imposed limits), the inexact code terminates on all these instances. We first observe that the exact code can solve only 21 instances, a much smaller portion than on the easy test set. Here, the degradation factors for the time are in most cases only up to 20 as well; but we also observe larger factors of up to 300 (alu10_1, neos-1053591, and ns1629327). However, for alu10_1 the inexact solver returned a wrong answer. Examining the remaining 20 instances, which were not solved by the exact code within the imposed limits, we already see that they will include even larger slowdown factors. But some of the results of the inexact solver will be wrong (for five alu instances this is already known for sure, see above), since most of these instances were collected because of inconsistent results between different solvers and settings.

Why is the performance not as good as on the easy test set? For the numerically more difficult instances, the exact code has to often process more branch-and-bound nodes. As explained in Sect. 4, this is to some extend due to reliability branching being sensitive to small changes in the solving process, but the main reason is that the inexact solver wrongly cuts off some nodes due to FP-errors. Table 11 presents, in Columns “NotInfeas ”, “NotInt ”, and “NotInt-Inf ”, how often the exact code would have made wrong decisions if the result of the inexact LP solver would not have been safely verified; which indicates wrong decisions in the inexact MIP solver. All larger slowdown factors come along with mistakes in the inexact solver; except for dfn6fp_load, ns2017839 and prodplan1, where the degradation is caused by expensive LP calls.

Column “NotInfeas ” states the number of nodes where the inexact LP solver wrongly claims LP infeasibility at a node, which leads to more branchings in the exact solver and thus increases the node count. This happens on 9 of the 50 instances, but never occurred on the easy MIPs.

Column “NotInt ” counts the nodes where the floating-point LP solution was integral within tolerances (i.e., would have been accepted by the inexact solver) but verifying the primal bound (Step 7 of Algorithm 1) did not allow us to cut off the node. This happens on 20 of the 50 instances, in contrast to only one instance for the easy test set (markshare1_1, where “NotInt ” is 256). Note that “NotInt ” only considers nodes where branching on the exact LP solution takes place afterwards. That is, approximate integral LP solutions for which the corresponding exact LP turns out to be infeasible (so pruning is legal but the argumentation of the inexact solver is wrong) are not counted here but in Column “NotInt-Inf ”. A rejected approximate primal solution does not only mean that we can not cut off the current subtree in the exact code, but it may also affect other parts of the tree because the primal bound in the exact code is weaker than the, possibly incorrect, bound in an inexact solver. In the extreme case, this leads to rejecting all approximate solutions found and we are not able to cut off any node early by bounding; an italic font in Columns “NotInt ” and “NotInt-Inf ” indicates such cases. The unsolved alu instances, npmv07, neos-1062641, and ns2080781, all with extreme degradation factors, are examples for this effect.

6.3 How to tackle numerically difficult instances?

All in all, the exact code is slower on the numerically difficult test set, sometimes requiring much more time to solve an instance, or even failing to finish within the imposed limits. However, a direct comparison of the solution times is not always fair here because the inexact solver frequently, in particular, on instances with huge differences in the performance, takes advantage of incorrect bounding decisions.

Introducing presolving, cutting planes, and primal heuristics will certainly help to improve the performance as it normally shrinks the size of the branch-and-bound tree and thus reduces the space of the search tree which the inexact solver would incorrectly ignore, but the exact code has to process.

In addition to the generally increased node count, the time overhead also comes from the exact LP solves in the safe primal bounding step and the ones for disproving LP infeasibility of nodes. On the numerically difficult instances, such exact LP solves are more often experienced or they occur so often that they add up to a large portion of the running time. Thus, more sophisticated methods for the safe primal feasibility check are required.

The current solver uses the first fractional variable branching rule when it branches on the exact LP solution. This type of branching happens in two situations. First, if the approximate LP solution is nearly integral, but the safe primal bounding step (where the exact LP is warm started with the basis of the approximate LP solution) does not allow to prune the node (the computed exact LP solution is not integral). Second, if the LP relaxation is claimed to be infeasible, but there exists an exact LP solution. Our fast safe dual bounding methods are useless here, we have to solve this LP exactly to prove LP feasibility. In contrast to the easy test set, both situations occur frequently on the numerically difficult test set; numbers were given in Table 11 in Columns “NotInt ” and “NotInfeas ”. Furthermore, both situations can easily occur again in the subtrees created after branching on the exact LP solution. A branching rule that reduces the risk of such expensive situations for the new subtrees could be helpful for numerically difficult instances.

7 Conclusion

From the computational results we can make several key observations. Each safe dual bounding method studied has strengths and weaknesses depending on the problem structure. Automatically switching between these methods in a smart way solves more problems than any single dual bounding method on its own. Of the 57 problems from the easy test set solved within 2 h by the floating-point branch-and-bound solver, 55 could also be solved exactly within 24 h and the solution time was usually no more than 20 times slower. This demonstrates that the hybrid methodology can lead to an efficient exact branch-and-bound solver, not limited to specific classes of problems.

When turning to numerically more difficult instances, where floating-point solvers face numerical troubles and even compute incorrect results, we observe some stronger slowdowns with our current exact solver. However, this mainly happens on instances where the inexact MIP solver strongly benefits from incorrect bounding decisions. As a consequence, the bottleneck of the exact solver is a large number of nodes for which the hybrid rational/safe floating-point approach cannot skip the expensive rational computations of the main procedure by replacing them with certain decisions from the faster slave procedure with FP-arithmetic. Examples are wrong infeasibility detections of the floating-point LP solver and incorrect integrality claims based on the approximate LP result. In the future, we will investigate techniques to process such nodes without calling exact LP solvers and how to prevent situations like this from repeating in subsequent subtrees.

Notes

Project-and-shift fails to produce finite bounds on two instances, swath1 and swath2. The conditions necessary for its applicability within the tree (see Sect. 3.4) were not satisfied by these instances.

Of course, even with a very carful implementation and extensive testing, a certain risk of an implementation error remains (also in the underlying exact LP solver and the software package for rational arithmetic). So, the exact objective values reported here come with no warranty.

References

Achterberg, T.: ALU instances. http://miplib.zib.de/miplib2003/contrib/ALU

Achterberg, T.: Constraint Integer Programming. Ph.D. thesis, Technische Universität Berlin (2007)

Achterberg, T.: SCIP: Solving constraint integer programs. Math. Program. Comput. 1(1), 1–41 (2009)

Achterberg, T., Koch, T., Martin, A.: The mixed integer programming library: MIPLIB (2003). http://miplib.zib.de

Althaus, E., Dumitriu, D.: Fast and accurate bounds on linear programs. In: Vahrenhold, J. (ed.) SEA 2009. LNCS, vol. 5526, pp. 40–50. Springer, Berlin (2009)

Applegate, D.L., Bixby, R.E., Chvátal, V., Cook, W.J.: The Traveling Salesman Problem: A Computational Study. Princeton University Press, Princeton (2006)

Applegate, D.L., Cook, W.J., Dash, S., Espinoza, D.G.: \(\text{ QSopt }\_\text{ ex }.\) http://www.dii.uchile.cl/daespino/ESolver_doc/main.html

Applegate, D.L., Cook, W.J., Dash, S., Espinoza, D.G.: Exact solutions to linear programming problems. Oper. Res. Lett. 35(6), 693–699 (2007)

AT &T Bell Laboratories, The University of Tennessee Knoxville, and Oak Ridge National Laboratory. Netlib Repository. http://www.netlib.org/netlib/lp

Bixby, R.E., Ceria, S., McZeal, C.M., Savelsbergh, M.W.: An updated mixed integer programming library: MIPLIB 3.0. Optima 58, 12–15 (1998)

Bley, A., Koch, T.: Optimierung des G-WiN. DFN-Mitteilungen 54, 13–15 (2000)

Chai, J., Toh, K.: Computation of condition numbers for linear programming problems using Peña’s method. Optim. Methods Softw. 21(3), 419–443 (2006)

Cheung, D., Cucker, F.: A new condition number for linear programming. Math. Program. 91, 163–174 (2001)

Cheung, D., Cucker, F.: Solving linear programs with finite precision: I. Condition numbers and random programs. Math. Program. 99, 175–196 (2004)

Cheung, D., Cucker, F.: Solving linear programs with finite precision: II. Algorithms. J. Complex. 22(3), 305–335 (2006)

Cheung, D., Cucker, F., Peña, J.: Unifying condition numbers for linear programming. Math. Oper. Res. 28(4), 609–624 (2003)

Cook, W.J., Dash, S., Fukasawa, R., Goycoolea, M.: Numerically safe Gomory mixed-integer cuts. INFORMS J. Comput. 21(4), 641–649 (2009)

Czyzyk, J., Mesnier, M.P., Moré, J.J.: The Network-Enabled Optimization System (NEOS) server. IEEE J. Comput. Sci. Eng. 5(3), 68–75 (1998)

de Vries, S., Vohra, R.: Combinatorial auctions: a survey. INFORMS J. Comput. 15(3), 284–309 (2003)

Dhiflaoui, M., Funke, S., Kwappik, C., Mehlhorn, K., Seel, M., Schömer, E., Schulte, R., Weber, D.: Certifying and repairing solutions to large LPs, how good are LP-solvers? In: SODA 2003, pp. 255–256. ACM/SIAM, New York (2003)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2001)

Espinoza, D.G.: On Linear Programming, Integer Programming and Cutting Planes. Ph.D. thesis, Georgia Institute of Technology (2006)

Gay, D.M.: Electronic mail distribution of linear programming test problems. Math. Program. Soc. COAL Newsl. 13, 10–12 (1985)

GMP. GNU multiple precision arithmetic library. http://gmplib.org

Goldberg, D.: What every computer scientist should know about floating-point arithmetic. ACM Comput. Surv. (CSUR) 23(1), 5–48 (1991)

IBM, ILOG. CPLEX. http://www.ilog.com/products/cplex

Koch, T.: The final NETLIB-LP results. Oper. Res. Lett. 32(2), 138–142 (2004)

Koch, T.: Rapid Mathematical Programming. Ph.D. thesis, Technische Universität Berlin (2004)

Koch, T., Achterberg, T., Andersen, E., Bastert, O., Berthold, T., Bixby, R.E., Danna, E., Gamrath, G., Gleixner, A.M., Heinz, S., Lodi, A., Mittelmann, H., Ralphs, T., Salvagnin, D., Steffy, D.E., Wolter, K.: MIPLIB 2010. Math. Program. Comput. 3(2), 103–163 (2011)

Kwappik, C.: Exact Linear Programming. Universität des Saarlandes, Master thesis (1998)

Lehigh University. COR@L mixed integer programming collection. http://coral.ie.lehigh.edu/data-sets/mixed-integer-instances

Linderoth, J.T., Ralphs, T.K.: Noncommercial software for mixed-integer linear programming. In: Karlof, J. (ed.) Integer programming: theory and practice, pp. 253–303. CRC Press, Boca Raton (2005)

Mittelmann, H.D.: Benchmarks for Optimization Software (2010). http://plato.asu.edu/bench.html

NEOS Server. http://neos-server.org/neos

Neumaier, A., Shcherbina, O.: Safe bounds in linear and mixed-integer linear programming. Math. Program. 99(2), 283–296 (2004)

Nunez, M., Freund, R.M.: Condition measures and properties of the central trajectory of a linear program. Math. Program. 83, 1–28 (1998)

Ordónez, F., Freund, R.M.: Computational experience and the explanatory value of condition measures for linear optimization. SIAM J. Optim. 14(2), 307–333 (2003)

Pseudo-Boolean Competition (2010). http://www.cril.univ-artois.fr/PB10/

Renegar, J.: Some perturbation theory for linear programming. Math. Program. 65, 73–91 (1994)

Renegar, J.: Incorporating condition measures into the complexity theory of linear programming. SIAM J. Optim. 5, 506–524 (1995)

Renegar, J.: Linear programming, complexity theory and elementary functional analysis. Math. Program. 70, 279–351 (1995)

Steffy, D.E.: Topics in Exact Precision Mathematical Programming. Ph.D. thesis, Georgia Institute of Technology (2011)