Abstract

In this paper, pixel-based and object-oriented classifications were investigated for land-cover mapping in an urban area. Because image fusion can supply classification with improved input data, four fusion approaches were applied: the Gram-Schmidt Transform (GS), the Principal Component Transform (PC), the Haar wavelet, and the À Trous Wavelet Transform (ATWT). The fused image with the best quality was then used for classification. A Hyperion image and an IRS-PAN image covering a region near Tehran, Iran, were used to demonstrate the enhancement achieved by fusion and to assess the accuracy of the fused image relative to the original images. The evaluation of the fused images showed that the Haar wavelet approach preserves both spectral and spatial information well. Classification results were compared to evaluate the effectiveness of the two classification approaches. For the pan-sharpened image, the object-oriented procedure produced more accurate results (90.47 %) than the pixel-based classification method (77.33 %).


Introduction

The accuracy and reliability of urban image analysis based on panchromatic satellite imagery can be enhanced by using the additional spectral bands of a high spectral resolution image.

A PAN image has high spatial resolution but weak spectral resolution. In contrast, a multi-band image has high spectral resolution but low spatial resolution. Although the few spectral bands of multispectral sensors can be adequate for separating broad land-cover classes, their discrimination ability is limited when different types within the same class have to be identified. Hyperspectral sensors can be used to handle this issue. These sensors are characterized by a very high spectral resolution, which usually results in many spectral bands, so they can serve applications that need high discrimination ability in the spectral domain. Image fusion can combine a high spatial resolution panchromatic image and a high spectral resolution hyperspectral image into a new image with both high spatial and high spectral resolution. Much research on image fusion aims at developing new algorithms for visual improvement or better quantitative evaluation scores, whereas little research addresses the effect of fusion on practical applications such as image classification (Li and Li 2010). Teggi et al. (2003) carried out image classification using IRS-1C-PAN and TM data fused with the ‘à trous’ wavelet transform. Interestingly, all fused data yielded lower accuracies than the original data. Xu (2005) examined fusion algorithms on Landsat 7 ETM+ imagery and found that the fused image increased the classification accuracy.

Fusing panchromatic and hyperspectral images to obtain images with high spatial and spectral resolution can efficiently improve the accuracy of image classification. One of the most common applications of high spatial resolution imagery is the classification of urban land cover (Salehi 2012). Nevertheless, traditional spectral-based classification approaches are unsuccessful because of the complexity of urban scenery, the failure of traditional pixel-based classifiers to take the spatial properties of the image into account, and the mismatch between pixel size and the spatial attributes of objects such as buildings (Melesse et al. 2007). Advanced approaches are therefore needed that integrate not only the spectral information of the image but also its spatial information into the classification procedure. Classification of pervious surfaces such as vegetation and soil using the spectral information of high spatial resolution imagery yields high accuracies because of the large spectral difference between these classes (Salehi 2012). Difficulties arise, however, when the purpose is to extract impervious land cover classes. Fusion of hyperspectral and high resolution images is one way of dealing with this limitation, because hyperspectral data provide more effective separation of feature types based on their specific spectral reflectance properties. Hyperion imagery has been a focus of land cover classification studies to date (Pignatti et al. 2009).

Few studies have examined the combined use of object-based or pixel-based classification with image fusion. Pixel-based classification assigns a pixel to a class by considering its spectral similarity to that class or to other classes. Although these techniques are well developed, they do not make use of spatial information (Zhou and Robson 2001). In contrast, object-oriented analysis classifies objects instead of single pixels. This type of classification draws on the texture of the image data, which is ignored in traditional classifications (Blaschke and Hay 2001). Object-oriented image analysis merges spectral and spatial information, so that both are used as classification information (Flanders et al. 2003). Because improving the accuracy of thematic maps derived from remote sensing data is important, there is an extensive literature comparing classification methods (Foody 2004). The fundamental difference between pixel-based and object-based image classification is that in object-oriented image analysis the basic processing units are image segments. In addition, object-oriented classification is based on fuzzy logic: a soft classifier uses membership values to express an object’s assignment to a class (Matinfar et al. 2007).

In this paper, pixel-based and object-oriented image classifications were used for land-cover mapping in an urban area. Four land use/land cover classes were targeted: building, road, vegetation and bare soil.

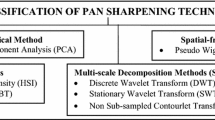

Gram-Schmidt (GS), Principal Component Analysis (PCA), Haar wavelet, and À trous Wavelet Transform (ATWT) pan-sharpening approaches were applied to combine the IRS-PAN (high spatial resolution image of CARTOSAT-1) and Hyperion (high spectral resolution) data for a mainly urban area. Accuracy assessment of both the supervised pixel-based and the object-oriented classification was carried out on the fused and original images to identify the best result.

The challenges and limitations of this research include the unavailability of suitable panchromatic and hyperspectral images acquired at the same time for the fusion process, which are required to reach high classification accuracies.

Materials and Method

Study Area and Datasets

For this study, an EO-1 Hyperion Level 1R (L1R) image (USGS) was used as the high spectral resolution image. It was acquired on 29th October, 2009, over Nasim-shahr, Tehran, Iran. In addition, a 2.5 m IRS-PAN (CARTOSAT-1) high spatial resolution image was acquired on 31st December, 2010 over the same area. The Hyperion data contain 220 bands at 30 m resolution covering the spectral range 400–2500 nm, whereas the spectral range of the IRS-PAN data is 500–850 nm. Because image fusion is central to this study and the two datasets should cover nearly the same spectral range, a spectral subset of the Hyperion data was selected in the same wavelength range as the IRS-PAN image, i.e. 500–850 nm (42 spectral bands). The area consists of urban segments that include residential and undeveloped places such as unmanaged soil, providing diverse classes. In addition to the original bands, a digital vector map was used in the object-oriented analysis. Furthermore, a multi-spectral GeoEye-1 image from 2010 with a spatial resolution of 0.5 m was used as reference data for the accuracy assessment of the classified images. The study area is shown in Fig. 1. Tables 1 and 2 give the sensor details of Cartosat-1 (IRS) and Hyperion (EO-1).

Image Pre-Processing

Several preliminary image processing operations have to be carried out on the raw images before classification. These steps comprise atmospheric correction, conversion to reflectance, co-registration and image fusion. The Hyperion data were processed using ENVI 4.7. Pre-processing included the removal of overlapping and inactive bands. Atmospheric correction of the Hyperion image was then performed with the FLAASH algorithm provided in ENVI 4.7. Because the images come from different satellite platforms, they all have to be registered to the same projection and geographic coordinate system.

Hence, geometric correction was carried out on the Hyperion image using PCI Geomatica. The co-registration was based on a third-order polynomial transformation, and the image-to-image registration accuracy was 0.81 pixels (RMSE). For the registration, 25 ground control points (GCPs) and 8 check points were selected to reach an acceptable RMSE. The GCPs were well distributed over the images and were placed on distinct points, such as buildings and road junctions.
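The registration figures can be illustrated with a short sketch. It shows why a third-order polynomial needs at least 10 control points per axis (so 25 GCPs leave redundancy) and how an RMSE in pixels is computed from check-point residuals. The residual values and the function names `polynomial_terms` and `rmse` are hypothetical and are not taken from the study.

```python
import math

def polynomial_terms(order):
    """Number of coefficients per axis in a 2-D polynomial of the given order."""
    return (order + 1) * (order + 2) // 2

def rmse(residuals):
    """Root-mean-square error of (dx, dy) residuals, in pixels."""
    squared = [dx * dx + dy * dy for dx, dy in residuals]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical residuals at four check points (pixels); not the study's values.
check_residuals = [(0.5, -0.4), (-0.6, 0.3), (0.2, 0.7), (-0.3, -0.5)]

print(polynomial_terms(3))              # coefficients to solve for per axis
print(round(rmse(check_residuals), 3))  # pooled RMSE over the check points
```

Reporting the RMSE on check points rather than on the GCPs themselves gives an error estimate that is independent of the points used to fit the transformation.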

Image Fusion

The image fusion literature describes the ideal fused image as one with the lowest colour distortion and the highest spatial resolution, retaining all the spatial properties of the high resolution image (Sarup and Singhai 2011). This ideal, however, is generally attainable only in theory (Zhou et al. 1998). In this paper, four methods were used for merging: Gram-Schmidt (GS), Principal Component Analysis (PCA), Haar wavelet, and À trous Wavelet Transform (ATWT). All of these methods preserve the number of bands: the 42 input bands yield 42 bands in the fused output.

Gram-Schmidt Transform (GS)

GS spectral sharpening extracts the high-frequency variance of a high resolution image and then inserts it into the multi-band frame of a corresponding low resolution image. In this algorithm, algebraic operations act on the images at the level of the individual pixel to apportion spectral information among the bands of the multi-band image. The replacement (high resolution) image is substituted for one of the bands of the initial image and can then be assigned the correct spectral brightness. In this paper, we used the GS algorithm provided in the ENVI software for the fusion process.

Principal Component Transform (PC)

The PC transform is a mathematical process that converts a multivariate dataset of correlated variables into a dataset of uncorrelated linear combinations of the original variables. For images, it produces an uncorrelated feature space that can be used for further analysis in place of the original multi-band feature space. The benefit of PC fusion is that the number of bands is not limited (Klonus and Ehlers 2009). It is, however, a statistical approach, which means that it is sensitive to the area being sharpened. The fusion results may therefore differ depending on the selected image subset (Jensen 2007). The ENVI package was used to implement this algorithm.
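A minimal NumPy sketch of component-substitution PC fusion follows. It assumes the multi-band image has already been resampled to the PAN grid and that the PAN band, after matching its mean and standard deviation, replaces the first principal component. The function name `pca_pansharpen` is hypothetical, and the ENVI implementation used in the study may differ in detail.

```python
import numpy as np

def pca_pansharpen(ms, pan):
    """ms: (bands, rows, cols) resampled to the PAN grid; pan: (rows, cols)."""
    bands, rows, cols = ms.shape
    flat = ms.reshape(bands, -1).astype(float)
    mean = flat.mean(axis=1, keepdims=True)
    centered = flat - mean

    # Eigen-decomposition of the band covariance matrix, largest variance first.
    eigvals, eigvecs = np.linalg.eigh(np.cov(centered))
    order = np.argsort(eigvals)[::-1]
    eigvecs = eigvecs[:, order]

    pcs = eigvecs.T @ centered          # forward PC transform
    pc1 = pcs[0]

    # Match the PAN mean and standard deviation to PC1 before substitution.
    p = pan.reshape(-1).astype(float)
    p = (p - p.mean()) / (p.std() + 1e-12) * pc1.std() + pc1.mean()
    pcs[0] = p

    fused = eigvecs @ pcs + mean        # inverse PC transform
    return fused.reshape(bands, rows, cols)

# Tiny synthetic example: 4 bands on a 6 x 6 grid (values are random, not real data).
rng = np.random.default_rng(0)
fused = pca_pansharpen(rng.random((4, 6, 6)), rng.random((6, 6)))
print(fused.shape)
```

Because the covariance matrix is estimated from the pixels passed in, a different image subset gives different eigenvectors, which is the area dependence noted above.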

Haar Wavelet

The wavelet bases commonly used are orthogonal and biorthogonal wavelets. The Haar wavelet is the best-known and simplest discrete orthogonal wavelet. It proceeds by averaging and differencing, storing detail coefficients, discarding data, and reconstructing the matrix so that the resulting matrix is similar to the original matrix (Jaywantrao and Hasan 2012). In general, the discrete wavelet transform decomposes an image into low-frequency and high-frequency bands at several levels, from which the image can also be reconstructed. When images are fused with this technique, the different frequencies are treated in different ways, which improves the quality of the resulting image. The ENVI package was used to implement this algorithm.
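The averaging-and-differencing idea can be sketched with a one-level 2-D Haar transform in NumPy. The fusion rule shown here (keep the approximation of the multi-band image, take the detail sub-bands from the statistics-matched PAN image) is one common substitution scheme and is an assumption; it is not necessarily the rule applied by the ENVI tool used in the study. All function names are hypothetical.

```python
import numpy as np

def haar_dwt2(img):
    """One-level 2-D Haar transform; img must have even height and width."""
    a = img[0::2, 0::2].astype(float)
    b = img[0::2, 1::2].astype(float)
    c = img[1::2, 0::2].astype(float)
    d = img[1::2, 1::2].astype(float)
    ll = (a + b + c + d) / 2.0   # approximation (scaled local average)
    lh = (a + b - c - d) / 2.0   # detail: difference between rows
    hl = (a - b + c - d) / 2.0   # detail: difference between columns
    hh = (a - b - c + d) / 2.0   # detail: diagonal difference
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    rows, cols = ll.shape
    out = np.empty((2 * rows, 2 * cols))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    out[0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    out[1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return out

def haar_fuse_band(band, pan):
    """Keep the band's approximation and take spatial detail from the PAN image."""
    band = band.astype(float)
    pan = pan.astype(float)
    # Match PAN to the band's mean and spread so the details are on a comparable scale.
    pan = (pan - pan.mean()) / (pan.std() + 1e-12) * band.std() + band.mean()
    ll_band, _, _, _ = haar_dwt2(band)
    _, lh, hl, hh = haar_dwt2(pan)
    return haar_idwt2(ll_band, lh, hl, hh)
```

Applying `haar_fuse_band` to each of the spectral bands in turn (after resampling them to the PAN grid) would give a fused cube with the same number of bands as the input.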

À Trous Wavelet Transform

Multiresolution analysis based on the wavelet transform (WT) decomposes the image into several channels according to their local frequency content. Whereas the Fourier transform gives an idea of the frequency content of an image, the wavelet representation is more advanced and provides good localization in both the frequency and the space domain. The discrete wavelet transform can be carried out with a special scheme, the so-called à trous algorithm. One considers that the sampled data are the scalar products, at the pixels, of a function with a scaling function that corresponds to a low-pass filter. In this work, the approach was run as an ENVI extension (Canty 2006). This ATWT program only works if the ground resolution ratio of the multispectral (MS) image to the PAN image is exactly 2:1 or 4:1; for IKONOS, for example, the MS image is 4 m and the PAN image is 1 m. In our case the ratio is 12:1, so the Hyperion data were re-sampled to 10 m to obtain a ratio of 4:1 before the algorithm was applied.
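The à trous scheme can be sketched as repeated low-pass filtering with a kernel whose taps are spread apart by inserting "holes" at each level. The sketch below assumes the widely used B3-spline kernel and an additive injection of the PAN wavelet planes into a band already resampled to the PAN grid. Both choices, and all function names, are assumptions rather than a description of the ENVI extension used in the study.

```python
import numpy as np

B3_KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0

def atrous_smooth(img, level):
    """Separable low-pass filter with taps spaced 2**level pixels apart."""
    step = 2 ** level
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (2 * step, 2 * step)
        padded = np.pad(out, pad, mode="reflect")
        acc = np.zeros_like(out)
        for k, weight in enumerate(B3_KERNEL):
            window = [slice(None), slice(None)]
            window[axis] = slice(k * step, k * step + out.shape[axis])
            acc += weight * padded[tuple(window)]
        out = acc
    return out

def atrous_decompose(img, levels):
    """Return the coarse residual and the list of wavelet (detail) planes."""
    approx = np.asarray(img, dtype=float)
    planes = []
    for level in range(levels):
        smoother = atrous_smooth(approx, level)
        planes.append(approx - smoother)   # detail lost by this smoothing step
        approx = smoother
    return approx, planes

def atwt_fuse_band(band, pan, levels=2):
    """Add the PAN detail planes to one spectral band on the PAN grid."""
    _, planes = atrous_decompose(pan, levels)
    return np.asarray(band, dtype=float) + sum(planes)

# Resolution ratios from the text: 30 m / 2.5 m = 12, and 10 m / 2.5 m = 4 after resampling.
print(30 / 2.5, 10 / 2.5)
```

Two decomposition levels are often paired with a 4:1 ratio because each level halves the effective resolution. No decimation takes place, so every plane keeps the full image size and the original image is recovered exactly by summing the residual and the planes.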

Results of Image Fusion

The spectral quality of the pan-sharpened images was assessed with the ERGAS and RASE indices. For the spatial quality evaluation, the Average Gradient (AG) was used, and the final results were analyzed. The fused images are shown in Fig. 2.
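The three indices can be written compactly in NumPy. The paper does not state its formulas, so the definitions below follow the forms commonly given in the fusion literature and should be read as assumptions: ERGAS and RASE are built from per-band RMSE against the reference image, and AG averages the local gradient magnitude of one band.

```python
import numpy as np

def band_rmse(ref, fused):
    """Per-band RMSE; both inputs are (bands, rows, cols) on the same grid."""
    diff = ref.astype(float) - fused.astype(float)
    return np.sqrt((diff ** 2).mean(axis=(1, 2)))

def ergas(ref, fused, ratio):
    """ratio = PAN pixel size / multi-band pixel size (for example 2.5 / 10)."""
    means = ref.astype(float).mean(axis=(1, 2))
    rmse = band_rmse(ref, fused)
    return 100.0 * ratio * np.sqrt(np.mean((rmse / means) ** 2))

def rase(ref, fused):
    """Relative average spectral error, in percent of the mean reference value."""
    rmse = band_rmse(ref, fused)
    return 100.0 / ref.astype(float).mean() * np.sqrt(np.mean(rmse ** 2))

def average_gradient(img):
    """Mean local gradient magnitude of a single band (larger means sharper)."""
    img = img.astype(float)
    gx = img[:-1, 1:] - img[:-1, :-1]
    gy = img[1:, :-1] - img[:-1, :-1]
    return np.sqrt((gx ** 2 + gy ** 2) / 2.0).mean()
```

Lower ERGAS and RASE values indicate less spectral distortion, while a higher AG indicates more spatial detail, so the three numbers are read together when ranking fusion methods.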

Among the images produced by the fusion approaches, the ATWT fused image shows the most colour distortion with respect to the original image, because the method is extremely sensitive to co-registration errors and to re-sampling effects. The assessment values are presented in Table 1. Quality is considered good when the ERGAS and RASE indices are small and the average gradient is large. The ERGAS and RASE values in Table 1 indicate that the Haar wavelet method gives the best spectral quality in the urban area. The GS and PC methods gave the same results, while ATWT had the highest values and therefore the worst result.

Nearly all the fusion techniques perform well in increasing spatial detail and conserving spectral information. For a comparative analysis, however, the experimental results have to be evaluated carefully. Based on the experimental results, the Haar wavelet pan-sharpened image was selected for the next step, i.e., image classification.

Classification Methods

Image classification was carried out with two methods: the support vector machine (SVM) as a pixel-based classifier, and object-based classification.

Support Vector Machines as a Pixel Based Classification

The support vector machine (SVM) classification was carried out in ENVI® 4.7. SVM is a supervised machine learning approach that performs classification based on statistical learning theory (Licciardi et al. 2009). The basic idea is to map the input vectors into a feature space, either linearly or non-linearly, depending on the choice of kernel function (Petropoulos et al. 2012). The linear, polynomial, radial basis and sigmoid functions are the four kernel functions usually used in SVM (Petropoulos et al. 2012). SVM outperforms many traditional methods in different applications. In this study, the SVM classifier was applied to the pan-sharpened imagery to map the land cover of the study area using the training data. Training samples for the classes (building, road, vegetation, soil) were collected from the pan-sharpened imagery following a stratified random sampling process, based on photo-interpretation of the high resolution imagery provided by USGS and on our knowledge of the study area. Nearly 130 pixels per class (520 pixels in total) were identified as training data representing the classes defined in our classification scheme. It is important that the training samples be typical of the class to be found. Suitable endmembers were therefore identified with the pixel purity index (PPI) procedure: the PPI image was used to locate pure pixels of each class, which were then collected as training samples. In our case, the radial basis function (RBF) kernel was selected for the SVM classification. The RBF kernel is preferred because it requires only a small number of parameters to be set and is known to produce good results (Widjaja et al. 2008).

The input variables needed to run SVM in ENVI are the ‘gamma’ of the kernel function, the penalty value, the number of pyramid levels to use and the classification probability threshold. The γ parameter was set to the inverse of the number of spectral bands of the selected imagery (i.e., 0.023), while the penalty parameter was set to its highest value (i.e., 100), allowing no misclassification during training (Petropoulos et al. 2012). The pyramid parameter was set to zero, and a classification probability threshold of zero was chosen (Petropoulos et al. 2012). The classification result is shown in Fig. 3.
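The kernel setting can be made concrete with a few lines of plain Python. The sketch computes γ as the inverse of the 42 bands and evaluates the standard RBF kernel for two spectra. The two spectra are hypothetical values chosen for illustration, and the snippet does not reproduce ENVI's SVM itself.

```python
import math

def rbf_kernel(x, y, gamma):
    """K(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

n_bands = 42            # spectral bands in the fused image
gamma = 1.0 / n_bands   # about 0.0238, reported in the text as 0.023
penalty = 100.0         # penalty value reported in the text

# Two hypothetical 42-band reflectance spectra, for illustration only.
spectrum_a = [0.10 + 0.005 * i for i in range(n_bands)]
spectrum_b = [0.12 + 0.005 * i for i in range(n_bands)]

print(round(gamma, 4), rbf_kernel(spectrum_a, spectrum_b, gamma))
```

The kernel value approaches 1 for similar spectra and falls towards 0 as they diverge; scaling γ by the number of bands keeps the squared distance from growing simply because more bands are summed.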

Object-based Image Classification

Object-oriented classification does not operate on single pixels but on objects consisting of several pixels that have been grouped by image segmentation. eCognition version 7.0.9 was used for object-oriented analysis and classification. Segmentation is the first and most important step in object-oriented classification, and its purpose is to generate meaningful objects. In this research, the multi-resolution segmentation algorithm was used, a bottom-up region-merging approach that starts with single-pixel objects. Applying the vector map helped to control heterogeneity well. eCognition supports the import of a vector shape file as a thematic layer for use in the segmentation. Overlap in the segmentation step occurs when similar objects are close to each other, and it typically occurred among the building structures within the urban texture. For the experiment, several segmentations were carried out to find the most suitable parameters, which were selected by trial and error. The largest scale level that still outlines the borders of features properly should be selected. For this task, a scale factor of 30, a shape factor of 0.9 (colour factor of 0.1) and a compactness factor of 0.1 gave the best segmentation results.
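How the shape and compactness factors interact can be shown with simple arithmetic. The sketch assumes the usual formulation of the multi-resolution segmentation criterion, in which the colour weight is one minus the shape weight and the shape term is split between compactness and smoothness. The heterogeneity inputs and the function names are hypothetical.

```python
def criterion_weights(shape, compactness):
    """Split the homogeneity criterion into its three effective weights."""
    colour = 1.0 - shape
    return {
        "colour": colour,
        "compactness": shape * compactness,
        "smoothness": shape * (1.0 - compactness),
    }

def fusion_cost(h_colour, h_compact, h_smooth, shape, compactness):
    """Weighted heterogeneity increase for merging two candidate segments."""
    w = criterion_weights(shape, compactness)
    return (w["colour"] * h_colour
            + w["compactness"] * h_compact
            + w["smoothness"] * h_smooth)

# Parameters reported in the text: shape 0.9 and compactness 0.1.
weights = criterion_weights(shape=0.9, compactness=0.1)
print(weights)

# Hypothetical heterogeneity increases for one candidate merge.
print(fusion_cost(50.0, 20.0, 10.0, shape=0.9, compactness=0.1))
```

With these settings most of the weight falls on the smoothness of object outlines, with only a small share on spectral (colour) homogeneity. A merge is accepted while its cost stays below a threshold derived from the scale parameter, so a larger scale value produces larger objects.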

The next step is classification. The eCognition software provides two general classifiers: a nearest neighbour classifier and fuzzy membership functions. Both operate as class descriptors. The nearest neighbour classification uses samples of the various classes to assign membership values. After a representative set of sample image objects has been defined for each class, the algorithm looks for the nearest sample image object in the feature space for each image object. In contrast, fuzzy membership functions describe intervals of feature values within which objects do or do not belong to a specific class.
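The two class descriptors can be contrasted in a minimal sketch. The nearest neighbour rule assigns an object to the class of its closest sample in feature space, whereas a fuzzy membership function maps a feature value to a degree of membership between 0 and 1. The linear ramp, the feature values and the thresholds below are hypothetical and do not reproduce eCognition's internal functions.

```python
def ramp_membership(value, low, high):
    """Linear ramp: 0 at or below `low`, 1 at or above `high`."""
    if value <= low:
        return 0.0
    if value >= high:
        return 1.0
    return (value - low) / (high - low)

def nearest_sample_class(features, samples):
    """samples: list of (class_name, feature_vector); returns the nearest class."""
    def sq_dist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))
    return min(samples, key=lambda s: sq_dist(features, s[1]))[0]

# Hypothetical object described by (vegetation fraction, brightness).
samples = [("vegetation", (0.8, 0.1)), ("road", (0.1, 0.7))]
obj = (0.7, 0.2)

print(nearest_sample_class(obj, samples))
print(ramp_membership(obj[0], low=0.2, high=0.6))
```

A membership value can be thresholded or combined with other memberships to refine a nearest neighbour result, which is the kind of refinement step a fuzzy rule set provides.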

In this study, a classification refinement based on the fuzzy membership function classifier was designed to assign each object to one of four land cover classes (roads, buildings, vegetation, and bare soil). After sample selection (approximately 10 objects per class) and classification with the Standard Nearest Neighbour (SNN) technique based on a representative set of features, some classes still needed to be corrected. Various parametric criteria (Table 2) based on membership functions (MF) were therefore applied as an additional step to obtain a more accurate classification. In other words, fuzzy membership thresholds on the features selected for the nearest neighbour classification were used to refine the results of the SNN classifier.

The feature sets used for extracting each class in this research are presented in Table 2. Additional unmixing layers were added to the project in the eCognition software to improve the performance of the object-based classification. The endmembers available in the unmixing layers (fraction maps) generally represented impervious surface, vegetation and soil. Figure 4 shows the fraction map layers imported into eCognition. These layers were obtained by running the “VIPER Tools” under ENVI® 4.7. The wizard performs spectral unmixing on the pan-sharpened image using Multiple Endmember Spectral Mixture Analysis (MESMA), and the final fraction images of four physical components were produced: vegetation, road, building, and soil. Fuzzy membership thresholds on the features selected for the SNN classification were then used to refine the results of the SNN classifier. The resulting combined SNN and fuzzy membership function classification handled the distribution of classes better. Overall, the initial results always require refinement, and the improvement process usually takes numerous classification iterations until the user is satisfied that the result is the best that can be produced. The object-based classification map based on the fused image is shown in Fig. 5a.

Fig. 4 Fraction images generated by MESMA from the pan-sharpened image obtained with the Haar wavelet pan-sharpening method: building fraction map (a), road fraction map (b), soil fraction map (c), and vegetation fraction map (d). In a, b and d, brighter areas indicate higher fractions and darker areas indicate lower fractions. In c, brighter areas indicate lower soil fractions and darker areas indicate higher soil fractions

Furthermore, an object-based classification was carried out on the IRS-PAN image. First, the multi-resolution segmentation algorithm was applied using the vector map. For this task, a scale factor of 30, a shape factor of 0.9 (colour factor of 0.1), and a compactness factor of 0.1 were selected. The standard nearest neighbour classifier was then run with training samples selected from the PAN image, following the same classification procedure as for the fused image. However, only the panchromatic band was used to classify image objects, in combination with texture features such as GLCM contrast, GLCM entropy and dissimilarity, and shape features such as density, border index and length/width. The result of the PAN classification is displayed in Fig. 5b.

Classification Accuracy Assessment

The confusion matrix and the measures derived from it, namely overall accuracy, user’s accuracy, producer’s accuracy, and the kappa coefficient, were used to evaluate and analyze each classification technique.
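The four measures follow directly from the confusion matrix, as the sketch below shows. The matrix is hypothetical (80 reference points, 20 per class) and is not the study's Table 3 or Table 4; rows are taken to be the classified labels and columns the reference labels.

```python
def accuracy_report(matrix, classes):
    """matrix[i][j]: samples classified as class i whose reference class is j."""
    n = len(classes)
    total = sum(sum(row) for row in matrix)
    diag = [matrix[i][i] for i in range(n)]
    row_tot = [sum(matrix[i]) for i in range(n)]
    col_tot = [sum(matrix[i][j] for i in range(n)) for j in range(n)]

    overall = sum(diag) / total
    # Agreement expected by chance, from the row and column marginals.
    chance = sum(r * c for r, c in zip(row_tot, col_tot)) / total ** 2
    kappa = (overall - chance) / (1.0 - chance)
    users = {classes[i]: diag[i] / row_tot[i] for i in range(n)}
    producers = {classes[j]: diag[j] / col_tot[j] for j in range(n)}
    return overall, kappa, users, producers

classes = ["building", "road", "vegetation", "soil"]
# Hypothetical counts for illustration only.
matrix = [
    [18, 2, 0, 1],
    [2, 16, 1, 3],
    [0, 1, 18, 2],
    [0, 1, 1, 14],
]

overall, kappa, users, producers = accuracy_report(matrix, classes)
print(overall, kappa)
print(users)
print(producers)
```

User's accuracy is computed along the rows (how reliable a mapped class is), while producer's accuracy is computed down the columns (how well a reference class was captured). The kappa coefficient discounts the agreement that would be expected by chance.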

The map produced by the per-pixel method contains several incorrectly classified pixels, whereas the map produced by the object-based classifier appears more accurate (Figs. 3 and 5a). Table 3 shows that the per-pixel classifier yielded a low overall accuracy (77.33 %) and kappa coefficient (0.7). For the accuracy assessment of the pixel-based classification, 80 sample points were selected using a stratified random sampling strategy, i.e. 20 points for each of the 4 classes.

The overall accuracy of the pixel-based classification was only about 77.33 %. The lowest producer’s accuracy (34.55 %) was obtained for the soil class. The vegetation class had the second-lowest producer’s accuracy (84.09 %) because of considerable spectral confusion between vegetation and road.

Signature confusion between roads and buildings is not unusual, as both classes share many surface materials, such as asphalt. Signature confusion between roads and soil, however, seemed unusual. The spectral confusion between road and vegetation may have arisen because some vegetated areas within and along the edges of roads were partially included in road objects.

In contrast to the classical method, the object-based accuracy assessment used a total of 50 objects randomly selected from the study area to create a training and test area mask. To assess the final classification result, an error matrix was produced by the eCognition software based on this mask. The method uses test areas from reference information to compute the error matrix.

In this approach, a training and test area mask containing the test areas is generated from the GeoEye image. The mask is then used to determine the agreement between its test areas and the classified image.

The accuracy evaluation of the object-based classification gave a considerably higher overall accuracy (90.47 %) and kappa coefficient (0.85) (Table 4). The building and road classes produced remarkably high producer’s accuracies (95 %, 100 %). It is worth noting that spatial information, as well as spectral information, plays an important role in selecting segmented objects. The soil and vegetation classes had the lowest user’s accuracy (75 %), whereas the other classes all achieved at least 83.33 %. This accuracy was low because of some signature confusion with the road and vegetation classes, as well as among building, road and soil. The building and road classes reached the highest user’s accuracies (95 %, 83.33 %) and also the highest producer’s accuracies. The comparison of overall accuracy and kappa coefficient across the classifications shows that the fused image provides more meaningful features and better results. The fused image gives a higher level of classification accuracy, as demonstrated in Table 5. For the PAN classified image, as shown in Fig. 5b, most image objects were classified incorrectly.

Overall, the lack of suitable reference data and the difference in acquisition time between the Hyperion and Cartosat-1 images used to generate the pan-sharpened input image kept the classification accuracies lower than expected.

Conclusions

To exploit the advantages of fusion for image classification, four fusion algorithms were applied. Two supervised classifications were then performed on the fused images, followed by a quantitative assessment of the fused and classified images. The best results were obtained when the images were fused with the Haar wavelet method and then classified using object-oriented image classification.

As expected, pan-sharpening preserved the spatial content of the PAN band and the spectral information of the hyperspectral image, which led to accurate classification. Both classification techniques can reach high classification accuracy when combined with pan-sharpened imagery, taking advantage of its high spectral and spatial resolution. As shown in this paper, the classification of urban land-cover classes with the per-pixel method (i.e., support vector machines) is not very successful, because many urban land-cover classes are spectrally very similar and pixel-based classifications do not consider the spatial arrangement of pixels. The classification maps generated by the SVM and the object-based classification from the pan-sharpened imagery of the study area are shown in Figs. 3 and 5. Table 5 summarizes the final results of the classification accuracy assessment. These results showed that the object-based method performed better than the SVM classification.

The purpose of this study was to assess the combined effectiveness of image fusion with the support vector machine and object-based classification methods for urban land cover classification. In general, the results suggest that low-cost Hyperion hyperspectral satellite imagery together with object-oriented classification can support accurate classification. The pixel-based technique was not very successful in distinguishing urban land-cover classes, as confirmed by the classification of the pan-sharpened Hyperion image with the support vector machine. The object-based classifier achieved a considerably better overall accuracy (90.47 %) than the SVM classifier (77.33 %).

References

Blaschke, T., & Hay, G. J. (2001). Object-oriented image analysis and scale-space: theory and methods for modeling and evaluating multiscale landscape structure. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 34(4), 22–29.

Canty, M. J. (2006). Evaluation of different fusion algorithms. Boca Raton: CRC Press.

Flanders, D., Hall-Beyer, M., & Pereverzoff, J. (2003). Preliminary evaluation of eCognition object-based software for cut block delineation and feature extraction. Canadian Journal of Remote Sensing, 29(4), 441–452.

Foody, G. M. (2004). Thematic map comparison: evaluating the statistical significance of differences in classification accuracy. Photogrammetric Engineering and Remote Sensing, 70(5), 627–634.

Jaywantrao, P. G., & Hasan, A. P. S. (2012). Application of Image Fusion Using Wavelet Transform In Target Tracking System. International Journal of Engineering, 1(8).

Jensen, J. (2007). Remote sensing of the environment: an earth resource perspective. Prentice Hall series in geographic information science.

Klonus, S., & Ehlers, M. (2009) Performance of evaluation methods in image fusion. In Information Fusion, 2009. FUSION’09. 12th International Conference on, (pp. 1409–1416): IEEE.

Li, S., & Li, Z. (2010) Effects of image fusion algorithms on classification accuracy. In Geoinformatics, 2010 18th International Conference on, (pp. 1–6): IEEE.

Licciardi, G., Pacifici, F., Tuia, D., Prasad, S., West, T., Giacco, F., Thiel, C., Inglada, J., Christophe, E., Chanussot, J., Gamba P. (2009). Decision fusion for the classification of hyperspectral data: outcome of the 2008 GRS-S data fusion contest. Geoscience and Remote Sensing, IEEE Transactions on, 47(11), 3857–3865.

Matinfar, H., Sarmadian, F., AlaviPanah, S., & Heck, R. (2007). Comparisons of object-oriented and pixel-based classification of land use/land cover types based on Landsat7, ETM+ spectral bands (case study: arid region of Iran). American-Eurasian Journal of Agricultural & Environmental Sciences, 2(4), 448–456.

Melesse, A. M., Weng, Q., Thenkabail, P. S., & Senay, G. B. (2007). Remote sensing sensors and applications in environmental resources mapping and modelling. Sensors, 7(12), 3209–3241.

Petropoulos, G. P., Arvanitis, K., & Sigrimis, N. (2012). Hyperion hyperspectral imagery analysis combined with machine learning classifiers for land use/cover mapping. Expert systems with Applications, 39(3), 3800–3809.

Pignatti, S., Cavalli, R. M., Cuomo, V., Fusilli, L., Pascucci, S., Poscolieri, M., Santinib, F. (2009). Evaluating Hyperion capability for land cover mapping in a fragmented ecosystem: pollino national park, Italy. Remote Sensing of Environment, 113(3), 622–634.

Salehi, B. (2012). Urban Land Cover Classification and Moving Vehicle Extraction Using Very High Resolution Satellite Imagery. University of New Brunswick, Department of Geodesy and Geomatics Engineering.

Sarup, J., & Singhai, A. (2011). Image fusion techniques for accurate classification of remote sensing data. International Journal of Geomatics and Geosciences, 2(2), 602–612.

Teggi, S., Cecchi, R., & Serafini, F. (2003). TM and IRS-1C-PAN data fusion using multiresolution decomposition methods based on the ‘à trous’ algorithm. International Journal of Remote Sensing, 24(6), 1287–1301.

Widjaja, E., Zheng, W., & Huang, Z. (2008). Classification of colonic tissues using near-infrared raman spectroscopy and support vector machines. International Journal of Oncology, 32(3), 653–662.

Xu, H. Q. (2005). Study on data fusion and classification of landsat 7 ETM + imagery. Journal of Remote Sensing, 9(2), 186–194.

Zhou, Q., & Robson, M. (2001). Automated rangeland vegetation cover and density estimation using ground digital images and a spectral-contextual classifier. International Journal of Remote Sensing, 22(17), 3457–3470.

Zhou, J., Civco, D., & Silander, J. (1998). A wavelet transform method to merge landsat TM and SPOT panchromatic data. International Journal of Remote Sensing, 19(4), 743–757.

Cite this article

Zoleikani, R., Zoej, M.J.V. & Mokhtarzadeh, M. Comparison of Pixel and Object Oriented Based Classification of Hyperspectral Pansharpened Images. J Indian Soc Remote Sens 45, 25–33 (2017). https://doi.org/10.1007/s12524-016-0573-6
