Abstract

This paper presents a thorough comparison of the performance of pan-sharpening techniques belonging to the spatial, spectral, scale-space and spatial-frequency domains, for Very High Resolution satellite data. With the availability of new Very High Resolution sensors, especially the World View-2 sensor, which provides data at sub-meter level, the fusion of Panchromatic (PAN) and Multispectral (MS) images needs to be investigated further. The pan-sharpening techniques Hue-Saturation-Intensity, Brovey Transform, Principal Components Analysis, Discrete Wavelet Transform, Stationary Wavelet Transform, Non Sub-sampled Contourlet Transform and the Pseudo Wigner Distribution (PWD) fusion method have been selected for the fusion of PAN and MS images of the World View-2 sensor. The performance of each technique has been compared using various evaluation indicators, such as Root Mean Square Error, Peak Signal-to-Noise Ratio, Correlation Coefficient and Universal Image Quality Index. It is found that the PWD-based fusion technique gives good results, with a good trade-off between the preservation of spectral information and the enhancement of spatial resolution.


Introduction

One of the challenging applications in satellite image fusion is to fuse PAN and MS images acquired from different satellite sensors without introducing inconsistencies or artifacts, which may degrade the attributes of the fused image. Images with high spatial and spectral resolution are necessary for various sophisticated tasks in urban scenarios and for land-cover classification (Karathanassi et al. 2007; Nikolakopoulos 2008).

A number of methods, as well as software tools, have been developed for pan-sharpening over the years (Pohl and van Genderen 1998). This period can be divided into two generations, i.e. before and after the launch of very high resolution satellite sensors such as IKONOS and QuickBird. With the availability of high resolution satellite data, several researchers have focused on developing algorithms (Welch and Ehlers 1987; Chavez et al. 1991; Ranchin et al. 1996; Padwick et al. 2010) and, simultaneously, on comparing these algorithms (Ranchin and Wald 2000; Alparone et al. 2007; Rodriguez-Galiano et al. 2012; Yuhendra et al. 2012; Ghosh and Joshi 2013). The pan-sharpening techniques developed can be placed into different categories: (i) color, (ii) statistical, (iii) multi-scale decomposition and (iv) spatial-frequency methods. The accuracy of pan-sharpened images is evaluated within a well-defined mathematical framework, i.e. by using different evaluation indicators (Karathanassi et al. 2007; Beaulieu et al. 2003; Wang and Bovik 2002; Rajput et al. 2014). A large number of studies have been carried out to highlight the utility and significance of fusion techniques using very high resolution satellite data (Zhang 2004; Wang et al. 2005; Karathanassi et al. 2007; Nikolakopoulos 2008). However, these studies compared fusion techniques using very few quantitative indicators, which are incapable of evaluating the overall image quality. Furthermore, none of these studies has compared fusion methods belonging to the spatial, spectral, scale-space and joint spatial-frequency domains altogether, using VHR data for the analysis of the urban environment.

With the availability of new sensors, especially the World View-2 sensor, it is of utmost significance to further investigate the performance of some of the prevalent fusion techniques using World View-2 data, together with different evaluation indicators. The objective metrics have been selected so that image attributes such as spatial, spectral and structural similarity information are all represented.

Thus, the objective of the present study is to evaluate the applicability and performance of various pan-sharpening techniques belonging to different categories, using a variety of evaluation indicators, especially for the analysis of urban land-cover.

Methodology

An Overview of Image Fusion Methods

A large number of image fusion techniques have been developed. These can be classified as color, statistical, scale-space and spatial-frequency methods (Fig. 1). A brief description of these techniques is given in the following sections.

Fusion Method Based on Hue-Saturation-Intensity

The HSI pan-sharpening technique is the oldest and simplest known image fusion method. One of the main advantages of the HSI transformation in image fusion is that it separates the spatial information, carried by the Intensity (I) component, from the spectral information, carried by the Hue (H) and Saturation (S) components (Carper et al. 1990; Chavez et al. 1991; Kang et al. 2008). The transformation converts the Red, Green and Blue bands into Hue (H), Saturation (S) and Intensity (I) components. After Image Registration (IR) and Re-sampling (RS), the PAN image is radiometrically transformed by matching its histogram to that of the Intensity component, in order to eliminate illumination and atmospheric differences. The PAN image is then contrast-stretched so that the new PAN image has the same mean and variance as the Intensity image, and it replaces the Intensity image. Finally, the new I image, together with the Hue and Saturation components, is transformed back into the RGB colour space by applying the inverse HSI transform.

Different transformations have been developed to transfer a colour image from the RGB space to the HSI space. A common one is based on the HSI colour model, which is popular because it is based on human colour perception (Shettigara 1992; Pohl and Van Genderen 1998). The conversion from RGB space to HSI space is described by Eq. 1.

In this study, the intensity has been computed as the average of the three RGB values, \( I = (R + G + B)/3 \), as given in Eq. 1 (Smith 1978; Núñez et al. 1999; González-Audícana et al. 2004).
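The HSI substitution steps can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the implementation used in the study: it assumes the linear HSI model with \( I = (R + G + B)/3 \), for which replacing I by the new PAN image and applying the inverse transform amounts to adding the difference (new PAN - I) to every band (the so-called fast IHS formulation), and it approximates the histogram-matching and contrast-stretching steps by matching the mean and standard deviation of PAN to those of I. The helper names `match_mean_std` and `hsi_fuse` are hypothetical.

```python
import numpy as np

def match_mean_std(src, ref):
    """Stretch src so that its mean and standard deviation equal those of ref
    (a simplified stand-in for histogram matching plus contrast stretching)."""
    return (src - src.mean()) / (src.std() + 1e-12) * ref.std() + ref.mean()

def hsi_fuse(ms_rgb, pan):
    """Fast intensity substitution for the linear HSI model (hypothetical helper).

    ms_rgb: (rows, cols, 3) MS image already resampled to the PAN grid.
    pan:    (rows, cols) panchromatic image.
    """
    ms = np.asarray(ms_rgb, dtype=float)
    intensity = ms.mean(axis=2)                       # I = (R + G + B) / 3
    new_pan = match_mean_std(np.asarray(pan, dtype=float), intensity)
    # With a linear HSI transform, substituting I by new_pan and inverting
    # adds the same difference (new_pan - I) to every band.
    return ms + (new_pan - intensity)[..., None]
```

Because the same difference is added to all three bands, the band-to-band differences (and therefore H and S in the linear model) are unchanged, while the intensity of the result equals the stretched PAN image.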

Fusion Method Based on Brovey Transform

The Brovey Transform (BT) is a simple method to fuse data from different sensors, based on the chromaticity transformation (Gillespie et al. 1987). This method falls under the category of colour-based techniques: each fused band is produced by multiplying the corresponding band of the original MS image by the ratio of the high-resolution PAN data to the intensity image (the sum of the colour bands) (Liu and Moore 1998; Liu 2000). The Brovey transformation is given by Eq. 2:

\( {\text{FUS}}_{i} = \frac{{\text{MS}}_{i}}{\sum_{j = 1}^{3} {\text{MS}}_{j}} \times {\text{PAN}},\quad i = 1,2,3 \)   (2)

where \( \left[ {{\text{FUS}}_{\text{i}} } \right]_{{{\text{i}} = 1,2,3}} \) are the fused pan-sharpened bands, \( \left[ {{\text{MS}}_{\text{i}} } \right]_{{{\text{i}} = 1,2,3}} \) are the low spatial resolution original bands and \( {\text{PAN}} \) is the high spatial resolution image band to be fused with the low spatial resolution bands.
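Eq. 2 translates directly into array arithmetic. The sketch below is a minimal illustration, assuming the three MS bands are stacked along the last axis and already resampled to the PAN grid; the function name `brovey_fuse` and the small `eps` guard against division by zero are our own additions.

```python
import numpy as np

def brovey_fuse(ms, pan, eps=1e-12):
    """Eq. 2: FUS_i = MS_i * PAN / (MS_1 + MS_2 + MS_3) (hypothetical helper).

    ms:  (rows, cols, 3) resampled MS bands.
    pan: (rows, cols) panchromatic band.
    """
    ms = np.asarray(ms, dtype=float)
    total = ms.sum(axis=2, keepdims=True)            # intensity image = sum of colour bands
    return ms * np.asarray(pan, dtype=float)[..., None] / (total + eps)

# A pixel (20, 30, 50) with PAN = 200 becomes approximately (40, 60, 100).
pixel = brovey_fuse(np.array([[[20.0, 30.0, 50.0]]]), np.array([[200.0]]))
```

Since each band is scaled by the same factor PAN / sum(MS), the band ratios are kept while the sum of the fused bands follows the PAN image, which is why BT sharpens well but can shift the overall brightness of the colours.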

Fusion Method Based on Principal Component Analysis

The Principal Component Analysis (PCA) method is also known as the Hotelling or Karhunen–Loeve transform. PCA is a mathematically rigorous technique that transforms a multivariate dataset of correlated variables into a small number of uncorrelated linear combinations of the variables, called Principal Components (PCs) (Chavez et al. 1991). For multi-spectral images, PCA therefore creates an uncorrelated feature space that can be used for further analysis instead of the original MS feature space. The first Principal Component contains most of the variance of all the bands, and the remaining components contain monotonically decreasing amounts of the variance found in the dataset (Jensen 1986).

Hence, the first Principal Component (\( {\text{PC}}_{1} \)) is the ideal candidate for substitution with the high spatial detail of the PAN image. The histogram of the PAN image is first matched to \( {\text{PC}}_{1} \) (Shettigara 1992; Pohl and Van Genderen 1998), and \( {\text{PC}}_{1} \) is then replaced with this histogram-matched PAN image. Finally, the inverse PCA is applied to the new \( {\text{PC}}_{1} \) together with the remaining PCs to obtain the high-resolution fused image.
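The PCA substitution can be sketched with an eigen-decomposition of the band covariance matrix. This is a minimal sketch, not the study's implementation: it assumes the MS bands are already resampled to the PAN grid and, as a simplification, replaces histogram matching by matching the PAN mean and standard deviation to those of \( {\text{PC}}_{1} \). The function name `pca_fuse` is hypothetical.

```python
import numpy as np

def pca_fuse(ms, pan):
    """Replace PC1 of the MS bands by a moment-matched PAN image (hypothetical helper)."""
    rows, cols, nb = ms.shape
    X = ms.reshape(-1, nb).astype(float)
    mean = X.mean(axis=0)
    Xc = X - mean
    eigval, eigvec = np.linalg.eigh(np.cov(Xc, rowvar=False))
    V = eigvec[:, np.argsort(eigval)[::-1]]          # principal axes, PC1 first
    pcs = Xc @ V                                     # forward PCA
    pc1 = pcs[:, 0]
    p = np.asarray(pan, dtype=float).reshape(-1)
    # Simplified "histogram matching": give PAN the mean and spread of PC1.
    pcs[:, 0] = (p - p.mean()) / (p.std() + 1e-12) * pc1.std() + pc1.mean()
    fused = pcs @ V.T + mean                         # inverse PCA (V is orthonormal)
    return fused.reshape(rows, cols, nb)
```

Only the first component changes, so the difference between the fused and original MS images lies entirely along the first principal axis; the band means are preserved.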

Fusion Method Based on Discrete Wavelet Transform

In the DWT algorithm, an image is analysed by passing it through an analysis filter bank followed by a decimation operation. The analysis filter bank consists of a Low Pass Filter (LPF) and a High Pass Filter (HPF) at each decomposition stage. When a signal passes through these filters, it splits into two bands. The LPF, which corresponds to an averaging operation, extracts the coarse (average) information of the signal. The HPF, which corresponds to a differencing operation, extracts the detail information of the signal. The output of the filtering operation is then decimated by two (Mallat 1989; Ranchin and Wald 1993; Garguet-Duport et al. 1996; Zhou et al. 1998; Mallat 1999; Ranchin and Wald 2000), i.e. the image is first filtered along the rows and decimated by two, and then filtered along the columns and decimated by two. This operation splits the image into four bands, namely \( I_{LL }^{A} \) (Approximation), \( I_{LH}^{H} \) (Horizontal), \( {\text{I}}_{\text{HL}}^{\text{V}} \) (Vertical) and \( I_{HH}^{D} \) (Diagonal). The approximation band \( \left( {{\text{I}}_{\text{LL }}^{\text{A}} } \right) \) contains coarse information, whereas the detail bands \( \left( {I_{LH}^{H} , I_{HL}^{V} ,I_{HH}^{D} } \right) \) contain information about edges, points and lines. A J-level decomposition results in (3J + 1) different frequency bands.
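The filter-and-decimate scheme can be illustrated with the orthonormal Haar filter pair. This is a minimal sketch: the study uses a "short filter" without further detail here, so choosing Haar is our assumption, and the function names are hypothetical. Which detail sub-band is called horizontal or vertical depends on the naming convention, so the sketch simply returns the three detail arrays of each level.

```python
import numpy as np

S = np.sqrt(2.0)

def haar_step(x):
    """One decimated 2-D Haar analysis step: filter and downsample along one axis, then the other."""
    lo = (x[0::2, :] + x[1::2, :]) / S               # low-pass (averaging), decimated by two
    hi = (x[0::2, :] - x[1::2, :]) / S               # high-pass (differencing), decimated by two
    LL = (lo[:, 0::2] + lo[:, 1::2]) / S             # approximation
    LH = (lo[:, 0::2] - lo[:, 1::2]) / S             # detail sub-band
    HL = (hi[:, 0::2] + hi[:, 1::2]) / S             # detail sub-band
    HH = (hi[:, 0::2] - hi[:, 1::2]) / S             # diagonal detail
    return LL, (LH, HL, HH)

def haar_dwt(img, levels):
    """J-level decomposition: one approximation plus three detail sub-bands per level."""
    approx = np.asarray(img, dtype=float)
    if any(s % (2 ** levels) for s in approx.shape):
        raise ValueError("image sides must be divisible by 2**levels")
    details = []
    for _ in range(levels):
        approx, bands = haar_step(approx)            # only the approximation is decomposed further
        details.append(bands)
    return approx, details
```

With four levels, as in this study, a 64 x 64 image yields a 4 x 4 approximation plus 3 x 4 detail sub-bands, i.e. 13 = 3J + 1 bands in total.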

In this study, a short filter and four decomposition levels have been used (Li et al. 2011). The fusion procedure for the pan-sharpening of PAN and MS World View-2 images using DWT (Rajput et al. 2014) is summarized in section “Fusion Method Based on Pseudo Wigner Distribution” (Fig. 3).

Fusion Method Based on Stationary Wavelet Transform

The Discrete Wavelet Transform (DWT) is not shift-invariant. This limitation can be overcome by using extensions of the DWT such as the Stationary Wavelet Transform (SWT), also known as the ‘à trous’ algorithm (Holschneider and Tchamitchian 1990; Núñez et al. 1999; Aiazzi et al. 2002; Chibani and Houacine 2002; González-Audícana et al. 2002; Beaulieu et al. 2003; González-Audícana et al. 2005). In the ‘à trous’ algorithm, the down-sampling step is suppressed; instead, the filter is up-sampled by inserting zeros between its coefficients. The SWT uses a two-dimensional filter derived from the scaling function, producing two images: an approximation image and a detail image called the wavelet plane. A wavelet plane represents the horizontal, vertical and diagonal detail between the \( 2^{j} \) and \( 2^{j - 1} \) resolutions, and is computed as the difference between two consecutive approximations \( I_{l - 1} \) and \( I_{l} \).

All the approximation images obtained by this decomposition have the same number of rows and columns as the original image, because at each level the filters, rather than the image, are up-sampled by inserting zeros between the filter coefficients. The fusion procedure for the pan-sharpening of MS and PAN images using SWT can be summarized as follows (Fig. 2):

(i) Match the histogram of the PAN image to each corresponding MS band to generate new PAN images.

(ii) Apply the wavelet transform, up to the second level, only to the modified PAN images.

(iii) Add the resulting wavelet planes of the PAN image directly to each corresponding MS band.
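The three SWT steps can be sketched as an additive 'à trous' fusion. This is a minimal sketch under several assumptions not stated in the text: the scaling filter is taken to be the B3 cubic-spline kernel [1, 4, 6, 4, 1]/16 often used with the 'à trous' algorithm, image borders are handled by reflection, and histogram matching is simplified to mean and standard-deviation matching. All function names are hypothetical.

```python
import numpy as np

# Assumed scaling filter: the B3 cubic-spline kernel.
B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0

def atrous_smooth(img, level):
    """Separable B3 smoothing with the kernel dilated by 2**level (zeros between taps)."""
    step = 2 ** level
    offsets = np.arange(-2, 3) * step                # taps at -2s, -s, 0, s, 2s
    pad = 2 * step
    out = img
    for axis in (0, 1):
        widths = [(pad, pad) if a == axis else (0, 0) for a in range(2)]
        padded = np.pad(out, widths, mode="reflect")
        n = out.shape[axis]
        acc = np.zeros(out.shape)
        for w, off in zip(B3, offsets):
            acc += w * np.take(padded, np.arange(pad + off, pad + off + n), axis=axis)
        out = acc
    return out

def atrous_planes(img, levels=2):
    """Wavelet planes w_l = I_{l-1} - I_l and the final approximation (no decimation)."""
    approx = np.asarray(img, dtype=float)
    planes = []
    for l in range(levels):
        smoother = atrous_smooth(approx, l)
        planes.append(approx - smoother)             # every plane keeps the image size
        approx = smoother
    return planes, approx

def match_mean_std(src, ref):
    """Simplified stand-in for histogram matching."""
    return (src - src.mean()) / (src.std() + 1e-12) * ref.std() + ref.mean()

def swt_additive_fusion(ms, pan, levels=2):
    """Steps (i)-(iii): match PAN to each band, decompose PAN only, add its planes."""
    ms = np.asarray(ms, dtype=float)
    pan = np.asarray(pan, dtype=float)
    fused = np.empty_like(ms)
    for b in range(ms.shape[-1]):
        new_pan = match_mean_std(pan, ms[..., b])                # step (i)
        planes, _ = atrous_planes(new_pan, levels)               # step (ii)
        fused[..., b] = ms[..., b] + np.sum(planes, axis=0)      # step (iii)
    return fused
```

Because the planes are differences of consecutive approximations, they sum back to the input minus the coarsest approximation, so only the PAN detail (not its low-frequency content) is injected into each MS band.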

Fusion Method Based on Non Sub-sampled Contourlet Transform

To reduce the frequency aliasing of contourlets, enhance directional selectivity and achieve shift-invariance, Cunha et al. (2006) proposed the Non Sub-sampled Contourlet Transform (NSCT). It is based on the Non Sub-sampled Pyramid Filter Bank (NSPFB) and the Non Sub-sampled Directional Filter Bank (NSDFB) structure. The former provides the multi-scale decomposition, achieved with two-channel non sub-sampled 2-D filter banks, while the latter provides the directional decomposition, splitting the band-pass sub-bands at each scale into different directions (Bamberger and Smith 1992; Li et al. 2011). As a result, NSCT is shift-invariant and offers better regularity and frequency selectivity than the Contourlet Transform. The fusion procedure for the pan-sharpening of PAN and MS images using NSCT (Yang et al. 2007) is enumerated in section “Fusion Method Based on Pseudo Wigner Distribution” (Fig. 3).

Fusion Method Based on Pseudo Wigner Distribution

Spatial-frequency information of a non-stationary image can be effectively extracted with the Pseudo Wigner Distribution (PWD), a well-known spatial-frequency technique. PWD is a powerful and efficient tool for capturing the essential non-stationary image structures and for characterizing the local spectral properties of images (Claasen and Mecklenbrauker 1980; Gabarda and Cristóbal 2005). Further, PWD provides a pixel-wise, multi-directional, shift-invariant spatial-frequency image representation, and it is not based on a multi-scale decomposition procedure. These properties motivate its use in image fusion (Gabarda and Cristóbal 2007; Redondo et al. 2009; Rajput et al. 2014). The mathematical background of PWD is outlined below.

Consider an arbitrary 1-D discrete function v(n). The PWD of an array v(n) of \( N \) pixels is given by Eq. 3.

where n and m represent the spatial and frequency discrete variables respectively, and k is a shifting parameter. Equation 3 can be interpreted as the Discrete Fourier Transform (DFT) of the product \( v\left( {n + k} \right)v^{*}\left( {n - k} \right) \), where \( v^{*} \) denotes the complex conjugate of v. W(n, m) is a matrix in which every row represents the pixel-wise PWD of the pixel at position n. Here, v(n) is a 1-D sequence of data from the image, containing the grey values of N pixels aligned in the desired direction. By scanning the image with a 1-D window of \( N \) pixels, i.e. shifting the window to all possible positions over the full image, the full pixel-wise PWD of the image is produced (Gabarda et al. 2009; Rajput et al. 2014). The window can be tilted in any direction to obtain a directional distribution (Gabarda and Cristóbal 2007). In this study, N = 8 has been used. The reasons for selecting a small value of N (a short 1-D window) for the PWD analysis are:

(i) It greatly decreases the computational cost.

(ii) It allows a pixel-wise spectral analysis of the data to be obtained more efficiently.
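The pixel-wise PWD can be sketched for the horizontal direction with NumPy. This is a minimal sketch of the forward transform only: it assumes Eq. 3 takes the form commonly used by Gabarda and Cristóbal, \( W(n, m) = 2\sum_{k = -N/2}^{N/2 - 1} v(n + k)\,v^{*}(n - k)\,e^{-2i\left( 2\pi m/N \right)k} \), it handles image borders by reflection (our choice), and it does not show the inverse used for fusion. The function name `pwd_rows` is hypothetical.

```python
import numpy as np

def pwd_rows(img, N=8):
    """Pixel-wise 1-D pseudo-Wigner distribution along image rows (hypothetical helper).

    Returns W with shape (rows, cols, N): W[r, n, m] for pixel n of row r and frequency m.
    """
    if N % 2:
        raise ValueError("N must be even to keep the window symmetric")
    half = N // 2
    x = np.pad(np.asarray(img, dtype=float), ((0, 0), (half, half)), mode="reflect")
    cols = img.shape[1]
    k = np.arange(-half, half)                       # shift parameter k = -N/2 .. N/2 - 1
    m = np.arange(N)                                 # discrete frequency m = 0 .. N - 1
    n = np.arange(cols)[:, None] + half              # pixel positions in padded coordinates
    prod = x[:, n + k] * np.conj(x[:, n - k])        # v(n + k) v*(n - k), shape (rows, cols, N)
    kernel = np.exp(-2j * (2 * np.pi * m[:, None] / N) * k[None, :])
    W = 2 * np.einsum("rnk,mk->rnm", prod, kernel)   # DFT of the product over k
    return W.real  # for real-valued input the imaginary part vanishes up to rounding
```

Sliding the N = 8 window over every pixel gives each pixel its own 8-sample spectrum. For a flat image of value 3, every pixel gets W = 144 (that is, 2N x 3^2) at m = 0 and m = N/2 and zero elsewhere.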

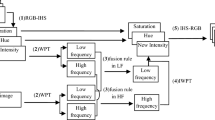

Further, the value of N should be an even number to preserve the symmetry of the window. The general methodology adopted for the pan-sharpening of PAN and MS images by DWT, NSCT and the PWD techniques can be summarized as follows (Fig. 3).

(i) Co-register both source images and resample the multi-spectral image so that its pixel size equals that of the PAN image; mis-registration would otherwise produce severe artifacts.

(ii) Apply the DWT/NSCT/PWD decomposition to each co-registered input image to obtain its coefficients.

(iii) Fuse the corresponding coefficients generated in Step (ii) using the defined fusion rule.

(iv) Apply the inverse DWT/NSCT/PWD transform to the fused coefficients to construct the fused image.

Note that the DWT, NSCT and PWD techniques each have unique mathematical properties, which lead to different image decomposition procedures. Image decomposition plays a vital role in image fusion, since it affects both the quality of information extraction and the overall fusion quality.

Fusion Rules

In this study, the basic fusion rule chosen for pan-sharpening is:

(i) Average Fusion Rule (AFR)

The AFR takes the average of the corresponding coefficients of the Panchromatic (\( W_{P}^{A} \)) and Multi-spectral (\( W_{MS}^{B} \)) images. This rule can be expressed mathematically as (Eq. 4):

\( W_{F} = \frac{W_{P}^{A} + W_{MS}^{B}}{2} \)   (4)

where \( W_{F} \) denotes the fused coefficients.
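Step (iii) of the general methodology with the AFR reduces to an element-wise mean over matching sub-bands. The sketch below is a minimal illustration, assuming the decomposition of step (ii) returns a list of coefficient arrays in the same order for the PAN and MS images; the function name `average_fusion_rule` is hypothetical, and the result would then be passed to the inverse transform of step (iv).

```python
import numpy as np

def average_fusion_rule(coeffs_pan, coeffs_ms):
    """Eq. 4 applied to matching coefficient arrays: W_F = (W_P^A + W_MS^B) / 2."""
    if len(coeffs_pan) != len(coeffs_ms):
        raise ValueError("both decompositions must have the same sub-bands")
    return [(np.asarray(p, dtype=float) + np.asarray(q, dtype=float)) / 2.0
            for p, q in zip(coeffs_pan, coeffs_ms)]

fused = average_fusion_rule([np.array([2.0, 4.0]), np.array([[1.0]])],
                            [np.array([6.0, 0.0]), np.array([[3.0]])])
# fused -> [array([4., 2.]), array([[2.]])]
```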

Evaluation Criteria

In most cases, the differences among fusion results are slight, which makes it difficult to assess them accurately by qualitative (subjective) means alone; at the same time, quantitative evaluation methods sometimes produce results that are not supported by visual inspection. For these reasons, many quantitative evaluation methods have been developed. However, there is no universally accepted metric for objectively evaluating image fusion results. In this study, the accuracy of the fusion techniques has been assessed using a set of significant metrics that require a reference image, as listed in Table 1.

Study Area/Data Set

The image fusion techniques used in this study have been evaluated using a dataset acquired by the World View-2 sensor. All images are radiometrically calibrated, and the MS and PAN images are co-registered for each dataset. Data covering varied land use have been preferred, in order to examine the effect of different spatial characteristics (size and shape) and spectral characteristics. Table 2 lists the details of the dataset: spectral bands and ranges, spatial and radiometric resolutions, size, location and date of acquisition.

Dataset DS-I covers an urban area with many man-made objects, such as buildings of different characteristics and patterns, roads and grass lawns. There are also many individual trees and clusters of trees (Fig. 4).

Pre-processing of the Input Images

The first step in pan-sharpening is to pre-process the input images. This may include (i) Image Registration, (ii) Re-sampling and (iii) Histogram Matching of the input images. These steps are described below:

(i) Image Registration: Image registration is the key pre-processing procedure for high resolution, multi-temporal or multi-sensor images. However, if the source images are from the same sensor and captured at the same time, they are usually already co-registered and can be used directly for the fusion process (Roy 2000; Gungor 2008).

(ii) Re-sampling: In pan-sharpening, the MS images may need to be re-sampled (up-sampled) to the pixel spacing of the PAN image, so that both have the same spatial resolution before pan-sharpening (Ghosh and Joshi 2013). Amongst the Nearest Neighbour, Bilinear Interpolation, Cubic Convolution and Bi-cubic Spline re-sampling techniques, Bi-cubic Spline has been adopted in this study (Rajput et al. 2014).

(iii) Histogram Matching: Images of the same scene from different sensors have different brightness levels for a variety of reasons, such as different illumination conditions of the scene, different pointing angles of the sensor, differences between the wavelength ranges of the sensors, and different acquisition times. Matching the histogram of one image to that of another makes the distributions of brightness values in the two images as close as possible, and thus reduces the spectral distortion in the resulting fused images (Gonzalez and Woods 2003; Richards and Jia 2006; Nikolakopoulos 2008).
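Histogram matching can be implemented by mapping each grey level of the source image through its empirical cumulative distribution function (CDF) and then through the inverse CDF of the reference image. The sketch below is a minimal version of this classic CDF-mapping approach, with linear interpolation between reference levels as our own choice; the function name `match_histogram` is hypothetical. In the HSI and PCA methods, the source would be the PAN image and the reference the Intensity component or \( {\text{PC}}_{1} \).

```python
import numpy as np

def match_histogram(source, reference):
    """Map source grey levels so that their empirical CDF follows that of reference."""
    src = np.asarray(source, dtype=float)
    ref = np.asarray(reference, dtype=float)
    s_vals, s_idx, s_counts = np.unique(src.ravel(), return_inverse=True, return_counts=True)
    r_vals, r_counts = np.unique(ref.ravel(), return_counts=True)
    s_cdf = np.cumsum(s_counts) / src.size           # empirical CDF of the source levels
    r_cdf = np.cumsum(r_counts) / ref.size           # empirical CDF of the reference levels
    mapped = np.interp(s_cdf, r_cdf, r_vals)         # invert the reference CDF
    return mapped[s_idx].reshape(src.shape)
```

A monotonic brightness change such as 2 x ref + 5 is undone exactly, because the source and reference CDFs then coincide level by level.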

Identification of Best 3-Band Combination for Worldview-2 Data Using Optimum Index Factor

In this study, the best 3-band combination of the dataset has been identified using the Optimum Index Factor (OIF) proposed by Chavez et al. (1982), which selects the optimum band triplets carrying the maximum information. The OIF values of all 3-band combinations of the World View-2 data have been computed using Eq. 5:

\( {\text{OIF}} = \frac{\sum_{i = 1}^{3} \sigma_{i}}{\sum_{j = 1}^{3} \left| r_{j} \right|} \)   (5)

where \( \sigma_{i} \) is the standard deviation of band i and \( r_{j} \) is the correlation coefficient of the j-th pair of bands in the triplet.
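Eq. 5 can be evaluated for every band triplet with a correlation matrix and `itertools.combinations`. The sketch below is a minimal illustration, assuming each band is a 2-D array and using the population standard deviation; the function name `oif_ranking` and the band labels are hypothetical, and the values in Table 3 are not reproduced here.

```python
import numpy as np
from itertools import combinations

def oif_ranking(bands, names):
    """Rank all 3-band combinations by OIF = sum(std) / sum(|pairwise r|)."""
    flat = [np.asarray(b, dtype=float).ravel() for b in bands]
    std = [f.std() for f in flat]
    r = np.corrcoef(np.vstack(flat))                 # band-to-band correlation matrix
    scores = []
    for i, j, k in combinations(range(len(flat)), 3):
        num = std[i] + std[j] + std[k]
        den = abs(r[i, j]) + abs(r[i, k]) + abs(r[j, k])
        scores.append((num / den, (names[i], names[j], names[k])))
    return sorted(scores, reverse=True)              # highest OIF first
```

For example, the bands [1, 2, 3, 4], [2, 4, 6, 8] and [4, 3, 2, 1] are perfectly (anti-)correlated, so the denominator is 3 and the OIF equals the sum of their standard deviations divided by 3, i.e. 2√5/3.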

On the basis of OIF, it is found that the combination of Bands 3, 4 and 7 yields the highest OIF value of 116.04 (Table 3). Hence, the band combination 3, 4 and 7 is used in the subsequent analysis, and displaying these bands in the order 7:4:3 gives the colours of a standard False Colour Composite (FCC). Further, the spectral ranges of Bands 1 and 8 of the World View-2 data lie completely outside the panchromatic range, and hence these bands have not been considered for the implementation and analysis of the fusion techniques (Fig. 5).

Spectral response and wavelength details of World View-2 (Digital Globe 2010)

Evaluation of Results and Discussion

In this study, the performance of the fusion techniques has been compared both qualitatively and quantitatively using WorldView-2 data.

Visual (Qualitative) Analysis of the Fused Images

The visual comparison of the fused images is carried out for the subjective (qualitative) assessment, since it is a simple yet effective method for assessing the advantages and disadvantages of any fusion technique. The fused images are visually evaluated in terms of the following parameters:

(i) Colour Radiometry (CR)

(ii) Outline of Building Roofs (OBR)

(iii) Trees (T)

(iv) Outline of the Roads (OR)

(v) Vehicles on the Roads (VR)

(vi) Water Tank (WT)

(vii) Edge-Sharpening (ES)

For the visual assessment based on these parameters, the fusion techniques have been rated from “Excellent” to “Poor” (Shi et al. 2005), as shown in Table 4.

In image fusion, one of the major problems is colour distortion. Hence, colour preservation is one of the important criteria when evaluating the performance of any fusion technique (Wang et al. 2005). It is observed that all the fused images exhibit improved geometric detail, i.e. small features that were not noticeable in the original MS image are now distinguishable and identifiable. The fused images generated by the different fusion techniques and their corresponding magnified subsets of the selected region are shown in Fig. 6.

With reference to Fig. 6, it is observed that the fused image generated using the HSI technique shows a good improvement in spatial content (geometric detail) compared with the original MS data (Fig. 6a). However, it seriously alters the spectral values of the original colours of the MS image. This may be due to the low correlation between the PAN image and the Intensity component of the MS image, resulting in spectral distortion. Further, the fused image generated with BT exhibits good spatial resolution compared with the original MS image (Fig. 6b). However, its colours are darker than those of the original MS image, leading to a loss of shadow information and of edges, which merge with the shadow areas.

From Fig. 6, it is observed that the fused images generated by the SWT (Fig. 6e), NSCT (Fig. 6f) and PWD (Fig. 6g) techniques using the Average Fusion Rule (AFR) exhibit good geometric detail compared with the original MS image. However, the colours in the fused images generated by the PWD and NSCT techniques are lighter than those of the original MS image, followed by SWT and DWT for the same fusion rule. The fused image generated by the DWT-AVG technique (Fig. 6d) yields lower spatial quality. This may be due to the sub-sampling process involved in the DWT technique, which introduces artifacts into the resulting fused image. Another factor limiting the spatial quality of the DWT-AVG technique is its limited directional selectivity (horizontal, vertical and diagonal directions only), which in turn deteriorates the geometry of the features in the fused images.

Further, the fused image generated by the PCA technique (Fig. 6c) exhibits good spatial resolution compared with the original MS image, and spectral quality comparable to that of the other fusion techniques. Furthermore, the PWD, NSCT, SWT and DWT techniques using AFR are successful in preserving the spectral quality of the original MS image. However, their improvement of the spatial detail content is less satisfactory than that of the HSI and PCA techniques. The comparison results of the different fusion techniques on the basis of visual object detection are listed in Table 5.

Table 5 shows that the PWD-AVG and NSCT-AVG techniques perform better than the SWT-AVG and DWT-AVG techniques. In other words, the PWD-AVG and NSCT-AVG techniques give a good trade-off between the enhancement of spatial resolution and the preservation of spectral information, and yield the best results in terms of colour radiometry, outline of building roofs, outline of roads and water tank compared with the other techniques.

Quantitative Analysis

Visual inspection is a straightforward method for appreciating the quality of fusion techniques. However, a statistical comparison is necessary to evaluate the performance of each fusion result precisely. The accuracy of the different fusion techniques has been evaluated quantitatively using spectral, spatial and structural similarity metrics, namely Root Mean Square Error (RMSE), Peak Signal-to-Noise Ratio (PSNR), Correlation Coefficient (CC), Universal Image Quality Index (UIQI) and Spatial Correlation Coefficient (SCC) (Table 6).

The fused image that best preserves the spectral, spatial and structural similarity information of the original low-resolution MS image is the one that satisfies the conditions listed in Table 7.

Analysis Based on RMSE

A smaller RMSE value generally indicates greater accuracy in terms of spectral fidelity. The RMSE values of the different fusion techniques using AFR are tabulated in Table 8.
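Band-wise RMSE between the reference (resampled MS) image and the fused image can be computed as follows. This is a minimal sketch assuming both images are stored as (rows, cols, bands) arrays on the same grid; the function name `rmse_per_band` is hypothetical.

```python
import numpy as np

def rmse_per_band(reference, fused):
    """Root mean square error of each band: sqrt(mean((ref - fused)^2))."""
    diff = np.asarray(reference, dtype=float) - np.asarray(fused, dtype=float)
    return np.sqrt(np.mean(diff ** 2, axis=(0, 1)))
```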

With reference to Table 8, the lowest average RMSE is observed for the PWD-AVG technique (35.408), indicating less spectral distortion. It is followed by the NSCT-AVG, SWT-AVG and DWT-AVG techniques. The RMSE is large (>33) for almost all bands produced by the DWT-AVG technique, indicating that DWT-AVG has the lowest performance amongst the PWD-AVG, NSCT-AVG, SWT-AVG and DWT-AVG techniques. This may be due to the sub-sampling process associated with the DWT technique, which introduces artifacts into the resulting fused image and in turn produces spectral distortion. Further, the RMSE is large for almost all bands produced by the HSI and PCA techniques. This may be due to the low correlation between the PAN image and the Intensity component or the first principal component of the original MS image, which results in spectral distortion. The fused image generated by the BT technique gives the poorest performance in terms of RMSE (69.218) amongst all the fusion techniques.

Thus, it can be ascertained that the PWD-AVG technique yields the highest performance in terms of preservation of spectral information, compared with the NSCT-AVG, SWT-AVG and DWT-AVG fusion techniques.

Analysis Based on PSNR

Higher PSNR values generally indicate less image distortion. The PSNR values of the different fusion techniques using AFR are tabulated in Table 9.
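PSNR is derived from the mean squared error and the peak grey value of the data. The sketch below is a minimal version assuming \( {\text{PSNR}} = 10\log_{10}\left( L^{2} /{\text{MSE}} \right) \) with the peak value L supplied by the caller (for example 255 for 8-bit data or 2047 for 11-bit data); the peak value used for Table 9 is not stated here, and the function name `psnr` is hypothetical.

```python
import numpy as np

def psnr(reference, fused, peak):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    mse = np.mean((np.asarray(reference, dtype=float) - np.asarray(fused, dtype=float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

value = psnr(np.zeros((4, 4)), np.ones((4, 4)), peak=10.0)   # MSE = 1, so 20 dB
```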

A high PSNR value is observed for the PWD-AVG technique (29.82) (Table 9), indicating less distortion in the fused image; it is followed by the NSCT-AVG, SWT-AVG and DWT-AVG techniques. The fused image generated by the DWT-AVG technique yields a low PSNR compared with the other techniques. This may be due to the sub-sampling process involved in the DWT technique, which causes artifacts such as loss of linear continuity in the resulting fused image. It is followed by the PCA and HSI techniques.

Further, the fused image generated by the BT technique gives the poorest performance in terms of PSNR (13.940) amongst all the fusion techniques.

Thus, it can be concluded that PWD-AVG technique yields the highest performance in terms of spectral quality, when compared to other fusion techniques.

Analysis Based on Correlation Coefficient (CC)

The Correlation Coefficient is one of the most frequently used evaluation parameters, since it can quantify the spectral distortion that occurs during the fusion process. The correlation of the fused bands should be close to that of the original multi-spectral image to ensure good spectral fidelity. A correlation coefficient approaching one indicates that the fused image and the original image match closely, i.e. that the spectral characteristics of the multi-spectral image have been well preserved. Ideally, the value of CC should therefore be close to 1. Table 10 shows the band-wise CC between the original re-sampled multi-spectral image bands and their corresponding fused bands.
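The band-wise CC of Table 10 is the Pearson correlation between each reference band and the corresponding fused band. This is a minimal sketch assuming (rows, cols, bands) arrays on the same grid; the function name `cc_per_band` is hypothetical.

```python
import numpy as np

def cc_per_band(reference, fused):
    """Pearson correlation coefficient between each reference band and its fused band."""
    out = []
    for b in range(reference.shape[-1]):
        x = np.asarray(reference[..., b], dtype=float).ravel()
        y = np.asarray(fused[..., b], dtype=float).ravel()
        out.append(np.corrcoef(x, y)[0, 1])
    return np.array(out)
```

Because the coefficient is insensitive to gain and offset, a fused band equal to 2 x reference + 1 still gives CC = 1; this is why CC is usually read together with RMSE or PSNR.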

Table 10 shows that the PWD-AVG fusion technique (>0.890) creates bands with a high CC and is effective in preserving the spectral information of the MS image. In other words, the PWD-AVG technique reproduces colours better than the other techniques. It is followed by the NSCT-AVG and SWT-AVG techniques. Low CC values are observed for the DWT-AVG fusion technique, because the DWT, being shift-variant, produces artifacts in the resulting fused image, which in turn cause spectral distortion. For all selected bands, the CC of the PCA, HSI and BT images is less than 0.85. Thus, it can be ascertained that the PWD-AVG technique yields the highest performance in terms of spectral quality, i.e. it tends to preserve the spectral information of the original MS image better than the other fusion techniques.

From the Table 10, the fused images can be categorized as per their correlation with the original MS bands, such as, High, Medium and Low correlation (Rajput et al. 2014). The categorization of the fusion techniques as per their correlation values is shown in Table 11.

Analysis Based on UIQI

The higher the value of UIQI, the higher the spectral fidelity of the fused image; ideally, UIQI should be equal to 1, and a good fusion yields a value close to 1. An advantage of the UIQI over ERGAS is that the UIQI also considers the correlation between the fused and reference (MS) images. The UIQI values for the dataset have been calculated using 32 × 32 image blocks and are given in Table 12.
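The UIQI of Wang and Bovik (2002) combines correlation, luminance and contrast terms in one expression, \( Q = \frac{4\sigma_{xy}\bar{x}\bar{y}}{\left( \sigma_{x}^{2} + \sigma_{y}^{2} \right)\left( \bar{x}^{2} + \bar{y}^{2} \right)} \). The sketch below computes it on 32 × 32 blocks and averages the results; using non-overlapping blocks and ignoring partial blocks at the image border are our assumptions, and the function names are hypothetical.

```python
import numpy as np

def uiqi(x, y):
    """Universal Image Quality Index of two equally sized image blocks."""
    x = np.asarray(x, dtype=float).ravel()
    y = np.asarray(y, dtype=float).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cxy = ((x - mx) * (y - my)).mean()
    return 4.0 * cxy * mx * my / ((vx + vy) * (mx ** 2 + my ** 2))

def uiqi_blocks(ref_band, fused_band, block=32):
    """Mean UIQI over non-overlapping block x block windows (assumed tiling)."""
    rows, cols = ref_band.shape
    scores = []
    for r in range(0, rows - block + 1, block):
        for c in range(0, cols - block + 1, block):
            scores.append(uiqi(ref_band[r:r + block, c:c + block],
                               fused_band[r:r + block, c:c + block]))
    return float(np.mean(scores))
```

Doubling a block (y = 2x) keeps the correlation at 1 but lowers Q to 0.64, which shows how the index also penalizes luminance and contrast changes.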

The UIQI values obtained for the DS-I dataset show that the PWD-AVG and NSCT-AVG fusion techniques are the best two techniques in terms of spectral quality. Of these two, PWD-AVG is the better technique, as indicated by its UIQI values (>0.9) (Table 12). They are followed by the SWT-AVG and DWT-AVG techniques. The lowest performance of the DWT-AVG technique may be due to the sub-sampling process involved in the DWT, which causes distortion in the resulting fused image.

Further, the fused images generated by the PCA and HSI techniques yield low UIQI values for all bands. This may be due to the low correlation between the PAN image and the Intensity component or the first principal component of the original MS image, leading to a loss of spectral fidelity. Amongst all the fusion techniques, the fused image generated by the BT technique yields the lowest UIQI values for all bands. Thus, it can be concluded that the PWD-AVG technique is the best at preserving the spectral and structural information of the original MS image, closely followed by the NSCT-AVG technique.

Spatial Quality Assessment

In order to assess the spatial quality of the fused images quantitatively, the procedure proposed by Zhou et al. (1998) has been adopted. This approach measures how much edge information from the PAN image is transferred into the fused images. The high spatial resolution information missing from the MS image is present in the high frequencies of the PAN image, and the pan-sharpening process inserts these high frequencies into the MS image. Therefore, the CC between the high-pass filtered PAN image and the high-pass filtered fused image indicates how much spatial information from the PAN image has been incorporated into the MS image. A higher correlation between the two high-pass filtered images implies that the spatial information has been retained faithfully. This CC is called the Spatial Correlation Coefficient (SCC). To extract the spatial detail of the images to be compared, the Laplacian filter given in Eq. 6 has been used.
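A minimal NumPy sketch of the SCC computation follows. Because Eq. 6 is not reproduced here, the code assumes the common 3 × 3 Laplacian mask with a centre weight of 8 and -1 elsewhere; substitute the mask of Eq. 6 if it differs. Border pixels, where the mask does not fit, are discarded. Function names are hypothetical.

```python
import numpy as np

# Assumed 3x3 Laplacian mask (centre 8, neighbours -1); replace it with the
# mask of Eq. 6 if that differs.
LAPLACIAN = np.array([[-1.0, -1.0, -1.0],
                      [-1.0,  8.0, -1.0],
                      [-1.0, -1.0, -1.0]])


def high_pass(image, kernel=LAPLACIAN):
    """Apply a 3x3 mask at every pixel where it fits entirely ('valid' region).

    The default mask is symmetric, so correlation and convolution coincide.
    """
    img = np.asarray(image, dtype=np.float64)
    rows, cols = img.shape
    if rows < 3 or cols < 3:
        raise ValueError("image must be at least 3 x 3")
    out = np.zeros((rows - 2, cols - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * img[i:i + rows - 2, j:j + cols - 2]
    return out


def spatial_cc(pan, fused_band):
    """SCC: correlation between the high-pass filtered PAN and fused band."""
    hp_pan = high_pass(pan).ravel()
    hp_fused = high_pass(fused_band).ravel()
    return float(np.corrcoef(hp_pan, hp_fused)[0, 1])
```

Since the mask weights sum to zero, constant offsets and smooth linear gradients are removed, so the SCC responds only to edge and texture detail, not to overall brightness differences between the PAN and fused images.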

From Table 13, it is found that the HSI-based fusion technique incorporates the spatial detail of features present in the PAN image, but missing from the MS image, more effectively than the other selected fusion techniques. It is closely followed by the BT and PCA techniques. Amongst the NSCT-AVG, PWD-AVG, SWT-AVG and DWT-AVG techniques, NSCT-AVG and PWD-AVG represent image edges better than SWT-AVG and DWT-AVG because they exploit multiple directions, producing spatially enhanced images with a sharper geometric representation of feature edges. The SWT-AVG and DWT-AVG techniques produce comparatively low SCC values. This may be due to the limited directional selectivity of the SWT and DWT, which produces artifacts that deteriorate the geometry of features in the fused image. The performance of the DWT technique is further affected by its lack of shift invariance, which causes artifacts such as loss of linear continuity in the fused image.
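The shift-variance argument can be illustrated in one dimension. The sketch below uses the Haar wavelet and periodic boundaries purely for simplicity (it does not assume the wavelet filters used in the study) and compares one level of the decimated DWT with one level of the undecimated SWT on a step edge shifted by one sample.

```python
import numpy as np


def haar_dwt_detail(x):
    """One-level decimated Haar detail coefficients (every second sample kept)."""
    x = np.asarray(x, dtype=np.float64)
    if x.size % 2:
        raise ValueError("signal length must be even")
    return (x[0::2] - x[1::2]) / np.sqrt(2.0)


def haar_swt_detail(x):
    """One-level undecimated (stationary) Haar detail coefficients, periodic."""
    x = np.asarray(x, dtype=np.float64)
    return (x - np.roll(x, -1)) / np.sqrt(2.0)


if __name__ == "__main__":
    step = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=np.float64)
    shifted = np.roll(step, 1)  # the same edge, moved by one sample
    for name, detail in (("DWT", haar_dwt_detail), ("SWT", haar_swt_detail)):
        e0 = np.sum(detail(step) ** 2)
        e1 = np.sum(detail(shifted) ** 2)
        print(f"{name}: detail energy {e0:.3f} -> {e1:.3f}")
```

Running it prints `DWT: detail energy 0.000 -> 1.000` and `SWT: detail energy 1.000 -> 1.000`. With the decimated transform, the same edge produces no detail energy in one position and full energy after a one-sample shift, whereas the undecimated coefficients simply shift with the signal. This position dependence is what can surface as artifacts along edges in DWT-based fusion.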

Thus, in terms of spatial quality, the HSI technique transfers the spatial detail of the PAN image into the fused image most effectively among the selected fusion techniques.

Analysis Based on Computational Time

Computational time also plays an important role in evaluating the performance of pan-sharpening techniques, and it becomes even more important when real-time processing is required. Computational time depends on the fusion algorithm, the image dimensions, the complexity of the image, the software used, the number of bands and the hardware specifications. All fusion processes were executed on a 64-bit operating system with an i5 processor (2.50 GHz) and 6 GB RAM. The computational times of the different fusion techniques are given in Table 14.
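Table 14 reports average run times. The study's measurements were made in Matlab. As an illustration only, a Python sketch of the same kind of measurement follows: it times a fusion function over several runs after an untimed warm-up run and averages the results. The function name and the warm-up choice are assumptions, and absolute times will depend on the factors listed above.

```python
import statistics
import time
from typing import Any, Callable


def mean_runtime(fuse: Callable[..., Any], *args: Any,
                 repeats: int = 5, warmup: int = 1) -> float:
    """Average wall-clock time, in seconds, of fuse(*args) over `repeats` runs."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        fuse(*args)  # untimed run: lets caches and lazy initialisation settle
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        fuse(*args)
        times.append(time.perf_counter() - start)
    return statistics.mean(times)
```

A call such as `mean_runtime(my_fusion, pan, ms, repeats=10)`, where `my_fusion` is a placeholder for any fusion routine, returns the mean time in seconds. `time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short intervals.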

From Table 14, it is observed that the HSI fusion technique is the fastest of all the fusion techniques when implemented in Matlab, followed by the DWT-AVG and SWT-AVG techniques. The average run time of the PWD-AVG technique is 1.213 s; it is followed by the BT and PCA techniques. The NSCT-AVG fusion technique has the highest computational time of the selected techniques.

Thus, it can be concluded that the HSI fusion technique yields the highest performance in terms of computational time amongst all the fusion techniques.

Conclusion

In this study, a comparative assessment of different pan-sharpening techniques using World View-2 data has been carried out in terms of computational time, subjective and objective parameters.

The results show that the PWD-AVG technique emerged as one of the most effective fusion techniques, achieving a good trade-off between spatial and spectral fidelity with a balanced computational time: edges (spatial details) and colour are preserved at the cost of a slight increase in computational time compared with the selected techniques. Further, the PWD-AVG technique allows the analyst to combine high spatial resolution panchromatic data with lower spatial resolution MS data, resulting in a fused image with good spectral and spatial properties. This is essential for identifying objects in urban land-cover, such as buildings, individual trees and roads.

Finally, it can be concluded from this study that non-stationary images can be analyzed effectively using the PWD technique, a spatial-frequency domain technique, in comparison with multi-scale decomposition techniques (NSCT, SWT, DWT), statistical techniques (PCA, BT) and the color technique (HSI).

The outcome of this study could therefore be used to rationalize the selection of pan-sharpening techniques across scenes and sensors.

References

Aiazzi, B., Alparone, L., Baronti, S., & Garzelli, A. (2002). Context-driven fusion of high spatial and spectral resolution images based on oversampled multi-resolution analysis. IEEE Transactions on Geoscience and Remote Sensing, 40, 2300–2312.

Alparone, L., Wald, L., Chanussot, J., Thomas, C., Gamba, P., & Bruce, L. M. (2007). Comparison of pan-sharpening algorithms: Outcome of the 2006 GRS-S data-fusion contest. IEEE Transactions on Geoscience and Remote Sensing, 45, 3012–3021.

Bamberger, R. H., & Smith, M. J. T. (1992). A filter bank for the directional decomposition of images: Theory and design. IEEE Transactions on Signal Processing, 40(4), 882–893.

Beaulieu, M., Foucher, S., & Gagnon, L. (2003). Multi-spectral image resolution refinement using stationary wavelet transform. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS'03) (pp. 4032–4034). July 21–25, 2003. New York: IEEE International.

Carper, W. J., Lillesand, T. M., & Kiefer, R. W. (1990). The use of intensity-hue-saturation transformations for merging SPOT panchromatic and multispectral image data. Photogrammetric Engineering and Remote Sensing, 56, 459–467.

Chavez, P. S., Berlin, G. L., & Sowers, L. B. (1982). Statistical method for selecting Landsat MSS ratios. Journal of Applied Photogrammetric Engineering, 8, 23–30.

Chavez, P. S., Sides, S. C., & Anderson, J. A. (1991). Comparison of three different methods to merge multi-resolution and multispectral data: Landsat TM and SPOT panchromatic. Photogrammetric Engineering and Remote Sensing, 57(3), 295–303.

Chibani, Y., & Houacine, A. (2002). The joint use of IHS transform and redundant wavelet decomposition for fusing multispectral and panchromatic images. International Journal of Remote Sensing, 23, 3821–3833.

Claasen, T. A. C. M., & Mecklenbrauker, W. F. G. (1980). The Wigner distribution: A tool for time-frequency analysis. Parts I–III. Philips Journal of Research, 35, 217–250.

Cunha, A. L., Zhou, J., & Do, M. N. (2006). The nonsubsampled contourlet transform: Theory, design and applications. IEEE Transactions on Image Processing, 15, 3089–3101.

Digital Globe, Inc. (2010). Radiometric use of WorldView-2 imagery, technical note. Release date: 1 November 2010. http://www.digitalglobe.com/sites/default/files/Radiometric_Use_of_WorldView-2_Imagery%20(1).pdf

Gabarda, S., & Cristobal, G. (2005). On the use of a joint spatial-frequency representation for the fusion of multi-focus images. Pattern Recognition Letters, 26, 2572–2578.

Gabarda, S., & Cristóbal, G. (2007). Blind image quality assessment through anisotropy. Journal of the Optical Society of America A, 24(12), B42–B51.

Gabarda, S., Redondo, R., Gil, E., & Cristóbal, G. (2009). Image de-noising and quality assessment through the Rényi entropy. Proceedings of SPIE, 7444, 744419.

Garguet-Duport, B., Girel, J., Chassery, J., & Pautou, G. (1996). The use of multi-resolution analysis and wavelet transform for merging SPOT panchromatic and multispectral image data. Photogrammetric Engineering and Remote Sensing, 62, 1057–1066.

Ghosh, A., & Joshi, P. K. (2013). Assessment of pan-sharpened very high-resolution WorldView-2 images. International Journal of Remote Sensing, 34(23), 8336–8359.

Gillespie, A. R., Kahle, A. B., & Walker, R. E. (1987). Color enhancement of highly correlated images. II. Channel ratio and “chromaticity” transformation techniques. Remote Sensing of Environment, 22, 343–365.

Gonzalez, R. C., & Woods, R. E. (2003). Digital image processing using MATLAB. Prentice-Hall, Inc.

González-Audícana, M., Otazu, X., & Fors, O. (2005). A comparison between Mallat's and the à trous discrete wavelet transform based algorithms for the fusion of multispectral and panchromatic images. International Journal of Remote Sensing, 26, 595–614.

González-Audícana, M., Otazu, X., Fors, O., García, R., & Núñez, J. (2002). Fusion of different spatial and spectral resolution images: Development, application and comparison of new methods based on wavelets. In Proceedings of international symposium recent advances in quantitative remote sensing, pp. 228–237.

González-Audícana, M., Saleta, J. L., Catalán, R. G., & García, R. (2004). Fusion of multispectral and panchromatic images using improved IHS and PCA mergers based on wavelet decomposition. IEEE Transactions on Geoscience and Remote Sensing, 42, 1291–1299.

Gungor, O. (2008). Multi-sensor multi-resolution image fusion. Ph.D. dissertation. West Lafayette, Indiana: Purdue University.

Holschneider, M., & Tchamitchian, P. (1990). Régularité locale de la fonction 'non-différentiable' de Riemann. In P. G. Lemarié (Ed.), Les Ondelettes en 1989 (pp. 102–124). Paris: Springer.

Jensen, J. R. (1986). Introductory digital image processing: A remote sensing perspective. New Jersey: Prentice Hall.

Kang, T. J., Zhang, X. C., & Wang, H. Y. (2008). Assessment of the fused image of multi-spectral and panchromatic images of SPOT-5 in the investigation of geological hazards. Science China Series E, 51, 144–153.

Karathanassi, V., Kolokousis, P., & Ioannidou, S. (2007). A comparison study on fusion methods using evaluation indicators. International Journal of Remote Sensing, 28, 2309–2341.

Li, S., Li, Z., & Gong, J. (2010). Multivariate statistical analysis of measures for assessing the quality of image fusion. International Journal of Image and Data Fusion, 1, 47–66.

Li, S., Yang, B., & Hu, J. (2011). Performance comparison of different multi-resolution transforms for image fusion. Information Fusion, 12, 74–84.

Liu, J. G. (2000). Evaluation of Landsat-7 ETM+ panchromatic band for image fusion with multispectral bands. Natural Resources Research, 9, 269–276.

Liu, J. G., & Moore, J. M. (1998). Pixel block intensity modulation: Adding spatial detail to TM band 6 thermal imagery. International Journal of Remote Sensing, 19, 2477–2491.

Mallat, S. (1989). A theory for multi-resolution signal decomposition: The wavelet representation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 11, 674–693.

Mallat, S. (1999). A wavelet tour of signal processing, 2nd Edn. San Diego: Academic Press. ISBN: 012466606-1:1-637.

Nikolakopoulos, K. G. (2008). Comparison of nine fusion techniques for very high resolution data. Photogrammetric Engineering and Remote Sensing, 74(5), 647–659.

Núñez, J., Otazu, X., Fors, O., Prades, A., Palà, V., & Arbiol, R. (1999). Multi-resolution-based image fusion with additive wavelet decomposition. IEEE Transactions on Geoscience and Remote Sensing, 37, 1204–1211.

Padwick, C., Deskevich, M., Pacifici, F., & Smallwood, S. (2010). WorldView-2 pan-sharpening. In ASPRS 2010 annual conference, San Diego, CA, pp. 26–30.

Pohl, C., & Van Genderen, J. L. (1998). Multi-sensor image fusion in remote sensing: concepts, methods and applications. International Journal of Remote Sensing, 19, 823–854.

Rajput, U. K., Ghosh, S. K., & Kumar, A. (2014). Multi-sensor fusion of satellite images for urban information extraction using Pseudo-Wigner distribution. Journal of Applied Remote Sensing, 8, 083668.

Ranchin, T., & Wald, L. (1993). The wavelet transform for the analysis of remotely sensed images. International Journal of Remote Sensing, 14, 615–619.

Ranchin, T., & Wald, L. (2000). Fusion of high spatial and spectral resolution images: the ARSIS concept and its implementation. Photogrammetric Engineering and Remote Sensing, 66, 49–61.

Ranchin, T., Wald, L., & Mangolini, M. (1996). The ARSIS method: A general solution for improving spatial resolution of images by the means of sensor fusion. In Fusion of earth data, proceedings EARSeL conference. Cannes: European Space Agency.

Redondo, R., Sroubek, F., Fischer, S., & Cristóbal, G. (2009). Multi-focus image fusion using the Log–Gabor transform and a multi-size windows technique. Information Fusion, 10, 163–171.

Richards, J. A., & Jia, X. (2006). Remote sensing digital image analysis: An introduction. Berlin: Springer.

Rodriguez-Galiano, V. F., Pardo-Iguzquiza, E., Chica-Olmo, M., Mateos, J., Rigol-Sánchez, J. P., & Vega, M. (2012). A comparative assessment of different methods for Landsat 7/ETM+ pan-sharpening. International Journal of Remote Sensing, 33, 6574–6599.

Roy, D. (2000). The impact of mis-registration upon composited wide field of view satellite data and implications for change detection. IEEE Transactions on Geoscience and Remote Sensing, 38(4), 2017–2032.

Shettigara, V. K. (1992). A generalized component substitution technique for spatial enhancement of multispectral images using a higher resolution data set. Photogrammetric Engineering and Remote Sensing, 58, 561–567.

Shi, W., Zhu, C. Q., Tian, Y., & Nichol, J. (2005). Wavelet-based image fusion and quality assessment. International Journal of Applied Earth Observation and Geoinformation, 6(3), 241–251.

Smith, A. R. (1978). Color gamut transform pairs. Computer Graphics, 12, 12–19.

Wald, L., Ranchin, T., & Mangolini, M. (1997). Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogrammetric Engineering and Remote Sensing, 63, 691–699.

Wang, Z., & Bovik, A. C. (2002). A universal image quality index. IEEE Signal Processing Letters, 9, 81–84.

Wang, Z., Bovik, A. C., Sheikh, H. R., & Simoncelli, E. P. (2004). Image quality assessment: From error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4), 600–612.

Wang, Z., Ziou, D., Armenakis, C., Li, D., & Li, Q. (2005). A comparative analysis of image fusion methods. IEEE Transactions on Geoscience and Remote Sensing, 43, 1391–1402.

Welch, R., & Ehlers, W. (1987). Merging multi-resolution SPOT HRV and landsat TM data. Photogrammetric Engineering and Remote Sensing, 53, 301–303.

Yang, B., Li, S., & Sun, F. (2007). Image fusion using nonsubsampled contourlet transform. In Proceedings of the IEEE International Conference on Image and Graphics, pp. 719–724.

Yuhendra, Alimuddin, I., Sumantyo, J. T., & Kuze, H. (2012). Assessment of pan-sharpening methods applied to image fusion of remotely sensed multi-band data. International Journal of Applied Earth Observation and Geoinformation, 18, 165–175.

Zhang, Y. (2004). Understanding image fusion. Photogrammetric Engineering and Remote Sensing, 70, 657–661.

Zhou, J., Civco, D. L., & Silander, J. A. (1998). A wavelet transform method to merge Landsat TM and SPOT panchromatic data. International Journal of Remote Sensing, 19, 743–757.


Cite this article

Rajput, U.K., Ghosh, S.K. & Kumar, A. Comparison of Fusion Techniques for Very High Resolution Data for Extraction of Urban Land-Cover. J Indian Soc Remote Sens 45, 709–724 (2017). https://doi.org/10.1007/s12524-016-0615-0


DOI: https://doi.org/10.1007/s12524-016-0615-0