Abstract

Reservoir inflow prediction is a key factor to flood control decisions and is also crucial concerning the operational planning and scheduling of the reservoir. Today, machine learning methods have become very common in analyzing the reservoir inflow time series data. However, freezing a particular model requires rigorous trial and error practices depending on the structure of the data. The main objective of this paper is to forecast the reservoir inflow of Idukki Reservoir, Kerala, by analyzing the historical daily time-series data (2015–2020) using machine learning (ML)—Exponential smoothing (ES), Autoregressive Integrated Moving Average (ARIMA), and deep learning algorithms—Long Short-Term Memory (LSTM). The prediction results were evaluated for their MAPE (mean absolute percentage error) and FA (forecast accuracy). It was observed that the MAPE for ES, ARIMA, and LSTM were 61%, 43%, and 55%, respectively. As the conventional approach of using a single algorithm did not give a satisfactory result, the authors have devised a hybrid approach by using a weighted combination of the above 3 algorithms. The weights were appropriately chosen so as to lower the error in prediction. Experimental analysis showed that the weighted combination approach lowered the MAPE to 29%, thereby significantly improving the forecast accuracy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Inflow prediction in a reservoir, often characterized by its complexity and random behavior, is one of the most challenging tasks in hydrology. Especially in recent times owing to climate change and increased flood frequency, accurate inflow predictions are pivotal to flood control decisions (Chau and Thanh 2021). There are many factors that influence the same such as rainfall (which depends on temperature, humidity, wind speed, cloud formation, etc.), the topography of the catchment area, etc. The main contributors of the incoming water to a reservoir are the rainfall occurring at the catchment area during a particular time period and the release of water from an upstream reservoir if any. By day, the task of reliable prediction is becoming intricate due to global environmental changes and associated changes in temperature, rainfall patterns, etc.

Often the methods used for prediction vary from mathematical or physical models (Adeyemi 2021) to the artificial intelligence–based data-driven models. The data-driven models provide much better and more reliable accuracy because the model is formulated by finding the association between the system input and output variables rather than understanding the physical behavior of the system, which is often complex with a large degree of uncertainty (Solomatine et al. 2008; Guergachi and Boskovic 2008). Some examples of such models are the statistical linear regression models, Autoregressive Moving Average (ARMA) and Autoregressive Integrated Moving Average (ARIMA) models, and other machine learning (ML) models.

Many investigators have worked on prediction forecasting techniques based on historical data, i.e., time series analysis. These methods vary from simple averaging techniques to developing complex and highly sophisticated statistical models. When compared with statistical methods, machine learning techniques are found to be more precise in predicting forecasts with improved accuracy (Wang et al. 2017). These days, machine learning models such as ARIMA and SARIMA (Box and Pierce 1970; Chatfield 2003), exponential smoothing, support vector regression (SVR) (Zhang et al. 2018a, b; Yu et al. 2018), and deep belief network (DBN)(Xu et al. 2019) have been widely used for making predictions based on time series data. The vivid range of applications varies from forecasting road accidents (Rabbani et al. 2021), estimating pandemic death rates (Talkhi et al. 2021), and predicting corporate net income level (Gorbatiuk et al. 2021) among several others.

Today, many works in hydrology, specifically related to inflow prediction, use machine learning techniques. The use of ARIMA models for reliability forecasting and analysis was demonstrated by Ho and Xie (1998). The effectiveness of ARIMA over ARMA and autoregressive ANN was evaluated by Valipour et al. (2013). Flow forecasting of Hirakud Reservoir with ARIMA model was done by Rath et al. (2017) and a MAPE of 31.4% was obtained. A model using ARIMA and two types of ensemble models were used by Gupta and Kumar (2020) to predict the reservoir inflow of three major reservoirs Linganmakki, Supa, and Mani in Karnataka with an error of less than 5% between the actual and predicted values. Using ARIMA to predict short-term water tank levels was experimented with by Viccione et al. (2020). Rahayu et al. (2020) studied the discharge prediction of Amprong River using five different ARIMA models and the best was chosen based on MSE and other parameters. Recently, researchers have also incorporated deep learning techniques for inflow forecasting as they seem to produce much better results. Time lagged recurrent neural networks were used by Kote and Jothiprakash (2009) for reservoir inflow prediction with good forecast accuracy. Lee and Kim (2021) have recently developed a sequence-to-sequence (Seq2Seq) mechanism combined with a bidirectional LSTM for forecasting inflow. Devi Singh and Singh (2021) have worked on reservoir inflow prediction of the Bhakra Dam in India using LSTM.

Often in machine learning, ensemble averaging is used to produce separate models and then combine their results to create a better model. In most cases, an ensemble of models produces a better performance than in the case of a single model because the errors are averaged out. Lee et al. (2020) developed an ensemble average-based model with multiple linear regression (MLR), support vector machines (SVM), and artificial neural networks (ANN) for the inflow prediction of Boryeong dam, Korea. Similar ensemble approaches were carried out by many other investigators across the globe (Sun et al. 2022; Ostad-Ali-Askari and Ghorbanizadeh-Kharazi 2017).

The state of Kerala, the southernmost state in India, was devastated by one of the biggest floods of the century, the Kerala floods 2018. It was during August 2018 that all the shutters of the Idukki Reservoir were opened for the first time in 26 years. As it is known, any uncontrolled or excessive release of a huge amount of water has the potential for loss of life and damage to property due to flooding. Subsequent floods along with incessant rainfall and unexpected landslides affected a large population along the banks of Periyar.

According to the reports by the Kerala State Disaster Management, despite the CWC (Central Water Commission) setting up 275 flood forecasting stations across the country by the year of 2017, no flood forecasting stations (FFS) were set up by the CWC in Kerala (CAG 2018). Developing a flood forecasting system requires forecasted precipitation along with inflow data among various other inputs. Hence, inflow forecasting is very crucial when it comes to flood risk management. Therefore, for proper reservoir management, reliable and efficient inflow forecasting is of utmost importance. Although there are several works across the globe on reservoir inflow prediction using hydrological and machine learning mechanisms, there are very few works pertaining to reservoirs in Kerala.

The main objective of this paper is to analyze the daily time series inflow data of the Idukki Reservoir, Kerala, from 2005 to 2020 so as to predict the future inflow using machine learning and deep learning models, viz., ES, ARIMA, and LSTM, and to compare their results. In addition to this, an attempt has also been made to reduce the deficiencies and improve the results of the model by ensemble averaging ES, ARIMA, and LSTM methods.

Background

Exponential smoothing and ARIMA are two widely used time series forecasting techniques with complementary ways of approaching data. While exponential smoothing describes data in terms of trends and seasonality, the ARIMA model describes data in terms of its correlation and autocorrelation functions. The LSTM on the other hand is a powerful deep learning memory–based forecasting technique that eliminates the vanishing problem in RNN.

Exponential smoothing (ES)

During the late 1950s, Brown (1959) and Holt (1957) proposed exponential smoothing, which is one of the successful forecasting techniques even today. Exponential smoothing is a time-series forecasting method where the forecasts are predicted using exponentially decaying weighted averages of past observations. To simplify, the recent observations are given relatively more weight than the previous ones and so on. There are several types of smoothing techniques, namely, single exponential smoothing, double exponential smoothing, and triple exponential smoothing also called the Holt-Winters exponential smoothing.

Single exponential smoothing is best suited for data showing no trend or seasonality. It has a single smoothing parameter, alpha (α) where 0 ≤ α ≤ 1.

where \({y}_{T+1}\) is the one step ahead forecast for time T + 1. The smoothing parameter determines the weights to be assigned, so the more the value of α, the more the weightage.

Further extending the single exponential smoothing, Holt 1957 proposed the double exponential smoothing to forecast data with a trend. Double exponential smoothing is suitable for data showing trends in either an additive or multiplicative form. Here, in addition to α, the forecast equation has one more smoothing parameter for the trend, which is beta (β).

where \({y}_{T+h}\) is the h step ahead forecast for time T + h.

The triple exponential smoothing method also referred to as Holt-Winters exponential smoothing has the forecast function and three smoothing parameters alpha (α), beta (β), and gamma (γ). The method has two variations to incorporate additive and multiplicative seasonality. Additive seasonality is used for almost constant seasonal variations whereas multiplicative seasonality is for varying seasonal components.

Autoregressive Integrated Moving Average

ARIMA is a powerful statistical algorithm used in forecasting and it employs a different approach to time series forecasting by describing the autocorrelations in the data. It is capable of modeling any seasonal or non-seasonal (SARIMA) time-series data. It comprises three parts, auto regression (AR), integration (I), and moving average (MA). ARIMA relies on AR which means that it uses weighted past values to forecast future values. Integration reduces the seasonality of a time series by differencing. Moving average eliminates randomness from a time series.

An ARIMA model is characterized by 3 terms: p, d, and q where p is the order of the AR term, q is the order of the MA term, and d is the number of differencing required to make the time series stationary.

A pure AR only model is one where Yt depends only on its own lags and a pure MA only model is one where Yt depends only on the lagged forecast errors. So basically in an ARIMA model, the time series is at least differenced once in order to make it stationary and the AR and MA terms are combined so that the equation takes the form:

where α is the intercept term estimated by the model, \(Y_{t-1}\) is the first lag of the series, β is the coefficient of the lag, and \({\Phi}_{1}\) is the coefficient of forecast errors \(\in_{t-1}\).

As in the case of any DL or ML algorithms, the selection of input has a major role in making a reliable forecast. It is a common practice to use the autocorrelation function (ACF) and partial auto correlation function (PACF) curves to prioritize and select the appropriate input to the model, for determining AR and MA in the ARIMA model. ACF function is also used to determine the stationarity of data. The ACF function curve abruptly drops to zero for stationary data whereas for non-stationary data, it decreases gradually.

Similar to ACF and PACF, AIC (Akaike Information Criterion) and BIC (Bayesian Information criterion) can also be used to determine the order of the model. AIC tells how well the model and low AIC indicate a better model. BIC is very similar to AIC and both these metrics suggest a lower value for a better model.

Long Short Term Memory (LSTM)

LSTM was introduced by Hochreiter and Schmidhuber (Hochreiter and Schmidhuber 1997) in 1997 where they used gates and memory cells to handle the long-term dependency and mitigate the problem due to short-term memory in RNN.

where the different gates are the input gate (\({i}_{t}\)), input modulate gate (\({c`}_{t}\)), forget gate (\({g}_{t}\)) with weights U and W, and the output gate (\({o}_{t}\)). Also b is bias vector, \({c}_{t}\) is cell state, and \({h}_{t}\) is the hidden state. The forget gate (\({g}_{t}\)) decides which information should be retained and which should be left out. The cell state \({(c}_{t}\)) contains the new values that are relevant to the network. The output gate (\({o}_{t}\)) decides what the next hidden state should be. The hidden state (\({h}_{t}\)) contains information about previous inputs. These gates in LSTM have the capability to sequence data and will decide on the amount of information that is important and also pass them down the long chain to make predictions. This way, LSTM intelligently chooses the amount of information that has to be stored, while rejecting the rest and thus provides a better solution.

A basic LSTM architecture (Chevalier 2018) is shown in Fig. 1. It has the Tanh function which controls the values passing through the network, \({h}_{t}\) which denotes the new hidden state, \({h}_{t-1}\) which denotes the previous hidden state, and \({x}_{t}\) the input. The sigmoid activation, similar to Tanh, decides how much value should be passed and it takes values between 0 and 1. A value of 0 means not passing any value and 1 means passing everything.

LSTM architecture. Chevalier 2018

Materials and methods

Study area

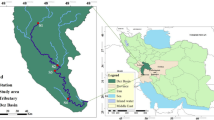

The Idukki Reservoir in Kerala, India, is one of the highest arch dams in Asia. It is situated between two mountains, Kuravanmala and Kurathimala, in the district of Idukki. The dam, built on the Periyar River, has gross storage of 1996 Mm3 which is equivalent to 1.996 km3. The Government of Canada aided in the construction of the dam and it was inaugurated by Prime Minister Smt. Indira Gandhi on February 17, 1976. It is currently owned by the Kerala State Electricity Board (KSEB) of the Government of Kerala. The water from the dam is primarily used for the generation of electricity for the state. Table 1 details the salient features of the reservoir. Figure 2 shows the location of the reservoir on Google Earth.

Data collection and preparation are among the primary step in any data analytics research. One common problem concerning data collection is missing data because of copious information and the interpretation of the same (Hamzah et al. 2021). For the purpose of the study, daily reservoir inflow data of Idukki Reservoir from January 2015 to December 2020 were carefully collected and sorted from the Dam Safety Authority Department of the Kerala State Electricity Board (KSEB) with very negligible data loss. Next, the data was checked and cleared for any ambiguous values/characters using Pythonic Data cleansing. Table 2 depicts the descriptive data of the deployed information and Fig. 3 depicts the day-to-day reservoir inflow of Idukki Reservoir.

Methodology

Data from 1st January 2015 to 30th November 2020 were used for training purposes and the rest of the data till 31st December 2020 were used for model reliability testing. Furthermore, depending on the results, inflow predictions were done for a period of 59 days from 1st January 2021 to 28th February 2021.

From Fig. 4, it can be interpreted that the data is an additive time series and hence the time series data is considered to be a sum of its various components as in the case of additive decomposition. Figure 5 depicts the ACF and PACF on the inflow data. It calculates the dependence of present samples on the past samples of the same series.

-

• Data pre-processing: Firstly, the reservoir data obtained from the authorities is checked for missing values and is normalized. The data set is checked for stationarity using the Augmented Dicky Fuller test (ADF). The ADF Test Statistic indicated a p value less than − 0.05 which means that the data was stationary and, hence, no further pre-processing was required.

-

• Model implementation: The clean data set is now divided into training data and test data in the ratio of 80:20. The data is trained and tested using 3 models, namely, ES, ARIMA, and LSTM.

-

a. Exponential smoothing: The data is fed into a single exponential smoothing network with optimization parameter alpha (α) = 0.8036. As observed in the time-series decomposition, the input data does not exhibit any trend as such; hence, the most suitable form of ES is single exponential smoothing. The smoothing factor alpha (α) ranges between 0 and 1. A value of alpha (α) closer to 1 indicates fast learning, which means that the recent observations are given more weightage (Shmueli and Lichtendahl 2016).

-

b. Autoregressive Integrated Moving Average: Firstly, the Dickey-Fuller test was performed to check the stationarity of data as data should be stationary to apply ARIMA. The hypothesis returned p = 0.01 which meant that the data is stationary. To optimize the parameters p, d, and q for the best fit, the ACF and PACF were plotted. After evaluation, the ARIMA model was implemented with p, d, q values ARIMA (1, 0, 1) respectively as it resulted in the smallest AIC value. In machine learning, Akaike’s information criterion (AIC) and Bayesian Information Criterion (BIC) are the statistics that help in model selection. They compare the superiority of a set of statistical models to each other and a lower AIC or BIC value shows a better fit (Snipes and Taylor 2014). Hence, the AIC is a factor that determines the order (p, d, q) of the ARIMA model. The model was tested for many other structures including ARIMA (3, 1, 1), ARIMA (4, 2, 0), etc., and the best was chosen based on AIC values as shown in Table 3.

-

c. Long Short-Term Memory: Here, the data is fed into a model with an input layer followed by a hidden layer which comprises 5 LSTM layers and 1 dense layer with the number of neurons 50, 100, 100, 100, and 50, respectively, with activation function ReLU (Rectified Linear Unit), loss function MSE, and optimizer as Adam. The performance of the LSTM model can be optimized by an appropriate selection of these parameters. The ReLU is a common activation function used in the hidden layer as it results in better performance. It is because ReLU does not activate all the neurons at the same time and hence provides better computational efficiency. It returns the input or 0 if the input is less than 0 (Agarap 2018). The Adam is an optimization algorithm used by DL models for stochastic gradient descent. Adam is very similar to other optimizers such as AdaDelta and is chosen because of its less memory requirement, easy implementation, and efficiency (Kingma and Ba 2014). The output layer, which is the third layer, uses the sigmoid activation function. The input is a weighted sum of observations and a bias is added to each hidden layer. The output is obtained such as to minimize the error by correcting the weights and the bias.

-

• Model prediction: The results obtained were assessed using statistical methods like mean absolute percentage error (MAPE) and forecast accuracy (FA). Pertaining to the current data set, the initial results indicate that ARIMA performs better than LSTM.

The above models were simulated in RStudio’s Keras package with the TensorFlow backend (Gandrud 2018; Hodnett and Wiley 2018). As the prediction results from the individual models (ES, ARIMA, and LSTM) were not satisfactory, a weighted ensemble average was taken for a much more reliable forecast. A weighted ensemble average, an extension of simple ensemble averaging, produces an output with the weights added in such a way that it is proportional to each model’s individual performance. Hence, ARIMA was given slightly more bias as it performed better individually. The weights were optimized using Solver-based optimization technique to minimize the error. Solver is a Microsoft Excel add-on for optimization used in what-if analysis.

Experiment results

There are different tools to evaluate the accuracy of a prediction model. In this study, the efficiency of prediction for each of the models is calculated in terms of MAPE and FA.

Mean Absolute Percentage Error =

The smaller the value of MAPE, the better is the prediction result.

Forecast accuracy =

The higher the value of FA, the more accurate is the prediction.

where, pred (t) is the predicted value at time t; org (t) is the actual value at time t; and n is the total predicted time.

The prediction results for ES gave a MAPE of 61% with FA of 35%. ARIMA gave 43% MAPE with 57% FA whereas LSTM gave MAPE of 55% with FA of 45%. To generate a much more reliable result, a weighted average of the above 3 models were taken. This combined weighted average provided a better model in terms of MAPE and FA. These results are tabulated in Table 4.

A graphical plot of the predictions is shown in Figs. 6, 7, 8, and 9 with gross inflow (MCM) on the y-axis and date on the x-axis. The right-side plots in these figures are a zoomed version of the predictions for better understanding.

Apart from tuning the hyper-parameters, there are different optimization algorithms employed in ES, ARIMA, and LSTM models. A summary of a few of these techniques is included below in Table 5.

Conclusion

A reliable reservoir inflow forecasting mechanism is beneficial for an optimal operation as it largely influences many decisions including flood management. Owing to the recent climatic changes and flood scenarios, scheming inflow rates have become a crucial part of reservoir management. The current work has explored a combined weighted model of ES, ARIMA, and LSTM, for developing a reservoir inflow forecast model for the Idukki Reservoir, India. For the experiment, daily reservoir inflow data was collected from the authorities for a period from 2015 to 2020. The input data was cleaned and divided into two sets for training and testing purposes. These were then individually fed into ES model with smoothing factor alpha (α) 0.8036; ARIMA model with p, d, q (1, 0, 1); and LSTM network with 5 layers and 1 dense layer. Each model’s efficiency was defined in terms of MAPE and FA. The individual results showed that ARIMA performed better for the particular input data of the reservoir with a MAPE of 43% whereas ES and LSTM showed a MAPE of 61% and 55%, respectively. A distinctive improvement in performance was observed while taking a weighted ensemble average of the three models with MAPE reduced to 29% thereby increasing the forecast accuracy to 71%. One drawback of the current study was that it deals with only historical time series data of the inflow and does not take into account various other climatic factors such as rainfall and temperature. Hence, for further investigation, it is advised to include the above-said parameters for an enhanced result.

Data availability

The data that support the findings of this study are available on request from the corresponding author. Some of the data are not publicly available due to restrictions by the data-providing agency.

References

Adeyemi TS (2021) Analytical solution of unsteady-state forchheimer flow problem in an infinite reservoir: the Boltzmann transform approach. J Hum Earth Future 2(3):225–233

Agarap AF (2018) Deep learning using rectified linear units (relu). arXiv preprint. arXiv:1803.08375

A Rath, KSBhoi, S Samantaray, PC Swain (2017) Flow forecasting of Hirakud reservoir with ARIMA model. International Conference on Energy, Communication, Data Analytics and Soft Computing (ICECDS). IEEE, Chennai, India, 9 pages (2017)

Box GE, Pierce DA (1970) Distribution of residual autocorrelations in autoregressive-integrated moving average time series models. J Am Stat Assoc 65(332):1509–1526

Brown RG (1959) Statistical forecasting for inventory control. McGraw/Hill.

CAG (2018) Report on performance audit of ‘Preparedness and response to floods in Kerala (2018), Chapter 3 https://cag.gov.in/en

Chatfield C (2003) The analysis of time series: an introduction. Chapman and Hall/CRC.

Chau TK, Thanh NT (2021) Primarily results of a real-time flash flood warning system in Vietnam. Civ Eng J 7(4):747–762

Chevalier G (2018) LARNN: linear attention recurrent neural network. arXiv preprint. arXiv:1808.05578

Deng C, Zhang X, Huang Y, Bao Y (2021) Equipping seasonal exponential smoothing models with particle swarm optimization algorithm for electricity consumption forecasting. Energies 14(13):4036

Devi Singh A, Singh A (2021) Optimal reservoir operations using long short-term memory network. arXiv e-prints, pp.arXiv-2109

Ho S, Xie M (1998) The use of ARIMA models for reliability forecasting and analysis. Comput Ind Eng 35:213–216

Hodnett M, Wiley JF (2018) R deep learning essentials: a step-by-step guide to building deep learning models using TensorFlow, Keras, and MXNet. Packt Publishing Ltd.

Holt CE (1957) Forecasting seasonals and trends by exponentially weighted averages (O.N.R. Memorandum No. 52). Carnegie Institute of Technology, Pittsburgh USA

Gandrud C (2018) Reproducible research with R and RStudio. Chapman and Hall/CRC.

Gorbatiuk K, Hryhoruk P, Proskurovych O, Rizun, N, Gargasas A, Raupelienė A, Munjishvili T (2021) Application of fuzzy time series forecasting approach for predicting an enterprise net income level. In E3S Web of Conferences (Vol. 280). EDP Sciences

Guergachi A, Boskovic G (2008) System models or learning machines? Appl Math Comp 204:553–567

Gupta A and Kumar A (2020) Two-step daily reservoir inflow prediction using ARIMA-machine learning and ensemble models.

Hamzah FB, Hamzah FM, Razali SFM, Samad H (2021) A comparison of multiple imputation methods for recovering missing data in hydrological studies. Civil Eng J 7(9):1608–1619

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Kingma DP and Ba J (2014) Adam: a method for stochastic optimization. arXiv preprint. arXiv:1412.6980

Kote SA, Jothiprakash V (2009) Monthly reservoir inflow modeling using time lagged recurrent networks

Kumar R, Kumar P, Kumar Y (2022) Multi-step time series analysis and forecasting strategy using ARIMA and evolutionary algorithms. Int J Inf Technol 14(1):359–373

Lee S, Kim J (2021) Predicting inflow rate of the Soyang River Dam using deep learning techniques. Water 13(17):2447

Lee D, Kim H, Jung I, Yoon J (2020) Monthly reservoir inflow forecasting for dry period using teleconnection indices: a statistical ensemble approach. Appl Sci 10(10):3470

Li BJ, Sun GL, Liu Y, Wang WC, Huang XD (2022) Monthly runoff forecasting using variational mode decomposition coupled with gray wolf optimizer-based long short-term memory neural networks. Water Resour. Manage 1–21

Liu L, Wu L (2020) Predicting housing prices in China based on modified Holt’s exponential smoothing incorporating whale optimization algorithm. Socioecon Plann Sci 72:100916

Musdholifah A, Sari AK (2019) Optimization of ARIMA forecasting model using firefly algorithm. IJCCS (indonesian Journal of Computing and Cybernetics Systems) 13(2):127–136

Ostad-Ali-Askari SM, Ghorbanizadeh-Kharazi H (2017) Artificial neural network for modeling nitrate pollution of groundwater in marginal area of Zayandeh-rood River, Isfahan. Iran KSCE Journal of Civil Engineering 21(1):134–140

Rabbani MBA, Musarat MA, Alaloul WS, Rabbani MS, Maqsoom A, Ayub S, Bukhari H, Altaf M (2021) A comparison between seasonal autoregressive integrated moving average (SARIMA) and exponential smoothing (ES) based on time series model for forecasting road accidents. Arabian Journal for Science and Engineering, pp.1–26

Rahayu WS, Juwono PT, Soetopo W (2020) February. Discharge prediction of Amprong river using the ARIMA (autoregressive integrated moving average) model. In IOP Conference Series: Earth and Environmental Science (Vol. 437, No. 1, p. 012032). IOP Publishing

Rashid TA, Fattah P, Awla DK (2018) Using accuracy measure for improving the training of LSTM with metaheuristic algorithms. Procedia Comput Sci 140:324–333

Shmueli G and Lichtendahl Jr KC (2016). Practical time series forecasting with r: a hands-on guide. Axelrod Schnall Publishers.

Skariah M, Suriyakala CD (2021) Gauging of sedimentation in Idukki Reservoir, Kerala (1974–2019), and the impact of 2018 Kerala floods on the reservoir. J. Indian Soc. Remote Sens: 1–10

Solomatine DP, Abrahart, R, See L (2008) Data-driven modelling: concept, approaches, experiences. , In: Practical hydroinformatics: computational intelligence and technological developments in water applications (Abrahart, See, Solomatine, eds), Springer-Verlag

Snipes M, Taylor DC (2014) Model selection and Akaike information criteria: an example from wine ratings and prices. Wine Econ Policy 3(1):3–9

Sun X, Zhang H, Wang J, Shi C, Hua D, Li J (2022) Ensemble streamflow forecasting based on variational mode decomposition and long short term memory. Sci Rep 12(1):1–19

Talkhi N, Fatemi NA, Ataei Z, Nooghabi MJ (2021) Modeling and forecasting number of confirmed and death caused COVID-19 in IRAN: a comparison of time series forecasting methods. Biomed Signal Process Control 66:102494

Valipour M, Banihabib ME, Behbahani SMR (2013) Comparison of the ARMA, ARIMA, and the autoregressive artificial neural network models in forecasting the monthly inflow of Dez dam reservoir. J Hydrol 476:433–441

Viccione G, Guarnaccia C, Mancini S, Quartieri J (2020) On the use of ARIMA models for short-term water tank levels forecasting. Water Supply 20(3):787–799

Wang L, Wang B, Zhang P, Liu M, Li C (2017) Study on optimization of the short-term operation of cascade hydropower stations by considering output error. J Hydrol 549:326–339

Wu F, Cattani C, Song W, Zio E (2020) Fractional ARIMA with an improved cuckoo search optimization for efficient short-term power load forecasting. Alexandria Eng J 59(5):3111–3118

Xu W, Peng H, Zeng X, Zhou F, Tian X, Peng X (2019) Deep belief network-based AR model for nonlinear time series forecasting. Appl Soft Comput 77:605–621

Yu X, Zhang X, Qin H (2018) A data-driven model based on Fourier transform and support vector regression for monthly reservoir inflow forecasting. J Hydro-Environ Res 18:12–24

Zhang D, Lin J, Peng Q, Wang D, Yang T, Sorooshian S, Liu X, Zhuang J (2018a) Modeling and simulating of reservoir operation using the artificial neural network, support vector regression, deep learning algorithm. J Hydrol 565:720–736

Zhang Z, Yang R, Fang Y (2018b) LSTM network based on on antlion optimization and its application in flight trajectory prediction. In 2018b 2nd IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC) (1658–1662). IEEE

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Responsible Editor: Broder J. Merkel

Rights and permissions

About this article

Cite this article

Skariah, M., Suriyakala, C.D. Forecasting reservoir inflow combining Exponential smoothing, ARIMA, and LSTM models. Arab J Geosci 15, 1292 (2022). https://doi.org/10.1007/s12517-022-10564-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12517-022-10564-x