Abstract

This study was conducted on the Karkheh catchment area in the country of Iran on the basis of data from four stations of Cham Anjir, Kashkan, Pole-zal, and Jologir. The data were monthly collected for the years from 1997 to 2017, from which 70% were used for calibration and 30% for test validation. Artificial neural networks (ANNs) were used in the prediction of river flow. To optimize the weight coefficients of the network, the meta-heuristic genetic algorithm (GA), particle swarm optimization (PSO), ant colony optimization (ACO), harmonic balance algorithm (HB), and algorithm of the innovative gunner (AIG) were employed. To assess the modeling performance of the statistic parameters, the coefficient of determination (R2), root mean square error (RMSE), Nash-Sutcliffe efficiency (NSE), mean absolute error (MAE), and percentage of bias (PBIAS) were adopted. The achieved best input combination was different for each algorithm. In summary, variables with lower correlations had poorer performances. Hybrid algorithms improved the predicting power of the considered independent model. The results showed that for the studied four stations, among the meta-heuristic algorithms, AIG had the highest predictive power correlation coefficient R2 = 0.985–0.995, root mean square error (RMSE) = 0.036–0.057 m3/s, mean absolute error (MAE) = 0.017–0.036 m3/s, Nash-Sutcliffe coefficient (NSE) = 0.984–0.994, and bias = 0.008–0.024 and had the best performance in estimating the daily flow of the river.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Different factors such as population growth and human activity development have led to the increase in water demand. This, in turn, has made considerable changes to water catchments and uses of rivers, resulting in economic losses such as runoffs, destructive floods, landslides, storms, and tsunamis (Yapo et al. 1996; Tokar and Johnson 1999). Studies have shown that half of the countries around the world are faced with drought. In many other regions, the hazards of storms and runoffs have caused the destruction of infrastructures (Wheater et al. 2008; Khaing et al. 2019). Adopting traditional and non-modernized, i.e., non-scientific and non-applicable, procedures in the agricultural section and inappropriate management factors (weakness in strategic management and programming, unskilled plans, etc.) have exerted a double pressure on natural resources, ecosystem, and hydrological cycles (Samra et al. 1999). In some regions, increasing rainfall leads to the rise of the runoff level, causing erosion and increased base flow in rivers (Friedman 1991). Rapid economic development and increased human activities have raised natural hazards, such as severe climate change, and transformed runoffs (Li et al. 2009). Thus, exact prediction and estimation of rainfall-runoff have an important role in programming and management of water resources in different fields, such as flood control (Bai et al. 2015; Liu et al. 2018), water transformation (Madani 2011), environmentology (He et al. 2018), optimization of water distribution systems (Zheng et al. 2015), and so on (Xu et al. 2019; Liu et al. 2019). For systematic and optimal modeling of hydrological processes, proper perception of the involved phenomena and their physical relations play a significant role (Jothityangkoon et al. 2001). Accordingly, researchers from around the world have developed and applied various hydrological models in different scientific fields (Chow et al. 1988; Pinos and Timble 2019). Accurate modeling of the hydrological processes can provide important fundamental information in different areas and significantly improve different systems of water resources (Nourani et al. 2007; Nourani and Mano 2007). Different models for predicting hydrological phenomena have been developed by researchers in the past decades (Niu et al. 2019). These models are classified into physically based, conceptual, and data driven, and each category has its specific advantages and disadvantages (Zhu et al. 2019). Artificial intelligence and data mining techniques are nowadays broadly applied to predicting non-linear hydrological time series in different fields of science. The main advantage of these techniques is their applicability to a wide range of conditions with dynamic and non-linear data with a high level of noise (Jain et al. 2004; Senthil Kumar et al. 2004). Black-box time series models are extensively used in the prediction of hydrological and environmental processes (Salas et al. 1980; Tankersley et al. 1993). Artificial neural networks (ANNs) are a powerful model for modeling rainfall-runoff processes with complex and non-linear nature (Senthil Kumar et al. 2004). The first efforts to perform simulation with ANNs were made by McCulloch and Walter Pitts in the early 1940s (McCulloch and Walter 1943), and afterwards, they were extended by Hebb (1949), Rosenblatt (1958), and Minsky and Papert (1969). Today, there are very powerful models in hand that can simulate complex, non-linear, time-consuming problems with high accuracy and low computational time (Hebb 1949; Minsky and Papert 1969). The main advantages of ANNs are the capability of utilizing incomplete data (in case of missing or low volume of data on a phenomenon or poor statement of the problem), fault tolerance (i.e., weakness in a cell or a neuron does not end in the failure of the whole project), machine learning and compatibility with other artificial intelligence (meta-heuristic and optimization) models, parallel computing (the ability to perform multiple tasks simultaneously), etc. (Minsky and Papert 1969). On the other hand, their main two disadvantages are requiring a great amount of long-term data to reach the best response and uncertain and imprecise prediction with out-of-range data (Melesse et al. 2011; Kisi et al. 2012). Two major strategies have been proposed to overcome these weaknesses: incorporation of fuzzy logic (FL) in ANNs to create an adaptive network-based fuzzy inference system (ANFIS) and combining ANNs with meta-heuristic optimization algorithms (Rajaee et al. 2009; Ebtehaj and Bonakdari 2014). The hybrid ANN-ANFIS model is applied to different fields of science and technology including the prediction of concrete compressive strength (Mohammadi Golafshani et al. 2020), daily potential evapotranspiration (Ramiro et al. 2020), effective factors in physical properties of nanofluids (Abdullah et al. 2018) and lake-level fluctuations (Talebizadeh and Moridnejad 2011), estimation of daily solar radiation (Quej et al. 2017), groundwater simulation and prediction (Zare and Koch 2018), etc. The development of computational intelligence has enhanced modeling efficiency, speed, and accuracy, enabling us to solve problems, the solutions to which could not be achieved through classical methods. In recent years, meta-heuristic models have drawn increasing attention for solving optimization problems. Simplicity, flexibility, a broad range of algorithms, and preventing local optimization in computational processes are the main advantages of these models. Simulated annealing (Kirkpatrick et al. 1983), Tabu search (Glover 1986), grey wolf optimization (Mirjalili et al. 2014), cuckoo optimization algorithm (COA) (Yang and Suash 2009), gravitational search algorithm (GSA) (Rashedi et al. 2009), particle swarm optimization (PSO) (Eberhart and Kennedy 1995), grasshopper optimization algorithm (GOA) (Mirjalili 2018), ant colony optimization (ACO) (Dorigo et al. 1999), harmony search (HS) method (Reynolds 1994), bee swarm optimization (BSA) (Karaboga 2005), momentum balance equation, etc. are samples of such algorithms. The main aim of this research was the estimation of river flow through hybrid smart algorithms. For this purpose, ANNs were used. However, as known, these networks go through trial and error to calculate the weight coefficients and bias, which decreases their prediction accuracy. To overcome this weakness and optimize weight coefficients to enter into modeling, five powerful meta-heuristic optimization algorithms, namely genetic algorithm (GA), PSO, harmonic balance algorithm (BFA), ACO, and algorithm of innovative gunner (AIG), were used. Finally, for better appraisal of the performance of the applied algorithms, the old GA model was compared with the brand new AIG method.

Based on the above review of literature and given that the Karkheh river is one of the main catchment areas in Iran that supply much water for drinking and nearby agriculture, especially under the pressure of low river water volume due to the recent drought, the importance of simulating river flow and taking constructive measures for water management is now felt more than ever. Therefore, this study aims to predict the daily flow of the Karkheh catchment area by using the proposed hybrid ANN-AIG model as well as to compare the obtained results with those of other hybrid models (ANN-GA, ANN-PSO, ANN-BFA, ANN-ACO).

Materials and methods

The studied catchment area

Karkheh catchment in the country of Iran was studied in this research. It is located between 48° 10′ to 50° 21′ E and 31° 34′ to 34° 7′ N with an area of 59,143 km2 from the western to the middle and south western Zagros Mountains in the Persian Gulf region. It is limited to the Sirvan, Sefid-rud, and Ghareh-chai rivers in the north, Dez catchment area in the west, and some parts of the western borders of the country in the south. Average annual rainfall in the Karkheh catchment area ranges from 951 mm in the southern to 9111 mm in the northern highlands and eastern regions. Vegetation was insignificant at lower altitudes, but it increased with the rise of altitude. Average annual rainfall was higher in the northern and eastern regions of the catchment, with 48.8% of the total precipitation occurring in winter on average, 30.60% in autumn, 20.4% in spring, and only 0.2% in summer. Figure 1 shows the geographical positions of the selected four stations in the Karkheh catchment area, namely Cham Anjir, Kashkan, Pole-zal, and Jologir. Table 1 also presents the geographical data on these stations

Data collection and selection of the best input combination for the model

Different methods of prediction are intended to relate dependent and independent variables. The relation between the variables of hydrological cycle and runoff is one of the major challenges for hydrologists. In this study, the preliminary step was determining the factors influencing the river flow discharge. The major factors in the estimation of runoff in an area are rainfall, discharge, temperature, evapotranspiration, humidity, and wind pressure and direction. Due to the lack of enough records and the required knowledge about the physical process of converting these parameters to flow variables and their effects as well as negligible values of some parameters in this study, only rainfall and discharge were accounted for. Time series were monthly, and the data were collected for the years from 1997 to 2017, from which 70% data were used for calibration and 30% for validation of the test (Khosravi et al. 2018). Model structure and the best input combination are the effective factors in the prediction power of a model and achieved results. Table 2 presents the variables (maximum, minimum, mean, standard deviation, and skewness) in the calibration and test data for the considered four stations. Before modeling, the best input combination with the optimum amounts to operate each model should be determined. To achieve the best input combination of parameters, Pearson correlation coefficient (PCC) was employed. Variables were considered as the potential input, and PCC was calculated between the input and the output. After calculating a correlation between variables, the models were ranked in descending order. Hence, the first combination had the highest correlation coefficient, and the last combination showed the lowest value. The highest correlation would indicate whether the variable with the highest correlation could predict output (discharge) on its own or not. Combination 2 was a complex of model 1 and the highest correlation in the following combination and so on (Khosravi et al. 2018; Sharafati et al. 2019). Table 3 shows the values of the correlation coefficient between different variables. These variables were the criteria for choosing the best model combination, as given in Table 4. To select the best input combination and the optimum values for different operators, MATLAB 2019a and, in some other analyses, Microsoft Excel 2019 were employed.

As observed in Table 3, the input was a combination of rainfall and discharge, and the output was discharge. In this table, P(t) shows rainfall; P(t − 1) is rainfall with a day delay, and P(t − 2) is rainfall with 2 days delay, Q(t − 1), Q(t − 2), and Q(t − 3) shows discharge with 1, 2, and 3 days delay, respectively. As noted above, selection of the combinations was based on PCC between variables in a way that, after calculating the correlation between discharge and all the other factors, in the first combination, the highest correlation was selected, and at the lower levels, the respective higher combinations and the highest coefficient were considered.

Evaluation criteria and comparison of the models

Different criteria are used in each project to evaluate model performance. In this study, the coefficient of determination (R2), root mean square error (RMSE), Nash-Sutcliffe efficiency (NSE), mean absolute error (MAE), and percentage of bias (PBIAS) were used for this purpose (Legates and McCabe 1999; Nagelkerke 1991). The values were between 0 and 1 for R2 with 1 showing higher prediction and precision. The value of zero indicates that the model does not determine the variability of the data around the mean, and the value of 1 implies that it identifies all the variability around the mean (Nagelkerke 1991). NSE is a normalized statistic that determines residual variance compared to the measured variance (Nash and Sutcliffe 1970) ranging within − ∞ < NSE < 1 with the optimum value of 1. In other words, the response is optimum if the value of NSE is equal to 1. Moreover, NSE values from 0 to 1 are generally regarded to be at the acceptable level, standing for better observational amounts than estimation amounts (Duie Tien et al. 2020). This parameter has also been suggested by ASCE (ASCE 1993), and it is very frequently used because it presents a broad range of information for reported values. Moreover, its applicability has experienced a significant increase to different sciences in recent years (Sevat and Dezetter 1991; Kesgin et al. 2020). PBIAS measures the mean of (simulated) calculation (Dabanlı and Şen 2018) and can be positive and/or negative. Zero is the optimum, and low-magnitude values show model precision in the simulation process; positive PBIAS values indicate underestimation, and negative values stand for overestimation of the model (Gupta et al. 1999). PBIAS is also a broadly used indicator by many researchers in different fields, including hydrology, water resource management, and earth sciences (Pengxin et al. 2019). The formulations for calculating all the considered indicators are given in Eqs. 1 to 5. In this study, in addition to the above criteria, boxplot and Taylor diagram were used. Taylor diagram and boxplots are common graphic procedures used in model performance comparison. The Taylor diagram is an appropriate tool for assessing different methods and has recently been applied to the fields of weather forecast, water science, and so on. It is represented in the form of a semi-circle indicating positive and negative correlations (a quadrant shows only a positive correlation). The values of the correlation coefficient are given in the form of radii, and standard deviations are concentric circles (Taylor 2001). Boxplot was introduced by John Tukey (1969). It is a common figure that shows statistic values for data. In other words, it compares data in observational form (Lo Conti et al. 2014).

The models used in the research

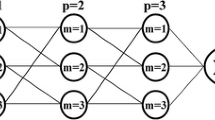

ANNs have different components, such as input layers, weights and bias, hidden layers, operator, and output. An efficient calibration step makes ANNs capable to predict an output and an output layer of neurons (Schalkoff 1997). The main steps for designing data collection network are network construction and configuration, assigning initial values and obtaining optimum weights and bias, education, and finally, validation of the network (Haykin 1994; Engelbrecht 2007). It can be stated that the most important part in networks is the selection of weight coefficients and bias, which is performed through trial and error and defined as a calibration step. The greatest challenge in selecting these coefficients is the trial and error, which is in turn subject to many negatively influencing factors, such as selection of incorrect initial amounts, improper configuration, human error, etc. The augmentation of the errors caused by such factors in the final run decreases prediction accuracy in the modeling process. To overcome the weakness, two strategies are often adopted, as mentioned in the Introduction section. To improve network precision in estimating weight coefficients and bias, four optimization algorithms were used in this study. The research was carried out in two steps. In the first step, ANNs were constructed as an independent model, and their precision was evaluated. In the second step, the hybrid compounds of ANN-GA, ANN-PSO, ANN-HB, and ANN-AIG were used. The purpose was to find the best (optimum) response for weight coefficients and increase model accuracy. The applied optimization algorithms in this study are discussed in the following.

Algorithm of the innovative gunner (AIG)

AIG is one of the novel meta-heuristic optimization algorithms presented by Pijarski and Kacejko (1970). With regard to the strong structure of the algorithm, it is expected to find significant applicability to different fields of science and technology. This algorithm has high efficiency and speed in solving optimization problems (e.g., mechanical and mathematical benchmark performances). High convergence speed and obtaining an optimum response in the shortest possible time with the lowest cost and highest precision are the advantages of this algorithm. It conducts deep exploration in the searching space by swarm response vectors to achieve the optimum response. AIG obtains different solutions and responses that are considerably efficient in preventing being trapped in local optima. This algorithm is extraordinarily useful in solving objective functions with multiple dimensions and different forms. It is predicted to obtain better results than other swarm intelligence methods (e.g., GA, PSO, GOA, etc.) Figure 2 shows the flowchart of the algorithm. The steps of the algorithm are as follows:

-

1)

Determine the start point (the initial amount for the first bullet).

-

2)

Determine the distribution distance (bullet shooting distance from the gun to the point of impact).

-

3)

Calculate the produced bullet (the second bullet in the third step originating from the first bullet).

-

4)

Investigate the bullet impact (bullet place, or if the bullet accurately hits the target).

-

5)

Select N bullets randomly as the main bullets (if the bullet hits the target).

-

6)

Investigate and update the place and position of the point of impact (if the bullet hits the target, end; otherwise, repeat the previous steps).

-

7)

Determine the best recorded position.

-

8)

End.

Genetic algorithm (GA)

GA has been inspired by genetic science and Darwin’s evolution theory and is based on the survivability of the top members or natural selection. The algorithm was presented by Halland in 1970, and since then, it has been extensively applied to different engineering problems, such as hydrological models, ground water, etc. GA is a searching method based on selection mechanism, which combines the best artificial remains with the genetic functions obtained from nature (Podger 2004). GA searches among different points, uses codes instead of real amounts, and follows probability laws (Rouhani and Farahi Moghadam 2014). According to the results of the assessments, it directs the searching process towards the optimal responses. After giving values to a random set of strings to achieve the optimal point, it calculates the objective function, which is the criterion for evaluating the performance and compatibility of the strings. If the optimum state criterion is not satisfied, a new generation begins. After generating the offspring of the second generation (elite, hybrid, and mutant) in defined ratios, using the parent selection algorithms, new generations are replicated until the stopping criteria are met (Kennedy and Eberhart 1995). Figure 3 gives the flowchart of the GA algorithm.

Particle swarm optimization (PSO)

PSO was first presented by Kennedy and Eberhart (1995). It is a simulation algorithm for colony behavior, the main idea of which originates in regular flocking of birds and fishes. In this algorithm, similar to other developmental techniques, potential solutions are investigated for exploration of the searching space (Kennedy and Eberhart 1995). Different types of PSO have successfully been applied to hydrological projects as a useful and powerful optimization algorithm. In this method, each particle has a speed vector that is responsible for changing its position to explore among the present responses. If the searching space is D-dimensional, it represents a particle in the population with D-dimensional vector Xi = (xi1,xi2,…,xiD), velocity of place change Vi = (vi1,vi2,…,viD), and the best place Pi = (pi1,pi2,…,piD). The best particle in the whole population is identified by the index g. The particle swarm is follows:

where i = 1, 2, ⋯N with N representing the swarm size and d = 1, 2, ⋯D with D being the dimension size. n is the replication number, and w is the weight inertia. c1 and c2 are fixed positive coefficients that stand for cognitive and social parameters, and r1, i, d and r2, i, d are random numbers in a range of (0,1) with uniform distribution. Finally, T is the time period. Since there was no speed control process involved, it was essential to consider the VMax; above which, the particles would bypass the range of acceptable solutions, and below which exploring the search space properly would be impossible (Kennedy 1998). Figure 4 presents the PSO flowchart.

Harmonic balance algorithm

Harmonic balance is a commonly applied method of approximating periodical responses for normal and non-linear differential equations (Sarrouy and Sinou 2011; Detroux et al. 2014). Harmonic balance has various applications, e.g., to quasi-periodic flows that are determined by a finite number of governing frequencies and do not necessarily need to be harmonic (Krack and Gross 2019). Harmonic balance is of high importance for non-linear systems. The main duty of harmonic balance is to determine non-linear Fourier coefficients. Harmonic balance is a method for calculating the steady response of non-linear equations (Deuflhard 2006), and it is applied to non-linear electric circuits (Nakhla et al. 1976). Harmonic balance is efficient and flexible, and it calculates the steady-state response directly through Fourier series. It is a pure frequency domain technique.

Ant colony optimization (ACO)

ACO was presented by Dorigo (1992) for solving seller problems. It has been inspirited by ant behavior in searching food. The main steps for solving optimization problems by ACO are as follows. Figure 5 shows the flowchart of the algorithm.

-

1.

After defining the appropriate graph for the problems in hand, the pheromone level is considered for all options, and each ant is placed on an initial point from which it moves.

-

2.

Each ant must select and go to another point by considering a possible relation. If an ant moves from all the points, it will build a possible response.

-

3.

After achieving a complete response by an ant, the objective function value for the respective response is calculated. Steps 2 and 3 are repeated for all the ants. Selection probability increases with the subsequent replications, and this will help in reaching the optimum response.

-

4.

If a stop criterion of the algorithm is met, modeling is finished.

Results and discussion

Models and algorithms were assessed by a dataset, and the highest efficiency for modeling and analysis was selected. This was performed at six phases, as shown in Table 4. In other words, the phases were the best input combinations chosen based on the correlation coefficient. Moreover, for each model, six combinations were used for calibration and testing (Khosravi et al. 2016). Researchers often use models based on R2 coefficient and RMSE. The main purpose of artificial intelligence-based systems is decreasing estimation error, and hence, their criterion for ranking the models is RMSE. It can be stated that adding variables with high CC generally increases point prediction efficiency. However, this is not always the case, and increasing such variables may lead to decrease in prediction accuracy. Table 5 shows input combinations based on RMSE.

The results illustrate that variable combinations are different for various structures of models. The RMSE values for each model were calculated for the calibration and test purposes. The lowest RMSE was selected for testing to be able to evaluate precision. The results in Table 4 show that all the models in all the four stations of input combination 6 achieved their best performance with the lowest RMSE. Since the data were normalized within the range of 0 to 1, the error was calculated precisely. Moreover, since combination 6 has the highest number of parameters or variables, the error decreases, and it is the preferred combination.

Performance evaluation of the models

For all the four stations and all the developed models (ANN-AIG, ANN-HB, ANN-PSO, and ANN-GA), scatter plots and time series graphs were drawn for the observational and calculation data, as illustrated in Fig. 6. The results showed that for Cham Anjir station, the highest precision belonged to ANN-HB (Qpred = 1.0213Qobs + 0.6734, R2 = 0.9998), and the lowest belonged to ANN-GA (Qpred = 0.9582Qobs + 3.6453, R2 = 0.1572). ANN-AIG had the highest precision in Jologir station, and ANN-GA achieved the lowest precision. In Kashkan station, ANN-ANT model reached the highest, and ANN-PSO had the lowest precision. ANN-AIG and ANN-GA showed the highest and the lowest precisions, respectively, in Pole-zal station. After selecting the best input combination for each model and drawing scatter plots and time series graphs for the observations and calculations in all the four stations, the performances of the models with the calibration and test data are given in Tables 6, 7, 8, and 9. In summary, after selection of the best input combination for each model, the predictions in discharge simulation for all the stations were studied. The values for the performance of Cham Anjir station in test validation (Table 6) showed that ANN-AGI had the highest accuracy of prediction (RMSE = 0.054, MAE = 0.032, R = 0.987), followed by ANN-PSO (RMSE = 0.062, MAE = 0.037, R = 0.963), ANT-ANN (RMSE = 0.075, MAE = 0.047, R = 0.948), HB-ANN (RMSE = 0.086, MAE = 0.053, R = 0.932), and GA-ANN (RMSE = 0.098, MAE = 0.064, R = 0.921). For the Jologir, Kashkan, and Pole-zal stations, the results were similar with ANN-AIG having the best and GA-ANN having the lowest performance. The PBIAS value was positive for all the stations, which indicated underestimation with all the models. This study applied two main groups of quantitative and graphical approaches (scatter, boxplot, and Violin plots) to assess the performance of the given models. The distinguishing advantage that the boxplot diagram enjoys is that the predicted mean, quartiles, and range of datasets (max and min values) can be compared with the observational data. The boxplot for fluctuations of discharge displayed in Fig. 7 suggests that the hybrid ANN-AIG model is well compatible with the prediction of the observed maximum fluctuations of discharge (Fig. 8).

Conclusion

In summary, it can be stated that the developed models were appropriate for all the stations. Comparing stations showed that Kashkan station had the best performance, which could be attributed to low waste data, accuracy of measurements and observational parameters, operator precision, and appropriate data quality compared to other stations. The results also showed that more efficient parameters (dependent variables) would lead to improved performance. Higher efficiency and precision were also achieved with greater amounts of input. Our results showed that, according to the R2 and RMSE values, AIG had the highest efficiency among all the considered algorithms. It had the best response for all the stations with the highest prediction capability and accuracy. The reasons for the superiority of this model were the use of primary and secondary parameters, low loss function, saving time in reaching the optimum solution, and higher convergence, which made the weights converge to the best values. In fact, it can be stated that while GA, PSO, and ACO are focused in loss function and the primary criteria, AIG, in addition to these factors, considers the secondary parameters, which play a significant role in achieving the optimum results. The secondary parameters basically decrease the search domain, which result in more accurate and faster convergence. Overall, this research demonstrated that the ANN-AIG hybrid model could be effective in predicting the daily flow of a river. Given that the decision to exploit water resources and to implement management strategies in many consumption processes (especially in agriculture and industry) hinges on an estimate of river flow, the proposed hybrid model in predicting river flow can be an appropriate tool for management decisions.

References

Abdullah AAAA, Soltanpour Gharibdousti M, Goodarzi M, de Oliveira LR, Safaei MR, Pedone Bandarra Filho E (2018) Effects on thermophysical properties of carbon based nanofluids: experimental data, modelling using regression, ANFIS and ANN. Int J Heat Mass Transf 125:920–932. https://doi.org/10.1016/j.ijheatmasstransfer.2018.04.142

ASCE (1993) Criteria for evaluation of watershed models. J Irrig Drain Eng 119(3):429–442. https://doi.org/10.1061/(ASCE)0733-9437

Bai T, Chang JX, Chang FJ, Huang Q, Wang YM, Chen GS (2015) Synergistic gains from the multi-objective optimal operation of cascade reservoirs in the Upper Yellow River basin. J Hydrol 523(479):758–767. https://doi.org/10.1016/j.jhydrol.2015.02.007

Chow VT, Maidment DR, Mays LW (1988) Applied hydrology. McGraw–Hill, Singapore

Dabanlı İ, Şen Z (2018) Precipitation projections under GCMs perspective and Turkish Water Foundation (TWF) statistical downscaling model procedures. Theor Appl Climatol 132:153–166. https://doi.org/10.1007/s00704-017-2070-4

Detroux T, Renson L, Kerschen G (2014) The harmonic balance method for advanced analysis and design of nonlinear mechanical systems, in: Nonlinear Dynamics, Volume 2: Proceedings of the 32nd IMAC. A Conference and Exposition on Structural Dynamics. https://doi.org/10.1007/978-3-319-04522-1_3

Deuflhard, P (2006) Newton methods for nonlinear problems. Berlin: Springer-Verlag. Section 7.3.3.: Fourier collocation method

Dorigo, M (1992) "Optimization, learning and natural algorithms." Ph.D. Thesis, Politecnico di Milano, Milan, Italy.

Dorigo M, Di Caro G, Gambardella LM (1999) Ant algorithms for discrete optimization. Art&Life 5(2):137–172. https://doi.org/10.1162/106454699568728

Duie Tien B, Khosravi K, Tiefenbacher J, Nguyen H, Kazakis N (2020) Improving prediction of water quality indices using novel hybrid machine-learning algorithms. Journal of Science of the Total Environment 721:136612. https://doi.org/10.1016/j.scitotenv.2020.137612

Eberhart R, Kennedy J (1995) “A new optimizer using particle swarm theory.” In Proceedings of the Sixth International Symposium on Micro Machine and Human Science, pp 39–43: IEEE. 10.1109/MHS.1995.494215

Ebtehaj I, Bonakdari H (2014) Performance evaluation of adaptive neural fuzzy inference system for sediment transport in sewers. Water Resour Manag 28(13):4765–4779. https://doi.org/10.1007/s11269-014-0774-0

Engelbrecht AP (2007) Computational intelligence: an introduction. John Wiley & Sons. https://doi.org/10.1007/978-3-540-78293-3_1

Friedman JH (1991) Multivariate adaptive regression splines (with Discussion). Ann Stat 19(1):1–141. https://doi.org/10.1214/aos/1176347963

Glover F (1986) Future paths for integer programming and links to artificial intelligence. Comput Oper Res 13(5):533–549. https://doi.org/10.1016/0305-0548(86)90048-1

Gupta HV, Sorooshian S, Yapo PO (1999) Status of automatic calibration for hydrologic models: comparison with multilevel expert calibration. J Hydrol Eng 4(2):135–143. https://doi.org/10.1061/(ASCE)1084-0699(1999)4:2(135)

Haykin S (1994) Neural networks: a comprehensive foundation, 1st edn. Upper Saddle River, NJ, USA, Prentice Hall PTR

He L, Chen Y, Kang Y, Tian P, Zhao H (2018) Optimal water resource management for sustainable development of the chemical industrial park under multi-uncertainty and multi-pollutant control. Environ Sci Pollut Res 25(27):27245–27259. https://doi.org/10.1007/s11356-018-2758-8

Hebb D (1949) the organization of behavior. New York: Wiley. ISBN 978-1-135-63190-1. 10.1002/1097-4679(195007)6:3<307::AID JCLP2270060338>3.0.CO;2-K

Jain A, Sudheer KP, Srinivasulu S (2004) Identification of physical processes inherent in artificial neural network rainfall–runoff models. Hydrol Process 18:571–581. https://doi.org/10.1002/hyp.5502

Jothityangkoon C, Sivapalan M, Farmer DL (2001) Process controls of water balance variability in a large semi-arid catchment: downward approach to hydrological model development. J Hydrol 254(1–4):174–198. https://doi.org/10.1016/S0022-1694(01)00496-6

Karaboga D (2005) An idea based on honey bee swarm for numerical optimization. Technology Repeport TR06, Erciyes Univ. Press, Erciyes

Kennedy J (1998) The behavior of particles, Porto, V. W., Saravanan, N., Waagen, D., and Eiben, A. E. (eds.), In: Evolutionary programming VII, Springer, 581-590

Kennedy J Eberhart R (1995) Particle swarm optimization, Proc. Of the International Conference on Neural Networks, Perth, Australia, IEEE, Piscataway, pp. 1942-1948. https://doi.org/10.1007/978-0-387-30164-8_630

Kesgin E, Agaccioglu H, Dogan A (2020) Experimental and numerical investigation of drainage mechanisms at sports fields under simulated rainfall. J Hydrol 580:124251. https://doi.org/10.1016/j.jhydrol.2019.124251

Khaing ZM, Zhang K, Sawano H, Shrestha BB, Sayama T, Nakamura K (2019) Flood hazard mapping and assessment in data-scarce Nyaungdon area. Myanmar. PLoS One 14(11):e0224558. https://doi.org/10.1371/journal.pone.0224558

Khosravi K, Nohani E, Maroufinia E, Pourghasemi HR (2016) A GIS-based flood susceptibility assessment and its mapping in Iran: a comparison between frequency ratio and weights of evidence bivariate statistical models with multi-criteria method. Nat Hazards 83(2):1–41. https://doi.org/10.1007/s11069-016-2357-2

Khosravi K, Pham BT, Chapi K, Shirzadi A, Shahabi H, Revhaug I, Prakash I, Tien Bui D (2018) A comparative assessment of decision trees algorithms for flash flood susceptibility modeling at Haraz watershed, northern Iran. Sci Total Environ 627:744–755. https://doi.org/10.1016/j.scitotenv.2018.01.266

Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Science 220(4598):671–680. https://doi.org/10.1126/science.220.4598.671

Kisi O, Dailr AH, Cimen M, Shiri J (2012) Suspended sediment modeling using genetic programming and soft computing techniques. J Hydrol 450:48–58. https://doi.org/10.1016/j.jhydrol.2012.05.031

Krack M, Gross J (2019) Harmonic balance for nonlinear vibration problems. Springer. https://doi.org/10.1007/978-3-030-14023-6

Legates DR, McCabe GJ (1999) Evaluating the use of “goodness-of-fit” measures in hydrologic and hydroclimatic model validation. Water Recourse Research 35:233–241. https://doi.org/10.1029/1998WR900018

Li Y, Huang GH, Nie SL (2009) Water resources management and planning under uncertainty: an inexact multistage joint-probabilistic programming method. Water Recour Manag 23(12):2515–2538. https://doi.org/10.1007/s11269-008-9394-x

Liu D, Guo S, Wang Z, Liu P, Yu X, Zhao Q, Zou H (2018) Statistics for sample splitting for the calibration and validation of hydrological models. Stoch Env Res Risk A 32(11):3099–3116. https://doi.org/10.1007/s00477-018-1539-8

Liu S, Feng ZK, Niu WJ, Zhang HR, Song ZG (2019) Peak operation problem solving for hydropower reservoirs by elite-guide sine cosine algorithm with Gaussian local search and random mutation. Energies 12(11):101–123. https://doi.org/10.3390/en12112189

Lo Conti F, Hsu KL, Noto LV, Sorooshian S (2014) Evaluation and comparison of satellite precipitation estimates with reference to a local area in the Mediterranean Sea. Atmos Res 138:189–204. https://doi.org/10.1016/j.atmosres.2013.11.011

Madani K (2011) Hydropower licensing and climate change: insights from cooperative game theory. Adv Water Recou 34(2):174–183. https://doi.org/10.1016/j.advwatres.2010.10.003

MATLAB. MATLAB Versuion R2019a (2019a) The MathWorks Inc., Natick, Massachusetts, available at www.mathworks.com/products/matlab

McCulloch W, Walter P (1943) A logical calculus of ideas immanent in nervous activity. Bull Math Biol 5(4):115–133. https://doi.org/10.1007/BF02478259

Melesse AM, Ahmad S, McClain ME, Wang X, Lim YH (2011) Suspended sediment load prediction of river systems: an artificial neural network approach. Agric. Water Manag 1:16–31. https://doi.org/10.1016/j.agwat.2010.12.012

Minsky M, Papert S (1969) Perceptrons: an introduction to computational geometry. MIT Press. ISBN 978-0-262-63022-1

Mirjalili S (2018) Evolutionary population dynamics and grasshopper optimization approaches for feature selection problems. Knowl-Based Syst 145:25–45. https://doi.org/10.1016/j.knosys.2017.12.037

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Mohammadi Golafshani E, Behnood A, Arashpour M (2020) Predicting the compressive strength of normal and high-performance concretes using ANN and ANFIS hybridized with grey wolf optimizer. Constr Build Mater 232:117266. https://doi.org/10.1016/j.conbuildmat.2019.117266

Nagelkerke NJD (1991) A note on a general definition of the coefficient of determination. Biometrika. https://doi.org/10.1093/biomet/78.3.691

Nakhla A, Michel S, Vlach J (1976) A piecewise harmonic balance technique for determination of periodic response of nonlinear systems. IEEE Transactions on Circuits and Systems CAS-23:85–91. https://doi.org/10.1109/TCS.1976.1084181

Nash JE, Sutcliffe JV (1970) River flow forecasting through conceptual models: Part 1. A discussion of principles Journal of Hydrology 10(3):282–290. https://doi.org/10.1016/0022-1694(70)90255-6

Niu WJ, Feng ZK, Zeng M, Feng BF, Min YW, Cheng CT, Zhou JZ (2019) Forecasting reservoir monthly runoff via ensemble empirical mode decomposition and extreme learning machine optimized by an improved gravitational search algorithm. Appl Soft Computing J 82. https://doi.org/10.1016/j.asoc.2019.105589

Nourani V, Mano A (2007) Semi-distributed flood runoff model at the sub continental scale for southwestern Iran. Hydrol Process 21:3173–3180. https://doi.org/10.1002/hyp.6549

Nourani V, Monadjemi P, Singh VP (2007) Liquid analog model for laboratory simulation of rainfall–runoff process. J Hydrol Eng 12(3):246–255. https://doi.org/10.1061/(ASCE)1084-0699(2007)12:3(246)

Pengxin D, Zhang M, Bing J, Jia J, Zhang D (2019) Evaluation of the GSMaP_Gauge products using rain gauge observations and SWAT model in the Upper Hanjiang River Basin. Atmos Res 2191:153–165. https://doi.org/10.1016/j.atmosres.2018.12.032

Pinos J, Timble L (2019) Performance assessment of two-dimensional hydraulic models for generation of flood inundation maps in mountain river basins. Water Sci Eng 12(1):11–18. https://doi.org/10.1016/j.wse.2019.03.001

Podger G (2004) Rainfall Runoff Library (RRL), user guide. CRC for Catchment Hydrology, Australia

Quej VH, Almorox J, Arnaldo JA, Saito L (2017) ANFIS, SVM and ANN soft-computing techniques to estimate daily global solar radiation in a warm sub-humid environment. J Atmos Sol Terr Phys 155:62–70. https://doi.org/10.1016/j.jastp.2017.02.002

Rajaee T, Mirbagheri SA, Zounemat-Kermani M, Nourani V (2009) Daily suspended sediment concentration simulation using ANN and neuro-fuzzy models. Sci Total Environ 407:4916–4927. https://doi.org/10.1016/j.scitotenv.2009.05.016

Ramiro T, Gonzalez del Cerro MSP, Subathra NMK, Verrastro S, SG T (2020) Modelling the daily reference evapotranspiration in semi-arid region of South India: a case study comparing ANFIS and empirical models. Information Processing in Agriculture. https://doi.org/10.1016/j.inpa.2020.02.003

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) A gravitational search algorithm. InformationSciences 179(13):2232–2248. https://doi.org/10.1016/j.ins.2009.03.004

Reynolds RG (1994) “An introduction to cultural algorithms, ” in Proceedings of the 3rd Annual Conference on Evolutionary Programming, World Sci Publish 131–139. https://doi.org/10.1142/2401

Rosenblatt F (1958) The perceptron: a probabilistic model for information storage and organization in the brain. PsycholRev 65(6):386408–386408. https://doi.org/10.1037/h0042519

Rouhani H, Farahi Moghadam M (2014) Application of the genetic algorithm technique for optimization of the hydrologic TANK and SimHyd model’s parameters. Journal of Range and Watershed Management Iranian Journal of Natural Resources 66(4):512–533

Salas JD, Delleur JW, Yevjevich V, Lane WL (1980) Applied modeling of hydrological time series, first ed. Water Resources Publications, Littleton. https://doi.org/10.1002/9781118445112.stat07809

Samra JS, Dhyani BS, Sharma AR (1999) Problems and prospects of natural resource management in Indian Himalayas—a base paper. Hill and Mountain Agro-Ecosystem Directorate, NATP. CSWCRTI, 218 Kaulagarh Road, Dehradun, 145

Sarrouy E, Sinou JJ (2011) Non-linear periodic and quasi-periodic vibrations in mechanical systems-on the use of the harmonic balance methods, in: Advances in vibration analysis research. Intech 11:23–39. https://doi.org/10.5772/15638

Schalkoff RJ (1997) Artificial neural networks. International ed. New York. McGraw-Hill, London https://trove.nla.gov.au/version/18998022

Senthil Kumar AR, Sudheer KP, Jain SK, Agarwal PK (2004) Rainfall–runoff modeling using artificial neural network: comparison of networks types. Hydrol Process 19(6):1277–1291. https://doi.org/10.1002/hyp.5581

Sevat E, Dezetter A (1991) Selection of calibration objective functions in the context of rainfall-runoff modeling in a Sudanese savannah area. Hydrol Sci J 36(4):307–330. https://doi.org/10.1080/02626669109492517

Sharafati A, Khosravi K, Khosravinia P, Ahmed K, Salman SA, Mundher Z, Shamsuddin Y (2019) The potential of novel data mining models for global solar radiation prediction. Int J Environ Sci Technol 16:7147–7164. https://doi.org/10.1007/s13762-019-02344-0

Talebizadeh M, Moridnejad A (2011) Uncertainty analysis for the forecast of lake level fluctuations using ensembles of ANN and ANFIS models. Expert Syst Appl 38:4126–4135. https://doi.org/10.1016/j.eswa.2010.09.075

Tankersley C, Graham W, Hatfield K (1993) Comparison of univariate and transfer function models of groundwater fluctuations. Water Recou Res 29(10):3517–3533. https://doi.org/10.1029/93WR01527

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res-Atmos 106:7183–7192. https://doi.org/10.1029/2000JD900719

Tokar SA, Johnson PA (1999) Rainfall-runoff modeling using artificial neural-networks. J Hydrol Eng 4(3):232–239. https://doi.org/10.1061/(ASCE)1084-0699(1999)4:3(232)

Tukey JW (1969) Analyzing data: Sanctification or detective work? Am Psychol 24(2):83–91. https://doi.org/10.1037/h0027108

Wheater HS, Sorooshian S, Sharma KD (2008) Hydrological modelling in arid and semi-arid areas. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511535734

Xu B, Pang R, Zhou Y (2019) Verification of stochastic seismic analysis method and seismic performance evaluation based on multi-indices for high CFRDs. Eng Geol:105412. https://doi.org/10.1016/j.enggeo.2019.105412

Yang XS, Suash D 2009 “Cuckoo search via Lévy flights.” In World Congress on Nature & Biologically Inspired Computing (NaBIC), 210–214: IEEE. 10.1109/NABIC.2009.5393690

Yapo PO, Gupta VH, Sorooshian S (1996) Automatic calibration of conceptual rainfall-runoff models: sensitivity to calibration data. J Hydrol 181:23–48. https://doi.org/10.1016/0022-1694(95)02918-4

Zare M, Koch M (2018) Groundwater level fluctuations simulation and prediction by ANFIS- and hybrid wavelet-ANFIS/fuzzy C-means (FCM) clustering models: Application to the Miandarband plain. J Hydro Environ Res 18:63–76. https://doi.org/10.1016/j.jher.2017.11.004

Zheng F, Zecchin AC, Simpson AR (2015) Investigating the run-time searching behavior of the differential evolution algorithm applied to water distribution system optimization. EnvModelSoftware 69:292–307. https://doi.org/10.1016/j.envsoft.2014.09.022

Zhu S, Heddam S, Wu S, Dai J, Jia B (2019) Extreme learning machine-based prediction of daily water temperature for rivers. Environ Earth Sci 78(6):87–101. https://doi.org/10.1007/s12665-019-8202-7

Acknowledgements

The authors are very grateful to the Regional Water Company, Lorestan Province, Iran, because they participate in gathering the data required to perform the work.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The author(s) declare that they have no competing interests.

Additional information

Responsible Editor: Broder J. Merkel

Rights and permissions

About this article

Cite this article

Dehghani, R., Poudeh, H.T. Applying hybrid artificial algorithms to the estimation of river flow: a case study of Karkheh catchment area. Arab J Geosci 14, 768 (2021). https://doi.org/10.1007/s12517-021-07079-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12517-021-07079-2