Abstract

This study compares the predictive performance of GIS-based landslide susceptibility mapping (LSM) using four different kernel functions in support vector machines (SVMs). Nine possible causal criteria were considered based on earlier similar studies for an area in the eastern part of the Khuzestan province of southern Iran. Different models and the resulting landslide susceptibility maps were created using information on known landslide events from a landslide inventory dataset. The models were trained using landslide inventory dataset. A two-step accuracy assessment was implemented to validate the results and to compare the capability of each function. The radial basis function was identified as the most efficient kernel function for LSM with the resulting landslide susceptibility map showing the highest predictive accuracy, followed by the polynomial kernel function. According to the obtained results, it concluded that using SVMs can generally be considered to be an effective method for LSM while it demands careful consideration of kernel function. The results of the present research will also assist other researchers to select the best SVM kernel function to use for LSM.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Landslide is one of the most damaging common geologic hazards all over the world. It poses a threat to the safety of human lives as well as the environment, resources and property (Yesilnacar and Topal 2005; Kanungo et al. 2006; He et al. 2012). In order to mitigate the impacts and consequences of landslides, landslide susceptibility mapping (LSM) is supposed to be an appropriate approach to enhance the understanding and to predict future hazards (Feizizadeh and Blaschke 2013a). LSM aims to assess the proneness of the terrain to future mass movements and slope failures. It also helps decision makers and managers to become aware of landslide-susceptible regions in order to diminish the unpleasant consequences of this hazard by appropriate management of slope. Susceptibility values are usually expressed in a cartographic way. LSM is a multi-faceted approach, and landslide as a spatial decision problem takes a variety of decision making-related spatial factors into account. Over the last three decades, regional landslide susceptibility assessment has been considered as one of the most challenging issues in the international landslide literature (Pradhan 2012). As a response to this challenge, many researchers have attempted to produce LSM by using different techniques and methods (Ayalew and Yamagishi 2005; Gorsevski et al. 2006; Yalçın 2008; Gorsevski and Jankowski 2010; Feizizadeh and Blaschke 2011; Pradhan 2012; Shadman Roodposhti et al. 2014; Hong et al. 2015a, b; Tsangaratos et al. 2016; Chen et al. 2016; Hong et al. 2016a, b, c). Since the LSM is generated through a number of related spatial factors, using an integrated approach of multi-criteria decision analysis (MCDA) and the geographical information systems (GIS) leads to making an appropriate approach for assessing more effective factors of landsliding (MCDA, Feizizadeh and Blaschke 2011; Feizizadeh and Blaschke 2012). However, due to the fact that GIS-MCDA deals with a wide range of spatial factors, it is increasingly known that the process of this method itself is a main source of the inherent uncertainties in the results (Feizizadeh and Blaschke 2012; Feizizadeh and Blaschke 2013b; Feizizadeh and Kienberger 2017).

Soft computing approaches are increasingly used for LSM during more recently, and a large number of methodologies such as support vector machines (SVM, Yao et al. 2008; Yilmaz 2010; Micheletti 2011; Ballabio and Sterlacchini 2012), artificial neural networks (ANNs, Lee et al. 2007; Pradhan and Lee 2010; Bui et al. 2012a) and neuro-fuzzy model (adaptive neuro-fuzzy inference strategy (ANFIS), Ercanoglu and Gokceoglu 2004; Pradhan et al. 2009; Bui et al. 2012b) have been proposed for LSM. When studying the literature, one may conclude that each of these approaches can report on success stories, but disadvantages were identified in several comparison studies. For example, ANN and ANFIS methods do not work properly when there are some limitations such as lack of enough knowledge about the study area. As a result of incomplete data, these methods lead to imaginary and also inaccurate results (Yilmaz 2010). Generally, although all of the mentioned approaches have own benefits and drawbacks, a large number of researches argue that the advantages of the SVM method are far more. SVM is a supervised learning method based on statistical learning theory and the structural risk minimization principle (Vapnik 1998; Yao et al. 2008; Pradhan 2012). This method is a fairly new method which transfers the covariates into a higher dimensional feature space within nonlinear transformations (Brenning 2005; Yilmaz 2010). The main advantage of this method is that it can use large input data with fast learning capacity. This method is well suited to nonlinear high-dimensional data modelling problems and provides promising perspectives in the LSM (Bai et al. 2008). Moreover, kernel methods generally are a class of algorithms for pattern analysis in SVM modelling and the selection of the kernel function is a very important and mission-critical step. The classes divide with a decision surface which maximizes the margin among the classes (Pourghasemi et al. 2013).

Although many kernel functions have been previously proposed and used, only some have been found to work well for a wide variety of applications including LSM (Xu et al. 2012). Given the fact that, when used for LSM, any change in SVM kernel function coincides with changes in the resulting susceptibility map, an obvious question that arises is that “with so many different kernel functions to choose from, which is the best for LSM?” The question as such is not new. The key difference between this paper and the mentioned literature earlier is that we will systematically compare within one single framework these functions and their direct consequences on the resulting maps and their respective accuracies in this study. Considering the fact that hazard management and mitigation costs are directly related to the spatial extension of potentially hazardous areas, it seems obvious that more accurate and specific susceptibility maps will critically reduce both economic and social costs in the provision of measures aimed at decreasing the risk of living with landslides. In using of SVM for LSM, it is believed that any change in SVM kernel function leads to changes in resultant landslide susceptibility map. By considering this importance, another difference of this study with other studies which are done in this field is that this research focused on a comparative approach of applying different kernel functions of SVM in order to identify the most effective function for LSM, unlike the previous studies. In the following sections, first, the study area will be introduced and further described; then, four different types of SVM kernel functions including linear, polynomial, radial basis function (RBF) and sigmoid function will be used for LSM purpose. Accordingly, the obtained results for each different previously mentioned kernels will be compared considering the result of accuracy assessment phase.

Study area

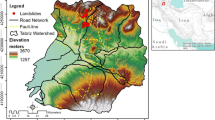

The study area was the Izeh basin, which is located in the eastern part of the Khuzestan province, Iran (Fig. 1). The region is important for the whole of southern Iran in terms of the agricultural activities because of the significant amount of hydropower plants. Landslides are common in Izeh basin, and so far, in 2014, the occurrences of 109 landslide events have been recorded by the Ministry of Natural Resources (MNR) Iran. The information of these landslides has been gathered from several field surveys. The temperature regime in the Izeh basin is directly related to elevation parameters. The annual average precipitation within the study area strongly depends on elevation and varies from 453 to 671 mm. Averages of the annual temperature range from a high of 33.00 to a low of 1.00 °C.

The geology of this area is very complicated, and there are several faults that make this area highly susceptible to landslides and mass movements (see Fig. 1). For the modelling of future landslides and the creation of landslide susceptibility maps, the unstable geology formations need to be considered. In particular, the dominant constituents of sedimentary rocks need to be differentiated, predominantly marl, shale, limestone, gypsum and siltstone within the southern parts of the study area. To summarize, it is anticipated that proximity to faults and the dominant constituents of sedimentary rocks contribute to slope instability and potentially to landslide occurrence.

Material and methods

Landslide influencing factors and data processing

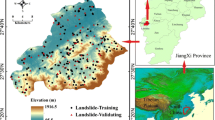

The most important factors in the occurrence of a landslide are slope and aspect (Feizizadeh and Blaschke 2012). Moreover, the slopes between 7° and 10° are the decisive factor for a landslide to occur. Other factors that play their roles in this phenomenon include distance to river, drainage density, distance to fault, precipitation, distance to road, lithology and land use/cover. Note that these factors were selected based on the physical properties of the study area, data availability and reviewing related literatures (Yalçın 2008; Nandi and Shakoor 2009; Ozdemir 2011; Feizizadeh and Blaschke 2011; Feizizadeh et al. 2012; Moradi et al. 2012; Kayastha et al. 2012; Feizizadeh and Blaschke 2013a, b; Feizizadeh et al. 2013a, b; Shadman Roodposhti et al. 2014; Jaafari et al. 2014; Feizizadeh et al. 2014; Faraji Sabokbar et al. 2014). The proposed LSM model starts with these selected nine causal criteria. Relevant dataset was used to prepare maps for each of these factors as input for the LSM. Roads, rivers and drainage area were extracted from a 1:50,000 topography map, while the fault and lithology maps were obtained from Iranian standard 1:100,000-scale geologic maps. In addition, the slope and aspect criteria were derived from 30-m shuttle radar topography mission (SRTM) digital elevation model (DEM). Land use/cover maps were derived from Landsat ETM+ satellite images with a 30-m spatial resolution by the method of maximum, and the accuracy was 95.63%.

Finally, the annual reports of the Iranian Meteorological Organization from a number of 23 stations have been used to generate precipitation map. A landslide inventory dataset (109 known landslide events) and information on historic landslides (MNR, Ministry of Natural Resources, Khuzestan Province 2010), which are 121 landslides in total, have also been used for further training and validation of the model. After the preprocessing and preparation of the spatial datasets, all necessary geometric thematic editing was done on the original datasets. Respectively, all vector layers (with different resolutions) were converted into raster format with a 30-m resolution, and the spatial datasets were processed. Figure 2 depicts the spatial distribution of all criteria for LSM model.

One-class support vector machine

Support vector machine (SVM) is a supervised learning method derived from statistical learning theory and the structural risk minimization principle (Boser et al. 1992; Vapnik 1995; Vapnik 1998). It separates the classes with a decision surface that maximizes the margin between the classes (Abe 2010). The surface is often called the optimal hyperplane, and the data points closest to the hyperplane are called support vectors. The support vectors are the critical elements of the training set. However, typically, SVMs are an example of a linear two-class classifier which seeks to maximize the margin between the two classes (Fig. 3a); it could be used for one-class classification purpose, where one tries to detect one class and reject the others (Fig. 3b) (Gunn 1997; Muñoz-Marí et al. 2010).

Typical support vector machine classifiers. a Two-class SVM (Gunn 1998). b One-class SVM (Muñoz-Marí et al. 2010)

The one-class support vector machine (OC-SVM) was proposed by Schölkopf et al. (2001) as a support vector methodology to estimate a set that encloses most of a given random sample wherex i ∈ R d. Eachx i is first transformed via a map φ : R d → H where H is a high (possibly infinite)-dimensional Hilbert space generated by a positive definite kernelk(x i , y i ). The kernel function corresponds to an inner product in H throughk(x i , y i ) = 〈φ(x i ), φ(y i )〉.The OC-SVM attempts to find a hyperplane in the feature space that separates the data from the origin with maximum margin (the distance from the hyperplane to the origin). In the event that no such hyperplane exists, slack variables ξ i allow for some points to be within the margin, and the free parameter v ∈ [0, 1] controls the cost of such violations. In fact, v can be shown to be an upper bound on the fraction of points within the margin (outliers) (Schölkopf et al. 2001). The hyperplane in feature space induces a generally nonlinear surface in the input space. More precisely, the OC-SVM as presented by Schölkopf et al. (2001) and Tax and Duin (1999) requires the solution of the following optimization problem:

Here, w is a vector perpendicular to the hyperplane in H, and p is the distance to the origin. Since the training data distribution may contain outliers, a set of slack variables ξ i ≥ 0 is introduced to deal with them (which allows for penalized constraint violation), as usual in the SVM framework. The parameter v ∈ [0, 1] controls the trade-off between the number of examples of the training set mapped as positive by the decision function f(x) = sng(w, Φ(x i )) − p and having a small value of ‖w‖to control model complexity. Finally, it must be noted that it is possible to separate landslide and nonlandslide patterns either through one two-class support vector machine (TC-SVM) or two OC-SVMs, which the latter produces more conservative decision regions (Elshinawy et al. 2010).

Kernel functions

The performance of the SVM model depends on the choice of the kernel parameters. Accordingly, the selection of the kernel function is very important in SVM modelling (Xu et al. 2012). However, new kernels are being proposed by researchers, four kinds of them are often used: linear kernel, polynomial kernel, RBF kernel (often called Gaussian kernel) and sigmoid kernel as the last one (Gunn 1997; Hsu et al. 2010; Pradhan 2012; Hong et al. 2015a, b).

Linear

The linear kernel was proposed by Campbell et al. (2006) as a simplest kernel function. A linear kernel function which is a popular method for a linear modelling is defined by the following:

There are some situations where the linear kernel becomes more appropriate. In particular, when the number of features is very large, one may just use the linear kernel (Hsu et al. 2010).

Polynomial

The polynomial kernel is a nonstationary kernel and a popular method for nonlinear modelling (Gunn 1997; Hsu et al. 2010) and can then be written as follows:

where γ is the gamma term in the kernel function for all kernel types except linear, d is the polynomial degree, and r is the bias term in the kernel function.

Radial basis functions

RBFs have received significant attention (Hsu et al. 2010), most commonly with a Gaussian of the form

where γ ≻ 0 is a parameter that controls the width of Gaussian. It plays a similar role as the degree of the polynomial kernel in controlling the flexibility of the resulting classifier (Ben-Hur and Weston 2010).

Sigmoid

The sigmoid kernel is defined as (Gunn 1997; Hsu et al. 2010) follows:

The use of such a kernel is equivalent to a neural network with one hidden layer. This kernel depends on two parameters γand r, which can cause problems during its implementation.

Separability measure

With respect to the fact that identifying landslide-prone locations was the goal of the present study, the separability assessment focused on distinguishing the landslide from the nonlandslide class. Separability was assessed through the Jeffries-Matusita (JM) separability approach that used both training subsets including landslide and nonlandslide locations. The JM distance between a pair of class-specific probability functions is defined as follows (Richards and Jia 2006):

where p(x|w i ) and p(x|w j ) are the conditional probability density functions for the feature vector x, given in data classes of w i = landslide andw j = nonlandslide events, respectively. Under normally distributed classes, this becomes the following:

where

In this notation, m i and m j correspond to class-specific, expected landslide values, and ∑ i and ∑ j are unbiased estimates for the class-specific covariance matrices of landslide and nonlandslide subsets, respectively. In the natural logarithm function, |∑ i |and|∑ j |are the determinants of ∑ i and ∑ j (matrix algebra). JM separability measure takes on a maximum value of 2.0, and values above 1.9 indicate excellent separability (Richards and Jia 2006). For lower separability values, it should be taken into consideration to improve the separability by editing the position of nonlandslide points, which are located in landslide-prone areas.

Model implementation

Implementation of the proposed methodology includes three steps: in step 1, test and train landslide subset are randomly selected from landslide inventory database. Nonlandslide test and train subsets are produced in nonlandslide areas with respect to the widely used Jeffries-Matusita (JM) separability measure. In step 2, two OC-SVMs (i.e. for landslide and nonlandslide data points) were performed with respect to each proposed kernel function. Then, a five-class susceptibility map was produced from each two OC-SVMs of respective kernel functions. Finally, in step 3, a two-step approach of accuracy assessment was applied to make a more robust comparison between resultant susceptibility maps of proposed kernel functions. The overall process of the comparative LSM is schematically presented in Fig. 4.

Preparing test and train landslide and nonlandslide points

Here, the achieved value for the landslide and nonlandslide separability measures was equal to 1.977, which suggests that the two landslide and nonlandslide training subsets may be distinct with high separability. Figure 5 also depicts the visual separation of landslide and nonlandslide subsets for every nine criteria using minimum, maximum and mode diagrams.

Training and classification

The proposed model of LSM is based on the OC-SVM classification technique that could be considered as a quantitative soft computing method within which less subjectivity is guaranteed. In this respect, following the accomplishments of necessary data preprocessing steps, each criterion of the study area was divided into a 30 m × 30-m square grid, which contains 2130,613 pixels, laid out in 1583 columns and 1799 lines. Accordingly, after importing the preprocessed data into the MatLab environment, an evaluation matrix is then constructed to be used in the classification process. Experimental results not only showed that OC-SVM is more efficient compared to TC-SVM algorithms while producing results of similar accuracy, but also it requires less time and storage space to run compared to TC-SVMs (Manevitz and Yousef 2001; Senf et al. 2006). As a result, two OC-SVMs were applied for each selected kernel function to construct the respective susceptibility maps in a further step.

Landslide susceptibility values can be assessed and expressed in different ways. Previous studies of LSM typically use four or five categories of susceptibility potential (Gorsevski et al. 2006; Yalçın 2008; Nandi and Shakoor 2009; Gorsevski and Jankowski 2010; Feizizadeh and Blaschke 2013a, b; Pradhan 2012; Shadman Roodposhti et al. 2014). In the present study, each of the proposed susceptibility maps is divided into five susceptibility classes namely very low, low, moderate, high and very high using 2D scatter plots and nine fuzzy if-then rules (Figs. 6 and 7). Figure 6 illustrates how fuzzy if-then rules have been used for pattern classification problems.

Validation of models and obtained results

Model validation is a fundamental step when developing a susceptibility map and for the determination of its prediction ability in any natural hazard study. The prediction capability of LSM and its resultant output is usually estimated by using independent information such as landslide inventory dataset. In this regard, the test landslide subset has been used and two-step evaluation procedures were conducted for validation of each LSM map. In order to make more robust comparison between resultant susceptibility maps of proposed kernel functions, root-mean-square error (RMSE) and percent bias (PBIAS) measures alongside with relative operating characteristics (ROC) curve were used to validate results as follows:

Step 1: RMSE and PBIAS measures

Step 1 consists of using two separate estimators (i.e. RMSE and PBIAS) in order to assess operational efficiency of the obtained LSMs. The RMSE is a frequently used measure of the differences between values predicted by a model or an estimator and the values actually observed (Hyndman and Koehler 2006), while PBIAS measures the average tendency of the estimated values to be larger or smaller than their observed ones (Yapo et al. 1996). Table 1 shows the estimator assessment results of each SVM kernel function for both landslide and nonlandslide subsets, respectively.

Both selected estimators identified RBF as the best method for LSM (Table 1). In terms of RMSE, however, the difference is very small between the two best kernel functions (i.e. polynomial and RBF); the comparison of estimator assessment results confirms that the RBF method seems superior to the others. Positive values indicate overestimation bias, whereas negative values indicate model underestimation bias. Here, comparison of the four LSM models shows that while all the proposed kernel functions tend to underestimating the bias in landslides and nonlandslide susceptibility values, RBF underprediction is much less severe (Table 1). The lower rate of underprediction of each model yields much greater estimates of accuracy and precision compared to the other models.

Step 2: ROC curve analysis and simple overlay

In the second step of the accuracy assessment workflow, the proposed LSM maps were evaluated by calculating the ROC curve (Fawcett 2006; Nandi and Shakoor 2009; Shadman Roodposhti et al. 2014) and percentage of known landslides in various susceptibility classes. The ROC curve is a useful method of representing the quality of deterministic and probabilistic detection and forecast systems (Swets 1988; Pradhan 2012). In the ROC method, the area under the ROC curve (AUC) values, ranging from 0.5 to 1.0, are used to evaluate the accuracy of the model. If the AUC is close to 1, the result of the test is excellent. On the contrary, the closer the AUC to 0.5, the fairer the result of the test (Pradhan 2012).

Two concise and representative test datasets, including 50 recent and historic landslide points and 50 randomly selected nonlandslide locations (same number to occurred landslide events) of the study area, were used for further implementation of the ROC method. The purpose of selecting these numbers of landslide and nonlandslide points is in order to achieve an appropriate dispersion in the study area. When ROC curves of these four LSMs were considered together, their overall performances are seen to be close to each other. However, as expected, the obtained results confirm that the RBF method (AUC = 0.893) seems to be the most successful model and to be superior to the others. Table 2 shows the estimated AUC and standard error value of each ROC curve.

The LSM results were also verified using the test landslide inventory itself. Accordingly, the 50 landslide locations were overlaid on the proposed LSMs (Table 3). The result shows that no recorded landslide appears in neither low- nor very low-susceptibility zones. This holds true for all four proposed susceptibility maps. Also, except the case of sigmoid LSM within which 12 landslides fall into the moderate-susceptibility zone, the same numbers of landslide events occur for the moderate-susceptibility zones of all other LSMs, namely six. The respective numbers of landslide events in the high and very high susceptibility zones vary for all four resultant maps (Table 3).

In addition to the previously mentioned, it should be mentioned that while the RBF-based susceptibility map shows the most reliable results regarding the number of occurred landslide events in high- and very high-susceptibility zones (i.e. 44 occurred landslide events), the sigmoid kernel function seems to not work reliably to the same extent (i.e. 38 occurred landslide events).

Discussion

The application of the SVM has been successfully demonstrated in this paper for the spatial prediction of landslide susceptibility.

The application of the SVM has been gaining significant attention in hazard mapping. It is believed to be an effective method since it has gained several advantages including robustness to noise, nonlinear decision boundaries, easily implementable probabilistic outcome and an inherent capability to deal with high-dimensional classification problems (Ballabio and Sterlacchini 2012). In addition, the susceptibility map produced by SVM appears to have a lower spatial variability when compared with the ones produced by other LSM techniques, while retaining a superior prediction performance (Ballabio and Sterlacchini 2012). Based on these capabilities, the mean objectives of this research were to apply SVM for LSM and to investigate the differences in terms of the functions used. We started from the assumption that the performance of the SVM model significantly depends on the choice of the kernel parameters. Therefore, in the present study, a comparative LSM study was performed by using OC-SVM with four different types of kernel functions namely, linear, polynomial, RBF and sigmoid.

Regarding the fact that SVM is a kernel-based algorithm, it uses a kernel function to transform data from input space into a high-dimensional feature space in which it searches for a separating hyperplane (Bak 2009). However, the validation results from both accuracy assessment steps (prior and posterior to map classification) showed that RBF kernel followed by polynomial kernel assumes to be more reliable in LSM among other applied kernel functions. In other words, according to both statistical estimator assessment results of each SVM kernel function for landslide and nonlandslide test subsets, RBF kernel demonstrated less prediction error (Table 1), which conclusively demonstrates more accurate prediction with respect to ROC curve analysis and simple overlay results (Tables 2 and 3). Thus, after elaboration of detailed comparison studies, we notice that the RBF kernel performance followed by polynomial kernel function assumes to be the best kernels for LSM while the sigmoid kernel seems to be the least suitable. In addition, if compared with other landslide susceptibility maps previously produced for the study area using subjective (AHP and Fuzzy-AHP) and hybrid (Fuzzy Shannon Entropy) methodologies (Feizizadeh et al. 2013b), resultant landslide susceptibility maps of two superior kernel functions (i.e. RBF and polynomial) appear to be far more specific in their spatial delineation of landslide-prone areas where higher accuracy is guaranteed.

Conclusion

The main goal of this paper was to generate landslide susceptibility map via support vector machine (SVM). Four proposed kernels including linear, polynomial, radial basis function (RBF) and sigmoid function of SVM classifier have been found feasible and able to produce reliable susceptibility maps in terms of both accuracy and performance speed regardless of expert’s opinions. However, detailed results revealed that the RBF-based landslide susceptibility map yields the most prediction accuracy and it is identified as the most efficient kernel functions for LSM. The results of accuracy assessment analysis prior and posterior to map classification also show that the RBF followed by polynomial kernel function is the most accurate kernel function for LSM. While the susceptibility map created with the sigmoid function contains the largest portion of landslide-prone areas (high- and very high-susceptibility classes together) followed by the least prediction accuracy, it possesses the least prediction efficiency in comparison with the other three kernel functions.

Ultimately, we need to state that the obtained landslide prediction maps were not only accomplished for the sake of comparison. The authors will provide all four versions with respective explanations to the responsible authorities in Izeh for risk management. The information provided by these maps shall help citizens, planners and engineers to reduce losses caused by existing and future landslides by means of prevention, mitigation and avoidance. The results are therefore useful for explaining the driving factors of the known existing landslides, for supporting emergency decisions and for supporting the efforts on the mitigation of future landslide hazards in the Izeh basin.

References

Abe S (2010) Two-class support vector machines. In Support vector machines for pattern classification, Advances in Pattern Recognition. Springer, London, pp 21–112

Ayalew L, Yamagishi H (2005) The application of GIS-based logistic regression for landslide susceptibility mapping in the Kakuda–Yahiko Mountains, Central Japan. Geomorphology 65(1/2):15–13

Bai SB, Wang J, Lu GN, Kanevski M, Pozdnoukhov A (2008) GIS-based landslide susceptibility mapping with comparisons of results from machine learning methods versus logistic regression in basin scale, Geophysical Research Abstracts, EGU, vol. 10, A-06367

Bak M (2009) Support vector classifier with linguistic interpretation of the kernel matrix in speaker verification, man-machine interactions, Krzysztof A. Cyran, Stanislaw Kozielski, James F. Peters (eds.), ISSN 1867–5662, Springer, 2009, 399–406

Ballabio C, Sterlacchini S (2012) Support vector machines for landslide susceptibility mapping: the Staffora River basin case study, Italy. Math Geosci 44(1):47–70

Ben-Hur A, Weston J (2010) A user’s guide to support vector machines. Methods Mol Biol 609:223–239

Boser BE, IM Guyon, VN Vapnik (1992) A training algorithm for optimal margin classifiers. In Proceedings of the 5th Annual ACM Work-shop on Computational Learning Theory, pp. 144–152. ACM Press

Brenning A (2005) Spatial prediction models for landslide hazards: review, comparison and evaluation. Natural Hazards Earth System Science 5:853–862

Bui DT, Pradhan B, Lofman O, Revhaug I, Dick OB (2012a) Landslide susceptibility assessment in the Hoa Binh province of Vietnam using artificial neural network. Geomorphology. doi:10.1016/ j.geomorph.2012.04.023

Bui DT, Pradhan B, Lofman O, Revhaug I, Dick OB (2012b) Spatial prediction of landslide hazards in Vietnam: a comparative assessment of the efficacy of evidential belief functions and fuzzy logic models. Catena 96:28–40

Campbell W, D Sturim, D Reynolds, A Solomonoff (2006) SVM based speaker verification using a GMM supervector kernel and NAP variability compensation, in ICASSP, vol. 1, pp 97–100

Chen W, Xie X, Wang J,Pradhan B, Hong H, Bui DT, Ma J (2016) A comparative study of logistic model tree, random forest, and classification and regression tree models for spatial prediction of landslide susceptibility, doi.org/10.1016/j.catena.2016.11.032

Elshinawy MY, AHA Badawy, WW Abdelmageed, MF Chouikha. (2010) Comparing one-class and two-class SVM classifiers for normal mammogram detection, IEEE Applied Imagery Pattern Recognition Workshop. DOI: 10.1109/AIPR.2010.5759708

Ercanoglu M, Gokceoglu C (2004) Use of fuzzy relations to produce landslide susceptibility map of a landslide prone area (West Black Sea Region, Turkey). Eng Geol 75(3–4):229–250

Faraji Sabokbar H, Shadman Roodposhti M, Tazik E (2014) Landslide susceptibility mapping using geographically-weighted principal component analysis. Geomorphology. doi:10.1016/j.geomorph.2014.07.026

Fawcett T (2006) An introduction to ROC analysis. Pattern Recogn Lett 27:861–874

Feizizadeh B, Blaschke T (2011) Landslide risk assessment based on GIS multi-criteria evaluation: a case study in Bostan-Abad County, Iran. J Earth Scie Eng 1(1):66–71

Feizizadeh B, T Blaschke (2012) Uncertainty analysis of GIS-based ordered weighted averaging method for landslide susceptibility mapping in Urmia Lake Basin, Iran International Conference of GIScience 2012, Columbus, Ohio, USA, September, 18–21, 2012

Feizizadeh B, Blaschke T (2013a) GIS-multicriteria decision analysis for landslide susceptibility mapping: comparing three methods for the Urmia lake basin, Iran. Nat Hazards 65:2105–2128

Feizizadeh B, Blaschke T (2013b) Land suitability analysis for Tabriz County, Iran: a multi-criteria evaluation approach using GIS. J Environ Plan Manag 56:1–23

Feizizadeh B, Kienberger S (2017) Spatial explicit sensitivity and uncertainty analysis for multicriteria based vulnerability assessment. J Environ Plan Manag. doi:10.1080/09640568.2016.1269643

Feizizadeh B, Blaschke T, Nazmfar H (2012) GIS-based ordered weighted averaging and Dempster Shafer methods for landslide susceptibility mapping in Urmia lake basin Iran. Int J Digital Earth. doi:10.1080/17538947.2012.749950

Feizizadeh B, Blaschke T, Nazmafar H, Rezaei Mogadam MH (2013a) Landslide susceptibility mapping using GIS-based analytical hierarchical process for the Urmia Lake basin, Iran. Int J Environ Res 7(2):319–3336

Feizizadeh B, Blaschke T, Shadman Roodposhti M (2013b) Integration of GIS based fuzzy set theory and multicriteria evaluation methods for landslide susceptibility mapping. Int J Geoinformatics 9(3):49–57

Feizizadeh B, Shadman Roodposhti M, Jankowski P, Blaschke T (2014) A GIS-based extended fuzzy multi-criteria evaluation for landslide susceptibility mapping. Comput Geosci. doi:10.1016/j.cageo.2014.08.001

Gorsevski PV, Jankowski P (2010) An optimized solution of multi-criteria evaluation analysis of landslide susceptibility using fuzzy sets and Kalman filter. Comput Geosci 36:1005–1020

Gorsevski PV, Jankowski P, Gessler PE (2006) An heuristic approach for mapping landslide hazard by integrating fuzzy logic with analytic hierarchy process. Control Cybern 35:21–141

Gunn SR (1997) Support vector machines for classification and regression. Technical Report, Image Speech and Intelligent Systems Research Group, University of Southampton, USA

He S, P Pan, L Dai, H Wang, J Liu (2012) Application of kernel-based Fisher discriminant analysis to map landslide susceptibility in the Qinggan River delta, Three Gorges, China, Geomorphology, 171–172:30–41

Hong H, Pardahan B, Jebur MN, Bui DT, Akgun A (2015a) Spatial prediction of landslide hazard at the Luxi area (China) using support vector machines. Int J Environ Earth Sci 75:1–14

Hong H, Pradhan B, Xu C, Bui DT (2015b) Spatial prediction of landslide hazard at the Yihuang area (China) using two-class kernel logistic regression, alternating decision tree and support vector machines, Catena, 133, 266-281 Jaafari, A., A

Hong HY, Pourghasemi HR and Pourtaghi ZS (2016a) Landslide susceptibility assessment in Lianhua County (China): a comparison between a random forest data mining technique and bivariate and multivariate statistical models, Geomorphology, DOI: 10.1016/j.geomorph.2016.02.012

Hong H, Pradhan, Bui DT, Xu C, Youssef AM, Chen W (2016b) Comparison of four kernel functions used in support vector machines for landslide susceptibility mapping: a case study at Suichuan area (China), doi.org/10.1080/19505.2016.1250112

Hong H, Chen w, Xu C, Youssef AM, Pradhan B, Bui DT (2016c) Rainfall-induced landslide susceptibility assessment at the Chongren area (China) using frequency ratio, certainty factor, and index of entropy, doi.org/10.1080/1049.2015.1130086

Hsu CW, CC Chang, CJ Lin. (2010) A practical guide to support vector classification, Technical Report, Department of Computer Science and Information Engineering, National Taiwan University, Taipei

Hyndman JR, Koehler AB (2006) Another look at measures of forecast accuracy. Int J Forecast 22(4):679–688

Jaafari A, Najafi HR, Pourghasemi J, Rezaeian AS (2014) GIS-based frequency ratio and index of entropy models for landslide susceptibility assessment in the Caspian forest, northern Iran. Int J Environ Sci Technol. doi:10.1007/s13762-013-0464-0

Kanungo DP, Arora MK, Sarkar S, Gupta RP (2006) A comparative study of conventional, ANN black box, fuzzy and combined neural and fuzzy weighting procedures for landslide susceptibility zonation in Darjeeling Himalayas. Eng Geol 85:347–366

Kayastha P, Dhital M, De Smedt F (2012) Landslide susceptibility mapping using the weight of evidence method in the Tinau watershed, Nepal. Nat Hazards 63(2):479–498

Lee S, Ryu JH, Kim IS (2007) Landslide susceptibility analysis and its verification using likelihood ratio, logistic regression, and artificial neural network models: case study of Youngin, Korea. Landslide 4(4):327–338

Manevitz LM, Yousef M (2001) One-class SVMs for document classification. J Mach Learn Res 2:139–154

Micheletti N (2011) Landslide susceptibility mapping using adaptive support vector machines and feature selection, A Master thesis submitted to University of Lausanne Faculty of Geosciences and Environment for the Degree of Master of Science in Environmental Geosciences, 99p

MNR, Ministry of Natural Resources, Khuzestan Province (2010) Landslide event report, Khuzestan, Iran

Moradi M, Bazyar MH, Mohammadi Z (2012) GIS-based landslide susceptibility mapping by AHP method, a case study, Dena City, Iran. J Basic Appl Sci Res 2(7):6715–6723

Muñoz-Marí J, Bovolo F, Gómez-Chova L, Bruzzone L, Camps-Valls G (2010) Semisupervised one-class support vector machines for classification of remote sensing data. IEEE Trans Geosci Remote Sens 48(8):3188–3197

Nandi A, Shakoor A (2009) A GIS-based landslide susceptibility evaluation using bivariate and multivariate statistical analyses. Eng Geol 110(1–2):11–20

Ozdemir A (2011) Landslide susceptibility mapping using Bayesian approach in the Sultan Mountains (Akşehir, Turkey). Nat Hazards 59(3):1573–1607

Pourghasemi, H. R., Jirandeh, A. G., Pradhan, B., Gokceoglu, C. 2013. Landslide susceptibility mapping using support vector machine and GIS at the Golestan Province, Iran 2, Journal of Earth System Science 122, (2)

Pradhan B (2012) A comparative study on the predictive ability of the decision tree, support vector machine and neuro-fuzzy models in landslide susceptibility mapping using GIS. Comput Geosci 51:350–365

Pradhan B, Lee S (2010) Regional landslide susceptibility analysis using back-propagation neural network model at Cameron Highland, Malaysia. Landslides 7(1):13–30

Pradhan B, Lee S, Buchroithner MF (2009) Use of geospatial data for the development of fuzzy algebraic operators to landslide hazard mapping: a case study in Malaysia. Applied Geomatics 1:3–15

Richards JA, Jia X (2006) Remote sensing digital image analysis. Springer-Verlag, Berlin, p 240

Schölkopf B, Platt JC, Shawe-Taylor J, Smola AJ, Williamson RC (2001) Estimating the support of a high-dimensional distribution. Neural Comput 13:1443–1472

Senf A, Chen X, Zhang A (2006) Comparison of one-class SVM and two-class SVM for fold recognition. In ICONIP 2:140–149

Shadman Roodposhti M, Rahimi S, Jafar Beglou M (2014) PROMETHEE II and fuzzy AHP: an enhanced GIS-based landslide susceptibility mapping. Nat Hazards 73(1):77–95

Swets JA (1988) Measuring the accuracy of diagnostic systems. Science 240:1285–1293

Tax DMJ, Duin RPW (1999) Support vector domain description. Pattern Recogn Lett 20:1191–1199

Tsangaratos P, Ilia L, Hong H, Chen W, Xu C (2016) Applying information theory and GIS-based quantitative methods to produce landslide susceptibility maps in Nancheng County, China, DOI: 10.1007/s10346-016-0769-4

Vapnik V (1995) The nature of statistical learning theory. Springer-Verlag, New York

Vapnik VN (1998) Statistical learning theory. Wiley, New York

Xu C, Dai F, Xu X, Lee YH (2012) GIS-based support vector machine modeling of earthquake-triggered landslide susceptibility in the Jianjiang River watershed, China. Geomorphology 145–146(1):70–80

Yalçın A (2008) GIS-based landslide susceptibility mapping using analytical hierarchy process and bivariate statistics in Ardesen (Turkey): comparisons of results and confirmations. Catena 72(1):1–12

Yao X, Thamb LG, Dai FC (2008) Landslide susceptibility mapping based on support vector machine: a case study on natural slopes of Hong Kong, China. Geomorphology 101(4):572–582

Yapo PO, Gupta HV, Sorooshian S (1996) Automatic calibration of conceptual rainfall-runoff models: sensitivity to calibration data. J Hydrol 181(1–4):23–48

Yesilnacar E, Topal T (2005) Landslide susceptibility mapping: a comparison of logistic regression and neural networks methods in a medium scale study, Hendek region (Turkey). Eng Geol 79(3–4):251–266

Yilmaz I (2010) Comparison of landslide susceptibility mapping methodologies for Koyulhisar, Turkey: conditional probability, logistic regression, artificial neural networks, and support vector machine. Environmental Earth Science 61:821–836

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Feizizadeh, B., Roodposhti, M.S., Blaschke, T. et al. Comparing GIS-based support vector machine kernel functions for landslide susceptibility mapping. Arab J Geosci 10, 122 (2017). https://doi.org/10.1007/s12517-017-2918-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12517-017-2918-z