Abstract

The main goal of this paper is to design and develop a new technique and software tool for automatic lithofacies segmentation from well log data. Lithofacies identification is a crucial problem in reservoir characterization, and our study aims to show that the wavelet transform modulus maxima lines (WTMM) method and detrended fluctuation analysis (DFA) allow geological lithology segmentation from well log data. On the one hand, WTMM proves useful for delimiting each layer, owing to its sensitivity to the presence of more than one texture. On the other hand, DFA is used to improve the estimation of the roughness coefficient of each facies. We have used the two methods jointly to segment the lithofacies of boreholes located in the Algerian Sahara. The results obtained are encouraging, and the principal benefit of the method is economic.

Introduction

One of the main goals of geophysical studies is to apply suitable mathematical and statistical techniques to extract information about subsurface properties. Well logs are widely used for characterizing reservoirs in sedimentary rocks. In fact, they are among the most important tools used by oil companies in hydrocarbon exploration. Several parameters of the rocks can be analyzed and interpreted in terms of lithology, porosity, density, resistivity, salinity, and the quantity and kind of fluids within the pores.

Geophysical well logs often show complex behavior that suggests a fractal nature (Pilkington and Tudoeschuck 1991; Wu et al. 1994). They are geometrical objects exhibiting an irregular structure at every scale. Classifying lithofacies boundaries from borehole data is a complex and nonlinear problem, because several factors, such as pore fluid, effective pressure, fluid saturation, and pore shape, affect the well log signals and thereby limit the applicability of linear mathematical techniques. To classify lithofacies units, it is therefore necessary to search for a suitable nonlinear method that avoids these problems.

The scale invariance of properties has led to the well-known concept of fractals (Mandelbrot 1982). It is commonly observed that well log measurements exhibit scaling properties and are usually described and modeled as fractional Brownian motions (Pilkington and Tudoeschuck 1991; Wu et al. 1994; Kneib 1982; Bean 1982; Holliger 1982; Turcotte 1997; Shiomi et al. 1997; Dolan et al. 1996; Li 2003).

In previous works (Ouadfeul 2008, 2011), we have shown that well log fluctuations in oil exploration display scaling behavior that has been modeled as self-affine fractal processes. They are therefore considered as fractional Brownian motions (fBm), characterized by a fractal \( k^{-\beta} \) power spectrum model, where k is the wavenumber and β is related to the Hurst exponent (Herrmann 1995). These processes are monofractal: their complexity is defined by a single global coefficient, the Hurst parameter H, which is closely related to the Hölder degree of regularity. Thus, characterizing scaling behavior amounts to estimating some power law exponents.

Determining petrophysical properties and classifying lithofacies boundaries from geophysical well log data is quite important for oil exploration. Multivariate statistical methods such as principal component analysis, cluster analysis, and discriminant function analysis have regularly been used to study borehole data. These techniques are, however, semi-automated and require a large amount of data, which are costly and not always readily available.

The wavelet transform modulus maxima lines method is a multifractal analysis technique in which the mathematical measure is replaced by the modulus of the continuous wavelet transform (CWT) and the support of this measure is replaced by the points of maxima of the modulus of the CWT (Arneodo and Bacry 1995).

The detrended fluctuation analysis is a statistical method introduced by Peng et al. (1994) in genetics, where it was used to estimate the Hurst exponent of DNA nucleotide sequences.

We use in this paper the wavelet transform modulus maxima lines (WTMM) combined with the detrended fluctuation analysis (DFA) to establish a technique of lithofacies segmentation from well logs data.

Principle of the DFA estimator

The DFA is a method for quantifying the correlation properties of non-stationary time series, based on the computation of a scaling exponent H by means of a modified root mean square analysis of a random walk (Peng et al. 1994).

To compute H from a time series x(i) [i = 1,…, N], such as an interval tachogram, the time series is first integrated:

$$ y(k) = \sum\limits_{i = 1}^{k} {\left[ {x(i) - M} \right]} $$

where M is the average value of the series x(i) and k ranges between 1 and N.

Next, the integrated series y(k) is divided into boxes of equal length n, and the least-squares line fitting the data in each box, \( y_n(k) \), is calculated. The integrated time series is detrended by subtracting the local trend \( y_n(k) \), and the root mean square fluctuation of the detrended series, F(n), is computed:

$$ F(n) = \sqrt {\frac{1}{N}\sum\limits_{k = 1}^{N} {{{\left[ {y(k) - {y_n}(k)} \right]}^2}} } $$

F(n) is computed for all time scales n.

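Typically, F(n) increases with n, the "box size". If log F(n) increases linearly with log n, then the slope of the line relating F(n) and n on a log–log scale gives the scaling exponent H, i.e., \( F(n) \propto n^{H} \). This exponent is related to the exponent α of a \( 1/f^{\alpha} \) power spectrum by \( \alpha = 2H - 1 \).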

- If H = 0.5: the time series x(i) is uncorrelated (white noise).
- If H = 1.0: the correlation of the time series is the same as that of 1/f noise.
- If H = 1.5: x(i) behaves like Brown noise (random walk).
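The DFA steps described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' C implementation: the function names (`linear_fit`, `dfa_fluctuation`, `dfa_exponent`) and the choice of box sizes are hypothetical, samples beyond the last complete box are simply ignored, and box sizes should be at least 4 so that the detrended residual is not identically zero.

```python
import math
import random


def linear_fit(xs, ys):
    """Least-squares slope and intercept of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def dfa_fluctuation(x, n):
    """Root mean square fluctuation F(n) of the detrended integrated series."""
    mean = sum(x) / len(x)
    # Integrate the mean-removed series: y(k) = sum_{i<=k} (x(i) - M)
    y, total = [], 0.0
    for value in x:
        total += value - mean
        y.append(total)
    n_boxes = len(y) // n  # assumption: samples after the last full box are dropped
    t = list(range(n))
    sq_sum = 0.0
    for b in range(n_boxes):
        seg = y[b * n:(b + 1) * n]
        slope, intercept = linear_fit(t, seg)  # local linear trend y_n(k)
        sq_sum += sum((v - (slope * ti + intercept)) ** 2
                      for ti, v in zip(t, seg))
    return math.sqrt(sq_sum / (n_boxes * n))


def dfa_exponent(x, box_sizes):
    """Slope of log F(n) versus log n, i.e. the scaling exponent H."""
    log_n = [math.log(n) for n in box_sizes]
    log_f = [math.log(dfa_fluctuation(x, n)) for n in box_sizes]
    slope, _ = linear_fit(log_n, log_f)
    return slope


if __name__ == "__main__":
    rng = random.Random(0)
    white = [rng.gauss(0.0, 1.0) for _ in range(2048)]
    print(dfa_exponent(white, [8, 16, 32, 64, 128]))
```

For white noise the estimate should fall near 0.5, and for its cumulative sum (a random walk) near 1.5, in line with the three cases listed above.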

The wavelet transform modulus maxima lines

The WTMM is a multifractal analysis method based on the continuous wavelet transform (Arneodo et al. 1988). It is composed of five steps (Arneodo and Bacry 1995):

- a. Calculation of the continuous wavelet transform.
- b. Calculation of the local maxima of the modulus of the CWT.
- c. Calculation of the function of partition Z(q, a), where a is the dilation and q is a scale factor.
- d. Estimation of the spectrum of exponents τ(q).
- e. Estimation of the spectrum of singularities D(h).

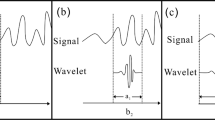

The continuous wavelet transform

Here, we review some of the important properties of wavelets without any attempt at being complete. What makes this transform special is that the set of basis functions, known as wavelets, are chosen to be well localized (with compact support) both in space and in frequency (Arneodo and Bacry 1995; Arneodo et al. 1988). Thus, one has a kind of “dual localization” of the wavelets. This contrasts with the Fourier transform, for which one only has “mono-localization”, meaning that localization in both position and frequency simultaneously is not possible.

The CWT of a function s(z) is given by Grossman and Morlet (1985) as:

Each family test function is derived from a single function ψ(z), referred to as the analyzing wavelet, according to (Torrésani 1995):

where \( a \in \mathbb{R}^{+*} \) is a scale parameter, \( b \in \mathbb{R} \) is the translation, and ψ* is the complex conjugate of ψ. The analyzing function ψ(z) is generally chosen to be well localized in space (or time) and wavenumber. Usually, ψ(z) is only required to be of zero mean, but for the particular purpose of multiscale analysis ψ(z) is also required to be orthogonal to low-order polynomials up to degree n − 1, i.e., to have n vanishing moments:

According to Eq. 5, the p-order moments of the wavelet coefficients at scale a reproduce the scaling properties of the process. Thus, while filtering out the trends, the wavelet transform reveals the local characteristics of a signal and, more precisely, its singularities.
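For reference, one common way of writing the transform, the wavelet family, and the vanishing-moment condition discussed above is sketched here. The symbol C(a, b) for the wavelet coefficients follows the notation used later in the text; the \( 1/a \) normalization is an assumption (with a \( 1/\sqrt{a} \) normalization, scaling exponents of the coefficients shift by 1/2), so this should be read as an illustrative convention rather than the exact form used by the cited authors.

```latex
\begin{aligned}
C(a,b) &= \int_{-\infty}^{+\infty} s(z)\, \psi_{a,b}^{*}(z)\, dz, \\
\psi_{a,b}(z) &= \frac{1}{a}\, \psi\!\left( \frac{z-b}{a} \right), \\
\int_{-\infty}^{+\infty} z^{m}\, \psi(z)\, dz &= 0 \qquad \text{for } 0 \le m \le n-1 .
\end{aligned}
```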

It can be shown that the wavelet transform can reveal the local characteristics of s at a point \( z_0 \). More precisely, we have the following power-law relation (Herrmann 1995; Audit et al. 2002):

$$ \left| {C(a,{z_0})} \right| \approx {a^{h({z_0})}}\quad {\text{when}}\;a \to {0^{+}} $$

where h is the Hölder exponent (or singularity strength). The Hölder exponent can be understood as a global indicator of the local differentiability of a function s.

The scaling parameter (the so-called Hurst exponent) estimated when analyzing a process with the Fourier transform (Herrmann 1995) is a global measure of a self-affine process, while the singularity strength h can be considered as a local version of the Hurst exponent (i.e., it describes “local similarities”). In the case of monofractal signals, which are characterized by the same singularity strength everywhere (h(z) = constant), the Hurst exponent equals h. Depending on the value of h, the input signal can be long-range correlated (h > 0.5), uncorrelated (h = 0.5), or anticorrelated (h < 0.5).

The function of partition of the spectrum of exponents

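The maxima are located using the first and second derivatives of the modulus of the wavelet coefficients |C(a,b)| with respect to b; i.e., |C(a,b)| has a local maximum at the point \( b_i \) if and only if:

$$ {\left. {\frac{{\partial \left| {C(a,b)} \right|}}{{\partial b}}} \right|_{b = {b_i}}} = 0\quad {\text{and}}\quad {\left. {\frac{{{\partial^2}\left| {C(a,b)} \right|}}{{\partial {b^2}}}} \right|_{b = {b_i}}} < 0 $$

The function of partition is the sum of the modulus of the wavelet coefficients on the local maxima, raised to the power q:

$$ Z(q,a) = \sum\limits_{{b_i}} {{{\left| {C(a,{b_i})} \right|}^q}} $$

For low dilations, the function of partition is related to the spectrum of exponents by: \( Z(q,a) \approx {a^{{\tau (q)}}} \)

Consequently, the spectrum of exponents is obtained by a simple linear fit of log(Z(q,a)) versus log(a).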
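The maxima search, the function of partition, and the log–log fit can be illustrated with a short sketch. The names `local_maxima`, `partition_function`, and `tau_of_q`, and the dictionary layout `maxima_by_scale` (scale a mapped to the list of maxima moduli at that scale), are hypothetical choices made for this example; the discrete neighbour comparison stands in for the derivative conditions and is an assumption about how they would be applied to sampled data.

```python
import math


def local_maxima(modulus):
    """Indices i where modulus[i] is strictly greater than both neighbours."""
    return [i for i in range(1, len(modulus) - 1)
            if modulus[i - 1] < modulus[i] > modulus[i + 1]]


def partition_function(maxima_moduli, q):
    """Z(q, a): sum over the maxima at one scale of |C(a, b_i)|**q."""
    # Zero moduli are skipped so that negative q does not divide by zero.
    return sum(m ** q for m in maxima_moduli if m > 0.0)


def tau_of_q(maxima_by_scale, q):
    """Least-squares slope of log Z(q, a) versus log a."""
    scales = sorted(maxima_by_scale)
    log_a = [math.log(a) for a in scales]
    log_z = [math.log(partition_function(maxima_by_scale[a], q))
             for a in scales]
    n = len(scales)
    ma = sum(log_a) / n
    mz = sum(log_z) / n
    num = sum((u - ma) * (v - mz) for u, v in zip(log_a, log_z))
    den = sum((u - ma) ** 2 for u in log_a)
    return num / den


# Toy input: 64/a maxima of modulus a**0.6 at each scale a. This mimics a
# monofractal signal with H = 0.6, so tau(q) should be close to 0.6*q - 1.
toy = {a: [a ** 0.6] * (64 // a) for a in (1, 2, 4, 8, 16)}
```

With the toy input, `tau_of_q(toy, q)` traces a single straight line in q, which is the behaviour expected for a homogeneous texture.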

The singularities spectrum

Estimation of the spectrum of singularities is based on the direct Legendre transform of the spectrum of exponents (Arneodo and Bacry 1995).

In our algorithm, we use the functions defined in (Arneodo and Bacry 1995), based on Boltzmann’s weight. These functions are defined as:

where \( \widehat{T} \) is the Boltzmann weight defined as:

The spectrum of singularities is obtained by plotting D(q) versus h(q) for different values of q (Arneodo and Bacry 1995). Here \( {T_{\psi }}\left[ S \right] \) is the continuous wavelet transform of the signal S(z).
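As an illustration, the Boltzmann-weight (canonical) estimators are commonly written as follows in the WTMM literature. The notation, in particular the sum over the maxima positions \( b_i \) at scale a, is an assumption chosen to match the symbols used in this section and may differ from the exact form in Arneodo and Bacry (1995).

```latex
\begin{aligned}
\widehat{T}(q,a,b_i) &= \frac{\left| T_{\psi}[S](a,b_i) \right|^{q}}
                             {\sum_{j} \left| T_{\psi}[S](a,b_j) \right|^{q}}, \\
h(q) &= \lim_{a \to 0^{+}} \frac{1}{\ln a} \sum_{i} \widehat{T}(q,a,b_i)\,
        \ln \left| T_{\psi}[S](a,b_i) \right|, \\
D(q) &= \lim_{a \to 0^{+}} \frac{1}{\ln a} \sum_{i} \widehat{T}(q,a,b_i)\,
        \ln \widehat{T}(q,a,b_i).
\end{aligned}
```

As a consistency check, for a monofractal signal with roughly \( 1/a \) maxima of equal modulus \( a^{H} \) at each scale, these expressions give h(q) = H and D(q) = 1, which agrees with the Legendre relation D = qh − τ(q) when τ(q) = qH − 1.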

Optimization of the processing parameters

Theoretically, the spectrum of exponents of an fBm signal is a segment of a straight line. It has the following equation (Arneodo and Bacry 1995):

$$ \tau (q) = qH - 1 $$

where H is the Hurst exponent. This stage consists of optimizing the WTMM processing parameters so that this condition is satisfied. The parameters to be optimized are:

- The maximum value of the scale factor q max, where the calculation of the function of partition is carried out on the interval [−q max, +q max].
- The parameters of the analyzing wavelet, which is the complex Morlet wavelet. It has the expression (10):

$$ \Psi (Z) = \exp \left( { - {Z^2}/2} \right) \times \exp \left( {i \times \Omega \times Z} \right) \times \left( {1 - \exp \left( { - {\Omega^2}/4} \right) \times \exp \left( { - {Z^2}/2} \right)} \right) $$(10)

Several experiments on fBm realizations show that the optimal value of Ω for estimating the Hurst exponent is 4.8.
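Expression (10) can be evaluated directly; the sketch below is a straightforward transcription into Python using the standard `cmath` and `math` modules. The function name `morlet` and the default argument `omega=4.8` are illustrative choices, the latter taken from the optimal value reported above.

```python
import cmath
import math


def morlet(z, omega=4.8):
    """Complex Morlet-type analyzing wavelet, transcribed from expression (10)."""
    gauss = math.exp(-z * z / 2.0)                      # exp(-Z^2 / 2)
    carrier = cmath.exp(1j * omega * z)                 # exp(i * Omega * Z)
    correction = 1.0 - math.exp(-omega * omega / 4.0) * gauss
    return gauss * carrier * correction


# Sample the wavelet on a symmetric grid, e.g. for plotting or for a
# discrete convolution with a well log.
grid = [k * 0.1 for k in range(-50, 51)]
samples = [morlet(z) for z in grid]
```

Because the expression depends on z only through z² and exp(iΩz), the wavelet is Hermitian: its value at −z is the complex conjugate of its value at z.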

Processing of an fBm signal with 1,024 samples

We calculate the spectrum of exponents by WTMM of an fBm signal with 1,024 samples and a Hurst coefficient H = 0.60 for the following values of q max: 2.0, 1.5, 1.0, and 0.50. Table 1 summarizes all obtained results.

The spectrum of exponents calculated for q max = 0.50 shows that this value is optimal. In fact, the spectrum of exponents is a segment of a straight line, which is an indicator of texture homogeneity. The estimation of the Hurst exponent is also better for this value of q max.

Remark

Many numerical experiments on fBm realizations of 512 and 256 samples show that q max = 0.50 is the optimal value.

Short time series analysis

Our objective is to detect very fine textures; for that, we have to concentrate our study on short time series. We have analyzed sets of signals with 128, 64, and 32 samples. First, we built several fBm realizations with 128 samples and various roughness coefficients, varied from 0 to 1. A detailed study showed that the optimal values of q max are related to the Hurst exponents. The results obtained are summarized in Table 2.

To improve the estimation, we also calculated the Hurst exponent by DFA. The results showed that this estimator gives better results than the WTMM.

The same work was carried out for signals with 64 and 32 samples; we obtained the following results:

- WTMM analysis showed that the two types of signals have the same optimal parameters as those obtained for signals of 128 samples.
- DFA estimates the Hurst exponent better than the WTMM.

Analysis of physical responses of several textures

The theory developed by Arneodo and Bacry (1995) shows the following. Let S(t) be a signal composed of a set of fBm signals with Hurst exponents H 1, H 2, H 3, …, H n. The spectrum of exponents τ(q) calculated by the WTMM formalism depends only on the maximal and minimal Hurst coefficients. It consists of two segments of straight lines given by Eq. 11:

$$ \tau (q) = \left\{ {\begin{array}{ll} {q\,{H_{\min }} - 1} & {{\text{if}}\;q > 0} \\ {q\,{H_{\max }} - 1} & {{\text{if}}\;q < 0} \end{array} } \right. $$(11)

where H min and H max are the minimum and the maximum of the set of Hurst exponents {H 1, H 2, H 3, …, H n}.

Application on synthetic data

In order to check our source code, developed in the C language, we generated a model made up of four fBm realizations with the following Hurst exponents: 0.40, 0.60, 0.70, and 0.80; each signal has 64 samples. The realization of this model is presented in Fig. 1. We applied a WTMM analysis to this signal; the modulus of the wavelet coefficients and the spectrum of exponents are represented in Fig. 2a and b. One can see that the WTMM method is sensitive only to the two textures characterized by the maximum and minimum roughness coefficients.

WTMM analysis of the signal of Fig. 5. a Modulus of the wavelet coefficients. b Spectrum of exponents

Automatic segmentation algorithm

Our segmentation algorithm is based on the sensitivity of the WTMM to the presence of more than one homogeneous texture; this phenomenon is expressed by two segments of straight lines in the spectrum of exponents. The estimation of the roughness coefficient of each texture is then improved by DFA. The input of the segmentation program is composed of two variables:

- (a) The decision threshold for texture homogeneity, which is compared with the difference between the slopes of the two segments of straight lines calculated for q < 0 and q > 0. This variable is denoted by ΔH in the flow chart, and Δz is the sampling interval.
- (b) The minimal size at which a texture is considered homogeneous; this length is denoted by W.

The flow chart of the algorithm of segmentation is presented in Fig. 3.
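The homogeneity decision at the core of the algorithm can be sketched as follows. This is only one plausible reading of the criterion described above, not a transcription of the flow chart: the names `slope` and `homogeneity_test` are hypothetical, the point q = 0 is assigned to both branches, and each branch is assumed to contain at least two distinct values of q.

```python
def slope(points):
    """Least-squares slope through a list of (q, tau) pairs."""
    n = len(points)
    mq = sum(q for q, _ in points) / n
    mt = sum(t for _, t in points) / n
    num = sum((q - mq) * (t - mt) for q, t in points)
    den = sum((q - mq) ** 2 for q, _ in points)
    return num / den


def homogeneity_test(qs, taus, delta_h):
    """Compare the slopes of tau(q) on the q <= 0 and q >= 0 branches.

    Returns (is_homogeneous, slope_pos, slope_neg). A window is declared
    homogeneous when the two slopes differ by no more than delta_h.
    """
    neg = [(q, t) for q, t in zip(qs, taus) if q <= 0.0]
    pos = [(q, t) for q, t in zip(qs, taus) if q >= 0.0]
    slope_neg = slope(neg)   # estimates H_max when several textures are mixed
    slope_pos = slope(pos)   # estimates H_min when several textures are mixed
    return abs(slope_neg - slope_pos) <= delta_h, slope_pos, slope_neg


# Example: a window mixing textures with H = 0.4 and H = 0.8.
qs = [-0.5, -0.25, 0.0, 0.25, 0.5]
mixed = [q * 0.8 - 1.0 if q < 0 else q * 0.4 - 1.0 for q in qs]
print(homogeneity_test(qs, mixed, delta_h=0.1))
```

In a segmentation loop, a window that fails this test would be subdivided further, down to the minimal size W, whereas a window that passes would be kept as a single layer whose roughness coefficient is then estimated by DFA.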

Application on synthetic data

We applied this algorithm to a set of fBm realizations with different Hurst exponents in order to model geological diversity. The modeled well log consists of two fBm signals, each with 64 samples. Their Hurst exponents are equal to 0.4 and 0.8.

Figure 4 represents the synthetic model with the obtained segmentation in the depth versus Hurst exponent plane. The thickness of each column represents the thickness of the layer that has been delimited, and its height represents the value of the Hurst exponent estimated either by WTMM (black) or by DFA (red). The signal in green is the normalized fBm process. All details of the segmentation are summarized in Table 3.

One can see that the proposed segmentation algorithm detects the limits of each layer with excellent precision. For the estimation of the roughness coefficient, the DFA estimator gives better results than the WTMM.

Application on real data

The proposed method has been applied to boreholes A and B located in the Berkine basin, a vast Paleozoic formation in the southeast of Algeria (see Fig. 5). It represents a very important hydrocarbon field.

Geological context of the Berkine basin

The Berkine basin is a vast circular Palaeozoic depression, where the basement is situated at more than 7,000 m in depth. Hercynian erosion only slightly affected this depression, because only the Carboniferous and the Devonian are affected at their borders. The Mesozoic overburden varies from 2,000 m in the southeast to 3,200 m in the northeast. This depression is an intracratonic basin which has preserved a sedimentary fill of more than 6,000 m. It is characterized by a complete section of Palaeozoic formations spanning from the Cambrian to the Upper Carboniferous. The very large volume of sedimentary material buried in this basin during the Mesozoic to Cenozoic presents an opportunity for hydrocarbon accumulations. The Triassic province is the geological target of this study. It is mainly composed of clay and sandstone deposits.

Its thickness can reach up to 230 m. The sandstone deposits constitute very important hydrocarbon reservoirs.

The studied area contains many wells. However, this paper focuses on the A and B boreholes. The main reservoir, the Lower Triassic clay sandstone labeled TAGI, is represented by fluvial and eolian deposits. The TAGI reservoir is characterized by three main levels: upper, middle, and lower. Together, these levels are subdivided into a total of nine subunits according to the SONATRACH nomenclature (Zeroug et al. 2007). The lower TAGI is often very thin. It is predominantly marked by clay facies, sometimes by sandstones, and in places by clay and sandstone intercalations, with poor petrophysical characteristics (Fig. 6).

Well logs data processing

We applied this segmentation technique to the A and B boreholes located in the Berkine basin; we processed the sonic P-wave velocity well log data of the two boreholes. Figure 7 shows these logs; the sampling interval is 0.125 m. The segmentation given in the stratigraphic column is used as a priori information; consequently, we have segmented each interval of the stratigraphic column separately. The various intervals of this column are detailed in Table 4. The lithofacies models obtained for the three intervals are shown schematically in Fig. 8.

Conclusion

We have proposed an automatic segmentation algorithm based on the sensitivity of the WTMM to the presence of several textures; the spectrum of exponents is an indicator of texture homogeneity. We constructed the lithofacies of boreholes A and B located in the Algerian Sahara. The lithofacies obtained were compared with the well log fluctuations, and the results exhibit a strong correlation between the two models.

The aim of this study is to achieve a more consistent lithologic interpretation of logs by optimizing the use of multifractal analysis revisited by the continuous wavelet transform. A technique of lithofacies segmentation based on the wavelet transform modulus maxima lines (WTMM) combined with the detrended fluctuation analysis (DFA) has been developed and successfully applied to the well log data of the B borehole, located in the Berkine basin, in order to classify lithofacies. It is important to point out that the comparison of the data sets with the classification derived from the gamma ray log is legitimate because the studied interval is a limited part of the TAGI. Our results suggest an enhanced facies segmentation, which leads to a more accurate interpretation process for updating the reservoir architecture.

By implementing our method, we have demonstrated that it is possible to provide an accurate geological interpretation within a short time in order to take immediate drilling and completion decisions and, in the longer term, to update the reservoir model. Because of its computational efficiency, the present method could be further exploited for analyzing large numbers of borehole data sets in other areas of interest.

References

Arneodo A, Bacry E (1995) Ondelettes, multifractales et turbulences: de l’ADN aux croissances cristallines. Diderot Editeur Arts et Sciences, Paris

Arneodo A, Grasseau G, Holschneider M (1988) Wavelet transform of multifractals. Phys Rev Lett 61:2281–2284

Audit B, Bacry E, Muzy J-F, Arneodo A (2002) Wavelet-based estimators of scaling behavior. IEEE Trans Inf Theory 48:2938–2954

Bean CJ (1982) On the cause of 1/f-power spectral scaling in borehole sonic logs. Geophys Res Lett 23:3119–3122

Dolan SS, Bean CJ, Riollet B (1996) The broad-band fractal nature of heterogeneity in the upper crust from petrophysical logs. Geophys J Int 132:489–507

Grossman A, Morlet J-F (1985) Decomposition of functions into wavelets of constant shape, and related transforms. In: Streit L (ed) Mathematics and physics, lectures on recent results. World Scientific, Singapore

Herrmann FJ (1995) A scaling medium representation, a discussion on well-logs, fractals and waves. PhD thesis, Delft University of Technology, Delft, p 315

Holliger K (1982) Upper crustal seismic velocity heterogeneity as derived from a variety of P-wave sonic log. Geophys J Int 125:813–829

Kneib G (1982) The statistical nature of the upper continental cristalling crust derived from in situ seismic measurements. Geophys J Int 122:594–616

Li C-F (2003) Rescaled-range and power spectrum analysis on well-logging data. Geophys J Int 153:201–212

Mandelbrot BB (1982) The fractal geometry of nature. WH Freeman, San Francisco, USA

Ouadfeul S (2008) Reservoir characterization using the continuous wavelet transform (CWT) combined with the self-organizing map (SOM), ECMOR

Ouadfeul S (2011) Analyse Multifractal des signaux géophysiques. Application sur les signaux de diagraphies. Editions Universitaires Européennes, ISBN 978-613-1-58257-8.

Peng C-K, Buldyrev SV, Havlin S, Simons M, Stanley H, Goldberger AL (1994) Mosaic organization of DNA nucleotides. Phys Rev E 49:1685–1689

Pilkington M, Tudoeschuck JP (1991) Naturally smooth inversions with a priori information from well logs. Geophysics 56:1811–1818

Shiomi K, Sato H, Ohtake M (1997) Broad-band power-law spectra of well-log data in Japan. Geophys J Int 130:57–64

Torrésani B (1995) Analyse continue par ondelettes, Inter Editions/CNRS Edition.

Turcotte DL (1997) Fractal and chaos in geology and geophysics. Cambridge University Press, Cambridge

Wu RS, Zhengyu X, Li XP (1994) Heterogeneity spectrum and scale-anisotropy in the upper crust revealed by the German Continental Deep-Drilling (KTB) holes. Geophys Res Lett 21:911–914

Zeroug S, Bounoua N, Lounissi R (2007) Algeria well evaluation conference. http://www.slb.com/content/services/resources/premium_content.asp?mode=slb&

Acknowledgment

The authors would like to thank Profs. Alain Arneodo and Patrice Abry for their assistance. Our thanks also go to the reviewers for their suggestions and recommendations, which improved the quality of the paper.

Cite this article

Ouadfeul, SA., Aliouane, L. Automatic lithofacies segmentation using the wavelet transform modulus maxima lines combined with the detrended fluctuation analysis. Arab J Geosci 6, 625–634 (2013). https://doi.org/10.1007/s12517-011-0383-7
