Abstract

Social robots are being developed to support the care given to older adults (OA), people with dementia (PWD) and OA with mild cognitive impairment (MCI) by facilitating their independence and well-being. The successful deployment of robots should be guided by knowledge of the factors which affect acceptability. This paper critically reviews empirical studies which have explored how acceptability issues impact OA, PWD and OA with MCI. The aim is to identify the factors governing acceptability, to ascertain what is likely to improve acceptability and to make recommendations for future research. A search of the literature published between 2005 and 2015 revealed that a relatively small body of relevant work has focused on the acceptability of robots to PWD or OA with MCI (n \(=\) 21) and to OA (n \(=\) 23). The findings are presented using constructs from the Almere robot acceptance model. They reveal that acceptance of robots is affected by multiple interacting factors pertaining to the individual, significant others and the wider society. Acceptability can be improved if robots use humanlike communication, are personalised in response to individual users’ needs, and address issues of trust and of user control over the robot, which relate to the robot’s degree of adaptivity. However, most studies are of short duration and have small sample sizes, and some do not involve actual robot usage or are conducted in laboratories rather than in real-world contexts. Larger randomised controlled studies, conducted in the contexts where robots will be deployed, are needed to investigate how acceptance factors are affected when humans use robots for longer periods of time and become habituated to them.


1 Introduction

Dementia, which mainly affects people over the age of 65, is expected to affect 66 million people by 2030 and 115 million by 2050 [1]. This progressive degenerative syndrome can cause memory loss, mood and personality changes, communication problems and difficulty performing routine tasks [2]. Mild Cognitive Impairment (MCI) is estimated to affect between 5 and 20% of people over 65; it is a condition in which people have minor problems with memory or thinking. People with MCI do not have a diagnosis of dementia but are at increased risk of developing it [3]. Social robots are being developed to support the care given by human caregivers to older adults (OA), people with dementia (PWD) and OA with MCI [4, 5]. These robots aim to reduce social isolation, improve quality of life and support people in their social interactions [5,6,7,8,9].

Social robots are defined as being useful and as possessing social intelligence and skills which enable them to interact with people in a socially acceptable manner [10]. This means they need to be able to communicate with the user and to be perceived by the user as a social entity [11]. This definition includes companion-type robots, whose primary purpose is to enhance the mental health and psychological well-being of their users, and service-type robots, which support people in undertaking daily living functions. Acceptability is defined as the ‘robot being willingly incorporated into the older person’s life’ [12], which implies long-term usage.

Acceptability of these robots to PWD, OA with MCI and OA is an important issue which depends on multiple variables [13, 14]. In this rapidly expanding field, future research and the design, development and deployment of robots need to be guided by knowledge of the factors which affect acceptability. This paper critically reviews empirical studies which have explored how acceptability issues impact these groups of people. It aims to: (1) determine how this issue has been examined to date; (2) identify the importance of particular factors; (3) ascertain what is likely to improve acceptability; and (4) make recommendations for future research.

2 Literature Search Methodology

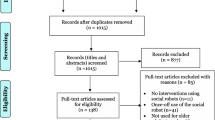

Literature published between January 2005 and May 2015 was searched systematically by a librarian in the following databases: Cochrane Library, PubMed, Scopus, CINAHL, EMBASE, Web of Science Core Collection, PsycINFO and Compendex (EI Village 2), using the terms: accept*, dementia*, Alzheimer*, robot*, “cognitive deficiency”, elderly, technology accept*, user accept*, attitude, social robots, assistive technology, social commitment, social, therapeutic, relationship building, companionship, caring, mental health, entertainment, interactive autonomous, interactive engaging, mental commitment. The titles of 198 articles were read and 141 were discounted because they were not in English, lacked relevance or were duplicates. Abstracts from the remaining 55 papers were then examined and 11 were excluded because they were not empirical studies or did not focus on PWD, OA with MCI or OA. In total, therefore, 44 studies were included in this review. OA were defined as people over 65 years of age who do not have a diagnosis of dementia or cognitive impairment, and PWD as participants who have a diagnosis of dementia.

3 Literature Review

This review uses the Almere theoretical model of technology acceptance [15] as a framework to present its findings. Constructs from this model, which was developed to test acceptance of assistive social agents by elderly users, are defined in Table 1.

The review begins by introducing literature which explains how psychological factors affect acceptance by impacting users’ anxiety levels and their attitude towards robots. These factors predispose a user to respond to a robot in a particular way, influencing the degree of acceptance likely at an initial robot–user encounter.

3.1 Attitudes and Anxieties Towards Technology

Before their first direct experience of robots, users form a mental model of them which conditions their responses to a robot. Mental models are influenced by past personal experience and by second-hand sources of information external to the individual, such as science fiction and the media [11, 17,18,19]. For example, zoomorphic robots such as Paro, which resembles a baby harp seal, may stimulate users and connect with their prior experiences by evoking the happy, caring emotions previously generated when interacting with pets [20].

An individual’s attitude towards a robot, shaped by their prior experiences, is also affected by their expectations about what it can and cannot do. This is linked to anthropomorphism: the human tendency to regard robotic and non-robotic objects as living entities with humanlike capacities of mind. How this occurs is explored further below.

Attitudes to particular robots, and the degree of anxiety or emotional reaction they evoke, are influenced by the degree to which a human perceives a robot to have an ability to feel (mind experience) and an ability to do things (mind agency). The latter includes perceptions about its capacity for self-control, memory and morality [21]. Takayama [22] suggests that a robot which is perceived to have a high level of mind agency appears to have its own needs, desires and goals, i.e. it is perceived to possess human attributes. By contrast, a robot perceived to have a middle level of agency is seen as having no motivations of its own and is regarded as a tool. Takayama [22] distinguishes between in-the-moment perceptions of agency and more reflective perceptions which result from considered thinking about a situation. Robots can be perceived as highly agentic entities in the moment, as people respond to them instinctively. This tendency may help humans to form emotional bonds with a robot and may elicit social responses from them. It has been proposed that the tendency to anthropomorphise may increase if a person is lonely or feels gratitude towards a device which helps them [17].

Stafford et al. [21] investigated whether perceptions about mind can predict robot usage and how they affect attitudes towards robots. They studied the attitudes of self-selected OA participants (n \(=\) 25), living independently in a residential unit, towards Healthbot, a robot which uses face recognition and touch-screen interaction and can perform vital signs measurements. It also provided medication reminders, entertainment and telephone calling, and could assess brain fitness. After baseline measurements were obtained, fourteen participants did not interact with the robot, four used it in their apartments, four used it in the residents’ foyer, and three used it in both places. Participants who attributed more agency to the robot were more wary of it and used it less, but their attitudes improved when they became aware of the robot’s limited ability to think and remember.

It is possible that acceptability will be improved if robots are perceived to have a level of agency appropriate to their purpose and the context in which they are employed. Indeed, it has been speculated that robots perceived as having low agency but high experience (feelings) might make more acceptable companion robots [11]. Paro is a highly successful robot which conforms to this specification, appearing to have a lot of feelings but little agency.

The evidence regarding how gender, education, age and prior computer experience (CE) affect anxiety and attitude towards robots presents a complex picture. Arras and Cerqui [24] found that 34% of men had a more positive image of robotics, compared with 9% of women, and that women were more sceptical about every aspect of robot technology. In addition, 39% of OA had a more positive image of robots, compared with 22% of those under 18 years. OA believed robots could contribute to their personal happiness and quality of life, although they rejected the idea of robots replacing human social contact [24].

Heerink [16] explored the influence of gender, education, age and computer experience on acceptance by showing OA living semi-independently in residential care (n \(=\) 66; 43 female, 23 male; aged 65–92) a film of a RoboCare robot being used by an older adult. The robot is described as a mobile cylinder with a screen displaying a female face, which can act autonomously and connect to smart-home technology. Data collected using questionnaires suggested that participants with more education were less open to perceiving the robot as a social entity. In addition, people with more CE perceived it as easier to use (PEOU). Gender differences coincided with the correlation between CE and PEOU, suggesting that males had more CE and that this increased their PEOU. The study also found that anxiety levels towards the robot were influential and correlated with age, CE and education level respectively (0.331, \(p<0.005\); \(-0.356\), \(p<0.005\); \(-0.229\), \(p<0.25\)).

The relationship between age and anxiety towards robots was also investigated by Nomura et al. [25], who conducted an online survey of respondents randomly selected by age and gender through a Japanese survey company (n \(=\) 100; aged 20–70). They found that people in their twenties, who had experienced humanoid robots directly or in the media, reported higher anxiety levels towards robots than those aged 50–60. However, OA mistrusted technology significantly more than younger adults did; they also found technology more difficult to use and had less knowledge of its capabilities. Women were more sceptical about using robots than men. Interestingly, the age groups used different strategies when learning how to use unfamiliar technologies: young people used trial and error, adults read instructions and OA preferred to ask for help. This research also found that more OA than younger adults preferred robots not to be freely mobile within the home (90% vs 28%), and only 8% of OA, compared with 54% of younger adults, reported that they would feel completely safe and comfortable having a robot perform tasks in their house. Scopelliti et al. [19] support the inference that OA may respond to technologies differently from younger people. Their pilot qualitative study, which involved three generations in six families (n \(=\) 23), found that OA evaluated robotic technology positively. However, OA were concerned about the harmonious integration of robots into the home environment, whereas participants in other age groups expressed different priorities [19].

3.2 Intention to Use (ITU)

The evidence suggests that the factors affecting acceptance can change when a person uses a robot and becomes more familiar with it, rather than just hearing about it from a third party [26,27,28]. For this and other reasons described below, ITU as a measure of robot acceptability can provide less reliable and valid information than studies which examine actual robot usage over a prolonged period of time. For example, Stafford et al. [23] used a robot attitude scale to record attitudes towards the robot Cafero before and after staff (n \(=\) 32) and OA residents (n \(=\) 21) in a retirement village had 30 min to interact with it. Following the interaction, both participant groups had less negative attitudes towards the robot. A similar improvement in attitude was found by Gross and Schroeter et al. [23] in their observational qualitative field trial conducted in a ‘smart’ house. They found that some OA with MCI and their carer partners (n \(=\) 4 dyads) were initially negative towards the companionable robot and perceived it as frightening [27]. However, they started to appreciate its benefits and found it more acceptable after spending 1 day using it. Heerink [15] evaluated whether ITU predicted actual robot usage with OA residents (n \(=\) 30), who were introduced to iCat, played with it for 3 min and then had their ITU measured by questionnaire. Afterwards, iCat was left in a residents’ lounge for participants to use, if they wished, when they were alone, and this subsequent usage was video recorded. ITU sometimes, but not always, predicted actual usage.

In a subsequent experiment involving OA (n \(=\) 30), usage of Steffie, a virtual screen character which assists participants with online computer activities, was recorded. The program was installed on participants’ home computers. Heerink [15] found that ITU is influenced by other acceptance factors and can be predicted by users’ attitude and by how useful they perceive the agent to be.

Stafford [11] suggests that ITU can be problematic when researching robot acceptability with OA and PWD. This is because questions about intending to use robots in the future do not always make sense to participants when they know a robot is not going to be available to them after completion of a study.

In contrast to studies which have used ITU measures, those examining the impact of direct robot experience on acceptance over longer periods of time in the user’s usual living situation [6, 7, 9, 26] have the potential to provide more useful information on acceptability. Pfadenhauer and Dukat [28] provide insight into the importance of exploring acceptability factors in context. They ethnographically examined the deployment of Paro in a German residential care setting for PWD over 1 year, using participant observation and videographic documentation of approximately three group activity sessions per month. They found that Paro was used in a variety of ways: to facilitate communication, as a conversationalist, and as an observation instrument. They concluded that the robot’s appearance and its deployment were interdependent, since it is through deployment that people establish how (and whether) a technology will be used and what it means to them. Such decisions are influenced by users’ perceptions of their unmet needs and of how well they think a particular robot will meet these needs.

3.3 Perceived Usefulness (PU)

Social robots need to be perceived by users as useful and relevant to their current unmet needs [15, 21, 29,30,31]. De Graaf [26] explored acceptability with a rabbit-like health promotion robot, Karotz, placed in the homes of OA (n \(=\) 6) during three 10-day periods over 5 months. The robot was programmed to greet participants, provide a weather report, advise on activity levels, discuss daily activities and remind participants to weigh themselves. Interactions were videoed and semi-structured interviews were conducted. The researchers found that, in each usage phase, participants talked most about whether or not the robot was useful to them.

This suggests that identifying needs accurately may improve robot acceptability. However, ascertaining the perceived needs of OA and PWD can be difficult and is affected by many factors. For instance, identifying unmet needs is complicated if OA have reduced awareness of their own needs due to habituation, or if they are unwilling to acknowledge disability for fear of stigmatisation or loss of independence [11]. Furthermore, PWD may not have the cognitive ability to identify or express their needs [31], or they might believe that social robots are not useful if their needs are currently being fulfilled by caregivers [32]. Indeed, several studies suggest that PWD and their carers can disagree about the nature of their unmet needs and the potential solutions provided by robots [12, 30, 33]. Such disagreement affects individuals’ acceptance of robots and is discussed further below with reference to social influences.

Given the difficulty of accurately assessing the unmet needs of PWD and OA, Stafford [29] recommends that robot designers consider this issue early and repeatedly during the design stage, using data triangulation and ‘open’ methodologies with participants who match the target end users.

3.4 Perceived Ease of Use (PEOU)

This section examines research which has addressed issues of perceived practical utility, which includes usability and PEOU. It focuses on what can enhance usability and therefore potentially increase acceptability.

The impact of usability issues in social robots for PWD is illustrated by Kerssens et al.’s [34] study, which tested the acceptability of Companion, a touch-screen technology which delivers psychosocial interventions to assist in the management of neuropsychiatric symptoms of dementia and seeks to reduce carers’ distress. PWD and carers (n \(=\) 7 dyads) were studied in their own homes, interacting with Companion for 3 weeks. PEOU and utility issues were important, as all participants had comorbidities and the majority experienced visual, hearing or fine motor difficulties. Companion was personalised to individual PWD by uploading photographs, videos and messages from trusted people, together with information from life story interviews, including food preferences, important routines, positive life events, memories and interests. Carers selected problematic symptoms that they would like to be targeted as intervention goals. The baseline status of these goals was recorded, along with measures of participants’ expectations of the technology using Davis’ [35] scales of PEOU and PU. Post-intervention objective and subjective measures suggested that Companion was perceived as easy to use, and that it significantly facilitated meaningful positive engagement and simplified carers’ daily lives. However, two PWD did not use Companion independently because of their physical limitations, and two others ignored its interventions even when they noticed them. Notably, carers also enjoyed the reminiscence about their shared past afforded by Companion. Regarding the targeted goals for reducing symptomatic behaviour, carers rated the status of the PWD as improved in 50% of cases.

Improving the acceptability and usability of robots requires robot design to be matched to the user group (i.e. carer, PWD, OA), individual requirements and environmental considerations. For example, all social robots need to be easy to clean [14]. Those for use in people’s homes need to be robust, require little maintenance or troubleshooting, and be able to navigate environments with dynamic and static obstacles, uneven floors, door thresholds, varying lighting and possibly stairs. In residential care, different designs are possible because of wider hallways, possibly static floor plans and duplicate furnishings [31].

In the context of residential care, robots need to accommodate the needs of multiple users with different physical and cognitive limitations. Campbell [36] conducted an observational case study involving nursing home residents (n \(=\) 5), some of whom had advanced dementia. She found that a robotic dog and cat enhanced communication and were enjoyed by residents, but the off switch on the robotic dog’s abdomen was too stiff for people with arthritic fingers. Saaskilahti et al. [37] found that a microphone hung around the neck or worn on the wrist helped OA participants (n \(=\) 4) to use the Skype call function of a Kompai robot when it was difficult for them to bend over the device. Participants in this study liked the intuitive Skype call feature, which had only two buttons, and the capacity to adjust the touch screen so that it was optimally sensitive for specific users. It was also useful to have controls operated through both touch and speech, although touch was more reliable, as operating the robot through voice commands required extremely clear speech. The researchers also noted that users needed to learn to wait 3 s for the robot’s response, and one participant suggested that the robot could say ‘please wait a moment’ to prevent users from giving it too many commands at the same time [37].

OA with reduced hearing, visual impairment or cognitive deficiency can find robots easier to use if they accommodate multiple interactive modalities [23, 38]. Khosla et al. [38] found that nursing home residents (n \(=\) 34) with sensory impairments and short-term memory loss used different modes of communication at different times while playing the card game Hoy with a robot called Matilda. The robot’s visual display helped participants to see and remember the numbers which were called out verbally. However, although people want robots to communicate with them via acoustic and visual modalities, OA ultimately prefer robots to use direct speech [19].

PEOU may be rated higher with longer use, habituation and learning. Torta et al.’s [39] study tested the acceptability of a small robot used as a communication interface with an integrated smart home system, in a usability laboratory set up as a real-life user apartment. OA (n \(=\) 6) had two sessions during a 2-week period and a further two (n \(=\) 2) had eight sessions over 3 months. Participants found the system easier to use in later sessions, commenting in particular that over time they became more accustomed to the robot’s speed and behaviour.

A small amount of work has examined how OA and PWD learn to use robot interfaces and what helps them to remember how to use these after a period of non-use. Some evidence is provided by Granata and Pino [40], who found that people with MCI (n \(=\) 11) completed tasks more slowly, learned more slowly and committed more errors than OA (n \(=\) 11) when performing tasks using the agenda and shopping list functions of the robot Kompai. Prior computer experience influenced rates of learning, but there were no differences based on age or educational level. Some participants had difficulty understanding the navigation, and the authors recommend more intuitive designs which reduce the number of steps in a process and hide choice lists until ‘parent’ categories are selected by users [40].

In summary, it is important that robots are matched to the needs and capabilities of the end users, and PEOU can be improved over time with practice and learning. The literature has identified the following factors as related to PEOU: the robot’s audio and visual communication, the ease of use of its buttons and the adjustability of its monitor. It is also noteworthy that no studies were identified which explored in depth how psychological factors of PWD and OA affect their perceptions of how easy robots will be to use.

3.5 Perceived Enjoyment (PE)

When people are able to use robots and have a choice about doing so, as in a voluntary domestic context, motivational factors such as PE come into play: acceptability increases if the robot is perceived to be fun and provides entertainment [15, 17, 41]. Heerink et al. [42] found that PE correlated significantly with intention to use (0.420, \(p<0.05\)) and with minutes of actual usage (0.625, \(p<0.01\)) in an experiment with an iCat robot made conversational by a hidden operator. The participants, semi-independent OA (n \(=\) 30), conversed with iCat, asking it for information on the weather or the TV schedule or for a joke, and then completed questionnaires on their experience.

However, de Graaf [26] and Torta et al. [39] found that PE decreased over 6 and 8 months respectively. This suggests that novelty effects may enhance PE initially but wear off over time, potentially resulting in less robot acceptance in the longer term.

3.6 Social Presence (SP)

Robots whose function is to motivate and stimulate users require a degree of social presence (SP) relevant to their purpose, because users need to perceive that they are in the company of a social entity. Indeed, the potential to possess SP appears to be robots’ advantage over non-robotic technologies. SP can be optimised by using embodied robots which are physically rather than virtually present, sharing the same space as the user. Tapus and Tapus [43] explored a robot used as a tool to monitor and encourage cognitive activities in an 8-month study with PWD (n \(=\) 9). The robot provided customised cognitive stimulation by playing music and games with the user. The researchers compared responses to a humanoid torso design on a mobile platform with responses to a simulation on a large computer screen. They found that participants consistently preferred the embodied robot to the computer, and concluded that embodiment facilitated users’ engagement with the robot because they shared the same context.

However, the size of the robot is also important, as SP can be sub-optimal if the robot is too small and users fail to notice it. Torta et al. [39] evaluated a 55 cm tall socially assistive humanoid robot as a communication interface within a smart home environment, in a usability laboratory set up to mimic a real apartment. OA (n \(=\) 8) tested robot acceptability with scenarios including asking about weather conditions, listening to music, doing exercises, receiving environmental warnings, and calling a friend to make plans to meet up. Participants experienced 2–8 sessions over periods lasting 2–12 weeks. The researchers found that participants had low anxiety levels and enjoyed the robot, but its SP scored very low because of its small size.

It is also important that robots are not too large. Robinson et al. [44] tested the acceptability of two robots, Guide and Paro, for PWD living in an institution. Guide, at 1.6 m tall, can facilitate making phone calls, provides access to websites, and offers games and music, whereas Paro is approximately 55 cm long. Over a 1-week period, 5 min demonstrations of the robots were provided to PWD residents (n \(=\) 10), family members (n \(=\) 11) and staff (n \(=\) 5), and a 1 h interactive session with the robots was videoed, transcribed and analysed. Semi-structured interviews were also held with staff and relatives. The findings suggested that more residents responded and talked to Paro (n \(=\) 6) than to Guide (n \(=\) 2). All residents touched Paro, whereas 40% (n \(=\) 4) touched Guide. Staff and relatives were more enthusiastic about Paro than about Guide. They thought that Paro would be more useful in their setting because it encouraged tactile contact and had beautiful eyes. However, some relatives (n \(=\) 5) and staff (n \(=\) 3) thought it was too bulky and recommended that it be made smaller. The potential for Guide to facilitate activities and stimulate residents was acknowledged, but most participants considered that PWD would be unable to use it alone. Participants had mixed opinions about Guide’s size: some thought it was too big and intimidating, whilst others acknowledged that its size enabled people to interact socially around it and that it was unlikely to be overlooked. This is consistent with other studies which suggest that large robots can induce feelings of intimidation, anxiety and being unsafe [32, 45].

Acceptance is likely to be enhanced if robot size is tailored to the context in which the robot is deployed [14] and to its function. Larger robots could be useful as mobility aids [14]; they may also have more SP and are less likely to be overlooked by PWD or OA who may have poor eyesight [12]. The literature also reveals that it is paramount for people to feel comfortable during interactions with a robot [19], and this can be affected by perceived sociability.

3.7 Perceived Sociability (PS)

Social presence and PS have been found to correlate (\(\beta = 0.540\), \(t = 3.399\), \(p<0.005\)) [15]. PS concerns a user’s need to believe that the robot has social abilities which enable it to function as an assistive device. PS is influenced by aspects of robot appearance, behaviour and communication style.

3.7.1 Robot Appearance

Scopelliti et al. [19] found that people hold a variety of opinions about the materials that robots should be made from and their colour. Begum et al. [32] conducted an acceptability and feasibility study, in a home simulation laboratory, of a 40-inch-tall prototype robot (Ed), built on an iRobot Create platform, which can deliver speech prompts to assist PWD in performing a domestic sequence of tasks such as making a cup of tea. The researchers videoed interactions and interviewed PWD and caregivers (n \(=\) 5 dyads). They reported a lack of consensus regarding whether a robot’s voice should be soft or authoritative, and which gender it should represent.

Other issues influencing robot design concern how realistic robots should appear and whether users prefer a humanlike or mechanical appearance. These questions relate to the uncanny valley concept [46], which suggests that people find robots more acceptable as they become more realistic and humanlike, but that when robots are almost human, people become uncomfortable with them. Perceived human likeness was associated with more anxiety and elevated heart rates in OA participants compared with their formal carers in the Stafford et al. [23] study described above. This suggests that the uncanny valley effect varies between individuals and groups and may be linked to anxiety.

Pino et al. [30] found that PWD (n \(=\) 10) preferred a mechanical humanlike robot with anthropomorphic facial features and an overall mechanical-looking design. These authors used a mixed methods approach which aimed to discover how the views of PWD, their carers (n \(=\) 7) and OA (n \(=\) 8) converge and diverge regarding robot applications, feelings about technology, ethical issues, and barriers to and facilitators of adoption. Twenty-five participants completed a survey and seven took part in a focus group. Few people preferred the android robot and no-one voted for the humanlike one. Participants with dementia were moderately interested in a robot having realistic humanlike features, but OA were less so. Arras and Cerqui [24] conducted a large survey of visitors to an international robotics exhibition at the Swiss Expo-02 (n \(=\) 2042; 56% male, 44% female; 11% OA). They found that only 10% of people aged over 65 preferred humanoid robots.

However, the impact of realism on acceptability may differ for zoomorphic robots. Heerink et al. [47] compared the acceptability of Paro with that of other zoomorphic robots: a baby seal, a puppy, a cat, a dinosaur and a bear. They interviewed professional caregivers (n \(=\) 36) and observed the responses of people with moderate dementia (n \(=\) 15). In the hour-long sessions, each PWD was presented with the various robots for 1 min, and their responses were observed. The baby seal scored highest because of its simplicity and softness, and because it was lighter and more portable than Paro. The cat was the second preference because it was realistic. Pleo, the dinosaur, scored lowest, being regarded as unfamiliar and reptilian.

However, degrees of realism may not be key, as acceptance can increase if a robot has an ‘undetermined design’ which facilitates interpretive flexibility by allowing people to interact with it in a variety of ways to fulfil their needs [5]. Chang et al. [5] explored the social and behavioural mechanisms behind Paro’s therapeutic effects. They analysed participant behaviour in video recordings of eight weekly group sessions involving PWD (n \(=\) 10) living in a retirement facility and their therapists, and found that PARO was used in a variety of ways and increased physical and verbal interaction between participants. Spiekman et al. [13] also found that realism did not increase preference for a robot in an experiment to determine which characteristics are most important for a robot supporting OA living alone. They evaluated four robots (iCat, Nao, Ashley and Nabaztag) in ‘Wizard of Oz’ settings, in which researchers controlled the robots but they appeared autonomous to the OA participants (n \(=\) 29). Data were collected by questionnaire after interactions with the robots, which involved a short scripted conversation initiated by the robot. Three components were found to determine participants’ evaluation of the robot: realism, intellectuality and friendliness. Realism was not the key to preference, as the most unrealistic agent (Nabaztag) scored as highly as the most realistic (Ashley) when participants were asked which agent they would prefer to have at home. However, realistic facial features were important, as they increased acceptability by affecting levels of trust and perceptions of social presence, enjoyment and sociability.

Research concerning facial features suggests that opinion varies as to which features are preferred and whether or not they should be humanlike [32]. Broadbent et al. [12] concluded that some OA prefer a robot without a face, whereas Stafford et al. [48] reported no significant preferences for male or female human faces or machinelike faces when they evaluated the responses of participants (n \(=\) 20; over 55 years), recruited at a university, to six different face conditions presented on a computer screen in randomised order. In each display condition, participants interacted with the robot for 5 min using a psychotherapy programme which provides a constant conversational platform. Related work was completed by DiSalvo [49], who explored which aspects of robot faces need to be present for them to be regarded as humanlike. DiSalvo [49] collected images of 48 robots, and OA (n \(=\) 20) rated their degree of humanness on a scale of 1–5 in a paper survey. Specific facial features accounted for 62% of the variance in perceived humanness, with the presence of a nose, eyelids and a mouth increasing it most. Robots with the most facial features were regarded as the most humanlike. DiSalvo [49] concludes that humanoid robots should have wide heads and wide eyes; the area from the brow line to the bottom of the mouth should dominate the face; less space should be given to the forehead, hair, jaw or chin; and the eyes need detail. For a face to appear humanoid, the eyes should include a shaped eyeball, iris and pupil, and the face should have four or more other features, preferably a nose, mouth, eyelids and skin.

Some robot designers have explored acceptance of humanlike robots with minimalistic designs and facial features [45, 50]. Khosla et al. [45] describe successful field trials with Matilda, an emotionally engaging small social robot with a minimalistic baby face, which has a facial expression recognition system and can incorporate user preferences and personalise its services. Trials were conducted over a 6-month period in seven Australian households involving PWD (n \(=\) 7) and their carers. Interviews were conducted and interactions video-recorded, and the data were analysed for participants’ emotional responses and the quality of their robot experience. The findings suggest that PWD enjoyed one-to-one activity with Matilda. All participants agreed or strongly agreed with the statements ‘Matilda makes me smile’, ‘Matilda is a friend’ and ‘Matilda does not worry me’.

A minimalistic tele-operated android, Telenoid, has also been evaluated for acceptability in a 1-day field trial involving PWD (n \(=\) 10) [50]. Researchers asked participants how its appearance compared to a human’s and whether they thought Telenoid could help them. Participants were told that Telenoid could be used like a telephone, although they could see the robot operator in the room. Researchers observed that participants showed strong attachment to its childlike, huggable design and were willing to converse with it. Some perceived it as a doll or a baby.

It is clear that there is a lack of consensus regarding the optimal appearance of social robots. However, a robot’s appearance does not affect acceptance in isolation; users respond to a package which also includes the robot’s expressions and communication behaviour. These are discussed below.

3.7.2 Robot Behaviour and Communication Styles

The way in which a robot communicates and behaves should be compatible with the social context in which it is deployed and consistent with users’ perceptions of its status and role [28, 42]. Sääskilahti [42] found that OA (n \(=\) 6) felt safer when Kompai gave a short warning signal before it started to move and when it stopped at a sufficient distance from them.

Walters and Dautenhahn [51] compared user stress responses and preferred stopping distances for the human-sized, mechanical-looking PeopleBot as it behaved first ‘ignorantly’ and then in a socially acceptable, humanlike way. Participants, who were university staff and students (OA n \(=\) 3 in a total sample of n \(=\) 28), performed a prescribed task which was interrupted by the robot in a simulated living room. The ignorant robot (optimal from a robotics perspective) took the shortest path between two locations and made little change to its behaviour in relation to the human. The socially interactive robot modified its behaviour so as not to get too close to the person, especially if their back was turned. It moved slowly when closer than 2 m, took a circuitous route when necessary, and appeared alert and interested in what the human was doing by looking actively at them. It also anticipated the human’s movements by interpreting them, and waited for an opportune moment to interrupt the person. Stress was measured using a hand-held device, video observation and questionnaires. Reports from this study do not separate findings for OA from those for the rest of the participants, but the findings suggest that the majority of participants disliked the robot moving behind them, blocking their path or moving on a collision course towards them, especially when it was nearer than 3 m. Sixty percent preferred the robot to stop 0.45–3.6 m from them, and 40% allowed it to approach to within 0.5 m, which is on the edge of the intimate zone for human–human contact. Ten percent were uncomfortable with the robot approaching closer than 1.2–3.5 m, the range reserved for conversations between human strangers. Walters and Dautenhahn [51] acknowledge that longer-term studies are needed to establish how familiarity with the robot over time affects these preferences.
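For readers designing robot navigation, the distances above can be made concrete with a short sketch. It assumes the commonly cited human–human proxemic zones (intimate below 0.45 m, personal 0.45–1.2 m, social 1.2–3.6 m, public beyond), which are not figures reported by Walters and Dautenhahn [51]. The 2 m slow-down radius mirrors the socially interactive behaviour described above; the speed values and the names `classify_distance` and `approach_speed` are hypothetical and purely illustrative.

```python
from enum import Enum


class Zone(Enum):
    INTIMATE = "intimate"
    PERSONAL = "personal"
    SOCIAL = "social"
    PUBLIC = "public"


# Assumed zone boundaries in metres (commonly cited proxemic zones, not study data).
INTIMATE_MAX = 0.45
PERSONAL_MAX = 1.2
SOCIAL_MAX = 3.6

# Hypothetical speed policy: slow down inside 2 m, as the socially interactive
# robot did in the study; the speed values themselves are invented.
SLOW_RADIUS_M = 2.0
NORMAL_SPEED = 0.5  # m/s, illustrative only
SLOW_SPEED = 0.2    # m/s, illustrative only


def classify_distance(distance_m: float) -> Zone:
    """Map a human-robot distance in metres to an assumed proxemic zone."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < INTIMATE_MAX:
        return Zone.INTIMATE
    if distance_m < PERSONAL_MAX:
        return Zone.PERSONAL
    if distance_m < SOCIAL_MAX:
        return Zone.SOCIAL
    return Zone.PUBLIC


def approach_speed(distance_m: float, stop_distance_m: float) -> float:
    """Return an approach speed that slows near the person and stops at their preferred distance."""
    if distance_m <= stop_distance_m:
        return 0.0  # at or inside the user's preferred stopping distance
    if distance_m < SLOW_RADIUS_M:
        return SLOW_SPEED  # close to the person: move slowly
    return NORMAL_SPEED


if __name__ == "__main__":
    for d in (0.3, 0.5, 2.5, 4.0):
        print(d, classify_distance(d).value, approach_speed(d, stop_distance_m=0.5))
```

Making the stopping distance a per-user parameter, rather than a constant, reflects the finding that preferred distances varied widely between participants and may change as users become familiar with a robot.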

Communicating in a humanlike way may be particularly important for robots designed to stimulate PWD. Cohen-Mansfield et al. [52] presented PWD (n \(=\) 163) living in nursing homes with 23 types of stimulus and found that they were engaged for significantly longer, and were more attentive and positive, with social stimuli than with non-social stimuli. These stimuli included a doll, a real dog, a plush animal, a robotic animal, a squeeze ball, an expanding sphere, music and a magazine [52].

It has been suggested that robots need to develop ‘robotiquette’ [9]. This would include being experienced as warm, open, creative, calm, spontaneous, efficient, systematic, cooperative, polite and happy [14, 55]. Issues of robot and user personality are also important. Brandon [55] interviewed relatively fit and able OA (n \(=\) 22) and conducted two experiments in a simulated home-like laboratory to discover the effect of matching the personalities of user and robot, using a mobile robot able to provide agenda and medication reminders. Participants recognised the different personalities designed into the robots, and extroverted robots were perceived as having significantly higher sociability, social presence and PE than an introverted robot. Participants preferred robots with personalities similar to, rather than complementary to, their own. However, they were more anxious about the robot whose extraversion level was similar to their own. Personality and behaviour also need to be consistent with robot function and with users’ expectations of the robot’s role [11, 12, 56]. Amirabdollahian et al. [57] investigated the responses of OA (n \(=\) 41) to robots undertaking specific tasks and roles in a laboratory setting. They aimed to investigate whether preferences for a robot depended on context and on the stereotypical perceptions people hold about certain jobs. They found that acceptance was not increased by the user and robot having complementary or similar personalities, but by the robot having a personality which fits the user’s expectations for the particular task and context.

Heerink et al. [41] investigated which social features are necessary for robots to be effective social partners. They compared the responses of cognitively able nursing home residents (OA; n \(=\) 40) to iCat robots manipulated to be socially or non-socially expressive. The socially expressive iCat was designed to look at participants, be cooperative, nod and smile pleasantly, use participants’ names, remember personal details about them and admit its own mistakes. The researchers concluded that participants were more comfortable with the more socially expressive robot and communicated with it more extensively. Participants in Pino’s [30] study cited above also considered facial expressions important because they represent emotional capabilities. Sakai et al. [58] describe an autonomous virtual agent, capable of speech recognition, which can nod its head and give verbal acknowledgement to users. Details of their evaluation experiment are not provided, but the authors state that their participants with dementia were more engaged when the agent gave them feedback.

Recent advances in technology are producing robots that are more emotionally responsive to users, which may enable them to be perceived as more sociable. The robot Matilda, whose field trials are described above, can respond to users’ emotions because it incorporates emotion-measuring techniques that recognise the user’s facial expression. This facilitates more natural social interaction, which can incorporate user preferences and personalised services [45]. Brian is another robot, which can determine user engagement and activity states and uses this information to determine its own emotional assistive behaviours [59]. McColl [59] tested Brian’s acceptability and its ability to provide encouragement, prompts and orientating statements to PWD (n \(=\) 40) living in long-term care, during mealtimes and when playing a memory card game. Participants were observed interacting with Brian for an average of 12.6 min and 22 questionnaires were analysed. The robot was relatively successful in motivating and engaging participants: 33 were engaged all of the time and 7 some of the time; 35 complied with Brian all of the time, 4 some of the time and 1 did not comply (the robot’s voice interfered with his hearing aid); 82% smiled or laughed in response to Brian’s emotions, and some were successfully re-engaged in the task by Brian.

3.8 Trust and Perceived Adaptivity (PA)

This section reviews studies that explored the importance of trust, suggesting that it underlies and interacts with the need for perceived control of the robot and with PA. It is argued that users need to trust the robot and be comfortable with a particular level of perceived control, but they also require socially savvy robots to have a degree of autonomy and adaptability [17]. An acceptable balance between these variables probably varies between individual users, and with robot purpose and deployment context. However, further research with larger samples is needed to confirm these propositions.

Heerink [15] evaluated the effects of PA on acceptance using experimental conditions identical to those described above (Heerink 2011 [16]), showing OA a film of more and less adaptive versions of RoboCare providing medication reminders, fitness advice, health monitoring and help in calling for assistance. Participants preferred the more adaptive robot and rated it higher in terms of ITU, perceived enjoyment and perceived usefulness. However, they felt more anxiety towards it, which the authors suspected was because they had less control over its actions.

Users have to trust that robots will be safe and reliable [19], and trust has to be earned [14]. Frennert et al. [14] conducted a series of workshops with OA living in their own homes who had moderate sensory and mobility impairments. Participants were asked to respond to sketches of different robots and to state their preferences for an ideal robot. The researchers also interviewed OA (n \(=\) 5) and one couple who lived with polystyrene foam mock-ups of these ideal robots for 1 week. They found that feelings of control were crucial and were connected with issues of trust and privacy.

The determinants of user trust in, and ITU of, assisted living robots have also been investigated using a survey questionnaire with OA (n \(=\) 292) [60]. This study described to participants two emergency scenarios in which robots would be available to help them: during a fire and when they were very unwell. Unsurprisingly, respondents said that they would be highly motivated to use the robots in these situations, and trust in the robot was strongly related to ITU (0.51). Trust levels were also correlated with PEOU (0.49), PU (0.50) and expected reliability (0.63). Scopelliti [19] also found that trust in the capabilities of robots for domestic use influenced OA (n \(=\) 37) responses on three dimensions: robot benefits, disadvantages and mistrust of robots. Mistrust was evident: 85% of participants did not want a robot to move freely in the house and 82% were afraid of potential damage.

The literature raises the question of how predictable and controllable users want robots to be. De Graaf [26] found that participants wanted more control over Karotz. Over time, they felt that more control would help them maintain their privacy and cope when unexpected events occurred. For example, it was problematic when Karotz continued to remind them about their health promotion activity schedule while guests were present. They wanted Karotz to adapt to their needs, have more sophisticated interaction capabilities and offer more conversation topics.

A need for adaptability may be influenced by user perceptions of the opinions of significant others. Heerink [15] found that users were more influenced by the opinions of significant others when robots had greater adaptive capability.

3.9 Social Influences and Facilitating Conditions

Most studies identified here do not focus on the impact of social influences. However, the social influence of significant others was one of the strongest predictors of ITU of home healthcare robots among patients and healthcare professionals (n \(=\) 108; OA 11.15%; 18–33 years 77.7%) who all used a computer daily [61]. This online and paper survey, which collected quantitative and qualitative data, also found PU, trust, privacy, ethical concerns and facilitating conditions to be important. Wu et al. [62] also found social influence to be important after OA with MCI (n \(=\) 5) and OA (n \(=\) 5) interacted with the Kompai robot in their living laboratory study.

Social influences also encompass broader cultural issues, but few studies identified in this review appear to take account of cultural factors, and none specified the cultural background of their samples. Two studies were conducted in more than one country. Klein and Cook [6] found that participants in care homes in the UK and Germany accepted PARO and PLEO to similar degrees. In contrast, Amirabdollahian et al. [56] noted that, when asked about robot design, OA in the UK and France had greater concerns about privacy than those in the Netherlands. The former did not want images from within their homes shared with other parties.

Another cultural and societal issue which can reduce the acceptance of robots involves negative ageist stereotypes [30, 62, 63]. Neven [63] examined how images of OA shape technology development by observing researchers interviewing OA (n \(=\) 6) and 30–60 min interactions between them and an unnamed robot. Neven found that ageist assumptions influenced robot design and implementation, and that OA may have different representations of what being older means. Furthermore, if OA associate robot use with being perceived as lonely, isolated and dependent, they can be reluctant to be associated with robots. This may be because using a robot would be contrary to their self-image and to the image they want to project, which is that they are healthy and independent [11, 14, 63].

Acceptability is also affected by stakeholder opinions concerning the ethics of robot use. Wu et al. [64] conducted three videotaped focus groups with OA (n \(=\) 8) and OA with MCI (n \(=\) 7). Participants held a variety of views about the appearance of 25 robots displayed on a screen, but all discussed ethical issues, expressing concern about robots replacing or reducing human contact.

4 Discussion and Future Research Directions

Findings from the studies reviewed here reveal the key factors affecting the acceptability of robots by OA, PWD and OA with MCI. The literature suggests that acceptance is influenced by the psychological variables of individual users [11, 21, 23, 47, 48] and by their social and physical environment [30, 32, 61, 62, 65]. These variables interact with one another to influence acceptance in each context [16, 61, 65, 66]. Relevant factors include robots being easy and enjoyable to use [26, 42, 44] and fulfilling their function [32, 34, 64]. To entice people to use and engage with robots, robots have to be personalised and designed to conform to user expectations and environmental considerations. The opinions of significant others, and what OA anticipate these will be, are important in determining whether or not a robot will be accepted [26, 57]. This may relate to OAs’ need, as social beings, to project their preferred self-image to other people and thus to maintain their privacy [11, 14, 63]. For robots to be accepted into OAs’ lives, it appears important that users are comfortable with the robot’s degree of adaptability and controllability [15, 26], as this will affect their relationships with other people. Indeed, it may be crucial for acceptability that robots can balance these variables.

The literature suggests that users need to be able to engage with the robot, and this requires that they feel at ease when interacting with it. Psychological and emotional comfort is more likely if a robot has a realistic, humanlike, expressive face, if its behaviour conforms to the human social norms deemed appropriate to its role and function, and if it can respond emotionally to the user [13, 15, 30, 45, 59, 67, 68]. This suggests that the acceptability of humanlike, non-zoomorphic robots designed for social companionship is likely to be enhanced by current and future developments in robots’ capacity to read and respond to users’ emotional needs.

However, the research identified here has limitations which reflect the relative youth of this developing field, so findings should be generalised with caution. Only ten studies were identified that focused on examining the interaction between variables concerning the acceptability of robots [15, 16, 23, 26, 30, 41, 42, 60, 61, 65]. Studies conducted to date have employed a range of research designs (see Table 2) and frequently had sample sizes of fewer than ten [6, 9, 26, 27, 32, 34, 36, 43, 45, 50, 62]. Other potential sources of bias include the lack of blinding in observational studies and the failure to address selection bias. The latter is problematic in acceptability research, where the views of participants who find robots least acceptable may not be captured.

No randomised controlled trials were identified, and the studies include several pilot or feasibility trials [6, 9, 27, 32, 34, 50]. Many of the other studies aimed primarily to determine robot user preferences and needs [12, 30, 40, 47, 56, 57, 69]. These did not always include all the stakeholders who could affect eventual acceptance. Some studies which do involve a range of stakeholders collect data using mixed stakeholder focus groups [30, 45, 57]. Focus groups can be used to gather information from PWD and OA with MCI [70], but it is important that the views of carers do not dominate those of people with cognitive impairment or dementia [71, 72], who may be less able to articulate their views [73, 74]. Indeed, these difficulties may be exacerbated when participants are in unfamiliar study situations or feel less powerful relative to other participants. Alternative methods of data collection, such as combining observation with individual interviews, may improve research validity, particularly if the dementia is severe [74,75,76].

It is noteworthy that most of the studies with mixed populations of OA, PWD and/or OA with MCI analyse and report their findings together, rather than separating the data and comparing them along group lines. As people in these groups differ in cognitive ability, future comparative studies may help to determine how the degree of dementia or cognitive impairment affects acceptability. Many of the studies identified did not involve direct interaction between participants and robots [19, 24, 25, 48, 49, 56, 60, 61, 64, 69] or based their findings on participant–robot interactions lasting less than 1 h [8, 12, 13, 15, 16, 23, 32, 36, 40,41,42, 44, 47, 51, 55, 63].

According to the Almere model, whether intention to use (ITU) results in actual robot usage depends on facilitating conditions and social influences [15]. Findings from this review suggest that ITU is not a reliable predictor of long-term robot acceptability [15, 26, 27, 39, 51, 62], that people interpret and make use of robots in their own context [66], and that variables such as attitudes, perceived ease of use and enjoyment change over time [26, 39, 62] as users become more familiar with a particular robot. Therefore, robot acceptability should ideally be examined over long periods in participants’ own living situations. Most of the studies identified here which conform to this ideal [5,6,7, 9, 26, 34, 45, 51, 66] have involved Paro. Those which used university rooms or simulated living laboratories [13, 27, 32, 48, 51, 55, 62, 63, 65] provide helpful but tentative information about how factors affect robot acceptability.

There are many opportunities for future empirical investigation to confirm the findings of this review and to develop this field of study. The impact of acceptability variables needs further examination with larger samples, in real-world situations, with a variety of robots, using robust longitudinal study designs which address the complexities of conducting research with PWD and OA. In particular, there is potential to explore how acceptability is affected by the way OA and PWD are introduced to robots and supported in learning to use this technology. Related to this, it would be valuable to know more about how psychological factors affect users’ perceptions of how easy robots will be to use. It would also be useful to investigate whether an optimal balance between robot controllability and adaptability can be determined, whether it varies between users, and whether acceptability is increased by varying the adaptability of robot behaviour according to whether the robot is being used in a public or private situation. If robot behaviour is made more humanlike in this regard, users may be able to present their preferred public personae while using the robot. This topic may be important because it links to users’ needs as social beings, and because it is the ability of robots to adapt autonomously which makes them different from traditional technologies and potentially more useful.

Future research needs to focus on the impact of stakeholders and significant others as facilitators of or barriers to acceptance. It also needs to be conducted with different cultural groups, to explore the impact of cultural factors and cross-cultural differences within a user’s social or physical environment on robot acceptability. In addition, research is needed to explore the impact on acceptance of macro societal level factors, such as power relationships, ageism, economics, the media and legislation. These factors potentially influence every aspect of the arena in which individuals research, develop, deploy and experience robots, and no studies concerning them were identified by this review.

5 Conclusion

This paper adds to the state of the art because, for the first time, a body of literature has been analysed according to a validated theoretical acceptability model. The review found that the acceptability of robots for OA, PWD and OA with MCI is likely to be improved if robots use humanlike communication and meet users’ emotional, psychological, social and environmental needs. Robot acceptability is affected by factors which interact at the level of the individual user and the robot. These are influenced by significant others and by macro-societal level factors. Future work aiming to promote acceptability will need to address the facilitators of and barriers to acceptance at the level of individual users, significant others and society. Whilst valuable work has been completed to date, the exploration of robot acceptability for PWD and OA is in its infancy. There are numerous opportunities to investigate this expanding field further.

References

Prince M, Guerchet M, Prina M (2013) Policy brief for heads of government: the global impact of dementia 2013–2050. Alzheimer’s Disease International (ADI), London

Wimo A, Prince M (2010) World Alzheimer report: the global economic impact of dementia. Alzheimer’s Disease International (ADI), London

Alzheimer’s Society (2015) What is mild cognitive impairment, Factsheet 47. https://www.alzheimers.org.uk. Accessed 18 June 2017

Mordoch E, Osterreicher A, Guse L, Roger K, Thompson G (2013) Use of social commitment robots in the care of elderly people with dementia: a literature review. Maturitas 74(1):14–20. https://doi.org/10.1016/j.maturitas.2012.10.015

Chang W-L, Šabanović S, Huber L (2013) Use of seal-like robot PARO in sensory group therapy for older adults with dementia. In: IEEE robotics and automation magazine

Klein B, Cook G (2012) Emotional robotics in elder care—a comparison of findings in the UK and Germany. In: Ge SS et al. (eds) Emotional robotics in elder care. Springer, Berlin, pp 108–116

Sung HC, Chang SM, Chin MY, Lee WL (2015) Robot-assisted therapy for improving social interactions and activity participation among institutionalised older adults: a pilot study. Asia Pac Psychiatry 7(1):1–6. https://doi.org/10.1111/appy.12131

Takayanagi K, Kirita T, Shibata T (2014) Comparison of verbal and emotional responses of elderly people with mild/moderate dementia and those with severe dementia in responses to seal robot, PARO. Front Aging Neurosci 6:257. https://doi.org/10.3389/fnagi.2014.00257

Moyle W, Cooke M, Jones C, O’Dwyer S, Sung B (2013) Assistive technologies as a means of connecting people with dementia. Int Psychogeriatr 25:S21–22

Dautenhahn K (2007) Socially intelligent robots: dimensions of human–robot interaction. Philos Trans R Soc Lond B Biol Sci 362(1480):679–704

Stafford R (2013) The contribution of people’s attitudes and perceptions to the acceptance of eldercare robots. The University of Auckland, Auckland

Broadbent E, Tamagawa R, Kerse N, Knock B, Patience A, MacDonald B (2009) Retirement home staff and residents’ preferences for healthcare robots. Paper presented at the 18th IEEE international symposium on robot and human interactive communication, Toyama, Japan, 27 October

Spiekman ME, Haazebroek P, Neerincx MA (2011) Requirements and platforms for social agents that alarm and support elderly living alone. In: International conference on social robotics. Springer, Berlin, pp 226–235

Frennert S, Eftring H, Östlund B (2013) Older people’s involvement in the development of a social assistive robot. In: Herrmann G, Pearson MJ, Lenz A, Bremner P, Spiers A, Leonards U (eds) Social robotics: 5th international conference, ICSR 2013, Bristol, UK, October 27–29, 2013, Proceedings. Springer International Publishing, Cham, pp 8–18

Heerink M, Kröse B, Evers V, Wielinga B (2010) Assessing acceptance of assistive social agent technology by older adults: the Almere model. Int J Soc Robot 2:361–375

Heerink M (2011) Exploring the influence of age, gender, education and computer experience on robot acceptance by older adults. Paper presented at HRI’11, Lausanne, Switzerland, March 6–9

Young J, Hawkins R, Sharlin E, Igarashi T (2009) Toward acceptable domestic robots: applying insights from social psychology. Int J Soc Robot 1:95–108

Flandorfer P (2012) Population ageing and socially assistive robots for elderly persons: the importance of sociodemographic factors for user acceptance. Int J Pop Res 2012:1–13. https://doi.org/10.1155/2012/829835

Scopelliti M, Giuliani MV, Fornara F (2004) Robots in a domestic setting: a psychological approach. Univ Access Inf Soc 4:146–155

Shibata T (2012) Therapeutic seal robot as biofeedback medical device. Proc IEEE 100(8):2527–2538

Stafford R, MacDonald B, Jayawardena D, Wegner D, Broadbent E (2013) Does the robot have a mind? Mind perception and attitudes towards robots predict use of an eldercare robot. Int J Soc Robot 6:17–32

Takayama L (2011) Perspectives on agency: interacting with and through personal robots. In: Zacarias M, Oliveira JV (eds) Human-computer interaction: the agency perspective. Springer, Berlin, pp 195–214

Stafford R, Broadbent E, Jayawardena C, Unger U, Kuo I, Igic A, Wong R, Kerse N, Watson C, MacDonald B (2010) Improved robot attitudes and emotions at a retirement home after meeting a robot. Paper presented at the RO-MAN international symposium on robots and human interaction, Viareggio, Italy

Arras K, Cerqui D (2005) Do we want to share our lives and bodies with robots? A 2000-people survey. Technical report Nr 0605-001 Autonomous Systems Lab Swiss Federal Institute of Technology, EPFL

Nomura T, Sugimoto K, Syrdal DS, Dautenhahn K (2012) Social acceptance of humanoid robots in Japan: a survey for development of the Frankenstein Syndrome Questionnaire. Paper presented at the 12th IEEE-RAS international conference on humanoid robots, Osaka, Japan, 29 November–1 December

de Graaf MMA, Allouch SB, Klamer T (2015) Sharing a life with Harvey: exploring the acceptance of and relationship-building with a social robot. Comput Hum Behav 43:1–14. https://doi.org/10.1016/j.chb.2014.10.030

Gross H, Schroeter C, Mueller S, Bley A, Langner T, Volkhardt M, Einhorn E, Merten M, Huijnen C, Heuvel Hvd, Berlo AC (2012) Further progress towards a home robot companion for people with mild cognitive impairment. Paper presented at the IEEE international conference on systems, man and cybernetics, COEX, Seoul, Korea, 14–17 October

Pfadenhauer M, Dukat C (2015) Robot caregiver or robot-supported caregiving? Int J Soc Robot 7(3):393–406. https://doi.org/10.1007/s12369-015-0284-0

Stafford R, MacDonald B, Broadbent E (2012) Identifying specific reasons behind unmet needs may inform more specific eldercare robot design. Paper presented at the 4th international conference on social robotics ICSR

Pino M, Boulay M, Jouen F, Rigaud A-S (2015) ‘Are we ready for robots that care for us?’ Attitudes and opinions of older adults toward socially assistive robots. Front Aging Neurosci 7(141):1–15

Mitzner TL, Chen TL, Kemp CC, Rogers WA (2014) Identifying the potential for robotics to assist older adults in different living environments. Int J Soc Robot 6(2):213–227. https://doi.org/10.1007/s12369-013-0218-7

Begum M, Wang R, Huq R, Mihailidis A (2013) Performance of daily activities by older adults with dementia: the role of an assistive robot. Paper presented at the IEEE international conference on rehabilitation robotics, Seattle, Washington, USA, June 24–26

Sääskilahti K, Kangaskorte R, Pieska S, Jauhiainen J, Luimula M (2012) Needs and user acceptance of older adults for mobile service robot. Paper presented at the IEEE RO-MAN: the 21st IEEE international symposium on robot and human interactive communication, Paris, France, September 9th–13th

Kerssens C, Kumar R, Adams A, Knott C, Metalenas L, Sanford J, Rogers W (2015) Personalized technology to support older adults with and without cognitive impairment living at home. Am J Alzheimers Dis Other Dement 30(1):85–97

Davis F, Bagozzi R, Warshaw P (1989) User acceptance of computer technology. A comparison of two theoretical models. Manag Sci 35:982–1003

Campbell A (2011) Dementia care: could animal robots benefit residents? Nurs Resid Care 13(12):602–606

Sääskilahti K, Kangaskorte R, Pieska S, Jauhiainen J, Luimula M (2012) Needs and user acceptance of older adults for mobile service robot. In: IEEE RO-MAN: the 21st IEEE international symposium on robot and human interactive communication, Paris, France

Khosla R, Chu MT, Kachouie R, Yamada K, Yoshihiro F, Yamaguchi T (2012) Interactive multimodal social robot for improving quality of care of elderly in Australian nursing homes. In: Proceedings of the 20th ACM international conference on multimedia. ACM, pp 1173–1176

Torta E, Werner F, Johnson D, Juola J, Cuijpers R, Bazzani M, Oberzaucher J, Lemberger J, Lewy H, Bregman J (2014) Evaluation of a small socially-assistive humanoid robot in intelligent homes for the care of the elderly. J Intell Robot Syst 76:57–71

Granata C, Pino M, Legouverneur G, Vidal JS, Bidaud P, Rigaud AS (2013) Robot services for elderly with cognitive impairment: testing usability of graphical user interfaces. Technol Health Care 21(3):217–231. https://doi.org/10.3233/THC-130718

Heerink M, Kröse B, Evers V, Wielinga B (2006) The influence of a robot’s social abilities on acceptance by elderly users. In: RO-MAN 2006—the 15th IEEE international symposium on robot and human interactive communication, Hatfield, 2006, pp 521–526. https://doi.org/10.1109/ROMAN.2006.314442

Heerink M, Krose B, Wielinga B, Evers V (2008) Enjoyment, intention to use and actual use of a conversational robot by elderly people. Paper presented at the HRI’08, Amsterdam Netherlands, March 12–15th

Tapus A, Tapus C, Mataric M (2009) The role of physical embodiment of a therapist robot for individuals with cognitive impairments. Paper presented at the 18th IEEE international symposium on robot and human interactive communication, Toyama, Japan, 27 September–2 October

Robinson H, MacDonald B, Kerse N, Broadbent E (2013) Suitability of healthcare robots for a dementia unit and suggested improvements. J Am Med Dir Assoc 14(1):23–40

Khosla R, Khanh N, Chu M-T (2014) Assistive robot enabled service architecture to support home-based dementia care. In: 2014 IEEE 7th international conference on service-oriented computing and applications, pp 73–80. https://doi.org/10.1109/soca.2014.53

Mori M (2012) The uncanny valley. IEEE Robot Autom Mag 19:98–100

Heerink M, Albo-Canals J, Valenti-Soler M, Martinez-Martin P, Zondag J, Smits C, Anisuzzaman S (2013) In: Herrmann G, Pearson MJ, Lenz A, Bremner P, Spiers A, Leonards U (eds) Exploring requirements and alternative pet robots for robot assisted therapy with older adults with dementia. ICSR, Social Robotics, pp 104–115

Stafford R, MacDonald B, Broadbent E (2014) Older people’s prior robot attitudes influence evaluations of a conversational robot. Int J Soc Robot 6:281–297

DiSalvo C, Gemperle F, Forlizzi J, Kiesler S (2002) All robots are not created equal: the design and perception of humanoid robot heads. In: Proceedings of DIS 2002

Yamazaki R, Nishio S, Ishiguro H, Nørskov M, Ishiguro N, Balistreri G (2014) Acceptability of a teleoperated android by senior citizens in Danish society. Int J Soc Robot 6(3):429–442. https://doi.org/10.1007/s12369-014-0247-x

Walters ML, Dautenhahn K, Woods SN, Koay KL, Boekhorst RT, Lee D (2006) Exploratory studies on social spaces between humans and a mechanical-looking robot. Connect Sci 18:429–439

Cohen-Mansfield J, Thein K, Dakheel-Ali M, Regier N, Marx M (2010) The value of social attributes of stimuli for promoting engagement in persons with dementia. J Nerv Mental Dis 198(8):586–592

Cohen-Mansfield J, Dakheel-Ali M, Marx MS (2009) Engagement in persons with dementia: the concept and its measurement. Am J Geriatr Psychiatry 17:299–307

Morris J, Hawes C, Murphy K, Nonemaker S, Phillips C, Fries B, Mor V (1991) MDS resident assessment. Eliot Press, Natick

Brandon M (2012) Effect of robot-user personality matching on the acceptance of domestic assistant robots for elderly. Master's thesis, University of Twente

Amirabdollahian F, Op Den Akker R, Bedaf S, Bormann R, Draper H, Evers V, Gelderblom GJ, Ruiz CG, Hewson D, Hu N, Iacono I, Koay KL, Krose B, Marti P, Michel H, Prevot-Huille H, Reiser U, Saunders J, Sorell T, Dautenhahn K (2013) Accompany: acceptable robotics companions for ageing years—multidimensional aspects of human–system interactions. In: 2013 6th international conference on human system interactions, HSI 2013, June 6, 2013–June 8, 2013. IEEE Computer Society, Gdansk, Sopot, Poland, pp 570–577

Amirabdollahian F, op den Akker R, Bedaf S, Bormann R, Draper H, Evers V, Perez JG, Gelderblom GJ, Ruiz CG (2013) Assistive technology design and development for acceptable robotics companions for ageing years. Paladyn J Behav Robot 4(2):94–112

Sakai Y, Nonaka Y, Yasuda K, Nakano Y (2012) Listener agent for elderly people with dementia. Paper presented at the HRI’12, Boston, Massachusetts, USA, 5–8 March 2012

McColl D, Louie W-YG, Nejat G (2013) Brian 2.1: a socially assistive robot for the elderly and cognitively impaired. IEEE Robot Autom Mag (March 2013), pp 74–83

Steinke F, Bading N, Fritsch T, Simonsen S (2014) Factors influencing trust in ambient assisted living technology: a scenario-based analysis. Gerontechnology 12(2):81–100

Alaiad A, Zhou L (2014) The determinants of home healthcare robots adoption: an empirical investigation. Int J Med Inform 83:825–840

Wu Y-H, Wrobel J, Cornuet M, Kerherve H, Damnee S, Rigaud A-S (2014) Acceptance of an assistive robot in older adults: a mixed-method study of human-robot interaction over a 1-month period in the living lab setting. Clin Interv Aging 9:801–811

Neven L (2010) ‘But obviously not for me’: robots, laboratories and the defiant identity of elder test users. Sociol Health Illn 32(2):335–347. https://doi.org/10.1111/j.1467-9566.2009.01218.x

Wu Y-H, Faucounau V, Boulay M (2010) Robotic agents for supporting community-dwelling elderly people with memory complaints: perceived needs and preferences. Health Inform J 17(1):33–40

Frennert S, Eftring H, Ostlund B (2013) Older people’s involvement in the development of a social assistive robot. In: Herrmann G, Pearson M, Lenz A, Bremner P, Spiers A, Leonards U (eds) 5th international conference, ICSR 2013. Springer, Bristol, pp 8–18

Pfadenhauer M, Dukat C (2015) Robot caregiver or robot-supported caregiving? The performative deployment of the social robot PARO in dementia care. Int J Soc Robot 7:393–406

Khosla R, Chu MT, Kachouie R, Yamada K, Yoshihiro F, Yamaguchi T (2012) Interactive multimodal social robot for improving quality of care of elderly in Australian nursing homes. In: 20th ACM international conference on multimedia, MM 2012, October 29, 2012–November 2, 2012, Association for Computing Machinery, Nara, Japan, pp 1173–1176

Sakai Y, Nonaka Y, Yasuda K, Nakano YI (2012) Listener agent for elderly people with dementia. In: 7th annual ACM/IEEE international conference on human–robot interaction, HRI’12, March 5, 2012–March 8, 2012. Association for Computing Machinery, Boston, MA, United States, pp 199–200

Wu YH, Faucounau V, Boulay M (2010) Robotic agents for supporting community-dwelling elderly people with memory complaints: perceived needs and preferences. Health Inform J 17:33–40

Bamford C, Bruce E (2000) Defining the outcomes of community care: the perspectives of older people with dementia and their carers. Ageing Soc 20:543–570

McKillop J, Wilkinson H (2004) Make it easy on yourself! Advice to researchers from someone with dementia on being interviewed. Dementia 3:117–125

Bartlett R (2012) Modifying the diary interview method to research the lives of people with dementia. Qual Health Res 22:1717–1726

Lloyd V, Gatherer A, Kalsy S (2006) Conducting qualitative interview research with people with expressive language difficulties. Qual Health Res 16:1386–1404

Hubbard G, Downs MG, Tester S (2003) Including older people with dementia in research: challenges and strategies. Aging Mental Health 7:351–362

Murphy K, Jordan F, Hunter A, Cooney A, Casey D (2015) Articulating the strategies for maximising the inclusion of people with dementia in qualitative research studies. Dementia 14(6):800–824

Cowdell F (2008) Engaging older people with dementia in research: myth or possibility. Int J Older People Nurs 3:29–34

Louie W-YG, McColl D, Nejat G (2014) Acceptance and attitudes toward a human-like socially assistive robot by older adults. Assist Technol Off J RESNA 26(3):140–150

Sabanovic S, Bennett C, Chang W-L, Huber L (2013) PARO robot affects diverse interaction modalities in group sensory therapy for older adults with dementia. Paper presented at the IEEE international conference on rehabilitation robotics, Seattle, Washington, USA, 24–26 June 2013

Funding

The research leading to these results has received funding from the European Union Horizon 2020, the Framework Programme for Research and Innovation (2014–2020), under Grant Agreement 643808, Project MARIO ‘Managing active and healthy aging with use of caring service robots’.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Whelan, S., Murphy, K., Barrett, E. et al. Factors Affecting the Acceptability of Social Robots by Older Adults Including People with Dementia or Cognitive Impairment: A Literature Review. Int J of Soc Robotics 10, 643–668 (2018). https://doi.org/10.1007/s12369-018-0471-x

DOI: https://doi.org/10.1007/s12369-018-0471-x