Abstract

Some patients with arm dysfunction can still move their legs. Considering the neuronal coupling between arms and legs during locomotion, this paper proposes a novel human-robot cooperative method for upper extremity rehabilitation. Leg motion is taken into account during passive rehabilitation training of the impaired arm, and the trajectory it traverses is represented by the patient's trunk motion. A Kinect-based vision module, two computers, and a WAM robot make up the human-robot cooperative upper extremity rehabilitation system. The vision module tracks the horizontal position of the subject's trunk. The WAM robot guides the arm of the post-stroke patient through passive training along a predefined trajectory while following the patient's trunk movement, which the Kinect tracks in real time. A hierarchical fuzzy control strategy is proposed to improve the position tracking performance and stability of the system; it consists of an external fuzzy dynamic interpolation strategy and an internal fuzzy PD position controller. Four experiments were conducted to test the proposed method and strategy. The results show that the subjects felt more natural and comfortable when the human-robot cooperative method was applied, and that they could walk as they wished within the visual range of the Kinect. The hierarchical fuzzy control strategy performed well in the experiments. These results indicate the high potential of the proposed human-robot cooperative method for upper extremity rehabilitation.


1 Introduction

Stroke is a leading cause of serious, long-term disability. In China, for instance, about 2,000,000 people suffer a stroke every year; approximately 66 percent of them survive, commonly with deficits of motor function [1]. In the U.S. alone, approximately 795,000 people experience a new or recurrent stroke each year [2]. Accidents are another cause of upper limb disability. Movement disorders of the upper extremities dramatically reduce people's quality of life [3]. Upper extremity rehabilitation is a daunting task because of the growing number of patients and the small number of rehabilitation physicians. Rehabilitation robots are an efficient means of addressing this problem.

In recent years, numerous rehabilitation robots have been developed for upper limb rehabilitation. By mechanical structure, these robotic devices can be divided into two categories: end-effector-based robots (MIT Manus [4], NeReBot [5], CRAMER [6], etc.) and exoskeleton-based robots (ARMin [7], CADEN-7 [8], Pneu-WREX [9], etc.). Many different control methods have been implemented for rehabilitation training. He et al. developed adaptive neural network controllers for robots using state and output feedback, with full-state constraints, and with input saturation [10,11,12]. Xu et al. [13] presented an adaptive impedance force controller for an upper limb rehabilitation robot. Richardson et al. [14] controlled a 3-dof pneumatic upper limb rehabilitation robot using a position-based impedance control strategy.

In recent academic studies, the Microsoft Kinect has been used as an engaging and accurate markerless motion capture tool and controller interface in stroke rehabilitation [15, 16]. Chang et al. [17] built a Kinect-based rehabilitation system for young adults with motor disabilities. Hussain et al. used Kinect-monitored manipulation of specially designed intelligent objects (i.e., a can, a jar, and a key-like object embedded with inertial sensors) for fine motor control diagnostics of the hand and wrist [18]. Su et al. [19] developed a Kinect-enabled system for home-based rehabilitation (KEHR) that uses a Dynamic Time Warping (DTW) algorithm and fuzzy logic to ensure the effectiveness and safety of home-based rehabilitation.

Rehabilitation devices provide different types of training: active, passive, haptic, and coaching [3]. Moreover, different kinds of upper limb impairment call for different rehabilitation training methods. To stimulate patient participation in rehabilitation training, the real-time state of the patient should be considered; to this end, "patient-cooperative training" [20, 21] has been widely used.

Current upper extremity rehabilitation systems move only the upper limb and do not consider the connection between arm and leg movement. Although a complete explanation of the neuronal connection between the upper and lower limbs has not yet been developed, several studies have shown that there is neuronal coupling between arms and legs during locomotion [22, 23]. Some lower limb rehabilitation studies also involve the upper limb [24], so an upper limb rehabilitation system that accounts for lower limb motion may achieve better results than a traditional system.

Neuromuscular control of the upper limb is more complicated than that of the lower limb [25]. Clinically, motor function of the lower extremity recovers better than that of the upper extremity [26]. Moreover, a certain number of patients with arm dysfunction can still move their legs. For these patients, leg motion can be incorporated into passive rehabilitation training of the impaired arm.

Traditional passive training assumes that the patient's location is fixed and that only the impaired arm moves, following the robot arm. However, every passive training session takes some time, and during this period patients may move their trunk by walking to keep the training natural and comfortable. The patient's state therefore changes frequently, which changes the interaction force between the patient and the rehabilitation robot and thus affects the stability and training effect of the rehabilitation system.

The robot should therefore gain an additional direction of movement to compensate for the patient's voluntary horizontal motion. The patient can then walk around freely during rehabilitation training, which increases his or her enthusiasm for the training. In addition, walking benefits health: it can enhance the patient's physical strength and improve the effect of rehabilitation training.

This paper presents a novel human-robot cooperative method for upper extremity rehabilitation of patients with arm dysfunction who can still move their legs. Leg motion, represented by the patient's trunk motion, is taken into account to achieve more natural and comfortable upper extremity training. In this method, the WAM robot guides the impaired arm through passive rehabilitation training while the patient walks around the robot as he/she wishes; the patient's motion trajectory is captured by the Kinect and transmitted to the WAM robot, which then moves to follow the patient. A hierarchical fuzzy control architecture, consisting of a fuzzy dynamic interpolation strategy and a fuzzy PD position controller, ensures the position tracking performance and stability of the human-robot cooperative rehabilitation training.

2 Upper Extremity Rehabilitation System

2.1 The Human-Robot Cooperative Upper Extremity Rehabilitation Robot System

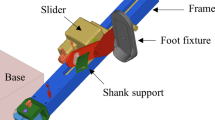

The human-robot cooperative upper extremity rehabilitation robot system consists of a Barrett WAM (Whole Arm Manipulator) robot [27], a three-dimensional force sensor developed by us [28], a handle, a Microsoft Kinect, a tripod, a client PC and a server PC, as shown in Fig. 1. The standard WAM Arm is a 4-dof highly dexterous, naturally back-drivable manipulator. The handle is fixed at the end of the WAM arm to ensure easy grasping by patients. The Kinect fixed on a tripod is used to measure the skeleton data of the patient in real-time.

The client PC runs the Windows 7 operating system and is responsible for processing position data and sending control commands to the server PC. Both the pose tracking and the fuzzy dynamic interpolation strategy run on the client PC. The Kinect for Windows SDK (1.8.0) is used to collect signals from the patient in real time. The 3D joint position data is acquired at the Kinect native sampling frequency of 30 Hz.

The server PC runs Ubuntu Linux and is responsible for running the control loop and issuing high-level commands to the WAM rehabilitation system. Real-time communication between the server PC and the motor Pucks uses a high-speed CAN bus at 1 Mbps. The server PC and the client PC communicate via the Transmission Control Protocol/Internet Protocol (TCP/IP).

The whole system can be architecturally divided into two main modules as follows:

1. A Kinect-based vision module. The Kinect is employed to locate the position of the WAM joint, track the trunk motion of the patient, and communicate the motion information to the WAM controller.

2. The WAM robot module. The WAM robot guides the impaired arm through passive training along the desired trajectory while following, in real time, the patient's trunk movement tracked by the Kinect. Joint1 of the WAM robot is used to follow the movement of the patient's trunk.

The predefined passive trajectory is combined with the online trajectory of the patient's trunk movement, so the patient can perform passive training while walking around to adjust his/her position as desired.

2.2 Kinect Coordinate System on Rehabilitation

The Kinect [29] has three autofocus cameras: two infrared cameras optimized for depth detection and one standard visual-spectrum camera used for visual recognition. Its spatial accuracy is 3 mm in both the X and Y dimensions and 1 cm in the Z dimension, which satisfies the needs of rehabilitation training. The Kinect SDK for Windows provides detailed location, position, and orientation information for up to two players standing in front of the Kinect sensor array [15]. Earlier devices had difficulty tracking human motion with a camera alone, without body-worn sensors; the Kinect offers a noninvasive, noncontact, markerless method of motion tracking [12]. In this paper, the Kinect is mainly used to track human skeleton data in real time to assist in controlling the human-robot cooperative rehabilitation training; 20 joints per subject can be tracked.

The training scene is shown in Fig. 2: a stroke patient performs rehabilitation training beside the WAM robot and within the field of view of the Kinect. Because the Kinect's field of view is technically limited, the key joint points of the patient and the WAM must stay within the optimal range defined by the Kinect. Figure 2 shows the initial position of rehabilitation training: the WAM robot remains upright, and the Kinect, the forearm of the WAM robot, the hand joint, and the shoulder joint are aligned. The motion range of the patient is set to −0.7 to 0.7 rad. The figure also shows the positions of the Kinect (K), WAM (W), hand (H), and shoulder (S) in the Kinect coordinate system. When the Kinect coordinates are mapped into the WAM arm coordinates, the WAM arm is placed approximately in the center of the Kinect visual field.

During training, the patient's trunk motion mainly occurs in the horizontal direction. Because the patient's height is constant, while the shoulder-center point may revolve around the shoulder, the shoulder point was used to represent the patient's trunk movement during human-robot cooperative rehabilitation training.

3 Methods

The human-robot cooperative rehabilitation training system is a multivariable, nonlinear system. Because the patient walks at random during human-robot cooperative rehabilitation training, the movement of the patient's trunk (direction, speed, and acceleration) is unpredictable. To ensure the safety and stability of the rehabilitation training, a two-stage hierarchical fuzzy controller (external controller and internal controller) was adopted, as shown in Fig. 3.

The external controller, which runs on the client PC, performs skeleton data reading, pose tracking, and fuzzy dynamic interpolation. The internal controller on the server PC performs fuzzy PD control of the WAM robot arm. The trajectory generated by the external controller is transmitted to the server PC via TCP/IP. As a result, the rehabilitation robot follows the trunk movement of the stroke patient while simultaneously moving the impaired upper limb through passive training along a trajectory predefined by linear segments with parabolic blends [30].

3.1 Pose Tracking Module

The right hand is used as an example to illustrate the human-robot cooperative rehabilitation training; the left side can be derived in the same way. Figure 4 shows the rehabilitation training scene with the previous frame (W-H\(^{\prime}\)-S\(^{\prime}\)) and the current frame (W-H-S) of the Kinect, where W denotes the Joint4 coordinate of the WAM robot, and H and S denote the positions of the patient's hand and shoulder. The distance WH equals L, the length of the WAM forearm. At the initial location of the rehabilitation training, the WAM upper arm is vertical, the forearm is horizontal, and the patient stands beside the robot holding the handle. From the isosceles triangle \(\Delta \mathrm{WHH}'\), the horizontal coordinate of the WAM Joint4 W can be obtained as follows:

where L = 0.35 m, \(\mathrm{W}=(w_{x}, w_{z})\), \(\mathrm{H}=(h_{x}, h_{z})\), and \(\mathrm{H}'=(h'_{x}, h'_{z})\); the points H and H\(^{\prime}\) are obtained from the Kinect. Because this paper mainly studies the patient's horizontal trunk motion, only the horizontal coordinates are discussed in this section.

All skeleton data collected from the Kinect were smoothed by a Kalman filter [31]. Based on the current location of the patient's limb, the Kalman filter predicts the location of each joint at the next moment, which improves the real-time performance of the system and the smoothness of the movement.

The system is assumed to be described by the following equations:

where \(x_{k}\) is the system state, \(u_{k-1}\) is the system input, \(Q_{k-1}\) is the system state noise, \(y_{k}\) is the measurement, and \(R_{k}\) is the measurement noise, with \(k=1,2,3,\ldots,n\). The update step of the Kalman filter is given by:

where \(K_{k}\) is the Kalman gain matrix and \(I\) is an identity matrix. In this paper, \(\mathbf{A}=\begin{bmatrix} 1 & 0 & \Delta t & 0 \\ 0 & 1 & 0 & \Delta t \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}\), \(\mathbf{H}=\begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \end{bmatrix}\), \(u_{k-1}=0\), and \(\Delta t\) is the sampling period.
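The smoothing step can be sketched as a standard linear Kalman filter built from the \(\mathbf{A}\) and \(\mathbf{H}\) matrices given above with \(u_{k-1}=0\): predict with \(\mathbf{A}\), then correct with the Kinect measurement through \(\mathbf{H}\). This is a minimal illustration under stated assumptions, not the authors' implementation: the state is assumed to be ordered [x, z, vx, vz] (horizontal shoulder position and velocity), \(\Delta t = 1/30\) s follows from the 30 Hz Kinect rate, \(Q\) and \(R\) are treated as covariance matrices (the usual convention), and the class name `ConstantVelocityKF` and the noise values `q` and `r` are hypothetical.

```python
import numpy as np

DT = 1.0 / 30.0  # sampling period from the 30 Hz Kinect rate

# Assumed state ordering: [x, z, vx, vz] (constant-velocity model)
A = np.array([[1.0, 0.0, DT, 0.0],
              [0.0, 1.0, 0.0, DT],
              [0.0, 0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0, 1.0]])
H = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0]])


class ConstantVelocityKF:
    """Hypothetical helper: smooths horizontal shoulder positions from the Kinect."""

    def __init__(self, first_measurement, q=1e-4, r=1e-3):
        # Start at the first measured position with zero velocity.
        self.x = np.array([first_measurement[0], first_measurement[1], 0.0, 0.0])
        self.P = np.eye(4)
        self.Q = q * np.eye(4)  # assumed process-noise covariance
        self.R = r * np.eye(2)  # assumed measurement-noise covariance

    def step(self, y):
        # Predict (u_{k-1} = 0, so there is no input term).
        x_pred = A @ self.x
        P_pred = A @ self.P @ A.T + self.Q
        # Update with the new measurement y_k.
        S = H @ P_pred @ H.T + self.R
        K = P_pred @ H.T @ np.linalg.inv(S)  # Kalman gain K_k
        self.x = x_pred + K @ (np.asarray(y, dtype=float) - H @ x_pred)
        self.P = (np.eye(4) - K @ H) @ P_pred
        return self.x.copy()

    def predict_next(self):
        """One-step-ahead position prediction for the next frame."""
        return (A @ self.x)[:2]
```

For example, feeding `kf.step((x, z))` once per Kinect frame returns the smoothed state, and `kf.predict_next()` gives the position expected at the following frame, which is the prediction role described in the text.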

During human-robot cooperative rehabilitation training, the stroke patient remains standing and trains around the WAM robot. The initial angle is set to zero. In the next frame, the angle can be computed by the vector method as:

where \(\alpha\) is the angle through which the patient has turned, \(\mathrm{S}=(s_{x}, s_{y}, s_{z})\) and \(\mathrm{S}'=(s'_{x}, s'_{y}, s'_{z})\); the points S and S\(^{\prime}\) are obtained from the Kinect. The sign of \(\alpha\) depends on the relative positions of \(s'_{x}\) and \(s_{x}\).
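A minimal sketch of such a vector-method computation is shown below. It assumes that \(\alpha\) is the angle in the horizontal (x, z) plane between the vectors from a fixed pivot to the previous shoulder point S\(^{\prime}\) and the current shoulder point S; the pivot (taken here to stand for the Joint1 axis of the WAM expressed in Kinect coordinates), the function name `trunk_turn_angle`, and the sign convention (positive when \(s_x\) increases) are all hypothetical choices for illustration.

```python
import math


def trunk_turn_angle(s_prev, s_curr, pivot):
    """Signed horizontal angle (rad) from pivot->S' to pivot->S.

    s_prev (S'), s_curr (S), and pivot are (x, y, z) points in Kinect
    coordinates; only the horizontal x and z components are used.
    The pivot is a hypothetical stand-in for the WAM Joint1 axis.
    """
    ax, az = s_prev[0] - pivot[0], s_prev[2] - pivot[2]
    bx, bz = s_curr[0] - pivot[0], s_curr[2] - pivot[2]
    na, nb = math.hypot(ax, az), math.hypot(bx, bz)
    if na == 0.0 or nb == 0.0:
        raise ValueError("shoulder point coincides with the pivot")
    # Clamp so rounding error cannot push the cosine outside [-1, 1].
    cos_a = max(-1.0, min(1.0, (ax * bx + az * bz) / (na * nb)))
    alpha = math.acos(cos_a)
    # Assumed sign rule: positive when the shoulder moved toward +x.
    return alpha if s_curr[0] >= s_prev[0] else -alpha
```

Because the initial angle is zero, one plausible use is to accumulate the per-frame values of `trunk_turn_angle` into a running total for the Joint1 set-point; that accumulation is likewise an assumption.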

3.2 Hierarchical Fuzzy Control Strategy

The hierarchical fuzzy controller consists of an external controller, the fuzzy dynamic interpolation controller, and an internal controller, the fuzzy PD position controller.

3.2.1 Fuzzy Dynamic Interpolation Controller

The patient's trunk motion is mainly under voluntary control and its speed is random, so the signal captured by the Kinect and sent to the robot is not smooth. To ensure smooth robot movement, several trajectory interpolation methods were considered.

Trajectory interpolation smooths the data between path points. In joint space, it generates intermediate positions between the starting position and the target position using a chosen interpolation method [32]. The interpolation method strongly influences the performance of the control system. Researchers have adopted many interpolation methods, such as LSPB (linear segments with parabolic blends), linear interpolation [33], Lagrange interpolation [34], and sample-based interpolation [35].

To obtain good robot movement performance, a novel fuzzy dynamic interpolation strategy was adopted, which combines fuzzy logic with a dynamic interpolation strategy [28]. The velocity of the patient's trunk is evaluated by fuzzy logic in real time to assess the path condition, and the trajectory is interpolated dynamically accordingly. Based on general experience, the method is applied as follows:

1. When the patient's speed is small, LSPB is employed;

2. when the speed is medium, linear interpolation (LI) is employed;

3. when the speed is large, pulse linear interpolation (PLI) is employed.
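The two baseline interpolators in the list can be sketched as follows. The LSPB function is the textbook single-segment form (parabolic blend of duration \(t_b\), linear cruise, parabolic blend), and the linear interpolator returns the points \(\theta_{lki}\), \(i = 0,\ldots,n_k\), between waypoints \(k-1\) and \(k\). This is a minimal sketch assuming one joint, a move from rest to rest, and a user-chosen blend time; the function names are hypothetical, and the exact variants used in [28] may differ.

```python
def lspb(q0, qf, tf, tb, t):
    """LSPB position at time t for a move q0 -> qf over [0, tf], blend time tb."""
    if not 0.0 < tb <= tf / 2.0:
        raise ValueError("blend time must satisfy 0 < tb <= tf/2")
    v = (qf - q0) / (tf - tb)  # cruise velocity of the linear segment
    a = v / tb                 # constant acceleration in the blends
    t = min(max(t, 0.0), tf)
    if t <= tb:                # accelerating parabolic blend
        return q0 + 0.5 * a * t * t
    if t <= tf - tb:           # linear segment
        return 0.5 * (qf + q0 - v * tf) + v * t
    # decelerating parabolic blend, ending exactly at qf
    return qf - 0.5 * a * tf * tf + a * tf * t - 0.5 * a * t * t


def linear_interp(theta_prev, theta_k, n_k):
    """Linear-interpolation values theta_lki for i = 0..n_k on one subsegment."""
    return [theta_prev + (theta_k - theta_prev) * i / n_k for i in range(n_k + 1)]
```

With \(t_b\) close to \(t_f/2\), the LSPB profile is nearly all blend, which gives gentle accelerations at low patient speed; linear interpolation reacts faster but has velocity jumps at the waypoints.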

LSPB and linear interpolation are taken from reference [28]. Pulse linear interpolation builds on linear interpolation by adding a pulse-like variance term. In this work, it is expressed as follows:

where \(\theta_{lki}\) and \(\delta_{k}\) are, respectively, the \(i\)th linear-interpolation value and the variance for the subsegment connecting the \((k-1)\)th and \(k\)th waypoints, \(\theta_{\alpha}\) is the actual position, \(\theta_{k}\) and \(\theta_{k-1}\) are the values of the \(k\)th and \((k-1)\)th waypoints, respectively, and \(n_{k}\) is the total number of interpolation points in this subsegment.

The fuzzy dynamic interpolation uses the velocity measured by the Kinect as the input of the fuzzy logic, and its output is the level of the interpolation method. Because the movement of the patient's trunk is uncertain, the velocity exported from the Kinect contains spike pulses. The input velocity is divided into seven fuzzy sets: negative big (NB), negative medium (NM), negative small (NS), zero (ZE), positive small (PS), positive medium (PM), and positive big (PB). The output level is defined as LSPB, LI (linear interpolation), or PLI (pulse linear interpolation). The domain of discourse is [−0.1, 0.1] for the input and [0, 3] for the output. For convenience, two scaling factors were used: \(G_{v}=12\) for velocity and \(G_{l}=1\) for level. The Mamdani algorithm was used, with triangular and trapezoidal membership functions. The input and output membership functions for fuzzy reasoning are shown in Fig. 5: Fig. 5a shows the membership functions of the input variable VEL (velocity), and Fig. 5b shows those of the output variable Level. The fuzzy control rules are listed in Table 1, where level 1 represents LSPB, level 2 corresponds to LI, and level 3 corresponds to PLI.

To ensure safe exercise, the patient's velocity should be kept low. When \(V > V_{max}\), where \(V_{max}\) is the maximum velocity, the training switches into emergency mode. Based on experiments with several stroke patients, \(V_{max}\) was set to 0.3 rad/s.
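The level selection can be sketched as a small Mamdani inference: fuzzify the velocity into the seven sets, fire one rule per set, clip and max-aggregate the output sets on [0, 3], take the centroid, and round it to level 1 (LSPB), 2 (LI), or 3 (PLI). This is a hedged sketch, not the authors' controller. The rule table below is hypothetical (Table 1 is not reproduced here) and simply encodes "small speed to LSPB, medium to LI, big to PLI"; the evenly spaced set breakpoints and the output set shapes are assumptions; the velocity is assumed to be already mapped into the [−0.1, 0.1] input domain because how \(G_v\) is applied is not specified; and `is_emergency` applies the 0.3 rad/s threshold from the text to the raw trunk speed.

```python
LO, HI = -0.1, 0.1            # input domain of discourse
STEP = (HI - LO) / 6          # spacing of the seven input sets (assumed even)
INPUT_SETS = ["NB", "NM", "NS", "ZE", "PS", "PM", "PB"]
# Hypothetical rule base: 1 = LSPB, 2 = LI, 3 = PLI
RULES = {"NB": 3, "NM": 2, "NS": 1, "ZE": 1, "PS": 1, "PM": 2, "PB": 3}
# Assumed output sets on [0, 3] as trapezoids (a, b, c, d)
OUTPUT_SETS = {1: (0.0, 0.0, 1.0, 2.0), 2: (1.0, 2.0, 2.0, 3.0), 3: (2.0, 3.0, 3.0, 3.0)}
V_MAX = 0.3                   # rad/s, emergency threshold from the text


def trap(x, a, b, c, d):
    """Trapezoidal membership; b == c gives a triangle, a == b or c == d a shoulder."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def input_memberships(v):
    v = min(max(v, LO), HI)   # clipping makes NB and PB behave as shoulders
    return {name: trap(v, LO + (i - 1) * STEP, LO + i * STEP, LO + i * STEP, LO + (i + 1) * STEP)
            for i, name in enumerate(INPUT_SETS)}


def select_interpolation(v, samples=301):
    """Return 1 (LSPB), 2 (LI) or 3 (PLI) for a velocity in the input domain."""
    strength = {level: 0.0 for level in OUTPUT_SETS}
    for name, degree in input_memberships(v).items():
        level = RULES[name]
        strength[level] = max(strength[level], degree)
    # Mamdani: clip each output set, aggregate with max, defuzzify by centroid.
    num = den = 0.0
    for j in range(samples):
        y = 3.0 * j / (samples - 1)
        agg = max(min(strength[lv], trap(y, *OUTPUT_SETS[lv])) for lv in OUTPUT_SETS)
        num += y * agg
        den += agg
    return min(3, max(1, round(num / den)))


def is_emergency(trunk_speed):
    """True when |V| > V_max and training should switch to emergency mode."""
    return abs(trunk_speed) > V_MAX
```

In this sketch, a near-zero velocity yields a centroid of about 0.78 (level 1, LSPB), while velocities at the edge of the domain yield about 2.67 (level 3, PLI).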

3.2.2 Fuzzy PD Position Controller

The fuzzy PD position controller drives the WAM robot through rehabilitation training stably and smoothly. Its block diagram appears as the internal controller in Fig. 3. Proportional action reduces the deviation, and derivative action improves the dynamic performance of the system, so separate fuzzy controllers were designed for P and D. The joint position tracking error \(\theta_\mathrm{e}\) and the error rate \(\theta_\mathrm{ec}\) are the inputs of the fuzzy PD position controller, and \(\Delta K_\mathrm{P}\) and \(\Delta K_\mathrm{D}\) are the outputs of the fuzzy P controller and the fuzzy D controller, respectively. Both inputs and outputs are divided into seven fuzzy sets: negative big (NB), negative medium (NM), negative small (NS), zero (ZE), positive small (PS), positive medium (PM), and positive big (PB). In actual training, the domains were \(\theta_\mathrm{e}\in[-0.1,0.1]\), \(\theta_\mathrm{ec}\in[-0.01,0.01]\), \(\Delta K_\mathrm{D}\in[-100,100]\), and \(\Delta K_\mathrm{P}\in[-2.5,2.5]\). For convenience, four scaling factors were used: \(G_{\theta_\mathrm{e}}=0.1/6\), \(G_{\theta_\mathrm{ec}}=0.01/6\), \(G_{\Delta K_\mathrm{D}}=100/6\), and \(G_{\Delta K_\mathrm{P}}=2.5/6\). The torque applied to the WAM is as follows:

where \(K_\mathrm{P}\) and \(K_\mathrm{D}\) are the gain parameters of the PD controller, \(\Delta K_\mathrm{P}\) and \(\Delta K_\mathrm{D}\) are the outputs of the fuzzy P controller and the fuzzy D controller, respectively, \(\theta_\mathrm{d}\) and \(\theta\) are the desired and actual positions, respectively, M is the link mass vector of the WAM, Gscale is the gravity compensation coefficient, and g is the gravitational acceleration. M, Gscale, and g are used for gravity compensation. The Mamdani algorithm was used, with triangular and trapezoidal membership functions. The output characteristics of the controllers are shown in Fig. 6: Fig. 6a shows the input-output relationship of \(\Delta K_\mathrm{P}\), and Fig. 6b shows that of \(\Delta K_\mathrm{D}\).
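The structure of the fuzzy PD controller can be sketched as follows: normalize \(\theta_\mathrm{e}\) and \(\theta_\mathrm{ec}\) with the scaling factors given above, fuzzify them into seven sets, infer \(\Delta K_\mathrm{P}\) and \(\Delta K_\mathrm{D}\), scale them back, and apply a torque of the assumed form \(\tau = (K_\mathrm{P}+\Delta K_\mathrm{P})\theta_\mathrm{e} + (K_\mathrm{D}+\Delta K_\mathrm{D})\theta_\mathrm{ec} + \tau_g\). Several parts are assumptions rather than the authors' design: the scaling factors are read as mapping each domain onto a normalized universe [−6, 6]; the two rule functions are hypothetical stand-ins for the unpublished rule tables; defuzzification uses a weighted average of singleton consequents, a simplification of the Mamdani centroid; the gravity term is passed in as a hypothetical `tau_gravity` instead of being built from M, Gscale, and g; and the effective gains are clipped at zero as an added safeguard.

```python
G_E, G_EC = 0.1 / 6, 0.01 / 6       # input scaling factors from the text
G_KP, G_KD = 2.5 / 6, 100 / 6       # output scaling factors from the text
CENTERS = [-6, -4, -2, 0, 2, 4, 6]  # NB, NM, NS, ZE, PS, PM, PB (normalized)


def memberships(n):
    """Triangular memberships; clipping to [-6, 6] makes NB/PB act as shoulders."""
    n = max(-6.0, min(6.0, n))
    return [max(0.0, 1.0 - abs(n - c) / 2.0) for c in CENTERS]


def infer(mu_e, mu_ec, rule):
    """min for AND; weighted average of singleton consequents at 2 * index."""
    num = den = 0.0
    for i, a in enumerate(mu_e):
        for j, b in enumerate(mu_ec):
            w = min(a, b)
            if w > 0.0:
                idx = max(-3, min(3, rule(i - 3, j - 3)))  # set index -3..3
                num += w * 2.0 * idx
                den += w
    return num / den


def rule_kp(e, ec):
    # Hypothetical rule: raise K_P as |error| and |error rate| grow.
    return abs(e) + abs(ec) - 3


def rule_kd(e, ec):
    # Hypothetical rule: add damping when the error rate dominates the error.
    return abs(ec) - abs(e)


def gain_corrections(theta_e, theta_ec):
    mu_e = memberships(theta_e / G_E)
    mu_ec = memberships(theta_ec / G_EC)
    return G_KP * infer(mu_e, mu_ec, rule_kp), G_KD * infer(mu_e, mu_ec, rule_kd)


def joint_torque(theta_e, theta_ec, kp, kd, tau_gravity=0.0):
    """Assumed fuzzy-PD torque law with a hypothetical gravity term."""
    dkp, dkd = gain_corrections(theta_e, theta_ec)
    kp_eff = max(0.0, kp + dkp)  # assumed safeguard: keep gains non-negative
    kd_eff = max(0.0, kd + dkd)
    return kp_eff * theta_e + kd_eff * theta_ec + tau_gravity
```

For example, with \(\theta_\mathrm{e} = 0.05\), \(\theta_\mathrm{ec} = 0\), \(K_\mathrm{P} = 10\), and \(K_\mathrm{D} = 200\), these hypothetical rules give \(\Delta K_\mathrm{P} = -1.25\) and a torque of about 0.4375.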

4 Experiment and Results

4.1 Experiment

The experiments were performed by six young healthy volunteers (three male; age \(25\pm 3\) years) with no history of upper limb impairment, all from Southeast University in Nanjing, China. Several experiments were conducted to verify the effectiveness of the proposed human-robot cooperative upper extremity rehabilitation method and the hierarchical fuzzy control strategy.

To obtain clear results, vertical flexion/extension exercises were adopted as the passive training trajectory.

To show the effects of the human-robot cooperative rehabilitation training, the experiments were designed as follows:

① The subject followed the WAM arm in passive training and, at the same time, was asked to occasionally simulate the patient's trunk movement;

② The subject performed human-robot cooperative movement training of the upper limb while occasionally walking around the WAM robot.

During the human-robot cooperative rehabilitation training experiment, the subject was asked to occasionally simulate the patient's trunk movement, and the robot used the hierarchical fuzzy controller.

To show the effects of the hierarchical fuzzy controller, comparative experiments were designed for each hierarchy:

③ External hierarchy: LSPB and fuzzy dynamic interpolation were compared in separate experiments.

④ Internal hierarchy: traditional PD control and fuzzy PD control were compared in separate experiments. To isolate the effect of each control method, the same predefined trajectory was used in both.

To reduce the influence of incidental factors, each subject first performed some practice exercises, and each experiment was repeated several times until the results were relatively stable.

4.2 Results

Because each subject's trajectory differs, a result at a roughly moderate level was selected to represent the performance of the human-robot cooperative rehabilitation training system.

The joint position error and the corresponding control torque were recorded to reflect tracking performance and stability, respectively. The interaction forces between the subject and the WAM robot, measured by the three-dimensional force sensor, indicate the comfort level of the rehabilitation training.

The results of experiments ① and ② are depicted in Figs. 7 and 8. In experiment ①, when the subject, while following the WAM arm in passive training, occasionally simulated the patient's trunk movement and the WAM did not move synchronously, the interaction forces between the subject and the WAM robot varied over a wide range. The variation trends of these interaction forces are shown in Fig. 7. T1 marks the beginning of the trunk movement, and T2 marks the time at which the trunk position was restored. The absolute values of the forces in the X, Y, and Z directions all increased between T1 and T2, and after T2 they gradually returned to normal. In this situation the patient would feel very uncomfortable, and the rehabilitation training system is very unstable. In experiment ②, when the subject occasionally simulated the patient's trunk movement, the WAM moved synchronously. The interaction forces between the subject and the WAM robot are shown in Fig. 8. The figure shows that during human-robot cooperative rehabilitation training, the forces are all smaller than in the first comparative experiment, and the force in each direction (X, Y, and Z) is very uniform. The patient would therefore feel comfortable and natural during rehabilitation training.

The volunteers' feelings in experiments ① and ② are shown in Table 2. Scores from 0 to 10 describe these feelings: 10 represents very comfortable, natural, convenient, and interesting, and 0 represents the opposite (uncomfortable, restricted, and constrained). The table gives the subjective results of the comparison experiments: experiment ② scored higher, which indicates that the volunteers subjectively preferred the human-robot cooperative rehabilitation training method to the traditional one. In addition, experiments ③ and ④ show the effects of the human-robot cooperative method on each joint objectively.

The comparison shows that the human-robot cooperative rehabilitation training method reduces the interaction forces when patient trunk motion occurs during upper limb passive rehabilitation training; the human-robot cooperative upper extremity rehabilitation system is therefore more stable than the traditional rehabilitation training system. Moreover, with the human-robot cooperative method, the subject can walk as he/she wishes within the visual range of the Kinect, the training is more natural, comfortable, and interesting, and the volunteers felt better. Because leg motion is added to upper limb rehabilitation training, human-robot cooperative training could enhance the effect of the rehabilitation training.

The results of experiments ③ and ④ are depicted in Figs. 9–12. The training mainly involved movement in two directions: the upper limb performed passive training in the vertical direction, and the subject's trunk moved freely in the horizontal direction.

Figure 9 shows the subject's horizontal trunk trajectory after Kalman filtering. This trajectory was fed to Joint1 of the WAM robot. Figure 10 shows the Joint1 position tracking error (Error1): the dashed line shows the result of LSPB, and the solid red line shows the result of fuzzy dynamic interpolation. The figures show that Error1 is smaller with fuzzy dynamic interpolation than with LSPB, so the former has better tracking performance than the latter.

Figure 11 shows the position tracking error and the corresponding torque for horizontal motion. Figure 12a shows the predefined sinusoidal passive training trajectory performed by Joint4. Figure 12b shows the Joint4 position tracking error and the corresponding torque in the vertical direction. The dashed line shows the result of traditional PD control, and the solid red line shows the result of fuzzy PD control. The graphs show that the traditional PD controller has more frequent vibration and larger torque overshoot than the fuzzy PD controller, especially in the vertical flexion/extension exercise. The fuzzy PD controller also achieves better tracking performance and smoothness than the traditional one.

The tracking errors and control torques were analyzed further. The maximum absolute error (MAE) and the sum of absolute errors (SAE) of the trajectory tracking errors were used to evaluate position tracking performance. In addition, the maximum absolute torque (MAT) and the maximum absolute rate of change of the torque (MART) were used to analyze the stability and smoothness of the control.
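The four metrics can be computed from sampled error and torque sequences as sketched below. Note that MAE here is the *maximum* absolute error, as defined in the text, not the mean. The function name `tracking_metrics` is hypothetical, and approximating the torque rate by a finite difference over a fixed sampling period `dt` is an assumption, since the text does not say how the rate was computed.

```python
def tracking_metrics(errors, torques, dt):
    """MAE, SAE, MAT and MART for one joint (MAE = maximum absolute error)."""
    if not errors or len(torques) < 2:
        raise ValueError("need at least one error sample and two torque samples")
    mae = max(abs(e) for e in errors)
    sae = sum(abs(e) for e in errors)
    mat = max(abs(t) for t in torques)
    # Torque rate approximated by finite differences over the sampling period.
    mart = max(abs(b - a) / dt for a, b in zip(torques, torques[1:]))
    return {"MAE": mae, "SAE": sae, "MAT": mat, "MART": mart}
```

For instance, errors `[0.1, -0.3, 0.2]` and torques `[1.0, 3.0, 2.0]` sampled every 0.5 s give MAE = 0.3, SAE ≈ 0.6, MAT = 3.0, and MART = 4.0.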

The position tracking performance in experiment ③ is given in Table 3; the MAE and SAE values show that Error1 is smaller with fuzzy dynamic interpolation than with LSPB. The position tracking and torque control performance in experiment ④ is given in Table 4: for the fuzzy PD controller, the MAE, SAE, MAT, and MART values are all smaller than for traditional PD control in both directions.

The experimental results show that the hierarchical fuzzy control strategy succeeded in improving the training.

5 Conclusions

Given the neuronal coupling between arms and legs during locomotion, and the clinical observation that motor function of the lower extremity recovers better than that of the upper extremity, leg motion is incorporated into upper limb passive rehabilitation training to make it more natural and comfortable. Taking the patient's participation into account in this way makes human-robot cooperation necessary.

This paper presented a human-robot cooperative method for upper extremity rehabilitation training. A Kinect-based vision module was employed to track the patient's trunk motion and communicate its position to the WAM controller so that the robot follows the patient's trunk. A WAM robot was used to guide the impaired arm through passive training along the predefined trajectory while following, in real time, the patient's trunk movement tracked by the Kinect. A hierarchical fuzzy control strategy was proposed to improve position tracking performance and stability.

The experiments show that the human-robot cooperative upper extremity rehabilitation training method successfully achieves natural and comfortable upper extremity training. The subject can walk as he/she wishes within the visual range of the Kinect, and the human-robot cooperative training encourages the patient to use his or her legs.

The MAE and SAE analyses show that the position tracking performance of the human-robot cooperative rehabilitation training system is very good in each hierarchy, and the MAT and MART analyses show that the fuzzy controllers perform well. The human-robot cooperative upper extremity rehabilitation training can therefore achieve good results.

References

Song A, Pan L, Xu G, Li H (2015) Adaptive motion control of arm rehabilitation robot based on impedance identification. Robotica 33(9):1795–1812. doi:10.1017/S026357471400099X

Go AS, Mozaffarian D, Roger VL et al (2014) Heart disease and stroke statistics-2014 update: a report from the American Heart Association. Circulation 129:e28–e292. doi:10.1161/01.cir.0000441139.02102.80

Maciejasz P, Eschweiler J, Gerlach-Hahn K, Jansen-Troy A, Leonhardt S (2014) A survey on robotic devices for upper limb rehabilitation. J Neuroeng Rehabil 11:3. doi:10.1186/1743-0003-11-3

Krebs HI, Palazzolo JJ, Dipietro L et al (2003) Rehabilitation robotics: performance based progressive robot-assisted therapy. Auton Robots 15:7–20. doi:10.1023/A:1024494031121

Rosati G, Gallina P, Masiero S (2007) Design, implementation and clinical tests of a wire-based robot for neurorehabilitation. IEEE Trans Neural Syst Rehabil Eng 15(4):560–569. doi:10.1109/TNSRE.2007.908560

Spencer SJ, Klein J, Minakata K, Le V, Bobrow JE, Reinkensmeyer DJ (2008) A low cost parallel robot and trajectory optimization method for wrist and forearm rehabilitation using the Wii. In: Proceedings of the 2nd IEEE RAS & EMBS international conference on biomedical robotics and biomechatronics, Scottsdale, pp 869–874. doi:10.1109/BIOROB.2008.4762902

Nef T, Mihelj M, Riener R (2007) ARMin: a robot for patient-cooperative arm therapy. Med Biol Eng Comput 45(9):887–900. doi:10.1007/s11517-007-0226-6

Perry JC, Rosen J, Burns S (2007) Upper-limb powered exoskeleton design. IEEE/ASME Trans Mechatron 12(4):408–417

Sanchez R, Reinkensmeyer D, Shah P et al (2004) Monitoring functional arm movement for home-based therapy after stroke. In: Annual international conference of the IEEE engineering in medicine and biology, pp 4787–4790. doi:10.1109/IEMBS.2004.1404325

He W, Ge SS, Li Y, Chew E, Ng YS (2015) Neural network control of a rehabilitation robot by state and output feedback. J Intell Robot Syst 80(1):15–31. doi:10.1007/s10846-014-0150-6

He W, Chen Y, Yin Z (2016) Adaptive neural network control of an uncertain robot with full-state constraints. IEEE Trans Cybern 46(3):620–629. doi:10.1109/TCYB.2015.2411285

He W, Dong Y, Sun C (2016) Adaptive neural impedance control of a robotic manipulator with input saturation. IEEE Trans Syst Man Cybern Syst 46(3):334–344. doi:10.1109/TSMC.2015.2429555

Xu G, Song A, Li H (2011) Control system design for an upper-limb rehabilitation robot. Adv Robot 25(1):229–251

Richardson R, Brown M, Bhakta B, Levesley MC (2003) Design and control of a three degree of freedom pneumatic physiotherapy robot. Robotica 21:589–604. doi:10.1017/S0263574703005320

Webster D, Celik O (2014) Systematic review of Kinect applications in elderly care and stroke rehabilitation. J Neuroeng Rehabil 11:108. doi:10.1186/1743-0003-11-108

Du G, Zhang P (2014) Markerless human-robot interface for dual robot manipulators using Kinect sensor. Robot Comput Integr Manuf 30:150–159

Chang YJ, Chen SF, Huang JD (2011) A Kinect-based system for physical rehabilitation: a pilot study for young adults with motor disabilities. Res Dev Disabil 32(6):2566–2570

Hussain A, Roach N, Balasubramanian S, Burdet E (2012) A modular sensor-based system for the rehabilitation and assessment of manipulation. In: Haptics symposium (HAPTICS), 2012 IEEE, pp 247–254. doi:10.1109/HAPTIC.2012.6183798

Su CJ, Chiang CY, Huang JY (2014) Kinect-enabled home-based rehabilitation system using Dynamic Time Warping and fuzzy logic. Appl Soft Comput 22:652–666

Riener R, Lünenburger L, Jezernik S et al (2005) Patient cooperative strategies for robot-aided treadmill training: first experimental results. IEEE Trans Neural Syst Rehabil Eng 13(3):380–394. doi:10.1109/TNSRE.2005.848628

Wolbrecht ET, Chan V, Reinkensmeyer DJ, Bobrow JE (2008) Optimizing compliant, model-based robotic assistance to promote neurorehabilitation. IEEE Trans Neural Syst Rehabil Eng 16(3):286–297. doi:10.1109/TNSRE.2008.918389

Dietz V, Fouad K, Bastiaanse CM (2001) Neuronal coordination of arm and leg movements during human locomotion. Eur J Neurosci 14(11):1906–1914. doi:10.1046/j.0953-816x.2001.01813.x

Ferris DP, Huang HJ, Kao PC (2006) Moving the arms to activate the legs. Exerc Sport Sci Rev 34(3):113–120

Yoon J, Novandy B, Yoon CH, Park KJ (2010) A 6-DOF gait rehabilitation robot with upper and lower limb connections that allows walking velocity updates on various terrains. IEEE/ASME Trans Mechatron 15(2):201–215. doi:10.1109/TMECH.2010.2040834

Ni C, Fu J, Han R et al (2000) Rehabilitation therapy on recovery of the paretic arm functions in stroke patients. Chin J Phys Med Rehabil 22:204–206

Cong G, Pu S (2001) Effects of early rehabilitation on motor function of upper and lower extremities and activities of daily living in patients with hemiplegia after stroke. Chin J Rehabil Med 16(1):27–29

Pan L, Song A, Xu G, Xu B, Xiong P (2013) Hierarchical safety supervisory control strategy for robot-assisted rehabilitation exercise. Robotica 31:757–766. doi:10.1017/S0263574713000052

Song A, Wu J, Qin G, Huang W (2007) A novel self-decoupled four degree-of-freedom wrist force/torque sensor. Measurement 40(9):883–891. doi:10.1016/j.measurement.2006.11.018

Yu T (2014) Kinect application and development: natural human-machine interactive. China Machine Press, Beijing

Pan L, Song A, Xu G, Li H, Xu B (2013) Novel dynamic interpolation strategy for upper-limb rehabilitation robot. J Rehabil Robot 1:19–27

Long Y, Du Z, Wang W (2015) Control and experiment for exoskeleton robot based on Kalman prediction of human motion intent. Robot 37(3):304–309

Cai Z (2000) Robotics. Tsinghua University Press, Beijing

Scaglia G, Rosales A, Quintero L, Mut V, Agarwal R (2010) A linear-interpolation-based controller design for trajectory tracking of mobile robots. Control Eng Pract 18:318–329. doi:10.1016/j.conengprac.2009.11.011

Li THS, Su YT, Lai SW, Hu JJ (2011) Walking motion generation, synthesis, and control for biped robot by using PGRL, LPI, and Fuzzy Logic. IEEE Trans Syst Man Cybern Part B (Cybern) 41(3):736–748. doi:10.1109/TSMCB.2010.2089978

Harada K, Hattori S, Hirukawa H et al (2010) Two-stage time-parametrized gait planning for humanoid robots. IEEE/ASME Trans Mechatron 15(5):694–703. doi:10.1109/TMECH.2009.2032180

Acknowledgements

The authors thank all colleagues in the Robot Sensor and Control Laboratory for their valuable contributions to this work. This work was supported by the National Natural Science Foundation of China (No. 61325018, 61673114); the Natural Science Foundation of Jiangsu Province (No. BK20141284); the Science and Technology Support Program of Jiangsu Province (No. BE2014132); and the National Key Research and Development Plan (No. 2016YFB1001300). The authors would also like to thank the anonymous reviewers for their useful comments.

Cite this article

Bai, J., Song, A., Xu, B. et al. A Novel Human-Robot Cooperative Method for Upper Extremity Rehabilitation. Int J of Soc Robotics 9, 265–275 (2017). https://doi.org/10.1007/s12369-016-0393-4

DOI: https://doi.org/10.1007/s12369-016-0393-4