Abstract

Supplier Selection (SS) is a critical issue because of intense competition in today's market and the need to meet customer requirements with acceptable quality. Moreover, SS depends on various criteria, which makes it a Multi-Criteria Decision-Making problem. Hence, this study proposes a novel framework that evaluates and ranks suppliers by aggregating a Process Control Score (PCS) and a Process Evaluation Score (PES). To calculate the PCS, a new structure and logic for the Fuzzy Cognitive Map based on the Nash Bargaining Game (BG-FCM) is proposed to overcome the FCM's shortcoming in distinguishing the concepts that are most important in the real world. Moreover, to generate solutions with high separability and help decision-makers analyze the system precisely, a modified learning algorithm based on Particle Swarm Optimization (PSO) and an S-shaped transfer function (PSO-STF) is used to train the BG-FCM. To calculate the PES, the experimental mathematical equations of the case under study are applied to the important criteria of quality, delivery time, and shipment price. The proposed framework is validated in the auto parts industry. The results show that BG-FCM can successfully highlight the most important concepts and assign them their original values. Also, compared with other conventional FCM learning algorithms, PSO-STF performs better in generating solutions with high separability. It can be concluded that BG-FCM, with its more advanced intelligence, can analyze complex systems and give decision-makers a clear insight into the system.


1 Introduction

Current supply management requires long-term partnerships with fewer but more reliable suppliers. Therefore, selecting the proper supplier involves more than comparing prices; the choice depends on a range of quantitative and qualitative factors (Ho et al. 2010). Given the large number of suppliers in today's competitive industrial world, choosing the proper supplier is crucial. Current competitive markets require companies to respond quickly and effectively to customers' demands to gain customer satisfaction and improve their market position. In such circumstances, suppliers and Supply Chain Management (SCM) play a very important role, as a wrong decision may increase costs for the manufacturing unit and, consequently, significantly damage the Supply Chain (SC) relationship. For this reason, SCM has drawn attention from both practitioners and academics because of market globalization, severe competition between firms, and the recognized significance of customer satisfaction (Yousefi et al. 2017). To obtain an acceptable profit, which is essential for the organization's survival, the selection of proper suppliers must be resolved as a multi-criteria problem with quantitative and qualitative factors. Moreover, if these goals and management principles are achieved, customer satisfaction will rise and, ultimately, so will the organization's profit. If multiple organizations participate in this process, Supplier Selection (SS) becomes more complex, with different criteria for each supplier group. Finally, both the selection of proper suppliers and the monitoring of the demand requested from each source must be managed (Alinezad et al. 2013).

The Fuzzy Cognitive Map (FCM) is a powerful tool for modeling complex real-world systems (Bakhtavar and Yousefi 2018). Axelrod (1976) introduced cognitive maps in the 1970s to represent social scientific knowledge. A cognitive map is a graphical representation of a system consisting of nodes, which represent concepts, and arcs, which represent the perceived relationships between these concepts (Nikas and Doukas 2016). A decade later, Kosko (1986) introduced the FCM as an extension of the cognitive map. The most important development is that connections are represented by fuzzy values; that is, each connection is described by a number rather than merely a symbol. This feature allows causal relationships of different strengths to be expressed. FCMs are widely used for simulation, modeling, and decision-making in various domains, such as manufacturing processes (Rezaee et al. 2017), medical diagnosis (Salmeron et al. 2017; Bourgani et al. 2014), time series prediction (Papageorgiou and Poczęta 2017), decision support systems (Stylios et al. 2008; Kyriakarakos et al. 2014), risk assessment (Papageorgiou et al. 2015; Dabbagh and Yousefi 2019; Jahangoshai Rezaee et al. 2018), renewable energy management (Jahangoshai Rezaee et al. 2019), environmental science (Anezakis et al. 2016; Singh and Nair 2014), and performance optimization (Azadeh et al. 2017).

In the SS literature, the methods used are categorized into six main groups: methods for prequalification of suppliers, Multi-Criteria Decision Making (MCDM) techniques, mathematical programming models, Artificial Intelligence (AI) models, fuzzy logic approaches, and combined approaches (Pal et al. 2013). Although FCM is an AI method, it has no place in that classification and generally has no notable application to the SS problem. Nonetheless, FCM has powerful capabilities, such as (1) considering causal relationships between concepts, (2) modeling complex systems with limited data, (3) greater intelligence, and (4) less dependence on experts' opinions (Bakhtavar and Yousefi 2018). In contrast, the most important shortcoming of MCDM methods is their inability to determine causal relationships between characteristics. In the real world, however, each characteristic affects, and is affected by, other characteristics. Thus, disregarding this issue leads to uncertainty in the outcomes (Jahangoshai Rezaee and Yousefi 2018). Accordingly, implementing FCM instead of MCDM methods can relieve this shortcoming. FCM concepts represent the key factors and characteristics of the modeled complex system (Zare Ravasan and Mansouri 2016), and these characteristics are based on human knowledge or historical data (Papageorgiou 2013). Although FCM can determine the values of concepts through the interaction of causal relationships, human knowledge may indicate that some concepts are more important and are expected to have greater influence on the system's behavior. Conventional FCMs have an integrated logic in which concepts are not differentiated from one another, and their genuine importance in the real world is disregarded.
The only way to distinguish the importance of concepts in an FCM is through their initial numerical values; however, these values change after the FCM is constructed and reaches a steady state. While the FCM is being constructed, the values of the concepts are updated through causal relationships and may increase or decrease according to the weights of those relationships. The FCM's holistic view of the system as an integrated complex may disregard the real impact of some concepts and lead to an imprecise perception of the problem. Moreover, ignoring the real value of concepts may have negative long-term consequences for the system. Hence, a new logic should be incorporated into FCMs to account for the importance of concepts in the learning process and to allocate greater significance to the most important ones. In this study, a Nash Bargaining Game (BG) is established between the critical concepts of the FCM to help it distinguish the most important concepts of the system. In the learning process, these concepts cooperate to raise their payoffs and, finally, achieve their original values.

This study aims to implement the proposed BG-FCM structure in an SS framework to gain better insight into the problem. To analyze the proposed approach, a case study in the auto parts industry has been selected for evaluating and ranking suppliers. For this purpose, a specific framework is presented that ranks suppliers according to their achieved scores. In the first step, the Process Control Score (PCS) of each supplier is determined using the proposed BG-FCM. First, after the suppliers are identified, the main criteria for evaluating them are determined based on the Control Team's (CT) opinion. Then, experts use fuzzy numbers to score the performance of the suppliers on these criteria. Every criterion of the SS problem is considered an FCM concept, and the objective concepts for establishing the Nash BG are identified. In the learning process, the objective concepts try to raise their values through the Nash BG, which emphasizes their significance in the evaluation process. In this step, experts construct the FCM, and a learning algorithm extracts the optimal weights of the causal relationships between concepts. After the steady state is reached, the final values of the concepts, which show the importance of the corresponding criteria, are obtained. To train the FCM, a new modified learning algorithm combining Particle Swarm Optimization (PSO) and an S-shaped transfer function (PSO-STF) is used. This learning approach is highly capable of generating solutions with high separability, which helps decision-makers analyze the system reliably. To evaluate its performance, the proposed approach is compared with several conventional FCM learning algorithms. Then, in the second step, the Performance Evaluation Score (PES) is obtained from the quantitative criteria and the company's experimental mathematical equations.
In the final step, the suppliers are ranked by aggregating the obtained scores from the PCS and the PES.

The rest of this paper is organized as follows: Sect. 2 reviews the literature on the SS problem, the application of FCM in the SC field, and FCM learning algorithms. Sect. 3 explains the methods used in this study. Sect. 4 presents the new proposed approach. In Sect. 5, the proposed approach is applied to the problem in the auto parts industry, and the results are analyzed. Finally, Sect. 6 presents conclusions and suggestions for future studies.

2 Literature review

The literature review in this section is divided into three sub-sections. Sect. 2.1 presents research on the SS problem and the different MCDM methods used in this area. Sect. 2.2 investigates applications of FCM in the SC. Sect. 2.3 presents studies on the development of FCM learning algorithms.

2.1 SS problem

Because suppliers are part of any good, well-managed SC, they play a pivotal role in it. SS is important because it governs the supply of resources while simultaneously influencing activities such as inventory management, production planning and control, financial requirements, and product quality (Choi and Hartley 1996). The importance of these observations is further enhanced by recent advances in SCM, as SC membership tends to remain stable over long-term relationships (Choi and Hartley 1996). Araz and Ozkarahan (2007) addressed SS and evaluation for strategic resources with a new multi-criteria sorting approach based on the Preference Ranking Organization Method for Enrichment of Evaluations (PROMETHEE), in which suppliers are categorized according to their design capabilities and overall performance. Banaeian et al. (2018) contributed to the green SS area by comparing the application of the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS), VIKOR, and gray relational analysis in a fuzzy environment. Liu et al. (2018) used the Analytic Network Process (ANP) and entropy weights to obtain the subjective and objective weights of criteria and then, using the Decision-Making Trial and Evaluation Laboratory (DEMATEL) and Game Theory (GT), determined the overall weights from the ANP and entropy weights. The Analytic Hierarchy Process (AHP) is a widely used and extensively studied approach to SS. Lu et al. (2007) presented a multi-objective decision-making process for green SCM, based on fuzzy AHP, to help SC managers measure and evaluate suppliers' performance. Özgen et al. (2008) integrated the AHP method with a multi-objective probabilistic linear programming method to account for all the tangible, intangible, quantitative, and qualitative factors used to evaluate and select suppliers and to determine optimal order quantities. Alinezad et al. (2013) used the Quality Function Deployment (QFD) method, together with the fuzzy AHP method, to rank and select pharmaceutical suppliers. Vivas et al. (2020) proposed an integrated approach combining analytical and mathematical models, using the AHP and PROMETHEE methods, to assess SC sustainability in the oil and gas industry.

Data Envelopment Analysis (DEA) is another widely used approach in SS. Chen (2011) evaluated suppliers in Taiwan's textile industry by analyzing competitive organizational strategy with SWOT analysis and assessing potential suppliers with the DEA and TOPSIS methods. Sabouhi et al. (2018) designed an SC in the pharmaceutical industry using a fuzzy DEA method and used this hybrid approach to evaluate the efficiency and flexibility of the SC design. Ramezankhani et al. (2018) presented a new dynamic network DEA framework as a comprehensive performance management system in the automotive industry, combined with QFD and DEMATEL to select the best stability and flexibility factors for use in the DEA model. Li et al. (2019) presented a fuzzy epsilon-based DEA model to evaluate SC performance. To achieve an organized process, Yousefi et al. (2019) presented a coordinated multi-buyer model and a DEA model for selecting efficient suppliers, allocating orders, and pricing in an SC while considering coordination among its members. Lamba et al. (2019) proposed a mixed-integer nonlinear programming model for SS, along with determining the large integer in a dynamic environment.

2.2 FCM application in the SC

Kim et al. (2008) extracted cause-and-effect knowledge from state data and developed an FCM for Radio Frequency Identification technology in the SC. Chen (2011) designed an autonomous agent-based tracing system, based on an Internet of Things architecture, using FCMs and a fuzzy rule method for the product usage life cycle. Irani et al. (2014) used FCM to contribute to the perspective of Information System (IS) investment evaluation based on a fuzzy expert system, emphasizing the expansion of knowledge and learning to evaluate the often ambiguous valuation of IS investments. Also, Irani et al. (2017), by identifying key factors from the literature, presented a model for implementing green SC collaboration using a future-based perspective to examine, with the help of FCM, the role of knowledge management in facilitating green SC collaboration. Bevilacqua et al. (2018) proposed an FCM-based method for analyzing the domino effect among concepts affecting SC resilience, allowing SC players to evaluate the indirect and total causal effects among these concepts. Shojaei and Haeri (2019) proposed an SC risk management approach for construction projects that combines grounded theory, FCM, and gray relational analysis to bridge the gap in effectively managing risks along the project's SC and thereby avoid increases in time and cost. Alizadeh and Yousefi (2019) combined the Taguchi method and FCM in a unified framework for the SS problem that considers the loss associated with deviations in the criteria, the causal relationships between criteria, and decision-makers' preferences.

2.3 FCM learning algorithms

FCM learning algorithms have focused on learning the connection matrix (E-matrix), i.e., the causal relationships and their weights (Papageorgiou 2013). Depending on the type of available knowledge, learning techniques can be divided into three groups: Hebbian-based, population-based, and hybrid algorithms, which integrate the core aspects of Hebbian-based and population-based learning. Dickerson and Kosko (1994) were the first to propose a simple differential learning method based on Hebbian theory. In Differential Hebbian Learning (DHL), the weight values are repeatedly updated until the desired structure is achieved. Generally, a weight in the connection matrix changes only when the value of the corresponding concept changes. Papageorgiou introduced two unsupervised Hebbian-based learning algorithms, Nonlinear Hebbian Learning (NHL) (Papageorgiou 2013) and Active Hebbian Learning (AHL) (Papageorgiou et al. 2004), which are able to adjust the weights of an FCM. Population-based learning algorithms usually seek models that emulate the input data. They are optimization techniques and are therefore algorithmically strict (Papageorgiou 2013). Several population-based algorithms, such as evolutionary strategies (Koulouriotis et al. 2001), swarm intelligence (Papageorgiou et al. 2005), tabu search (Alizadeh et al. 2007), game-based learning (Luo et al. 2009), chaotic simulated annealing (Alizadeh and Ghazanfari 2009), genetic algorithms (Froelich and Juszczuk 2009), the Real Coded Genetic Algorithm (RCGA) (Stach 2010), and ant colony optimization (Ding and Li 2011), have been suggested for learning FCMs. In hybrid learning of an FCM, the objective is to modify or update the weight matrix based on initial expert knowledge and historical data in a two-step process. The algorithms presented in the literature target different application requirements and try to overcome some limitations of FCMs (Papageorgiou 2013).
Papageorgiou and Groumpos (2005a) proposed a hybrid learning scheme consisting of a Hebbian algorithm and the Differential Evolution (DE) algorithm. Later, Ren (2007) proposed an FCM learning approach combining NHL and the Extended Great Deluge Algorithm (EGDA). This hybrid method combines the performance of NHL with the global optimization capability of EGDA. Another hybrid scheme, combining the RCGA and NHL algorithms, was proposed by Zhu and Zhang (2008) and discussed in the context of a partner selection problem.

3 Methodology

This section presents the methods used in this study. Sect. 3.1 describes the concept of FCM and its mechanism, and Sect. 3.2 introduces the Nash BG.

3.1 FCM

FCMs are a structured AI technique that incorporates ideas from Artificial Neural Networks (ANNs) and fuzzy logic. FCMs model a system as a set of concepts and causal relationships (Kosko 1986). Nodes represent concepts, and directed edges represent the causal relationships between them. Each edge has a weight that determines the type of causal relationship between the two nodes; the sign of the weight determines whether the causal relationship is positive or negative. Concepts reflect the characteristics, qualities, and perceptions of the system, and the relationship between two concepts indicates the causal influence that one has on the other. These weighted connections indicate the direction and degree with which one concept influences another (Papageorgiou and Groumpos 2005b) (see Fig. 1). The values of the concepts change over iterations (van Vliet et al. 2010); the qualitative weights of the edges are normalized to the range [− 1.0, + 1.0], and concepts can be squashed into the interval [0.0, 1.0] or [− 1.0, + 1.0], depending on the threshold function (Nikas et al. 2019).

If the weight sign indicates a positive causality (\(W_{ij} > 0\)) between \(C_{i}\) and \(C_{j}\), then increasing the value of \(C_{i}\) increases the value of \(C_{j}\), and decreasing the value of \(C_{i}\) decreases the value of \(C_{j}\). When there is a negative causality (\(W_{ij} < 0\)) between two concepts, increasing the value of the first concept (\(C_{i}\)) reduces the value of the second concept (\(C_{j}\)), and decreasing \(C_{i}\) increases \(C_{j}\). When there is no relationship between two concepts, \(W_{ij} = 0\). The magnitude of \(W_{ij}\) indicates the strength of the effect of \(C_{i}\) on \(C_{j}\) (Papageorgiou et al. 2004).

Experts typically develop FCMs manually as mental models, based on their knowledge of the related area. First, they identify the key aspects of the domain, namely the concepts; then, each expert determines the causal relationships between these concepts and their strengths (Papageorgiou 2013). For the FCM reasoning process, a simple mathematical formula is usually used, as follows:

\[ A_{i}^{(k+1)} = f\left( A_{i}^{(k)} + \sum_{j=1,\, j \ne i}^{N} A_{j}^{(k)} W_{ji} \right) \qquad (1) \]

where \(A_{i}^{(k)}\) is the value of concept \(C_{i}\) at iteration \(k\) and \(N\) is the number of concepts. Depending on whether autocorrelation (self-influence) is modeled, the term \(A_{i}^{(k)}\) inside \(f\) can be eliminated; update functions of this form, which assume no autocorrelation, have also been used in the FCM literature. The two functions are equivalent, depending on whether the weight matrix includes autocorrelation: if ones are placed on the main diagonal of the weight matrix, i.e., \(W_{ii} = 1\), and the summation runs over all concepts, autocorrelation is already included in the summation, so the separate \(A_{i}^{(k)}\) term should be eliminated (Nikas and Doukas 2016). \(f(\cdot)\) is a threshold function, and two kinds of threshold functions are used in the FCM framework. The first is the unipolar sigmoid function, where \(m > 0\) determines the steepness of the continuous function \(f\):

\[ f(x) = \frac{1}{1 + e^{-mx}} \qquad (2) \]

where \(m\) is a positive real number and \(x\) is the value of \(A_{i}^{(k)}\) at the equilibrium point. When concepts can take negative values, i.e., their values belong to the interval [− 1.0, 1.0], the hyperbolic tangent function is used (Groumpos 2010):

\[ f(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} \qquad (3) \]

The threshold function squashes the unbounded weighted sum into a specific range; this impedes quantitative analysis but allows qualitative comparisons between concepts. FCM calculations using Eq. (1) continue until one of the following conditions is reached (Papageorgiou et al. 2006):

A. Reaching a steady state, i.e., \(A_{new}\) is equal to, or only slightly different from, \(A_{old}\).

B. Reaching a limit cycle, in which the concept values repeat in a loop with a specific period.

C. Exhibiting chaotic behavior, in which each concept takes different numerical values in a non-random way.
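As a rough illustration, the reasoning process of Eq. (1), the two threshold functions, and the stopping conditions above can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the tolerance `tol`, the iteration cap `max_iter`, and the example weights are hypothetical; the update keeps the explicit \(A_{i}^{(k)}\) term and sums over \(j \ne i\); and `W[j][i]` stores \(W_{ji}\), the effect of \(C_{j}\) on \(C_{i}\).

```python
import math
from typing import Callable, List, Tuple

Matrix = List[List[float]]


def sigmoid(x: float, m: float = 1.0) -> float:
    """Unipolar sigmoid: squashes x into (0, 1); m > 0 sets the steepness."""
    return 1.0 / (1.0 + math.exp(-m * x))


def hyperbolic(x: float, m: float = 1.0) -> float:
    """Hyperbolic tangent: squashes x into (-1, 1) for concepts that may be negative."""
    return math.tanh(m * x)


def fcm_step(A: List[float], W: Matrix,
             f: Callable[[float], float] = sigmoid) -> List[float]:
    """One iteration: A_i <- f(A_i + sum over j != i of A_j * W[j][i])."""
    n = len(A)
    return [f(A[i] + sum(A[j] * W[j][i] for j in range(n) if j != i))
            for i in range(n)]


def run_fcm(A0: List[float], W: Matrix,
            f: Callable[[float], float] = sigmoid,
            tol: float = 1e-5, max_iter: int = 100) -> Tuple[List[float], int, bool]:
    """Iterate until a steady state (condition A) or until max_iter is reached.

    Returns (state, iterations, converged). Failing to converge within max_iter
    may point to a limit cycle (B) or chaotic behavior (C); this sketch does not
    tell the two apart.
    """
    A = list(A0)
    for k in range(1, max_iter + 1):
        A_new = fcm_step(A, W, f)
        if max(abs(a - b) for a, b in zip(A_new, A)) < tol:
            return A_new, k, True
        A = A_new
    return A, max_iter, False


if __name__ == "__main__":
    # Hypothetical 3-concept map: C1 -> C2 (+0.6), C1 -> C3 (-0.4),
    # C2 -> C3 (+0.5), C3 -> C1 (+0.3).
    W = [[0.0, 0.6, -0.4],
         [0.0, 0.0, 0.5],
         [0.3, 0.0, 0.0]]
    state, iters, ok = run_fcm([0.5, 0.4, 0.7], W)
    print([round(a, 3) for a in state], iters, ok)
```

Running the example prints the steady-state concept values, the number of iterations, and `True`; passing `f=hyperbolic` switches the sketch to the bipolar range [− 1.0, 1.0].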

3.2 Nash BG

GT is the theory of strategic interaction; as a mathematical tool, it aims to formalize strategic interactions between players. A game is defined by a set of players, strategies, and payoffs. GT assumes that players rationally choose a strategy to maximize their payoff while being aware of the game's knowledge structure, namely, that the other players also try to maximize their payoffs (Mulazzani et al. 2017). If a Nash equilibrium exists, i.e., a point at which no player has an incentive to deviate from their strategy, the result is considered rational behavior. Games can be categorized in several ways. First, it is important to distinguish between cooperative and non-cooperative games: in cooperative games, players pursue a common goal, whereas in non-cooperative games, players behave in apparent opposition to one another. Second, some games are played simultaneously (in this case, the information is said to be imperfect, because a player does not know the strategies adopted by the other competitors), and others are played sequentially (with perfect information). Furthermore, games can have incomplete information, in which some players do not know one or more features of the other players. In this case, players know only the probability distribution of these unknown features (Mulazzani et al. 2017).

A two-person BG involves two players who have the opportunity to cooperate in more than one way (a cooperative game) (see Fig. 2). In other words, no action taken by one player without the consent of the other can affect the other's payoff (Nash 1950). Players may try to resolve their conflict and voluntarily commit themselves to a course of action that benefits both. If more than one course of action is preferable to disagreement for both players, and the players conflict over which of these to adopt, a negotiation process is needed to resolve the conflict. This negotiation process can be modeled using GT tools (Osborne and Rubinstein 1994).

BGs can be described as a tool that helps managers understand bargaining problems in different settings. In a BG, two or more players compete over the distribution of benefits (Jahangoshai Rezaee et al. 2012a), and its purpose, as a cooperative game, is to divide the benefits between the players based on their competition (Jahangoshai Rezaee et al. 2012b). If the two players in a two-person BG disagree on how to distribute the benefits, each receives a “disagreement value”, called its breakdown point (breakdown payoff). Breakdown payoffs are the starting point for bargaining and indicate the pair of payoffs obtained when a player decides not to bargain with the other player (Jahangoshai Rezaee et al. 2012a). The Nash model requires the feasible set to be compact and convex and to contain at least one payoff vector in which each individual's payoff is greater than their breakdown payoff (Jahangoshai Rezaee et al. 2012b). If the players fail to bargain and reach an agreement, they can always obtain the breakdown point D, which can be regarded as the Nash equilibrium point of the non-cooperative version of the game. The dotted area \(DBB^{\prime}\) in Fig. 3 determines the feasible set. The bargaining set is the set of all outcomes that (a) are Pareto optimal and (b) are at least as good as the breakdown point for both players. The Nash solution provides no details on the bargaining process or the agreement between the players; it is obtained by maximizing the Nash Product Equation (NPE) (Lambertini 2011). If \(u\) is the utility function of player 1 and \(v\) is the utility function of player 2, and \(u_{0}\) and \(v_{0}\) are the breakdown points of players 1 and 2, respectively, the NPE is (Jahangoshai Rezaee and Shokry 2017):

\[ \max_{u,\,v} \; NPE = (u - u_{0})(v - v_{0}) \quad \text{s.t. } (u, v) \text{ in the feasible set},\; u \ge u_{0},\; v \ge v_{0} \qquad (4) \]

For fixed values of the NPE, varying \(u\) and \(v\) traces curves that are convex to the origin of the axes (see Fig. 4). The maximization of Eq. (4) is subject to a constraint: players must select a point in the feasible set, or, equivalently, maximize along its frontier. Therefore, Eq. (4) is maximized at the tangent point between the Pareto frontier of the feasible set and the highest NPE curve that is compatible with the feasible set (Lambertini 2011).
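To make the tangency argument concrete, the Python sketch below searches a hypothetical linear Pareto frontier \(u + v = T\) for the point that maximizes the NPE of Eq. (4). The frontier shape, the names `nash_product` and `nash_solution_linear`, and the grid resolution are illustrative assumptions; real feasible sets need not be linear.

```python
from typing import Tuple


def nash_product(u: float, v: float, u0: float, v0: float) -> float:
    """Nash product (u - u0) * (v - v0) for breakdown points u0 and v0."""
    return (u - u0) * (v - v0)


def nash_solution_linear(total: float, u0: float, v0: float,
                         steps: int = 10_000) -> Tuple[float, float, float]:
    """Grid search along the hypothetical frontier u + v = total.

    Only individually rational points (u >= u0 and v >= v0) are examined,
    so total must be at least u0 + v0.
    """
    surplus = total - u0 - v0
    if surplus < 0:
        raise ValueError("frontier lies below the breakdown point")
    best = (u0, total - u0, nash_product(u0, total - u0, u0, v0))
    for s in range(steps + 1):
        u = u0 + surplus * s / steps
        v = total - u
        value = nash_product(u, v, u0, v0)
        if value > best[2]:
            best = (u, v, value)
    return best


if __name__ == "__main__":
    u, v, npe = nash_solution_linear(total=10.0, u0=2.0, v0=4.0)
    print(u, v, npe)  # 4.0 6.0 4.0
```

On a frontier with slope -1, the maximizer splits the surplus equally: each player receives its breakdown payoff plus half of the remaining surplus, here \(u = 4\) and \(v = 6\).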

4 Proposed approach

This section presents the proposed approach. Sect. 4.1 introduces BG-FCM, which combines FCM and the Nash BG. Sect. 4.2 presents the learning algorithm implemented in this study. Finally, Sect. 4.3 provides the proposed framework for evaluating suppliers.

4.1 BG-FCM

The main objective of this sub-section is to extend FCM with a Nash BG so that it can better distinguish the most important components of the modeled system. The main motivation is that, based on human knowledge, some components of real-world systems have a more vital impact on the system and its behavior. By combining the main aspects of fuzzy logic, ANNs, expert systems, and semantic networks, FCMs have attracted remarkable research interest and are widely used to analyze complex causal systems (Papageorgiou 2013). FCM can represent components as concepts, and the causal relationships between concepts determine the final values of the concepts. However, FCM has an integrated model structure and does not precisely distinguish between concepts based on their importance in the real world. The only way an FCM can emphasize important concepts is through their initial numerical values. After the FCM is constructed by a learning algorithm, the final values of the concepts are determined by the weights of the causal relationships and the number of edges each concept receives or sends. Depending on the causal relationships, their weights, and their signs, the final values of the concepts may differ from what human knowledge expects. To overcome this problem, a Nash BG is established between the critical concepts so that they achieve their original values, and the NPE is used as the fitness function of the FCM learning algorithm. For this purpose, experts determine the most important components of the system to take part in the Nash BG. These concepts are the players of the Nash BG, and during the learning process of the FCM they try to raise their payoffs to achieve their original values. The utility function of each selected concept, in its role as a Nash BG player, is calculated according to Eq. (5).

In this equation, \(A_{j} (k)\) and \(W_{ij}\) have the same meaning as in the FCM. At first sight, it may seem that the number of causal relationships and the numerical values of the concepts play the main role in calculating the selected concepts' values. However, according to the NPE and the logic of the Nash BG, the NPE reaches its maximum when all players achieve their desired payoffs. Every concept has its own breakdown payoff, i.e., the value it obtains if it does not bargain with the other players. In other words, each player achieves its desired value by cooperating with the other players relative to its breakdown payoff, which leads to maximizing the NPE. Figure 5 gives an overview of a BG-FCM in which three concepts take part in a Nash BG. For instance, the concept BG1 receives three causal relationships, from Concept i, Concept j, and BG3; their concept values and causal relationship weights constitute BG1's utility function. Concepts BG1, BG2, and BG3 take part in a Nash BG with each other, and they maximize the NPE by cooperating and raising their payoffs.
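Because Eq. (5) is not reproduced here, the Python sketch below assumes one plausible reading that is consistent with the Fig. 5 description: a player concept's utility is the sum of its incoming influences \(A_{j} W_{ji}\), and the fitness is the generalized (n-player) Nash product of the players' gains over their breakdown payoffs. The function names, the `-inf` convention for candidates outside the bargaining set, and all numbers are hypothetical, not taken from the study.

```python
import math
from typing import Dict, List

Matrix = List[List[float]]


def bg_utility(i: int, A: List[float], W: Matrix) -> float:
    """Assumed utility of player concept i: sum of A[j] * W[j][i] over j != i."""
    return sum(A[j] * W[j][i] for j in range(len(A)) if j != i)


def npe_fitness(players: List[int], A: List[float], W: Matrix,
                breakdown: Dict[int, float]) -> float:
    """Generalized Nash product over the BG players.

    Returns -inf when any player does not exceed its breakdown payoff, i.e.
    the candidate lies outside the bargaining set (a hypothetical convention).
    """
    gains = [bg_utility(i, A, W) - breakdown[i] for i in players]
    if any(g <= 0.0 for g in gains):
        return float("-inf")
    return math.prod(gains)


if __name__ == "__main__":
    # Index 0 = Concept i, 1 = Concept j, 2 = BG1, 3 = BG2, 4 = BG3.
    # As in Fig. 5, BG1 is influenced by Concept i, Concept j, and BG3.
    n = 5
    W = [[0.0] * n for _ in range(n)]
    W[0][2], W[1][2], W[4][2] = 0.4, 0.2, 0.6  # inputs to BG1
    W[2][3] = 0.8                              # BG1 -> BG2
    W[3][4], W[0][4] = 0.5, 0.3                # inputs to BG3
    A = [0.5] * n
    print(npe_fitness([2, 3, 4], A, W, {2: 0.1, 3: 0.1, 4: 0.2}))
```

For this toy map, the players' gains are 0.5, 0.3, and 0.2, so the printed fitness is approximately 0.03; a learning algorithm would search for weight matrices that increase this value.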

4.2 Learning algorithm

In FCM, accurate estimation of the map's weights is essential for improving its accuracy and structure and for reducing dependence on expert opinions. In recent years, various learning algorithms have been implemented to overcome this deficiency and to enhance the accuracy of the obtained weights and the convergence of the map (Rezaee et al. 2017). Abbaspour Onari et al. (2020) proposed a modified fuzzy learning algorithm that combines the PSO algorithm with an S-shaped transfer function (PSO-STF). In this algorithm, the S-shaped transfer function is used to relieve the PSO algorithm's shortcoming, namely the lack of separability in the generated solutions. The algorithm aims to generate solutions with high separability to give decision-makers a precise insight into the problem. The PSO algorithm generates new solutions based on two main equations:

\[ v^{t+1} = w\, v^{t} + c_{1}\, \mathrm{rand}()\, (pbest - x^{t}) + c_{2}\, \mathrm{rand}()\, (gbest - x^{t}) \qquad (6) \]

\[ x^{t+1} = x^{t} + v^{t+1} \qquad (7) \]

\(c_{1}\) and \(c_{2}\) in Eq. (6) are acceleration constants that weight the stochastic acceleration terms pulling each particle toward the pbest (personal best) and gbest (global best) positions. rand() is a random variable drawn from a uniform distribution between 0 and 1, \(w\) is the inertia weight, \(x\) is the position vector, and \(v\) is the velocity vector (Kennedy and Eberhart 1995).
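Eqs. (6) and (7) can be sketched as a single particle update in Python. This is an illustrative sketch, not the PSO-STF code of Abbaspour Onari et al. (2020): the default values of `w`, `c1`, and `c2` are arbitrary illustrative choices, `rand()` is drawn independently for each dimension, and the toy objective `sphere` (minimized here) merely stands in for the NPE fitness that the study maximizes.

```python
import random
from typing import List, Optional, Tuple

Vector = List[float]


def pso_update(x: Vector, v: Vector, pbest: Vector, gbest: Vector,
               w: float = 0.7, c1: float = 1.5, c2: float = 1.5,
               rng: Optional[random.Random] = None) -> Tuple[Vector, Vector]:
    """Velocity update (Eq. 6) followed by position update (Eq. 7)."""
    if rng is None:
        rng = random.Random()
    new_v = [w * vi
             + c1 * rng.random() * (pb - xi)   # stochastic pull toward pbest
             + c2 * rng.random() * (gb - xi)   # stochastic pull toward gbest
             for xi, vi, pb, gb in zip(x, v, pbest, gbest)]
    new_x = [xi + vi for xi, vi in zip(x, new_v)]
    return new_x, new_v


def sphere(p: Vector) -> float:
    """Toy objective to minimize (illustration only)."""
    return sum(c * c for c in p)


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(10)]
    vs = [[0.0, 0.0] for _ in xs]
    pbests = [list(p) for p in xs]
    gbest = min(pbests, key=sphere)
    for _ in range(50):
        for k in range(len(xs)):
            xs[k], vs[k] = pso_update(xs[k], vs[k], pbests[k], gbest, rng=rng)
            if sphere(xs[k]) < sphere(pbests[k]):
                pbests[k] = list(xs[k])
        gbest = min(pbests, key=sphere)
    print(gbest, sphere(gbest))
```

Passing a seeded `random.Random` makes runs reproducible; in an FCM setting, each position vector would encode the candidate weights of the causal relationships.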

The S-shaped transfer function is used as the transfer function of the PSO algorithm. Its aim is to create separability between the generated solutions and make the concepts distinguishable. The S-shaped curve (see Fig. 6) depends on two parameters, a and b, which set the lower and upper bounds of the rising part of the curve (see Eq. 8) (Abbaspour Onari et al. 2020).
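As a minimal sketch, assuming Eq. (8) takes the classic form of Zadeh’s S-function (the same shape MATLAB’s `smf` implements), with the curve at 0 up to `a`, at 1 from `b` on, and a smooth quadratic rise in between, the curve can be written as follows. The function name `s_shaped` is hypothetical, and the exact form used by Abbaspour Onari et al. (2020) may differ.

```python
import numpy as np

def s_shaped(x, a, b):
    """Zadeh-type S-function: 0 for x <= a, 1 for x >= b, quadratic rise in between (a < b)."""
    if a >= b:
        raise ValueError("the S-shaped curve needs a < b")
    x = np.asarray(x, dtype=float)
    t = (x - a) / (b - a)  # position inside [a, b], rescaled to [0, 1]
    return np.select(
        [x <= a, x <= (a + b) / 2.0, x < b],
        [0.0, 2.0 * t**2, 1.0 - 2.0 * (1.0 - t) ** 2],
        default=1.0,
    )

print(s_shaped([0.0, 0.25, 0.5, 0.75, 1.0], a=0.0, b=1.0))
```

Moving `a` and `b` apart flattens the rise; moving them together sharpens it toward a step, which is how the two parameters control how strongly nearby inputs are pushed apart.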

After PSO generates the initial population, the vector of FCM concept values and the generated populations (weight matrices) are passed through the S-shaped transfer function. The random populations and the concept-value vector are then evaluated by the NPE, which serves as the fitness function. Ultimately, the best solutions are those that maximize the Nash BG fitness function, as each player raises its payoff in cooperation with the other players: by cooperating, the objective concepts try to increase their payoffs, which in turn increases the Nash BG value. The pseudo-code of the proposed approach is shown below (Fig. 7).

The pseudo-code of the PSO-STF for BG-FCM (Abbaspour Onari et al. 2020)

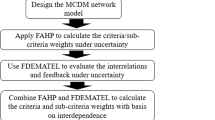
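The outer loop can be sketched in Python under stated assumptions. The FCM is iterated with the rule \(A(k+1) = f(A(k) + A(k)W)\) and a logistic \(f\) (an assumption, since the FCM equations are not part of this section), the NPE is taken as the standard Nash product over the objective concepts, the expert weight ranges act as box constraints, and the PSO coefficients default to the constriction values used in Sect. 5.2. The S-shaped transfer step of PSO-STF is deliberately left out, because its exact position in the loop is specified only in Fig. 7. All names (`run_fcm`, `nash_fitness`, `train_bg_fcm`) and the 3-concept map are hypothetical.

```python
import numpy as np

def sigmoid(x, lam=1.0):
    return 1.0 / (1.0 + np.exp(-lam * x))

def run_fcm(W, a0, max_iter=100, tol=1e-5):
    """Assumed FCM rule A(k+1) = f(A(k) + A(k) W), iterated until the state stops changing."""
    a = np.asarray(a0, dtype=float)
    for _ in range(max_iter):
        a_next = sigmoid(a + a @ W)
        if np.max(np.abs(a_next - a)) < tol:
            return a_next
        a = a_next
    return a

def nash_fitness(a, objective_idx, breakdown):
    """Nash product over the objective concepts (0.0 if any is at or below its breakdown)."""
    gains = a[objective_idx] - breakdown
    return float(np.prod(gains)) if np.all(gains > 0) else 0.0

def train_bg_fcm(a0, lower, upper, objective_idx, breakdown, n_particles=50,
                 n_iter=400, w=0.7298, c1=1.4962, c2=1.4962, seed=0):
    """PSO over weight matrices, with each entry boxed into its expert range [lower, upper]."""
    rng = np.random.default_rng(seed)
    shape, lo, hi = lower.shape, lower.ravel(), upper.ravel()

    def fitness(p):
        return nash_fitness(run_fcm(p.reshape(shape), a0), objective_idx, breakdown)

    x = rng.uniform(lo, hi, size=(n_particles, lo.size))
    v = np.zeros_like(x)
    pbest, pbest_f = x.copy(), np.array([fitness(p) for p in x])
    gbest = pbest[np.argmax(pbest_f)].copy()
    for _ in range(n_iter):
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + v, lo, hi)  # keep every weight inside its expert range
        f = np.array([fitness(p) for p in x])
        improved = f > pbest_f
        pbest[improved], pbest_f[improved] = x[improved], f[improved]
        gbest = pbest[np.argmax(pbest_f)].copy()
    best_W = gbest.reshape(shape)
    return best_W, run_fcm(best_W, a0), float(pbest_f.max())

# Hypothetical 3-concept map: concept 0 feeds the two players (concepts 1 and 2).
lower, upper = np.zeros((3, 3)), np.zeros((3, 3))
lower[0, 1], upper[0, 1] = 0.3, 0.7
lower[0, 2], upper[0, 2] = 0.2, 0.6
lower[2, 1], upper[2, 1] = 0.1, 0.5
best_W, steady_state, best_npe = train_bg_fcm(
    np.array([0.6, 0.5, 0.4]), lower, upper, objective_idx=[1, 2],
    breakdown=np.array([0.2, 0.18]), n_particles=10, n_iter=20)
```

Calling `train_bg_fcm` repeatedly with different `seed` values and keeping the run with the highest `best_npe` mirrors the 50 independent executions described in Sect. 5.2. Pairs of concepts without a causal link simply get `lower = upper = 0`, so PSO never moves them.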

4.3 Integrated framework for SS

The main purpose of the current study is to apply the novel BG-FCM structure within a framework for the SS problem. The goal is to evaluate the performance of suppliers and to select proper suppliers of materials, parts, production tools, packaging materials, and other necessities that affect the quality of the final product, with a focus on supplier evaluation criteria in the auto parts industry. Broadly, the supplier evaluation process in the problem under review is carried out in two steps:

Step 1 Strategic identification: New suppliers are initially identified and selected through participation in exhibitions, catalog information, and recommendations from other buyers, producers, and customers. The business manager then contacts the companies under investigation and informs them of the organization’s business context and requirements. These companies should be aware of the range of the company’s quality requirements and needs. If positive feedback is received and the suppliers are interested, they are given a “suppliers’ profile form” to complete with up-to-date and accurate information.

Step 2 Evaluation and selection: At this step, based on the technical manager’s opinion, the supplier companies and their facilities are inspected and their capability is evaluated. The accuracy of the information provided in the suppliers’ profile form is then verified. Based on the items in the “suppliers’ evaluation form”, the authorized suppliers are announced so that contracts can be concluded. Suppliers are evaluated by their final score, which is based on two scores: PCS and PES. 70% of each supplier’s final score comes from the pre-shipment PCS (based on the annual checklist), and 30% comes from the PES after the shipment is received. The methods used to calculate PCS and PES are presented as follows:

4.3.1 Calculating suppliers’ PCS (based on the BG-FCM)

Suppliers’ process control includes criteria for quality management systems, modern quality systems, human resources, resources and facilities, technical documentation, and process control. Based on the CT’s opinion, a list of qualitative criteria that are important in evaluating suppliers is compiled, and an initial fuzzy score is assigned to every qualitative criterion based on the supplier’s performance. A fuzzy set is a class of objects with a continuum of grades of membership. Such a set is characterized by a membership (characteristic) function, which assigns to each object a grade of membership ranging between zero and one (Zadeh 1965). The following expression represents the fuzzy set “A”:

where \(\mu_{A} (x)\) is the membership function of the fuzzy number, which assigns to \(x\) a grade of membership in [0, 1]. In this study, triangular fuzzy numbers are used to score the suppliers’ evaluation criteria. The membership function of a triangular fuzzy number can be expressed as follows:
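For reference, the standard membership function of a triangular fuzzy number, written with the triple \((l,m,u)\) defined in the next paragraph (assuming \(l < m < u\)), is:

\[
\mu_{A}(x) =
\begin{cases}
\dfrac{x - l}{m - l}, & l \le x \le m,\\[4pt]
\dfrac{u - x}{u - m}, & m \le x \le u,\\[4pt]
0, & \text{otherwise.}
\end{cases}
\]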

Let \(A = (l,m,u)\) denote a triangular fuzzy number; it is fully characterized by the triple \((l,m,u)\). The parameter \(m\) is the value at which \(\mu_{A} (x)\) reaches its maximum grade of 1, while \(l\) and \(u\) are the lower and upper bounds (Aboutorab et al. 2018). Table 1 gives the rules used in this study to transform linguistic variables into triangular fuzzy membership functions. The CT assigns to every criterion a linguistic variable that describes the supplier’s performance in the investigated domain.

The continuous membership functions of the triangular fuzzy numbers used are shown in Fig. 8. For ease of calculation, the center of gravity (CoG) method (Chandramohan et al. 2006) is used to aggregate the scores allocated by the CT and to convert fuzzy numbers into crisp numbers.
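A minimal Python sketch of this conversion, under two assumptions: the CT members’ triangular numbers are first averaged component-wise, and the CoG of a triangular number \((l,m,u)\) is taken in its closed form \((l+m+u)/3\). The five-term scale below is hypothetical (the study’s actual values are in Table 1 and “Appendix 1”), as are the names `SCALE`, `aggregate`, and `cog`.

```python
import numpy as np

# Hypothetical linguistic scale; the study's actual triangular numbers are in Table 1 / Appendix 1.
SCALE = {
    "very bad": (0.0, 0.0, 0.25),
    "bad": (0.0, 0.25, 0.5),
    "medium": (0.25, 0.5, 0.75),
    "good": (0.5, 0.75, 1.0),
    "very good": (0.75, 1.0, 1.0),
}

def aggregate(terms, scale=SCALE):
    """Average the experts' triangular numbers component-wise (assumed aggregation rule)."""
    tri = np.array([scale[t] for t in terms], dtype=float)
    return tuple(tri.mean(axis=0))

def cog(tri):
    """Center of gravity of a triangular fuzzy number (l, m, u): (l + m + u) / 3."""
    l, m, u = tri
    return (l + m + u) / 3.0

# Three CT members rate one criterion of one supplier.
crisp = cog(aggregate(["good", "very good", "good"]))
print(round(crisp, 4))
```

Because the CoG of a triangular number is linear in \((l,m,u)\), averaging the experts’ numbers before defuzzifying gives the same crisp value as defuzzifying each rating and averaging afterwards.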

Afterward, using the recorded information on the evaluation criteria and the BG-FCM, the weight of every evaluation criterion is determined for calculating the PCS. The weight of each evaluation criterion represents its importance in evaluating suppliers. To calculate these weights, the evaluation criteria are treated as the concepts of the BG-FCM, and the map of these concepts is drawn. Then, the concepts that are vital for participating in the Nash BG are selected as objective concepts. After the BG-FCM is drawn and the experts have set the constraints on the causal relationships between concepts, the final weight of each concept is calculated using the PSO-STF learning algorithm. To this end, the scores allocated to each supplier’s evaluation criteria are used as the initial numerical values of the concepts in the BG-FCM, and the learning algorithm is executed for each supplier. After the map reaches a steady state, the concepts’ weights are taken as their importance for that supplier. Aggregating the obtained weights gives the PCS of each supplier. Then, applying the weights obtained from the BG-FCM to the initial scores allocated by the experts to each evaluation criterion and aggregating the results gives the “process control score by applying weights (PCSAW)” of each supplier. Finally, the “process control score ratio (PCSR)” is obtained by dividing the PCSAW by the highest PCS among the suppliers.
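The three scores can be computed in a few lines. This Python sketch reads “aggregating” as summation, which matches the scalar product described for “Supplier 1” in Sect. 5.3; the function name `process_control_scores` and the two-supplier, three-criterion data are hypothetical.

```python
import numpy as np

def process_control_scores(weights, initial_scores):
    """Return (PCS, PCSAW, PCSR) per supplier.

    weights, initial_scores: arrays of shape (n_suppliers, n_criteria) holding the
    steady-state BG-FCM weights and the CT's crisp initial scores.
    """
    weights = np.asarray(weights, dtype=float)
    initial_scores = np.asarray(initial_scores, dtype=float)
    pcs = weights.sum(axis=1)                       # PCS: sum of a supplier's criterion weights
    pcsaw = (weights * initial_scores).sum(axis=1)  # PCSAW: scalar product of weights and scores
    pcsr = pcsaw / pcs.max()                        # PCSR: normalized by the highest PCS
    return pcs, pcsaw, pcsr

# Hypothetical data: 2 suppliers x 3 criteria.
pcs, pcsaw, pcsr = process_control_scores([[0.5, 0.8, 0.6], [0.9, 0.7, 0.9]],
                                          [[0.6, 0.9, 0.3], [0.8, 0.8, 0.5]])
print(pcs, pcsaw, pcsr)
```

Dividing by the highest PCS across all suppliers, rather than by each supplier’s own PCS, puts every PCSR on a common scale so the suppliers can be compared directly.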

4.3.2 Calculating suppliers’ PES (based on quantitative criteria)

Based on the ISO/TS principles and the company’s prescriptions, the CT in the case study calculates the qualitative average of shipments according to Eq. (11), using the output of the qualitative product inspection. ISO/TS is a standard for controlling production quality in the automotive industry. Although there are codified principles and prescriptions for implementing ISO/TS, every company codifies its own prescriptions for implementing it based on its historical documentation and experience. There is no generic model; each industry seems to have developed a process to match its own needs (Hoyle 2005).

In Eq. (11), \(C_{R}\) is the qualitative average, \(Q_{R}\) is the evaluation of the shipment quality, \(D_{R}\) is the evaluation of the shipment delivery time, and \(P_{R}\) is the evaluation of the received shipment price. The evaluations of shipment quality, shipment delivery time, and received shipment price are calculated using Eqs. (12) to (14), respectively, and are then recorded in the qualitative evaluation form of the received shipment.

In Eqs. (12) to (14), \(Q_{1}\) is the matched shipment volume, \(Q_{2}\) is the shipment volume that is matched and accepted after correction and rework, \(Q_{3}\) is the shipment volume accepted with minor mismatches, and \(Q_{4}\) is the unmatched (returned) shipment volume. \(Q\) is the shipment volume, \(Q_{P}\) is the shipment volume that should be delivered on time, \(Q_{E}\) is the excess shipped volume, and \(Q_{F}\) is the shortfall volume. \(P_{L}\) is the lowest sales price among all suppliers, and \(P\) is the supplier’s sales price. After the suppliers’ final scores are calculated (70% PCS and 30% PES), the suppliers are ranked by these scores. The flowchart of the proposed approach is illustrated in Fig. 9.
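The final 70/30 blend and ranking step can be sketched as follows. The text does not say explicitly which process-control quantity enters the blend, so this Python sketch assumes both inputs are already on a comparable [0, 1] scale (for example, the PCSR and the PES); the function name `final_ranking` and the three suppliers’ scores are hypothetical.

```python
import numpy as np

def final_ranking(names, process_scores, pes, w_pcs=0.7, w_pes=0.3):
    """Blend process-control and process-evaluation scores 70/30 and rank best-first."""
    final = w_pcs * np.asarray(process_scores, dtype=float) + w_pes * np.asarray(pes, dtype=float)
    order = np.argsort(-final, kind="stable")  # descending; ties keep input order
    return [(names[i], float(final[i])) for i in order]

# Hypothetical suppliers with (process-control score, PES) pairs.
ranking = final_ranking(["S1", "S2", "S3"], [0.68, 0.80, 0.50], [0.90, 0.50, 0.95])
for name, score in ranking:
    print(name, round(score, 3))
```

Keeping the two weights as parameters makes it easy to test how sensitive the ranking is to the 70/30 split.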

5 Case study and analyzing the results

In this section, the proposed framework is applied to a case study in the auto parts industry. In Sect. 5.1, the preprocessing phase is applied to the information and data. In Sect. 5.2, the proposed BG-FCM for calculating the final weights of the criteria is presented; because the BG-FCM is introduced here for the first time, its performance is validated against conventional FCMs. In Sect. 5.3, the PCS and PES are calculated, the suppliers’ final evaluation scores are derived from them, and the suppliers are ranked by these scores.

5.1 Preprocessing phase

The studied company in the auto parts industry operates extensive casting and machining equipment to produce the raw materials needed by light- and heavy-duty vehicle manufacturers. With a variety of mechanized equipment for melting, casting, and polishing, the company pursues self-sufficiency in supplying necessary parts to the domestic industry, the ability to export parts, and the creation of appropriate employment opportunities. In the first step of the proposed framework, based on the documentation of the company under study and the opinions of the CT, the main criteria used to evaluate suppliers are identified; they are presented in Table 2. Next, the CT’s experts assign scores to the evaluation criteria for the investigated suppliers. Scores are assigned using linguistic variables classified into five categories (very bad, bad, medium, good, and very good) that describe the supplier’s performance in each domain. A triangular fuzzy membership function is defined for every linguistic variable (see “Appendix 1”). The fuzzy numbers are then converted into crisp numbers by the CoG method for ease of calculation. These numbers are the initial values of the concepts of the BG-FCM (see “Appendix 2”).

5.2 BG-FCM and comparing it with conventional FCMs

To obtain the final weights of the criteria, every criterion is treated as a concept of the BG-FCM. Then, based on the CT’s opinion, the causal relationships between concepts and their directions are determined, and ranges for the causal-relationship weights are specified as constraints for the PSO-STF. Note that, unlike Abbaspour Onari et al. (2020), this study does not use the fuzzy learning approach for BG-FCM; the BG-FCM is trained with crisp numbers. To determine the weight constraints, the CT’s experts allocate a range to every weight and finalize the ranges after reaching consensus. For example, two of the weight ranges are given below. Because of the large number of causal relationships, the remaining ranges are not listed, and only their centers are shown on the map:

Then, the objective concepts for establishing the Nash BG are selected. “Customer Satisfaction” and “Quality of Service” are the concepts selected for the Nash BG; their utility functions are determined by the BG-FCM, whereas their breakdown points are set by the experts at 0.2 and 0.18, respectively. According to the CT, these two criteria play the most prominent role in evaluating suppliers, so their final weights should be extracted precisely. Figure 10 shows the BG-FCM constructed for this study. Before executing PSO-STF, the initial parameters of the learning algorithm must be set. The NPE is chosen as the fitness function of the PSO-STF, whose main objective is to maximize it. The maximum number of iterations and the population size are set to 400 and 50, respectively. Clerc and Kennedy (2002) generalized the PSO model with a set of coefficients that control the system’s convergence tendencies. Their approach is used in this study, and the remaining PSO parameters are set based on Eq. (17). The constriction coefficients are \(\phi_{1} = \phi_{2} = 2.05\), and \(\Phi = \phi_{1} + \phi_{2}\). The value of \(\chi\) is obtained from Eq. (17) and \(\Phi\). The inertia weight \(\omega\) is set to \(\chi\), and the acceleration coefficients are \(c_{1} = \phi_{1} \times \chi\) and \(c_{2} = \phi_{2} \times \chi\).
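Assuming Eq. (17) is the commonly cited Clerc and Kennedy (2002) constriction formula \(\chi = 2 / \lvert 2 - \Phi - \sqrt{\Phi^{2} - 4\Phi} \rvert\), the parameter settings above can be computed as in this Python sketch; the function name `constriction_parameters` is hypothetical.

```python
import math

def constriction_parameters(phi1=2.05, phi2=2.05):
    """Clerc and Kennedy constriction: returns (w, c1, c2) with w = chi and c_k = phi_k * chi."""
    phi = phi1 + phi2
    if phi <= 4.0:
        raise ValueError("phi1 + phi2 must exceed 4")
    chi = 2.0 / abs(2.0 - phi - math.sqrt(phi * phi - 4.0 * phi))
    return chi, phi1 * chi, phi2 * chi

w, c1, c2 = constriction_parameters()
print(f"w = {w:.4f}, c1 = {c1:.4f}, c2 = {c2:.4f}")  # w = 0.7298, c1 = 1.4962, c2 = 1.4962
```

With \(\phi_{1} = \phi_{2} = 2.05\), \(\Phi = 4.1\) and \(\chi \approx 0.7298\), so setting \(\omega = \chi\) and \(c_{k} = \phi_{k}\chi\) is algebraically the same as multiplying the whole velocity update by \(\chi\).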

The PSO-STF was executed 50 times independently, and the solutions with the highest fitness value were selected as the algorithm’s optimal solution. The PSO-STF is executed for every supplier with that supplier’s initial concept values (see “Appendix 2”) and the BG-FCM shown in Fig. 10. To evaluate the performance of the BG-FCM and the PSO-STF learning algorithm, they are compared with the NHL, extended Delta-rule, and PSO algorithms. Because the NHL and extended Delta-rule algorithms need an initial weight matrix to train the FCM, the centers of the weight ranges (see Fig. 10) are used as the initial causal-relationship weight matrix. All parameters and the fitness function of the PSO algorithm and PSO-STF are kept identical to ensure an unbiased comparison. Table 3 compares the performance of the NHL, extended Delta-rule, PSO, and proposed learning algorithms; for brevity, only three suppliers are shown.

Table 3 illustrates two main outcomes: first, how well the BG-FCM highlights the significance of the objective concepts compared with the NHL and extended Delta-rule algorithms; second, how well the PSO-STF generates solutions with high separability and varied weights compared with the other learning algorithms. As highlighted in Table 3, the ranks of nodes 20 and 21 change for the suppliers in the BG-FCM (with both the PSO and PSO-STF learning algorithms). Owing to the Nash BG, they obtain lower rank numbers, which indicate higher significance. For instance, for “Supplier 1”, concepts 20 and 21 are ranked fourth and sixth by NHL and fifth and eighth by the extended Delta-rule algorithm, respectively. In the BG-FCM, however, they are ranked third and fourth with the PSO algorithm and first and fourth with the PSO-STF, respectively. These results indicate that the BG-FCM distinguishes the importance of the concepts more effectively and can highlight the significance of the problem’s crucial concepts.

As mentioned earlier, accurate estimation of the map’s weights is essential to improve the map’s accuracy and structure and to reduce its dependence on experts’ opinions. At the same time, the separability of the solutions is very important for decision-makers to analyze the behavior of the system reliably. For this purpose, a modified learning algorithm is used in this study to enhance the separability of the generated solutions. At this step, the performance of the PSO-STF is compared with other conventional FCM learning algorithms for “Supplier 1”. First, the NHL algorithm is trained using the initial concept values (“Appendix 2”) and the weights of the causal relationships between concepts (Fig. 10). The performance of the NHL algorithm is illustrated as a scatter plot in Fig. 11. NHL shows acceptable separability, but its solutions are dispersed over the narrow interval [0.7980, 0.9852]. In this situation, decision-makers cannot reliably distinguish the importance of the concepts, since a lack of separability can obscure the true value of some concepts. In addition, Hebbian-based algorithms have a critical shortcoming in ANN learning: when the input vectors are correlated, or are independent but not orthogonal, the algorithm does not converge to the least-squares solution (Rezaee et al. 2017). Another deficiency of these learning algorithms is that the final weights depend on the initial weight matrix. Incorrect estimation of the initial weights or large deviations among the experts’ opinions may reduce the algorithms’ efficiency and/or drive the system into undesired states (Papageorgiou et al. 2005). Therefore, the final solutions obtained by the NHL algorithm are not fully reliable.

Next, the performance of the extended Delta-rule algorithm is evaluated. Its mechanism resembles that of the NHL algorithm, but it is based on the Delta rule for ANNs (Rezaee et al. 2017). As the scatter plot in Fig. 12 shows, the extended Delta-rule algorithm does not perform well in this study. After converging, it cannot clearly distinguish between the concepts, and most of the generated solutions are very close to each other. This calls the accuracy of the ranking into question, and decision-makers may not be able to rely on the solutions generated by this algorithm. Hence, it cannot be considered an appropriate learning algorithm for this study. The generated solutions lie in the interval [0.4731, 0.9085]. This broad interval, however, misrepresents the algorithm’s performance, because most of the generated solutions remain close to each other. Although the algorithm can rank the generated solutions, they are not distinguishable, and most of them lie near or on the regression line. Thus, it cannot compete with the other learning algorithms.

Figure 13 shows the performance of the PSO algorithm, which, unlike the NHL and extended Delta-rule algorithms, is population-based. Population-based algorithms are less prone to becoming trapped in local minima and, in this case, are more reliable than Hebbian-based algorithms. The scatter plot of the PSO algorithm in Fig. 13 reveals its weak performance in generating solutions with high separability. As mentioned before, because PSO avoids generating unjustified solutions, it cannot properly distinguish between concepts. The generated solutions lie in the narrow interval [0.7034, 0.9038], although within this interval they are reasonably well spread. Nevertheless, the closeness of the solutions to the regression line indicates that decision-makers still cannot reliably analyze the problem and the significance of the concepts. The PSO-STF has been proposed to overcome this shortcoming of the PSO algorithm.

Figure 14 clearly shows the strong performance of the PSO-STF in generating solutions with high separability. The solutions are spread across various regions, and their fluctuation indicates that PSO-STF can generate reliable solutions for decision-makers. The solutions span the broad interval [0.2845, 0.9383], and their dispersion around the regression line confirms the algorithm’s acceptable performance.
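The comparison above is made visually from scatter plots. As a rough numerical complement, this Python sketch computes two simple proxies: the width of each reported solution interval, and the smallest gap between neighboring sorted weights, which exposes clustered solutions that a wide interval can hide (the situation described for the extended Delta-rule algorithm). The interval bounds are the ones reported above for “Supplier 1”; the proxy definitions and the names `interval_width` and `min_gap` are hypothetical and are not the authors’ measure.

```python
import numpy as np

# Solution intervals reported in the text for "Supplier 1".
REPORTED = {
    "NHL": (0.7980, 0.9852),
    "extended Delta-rule": (0.4731, 0.9085),
    "PSO": (0.7034, 0.9038),
    "PSO-STF": (0.2845, 0.9383),
}

def interval_width(bounds):
    low, high = bounds
    return high - low

def min_gap(values):
    """Smallest distance between neighboring sorted values; near 0 means hard-to-separate concepts."""
    v = np.sort(np.asarray(values, dtype=float))
    return float(np.min(np.diff(v)))

for name, bounds in REPORTED.items():
    print(f"{name:>20s}: width = {interval_width(bounds):.4f}")
```

Applied to a full weight vector from Table 4, `min_gap` would be near zero for an algorithm whose concepts collapse onto nearly equal values, even if its overall interval is broad.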

In Fig. 15, the solutions generated by the conventional FCM learning algorithms are compared with those of the BG-FCM and its learning algorithms for “Supplier 1”. In this figure, the NHL solutions are spread over a narrow interval with abnormally large values, so NHL is not a practical algorithm for this study. The extended Delta-rule algorithm clearly shows linear behavior after reaching the steady state. The resolution of its solutions is very poor, and, as illustrated in Fig. 12, its scatter plot is likewise linear. Most of the generated solutions are close to the regression line, indicating that the algorithm does not properly distinguish between concepts and that experts cannot precisely determine the significance of the concepts. This algorithm shows the weakest performance of all. Moreover, neither the NHL nor the extended Delta-rule algorithm can emphasize the most important concepts identified by the experts (20 and 21). Although the PSO algorithm, because it uses the NPE as its fitness function, can emphasize the important concepts of the system, it still cannot generate solutions with high separability. PSO behaves more appropriately than the extended Delta-rule algorithm, but its behavior in Fig. 13 is still nearly linear. The fluctuation of its generated solutions is weak, and it still cannot distinguish between different concepts. The most desirable performance belongs to the PSO-STF, which not only emphasizes the most important concepts of the problem but also generates solutions with high separability, as Fig. 14 shows. Finally, the final concept weights obtained for the suppliers with BG-FCM and PSO-STF are presented in Table 4.

5.3 Evaluating suppliers

To evaluate the suppliers, after PSO-STF has been executed for all suppliers and the map has reached a steady structure, the obtained values are taken as the criteria’s weights (importance) for each supplier. Aggregating the obtained weights gives the PCS of each supplier. For instance, for “Supplier 1”, summing the weights in the first row of Table 4 gives 14.686, which is its PCS. Then, applying the BG-FCM weights for every criterion to the initial concept values assigned by the CT and aggregating the results gives the PCSAW of each supplier. For “Supplier 1”, the scalar product of the first row of “Appendix 2” and the first row of Table 4 gives approximately 10.45. Finally, the PCSR is obtained by dividing the PCSAW by the highest PCS. The highest PCS belongs to “Supplier 8” and equals 15.4127. Dividing the PCSAW of “Supplier 1” by 15.4127 yields 0.6777, which is its PCSR.

Next, the suppliers’ PES is calculated from three important criteria: shipment quality, delivery time, and price. This score is calculated from the data recorded in Table 5 using Eqs. (11) to (14).

After the PCS and PES have been obtained, each supplier’s final score is calculated by combining 70% of the PCS with 30% of the PES, and the suppliers are ranked by these scores. The final scores for every supplier are presented in Table 6. Three suppliers (5, 8, and 1) have the highest scores and rank first to third, and the company intends to continue its cooperation with them. In contrast, two suppliers (9 and 20) have the lowest scores, and the company will end its cooperation with them. According to the scores in Table 6, besides the two objective concepts “Customer Satisfaction” and “Quality of Service”, which were selected as the Nash BG players, the three criteria “Certificates of the quality management system, health safety, environment, etc.”, “Presence in the list of accredited companies suppliers/foreign company agents or licensed production” and “Method of feasibility and creation of new products” are the most influential criteria. That is, after “Customer Satisfaction” and “Quality of Service,” they play the most important role in selecting suppliers, based on the experts’ opinions and the output of the BG-FCM. Meanwhile, “Identification and tracking (raw materials, during manufacturing, final product) and packaging,” “Controlling of technical documentation at the workshop level” and “Logistics status of the organization” are the weakest criteria according to the proposed approach, indicating their low importance in selecting suppliers.

6 Conclusion

Selecting proper suppliers in a competitive market is vital to a company’s success. In this regard, the purpose of this research is to present a novel SS framework that combines the FCM with the Nash BG to evaluate and rank suppliers, and to compare it with conventional FCMs and their learning algorithms. The evaluation phase of the framework aggregates the PCS and PES. First, the evaluation criteria determined by the CT are treated as the concepts of the FCM. To obtain the real-world weights of the objective concepts, a new FCM structure based on the Nash BG (BG-FCM) is proposed. By playing a Nash BG with each other, the objective concepts in the BG-FCM try to reach their real-world weights by raising the NPE. To train the BG-FCM, a modified learning algorithm combining the PSO algorithm with an S-shaped transfer function (PSO-STF) is used. This algorithm successfully generates solutions with high separability, which is very helpful for decision-makers. After the BG-FCM is constructed and reaches a steady state, the final weights obtained by the map are taken as the significance of the related concepts in the problem, and the PCS is obtained. To evaluate their performance, the BG-FCM and PSO-STF were compared with several conventional FCM structures and learning algorithms. After the PCS is obtained, the PES is computed with mathematical equations for three important criteria: quality, delivery time, and price of the shipment. Finally, aggregating the PCS and PES gives the suppliers’ final scores, by which they are ranked. In this study, three suppliers (5, 8, and 1) achieved the highest scores and performed best, and the company intends to expand its cooperation with them.
In contrast, two suppliers (9 and 20) performed worst, and the company will end its cooperation with them. Moreover, the criteria with the greatest influence on the evaluation of the suppliers (besides the objective criteria) were identified: “Certificates of the quality management system, health safety, environment, etc.”, “Presence in the list of accredited companies suppliers/foreign company agents or licensed production” and “Method of feasibility and creation of new products.” The criteria that played only a minor role in evaluating suppliers were also identified: “Identification and tracking (raw materials, during manufacturing, final product) and packaging,” “Controlling of technical documentation at the workshop level” and “Logistics status of the organization.”

Future research could examine the performance of the Nash BG utility function within other metaheuristic algorithms and explore other transfer functions to improve the algorithm’s performance. Moreover, mathematical models could be proposed for determining the breakdown payoffs. It is also recommended to extend this research to evaluating the suppliers of service-based systems such as hospitals or transportation.

References

Abbaspour Onari M, Yousefi S, Jahangoshai Rezaee M (2020) Risk assessment in discrete production processes considering uncertainty and reliability: Z-number multi-stage fuzzy cognitive map with fuzzy learning algorithm. Artif Intell Rev. https://doi.org/10.1007/s10462-020-09883-w

Aboutorab H, Saberi M, Asadabadi MR, Hussain O, Chang E (2018) ZBWM: the Z-number extension of best worst method and its application for supplier development. Expert Syst Appl 107:115–125. https://doi.org/10.1016/j.eswa.2018.04.015

Alinezad A, Seif A, Esfandiari N (2013) Supplier evaluation and selection with QFD and FAHP in a pharmaceutical company. Int J Adv Manuf Technol 68(1–4):355–364. https://doi.org/10.1007/s00170-013-4733-3

Alizadeh A, Yousefi S (2019) An integrated Taguchi loss function–fuzzy cognitive map–MCGP with utility function approach for supplier selection problem. Neural Comput Appl 31(11):7595–7614. https://doi.org/10.1007/s00521-018-3591-1

Alizadeh S, Ghazanfari M (2009) Learning FCM by chaotic simulated annealing. Chaos Solitons Fractals 41(3):1182–1190. https://doi.org/10.1016/j.chaos.2008.04.058

Alizadeh S, Ghazanfari M, Jafari M, Hooshmand S (2007) Learning FCM by tabu search. Int J Comput Sci 2(2):142–149

Anezakis V-D, Demertzis K, Iliadis L, Spartalis S (2016) Fuzzy cognitive maps for long-term prognosis of the evolution of atmospheric pollution, based on climate change scenarios: the case of Athens. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), vol 9875 LNCS. Springer, pp 175–186. https://doi.org/10.1007/978-3-319-45243-2_16

Araz C, Ozkarahan I (2007) Supplier evaluation and management system for strategic sourcing based on a new multicriteria sorting procedure. Int J Prod Econ 106(2):585–606. https://doi.org/10.1016/j.ijpe.2006.08.008

Axelrod R (1976) The cognitive mapping approach to decision making. In: Structure of decision, pp 221–250

Azadeh A, Ghaderi SF, Pashapour S, Keramati A, Malek MR, Esmizadeh M (2017) A unique fuzzy multivariate modeling approach for performance optimization of maintenance workshops with cognitive factors. Int J Adv Manuf Technol 90(1–4):499–525. https://doi.org/10.1007/s00170-016-9208-x

Bakhtavar E, Yousefi S (2018) Assessment of workplace accident risks in underground collieries by integrating a multi-goal cause-and-effect analysis method with MCDM sensitivity analysis. Stoch Environ Res Risk Assess 32(12):3317–3332. https://doi.org/10.1007/s00477-018-1618-x

Banaeian N, Mobli H, Fahimnia B, Nielsen IE, Omid M (2018) Green supplier selection using fuzzy group decision making methods: a case study from the agri-food industry. Comput Oper Res 89:337–347. https://doi.org/10.1016/j.cor.2016.02.015

Bevilacqua M, Ciarapica FE, Marcucci G, Mazzuto G (2018) Conceptual model for analysing domino effect among concepts affecting supply chain resilience. Supply Chain Forum 19(4):282–299. https://doi.org/10.1080/16258312.2018.1537504

Bourgani E, Stylios CD, Manis G, Georgopoulos VC (2014) Time dependent fuzzy cognitive maps for medical diagnosis. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), vol 8445 LNCS. Springer, pp 544–554. https://doi.org/10.1007/978-3-319-07064-3_47

Chandramohan A, Rao MVC, Senthil Arumugam M (2006) Two new and useful defuzzification methods based on root mean square value. Soft Comput 10(11):1047–1059. https://doi.org/10.1007/s00500-005-0042-6

Chen YJ (2011) Structured methodology for supplier selection and evaluation in a supply chain. Inf Sci 181(9):1651–1670. https://doi.org/10.1016/j.ins.2010.07.026

Choi TY, Hartley JL (1996) An exploration of supplier selection practices across the supply chain. J Oper Manag 14(4):333–343. https://doi.org/10.1016/S0272-6963(96)00091-5

Clerc M, Kennedy J (2002) The particle swarm—explosion, stability, and convergence in a multidimensional complex space. IEEE Trans Evol Comput 6(1):58–73. https://doi.org/10.1109/4235.985692

Dabbagh R, Yousefi S (2019) A hybrid decision-making approach based on FCM and MOORA for occupational health and safety risk analysis. J Saf Res 71:111–123. https://doi.org/10.1016/j.jsr.2019.09.021

Dickerson JA, Kosko B (1994) Virtual worlds as fuzzy cognitive maps. Presence Teleoper Virtual Environ 3(2):173–189. https://doi.org/10.1162/pres.1994.3.2.173

de Castro Vivas R, Sant’Anna AMO, Esquerre KPSO, Freires FGM (2020) Integrated method combining analytical and mathematical models for the evaluation and optimization of sustainable supply chains: a Brazilian case study. Comput Ind Eng 139:105670. https://doi.org/10.1016/j.cie.2019.01.044

Froelich W, Juszczuk P (2009) Predictive capabilities of adaptive and evolutionary fuzzy cognitive maps—a comparative study. Stud Comput Intell 252:153–174. https://doi.org/10.1007/978-3-642-04170-9_7

Groumpos PP (2010) Fuzzy cognitive maps: basic theories and their application to complex systems. Stud Fuzziness Soft Comput 247:1–22. https://doi.org/10.1007/978-3-642-03220-2_1

Ho W, Xu X, Dey PK (2010) Multi-criteria decision making approaches for supplier evaluation and selection: a literature review. Eur J Oper Res 202(1):16–24. https://doi.org/10.1016/j.ejor.2009.05.009

Hoyle D (2005) Automotive quality systems handbook: ISO/TS 16949:2002 edn. Elsevier, Amsterdam

Irani Z, Sharif A, Kamal MM, Love PED (2014) Visualising a knowledge mapping of information systems investment evaluation. Expert Syst Appl 41(1):105–125. https://doi.org/10.1016/j.eswa.2013.07.015

Irani Z, Kamal MM, Sharif A, Love PED (2017) Enabling sustainable energy futures: factors influencing green supply chain collaboration. Prod Plann Control 28(6–8):684–705. https://doi.org/10.1080/09537287.2017.1309710

Jahangoshai Rezaee M, Shokry M (2017) Game theory versus multi-objective model for evaluating multi-level structure by using data envelopment analysis. Int J Manag Sci Eng Manag 12(4):245–255. https://doi.org/10.1080/17509653.2016.1249425

Jahangoshai Rezaee M, Yousefi S (2018) An intelligent decision making approach for identifying and analyzing airport risks. J Air Transp Manag 68:14–27. https://doi.org/10.1016/j.jairtraman.2017.06.013

Jahangoshai Rezaee M, Yousefi S, Hayati J (2019) Root barriers management in development of renewable energy resources in Iran: an interpretative structural modeling approach. Energy Policy 129:292–306. https://doi.org/10.1016/j.enpol.2019.02.030

Jahangoshai Rezaee M, Moini A, Haji-Ali Asgari F (2012a) Unified performance evaluation of health centers with integrated model of data envelopment analysis and bargaining game. J Med Syst 36:3805–3815. https://doi.org/10.1007/s10916-012-9853-z

Jahangoshai Rezaee M, Moini A, Makui A (2012b) Operational and non-operational performance evaluation of thermal power plants in Iran: a game theory approach. Energy 38(1):96–103. https://doi.org/10.1016/j.energy.2011.12.030

Jahangoshai Rezaee M, Yousefi S, Valipour M, Dehdar MM (2018) Risk analysis of sequential processes in food industry integrating multi-stage fuzzy cognitive map and process failure mode and effects analysis. Comput Ind Eng 123:325–337. https://doi.org/10.1016/j.cie.2018.07.012

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN’95—international conference on neural networks, vol 4. IEEE, pp 1942–1948. https://doi.org/10.1109/ICNN.1995.488968

Kim MC, Kim CO, Hong SR, Kwon IH (2008) Forward-backward analysis of RFID-enabled supply chain using fuzzy cognitive map and genetic algorithm. Expert Syst Appl 35(3):1166–1176. https://doi.org/10.1016/j.eswa.2007.08.015

Kosko B (1986) Fuzzy cognitive maps. Int J Man Mach Stud 24(1):65–75. https://doi.org/10.1016/S0020-7373(86)80040-2

Koulouriotis DE, Diakoulakis IE, Emiris DM (2001) Anamorphosis of fuzzy cognitive maps for operation in ambiguous and multi-stimulus real world environments. In: 10th IEEE international conference on fuzzy systems (Cat. No. 01CH37297), vol 3. IEEE, pp 1156–1159

Kyriakarakos G, Patlitzianas K, Damasiotis M, Papastefanakis D (2014) A fuzzy cognitive maps decision support system for renewables local planning. Renew Sustain Energy Rev. https://doi.org/10.1016/j.rser.2014.07.009

Lambertini L (2011) Game theory in the social sciences: a reader-friendly guide. Routledge, London

Lamba K, Singh SP, Mishra N (2019) Integrated decisions for supplier selection and lot-sizing considering different carbon emission regulations in Big Data environment. Comput Ind Eng 128:1052–1062. https://doi.org/10.1016/j.cie.2018.04.028

Li Y, Abtahi AR, Seyedan M (2019) Supply chain performance evaluation using fuzzy network data envelopment analysis: a case study in automotive industry. Ann Oper Res 275(2):461–484. https://doi.org/10.1007/s10479-018-3027-4

Liu T, Deng Y, Chan F (2018) Evidential supplier selection based on DEMATEL and game theory. Int J Fuzzy Syst 20(4):1321–1333. https://doi.org/10.1007/s40815-017-0400-4

Lu LYY, Wu CH, Kuo TC (2007) Environmental principles applicable to green supplier evaluation by using multi-objective decision analysis. Int J Prod Res 45(18–19):4317–4331. https://doi.org/10.1080/00207540701472694

Luo X, Wei X, Zhang J (2009) Game-based learning model using fuzzy cognitive map. In: Proceedings of the first ACM international workshop on multimedia technologies for distance learning, pp 67–76

Mulazzani L, Manrique R, Malorgio G (2017) The role of strategic behaviour in ecosystem service modelling: integrating Bayesian networks with game theory. Ecol Econ. https://doi.org/10.1016/j.ecolecon.2017.04.022

Nash JF (1950) The bargaining problem. Econometrica 18(2):155. https://doi.org/10.2307/1907266

Nikas A, Doukas H (2016) Developing robust climate policies: a fuzzy cognitive map approach. In: International series in operations research and management science, vol 241. Springer New York LLC, pp 239–263. https://doi.org/10.1007/978-3-319-33121-8_11

Nikas A, Ntanos E, Doukas H (2019) A semi-quantitative modelling application for assessing energy efficiency strategies. Appl Soft Comput J 76:140–155. https://doi.org/10.1016/j.asoc.2018.12.015

Osborne MJ, Rubinstein A (1994) A course in game theory. MIT Press, Cambridge

Özgen D, Önüt S, Gülsün B, Tuzkaya UR, Tuzkaya G (2008) A two-phase possibilistic linear programming methodology for multi-objective supplier evaluation and order allocation problems. Inf Sci 178(2):485–500. https://doi.org/10.1016/j.ins.2007.08.002

Pal O, Gupta AK, Garg RK (2013) Supplier selection criteria and methods in supply chains: a review. Int J Soc Manag Econ Bus Eng 7(10):1403–1409

Papageorgiou EI (2013) Fuzzy cognitive maps for applied sciences and engineering: from fundamentals to extensions and learning algorithms, vol 54. Springer, Berlin

Papageorgiou EI, Groumpos PP (2005a) A new hybrid method using evolutionary algorithms to train fuzzy cognitive maps. Appl Soft Comput J 5(4):409–431. https://doi.org/10.1016/j.asoc.2004.08.008

Papageorgiou EI, Groumpos PP (2005b) A weight adaptation method for fuzzy cognitive map learning. Soft Comput 9(11):846–857. https://doi.org/10.1007/s00500-004-0426-z

Papageorgiou EI, Poczęta K (2017) A two-stage model for time series prediction based on fuzzy cognitive maps and neural networks. Neurocomputing 232:113–121. https://doi.org/10.1016/j.neucom.2016.10.072

Papageorgiou EI, Stylios CD, Groumpos PP (2004) Active Hebbian learning algorithm to train fuzzy cognitive maps. Int J Approx Reason 37(3):219–249. https://doi.org/10.1016/j.ijar.2004.01.001

Papageorgiou EI, Parsopoulos KE, Stylios CS, Groumpos PP, Vrahatis MN (2005) Fuzzy cognitive maps learning using particle swarm optimization. J Intell Inf Syst 25(1):95–121. https://doi.org/10.1007/s10844-005-0864-9

Papageorgiou EI, Stylios C, Groumpos PP (2006) Unsupervised learning techniques for fine-tuning fuzzy cognitive map causal links. Int J Hum Comput Stud 64(8):727–743. https://doi.org/10.1016/j.ijhcs.2006.02.009

Papageorgiou EI, Subramanian J, Karmegam A, Papandrianos N (2015) A risk management model for familial breast cancer: a new application using fuzzy cognitive map method. Comput Methods Programs Biomed 122(2):123–135. https://doi.org/10.1016/j.cmpb.2015.07.003

Ramezankhani MJ, Torabi SA, Vahidi F (2018) Supply chain performance measurement and evaluation: a mixed sustainability and resilience approach. Comput Ind Eng 126:531–548. https://doi.org/10.1016/j.cie.2018.09.054

Ren Z (2007) Learning fuzzy cognitive maps by a hybrid method using nonlinear Hebbian learning and extended great deluge algorithm. www.aaai.org

Rezaee MJ, Yousefi S, Babaei M (2017) Multi-stage cognitive map for failures assessment of production processes: an extension in structure and algorithm. Neurocomputing 232:69–82. https://doi.org/10.1016/j.neucom.2016.10.069

Sabouhi F, Pishvaee MS, Jabalameli MS (2018) Resilient supply chain design under operational and disruption risks considering quantity discount: a case study of pharmaceutical supply chain. Comput Ind Eng 126:657–672. https://doi.org/10.1016/j.cie.2018.10.001

Salmeron JL, Rahimi SA, Navali AM, Sadeghpour A (2017) Medical diagnosis of rheumatoid arthritis using data driven PSO–FCM with scarce datasets. Neurocomputing 232:104–112. https://doi.org/10.1016/j.neucom.2016.09.113

Shojaei P, Haeri SAS (2019) Development of supply chain risk management approaches for construction projects: a grounded theory approach. Comput Ind Eng 128:837–850. https://doi.org/10.1016/j.cie.2018.11.045

Singh PK, Nair A (2014) Livelihood vulnerability assessment to climate variability and change using fuzzy cognitive mapping approach. Clim Change 127(3–4):475–491. https://doi.org/10.1007/s10584-014-1275-0

Stach WJ (2010) Learning and aggregation of Fuzzy Cognitive Maps—an evolutionary approach. https://doi.org/10.7939/R32M6Z

Stylios CD, Georgopoulos VC, Malandraki GA, Chouliara S (2008) Fuzzy cognitive map architectures for medical decision support systems. Appl Soft Comput J 8(3):1243–1251. https://doi.org/10.1016/j.asoc.2007.02.022

van Vliet M, Kok K, Veldkamp T (2010) Linking stakeholders and modellers in scenario studies: the use of Fuzzy cognitive maps as a communication and learning tool. Futures 42(1):1–14. https://doi.org/10.1016/j.futures.2009.08.005

Yousefi S, Mahmoudzadeh H, Jahangoshai Rezaee M (2017) Using supply chain visibility and cost for supplier selection: a mathematical model. Int J Manag Sci Eng Manag 12(3):196–205. https://doi.org/10.1080/17509653.2016.1218307

Yousefi S, Jahangoshai Rezaee M, Solimanpur M (2019) Supplier selection and order allocation using two-stage hybrid supply chain model and game-based order price. Oper Res Int J. https://doi.org/10.1007/s12351-019-00456-6

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353. https://doi.org/10.1016/S0019-9958(65)90241-X

Zare Ravasan A, Mansouri T (2016) A dynamic ERP critical failure factors modelling with FCM throughout project lifecycle phases. Prod Plann Control 27(2):65–82. https://doi.org/10.1080/09537287.2015.1064551

Zhu Y, Zhang W (2008) An integrated framework for learning fuzzy cognitive map using RCGA and NHL algorithm. In: 2008 international conference on wireless communications, networking and mobile computing, WiCOM 2008. IEEE, pp 1–5. https://doi.org/10.1109/WiCom.2008.2527


Cite this article

Abbaspour Onari, M., Jahangoshai Rezaee, M. A fuzzy cognitive map based on Nash bargaining game for supplier selection problem: a case study on auto parts industry. Oper Res Int J 22, 2133–2171 (2022). https://doi.org/10.1007/s12351-020-00606-1


DOI: https://doi.org/10.1007/s12351-020-00606-1