Abstract

Meshless methods, which appeared in the early nineties, nowadays constitute an appealing approach to the simulation of forming processes. In this review we revisit the basic ingredients of the most common of these methods by analyzing their theoretical foundations, applicability and limitations, and give some examples of performance to show the wide variety of situations in which they can be employed.

Introduction

Although there are some examples of meshless methods dating back to the late seventies [57], the strong development of meshless methods came after the little revolution provoked by the seminal paper of Villon and coworkers on the so-called “diffuse element method” (DEM) [86], and the popularity given to it by the modifications introduced by Belytschko and coworkers to create the “Element Free Galerkin” (EFG) method [14, 15] and by Liu to create the largely equivalent Reproducing Kernel Particle Method (RKPM) [80].

These pioneering works opened the possibility of developing numerical methods without the need for time-consuming meshing procedures, since the connectivity of the “elements” was created by the method itself in a process transparent to the user. Even more importantly, meshless methods do not suffer from a lack of accuracy due to mesh (or cloud) distortion, as finite elements do. This is why, some years later, they attracted great interest in the forming processes community [24].

After those initial years of exploration, meshless methods came of age once their theoretical foundations were established by Oden and coworkers [42], and Babuška and coworkers [9, 10]. Their flexibility, attractive properties such as arbitrary degree of consistency or continuity, the spectacular simulations, never obtained before, that these methods made possible, and also, why not, the understanding they provide of the sound properties of finite elements, led to a decade or more of strong popularity of meshless methods and very active research interest in the numerical community. A plethora of modifications or (very often slight) improvements over existing methods gave rise to an almost endless list of different names for methods that share some common characteristics (notably, the lack of sensitivity to mesh distortion), a list that nowadays remains a difficulty for those coming to the field for the first time.

After these years of maturity, only a few methods with really different characteristics from the ones mentioned before came into play. Among them we can cite the Natural Element Method (NEM) [100] and the Maximum Entropy (MaxEnt) methods [6, 98]. They respond to some of the most important criticisms of meshless methods, namely the lack of true interpolation along the boundary of the domain, which leads to difficulties in the imposition of essential boundary conditions, and (although only partially in the case of MaxEnt) numerical integration errors.

In this paper we review the use of meshless methods, with particular emphasis on their usage in the framework of material forming, irrespective of the particular process considered. There have been some prior reviews of the field of meshless methods and, although some of them are nowadays somewhat old, we refer the interested reader to [16, 17, 77, 87], to name a few. There are also some books available on the topic, such as [78], some of them specifically devoted to aspects related to forming processes [27, 28].

During these years, meshless methods have successfully been applied to the simulation of a variety of forming processes, involving both solids and fluids, with the main characteristic of employing, in general, an updated Lagrangian perspective for the description of the equations of motion. This is perhaps the most relevant novelty that meshless methods introduced, when viewed from the forming process point of view: they successfully overcome the traditional difficulty related to mesh distortion (or numerical diffusion, if one prefers to employ extensive remeshing, as in many commercial codes in the field).

In this paper a review is made of the most relevant meshless (or meshfree) approaches to the field of material forming. First, a brief overview of the theoretical aspects is made in Section “Theoretical foundations of meshless methods”. In it, common aspects of all meshless methods are reviewed, as well as those characteristics that truly differentiate them, far beyond the long list of different names for methods that differ only in very subtle details. These include the different approximation schemes, the numerical integration schemes available to perform quadrature of the weak form of the equations, and the imposition of essential boundary conditions. These are some of the most prominent aspects of meshless methods in today’s literature.

To provide the reader with a clear picture of where we stand in the field, Section “Examples of application” includes some interesting examples of application of meshless methods that explore their ability to be applied under general conditions for the simulation of material forming processes.

Theoretical foundations of meshless methods

In this section an overview of the theoretical foundations of meshless methods is given. Particular attention is paid to the three most relevant aspects of any such method, namely, its approximation scheme (strictly related to its degree of continuity and consistency), its numerical integration scheme and aspects related to the imposition of essential boundary conditions. These aspects are here judged as the most relevant ones for the field of forming processes. For instance, the degree of consistency of each method (the order of the polynomial it is able to reproduce exactly) is of utmost importance in the development of stable (LBB-compliant) approximations when dealing with incompressible media, as in plasticity or fluid mechanics. The degree of continuity is generally not so important, but having smooth (differentiable) approximations is essential when developing shell formulations for sheet metal forming, for instance. In turn, numerical integration is a well-known source of error when dealing with meshless approximations, due to their inherent non-polynomial character, and this is so irrespective of the particular application. Finally, many physical phenomena occur near the boundaries of the domain (contact, friction, merging flows, among others), so accurately reproducing the essential field of the problem in these regions is again a key aspect. That is why the imposition of essential boundary conditions or, more generally, the accurate interpolation of the displacement (velocity) field along the boundary is often required, and not always achieved, by meshless methods.

Meshless approximations

Probably the best way to integrate a meshless method within an existing finite element solver is to think of a finite element as the combination of a particular approximation scheme (in this case, an interpolation scheme formed by piecewise polynomials) and an integration cell where Gauss quadrature is performed (again, in the case of finite elements, the intersection of the supports of its nodal shape functions). By combining different approximation schemes and different quadrature schemes, as will be seen, different meshless methods can be obtained. In this section we review (not exhaustively) the most important approximation schemes in a historical sense: moving least squares, natural neighbor, and local maximum entropy approximations. Although not the only ones in the literature, these three approximation schemes somewhat represent three different ages in the development of meshless methods, for reasons that will become clear soon.

Moving least squares approximation

Although not the first in a strict historical sense, the methods responsible for the popularity of meshless methods are the so-called Diffuse Element Method (DEM) [86] and, notably, the Element-Free Galerkin method (EFG) [14, 15]. Both are based upon the approximation of the essential field of the problem (here, assume the displacement) through a moving least squares approximation. We do not include here the Smooth Particle Hydrodynamics method (SPH) [57, 77, 83], since it lacks some very basic properties such as linear consistency, nor the Reproducing Kernel Particle Method (RKPM) [65, 80, 81], which has been shown to be equivalent to the EFG, despite its very different origin [16].

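In general, and not only in EFG, the domain \(\Omega \) is discretized by a cloud of nodes rather than a mesh, as seen in Fig. 1. In EFG methods, the essential field (usually the displacement or velocity field) is approximated as

\[u^{h}(\boldsymbol {x}) = \sum _{i=1}^{m} p_{i}(\boldsymbol {x})\, a_{i}(\boldsymbol {x}) = \boldsymbol {p}^{T}(\boldsymbol {x})\, \boldsymbol {a}(\boldsymbol {x}), \qquad (1)\]

where m refers to the number of terms in the basis, \(p_{i}(\boldsymbol {x})\) constitutes a polynomial basis up to the desired order and, finally, \(a_{i}(\boldsymbol {x})\) are the coefficients to be determined, which notably depend on \(\boldsymbol {x}\).

As a polynomial basis, in one dimension, the linear one is often used

\[\boldsymbol {p}^{T}(x) = [1, \; x],\]

or a quadratic one

\[\boldsymbol {p}^{T}(x) = [1, \; x, \; x^{2}],\]

which in two dimensions reads

\[\boldsymbol {p}^{T}(\boldsymbol {x}) = [1, \; x, \; y, \; x^{2}, \; xy, \; y^{2}].\]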

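The approximation given by Eq. 1 can be made local through

\[u^{h}(\boldsymbol {x}, \bar{\boldsymbol {x}}) = \sum _{i=1}^{m} p_{i}(\boldsymbol {x})\, a_{i}(\bar{\boldsymbol {x}}) = \boldsymbol {p}^{T}(\boldsymbol {x})\, \boldsymbol {a}(\bar{\boldsymbol {x}}),\]

where the coefficients \(a_{i}\) are obtained by minimizing, in a least squares sense, the difference between the local approximation to the sought function and the nodal values \(u_{I}\) of the essential field itself, i.e., by minimizing the following quadratic functional

\[J = \sum _{I=1}^{n} w(\boldsymbol {x}-\boldsymbol {x}_{I}) \left[ \boldsymbol {p}^{T}(\boldsymbol {x}_{I})\, \boldsymbol {a}(\boldsymbol {x}) - u_{I} \right]^{2},\]

where \(I=1, \ldots , n\) runs over the n nodes in the model.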

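Weighting, and the corresponding local character of the approximation, is given by the function \(w(\boldsymbol {x}-\boldsymbol {x}_{I})\). This function is often a Gaussian or a cubic spline. We can then reformulate the functional as

\[J = (\boldsymbol {P}\boldsymbol {a} - \boldsymbol {u})^{T}\, \boldsymbol {W}(\boldsymbol {x})\, (\boldsymbol {P}\boldsymbol {a} - \boldsymbol {u}),\]

where, as usual,

\[\boldsymbol {u}^{T} = (u_{1}, u_{2}, \ldots , u_{n})\]

(in a one-dimensional case) and

\[\boldsymbol {P} = \begin{bmatrix} p_{1}(\boldsymbol {x}_{1}) & p_{2}(\boldsymbol {x}_{1}) & \cdots & p_{m}(\boldsymbol {x}_{1}) \\ p_{1}(\boldsymbol {x}_{2}) & p_{2}(\boldsymbol {x}_{2}) & \cdots & p_{m}(\boldsymbol {x}_{2}) \\ \vdots & \vdots & \ddots & \vdots \\ p_{1}(\boldsymbol {x}_{n}) & p_{2}(\boldsymbol {x}_{n}) & \cdots & p_{m}(\boldsymbol {x}_{n}) \end{bmatrix},\]

where, finally,

\[\boldsymbol {W}(\boldsymbol {x}) = \operatorname {diag}\left( w(\boldsymbol {x}-\boldsymbol {x}_{1}), \; w(\boldsymbol {x}-\boldsymbol {x}_{2}), \; \ldots , \; w(\boldsymbol {x}-\boldsymbol {x}_{n}) \right).\]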

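To determine the values of the coefficients, it is then necessary to minimize J:

\[\frac{\partial J}{\partial \boldsymbol {a}} = \boldsymbol {0} \;\; \Rightarrow \;\; \boldsymbol {A}(\boldsymbol {x})\, \boldsymbol {a}(\boldsymbol {x}) = \boldsymbol {B}(\boldsymbol {x})\, \boldsymbol {u}, \qquad \boldsymbol {B}(\boldsymbol {x}) = \boldsymbol {P}^{T}\, \boldsymbol {W}(\boldsymbol {x}).\]

Matrix \(\boldsymbol {A}\) is called the matrix of moments and has the following expression

\[\boldsymbol {A}(\boldsymbol {x}) = \boldsymbol {P}^{T}\, \boldsymbol {W}(\boldsymbol {x})\, \boldsymbol {P},\]

such that

\[\boldsymbol {a}(\boldsymbol {x}) = \boldsymbol {A}^{-1}(\boldsymbol {x})\, \boldsymbol {B}(\boldsymbol {x})\, \boldsymbol {u}\]

or, equivalently,

\[u^{h}(\boldsymbol {x}) = \sum _{I=1}^{n} \phi _{I}^{k}(\boldsymbol {x})\, u_{I},\]

where

\[\phi _{I}^{k}(\boldsymbol {x}) = \boldsymbol {p}^{T}(\boldsymbol {x})\, \boldsymbol {A}^{-1}(\boldsymbol {x})\, \boldsymbol {p}(\boldsymbol {x}_{I})\, w(\boldsymbol {x}-\boldsymbol {x}_{I}), \qquad (2)\]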

and where k denotes the order of the approximation. The resulting shape function, for the quadratic case, is depicted in Fig. 2 for the interval [0,1] discretized with eleven nodes. Different support sizes for W are considered, from \(r=2h\), with h the nodal spacing, to \(r=4h\).
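To make the construction above concrete, the following is a minimal Python sketch of one-dimensional MLS shape functions with the linear basis \(\boldsymbol {p}^{T}=[1, x]\). It assumes the standard expression \(\phi _{I}(x)=\boldsymbol {p}^{T}(x)\boldsymbol {A}^{-1}(x)\boldsymbol {p}(x_{I})\,w(x-x_{I})\), with \(\boldsymbol {A}\) the matrix of moments. The function names and the particular cubic spline weight are illustrative choices and are not taken from any specific EFG code.

```python
def cubic_spline_weight(r):
    """Cubic spline weight as a function of r = |x - x_I| / support radius."""
    if r <= 0.5:
        return 2.0 / 3.0 - 4.0 * r**2 + 4.0 * r**3
    if r <= 1.0:
        return 4.0 / 3.0 - 4.0 * r + 4.0 * r**2 - (4.0 / 3.0) * r**3
    return 0.0


def mls_shape_functions(x, nodes, radius):
    """Return [phi_1(x), ..., phi_n(x)] for the linear basis p = [1, x]."""
    w = [cubic_spline_weight(abs(x - xi) / radius) for xi in nodes]
    # Matrix of moments A = P^T W P (2x2 and symmetric for p(x_I) = [1, x_I]).
    a11 = sum(w)
    a12 = sum(wi * xi for wi, xi in zip(w, nodes))
    a22 = sum(wi * xi * xi for wi, xi in zip(w, nodes))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-14:
        raise ValueError("singular moment matrix: enlarge the support radius")
    # c^T = p^T(x) A^{-1}, using the closed-form inverse of a 2x2 matrix.
    c1 = (a22 - x * a12) / det
    c2 = (x * a11 - a12) / det
    # phi_I(x) = c^T p(x_I) w(x - x_I)
    return [(c1 + c2 * xi) * wi for wi, xi in zip(w, nodes)]
```

With eleven equally spaced nodes on [0, 1] and \(r=2h\), the values returned at any point sum to one and reproduce linear fields, while the function attached to a node takes a value below one at that node. The latter is the lack of Kronecker delta property discussed in Section “Imposition of essential boundary conditions”.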

Duarte and Oden [42] studied the EFG method and established for the first time one key characteristic of the method: that the shape functions constitute a partition of unity and that, regardless of the order of the approximation (there called intrinsic), it is possible to establish an extrinsic enrichment of these functions so as to make them reproduce a polynomial of arbitrary order. Melenk and Babuška generalized this approach by defining the so-called Partition of Unity method [9, 10]:

\[u^{h}(\boldsymbol {x}) = \sum _{i=1}^{n} \phi _{i}(\boldsymbol {x}) \sum _{j} \beta _{ji}\, p_{j}(\boldsymbol {x}),\]

where \(\beta _{ji}\) represent the new unknowns of the problem (additional degrees of freedom per node) and \(p_{j}\) represent a basis including monomials up to a certain degree (here, care must be taken so as not to produce linear dependencies with the basis \(\phi _{i}\), see details in [11]).

In general, EFG methods enjoyed great popularity for some years, but still present some notable drawbacks. Some of them are common to most meshless methods, others are particular to EFG. Among them we can cite the imposition of essential boundary conditions (due to the influence of interior nodes on the boundary, see Fig. 1), and the errors related to numerical integration (due to the use of quadrature cells not conforming to the shape functions’ support and the use of non-polynomial shape functions, see Eq. 2), which will be discussed specifically in Sections “Imposition of essential boundary conditions” and “Numerical integration”.

Natural neighbor approximation

In the quest for a method free of errors in the interpolation of the essential variable along the boundary, the Natural Element Method (NEM) [99–101] was the first successful attempt. The NEM was originally a Galerkin method in which interpolation was achieved through natural neighbor (NN) methods [12, 13, 95, 96].

NEM, as was the case in EFG, also constructs the connectivity of each integration point in a process transparent to the user (hence the appearance of not needing any mesh), but relies on the concept of Delaunay triangulations to do it. Although Delaunay triangulations are widely used in FE technology to construct meshes (in two dimensions, the Delaunay triangulation maximizes the minimum angle among all triangulations of a given point set), here the advantage is not the lack of any mesh, but the good accuracy provided by the NEM regardless of the quality or distortion of this triangulation, as proved in [100] for the first time and also in [3, 29, 30, 32], among other references.

The Delaunay triangulation \(\mathcal {D}\) [40] of a cloud of nodes \(X=\{ \boldsymbol {x}_{1}, \boldsymbol {x}_{2}, \ldots ,\boldsymbol {x}_{N} \} \subset \mathbb {R}^{d}, d=2,3\), is the unique triangulation of the cloud that satisfies the so-called circumcircle criterion, i.e., no node of the cloud lies within the circumcircle of any triangle (see Fig. 3).
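The circumcircle criterion can be checked directly. The sketch below, written for the two-dimensional case only, tests whether a given list of triangles satisfies it for a given cloud. The function names and the tolerance are illustrative assumptions, and the brute-force loop over all nodes is chosen for clarity rather than efficiency.

```python
def circumcircle(a, b, c):
    """Return (center, squared radius) of the circle through 2D points a, b, c."""
    ax, ay = a
    bx, by = b
    cx, cy = c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if d == 0.0:
        raise ValueError("collinear points have no circumcircle")
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return (ux, uy), (ax - ux) ** 2 + (ay - uy) ** 2


def satisfies_delaunay(points, triangles, tol=1e-12):
    """True if no node lies strictly inside the circumcircle of any triangle."""
    for i, j, k in triangles:
        (ux, uy), r2 = circumcircle(points[i], points[j], points[k])
        for m, (px, py) in enumerate(points):
            if m in (i, j, k):
                continue
            if (px - ux) ** 2 + (py - uy) ** 2 < r2 - tol:
                return False
    return True
```

For the four nodes (0, 0), (4, 0), (2, 1) and (2, -1), splitting the quadrilateral along its short diagonal satisfies the criterion, whereas splitting it along the long diagonal does not.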

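The dual structure of the Delaunay triangulation is the Voronoi diagram. It is composed of a tessellation of the space into cells of the form:

\[T_{I} = \left\{ \boldsymbol {x} \in \mathbb {R}^{d} : d(\boldsymbol {x}, \boldsymbol {x}_{I}) < d(\boldsymbol {x}, \boldsymbol {x}_{J}) \;\; \forall J \neq I \right\},\]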

where \(T_{I}\) represents the Voronoi cell and \(d(\cdot ,\cdot )\) the Euclidean distance. The simplest interpolation scheme that can be constructed on top of this geometrical construction is the so-called Thiessen interpolation, a piecewise constant interpolation within each Voronoi cell [104], which is therefore of continuity \(\mathcal {C}^{-1}\) and that has been used for mixed velocity-pressure approximations in [58, 61], among others.

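Undoubtedly, the most popular natural neighbor interpolant is due to Sibson [96]. If we define the second-order Voronoi cell as

\[T_{IJ} = \left\{ \boldsymbol {x} \in \mathbb {R}^{d} : d(\boldsymbol {x}, \boldsymbol {x}_{I}) < d(\boldsymbol {x}, \boldsymbol {x}_{J}) < d(\boldsymbol {x}, \boldsymbol {x}_{K}) \;\; \forall K \neq I, J \right\},\]

then the Sibsonian shape function is defined as (see Fig. 4):

\[\phi _{I}(\boldsymbol {x}) = \frac{\kappa _{I}(\boldsymbol {x})}{\kappa (\boldsymbol {x})},\]

where \(\kappa (\boldsymbol {x})\) and \(\kappa _{I}(\boldsymbol {x})\) represent the Lebesgue measures of the cells \(T_{x}\) and \(T_{xI}\), respectively.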
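The ratio of measures that defines the Sibson shape function is easiest to visualize in one dimension, where Voronoi cells are intervals bounded by midpoints between neighboring nodes. The following sketch is restricted to that one-dimensional setting and uses hypothetical function names. It inserts the point x into the diagram, computes its cell \(T_{x}\), and measures the overlap of \(T_{x}\) with each original cell \(T_{I}\).

```python
import math


def sibson_1d(x, nodes):
    """Sibson shape functions at x for 1D nodes sorted in ascending order."""
    n = len(nodes)
    if not nodes[0] <= x <= nodes[-1]:
        raise ValueError("x must lie in the convex hull of the nodes")
    if x in nodes:
        # The inserted point coincides with a node: interpolation is exact.
        return [1.0 if xi == x else 0.0 for xi in nodes]
    # Original first-order Voronoi cells T_I (unbounded at both ends).
    mids = [0.5 * (nodes[i] + nodes[i + 1]) for i in range(n - 1)]
    cells = [(-math.inf if i == 0 else mids[i - 1],
              math.inf if i == n - 1 else mids[i]) for i in range(n)]
    # Cell T_x of the inserted point, bounded by the midpoints to its neighbours.
    left = max(xi for xi in nodes if xi < x)
    right = min(xi for xi in nodes if xi > x)
    tx_lo, tx_hi = 0.5 * (left + x), 0.5 * (x + right)
    kappa = tx_hi - tx_lo
    # kappa_I is the length of the overlap between T_x and T_I.
    return [max(0.0, min(hi, tx_hi) - max(lo, tx_lo)) / kappa for lo, hi in cells]
```

In this one-dimensional setting the result coincides with piecewise linear interpolation between the two natural neighbors of x, and all other nodes receive a zero value.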

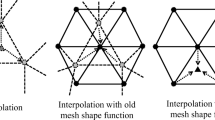

Thus defined, natural neighbor interpolation has some remarkable properties when compared to EFG shape functions. For instance, NN shape functions are smooth (\(\mathcal {C}^{1}\)) everywhere except at the nodes, where they are simply continuous. A simpler way to calculate NN interpolation (termed Laplace interpolation) that involves area computations instead of volumes (but produces less smooth shape functions) has been proposed in [12, 13]. An alternative definition has also been proposed by Hiyoshi and Sugihara to achieve any degree of continuity [69]. See Fig. 5 for a comparison of different shape functions on a regular grid of nodes. All the aforementioned interpolation schemes possess linear consistency, but when needed (as in the case of plasticity, where incompressibility constraints call for the use of LBB-compliant mixed interpolations) higher-order NN interpolations can also be achieved [62]. Even a combination of NN with bubble shape functions seems to give good results for incompressible media [108].

In addition to these interesting properties, in the pioneering work of Sukumar it was reported that Sibson interpolation was strictly interpolant along convex boundaries (which is in sharp contrast to EFG, for instance), although in non-convex domains errors of about 2 % were reported due to the lack of true interpolation. Later on, in [101], Sukumar claimed the interpolatory character of Laplace functions along the boundary, although in [31] some counter-examples were found that demonstrate that in concave domains this interpolation may not hold, and some nodal spacing restrictions were proposed so as to ensure a proper imposition of essential boundary conditions. For a deeper analysis of the issue of imposition of essential boundary conditions we refer the reader to Section “Imposition of essential boundary conditions”.

Despite these interesting properties of NN interpolation (and others explained in Section “Imposition of essential boundary conditions”), the main drawback of NEM is perhaps its high computational cost, especially for Sibson interpolation. In [3] a thorough analysis of the computational cost of several meshless methods was carried out. It showed that mesh distortion could lead to important inaccuracies when dealing with finite elements in practical applications, while Sibson interpolation is several orders of magnitude more expensive to compute than traditional piecewise polynomial shape functions for finite elements. However, in non-linear computations, where frequent Newton-Raphson iterations are needed, the relative cost of shape function computation is overshadowed by the cost of updating tangent stiffness matrices.

Local maximum entropy approximation

In the quest for the “perfect” meshless method, local maximum entropy (hereafter max-ent) methods are maybe the last to come into play. Sukumar seems to have been the first in employing global max-ent methods to solve PDEs [98]. Max-ent approximations provide smoothness (not easily attainable by NEM), interpolation on the boundary (not easy to obtain with EFG), but are non-local in nature. That is why the original work of Sukumar proposed to use this type of interpolation on polygonally-shaped cells, thus avoiding full matrices.

More recently, Arroyo and Ortiz [6] proposed a new form of local max-ent approximation, by viewing it as a problem of statistical inference in which the linear consistency requirement is set as a constraint on the problem. A new parameter controls the support of the shape function, thus making it unnecessary to construct it over polygons.

To see the form of this new way of constructing meshless methods, consider as usual a set of nodes \(X=\{ \boldsymbol {x}_{1}, \boldsymbol {x}_{2}, \ldots , \boldsymbol {x}_{N} \} \subset \mathbb {R}^{d}\). Let \(u:\text { conv}X\rightarrow \mathbb {R}\) be a function whose values \(\{u_{I};\;I=1,\ldots , N\}\) are known on the node set. Here “\(\text {conv}\)” represents the convex hull of the node set. Consider an approximation of the form

\[u^{h}(\boldsymbol {x}) = \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, u_{I},\]

where the functions \(\phi _{I}: \text { conv}X\rightarrow \mathbb {R}\) represent the shape or basis functions. To be useful in the solution of second-order PDEs, these functions are required to satisfy the zeroth and first-order consistency conditions:

\[\sum _{I=1}^{N} \phi _{I}(\boldsymbol {x}) = 1, \qquad (3a)\]

\[\sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, \boldsymbol {x}_{I} = \boldsymbol {x}. \qquad (3b)\]

If these shape functions are, in addition, non-negative (\(\phi _{I}(\boldsymbol {x}) \geq 0\;\; \forall \boldsymbol {x}\in \text {conv}X\)), then the approximation scheme given above is referred to as a convex combination, see for instance [46].

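Since we look for positive functions \(\phi _{I}\), the max-ent rationale is based upon the consideration of such functions as probability measures [98]. In this sense, the Shannon entropy of a discrete probability distribution \(p_{1}, \ldots , p_{N}\) is given by:

\[H(p_{1}, \ldots , p_{N}) = -\sum _{I=1}^{N} p_{I} \log p_{I}.\]

Proceeding in this way, the basis function value \(\phi _{I}(\boldsymbol {x})\) is viewed as the probability of influence of a node I at a position \(\boldsymbol {x}\) [98]. The problem of approximating a function from scattered data can thus be viewed as a problem of statistical inference. Following [6], the optimal, or least biased, convex approximation scheme (at least from the information-theoretical point of view) is the solution of the problem

\[\begin{aligned} &\text {For fixed } \boldsymbol {x}, \text { maximize } \; H(\boldsymbol {\phi }) = -\sum _{I=1}^{N} \phi _{I}(\boldsymbol {x}) \log \phi _{I}(\boldsymbol {x}) \\ &\text {subject to } \; \phi _{I}(\boldsymbol {x}) \geq 0, \quad \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x}) = 1, \quad \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, \boldsymbol {x}_{I} = \boldsymbol {x}. \end{aligned} \qquad (4)\]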

Proofs of the existence and uniqueness of the solution to this problem are given in [6].

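While approximations obtained after solving problem (4) are global by definition (and thus would produce full matrices when applied in a Galerkin framework), Arroyo and Ortiz proposed a local max-ent approximation by adding spatial correlation to this problem. In this way, the width of the shape function \(\phi _{I}\) can be defined [6] as

\[w[\phi _{I}] = \int _{\Omega } \phi _{I}(\boldsymbol {x})\, |\boldsymbol {x}-\boldsymbol {x}_{I}|^{2}\, d\boldsymbol {x},\]

which is equivalent to the second moment of \(\phi _{I}\) about \(\boldsymbol {x}_{I}\). The most local approximation is that which minimizes

\[W[\boldsymbol {\phi }] = \sum _{I=1}^{N} w[\phi _{I}] = \int _{\Omega } \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, |\boldsymbol {x}-\boldsymbol {x}_{I}|^{2}\, d\boldsymbol {x} \qquad (5)\]

subject to the constraints given by Eqs. 3a, 3b and the positivity constraint.

Since this functional does not involve derivatives of the shape functions, the minimization can be performed pointwise. The new problem

\[\begin{aligned} &\text {For fixed } \boldsymbol {x}, \text { minimize } \; \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, |\boldsymbol {x}-\boldsymbol {x}_{I}|^{2} \\ &\text {subject to } \; \phi _{I}(\boldsymbol {x}) \geq 0, \quad \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x}) = 1, \quad \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, \boldsymbol {x}_{I} = \boldsymbol {x} \end{aligned} \qquad (6)\]

has solutions if and only if \(\boldsymbol {x}\) belongs to the convex hull of the set of points [6]. If these points are in general position, then problem (6) has a unique solution, corresponding to the piecewise affine shape functions supported by the unique Delaunay triangulation associated with the node set X (see [6] and references therein for the proof of this assertion).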

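Arroyo and Ortiz [6] found an elegant solution to the problem of finding a local approximation satisfying all the interesting properties of a (global) maximum entropy approximation by seeking a compromise between problems (4) and (5):

\[\begin{aligned} &\text {For fixed } \boldsymbol {x}, \text { minimize } \; \beta \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, |\boldsymbol {x}-\boldsymbol {x}_{I}|^{2} + \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x}) \log \phi _{I}(\boldsymbol {x}) \\ &\text {subject to } \; \phi _{I}(\boldsymbol {x}) \geq 0, \quad \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x}) = 1, \quad \sum _{I=1}^{N} \phi _{I}(\boldsymbol {x})\, \boldsymbol {x}_{I} = \boldsymbol {x}, \end{aligned}\]

with \(\beta \geq 0\) a parameter that weights locality against entropy.

Proofs of the existence and uniqueness of the solution to this problem are also given in the aforementioned reference. Note that the evaluation of the resulting approximation does not require solving the constrained problem directly. It is enough to solve an unconstrained minimization problem that arises from its dual form. The calculation of the shape function derivatives is also explicit, see [6].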
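The dual evaluation mentioned above can be sketched in one dimension as follows. The snippet assumes the form given in [6], in which \(\phi _{I}(x)\) is proportional to \(\exp [-\beta |x-x_{I}|^{2} + \lambda (x-x_{I})]\) and the scalar multiplier \(\lambda \) minimizes \(\log Z(\lambda )\), with Z the normalizing sum. The function name, the tolerance and the plain Newton loop (with no line search or other safeguard) are illustrative assumptions. The loop is only expected to behave well for interior points and moderate values of \(\beta \).

```python
import math


def local_maxent_1d(x, nodes, beta, tol=1e-12, max_iter=50):
    """Local max-ent shape functions at x, computed through the dual problem."""
    lam = 0.0
    for _ in range(max_iter):
        # Unnormalized values exp(-beta |x - x_I|^2 + lam (x - x_I)).
        z = [math.exp(-beta * (x - xi) ** 2 + lam * (x - xi)) for xi in nodes]
        total = sum(z)
        phi = [zi / total for zi in z]
        # d(log Z)/d(lam): residual of the first-order consistency condition.
        r = sum(p * (x - xi) for p, xi in zip(phi, nodes))
        if abs(r) < tol:
            return phi
        # Second derivative of log Z with respect to lam.
        hess = sum(p * (x - xi) ** 2 for p, xi in zip(phi, nodes)) - r * r
        lam -= r / hess
    raise RuntimeError("Newton iteration did not converge")
```

By construction the returned values are positive and sum to one, and at convergence they satisfy the first-order consistency condition to within the tolerance.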

In general, max-ent shape functions provide a smooth approximation of the essential field, but they recover the piecewise linear polynomials over the Delaunay triangulation of the point set if the parameter \(\beta \) tends to infinity (see Fig. 6). Thus defined, the method possesses linear consistency, very much like natural neighbor interpolation. It is possible, however, to obtain a (very complex) quadratic form [37] or, by extending the algorithm initially designed for NEM in [62], to obtain any degree of consistency also in a max-ent approach [64]. The computational cost of max-ent approaches is considerably lower than that of NEM (and higher than that of the corresponding FEM approach), making it an appealing candidate for the simulation of complex forming processes [63, 91]. We will compare some results in Section “Examples of application” to see the relative importance of this assertion in practical implementations.

Fig. 6 Influence of the \(\beta \) parameter on the resulting shape function. Functions \(\phi _{I}(\boldsymbol {x})\) for the point located at the centre of the cloud and parameters \(\beta =0.2\), 0.6, 2.0 and 4.0, respectively. Note the different supports, but also the different heights of the functions on the scale.

Imposition of essential boundary conditions

As introduced before, the imposition of essential boundary conditions, something very natural in finite elements, has been a kind of nightmare for meshless methods. See the excellent analysis by Huerta and coworkers in [48]. Other works on the topic include [65, 66]. This is so since, due to the inherent unstructured connectivity between nodes in the model, interior nodes could eventually influence the result on the boundary, see Fig. 7. In general, EFG or RKP methods, whose shape function’s support is normally circular or rectangular, present this problem. Also very frequently shape functions do not verify Kronecker delta properties (i.e., the approximated function does not pass through nodal values), but this is normally easier to overcome by several methods.

One of the earliest methods to impose essential boundary conditions in EFG methods was simply to couple them with a strip of finite elements along the boundary [47, 70, 75]. Although it is very simple to implement, this method obviously eliminates, to some extent, the meshless character of the approximation. Other techniques include constrained variational principles [56], penalty formulations, Lagrange multipliers, and many others, but in general these focused on the lack of the Kronecker delta condition [21] and therefore did not attain a true interpolation along the boundary.

If we restrict ourselves to the case of NEM, it was assumed that the Sibson approach was interpolant along convex boundaries. In [29] it was demonstrated that, through the use of the concept of \(\alpha \)-shapes, a true interpolation could be achieved even on non-convex boundaries. \(\alpha \)-shapes are particular instances of the so-called shape constructors. Shape constructors are geometrical techniques that make it possible to find the shape of the domain, described solely by a cloud of nodes, at each time step. \(\alpha \)-shapes [43] have been employed in a number of previous works involving free surface flows, see for instance [18, 61, 71, 72, 84, 88, 89], among others. In essence, through the definition of a parameter \(\alpha \) that represents the level of detail up to which the geometry is to be represented, this technique allows one to extract the actual geometry of the domain almost with no user intervention, see Fig. 8.

Fig. 8 Evolution of the family of \(\alpha \)-shapes of a cloud of points representing a wave breaking on a beach. Different shapes for different \(\alpha \) values (\(\mathcal {S}_{\alpha =0}\) or cloud of points (a), \(\mathcal {S}_{0.5}\) (b), \(\mathcal {S}_{1.0}\) (c), \(\mathcal {S}_{2.0}\) (d), \(\mathcal {S}_{3.0}\) (e) and \(\mathcal {S}_{\infty }\) (f)) are depicted.

Therefore, in addition to the importance of the true interpolation achieved by this method, \(\alpha \)-shapes (or, in general, shape constructors) provide a very efficient means to deal with nodal clouds evolving in time, as will be analyzed in Section “Examples of application” for fluid mechanics problems. Fragmentation, coalescence, merging flows, etc., can be treated with no user intervention very conveniently in this way.
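In two dimensions, and assuming that a Delaunay triangulation of the cloud is already available, a simplified way to extract the shape is to keep only the triangles whose circumradius does not exceed \(\alpha \), and to take as boundary the edges that belong to a single retained triangle. The sketch below illustrates this filtering step only. The function names are hypothetical, and the triangulation is supplied by hand rather than computed.

```python
import math


def circumradius(a, b, c):
    """Circumradius of the triangle with 2D vertices a, b, c."""
    la, lb, lc = math.dist(b, c), math.dist(a, c), math.dist(a, b)
    area = 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))
    if area == 0.0:
        return math.inf  # degenerate (collinear) triangle
    return la * lb * lc / (4.0 * area)


def alpha_filter(points, triangles, alpha):
    """Keep the triangles whose circumradius does not exceed alpha."""
    return [t for t in triangles
            if circumradius(*(points[i] for i in t)) <= alpha]


def boundary_edges(triangles):
    """Edges that belong to exactly one triangle, as sorted node pairs."""
    count = {}
    for i, j, k in triangles:
        for edge in ((i, j), (j, k), (k, i)):
            key = tuple(sorted(edge))
            count[key] = count.get(key, 0) + 1
    return sorted(edge for edge, c in count.items() if c == 1)
```

With \(\alpha =0\) no triangle survives and only the cloud of points remains, whereas with \(\alpha \rightarrow \infty \) all triangles are kept and the convex hull of the cloud is recovered, in agreement with the two extreme cases of Fig. 8.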

In [106] a new approach was developed for the imposition of essential boundary conditions in the context of the NEM (Constrained-NEM, or C-NEM) that is based upon the usage of a visibility criterion, a concept initially developed in the context of EFG [90]. It can be demonstrated that, up to some differences in the implementation aspects, it produces results entirely equivalent to those obtained by employing \(\alpha \)-shapes, but needs an explicit description of the boundary of the domain in the form of a triangulation or planar straight line graph [27, 28, 73, 74, 107, 109].

For Laplace-type NN interpolations, it was initially claimed [101] that they were interpolant even on non-convex domains, but in [31] it was proved that this interpolation was only node-wise and that some counter-examples could be found. An alternative method was proposed in this last reference to impose essential boundary conditions exactly by employing a planar straight line graph describing the boundary.

If we restrict ourselves to the case of local max-ent approximation, in [6] a demonstration is included that proves that the method possesses a weak Kronecker delta property, i.e., for convex domains, the value of shape functions associated to interior nodes vanishes on the boundary. Thus imposing the exact value of the nodal displacement with Lagrange multipliers, for instance, will suffice to verify essential boundary conditions straightforwardly, very much like in finite elements. This is in general not true for non-convex domains, however. In [62] this approach was later generalized to arbitrary order of consistency. See Fig. 9 for an example of quadratic max-ent shape function on the boundary of the domain verifying Kronecker delta property.

Numerical integration

As mentioned before, only formulations based upon weak forms of the governing equations are covered in this review, since collocation methods are, in principle, not so well suited for the simulation of forming processes. In this framework, numerical integration plays a fundamental role in the accuracy of the results. In general, meshless formulations do not employ polynomial shape functions, and since quadrature formulas were initially designed to integrate such functions, an error is unavoidable. In addition, to perform numerical integration an integration cell should be chosen, and these cells do not generally conform with the support of shape functions (as is trivially the case in finite elements, where the integration cell arises naturally as the intersection of the supports of the element’s shape functions). This provokes a second source of error.
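The first source of error can be illustrated with a two-point Gauss-Legendre rule, which integrates cubic polynomials exactly but not a rational function. In the sketch below the rational integrand is only a stand-in for a non-polynomial shape function; it is not an actual meshless shape function, and the function names are illustrative.

```python
import math


def gauss2(f, a, b):
    """Two-point Gauss-Legendre rule on [a, b], exact up to cubic polynomials."""
    half, mid = 0.5 * (b - a), 0.5 * (a + b)
    g = 1.0 / math.sqrt(3.0)
    return half * (f(mid - half * g) + f(mid + half * g))


def cubic(x):
    """Polynomial integrand; its exact integral over [-1, 1] is 4."""
    return 4.0 * x**3 - x + 2.0


def rational(x):
    """Non-polynomial integrand; its exact integral over [-1, 1] is (2/5) atan(5)."""
    return 1.0 / (1.0 + 25.0 * x * x)


error_cubic = abs(gauss2(cubic, -1.0, 1.0) - 4.0)
error_rational = abs(gauss2(rational, -1.0, 1.0) - 0.4 * math.atan(5.0))
```

Here `error_cubic` is at the level of round-off, while `error_rational` is of the same order as the integral itself. Increasing the number of quadrature points reduces the latter error but does not remove it.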

Historically, the first attempt to overcome problems related to numerical integration errors was to try to conform integration cells to the shape function support, as in [7, 8, 38, 39, 41, 59], for instance. In general, none of these methods completely overcomes the mentioned deficiencies, due to the remaining source of error: the non-polynomial character of the approximation.

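The most important advancement in the numerical integration of meshless methods arose with the development of the so-called Stabilized Conforming Nodal Integration (SCNI) by Chen [23]. In essence, this method is based on assuming a modified strain field at each node:

\[\tilde{\boldsymbol {\varepsilon }}(\boldsymbol {x}_{I}) = \int _{\Omega } \boldsymbol {\varepsilon }(\boldsymbol {x})\, \Phi (\boldsymbol {x}; \boldsymbol {x}-\boldsymbol {x}_{I})\, d\Omega ,\]

where \(\varepsilon \) represents the Cauchy strain tensor and \(\Phi \) is a distribution function, that is usually taken as:

\[\Phi (\boldsymbol {x}; \boldsymbol {x}-\boldsymbol {x}_{I}) = \begin{cases} 1/A_{I} & \text {if } \boldsymbol {x} \in \Omega _{I}, \\ 0 & \text {otherwise}, \end{cases}\]

with \(\Omega _{I}\) the Voronoi cell associated to node I (other approaches in the definition of the area associated to each node are equally possible) and \(A_{I}\) the corresponding area of this cell. With this definition, the strain smoothing leads to:

\[\tilde{\varepsilon }_{ij}(\boldsymbol {x}_{I}) = \frac{1}{2A_{I}} \int _{\Omega _{I}} \left( \frac{\partial u_{i}}{\partial x_{j}} + \frac{\partial u_{j}}{\partial x_{i}} \right) d\Omega .\]

By the divergence theorem, one obtains

\[\tilde{\varepsilon }_{ij}(\boldsymbol {x}_{I}) = \frac{1}{2A_{I}} \int _{\Gamma _{I}} \left( u_{i} n_{j} + u_{j} n_{i} \right) d\Gamma , \qquad (7)\]

\(\Gamma _{I}\) being the boundary of the Voronoi cell associated to node I and \(\boldsymbol {n}\) its outward unit normal. By introducing shape functions into Eq. 7, a matrix expression is obtained in the form:

\[\tilde{\boldsymbol {\varepsilon }}(\boldsymbol {x}_{I}) = \sum _{J \in NN(I)} \tilde{\boldsymbol {B}}_{J}(\boldsymbol {x}_{I})\, \boldsymbol {u}_{J}, \qquad \tilde{b}_{Jk}(\boldsymbol {x}_{I}) = \frac{1}{A_{I}} \int _{\Gamma _{I}} \phi _{J}(\boldsymbol {x})\, n_{k}(\boldsymbol {x})\, d\Gamma ,\]

with the entries \(\tilde{b}_{Jk}\) arranged in \(\tilde{\boldsymbol {B}}_{J}\) in the same way as the derivatives \(\partial \phi _{J}/\partial x_{k}\) are in the standard strain-displacement matrix, and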

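where \(NN(I)\) represents the set of nodes neighboring the point \(\boldsymbol {x}_{I}\). The approximation to the weak form of the problem leads to a stiffness matrix and a force vector, in the absence of body forces, that can be expressed as:

\[\boldsymbol {K}_{IJ} = \sum _{m=1}^{N} \tilde{\boldsymbol {B}}_{I}^{T}(\boldsymbol {x}_{m})\, \boldsymbol {C}\, \tilde{\boldsymbol {B}}_{J}(\boldsymbol {x}_{m})\, V_{m}^{n}, \qquad \boldsymbol {f}_{I} = \sum _{m=1}^{Nnb} \phi _{I}(\boldsymbol {x}_{m})\, \bar{\boldsymbol {t}}(\boldsymbol {x}_{m})\, V_{m}^{n-1},\]

where \(\boldsymbol {C}\) is the constitutive matrix, \(\bar{\boldsymbol {t}}\) the prescribed traction, N the number of nodes and \(V_{m}^{n}\) denotes the volume in dimension n of the Voronoi cell associated to node m. Nnb represents the number of nodes on the natural boundary.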
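In one dimension the boundary of each nodal cell reduces to two points with outward normals \(-1\) and \(+1\), so the smoothed gradient of a shape function is simply the difference of its values at the two cell ends divided by the cell length. The sketch below illustrates this, using piecewise linear hat functions as a stand-in for any meshless shape function with linear consistency. All function names are hypothetical.

```python
def hat(x, nodes, j):
    """Piecewise linear shape function of node j, for nodes in ascending order."""
    xj = nodes[j]
    if j > 0 and nodes[j - 1] <= x <= xj:
        return (x - nodes[j - 1]) / (xj - nodes[j - 1])
    if j < len(nodes) - 1 and xj <= x <= nodes[j + 1]:
        return (nodes[j + 1] - x) / (nodes[j + 1] - xj)
    return 0.0


def smoothed_gradient_row(nodes, shape, i):
    """Smoothed gradients [b_1(x_i), ..., b_n(x_i)] over the nodal cell of node i."""
    n = len(nodes)
    # 1D Voronoi cell of node i, clipped to the domain at the two end nodes.
    left = nodes[0] if i == 0 else 0.5 * (nodes[i - 1] + nodes[i])
    right = nodes[-1] if i == n - 1 else 0.5 * (nodes[i] + nodes[i + 1])
    length = right - left  # plays the role of A_I
    # Boundary integral of phi_J n: value at the right end minus value at the left end.
    return [(shape(right, nodes, j) - shape(left, nodes, j)) / length
            for j in range(n)]


def smoothed_strain(nodes, shape, i, u):
    """Smoothed strain at node i for nodal values u."""
    return sum(b * uj for b, uj in zip(smoothed_gradient_row(nodes, shape, i), u))
```

For nodal values sampled from a linear field, the smoothed strain equals the slope of the field at every node, including on irregular node distributions. No derivative of the shape functions is ever evaluated, which is one of the practical attractions of the technique.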

This nodal quadrature scheme has rendered excellent results when applied to the EFGM [23]. This technique is also very well suited for its application within the NEM, since most of the geometrical entities appearing in its computation (Voronoi cells, circumcenters, etc.) are in fact previously obtained in the NE shape function computation and can be easily stored with important computational saving.

Also especially noteworthy is the fact that SCNI leads to a truly nodal implementation of the Natural Element method (or whatever method it is applied to), which means that no recovery of secondary variables must be performed, nor nodal averaging, as in the traditional version of the Finite Element method or in previous non-linear versions of the NEM [55]. In Fig. 10 an example of application of this technique is shown over the geometry of a hollow cylinder [59]. It can be noticed how a Voronoi cell must be computed around each node and a quadrature scheme established on its boundary, usually by performing a division into triangles.

Fig. 10 Division of a hollow cylinder [59] into Voronoi cells. Note that concave regions need an additional triangulation to intersect Voronoi cells, defined on the convex hull of the node cloud.

Surprisingly or not, Quak [93, 94] found that by applying SCNI to standard finite elements very good results were obtained in terms of accuracy for very distorted meshes. In this reference, a bending test was simulated with five different clouds of nodes, see Fig. 11. It was found that finite elements provided very competitive results, comparable to those of max-ent approximations, and much better than EFG methods. Nowadays there is a plethora of nodal integration techniques developed for finite elements that has provided them with the best characteristics of meshless methods in terms of robustness to mesh distortion [20, 45, 76, 92].

Examples of application

Once meshless methods reached maturity, their application to forming process simulation became almost straightforward, as will be seen. There have been many applications in the field of solid (bulk) forming processes, as well as in fluid mechanics, but slightly fewer in sheet metal forming, for reasons that will become clearer later on. In this section we review the different applications in which meshless methods can be used to advantage.

Bulk forming processes

Bulk forming is perhaps the most natural application of meshless methods in the field of forming, and one of the first to which they were applied [1, 2, 22]. One of the most classical formulations for processes such as aluminum extrusion is to assume that, since plastic strains are much larger than elastic ones, the latter can be neglected. This leads to the so-called flow formulation (see [82, 110–112], just to cite some of the first and more recent works using this assumption), in which aluminum is assumed to behave like a non-Newtonian fluid, i.e.,

By assuming a rigid-viscoplastic constitutive law, and a von Mises plasticity criterion, one arrives at

where

is the effective strain rate. In particular, in [1, 2] it was assumed that the aluminum yield stress varied according to a Sellars-Tegart law:

and a coupled thermo-mechanical model was implemented. The key ingredient of this model was the use of an updated Lagrangian framework to describe the flow kinematics, i.e., the geometry is updated explicitly through

and where the velocity field \(\boldsymbol {v}\) is obtained after a consistent linearization of the variational problem. Since ‘mesh’ distortion produces no loss of accuracy, this very simple approach gives very appealing results for many forming processes, such as aluminum extrusion (already mentioned, but see also [5, 49]), friction stir welding [4], forging [102], casting [1], laser surface coating [60], machining [25], and many others.
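The ingredients of this flow formulation can be sketched as follows. The snippet is only an illustration under stated assumptions: it uses the textbook definition of the effective strain rate, \(\sqrt{2/3\,\boldsymbol {d}:\boldsymbol {d}}\), the usual inverse hyperbolic sine form of the Sellars-Tegart law, the rigid-viscoplastic viscosity \(\mu = \sigma_y/(3\bar{d})\), and a forward update of the nodal positions with the computed velocities. The exact expressions and material constants used in [1, 2] may differ, and all function and parameter names are hypothetical.

```python
import math
from typing import List, Sequence

R_GAS = 8.314  # universal gas constant, J/(mol K)


def effective_strain_rate(d: Sequence[Sequence[float]]) -> float:
    """Effective strain rate sqrt(2/3 d:d) of a 3x3 strain rate tensor."""
    dd = sum(d[i][j] * d[i][j] for i in range(3) for j in range(3))
    return math.sqrt(2.0 / 3.0 * dd)


def sellars_tegart_stress(rate: float, temperature: float, alpha: float,
                          a_coef: float, n_exp: float, q_act: float) -> float:
    """Flow stress from the inverse hyperbolic sine (Sellars-Tegart) law.

    Z = rate * exp(Q / (R T)) is the Zener-Hollomon parameter; alpha, a_coef,
    n_exp and q_act are material constants to be supplied by the user.
    """
    z = rate * math.exp(q_act / (R_GAS * temperature))
    return math.asinh((z / a_coef) ** (1.0 / n_exp)) / alpha


def viscosity(flow_stress: float, rate: float, rate_floor: float = 1e-8) -> float:
    """Equivalent viscosity of the rigid-viscoplastic fluid, sigma_y / (3 rate).

    The floor on the strain rate (an assumption) avoids division by zero
    in rigid zones.
    """
    return flow_stress / (3.0 * max(rate, rate_floor))


def update_positions(x: List[List[float]], v: List[List[float]],
                     dt: float) -> List[List[float]]:
    """Explicit updated Lagrangian step: each node moves with its own velocity."""
    return [[xi + vi * dt for xi, vi in zip(xn, vn)] for xn, vn in zip(x, v)]
```

Within a time step, the viscosity would be re-evaluated inside the non-linear iteration that yields the velocity field, and the node cloud would be moved only once that iteration has converged.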

Many practical aspects of forming simulation were studied in [3], in particular those related to computational cost and accuracy. Natural element and finite element methods were compared and, notably, the computational cost of NEM was found to be higher, particularly if Sibson shape functions are used. However, since these applications are highly non-linear, this extra cost is overshadowed by the time spent in obtaining consistent tangent stiffness matrices in the Newton-Raphson loop.

Fig. 12 shows the geometry of an extrusion test (only one half of the domain is simulated, by applying appropriate boundary conditions), together with equivalent strain rate contour plots for the finite element, Sibson-NEM and Laplace-NEM approximations, respectively. Note how the finite element approximation produces an artificial stress concentration near the symmetry plane, where nothing can provoke such a spurious stress level, so that it can only be due to mesh distortion. On the contrary, NEM (with both Sibson and Laplace approximations) produced excellent results, with no apparent spurious stress concentrations.

Especially noteworthy is the ability of meshless methods to accurately reproduce the process of porthole extrusion. Due to the particular geometry of the dies needed to obtain hollow profiles, porthole extrusion requires a simulation strategy able to take into account the separation of the material and its subsequent welding during extrusion. In [50] a detailed comparison of FE and NEM techniques was performed for the porthole die extrusion of AA-6082, and the results were compared with experiments. Fig. 13 compares the results obtained with different die geometries. In the model, it was assumed that proper welding requires a certain level of pressure to have been reached previously within the die chamber. A nodal implementation of NEM made it possible to track the pressure history at nodal positions, and thus to know whether proper welding had been achieved. In Fig. 13 blue nodes represent a lack of true welding after the porthole, whereas red nodes indicate a good process. It can be noticed that only the geometry shown in the middle figure produces good quality profiles. The rightmost figure, despite its apparently good geometry, shows that no proper welding is achieved (note the blue nodes all along the extrudate). Under internal pressure, this weld is expected to open again, as shown by experimental results.

Porthole die extrusion under different die geometries. Red nodes indicate good quality in the welding of aluminum after the porthole, whereas blue nodes indicate defects. Under these assumptions, only the central geometry will provide an appropriate quality in the extruded profile. The rightmost geometry, despite its apparently good geometry, will provide a profile that will open once it is subjected to internal pressure
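Because the nodes are material points, tracking a history variable such as the pressure reduces to a per-node bookkeeping operation. The following sketch assumes, purely for illustration, that welding is considered sound at a node when the peak pressure it has experienced exceeds a prescribed threshold; the actual criterion of [50], the threshold value and the function names are not taken from the source.

```python
from typing import Iterable, List


def update_peak_pressure(peak: List[float], pressure: Iterable[float]) -> List[float]:
    """Keep, for every node, the largest pressure seen so far along its path."""
    return [max(p_old, p_new) for p_old, p_new in zip(peak, pressure)]


def classify_welding(peak: List[float], threshold: float) -> List[str]:
    """Label each node 'good' or 'defect' (hypothetical threshold criterion)."""
    return ["good" if p >= threshold else "defect" for p in peak]


# Usage: three nodes followed over three time steps (made-up pressure values).
history = [[10.0, 5.0, 0.0], [20.0, 8.0, 3.0], [15.0, 30.0, 4.0]]
peak = [0.0, 0.0, 0.0]
for nodal_pressure in history:
    peak = update_peak_pressure(peak, nodal_pressure)
labels = classify_welding(peak, threshold=18.0)
```

No projection between meshes is involved: the stored value simply travels with the node, which is what makes this kind of criterion inexpensive in a nodal, updated Lagrangian setting.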

Sheet metal forming

The body of literature on meshless methods devoted to sheet metal forming is considerably smaller than that devoted to bulk forming. In some way this is natural, since meshless methods do not employ any connectivity dictated by the user to define a “mesh”. Therefore shells, which constitute a manifold geometry in three-dimensional space, are difficult to reproduce by a method based solely on a cloud of nodes.

Among the first works devoted to sheet metal forming one can cite [105] and [79]. In both, a kind of solid-shell approach was employed, i.e., several nodes were placed through the thickness of the shell. Very few works have been devoted to true shell formulations; to our knowledge, [97] can be considered the first one. In all these references, since no connectivity is established, a nodal spacing, h, must be prescribed in order to avoid computing distances between nodes along secant directions (instead of along directions tangent to the manifold).

It was not until very recently that a sound basis was established for the proper simulation of shells with meshless methods [85], in this case by employing max-ent approaches, although the method is completely general. Briefly, the proposed method is based on the assumption that a shell is actually a manifold in geometrical terms, i.e., each point of a shell locally resembles a Euclidean (flat) space. Therefore, in order to perform a consistent meshless analysis of shells, it is necessary to learn its manifold structure locally, by identifying its locally flat principal directions. This is done on a node-by-node basis by employing non-linear principal component analysis (PCA) techniques [103].
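The node-by-node identification of a locally flat frame can be illustrated with a deliberately simplified sketch. Instead of the non-linear techniques used in [85, 103], the snippet applies ordinary (linear) PCA to the k nearest neighbours of a node: the two directions of largest variance approximate the tangent plane and the remaining one approximates the normal. The function name, the neighbour search by Euclidean distance and the choice of k are assumptions made for illustration.

```python
import numpy as np


def local_tangent_frame(nodes: np.ndarray, center: int, k: int):
    """Return (tangent_basis, normal) at nodes[center] from its k nearest neighbours.

    `nodes` is an (N, 3) array of coordinates. The frame is obtained from a
    linear PCA (via SVD) of the centred neighbour patch.
    """
    dist = np.linalg.norm(nodes - nodes[center], axis=1)
    neighbours = np.argsort(dist)[: k + 1]  # the node itself is included
    patch = nodes[neighbours] - nodes[neighbours].mean(axis=0)
    # Rows of vt are the principal directions, sorted by decreasing variance.
    _, _, vt = np.linalg.svd(patch, full_matrices=False)
    return vt[:2], vt[2]
```

Distances and shape functions can then be evaluated in the two-dimensional coordinates obtained by projecting the neighbours onto the tangent basis, which avoids measuring along secant directions. A linear PCA of this kind is only adequate when the patch is small compared with the local radius of curvature.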

Fluid forming processes

Fluid forming processes cover a great variety of situations, ranging from casting [2, 107] to mould filling [84], and from Resin Transfer Moulding [54] to spin coating processes [33–35], in which a plethora of different models for polymers, among other materials, can be taken into account [26]. As in the case of bulk forming processes, meshless methods make an updated Lagrangian description of fluid flows easy [84]. This is particularly advantageous when free-surface flows are present, and of little help otherwise. However, nodal implementations can help in designing efficient algorithms when history-dependent variables are to be taken into account.

To exemplify how this affects the simulation of fluid flows, let us restrict ourselves to the Navier-Stokes equations, for instance:

The weak form of the problem associated with Eqs. 9a, 9b and 9c is:

and

where “:” denotes the doubly contracted tensor product and \(\boldsymbol {b}\) the vector of volumetric forces applied to the fluid. \(\boldsymbol {D}^{*}\) represents an admissible variation of the strain rate tensor, whereas \(\boldsymbol {v}^{*}\) represents, equivalently, an admissible variation of the velocity.

The second term on the right-hand side of Eq. 10 represents the inertia effects. Time discretization of this term amounts to discretizing the material derivative along the nodal trajectories, which are precisely the characteristic lines related to the advection operator. Thus, assuming that the flow kinematics at time \(t^{n-1} = (n-1) \Delta t\) are known, meshless methods allow one to proceed easily as follows, by virtue of the updated Lagrangian framework:

where \(\boldsymbol {X}\) represents the position at time \(t^{n-1}\) occupied by the particle located at position \(\boldsymbol {x}\) at present time \(t^{n}\), i.e.:

So we arrive at

and

where the superscript of all the variables corresponding to the current time step has been dropped for clarity.

The most difficult term in Eq. 12 is the second term of the right-hand side. The numerical integration of this term depends on the quadrature scheme employed [61].

If traditional Gauss-based quadratures on the Delaunay triangles are employed, it will be necessary to find the position at time \(t^{n-1}\) of the point occupying at time \(t^{n}\) the position of the integration point \(\boldsymbol {\xi }_{k}\) (see Fig. 14):

where \(\omega _{k}\) represents the weights associated to integration points \(\boldsymbol {\xi }_{k}\), and \(\boldsymbol {\Xi }_{k}\) corresponds to the position occupied at time \(t^{n-1}\) by the quadrature point \(\boldsymbol {\xi }_{k}\), see Fig. 14.

On the contrary, if some type of nodal integration, as in [59], is employed, this procedure becomes straightforward, with the only need to store nodal velocities at time step \(t^{n-1}\).
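The difference between the two quadrature choices can be made concrete with a short sketch. With nodal integration the material derivative is a difference of stored nodal values, because each node is itself a material point. With Gauss points, the position at \(t^{n-1}\) of each quadrature point has to be recovered first; here this is done by interpolating the previous nodal positions with the shape function values at the quadrature point, which is an assumption of this sketch and not necessarily the procedure of [61]. All names are hypothetical.

```python
from typing import List, Sequence, Tuple


def nodal_material_derivative(v_new: Sequence[float], v_old: Sequence[float],
                              dt: float) -> List[float]:
    """Dv/Dt at the nodes: nodes follow the flow, so only the velocities
    stored at the previous time step are needed."""
    return [(vn - vo) / dt for vn, vo in zip(v_new, v_old)]


def previous_position(shape_values: Sequence[float],
                      x_old_nodes: Sequence[Sequence[float]]) -> Tuple[float, ...]:
    """Position at the previous time step of a quadrature point.

    `shape_values` are the shape functions of the neighbouring nodes evaluated
    at the quadrature point in the current configuration; `x_old_nodes` are
    the positions of those same nodes at the previous time step.
    """
    dim = len(x_old_nodes[0])
    return tuple(
        sum(phi * x[c] for phi, x in zip(shape_values, x_old_nodes))
        for c in range(dim)
    )
```

After locating the previous position, the old velocity field still has to be interpolated there using the node cloud and shape functions of the previous step, which is the extra work that nodal integration avoids.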

In [72] a Lagrangian method that employs natural neighbor interpolation to construct the discrete form of the problem was presented. In that case, however, an implicit three-step fractional method was employed to perform the time integration. This approach needs stabilization if small time increments are chosen; see [72] for more details. In that reference, moreover, the method is truly a particle method, since nodes possess volume and mass associated with them.

If free-surface flows are considered, it is again of utmost importance to employ some technique for the reconstruction of the geometry of the evolving domain. While most meshless approaches do not employ any particular technique, thus producing results that depend on the support of the shape functions, shape constructors have been employed in a number of works [19, 61, 68, 71, 72, 84]. \(\alpha \)-shapes [29, 43, 44] are perhaps the most widely used shape constructors in this context. However, it is well known that this technique usually fails in the presence of holes or merging flows during the simulation, unless a very fine nodal cloud is employed. In [53] an improved \(\alpha \)-shape method was developed that takes into account not only geometrical features of the cloud of points, but also the history of the velocity field, in order to anticipate merging flows, the appearance of holes, etc., with a higher degree of accuracy. This method has given excellent results in the simulation of free-surface flows and also in the simulation of fluid-structure interactions [51].
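In two dimensions, the basic \(\alpha \)-shape construction amounts to filtering the Delaunay triangles of the node cloud by the size of their circumcircle. The sketch below assumes that the Delaunay triangulation is already available as a list of node index triples, and takes \(\alpha \) as a threshold on the circumradius (conventions differ between authors, and some use its inverse). It does not include the velocity-based improvements of [53], and the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def circumradius(a: Point, b: Point, c: Point) -> float:
    """Radius of the circle through three points (infinite if they are collinear)."""
    la, lb, lc = math.dist(b, c), math.dist(a, c), math.dist(a, b)
    area = 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))
    if area == 0.0:
        return math.inf
    return la * lb * lc / (4.0 * area)


def alpha_shape_triangles(points: Sequence[Point],
                          triangles: Sequence[Tuple[int, int, int]],
                          alpha: float) -> List[Tuple[int, int, int]]:
    """Keep the triangles whose circumradius does not exceed alpha."""
    return [
        tri for tri in triangles
        if circumradius(*(points[i] for i in tri)) <= alpha
    ]
```

The boundary of the domain is then formed by the edges that belong to exactly one retained triangle. Since \(\alpha \) is tied to the nodal spacing, a coarse cloud cannot resolve small holes or thin gaps between merging fronts, which is the limitation mentioned above.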

Particularly noteworthy is the ability of meshless methods to construct node-based approaches for fluids with complex constitutive equations [52]. Multiscale methods arising from kinetic theory [67] are a clear example of this. These models assume a constitutive equation of the type

where \(\boldsymbol {\tau }\) represents an extra-stress contribution coming from the micro-scale. To obtain this extra-stress contribution, kinetic theory provides also the equation governing the evolution of the probability distribution function \(\psi \) at the micro-level. This equation is known as the Fokker-Planck equation:

where \(\boldsymbol {A}\) represents a vector describing the drift exerted by the fluid on the function \(\psi \), and \(\boldsymbol {C}\) is a symmetric, positive-definite matrix accounting for Brownian effects in the model. \(D/Dt\) denotes the material derivative. Finally, the expression that relates the configuration state thus obtained to the corresponding state of stress is known as the Kramers formula:

where the brackets \(\langle \cdot \rangle \) denote an ensemble average over all the molecular conformational space at a physical point, and \(\boldsymbol {g}\) is some function of the configuration state, depending on the particular model considered.

The stochastic approach to these problems makes use of the equivalence between the Fokker-Planck equation, Eq. 14, and the following Itô stochastic differential equation [67]:

where

and \(\boldsymbol {W}\) represents a Wiener process. Eq. 15 applies along individual molecule trajectories.

Meshless methods allow molecule ensembles to be attached to the nodes, so that Eq. 15 can be integrated straightforwardly by the method of characteristics along the nodal paths [36]. The integration is performed by means of the so-called Euler-Maruyama scheme:

where n refers to the current time step and j to the individual molecule being integrated.
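A minimal scalar sketch of this procedure is given below. It assumes that the stochastic differential equation has the generic Itô form \(dX = A\,dt + B\,dW\), with a diffusion coefficient \(B\) related to \(\boldsymbol {C}\), and it advances an ensemble of realizations attached to one node with the Euler-Maruyama scheme. The bracket of the Kramers formula is then approximated by the arithmetic mean over the ensemble. The drift, the diffusion, the function \(g\) and all names are placeholders, not those of a particular kinetic theory model.

```python
import math
import random
from typing import Callable, List


def euler_maruyama_step(x: float, drift: Callable[[float], float],
                        diffusion: Callable[[float], float],
                        dt: float, rng: random.Random) -> float:
    """One Euler-Maruyama step: x + A(x) dt + B(x) dW, with dW ~ N(0, dt)."""
    dw = rng.gauss(0.0, math.sqrt(dt))
    return x + drift(x) * dt + diffusion(x) * dw


def advance_ensemble(ensemble: List[float], drift: Callable[[float], float],
                     diffusion: Callable[[float], float],
                     dt: float, rng: random.Random) -> List[float]:
    """Advance every realization j of the ensemble attached to a node."""
    return [euler_maruyama_step(x, drift, diffusion, dt, rng) for x in ensemble]


def ensemble_average(ensemble: List[float], g: Callable[[float], float]) -> float:
    """Monte Carlo estimate of <g(X)> over the realizations of one node."""
    return sum(g(x) for x in ensemble) / len(ensemble)
```

Since the ensemble travels with its node, no convective term has to be discretized for the micro-scale variables. The statistical error of the average decreases only as the inverse square root of the number of realizations per node.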

For instance, in [36] natural elements are used to study the swelling behavior of an entangled polymer modeled as a Doi-Edwards fluid. Four snapshots of the velocity field are shown in Fig. 15. Elastic effects are noticeable after the outlet of the channel. A minor loss of symmetry in the flow can be noticed, due mainly to statistical noise. Despite the very low number of realizations per node, this noise remains surprisingly low.

In [52], for instance, it is shown how meshless methods provide an approximation to the swelling flow of non-Newtonian fluids that improves on the accuracy of existing finite element approximations.

Conclusions

Meshless methods arose in the early nineties as a promising alternative to finite elements in problems where the generation of complex meshes constitutes a major issue or where mesh distortion provokes a loss of accuracy in the results. They provide a very flexible alternative for these cases, although the problems they initially presented required very active research for more than a decade. Today, some twenty years later, meshless methods have overcome most of their initial limitations and constitute an appealing alternative in many fields. Notably, material forming simulation is one such field.

Throughout this review it has been highlighted how meshless methods are now able to provide a very competitive alternative to finite element simulation in many fields, from bulk forming to complex fluid flows (and perhaps to a lesser extent in sheet metal forming). Their main strength is perhaps that they provide a very convenient way of performing updated Lagrangian descriptions of forming processes. This type of description is helpful when dealing with bulk forming, for instance, or when free-surface flows are present.

Notably, all the research activity generated by meshless methods has also helped to improve the properties of finite elements. It has been shown, for instance, how finite elements with stabilized conforming nodal integration provide very competitive results at a fraction of the computational cost of meshless methods. All this research activity is today, after some 20 years, helping us to understand why finite elements, which originated some sixty years ago, are still among us, and probably will be for many years to come.

Notes

We focus here on methods based on the weak form of the problem, although many methods exist that are based on the strong form and use a collocation approach, see [17]. However, their applicability to the simulation of forming processes is somewhat lower, due to aspects such as the description of the phenomena occurring in the vicinity of the boundary of the domain (contact, friction), and they are therefore not included here. We refer the interested reader to the aforementioned reviews or books on the field for detailed explanations.

References

Alfaro I, Bel D, Cueto E, Doblare M, Chinesta F (2006) Three-dimensional simulation of aluminium extrusion by the α-shape based natural element method. Comput Methods Appl Mech Eng 195(33–36):4269–4286. doi:10.1016/j.cma.2005.08.006. http://www.sciencedirect.com/science/article/pii/S0045782505003543

Alfaro I, Yvonnet J, Cueto E, Chinesta F, Doblare M (2006) Meshless methods with application to metal forming. Comput Methods Appl Mech Eng 195(48–49):6661–6675. doi:10.1016/j.cma.2004.10.017. http://www.sciencedirect.com/science/article/pii/S0045782505004809

Alfaro I, Yvonnet J, Chinesta F, Cueto E (2007) A study on the performance of natural neighbour-based galerkin methods. Int J Numer Methods Eng 71(12):1436–1465. doi:10.1002/nme.1993

Alfaro I, Fratini L, Cueto E, Chinesta F (2008) Numerical simulation of friction stir welding by natural element methods. Int J Mater Form 1(1):1079–1082. doi:10.1007/s12289-008-0206-x

Alfaro I, Gagliardi F, Olivera J, Cueto E, Filice L, Chinesta F (2009) Simulation of the extrusion of hollow profiles by natural element methods. Int J Mater Form 2(1):597–600. doi:10.1007/s12289-009-0614-6

Arroyo M, Ortiz M (2006) Local maximum-entropy approximation schemes: a seamless bridge between finite elements and meshfree methods. Int J Numer Methods Eng 65(13):2167–2202. doi:10.1002/nme.1534

Atluri S, Zhu T (2000) New concepts in meshless methods. Int J Numer Methods Eng 47:537–556

Atluri S, Kim HG, Cho JY (1999) A critical assessment of the truly meshless local Petrov-Galerkin and local boundary integral equation methods. Comput Mech 24:348–372

Babuška I, Melenk JM (1996) The partition of unity finite element method: basic theory and applications. Comp Meth Appl Mech Eng 4:289–314

Babuška I, Melenk JM (1997) The partition of unity method. Int J Numer Methods Eng 40:727–758

Babuška I, Banerjee U, Osborn J (2002) Meshless and generalized finite element methods: a survey of some major results. Tech. Rep. TICAM 02-03. Texas Institute for Computational and Applied Mathematics, University of Texas at Austin

Belikov VV, Semenov AY (1998) Non-sibsonian interpolation on arbitrary system of points in euclidean space and adaptive generating isolines algorithm. In: Cross M, Soni BK, Thompson JF, Hauser J, Eiseman PR (eds) Numerical grid generation in computational field simulations. University of Greenwich, London, pp 277–286

Belikov VV, Ivanov VD, Kontorovich VK, Korytnik SA, Semenov AY (1997) The non-sibsonian interpolation: a new method of interpolation of the values of a function on an arbitrary set of points. Comput Math Math Phys 37(1):9–15

Belytschko T, Lu YY, Gu L (1993) Crack propagation by element free galerkin methods. Adv Comput Methods Mater Model 180:268

Belytschko T, Lu YY, Gu L (1994) Element-free Galerkin methods. Int J Numer Methods Eng 37:229–256

Belytschko T, Krongauz Y, Organ D, Fleming M, Krysl P (1998) Meshless methods: an overview and recent developments. Comput Methods Appl Mech Eng 139:3–47

Belytschko T, Rabczuk T, Huerta A, Fernández-Méndez S (2004) Encyclopaedia of computational mechanics, chap Meshfree Methods. Wiley. doi:10.1002/0470091355ecm005

Birknes J, Pedersen G (2006) A particle finite element method applied to long wave run-up. Int J Numer Methods Fluids 52:237–261

Cante J, Riera MD, Oliver J, Prado J, Isturiz A, Gonzalez C (2011) Flow regime analyses during the filling stage in powder metallurgy processes: experimental study and numerical modelling. Granul Matter 13(1):79–92. doi:10.1007/s10035-010-0225-4

Castellazzi G, Krysl P (2009) Displacement-based finite elements with nodal integration for Reissner-Mindlin plates. Int J Numer Methods Eng 80(2):135–162. doi:10.1002/nme.2622

Chen JS, Wang HP (2000) New boundary condition treatments in meshfree computation of contact problems. Comput Methods Appl Mech Eng 187(3–4):441–468. doi:10.1016/S0045-7825(00)80004-3

Chen JS, Roque CMOL, Pan C, Button ST (1998) Analysis of metal forming process based on meshless method. J Mater Process Technol 80–81(0):642–646. doi:10.1016/S0924-0136(98)00171-X

Chen JS, Wu CT, Yoon S, You Y (2001) A stabilized conforming nodal integration for galerkin mesh-free methods. Int J Numer Methods Eng 50:435–466

Chinesta F, Cueto E (2007) Advances in material forming. ESAFORM: 10 years on chap. New and advanced numerical strategies for material forming. Springer

Chinesta F, Lorong P, Ryckelynck D, Martinez MA, Cueto E, Doblare M, Coffignal M, Touratier M, Yvonnet J (2004) Thermomechanical cutting model discretisation. Eulerian or Lagrangian, Mesh or Meshless? Int J Form Process 7:83–97

Chinesta F, Ammar A, Mackley M, Cueto E, Regnier G, Chatel S (2009) Modeling nanocomposites: from rheology to forming processes simulation. Int J Mater Form 2(1):141–144. doi:10.1007/s12289-009-0453-5

Chinesta F, Cescotto S, Cueto E, Lorong P (2009) La méthode des éléments naturels dans la simulation des structures et des procédés. Hermes Lavoisier, Paris

Chinesta F, Cescotto S, Cueto E, Lorong P (2011) Natural element method for the simulation of structures and processes. Wiley, London

Cueto E, Doblare M, Gracia L (2000) Imposing essential boundary conditions in the natural element method by means of density-scaled α-shapes. Int J Numer Methods Eng 49–4:519–546

Cueto E, Calvo B, Doblare M (2002) Modeling three-dimensional piece-wise homogeneous domains using the α-shape based natural element method. Int J Numer Methods Eng 54:871–897

Cueto E, Cegonino J, Calvo B, Doblare M (2003) On the imposition of essential boundary conditions in natural neighbour Galerkin methods. Commun Numer Methods Eng 19(5):361–376

Cueto E, Sukumar N, Calvo B, Martinez MA, Cegonino J, Doblare M (2003) Overview and recent advances in natural neighbour Galerkin methods. Arch Comput Methods Eng 10(4):307–384

Cueto E, Ma A, Chinesta F, Mackley M (2008) Numerical simulation of spin coating processes involving functionalised carbon nanotube suspensions. Int J Mater Form 1(2):89–99. doi:10.1007/s12289-008-0377-5

Cueto E, Ma A, Chinesta F, Mackley M (2008) Numerical simulation of spin coating processes with carbon nanotubes suspensions. Int J Mater Form 1(1):711–714. doi:10.1007/s12289-008-0314-7

Cueto E, Monge R, Chinesta F, Poitou A, Alfaro I, Mackley M (2010) Rheological modeling and forming process simulation of cnt nanocomposites. Int J Mater Form 3(2):1327–1338. doi:10.1007/s12289-009-0659-6

Cueto E, Laso M, Chinesta F (2011) Meshless stochastic simulation of micro-macro kinetic theory models. Int J Multiscale Comput Eng 9(1):1–16

Cyron CJ, Arroyo M, Ortiz M (2009) Smooth, second order, non-negative meshfree approximants selected by maximum entropy. Int J Numer Methods Eng 79(13):1605–1632. doi:10.1002/nme.2597

De S, Bathe KJ (2001) The method of finite spheres with improved numerical integration. Comput Struct 79:2183–2196

De S, Bathe KJ (2001) Towards an efficient meshless computational technique: the method of finite spheres. Eng Comput 18:170–192

Delaunay B (1934) Sur la Sphère Vide. A la memoire de Georges Voronoi. Izvestia Akademii Nauk SSSR, Otdelenie Matematicheskii i Estestvennyka Nauk 7:793–800

Dolbow J, Belytschko T (1999) Numerical integration of the Galerkin weak form in meshfree methods. Comput Math Model 23:219–230

Duarte CAM, Oden JT (1996) An h-p adaptive method using clouds. Comput Methods Appl Mech Eng 139:237–262

Edelsbrunner H, Mücke E (1994) Three dimensional alpha shapes. ACM Trans Graph 13:43–72

Edelsbrunner H, Kirkpatrick DG, Seidel R (1983) On the shape of a set of points in the plane. IEEE Trans Inf Theory 29(4):551–559

Elmer W, Chen J, Puso M, Taciroglu E (2012) A stable, meshfree, nodal integration method for nearly incompressible solids. Finite Elem Anal Des 51:81–85. doi:10.1016/j.finel.2011.11.001, http://www.sciencedirect.com/science/article/pii/S0168874X11002150

Farin G (2002) Curves and surfaces for CAGD. Morgan Kaufmann, San Francisco

Fernandez-Mendez S, Huerta A (2002) Coupling finite elements and particles for adaptivity: an application to consistently stabilized convection-diffusion. In: Meshfree methods for partial differential equations. Lecture notes in computational science and engineering, vol 26. Springer-Verlag, pp 117–129

Fernández-Méndez S, Huerta A (2004) Imposing essential boundary conditions in mesh-free methods. Comput Methods Appl Mech Eng 193:1257–1275

Filice L, Alfaro I, Gagliardi F, Cueto E, Micari F, Chinesta F (2009) A preliminary comparison between finite element and meshless simulations of extrusion. J Mater Process Technol 209(6): 3039–3049. doi:10.1016/j.jmatprotec.2008.07.013. http://www.sciencedirect.com/science/article/pii/S0924013608005694

Gagliardi F, Alfaro I, Ambrogio G, Filice L, Cueto E (2013) NEM-FEM comparison on porthole die extrusion of AA-6082. J Mech Sci Technol 27(4):1089–1095. doi:10.1007/s12206-013-0229-1

Galavis A, Gonzalez D, Cueto E, Chinesta F, Doblare M (2007) A natural element updated lagrangian approach for modelling fluid structure interactions. Eur J Comput Mech/Revue Europeenne de Mecanique Numerique 16(3–4):323–336. doi:10.3166/remn.16.323-336

Galavis A, Gonzalez D, Cueto E (2012) A natural neighbour Lagrange-Galerkin method for the simulation of Newtonian and Oldroyd-B free surface flows. Int J Numer Methods Fluids 70(7):860–885. doi:10.1002/fld.2718

Galavis A, Gonzalez D, Alfaro I, Cueto E (2008) Improved boundary tracking in meshless simulations of free-surface flows. Comput Mech 42(3):467–479. doi:10.1007/s00466-008-0263-5

Garcia J, Gascon L, Cueto E, Ordeig I, Chinesta F (2009) Meshless methods with application to liquid composite molding simulation. Comput Methods Appl Mech Eng 198(33–36):2700–2709. doi:10.1016/j.cma.2009.03.010, http://www.sciencedirect.com/science/article/pii/S0045782509001352

Garcia-Aznar JM, Cueto E, Doblare M (2000) Simulation of bone internal remodeling by means of the α-shape based Natural Element Method. In: Proceedings of the ECCOMAS conference. Barcelona

Gavete L, Benito JJ, Falco S, Ruiz A (2000) Implementation of essential boundary conditions in a meshless method. Commun Numer Methods Eng 16(6):409–421. doi:10.1002/1099-0887(200006)16:6<409::AID-CNM349>3.0.CO;2-Z

Gingold R, Monaghan JJ (1977) Smoothed particle hydrodynamics: theory and applications to non-spherical stars. Mon Not Roy Astron Soc 181:375–389

Gonzalez D, Cueto E, Doblare M (2004) Volumetric locking in natural neighbour Galerkin methods. Int J Numer Methods Eng 61:611–632

Gonzalez D, Cueto E, Martinez MA, Doblare M (2004) Numerical integration in natural neighbour Galerkin methods. Int J Numer Methods Eng 60(12):2077–2104

Gonzalez D, Bel D, Cueto E, Chinesta F, Doblare M (2007) Natural neighbour strategies for the simulation of laser surface coating processes. Int J Form Process 10:89–108

Gonzalez D, Cueto E, Chinesta F, Doblare M (2007) A natural element updated lagrangian strategy for free-surface fluid dynamics. J Comput Phys 223(1):127–150. doi:10.1016/j.jcp.2006.09.002, http://www.sciencedirect.com/science/article/pii/S0021999106004293

Gonzalez D, Cueto E, Doblare M (2008) Higher-order natural element methods: towards an isogeometric meshless method. Int J Numer Methods Eng 74(13):1928–1954. doi:10.1002/nme.2237

Gonzalez D, Cueto E, Doblare M (2009) A high order method using max-ent approximation schemes. Int J Mater Form 2(1):577–580. doi:10.1007/s12289-009-0627-1

Gonzalez D, Cueto E, Doblare M (2010) A higher order method based on local maximum entropy approximation. Int J Numer Methods Eng 83(6):741–764. doi:10.1002/nme.2855

Gosz J, Liu WK (1996) Admissible approximations for essential boundary conditions in the reproducing kernel particle method. Comput Mech 19:120–135

Guenther FC, Liu WK (1998) Implementation of boundary conditions for meshless methods. Comput Methods Appl Mech Eng 163(1-4):205–230

Oettinger HC (1996) Stochastic processes in polymeric fluids. Springer, New York

Hartmann S, Weyler R, Oliver J, Cante JC, Hernandez JA (2010) A 3D frictionless contact domain method for large deformation problems. Comput Model Eng Sci 55(3):211–269

Hiyoshi H, Sugihara K (1999) Two generalizations of an interpolant based on Voronoi diagrams. Int J Shape Model 5(2):219–231

Huerta A, Fernández-Méndez S (2000) Enrichment and coupling of the finite element and meshless methods. Int J Numer Methods Eng 48(11):1615–1636

Idelsohn SR, Onate E (2006) To mesh or not to mesh: that is the question. Comput Methods Appl Mech Eng 195(37–40):4681–4696

Idelsohn SR, Onate E, del Pin F (2004) The particle finite element method: a powerful tool to solve incompressible flows with free-surfaces and breaking waves. Int J Numer Methods Eng 61(7):964–989

Illoul L, Lorong P (2011) On some aspects of the CNEM implementation in 3D in order to simulate high speed machining or shearing. Comput Struct 89(11–12):940–958. doi:10.1016/j.compstruc.2011.01.018

Illoul LA, Le Menach Y, Clenet S, Chinesta F (2008) A mixed finite element/meshless natural element method for simulating rotative electromagnetic machines. Eur Phys J Appl Phys 43:197–208. doi:10.1051/epjap:2008102, http://www.epjap.org/action/article_S128600420800102X

Krongauz Y, Belytschko T (1996) Enforcement of essential boundary conditions in meshless approximations using finite elements. Comput Methods Appl Mech Eng 131(1–2):133–145. doi:10.1016/0045-7825(95)00954-X, http://www.sciencedirect.com/science/article/pii/004578259500954X

Krysl P, Zhu B (2008) Locking-free continuum displacement finite elements with nodal integration. Int J Numer Methods Eng 76(7):1020–1043. doi:10.1002/nme.2354

Li S, Liu WK (2002) Meshfree and particle methods and their applications. Appl Mech Rev 55:1–34

Liu GR (2002) Mesh free methods: moving beyond the finite element method. CRC Press

Liu H, Xing Z, Sun Z, Bao J (2011) Adaptive multiple scale meshless simulation on springback analysis in sheet metal forming. Eng Anal Boundary Elem 35(3):436–451. doi:10.1016/j.enganabound.2010.06.025, http://www.sciencedirect.com/science/article/pii/S0955799710002614

Liu WK, Chen Y (1995) Wavelet and multiple scale reproducing kernel methods. Int J Numer Methods Fluids 21:901–931

Liu WK, Jun S, Li S, Adee J, Belytschko T (1995) Reproducing kernel particle methods. Int J Numer Methods Eng 38:1655–1679

Lof J, Blokhuis Y (2002) FEM simulations of the extrusion of complex thin-walled aluminium sections. J Mater Process Technol 122:344–354

Lucy LB (1977) A numerical approach to the testing of the fission hypothesis. Astron J 82(12):1013–1024

Martinez MA, Cueto E, Alfaro I, Doblare M, Chinesta F (2004) Updated lagrangian free surface flow simulations with natural neighbour Galerkin methods. Int J Numer Methods Eng 60(13):2105–2129

Millan D, Rosolen A, Arroyo M (2013) Nonlinear manifold learning for meshfree finite deformation thin-shell analysis. Int J Numer Methods Eng 93(7):685–713. doi:10.1002/nme.4403

Nayroles B, Touzot G, Villon P (1992) Generalizing the finite element method: diffuse approximation and diffuse elements. Comput Mech 10:307–318

Nguyen VP, Rabczuk T, Bordas S, Duflot M (2008) Meshless methods: a review and computer implementation aspects. Math Comput Simul 79(3):763–813. doi:10.1016/j.matcom.2008.01.003, http://www.sciencedirect.com/science/article/pii/S0378475408000062

Oliver J, Cante JC, Weyler R, Gonzalez C, Hernandez J (2007a) Particle finite element methods in solid mechanics problems. In: Onate E, Owen R (eds) Computational plasticity, computational methods in applied sciences, vol 7. European Community Computat Methods Appl Sci., Springer, Dordrecht, pp 87–103. 8th international conference on computational plasticity, CIMNE UPC, Barcelona, Sep. 05-08 2005. doi:10.1007/978-1-4020-6577-4_6

Oliver J, Cante JC, Weyler R, Hernandez J (2007). Possibilities of particle finite element methods in industrial forming processes. In: Cueto E, Chinesta F (eds) 10th ESAFORM Conference on Material Forming, Pts A and B. European Sci Assoc Mat Forming; European Community Computat Methods Appl Sci; Spanish Minist Educ & Sci; Reg Govt Aragon; Univ Zaragoza. AMER INST PHYSICS. 2 HUNTINGTON QUADRANGLE, STE 1NO1, MELVILLE, NY 11747-4501 USA. AIP CONFERENCE PROCEEDINGS, vol 907, pp 1484–1489. 10th ESAFORM Conference on Material Forming, Zaragoza, SPAIN, APR 18-20, 2007

Organ D, Fleming M, Terry T, Belytschko T (1996) Continuous meshless approximations for nonconvex bodies by diffraction and transparency. Comput Mech 18(3):225–235. doi:10.1007/BF00369940

Ortiz A, Puso M, Sukumar N (2011) Maximum-entropy meshfree method for incompressible media problems. Finite Elem Anal Des 47(6):572–585. doi:10.1016/j.finel.2010.12.009. The Twenty-Second Annual Robert J. Melosh Competition. http://www.sciencedirect.com/science/article/pii/S0168874X10002040

Puso MA, Chen JS, Zywicz E, Elmer W (2008) Meshfree and finite element nodal integration methods. Int J Numer Methods Eng 74(3):416–446. doi:10.1002/nme.2181

Quak W, Boogaard A, Huetink J (2009) Meshless methods and forming processes. Int J Mater Form 2(1):585–588. doi:10.1007/s12289-009-0442-8

Quak W, Boogaard A, Gonzalez D, Cueto E (2011) A comparative study on the performance of meshless approximations and their integration. Comput Mech 48(2):121–137. doi:10.1007/s00466-011-0577-6

Sibson R (1980) A vector identity for the Dirichlet tessellation. Math Proc Camb Philos Soc 87:151–155

Sibson R (1981) A brief description of natural neighbour interpolation. In: Barnett V (ed) Interpreting multivariate data. Wiley, New York, pp 21–36

Sidibe K, Li G (2012) A meshfree simulation of the draw bending of sheet metal. Int J Sci Eng Res 3(10):1–5. doi:10.1007/s004660050463

Sukumar N (2004) Construction of polygonal interpolants: a maximum entropy approach. Int J Numer Methods Eng 61(12):2159–2181

Sukumar N, Moran B (1999) \(C^{1}\) Natural neighbour interpolant for partial differential equations. Numer Methods Partial Differential Equations 15(4):417–447

Sukumar N, Moran B, Belytschko T (1998) The natural element method in solid mechanics. Int J Numer Methods Eng 43(5):839–887

Sukumar N, Moran B, Semenov AY, Belikov VV (2001) Natural neighbor Galerkin methods. Int J Numer Methods Eng 50(1):1–27

Sukumar N, Dolbow J, Devan A, Yvonnet J, Chinesta F, Ryckelynck D, Lorong P, Alfaro I, Martinez MA, Cueto E, Doblare M (2005) Meshless methods and partition of unity finite elements. Int J Form Process 8:409–427

Tenenbaum JB, de Silva V, Langford JC (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290:2319–2323

Thiessen AH (1911) Precipitation averages for large areas. Mon Weather Rev 39:1082–1084

Yoon S, Wu CT, Wang HP, Chen JS (2000) Efficient meshfree formulation for metal forming simulations. J Eng Mater Technol 123(4):64–646

Yvonnet J, Ryckelynck D, Lorong P, Chinesta F (2004) A new extension of the Natural Element method for non-convex and discontinuous problems: the Constrained Natural Element method. Int J Numer Methods Eng 60(8):1452–1474

Yvonnet J, Chinesta F, Lorong P, Ryckelynck D (2005) The constrained natural element method (c-nem) for treating thermal models involving moving interfaces. Int J Thermal Sci 44(6):559–569. doi:10.1016/j.ijthermalsci.2004.12.007, http://www.sciencedirect.com/science/article/pii/S1290072905000347

Yvonnet J, Villon P, Chinesta F (2006) Natural element approximations involving bubbles for treating mechanical models in incompressible media. Int J Numer Methods Eng 66(7):1125–1152. doi:10.1002/nme.1586

Yvonnet J, Villon P, Chinesta F, Griebel M (2007) Bubble and Hermite natural element approximations. In: Schweitzer M (ed) Meshfree methods for partial differential equations III. Lecture notes in computational science and engineering, vol 57. Springer, Berlin, pp 283–298

Zienkiewicz OC, Godbole PN (1974) Flow of plastic and visco-plastic solids with special reference to extrusion and forming processes. Int J Numer Methods Eng 8:3–16

Zienkiewicz OC, Onate E, Heinrich JC (1978) Plastic flow in metal forming. (I) Coupled thermal (II) Thin sheet forming. In: Applications of numerical methods to forming processes. AMD-vol 28, pp 107–120

Zienkiewicz OC, Jain PC, Onate E (1978) Flow of solids during forming and extrusion: some aspects of numerical solutions. Int J Solids Struct 14:15–38

Additional information

This work has been partially supported by the Spanish Ministry of Economy and Competitiveness, through grant number CICYTDPI2011-27778-C02-01.

Cite this article

Cueto, E., Chinesta, F. Meshless methods for the simulation of material forming. Int J Mater Form 8, 25–43 (2015). https://doi.org/10.1007/s12289-013-1142-y

DOI: https://doi.org/10.1007/s12289-013-1142-y