Abstract

In this paper, we introduce an impulsive delayed matrix function and give an estimate of its norm. With the help of the impulsive delayed Cauchy matrix and the variation of constants method, we obtain a representation of solutions to linear impulsive delay differential equations. Moreover, we derive some sufficient conditions guaranteeing that the trivial solution is locally asymptotically stable. Finally, two examples are given to illustrate our theoretical results.



1 Introduction

In recent decades, there have been only a few developments in seeking explicit formulas of solutions to delay differential/discrete equations by introducing continuous/discrete delayed exponential matrices [1, 2]. One of the main advantages of the continuous/discrete delayed exponential matrix is that it allows the classical idea of representing solutions of linear ODEs to be transferred to linear delay differential/discrete equations. For further work, one can refer to existence and stability results for several classes of delay differential/discrete equations [3,4,5,6,7,8,9,10,11,12,13] and to some relative controllability problems [14,15,16,17].

Impulsive delay differential equations are widely used to characterize situations subject to abrupt changes in their states that depend on the previous time interval. For recent developments in theory and applications, one can refer to [18,19,20,21] for the deterministic case and the references therein. We also remark that there is interesting work on impulsive stochastic delay differential equations with Markovian jumps [22,23,24,25,26,27,28], where classical approaches are used to address the exponential stability problem. More precisely, Lyapunov–Krasovskii functionals, a stochastic version of the Razumikhin technique and linear matrix inequality techniques are used to derive sufficient conditions ensuring the mean-square exponential stability of the trivial solution.

In general, even for linear impulsive delay differential equations, it is difficult to derive an explicit representation of solutions by adapting the approach used for linear impulsive differential equations, where solutions are expressed through the impulsive Cauchy matrix [29, p.108, (3.3)], unless an impulsive delayed Cauchy matrix is available.

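In this paper, we first introduce the impulsive delayed Cauchy matrix and seek formulas of solutions to the following linear impulsive delay differential equations:

$$\begin{aligned} \left\{ \begin{array}{ll} \dot{x}(t)=Ax(t)+Bx(t-\tau ), &{} t\ge 0,~t\ne t_{k},\\ \Delta x(t_{k})=C_{k}x(t_{k}), &{} k=1,2,\ldots ,\\ x(t)=\varphi (t), &{} -\tau \le t\le 0, \end{array}\right. \end{aligned}$$(1)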

where \(A,B,C_{i}\) are constant \(n\times n\) matrices with \(AB=BA\), \(AC_{i}=C_{i}A\) and \(BC_{i}=C_{i}B\) for each \(i=1,2,\ldots \), \(\varphi \in C_{\tau }^1:= C^1([-\tau ,0],\mathbb {R}^n)\), and \(x(t)\in \mathbb {R}^{n}\). The impulse times satisfy \(0=t_{0}<t_{1}<\cdots< t_{k}<\cdots \) and \(\lim \limits _{k\rightarrow +\infty }t_{k}=\infty \); the jump is \(\Delta x(t_{k}):=x(t_{k}^{+})-x(t_{k}^{-})\), where \(x(t_{k}^{+})=\lim \limits _{\epsilon \rightarrow 0^{+}}x(t_{k}+\epsilon )\) and \(x(t_{k}^{-})=x(t_{k})\) represent the right and left limits of x(t) at \(t=t_{k}\), respectively.
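To make the roles of the delay and of the impulsive conditions concrete, here is a minimal forward-Euler (method of steps) sketch for simulating (1). It is not the representation method developed in this paper; it simply steps the delay equation on a uniform grid and applies the jump \(x(t_{k}^{+})=(I+C_{k})x(t_{k})\) at each impulse time. The function name `simulate_impulsive_dde` and its arguments are hypothetical, and the sketch assumes that \(\tau \) and every \(t_{k}\) are integer multiples of the step h.

```python
import numpy as np


def simulate_impulsive_dde(A, B, C, tau, phi, impulse_times, T, h):
    """Forward-Euler sketch (hypothetical helper) for
        x'(t) = A x(t) + B x(t - tau),   t >= 0, t != t_k,
        x(t_k^+) = x(t_k) + C_k x(t_k),  k = 1, 2, ...,
        x(t) = phi(t),                   -tau <= t <= 0.
    C is a callable k -> C_k. Assumes tau and every t_k are multiples of h."""
    m = int(round(tau / h))
    if m < 1 or not np.isclose(m * h, tau):
        raise ValueError("tau must be a positive integer multiple of h")
    jumps = {}
    for k, tk in enumerate(impulse_times, start=1):
        j = int(round(tk / h))
        if not np.isclose(j * h, tk):
            raise ValueError("impulse times must lie on the grid")
        jumps[j] = k
    # xs[i] approximates x(-tau + i*h); the first m + 1 entries sample phi.
    xs = [np.asarray(phi(-tau + i * h), dtype=float) for i in range(m + 1)]
    I = np.eye(xs[0].size)
    for j in range(int(round(T / h))):     # one step from t = j*h to (j+1)*h
        x_now, x_del = xs[-1], xs[-1 - m]  # x(t) and x(t - tau)
        xs.append(x_now + h * (A @ x_now + B @ x_del))
        if j + 1 in jumps:                 # t_k = (j+1)*h: apply the impulse
            xs[-1] = (I + C(jumps[j + 1])) @ xs[-1]
    t = -tau + h * np.arange(len(xs))
    return t, np.array(xs)


# Usage: with A = B = 0 and C_k = I, the state changes only at the impulses,
# doubling at t = 1 and again at t = 2.
zero = np.zeros((1, 1))
t, x = simulate_impulsive_dde(zero, zero, lambda k: np.eye(1), tau=0.2,
                              phi=lambda s: np.array([1.0]),
                              impulse_times=[1.0, 2.0], T=2.5, h=0.1)
print(x[-1])  # [4.]
```

Because the grid stores one value per node, the value kept at \(t_{k}\) is the right limit \(x(t_{k}^{+})\); this is the value needed for the Euler steps starting at \(t_{k}\) and at \(t_{k}+\tau \), so the left-continuous convention \(x(t_{k})=x(t_{k}^{-})\) of (1) is not violated on any open interval.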

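Secondly, we seek a representation of solutions to the linear impulsive nonhomogeneous delay differential equations

$$\begin{aligned} \left\{ \begin{array}{ll} \dot{x}(t)=Ax(t)+Bx(t-\tau )+f(t), &{} t\ge 0,~t\ne t_{k},\\ \Delta x(t_{k})=C_{k}x(t_{k}), &{} k=1,2,\ldots ,\\ x(t)=\varphi (t), &{} -\tau \le t\le 0. \end{array}\right. \end{aligned}$$(2)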

We point out that the main innovation is the construction of the impulsive delayed Cauchy matrix for (1) together with an estimate of its norm. Further, we obtain explicit formulas of solutions to (1) and (2) by virtue of the newly constructed impulsive delayed Cauchy matrix. Moreover, we present two sufficient conditions guaranteeing that the trivial solution of (1) is locally asymptotically stable. Next, we show that, under suitable conditions, the trivial solution of impulsive delay differential equations with a nonlinear term is also locally asymptotically stable. Finally, we give two numerical examples to illustrate our theoretical results.

The rest of the paper is organized as follows. In Sect. 2, we recall some notations and definitions, introduce the impulsive delayed Cauchy matrix, estimate its norm, and show that it is the fundamental matrix for linear impulsive delay differential equations. In Sect. 3, we give explicit formulas of solutions to linear impulsive homogeneous/nonhomogeneous delay differential equations via the impulsive delayed Cauchy matrix and the variation of constants method. In Sect. 4, sufficient conditions ensuring local asymptotic stability of solutions are presented. Examples illustrating our theoretical results are given in the final section.

2 Preliminaries

For any \(x\in \mathbb {R}^n\) and \(A\in \mathbb {R}^{n\times n}\), we use the vector norm \(\Vert x\Vert =\max _{1\le i \le n} |x_{i}|\) and the matrix norm \(\Vert A\Vert =\max _{1\le i \le n}\sum _{j=1}^{n} |a_{ij}|\), respectively, where \(x_{i}\) and \(a_{ij}\) are the entries of the vector x and the matrix A. Let \(L(\mathbb {R}^n)\) be the space of bounded linear operators on \(\mathbb {R}^n\). Denote by \(C(\mathbb {R}^{+}, \mathbb {R}^n)\) the Banach space of bounded continuous vector-valued functions from \(\mathbb {R}^{+}\) to \(\mathbb {R}^n\) endowed with the norm \(\Vert x\Vert _{C}=\sup _{t\in \mathbb {R}^{+}}\Vert x(t)\Vert \). We introduce the space \(C^1(\mathbb {R}^{+}, \mathbb {R}^n)=\{x\in C(\mathbb {R}^{+}, \mathbb {R}^n): \dot{x}\in C(\mathbb {R}^{+}, \mathbb {R}^n) \}\). Denote \(PC(\mathbb {R}^{+},\mathbb {R}^n):=\{x:\mathbb {R}^{+} \rightarrow \mathbb {R}^n:x\in C((t_{i},t_{i+1}],\mathbb {R}^n),~i=0,1,2,\ldots \}\) and \(PC^1(\mathbb {R}^{+},\mathbb {R}^n):=\{x:\mathbb {R}^{+} \rightarrow \mathbb {R}^n:\dot{x}\in PC(\mathbb {R}^{+},\mathbb {R}^n)\}\).
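The two norms above are the maximum norm on vectors and the matrix norm it induces (maximum absolute row sum). A minimal NumPy sketch, with hypothetical helper names `vec_norm` and `mat_norm` and an arbitrary sample matrix, shows how they can be evaluated and checks the compatibility inequality \(\Vert Ax\Vert \le \Vert A\Vert \Vert x\Vert \):

```python
import numpy as np


def vec_norm(x):
    """||x|| = max_i |x_i|."""
    return float(np.max(np.abs(x)))


def mat_norm(A):
    """||A|| = max_i sum_j |a_ij|, the norm induced by vec_norm."""
    return float(np.max(np.sum(np.abs(A), axis=1)))


A = np.array([[1.0, -2.0], [0.5, 0.5]])   # arbitrary sample matrix
x = np.array([3.0, -4.0])
print(vec_norm(x))                                      # 4.0
print(mat_norm(A))                                      # 3.0
print(np.isclose(mat_norm(A), np.linalg.norm(A, np.inf)))  # True
print(vec_norm(A @ x) <= mat_norm(A) * vec_norm(x))     # True
```

NumPy's `np.linalg.norm(A, np.inf)` computes the same maximum row sum, so either form can be used in the numerical checks of Sect. 5.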

Definition 2.1

A function \(x\in C^1([-\tau ,0],\mathbb {R}^n)\cup PC^1(\mathbb {R}^{+},\mathbb {R}^n)\) is called a solution of (1) (or (2)) if \(x(t)=\varphi (t)\) for \(-\tau \le t\le 0\) and x satisfies the first and second equations in (1) (or (2)).

Definition 2.2

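(see [1, (11)] or [5, (11)]) \(e_{\tau }^{Bt}:\mathbb {R}\rightarrow \mathbb {R}^{n\times n}\) is called the delayed matrix exponential if

$$\begin{aligned} e_{\tau }^{Bt}=\left\{ \begin{array}{ll} \Theta , &{} -\infty<t<-\tau ,\\ I, &{} -\tau \le t<0,\\ I+B\frac{t}{1!}+B^{2}\frac{(t-\tau )^{2}}{2!}+\cdots +B^{k}\frac{(t-(k-1)\tau )^{k}}{k!}, &{} (k-1)\tau \le t<k\tau ,~k=1,2,\ldots , \end{array}\right. \end{aligned}$$(3)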

where B is a constant \(n\times n\) matrix, \(\Theta \) and I are the zero and identity matrices, respectively.

Lemma 2.3

(see [1, Lemma 4] or [5, p.2253]) For all \(t\in \mathbb {R}\), \( \frac{d}{dt}e_{\tau }^{Bt}= Be_{\tau }^{B(t-\tau )}. \)

Lemma 2.4

(see [8, Lemma 12]) If \(\Vert B\Vert \le \alpha e^{\alpha \tau }\), \(\alpha \in \mathbb {R}^{+}\), then \( \Vert e_{\tau }^{B(t-\tau )}\Vert \le e^{\alpha t},~t\in \mathbb {R}. \)
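Definition 2.2 and Lemmas 2.3 and 2.4 are easy to check numerically. The sketch below implements \(e_{\tau }^{Bt}\) directly from its piecewise-polynomial definition (the name `delayed_exp` and the sample matrix B are hypothetical), verifies Lemma 2.3 with a central finite difference at a point away from the breakpoints \(k\tau \), and checks the bound of Lemma 2.4 on a grid for an \(\alpha \) with \(\Vert B\Vert \le \alpha e^{\alpha \tau }\) in the max-row-sum norm of Sect. 2. A grid check only illustrates the lemma; it does not prove it.

```python
import math
import numpy as np


def delayed_exp(B, t, tau):
    """Delayed matrix exponential e_tau^{Bt} of Definition 2.2."""
    n = B.shape[0]
    if t < -tau:
        return np.zeros((n, n))            # Theta
    if t < 0:
        return np.eye(n)                   # I on [-tau, 0)
    k = int(math.floor(t / tau)) + 1       # (k-1)*tau <= t < k*tau
    S, P = np.eye(n), np.eye(n)
    for j in range(1, k + 1):
        P = P @ B                          # P = B^j
        S = S + P * (t - (j - 1) * tau) ** j / math.factorial(j)
    return S


def inf_norm(M):
    return float(np.max(np.sum(np.abs(M), axis=1)))


B = np.array([[0.3, 0.1], [0.0, -0.2]])    # hypothetical sample matrix, ||B|| = 0.4
tau = 0.5

# Lemma 2.3: d/dt e_tau^{Bt} = B e_tau^{B(t - tau)}, via a central difference.
t0, d = 1.3, 1e-6
fd = (delayed_exp(B, t0 + d, tau) - delayed_exp(B, t0 - d, tau)) / (2 * d)
print(np.allclose(fd, B @ delayed_exp(B, t0 - tau, tau), atol=1e-6))  # True

# Lemma 2.4: ||B|| <= alpha*exp(alpha*tau) implies ||e_tau^{B(t - tau)}|| <= exp(alpha*t).
alpha = 0.4                                # 0.4*exp(0.2) is about 0.489 >= 0.4
assert inf_norm(B) <= alpha * math.exp(alpha * tau)
ts = np.linspace(-1.0, 5.0, 601)
print(all(inf_norm(delayed_exp(B, t - tau, tau)) <= math.exp(alpha * t) + 1e-12
          for t in ts))                    # True
```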

Lemma 2.5

(see [30, 2.28, p.44]) Let \(A\in L(\mathbb {R}^n)\). For any \(\varepsilon >0\), there is a norm on \(\mathbb {R}^n\) such that \(\Vert A\Vert \le \rho (A)+{\varepsilon }\), where \(\rho (A)\) is the spectral radius of A.

Lemma 2.6

(see [29, (3.7), p.109]) Let \(A\in L(\mathbb {R}^n)\) and \(\alpha (A)=\max \{\mathfrak {R}\lambda \mid \lambda \in \sigma (A)\}\), where \(\sigma (A)\) is the spectrum of A. For any \(\varepsilon >0\), there exists \(K\ge 1\) such that \( \Vert e^{At}\Vert \le K e^{(\alpha (A)+\varepsilon )t} \) for all \(t\ge 0\).
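The constant K in Lemma 2.6 can exceed 1 when A is not normal, and it grows as \(\varepsilon \) decreases. The following sketch estimates K on a finite time grid with SciPy's `expm` and the max-row-sum norm; the helper names and the sample matrix are hypothetical, and a grid maximum over \([0,t_{\max }]\) is only a lower estimate of the true supremum over \(t\ge 0\).

```python
import numpy as np
from scipy.linalg import expm


def spectral_abscissa(A):
    """alpha(A) = max Re(lambda) over the spectrum of A."""
    return float(np.max(np.linalg.eigvals(A).real))


def estimate_K(A, eps, t_max=20.0, num=2001):
    """Grid estimate of the smallest K >= 1 with
    ||e^{At}|| <= K e^{(alpha(A)+eps)t} for t in [0, t_max]."""
    a = spectral_abscissa(A)
    ts = np.linspace(0.0, t_max, num)
    ratios = [np.linalg.norm(expm(A * t), np.inf) * np.exp(-(a + eps) * t)
              for t in ts]
    return max(1.0, max(ratios))


# Non-normal example: e^{At} = e^{-t} [[1, 5t], [0, 1]], so alpha(A) = -1,
# and the ratio (1 + 5t) e^{-eps t} peaks at t = 1.8 when eps = 0.5.
A = np.array([[-1.0, 5.0], [0.0, -1.0]])
print(spectral_abscissa(A))      # close to -1
print(estimate_K(A, eps=0.5))    # approximately 10*exp(-0.9), about 4.066
print(estimate_K(A, eps=0.1))    # larger, since eps is smaller
```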

In what follows, we introduce the concept of an impulsive delayed matrix function, an extension of the delayed matrix function for linear delay differential equations, which helps us seek explicit formulas of solutions to impulsive delay differential equations.

By virtue of delayed matrix exponential (3), we define \(Y(\cdot ,\cdot ):\mathbb {R}\times \mathbb {R}\rightarrow \mathbb {R}^{n\times n}\) and

where

Here, we call \(Y(\cdot ,\cdot )\) defined in (4) the impulsive delayed Cauchy matrix associated with (1).

Remark 2.7

Obviously, \(Y(t,t)=X(t,t+\tau )=e_{\tau }^{B_1(t-t-\tau )}=I,\) and \(Y(t,s)=e^{A(t-s)}X(t,s+\tau )=e^{A(t-s)}e_{\tau }^{B_1(t-s-\tau )}=\Theta \) for \(t<s\), since then \(t-s-\tau <-\tau \).

Lemma 2.8

The impulsive delayed Cauchy matrix \(Y(\cdot ,\cdot )\) is the fundamental matrix of (1).

Proof

We divide our proof into two steps.

Step 1 We verify that \(Y(\cdot ,\cdot )\) satisfies the differential equation \(\frac{d}{dt}Y(t,s)= AY(t,s)+BY(t-\tau ,s),~t\in (t_i,t_{i+1}].\) Indeed, differentiating Y(t, s) for \(t\in (t_i,t_{i+1}]\) and \(t>s\) and using Lemma 2.3, we obtain

Step 2 We verify that \(Y(t_{i}^{+},s)-Y(t_{i}^{-},s)= C_{i}Y(t_{i},s).\) Since \(e_{\tau }^{Bt^{+}}=e_{\tau }^{Bt^{-}}\) for all \(t>-\tau \), that is, the delayed matrix exponential is continuous on \((-\tau ,\infty )\), we have

This ends the proof. \(\square \)

To estimate the norm of \(Y(\cdot ,\cdot )\), we first estimate the norm of \(B_{1}=e^{-A\tau }B\).

For a given \(B_{1}\), one can find \(\alpha \in \mathbb {R}^{+}\) such that

$$\begin{aligned} \Vert B_{1}\Vert \le \alpha e^{\alpha \tau }. \end{aligned}$$(5)

Indeed, by the submultiplicativity of the norm and Lemmas 2.5 and 2.6, we have \(\Vert B_{1}\Vert =\Vert e^{-A\tau }B\Vert \le K(\rho (B)+\varepsilon )e^{(\alpha (-A)+\varepsilon )\tau }.\) Choosing \(\alpha \ge \max {\{K(\rho (B)+\varepsilon ), \alpha (-A)+\varepsilon \}}\) for some \(\varepsilon >0\), we obtain (5). In addition, the parameter \(\alpha \) can be computed for a given matrix using MATLAB.
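Once a bound \(b\ge \Vert B_{1}\Vert \) is known, the smallest admissible \(\alpha \) in (5) solves \(\alpha e^{\alpha \tau }=b\), i.e. \(\alpha =W(\tau b)/\tau \), where W is the principal branch of the Lambert W function; any larger \(\alpha \) also works because \(\alpha \mapsto \alpha e^{\alpha \tau }\) is increasing. The sketch below takes b as the max-row-sum norm of \(e^{-A\tau }B\) computed directly, instead of going through the adapted norm of Lemma 2.5 and the constant K of Lemma 2.6, so it is an alternative route to (5) rather than the one used in the text. The helper name and the matrices are hypothetical, and b > 0 is assumed.

```python
import numpy as np
from scipy.linalg import expm
from scipy.special import lambertw


def smallest_alpha(A, B, tau):
    """Smallest alpha > 0 with ||e^{-A tau} B|| <= alpha * exp(alpha * tau)."""
    B1 = expm(-A * tau) @ B
    b = np.linalg.norm(B1, np.inf)               # maximum absolute row sum
    alpha = float(lambertw(tau * b).real) / tau  # principal branch, real for tau*b > 0
    return alpha, b


A = np.array([[-1.0, 0.0], [0.0, -1.0]])         # hypothetical commuting pair A, B
B = np.array([[0.5, 0.0], [0.0, 0.3]])
alpha, b = smallest_alpha(A, B, tau=0.2)
print(b, alpha, alpha * np.exp(alpha * 0.2))     # the last value reproduces b
```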

Lemma 2.9

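For any \(\varepsilon >0\) there exists \(K\ge 1\) such that

$$\begin{aligned} \Vert X(t,s)\Vert \le \bigg (\prod _{s-\tau<t_{j}<t}(\rho (C_{j})+1+\varepsilon )\bigg ) e^{\alpha (t+\tau -s)} \end{aligned}$$(6)

and

$$\begin{aligned} \Vert Y(t,s)\Vert \le K\bigg (\prod _{s<t_{j}<t}(\rho (C_{j})+1+\varepsilon )\bigg ) e^{(\alpha (A)+\alpha +\varepsilon )(t-s)}. \end{aligned}$$(7)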

Proof

Without loss of generality, we suppose that \(t_{m}<s-\tau \le t_{m+1}\) and \(t_{m+n}<t\le t_{m+n+1},~m,n=0,1,2,\ldots \). We complete the proof by mathematical induction on n.

(i) For \(n=0\), by Lemma 2.4 and (5),

$$\begin{aligned} \Vert X(t,s)\Vert \le \Vert e_{\tau }^{B_1(t-s)}\Vert \le e^{\alpha (t+\tau -s)}. \end{aligned}$$

(ii) For \(n=1\), by Lemmas 2.4 and 2.5 and (5), we have

$$\begin{aligned} \Vert X(t,s)\Vert\le & {} \bigg \Vert e_{\tau }^{B_1(t-s)}+C_{m+1}e_{\tau }^{B_{1}(t-\tau -t_{m+1})}X(t_{m+1},s)\bigg \Vert \\\le & {} e^{\alpha (t+\tau -s)}+(\rho (C_{m+1})+\varepsilon )e^{\alpha (t-t_{m+1})}e^{\alpha (t_{m+1}+\tau -s)}\\\le & {} (\rho (C_{m+1})+1+\varepsilon )e^{\alpha (t+\tau -s)}. \end{aligned}$$

(iii) Let \(i:=i(0,t)\) be the number of impulsive points in (0, t). For \(n=k\), suppose that

$$\begin{aligned} \Vert X(t,s)\Vert \le \bigg (\prod _{j=m+1}^{m+k}(\rho (C_{j})+1+\varepsilon )\bigg ) e^{\alpha (t+\tau -s)} = \bigg (\prod _{s-\tau<t_{j}<t}(\rho (C_{j})+1+\varepsilon )\bigg ) e^{\alpha (t+\tau -s)}. \end{aligned}$$

(iv) For \(n=k+1\), applying Lemmas 2.4 and 2.5, (5) and the induction hypothesis to each \(X(t_{j},s)\), we obtain

$$\begin{aligned} \Vert X(t,s)\Vert\le & {} \left\| e_{\tau }^{B_1(t-s)}+\sum \limits _{s-\tau<t_{j}<t}C_{j}e_{\tau }^{B_{1}(t-\tau -t_{j})}X(t_{j},s)\right\| \\\le & {} e^{\alpha (t+\tau -s)}+\sum \limits _{j=m+1}^{m+k+1}(\rho (C_{j})+\varepsilon )e^{\alpha (t-t_{j})}\bigg (\prod _{r=m+1}^{j-1}(\rho (C_{r})\\&+\;1+\varepsilon )\bigg ) e^{\alpha (t_{j}+\tau -s)}\\\le & {} \bigg (1+\sum \limits _{j=m+1}^{m+k+1}(\rho (C_{j})+\varepsilon )\prod _{r=m+1}^{j-1}(\rho (C_{r})+1+\varepsilon )\bigg )e^{\alpha (t+\tau -s)}\\= & {} \bigg (\prod _{j=m+1}^{m+k+1}(\rho (C_{j})+1+\varepsilon )\bigg ) e^{\alpha (t+\tau -s)}\\= & {} \bigg (\prod _{s-\tau<t_{j}<t}(\rho (C_{j})+1+\varepsilon )\bigg ) e^{\alpha (t+\tau -s)}. \end{aligned}$$

By mathematical induction, (6) holds. Finally, (7) follows immediately from (4) and (6) together with Lemma 2.6. The proof is finished.\(\square \)

3 Representation of solutions

In this section, we seek explicit formulas of solutions to linear impulsive nonhomogeneous delay systems by adopting the classical ideas used to find solutions of linear impulsive ODEs.

We first derive an explicit formula of solutions to the linear impulsive homogeneous delay system.

Theorem 3.1

The solution of (1) has the form

Proof

In view of Lemma 2.8, we seek the solution of (1) in the form

where c is an unknown constant vector and g(t) is an unknown continuously differentiable function. Moreover, the solution must satisfy the initial condition \(x(t)=\varphi (t),-\tau \le t\le 0\), i.e.,

Setting \(t=-\tau \), we have

Thus \(c=\varphi (-\tau ).\) For \(-\tau \le t\le 0\), one then obtains

Thus on interval \(-\tau \le t\le 0\), one can derive that

Differentiating (9), we obtain

Therefore,

The desired result holds. \(\square \)

Next, the solution of (2) can be written as the sum \(x(t)=x_{0}(t)+\overline{x(t)}\), where \(x_{0}(t)\) is the solution of (1) and \(\overline{x(t)}\) is the solution of (2) satisfying the zero initial condition.

The following result shows how to derive the formula for \(\overline{x(\cdot )}\).

Theorem 3.2

The solution \(\overline{x(t)}\) of (2) satisfying the zero initial condition has the form

Proof

Using the method of variation of constants, we seek \(\overline{x(t)}\) in the following form

where \(c_{j}(t),~j=0,1,\ldots ,i(0,t)\), are unknown vector functions. We divide the proof into several steps.

(i) For any \(0<t\le t_{1}\), we have \(\overline{x(t)}=\int _{0}^t Y(t,s)c_{0}(s)ds.\) Differentiating \(\overline{x(t)}\), using Lemma 2.8 and \(Y(t,t)=I\), and requiring \(\overline{x(\cdot )}\) to satisfy the first equation in (2), we obtain

$$\begin{aligned} \frac{d}{dt}\overline{x(t)}= & {} A\overline{x(t)}+B\int _{0}^{t-\tau } Y(t-\tau ,s)c_{0}(s)ds\\&+B\int _{t-\tau }^{t} Y(t-\tau ,s)c_{0}(s)ds+c_{0}(t)\\= & {} A\overline{x(t)}+B\overline{x(t-\tau )}+f(t). \end{aligned}$$

By Remark 2.7, \(Y(t-\tau ,s)=\Theta \) for \(s>t-\tau \), so the second integral vanishes and the first one equals \(\overline{x(t-\tau )}\); hence

$$\begin{aligned} c_{0}(t)=f(t). \end{aligned}$$

(ii) For any \(t_{1}<t\le t_{2}\), we have

$$\begin{aligned} \overline{x(t)}=\int _{0}^{t_{1}} Y(t,s)f(s)ds+\int _{t_{1}}^t Y(t,s)c_{1}(s)ds. \end{aligned}$$

Differentiating \(\overline{x(t)}\) again, we obtain

$$\begin{aligned} \frac{d}{dt}\overline{x(t)}= & {} \int _{0}^{t_{1}} [AY(t,s)+BY(t-\tau ,s)]f(s)ds\\&+\;\int _{t_{1}}^t [AY(t,s)+BY(t-\tau ,s)]c_{1}(s)ds+c_{1}(t)\\= & {} A\overline{x(t)}+B\overline{x(t-\tau )}+f(t), \end{aligned}$$

which implies that

$$\begin{aligned} c_{1}(t)=f(t). \end{aligned}$$

(iii) Suppose that \(c_{i-1}(t)=f(t)\) holds on the subintervals \((t_{i-1},t_{i}], ~i=2,3,\ldots \). For any \(t_{i}<t\le t_{i+1}\), we have

$$\begin{aligned} \overline{x(t)}=\sum \limits _{j=0}^{i-1}\int _{t_{j}}^{t_{j+1}}Y(t,s)f(s)ds +\int _{t_{i}}^t Y(t,s)c_{i}(s)ds. \end{aligned}$$

Differentiating \(\overline{x(t)}\) again, we obtain

This yields \(c_{i}(t)=f(t)\). By mathematical induction, \(c_{i}(t)=f(t),~i=1,2,\ldots .\) Combining this with (11), we obtain (10). \(\square \)

Corollary 3.3

The solution of (2) has the form

4 Asymptotical stability results

In this section, we discuss the asymptotic stability of the trivial solution of (1).

Definition 4.1

The trivial solution of (1) is called locally asymptotically stable if there exists \(\delta >0\) such that, whenever \(\Vert \varphi \Vert _{1}:=\max _{[-\tau ,0]}\Vert \varphi (t)\Vert +\max _{[-\tau ,0]}\Vert \dot{\varphi }(t)\Vert <\delta \), the following holds:

$$\begin{aligned} \lim _{t\rightarrow \infty }\Vert x(t)\Vert =0. \end{aligned}$$

To prove our main results on the stability of the trivial solution, we introduce the following conditions.

\((H_{1})\) Suppose that the distance between consecutive impulsive points \({t_k}\) and \({t_{k+1}}\) satisfies

and set

for some \(\alpha \) given in (5).

\((H_{2})\) Let \(\eta _{1}:=\alpha (A)+\alpha +\frac{1}{\theta }\ln (\rho (C)+1)\), where \(\rho (C):=\max \{\rho (C_{j}):j=1,\ldots ,i(0,t)\}\) and assume that \(\eta _{1}<0.\)

\((H_{3})\) There exists a positive constant p such that

\((H_{4})\) Suppose that \(\eta _{2}<0\), where

$$\begin{aligned} \eta _{2}:=\alpha (A)+\alpha +p\ln (\rho (C)+1). \end{aligned}$$(13)
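Both \(\eta _{1}\) in \((H_{2})\) and \(\eta _{2}=\alpha (A)+\alpha +p\ln (\rho (C)+1)\) (the expression evaluated in Example 5.2) have the form \(\alpha (A)+\alpha +r\ln (\rho (C)+1)\), with \(r=1/\theta \) or \(r=p\). The sketch below evaluates this expression; it assumes that \((H_{1})\) is a lower bound \(t_{k+1}-t_{k}\ge \theta \), so that the smallest gap between given impulse times is a candidate for \(\theta \). All helper names are hypothetical, and the value \(\alpha =1.0\) in the usage line is an arbitrary illustration, not a value from the paper.

```python
import math
import numpy as np


def spectral_radius(M):
    return float(np.max(np.abs(np.linalg.eigvals(M))))


def eta(alpha_A, alpha, rate, C_list):
    """alpha(A) + alpha + rate * ln(rho(C) + 1) with rho(C) = max_j rho(C_j).
    rate = 1/theta gives eta_1 in (H_2); rate = p gives eta_2 in (13)."""
    rho_C = max(spectral_radius(Cj) for Cj in C_list)
    return alpha_A + alpha + rate * math.log(rho_C + 1.0)


def min_gap(impulse_times):
    """Smallest distance between consecutive impulse times (candidate theta)."""
    return min(b - a for a, b in zip(impulse_times, impulse_times[1:]))


times = [1.0, 3.0, 5.0, 7.0]              # hypothetical impulse times
theta = min_gap(times)                    # 2.0
C_list = [3.0 * np.eye(2)]                # rho(C) = 3, as in Example 5.1
print(round(eta(-2.5, 1.0, 1.0 / theta, C_list), 4))  # -0.8069
```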

Now, we are ready to state our first stability result for trivial solution of (1).

Theorem 4.2

If \((H_{1})\) and \((H_{2})\) are satisfied, then the trivial solution of (1) is locally asymptotically stable.

Proof

Using (8), Lemma 2.9 and Theorem 3.1, we have

where

It follows from the condition \((H_{2})\) that one can choose \(\varepsilon >0\) such that

Then (14) gives

The proof is completed. \(\square \)

Next, we give a second stability result for the trivial solution of (1).

Theorem 4.3

If \((H_{3})\) and \((H_{4})\) are satisfied, then the trivial solution of (1) is locally asymptotically stable.

Proof

The proof is similar to that of Theorem 4.2, so we only give the details of the main differences. By \((H_{3})\), for arbitrarily small \(\varepsilon >0\) we have \(i(0,t)< t(p+\varepsilon )\) for all sufficiently large t. Combining this with (14), one has

By virtue of \((H_{4})\) we can find \(\varepsilon \) such that

The desired result holds. \(\square \)

To end this section, we extend the above results to the nonlinear case. Consider the following linear impulsive delay system with a nonlinear term

where \(f\in C(\mathbb {R}^{n},\mathbb {R}^{n})\).

We impose the following additional assumptions:

\((H_{5})\) Suppose that there exists \(l > 0\) such that \(\Vert f (x)\Vert \le l\Vert x\Vert \) for all \(x\in \mathbb {R}^{n}\).

\((H_{6})\) Suppose that \(Kl+\frac{\eta _{2}}{2}<0,\) where \(\eta _{2}\) is defined in (13).

Theorem 4.4

Assume that \((H_{3})\), \((H_{5})\) and \((H_{6})\) are satisfied. Then the trivial solution of (15) is locally asymptotically stable.

Proof

By Corollary 3.3, any solution of (15) has the form

By condition \((H_{3})\), (7) reduces to

Taking norms on both sides of (16) and using (17) and \((H_{5})\), one has

This yields that

Let

By using the well-known classical Gronwall inequality (see [31, Theorem 1]), we have

which yields that

due to \((H_{6})\). The proof is finished. \(\square \)

5 Examples

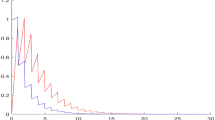

In this section, we give two examples to illustrate our theoretical results. We use MATLAB to compute some parameters and to draw the figures.

Example 5.1

Consider (1) with \(\tau =0.2\) and

and

where [x] denotes the largest integer not exceeding the real number x.

Obviously, \(AB=BA\), \(AC_{j}=C_{j}A\), \(BC_{j}=C_{j}B\), \(j=1,2,\ldots ,\) and \(\alpha (A)=-2.5\), \(\rho (C)=3\). Moreover, \(\Vert e^{At}\Vert =e^{-2.5t}\le Ke^{(\alpha (A)+\varepsilon )t}\) with \(K=1\) for any \(\varepsilon >0\). By computation, \(\Vert \varphi \Vert _{1}=0.4=\Vert \varphi (-\tau )\Vert <\delta :=0.41\) and \(p=\lim \limits _{t\rightarrow \infty }\frac{i(0,t)}{t}=\lim \limits _{t\rightarrow \infty }\frac{[\frac{t+1}{2}]}{t}=\frac{1}{2}.\) Next, using MATLAB, we obtain
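The value \(p=1/2\) can be checked directly from \(i(0,t)=[\frac{t+1}{2}]\). The sketch below (plain Python; `i_count` is a hypothetical helper) evaluates \(i(0,t)/t\) for growing t and also illustrates the estimate \(i(0,t)<t(p+\varepsilon )\) used in the proof of Theorem 4.3: with \(\varepsilon =0.01\), since \([\frac{t+1}{2}]\le \frac{t+1}{2}<0.51t\) whenever \(t>50\), the estimate holds for every \(t>50\).

```python
import math


def i_count(t):
    """i(0, t) = [(t + 1) / 2] from Example 5.1, with [x] = floor(x)."""
    return math.floor((t + 1) / 2)


for t in (10.5, 1e3 + 0.5, 1e6 + 0.5):
    print(t, i_count(t) / t)      # ratios approach p = 1/2

p, eps = 0.5, 0.01
print(all(i_count(t) < (p + eps) * t
          for t in [50.5 + 0.37 * k for k in range(5000)]))  # True
```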

and

Now all the conditions of Theorem 4.3 are satisfied. Thus,

that is, the trivial solution of (1) with (18) and (19) is locally asymptotically stable (see Fig. 1).

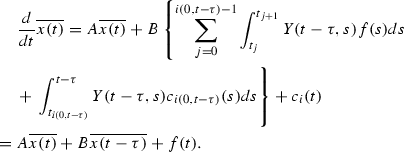

Example 5.2

Consider (15) with \(\tau =0.2\) and

where \(\varphi \) and i(0, t) are defined in (19).

By calculation, \(AB= \left( \begin{array}{cc} -2.64 &{} -0.66 \\ 0 &{} -1.98 \\ \end{array} \right) =BA\), \(AC_{j}= \left( \begin{array}{cc} -9.9 &{} -1.65 \\ 0 &{} -8.25 \\ \end{array} \right) =C_{j}A\) and \(BC_{j}= \left( \begin{array}{cc} 2.4 &{} 0.9 \\ 0 &{} 1.5 \\ \end{array} \right) =C_{j}B,~j=1,2,\ldots .\)

Obviously, \(\alpha (A)=-3.3\) and \(\Vert e^{At}\Vert =e^{-3.3t}\le Ke^{(\alpha (A)+\varepsilon )t}\) with \(K=1\) for any \(\varepsilon >0\). In addition, \(\Vert f(x)\Vert \le l\Vert x\Vert \) holds with \(l=0.5\). Next, using MATLAB, we obtain

Moreover, \(\eta _{2}=\alpha (A)+\alpha +p\ln (\rho (C)+1)=-1.1586\), thus,

Note that \(Kl+\frac{\eta _{2}}{2}=-0.0793<0.\) Now all the conditions of Theorem 4.4 are satisfied. Then,
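The arithmetic behind \((H_{6})\) in this example can be reproduced from the reported values \(K=1\), \(l=0.5\) and \(\eta _{2}=-1.1586\); the same numbers show that, with \(K=1\), \((H_{6})\) would hold for any \(l<-\eta _{2}/(2K)=0.5793\).

```python
K, l, eta2 = 1.0, 0.5, -1.1586      # values reported in Example 5.2

h6 = K * l + eta2 / 2               # left-hand side of (H_6)
print(round(h6, 4))                 # -0.0793
print(h6 < 0)                       # True
print(round(-eta2 / (2 * K), 4))    # 0.5793, the threshold for l
```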

that is, the trivial solution of (15) with (19) and (20) is locally asymptotically stable (see Fig. 2).

References

Khusainov, D.Ya., Shuklin, G.V.: Linear autonomous time-delay system with permutation matrices solving. Stud. Univ. Žilina 17, 101–108 (2003)

Diblík, J., Khusainov, D.Ya.: Representation of solutions of discrete delayed system \(x(k+1)=Ax(k)+Bx(k-m)+f(k)\) with commutative matrices. J. Math. Anal. Appl. 318, 63–76 (2006)

Diblík, J., Khusainov, D.Ya.: Representation of solutions of linear discrete systems with constant coefficients and pure delay. Adv. Differ. Equ. 1, 1–13 (2006)

Khusainov, D.Ya., Diblík, J., Růžičková, M., Lukáčová, J.: Representation of a solution of the Cauchy problem for an oscillating system with pure delay. Nonlinear Oscillations 11, 261–270 (2008)

Boichuk, A., Diblík, J., Khusainov, D.Ya., Růžičková, M.: Fredholm’s boundary-value problems for differential systems with a single delay. Nonlinear Anal. 72, 2251–2258 (2010)

Medveď, M., Pospíšil, M., Škripková, L.: Stability and the nonexistence of blowing-up solutions of nonlinear delay systems with linear parts defined by permutable matrices. Nonlinear Anal. 74, 3903–3911 (2011)

Pospíšil, M.: Representation and stability of solutions of systems of functional differential equations with multiple delays. Electron. J. Qual. Theory Differ. Equ. 54, 1–30 (2012)

Medveď, M., Pospíšil, M.: Sufficient conditions for the asymptotic stability of nonlinear multidelay differential equations with linear parts defined by pairwise permutable matrices. Nonlinear Anal. 75, 3348–3363 (2012)

Diblík, J., Fečkan, M., Pospíšil, M.: Representation of a solution of the Cauchy problem for an oscillating system with two delays and permutable matrices. Ukrainian Math. J. 65, 58–69 (2013)

Diblík, J., Khusainov, D.Ya., Baštinec, J., Sirenko, A.S.: Exponential stability of linear discrete systems with constant coefficients and single delay. Appl. Math. Lett. 51, 68–73 (2016)

Li, M., Wang, J.: Finite time stability of fractional delay differential equations. Appl. Math. Lett. 64, 170–176 (2017)

Diblík, J., Morávková, B.: Discrete matrix delayed exponential for two delays and its property. Adv. Differ. Equ. 2013, 1–18 (2013)

Diblík, J., Morávková, B.: Representation of the solutions of linear discrete systems with constant coefficients and two delays. Abstr. Appl. Anal. 2013, 1–19 (2014)

Khusainov, D.Ya., Shuklin, G.V.: Relative controllability in systems with pure delay. Int. J. Appl. Math. 2, 210–221 (2005)

Diblík, J., Khusainov, D.Ya., Růžičková, M.: Controllability of linear discrete systems with constant coefficients and pure delay. SIAM J. Control Optim. 47, 1140–1149 (2008)

Diblík, J., Khusainov, D.Ya., Lukáčová, J., Růžičková, M.: Control of oscillating systems with a single delay. Adv. Differ. Equ. 1, 1–15 (2010)

Diblík, J., Fečkan, M., Pospíšil, M.: On the new control functions for linear discrete delay systems. SIAM J. Control Optim. 52, 1745–1760 (2014)

Benchohra, M., Henderson, J., Ntouyas, S.K.: Impulsive Differential Equations and Inclusions. Hindawi Publishing Corporation, New York (2006)

Wang, Q., Lu, D.C., Fang, Y.Y.: Stability analysis of impulsive fractional differential systems with delay. Appl. Math. Lett. 40, 1–6 (2015)

Ivanov, I.L., Slyn’ko, V.I.: Conditions for the stability of an impulsive linear equation with pure delay. Ukrainian Math. J. 61, 1417–1427 (2009)

Slyn’ko, V.I., Denisenko, V.S.: The stability analysis of abstract Takagi–Sugeno fuzzy impulsive system. Fuzzy Set. Syst. 254, 67–82 (2014)

Zhu, Q., Cao, J., Rakkiyappan, R.: Exponential input-to-state stability of stochastic Cohen–Grossberg neural networks with mixed delays. Nonlinear Dyn. 79, 1085–1098 (2015)

Zhu, Q., Song, B.: Exponential stability of impulsive nonlinear stochastic differential equations with mixed delays. Nonlinear Anal. 12, 2851–2860 (2011)

Kao, Y., Zhu, Q., Qi, W.: Exponential stability and instability of impulsive stochastic functional differential equations with Markovian switching. Appl. Math. Comput. 271, 795–804 (2015)

Zhu, Q.: pth Moment exponential stability of impulsive stochastic functional differential equations with Markovian switching. J. Franklin Inst. 351, 3965–3986 (2014)

Zhu, Q., Cao, J.: Stability analysis of Markovian jump stochastic BAM neural networks with impulse control and mixed time delays. IEEE Trans. Neural Netw. Learn. Syst. 23, 467–479 (2012)

Zhu, Q., Cao, J.: Stability of Markovian jump neural networks with impulse control and time varying delays. Nonlinear Anal. 13, 2259–2270 (2012)

Zhu, Q., Rakkiyappan, R., Chandrasekar, A.: Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 136, 136–151 (2014)

Samoilenko, A.M., Perestyuk, N.A., Chapovsky, Y.: Impulsive Differential Equations. World Scientific, Singapore (1995)

Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

Bainov, D., Simeonov, P.: Integral Inequalities and Applications. Kluwer Academic Publishers, Dordrecht (1992)

Acknowledgements

The authors are grateful to the referees for their careful reading of the manuscript and valuable comments. The authors also thank the editor for their help. This work is partially supported by National Natural Science Foundation of China (11661016) and Training Object of High Level and Innovative Talents of Guizhou Province ((2016)4006), Unite Foundation of Guizhou Province ([2015]7640) and Graduate Course of Guizhou University (ZDKC[2015]003).


Cite this article

You, Z., Wang, J. Stability of impulsive delay differential equations. J. Appl. Math. Comput. 56, 253–268 (2018). https://doi.org/10.1007/s12190-016-1072-1
