Abstract

In this paper, a Newton method is proposed to obtain efficient solutions for the optimization problems with interval-valued objective functions. In the concept of efficient solution of the problem, a suitable partial ordering for a pair of intervals is used. Through the notion of generalized Hukuhara difference of a pair of intervals, the generalized Hukuhara differentiability of multi-variable interval-valued functions is defined and analyzed to develop the proposed method. The objective function in the problem is assumed to be twice continuously generalized Hukuhara differentiable. Under this hypothesis, it is exhibited that the method has a local quadratic rate of convergence. In order to improve the local convergence of the method to a global convergence, an updated Newton method is also given. The sequential algorithms and the convergence results of the proposed methods are demonstrated. Several numerical examples are presented to illustrate the proposed methodologies.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The conventional mathematical description of an optimization problem appears with exact values of the involving parameters. However, most of the realistic optimization problems faced by engineers, management scientists, and applied mathematicians today exhibit that optimization problems are usually characterized by fuzzy- or interval-valued objectives and/or constraint functions, especially in the inexact, imprecise, or uncertain environment. Thus, each of the involving parameters in the problem, which are generated from the available data set of the optimization model, are suitably taken as a fuzzy number or an interval. The optimization problems whose involving parameters are given by intervals, are categorized as Interval Optimization Problems (IOPs). In the mathematical analysis on the IOPs, usually the parameters are considered as compact intervals.

Although there is a plethora of methods to solve conventional optimization problems, those methods cannot be easily/apparently applied on solving IOPs. For the IOPs, the main problem is that unlike the real numbers, intervals are not linearly ordered. Thus, obtaining an appropriate comparison of interval-valued objective functions with regard to optimization problem is an important issue. Much similar problem arises with fuzzy optimization problems where fuzzy numbers are not linearly ordered [7, 9]. Over the years there have been many attempts to obtain an appropriate ordering of intervals in connection with optimization problems [16, 17, 19, 21, 23–25, 27, 28, 35, 40, 41]. With the help of the existing orderings of a pair of intervals, IOPs have been analyzed by many scholars, for instance, see [39, 42] and the references therein.

Very recently, using a newly introduced ordering on intervals, Bhurjee and Panda [2] have given a notion of efficient solutions of interval optimization problems which is similar to the Pareto optimality concept in multi-objective optimization problems. This idea on efficient solution of IOPs is rapidly used by many authors; for instance, see [1, 3, 4, 22, 26].

In this paper, we try to characterize and obtain efficient solutions of IOPs. Towards this end, we attempt to propose a Newton method to solve a multi-variable unconstrained IOP. The objective function of the problem that we consider here is interval-valued. Thus, to develop the Newton method we require (i) an appropriate ordering of a pair of intervals and (ii) a notion of differentiability for interval-valued functions.

In this article, to define the efficient solutions of an IOP, we use the ordering of intervals that is developed by Bhurjee and Panda [2] due to two reasons. First, it is a partial ordering which is superior to the predecessors [2]. Second, it has an important connection on investigating efficient solutions of an interval optimization problem [2].

In characterizing an efficient solution, we introduce the notion of generalized Hukuhara differentiability of an interval-valued function which may be the most general concept of differentiability of an interval-valued function.

Using the notion of generalized Hukuhara differentiability, the Newton method is developed and its convergence result is analyzed. We show that the proposed Newton method is a local method and it has a convergence rate of order two. In order to improve the Newton method to have the global convergence property, an updated Newton method is also proposed.

This article is demonstrated in the following sequence. In the next section, we define the basic terminologies and describe the optimization problems that are used throughout the paper. In Sect. 2, we explore essential deficiencies of the existing ideas on differentiability of interval-valued functions. We, then, introduce and interrelate the notions of gH-differentiability and gH-partial derivative of an interval-valued function in Sect. 3. Section 4 presents the Newton method, an updated Newton method and their convergence analysis. Finally, we give a short summary and a few suggestions for future research in Sect. 5.

2 Preliminaries and terminologies

2.1 Notations and interval arithmetics

In this article, we employ the following notations.

-

The bold letters, \(\mathbf{A }\), \(\mathbf{B }\), \(\mathbf{C }\), \(\cdots \), denote the closed and bounded intervals of the real number set \(\mathbb {R}\). Any small letter, a, b, or \(c, \cdots \), represents a real number. To denote the interval [0, 0] we use \(\mathbf 0 \).

-

The notation \(I(\mathbb {R})\) represents the set of all closed and bounded intervals in \(\mathbb {R}\). The set \(I(\mathbb {R})^k\) is the Cartesian product \(I(\mathbb {R}) \times I(\mathbb {R}) \cdots \times I(\mathbb {R})\) (k times).

-

The notation \(\mathbf{C }_v^k\) is used to denote a k-tuple interval vector \((\mathbf{C }_1, \mathbf{C }_2, \cdots , \mathbf{C }_k)^T\) where \(\mathbf{C }_j\) is an element of \(I(\mathbb {R})\), \(j = 1, 2, \ldots , k\). Thus \(\mathbf{C }_v^k\) is an element of \(I(\mathbb {R})^k\).

In order to represent an \(\mathbf{A }\) in \(I(\mathbb {R})\), we write \(\mathbf{A } = [\underline{a}, \overline{a}]\). The interval \(\mathbf{A }\) in \(I(\mathbb {R})\) can also be parametrically presented by the collection of a(t)’s where \(a(t) = \underline{a} + t(\overline{a} - \underline{a})\), \(t \in [0,1]\).

It is well known that for two intervals \(\mathbf{A } = [\underline{a}, \overline{a}]\) and \(\mathbf{B } = [\underline{b}, \overline{b}]\) in \(I(\mathbb {R})\), their addition, denoted by \(\mathbf{A } \oplus \mathbf{B }\), is defined as:

Similarly, a scalar multiplication by a real constant \(\lambda \) to \(\mathbf{A }\), denoted by \(\lambda \odot \mathbf{A }\), is defined as:

From the literature on the arithmetic of intervals [29, 31, 32, 37, 38], it is clear that the usual definition of difference of two compact intervals \(\mathbf{A }\) and \(\mathbf{B }\), which is defined by \( \mathbf{A } \ominus \mathbf{B } = \) \(\left\{ a(t_1) - b(t_2)|~ t_1, t_2 \in [0,1]\right\} \), is not appealing, in connection with the calculus of interval-valued functions, due to the following two reasons:

-

(i)

difference between \(\mathbf{A }\) and \(\mathbf{A }\) is not \(\{0\}\), and

-

(ii)

if \(\mathbf{C } = \mathbf{A } \ominus \mathbf{B }\) then \(\mathbf{A } \) may not be equal to \(\mathbf{B } \oplus \mathbf{C }\). For instance, if we take \(\mathbf{A } = [1, 2]\) and \(\mathbf{B } = [3,5]\), then \(\mathbf{C } = \mathbf{A } \ominus \mathbf{B } = [-4, -1]\); thus, \(\mathbf{B } \oplus \mathbf{C } = [-1, 4] \ne \mathbf{A }\).

In order to overcome both the deficiencies of the usual difference between a pair of compact intervals \(\mathbf{A }\) and \(\mathbf{B }\), Hukuhara [20] have defined the difference as the interval \(\mathbf{C }\) such that \(\mathbf{A } = \mathbf{B } \oplus \mathbf{C }\). Although this Hukuhara-difference succeeded to overcome the deficiencies of the usual difference, this definition is again not so appealing, especially, when we employ it in defining differentiability of interval-valued functions through a limit of a Leibniz-like quotient. Because, the Hukuhara-difference may not always exist for an arbitrary pair of compact intervals; for instance, if we take \(\mathbf{A } = [1, 2]\) and \(\mathbf{B } = [3,5]\) then the Hukuhara-difference between \( \mathbf{A }\) and \(\mathbf{B }\) does not exist. It exists only when the length of \(\mathbf{A }\) is bigger than that of \(\mathbf{B }\) [6].

Therefore towards defining the notion of differentiability of a multi-variable interval-valued function, we require a suitable definition of difference between two compact intervals. In this article, we use the following generalized Hukuhara difference (gH-difference, in short) between two compact intervals.

Definition 1

(gH-difference of intervals [6]). Let \(\mathbf{A }\) and \(\mathbf{B }\) be two elements of \(I(\mathbb {R})\). The gH-difference between \(\mathbf{A }\) and \(\mathbf{B }\) is defined as the interval \(\mathbf{C }\) such that

It is to be noted that \(\mathbf{C } = \mathbf{A } \ominus _{gH} \mathbf{B } = [\min \{\underline{a}-\underline{b}, \overline{a} - \overline{b}\}, \max \{\underline{a}-\underline{b}, \overline{a} - \overline{b}\}]\).

For a detailed account of the interrelations between Hukuhara-difference and gH-difference, one can see [6]. Here it is to be mentioned that gH-difference between any arbitrary pairs of intervals exists and it satisfy the equation \(\mathbf{A } \ominus \mathbf{A } = \{0\}\).

2.2 Interval-valued functions

The interval-valued functions have been presented in different ways by many researchers since the pioneering work by Moore in 1966 [31]. The research articles by Hansen [16], Wu [40], Bhurjee and Panda [2] and the references therein are main stream of this topic. Broadly there are two different parametric representations of an interval-valued function. Those as mentioned below.

2.2.1 Parametric representation of interval-valued function

Let \(\mathbf{F }_{\mathbf{C }_v^k}: \mathbb {R}^n \rightarrow I(\mathbb {R})\) be an interval-valued function involving k interval coefficients \(\mathbf{C }_1\), \(\mathbf{C }_2\), \(\ldots \), \(\mathbf{C }_k\); we denote \(\mathbf{C }_v^k = (\mathbf{C }_1, \mathbf{C }_2, \ldots , \mathbf{C }_k)^T\). If \(\mathbf{C }_j\) is the interval \(\big [\underline{c_j},\overline{c_j}\big ]\), then the interval vector \(\mathbf{C}_v^k\) can be presented by

Thus, parametrically, the function \(\mathbf{F }_{\mathbf{C }_v^k}\) can be observed as a collection of bunch of functions \(f_{c(t)}\)’s where c(t) is a vector in \(\mathbf{C}_v^k\). That is to say for all x in \(\mathbb {R}^n\) we have

Example 1

For instance, if we consider the function \(\mathbf{F }_{\mathbf{C }_v^2} (x_1, x_2) = \mathbf{C }_1 x_1 \oplus \mathbf{C }_2 x_2^2 e^{x_1}\) with \(\mathbf{C }_1 = [1, 3]\) and \(\mathbf{C }_2 = [-2, 1]\), then \(\mathbf{F }_{\mathbf{C }_v^2}\) is the collection of functions \(f_{(c_1(t_1), c_2(t_2))^T}(x_1, x_2) = c_1(t_1) x_1 + c_2(t_2) x_2^2 e^{x_1} = (1 + 2t_1) x_1 + (-2 + 3t_2) x_2^2 e^{x_1}\), where \(t_1\) and \(t_2\) are in [0, 1].

We can also have one other presentation of \(\mathbf{F }_{\mathbf{C }_v^k}\). Towards this end, we assume that for each fixed x in \(\mathbb {R}^n\) all \(f_{c(t)}(x)\)’s are continuous in t. Let

Then, for each argument point x in \(\mathbb {R}^n\), \(\mathbf{F }_{\mathbf{C }_v^k} (x)\), being a connected set in \(\mathbb {R}\), can be presented by

Example 2

We consider the function \(\mathbf{F }_{\mathbf{C }_v^2}\) in Example 1. Here

and

Therefore, \(\mathbf{F }_{\mathbf{C }_v^k} (x_1, x_2)\) can be presented by the class of functions \(\left\{ f_\lambda (x)\big | \lambda \in [0, 1]\right\} \) where

Although according to the parametric representations (2.1) and (2.2) of \(\mathbf{F }_{\mathbf{C }_v^k}\), for each \(x \in \mathbb {R}^n\) we have

there are few important observations in this regard, which are mentioned below.

-

(1)

An \(f_{c(t)}\) may not be an \(f_\lambda \). In order to observe so, let \(x_0\) be an element of \(\mathbb {R}^n\) and we consider \(f_{c(t)}(x_0)\) for some fixed c(t) in \(\mathbf{C }_v^k\). Since the real number \(f_{c(t)}(x_0)\) lies in the interval \(\mathbf{F }_{\mathbf{C }_v^k}(x_0) = \big [\underline{f}(x_0), \overline{f}(x_0)\big ]\), there exists a \(\lambda _0 \in [0, 1]\) such that \(f_{c(t)}(x_0) = \lambda _0 \overline{f}(x_0) + (1 - \lambda _0)\underline{f}(x_0)\). This \(\lambda _0\) depends on the chosen c(t) as well as on \(x_0\). If we vary \(x_0\) to some other point \(x_1\) in \(\mathbb {R}^n\), then again there exists a \(\lambda _1 \in [0, 1]\) such that \(f_{c(t)}(x_1) = \lambda _1 \overline{f}(x_1) + (1 - \lambda _1)\underline{f}(x_1)\). It is quite natural that \(\lambda _1\) is different from \(\lambda _0\). This observation supports the claim.

-

(2)

\(\underline{f}\) and \(\overline{f}\) may not be identical to \(f_{c(0)}\) and \(f_{c(1)}\), respectively. For instance, consider the function \(\mathbf{F }_{\mathbf{C }_v^2}\) in Example 1. Here for \(t = (0, 0) \in [0, 1]^2\),

$$\begin{aligned} f_{c(t)}(x) = f_{(c_1(0),c_2(0))^T}(x_1, x_2) = x_1 -2 x_2^2 e^{x_1} \end{aligned}$$and for \(t = (1, 1) \in [0, 1]^2\),

$$\begin{aligned} f_{c(t)}(x) = f_{(c_1(1),c_2(1))^T}(x_1, x_2) = 3 x_1 + x_2^2 e^{x_1}. \end{aligned}$$We note that \(f_{(c_1(0),c_2(0))^T} \ne \underline{f}\) and \(f_{(c_1(1),c_2(1))^T} \ne \overline{f}\).

-

(3)

\(\int _0^1 f_\lambda (x) \mathrm {d}\lambda \) may not be equal to \(\int _{t \in [0, 1]^k} f_{c(t)}(x) \mathrm {d}t\). For example consider the function \(\mathbf{F }_{\mathbf{C }_v^2}(x) = [1, 2] \odot e^{x \odot [2, 3]}\), \(x \in \mathbb {R}\). Here

$$\begin{aligned} \int _0^1 f_\lambda (x) \mathrm {d} \lambda = \tfrac{1}{2}(\underline{f}(x) + \overline{f}(x)) = {\left\{ \begin{array}{ll} \tfrac{1}{2} e^{2x} + e^{3x} &{}\quad \text { if } x \ge 0\\ e^{2x} + \tfrac{1}{2} e^{3x} &{}\quad \text { if } x < 0. \end{array}\right. } \end{aligned}$$However,

$$\begin{aligned} \int _{t \in [0, 1]^2} f_{c(t)}(x) \mathrm {d}t = \int _0^1 \int _0^1 \left( (1 + t_1) e^{(2+ t_2)x}\right) \mathrm {d}t_2 \mathrm {d}t_1 = \tfrac{1}{4} \left[ 2 e^{3x} - 3e^{2x}\right] \text { for all } x \in \mathbb {R}. \end{aligned}$$

2.2.2 Note on the existing differentiability of interval-valued function

According to various parametric and classical representations, there are different approaches in the literature to develop calculus of interval-valued functions. The calculus of single variable interval-valued functions have been rigorously formalized in several articles (for details, see [6, 29, 37, 38]) since the pioneering work by Markov [30]. The research articles by Neumaier [33], Stahl [36] and the references therein are the main stream of this topic. Recently, Bhurjee and Panda [2] defined differentiability of a multi-variable interval-valued function \(\mathbf{F }_{\mathbf{C }_v^k}: \mathbb {R}^n \rightarrow I(\mathbb {R})\) through the existence of the limit

There are several issues against this definition. Those are mentioned below.

-

(1)

The motivation behind the differentiability that is defined in [2] reads as the existence of differentiability of an interval-valued function \(\mathbf{F }_{\mathbf{C }_v^k}(x) = \big [\underline{f}(x),~ \overline{f}(x)\big ]\) depends upon the differentiability of the boundary functions \(\underline{f}(x)\) and \(\overline{f}(x)\). However, this is not compatible with the definition of differentiability that is given in [2]. For instance, we consider the function \(\mathbf{F }_{\mathbf{C }_v^k}\) in Example 1. Here, all \(f_{c(t)}\)’s are differentiable and hence, according to [2], so is \(\mathbf{F }_{\mathbf{C }_v^k}\). However, the boundary functions \(\underline{f}\) and \(\overline{f}\) (see Example 2) are not differentiable at (0, 0).

-

(2)

The reference [2] defined differentiability of \(\mathbf{F }_{\mathbf{C }_v^k}\) under the assumption that all underlying \( f_{c(t)}\)’s are differentiable. Not only this is a very restrictive hypothesis, the equality of

$$\begin{aligned}&\lim _{h \rightarrow 0} \tfrac{1}{\Vert h\Vert } \left( \mathbf{F }_{\mathbf{C }_v^k}(x_0 + h) \ominus \mathbf{F }_{\mathbf{C }_v^k}(x_0)\right) \\ =&\left\{ \alpha (x_0, t) \big | t \in [0, 1]^k, \alpha (x_0, t) = \lim _{h \rightarrow 0} \tfrac{1}{\Vert h\Vert } \left( f_{c(t)}(x_0 + h) - f_{c(t)}(x_0)\right) \right\} \end{aligned}$$is questionable. For instance, consider the linear interval-valued function \(\mathbf{F }_{\mathbf{C }_v^2}(x_1, x_2) = [1, 2] x_1 \oplus [1,2] x_2\). Here, for any c(t) in \(\mathbf{C }_v^2\), \(f_{c(t)}(x_1, x_2) = (1 + t_1)x_1 + (1 + t_2)x_2\) is differentiable at \(x_0 = (0, 0)\). However the limit

$$\begin{aligned} \lim _{h \rightarrow 0} \tfrac{1}{\Vert h\Vert } \Bigg (\mathbf{F }_{\mathbf{C }_v^k}(x_0 + h) \ominus \mathbf{F }_{\mathbf{C }_v^k}(x_0)\Bigg ) = \lim _{(h_1, h_2) \rightarrow (0, 0)} \tfrac{[1, 2]h_1 \oplus [1,2]h_2}{\sqrt{h_1^2 + h_2^2}} \end{aligned}$$does not exist. Since, for \(h_2 = 0\) the limit reduces to \(\lim _{h_1 \rightarrow 0} \tfrac{[1, 2]h_1}{|h_1|}\) which does not exist. Thus, the question of the mentioned equality cannot arise.

-

(3)

A regular differentiable function may become nondifferentiable. For instance, we consider \(\mathbf{F }_{\mathbf{C }_v^4}(x_1, x_2) = \mathbf C _1 x_1^2 \oplus \mathbf C _2 x_2^2 \oplus \mathbf C _3 x_1\) where \(\mathbf C _1 = \mathbf C _2 = \mathbf C _3 = [1,1]\). This function is, evidently, differentiable everywhere. However, at \(x_0 = (0, 0)\) the limit

$$\begin{aligned} \lim _{h \rightarrow 0} \frac{1}{\Vert h\Vert } \Bigg (\mathbf{F }_{\mathbf{C }_v^k}(x_0 + h) \ominus \mathbf{F }_{\mathbf{C }_v^k}(x_0)\Bigg ) = \lim _{(h_1, h_2) \rightarrow (0, 0)} \frac{h_1^2 + h_2^2 + h_1}{\sqrt{h_1^2 + h_2^2}} \end{aligned}$$does not exist, and hence, \(\mathbf{F }_{\mathbf{C }_v^4}\) is nondifferentiable according to [2].

-

(4)

The equality of

$$\begin{aligned} \frac{\partial \mathbf{F }_{\mathbf{C }_v^k}(x^*)}{\partial x_i} = \left\{ \frac{\partial f_{c(t)}}{\partial x_i}\big | c(t) \in \mathbf{C }_v^k \right\} \end{aligned}$$is not always true. For example consider the linear interval-valued function \(\mathbf{F }_{\mathbf{C }_v^2}(x_1, x_2) = [1, 2] x_1 \oplus [1,2] x_2\). Here, for any c(t) in \(\mathbf{C }_v^2\), the partial derivatives of \(f_{c(t)}(x_1, x_2) = (1 + t_1)x_1 + (1 + t_2)x_2\) exist and

$$\begin{aligned} \tfrac{\partial f_{c(t)}}{\partial x_1} = 1 + t_1 = \tfrac{\partial f_{c(t)}}{\partial x_2} \end{aligned}$$for all \((x_1, x_2)\) in \(\mathbb {R}^2\). However, we note that at \(x_0 = (0, 0)\), none of the limits

$$\begin{aligned} \lim _{h_1 \rightarrow 0} \tfrac{[1, 2]h_1}{|h_1|} ~~\text { and}~~\lim _{h_2 \rightarrow 0} \tfrac{[1, 2]h_2}{|h_2|} \end{aligned}$$exist and hence the partial derivatives of \(\mathbf{F }_{\mathbf{C }_v^2}\) do not exist. Thus the question of the mentioned equality cannot arise.

-

(5)

A regular single variable differentiable function may become nondifferentiable. For example consider \(\mathbf{F }_{\mathbf{C }} = \mathbf{C } x\) where \(\mathbf{C } = [1, 1]\). This well-known identity function is differentiable and its derivative value is ‘1’ across the points in \(\mathbb {R}\). However, according to [2], \(\mathbf{F }_{\mathbf{C }}\) is not differentiable, since \(\lim _{h\rightarrow 0}\tfrac{1}{\Vert h\Vert } \big (\mathbf{F }_{\mathbf{C }}(x + h) \ominus \mathbf{F }_{\mathbf{C }}(x)\big ) = \lim _{h \rightarrow 0} \tfrac{h}{|h|}\) does not exist for any x in \(\mathbb {R}\).

The item (6) indicates that if the denominator \(\Vert h\Vert \) in Limit (2.3) is replaced by h, then the definition may become appropriate. But, this will not be suitable for multi-variable functions.

The aforementioned paragraphs show that the notion of derivative and the associated analysis given in [2] requires further enquiry. Also, in addition, we can attempt to redefine it for multi-variable interval-valued functions. One might guess that the numerator in Limit (2.3) should be replaced by \(\mathbf{F }_{\mathbf{C }_v^k}(x + h) \ominus \mathbf{F }_{\mathbf{C }_v^k}(x) \ominus h^T\odot \nabla \mathbf{F }_{\mathbf{C }_v^k}(x)\). However, surprisingly, this modification may not also be appropriate. Since, for any pair of intervals \(\mathbf{A }\) and \(\mathbf{B }\), the difference \(\mathbf{A } \ominus \mathbf{B }\) may not exist. It exists only when length of \(\mathbf{A }\) is bigger than that of \(\mathbf{B }\), since otherwise \(\mathbf{A } \ominus \mathbf{B }\) may not imply \(\mathbf{A } = \mathbf{B } \oplus \mathbf{C }\). At this situation, the notion of generalized-Hukuhara difference between a pair of intervals may be an appropriate choice, since it is the most general definition of difference (for details please see [6]).

Although, using the generalized-Hukuhara difference, Chalco-Cano et al. [6] have defined differentiability for a special category of single variable interval-valued function, the notion of differentiability, partial derivative and their interrelations for any general multi-variable interval-valued function is yet to be developed. The next section defines and analyzes the same.

3 Differentiability of interval-valued functions

In this section, we develop a notion of differentiability of multi-variable interval-valued functions which has a useful connection with efficient solution or non-dominated solution of an interval optimization problem. Towards this end, at first, we give the definition of continuity. Then, differentiability, partial derivatives and their interrelations will be investigated.

In the following definitions, we assume that \(D \subseteq \mathbb {R}^n\) is the domain of definition of the interval-valued function \(\mathbf{F }_{\mathbf{C }_v^k}\).

Definition 2

(gH-continuity). Let \(x_0 = (x^0_1, x^0_2, \cdots , x^0_n)\) be an interior point of D and \(h \in \mathbb {R}^n\) be such that \(x_0 + h \in D\). The function \(\mathbf{F }_{\mathbf{C }_v^k}\) is said to be continuous at \(x_0\), if there exists \(\mathbf E (\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) \in I(\mathbb {R})\) with \(\lim _{\Vert h\Vert \rightarrow 0} \mathbf E (\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) = \mathbf{0 }\) such that

Due to the definition of gH-difference, Eq. (3.1) implies and implemented by

Definition 3

(gH-partial derivative). Let \(x_0 = (x^0_1, x^0_2, \cdots , x^0_n)\) be an interior point of D and \(h = (h_1, h_2, \cdots , h_n) \in \mathbb {R}^n\) be such that \(x_0 + h \in D\). We define a function \({\mathbf \Phi }_i\) by \({\mathbf \Phi }_i (x_i) = \mathbf{F }_{\mathbf{C }_v^k} (x^0_1, x^0_2, \cdots , x^0_{i-1}, x_i, x^0_{i+1}, \cdots , x^0_n)\). If the generalized Hukuhara derivative (gH-derivative, in short) of \(\mathbf {\Phi }_i\) exists at \(x^0_i\), which is defined by the existence of the limit

then we say that \(\mathbf{F }_{\mathbf{C }_v^k}\) has the ith gH-partial derivative at \(x_0\).

We denote the i-th gH-partial derivative of \(\mathbf{F }_{\mathbf{C }_v^k}\) at \(x_0\) by \(D_i \mathbf{F }_{\mathbf{C }_v^k}(x_0)\), \(i = 1, 2, \ldots , n\).

Note 1

It is evident that if \(D_i \mathbf{F }_{\mathbf{C }_v^k}(x_0)\) exists, then \({\mathbf \Phi }_i(x^0_i + h_i) \ominus _{gH} {\mathbf \Phi }_i(x^0_i)\) can be written as \(h_i \odot \left( D_i \mathbf{F }_{\mathbf{C }_v^k}(x_0) \oplus \mathbf E _i(\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) \right) \) where \(\lim _{\Vert h\Vert \rightarrow 0} \mathbf E _i (\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) = \mathbf{0 }\).

Definition 4

(gH-gradient). The gH-gradient. of an interval-valued function \(\mathbf{F }_{\mathbf{C }_v^k}\) at a point \(x_0 \in D\) is defined by the interval vector

We denote this gH-gradient by \(\nabla \mathbf{F }_{\mathbf{C }_v^k} (x_0)\).

Proposition 1

Let \(x_0\) be an element of D, \(\mathbf {\Phi }_i\) be the function as defined in Definition 3 and \(\mathbf {\Phi }_i(x) = \big [ \underline{\phi }_i(x), \overline{\phi }_i(x) \big ]\) for \(x \in D\). If the i-th gH-partial derivative of \(\mathbf{F }_{\mathbf{C }_v^k}\) exists at \(x_0\), then one of the following cases holds

-

(i)

\(D_i\mathbf{F }_{\mathbf{C }_v^k}(x_0) = \Big [ \min \big \{\underline{\phi }'_i(x_0),~\overline{\phi }'_i(x_0)\big \},~ \max \big \{\underline{\phi }'_i(x_0),~\overline{\phi }'_i(x_0)\big \} \Big ];\)

-

(ii)

\((\underline{\phi }_i)'_-(x_0)\), \((\underline{\phi }_{\,i})'_+(x_0)\), \((\overline{\phi }_i)'_-(x_0)\) and \((\overline{\phi }_{i})'_+(x_0)\) exist and satisfy \((\underline{\phi }_{\,i})'_-(x_0)\) \(=\) \((\overline{\phi }_i)'_+(x_0)\) and \((\underline{\phi }_{\,i})'_+(x_0)\) \(=\) \((\overline{\phi }_i)'_-(x_0)\). Moreover,

$$\begin{aligned} D_i \mathbf{F }_{\mathbf{C }_v^k}(x_0) = \left\{ \begin{array}{l} \left[ \min \left\{ (\underline{\phi }_i)'_-(x_0),~(\overline{\phi }_i)'_-(x_0)\big \},~ \max \big \{(\underline{\phi }_i)'_-(x_0),~(\overline{\phi }_i)'_-(x_0)\right\} \right] \\ \left[ \min \left\{ (\underline{\phi }_i)'_+(x_0),~(\overline{\phi }_i)'_+(x_0)\big \},~ \max \big \{(\underline{\phi }_i)'_+(x_0),~(\overline{\phi }_i)'_+(x_0)\right\} \right] . \end{array}\right. \end{aligned}$$

Proof

The proof is followed by Theorem 3 of the article referred in [30].

Definition 5

(gH-differentiability). A function \(\mathbf{F }_{\mathbf{C }_v^k}: D \rightarrow I(\mathbb {R})\) is said to be gH-differentiable at \(x_0\) in D if there exist two interval-valued functions \(\mathbf{E }(\mathbf{F }_{\mathbf{C }_v^k}(x_0); h)\) and \(\mathbf L _{x_0} : \mathbb {R}^n \rightarrow I(\mathbb {R})\) such that

for \(\Vert h\Vert < \delta \) for some \(\delta > 0\), where \(\lim _{\Vert h\Vert \rightarrow 0} \mathbf{E }(\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) = \mathbf 0 \) and \(\mathbf L _{x_0}\) is such a function that satisfies

-

(i)

\(\mathbf L _{x_0}(x + y) = \mathbf L _{x_0}(x) \oplus \mathbf L _{x_0}(y)\) for all \(x, y \in D\), and

-

(ii)

\(\mathbf L _{x_0}(cx) = c \odot \mathbf L _{x_0}(x)\) for all \(c \in \mathbb {R}\) and \(x \in D\).

Remark 1

It is worth noting that \(\lim _{\Vert h\Vert \rightarrow 0} \Big (\mathbf{F }_{\mathbf{C }_v^k}(x_0 + h) \ominus _{gH} \mathbf{F }_{\mathbf{C }_v^k}(x_0) \Big ) = \mathbf 0 \). Hence, every differentiable function \(\mathbf{F }_{\mathbf{C }_v^k}\) is continuous.

Theorem 1

Let \(\mathbf{F }_{\mathbf{C }_v^k}\) be gH-differentiable at \(x_0\). Then \(\mathbf L _{x_0}\) exists for every \(h = (h_1, h_2, \ldots , h_n)^T\) in \(\mathbb {R}^n\) and

Proof

At \(h = (0, 0, \cdots , 0)^{T} \in \mathbb {R}^n\), Eq. (3.3) is trivially true since both the sides are \(\mathbf{0 }\).

We assume that \(h \ne (0, 0, \cdots , 0)^{T}\).

Since \(\mathbf{F }_{\mathbf{C }_v^k}\) is differentiable at \(x_0\) we have

for \(\Vert h\Vert < \delta \) for some \(\delta > 0\), where \(\lim _{\Vert h\Vert \rightarrow 0} \mathbf{E }(\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) = \mathbf 0 \) with the desirable properties of \(\mathbf L _{x_0}(h)\), which are mentioned in Definition 5.

In Eq. (3.4), we take \(h = t v\) for some \(t \ne 0\), \(v \in \mathbb {R}^n\) and \(|t|\Vert v\Vert < \delta \). Then \(\Vert h\Vert < \delta \).

Equation (3.4) now gives us

In particular, choosing \(v = e_i\), the ith unit vector in the standard basis of \(\mathbb {R}^n\), we obtain

Thus \(D_i\mathbf{F }_{\mathbf{C }_v^k}\) exists at \(x_0\) and equals to \(\mathbf L _{x_0}(e_i)\).

Using the properties of \(\mathbf L _{x_0}(h)\), we now have

Note 2

It is worth mentioning that existence of gH-partial derivatives at \(x_0\) under the assumption of gH-differentiability is a part of the conclusion of Theorem 1. It is also to note that \(\mathbf L _{x_0}(h)\) can be written as \(h^T \odot \nabla \mathbf{F }_{\mathbf{C }_v^k}(x_0)\).

Theorem 2

Let \(x_0\) be an element of D. Let the gH-partial derivatives \(D_1\mathbf{F }_{\mathbf{C }_v^k}\), \(D_2\mathbf{F }_{\mathbf{C }_v^k}\), \(\cdots \), \(D_n\mathbf{F }_{\mathbf{C }_v^k}\) exist in some neighborhood \(N_\delta (x_0)\) and be continuous at \(x_0\). Then \(\mathbf{F }_{\mathbf{C }_v^k}\) is gH-differentiable at \(x_0\).

Proof

We will show that

where \(\mathbf{E }(\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) \rightarrow \mathbf{0 }\) as \(\Vert h\Vert \rightarrow 0\). This will prove the result.

Let \(\lambda = \Vert h\Vert \) and \(h = \lambda u\) where \(\Vert u\Vert = 1\). We take \(\lambda \) to be small enough so that \(x_0 + h \in N_\delta (x_0)\). Note that h can be expressed in terms of its components as \(u = u_1 e_1 + u_2e_2 + \cdots + u_n e_n\), where \(e_i\) is the i-th unit vector in the standard basis of \(\mathbb {R}^n\).

Let us write the gH-difference \(\mathbf{F }_{\mathbf{C }_v^k}(x_0 + h) \ominus _{gH} \mathbf{F }_{\mathbf{C }_v^k}(x_0)\) as the following sum

where \(w_0 = 0 \in \mathbb {R}^n\), \(w_i = w_{i-1} + u_i e_i\), \(i = 1, 2, \ldots , n\); here \(u_i \in \mathbb {R}\).

Note that

Therefore,

We note that \(\lim _{\Vert h\Vert \rightarrow 0} \mathbf{E }(\mathbf{F }_{\mathbf{C }_v^k}(x_0); h) = \lim _{\Vert h\Vert \rightarrow 0} \left( \mathbf E '_i(\mathbf{F }_{\mathbf{C }_v^k}(x_0); \lambda w_{i - 1}) \oplus u_i\odot \right. \left. \mathbf{E }_i(\mathbf{F }_{\mathbf{C }_v^k}(x_0); \lambda u_i)\right) = \mathbf 0 \). This completes the proof.\(\square \)

Proposition 2

Let at \(x_0 \in D\) and the function \(\mathbf{F }_{\mathbf{C }_v^k}\) is gH-differentiable. Then the real-valued function

is differentiable at \(x_0\).

Proof

As \(\mathbf{F }_{\mathbf{C }_v^k}\) is gH-differentiable at \(x_0 \in D\), \(\mathbf{F }_{\mathbf{C }_v^k}\) is continuous at \(x_0\).

We observe that each \(c_i(t_i) = \underline{c}_i + t_i \left( \overline{c}_i - \underline{c}_i\right) \) is a linear function in \(t_i\), \(0 \le t_i \le 1\), \(i = 1, 2, \cdots , k\).

The proof is now readily followed from the observation that \(f_{\left( c_1(t_1), c_2(t_2), \cdots , c_k(t_k)\right) ^T}(x_0)\) is continuous in \((t_1, t_2, \cdots , t_k)\) over the compact set \([0, 1]^k\).\(\square \)

In the rest of the paper, we write the function \(\varPsi (x)\) by the short expression \(\int _{t \in [0, 1]^k} f_{(c(t)}(x) \mathrm {d}t\) where c(t) is the vector \(\left( c_1(t_1), c_2(t_2), \cdots , c_k(t_k)\right) ^T\) and \(t = (t_1, t_2, \cdots , t_k)^T\).

Proposition 3

Let at \(x_0 \in D\), the function \(\mathbf{F }_{\mathbf{C }_v^k}\) is m-times gH-differentiable. Then, the real-valued function \(\varPsi \) is m-times differentiable at \(x_0\).

Proof

The proof follows from Proposition 2.\(\square \)

Definition 6

(gH-Hessian). Let the function \(\mathbf{F }_{\mathbf{C }_v^k}\) be twice gH-differentiable at \(x_0\). Then for each i, the function \(D_i\mathbf{F }_{\mathbf{C }_v^k}\) is gH-differentiable at \(x_0\). The second order partial gH-derivative can be calculated as \(D_{ij}^2 \mathbf{F }_{\mathbf{C }_v^k}= \left\{ \frac{\partial ^2 f_{c(t)}}{\partial x_i \partial x_j} \big | c(t) \in \mathbf{C }_v^k \right\} \). The gH-Hessian of \(\mathbf{F }_{\mathbf{C }_v^k}\) at \(x_0\) can be defined by the \(n \times n\) interval matrix

3.1 Interval optimization

In this section we consider the following unconstrained Interval Optimization Problem

where \(\mathbf F _{\mathbf{C }^k}: \mathbb {R}^n \rightarrow I(\mathbb {R})\) is a twice continuously gH-differentiable function.

Since \(\displaystyle \min _{x \in \mathbb {R}^n} \mathbf F _{\mathbf{C }_v^k} (x) = \displaystyle \min _{x \in \mathbb {R}^n} \big \{f_{c(t)}(x) \big | c(t) \in \mathbf{C }_v^k \big \}\), the IOP can be treated as a multi-objective optimization problem [2]. In order to investigate a solution concept for IOP, we use the following partial ordering relation, which is similar to the usual dominance relation between a pair of points in the Euclidean space.

Definition 7

(Dominance relation of intervals [2]). Let \(\mathbf A \) and \(\mathbf B \) be two intervals in \(I(\mathbb {R})\).

-

(i)

\(\mathbf B \) is said to be dominated by \(\mathbf A \) if \(a(t) \le b(t)\) for all \(t \in [0, 1]\), and then we write \(\mathbf A \preceq \mathbf B \);

-

(ii)

we say \(\mathbf A \ne \mathbf B \) if there exists a \(t_0 \in [0, 1]\) such that \(a(t_0) \ne b(t_0)\);

-

(iii)

\(\mathbf B \) is said to be strictly dominated by \(\mathbf A \) if \(\mathbf A \preceq \mathbf B \) and \(\mathbf A \ne \mathbf B \), and then we write \(\mathbf A \prec \mathbf B \).

Using this dominance relation on \(I(\mathbb {R})\), alike to the Pareto optimality or efficient solution concept in multi-objective optimization problems, we use the following efficient solution concept for IOPs.

Definition 8

(Efficient solution [2]) A feasible solution \(\bar{x} \in \mathbb {R}^n\) is called a local efficient solution of the IOP if there does not exist any \(x \in N_{\delta }(\bar{x})\) such that \(\mathbf F _{\mathbf{C }_v^k}(x) \prec \mathbf F _{\mathbf{C }_v^k}(\bar{x})\), where \(N_{\delta }(\bar{x})\) is a \(\delta \)-neighborhood of \(\bar{x}\).

If a solution \(\bar{x}\) is efficient, then we call \(\mathbf F _{\mathbf{C }_v^k}(\bar{x})\) as a non-dominated solution to IOP.

Theorem 3

(A characterization of efficient solutions). Let \(\mathbf F _{\mathbf{C }_v^k}\) be an interval-valued function. Let \(\bar{x}\) be a local minimizer of the function \(\varPsi (x) = \int _{t \in [0, 1]^k} f_{c(t)}(x) \mathrm {d}t\). Then \(\bar{x}\) is a local efficient solution of the IOP \(\displaystyle \min _{x \in \mathbb {R}^n} \mathbf F _{\mathbf{C }_v^k}(x)\).

Proof

If possible let \(\bar{x}\) is not a local efficient solution of the considered IOP. Then, for any \(\delta > 0\), there exists a point \(x_\delta \in N_\delta (\bar{x})\) such that \(\mathbf F _{\mathbf{C }_v^k}(x_\delta ) \prec \mathbf F _{\mathbf{C }_v^k}(\bar{x})\). Therefore, \(f_{c(t)}(x_\delta ) < f_{c(t)}(\bar{x})\) for all \(t \in [0,1]^k\). Hence

which is contradictory. This follows the result.\(\square \)

Remark 2

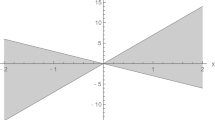

Theorem 3 gives a sufficient condition for a local efficient solution. It is to be noted that a point \(\bar{x} \in \mathbb {R}^n\) can be a local efficient solution to the IOP \(\displaystyle \min _{x \in \mathbb {R}^n} \mathbf F _{\mathbf{C }_v^k} (x)\), without being a local minimizer of either or both of the lower and the upper functions \(\underline{f}\) and \(\overline{f}\), respectively. For instance, if we take \(\mathbf{F }_{\mathbf{C }_v^2} (x_1, x_2)= [1, 3] x_1^2 \oplus [0, 1] x_1x_2 \oplus [-1, 3]x_2^2\), then (0, 0) is a local efficient solution; however, (0, 0) is not a point of local minimum of the lower function \(\underline{f}\), since for any \(\delta > 0\) we have \(\underline{f}(0, \delta ) = - \delta ^2 < 0 = \underline{f}(0, 0)\). It is also to worth-noting that (0, 0) is a local minimizer of the function \(\varPsi (x_1, x_2) = 2 x_1^2 + \tfrac{1}{2}x_1 x_2 + x_2^2\).

4 Newton method

In this section, a Newton method is developed to find an efficient solution of the IOP \(\displaystyle \min _{x \in \mathbb {R}^n} \mathbf F _{\mathbf{C }_v^k}(x)\). Towards this end, we assume the following conditions on the objective function \(\mathbf F _{\mathbf{C }_v^k}(x) : \mathbb {R}^n \rightarrow I(\mathbb {R})\):

-

(i)

at each term of the following generated sequence \(\{x^m\}\), \(\mathbf F _{\mathbf{C }_v^k}(x^m)\) is well defined, and

-

(ii)

the function \(\mathbf F _{\mathbf{C }_v^k}\) is twice continuously gH-differentiable at each point of \(\{x^m\}\).

Due to the assumption (ii), the values \(\nabla \mathbf F _{\mathbf{C }_v^k}(x^m)\) and \(\nabla ^2 \mathbf F _{\mathbf{C }_v^k}(x^m)\) are well defined. Let us consider the function

Taking into account Proposition 3, we can calculate \(\nabla \varPsi (x^m)\) and \(\nabla ^2\varPsi (x^m)\); also, \(\varPsi \) is twice differentiable.

With the help of Taylor’s formula, a quadratic approximation of \(\varPsi (x)\), at \(x^m\), gives us a function \(\phi \) as follows:

If \(\overline{x}\) is a minimizer of \(\varPsi \), for a given \(x^m\), let us try to approximate \(\overline{x}\) by a minimizer of \(\phi (x)\). The first order necessary condition of local optimality at \(\overline{x}\) gives us \(\nabla \phi (\overline{x}) = 0\). Hence,

Putting \(\overline{x} = x^{m+1}\), we arrive at the next sequential point

where \(\big [ \nabla ^2\varPsi (x^m)\big ]^{-1}\) is the inverse of the Hessian matrix \(\nabla ^2\varPsi (x^m)\).

Thus starting with an initial approximation to a minimizer of \(\phi \), we can generate a sequence of approximation to a minimizer of \(\varPsi \) through Eq. (4.1). We may employ the termination condition of the above process of generating the sequence \(\{x^m\}\) as \(\Vert x^{m+1} - x^m\Vert < \epsilon \) or \(\Vert \nabla \varPsi (x^m)\Vert < \epsilon \) where \(\epsilon \) is a pre-specified tolerance level of acceptable solution and \(\Vert .\Vert \) is the usual Euclidean norm on \(\mathbb {R}^n\).

The algorithmic implementation of the above Newton method is presented in Algorithm 1.

In the next subsection, we analyze the convergence of the developed Newton method. It is to be mentioned that if \(\{x^m\}\) converges and \(\displaystyle \lim _{m \rightarrow \infty } x^m = \overline{x}\), then by Theorem 3, \(\overline{x}\) is an efficient solution of the considered IOP.

4.1 Convergence result of the Newton method

In this section, we prove the convergence of the sequence \(\{x^m\}\) generated by Eq. (4.1).

At first, we show that for an interval-valued quadratic function, the sequence \(\{x^m\}\) in the Newton method converges in a single iteration. Then, the convergence rate of the sequence for any general non-quadratic and non-linear function is investigated.

Theorem 4

Let \(\mathbf F _{\mathbf{C }_v^k}(x) = \tfrac{1}{2} x^T \mathbf A x \oplus \mathbf B ^T x \oplus \mathbf D \) be an interval-valued quadratic function, where

- A :

-

is an \(n \times n\) interval matrix \(\big ( \mathbf A _{ij}\big )_{n \times n}\), \(\mathbf A _{ij} = \big [\underline{a}_{ij},~\overline{a}_{ij}\big ]\),

- B :

-

is an \(n \times 1\) interval matrix \(\big ( \mathbf B _{i}\big )_{n \times 1}\), \(\mathbf B _{i} = \big [\underline{b}_{i},~\overline{b}_{i}\big ]\) and

- D :

-

is the interval \([\underline{d},~\overline{d}]\).

Let the matrices \((\underline{a}_{ij})_{n\times n}\) and \((\overline{a}_{ij})_{n\times n}\) be positive definite. If we consider the IOP \(\displaystyle \min _{x \in \mathbf R ^n} \mathbf F _{\mathbf{C }_v^k}(x)\), then the Newton method converges to an efficient solution of the problem with a single iteration.

Proof

For the given objective function \(\mathbf F _{\mathbf{C }_v^k}(x) = \tfrac{1}{2} x^T \mathbf A x \oplus \mathbf B ^T x \oplus \mathbf D ~\) we have

where \(a_{ij}(t_{ij}) = \underline{a}_{ij} + t_{ij}\left( \overline{a}_{ij} - \underline{a}_{ij}\right) \), \(b_i(t_i) = \underline{b_i} + t_i (\overline{b_i} - \underline{b_i})\) and \(d(t') = \underline{d} + t'(\overline{d} - \underline{d})\); the parameters \(t_{ij}\), \(t_i\) and \(t'\) lie on [0, 1].

Let \(x^0\) be any arbitrary point of \(\mathbb {R}^n\).

With the help of a Taylor quadratic approximation of the functions \({f}_{c(t)}\), at the point \(x^0\), we obtain

If \(\overline{x}\) is a point such that \(\nabla \varPsi (\overline{x}) = 0\), then \(\overline{x}\) is a local minimum (indeed global) point of \(\varPsi \), since \(\nabla ^2 \varPsi (\overline{x}) = \big (\underline{a}_{ij} + \overline{a}_{ij}\big )_{n \times n}\) is a positive definite matrix. The equation \(\nabla \varPsi (\overline{x}) = 0\) gives

Theorem 3 shows that \(\overline{x}\) is an efficient solution to the considered IOP.

Next, we start applying Newton method with the point \({x}^0\) as the initial point. Then Eq. (4.1) gives the next iteration point as

This completes the proof.\(\square \)

Remark 3

Theorem 4 shows that if positive definiteness of an interval matrix is defined as that of Bhurjee and Panda [2], the proposed Newton method for a positive definite interval-valued quadratic form will converge to an efficient solution in one iteration. However, for a non-quadratic function, obviously, the same result cannot be established. Fortunately, appealing Propositions 2 and 3, for a twice continuously gH-differentiable function \(\mathbf F _{\mathbf{C }_v^k}\), near a local minimizer of \(\varPsi = \int _{t \in [0, 1]^k} f_{c(t)}\), \(\varPsi \) can be approximated in a quadratic form. Thus if the initial point of the algorithm is very close to a minimizer of \(\varPsi (x)\), the Newton method will converge in a very few iterations. The next Theorem 5 assures this convergence.

Theorem 5

Let \(\mathbf F _{\mathbf{C }_v^k}\) be a twice continuously gH-differentiable function and the m-th sequential point \(x^m\) generated by Eq. (4.1) be sufficiently close to a solution \(\overline{x}\) of \(\nabla \varPsi (x) = 0\). Let the Hessian \(\nabla ^2 \varPsi \) be positive definite at \(\overline{x}\) and each entry of \(\nabla ^2 {f}_{c(t)} (x) \) is bounded in a close enough neighborhood of \(\overline{x}\) for all c(t) in \({\mathbf{C }_v^k}\). Then, the sequence \(\{x^m\}\) in the Newton method is well defined and quadratically converges to \(\overline{x}\).

Proof

Let \(\epsilon ^m = x^m - \overline{x}\).

With the help of Taylor’s approximation of \(\nabla {f}_{c(t)}(x)\) at \(x^m\), we obtain

Since \(\mathbf F _{\mathbf{C }_v^k}\) is twice gH-differentiable, using Propositions 2 and 3, \(\varPsi \) is twice continuously differentiable. Thus a neighborhood property of a continuous function gives that \(\nabla ^2 \varPsi \) is positive definite in a neighborhood \(N(\overline{x})\) of \(\overline{x}\), because \(\nabla ^2 \varPsi \) is positive definite at \(\overline{x}\).

Therefore, in \(N(\overline{x})\), \(\nabla ^2\varPsi \) is invertible, and hence \(\{x^m\}\), defined by Eq. (4.1), is well defined.

According to the hypothesis, from Eq. (4.2), for all \(x \in N(\overline{x})\), we have

Let \(c = |c_1c_2| + 1\). Let \(\alpha \in (0, 1)\) be a number such that

If \(x^m \in N_{\tfrac{\alpha }{c}}(\overline{x})\), then \(\Vert \epsilon ^{m+1}\Vert < c \Vert \epsilon ^{m}\Vert ^2 < \tfrac{\alpha ^2}{c} < \tfrac{\alpha }{c}\). Thus, \(x^{m+1} \in N_{\tfrac{\alpha }{c}}(\overline{x})\).

Using the principle of induction, we note that Newton iteration sequence \(\{x^m\}\) is well-defined and \(\Vert \epsilon ^m\Vert \rightarrow 0\) as \(m \rightarrow \infty \). Therefore, under the stated conditions, \(\{x^m\}\), given by Eq. (4.1) converges quadratically to \(\overline{x}\).\(\square \)

4.2 An updated Newton method

Although Theorem 5 shows quadratic rate of convergence of the Newton sequence \(\{x^m\}\), unless the starting point and hence \(x^m\) is sufficiently closer to \(\overline{x}\) such that \(x^m\) lies in \(N_{\tfrac{\alpha }{c}}(\overline{x})\), the function \(\nabla ^2\varPsi \) may not be positive definite and \(\{x^m\}\) may not converge to \(\overline{x}\). Therefore, the developed Newton method is a local method. In order to excel the local convergence to a global convergence, we can update Eq. (4.1) as follows so as to maintain a sufficient decrease of the function \(\varPsi \). Instead of Eq. (4.1), we consider

where \(\alpha _m = \displaystyle {{\arg \min }_{\alpha \ge 0}}~~~ \varPsi (x^m + \alpha d^m)\) and \(d^m = - [\nabla ^2 \varPsi (x^m)]^{-1}~\nabla \varPsi (x^m)\). We call the sequence \(\{x^m\}\), generated in Eq. (4.3), as an updated Newton sequence.

The following theorem proves global convergence of the sequence generated by Eq. (4.3).

Theorem 6

Let the function \(\mathbf F _{\mathbf{C }_v^k}: \mathbb {R}^n \rightarrow I(\mathbb {R})\) be twice continuously gH-differentiable. Let \(x^0\) be any point of \(\mathbb {R}^n\). We consider \(x^0\) as the starting point of the updated Newton iteration \(\{x^m\}\) as in Eq. (4.3). Let the angle \(\theta _m = \angle (-\nabla \varPsi (x^m), d^m)\) be uniformly bounded away from \(\tfrac{\pi }{2}\), i.e., \(\theta ^m \le \tfrac{\pi }{2} - \theta \) for some \(\theta >0\) for all m in \(\mathbb {N}\). Then, \(\{x^m\}\) converges to an efficient solution \(\overline{x}\) of \(\displaystyle \min _{x \in \mathbb {R}^n} \mathbf F _{\mathbf{C }_v^k}(x)\) where \(\overline{x}\) lies in \(\mathcal {S}(\mathbf F _{\mathbf{C }_v^k}) = \{x\in \mathbb {R}^n: \mathbf F _{\mathbf{C }_v^k}(x) \preceq \mathbf F _{\mathbf{C }_v^k}(x_0)\}\).

Proof

We observe that the set \(\mathcal {S}(\mathbf F _{\mathbf{C }_v^k})\) is a compact subset of \(\mathbb {R}^n\), because

and each of \(f_{c(t)}\) is continuous for all t in \([0,1]^k\).

Since \(\varPsi (x)\) is monotonically descent along the direction of \(d^m\) and \(x_0 \in \mathcal {S}(\mathbf F _{\mathbf{C }_v^k})\), we have \(x^m \in \mathcal {S}(\mathbf F _{\mathbf{C }_v^k})\).

Hence there exists a limit point \(\overline{x}\) in \(\mathcal {S}(\mathbf F _{\mathbf{C }_v^k})\) with \(\lim _{m \rightarrow \infty } x^m = \overline{x}\); further, \(\lim _{m \rightarrow \infty } \varPsi (x^m) = \varPsi (\overline{x})\) and \(\lim _{m \rightarrow \infty } \nabla \varPsi (x^m) = \nabla \varPsi (\overline{x})\).

We will show that \(\nabla \varPsi (\overline{x}) = 0\).

If possible let \(\nabla \varPsi (\overline{x}) \ne 0\) and hence \(\Vert \nabla \varPsi (x^m)\Vert \ge \epsilon \) for all m in \(\mathbb {N}\) for some \(\epsilon > 0\).

Then,

Here we observe that for some \(\xi ^m\), lies in the line segment joining \(x^m\) and \(x^m + \alpha d^m\),

As \(\nabla \varPsi (x)\) is continuous on \(\mathcal {S}(\mathbf F _{\mathbf{C }_v^k})\) and \(\mathcal {S}(\mathbf F _{\mathbf{C }_v^k})\) is compact, \(\nabla \varPsi \) is uniformly continuous on \(\mathcal {S}(\mathbf F _{\mathbf{C }_v^k})\). Hence, there exists some \(c > 0\) such that satisfying \(0 \le \alpha \Vert d^m\Vert \le c\), we have \(\Vert \nabla \varPsi (\xi ^m) - \nabla \varPsi (x^m)\Vert \le \tfrac{\epsilon _0}{2}\).

Therefore,

which contradicts that \(\lim _{m \rightarrow \infty } \varPsi (x^m) = \varPsi (\overline{x})\). Hence \(\nabla \varPsi (\overline{x}) = 0\) and Theorem 3 completes the proof.\(\square \)

An algorithmic implementation of the above updated Newton method is given below

4.3 Illustrative examples

Example 3

Consider the following quadratic IOP:

In this example,

It is to observe that for any \(t \in [0, 1]^5\), \(f_{c(t)}\) is twice differentiable, and hence the function \(\varPsi (x_1, x_2)\) is a twice gH-differentiable function. Here,

Application of Algorithm 1 produces the Table 1 with the initial point \((x_1^0, x_2^0) = (3, 2)\) and the level of accuracy \(\epsilon = 10^{-4}\).

Table 1 shows that the point \((\overline{x}_1, \overline{x}_2) = (-0.367, -0.063)\) is an efficient solution of the IOP under consideration. Since the objective function is a quadratic interval-valued function, the proposed Newton method converges at the single iteration, as assured by Theorem 4.

Example 4

In this example we consider the following non-linear and non-quadratic IOP:

With the help of the parametric representation of intervals, for any \(t = (t_1, t_2, t_3, t_4, t_5) \in [0, 1]^5\) we obtain

Here, the function \(\mathbf F _{\mathbf{C }_v^5}(x_1, x_2)\) is a twice gH-differentiable function and

We now calculate the sequence \(\{x^m\}\) to produce an efficient solution \(\overline{x}\) using the following equation:

where

and

Applying Algorithm 1, with the accuracy level of \(\epsilon = 10^{-4}\) and the initial point \((x_1^0, x_2^0) = (-2,4)\), we get the following solution Table 2.

Table 2 shows that the point to which the sequence \(\{x^m\}\) converges in five iterations producing \((\overline{x_1}, \overline{x_2}) = (0, 2)\) as an efficient solution of the considered IOP.

Example 5

In this example we consider to solve Example 4 through the updated Newton method (Algorithm 2).

As observed in Table 2, with the accuracy level of \(\epsilon = 10^{-4}\) and the initial point \((x_1^0, x_2^0) = (\)-2, 4), the proposed Newton Algorithm 1 converges in five iterations. Surprisingly, the modified Newton Algorithm 2 converges in a single iteration as shown in the Table 3.

For this example, we can also observe that with the initial point \((x_1^0, x_2^0) = (-3, 6)\), the proposed Newton method (Algorithm 1) does not converge, because it is not sufficiently close to any efficient solution of the problem. The updated Newton method (Algorithm 2), however, with the same initial point, converges in three iterations with the level of accuracy \(\epsilon = 10^{-4}\). Algorithm 2 produces the following solution Table 4 in this case.

5 Conclusion

The contribution of the paper is two-fold. First, in this paper, a Newton method has been proposed to capture an efficient solution of an IOP. Second, towards developing the method, the notions of gH-differentiability and gH-partial derivative of interval-valued function are defined and interrelated. It is shown that the proposed Newton method for IOPs has a quadratic rate of convergence, and for an interval-valued quadratic function, the method converges with a single iteration. It order to improve the local convergence of the proposed Newton method to a global convergence, an updated Newton method is also demonstrated. Several numerical examples have been given towards illustrating the methodologies.

In the updated Newton sequence \(\{x^m\}\) (refer to Eq. (4.3)) an exact line search technique has been employed to promote that sequence to have a global convergence. An inexact line search [34] could have been also used in place of the exact one. A future research may be done in this topic. However, there is a reason to employ the exact line search. The proposed Newton method assumes that the objective function is twice continuously gH-differentiable. Under the differentiability assumption, an exact line search is usually preferable, and often outperforms an inexact line search technique. In order to further improve the proposed Newton method, a future research may be done similar to proposing quasi-Newton methods in classical optimization.

It is important to observe that this article intended to characterize only one efficient solution of an IOP. Alike to capturing the complete Pareto set in multi-objective optimization [13–15] the characterization of the complete efficient solution set for an IOP is not performed yet in the literature. This topic may be covered in the next step of this research work. It is worth mentioning here that the efficient solution concept solely depend on the ordering being used. In analogy to the methodology proposed here, future researchers can modify the steps in the proposed algorithms according to the different ordering or preference indexes [35].

It is also to note that in the literature efficient solutions of IOPs have not been observed form geometrical viewpoint as it is done for fuzzy multi-objective optimization problems [5, 7, 8, 10–12]. Future researchers can perform some research work on the topic of geometrical observation of the efficient points that are obtained through the results of this paper.

References

Ahmad, I., Jayswal, A., Banerjee, J.: On interval-valued optimization problems with generalized invex functions. J. Ineq. Appl. 2013, 313 (2013)

Bhurjee, A.K., Panda, G.: Efficient solution of interval optimization problem. Math. Methods Oper. Res. 76(3), 273–288 (2012)

Bhurjee, A.K., Panda, G.: Multi-objective interval fractional programming problems: an approach for obtaining efficient solutions. Opsearch 52(1), 156–167 (2015)

Bhurjee, A.K., Padhan, S.K.: Optimality conditions and duality results for non-differentiable interval optimization problems. J. Appl. Math. Comput. 50(1), 59–71 (2016)

Chakraborty, D., Ghosh, D.: Analytical fuzzy plane geometry II. Fuzzy Sets Syst. 243, 84–109 (2014)

Chalco-Cano, Y., Rufian-Lizana, A., Roman-Flores, H., Jimenez-Gamero, M.D.: Calculus for interval-valued functions using generalized Hukuhara derivative and applications. Fuzzy Sets Syst. 219, 49–67 (2013)

Ghosh, D., Chakraborty, D.: A method for capturing the entire fuzzy non-dominated set of a fuzzy multi-criteria optimization problem. Fuzzy Sets Syst. 272, 1–29 (2015)

Ghosh, D., Chakraborty, D.: A method for capturing the entire fuzzy non-dominated set of a fuzzy multi-criteria optimization problem. J. Intell. Fuzzy Syst. 26(2014), 1223–1234 (2014)

Ghosh, D., Chakraborty, D.: Quadratic interpolation technique to minimize univariable fuzzy functions. Int. J. Appl. Comput. Math. (2015). Accepted Manuscript

Ghosh, D., Chakraborty, D.: Analytical fuzzy plane geometry I. Fuzzy Sets Syst. 209, 66–83 (2012)

Ghosh, D., Chakraborty, D.: Analytical fuzzy plane geometry III. Fuzzy Sets Syst. 283, 83–107 (2016)

Ghosh, D., Chakraborty, D.: On general form of fuzzy lines and its application in fuzzy line fitting. J. Intell. Fuzzy Syst. 29(2015), 659–671 (2015)

Ghosh, D., Chakraborty, D.: A direction based classical method to obtain complete Pareto set of multi-criteria optimization problems. Opsearch 52(2), 340–366 (2015)

Ghosh, D., Chakraborty, D.: A new Pareto set generating method for multi-criteria optimization problems. Oper. Res. Lett. 42, 514–521 (2014)

Ghosh, D., Chakraborty, D.: Ideal cone: a new method to generate complete pareto set of multi-criteria optimization problems, mathematics and computing. Springer Proc. Math. Stat. 91, 171–190 (2013)

Hansen, W.G.E.: Global Optimization Using Interval Analysis. Marcel Dekker Inc., New York (2004)

Hladik, M.: Interval Linear Programming: A Survey. Nova Science Publishers, New York (2012)

Hu, B., Wang, S.: A novel approach in uncertain programming. Part I: new arithmetic and order relation of interval numbers. J. Ind. Manag. Optim. 2(4), 351–371 (2006)

Hu, B., Wang, S.: A novel approach in uncertain programming. Part II: a class of constrained nonlinear programming with interval objective function. J. Ind Manag. Optim. 2(4), 373–385 (2006)

Hukuhara, M.: Integration des applications mesurables dont la valeur est un compact convexe. Funkc Ekvacioj 10, 205–223 (1967)

Ishibuchi, H., Tanaka, H.: Multiobjective programming in optimization of the interval objective function. Eur. J. Oper. Res. 48(2), 219–225 (1990)

Jana, M., Panda, G.: Solution of nonlinear interval vector optimization problem. Oper. Res. Int. J. 14, 71–85 (2014)

Jayswal, A., Stancu-Minasian, I., Ahmed, I.: On sufficiency and duality for a class of interval-valued programming problems. Appl. Math. Comput. 218(8), 4119–4127 (2011)

Jeyakumar, V., Li, G.Y.: Robust duality for fractional programming problems with constraint-wise data uncertainty. Eur. J. Oper. Res. 151(2), 292–303 (2011)

Jiang, C., Han, X., Liu, G.R.: A nonlinear interval number programming method for uncertain optimization problems. Eur. J. Oper. Res. 188(1), 1–13 (2008)

Kumar, P., Panda, G., Gupta, U.C.: Generalized quadratic programming problem with interval uncertainty, FUZZ IEEE 2013. In: 2013 IEEE international conference on Fuzzy systems (FUZZ), (2013)

Li, W., Tian, X.: Numerical solution method for general interval quadratic programming. Appl. Math. Comput. 202(2), 589–595 (2008)

Liu, S.T., Wang, R.T.: A numerical solution method to interval quadratic programming. Appl. Math. Comput. 189(2), 1274–1281 (2007)

Luciana, T.G., Barrosb, L.C.: A note on the generalized difference and the generalized differentiability. Fuzzy Sets Syst. 280, 142–145 (2015)

Markov, S.: Calculus for interval function of a real variable. Computing 22, 325–337 (1979)

Moore, R.: Interval Analysis. Prentice-Hall, Englewood Cliffs (1966)

Moore, R.E., Kearfott, R.B., Cloud, M.J.: Introduction to Interval Analysis. SIAM, Philadelphia (2009)

Neumaier, A.: Interval Methods for Systems of Equation, Encyclopedia of Mathematics and its Applications, vol. 37. Cambridge University Press, Cambridge (1990)

Nocedal, J., Stephen, W.: Numerical Optimization, 2nd edn. Springer Science & Business Media, New York (2006)

Sengupta, A., Pal, T.K.: Fuzzy Preference Ordering of Interval Numbers in Decision Problems. Series on Studies in Fuzziness and Soft Computing, vol. 238. Springer, Berlin (2009)

Stahl, T.: Interval methods for bounding the range of polynomials and solving sytems of nonlinear equation, PhD thesis, Johannes Kepler University Linz, Austria (1994)

Stefanini, L.: A Generalization of Hukuhara Difference, Soft Methods for Handling Variability and Imprecision. Series on Advances in Soft Computing, vol. 48, pp. 203–210. Springer, New York (2008)

Stefanini, L.: A generalization of Hukuhara difference and division for interval and fuzzy arithmetic. Fuzzy Sets Syst. 161, 1564–1584 (2010)

Wang, H., Zhang, R.: Optimality conditions and duality for arcwise connected interval optimization problems. Opsearch 52(4), 870–883 (2015)

Wu, H.C.: On interval-valued nonlinear programming problem. J. Math. Anal. Appl. 338(1), 299–316 (2008)

Wu, H.C.: Duality theory for optimization problems with interval-valued objetive fuction. J. Optim. Theory Appl. 144(3), 615–628 (2009)

Wu, H.C.: Duality theory in interval-valued linear programming problems. J. Optim. Theory Appl. 150, 298–316 (2011)

Acknowledgments

The author is grateful to the editors and anonymous reviewers for their valuable suggestions. The financial supports through Research Initiation Grant and Outstanding Potential for Excellence in Research and Academics Award 2014, BITS Pilani, Hyderabad Campus, India are also thankfully acknowledged.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ghosh, D. Newton method to obtain efficient solutions of the optimization problems with interval-valued objective functions. J. Appl. Math. Comput. 53, 709–731 (2017). https://doi.org/10.1007/s12190-016-0990-2

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12190-016-0990-2