Abstract

Teaching-Learning-Based Optimization is one of the well-known metaheuristic algorithm in the research industry. Recently, various population-based algorithms have been developed for solving optimization problems. In this paper, a random scale factor approach is proposed to modify the simple TLBO algorithm. Modified Teaching-Learning-Based Optimization with Opposite-Based-Learning algorithm is applied to solve the Permutation Flow-Shop-Scheduling Problem with the purpose of minimizing the makespan. The OBL approach is used to enhance the quality of the initial population and convergence speed. PFSSP is used extensively for solving scheduling problem, which belongs to the category of NP-hard optimization problems. First, MTLBO is developed to effectively determine the PFSSP using the Largest Order Value rule-based random key, so that individual job schedules are converted into discrete schedules. Second, new initial populations are generated in MTLBO using the Nawaz–Enscore–Ham heuristic mechanism. Finally, the local exploitation ability is enhanced in the MTLBO using effective swap, insert and inverse structures. The performance of proposed algorithm is validated using ten benchmark functions and the Wilcoxon rank test. The computational results and comparisons indicate that the proposed algorithm outperformed over five well-known datasets such as Carlier, Reeves, Heller, Taillards and VRF benchmark test functions, compared to other metaheuristic algorithms. The p-value indicated the significance and superiority of the proposed algorithm over other metaheuristic algorithms.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Scheduling is a decision-making process, perform vital role in services, manufacturing and production industry. It is traditionally defined as a process of allocating job sequence on different machines that reduces the makespan (completion-time) of a job sequence [1]. Scheduling problems are found in many real-world industries such as textile [2], discrete manufacturing industries [3], electronics [4], chemical [5], production of concrete [6], manufacturing of photographic film [7], iron and steel [8], and internet service architecture [9]. Typically, scheduling problems are classified on the basis of production environments such as single machines, flow-shop, parallel-machines, open-shop, cyclic flow shop and Flexible Job-Shop (FJS). PFSSP is an ultimate engaging research problem in the manufacturing industry with an objective to minimize the total completion time. It is one of the simplified versions of the typical Flow Shop Scheduling Problem (FSSP). The first PFSSP pioneering work was carried out on two machines, with a view to minimize the completion-time [10]. In terms of complexity estimates, PFSSP is found to be NP hard optimization problem [11].

The approaches to solve PFSSP can be broadly grouped into three categories: exact, heuristics and metaheuristic algorithms. The exact-algorithms: dynamic-programming [12], branch and bound techniques [13], and linear-programming [14], that can be successively adopted for solving small problems. However, these techniques do not produce promising outcomes for large instances in reasonable time. Therefore, heuristic techniques are generally used for large instances. The heuristic techniques are simple, fast and can be used to frame scheduling solutions [15]. A solution can be obtained in stipulated time using the heuristic algorithms, but the produces outcomes may not be optimal. Hence, metaheuristic algorithms are proposed over heuristic algorithms. An existing metaheuristic algorithms for any given problem provide balance between diversification and intensification [16]. There are many nature-inspired algorithms that are used to solve the PFSSP such as genetic algorithm [17], ant colony optimization [18], differential evolution [19], harmony search [20], firefly algorithm [21], bat algorithm [22], cuckoo search [23], TLBO [24], grey wolf algorithm [25], earthworm optimization algorithm [26], monarch butterfly optimization [27], artificial bee colony algorithm [28], jaya algorithm [29], moth search algorithm [30], return-cost-based binary FFA (Rc-BBFA) [31] etc. To overcome the problems faced by single metaheuristic algorithm, recently hybridization of algorithms i.e. combination of two or more local or global search algorithms are used. This also results in increased performance of the metaheuristic algorithms. As the hybridization algorithms can find high-quality feasible outcomes in reasonable time, they are recently used in research development of PFSSP. The advantages for selection of TLBO algorithm, it is easy to implement and easily applicable for practical application. Selection of parameter tune problem is not here. It is not required any mutation and crossover parameters as like genetic algorithm. The performance of TLBO is better than comparative other metaheuristic algorithms. The proper precaution have to take for TLBO algorithm as convergence rate is quickly that is main disadvantage of this algorithm.

In recent decades, researchers are focusing on the hybridization of the genetic algorithm in search of minimum makespan of PFSSP [32]. Hybrid Genetic Algorithm (HGA) [33] was proposed to solve sequence independence of PFSSP. The objective of Particle Swarm Optimization (PSO) for PFSSP, i.e. PSOVNS [34] was to minimize the makespan and the total-flow time. Also, the effective PSO with local search simulated annealing algorithm was used for PFSSP, which balance the exploitation and exploration [35]. Tabu-search-algorithm (TSA) hybridized with improved global search algorithm for solving PFSSP [36]. Hybrid Differential Evolution (HDE) algorithm combined with greedy based local search and Individual Improving Scheme (IIS) was developed to elevate the feasible outcomes quality [37]. The novel Hybrid Cuckoo Search (HCS) was proposed to minimize the makespan and total flow time for solving PFSSP [38]. Enhanced Migrating Bird Optimization (MBO) algorithm was also used to solve a scheduling problem [39]. For minimizing the total flowtime, distributed PFSSP was introduced [40]. While, Improved Migrating Birds Optimization (IMMBO) and a Hybrid-multi-objective Discrete Artificial-Bee-Colony (HDABC) were used to minimize the makespan [41]. Flow shop-scheduling problem to minimize makespan by modified fruit-fly optimization algorithm [42]

Teaching-Learning-Based Optimization (TLBO) was suggested by Rao et al. [24]. It was inspired from the teaching-learning process and it proved to be efficient. The main advantage of using TLBO is that, it is free from any algorithm-parameters. Due to its characteristics, the TLBO algorithm has been gaining recognition in the research industry. The electrochemical discharge machining technique and electrochemical-machining mechanism have been designed using TLBO algorithm [43]. The efficiency of TLBO algorithm was tested on flow-shop and Job-Shop-Scheduling Problem (JSSP) [44]. Shao et al. [45] applied hybrid TLBO algorithm combined with simulated annealing for solving PFSSP. The discrete TLBO algorithm (DTLBO) for solving flowshop rescheduling problem [46]. The probabilistic TLBO algorithm was used to solve no-wait flow-shop scheduling problem (NWFSSO) with high completion-time [47]. The flexible flow shop scheduling problems were solved using the improved TLBO and the JAYA algorithm [48]. The Whale-Optimization-Algorithm (WOA) with a local search approach was also used for solving PFSSP [49].

With this background, the primary contributions of this paper are listed below:

-

1.

MTLBO with OBL method is applied for solving PFSSP. The OBL approach is used to enhance the quality of initial populations (individuals). Using Largest-Order-Value (LOV) rule based random key, MTLBO algorithm is developed to solve PFSSP effectively by changing individuals into discrete job sequences. Nawaz–Encore–Ham (NEH) heuristic method is combined with discrete job sequence to initialize a high quality and a diverse population. Moreover, to enhance the local exploitation ability, the effective swap, insert and inverse structures are included into the MTLBO algorithm.

-

2.

In order to evaluate and analyze the effectiveness of the proposed algorithm, computational experiments are conducted using ten benchmark test functions and Wilcoxon signed-rank test.

-

3.

Comprehensive extensive experiments are conducted using different well-known datasets such as Carlier, Reeves, Heller, Taillards and VRF benchmark test functions.

-

4.

To validate its performance, the MTLBO algorithm is compared with various well-known metaheuristic algorithms.

The structure of the paper is as follows: Sect. 2 presents the description of the PFSSP. Section 3 provides the related work of basic TLBO algorithm. Section 4 describes the proposed algorithm MTLBO. Section 5 presents computational results and discussion and at last Sect. 6 discusses the conclusions. The symbols used to describe the PFSSP and along with their explanations are shown in Table 1.

2 The description of the PFSSP

In the PFSSP, the set of n jobs \((j=j_1,j_2,\ldots ,j_n)\) are consecutively handled on the set of m machines \((M=M_1,M_2,\ldots ,M_m)\). As each machine can process only one job at a time, the handling time for each job is determined as \(P_{i,j}=(i=1,2,\ldots .,n,\) \(j=1,2,\ldots ,m)\). Each job is given a task or operation on each machine and is represented as \(T_j=T_{j1},T_{j2},\ldots ,T_{jm}\). The sequence followed by each job over each machine is the same. A schedule \(\varPi \) is denoted as a permutation \((\varPi =\pi _1,\pi _2,\ldots ,\pi _n)\), where \(\varPi \) represents set of all permutations for n jobs. Let us assume \(Z(\pi _i,m)\) to be the makespan of job (\(\pi _i\)) over the machine m. \(S_{1,\pi _i-1,\pi _i,}\) indicates initial time of job-permutation. Let us assume that \(P_{\pi _i,j}\) represents the handling time of job (\(\pi _i\)) over the machine j. Hence, the PFSSP can be mathematically represented as follows:

where n indicates the total number of jobs, m denotes the machines, P represents PFSSP and \(Z_{max}\) is the goal to minimize makespan of the last job on the last machine.

In PFSSP, main goal is minimization of the maximum makespan, which can be determined as

The main goal of PFSSP is to determine the optimal permutation from the set of all permutations:

where \(\pi ^{opt}\) denotes the optimal permutation.

3 Basic TLBO algorithm

Teaching-learning process is widely known since the ancient times, where one individual (learner) tries to learn from other individual. It has been applied in optimization of two thermoelectric coolers [50], cluster data [51] and image processing [52].

TLBO [24]is a population-based metaheuristic algorithm, that has two main fundamental phases: a teacher-phase and the learner-phase. In teacher phase of the algorithm, teacher teaches or shares their knowledge to learners (students). In the learning phase, the group of students or learners enhance their knowledge through teacher or interaction among themselves. Figure 1 illustrates the schematic flow of basic TLBO algorithm.

3.1 Teacher-phase (TP)

It invokes the exploration phase of TLBO where students learn from the teacher. In this stage, teacher attempts to enhance the mean result of the class. For the objective function f(Z) with N-dimensional variable, \(Z=(z_{i,1},z_{i,2},\ldots ,z_{i,N})\) represents the position of ith learner (or student) solution for N-dimensional problem. Thus, the class mean position with P learners (where P is the size of population) is represented as

The students with the optimal value (i.e. best value) in current generation are represented as \(Z_{teacher}\). The position of each student is shown in Eq. (8)

where \({Z}_{i,new}\) and \({Z}_{i,old}\) are the ith students (learners) new and old positions respectively. Rand is dispersed random number in the range of [0, 1]. \({T}_{F}\) is teacher-factor which decided average value to be altered. \({T}_{F}\) can be any 1 or 2, it can be generated randomly using Eq. (9) as

where \({T}_{F}\) is achieved randomly when the TLBO in range of [1–2], where “1” represent to no raise in proficiency-level and “2” represents to entire transmission of knowledge.

3.2 Learner-phase (LP)

The learner-phase is an exploitation phase of TLBO algorithm, where a learner randomly collaborates with other learners to improve his/her proficiency. Student (Learner) \(X_i\), learns new things if another student \(X_{j} (j \ne i)\) has more proficiency than him/her. Learning process can be depicted mathematically as

where rand is a random number in the range of [0, 1], \(Z_{i,new}\) it gives the preferable feasible value.

3.3 Duplication elimination

A duplicate solution should not be kept in the population as it may cause premature convergence of the algorithm [53, 54]. The duplication elimination strategy is given in Fig. 2.

4 Proposed MTLBO approach

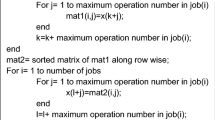

In this section, the proposed MTLBO is explained with the local search strategy for makespan minimising the makespan in PFSSP. The proposed algorithm flowchart is given in Fig. 3. The symbol and meaning of the proposed algorithm is shown in Table 2.

4.1 Representation of solution for MTLBO algorithm

The basic TLBO algorithm was initially proposed for solving different continuous-optimization examples. However, the PFSSP is a discrete optimization technique. The basic TLBO algorithm, therefore cannot be used to solve PFSSP directly. So, the basic TLBO has to be modified using the concept of differential evolution with random scale factor (DERSF) [55]. This term is scaled by scale factor (R) in a random way in the range (0.5, 1) and it is given as

To apply MTLBO to PFSSP, one of the key concerns is to create mapping rule between job sequence and the vector of individuals. To convert individual \(Z_i=[z_{i,1}, z_{i,2},\ldots , z_{i,n}]\) into job permutation \(\varPi _i=[\pi _{i,1},\pi _{i,2},\ldots ,\pi _{i,n}]\), the following random keys (called as robust representation [56]) are used namely the Smallest Positive Value (SPV) [34], Largest Ranked Value (LRV) [57] and Largest Order Value (LOV) [58]. The SPV, LRV and LOV rules are used in existing research [38]. The job sequence relationship scheme plays a vital role in the developmental mechanism of the proposed MTLBO. If exchange of job sequence is not proper in PFSSP, then it will increase the computational time of the proposed algorithm.

In this study, LOV rule is utilized. LOV is a very simple method, which is focused on random-key presentation. In LOV rule, by ranking the individual of \(Z_i=[z_{i,1},z_{i,2},\ldots .,z_{i,n}]\) with decreasing order, temp job permutation sequence is generated as \(\sigma _i=[\sigma _{i,1},\sigma _{i,2},\ldots ,\sigma _{i,n}] \). Then a job sequence permutation is obtained as \(\varPi _i=[\pi _{i,1},\pi _{i,2},\ldots ,\pi _{i,n}]\) using Eq. (12).

where \(j\in [1,\ldots .,n]\). In Table 3, LOV-rule is described with an easy example (n = 6) where the solution representation of individual vector (\(Z_i\)) is obtained with job dimension, position (location) and job permutation.

For \(Z_i\)= [0.6, 0.12, 1.8, − 0.48, 1.7, − 1.06], it can be seen that \(z_{i,3}\) has the largest value, and so it is selected as the first order of a job permutation. After that \(z_{i,5}\), \(z_{i,1}\), \(z_{i,4}\) and \(z_{i,6}\) are preferred as job-permutation. Hence, the job sequence \(\varPi _i\) = [3, 5, 1, 2, 4, 6] is obtained. From this formulation, the conversion using LOV rule is clear, which makes MTLBO suitable for solving PFSSP.

4.2 Population initialization

This phase plays a vital role and it is applied uniformly and randomly. A population vector \(Z_i=[z_{i,1},z_{i,2},\ldots ,z_{i,n}]\) is randomly generated. Nawaz–Enscore–Ham (NEH) method is used for producing good initial population. NEH is the heuristic method to obtain optimal solution to the PFSSP. The NEH steps are described below:

-

Step 1:

All jobs are arranged in non-increasing procedure based on their overall handling time on all machines. Then job permutation \(\varPi (i)=[\pi (1),\pi (2),\ldots .,\pi (3)] \) is achieved.

-

Step 2:

Select any two jobs permutations, for examples \(\pi (1)\) and \(\pi (2)\). Then, all forms of permutation records of these two jobs are calculated and then an optimal order is taken.

-

Step 3:

Select any job permutation \(\pi (j)\),(j=3,4,....,n) and determine best permutation of jobs by assigning the available position to which they had scheduled. The optimal arrangement is selected from the next iteration.

The outcome created by NEH algorithm is a discrete job-permutation. To implement the MTLBO algorithm, the job permutation sequences are converted into individual by using Eq. (13), the conversion process is performed as:

where \(I_{NEH,i}\) is job-index in ith dimension of job-permutation (\(\varPi \)). \(Z_{max,i}\) and \(Z_{min,i}\) are maximum and minimum bounds. \(Z_{NEH,i}\) is the individual value of given problem at the ith dimension.

Let us consider that all job-permutation (\(\varPi \)) achieved by NEH heuristic approach are given as \(\varPi \) = [2, 6, 3, 5, 1, 4]. Hence, the index of first carry on job sequence is given as value 5, index of second carry on job sequence is given as value 1. The index sequence (\(I_{NEH,i}\)) and individual value (\(Z_{NEH,i}\)) are shown in Table 4.

4.3 Opposition based learning

Many optimization techniques are developed using OBL. OBL is used to enhance the performance and search ability to find a solution of population based algorithms [59]. Rahnamayan et al. [60] first proposed OBL strategy in an optimization method. The main purpose of OBL is to choose a better current candidate outcome by simultaneous evaluation of the current outcomes and its opposite outcomes. Mainly, OBL is applied to any population based algorithm during two phases: initial population and evolutionary phase. We can improve the initial population of proposed MTLBO algorithm using OBL. The concept of OBL depends on opposite number and opposite point.

Opposite number : Let a \(z \in [x, y]\) be any real number \(\mathbb {R}\). its opposite number \(z^{opp}\) is given by

Opposite point: Let \(Y(z_1,z_2,\ldots ,z_n)\) be point in N- dimensional space where \(z_i=[x,y];\). The opposite point of opposite population (\(z_{1}^{opp},z_{2}^{opp},\ldots ,z_{n}^{opp}\)) is given as:

4.3.1 GOBL optimization in MTLBO algorithm

The convergence rate of MTLBO algorithm can be improved with the help of GOBL. Wang et al. [61] proposed Generalized OBL model (GOBL). The proposed MTLBO approach uses this method to improve the performance of algorithm. When the premature convergence and stagnation state are identified, re-initialization method (i.e. OBL concept) is stimulated to avoid premature convergence in MTLBO algorithm.

4.4 Local search approach

The local search approach is applied to improve the quality of best scheduling (let \( B^*\)) such as

-

(a)

In the job permutation, each job in the \(B^*\) may have variable dimension d, where move the job sequence to all other (d − 1) location.

-

(b)

For each movement, calculate the new schedule of the job permutation, i.e. consider \(B^*\)= new schedule.

-

(c)

Least search probability (LSP) is used for local search approach.

By using the Kaveh et al. [62], the probability value P is changed to each generation(iteration) such as

where iteration is current iteration and \(max\_iteration\) is total number of iterations required to perform the experiments. Thus, changing the value of P improves the solution of the outcomes.

4.5 Swap, insert and inverse operators

To enhance the performance and quality of solution for MTLBO, some operators are chosen such as swap, insert and inverse. These operators would enhance local search ability [63] and improve the outcome solution. Let us randomly take any two different positions m and n in a job permutation (\(\pi \) ), if \(m <u\), m will be inserted at the back of u. Otherwise, m is to be inserted in front of u. The details of these structures are as follows:

4.5.1 Swap operator

The Fig. 4 shows the swap operation. Randomly select any two separate locations from a job-permutation (\(\pi \)) such as m and u and swap them.

4.5.2 Insert operation

In Fig. 5, randomly select any two separate locations from a job permutation. Here job permutation (\(\pi \)) is removed from position m and inserted into other position u.

4.5.3 Inverse operation

In Fig. 6, randomly change the subsequence between two separate locations of a job permutation (\(\pi \)). By performing the inverse operation, jobs in solution \(\pi \) and \(\pi _{new}\) are interchanged.

4.6 Re-initialization structure

The experiment is conducted on the proposed algorithm, local search is used for fast convergence of the outcomes. Thus, at some extent the algorithm outcomes are trapped in local optimum i.e. premature convergence may occur. To overcome this situation, re-initialization mechanism is developed. If the feasible optimal solution is not found after 40 successive generation, then reinitialize the learners (populations). The re-initialization scenario is developed and it is given as: half of the populations are exchanged with the best populations achieved in the earlier process and another half of the populations are generated randomly.

4.7 Computational-complexity of MTLBO in term of big O notation

Let there be n jobs and m machines in PFSSP, the size of population is p. Generally in EA the computation complexity determine as of O\((d*p + fit*p)\), d is dimension of problem, fit is fitness or cost function (here minimization of maximum makespan is cost function). In the Initial stage of MTLBO computational complexity of NEH O() and remaining population generated by randomly using OBL technique is O(0.5 * \(n^2\) mp) . The computational complexity at initial phase is O(\(n^2mp\)) + O(\(0.5*n^2 mp\)). In teaching phase, due swap and local search computational complexity is O(\(n^2mp\)) . In learning phase, computational complexity is O(\(n^2m/2\)), due the learner gets information from teacher or exchange information themselves.

Total computation complexity of MTLBO for computing PFSSP :

O(n, m, p) = O(\(n^2mp\)) + O(\(0.5*n^2 mp\)) + O(\(n^2mp\)) + O(\(0.5*n^2 mp\))+ O(\(n^2m/2\)) \(\approx \)O(\(n^2mp\)) = O(50 \(\times \) 1000 \(n^2\)) = O(50000 \(n^2\))

5 Computational results and comparisons

This section represents the computational outcomes generated from the MTLBO algorithm. The MTLBO is tested over ten standard benchmark test functions which are taken from Liang [64]. These test functions are either unimodal or multimodal. The tuning for various performance measures are calculated from various evolutionary algorithms by the best, worst, mean (average) and SD (standard-deviation) outcomes [65]. The unimodal benchmark functions validate accuracy and the convergence speed of proposed algorithm whereas multimodal benchmark test functions validate using the global search ability of proposed algorithm. Then, the computational results of MTLBO algorithm are analyzed using Wilcoxon signed rank test method. There are various kinds of stopping criteria in the literature of NIAs, like maximum-number of iterations (G) , number of function evaluation (NFEs), limit of time and tolerance. The experiment uses G (i.e. number of generations) as stopping criteria for proposed MTLBO algorithm.

5.1 Experimental setup

In the following computational experiments, the performance and efficiency of MTLBO algorithm is applied on extensively used benchmark functions. There are mainly five well known benchmark functions such as

-

(a)

Carlier datasets (contains eight instances) consist of eight combinations, ranging from 11 jobs with 5 machines to 8 jobs with 8 machines [66].

-

(b)

Reeves and Yamada benchmark datasets (contains twenty-one instances) consist of seven combinations, ranging from 20-jobs with 5-machines to 75-jobs with 20-machines [67].

-

(c)

Heller datasets (contains two instances) consist of two combinations, ranging from 20 jobs with 10 machines to 10 jobs with 10 machines [68].

-

(d)

Taillard datasets (contains 120 instances) consist of twelve combinations, ranging from 20 jobs with 5 machines to 500 jobs with 20 machines [69].

-

(e)

VRF benchmark functions [70] (each contain 240 small and 240 large instances, which have upto 800 jobs on 60 machines) data is available at http://soa.iti.es.

The performance measure indicators for benchmark functions are ARPD (Average Relative Percentage Deviation ), BRPD (Best Relative Percentage Deviation ) and WRPD (Worst Relative Percentage Deviation) [71]. They are calculated in Eqs. (17), (18), and (19) respectively.

where \(Z_{opt}\) is best makespan value, \(Z_{best}\), \(Z_{i}\) and \(Z_{worst}\) are makespan solutions obtained in tested algorithms. Smaller value of ARPD indicates that the algorithm are better.

In Table 5, the given parameters are calibrate for MTLBO by conducting several experiments. All the experimentation and analysis of MTLBO algorithm have been implemented on an Intel 3.40 GHz core i5 processor where MTLBO algorithm was programmed with Matlab 8.4 (R2014b) under Win7(x64) with 8 GB RAM.

5.2 Testing proposed algorithm using benchmark functions

In this experiment, ten unconstrained benchmark functions are tested using MTLBO algorithm for population size (\(NP= 50\)) and dimension (\(D=50\)). The performance of the MTLBO is calculated by 25 independent runs over the given ten benchmark functions. In Table 6, the effective results of MTLBO are shows in terms of performance measures such as best, worst, mean and SD values on different population based algorithms.

5.2.1 Diversification and intensification of proposed algorithm

The unimodal optimization function (for e.g. Fun2 : Schwefels) is used to find the balance between diversification (exploration) and intensification (exploitation). In the Fig. 7 indicate the convergence of diversity of population with iterations for unimodal optimization function (i.e schwefels function). This is applied for five metaheuristic algorithms (i.e. GA, PSO, DE, TLBO and MTLBO). In the Fig. 7, the graph indicates that proposed algorithm MTLBO decrease gradually during the exploration, hence global optimal value obtained. The solution obtained during exploitation of algorithm is precise and stable.

5.2.2 Statistical analysis of proposed algorithm

To investigate the MTLBO algorithm, statistical procedure is applied to perform analysis such as parametric and non-parametric tests. The parametric test is based on assumptions made during the analysis of an experiment in computational intelligence. On the other hand, the non-parameter test is applied when the previous conclusions are not satisfied. Usually, this test deals with nominal or ordinal data, but it can also be applied to continuous data by performing the ranking based transformation.

The non-parametric test is applied to investigate the proposed algorithm. There are two types of non-parametric tests such as pair-wise comparison and multiple comparisons. To perform analysis of the proposed algorithm, pair-wise comparison is applied to MTLBO. In pair-wise procedure, comparison is made between two methods (algorithms), by obtaining p-value from one another. Wilcoxon signed rank test is a powerful non-parametric test for the pair-wise procedure as given in [72].

In Table 7, results of Wilcoxon signed rank test for MTLBO algorithm, with different metaheuristic algorithms over ten benchmark functions are shown. The test is conducted using 25 independent runs of the proposed algorithm, which differs significantly from other algorithms. Table 7 records the p-value for ten unconstrained benchmark functions with \(R+\) and \(R-\) values. The \(R+\) value indicate a sum of rank in which the first algorithm outperform compare to the second algorithm. \(R-\) value indicate a sum of ranks second algorithm outperforms compared to the first algorithm. The non significant p-value is represented in bold in Table 7.

From the Table 7, it is clear that the performance of the MTLBO algorithm is significantly better than other metaheuristic algorithms. Functions Fun1, Fun2, Fun3, Fun5, Fun7 and Fun10 have significant p-value (\(p\le 0.05\)), in MTLBO versus DE, whereas functions Fun4, Fun6 and Fun9 does not have significant value.

5.3 Comparison of MTLBO algorithm on Carlier benchmark datasets

The effectiveness of MTLBO is compared with various metaheuristic algorithms on Carlier benchmark datasets. Table 8, shows Carlier datasets range from Car01 to Car08 with size range from \(11 \times 5\) to \(8 \times 8\). The performance of MTLBO is calculated using BRPD, ARPD and WRPD along with best makespan value (\(Z_{opt}\)). The MTLBO algorithm is compared with L-HDE [37], PSOVNS [34], DBA [73], HBSA [74], and PSOMA [35].

From the Table 8, it is observed that performance of proposed algorithm is better than given population based algorithm. The effective values of MTLBO algorithm shows better performance by using parameters of BRPD, ARPD, WRPD and best makespan values.

5.4 MTLBO algorithm on Reeves and Heller benchmark datasets

The effectiveness of proposed algorithm is compared with Reeves and Heller datasets. In the Table 9 indicates Reeves datasets range from REC001 to REC041 with size range from 20\(\times \) 5 to 75\(\times \) 20. The performance of MTLBO is calculated using BRPD, ARPD and WRPD along with best makespan value (\(Z_{opt}\)). The MTLBO algorithm is compared with L-HDE, PSOVNS, DBA, HBSA, PSOMA, and HCS [34]. Note that, the best values are shown in bold.

From Table 9, it is clear that the efficiency of MTLBO is superior than given population based algorithm. The effective values of MTLBO algorithm shows better performance by using parameters of BRPD, ARPD, WRPD and best makespan values.

The comparison of the mean value of BRPD, WRPD and ARPD for the dataset Reeves is shown in (Figs. 8, 9, 10). MTLBO is compared with various population-based algorithms, it can be seen that MTLBO algorithm achieves best outcomes. In mean value of BRPD, the largest value PSOVNS is 2.1665. In average value of ARPD, the largest value on DBA is 2.5875. In mean value WRPD, the largest value on DBA is 3.346. MTLBO shows best and effective outcomes as compared to other population based algorithms. Hence, MTLBO is most reliable and effective algorithm for solving PFSSP.

In Table 10, Heller datasets have two instances such as Hel1 and Hel2 with the size range \(20 \times 10\) and \(10 \times 10\). Hamdi et al. [75] determined the upper and lower bound of these datasets. The MTLBO is also compared with NEH, DSOMA [76] and SG [11]. It can be seen from the outcomes that MTLBO achieves better value for upper bound for Hel1 and Hel2 problems.

5.5 MTLBO algorithm on Taillard benchmark dataset

The comparison of the MTLBO with basic TLBO and other metaheuristic algorithms are carried out using Taillards benchmark functions [69]. The problem size of Taillard benchmark functions range from \(20 \times 5\) to \(500 \times 20\). The minimum and maximum bounds of Taillard’s test function are given in OR-Library ( i.e. Eric Taillard’s webpage).

5.5.1 Comparison of MTLBO with basic TLBO

To validate the performance of MTLBO, the experimental outcomes and comparison of the proposed algorithm with basic TLBO for Taillard benchmark test functions are shown in Table 11. The performance measure indicators such as BRPD, ARPD and WRPD are given in Table 11.

MTLBO algorithm outperforms the basic TLBO algorithm on all Taillard benchmark functions. The total average values for ARPD, BRPD and WRPD of MTLBO are 0.830, 3.717 and 6.118 which are better than basic TLBO algorithm tested values.

The convergence plots of basic TLBO and MTLBO algorithms are listed in Fig. 11. For plotting the graph, makespan versus Taillard benchmark function (such as TA010, TA030, TA050, TA070, TA090 and TA0110) are used. From these graphs, it can be indicated that the proposed algorithm converges faster than basic TLBO algorithm. As the iterations increase, MTLBO hardly finds accurate solutions, but by embedding the local search operator into MTLBO, it can achieve better performance.

5.5.2 Comparison of MTLBO with other EAs

In this subsection, the proposed MTLBO algorithm is compared with IG1 [77], IG2 [77], HDDE [78] and TLBO algorithms using Taillard benchmark test functions. The performance is measured using ARPD indicator. Table 12 shows comparison of proposed algorithm over population based algorithms with respect to ARPD. The MTLBO algorithm obtained lowest average value i.e. 0.83 for ARPD, which is better than IG1 (2.31), IG2 (2.27), HDDE (4.51) and TLBO (3.11).

Later on, the proposed MTLBO algorithm is compared with GA [79], HPSO [78], ATPPSO [80] and NCS [81] on the Taillard benchmark test functions. The computation results achieved by GA, HPSO, ATPPSO and NCS are indicated in Table 13. The performance measure indicators such as Mean and ARPD (Average relative error) are also presented in Table 13. The best outcomes of the benchmark functions are indicated in bold.

In Table 13, MTLBO obtains the best computational outcomes with respect to all the given metaheuristic algorithms. The MTLBO algorithm obtained lowest total mean ARPD (i.e. 0.8) which is superior value than HPSO (2.74), ATPPSO (1.94), GA (4.31), and NCS (1.24). The performance of proposed algorithm significantly superior outcomes over Taillard benchmark test function. The mean values of proposed algorithm are within the lower and upper bound given as OR-Library.

In Table 14, the proposed algorithm compared total flowshop time criterion over Taillard benchmark problems which taken from http://www.cs.colostate.edu/sched/generator. MTLBO compared with PSO and RSA [82] . The mimumium and average results obtained by MTLBO is significantly superior than PSO and RSA.

To analyze the performance of MTLBO algorithm over other evolutionary algorithms, Wilcoxon sign ranked test was conducted by using achieved ARPD value. Table 15 shows significant p-value of proposed algorithm over other evolutionary algorithms. From these experimental outcomes, it is observed that MTLBO algorithm is more effective and significant than GA, HPSO, ATPPSO and NCS. It is to be noted that the p-value in this experiment is shown in bold letters for below 0.05 value.

5.6 MTLBO algorithm on VRF benchmark datasets

New benchmark test functions such as VRF_hard_small benchmark and VRF_hard_large benchmark are proposed in [70]. These benchmark functions are selected to perform experiments on PFSSP. These functions consist of 240 small instances and 240 large instances, with upto 800 jobs on 60 machines. The small instances have following combinations of n = number of jobs, m = number of machines i.e. n = [10, 20, 30, 40, 50, 60] and m = [5, 10, 15, 20] and large instances consist of n = [100, 200, 300, 400, 500, 600, 700, 800] and m = [20, 40, 60].

The stopping criterion to perform VRF benchmark function is evaluated with maximum running CPU time as follows

where t indicates the amount of time (t = 5 ms). Each of the instance runs at least 25 times to obtain the best outcomes.

To measure the effectiveness of the metaheuristic method, ARPD is calculated for each instance as in Eq. (17).

The computational results of the MTLBO over different metaheursitic algorithms with VRF small instance are given in the Table 16. The best outcomes of the experimental are shown in bold letters. The performance of MTLBO is calculated using ARPD and SD values. In Table 16, the average value for MTLBO with VRF small instances for ARPD and SD are 0.10 and 0.03 respectively. From Table 16, it is observed that ARPD and SD value of MTLBO is superior than DPSO, GA-VNS, HDE and HPSO algorithms.

The computational results of MTLBO over different metaheursitic algorithms with VRF Large instances are given in the Table 17. The best outcomes of the experiments is shown in bold letters. The performance of MTLBO algorithm is calculated using ARPD and SD values. In Table 17, the average value of the MTLBO with VRF small instances for ARPD and SD are 2.61 and 0.14 respectively. From Table 17, it is observed that obtained results using ARPD and SD value for MTLBO algorithm are statistically better than DPSO, GA-VNS, HDE and HPSO algorithms.

6 Conclusion

This paper proposed a Modified Teaching Learning Based Optimization with Opposite Based Learning approach to find solution of Permutation Flow-Shop Scheduling Problem. OBL approach is used to enhance the quality of the initial population and convergence speed. MTLBO is developed to determine the PFSSP efficiently using the Largest Order Value rule-based random-key, to change an individual into discrete job schedules. Based on Nawaz–Enscore–Ham heuristic mechanism, the new initial populations are generated in MTLBO. To enhance the local exploitation ability, the effective swap, insert and inverse structures are incorporated into the MTLBO.

The statistical result and analysis of the MTLBO based on ten benchmark functions and Wilcoxon signed rank test shows the effectiveness of the algorithm. The computational results over five well-known benchmark functions such as Carlier, Reeves, Heller, Taillard and VRF benchmark instances set indicates that MTLBO is the most powerful and convenient method to solve PFSSP. The performance measures of the proposed algorithm are calculated using ARPD, BRPD, and WRPD over all datasets. The performance of MTLBO is tested against state-of-the-art algorithms. It is observed that MTLBO algorithm works effectively in most of the cases. Thus, by conducting Wilcoxon signed test, it is proved that MTLBO algorithm is much more effective than other metaheuristic algorithms. The future research direction focus on hybrid flow shop scheduling and multi-objective flow shop problems.

References

Pinedo ML (2016) Scheduling: theory, algorithms, and systems. Springer, Berlin. https://doi.org/10.1007/978-1-4614-2361-4

Grabowski J, Pempera J (2000) Sequencing of jobs in some production system. Eur J Oper Res 125(3):535–550. https://doi.org/10.1016/S0377-2217(99)00224-6

Du W, Tang Y, Leung SYS, Tong L, Vasilakos AV, Qian F (2018) Robust order scheduling in the discrete manufacturing industry: a multiobjective optimization approach. IEEE Trans Ind Inf 14(1):253–264. https://doi.org/10.1109/TII.2017.2664080

Hidri L, Gharbi A (2017) New efficient lower bound for the hybrid flow shop scheduling problem with multiprocessor tasks. IEEE Access 5:6121–6133. https://doi.org/10.1109/ACCESS.2017.2696118

Bargaoui H, Driss OB, Ghédira K (2017) Towards a distributed implementation of chemical reaction optimization for the multi-factory permutation flowshop scheduling problem. Procedia Comput Sci 112:1531–1541. https://doi.org/10.1016/j.procs.2017.08.057

Deng F, He Y, Zhou S, Yu Y, Cheng H, Wu X (2018) Compressive strength prediction of recycled concrete based on deep learning. Constr Build Mater 175:562–569

Cho HM, Jeong IJ (2017) A two-level method of production planning and scheduling for bi-objective reentrant hybrid flow shops. Comput Ind Eng 106:174–181. https://doi.org/10.1016/j.cie.2017.02.010

Pan QK (2016) An effective co-evolutionary artificial bee colony algorithm for steelmaking-continuous casting scheduling. Eur J Oper Res 250(3):702–714. https://doi.org/10.1016/j.ejor.2015.10.007

Zhang Y, Wang J, Liu Y (2017) Game theory based real-time multi-objective flexible job shop scheduling considering environmental impact. J Clean Prod 167:665–679. https://doi.org/10.1016/j.jclepro.2017.08.068

Johnson SM (1954) Optimal two and three stage production schedules with setup times included. Naval Res Logist Q 1(1):61–68. https://doi.org/10.1002/nav.3800010110

Ruiz R, Stützle T (2008) An iterated greedy heuristic for the sequence dependent setup times flowshop problem with makespan and weighted tardiness objectives. Eur J Oper Res 187(3):1143–1159. https://doi.org/10.1016/j.ejor.2006.07.029

Ozolins A (2017) Improved bounded dynamic programming algorithm for solving the blocking flow shop problem. Central Eur J Oper Res. https://doi.org/10.1007/s10100-017-0488-5

Toumi S, Jarboui B, Eddaly M, Rebaï A (2017) Branch-and-bound algorithm for solving blocking flowshop scheduling problems with makespan criterion. Int J Math Oper Res 10(1):34–48. https://doi.org/10.1504/IJMOR.2017.080743

Selen WJ, Hott DD (1986) A mixed-integer goal-programming formulation of the standard flow-shop scheduling problem. J Oper Res Soc 37(12):1121–1128. https://doi.org/10.1057/jors.1986.197

Grabowski J, Wodecki M (2004) A very fast tabu search algorithm for the permutation flow shop problem with makespan criterion. Comput Oper Res 31(11):1891–1909. https://doi.org/10.1016/S0305-0548(03)00145-X

Xu J, Zhang J (2014) Exploration-exploitation tradeoffs in metaheuristics: survey and analysis. In: Proceedings of the 33rd Chinese control conference, pp 8633–8638. https://doi.org/10.1109/ChiCC.2014.6896450

Goldberg DE, Holland JH (1988) Genetic algorithms and machine learning. Mach Learn 3(2):95–99. https://doi.org/10.1023/A:1022602019183

Dorigo M, Blum C (2005) Ant colony optimization theory: a survey. Theoret Comput Sci 344(2):243–278. https://doi.org/10.1016/j.tcs.2005.05.020

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11(4):341–359. https://doi.org/10.1023/A:1008202821328

Geem ZW, Kim JH, Loganathan G (2001) A new heuristic optimization algorithm: Harmony search. SIMULATION 76(2):60–68. https://doi.org/10.1177/003754970107600201

Yang XS (2009) Firefly algorithms for multimodal optimization. In: Proceedings of the 5th International Conference on Stochastic Algorithms: Foundations and Applications, Springer-Verlag, Berlin, Heidelberg, SAGA’09, pp 169–178

Yang XS (2010) A new metaheuristic bat-inspired algorithm. Springer, Berlin, pp 65–74

Gandomi AH, Yang XS, Alavi AH (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29(1):17–35. https://doi.org/10.1007/s00366-011-0241-y

Rao R, Savsani V, Vakharia D (2011) Teaching learning based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315. https://doi.org/10.1016/j.cad.2010.12.015

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Wang GG, Deb S, Coelho L (2015a) Earthworm optimization algorithm: a bio-inspired metaheuristic algorithm for global optimization problems. Int J Bioinspir Comput 12:1–22

Wang GG, Deb S, Cui Z (2015b) Monarch butterfly optimization. Neural Comput Appl. https://doi.org/10.1007/s00521-015-1923-y

Karaboga D, Basturk B (2007) Artificial bee colony (abc) optimization algorithm for solving constrained optimization problems. In: Foundations of fuzzy logic and soft computing. Springer, Berlin, pp 789–798

Rao R (2016) Jaya: a simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int J Ind Eng Comput 7(1):19–34

Wang GG (2016) Moth search algorithm: a bio-inspired metaheuristic algorithm for global optimization problems. Memet Comput. https://doi.org/10.1007/s12293-016-0212-3

Zhang Y, fang Song X, wei Gong D, (2017) A return-cost-based binary firefly algorithm for feature selection. Inf Sci 418–419:561–574. https://doi.org/10.1016/j.ins.2017.08.047

Reeves CR (1995) A genetic algorithm for flowshop sequencing. Comput Oper Res 22(1):5–13. https://doi.org/10.1016/0305-0548(93)E0014-K

Mirabi M, Fatemi Ghomi SMT, Jolai F (2014) A novel hybrid genetic algorithm to solve the make-to-order sequence-dependent flow-shop scheduling problem. J Ind Eng Int 10(2):57. https://doi.org/10.1007/s40092-014-0057-7

Tasgetiren MF, Liang YC, Sevkli M, Gencyilmaz G (2007) A particle swarm optimization algorithm for makespan and total flowtime minimization in the permutation flowshop sequencing problem. Eur J Oper Res 177(3):1930–1947. https://doi.org/10.1016/j.ejor.2005.12.024

Liu D, Tan KC, Goh CK, Ho WK (2007) A multiobjective memetic algorithm based on particle swarm optimization. IEEE Trans Syst Man Cybern Part B (Cybern) 37(1):42–50. https://doi.org/10.1109/TSMCB.2006.883270

Gao J, Chen R, Deng W (2013) An efficient tabu search algorithm for the distributed permutation flowshop scheduling problem. Int J Prod Res 51(3):641–651. https://doi.org/10.1080/00207543.2011.644819

Liu Y, Yin M, Gu W (2014) An effective differential evolution algorithm for permutation flow shop scheduling problem. Appl Math Comput 248:143–159. https://doi.org/10.1016/j.amc.2014.09.010

Li X, Yin M (2013) A hybrid cuckoo search via lévy flights for the permutation flow shop scheduling problem. Int J Prod Res 51(16):4732–4754. https://doi.org/10.1080/00207543.2013.767988

Sioud A, Gagné C (2018) Enhanced migrating birds optimization algorithm for the permutation flow shop problem with sequence dependent setup times. Eur J Oper Res 264(1):66–73. https://doi.org/10.1016/j.ejor.2017.06.027

Fernandez-Viagas V, Perez-Gonzalez P, Framinan JM (2018) The distributed permutation flow shop to minimise the total flowtime. Comput Ind Eng 118:464–477. https://doi.org/10.1016/j.cie.2018.03.014

Meng T, Pan QK, Li JQ, Sang HY (2018) An improved migrating birds optimization for an integrated lot-streaming flow shop scheduling problem. Swarm Evol Comput 38:64–78. https://doi.org/10.1016/j.swevo.2017.06.003

Han Y, Gong D, Li J, Zhang Y (2016) Solving the blocking flow shop scheduling problem with makespan using a modified fruit fly optimisation algorithm. Int J Prod Res 54(22):6782–6797. https://doi.org/10.1080/00207543.2016.1177671

Baykasolu A, Hamzadayi A, Köse SY (2014) Testing the performance of teachinglearning based optimization (tlbo) algorithm on combinatorial problems: flow shop and job shop scheduling cases. Inf Sci 276:204–218. https://doi.org/10.1016/j.ins.2014.02.056

Xie Z, Zhang C, Shao X, Lin W, Zhu H (2014) An effective hybrid teachinglearning-based optimization algorithm for permutation flow shop scheduling problem. Adv Eng Softw 77:35–47. https://doi.org/10.1016/j.advengsoft.2014.07.006

Shao W, Pi D, Shao Z (2016) A hybrid discrete optimization algorithm based on teachingprobabilistic learning mechanism for no-wait flow shop scheduling. Knowl Based Syst 107:219–234. https://doi.org/10.1016/j.knosys.2016.06.011

qing Li J, ke Pan Q, Mao K, (2015) A discrete teaching-learning-based optimisation algorithm for realistic flowshop rescheduling problems. Eng Appl Artif Intell 37:279–292. https://doi.org/10.1016/j.engappai.2014.09.015

Shao W, Pi D, Shao Z (2017) An extended teaching-learning based optimization algorithm for solving no-wait flow shop scheduling problem. Appl Soft Comput 61:193–210. https://doi.org/10.1016/j.asoc.2017.08.020

Buddala R, Mahapatra SS (2017) Improved teaching-learning-based and jaya optimization algorithms for solving flexible flow shop scheduling problems. J Ind Eng Int 1:1. https://doi.org/10.1007/s40092-017-0244-4

Abdel-Basset M, Manogaran G, El-Shahat D, Mirjalili S (2018) A hybrid whale optimization algorithm based on local search strategy for the permutation flow shop scheduling problem. Future Gener Comput Syst 85:129–145. https://doi.org/10.1016/j.future.2018.03.020

Rao RV, Patel V (2013) Multi-objective optimization of two stage thermoelectric cooler using a modified teachinglearning-based optimization algorithm. Eng Appl Artif Intell 26(1):430–445. https://doi.org/10.1016/j.engappai.2012.02.016

Satapathy SC, Naik A (2011) Data clustering based on teaching-learning-based optimization. In: Panigrahi BK, Suganthan PN, Das S, Satapathy SC (eds) Swarm, evolutionary, and memetic computing. Springer, Heidelberg, pp 148–156

Jin H, Wang Y (2014) A fusion method for visible and infrared images based on contrast pyramid with teaching learning based optimization. Infrared Phys Technol 64:134–142. https://doi.org/10.1016/j.infrared.2014.02.013

Rao RV, Patel V (2013) An improved teaching-learning-based optimization algorithm for solving unconstrained optimization problems. Sci Iran 20(3):710–720. https://doi.org/10.1016/j.scient.2012.12.005

Waghmare G (2013) Comments on a note on teachinglearning-based optimization algorithm. Inf Sci 229:159–169. https://doi.org/10.1016/j.ins.2012.11.009

Das S, Konar A, Chakraborty UK (2005) Two improved differential evolution schemes for faster global search. In: Proceedings of the 7th annual conference on genetic and evolutionary computation. GECCO ’05. ACM, New York, pp 991–998. https://doi.org/10.1145/1068009.1068177

Bean JC (1994) Genetic algorithms and random keys for sequencing and optimization. ORSA J Comput 6(2):154–160. https://doi.org/10.1287/ijoc.6.2.154

Li X, Yin M (2013) An opposition-based differential evolution algorithm for permutation flow shop scheduling based on diversity measure. Adv Eng Softw 55:10–31. https://doi.org/10.1016/j.advengsoft.2012.09.003

Qian B, Wang L, Hu R, Wang WL, Huang DX, Wang X (2008) A hybrid differential evolution method for permutation flow-shop scheduling. Int J Adv Manuf Technol 38(7):757–777. https://doi.org/10.1007/s00170-007-1115-8

Tizhoosh HR (2005) Opposition-based learning: a new scheme for machine intelligence. In: International conference on computational intelligence for modelling, control and automation and international conference on intelligent agents, web technologies and internet commerce (CIMCA-IAWTIC’06), vol 1, pp 695–701. https://doi.org/10.1109/CIMCA.2005.1631345

Rahnamayan S, Tizhoosh HR, Salama MMA (2008) Opposition-based differential evolution. IEEE Trans Evol Comput 12(1):64–79. https://doi.org/10.1109/TEVC.2007.894200

Wang H, Wu Z, Rahnamayan S, Kang L (2009) A scalability test for accelerated de using generalized opposition-based learning. In: 2009 ninth international conference on intelligent systems design and applications, pp 1090–1095. https://doi.org/10.1109/ISDA.2009.216

Kaveh A, Ghazaan MI (2017) Enhanced whale optimization algorithm for sizing optimization of skeletal structures. Mech Based Des Struct Mach 45(3):345–362. https://doi.org/10.1080/15397734.2016.1213639

Hansen P, Mladenovi N (2001) Variable neighborhood search: principles and applications. Eur J Oper Res 130(3):449–467. https://doi.org/10.1016/S0377-2217(00)00100-4

Liang J, Qu B, Suganthan P, G A, (2013) Problem definitions and evaluation criteria for the CEC 2013 special session on real-parameter optimization. Technical report 201212, Computational Intelligence Laboratory, Zhengzhou University, Zhengzhou, China and Nanyang Technological University, Singapore, pp 3–18

Eiben A, Smit S (2011) Parameter tuning for configuring and analyzing evolutionary algorithms. Swarm Evol Comput 1(1):19–31. https://doi.org/10.1016/j.swevo.2011.02.001

Carlier J, Pinson E (1989) An algorithm for solving the job-shop problem. Manage Sci 35(2):164–176. https://doi.org/10.1287/mnsc.35.2.164

Reeves CR, Yamada T (1998) Genetic algorithms, path relinking, and the flowshop sequencing problem. Evol Comput 6(1):45–60. https://doi.org/10.1162/evco.1998.6.1.45

Heller J (1960) Some numerical experiments for an m Œ j flow shop and its decision-theoretical aspects. Oper Res 8(2):178–184. https://doi.org/10.1287/opre.8.2.178

Taillard E (1993) Benchmarks for basic scheduling problems. Eur J Oper Res 64(2):278–285. https://doi.org/10.1016/0377-2217(93)90182-M

Vallada E, Ruiz R, Framinan JM (2015) New hard benchmark for flowshop scheduling problems minimising makespan. Eur J Oper Res 240(3):666–677. https://doi.org/10.1016/j.ejor.2014.07.033

Zhao F, Liu Y, Zhang Y, Ma W, Zhang C (2017) A hybrid harmony search algorithm with efficient job sequence scheme and variable neighborhood search for the permutation flow shop scheduling problems. Eng Appl Artif Intell 65:178–199. https://doi.org/10.1016/j.engappai.2017.07.023

Derrac J, Garcia S, Molina D, Herrera F (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol Comput 1(1):3–18. https://doi.org/10.1016/j.swevo.2011.02.002

Luo Q, Zhou Y, Xie J, Ma M, Li L (2014) Discrete bat algorithm for optimal problem of permutation flow shop scheduling. Sci World J

Lin Q, Gao L, Li X, Zhang C (2015) A hybrid backtracking search algorithm for permutation flow-shop scheduling problem. Comput Ind Eng 85:437–446. https://doi.org/10.1016/j.cie.2015.04.009

Hamdi Imen (2015) Upper and lower bounds for the permutation flowshop scheduling problem with minimal time lags. Optim Lett 9(3):465–482. https://doi.org/10.1007/s11590-014-0761-7

Davendra D, Bialic-Davendra M (2013) Scheduling flow shops with blocking using a discrete self-organising migrating algorithm. Int J Prod Res 51(8):2200–2218. https://doi.org/10.1080/00207543.2012.711968

Ribas I, Companys R, Tort-Martorell X (2011) An iterated greedy algorithm for the flowshop scheduling problem with blocking. Omega 39(3):293–301. https://doi.org/10.1016/j.omega.2010.07.007

Wang L, Pan QK, Tasgetiren MF (2011) A hybrid harmony search algorithm for the blocking permutation flow shop scheduling problem. Comput Ind Eng 61(1):76–83. https://doi.org/10.1016/j.cie.2011.02.013

Nearchou AC (2004) A novel metaheuristic approach for the flow shop scheduling problem. Eng Appl Artif Intell 17(3):289–300. https://doi.org/10.1016/j.engappai.2004.02.008

Zhang C, Ning J, Ouyang D (2010) A hybrid alternate two phases particle swarm optimization algorithm for flow shop scheduling problem. Comput Ind Eng 58(1):1–11. https://doi.org/10.1016/j.cie.2009.01.016

Wang H, Wang W, Sun H, Cui Z, Rahnamayan S, Zeng S (2017) A new cuckoo search algorithm with hybrid strategies for flow shop scheduling problems. Soft Comput 21(15):4297–4307. https://doi.org/10.1007/s00500-016-2062-9

Deb S, Tian Z, Fong S, Tang R, Wong R, Dey N (2018) Solving permutation flow-shop scheduling problem by rhinoceros search algorithm. Soft Comput 22(18):6025–6034. https://doi.org/10.1007/s00500-018-3075-3

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Balande, U., shrimankar, D. A modified teaching learning metaheuristic algorithm with opposite-based learning for permutation flow-shop scheduling problem. Evol. Intel. 15, 57–79 (2022). https://doi.org/10.1007/s12065-020-00487-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12065-020-00487-5